Social Robots Outdo the Not-So-Social Media for Self-Disclosure: Safe Machines Preferred to Unsafe Humans?

Abstract

1. Introduction

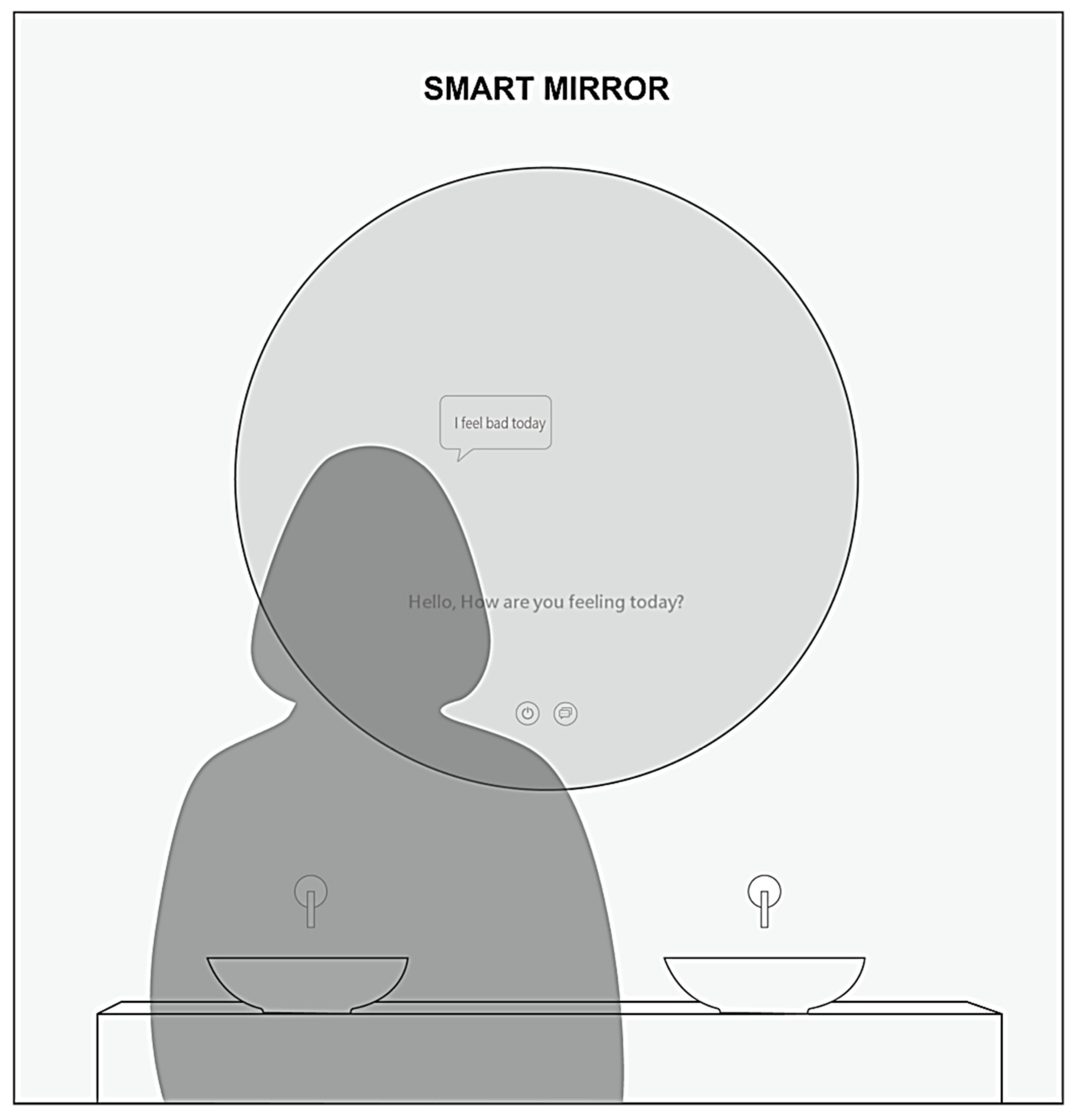

2. Social Media Aggravates the Problem

3. Social Robots as Possible Alternative

4. Method

4.1. Participants and Design

4.2. Procedure

5. Apparatus and Materials

5.1. Video Materials

- Internet video commemorating the 10th anniversary of the Wenchuan (Sichuan) earthquake (cut from 00:02–01:19). Available from https://www.bilibili.com/video/av23087386/ (accessed on 13 June 2019)

- Dazzz2009 (31 December 2008). Internet video record of the 5.12 earthquake in Dujiangyan (cut from 01:20–01:59). Available from https://www.youtube.com/watch?v=vz0ngbl81fm&list=plf2ppwdjsx1d6rvuw0vagfzhvir_nro_8&index=2 (accessed on 13 June 2019)

- Lantian777 (16 May 2008). Internet video recorded 10 min after the Wenchuan (Sichuan) earthquake (shown in full). Available from https://www.youtube.com/watch?v=PI5KL7nvU28 (accessed on 14 June 2019)

5.2. Chat-Group Responses

- (1) Data crawling: On Douban, we sampled texts posted since 2016 in group discussions on the topic “Let me talk to you about the philosophy behind breaking up and disconnecting.” For each post, we extracted the author, posting time, and content. Using the request library and related tools in Python, we set up a loop to collect and record this information, which was written to MS Excel files.

- (2) Data cleaning (word segmentation, stop-word removal, and lemmatization): We loaded the .xls file into Python (using the IDLE editor) and processed the text with the NLTK, Beautiful Soup, and NumPy libraries in three steps: 1. a word-segmentation tool split the text into words and removed punctuation, paragraph breaks, etc.; 2. function words such as ‘and,’ ‘or,’ and ‘the’ were removed; 3. verb tenses were restored to their base forms and parts of speech were converted.

- (3) Sentiment analysis: We scored the segmented text with the National Taiwan University Sentiment Dictionary (NTUSD) and calculated the total score as Total score = (word score × positive emotion score) − (word score × negative emotion score). We then counted the positive, neutral, and negative sentences among the 10,115 text sentences.

- (4) Statistical results (Figure 3): In total, there were 3633 (36.00%) positive, 3562 (34.96%) neutral, and 2895 (28.69%) negative statements.

- (5) Typical feedback: From the statements obtained in (4), we compiled a list of hot topics (e.g., wronged, cheated, dissatisfied) (Figure 4) and combined them into ‘typical social-media replies’ that were sent to our participants, for example, “People bring this on themselves” or “You have to pull yourself together and keep strong” (Appendix B).
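The crawling step (1) can be sketched as a simple paging loop that extracts author, time, and content and writes them to a spreadsheet. This is a minimal sketch under stated assumptions, not the authors' script: the page markup (`div` elements of class `post` carrying `data-author`/`data-time` attributes), the injected `fetch` callable, and the helper names `PostParser`, `crawl`, and `write_rows` are all hypothetical, and the records are written as CSV with Python's standard `csv` module as a stand-in for the MS Excel files.

```python
import csv
import io
from html.parser import HTMLParser
from typing import Callable, Dict, List, Optional


class PostParser(HTMLParser):
    """Collect author/time/content from an ASSUMED flat markup:
    <div class="post" data-author="..." data-time="...">text</div>
    (hypothetical; the real Douban page structure is not described in the source)."""

    def __init__(self) -> None:
        super().__init__()
        self.posts: List[Dict[str, str]] = []
        self._current: Optional[Dict[str, str]] = None

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "div" and a.get("class") == "post":
            self._current = {"author": a.get("data-author") or "",
                             "time": a.get("data-time") or "",
                             "content": ""}

    def handle_data(self, data):
        # Accumulate text only while inside a post element.
        if self._current is not None:
            self._current["content"] += data

    def handle_endtag(self, tag):
        if tag == "div" and self._current is not None:
            self._current["content"] = self._current["content"].strip()
            self.posts.append(self._current)
            self._current = None


def crawl(fetch: Callable[[int], str], max_pages: int) -> List[Dict[str, str]]:
    """Loop over result pages until one yields no posts.

    `fetch` is injected so the loop can run offline; in practice it would wrap
    an HTTP GET of a (hypothetical) paginated group URL."""
    rows: List[Dict[str, str]] = []
    for page in range(max_pages):
        parser = PostParser()
        parser.feed(fetch(page))
        parser.close()
        if not parser.posts:
            break
        rows.extend(parser.posts)
    return rows


def write_rows(rows: List[Dict[str, str]], stream) -> None:
    """Write the records as CSV (stand-in for the MS Excel output)."""
    writer = csv.DictWriter(stream, fieldnames=["author", "time", "content"])
    writer.writeheader()
    writer.writerows(rows)


# Usage with canned pages instead of live requests.
pages = ['<div class="post" data-author="a1" data-time="2016-05-01">I left.</div>', ""]
rows = crawl(lambda p: pages[p], max_pages=len(pages))
buf = io.StringIO()
write_rows(rows, buf)
print(buf.getvalue())
```

Injecting `fetch` keeps the loop independent of any particular HTTP client, so the same code could be driven by a live request function or by saved pages.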
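The three cleaning operations in (2) can be illustrated on an English sentence, since the source's own examples of function words are English. This is a sketch only: the stop-word set, the lemma table, and the function names are hypothetical placeholders for the NLTK resources the authors used, and the regular-expression tokenizer handles only space-delimited text, so Chinese posts would need a dedicated word segmenter instead.

```python
import re
from typing import List

# Hypothetical miniature resources; a real run would use full stop-word and
# lemmatization resources (the source names NLTK but not its settings).
STOP_WORDS = {"a", "and", "or", "the", "he", "i", "was", "is", "to"}
LEMMAS = {"left": "leave", "cried": "cry", "broke": "break", "broken": "break"}


def segment(text: str) -> List[str]:
    """Step 1: lowercase and split into word tokens, dropping punctuation
    and paragraph breaks (space-delimited languages only)."""
    return re.findall(r"[a-z']+", text.lower())


def remove_stop_words(tokens: List[str]) -> List[str]:
    """Step 2: drop function words such as 'and', 'or', 'the'."""
    return [t for t in tokens if t not in STOP_WORDS]


def lemmatize(tokens: List[str]) -> List[str]:
    """Step 3: map inflected forms to base forms via the lookup table."""
    return [LEMMAS.get(t, t) for t in tokens]


def clean(text: str) -> List[str]:
    return lemmatize(remove_stop_words(segment(text)))


print(clean("He left, and I cried.\nThe promise was broken."))
# prints ['leave', 'cry', 'promise', 'break']
```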
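Steps (3) and (4) amount to scoring each segmented sentence and tallying its polarity. The sketch below is one possible reading of the total-score formula, under assumptions the source does not spell out: the word score is a per-word weight defaulting to 1, the positive and negative emotion scores are 1 when a word appears in the respective word list and 0 otherwise, and a total of exactly 0 counts as neutral. The tiny `POSITIVE` and `NEGATIVE` sets are hypothetical stand-ins for the NTUSD word lists.

```python
from collections import Counter
from typing import Dict, List, Set, Tuple

# Hypothetical stand-ins for the NTUSD positive and negative word lists.
POSITIVE: Set[str] = {"hope", "love", "strong", "fine"}
NEGATIVE: Set[str] = {"sad", "cheat", "wrong", "tired"}


def sentence_score(tokens: List[str], weights: Dict[str, float]) -> float:
    """Total = sum(word score * positive) - sum(word score * negative)."""
    total = 0.0
    for t in tokens:
        w = weights.get(t, 1.0)           # assumed default word score
        pos = 1.0 if t in POSITIVE else 0.0
        neg = 1.0 if t in NEGATIVE else 0.0
        total += w * pos - w * neg
    return total


def polarity(score: float) -> str:
    # Assumption: zero is the neutral threshold.
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def tally(sentences: List[List[str]],
          weights: Dict[str, float]) -> Tuple[Counter, Dict[str, float]]:
    """Count sentences per polarity and express them as percentages."""
    counts = Counter(polarity(sentence_score(s, weights)) for s in sentences)
    n = len(sentences)
    shares = {k: round(100 * v / n, 2) for k, v in counts.items()}
    return counts, shares


sentences = [["stay", "strong"], ["so", "sad", "tired"],
             ["rain", "today"], ["love", "sad"]]
counts, shares = tally(sentences, weights={"love": 2.0})
print(counts, shares)
```

Here "love" carries a hypothetical weight of 2, so ["love", "sad"] scores 2 − 1 = 1 and is classed as positive.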

5.3. Measures

6. Results

Manipulation Check

7. Effects of Media on Valence

7.1. GLM Repeated Measures for Bipolar Valence Before-After

7.2. GLM Univariate (Oneway-ANOVA) for ΔValence (Bipolar)

7.3. GLM Multivariate (Oneway-MANOVA) for ΔValence (Unipolar)

7.4. Exploration: Variance of Valence (VV) as Indicator of Emotional Instability

8. Discussion

9. Conclusions

About 10 years ago I set up a fairly popular website for people to play the ancient oriental game of Go, Baduk or Weiqi 围棋 against what was at the time a fairly strong AI program (good old fashioned AI). The website had a rather simple chat feature, with two comments: ‘good call’ and iirc ‘try harder’ based on a simple extrapolation of the game position. Searching the logs one day, to get a handle on usage, I was rather disturbed to find that one player had developed a long conversation, with lengthy self-disclosure, always promoted by one of these expressions, usually the latter. (S)he appeared to consider there was a living person responding. (Jonathan Chetwynd, personal communication, 25 September 2021)

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix A.1. Social Media Questionnaire in Chinese

Appendix A.2. Social Media Questionnaire in English

Appendix B

- Let me hug you. Don’t be sad.

- How do you feel now?

- Are you ok?

- You can talk to me if you are upset.

- It would be very sad for me to see such content.

- It was really sad.

- You have to pull yourself together and keep strong.

- Yeah, it makes me sad to see them in pain in the video.

- Human beings are small in the face of disaster.

- We should cherish life, life is unpredictable.

- We never know which will come first, the accident or tomorrow.

- We still have to believe in ourselves.

- Don’t worry so much. Everything will be fine.

- I understand you. I have a similar experience.

- Love you, hug you!

- Well, it’s okay, why are you so sad about it?

- You are a crybaby.

- That’s a bit of a stretch.

- It’s been so long, why make you so sad?

- It serves them right.

- Social media exaggerates it.

- People bring this on themselves.

- Humans are inexorable.

- It serves you right.

- In fact, I doubt that you are really sad?

- Think before you act.

- It’s all your fault.

- I am so tired from your reply.

- What you say is so boring.


| Mood Induction (Means) | t | p | N |
|---|---|---|---|
| Positive Valence-before | 11.84 | <0.001 | 72 |
| Negative Valence-before | 23.60 | <0.001 | 72 |
| Positive Valence-before | 10.99 | <0.001 | 61 |
| Negative Valence-before | 24.27 | <0.001 | 61 |

| Treatment (Means) | t | p | N |
|---|---|---|---|
| Positive Valence-after | 23.42 | <0.001 | 72 |
| Negative Valence-after | 14.91 | <0.001 | 72 |
| Positive Valence-after | 25.28 | <0.001 | 61 |
| Negative Valence-after | 17.22 | <0.001 | 61 |

| Before-After Treatment (Means) | t | p | N |
|---|---|---|---|
| Negative Valence before-after | 10.88 | <0.001 | 72 |
| Positive Valence before-after | −9.10 | <0.001 | 72 |
| Negative Valence before-after | 10.89 | <0.001 | 61 |
| Positive Valence before-after | −10.20 | <0.001 | 61 |

| Robots vs. Writing vs. Social Media | V | F | df1,2 | p | ηp² | N |
|---|---|---|---|---|---|---|
| Interaction Media × Valence before-after | 0.08 | 3.01 | 2,69 | 0.056 | 0.08 | 72 |
| | 0.14 | 4.83 | 2,58 | 0.011 | 0.14 | 61 |
| Main effect Media (RWS) | | 2.02 | 2,69 | 0.141 | 0.06 | 72 |
| | | 1.96 | 2,58 | 0.150 | 0.06 | 61 |
| Main effect Valence before-after | 0.64 | 124.90 | 1,69 | <0.001 | 0.64 | 72 |
| | 0.73 | 152.76 | 1,58 | <0.001 | 0.73 | 61 |
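The effect sizes in the table above can be related to the reported F values through the standard identity ηp² = (F × df1) / (F × df1 + df2), which follows from ηp² = SS_effect / (SS_effect + SS_error). The sketch below applies it to the table's F and df values; the function name is hypothetical. To two decimals it reproduces five of the six reported ηp² values; the N = 61 before-after valence row gives 0.72 against the reported 0.73.

```python
def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """eta_p^2 = (F * df_effect) / (F * df_effect + df_error)."""
    return (f * df_effect) / (f * df_effect + df_error)


# (label, F, df1, df2) taken from the table above.
rows = [
    ("Interaction, N = 72", 3.01, 2, 69),
    ("Interaction, N = 61", 4.83, 2, 58),
    ("Media, N = 72", 2.02, 2, 69),
    ("Media, N = 61", 1.96, 2, 58),
    ("Valence before-after, N = 72", 124.90, 1, 69),
    ("Valence before-after, N = 61", 152.76, 1, 58),
]

for label, f, d1, d2 in rows:
    print(f"{label}: {partial_eta_squared(f, d1, d2):.2f}")
```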

| Robots vs. Writing vs. Social Media | Difference between Means | t | df | p | CI | n |
|---|---|---|---|---|---|---|
| Robot | 2.00 | −7.87 | 20 | <0.001 | [−2.39, −1.03] | 21 |
| Writing | 1.26 | −6.58 | 16 | <0.001 | [−2.31, −0.860] | 17 |
| Social Media | 1.12 | −7.41 | 22 | <0.001 | [−2.15, −0.930] | 23 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Luo, R.L.; Zhang, T.X.Y.; Chen, D.H.-C.; Hoorn, J.F.; Huang, I.S. Social Robots Outdo the Not-So-Social Media for Self-Disclosure: Safe Machines Preferred to Unsafe Humans? Robotics 2022, 11, 92. https://doi.org/10.3390/robotics11050092
