Robotic Nursing Assistant Applications and Human Subject Tests through Patient Sitter and Patient Walker Tasks †

Abstract

1. Introduction

2. Description of Algorithms

2.1. Navigation Algorithm

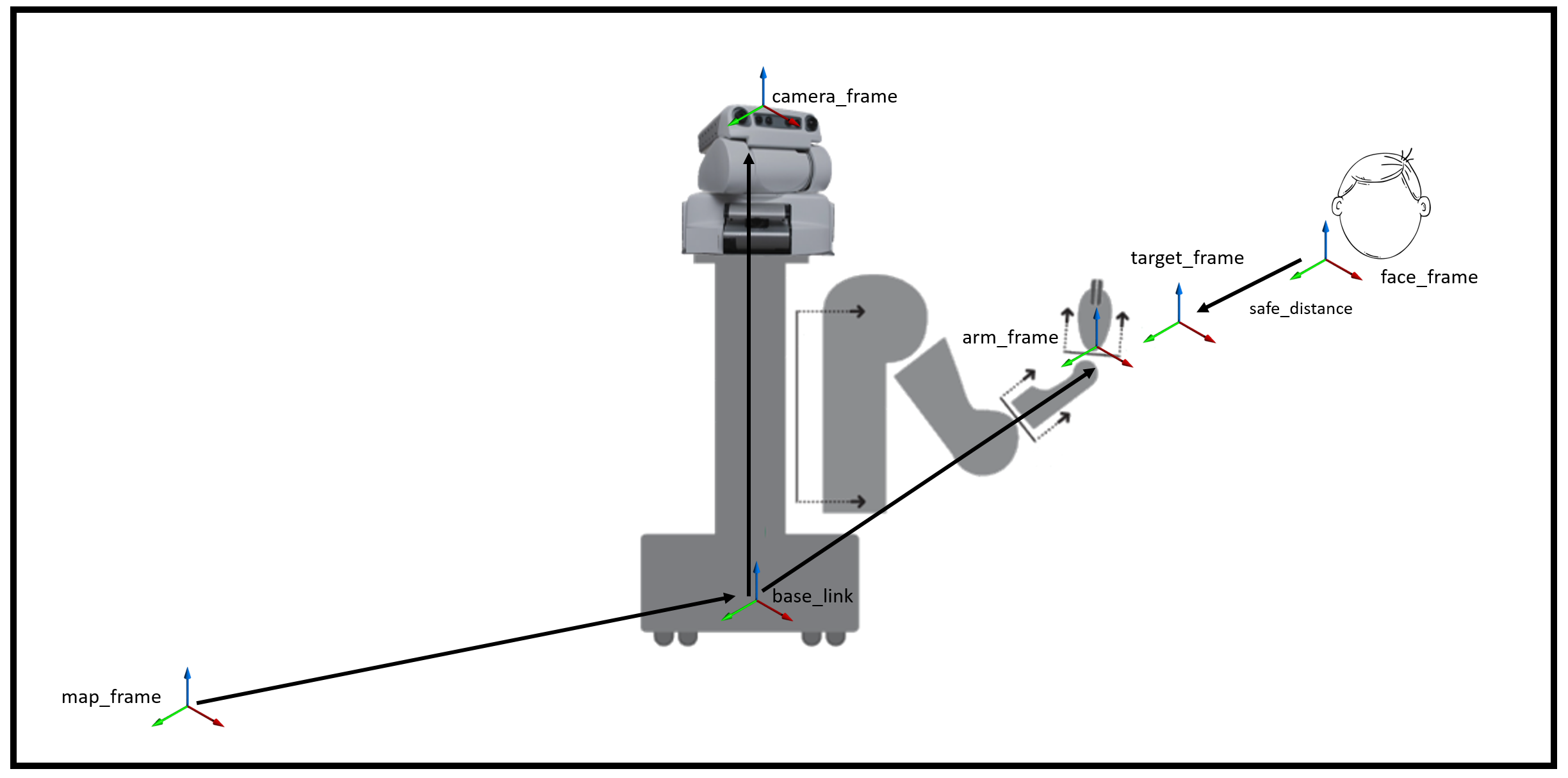

2.2. Object Position Detection Algorithm

2.3. Human Face Detection Algorithm

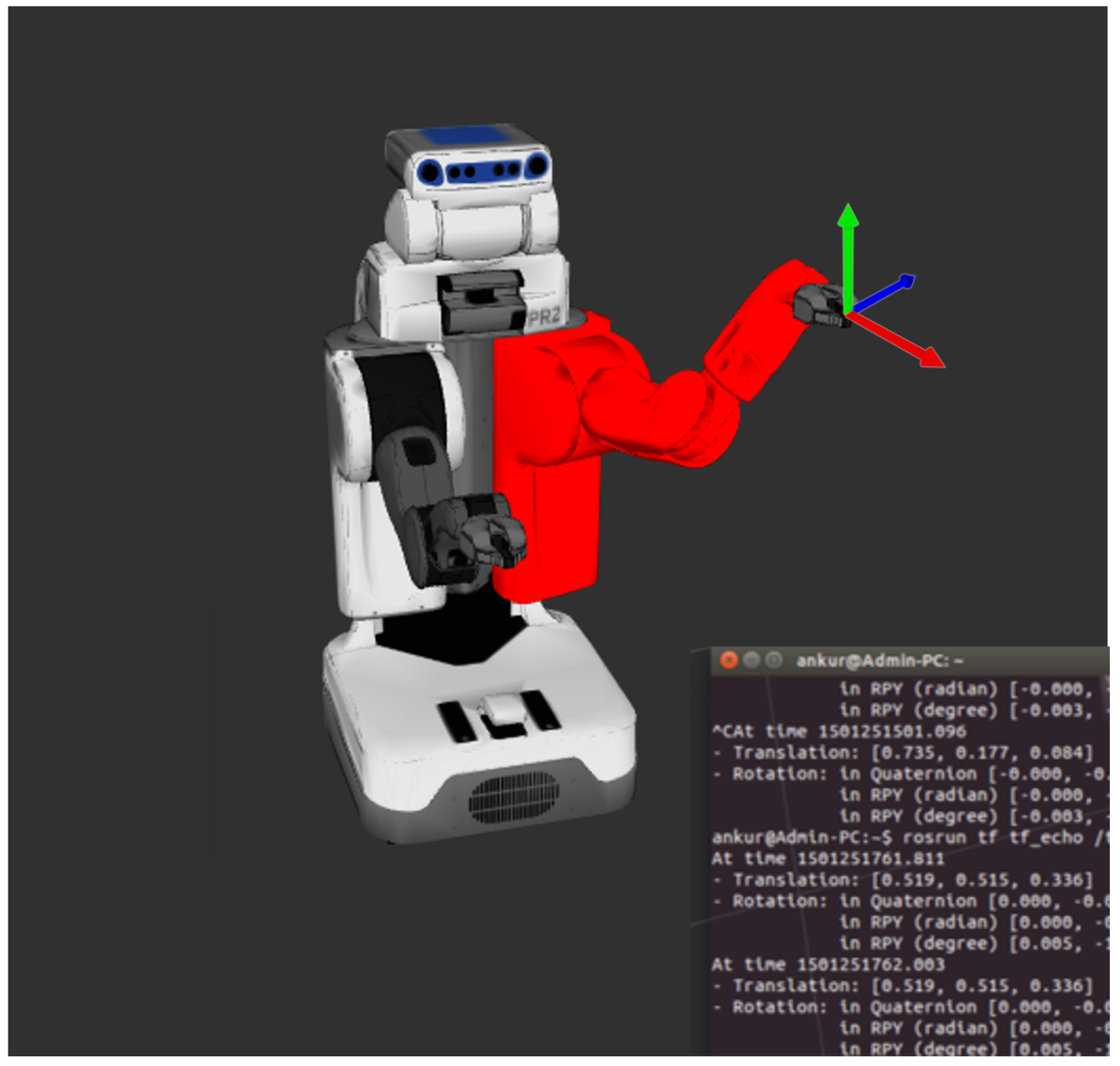

2.4. Motion Planning Algorithm for the Robot Arm

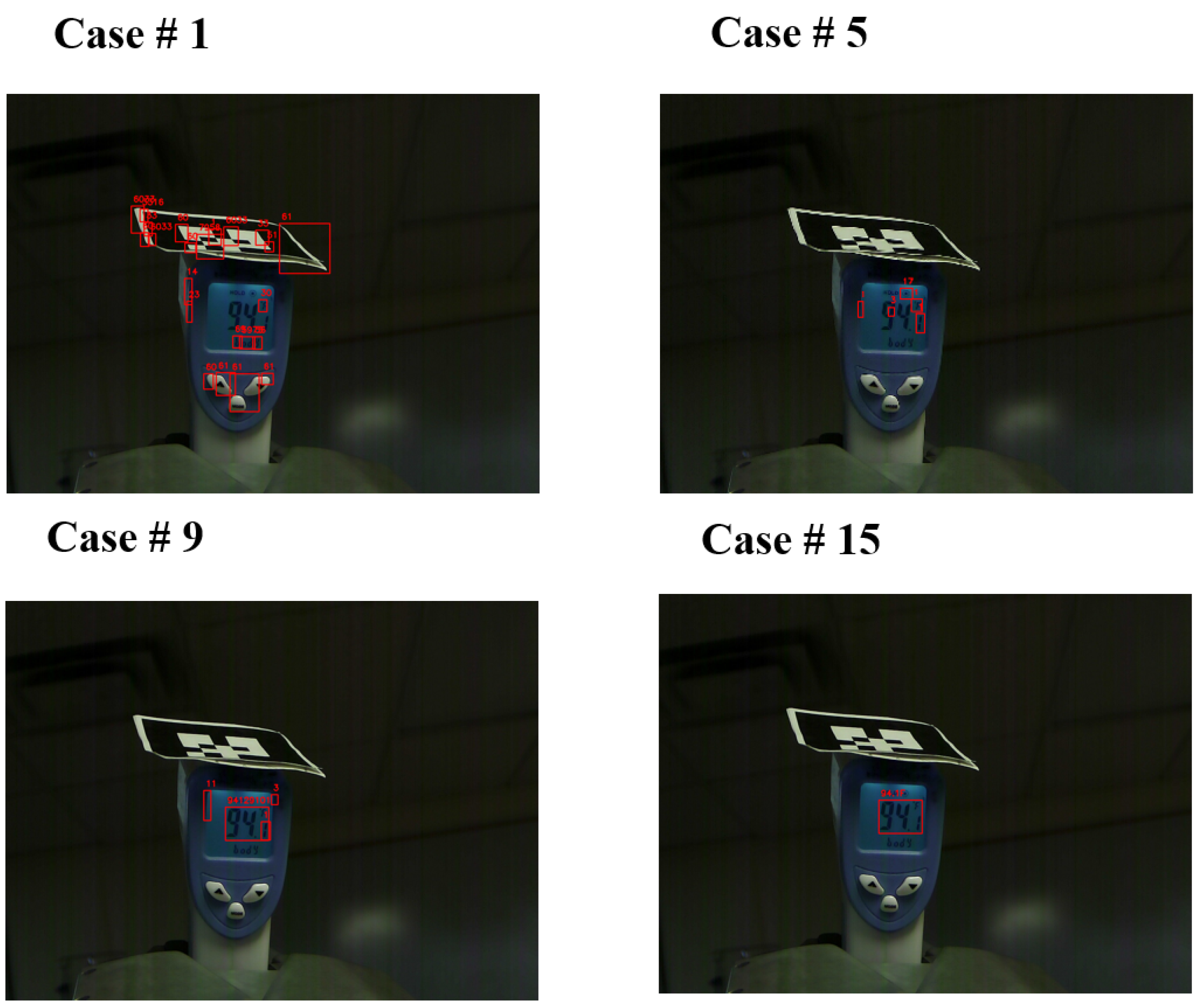

2.5. Thermometer Digit Detection Algorithm using OCR

2.6. Patient Walker Algorithm

3. Hardware and Workspace Description

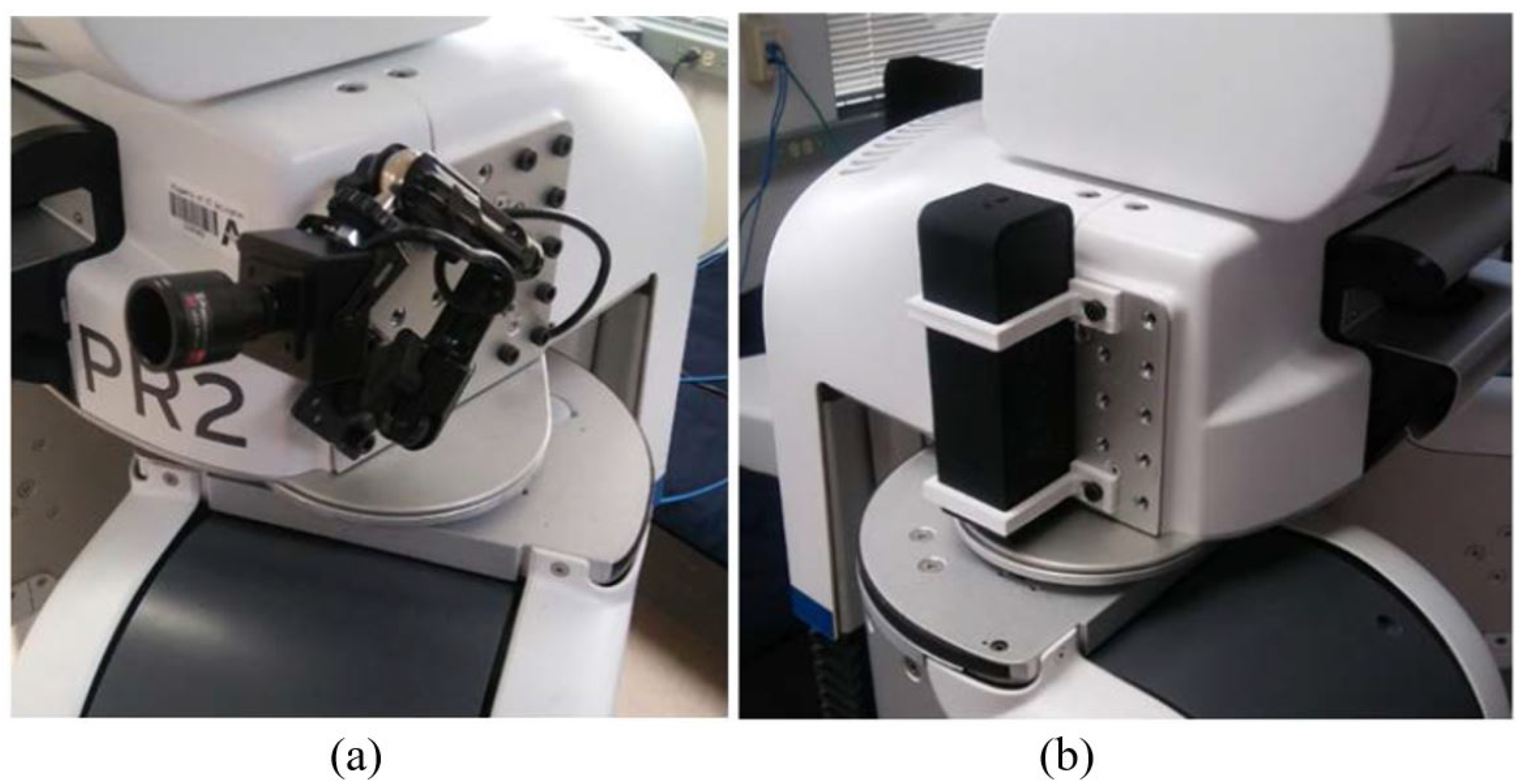

3.1. PR2 Robotic Platform

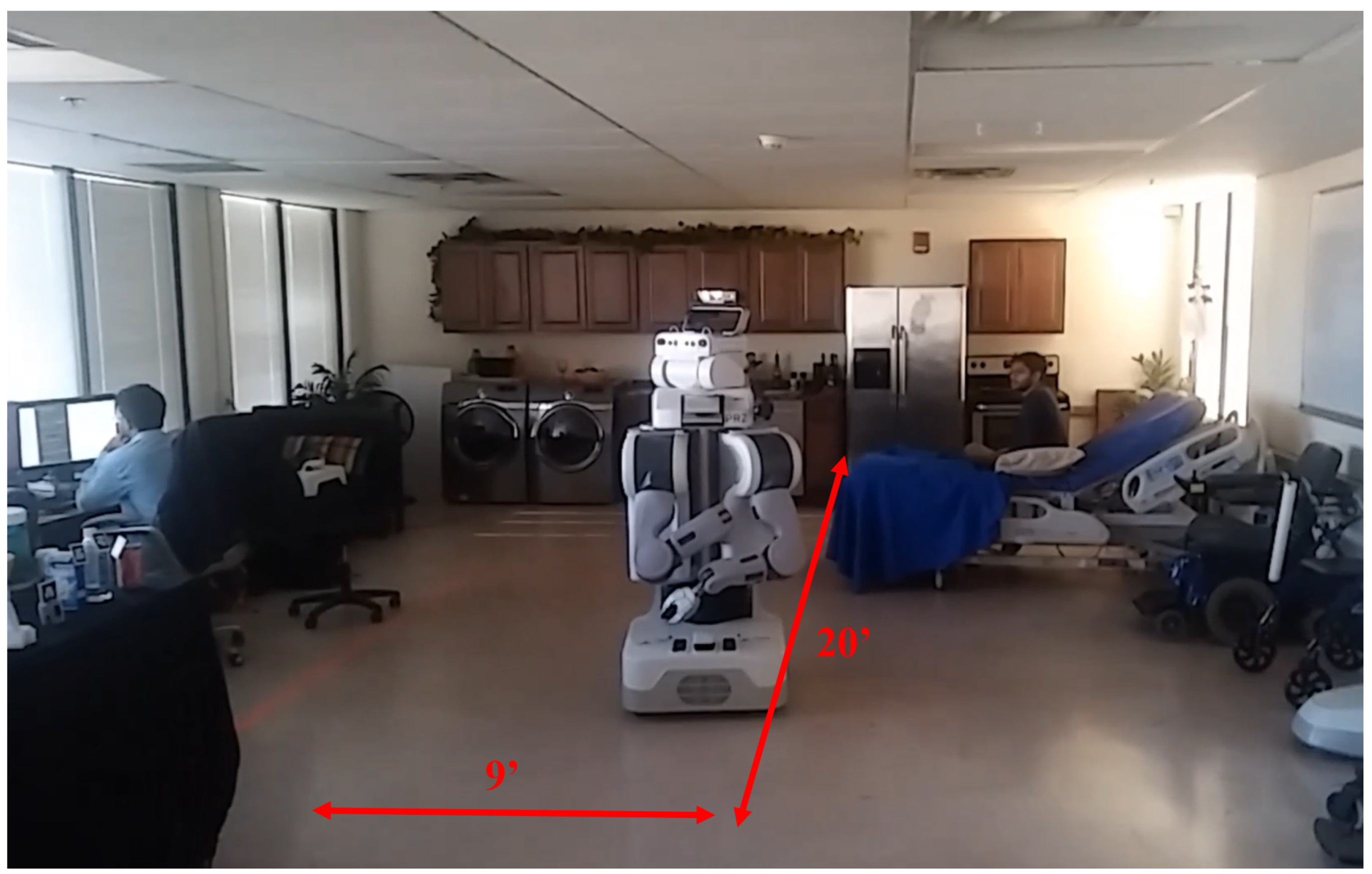

3.2. Experiment Workspace

3.3. Thermometer

3.4. Patient Walker

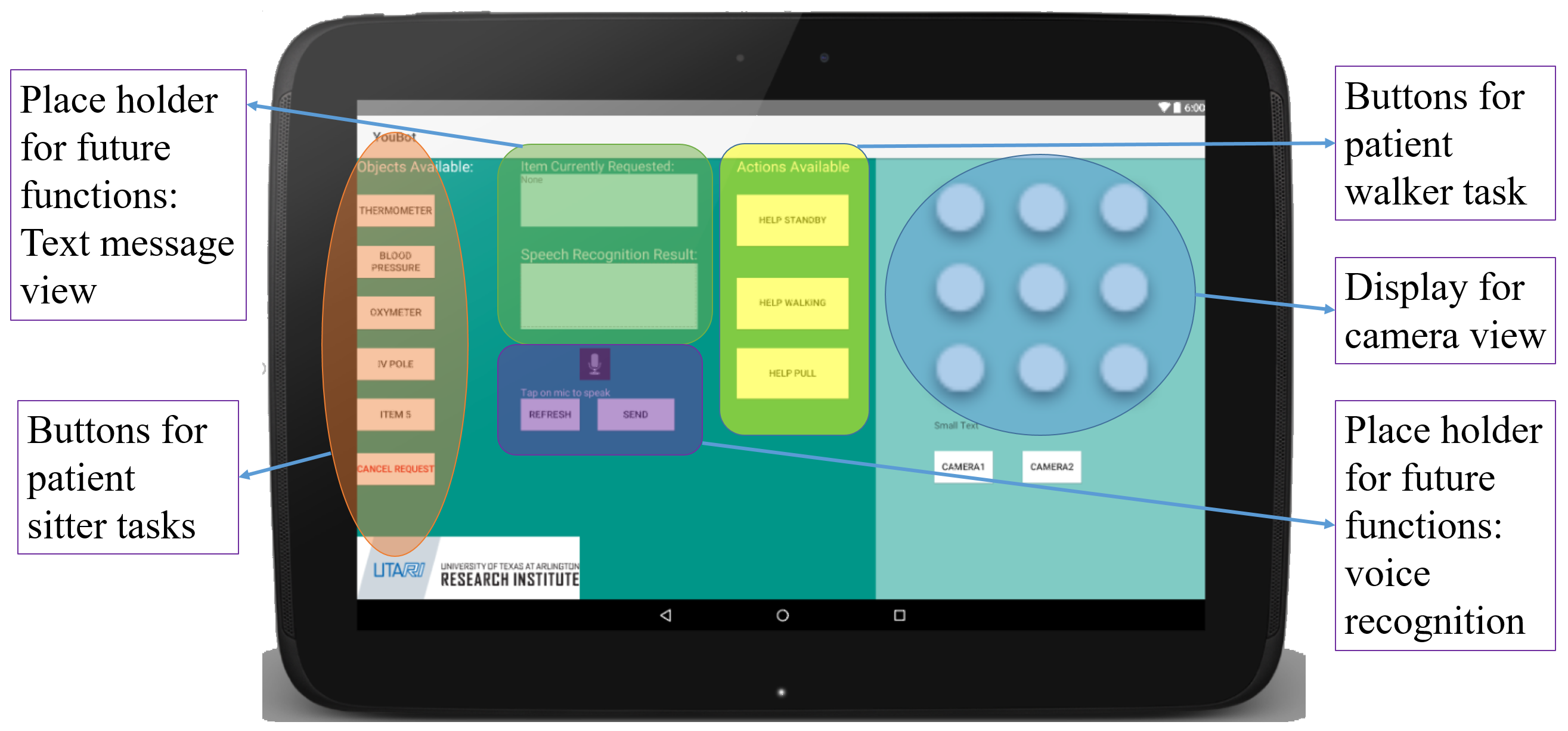

3.5. Tablet and Android App User Interface

4. Parameter Selection and Analysis for Defined Nursing Tasks

4.1. Temperature Measurement Task

4.2. Patient Walker Task

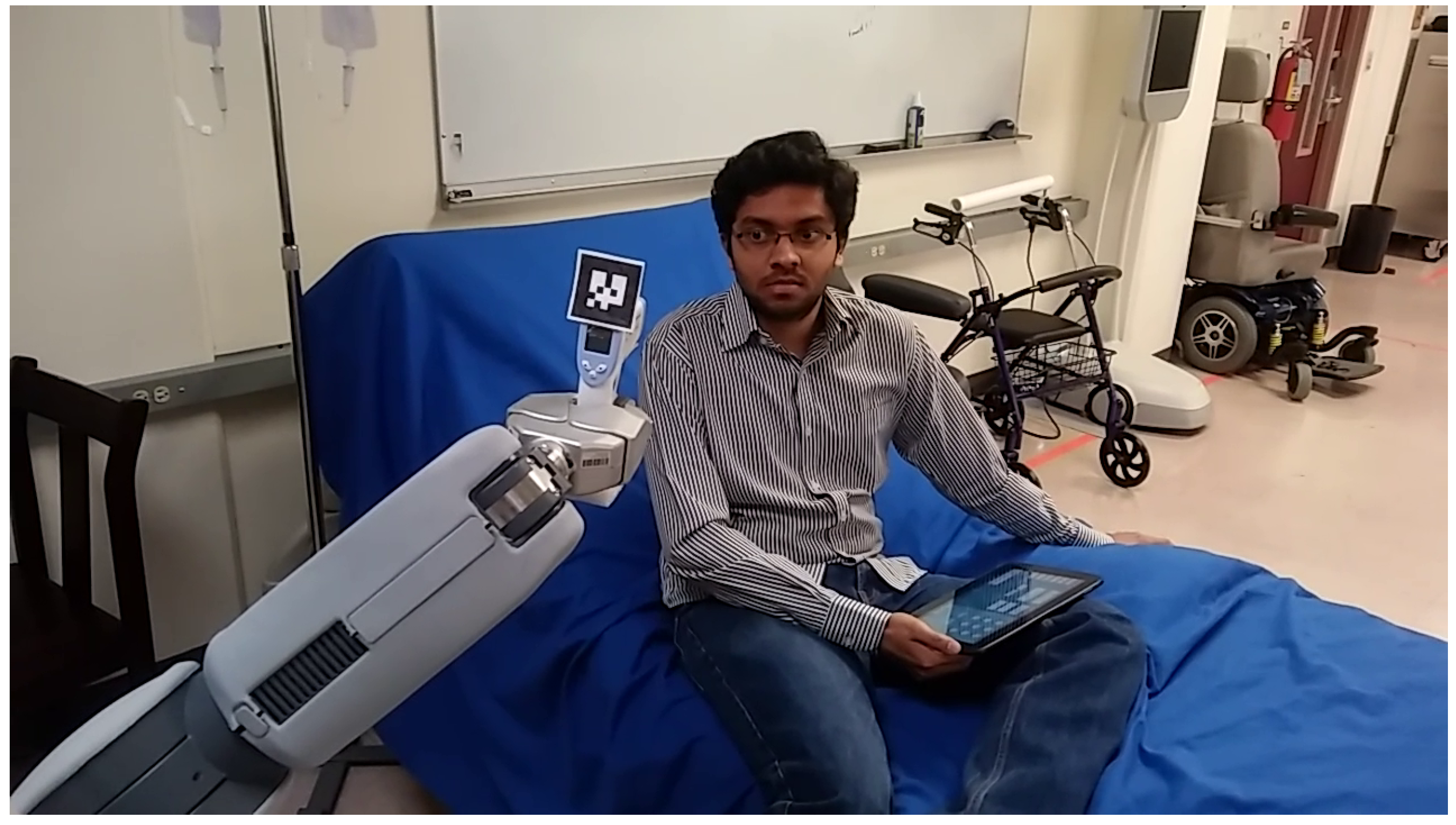

5. Human Subject Tests and Results

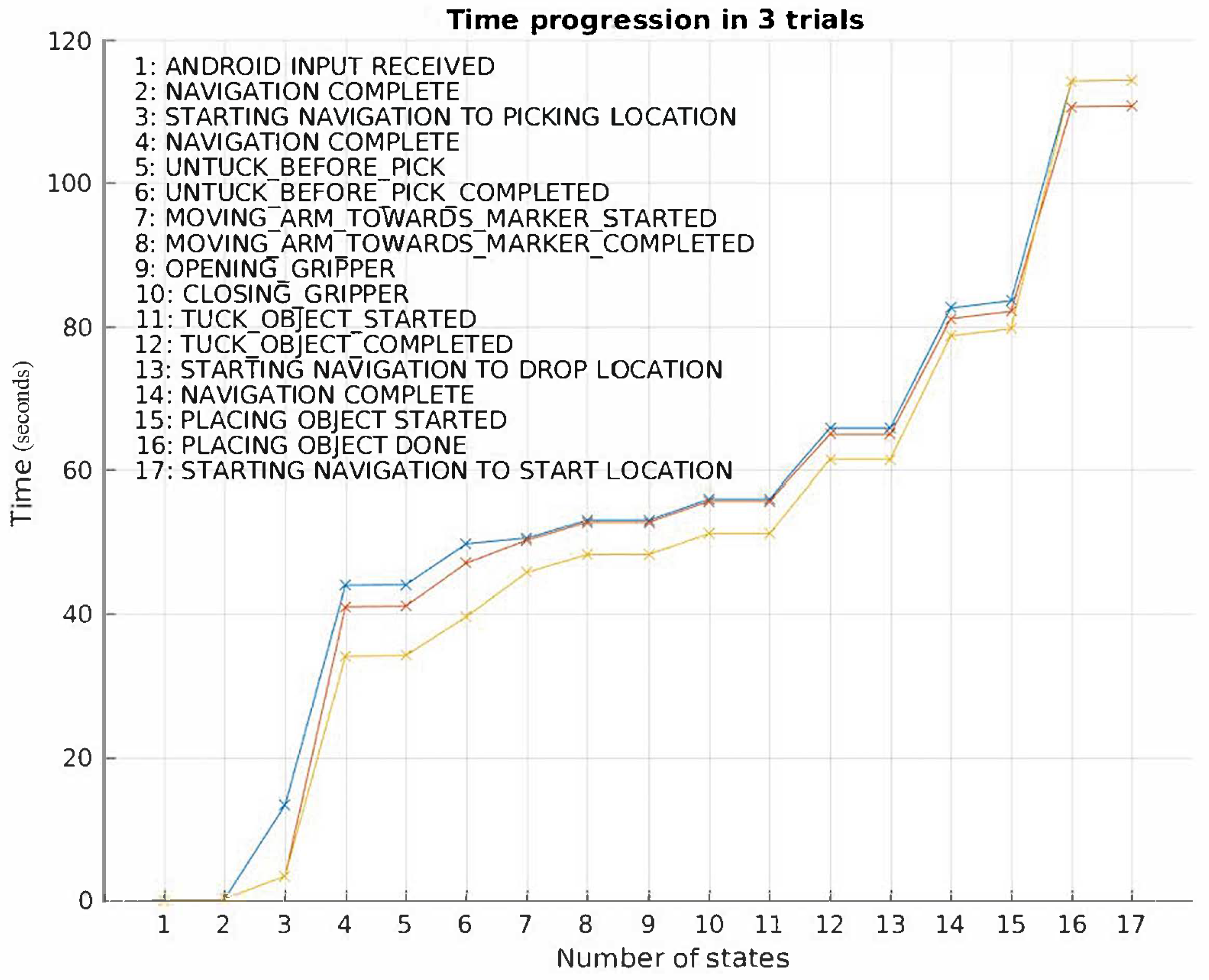

5.1. Object Fetching Task

- A human subject is asked to sit or lie on a hospital bed (pretending to be a patient in a hospital). The subject is asked to use buttons on the tablet to interact with the PR2 during the experiment.

- The PR2 robot starts near the patient, about 6 feet (1.8 m) away.

- The PR2 robot detects a human face and starts tracking the subject’s face position.

- The PR2 robot says “Please interact with the tablet”.

- The subject pushes a button on the tablet to request a fetch task. The objects that can be fetched are a soda bottle, water, and a cereal box. Once the PR2 receives the tablet input, it first moves to its starting pose to start the experiment (step 2 in Figure 11).

- The PR2 robot acknowledges the subject’s command from the tablet and starts moving toward a table located about 20 feet (6.1 m) away from the bed.

- The PR2 robot stops near the table and picks up the requested object on the table (Figure 13).

- The PR2 robot brings the object to the bed, stopping about 3 to 4 feet (0.9–1.2 m) away from the subject.

- The subject is asked to take the object from the robot.

- The robot releases the object (Figure 14).

- This task is repeated a total of three times for each subject.

- The robot’s navigation velocity is limited to a maximum of 0.3 m/s forward and 0.1 m/s backward. The time to fetch an object over a travel distance of 29 feet (8.8 m) is in the range of 120–160 s (average 136.66 s with a standard deviation of 17.98 s).

- Considering that the time for a person to complete the same fetching task is a few seconds, the robot’s speed needs to be improved for better efficiency.

- The fetching tasks are completed with a success rate of 94.12% over 34 trials (11 subjects × 3 trials + 1 additional trial for one subject). This rate is based on the robot returning the correct object directly from the tablet input. The failures (only two occurrences) include both the robot returning the wrong object due to wrong detection (computer vision) and the robot returning with nothing due to a bad grasp.

- The robot was stuck two times during navigation due to moving over the bed sheet. The robot is sensitive to obstacles under the wheels. When the wheels pass over the cloth, they pull it toward the robot, which blocks some of the sensors and impedes path planning.

- In one trial, the subject pushes multiple buttons unknowingly. Multiple item retrieval messages are sent to the robot. Each additional input is seen as a correction or change of command and overwrites the prior item message.

- The robot’s arm hits the table when reaching out for objects in two separate trials. The path planning for arm manipulation is not suited to a reduced distance between the robot and the table.

- Comments are collected from the human subjects. Some examples of those comments are as follows:

  - “The fetching speed is slow.”

  - “Face tracking is a good feature making the robot more human like in interaction, however the constant tracking and searching can cause negative effects. Depending on the requirements of the patient profile the face tracking behavior should vary.”
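The figures reported for the fetching task can be cross-checked with a few lines of arithmetic. The sketch below recomputes the success rate from the stated 34 trials and two failures, and estimates a lower bound on drive time if the full 8.8 m were covered at the 0.3 m/s forward limit. The variable and function names are illustrative, and driving the whole distance forward at the limit is a simplifying assumption, not something the tests measured.

```python
# Figures taken from the fetching-task results above.
TRIALS = 11 * 3 + 1          # 11 subjects x 3 trials + 1 additional trial
FAILURES = 2                 # wrong object returned, or nothing returned
TRAVEL_DISTANCE_M = 8.8      # 29 feet
MAX_FORWARD_SPEED_MPS = 0.3  # programmed forward velocity limit
MEAN_FETCH_TIME_S = 136.66   # reported average fetch time


def success_rate(trials: int, failures: int) -> float:
    """Return the percentage of successful trials."""
    return 100.0 * (trials - failures) / trials


def min_drive_time(distance_m: float, speed_mps: float) -> float:
    """Lower bound on drive time, assuming constant travel at the speed limit."""
    return distance_m / speed_mps


rate = success_rate(TRIALS, FAILURES)
drive_s = min_drive_time(TRAVEL_DISTANCE_M, MAX_FORWARD_SPEED_MPS)
non_driving_s = MEAN_FETCH_TIME_S - drive_s

print(f"success rate: {rate:.2f}%")
print(f"minimum drive time: {drive_s:.1f} s")
print(f"time not explained by top-speed driving: {non_driving_s:.1f} s")
```

Under that assumption, top-speed driving accounts for less than 30 s of the roughly 137 s average, so much of the fetch time would come from other phases such as turning, backward motion at 0.1 m/s, detection, and grasping.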

5.2. Temperature Measurement Task

- Two times, the patient lay quite low on the bed, and it took longer for the PR2 to find the subject’s face.

- Three times, subjects pushed the button twice.

- One time, the PR2 hit the table when lifting the arm during the thermometer pick-up phase.

- One subject removed glasses while the PR2 pointed the thermometer.

- Some examples of human subjects’ comments are:

  - “It looks like the robot from the Jetsons.”

  - “The speed of the robot is too slow and the tablet interface can be improved.”

  - “Can the supplies be put on the robot?”

5.3. Patient Walker Task

- Patient cannot be sure when to press the button (Test 1).

- PR2 has a hard time navigating to the walker (Test 1).

- PR2 has a hard time finding the walker (Test 1).

- One of the grippers misses the walker handle (Test 1).

- Patient says turning is tricky (Test 1).

- Patient forgets to turn off the walker mode (Test 4).

- Initialization failed, and the experiment was started over (Test 5).

- During navigation to the walker, the PR2 failed. The experiment is restarted (Test 5).

- During navigation to the walker, the PR2 failed again. Experiment is restarted (Test 5).

- Patient says that rotation is hard and tricky (Test 6).

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| | | Cntr Limits | | | Struct Size | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Case | Th | AR | Width | Height | Rect | Sq | Fill | Crop | DR | #Cntr | AS |
| Case 1 | No | 0,1.5 | 0,150 | 10,200 | 5,5 | 5,5 | 1,1 | No | 33.33% | 29 | 21345722.64 |
| Case 2 | No | 0,1.5 | 0,150 | 10,200 | 5,5 | 5,5 | 1,1 | Yes | 33.33% | 5 | 20020376.20 |
| Case 3 | No | 0,1.5 | 0,150 | 10,200 | 5,5 | 5,5 | 25,25 | Yes | 0.00% | 5 | 19322476.00 |
| Case 4 | No | 0,1.5 | 0,150 | 10,200 | 5,5 | 5,5 | 50,50 | Yes | 33.33% | 5 | 23890805.00 |
| Case 5 | No | 0,1.5 | 0,150 | 10,200 | 5,5 | 5,5 | 75,75 | Yes | 33.33% | 5 | 25687678.40 |
| Case 6 | No | 0,1.5 | 0,150 | 10,200 | 5,5 | 5,5 | 100,100 | Yes | 33.33% | 5 | 24408772.20 |
| Case 7 | No | 0,1.5 | 0,150 | 10,200 | 10,10 | 5,5 | 75,75 | Yes | 100.00% | 8 | 27724953.75 |
| Case 8 | No | 0,1.5 | 0,150 | 10,200 | 10,10 | 10,10 | 75,75 | Yes | 100.00% | 8 | 27822773.50 |
| Case 9 | No | 0,1.5 | 0,150 | 10,200 | 15,15 | 10,10 | 75,75 | Yes | 100.00% | 9 | 29880588.00 |
| Case 10 | No | 0,1.5 | 10,150 | 20,200 | 15,15 | 10,10 | 75,75 | Yes | 100.00% | 7 | 29329435.71 |
| Case 11 | No | 0,1.5 | 20,150 | 30,200 | 15,15 | 10,10 | 75,75 | Yes | 100.00% | 6 | 27701941.00 |
| Case 12 | No | 0,1.5 | 30,150 | 40,200 | 15,15 | 10,10 | 75,75 | Yes | 100.00% | 6 | 27701941.00 |
| Case 13 | No | 0.5,2 | 30,150 | 40,200 | 15,15 | 10,10 | 75,75 | Yes | 100.00% | 6 | 27701941.00 |
| Case 14 | No | 0.5,3 | 30,150 | 40,200 | 15,15 | 10,10 | 75,75 | Yes | 100.00% | 6 | 27701941.00 |
| Case 15 | Yes | 0.5,3 | 30,150 | 40,200 | 15,15 | 10,10 | 75,75 | Yes | 100.00% | 3 | 37202478.67 |
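The AR, Width, and Height columns read as minimum and maximum limits applied to candidate contours before digit recognition. The following is a minimal sketch of such a filter, not the authors' implementation: the `Box` type and `filter_boxes` function are hypothetical names, the example boxes are made up, and taking AR as width divided by height is an assumption, since the table does not define it. The limits used are those listed for Case 15.

```python
from typing import List, NamedTuple, Tuple


class Box(NamedTuple):
    """Axis-aligned bounding box of one contour, in pixels (hypothetical type)."""
    x: int
    y: int
    w: int
    h: int


def filter_boxes(
    boxes: List[Box],
    ar_limits: Tuple[float, float],
    width_limits: Tuple[float, float],
    height_limits: Tuple[float, float],
) -> List[Box]:
    """Keep boxes whose aspect ratio, width, and height lie inside the limits."""
    kept = []
    for box in boxes:
        if box.w <= 0 or box.h <= 0:
            continue  # skip degenerate boxes to avoid dividing by zero
        aspect_ratio = box.w / box.h  # assumption: AR means width over height
        if not ar_limits[0] <= aspect_ratio <= ar_limits[1]:
            continue
        if not width_limits[0] <= box.w <= width_limits[1]:
            continue
        if not height_limits[0] <= box.h <= height_limits[1]:
            continue
        kept.append(box)
    return kept


# Made-up candidate boxes, for illustration only.
candidates = [
    Box(10, 10, 50, 80),    # plausible digit
    Box(70, 10, 48, 82),    # plausible digit
    Box(0, 0, 5, 5),        # too small
    Box(130, 10, 160, 60),  # too wide
    Box(300, 10, 35, 150),  # too narrow for its height
]

# Limits as listed for Case 15: AR 0.5,3; Width 30,150; Height 40,200.
digits = filter_boxes(candidates, (0.5, 3.0), (30, 150), (40, 200))
print(len(digits), "of", len(candidates), "boxes kept")
```

In an OpenCV pipeline the boxes would typically come from `cv2.boundingRect` applied to each contour returned by `cv2.findContours`; plain tuples are used here so the sketch runs without any image data.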

| | Input | | | | | Output | | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 0.35 | 0.45 | 0.30 | 0.30 | 2 | 30.17 | 51.56 | 10.40 | 11.33 | 13.70 | 27.29 | 1.20 | 5.59 | 0.35 | 0.14 | 0.02 |
| 2 | 0.30 | 0.45 | 0.30 | 0.30 | 2 | 23.88 | 42.20 | 12.27 | 16.18 | 16.04 | 25.79 | 0.98 | 4.14 | 0.30 | 0.14 | 0.01 |
| 3 | 0.25 | 0.60 | 0.30 | 0.30 | 2 | 23.17 | 45.23 | 10.55 | 17.25 | 13.77 | 27.63 | 0.83 | 4.42 | 0.24 | 0.13 | 0.01 |
| 4 | 0.25 | 0.52 | 0.30 | 0.30 | 2 | 21.72 | 41.99 | 11.94 | 20.01 | 15.20 | 26.83 | 0.76 | 6.66 | 0.24 | 0.13 | 0.01 |
| 5 | 0.25 | 0.45 | 0.60 | 0.30 | 2 | 28.16 | 42.50 | 10.89 | 16.31 | 13.77 | 27.57 | 0.60 | 4.01 | 0.24 | 0.17 | 0.01 |
| 6 | 0.25 | 0.45 | 0.45 | 0.30 | 2 | 23.58 | 38.46 | 11.49 | 13.90 | 15.86 | 24.99 | 0.98 | 3.09 | 0.24 | 0.15 | 0.01 |
| 7 | 0.25 | 0.45 | 0.30 | 0.60 | 2 | 22.77 | 45.35 | 10.24 | 16.26 | 13.37 | 27.81 | 0.83 | 4.50 | 0.24 | 0.13 | 0.01 |
| 8 | 0.25 | 0.45 | 0.30 | 0.42 | 2 | 22.83 | 45.60 | 10.25 | 17.69 | 14.53 | 26.83 | 1.17 | 8.31 | 0.24 | 0.13 | 0.01 |
| 9 | 0.25 | 0.45 | 0.30 | 0.30 | 2.50 | 22.95 | 44.36 | 10.58 | 18.96 | 13.37 | 28.12 | 0.83 | 5 | 0.24 | 0.13 | 0.01 |
| 10 | 0.25 | 0.45 | 0.30 | 0.30 | 2.25 | 22.78 | 41.18 | 11.87 | 17.32 | 15.76 | 25.07 | 1.10 | 3.94 | 0.24 | 0.13 | 0.01 |
| 11 | 0.25 | 0.45 | 0.30 | 0.30 | 2 | 23.18 | 43.94 | 9.92 | 14.74 | 13.75 | 27.89 | 1.19 | 5.42 | 0.24 | 0.13 | 0.01 |

| | Actual Temp. (°F) | System Output | Detection % | Correct Digit % | # of False Positives |
|---|---|---|---|---|---|
| Subject 1 | 76.5 | 215.151 | 33% | 0% | 5 |
| Subject 2 | 73.2 | 2 | 33% | 0% | 0 |
| Subject 3 | 72 | 72.1 | 66% | 66% | 1 |
| Subject 4 | 79.2 | 79.2 | 100% | 100% | 0 |
| Subject 5 | 77.7 | 77.7 | 100% | 100% | 0 |
| Subject 6 | 76.3 | 43.7631 | 100% | 0% | 3 |
| Subject 7 | 77.9 | 77.191 | 100% | 66% | 2 |
| Subject 8 | 76.3 | 7 | 33% | 0% | 0 |
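The per-subject rows above can be summarized with a short script. The sketch below copies the table values, counts exact matches between the actual temperature and the system output, and averages the percentage columns. It also applies a hypothetical format check (two digits, a decimal point, one digit) to show how malformed readings such as `215.151` might be flagged; that check and the `is_well_formed` name are assumptions for illustration and are not described in the source.

```python
# Rows copied from the temperature-measurement results table:
# (subject, actual temp, system output, detection %, correct digit %, false positives)
RESULTS = [
    ("Subject 1", "76.5", "215.151", 33, 0, 5),
    ("Subject 2", "73.2", "2", 33, 0, 0),
    ("Subject 3", "72", "72.1", 66, 66, 1),
    ("Subject 4", "79.2", "79.2", 100, 100, 0),
    ("Subject 5", "77.7", "77.7", 100, 100, 0),
    ("Subject 6", "76.3", "43.7631", 100, 0, 3),
    ("Subject 7", "77.9", "77.191", 100, 66, 2),
    ("Subject 8", "76.3", "7", 33, 0, 0),
]


def is_well_formed(reading: str) -> bool:
    """Hypothetical check: reading looks like 'dd.d' (assumed display format)."""
    whole, dot, frac = reading.partition(".")
    return (
        dot == "."
        and len(whole) == 2
        and len(frac) == 1
        and (whole + frac).isdigit()
    )


exact_matches = [row[0] for row in RESULTS if row[1] == row[2]]
well_formed = [row[0] for row in RESULTS if is_well_formed(row[2])]
mean_detection = sum(row[3] for row in RESULTS) / len(RESULTS)
mean_correct = sum(row[4] for row in RESULTS) / len(RESULTS)
total_false_positives = sum(row[5] for row in RESULTS)

print("exact matches:", exact_matches)
print("well-formed outputs:", well_formed)
print(f"mean detection: {mean_detection:.1f}%")
print(f"mean correct digit: {mean_correct:.1f}%")
print("total false positives:", total_false_positives)
```

A format check of this kind would reject five of the six incorrect outputs in the table, but it would not catch a well-formed wrong reading such as Subject 3's `72.1`.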


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lundberg, C.L.; Sevil, H.E.; Behan, D.; Popa, D.O. Robotic Nursing Assistant Applications and Human Subject Tests through Patient Sitter and Patient Walker Tasks. Robotics 2022, 11, 63. https://doi.org/10.3390/robotics11030063
