Nut Unfastening by Robotic Surface Exploration

Abstract

1. Introduction

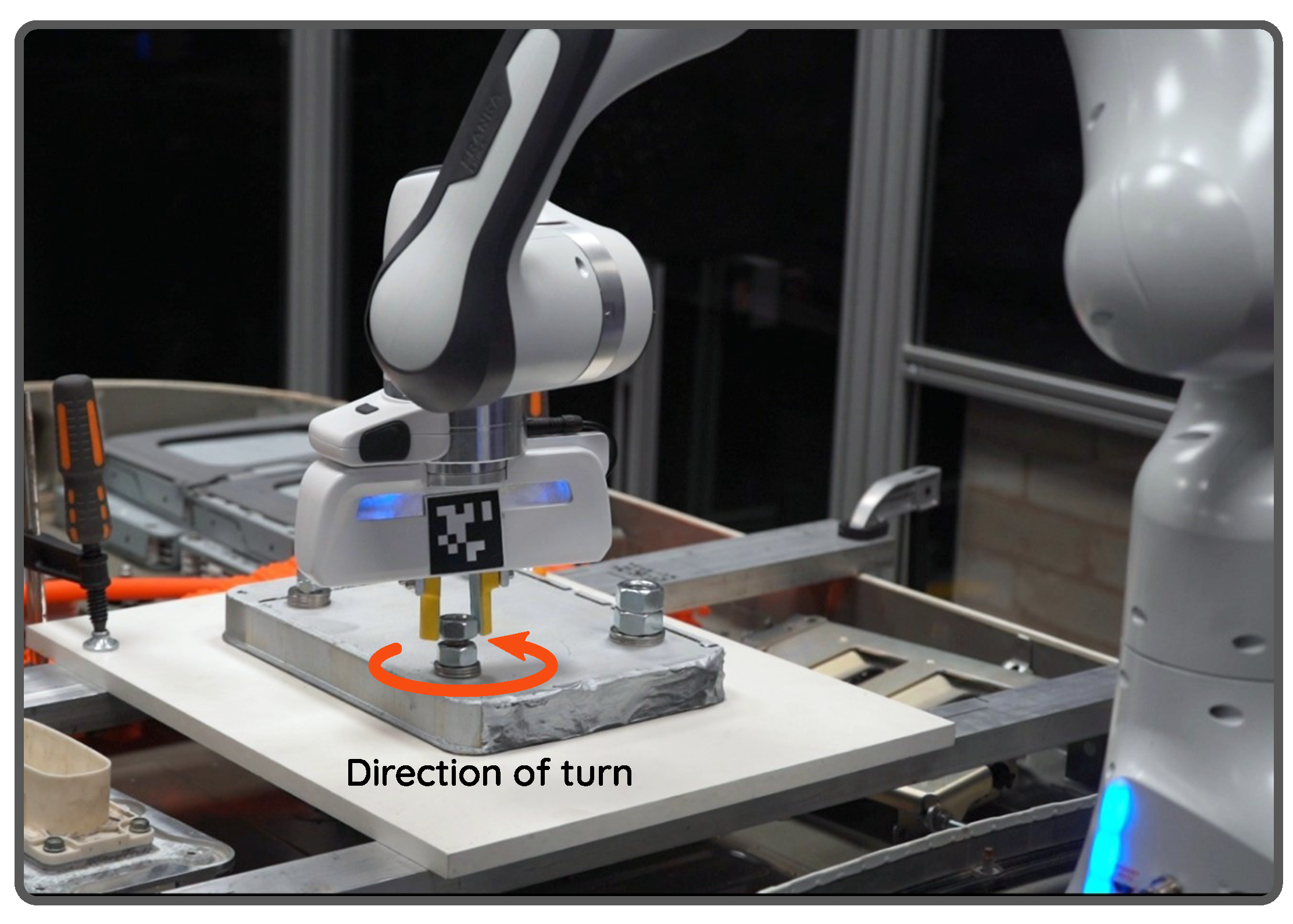

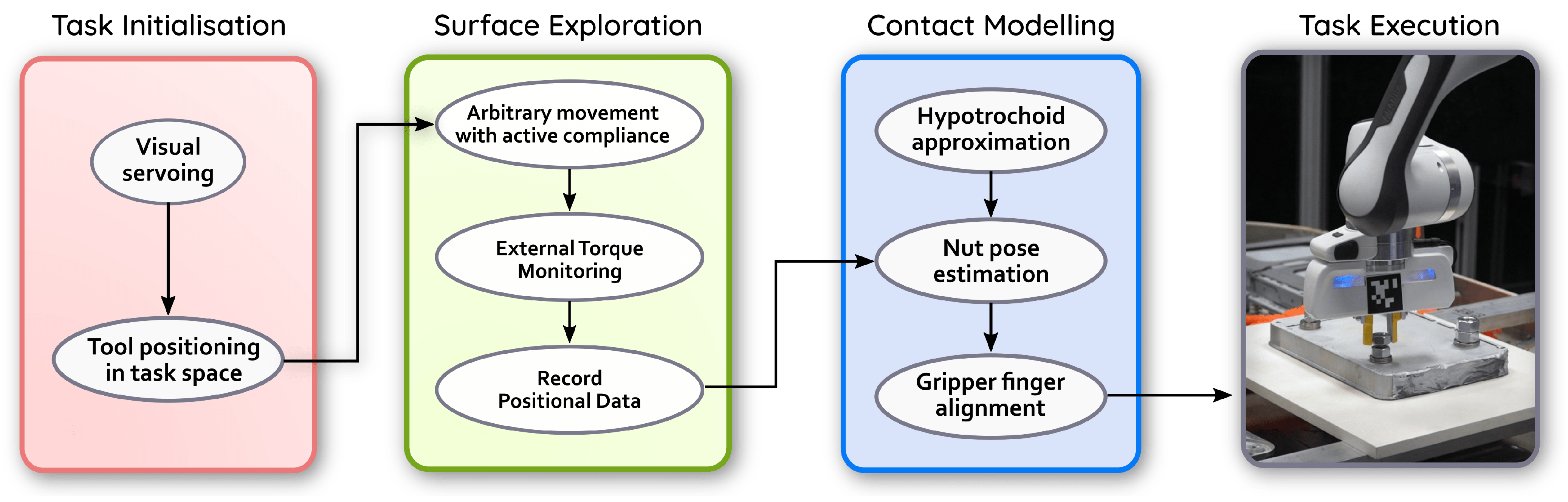

- We have developed a decentralised and generalised framework for nut unfastening. Unlike conventional approaches, which rely on motorised screwdrivers, our approach uses robot fingers, which makes it agnostic to the size of the nut. To the best of our knowledge, no previous study has performed this task using robot fingers.

- We have developed a surface exploration control strategy that combines torque monitoring with active compliance to guide the robot fingers as they explore the task space. The positional data collected during this step are used by our contact modelling scheme to identify the location of the nut. For contact modelling, a scaling iterative closest point (SICP) technique, together with a hypotrochoid approximation, is integrated with the exploration scheme. This allows us to identify suitable faces on which to position the robot fingers accurately, so that they can grasp and rotate the nut sequentially to accomplish unfastening.
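To make the contact modelling idea in the second contribution more concrete, the following is a minimal sketch of a scaling ICP loop that registers a unit hexagon template to 2D contact points. It is an illustration under stated assumptions, not the authors' implementation: the function names (`hexagon_template`, `similarity_fit`, `scaling_icp`), the 2D simplification, the sampling densities, and the closed-form similarity update (an Umeyama-style least-squares fit, used here in place of whatever scale-bounded update the original SICP formulation applies) are all choices made for this example. The hypotrochoid approximation is not covered.

```python
import numpy as np

def hexagon_template(n_per_side=40):
    """Sample points on the perimeter of a regular hexagon with circumradius 1."""
    angles = np.arange(6) * np.pi / 3
    corners = np.column_stack([np.cos(angles), np.sin(angles)])
    # endpoint=False avoids duplicating each corner on two adjacent sides
    frac = np.linspace(0.0, 1.0, n_per_side, endpoint=False)[:, None]
    sides = [corners[i] + frac * (corners[(i + 1) % 6] - corners[i]) for i in range(6)]
    return np.vstack(sides)

def similarity_fit(src, dst):
    """Least-squares scale s, rotation R, translation t so that dst ~ s * R @ src + t."""
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    xs, xd = src - mu_s, dst - mu_d
    cov = xd.T @ xs / len(src)                 # cross-covariance of dst and src
    U, S, Vt = np.linalg.svd(cov)
    D = np.eye(2)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        D[1, 1] = -1.0                         # guard against a reflection
    R = U @ D @ Vt
    var_s = (xs ** 2).sum() / len(src)
    s = (S * np.diag(D)).sum() / var_s
    t = mu_d - s * R @ mu_s
    return s, R, t

def scaling_icp(template, observed, iters=100):
    """Alternate nearest-neighbour matching and similarity fitting (hypothetical helper)."""
    # Initial guess: match centroids and RMS radii, no rotation.
    rms = lambda p: np.sqrt(((p - p.mean(axis=0)) ** 2).sum(axis=1).mean())
    s, R = rms(observed) / rms(template), np.eye(2)
    t = observed.mean(axis=0) - s * R @ template.mean(axis=0)
    for _ in range(iters):
        moved = s * template @ R.T + t         # template in the observation frame
        d2 = ((observed[:, None, :] - moved[None, :, :]) ** 2).sum(axis=-1)
        idx = d2.argmin(axis=1)                # closest template point per contact
        s, R, t = similarity_fit(template[idx], observed)
    return s, R, t

if __name__ == "__main__":
    # Noise-free synthetic contacts: circumradius 13.5 mm, rotated 10 degrees,
    # centred at (40, -25) mm. These values are illustrative only.
    a = np.deg2rad(10.0)
    R_true = np.array([[np.cos(a), -np.sin(a)], [np.sin(a), np.cos(a)]])
    contacts = 13.5 * hexagon_template(12) @ R_true.T + np.array([40.0, -25.0])
    s, R, t = scaling_icp(hexagon_template(), contacts)
    print("estimated circumradius (mm):", s)
    print("estimated centre (mm):", t)
```

Because the template has unit circumradius and is centred on the origin, the recovered scale can be read as the nut's circumradius and the translation as its centre. The rotation is only determined up to the 60° symmetry of the hexagon, which is sufficient for choosing faces to grip.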

2. Related Work

3. Materials and Methods

3.1. Guiding the Robot into Task-Space

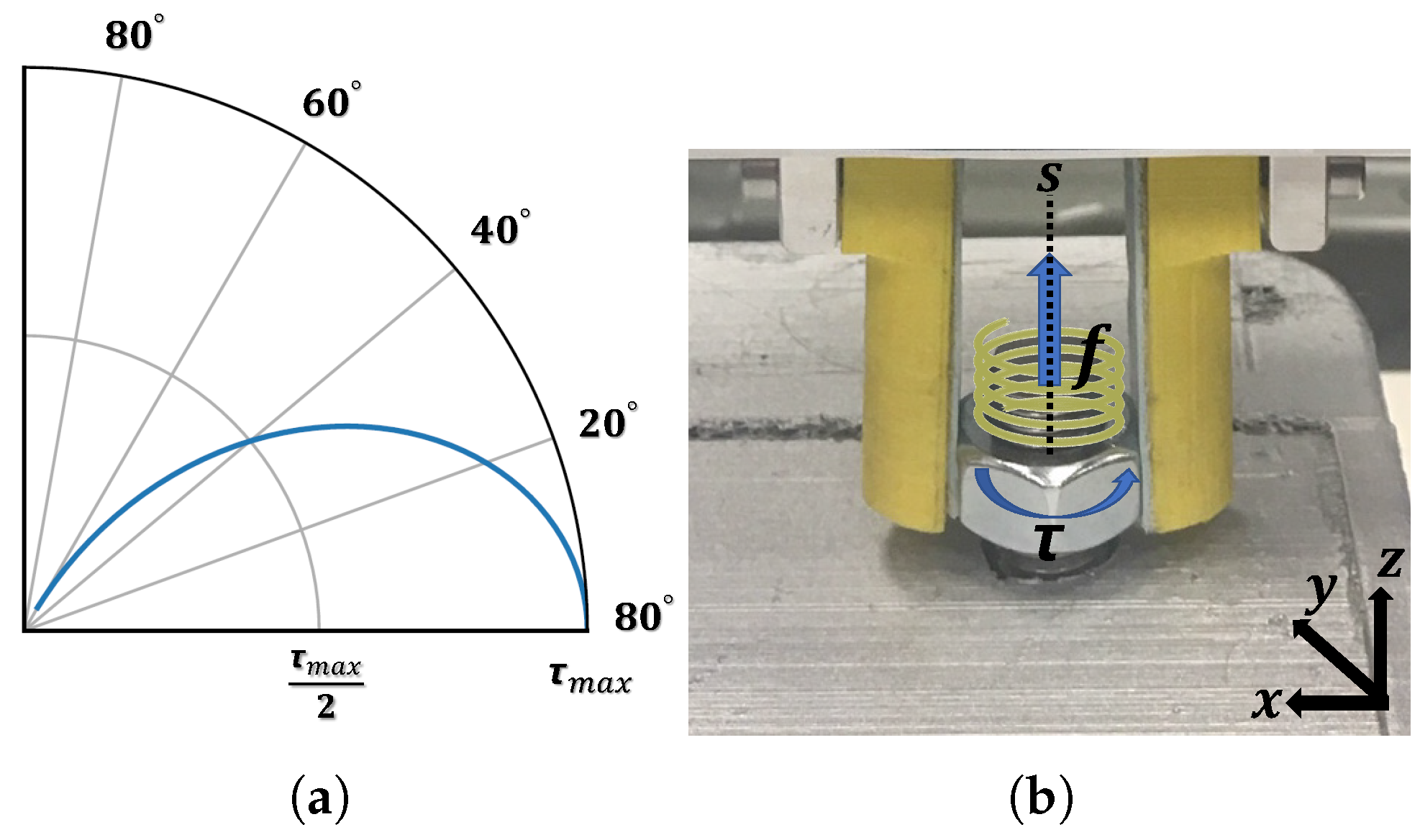

3.2. Surface Exploration

Algorithm 1. Surface exploration and data collection.

3.3. Contact Modelling

3.4. Nut Unfastening

Algorithm 2. Gripping and nut unfastening.

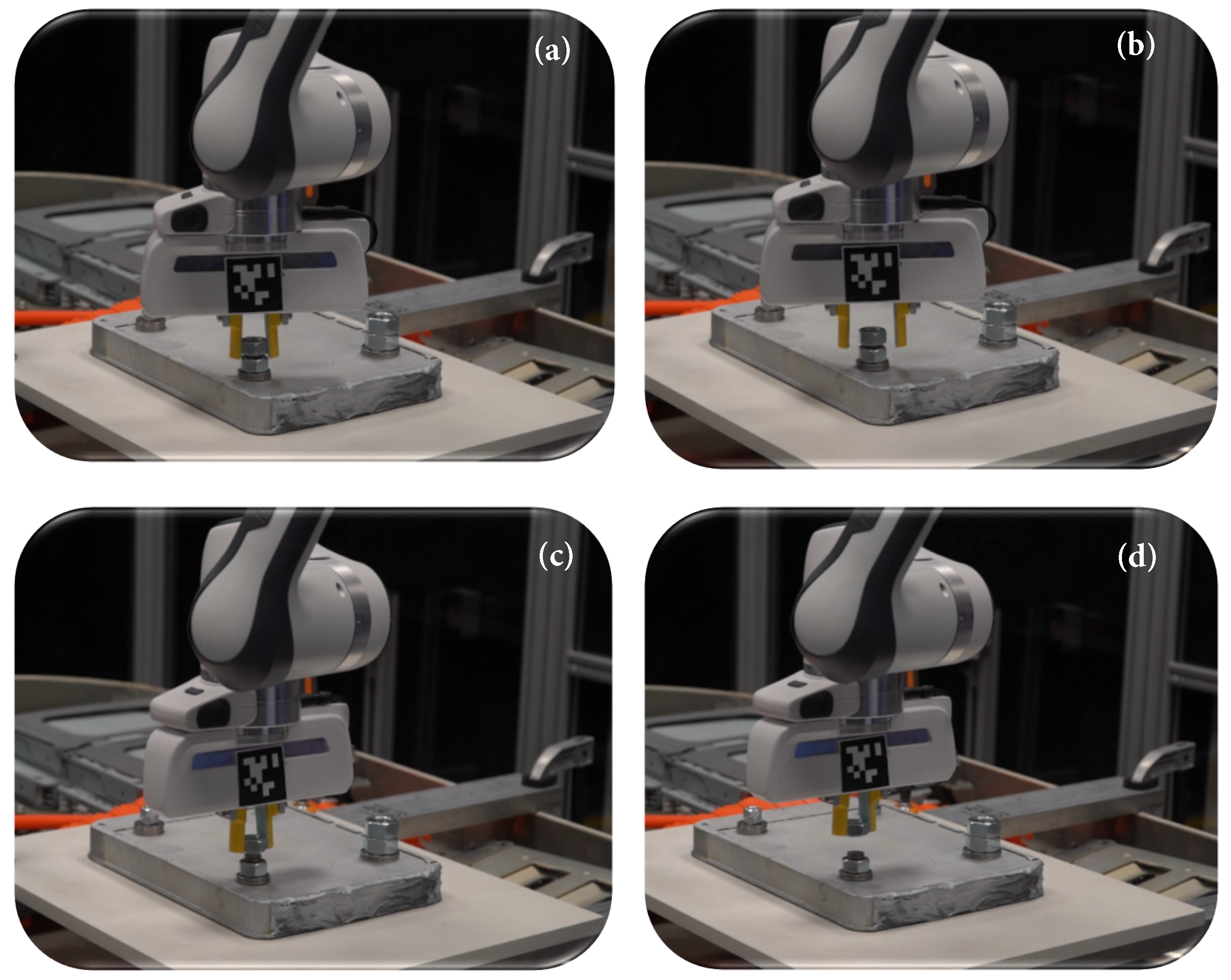

4. Experimental Evaluations

4.1. Experimental Setup

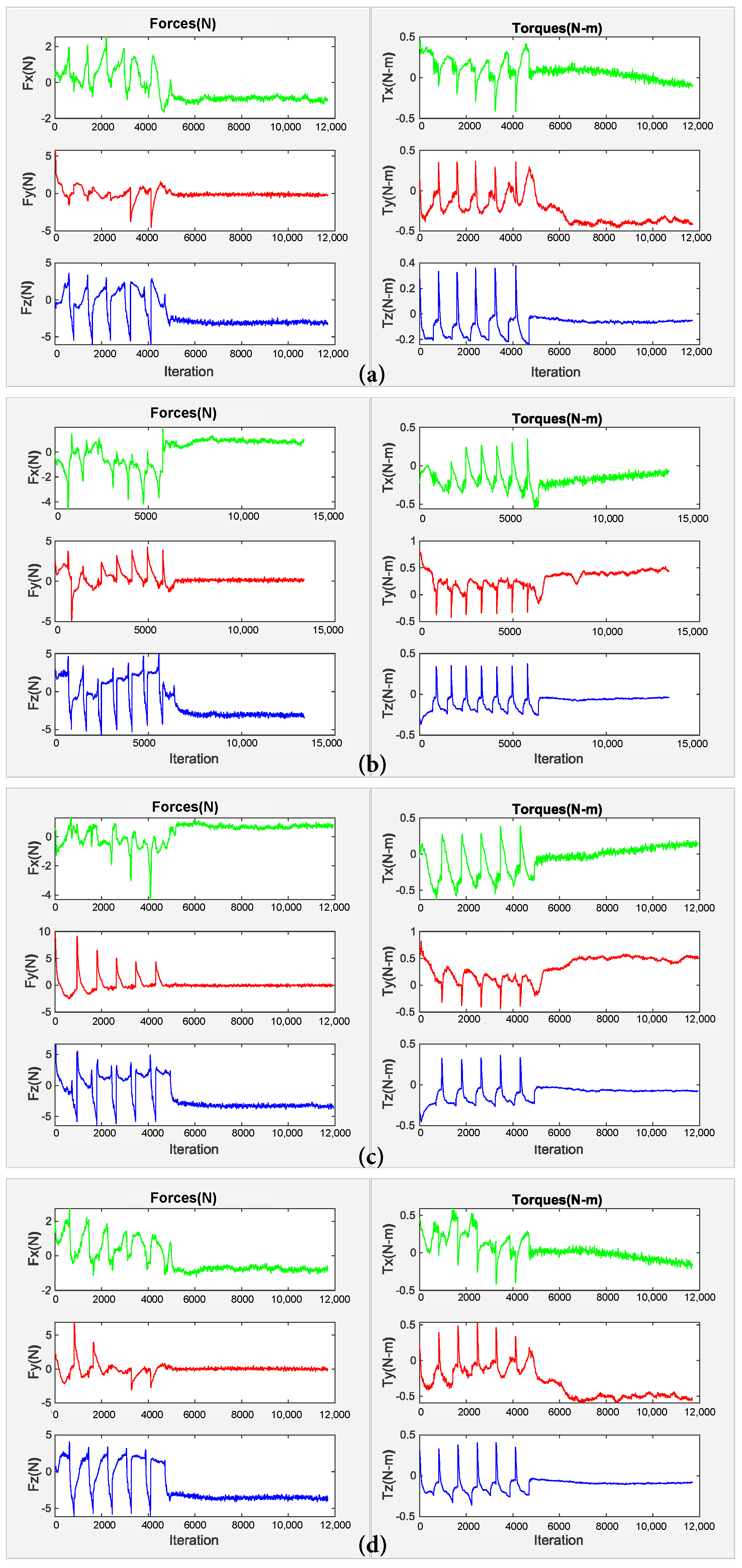

4.2. Shape Exploration Analysis

4.3. Unfastening Analysis

4.4. Discussion

- (a) Failure due to the size of the probing tool;

- (b) Hardware failure (reflex errors), which occurs when the estimated external torques or forces exceed the limits;

- (c) Missing a side of the nut during exploration;

- (d) Coarse pose calculation, due to the impedance;

- (e) Jammed nut.

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest


| Bolt Model | Outer Diameter (mm) | Side Width (mm) | Side Height (mm) | Weight (kg) |
|---|---|---|---|---|
| M10 | 19 | 9 | 8 | 0.011 |
| M12 | 21 | 11 | 10 | 0.017 |
| M16 | 27 | 14 | 12.5 | 0.030 |
| M20 | 33 | 17 | 15.5 | 0.061 |

| Nut | Number of Failures ¹ (Explore) | Number of Failures ¹ (Unfasten) | Failure Causes (Explore) | Failure Causes (Unfasten) | Avg. Time ² (s) (Explore) | Avg. Time ² (s) (Unfasten) |
|---|---|---|---|---|---|---|
| M20 | 0 | 0 | N/A | N/A | 46 | 54 |
| M16 | 1 | 0 | a, b | N/A | 42 | 54 |
| M12 | 2 | 0 | a, d | N/A | 38 | 53 |
| M10 | 3 | 2 | a, b, c | e | 37 | 52 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Rastegarpanah, A.; Ner, R.; Stolkin, R.; Marturi, N. Nut Unfastening by Robotic Surface Exploration. Robotics 2021, 10, 107. https://doi.org/10.3390/robotics10030107
