Multi-Task Deep Learning Model for Classification of Dental Implant Brand and Treatment Stage Using Dental Panoramic Radiograph Images

Abstract

1. Introduction

2. Patients and Methods

2.1. Study Design

2.2. Ethics Statement

2.3. Data Acquisition and Preprocessing

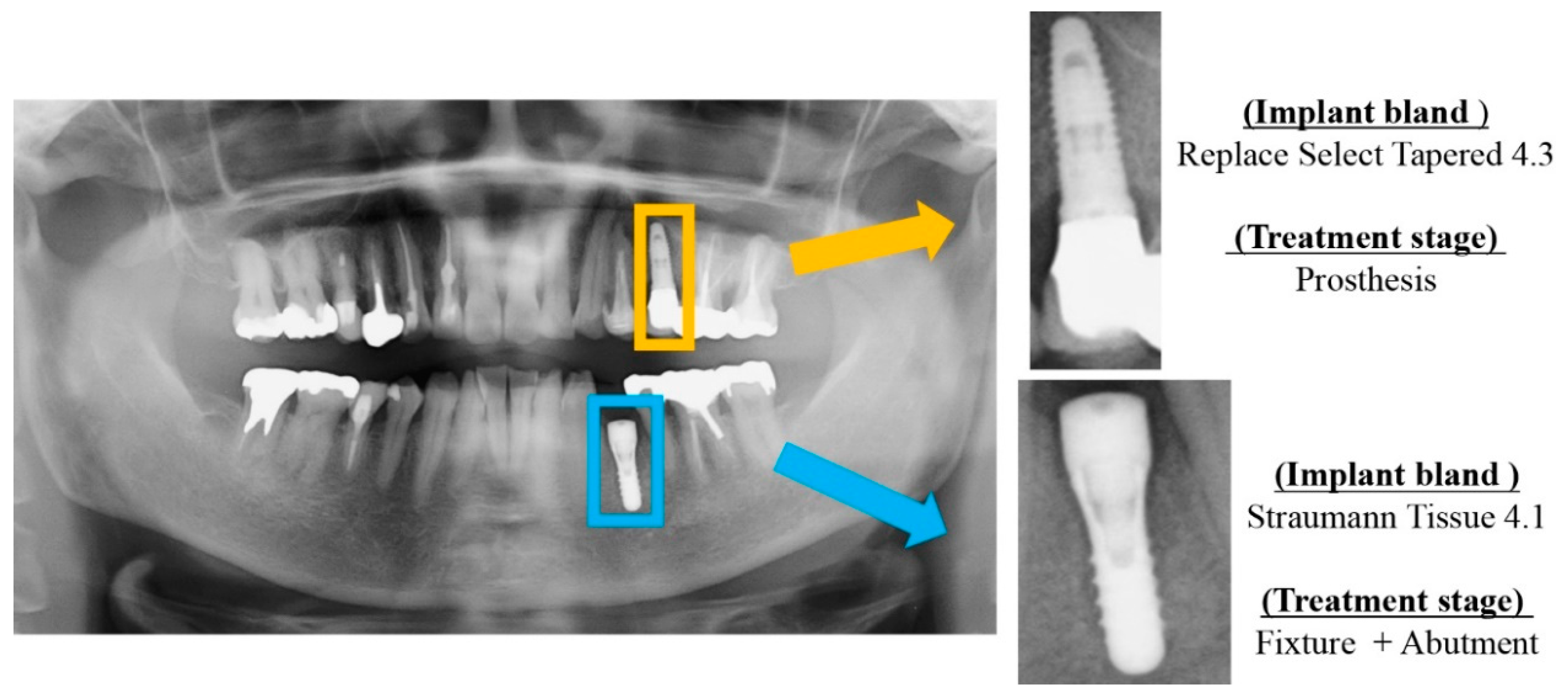

2.3.1. Classification of Dental Implant Brand

- (1) Full OSSEOTITE 4.0: Full OSSEOTITE Tapered Certain (Zimmer Biomet, Palm Beach Gardens, FL, USA), diameter of 4 mm; lengths of 8.5, 10, 11 and 11.5 mm.

- (2) Astra EV 4.2: Astra Tech Implant System OsseoSpeed EV (Dentsply IH AB, Mölndal, Sweden), diameter of 4.2 mm; lengths of 9 and 11 mm.

- (3) Astra TX 4.0: Astra Tech Implant System OsseoSpeed TX (Dentsply IH AB, Mölndal, Sweden), diameter of 4 mm; lengths of 8, 9 and 11 mm.

- (4) Astra TX 4.5: Astra Tech Implant System OsseoSpeed TX (Dentsply IH AB, Mölndal, Sweden), diameter of 4.5 mm; lengths of 9 and 11 mm.

- (5) Astra MicroThread 4.0: Astra Tech Implant System MicroThread (Dentsply IH AB, Mölndal, Sweden), diameter of 4 mm; lengths of 8, 9 and 11 mm.

- (6) Astra MicroThread 4.5: Astra Tech Implant System MicroThread (Dentsply IH AB, Mölndal, Sweden), diameter of 4.5 mm; lengths of 9 and 11 mm.

- (7) Brånemark Mk III 4.0: Brånemark System Mk III TiUnite (Nobel Biocare, Göteborg, Sweden), diameter of 4 mm; lengths of 8.5, 10 and 11.5 mm.

- (8) FINESIA 4.2: FINESIA BL HA TP (Kyocera Co., Kyoto, Japan), diameter of 4.2 mm; lengths of 8 and 10 mm.

- (9) Replace Select Tapered 4.3: Replace Select Tapered (Nobel Biocare, Göteborg, Sweden), diameter of 4.3 mm; lengths of 8, 10 and 11.5 mm.

- (10) Nobel Replace CC 4.3: Nobel Replace Conical Connection (Nobel Biocare, Göteborg, Sweden), diameter of 4.3 mm; lengths of 8, 10 and 11.5 mm.

- (11) Straumann Tissue 4.1: Standard Plus Implant Tissue Level implants (Straumann Group, Basel, Switzerland), diameter of 4.1 mm; lengths of 8 and 10 mm.

- (12) Straumann Bone Level 4.1: Standard Plus Implant Bone Level implants (Straumann Group, Basel, Switzerland), diameter of 4.1 mm; lengths of 8 and 10 mm.

2.3.2. Classification of Dental Implant Treatment Stages

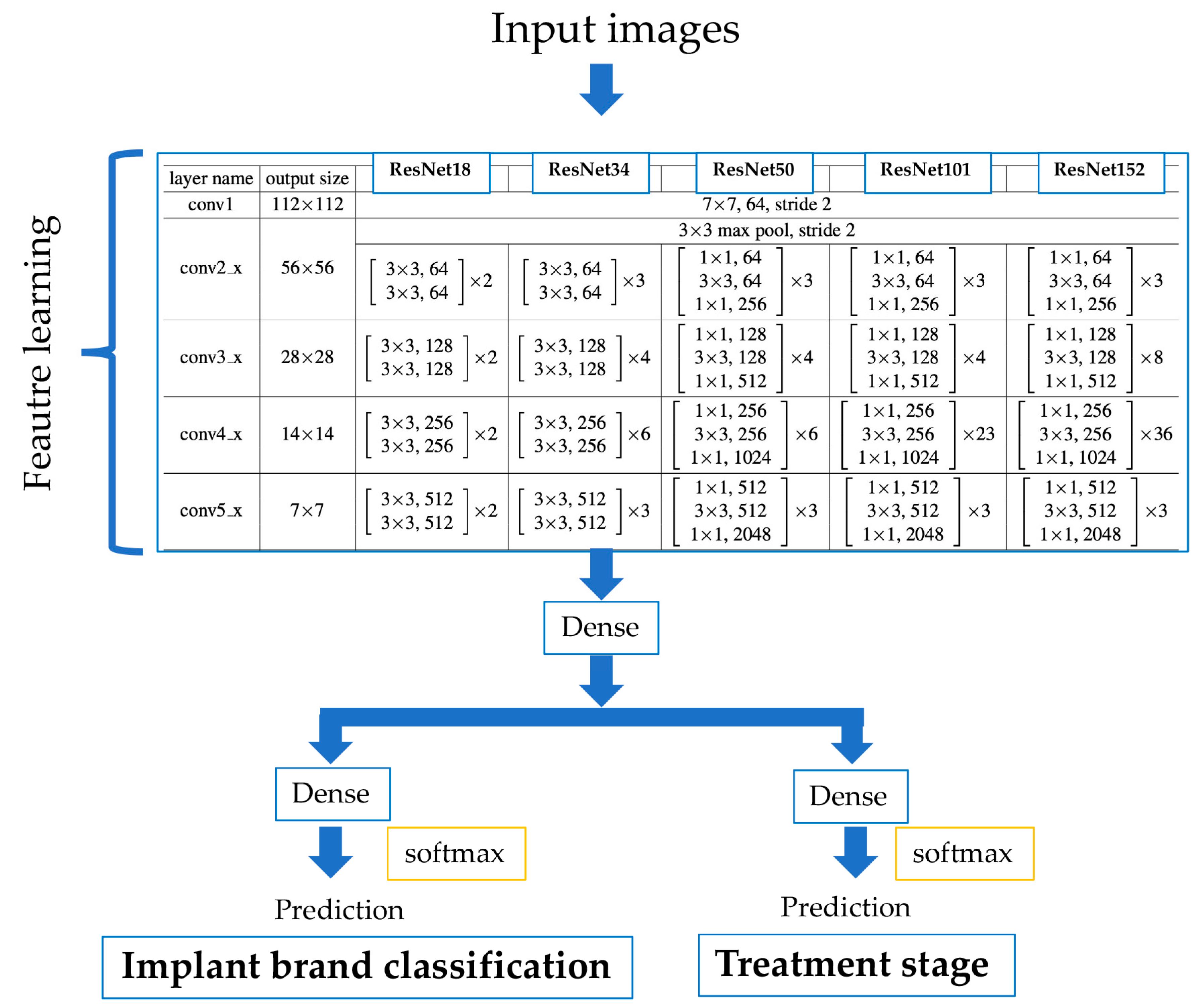

2.4. CNN Model Architecture

2.5. Model Training

2.6. Deep Learning Procedure

2.7. Multi-Task

2.8. Performance Metrics

2.9. Statistical Analysis

2.10. Visualization of Computer-Assisted Diagnostic System

3. Results

3.1. Implant Brand Classification Performance

3.2. Implant Treatment Stage Classification Performance

3.3. Comparison of the Multi-Task and Single-Task Models in Classification Performance

3.4. Visualization of Model Classification

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest


| Dental Implant Brand | Treatment Status: Fixture | Treatment Status: Fixture + Abutment | Treatment Status: Prosthesis | Total |
|---|---|---|---|---|
| Full OSSEOTITE 4.0 | 279 | 25 | 123 | 427 |
| Astra EV 4.2 | 350 | 307 | 188 | 845 |
| Astra TX 4.0 | 1412 | 504 | 604 | 2520 |
| Astra TX 4.5 | 523 | 158 | 433 | 1114 |
| Astra MicroThread 4.0 | 337 | 82 | 285 | 704 |
| Astra MicroThread 4.5 | 220 | 94 | 66 | 380 |
| Brånemark Mk III 4.0 | 275 | 52 | 28 | 355 |
| FINESIA 4.2 | 137 | 141 | 54 | 332 |
| Replace Select Tapered 4.3 | 302 | 178 | 136 | 616 |
| Nobel Replace CC 4.3 | 1089 | 233 | 277 | 1599 |
| Straumann Tissue 4.1 | 225 | 288 | 142 | 655 |
| Straumann Bone Level 4.1 | 87 | 99 | 34 | 220 |
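As a quick consistency check on the counts in the table above, the following Python sketch recomputes each brand's total from its three treatment-status counts and tallies the per-status and overall image counts. The names `counts` and `summarize` are illustrative and not part of the study's code; the numbers are copied from the table.

```python
# Image counts per brand: (fixture, fixture + abutment, prosthesis, reported total).
# Values are copied from the table above; all names here are illustrative.
counts = {
    "Full OSSEOTITE 4.0": (279, 25, 123, 427),
    "Astra EV 4.2": (350, 307, 188, 845),
    "Astra TX 4.0": (1412, 504, 604, 2520),
    "Astra TX 4.5": (523, 158, 433, 1114),
    "Astra MicroThread 4.0": (337, 82, 285, 704),
    "Astra MicroThread 4.5": (220, 94, 66, 380),
    "Brånemark Mk III 4.0": (275, 52, 28, 355),
    "FINESIA 4.2": (137, 141, 54, 332),
    "Replace Select Tapered 4.3": (302, 178, 136, 616),
    "Nobel Replace CC 4.3": (1089, 233, 277, 1599),
    "Straumann Tissue 4.1": (225, 288, 142, 655),
    "Straumann Bone Level 4.1": (87, 99, 34, 220),
}


def summarize(table):
    """Check every reported row total and return (per-status sums, grand total)."""
    status_sums = [0, 0, 0]
    for brand, (fixture, abutment, prosthesis, reported) in table.items():
        # Each reported total should equal the sum of its three status counts.
        assert fixture + abutment + prosthesis == reported, brand
        for i, n in enumerate((fixture, abutment, prosthesis)):
            status_sums[i] += n
    return status_sums, sum(status_sums)


status_sums, grand_total = summarize(counts)
largest = max(counts, key=lambda b: counts[b][3])
smallest = min(counts, key=lambda b: counts[b][3])

print("Per-status image counts:", status_sums)
print("Total images:", grand_total)
print("Largest / smallest class:", largest, "/", smallest)
```

With these numbers the largest brand class (Astra TX 4.0) holds roughly eleven times as many images as the smallest (Straumann Bone Level 4.1), so the dataset is clearly imbalanced across brands.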

| Total Parameters | ResNet18 | ResNet34 | ResNet50 | ResNet101 | ResNet152 |
|---|---|---|---|---|---|
| Multi-task | 11,457,240 | 21,572,824 | 25,659,608 | 44,703,960 | 60,393,688 |
| Single-task (Brand + Treatment stage) | 22,906,785 | 43,137,953 | 51,303,841 | 89,392,545 | 120,772,001 |
| Brand | 11,455,701 | 21,571,285 | 25,656,533 | 44,700,885 | 60,390,613 |
| Treatment stage | 11,451,084 | 21,566,668 | 25,647,308 | 44,691,660 | 60,381,388 |
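The parameter counts above can be cross-checked with a little arithmetic. The sketch below (illustrative names, values copied from the table) confirms that the combined single-task row equals the brand-only and stage-only rows added together. It then reports how the multi-task model compares in size. The small surplus over the brand-only model suggests a shared backbone with one extra output head, but that reading is an inference from the numbers, not something the table states.

```python
# Parameter counts copied from the table above; names are illustrative only.
models = ["ResNet18", "ResNet34", "ResNet50", "ResNet101", "ResNet152"]
params = {
    "multi": [11_457_240, 21_572_824, 25_659_608, 44_703_960, 60_393_688],
    "single_both": [22_906_785, 43_137_953, 51_303_841, 89_392_545, 120_772_001],
    "brand": [11_455_701, 21_571_285, 25_656_533, 44_700_885, 60_390_613],
    "stage": [11_451_084, 21_566_668, 25_647_308, 44_691_660, 60_381_388],
}

ratios = []  # multi-task size relative to the two single-task models combined
extras = []  # parameters the multi-task model adds over the brand-only model

for i, name in enumerate(models):
    combined = params["brand"][i] + params["stage"][i]
    # The "Single-task (Brand + Treatment stage)" row should be this sum.
    assert combined == params["single_both"][i], name
    ratios.append(params["multi"][i] / combined)
    extras.append(params["multi"][i] - params["brand"][i])
    print(f"{name}: multi-task is {ratios[-1]:.2%} of the combined single-task size, "
          f"{extras[-1]} parameters more than the brand-only model")
```

For every depth the multi-task model is almost exactly half the size of the two single-task models taken together.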

| Multi-Task | ResNet18 | ResNet34 | ResNet50 | ResNet101 | ResNet152 |
|---|---|---|---|---|---|
| Accuracy | 0.9803 | 0.9851 | 0.9869 | 0.9899 | 0.9908 |
| Precision | 0.9780 | 0.9847 | 0.9870 | 0.9895 | 0.9914 |
| Recall | 0.9727 | 0.9808 | 0.9812 | 0.9860 | 0.9886 |
| F1-score | 0.9749 | 0.9826 | 0.9838 | 0.9875 | 0.9899 |
| AUC | 0.9997 | 0.9998 | 0.9998 | 0.9999 | 0.9999 |

| Single-Task | ResNet18 | ResNet34 | ResNet50 | ResNet101 | ResNet152 |
|---|---|---|---|---|---|
| Accuracy | 0.9787 | 0.9800 | 0.9800 | 0.9841 | 0.9851 |
| Precision | 0.9737 | 0.9790 | 0.9816 | 0.9822 | 0.9839 |
| Recall | 0.9726 | 0.9743 | 0.9746 | 0.9798 | 0.9809 |
| F1-score | 0.9724 | 0.9762 | 0.9776 | 0.9805 | 0.9820 |
| AUC | 0.9996 | 0.9997 | 0.9996 | 0.9997 | 0.9998 |

| Multi/Single (%) | ResNet18 | ResNet34 | ResNet50 | ResNet101 | ResNet152 |
|---|---|---|---|---|---|
| Accuracy | 100.16 | 100.52 | 100.71 | 100.59 | 100.57 |
| Precision | 100.45 | 100.59 | 100.56 | 100.74 | 100.76 |
| Recall | 100.01 | 100.66 | 100.68 | 100.63 | 100.79 |
| F1-score | 100.25 | 100.65 | 100.64 | 100.71 | 100.80 |
| AUC | 100.01 | 100.01 | 100.02 | 100.02 | 100.01 |
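The Multi/Single (%) rows read as the multi-task metric divided by the single-task metric, expressed as a percentage. The sketch below recomputes the accuracy row from the rounded values printed above, using illustrative names. Recomputed figures can differ from the published ones in the last digit, presumably because the published ratios were derived from unrounded metrics; that explanation is an assumption.

```python
# Accuracy values copied from the multi-task and single-task tables above.
models = ["ResNet18", "ResNet34", "ResNet50", "ResNet101", "ResNet152"]
multi_acc = [0.9803, 0.9851, 0.9869, 0.9899, 0.9908]
single_acc = [0.9787, 0.9800, 0.9800, 0.9841, 0.9851]
published = [100.16, 100.52, 100.71, 100.59, 100.57]  # Multi/Single (%) row


def ratio_percent(multi, single):
    """Multi-task metric as a percentage of the single-task metric."""
    return round(100.0 * multi / single, 2)


computed = [ratio_percent(m, s) for m, s in zip(multi_acc, single_acc)]

for name, c, p in zip(models, computed, published):
    # A gap of about 0.01 is expected from rounding the inputs to four decimals.
    print(f"{name}: recomputed {c:.2f}%, published {p:.2f}%, gap {abs(c - p):.2f}")
```

A value above 100 means the multi-task model scored higher than its single-task counterpart on that metric.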

| Multi-Task | ResNet18 | ResNet34 | ResNet50 | ResNet101 | ResNet152 |
|---|---|---|---|---|---|
| Accuracy | 0.9947 | 0.9958 | 0.9963 | 0.9971 | 0.9972 |
| Precision | 0.9949 | 0.9958 | 0.9965 | 0.9971 | 0.9972 |
| Recall | 0.9933 | 0.9946 | 0.9949 | 0.9960 | 0.9963 |
| F1-score | 0.9941 | 0.9952 | 0.9957 | 0.9965 | 0.9967 |
| AUC | 0.9998 | 1.0000 | 0.9999 | 0.9999 | 0.9999 |

| Single-Task | ResNet18 | ResNet34 | ResNet50 | ResNet101 | ResNet152 |
|---|---|---|---|---|---|
| Accuracy | 0.9942 | 0.9951 | 0.9960 | 0.9964 | 0.9955 |
| Precision | 0.9947 | 0.9949 | 0.9952 | 0.9970 | 0.9957 |
| Recall | 0.9922 | 0.9940 | 0.9944 | 0.9948 | 0.9943 |
| F1-score | 0.9934 | 0.9944 | 0.9948 | 0.9959 | 0.9950 |
| AUC | 0.9998 | 0.9999 | 0.9999 | 0.9998 | 0.9999 |

| Multi/Single (%) | ResNet18 | ResNet34 | ResNet50 | ResNet101 | ResNet152 |
|---|---|---|---|---|---|
| Accuracy | 100.05 | 100.07 | 100.03 | 100.07 | 100.17 |
| Precision | 100.02 | 100.10 | 100.13 | 100.01 | 100.14 |
| Recall | 100.11 | 100.07 | 100.04 | 100.12 | 100.20 |
| F1-score | 100.07 | 100.08 | 100.09 | 100.06 | 100.17 |
| AUC | 100.01 | 100.01 | 100.00 | 100.01 | 100.00 |

| Brand classification | Multi-task | SD | Single-task | SD | p value | Effect size |
|---|---|---|---|---|---|---|
| Accuracy | 0.9785 | 0.0123 | 0.9706 | 0.0209 | 0.0332 | 0.4484 |
| Precision | 0.9794 | 0.0079 | 0.9724 | 0.0138 | 0.0195 | 0.6017 |
| Recall | 0.9718 | 0.0150 | 0.9648 | 0.0200 | 0.0948 | 0.3902 |
| F1-score | 0.9739 | 0.0150 | 0.9662 | 0.0206 | 0.0371 | 0.4221 |
| AUC | 0.9995 | 0.0009 | 0.9989 | 0.0020 | 0.0115 | 0.3940 |

| Treatment stage | Multi-task | SD | Single-task | SD | p value | Effect size |
|---|---|---|---|---|---|---|
| Accuracy | 0.9963 | 0.0009 | 0.9924 | 0.0061 | <0.0001 | 0.8183 |
| Precision | 0.9961 | 0.0009 | 0.9924 | 0.0072 | 0.0004 | 0.6876 |
| Recall | 0.9950 | 0.0013 | 0.9910 | 0.0050 | <0.0001 | 0.9834 |
| F1-score | 0.9956 | 0.0011 | 0.9914 | 0.0063 | <0.0001 | 0.8387 |
| AUC | 0.9999 | 0.0001 | 0.9997 | 0.0002 | 0.0015 | 0.7457 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sukegawa, S.; Yoshii, K.; Hara, T.; Matsuyama, T.; Yamashita, K.; Nakano, K.; Takabatake, K.; Kawai, H.; Nagatsuka, H.; Furuki, Y. Multi-Task Deep Learning Model for Classification of Dental Implant Brand and Treatment Stage Using Dental Panoramic Radiograph Images. Biomolecules 2021, 11, 815. https://doi.org/10.3390/biom11060815
