The purpose of this article is to present a view of understanding and (pattern) recognition based on the bit length of a unique but ordered coding that distinguishes between objects belonging and not belonging to a given class. We then define an entropy that measures the effectiveness of the concepts we use for classification, and show that learning is equivalent to decreasing the entropy of the representation of the input.

One of the bottlenecks in today’s artificial intelligence (AI) algorithms is conceptualizing the unknown in a way that an AI can implement automatically. In this respect, borderline cases encountered after a learning process also belong to the category “unknown”. A flexible AI should be able to determine when it has not recognized something and, accordingly, run a safety protocol.

A person pushing a bicycle is neither a pedestrian nor a vehicle, yet he or she should not be run over by a self-driving car. Forcing an unexpected, previously unencountered, in short unknown, perception into one of the pre-defined classes is neither smart nor intelligent. Intelligence starts where such indefinite situations are recognized and acted upon.

In the present article, we model a finite universe of objects, each indexable (countable) and referred to by a binary code at most N bits long. In the simplest version, these objects are divided into two categories: those belonging to a pre-defined, recognized class, and all others, which are unrecognized. This situation is analogous to the division of phase space in statistical physics into a subsystem under observation and an unobserved environment. Since the best separation minimizes the correlations between these two parts, the minimum of the mutual information indicates the ideal subsystem-environment partition. For infinitely large systems, this leads to the canonical description, for example, by fixing the average energy in the subsystem to the value set by the reservoir. In finite systems, there are fluctuations around this value, and the temperature cannot be sharply defined.
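The statement that the best subsystem-environment separation minimizes mutual information can be made concrete on a toy finite universe. The sketch below is a minimal illustration under our own assumptions: a hand-picked joint distribution over 3 bits, Shannon entropy in bits, and a brute-force search over all nontrivial bipartitions. It computes I(A;B) = H(A) + H(B) - H(A,B) for every split and keeps the smallest. The function names (`marginal`, `entropy`, `mutual_information`, `best_partition`) are hypothetical helpers, not taken from the article.

```python
from itertools import combinations, product
from math import log2


def marginal(joint, keep):
    """Marginal distribution over the bit positions listed in `keep`."""
    out = {}
    for state, p in joint.items():
        key = tuple(state[i] for i in keep)
        out[key] = out.get(key, 0.0) + p
    return out


def entropy(dist):
    """Shannon entropy in bits."""
    return -sum(p * log2(p) for p in dist.values() if p > 0)


def mutual_information(joint, part_a, part_b):
    """I(A;B) = H(A) + H(B) - H(A,B) for a bipartition of the bits."""
    h_a = entropy(marginal(joint, part_a))
    h_b = entropy(marginal(joint, part_b))
    h_ab = entropy(marginal(joint, sorted(part_a + part_b)))
    return h_a + h_b - h_ab


def best_partition(joint, n_bits):
    """Brute-force search over nontrivial bipartitions for the minimal I(A;B)."""
    bits = list(range(n_bits))
    best = None
    for size in range(1, n_bits // 2 + 1):
        for part_a in combinations(bits, size):
            part_b = tuple(b for b in bits if b not in part_a)
            mi = mutual_information(joint, list(part_a), list(part_b))
            if best is None or mi < best[0]:
                best = (mi, part_a, part_b)
    return best


# Hypothetical 3-bit universe: bits 0 and 1 always agree,
# bit 2 is an independent fair coin.
joint = {(b, b, c): 0.25 for b, c in product((0, 1), repeat=2)}

print(best_partition(joint, 3))  # (0.0, (2,), (0, 1))
```

The independent bit separates from the correlated pair with zero mutual information, while either of the other two splits costs 1 bit. The search is exponential in N and is meant only for intuition.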

The structure of this article is as follows. We start with the motivation that a comprehensive view of understanding and recognition is important for improving both natural and AI systems. Next, we turn to a mathematical formulation of understanding, supported by proofs and examples. Then, we define the entropy of learning, proposing a formula that is minimal in the optimally trained state of the intelligent actor. We also demonstrate how the scientific method of dealing with a few unknowns contrasts with AI methods, where complex data must be handled in repeated steps. Finally, learning as a time evolution of bitwise arrangements will be pictured and related to phase space shrinking and, eventually, to a concept of general entropy.

What Does Science Teach Us about Learning?

The learning process, during which new knowledge is acquired, is of primary importance in human evolution and societal development. Concepts of learning, understanding and wisdom have been, and still are, intensely debated in the history of philosophy [1]. In the natural sciences, in particular in the modern era after they had freed themselves from the oppression of philosophy, understanding frequently appears as simplification. Simplification alone is not understanding, but newly discovered fundamental relations could be seen only by paying the price of simplification. Understanding is also an abstraction from unnecessary circumstances, although which circumstances are unnecessary becomes clear only after one has found the right models.

In this vocabulary, knowledge is data, such as facts known about systems and processes, ultimately encoded as finite bit-strings, and understanding is the model that organizes these facts so as to improve the speed and sharpness of our predictions. These predictions form a basis for interaction, transformation and, in the end, technology. We claim understanding when we can divide the facts into two classes, relevant and irrelevant ones: relevant for understanding the behavior, and relevant for being able to predict and influence future states of the piece of the world under study.

Complex systems are also often treated in science as collections of their simpler parts, with subsystems described by shorter bit-strings. When the whole is nothing more than a simple sum of its ingredients, analytic thinking triumphs. In some cases, “simple addition” may be replaced by more sophisticated composition rules, but any rule that defines a mathematical group or semi-group is associative. For associative rules, one can derive a formal logarithm, which is then additive [2]. Precisely this use of formal logarithms defines a powerful generalization of the entropy concept: the group entropy [3].
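As a concrete instance of an associative but non-additive composition rule, consider x ⊕ y = x + y + (1 - q)xy with a real parameter q ≠ 1, a rule familiar from Tsallis-type entropies; the choice of this particular rule is ours and is not taken from [2,3]. Its formal logarithm is L(x) = ln(1 + (1 - q)x)/(1 - q), because 1 + (1 - q)(x ⊕ y) = (1 + (1 - q)x)(1 + (1 - q)y), so that L(x ⊕ y) = L(x) + L(y). The sketch checks both properties numerically; the function names are hypothetical.

```python
import math


def compose(x, y, q):
    """Associative composition rule x (+) y = x + y + (1 - q) * x * y, q != 1."""
    return x + y + (1.0 - q) * x * y


def formal_log(x, q):
    """Formal logarithm L(x) = ln(1 + (1 - q) x) / (1 - q); needs 1 + (1 - q) x > 0."""
    return math.log1p((1.0 - q) * x) / (1.0 - q)


def formal_exp(u, q):
    """Inverse of formal_log: x = (exp((1 - q) u) - 1) / (1 - q)."""
    return math.expm1((1.0 - q) * u) / (1.0 - q)


q = 0.8
x, y, z = 0.3, 1.2, 2.5

# The rule is associative, so the grouping of subsystems does not matter ...
assert math.isclose(compose(compose(x, y, q), z, q), compose(x, compose(y, z, q), q))
# ... and the formal logarithm maps it to ordinary addition.
assert math.isclose(formal_log(compose(x, y, q), q), formal_log(x, q) + formal_log(y, q))
# Adding in "L-space" and mapping back reproduces the original rule.
assert math.isclose(formal_exp(formal_log(x, q) + formal_log(y, q), q), compose(x, y, q))
```

In the limit q → 1 the rule reduces to plain addition and L(x) → x, so ordinary additive entropy is recovered as a special case.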

Knowing the parts of a system and all of the interactions between those parts constitutes a complete analytic model. Since the subsystems are simple, making all possible predictions is only a question of computing power. Yet we do not need them all, only the relevant ones. The goal of a Theory of Everything, the ultimate string model, has meanwhile been shattered by the enormous number of possible ways it could be connected to reality, or at least to reality’s most prominent representative, the Standard Model of particle physics [4]. With a slight extension, at the cost of phenomenological parameters, even gravity may be added to the Standard Model [5]. If one accepts about two dozen unexplained parameters, the Standard Model is in principle understood, and its predictions are experimentally verified. The greater worry is that no deviations from it have been detected. Therefore, the Standard Model itself needs an explanation.

Until the middle of the 20th century, computing power was exhausted by writing and solving formulas with pencil and paper. Since the dawn of computers, machines have taken over the bulk of computations, with a speed surpassing all previous dreams. Computer simulations of complex systems with nonlinear, chaotic dynamics have emerged, and man-made intelligent networks work by classifying complex data patterns. But one still doubts that computing machines understand what they compute.

How, then, can we understand complex systems? Historically, the first attempt to treat complex systems was perturbation theory, which was feasible even with pencil and paper. A simplified problem, solved analytically, is varied by adding small perturbing effects, and the real behavior is approached gradually through a series of further computations. The main limitation of this strategy appears when perturbation series become divergent, as in Quantum Chromodynamics (QCD), the quantum theory of the strong interaction, for processes at low momentum transfer. For such processes, massive use of computing power seemed to be the solution, as the spread of lattice gauge theory since the 1970s shows.

Such large-scale simulations provide bits and numbers that have to be interpreted. They are virtual experiments that tell us no more about the essentials than a real-world experiment does, but at a lower cost and with greater uncertainty about their relevance. The lower cost drives their proliferation. So why does knowing a bunch of numbers not amount to understanding?

An important example from high-energy physics is the missing proof of the existence of a mass gap in quantum Yang–Mills theory, declared one of the Millennium Prize Problems by the Clay Mathematics Institute despite the numerical evidence. In further examples, the underlying level looks simpler and better understood than the composite one: QCD looks theoretically simpler than nuclear physics, the Schrödinger equation simpler than chemistry, and the structure of amino acids simpler than the mechanism of protein folding. Networks of simplified neurons are also more easily simulated than thinking and other higher brain functions.

A further difference is that a scientific model is usually understood term by term: all elements of the basic equations represent separate physical actors. For example, in hydrodynamics, the pressure, energy density, and shear and bulk viscosity all have their separate roles. In simulating complex dynamics, the individual terms are frequently just auxiliary variables without any special meaning for the behavior of the entire system. Completely different representations may yield the same result and are, in this sense, equally good. What did we understand here?

We would now like to mention some challenges for AI learning. Despite the overwhelming popularity of deep neural networks (DNN) [6] and other machine learning (ML) approaches, we do not grasp why a DNN performs better than the analytic, simplifying method of science. In board games (chess, Go, nine men’s Morris, etc.) and in face recognition, DNNs are effective and already faster than people, even though evolution prepared humans for face recognition. What is common and what is different in image classification and in solving Newton’s equations?

Mathematically, a feed-forward deep neural network is a series of functional mappings between the input x and the output y of the form $y = f(W_L\, f(W_{L-1} \cdots f(W_1 x) \cdots))$, whose parameters W weight the possible paths of signal propagation. Learning is then a re-weighting, at the end of which the output y differs significantly and reproducibly for inputs belonging to one or another of the classes we wanted to teach the AI. Such algorithms mostly lead to the required result, but their performance does not seem to be based on a simplified model: their results can be reproduced by copying all weights to another AI, but incomplete or erroneous copies soon ruin the whole procedure.
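The layered mapping y = f(W_L f(W_{L-1} ⋯ f(W_1 x) ⋯)) can be sketched in a few lines. This is a minimal illustration under our own assumptions: f = tanh in every layer, no bias terms, arbitrary layer sizes, and random (untrained) weights. It shows only that an exact copy of all weights reproduces the output exactly, while a perturbed copy does not; it does not model training.

```python
import numpy as np


def forward(x, weights, f=np.tanh):
    """Feed-forward pass y = f(W_L f(... f(W_1 x))) with no bias terms."""
    h = x
    for W in weights:
        h = f(W @ h)  # each layer: linear re-weighting, then the nonlinearity
    return h


rng = np.random.default_rng(0)
sizes = [4, 8, 8, 2]  # input, two hidden layers, output (arbitrary choice)
weights = [rng.normal(size=(m, n)) for n, m in zip(sizes[:-1], sizes[1:])]
x = rng.normal(size=sizes[0])
y = forward(x, weights)

# An exact copy of all weights reproduces the output exactly ...
copied = [W.copy() for W in weights]
assert np.array_equal(forward(x, copied), y)

# ... whereas a perturbed ("erroneous") copy generally does not.
noisy = [W + 0.5 * rng.normal(size=W.shape) for W in weights]
print(y, forward(x, noisy))
```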

Such problems reveal themselves when neural networks make unexpected errors. A well-trained set of weights is also the most vulnerable to adversarial attacks. Human knowledge, once learned, seems to be more robust against such pernicious effects. This leads us to the conclusion that present-day AIs recognize and interpret their environments differently from humans.
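What an adversarial attack does can be illustrated on the simplest possible model. The sketch below assumes a hypothetical linear classifier with random weights in d = 1000 dimensions and applies one step in the spirit of the fast gradient sign method (Goodfellow et al.): every input coordinate is moved by only eps = 0.01 against sign(w), yet the score drops by eps times the sum of |w_i|, which is enough to flip the label. This is a toy illustration of the vulnerability, not a demonstration of the claim about well-trained weights.

```python
import numpy as np

rng = np.random.default_rng(3)
d = 1000                      # input dimension, e.g. number of pixels
w = rng.normal(size=d)        # weights of a hypothetical linear classifier
x = rng.normal(size=d)
x = x + (5.0 - w @ x) / (w @ w) * w   # shift x so that its score w @ x equals 5.0


def classify(v):
    """Linear classifier: class 1 if w . v > 0, else class 0."""
    return int(w @ v > 0)


# Fast-gradient-sign-style step: for a linear score the gradient with respect
# to the input is w itself, so every coordinate moves by eps against sign(w).
eps = 0.01
x_adv = x - eps * np.sign(w)

print(classify(x), classify(x_adv))     # label before and after the attack
print(np.max(np.abs(x_adv - x)))        # largest per-coordinate change
print(w @ x, w @ x_adv)                 # score drops by eps * sum(|w|)
```

The per-coordinate change is tiny compared with the typical size of the inputs, but in high dimension the small coordinated changes add up.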

The learned weights are not in a one-to-one relation to understanding: some networks, if shown patterns in a different order or trained from different initial states (reflecting a different history of previous learning), end up with different weights, not even close to each other. Is DNN learning then chaotic, an entropy-producing process? Hopefully, that conclusion is false. Details of patterns must be unimportant for recognition; the basis for functioning well is a distinction between the relevant and irrelevant combinations inside the complex data sets.
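One elementary reason why networks can end up with very different weights yet compute the same function is the symmetry of hidden units: relabeling the neurons of a hidden layer, or (for an odd activation such as tanh) flipping the sign of a unit’s incoming and outgoing weights, changes the weight matrices but not the input-output map. The sketch uses a hypothetical one-hidden-layer network with random, untrained weights. It illustrates only this one source of non-uniqueness and makes no claim about what drives the differences observed in practice.

```python
import numpy as np


def mlp(x, W1, W2):
    """One hidden tanh layer without biases: y = W2 tanh(W1 x)."""
    return W2 @ np.tanh(W1 @ x)


rng = np.random.default_rng(1)
W1 = rng.normal(size=(5, 3))   # 3 inputs -> 5 hidden units
W2 = rng.normal(size=(2, 5))   # 5 hidden units -> 2 outputs

# Relabel the hidden units: permute the rows of W1 and the columns of W2 alike.
perm = np.array([3, 0, 4, 1, 2])
W1_s, W2_s = W1[perm, :].copy(), W2[:, perm].copy()

# tanh is odd, so flipping the sign of one unit's incoming and outgoing
# weights is a further symmetry of the same function.
W1_s[0, :] *= -1
W2_s[:, 0] *= -1

x = rng.normal(size=3)
print(np.linalg.norm(W1 - W1_s), np.linalg.norm(W2 - W2_s))  # weights differ
print(mlp(x, W1, W2), mlp(x, W1_s, W2_s))                    # outputs agree
```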

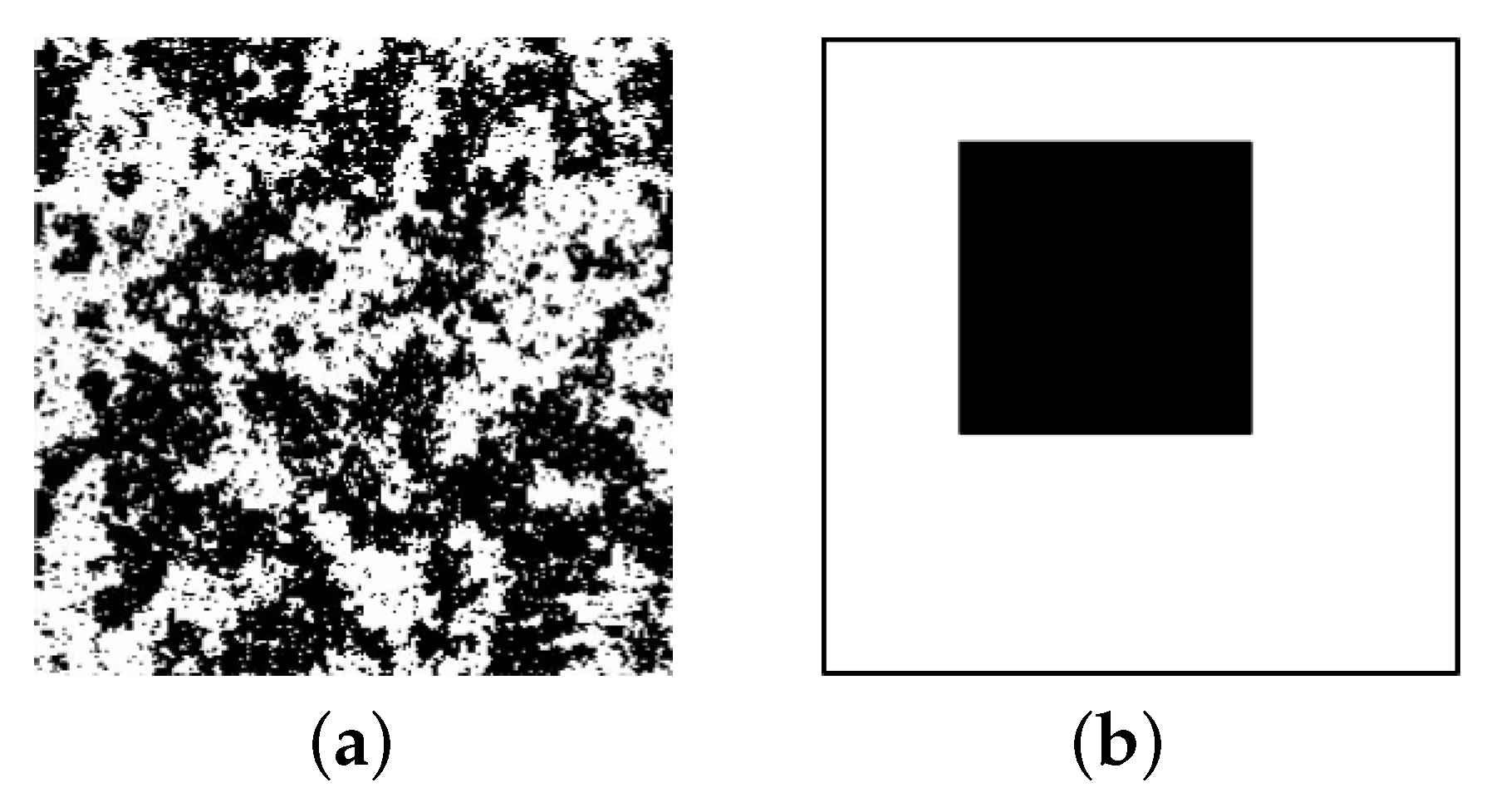

We now describe an example of what understanding may mean for humans and for AI systems, respectively. Let us follow how the picture shown in Figure 1, depicting a girl and her mother, appears to a computer. The digital image, describing the pixel colors in some coding, is a complete description at a given resolution, say 1 Megapixel. In the real-world painting, there were brush strokes and chemical paints instead of pixels; reproducing these faithfully is how art copying works. A more economical and simpler procedure would be to name the objects seen on the canvas and their relations.

The analytic method defines subsystems within the whole, naming a girl, a woman, clouds and sand, a wind-blown red dress, and so forth. Repeating this analysis on ever smaller subsystems of subsystems, one could eventually exceed the data size of the one-Megapixel description. However, not all details are necessary for recognition and categorization; in the human world, a depth of a few levels is sufficient. “Summer retreat”, the title of the painting, comprises the relevant information in this case.
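The gap between a pixel-by-pixel description and a shorter, structured one can be illustrated very roughly with a general-purpose compressor standing in for “naming”. For scale: at an assumed 24 bits per pixel, the raw one-Megapixel description is 24 million bits. In the sketch, zlib is our stand-in and the synthetic 256 × 256 “beach” image made of three flat color bands is hypothetical; an image with such regularities admits a far shorter lossless description than random pixels of the same size. A compressor captures only statistical regularity, not the semantic naming of a girl or a dress discussed in the text.

```python
import zlib

import numpy as np

H, W = 256, 256
rng = np.random.default_rng(2)

# Hypothetical "beach scene": three flat 8-bit RGB bands (sky, sea, sand).
structured = np.zeros((H, W, 3), dtype=np.uint8)
structured[: H // 3] = (135, 206, 235)
structured[H // 3 : H // 2] = (0, 105, 148)
structured[H // 2 :] = (238, 214, 175)

# Same size, but every pixel value drawn independently at random.
noise = rng.integers(0, 256, size=(H, W, 3), dtype=np.uint8)

raw_bits = H * W * 3 * 8
structured_bits = 8 * len(zlib.compress(structured.tobytes(), 9))
noise_bits = 8 * len(zlib.compress(noise.tobytes(), 9))
print(raw_bits, structured_bits, noise_bits)
```

The structured image shrinks by orders of magnitude, while the random one stays at essentially its raw size.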

This example demonstrates that the same thing can be analyzed in different ways, by attaching coordinates either in the space of possible objects or in the space of possible pixel positions and colors. Both serve as acceptable reconstructions of the same image. Understanding, however, means selecting the features common to all images in a given collection. Saying that the top left pixel is red can be less relevant than indexing the image as a Pino Daeni painting of a mother and her daughter on a beach.

Knowing what is common to several images makes it much easier to decide whether a randomly drawn exemplar belongs to our cherished collection or not. Likewise, when asked for a characteristic example of a chosen collection (category) of images, say images of cats, a drawing with a cat passes the test while one with a dog does not. AI systems must perform exactly such jobs.
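A deliberately naive sketch of “deciding membership by what is common” treats each image as a set of feature tags, takes the features shared by every member of the collection, and accepts an exemplar if it carries all of them. The tags and function names are hypothetical; extracting such features from pixels is precisely the hard part that the sketch assumes away.

```python
def common_features(collection):
    """Features shared by every item in the collection (set intersection)."""
    sets = [set(item) for item in collection]
    return set.intersection(*sets)


def belongs(exemplar, common):
    """An exemplar passes the test if it carries all of the common features."""
    return common <= set(exemplar)


# Hypothetical hand-assigned tags for a small collection of cat images.
cat_images = [
    {"cat", "whiskers", "sofa", "indoor"},
    {"cat", "whiskers", "garden"},
    {"cat", "whiskers", "window", "indoor"},
]
common = common_features(cat_images)

print(sorted(common))                                   # ['cat', 'whiskers']
print(belongs({"drawing", "cat", "whiskers"}, common))  # True
print(belongs({"drawing", "dog", "whiskers"}, common))  # False
```

Irrelevant details such as the sofa or the garden drop out of the intersection, which is the point of the example.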

In all of these examples, recognition amounts to selecting a subset of a larger set. We test understanding by performing AI tasks: classification, regression, lossless data compression and encoding.
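Recognition as subset selection connects directly to the bit-length picture: in a universe of 2^N objects, each needing N bits, an element of a class C can be specified by its position in the ordered list of class members, which needs only ⌈log2 |C|⌉ bits. The sketch assumes a hypothetical class (codes whose three lowest bits are zero) and uses plain enumeration order as the ordered coding; it does not reproduce the specific coding developed in the article.

```python
from math import ceil, log2

N = 10                        # every object is an N-bit code
universe = range(2 ** N)      # 1024 objects, indexed 0 .. 1023


def in_class(code):
    """Hypothetical class: codes whose three lowest bits are all zero."""
    return (code & 0b111) == 0


# Recognition as selection of a subset; the class keeps the universe's order.
members = [c for c in universe if in_class(c)]
index_of = {c: i for i, c in enumerate(members)}   # ordered code within the class

bits_class = ceil(log2(len(members)))
print(len(members), N, bits_class)   # 128 10 7
print(index_of[40], members[5])      # 5 40
```

Knowing the class saves N - 7 = 3 bits per object here; the saving grows as the class becomes a smaller fraction of the universe.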

There are preceding works in computer science dealing with the description of understanding. The hunt for mathematizing, or at least algorithmizing, the cognitive abilities of humans dates back to the beginnings of learning theory [7,8], where an approximately correct model was defined mathematically. Closest to our approach is representation learning [9,10], where the aim is to select an appropriate representation for complex inputs that optimally facilitates the design of a machine learning architecture. Representations can be disentangled using symmetry groups; for recent works, see [11].

There have been several attempts to go beyond the limitations of present-day AI (see, for example, [12] and references therein).

A physics example is the use of an exact renormalization group (RG) [13]. In computer science, RG methods were applied in [14,15], and in connection with Boltzmann machines in [16].