1. Introduction

Loop quantum gravity (LQG) is a canonical quantisation program for general relativity (GR) that attempts to keep general covariance as manifest as possible [1,2,3,4]. In its kinematical sector, the theory is well formulated: there is a Hilbert space $\mathcal{H}$ spanned by spin-network states, labelled by graphs whose oriented edges carry irreducible SU(2) representations and whose vertices carry SU(2)-invariant intertwiners [3]. The dynamics, however, is far more elusive. It is encoded in constraints, i.e., operators imposing equations (or, in the Master constraint approach [5,6,7], a single equation) on the physical states. Solutions are timeless a priori. Finding solutions and giving them a spacetime interpretation remains a formidable open problem despite various efforts (see, for example, [8,9,10,11,12,13,14,15,16]).

Path integral formulations of gravity have long been considered, since they avoid the spacetime split inherent in the canonical approach. Spinfoam (SF) theories (see, for example, [17,18,19,20,21,22,23,24,25,26]), in particular, originated from the observation that gravity can be obtained by breaking the symmetries of a topological gauge theory. These theories sidestep part of the difficulties of canonical LQG by constructing transition amplitudes between spin-network states as a sum over (discrete quantum) histories.

To regularise the gravitational path integral, one triangulates the manifold by a simplicial complex $T$ and works on its dual 2-complex $T^*$. A spinfoam is precisely such a 2-complex whose faces $f$ are labelled by irreps $\rho_f$ of the gauge group and whose edges $e$ carry intertwiners $\iota_e$ between the irreps of the faces meeting in $e$. The regularised partition function can be written in the factorised form [19,20]

$$
Z_T = \sum_{\{\rho_f\},\{\iota_e\}} \prod_{f} \mathcal{A}_f(\rho_f) \prod_{e} \mathcal{A}_e(\rho_f, \iota_e) \prod_{v} \mathcal{A}_v(\rho_f, \iota_e),
$$

where $v$, $e$ and $f$ are dual to 4-simplices, tetrahedra and triangles of $T$, respectively. Mirroring the role of a vertex in ordinary Feynman diagrams, the vertex amplitude $\mathcal{A}_v$ encodes the local dynamics of quantum geometry [27]. The precise nature of the gauge group $G$ and of the face, edge and vertex amplitudes depends on the spacetime dimension, signature and theory.

A serious problem is that the partition function is very hard to calculate in practice. In principle, the sum contains infinitely many terms. Moreover, even calculating the vertex amplitude can be difficult, since it is typically a highly oscillatory function whose definition involves multidimensional sums or integrals and which depends on the data of the vertex spin network, i.e., the face and edge labels surrounding $v$.

The development of various numerical methods [28], each tailored to a different regime, has played a pivotal role in driving advances in spinfoam models. Highly optimised, high-performance computing libraries such as sl2cfoam [29] and its successor sl2cfoam-next [30,31] enable efficient evaluation of Engle–Pereira–Rovelli–Livine (EPRL) amplitudes [27,32] and have been used to study various aspects of spinfoam models [33,34,35]. Monte Carlo methods, enhanced by Lefschetz thimble techniques to deform integration contours, have also been used in the context of spinfoams [36]. Different sampling methods for representation labels, such as importance sampling and random sampling of the bulk spins, as well as generative flow networks, have been used to improve the convergence of spinfoam amplitudes involving several simplices and to compute expectation values of observables efficiently [37,38]. Monte Carlo methods were also used to compute the vertex amplitude for a given set of coherent states as boundary data [39]. Recently, tensor-network methods and techniques from many-body quantum physics have been used to significantly reduce both the computational complexity and the memory requirements of computing SU(2) and EPRL spinfoam vertex amplitudes [40].

In the large-spin or asymptotic regime, numerical programs such as the complex critical points program [41,42,43] have been developed to identify semi-classical geometries in the limit of large representations, while a hybrid program was proposed in [44] to bridge the quantum and semi-classical regimes. Numerical methods [45,46] based on symmetry reductions have also been employed to study the renormalisation aspects of spinfoam models.

Despite this considerable effort in both analytical and numerical approaches (see also [47,48,49,50,51,52]), the computation of vertex amplitudes remains a challenging problem. This motivates the exploration of alternative approaches to the inherent computational complexity. The goal of the present work is to establish a new approach to accelerating spinfoam computations by showing that the vertex amplitude is an object that can be learned from data using deep learning. In its simplest form, a trained neural network would approximate the vertex amplitude $\mathcal{A}_v$ by interpolating from training data. In a more ambitious form, the neural network would learn to extrapolate well beyond its training domain in certain spin regimes. A further aim of this work is to complement numerical implementations of exact analytical methods by helping identify dominant configurations, guiding importance sampling and enabling efficient pre-selection in possibly large parameter spaces.

While neural networks have been used in the context of spinfoam computations to calculate expectation values of observables [38] by learning the boundary configurations that contribute large amplitudes, the current work is, as far as we are aware, the first in which the vertex amplitude itself is learned by a neural network. Consequently, we aim for a proof-of-principle, not an application to state-of-the-art spinfoam models. To keep things as non-technical as possible, we consider a very well-studied and non-trivial model, the Euclidean Barrett–Crane model (BC model) [53,54,55,56,57]. While interesting, this model is nowadays understood to be unphysical [58]. The action for the path integral of this theory derives from the Plebanski (or $BF$-theory plus simplicity constraints) action [59]

$$
S[B, \omega, \phi] = \int_{\mathcal{M}} \left( B_{IJ} \wedge F^{IJ}[\omega] + \frac{1}{2}\, \phi_{IJKL}\, B^{IJ} \wedge B^{KL} \right),
$$

where $B$ is an so(4)-valued 2-form, $\omega$ is an so(4) connection with curvature $F[\omega]$, and $\phi$ is a matrix-valued Lagrange multiplier enforcing the simplicity constraints that reduce topological $BF$-theory to the familiar Palatini action [55,57].¹

Exploiting $\mathrm{Spin}(4) \cong \mathrm{SU}(2) \times \mathrm{SU}(2)$, the BC model imposes the simplicity constraints strongly, thereby restricting every face to a balanced representation $(j, j)$. While this drastic reduction yields a manageable state sum, it also freezes the intertwiner degrees of freedom, eliminating physical degrees of freedom and effectively rendering the model unphysical [58]. More refined models, such as the EPRL or Engle–Pereira–Rovelli–Livine–Freidel–Krasnov (EPRL-FK) [60] models, impose the constraints weakly. They reintroduce an SU(2) intertwiner label, depend non-trivially on the Barbero–Immirzi parameter $\gamma$ and reproduce the BC model (intertwiner) in suitable limits of $\gamma$ [25].

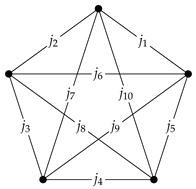

We will consider the BC model on a simplicial complex. Its vertex amplitude then takes the characteristic and compact form [61]

where $\{10j\}$ denotes the Riemannian 10j symbol and $k$ is an integer that parametrises the choice of triangle weight in the measure.²

The Riemannian 10j symbol is a function of 10 spins obtained by evaluating the following closed SU(2) × SU(2) spin-network on a flat connection [62]

where the vertices are labelled by Barrett–Crane intertwiners. One can also define a modified 10j symbol by using modified Barrett–Crane intertwiners, written as weighted sums of SU(2) spin-networks [62]. Since the value of a disjoint union of spin-networks is simply the product of their values, it follows that the modified 10j symbol is [62]

Given the choice of splitting shown above, the modified 10j symbol can be shown to be always non-negative, and it is related to the 10j symbol by [62]

Given the central role of the 10j symbol in the vertex amplitude of this model, several algebraic and numerical algorithms [63,64,65] for evaluating it, as well as its large-spin asymptotics, have been extensively developed and studied; yet the computational cost still scales unfavourably with the magnitudes of the spins.

In this work, we explore a data-driven alternative: casting the evaluation of the 10j symbol, and thus of the vertex amplitude of the Barrett–Crane model, as a supervised learning problem for deep neural networks. Concretely, we (i) generate a comprehensive training set of exact 10j symbol values using an optimised algorithm, (ii) break the learning task down into classification and regression tasks and (iii) quantify the fidelity and generalisation of the learned amplitude on spins that lie outside the training domain. The goals of this work are twofold. First, we demonstrate a proof-of-principle: (high-dimensional) vertex amplitudes of spinfoam models are amenable to modern deep learning techniques. Second, we lay the groundwork for accelerating numerical investigations of more realistic models. Because the EPRL/FK vertex reduces to its BC counterpart in specific limits, the aim is for the models and tools used and developed in this work to be ported, with appropriate modifications capturing the $\gamma$-dependence, to state-of-the-art SF models.

The presentation of the work is as follows:

- (i) In Section 2, we start by clearly stating the goals of the current work and presenting the methodology used.

- (ii) In Section 2.1, we discuss the generation of training data and the pre-processing of the resulting datasets prior to training.

- (iii) Section 2.2 discusses the specific architecture of the networks used in this work and the encoding schemes used to facilitate the learning process.

- (iv) Section 2.3 concerns the training protocols for both the classification and regression tasks, as well as the metrics with which we evaluate the networks after training.

- (v) In Section 3, we present the training results for both the classification and regression tasks.

- (vi) Lastly, in Section 4, we review the issues encountered in this work and its limitations, discuss how it relates to future models and present some avenues for exploring these problems.

2. Methodology

As mentioned, this study serves as a proof-of-principle addressing whether a neural network can learn the vertex amplitude of a given spinfoam model, in this case the Euclidean Barrett–Crane model. Our approach is supervised learning (SL). We split the problem into two tasks, roughly outlined as follows. First, a classification task in which, given a dataset of spin configurations $\mathbf{j}$ and corresponding amplitudes $\{10j\}(\mathbf{j})$, we train a classifier $\mathcal{C}$ to determine whether the corresponding amplitude is zero or not. Second, a regression task in which, given the same dataset, we train a regressor $\mathcal{R}$ to predict the correct amplitude for a given spin configuration $\mathbf{j}$. We then construct a meta “Expert” network $\mathcal{E}$, which combines $\mathcal{C}$ and $\mathcal{R}$ to provide the predicted amplitude for a given configuration $\mathbf{j}$.

We proceed by detailing the components of the implementation in their natural order. We first briefly describe data acquisition and pre-processing, followed by the network architectures and encoding schemes. Next, we outline the training protocol, hyperparameter choices and monitored metrics. Following that, for each task, we present the results for these evaluation metrics. Limitations, technical details and ablation studies are discussed in the Discussion (Section 4).

2.1. Data Generation and Processing

The core component of the vertex amplitude in the Euclidean Barrett–Crane model is the 10j symbol, which can be negative, positive or zero. To simplify our task, we focus on learning the square of the 10j symbol for both classification and regression. The appropriate sign factor can easily be reconstructed from the given spin configuration. This reduces the complexity of the classification task while still allowing us to reconstruct the correct sign of the learned 10j symbol. For regression, the square root of the prediction can be taken at inference. The tools and software used in this work are all Python-based. To facilitate the training process, the algorithm presented in [63] and the corresponding C implementation provided therein³ have therefore been rewritten in Python and accelerated by just-in-time compilation with numba [66], giving run times comparable to those of the C implementation.

For both tasks, we train the networks within a specified spin cutoff. That is, for a given cutoff spin $j_{\max}$, the spin configurations considered are $\mathbf{j}^{(j_{\max})} = (j_1, \dots, j_{10})$ with $j_i \in \{0, \tfrac{1}{2}, 1, \dots, j_{\max}\}$, where the superscript indicates the cutoff to which the configuration belongs. The following datasets are created:

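- (i) For classification: a dataset $\mathcal{D}_{\mathrm{C}}$ containing pairs of spin configurations and their corresponding signs $\operatorname{sgn}(\{10j\}^2(\mathbf{j}))$. Since $\{10j\}^2 \geq 0$, it follows that $\operatorname{sgn}(\{10j\}^2(\mathbf{j})) \in \{0, 1\}$ for any $\mathbf{j}$. This dataset contains one data point for every possible configuration in a given cutoff, i.e., $(2j_{\max} + 1)^{10}$ data points.

- (ii) For regression: a dataset $\mathcal{D}_{\mathrm{R}}$ containing pairs of spin configurations and their corresponding target values (the logarithm of $\{10j\}^2$, involving a small correction factor $\epsilon$). Unlike $\mathcal{D}_{\mathrm{C}}$, this dataset contains data points only for those configurations in a given cutoff that have a non-zero value. Thus, $|\mathcal{D}_{\mathrm{R}}| \leq |\mathcal{D}_{\mathrm{C}}|$ always.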

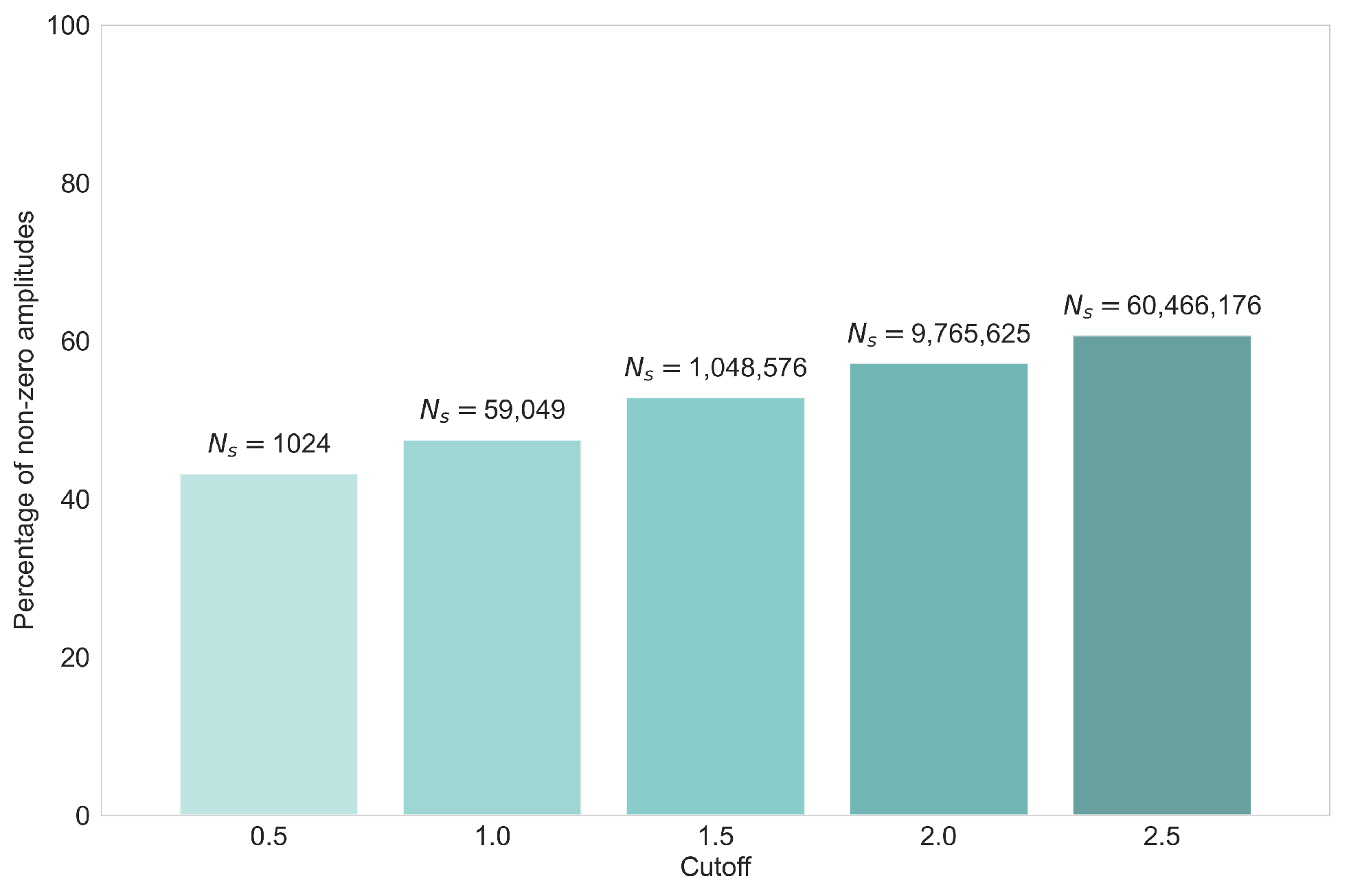

For small enough cutoffs, such datasets can be obtained simply by full enumeration of the configuration space. To understand the distribution of the potential training data, one can compare the number of configurations with non-zero $\{10j\}^2$ across different cutoffs.
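As a minimal sketch of what full enumeration involves, the snippet below lists the spins $0, \tfrac12, \dots, j_{\max}$ exactly (as `Fraction`s, to avoid floating-point keys) and iterates over all ten-spin configurations. The function names are hypothetical, and evaluating the 10j symbol itself (e.g., with the algorithm of [63]) is deliberately left out; only the size of the space, $(2j_{\max}+1)^{10}$, is checked.

```python
from fractions import Fraction
from itertools import product


def spin_values(j_max):
    """All admissible spins 0, 1/2, 1, ..., j_max (j_max a half-integer)."""
    two_j_max = int(2 * Fraction(j_max))
    return [Fraction(n, 2) for n in range(two_j_max + 1)]


def configurations(j_max):
    """Yield every 10-spin configuration with each j_i <= j_max."""
    yield from product(spin_values(j_max), repeat=10)


def num_configurations(j_max):
    """Size of the configuration space at cutoff j_max: (2 j_max + 1)^10."""
    return (int(2 * Fraction(j_max)) + 1) ** 10
```

At $j_{\max} = 1.5$ this is already $4^{10} = 1\,048\,576$ configurations, which illustrates why full enumeration is only practical for small cutoffs.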

As shown in Figure 1, there is a clear imbalance between the number of configurations that yield a zero amplitude and those that yield a non-zero amplitude. In fact, as the cutoff increases, the percentage of non-zero amplitude configurations increases drastically, reaching over 60% already at

. Further, the value of $\{10j\}^2$ can vary by more than 22 orders of magnitude even at small cutoffs, such as

. This poses two hurdles: (i) a class imbalance for classification and (ii) a very large range of $\{10j\}^2$ values for regression.

2.2. Network Architecture and Encoding Schemes

As this study is a proof-of-principle, little emphasis was put on constructing a sophisticated network architecture for either task. Remarkably, as will be shown, simple architectures suffice for both tasks. A multi-layer perceptron (MLP) [67,68] was used for both the classification and regression tasks. The classifier $\mathcal{C}$ consisted of an MLP with one hidden layer (depth 1) of 128 hidden nodes (width 128). A rectified linear unit (ReLU) activation function,

$$
\mathrm{ReLU}(x) = \max(0, x),
$$

was used to introduce the non-linearity. The input to the classifier $\mathcal{C}$ was simply the spin configuration $\mathbf{j}$. The architecture of the classifier remained the same irrespective of the training cutoff, with only 1537 trainable parameters.
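The quoted parameter count can be checked by hand. Assuming the classifier maps the 10 raw spins to 128 ReLU units and then to a single output unit, with a bias in every layer (our reading of the description above; in PyTorch terms, `nn.Sequential(nn.Linear(10, 128), nn.ReLU(), nn.Linear(128, 1))`), the count is $10 \cdot 128 + 128 + 128 \cdot 1 + 1 = 1537$. The helper below is a hypothetical utility performing this arithmetic for any fully connected layout.

```python
def mlp_param_count(layer_sizes):
    """Number of trainable parameters of a dense MLP with biases.

    layer_sizes = [n_input, n_hidden_1, ..., n_output]; each dense layer
    contributes n_in * n_out weights plus n_out biases.
    """
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]))


# Classifier as described: 10 spins -> 128 hidden units -> 1 output.
classifier_params = mlp_param_count([10, 128, 1])
print(classifier_params)  # 1537
```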

For the regressor $\mathcal{R}$, an MLP was used as well. Unlike for $\mathcal{C}$, the depth and width of $\mathcal{R}$ varied depending on the cutoff $j_{\max}$ chosen during training. For example, at $j_{\max} = 1.0$, $\mathcal{R}$ had a depth of 6 and a width of 256, while for $j_{\max} = 1.5$, it had a depth of 5 and a width of 512. Different activation functions were considered and tested. Ultimately, a Gaussian error linear unit (GELU) activation function was used,

$$
\mathrm{GELU}(x) = x\, \Phi(x),
$$

where $\Phi(x)$ is the cumulative distribution function of the standard Gaussian distribution. Unlike that of ReLU, the derivative of the GELU function is continuous at the origin. A smooth activation allows the optimiser to track higher-order curvature and avoids the kinks that piecewise-linear units such as ReLU introduce. Further, while ReLU either passes the signal unchanged ($x$) or blocks it ($0$), the GELU multiplies the input $x$ by the probability that a sample drawn from a standard Gaussian lies below $x$. Small inputs are thus only tempered, not annihilated, which preserves information carried by low-magnitude features (inputs).
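To make the contrast concrete, the following sketch evaluates ReLU and the exact GELU, $x\,\Phi(x)$ with $\Phi(x) = \tfrac12[1 + \operatorname{erf}(x/\sqrt{2})]$, using only the standard library. It is an illustration rather than the code used in this work; in PyTorch, the same exact form is provided by `torch.nn.GELU()` with its default `approximate='none'`.

```python
import math


def relu(x):
    """ReLU(x) = max(0, x): passes positive inputs, zeroes the rest."""
    return max(0.0, x)


def gaussian_cdf(x):
    """Standard normal CDF, Phi(x) = (1 + erf(x / sqrt(2))) / 2."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def gelu(x):
    """Exact GELU(x) = x * Phi(x): the input is scaled, not gated off."""
    return x * gaussian_cdf(x)


for x in (-2.0, -0.5, 0.0, 0.5, 2.0):
    print(f"x={x:+.1f}  relu={relu(x):.4f}  gelu={gelu(x):+.4f}")
```

For negative inputs close to zero, GELU returns small negative values rather than exactly 0, which is the "tempered, not annihilated" behaviour described above.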

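While the classifier takes the configuration $\mathbf{j}$ as input directly, the regressor does not. The input to $\mathcal{R}$ is encoded using an indexing function $\iota$. For a single spin $j \in \{0, \tfrac{1}{2}, \dots, j_{\max}\}$, let $\iota(j) = 2j$, so that $\iota(j) \in \{0, 1, \dots, 2j_{\max}\}$. Let $e_r \in \mathbb{R}^{2j_{\max}+1}$ be the standard $r$-th basis vector, $r = 0, \dots, 2j_{\max}$. We define the one-hot encoding of a single spin to be the map $h(j) = e_{\iota(j)}$, which places a 1 exactly at the coordinate corresponding to the value of $j$. The one-hot encoding of a configuration is then the concatenation $H(\mathbf{j}) = h(j_1) \oplus \dots \oplus h(j_{10}) \in \mathbb{R}^{10(2j_{\max}+1)}$. Simply put, while the classifier takes as input a configuration of 10 values at a given $j_{\max}$, the regressor takes as input $10(2j_{\max}+1)$ values at the same $j_{\max}$.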
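A minimal sketch of this encoding, with hypothetical function names and plain Python lists standing in for tensors, is given below; it uses $\iota(j) = 2j$ as the index and raises an error for spins outside $\{0, \tfrac12, \dots, j_{\max}\}$. In PyTorch, applying `torch.nn.functional.one_hot` to the integer indices $2j_i$ with `num_classes` set to $2j_{\max}+1$, followed by flattening, would produce the same vector.

```python
from fractions import Fraction


def spin_index(j):
    """Indexing function iota(j) = 2j, mapping 0, 1/2, 1, ... to 0, 1, 2, ..."""
    two_j = 2 * Fraction(j)
    if two_j.denominator != 1 or two_j < 0:
        raise ValueError(f"{j} is not a non-negative half-integer")
    return int(two_j)


def one_hot_spin(j, j_max):
    """One-hot vector of length 2*j_max + 1 with a single 1 at iota(j)."""
    size = spin_index(j_max) + 1
    r = spin_index(j)
    if r >= size:
        raise ValueError(f"spin {j} exceeds cutoff {j_max}")
    vec = [0] * size
    vec[r] = 1
    return vec


def one_hot_configuration(config, j_max):
    """Concatenate the ten per-spin one-hot vectors: length 10*(2*j_max+1)."""
    encoded = []
    for j in config:
        encoded.extend(one_hot_spin(j, j_max))
    return encoded


config = (0, 0.5, 1, 1, 0.5, 0, 1, 0.5, 0.5, 1)
x = one_hot_configuration(config, j_max=1.0)
print(len(x), sum(x))  # 30 10
```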

The motivation for the encoding comes from empirical observations during training. Namely, the network performed poorly on un-encoded inputs because it attempted to relate the inputs in a purely arithmetic manner (e.g., the network prediction being merely the mean of the inputs). Consequently, we convert every spin into a pure indicator vector rather than feeding its numeric value directly. While this may not be an optimal encoding for every problem, it is sufficient for our case: it removes any artificial ordinality and avoids introducing arbitrary distances, relations or embeddings that the network might exploit as if they were physically meaningful, allowing it to treat the spin values merely as categorical symbols. The encoding also remains compatible with transfer learning across cutoffs. Thus, the chosen encoding provides a minimal, symmetry-respecting⁴ representation that supports generalisation and compatibility across cutoffs. Note that one can, in principle, choose any faithful encoding map, not necessarily the one above, and this choice may play a crucial role in the performance of the network. Other encodings, such as learned embeddings or sinusoidal schemes, could be explored in future work depending on the target model and computational constraints.

2.3. Training Protocol and Hyperparameter Choices

This work uses PyTorch [69] for all neural-network-related aspects. A non-exhaustive preliminary assessment via automatic hyperparameter tuning with optuna [70] was carried out to estimate the best hyperparameters. The following parameters, shared by both tasks, were obtained. The networks in both tasks were trained with a mini-batch AdamW optimiser [71] with a weight decay of

and a 1-cycle learning rate schedule with a peak step size of

for the case of the regressor and

for the case of the classifier. The batch size was 8 for the classification task and 256 for the regression task. For both tasks, training takes place in the low-spin regime, on the premise that the behaviour observed there can be extrapolated to further training at higher spins. In what follows, we outline task-specific evaluation metrics and further training protocols.
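For readers unfamiliar with the 1-cycle policy, the sketch below computes the per-step learning rate of a cosine-annealed, two-phase schedule. The default constants (`pct_start=0.3`, `div_factor=25`, `final_div_factor=1e4`) follow our understanding of the defaults of PyTorch's `torch.optim.lr_scheduler.OneCycleLR`; whether those defaults were used here is an assumption, and `max_lr=1e-3` in the example is a placeholder, not the peak step size used in this work.

```python
import math


def _cos_anneal(start, end, pct):
    """Cosine interpolation from start (pct=0) to end (pct=1)."""
    return end + (start - end) / 2.0 * (1.0 + math.cos(math.pi * pct))


def one_cycle_lr(step, total_steps, max_lr, pct_start=0.3,
                 div_factor=25.0, final_div_factor=1e4):
    """Learning rate at `step` of a two-phase, cosine-annealed 1-cycle schedule.

    Warm-up: max_lr / div_factor -> max_lr over the first pct_start of steps.
    Annealing: max_lr -> initial_lr / final_div_factor over the remainder.
    """
    initial_lr = max_lr / div_factor
    min_lr = initial_lr / final_div_factor
    peak_step = pct_start * total_steps - 1
    last_step = total_steps - 1
    if step <= peak_step:
        return _cos_anneal(initial_lr, max_lr, step / peak_step)
    return _cos_anneal(max_lr, min_lr, (step - peak_step) / (last_step - peak_step))


schedule = [one_cycle_lr(s, total_steps=100, max_lr=1e-3) for s in range(100)]
```

The rate rises from `max_lr/25` to `max_lr` over the first 30% of steps and then decays far below its starting value, which is the warm-up-then-anneal behaviour the schedule is named for.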

2.3.1. Classification Metrics and Protocol

In what follows, we often refer to $\operatorname{sgn}(\{10j\}^2(\mathbf{j}))$ for a given configuration $\mathbf{j}$ as its label. In this binary classification task, the label is either 0 or 1. The classifier was optimised with respect to a weighted binary cross-entropy loss function,

$$
\mathcal{L}_{\mathrm{BCE}} = -\frac{1}{N} \sum_{i=1}^{N} \left[ w_1\, y_i \log p_i + w_0\, (1 - y_i) \log(1 - p_i) \right],
$$

as is standard for such classification tasks, where $y_i$ denotes the actual binary label (0 or 1) of the $i$-th observation, $p_i$ denotes the predicted probability that the $i$-th observation is in class 1 and $N$ is the total number of observations. Here, $w_0$ and $w_1$ are class weights used to neutralise the imbalance between zero and non-zero amplitudes by amplifying the contribution of the underrepresented class during training. Such a weighted scheme is one way to ensure that the network maintains strong performance in both precision and recall, especially for higher cutoffs, where the proportion of non-zero configurations increases. As shown in the following sections, the evaluation metrics confirm that this approach successfully mitigates the imbalance. The weights $w_0$ and $w_1$ can simply be chosen to be inversely proportional to the respective class frequencies.
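The weighted loss, together with one common way of choosing inverse-frequency weights, $w_c = N / (2 N_c)$ with $N_c$ the number of observations in class $c$ (the rule scikit-learn calls "balanced"), can be sketched as follows. This particular normalisation of the weights is our assumption, since only proportionality to the inverse class frequencies is stated; the helper names are hypothetical, and both classes must be present in `labels`.

```python
import math


def inverse_frequency_weights(labels):
    """Class weights w_c = N / (2 * N_c), inversely proportional to class counts."""
    n = len(labels)
    n_pos = sum(labels)
    n_neg = n - n_pos
    return n / (2.0 * n_neg), n / (2.0 * n_pos)  # (w_0, w_1)


def weighted_bce(labels, probs, w0, w1, eps=1e-12):
    """Mean weighted binary cross-entropy over N observations."""
    total = 0.0
    for y, p in zip(labels, probs):
        p = min(max(p, eps), 1.0 - eps)  # clip to keep log() finite
        total += -(w1 * y * math.log(p) + w0 * (1 - y) * math.log(1.0 - p))
    return total / len(labels)


labels = [0, 0, 0, 1]  # 3 zero-amplitude configurations, 1 non-zero
w0, w1 = inverse_frequency_weights(labels)
loss = weighted_bce(labels, [0.1, 0.2, 0.1, 0.7], w0, w1)
```

With three zero-amplitude and one non-zero configuration, $w_0 = 2/3$ and $w_1 = 2$, so both classes contribute the same total weight to the loss.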

The training protocol is carried out cutoff-wise. First, a network is trained and evaluated at a cutoff of 0.5. Since the network architecture is the same for all cutoffs, transfer learning was used: the network was retrained at a cutoff of 1.0, starting from the parameters optimised at the preceding cutoff, and then evaluated again. This protocol continued up to a cutoff of 2.0. The training dataset starts at 75% of all available configurations at a cutoff of 0.5. As transfer learning was carried out successively at higher cutoffs, the training dataset size was decreased incrementally to establish a minimal dataset size, i.e., the smallest dataset size required to achieve the best evaluation metrics. Note that the datasets were collected blindly, and no active binning in terms of cutoffs was employed. While such binning is possible, it was intentionally avoided in order to test the model’s capacity to learn from blind data.

Several metrics were evaluated after each cutoff training cycle:

- (i) Hard accuracy, defined as

$$
\mathrm{Acc}_{\mathrm{hard}} = \frac{1}{N} \sum_{i=1}^{N} \mathbb{1}\left[ \theta(p_i) = y_i \right],
$$

which counts, on a test sample of size $N$, how many network-predicted labels $\theta(p_i)$ exactly match the true labels $y_i$. Here, $\theta$ is a decision threshold function that returns 1 if $p_i$ lies above the decision threshold and 0 otherwise.

- (ii) Soft accuracy, defined as

$$
\mathrm{Acc}_{\mathrm{soft}} = 1 - \frac{1}{N} \sum_{i=1}^{N} \left| y_i - p_i \right|,
$$

which measures how far off the network predictions are from the true labels for a given test batch of size $N$.

- (iii) Precision [72], defined as

$$
\mathrm{Precision} = \frac{\mathrm{TP}}{\mathrm{TP} + \mathrm{FP}},
$$

where TP denotes true positives (data points with label 1 that the network predicts correctly) and FP denotes false positives (data points with label 0 that the network incorrectly predicts to have label 1). Precision is the fraction of all predicted non-zero states that are correct; it focuses on the quality of positive predictions and measures how trustworthy the network is when it predicts a non-zero label.

- (iv) Recall [72], the ability to correctly identify all non-zero configurations, defined as

$$
\mathrm{Recall} = \frac{\mathrm{TP}}{\mathrm{TP} + \mathrm{FN}},
$$

where FN denotes false negatives (data points with label 1 that the network incorrectly predicts to have label 0). A high recall indicates that the network rarely fails to label a non-zero configuration correctly.

- (v) $F_1$ score [72], defined as

$$
F_1 = 2\, \frac{\mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}},
$$

the harmonic mean of precision and recall, which represents both symmetrically in a single metric. The highest obtainable $F_1$ score of 1.0 indicates perfect precision and recall.

Additionally, for every cutoff training round, a confusion matrix evaluated on the entire configuration space is computed. This gives a clear picture of the numbers of false negatives (FN) and false positives (FP) produced by the network.
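The classification metrics and the confusion-matrix counts can be computed together, as in the following minimal sketch. The decision threshold of 0.5 is an assumption of the sketch, and the zero-division guards are a convention of the sketch rather than part of the definitions above.

```python
def confusion_counts(labels, probs, threshold=0.5):
    """Return (TP, FP, FN, TN) after thresholding probabilities at `threshold`."""
    tp = fp = fn = tn = 0
    for y, p in zip(labels, probs):
        pred = 1 if p >= threshold else 0
        if pred == 1 and y == 1:
            tp += 1
        elif pred == 1:
            fp += 1
        elif y == 1:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def classification_metrics(labels, probs, threshold=0.5):
    """Hard/soft accuracy, precision, recall, F1 and the confusion counts."""
    tp, fp, fn, tn = confusion_counts(labels, probs, threshold)
    n = len(labels)
    hard_acc = (tp + tn) / n
    soft_acc = 1.0 - sum(abs(y - p) for y, p in zip(labels, probs)) / n
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"hard_acc": hard_acc, "soft_acc": soft_acc,
            "precision": precision, "recall": recall, "f1": f1,
            "confusion": (tp, fp, fn, tn)}


m = classification_metrics([1, 1, 0, 0, 1], [0.9, 0.4, 0.2, 0.6, 0.8])
```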

2.3.2. Regression Metrics and Protocol

Unlike in the classification task, the regressor was trained at a single chosen cutoff, and no transfer learning was used. Therefore, a regressor trained at a cutoff of 0.5 would only be evaluated on configurations $\mathbf{j}^{(0.5)}$. This is because the regressor's architecture changes with the cutoff, which makes transfer learning much harder, although not impossible. For all cutoffs, the training data consisted of 85% of all available non-zero configurations at the given cutoff. Since the labels in this task span a very large range, the training dataset was not collected blindly. Rather, the entire space was first enumerated, after which the amplitudes were binned according to their magnitude. The training dataset of 85% of all non-zero data points was then stratified according to those bins, ensuring sufficient representation across all available magnitudes.
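One way to implement such magnitude-stratified sampling is sketched below: labels are binned by their order of magnitude, $\lfloor \log_{10} \{10j\}^2 \rfloor$, and the same 85% fraction is drawn from every bin with a fixed seed. The decade-wide bins and the helper names are illustrative assumptions; the binning used in this work may differ.

```python
import math
import random
from collections import defaultdict


def magnitude_bin(value):
    """Bin a positive label by its order of magnitude, floor(log10(value))."""
    return math.floor(math.log10(value))


def stratified_split(samples, train_fraction=0.85, seed=0):
    """Split (config, value) pairs so every magnitude bin keeps train_fraction.

    Only non-zero values are expected, as in the regression dataset.
    """
    bins = defaultdict(list)
    for config, value in samples:
        bins[magnitude_bin(value)].append((config, value))
    rng = random.Random(seed)
    train, test = [], []
    for members in bins.values():
        rng.shuffle(members)
        cut = round(train_fraction * len(members))
        train.extend(members[:cut])
        test.extend(members[cut:])
    return train, test
```

Because the fraction is applied per bin, rare very small or very large amplitudes are represented in the training set in the same proportion as common ones.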

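The loss function for this task was chosen to be the Huber loss [73],

$$
\mathcal{L}_{\delta} = \frac{1}{N} \sum_{i=1}^{N} \ell_{\delta}(a_i), \qquad
\ell_{\delta}(a) =
\begin{cases}
\frac{1}{2} a^2, & |a| \leq \delta, \\
\delta \left( |a| - \frac{1}{2} \delta \right), & |a| > \delta,
\end{cases}
$$

where $a_i = \hat{y}_i - y_i$. For $\delta = 1$, this is equivalent to the smooth L1 loss function. Here, $\hat{y}_i$ denotes the network-predicted logarithm of the squared 10j symbol of the $i$-th configuration and $y_i$ the corresponding true value. For any training cutoff, the following metrics were observed after training: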

- (i) Root mean squared error (RMSE) in log space, defined as

$$
\mathrm{RMSE}_{\log} = \sqrt{ \frac{1}{N} \sum_{i=1}^{N} \left( \hat{y}_i - y_i \right)^2 },
$$

where $\hat{y}_i$ and $y_i$ are the predicted and true logarithms of the squared 10j symbol. Note that the RMSE is sensitive to errors in large-valued labels. It is applied in log space because the labels span many orders of magnitude; this ensures that large values do not disproportionately dominate the error.

- (ii) Median absolute deviation (MAD) in log space, defined as

$$
\mathrm{MAD}_{\log} = \operatorname*{median}_{i} \left| \hat{y}_i - y_i \right|,
$$

which also measures the error in log space. Unlike the RMSE, this metric is robust to outliers and better reflects the typical prediction deviation.

- (iii) Mean absolute percentage error (MAPE), given by

$$
\mathrm{MAPE} = \frac{100\%}{N} \sum_{i=1}^{N} \left| \frac{\hat{z}_i - z_i}{z_i} \right|,
$$

where $z_i$ is the true squared 10j value and $\hat{z}_i$ the prediction mapped back from log space. It can be interpreted as the mean relative error of the predictions over a test set of size $N$. Note that this metric is not computed in log space.

- (iv) Threshold accuracy, given by

$$
\mathrm{Acc}_{\tau} = \frac{100\%}{N} \sum_{i=1}^{N} \mathbb{1}\left[ \left| \frac{\hat{z}_i - z_i}{z_i} \right| < \tau \right],
$$

which indicates how many predictions in a test set of size $N$ have a relative error below a specified threshold $\tau$. In this work, we take $\tau = 0.1$; thus, $\mathrm{Acc}_{0.1}$ measures how many predictions fall within 10% relative error of the true value. Once again, this metric is not computed in log space.

Additionally, we compute the coefficient of determination $R^2$, as well as a true vs. prediction plot, after every cutoff training cycle.
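The loss and metrics above can be sketched in a few lines of plain Python. This is an illustration under stated assumptions: natural logarithms are assumed, so that the relative error in linear space is $|e^{\hat{y}_i - y_i} - 1|$, and $R^2$ is evaluated on the log-space targets; the text does not fix either choice. PyTorch's `torch.nn.HuberLoss(delta=1.0)` computes the same mean-reduced Huber loss.

```python
import math
from statistics import median


def huber(residual, delta=1.0):
    """Huber loss of one residual a: a^2/2 if |a| <= delta, else delta*(|a| - delta/2)."""
    a = abs(residual)
    return 0.5 * a * a if a <= delta else delta * (a - 0.5 * delta)


def regression_metrics(y_true_log, y_pred_log, tau=0.1):
    """Metrics for log-space predictions; MAPE and threshold accuracy use linear space.

    Assumes natural logarithms, so exp() maps back to the squared 10j values.
    """
    n = len(y_true_log)
    res = [p - t for p, t in zip(y_pred_log, y_true_log)]
    rmse_log = math.sqrt(sum(r * r for r in res) / n)
    mad_log = median(abs(r) for r in res)
    # Relative error in linear space: |z_hat - z| / z = |exp(res) - 1|.
    rel = [abs(math.expm1(r)) for r in res]
    mape = 100.0 * sum(rel) / n
    threshold_acc = 100.0 * sum(e < tau for e in rel) / n
    mean_t = sum(y_true_log) / n
    ss_tot = sum((t - mean_t) ** 2 for t in y_true_log)
    r2 = 1.0 - sum(r * r for r in res) / ss_tot
    return {"huber": sum(huber(r) for r in res) / n, "rmse_log": rmse_log,
            "mad_log": mad_log, "mape": mape, "threshold_acc": threshold_acc,
            "r2": r2}
```

Computing the relative error as `expm1` of the log-space residual avoids exponentiating labels that may span more than 20 orders of magnitude.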

3. Training Results

The two criteria sought in this work for both tasks are (i) whether the networks perform well at the cutoff they are trained on and (ii) whether the networks can predict on test samples from a cutoff they have not been trained on. All computations were carried out on an Intel Xeon E3-1240 v5 with 4 cores at 3.5 GHz; no distributed, parallelised or GPU computations were used. We begin with the classification task. Carrying out the protocol described in Section 2.3.1 yielded the results shown in Table 1.

Table 1 shows the training and test loss for the classification task at different cutoffs. The training dataset size starts at 75% of the total number of available configurations at the cutoff $j_{\max} = 0.5$. As transfer learning is applied and training proceeds to higher cutoffs, the training dataset size decreases to 1.17% for

. Despite that, both training and test loss decrease steadily as the cutoff increases, indicating that the learning process is carried out successfully. The increase in the test and training loss for

is attributed to the relatively small training dataset size.

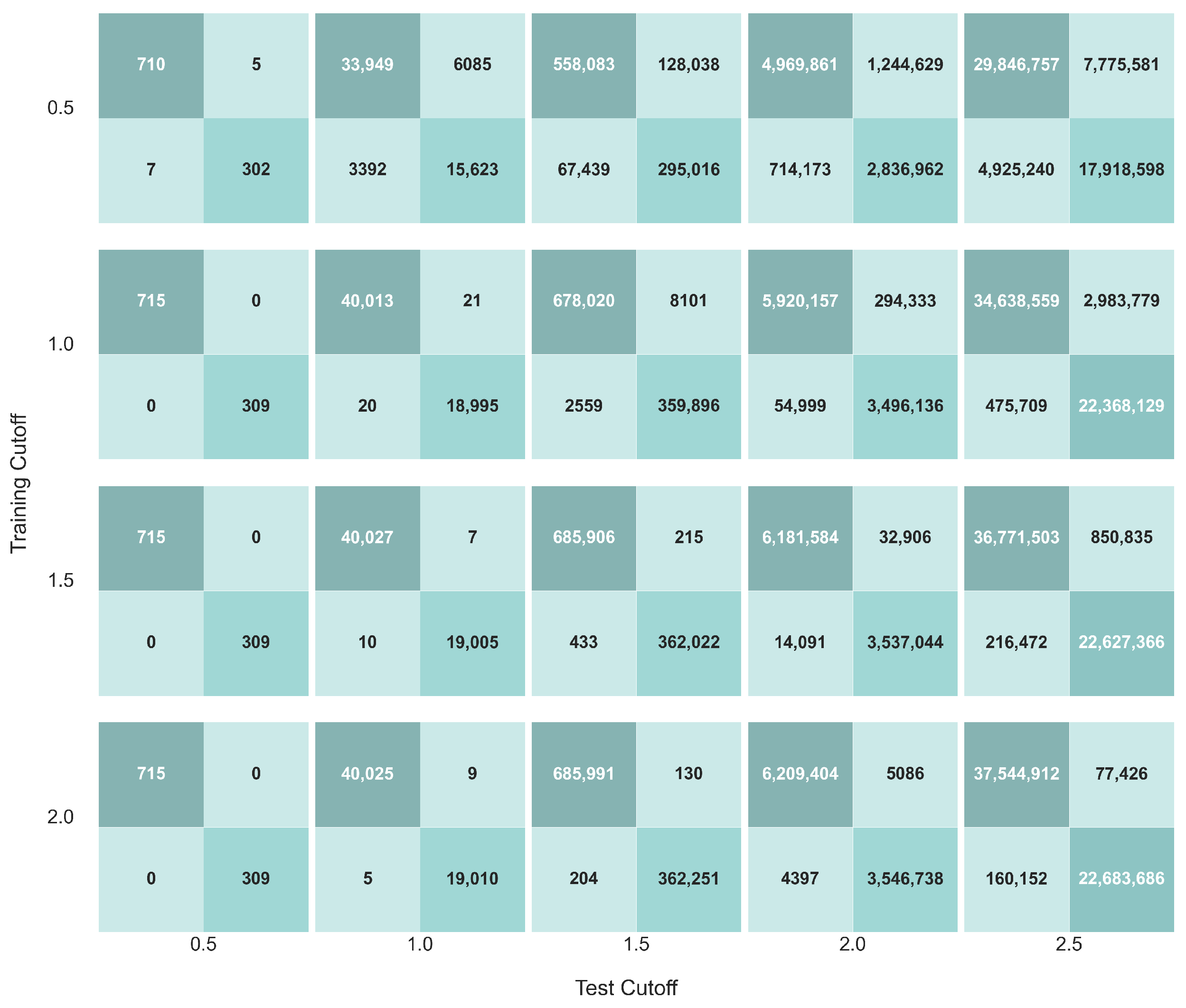

After each training cycle, the trained network was tested on all configurations for cutoffs

. The resulting metrics are listed in Table 2.

As shown in Table 2, all classification metrics are in an excellent range for the cutoff on which the network has been trained. More strikingly, the simple classifier also performs reasonably well when tested on higher cutoffs on which it has not been trained. Additionally, no catastrophic forgetting⁵ was observed. Further, as the classifier is trained on higher cutoffs using transfer learning, the metrics for test cutoffs above the training cutoff also improve drastically. For example, a classifier trained at

shows an $F_1$ score of 0.7383 when tested on

, while the same classifier retrained using transfer learning up to

has an $F_1$ score of 0.9947 for a test cutoff of

. This can be further elucidated by looking at the confusion matrices of the classifier at different training stages, shown in Figure 2.

Figure 2 shows the confusion matrices for the classifier at different stages of training, tested on different cutoffs at each stage. In a given confusion matrix, the top left quadrant denotes the true negatives, the top right quadrant denotes the false negatives, the bottom left quadrant denotes the false positives and the bottom right quadrant denotes the true positives. It is evident that as the classifier progresses in the training stages, the number of false predictions in either class becomes lower. This can be more easily shown by looking at only the false negatives and false positives, as shown in

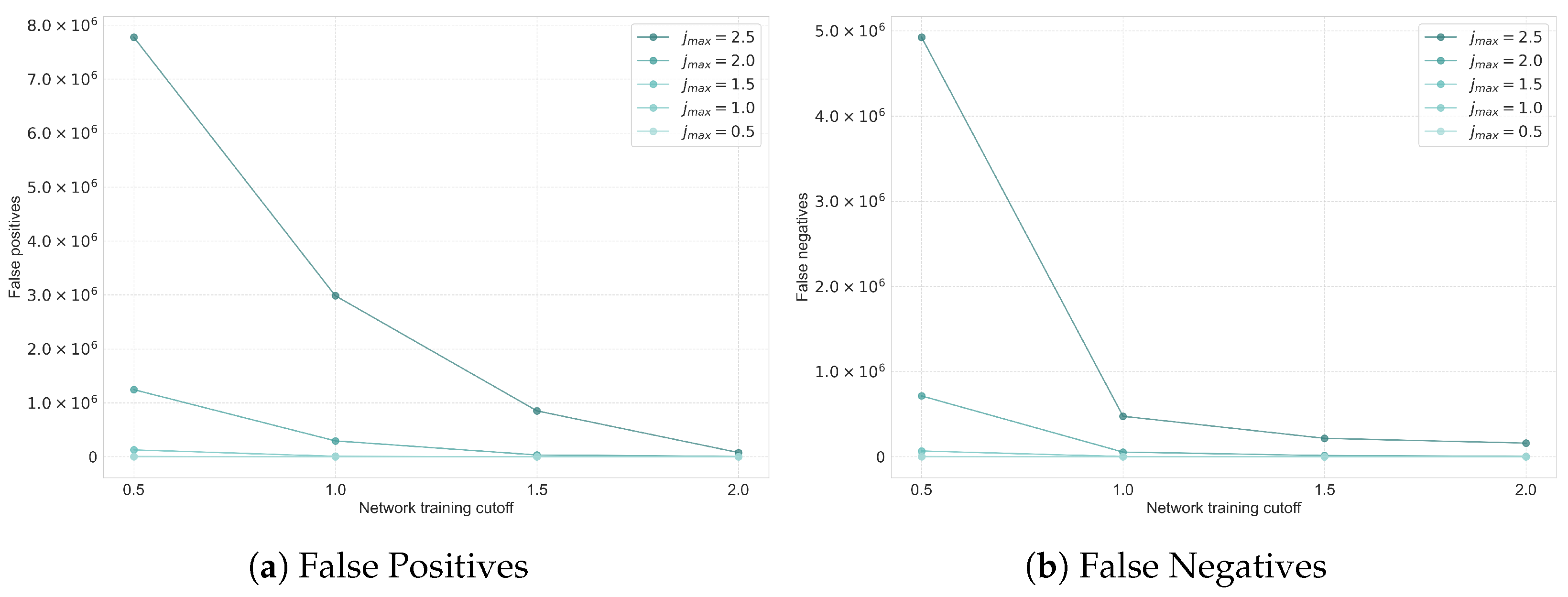

Figure 3.

As can be seen in Figure 3, both the false positives and the false negatives fall drastically. Perhaps most interestingly, the numbers of false negatives and false positives decrease even at cutoffs on which the classifier has not been trained (see the trend-line of

in both figures) as the classifier training progresses to higher cutoffs. The takeaway is that, despite the simple architecture of the classifier and the extremely small training datasets at higher cutoffs, the classifier generalises well to cutoffs beyond its training.

As discussed in the protocol in Section 2.3.2, we approach the regression task differently from the classification task. In what follows, we mainly focus on regressors trained at cutoffs of 1.0 and 1.5. Higher cutoffs are discussed in the Discussion (Section 4).

A regressor with a depth of 6, a width of 256 and GELU activation was used for training at $j_{\max} = 1.0$, while for $j_{\max} = 1.5$, the regressor had a depth of 5 and a width of 512. In both cases, training was conducted over 200 epochs, and the training dataset consisted of 85% of all configurations that yield a non-zero 10j symbol (16,162 and 308,086 data points for the cutoffs 1.0 and 1.5, respectively). No transfer learning was applied. Figure 4 shows the training loss for both regressors.

As shown in Figure 4, no irrecoverable or sustained spikes were observed. The resulting regression metrics are shown in Table 3.

As shown in Table 3, all metrics fall within a good range for such regression tasks. The regressor trained at a cutoff of 1.0 demonstrates excellent predictive accuracy, achieving a MAPE of 2.7587% and a threshold accuracy $\mathrm{Acc}_{0.1}$ of 94.3045%, indicating that the vast majority of predictions fall within a 10% relative error of the true values when the model is evaluated on all configurations that yield non-zero labels at this cutoff. Further, its $\mathrm{RMSE}_{\log}$ and $\mathrm{MAD}_{\log}$ values reflect a low dispersion of residuals and highlight the consistency of the regressor’s output. The high $R^2$ score implies that nearly all of the variance in the target variable is captured by the regressor.

In comparison, the model trained at $j_{\max} = 1.5$ performed slightly less accurately but nevertheless yielded promising results. The and values indicate that it maintains good predictive fidelity. While the and values are comparatively higher, they are still within acceptable bounds. Notably, the value for this regressor is even higher, indicating a better fit to the data. To further elucidate the performance of both regressors, we show True vs. Prediction plots.

Figure 5 shows the True vs. Prediction plots in log space for both regressors at the cutoff of 1.0 and 1.5 on the left and right, respectively. The data points evaluated in the plots constitute all data points at the cutoff which yield a non-zero label. As shown, most of the predicted labels align well with the true labels in both cases, with only a few predictions which fall far from the true label values. Overall, the regressors seem to be performing relatively well. No minimal training dataset investigation or transfer learning was applied in either case.

We also note that the trained regressors did not generalise well beyond their training domain. Several factors may contribute to this. For example, consider a regressor initially trained on a dataset at some cutoff $j_{\max}$ and subsequently fine-tuned on a dataset from the next cutoff, $j_{\max} + \tfrac{1}{2}$. The data from the original cutoff make up only a small fraction of what it sees during transfer learning, because the dataset at $j_{\max} + \tfrac{1}{2}$ subsumes the $j_{\max}$ dataset but also includes an overwhelmingly larger volume of new samples. This makes the regressor prone to catastrophic forgetting, wherein knowledge acquired during the initial training phase is overwritten or degraded during fine-tuning.

This effect may also be caused, or at least exacerbated, by the use of one-hot encoding, which treats each input category as orthogonal and independent. As new categories (i.e., higher spins) are introduced into the dataset, the model may assign high importance to these new features. This diminishes the influence of earlier, sparsely repeated categories. In any case, such behaviour is to be expected: extrapolation is generally a non-trivial task, and the highly oscillatory nature of vertex amplitudes makes it more difficult still.
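The orthogonality argument can be illustrated with a minimal sketch. It assumes that each of the ten spins is one-hot encoded separately over the values 0, 1/2, 1, ..., up to the cutoff. The exact encoding used in this work may differ, and the function names are hypothetical.

```python
import numpy as np

def spin_categories(cutoff):
    """Spins 0, 1/2, 1, ..., cutoff (assumed half-integer steps)."""
    return [k / 2 for k in range(int(round(2 * cutoff)) + 1)]

def one_hot_spin(j, cutoff):
    """One-hot vector of a single spin among the categories allowed at this cutoff."""
    cats = spin_categories(cutoff)
    vec = np.zeros(len(cats))
    vec[cats.index(j)] = 1.0
    return vec

def encode_config(spins, cutoff):
    """Concatenate the one-hot vectors of the ten spins of a 10j configuration."""
    return np.concatenate([one_hot_spin(j, cutoff) for j in spins])

config = [0.5] * 10
print(encode_config(config, 1.0).shape)  # (30,): 3 categories per spin
print(encode_config(config, 1.5).shape)  # (40,): a new category enlarges the input layer
# Every pair of distinct spins is orthogonal, whether adjacent (1/2 vs 1) or new (1 vs 3/2).
print(one_hot_spin(1.0, 1.5) @ one_hot_spin(1.5, 1.5))  # 0.0
```

Two effects are visible here. Moving to a higher cutoff changes the input dimension. Moreover, the encoding carries no notion that spin 3/2 is "close" to spin 1. Both effects are consistent with the generalisation difficulties described above.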

Expert Network

So far, the task of computing the vertex amplitude using neural networks has been divided into a classification task and a regression task. For the classification task, we focused on learning whether the squared 10j symbol is non-zero. For the regression task, we focused on learning the logarithm of its value. To produce the correct amplitude value for a given spin configuration, we need to:

- (i) At inference time, exponentiate the regressor's output to obtain the squared 10j symbol instead of its logarithm, and then take the square root to obtain its magnitude.

- (ii) Insert the correct sign factor based on the spins in the given configuration, as shown in Equation (6), to obtain the correct sign of the computed 10j symbol.

- (iii) Insert the correct positive dimensionality multiplicative factor related to the spins in the given configuration, as shown in Equation (3), to compute the complete vertex amplitude of the BC model.

The final component of this work is therefore a meta network, which we call an Expert, that combines the classifier and the regressor. Its output is simply the product of the classifier and regressor outputs, together with all of the corrections listed above.
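The composition described above can be sketched as follows. Several assumptions apply. The classifier is treated as returning a probability that is thresholded into a 0/1 gate, which is equivalent to the product with a binary classifier output. The regressor is assumed to return the natural log of the squared 10j symbol. The sign rule of Equation (6) and the dimensionality factor of Equation (3) are not reproduced here, so they enter as hypothetical callables. All names are illustrative.

```python
import math
from typing import Callable, Sequence

Spins = Sequence[float]

def make_expert(
    classifier: Callable[[Spins], float],  # P(squared 10j symbol is non-zero)
    regressor: Callable[[Spins], float],   # predicted natural log of the squared 10j symbol
    sign_factor: Callable[[Spins], int],   # hypothetical stand-in for the sign rule of Equation (6)
    dim_factor: Callable[[Spins], float],  # hypothetical stand-in for the positive factor of Equation (3)
    threshold: float = 0.5,
) -> Callable[[Spins], float]:
    """Combine a classifier and a regressor into a single amplitude predictor."""

    def expert(spins: Spins) -> float:
        # Thresholded classifier output acts as a 0/1 multiplicative gate.
        if classifier(spins) < threshold:
            return 0.0
        # (i) sqrt(exp(x)) == exp(x / 2); the latter form overflows later for large x.
        magnitude = math.exp(0.5 * regressor(spins))
        # (ii) restore the sign, (iii) multiply by the dimensionality factor.
        return sign_factor(spins) * magnitude * dim_factor(spins)

    return expert

# Toy usage with constant stubs in place of trained networks.
expert = make_expert(
    classifier=lambda s: 0.9,
    regressor=lambda s: math.log(0.25),
    sign_factor=lambda s: -1,
    dim_factor=lambda s: 2.0,
)
print(expert([0.5] * 10))  # approximately -1.0
```

Computing exp(x/2) directly rather than sqrt(exp(x)) is a small design choice, but it matters here. The regression targets span many orders of magnitude, so the intermediate exp(x) can overflow long before the final amplitude does.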

The reason for this grouping is not merely aesthetic. Grouping the two networks into an Expert allows for an ensemble of Experts for a given cutoff, each trained with a different seed and therefore on a different dataset. The aim is to increase the predictive accuracy of the overall model and to produce more precise error estimates. Further, Experts can be combined in a Mixture of Experts (MoE) approach [74,75]. In simple terms, such a model houses M Experts (or M ensembles of N Experts each), each trained at a different cutoff. Once the gating of such an MoE is appropriately trained, the result is a single model that accepts any configuration within the range of cutoffs it has been trained on and yields an accurate prediction from the Experts it contains. This, however, is left for future work.
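The ensemble and MoE ideas can be sketched with stub Experts. The ensemble averages N Expert predictions and uses their sample standard deviation as an error estimate. In place of the trained gating mentioned in the text, the sketch uses a hard-coded routing rule. This rule assumes that a training cutoff bounds the largest spin of a configuration, and it is a simplification rather than a learned MoE gate. All names are hypothetical.

```python
import statistics
from typing import Callable, Dict, Sequence, Tuple

Spins = Sequence[float]
Expert = Callable[[Spins], float]

def ensemble_predict(experts: Sequence[Expert], spins: Spins) -> Tuple[float, float]:
    """Mean prediction of N Experts and their sample standard deviation as an error estimate."""
    preds = [expert(spins) for expert in experts]
    return statistics.mean(preds), statistics.stdev(preds)

def route_by_cutoff(ensembles: Dict[float, Sequence[Expert]], spins: Spins) -> Tuple[float, float]:
    """Hard-coded stand-in for a trained MoE gate.

    Sends the configuration to the ensemble with the smallest training cutoff
    that is at least as large as the configuration's largest spin.
    """
    max_spin = max(spins)
    eligible = [cutoff for cutoff in ensembles if cutoff >= max_spin]
    if not eligible:
        raise ValueError(f"largest spin {max_spin} exceeds every trained cutoff")
    return ensemble_predict(ensembles[min(eligible)], spins)

# Toy ensembles of constant stub Experts (M = 2 cutoffs, with N = 3 and N = 2 Experts).
ensembles = {
    1.0: [lambda s: 1.0, lambda s: 1.2, lambda s: 0.8],
    1.5: [lambda s: 2.0, lambda s: 2.0],
}
print(route_by_cutoff(ensembles, [0.5] * 10))  # mean ~1.0, std ~0.2
```

A learned gate would instead output weights over the ensembles. The hard rule above only shows the interface such a model would expose.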

4. Discussion

Perhaps the most pressing inherent issue in this work is the scarcity of data. SL is generally data-hungry, and this only worsens when the objective to be learned is complicated (e.g., spanning a very large range, highly non-uniform or very sensitive to the inputs). This was already observed when training the regressor in this simple toy model. Different training methods, including transfer learning, different one-hot encodings and dataset processing, resulted in either catastrophic forgetting or low predictive accuracy. This may, of course, also be due to the network architecture. Nevertheless, the regression task is expected to remain data-hungry, in which case the networks must be tailored to require as few data points as possible. Further, data collection can be conducted with Reinforcement Learning (RL) methods. During this work, we also collected data points by training an agent with a proximal policy optimisation (PPO) algorithm [76] to find the highest-valued amplitudes without exhaustively enumerating all possible configurations. This reduces the number of vertex amplitudes that must be computed. It spares computationally expensive evaluations of configurations that may be irrelevant to the desired training process.
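The details of the agent (state and action spaces, reward, policy network) are not given in this section. The sketch below therefore shows only the standard clipped surrogate objective that defines PPO itself, not the setup used in this work. The clipping range of 0.2 is a common default and is assumed here.

```python
import numpy as np

def ppo_clipped_objective(ratio, advantage, eps=0.2):
    """Batch mean of PPO's clipped surrogate objective (to be maximised).

    ratio:     pi_new(a|s) / pi_old(a|s) for each sampled action
    advantage: advantage estimate for each sampled action
    eps:       clipping range
    """
    ratio = np.asarray(ratio, dtype=float)
    advantage = np.asarray(advantage, dtype=float)
    unclipped = ratio * advantage
    clipped = np.clip(ratio, 1.0 - eps, 1.0 + eps) * advantage
    # The minimum removes the incentive to move the ratio beyond the clipping
    # range in whichever direction would further improve the objective.
    return float(np.mean(np.minimum(unclipped, clipped)))

# A good action made more likely (ratio 1.5) is credited only up to 1.2;
# a bad action made less likely (ratio 0.5) is credited only down to 0.8.
print(ppo_clipped_objective([1.5, 0.5], [1.0, -1.0]))  # approximately 0.2
```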

Spinfoam vertex amplitudes with a given set of coherent states as boundary data have been computed using Monte Carlo methods [39]. The generative flow network approach [38] was initially used to compute expectation values of observables by learning the regions in which the amplitude is large. It may be possible to adapt this approach to a computation similar to that of [39]. Such flow networks may also provide another agent-like approach to data collection when learning the vertex amplitude directly, as done here. How exactly to set this up is currently unclear, but it is an interesting avenue for future work.

Ultimately, we recognise that this work serves only as a proof-of-principle. We therefore refrained from exploring all possible avenues to identify, resolve or optimise such issues and bottlenecks. This is left for later work on more physically relevant models, although we are aware that data scarcity is a persistent issue inherent to this approach. Resolving it will also rely in part on other efficient numerical methods for generating sufficient training data, since these bound the amount of data available.

The next pressing issue is the regression problem. The regression targets in this work spanned a very large range, which only grew with the cutoff. This is due to the nature of the learned amplitudes, which are generally highly oscillatory functions, and it raises several serious concerns. For example, assume that data acquisition is not an issue, which may already fail for more realistic models such as the EPRL model at high spin. Even then, devising a training set with enough representatives from each order of magnitude is non-trivial. This will also depend strongly on the network and loss function used. Some architectures and losses are less sensitive to very large target values, whereas others may require more large-valued representatives in the dataset. This becomes a serious concern when the entire configuration space cannot be enumerated.
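One simple way to guarantee representatives from each order of magnitude is to bucket the non-zero targets by decade of their absolute value and sample a fixed number from each bucket. The sketch below assumes that the full set of computed amplitudes is available to sample from, which is exactly what becomes infeasible when the space cannot be enumerated. The function name and the per_decade parameter are hypothetical.

```python
import numpy as np

def stratified_by_magnitude(values, per_decade, seed=0):
    """Indices of a subset with at most `per_decade` samples from each decade of |value|.

    Zeros are skipped, mirroring the regressor's restriction to non-zero labels.
    """
    rng = np.random.default_rng(seed)
    values = np.asarray(values, dtype=float)
    nonzero = np.flatnonzero(values != 0.0)
    if nonzero.size == 0:
        return np.array([], dtype=int)
    # Order of magnitude of each non-zero value, e.g. 3e-12 -> -12, 7.0 -> 0.
    decades = np.floor(np.log10(np.abs(values[nonzero]))).astype(int)
    chosen = []
    for d in np.unique(decades):
        members = nonzero[decades == d]
        take = min(per_decade, members.size)
        chosen.append(rng.choice(members, size=take, replace=False))
    return np.sort(np.concatenate(chosen))

values = [1.5e-12, 2e-12, 3e-12, 5e-3, 0.0, 7.0, 8.0, 9.0]
print(stratified_by_magnitude(values, per_decade=2))
```

With per_decade=2, the single value in the 1e-3 decade is always kept, while the two crowded decades contribute two samples each.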

For the cutoffs presented in this work, the classifier kept a static architecture with only 1537 trainable parameters throughout training. Despite this, it excelled at learning whether a given spin configuration yields a non-zero squared 10j symbol. The regressors, on the other hand, had 350,093 and 1,091,725 trainable parameters at cutoffs 1.0 and 1.5, respectively. The total numbers of non-zero data points available at the same cutoffs are only 19,015 and 362,455, respectively. This comparison shows that the approach is inefficient: with enough learnable parameters, one can fit any data. In this case, it is much faster simply to tabulate the amplitude values for all spins up to the current cutoff. If a regressor trained at some cutoff could predict at a higher cutoff with acceptable evaluation metrics, a parameter count exceeding the number of available states at the training cutoff could be overlooked. This, however, is not the case in this work.
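The comparison between parameter count and data count can be made explicit for fully connected networks. The sketch below counts weights plus biases. It assumes a hypothetical input of ten spins, each one-hot encoded over four categories (spins 0 to 3/2). It also assumes that "depth" counts hidden layers, as in the MLP sizes from the ablation study mentioned below. The actual input dimension and layer conventions of this work may differ.

```python
def mlp_param_count(input_dim, hidden_widths, output_dim=1):
    """Trainable parameters (weights plus biases) of a fully connected MLP."""
    sizes = [input_dim, *hidden_widths, output_dim]
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes[:-1], sizes[1:]))

# Hypothetical input: ten spins, each one-hot encoded over 4 categories (0, 1/2, 1, 3/2).
INPUT_DIM = 10 * 4
for name, widths in [("depth 3, width 64", [64, 64, 64]), ("depth 1, width 256", [256])]:
    print(f"{name}: {mlp_param_count(INPUT_DIM, widths):,} parameters")
# depth 3, width 64: 11,009 parameters
# depth 1, width 256: 10,753 parameters

# Parameters per non-zero data point for the reported cutoff-1.5 regressor.
print(f"{1_091_725 / 362_455:.2f}")  # 3.01
```

Under these assumptions, both small MLPs have far fewer parameters than the 362,455 non-zero points at cutoff 1.5. The reported cutoff-1.5 regressor has roughly three parameters per data point.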

Nevertheless, more efficient regressors are not ruled out. Ablation studies (discussed here for a single cutoff) were conducted in which MLPs of different widths and depths were tested. Moderately acceptable evaluation metrics could be reached with MLPs of depth 3 and width 64, and even with MLPs of depth 1 and width 256. In both cases the number of trainable parameters is smaller than the total number of available data points. However, this does not immediately translate into consistently high evaluation metrics across the board or into a smooth training process. Further, other architectures, such as recurrent neural networks with gating (see footnote 6), were observed to be good candidates for the task. Given the graph-based nature of the 10j symbol, graph neural networks (GNNs) [78] might also be good candidates, although this work has not explored such an architecture. Lastly, the regressors in this work did not produce satisfactory evaluation metrics when evaluated beyond the training domain. This is unsurprising, as extrapolation is an inherent limitation of such tasks, and the difficult nature of the objective being learned, here the vertex amplitude, compounds it.
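To illustrate what "graph-based" input could mean, note that the 10j symbol is the evaluation of a spin network on the complete graph with five nodes (K5). The nodes correspond to the five tetrahedra bounding a 4-simplex, and the ten edges correspond to the triangles they share, each labelled by a spin. The sketch builds such an edge list with per-edge spin features. The assignment of the ten spins to edges in lexicographic order of node pairs is a hypothetical convention. The [src, dst] layout mirrors the edge-index convention common in GNN libraries, but no library is used.

```python
from itertools import combinations

def k5_graph(spins):
    """Graph input for a GNN: the complete graph K5 with one spin on each edge.

    Nodes 0..4 stand for the five tetrahedra bounding a 4-simplex; the edge between
    two nodes stands for the triangle they share and carries that triangle's spin.
    """
    if len(spins) != 10:
        raise ValueError("a 10j symbol has exactly ten spins")
    pairs = list(combinations(range(5), 2))  # the 10 edges of K5, in lexicographic order
    # Store each undirected edge in both directions, as message passing usually expects.
    src = [a for a, _ in pairs] + [b for _, b in pairs]
    dst = [b for _, b in pairs] + [a for a, _ in pairs]
    edge_attr = list(spins) * 2  # spin k labels the k-th pair in both directions
    return [src, dst], edge_attr

edge_index, edge_attr = k5_graph([0.5, 1.0, 0.5, 1.0, 1.5, 0.5, 1.0, 0.5, 1.0, 0.5])
print(len(edge_index[0]), len(edge_attr))  # 20 20
```

Unlike a flat one-hot vector, this representation makes the incidence structure of the 10j symbol explicit. A message-passing network can then share weights across edges and nodes.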

This leads to the following conclusion. The regressors used in this work are inefficient, but we believe that, in principle, more efficient architectures remain to be explored whose number of trainable parameters is smaller than the available training data. What these architectures are, how well they train and whether they generalise to higher cutoffs at all were not of principal importance in this work. The reason is that it is unclear precisely how the tools developed here would translate to physically relevant models such as the EPRL model. The purpose of this work is merely to demonstrate a proof-of-principle, and exhaustive studies are left for later work.

5. Conclusions

Spinfoam theories provide dynamics for non-perturbative loop quantum gravity by constructing transition amplitudes between spin-network states as a sum over their histories. The quantisation procedure implements the simplicity constraints at the quantum level, resulting in a regularised partition function on a spinfoam. One of the main components of this partition function is the vertex amplitude, which, like a vertex in ordinary Feynman diagrams, encodes the local dynamics of quantum geometry. In this work, we investigated, as a proof-of-principle, the feasibility of a data-driven approach in which the vertex amplitude of the Euclidean Barrett–Crane model is learned using deep neural networks. Specifically, we learned the Riemannian 10j symbol, which lies at the core of the vertex amplitude in this model. The amplitude is learned through a two-step process. First, a classifier is trained to predict whether, for a given spin configuration, the resulting squared 10j symbol is zero. Second, a regressor is trained to predict its numerical value, learned in log space. As a last step, we construct a meta network, which we call an Expert. It uses both the classifier and the regressor to construct the correct amplitude by inserting the relevant sign and dimensionality factors.

For the classification task, a small MLP was trained on several cutoffs ranging from 0.5 to 2.0, using transfer learning each time. The relative size of the training dataset decreased from 75% to roughly 1% of all available states between cutoffs 0.5 and 2.0. Despite this, the classifier proved successful, achieving strong evaluation metrics (soft accuracy, hard accuracy, precision, recall and F1 score) both within and well above the training cutoffs. The regression task used MLPs whose architecture depended on the training cutoff. Within the learned cutoff, the regressors showed strong evaluation metrics (RMSE, MAD in log space, MAPE and threshold accuracy) at both cutoffs presented (1.0 and 1.5). Generalisation of the regressors beyond the training regime, however, was unsuccessful. This may preliminarily be attributed to two factors. The first is (i) catastrophic forgetting during transfer learning from one cutoff to the next, owing to the overwhelming volume of new data at each subsequent cutoff relative to the prior ones. The second is (ii) the use of one-hot encoding, which introduces sparsity and treats new categories (i.e., higher spins) as orthogonal, potentially amplifying the model's focus on newly introduced features at the expense of earlier ones. The choice of encoding scheme may therefore significantly influence the generalisation performance of such regressors.

An Expert was constructed to output the full amplitude. We also discussed the limitations and hurdles encountered during this work, mainly the generalisation of the regressor to higher cutoffs and training with limited data. We proposed an ensemble approach to increase accuracy at the trained cutoffs. We also proposed a reinforcement-learning-inspired approach to collecting relevant training data, in which an agent is trained with a proximal policy optimisation algorithm to learn which configurations yield amplitudes relevant to the training process at hand. We concluded by outlining network architectures that may be better suited to the regression task. However, as this work is a proof-of-principle, we refrained from exploring all avenues to resolve the issues encountered; this will be pursued later for physically relevant models. Nevertheless, the current work adds to existing numerical methods for computing the vertex amplitude of spinfoam models. It also demonstrates that such vertex amplitudes are amenable to modern deep learning techniques. The surrogate models to be developed in this data-driven approach are intended to complement numerical implementations of exact analytical methods. They can do so by helping identify dominant configurations, guiding importance sampling and Monte Carlo approximations, and enabling efficient pre-selection in possibly large parameter spaces.