Development of Decision–Model and Strategies for Allaying Biased Choices in Design and Development Processes

Abstract

1. Introduction

2. Literature Review on Decision-Making Models in Engineering Design

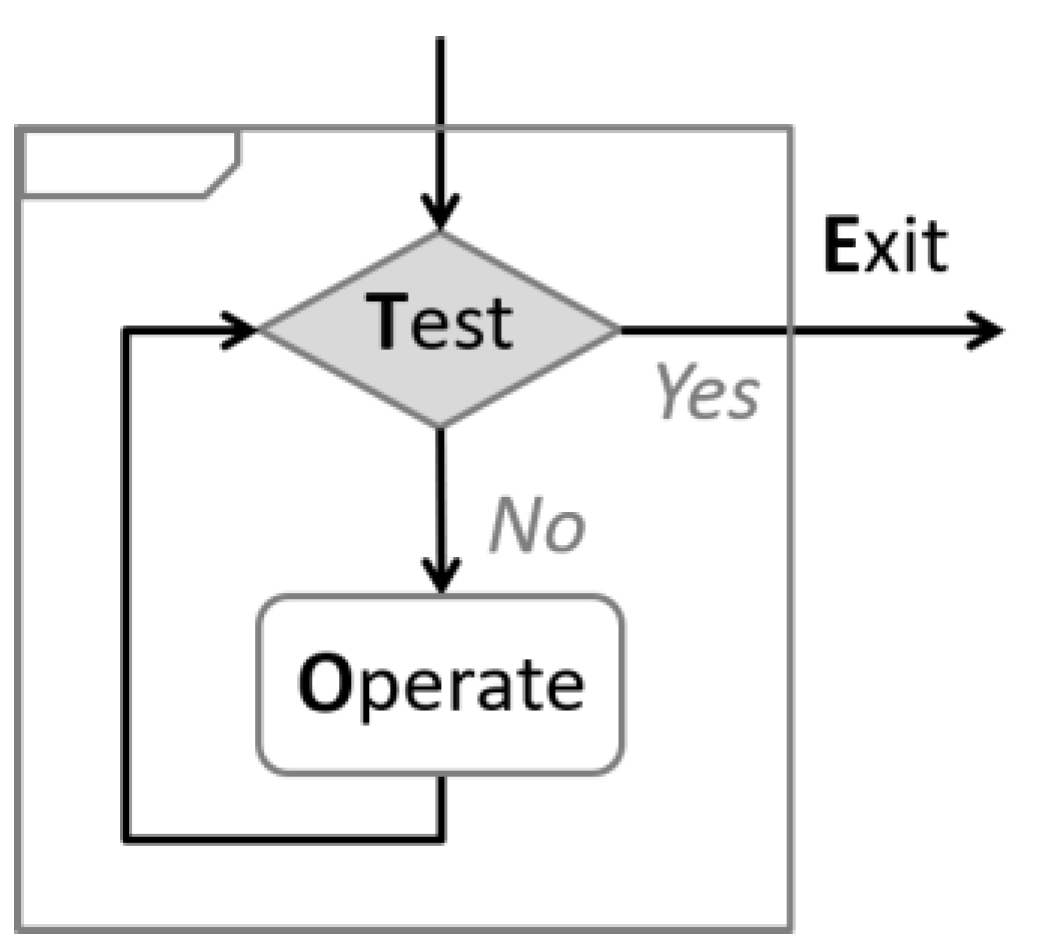

2.1. Miller et al. TOTE Model (Test Operate Test Exit)

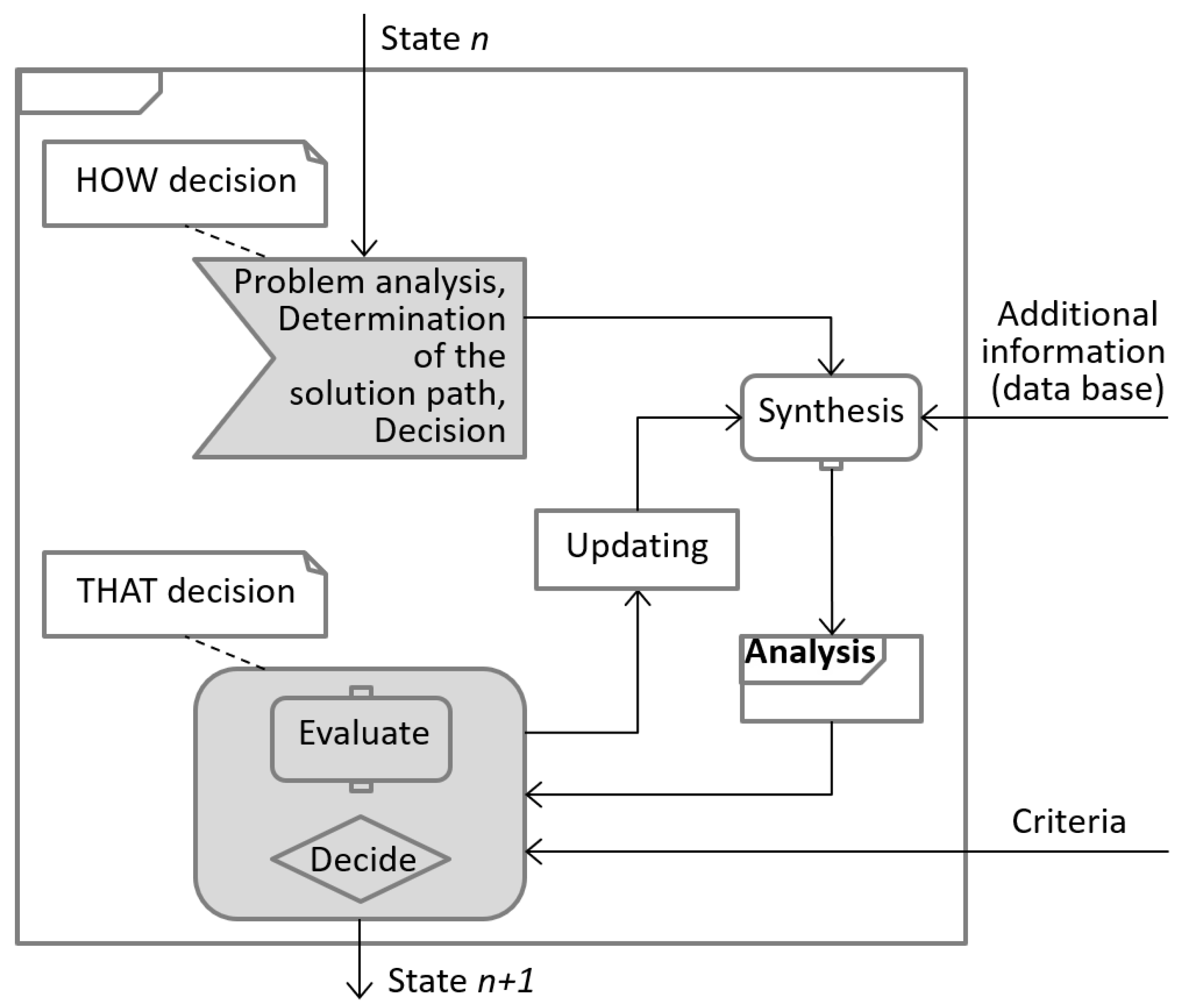

2.2. Höhne’s Model of Operations

2.3. Ahmed and Hansen’s Decision-Making Model

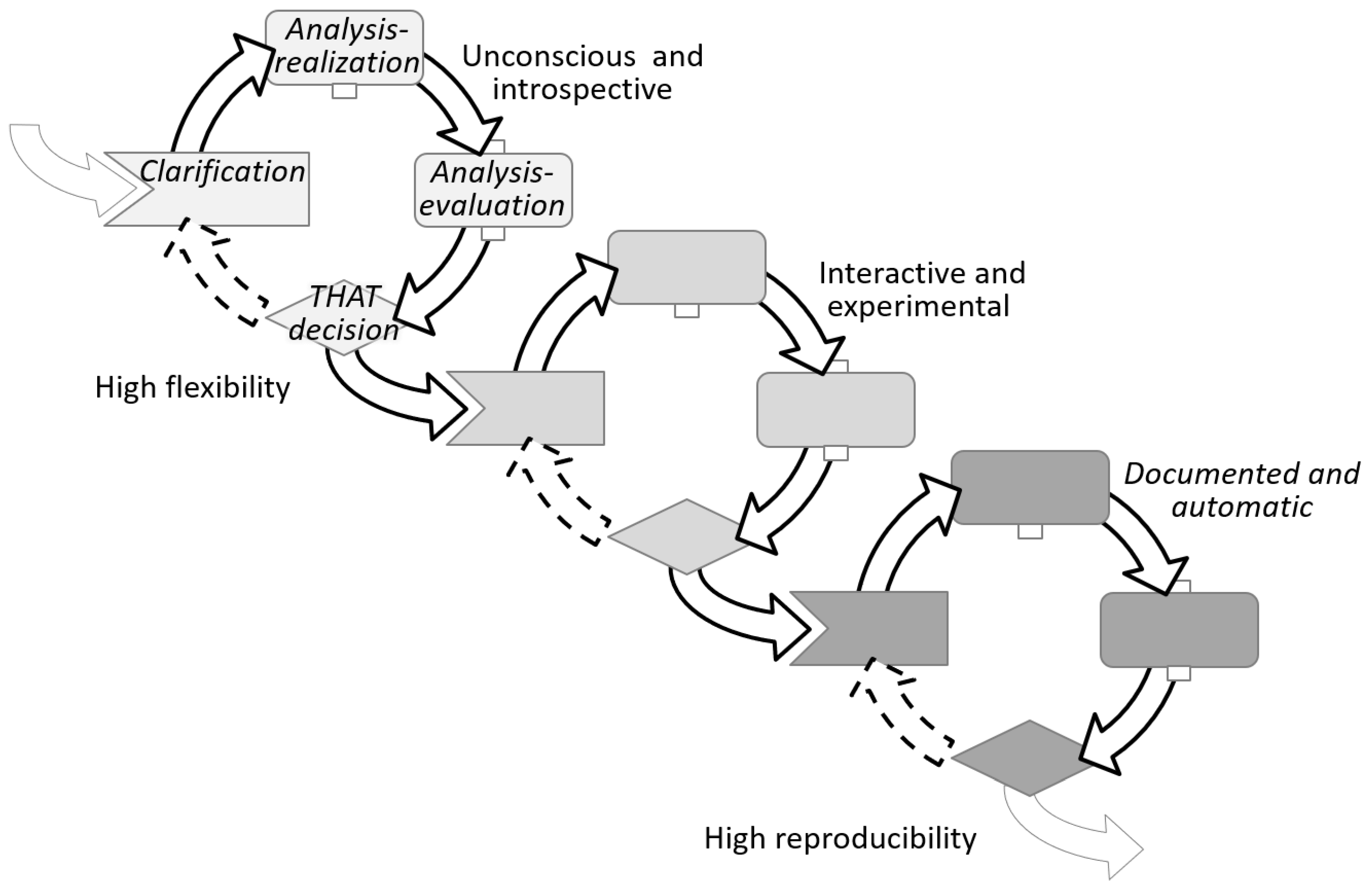

3. Development of the ABC Decision Model and Bias Allaying Strategies

- Clarification phase—HOW-decision

- Analysis–realization phase

- Analysis–evaluation phase

- THAT-decision phase

3.1. Four Evolving Phases of the ABC Decision Model

3.1.1. Clarification

- What could define a satisfactory status?

- Which factors, or the absence of which factors, are responsible for the unsatisfactory status?

- Who is responsible for the actual modus operandi?

- Is there a cause-and-effect relationship?

- Where are these factors located?

- Why are the factors producing dissatisfaction?

- When does the dissatisfaction occur, and how often?

- How much change is needed to reach an acceptable status?

- What actions are feasible?

- Who will be responsible for the new modus operandi?

- Who should be involved in the actions?

- Where should the actions take place?

- When and in what order should the actions take place?

- Why will the actions address the dissatisfaction?

- How should the actions be executed?

- What are the costs/tradeoffs of those actions?

- Awareness of the known criteria responsible for the unsatisfactory status

- An action plan to reduce uncertainty by:

  - Gaining knowledge on the unknown perceived as the decision roadblock

  - Testing the hypothesis that a given action will truly improve the status

3.1.2. Analysis–Realization

3.1.3. Analysis–Evaluation

3.1.4. THAT-Decision

- Yes: the top-ranked option shall be implemented. The option to follow is clear, but the way to implement it may not be; thus, a new decision cycle starts that focuses on the implementation. In later decision cycles, the focus moves to proving the effectiveness or repeatability of the selected option.

- No, next: the top-ranked option lies out of reach or scope after holistic scrutiny (e.g., missing infrastructure or competence, conflict of interest, no business case, or the fact that you cannot fire your boss). It is very challenging to run analysis–evaluations with all thinkable criteria incorporated within the model; thus, some criteria will be left out and considered only at the holistic scrutiny of the THAT-decision. Once the number one option is set aside, the decision focuses on number two.

- No, refine: when the uncertainty of the options seems too high and/or the gained knowledge seems too low, the decision can be to repeat the cycle, this time looking for more details. However, this is a decision against time: a tradeoff between deciding and moving on vs. increasing knowledge and certainty about the available options.

- Else: decision cycles often end with an else result. One reason could be that none of the options reached the minimum threshold; another, that unexpected analysis findings or recent external factors changed the urgency of the goal or made it irrelevant. All else cases terminate, or completely redirect, the pursuit of the satisfactory status.
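The four outcomes above can be read as a small branching rule. The following is only a minimal sketch under stated assumptions: the `Option` fields, the `that_decision` function, the two thresholds, and the order in which the checks are applied are hypothetical illustrations and are not taken from the ABC model itself.

```python
from dataclasses import dataclass
from enum import Enum


class Outcome(Enum):
    YES = "yes"
    NO_NEXT = "no, next"
    NO_REFINE = "no, refine"
    ELSE = "else"


@dataclass
class Option:
    name: str
    score: float      # assumed result of the analysis-evaluation phase
    certainty: float  # assumed 0..1 estimate of how well the option is understood
    feasible: bool    # assumed result of the holistic scrutiny


def that_decision(ranked, min_score, min_certainty, goal_still_relevant=True):
    """Return (outcome, option in focus) for options ranked best-first."""
    # Else: the goal became irrelevant or no option reaches the threshold.
    if not goal_still_relevant or not ranked or ranked[0].score < min_score:
        return Outcome.ELSE, None
    top = ranked[0]
    # No, refine: too little is known, so the cycle is repeated in more detail.
    if top.certainty < min_certainty:
        return Outcome.NO_REFINE, top
    # No, next: the top option is out of reach, so the focus moves to number two.
    if not top.feasible:
        return Outcome.NO_NEXT, ranked[1] if len(ranked) > 1 else None
    # Yes: implement the top-ranked option (a new cycle covers the "how").
    return Outcome.YES, top


options = [
    Option("redesign housing", score=0.9, certainty=0.8, feasible=False),
    Option("change supplier", score=0.7, certainty=0.9, feasible=True),
]
outcome, focus = that_decision(options, min_score=0.5, min_certainty=0.6)
print(outcome.value, "->", focus.name if focus else None)
```

After a "no, next" result, the same function would be called again on the remaining options, which mirrors the shift of focus from option one to option two.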

3.2. Decisions, Biases and Mitigation Strategies

3.2.1. Biases Affecting Clarification

- Bias: Overconfidence

- Strategy: Breaking it down

- Bias: Optimism and the planning fallacy

- Strategy: Asking externals

3.2.2. Biases Affecting Analysis–Realization

- Bias: Bounded awareness

- Strategy: Looking at the bigger picture

- Bias: Confirmation

- Strategy: Devil’s Advocate

- Bias: Search type

- Strategy: Searching different

3.2.3. Biases Affecting Analysis–Evaluation

- Bias: Anchoring

- Strategy: Applying contrafactual thinking

- Bias: Inconsistency

- Strategy: Calibrating by continual comparisons

- Bias: Redundancy

- Strategy: Doing the math

3.2.4. Bias Affecting THAT-Decision

- Bias: Framing and loss aversion

  - “A gain is more preferable than a loss of the same value.

  - A sure gain is more preferable than a probabilistic gain of greater value.

  - A probabilistic loss is more preferable than a sure loss of lower value” [7].

- Strategy: Turning it around

- Bias: Sunk cost fallacy

- Strategy: 100 times
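The bias and strategy pairs of Sections 3.2.1 to 3.2.4 form a simple lookup table. The sketch below records them as data so they can be queried by phase or by bias; the `Mitigation` type and the two helper functions are hypothetical names introduced only for illustration.

```python
from typing import NamedTuple


class Mitigation(NamedTuple):
    phase: str
    bias: str
    strategy: str


# Pairs copied from the lists above; phase names use plain hyphens in code.
MITIGATIONS = [
    Mitigation("clarification", "Overconfidence", "Breaking it down"),
    Mitigation("clarification", "Optimism and the planning fallacy", "Asking externals"),
    Mitigation("analysis-realization", "Bounded awareness", "Looking at the bigger picture"),
    Mitigation("analysis-realization", "Confirmation", "Devil's Advocate"),
    Mitigation("analysis-realization", "Search type", "Searching different"),
    Mitigation("analysis-evaluation", "Anchoring", "Applying contrafactual thinking"),
    Mitigation("analysis-evaluation", "Inconsistency", "Calibrating by continual comparisons"),
    Mitigation("analysis-evaluation", "Redundancy", "Doing the math"),
    Mitigation("THAT-decision", "Framing and loss aversion", "Turning it around"),
    Mitigation("THAT-decision", "Sunk cost fallacy", "100 times"),
]


def for_phase(phase):
    """All (phase, bias, strategy) entries attached to one decision phase."""
    return [m for m in MITIGATIONS if m.phase == phase]


def strategy_against(bias):
    """Strategy paired with a bias; the match ignores letter case."""
    for m in MITIGATIONS:
        if m.bias.lower() == bias.lower():
            return m.strategy
    raise KeyError(bias)


print([m.bias for m in for_phase("analysis-evaluation")])
print(strategy_against("anchoring"))
```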

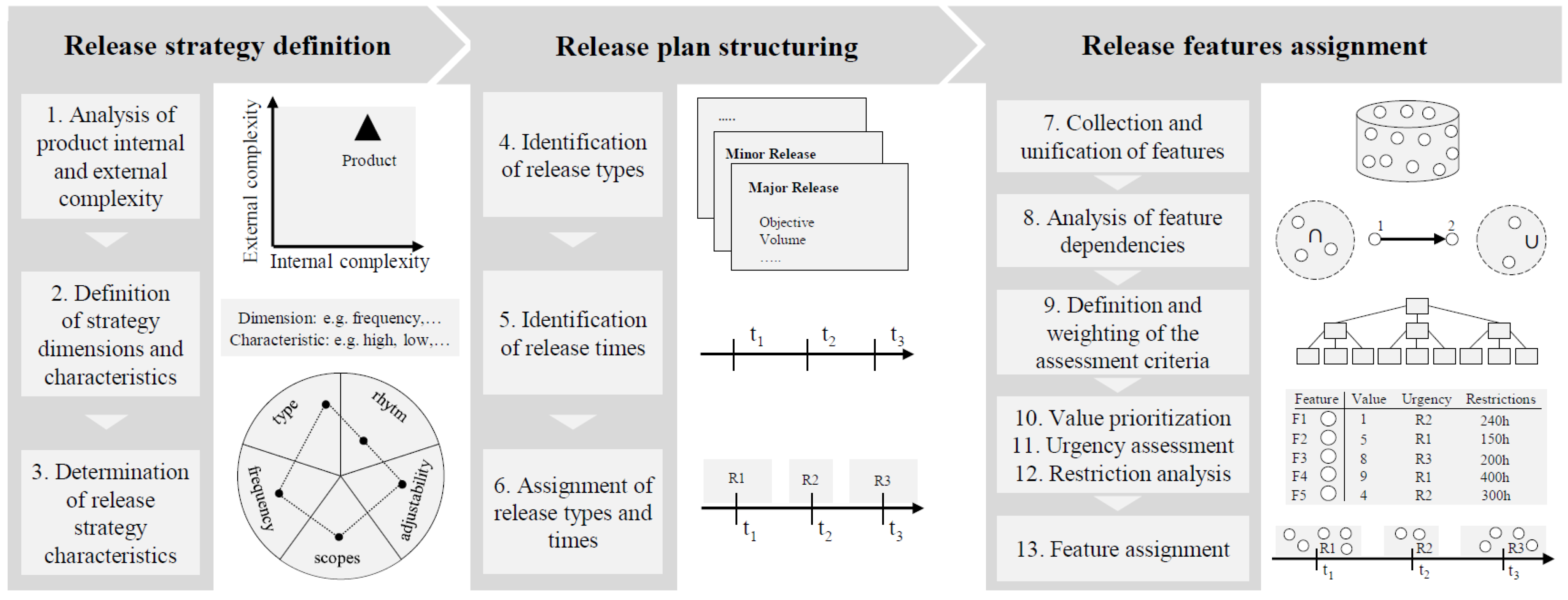

4. Implementation of the ABC Decisions and Strategies to the SRP Methodology

4.1. SRP Methodology Steps

- 1. Analysis of product internal and external complexity
  - Assessment: This step focuses on planning and attaining business clarity; hence, it can be considered a clarification phase.

- 2–3. Definition and determination of strategy dimensions and characteristics
  - Assessment: Steps two and three mainly evaluate figures and rank characteristics while managing system complexity; hence, these steps can be considered analysis–evaluation phases.

- 4–5. Identification of release types and times
  - Assessment: The values assigned in these steps are mainly obtained by comparing with and referring to former types and times; hence, these steps combine both analysis–realization and analysis–evaluation phases.

- 6. Assignment of release types and times
  - Assessment: Step six is strongly affected by the decision-makers’ introspection on their know-how and by the cut decisions needed to assign the plan; hence, this step can be considered a clarification and THAT-decision phase.

- 7. Collection and unification of features
  - Assessment: Step seven is about knowledge and control, as defined by Hammond and Summers; hence, this step can be considered an analysis–evaluation phase.

- 8. Analysis of feature dependencies
  - Assessment: This step is about running analyses and searching for dependencies; hence, it can be considered an analysis–realization phase.

- 9. Definition and weighting of the assessment criteria
  - Assessment: Like steps two and three, this step focuses on evaluating criteria and ranking dependencies while managing system complexity; hence, it can be considered an analysis–evaluation phase.

- 10–13. Value prioritization, urgency assessment, restriction analysis and feature assignment
  - Assessment: Steps 10 to 13 are about selecting values that determine which features will be pushed up the planning list and which others will be lost at the bottom; hence, these steps can be considered THAT-decision phases.
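The step assessments above amount to a mapping from SRP steps to ABC decision phases. As a rough sketch, the mapping can be written down and inverted to see which steps fall under each phase; the dictionary layout and the helper names `expand` and `steps_by_phase` are assumptions made for this example.

```python
from collections import defaultdict

# SRP step label -> ABC phases, following the assessments listed above.
STEP_PHASES = {
    "1": ["clarification"],
    "2-3": ["analysis-evaluation"],
    "4-5": ["analysis-realization", "analysis-evaluation"],
    "6": ["clarification", "THAT-decision"],
    "7": ["analysis-evaluation"],
    "8": ["analysis-realization"],
    "9": ["analysis-evaluation"],
    "10-13": ["THAT-decision"],
}


def expand(label):
    """Turn a label such as '10-13' into [10, 11, 12, 13]; '6' becomes [6]."""
    first, _, last = label.partition("-")
    return list(range(int(first), int(last or first) + 1))


def steps_by_phase(step_phases):
    """Invert the mapping: phase -> sorted list of individual step numbers."""
    grouped = defaultdict(list)
    for label, phases in step_phases.items():
        for phase in phases:
            grouped[phase].extend(expand(label))
    return {phase: sorted(steps) for phase, steps in grouped.items()}


for phase, steps in steps_by_phase(STEP_PHASES).items():
    print(f"{phase}: {steps}")
```

Steps that were assessed as combining two phases (4–5 and 6) appear under both phases in the inverted view.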

4.2. Putting It All Together

- Prone bias >> Corresponding mitigation strategy

- 1. Analysis of product internal and external complexity >> Clarification phase:
  - Overconfidence bias >> Breaking it down
  - Optimism and the planning fallacy >> Asking externals

- 2–3. Definition and determination of strategy dimensions and characteristics >> Analysis–evaluation phases:
  - Anchoring bias >> Applying contrafactual thinking
  - Inconsistency >> Calibrating by continual comparisons
  - Redundancy >> Doing the math

- 4–5. Identification of release types and times >> Analysis–realization and analysis–evaluation phases:
  - Bounded awareness >> Looking at the bigger picture
  - Confirmation bias >> Devil’s Advocate
  - Search type >> Searching different
  - Anchoring bias >> Applying contrafactual thinking
  - Inconsistency >> Calibrating by continual comparisons
  - Redundancy >> Doing the math

- 6. Assignment of release types and times >> Clarification and THAT-decision phase:
  - Overconfidence bias >> Breaking it down
  - Optimism and the planning fallacy >> Asking externals
  - Framing and loss aversion >> Turning it around
  - Sunk cost fallacy >> 100 times

- 7. Collection and unification of features >> Analysis–evaluation phase:
  - Anchoring bias >> Applying contrafactual thinking
  - Inconsistency >> Calibrating by continual comparisons
  - Redundancy >> Doing the math

- 8. Analysis of feature dependencies >> Analysis–realization phase:
  - Bounded awareness >> Looking at the bigger picture
  - Confirmation bias >> Devil’s Advocate
  - Search type >> Searching different

- 9. Definition and weighting of the assessment criteria >> Analysis–evaluation phase:
  - Anchoring bias >> Applying contrafactual thinking
  - Inconsistency >> Calibrating by continual comparisons
  - Redundancy >> Doing the math

- 10–13. Value prioritization, urgency assessment, restriction analysis and feature assignment >> THAT-decision phases:
  - Framing and loss aversion >> Turning it around
  - Sunk cost fallacy >> 100 times
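The listing above follows one composition rule: each SRP step inherits the bias and strategy pairs of every phase it was assigned to. A minimal sketch of that rule, using only a two-step, two-phase subset of the data for brevity (the function name `mitigation_plan` and the dictionary layout are hypothetical), could look like this:

```python
def mitigation_plan(step_phases, phase_mitigations):
    """For each step, concatenate the (bias, strategy) pairs of its phases."""
    return {
        step: [pair for phase in phases for pair in phase_mitigations[phase]]
        for step, phases in step_phases.items()
    }


# Illustrative subset of the mappings in this section, not the full tables.
PHASE_MITIGATIONS = {
    "clarification": [
        ("Overconfidence", "Breaking it down"),
        ("Optimism and the planning fallacy", "Asking externals"),
    ],
    "THAT-decision": [
        ("Framing and loss aversion", "Turning it around"),
        ("Sunk cost fallacy", "100 times"),
    ],
}
STEP_PHASES = {
    "1": ["clarification"],
    "6": ["clarification", "THAT-decision"],
}

plan = mitigation_plan(STEP_PHASES, PHASE_MITIGATIONS)
for bias, strategy in plan["6"]:
    print(f"{bias} >> {strategy}")
```

Because step 6 is assigned to two phases, it collects all four pairs, which matches its entry in the listing.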

5. ABC Decisions and Strategies Applied in the Industry

- Can the ABC strategies be applied in real-world industry projects?

- Would the strategies be followed beyond the workshop?

- Are the topics of heuristics and allaying biased decisions relevant among engineers?

- How could the impact that biases have on projects be quantified?

5.1. Industry and Group Demographics

5.2. Initial Survey Results

- Mostly objective decisions (not influenced by personal feelings, tastes, or opinions): 20%

- Mostly subjective decisions (based on or influenced by personal feelings, tastes, or opinions): 34%

- An even mix of objective and subjective decisions: 42%

- Random decisions: 4%

- R&D decisions that made use of heuristics: 56%

- R&D decisions that were misled or biased: 20%

5.3. Final Survey Results

- Mostly objective decisions (not influenced by personal feelings, tastes, or opinions): 34%

- Mostly subjective decisions (based on or influenced by personal feelings, tastes, or opinions): 13%

- An even mix of objective and subjective decisions: 49%

- Random decisions: 4%

- R&D decisions that made use of heuristics: 48%

- R&D decisions that were misled or biased: 38%
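To compare the initial and final surveys, the reported percentages can be subtracted directly. The short sketch below does only that arithmetic, in percentage points, using the figures listed in Sections 5.2 and 5.3; the dictionary keys and the helper name `point_changes` are shorthand introduced for this example, and no statistical significance is implied.

```python
# Percentages as reported in the initial (5.2) and final (5.3) surveys.
INITIAL = {
    "mostly objective": 20,
    "mostly subjective": 34,
    "even mix": 42,
    "random": 4,
    "used heuristics": 56,
    "misled or biased": 20,
}
FINAL = {
    "mostly objective": 34,
    "mostly subjective": 13,
    "even mix": 49,
    "random": 4,
    "used heuristics": 48,
    "misled or biased": 38,
}
DECISION_TYPES = ["mostly objective", "mostly subjective", "even mix", "random"]


def point_changes(before, after):
    """Change per item in percentage points (final minus initial)."""
    return {key: after[key] - before[key] for key in before}


# The four decision-type shares should add up to 100% in each survey.
assert sum(INITIAL[k] for k in DECISION_TYPES) == 100
assert sum(FINAL[k] for k in DECISION_TYPES) == 100

for item, delta in point_changes(INITIAL, FINAL).items():
    print(f"{item}: {delta:+d} points")
```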

5.4. Surveys Assessment

- 1. Can the ABC strategies be applied in real-world industry projects? and

- 2. Would the strategies be followed beyond the workshop?

- 3. Are the topics of heuristics and allaying biased decisions relevant among engineers?

- 4. How could the impact that biases have on projects be quantified?

6. Discussion

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. VDI, VDI 2221, Düsseldorf: VDI-Gesellschaft Produkt- und Prozessgestaltung. 1993.

2. VDI, VDI 2221, vol. 1, Düsseldorf: VDI-Gesellschaft Produkt- und Prozessgestaltung. 2019.

3. Khodaei, H.; Ortt, R. Capturing dynamics in business model frameworks. J. Open Innov. Technol. Mark. Complex. 2019, 5, 8.

4. Fricke, G.; Pahl, G. Zusammenhang zwischen personenbedingtem Vorgehen und Lösungsgüte. In Proceedings of the ICED, Zürich, Switzerland, 27–29 August 1991.

5. Pahl, G.; Beitz, W.; Feldhusen, J.; Grote, K.-H. Engineering Design: A Systematic Approach; Springer: Berlin/Heidelberg, Germany, 2007.

6. Haidt, J. The emotional dog and its rational tail: A social intuitionist approach to moral judgment. Psychol. Rev. 2001, 108, 841.

7. Kahneman, D.; Tversky, A. Prospect theory: An analysis of decision under risk. Econometrica 1979, 47, 263–291.

8. Tversky, A.; Kahneman, D. Judgment under uncertainty: Heuristics and biases. Science 1974, 185, 1124–1131.

9. Baker, M.; Penny, D. 1500 Scientists Lift the Lid on Reproducibility; Macmillan Publishers Limited: San Francisco, CA, USA, 2016.

10. Schwenk, C.-R. Devil’s advocacy in managerial decision-making. J. Manag. Stud. 1984, 21, 153–168.

11. Chesbrough, H. The new environment for business models. In Open Business Models; Business School Press: Boston, MA, USA; Harvard, MA, USA, 2006; pp. 49–80.

12. Şahin, T.; Huth, T.; Axmann, J.; Vietor, T. A methodology for value-oriented strategic release planning to provide continuous product upgrading. In Proceedings of the IEEE International Conference on Industrial Engineering and Engineering Management, Singapore, 14–17 December 2020.

13. Arnott, D. Decision Biases and Decision Support Systems Development; Monash University: Melbourne, VIC, Australia, 2002.

14. Kinsey, M.; Kinateder, M.; Gwynne, S.M.V.; Hopkin, D.J. Burning biases: Mitigating cognitive biases in fire engineering. Fire Mater. 2020, 45, 543–552.

15. Miller, G.A.; Galanter, E.; Pribram, K. Plans and the Structure of Behavior; Rinehart & Winston: New York, NY, USA, 1960; pp. 1065–1067.

16. Galle, P.; Kovacs, L. Replication protocol analysis: A method for the study of real-world design thinking. Des. Stud. 1996, 17, 181–200.

17. Hansen, C.; Andreasen, M. Basic thinking patterns of decision-making in engineering design. In Proceedings of the International Conference on Multi-Criteria Evaluation, Neukirchen, Austria, 1 January 2000.

18. Ahmed, S.; Hansen, C.T. A decision-making model for engineering designers. In Proceedings of the Engineering Design Conference, London, UK, 9–11 July 2002.

19. Höhne, G. Treatment of decision situations in the design process. In Proceedings of the International Design Conference, DESIGN 2004, Dubrovnik, Croatia, 18–21 May 2004.

20. Hansen, C.T.; Andreasen, M. A mapping of design decision making. In Proceedings of the DESIGN International Design Conference, Dubrovnik, Croatia, 18–21 May 2004.

21. Oxford University Press. 2017. Available online: https://www.oxforddictionaries.com (accessed on 11 November 2017). Searched term: Analyse.

22. Oxford University Press. 2017. Available online: https://www.oxforddictionaries.com (accessed on 11 November 2017). Searched term: Realization.

23. Hammond, K.R.; Summers, D.A. Cognitive control. Psychol. Rev. 1972, 79, 58.

24. Ackoff, R. Ackoff’s Best; John Wiley & Sons: New York, NY, USA, 1999.

25. Read, L.E. I, Pencil: My Family Tree as Told to Leonard E. Read; The Freeman: New York, NY, USA, 1958; Volume 8, pp. 32–37.

26. Sanchez Ruelas, J.; Stechert, C.; Vietor, T.; Schindler, W. Requirements Management and Uncertainty Analysis for Future Vehicle Architectures. In Proceedings of the FISITA, Beijing, China, 23–30 November 2012.

27. Zadeh, L. Fuzzy sets. Inf. Control 1965, 8, 338–353.

28. Saaty, T. The Analytic Hierarchy Process: Planning, Priority Setting, Resource Allocation; McGraw-Hill: New York, NY, USA, 1980.

29. Kahneman, D. Thinking, Fast and Slow; Farrar, Straus and Giroux: New York, NY, USA, 2011.

30. Rozenblit, L.; Keil, F. The misunderstood limits of folk science: An illusion of explanatory depth. Cogn. Sci. 2002, 26, 521–562.

31. Hogarth, R.M. Judgement and Choice: The Psychology of Decision; John Wiley & Sons: Oxford, MA, USA, 1987.

32. Rogers, T.; Milkman, K.; John, L.; Norton, M. Beyond good intentions: Prompting people to make plans improves follow-through on important tasks. Behav. Sci. Policy 2015, 2, 33–41.

33. Simons, D.; Chabris, C. The invisible gorilla. 2010. Available online: http://www.theinvisiblegorilla.com (accessed on 31 July 2018).

34. Galbraith, R.C.; Underwood, B.J. Perceived frequency of concrete and abstract words. Mem. Cogn. 1973, 1, 56–60.

35. Mitchell, D.J.; Russo, J.E.; Pennington, N. Back to the future: Temporal perspective in the explanation of events. J. Behav. Decis. Mak. 1989, 2, 22–38.

36. Weinreich, S.; Şahin, T.; Inkermann, D.; Huth, T.; Vietor, T. Managing disruptive innovation by value-oriented portfolio planning. In Proceedings of the DESIGN Conference, Pittsburgh, PA, USA, 26–29 October 2020.

37. Nayebi, M.; Ruhe, G. An open innovation approach in support of product release decisions. In Proceedings of the 7th International Workshop on Cooperative and Human Aspects of Software Engineering, Hyderabad, India, 2–3 June 2014.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sanchez Ruelas, J.G.; Şahin, T.; Vietor, T. Development of Decision–Model and Strategies for Allaying Biased Choices in Design and Development Processes. J. Open Innov. Technol. Mark. Complex. 2021, 7, 118. https://doi.org/10.3390/joitmc7020118