An Autonomous Human Following Caddie Robot with High-Level Driving Functions

Abstract

1. Introduction

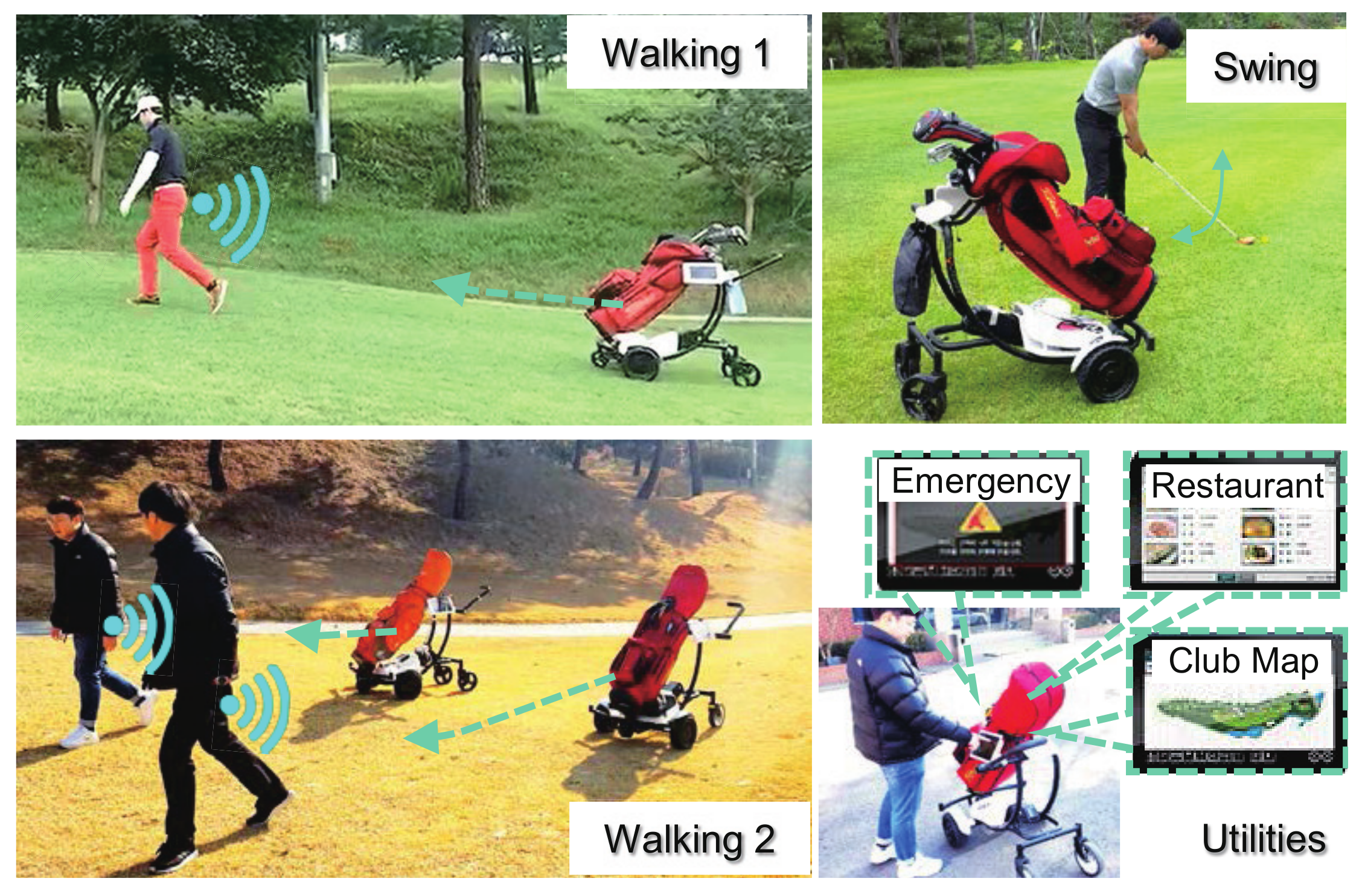

2. Description of the Caddie Robot

2.1. Functionalities of the Caddie Robot

2.2. System Configuration and Operating Principle

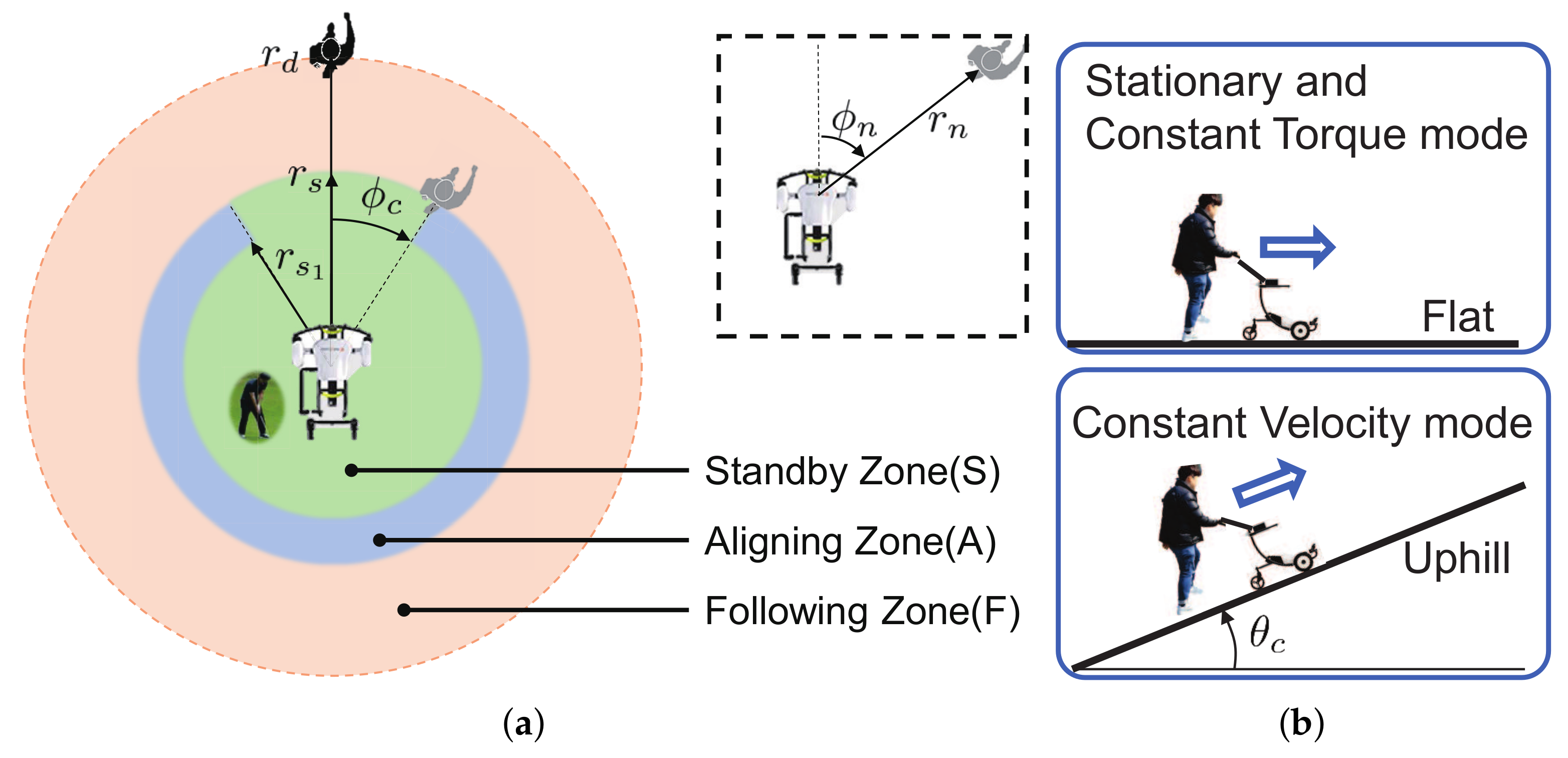

2.3. Driving Strategy for the Robot

2.3.1. Autonomous Driving Mode

- Standby Mode:

- Aligning Mode:

- Following Mode:

2.3.2. Manual Mode

- Constant Torque Mode:

- Constant Velocity Mode:

3. Driving and Control Algorithms

3.1. Overall Structure of Driving Controller for Caddie Robot

3.2. Control Configuration for Autonomous Driving Mode

3.3. Velocity and Orientation Reference Generation Algorithm

3.4. Controller Design for the Manual Mode Operation

3.5. Human Intention Recognition Based on Yaw Motion

4. Experimental Verification

4.1. Verification of Driving Performance

4.2. Experiment for the Whole Driving Scenario

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Haddadin, S.; Albu-Schäffer, A.; Hirzinger, G. Safe Physical Human-Robot Interaction: Measurements, Analysis and New Insights. In Robotics Research; Kaneko, M., Nakamura, Y., Eds.; Springer: Berlin/Heidelberg, Germany, 2011; pp. 395–407.

- Morato, C.; Kaipa, K.N.; Zhao, B.; Gupta, S.K. Toward Safe Human Robot Collaboration by Using Multiple Kinects Based Real-Time Human Tracking. J. Comput. Inf. Sci. Eng. 2014, 14, 011006.

- Roy, N.; Baltus, G.; Fox, D.; Gemperle, F.; Goetz, J.; Hirsch, T.; Margaritis, D.; Montemerlo, M.; Pineau, J.; Schulte, J.; et al. Towards personal service robots for the elderly. In Proceedings of the Workshop on Interactive Robots and Entertainment (WIRE 2000); 2000; Volume 25, p. 184. Available online: http://www.fore.robot.cc/papers/thrun.nursebot-early.pdf (accessed on 15 August 2020).

- Nakaoka, S.; Nakazawa, A.; Yokoi, K.; Ikeuchi, K. Leg motion primitives for a dancing humanoid robot. In Proceedings of the IEEE International Conference on Robotics and Automation, New Orleans, LA, USA, 26 April–1 May 2004; Volume 1, pp. 610–615.

- Cigliano, P.; Lippiello, V.; Ruggiero, F.; Siciliano, B. Robotic Ball Catching with an Eye-in-Hand Single-Camera System. IEEE Trans. Control Syst. Technol. 2015, 23, 1657–1671.

- Widodo, F.A.; Mutijarsa, K. Design and implementation of movement, dribbler and kicker for wheeled soccer robot. In Proceedings of the 2017 International Conference on Information Technology Systems and Innovation (ICITSI), Bandung, Indonesia, 23–24 October 2017; pp. 200–205.

- Choi, J.H.; Song, C.; Kim, K.; Oh, S. Development of Stone Throwing Robot and High Precision Driving Control for Curling. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 2434–2440.

- Anderson, M. A new spin on an old toy. IEEE Spectr. 2009, 46, 18–19.

- Pereira, N.; Ribeiro, F.; Lopes, G.; Whitney, D.; Lino, J. Autonomous golf ball picking robot design and development. Ind. Robot Int. J. 2012, 39, 541–550.

- Xu, C.; Nagaoka, T.; Ming, A.; Shimojo, M. Motion Control of Golf Swing Robot Based on Target Dynamics. In Proceedings of the 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems, Beijing, China, 9–15 October 2006; pp. 2545–2550.

- Suzuki, S.; Haake, S.J.; Heller, B.W. Multiple modulation torque planning for a new golf-swing robot with a skilful wrist turn. Sports Eng. 2006, 9, 201–208.

- Bulson, R.C.; Ciuffreda, K.J.; Hung, G.K. The effect of retinal defocus on golf putting. Ophthalmic Physiol. Opt. 2008, 28, 334–344.

- Smith, A.D.; Chang, H.J.; Blanchard, E.J. An outdoor high-accuracy local positioning system for an autonomous robotic golf greens mower. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation, Saint Paul, MN, USA, 14–15 May 2012; pp. 2633–2639.

- Tang, Y.; Xu, J.; Fang, M. Tracking feedback system of Golf Robotic Caddie based on the binocular vision. In Proceedings of the 2016 12th World Congress on Intelligent Control and Automation (WCICA), Guilin, China, 12–15 June 2016; pp. 1491–1495.

- Tempo Walk in Clubcar. Available online: https://www.clubcar.com/us/en/golf-operations/fleet-golf/tempo-walk.html (accessed on 15 August 2020).

- Gupta, M.; Kumar, S.; Behera, L.; Subramanian, V.K. A Novel Vision-Based Tracking Algorithm for a Human-Following Mobile Robot. IEEE Trans. Syst. Man Cybern. Syst. 2017, 47, 1415–1427.

- Pang, L.; Zhang, L.; Yu, Y.; Yu, J.; Cao, Z.; Zhou, C. A human-following approach using binocular camera. In Proceedings of the 2017 IEEE International Conference on Mechatronics and Automation (ICMA), Kagawa, Japan, 6–9 August 2017; pp. 1487–1492.

- Zhu, Z.; Ma, H.; Zou, W. Human Following for Wheeled Robot with Monocular Pan-tilt Camera. arXiv 2019, arXiv:1909.06087.

- Chi, W.; Wang, J.; Meng, M.Q. A Gait Recognition Method for Human Following in Service Robots. IEEE Trans. Syst. Man Cybern. Syst. 2018, 48, 1429–1440.

- Huskić, G.; Buck, S.; González, L.A.I.; Zell, A. Outdoor person following at higher speeds using a skid-steered mobile robot. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 3433–3438.

- Jiang, L.; Wang, W.; Chen, Y.; Jia, Y. Personalize Vison-based Human Following for Mobile Robots by Learning from Human-Driven Demonstrations. In Proceedings of the 2018 27th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Nanjing, China, 27–31 August 2018; pp. 726–731.

- Motion control of joystick interfaced electric wheelchair for improvement of safety and riding comfort. Mechatronics 2019, 59, 104–114.

- Komada, S.; Ishida, M.; Ohnishi, K.; Hori, T. Disturbance observer-based motion control of direct drive motors. IEEE Trans. Energy Convers. 1991, 6, 553–559.

- Fujimoto, H.; Saito, T.; Noguchi, T. Motion stabilization control of electric vehicle under snowy conditions based on yaw-moment observer. In Proceedings of the 8th IEEE International Workshop on Advanced Motion Control, AMC ’04, Kawasaki, Japan, 25–28 March 2004; pp. 35–40.

- Operation state observation and condition recognition for the control of power-assisted wheelchair. Mechatronics 2014, 24, 1101–1111.

- Ciel GC Kernel Description. Available online: http://www.cielgolf.com/ (accessed on 18 August 2020).

| Parameter | Value |
|---|---|
| Kerb weight | 30 kg |
| Maximum payload | 50 kg |
| Dimensions | W: 1030 mm, D: 720 mm, H: 865 mm |
| Wheel radius | 0.13 m |
| Operating hours | 3 h |
| Maximum velocity | 11 km/h |
| Maximum gradability | 25 |
| Maximum bank angle | 20 |
| Battery | Lithium polymer, 36 V, 20 Ah |
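As a quick consistency check on the specification table, the rated maximum velocity and wheel radius imply a wheel angular speed, and the battery rating implies a nominal stored energy. The sketch below assumes rolling without slip and full use of the nominal capacity; the derived mean power is an illustrative upper bound, not a figure reported for the robot, and the helper names (`wheel_speed_at`, `nominal_energy_wh`) are hypothetical.

```python
import math

# Values taken from the specification table above.
WHEEL_RADIUS_M = 0.13        # wheel radius [m]
MAX_SPEED_KMH = 11.0         # maximum velocity [km/h]
BATTERY_VOLTAGE_V = 36.0     # nominal battery voltage [V]
BATTERY_CAPACITY_AH = 20.0   # nominal battery capacity [Ah]
OPERATING_HOURS_H = 3.0      # rated operating time [h]


def wheel_speed_at(speed_kmh: float, radius_m: float) -> tuple[float, float]:
    """Return (rad/s, rpm) of a wheel rolling without slip at speed_kmh."""
    speed_ms = speed_kmh / 3.6            # km/h -> m/s
    omega = speed_ms / radius_m           # v = omega * r (no-slip assumption)
    rpm = omega * 60.0 / (2.0 * math.pi)  # rad/s -> rev/min
    return omega, rpm


def nominal_energy_wh(voltage_v: float, capacity_ah: float) -> float:
    """Nominal stored energy, ignoring depth-of-discharge limits and losses."""
    return voltage_v * capacity_ah


omega, rpm = wheel_speed_at(MAX_SPEED_KMH, WHEEL_RADIUS_M)
energy = nominal_energy_wh(BATTERY_VOLTAGE_V, BATTERY_CAPACITY_AH)
avg_power = energy / OPERATING_HOURS_H  # assumed upper bound on mean draw over 3 h

print(f"wheel speed at 11 km/h: {omega:.1f} rad/s ({rpm:.0f} rpm)")
print(f"nominal battery energy: {energy:.0f} Wh")
print(f"mean power over 3 h: {avg_power:.0f} W")
```

Under these assumptions the wheels turn at roughly 23.5 rad/s (about 224 rpm) at top speed, and the 720 Wh pack would sustain an average draw of at most about 240 W over the rated 3 h.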

| Parameter | Definition | Critical value |
|---|---|---|
| S | Switch signal by the user | 1, 0 |
| E | RMS error of pattern conformity | |
| v | Current velocity | |
| | Hill angle measured by the sensor | |
| r | Remote distance from the robot to the user | |
| | Heading angle from the robot to the user | |

| Parameter | Value | Parameter | Value |
|---|---|---|---|
| | 1.2 | | 40 kg |
| | 0.01 | | 10 kg/s |
| | 0.3 | | 0.12 kg·m² |
| | 0.005 | | 0.005 kg·m²/s |
| | 0.0122 s | | 0.0796 s |
| | 0.008 s | | 0.1592 s |
| | 1.5 m/s | | 2.9 m |
| | 1.8 m | | 2.525 m |
| | 1.09 rad/s | | 0.3 g |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Choi, J.H.; Samuel, K.; Nam, K.; Oh, S. An Autonomous Human Following Caddie Robot with High-Level Driving Functions. Electronics 2020, 9, 1516. https://doi.org/10.3390/electronics9091516
