Optimal User Selection for High-Performance and Stabilized Energy-Efficient Federated Learning Platforms

Abstract

1. Introduction

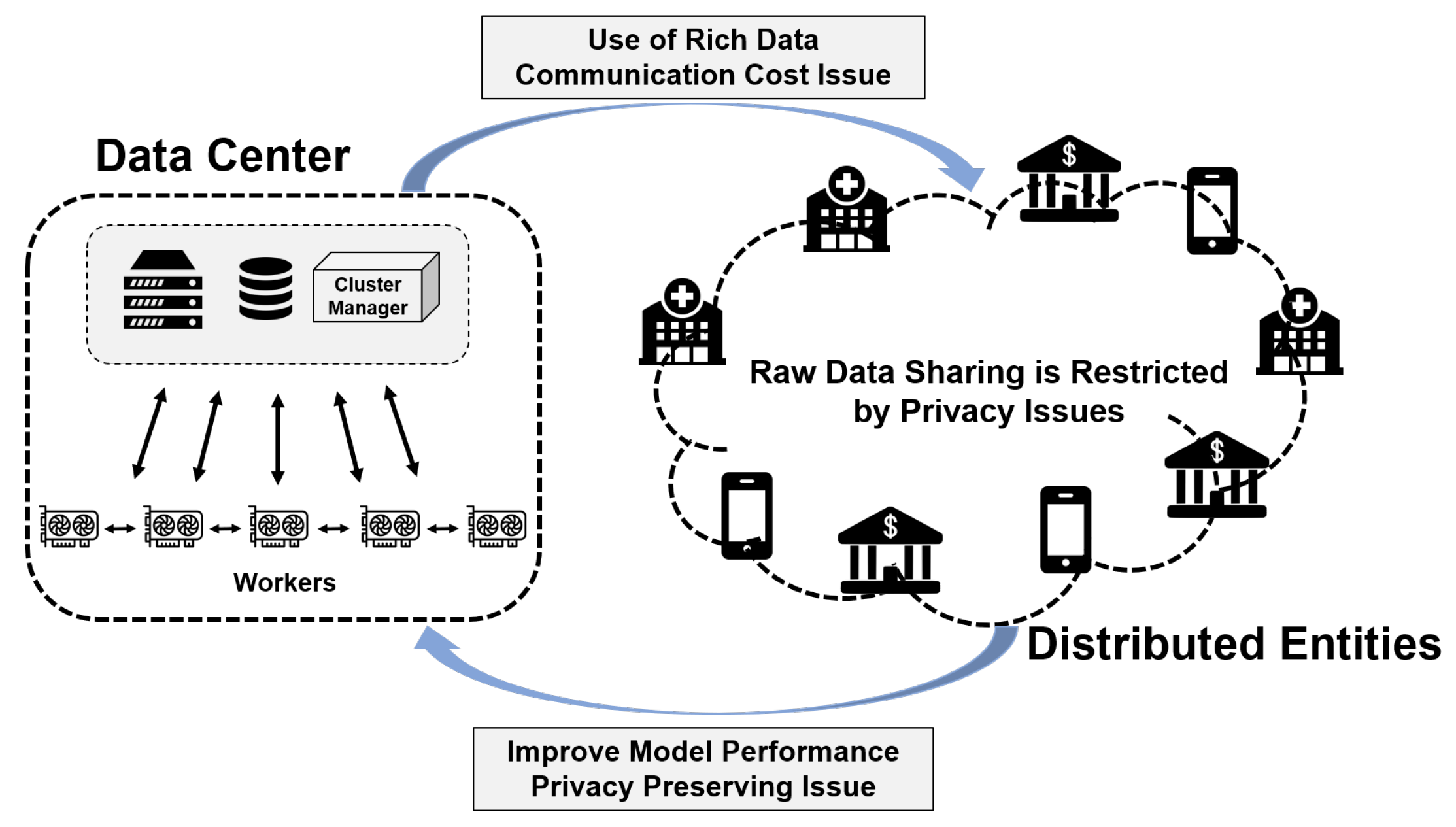

Motivation

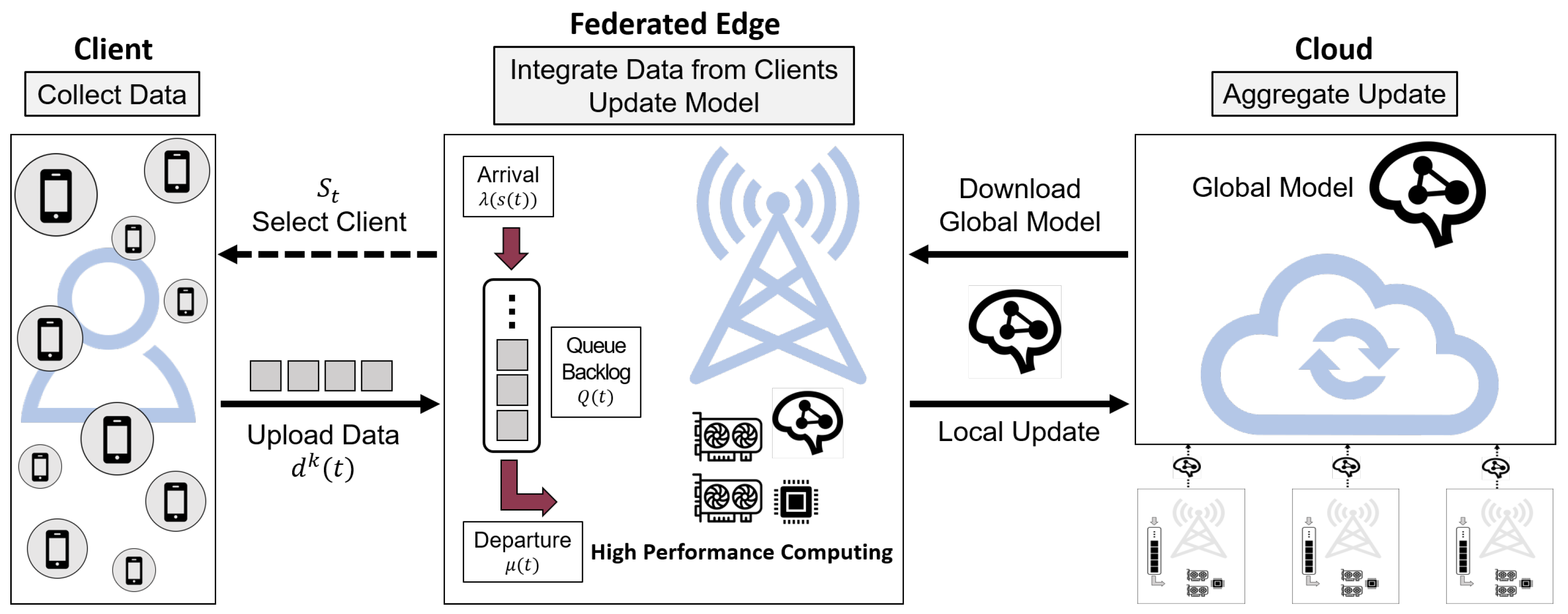

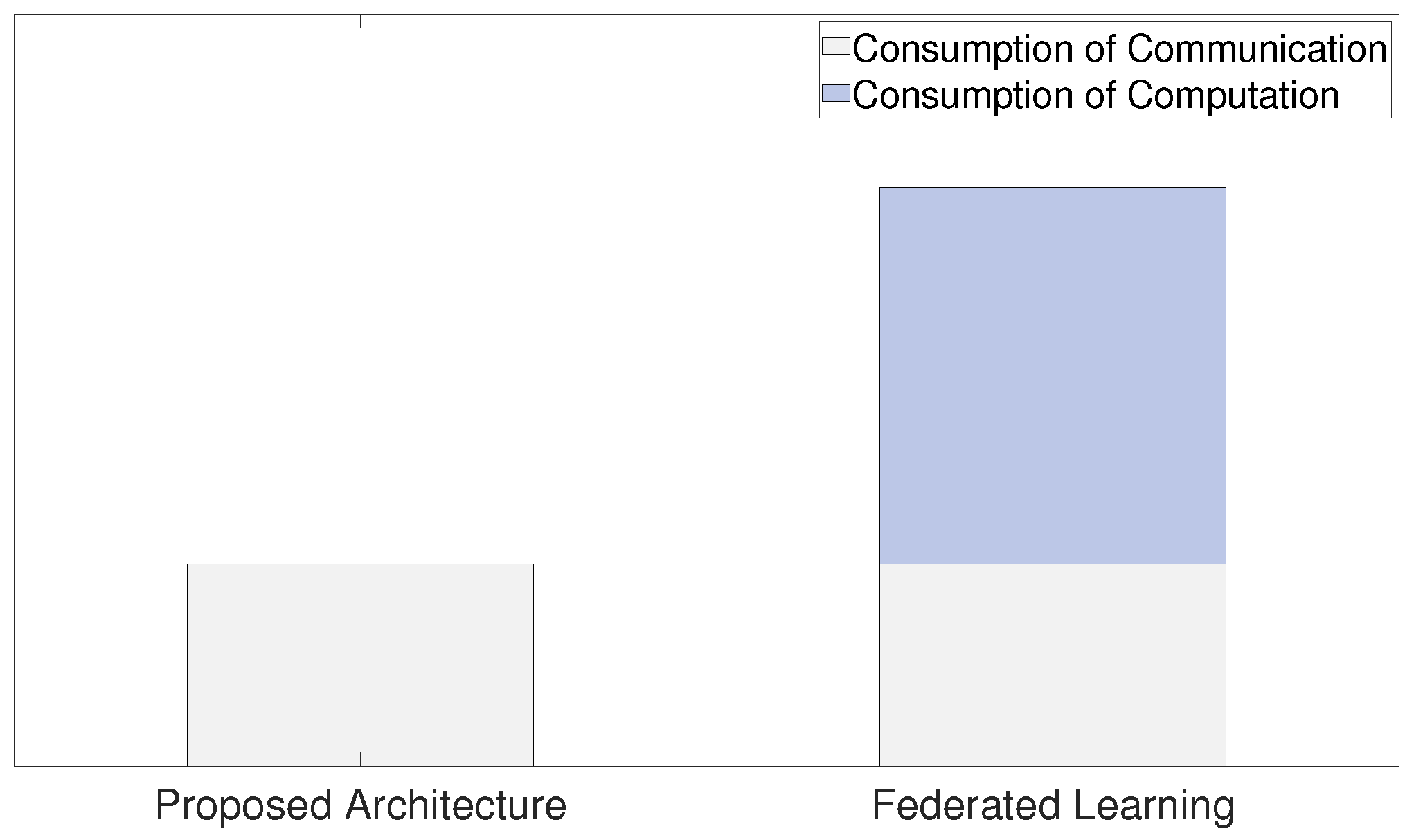

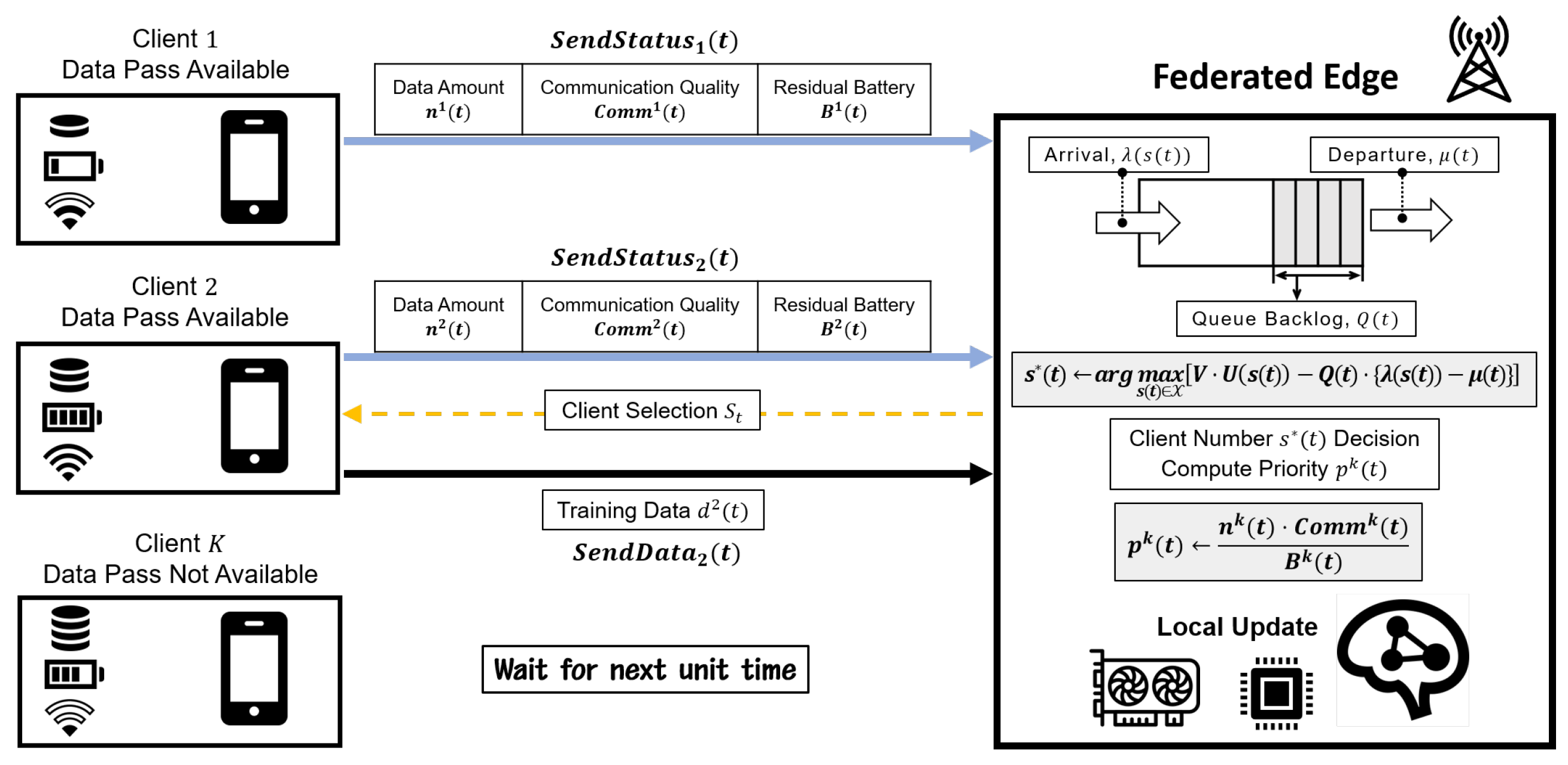

2. Federated Learning Edge

3. System Model of Proposed Method

3.1. Clients of the Federated Learning Edge Platform

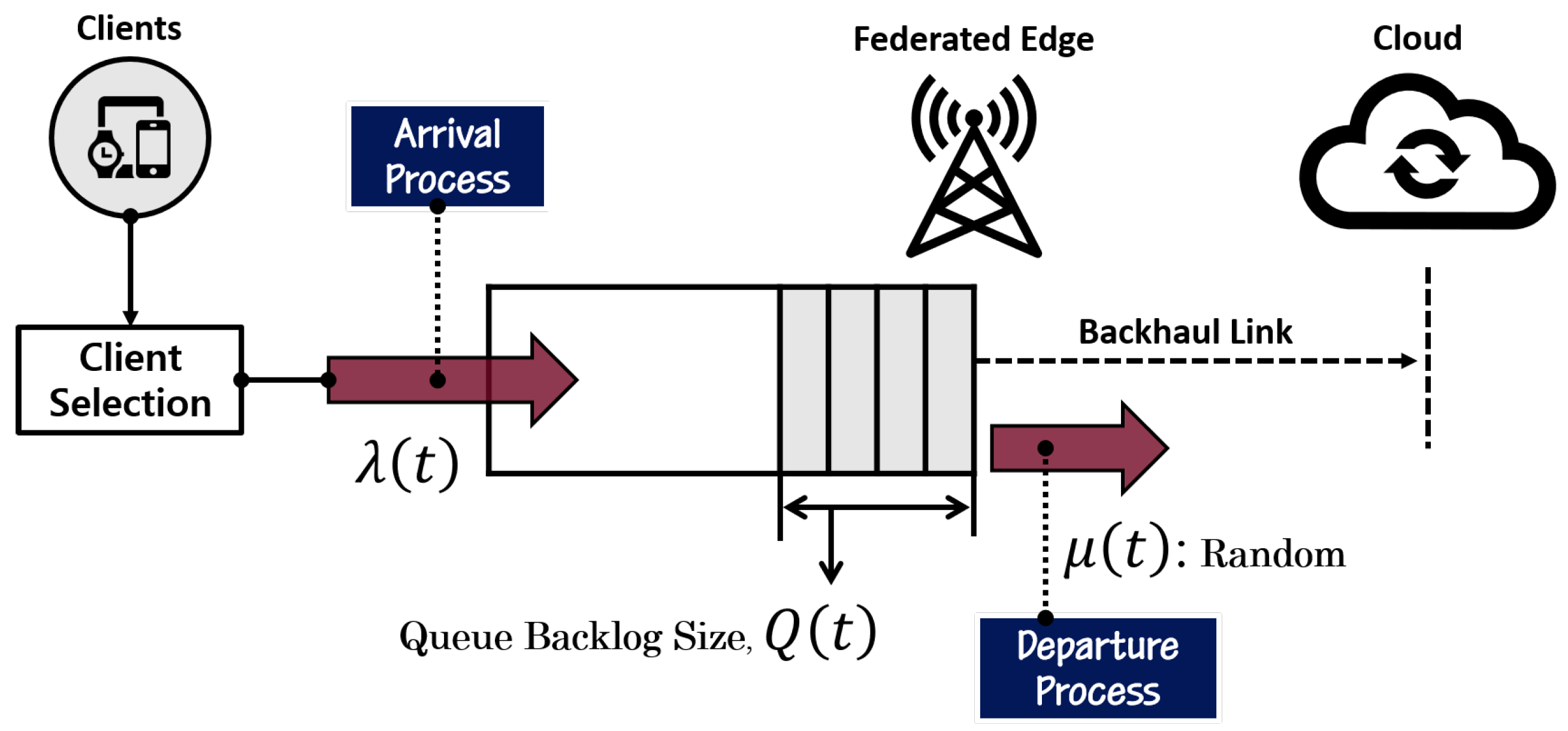

3.2. Queue-Equipped Federated Edge
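The queue at the federated edge can be pictured with the backlog recursion that is standard in Lyapunov optimization, Q(t+1) = max{Q(t) − b(t), 0} + a(t), where a(t) is the arrival (data received from the selected clients) and b(t) is the departure (data the edge processes in one unit time). The following is a minimal sketch assuming that form; the function names `update_queue` and `simulate` and the arrival/departure values are hypothetical.

```python
def update_queue(q: float, arrival: float, departure: float) -> float:
    """One step of the assumed recursion Q(t+1) = max(Q(t) - b(t), 0) + a(t).

    The edge first serves up to `departure` units of backlog, then the
    `arrival` units received in this unit time join the queue.
    """
    if arrival < 0 or departure < 0:
        raise ValueError("arrival and departure must be non-negative")
    return max(q - departure, 0.0) + arrival


def simulate(arrivals, departures, q0: float = 0.0):
    """Return the backlog trajectory [Q(0), Q(1), ..., Q(T)]."""
    trajectory = [q0]
    for a, b in zip(arrivals, departures):
        trajectory.append(update_queue(trajectory[-1], a, b))
    return trajectory


if __name__ == "__main__":
    # Hypothetical arrivals per unit time against a constant service of 3 units.
    print(simulate([5, 5, 2, 0], [3, 3, 3, 3]))  # [0.0, 5.0, 7.0, 6.0, 3.0]
```

When arrivals persistently exceed departures the backlog grows without bound, which is the instability the client-number control is meant to prevent.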

3.3. Client Selection of Federated Edge

4. Proposed Algorithm

| Algorithm 1 Procedure at the federated edge. |
|---|
| 1: K: Total number of clients |
| 2: : Federated edge queue size at t |
| 3: : The set of client numbers |
| 4: V: Trade-off factor between accuracy and queue backlog |
| 5: : The set of selected clients at t |
| 6: : The array of priorities of clients at t |
| 7: : i-th element of array at t |
| 8: : Time-averaged optimal client number at t |
| 9: : Data transmitted from client k at t |
| 10: : Timeout value for receiving resource status from client k |
| 11: |
| 12: for each unit time 0, 1, 2, … do |
| 13: Step 1: Optimal number of clients decision |
| 14: Observe |
| 15: |
| 16: for do |
| 17: |
| 18: if then |
| 19: |
| 20: // Optimal number of clients |
| 21: end if |
| 22: end for |
| 23: Step 2: Client selection |
| 24: Initialize and , where |
| 25: for each client 1, 2, …, K in parallel do |
| 26: Call SendStatus_k of client k |
| 27: Start timer() |
| 28: Wait until (receipt of reply from client k OR timeout) |
| 29: if receipt of reply from client k then |
| 30: SendStatus_k |
| 31: if then // If residual battery power is 0 |
| 32: |
| 33: else |
| 34: // Priority value of client |
| 35: end if |
| 36: else // No reply from client k until timeout |
| 37: |
| 38: end if |
| 39: Reset timer() |
| 40: end for |
| 41: Sort in descending order |
| 42: for each element , do // For selected clients |
| 43: // is i-th element of sorted array |
| 44: end for |
| 45: for each client in parallel do |
| 46: SendData_k |
| 47: end for |
| 48: max 1.6 |
| 49: end for |

| Algorithm 2 Procedure at each client k. |
|---|
| 1: SendStatus_k: |
| 2: (Data amount of client k at t) |
| 3: (Communication quality of client k at t) |
| 4: (Residual battery power of client k at t) |
| 5: return () to federated edge |
| 6: SendData_k: |
| 7: (Defined amount of data to send) |
| 8: return to federated edge |
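Lines 25 to 40 of Algorithm 1 query every client in parallel and treat a client that has not replied within its timeout as unavailable. One way to sketch that pattern is with Python's `concurrent.futures`. Here `send_status` is a hypothetical stand-in for the client-side SendStatus_k of Algorithm 2 (it simply sleeps and returns a tuple), and a single shared timeout replaces the per-client timeout for brevity; both are assumptions of this sketch.

```python
import time
from concurrent.futures import ThreadPoolExecutor, wait
from typing import Dict, Optional, Tuple

# (data amount D_k, communication quality C_k, residual battery E_k)
Status = Tuple[float, float, float]


def send_status(client_id: int, delay_s: float) -> Status:
    """Hypothetical stand-in for SendStatus_k: replies after `delay_s` seconds."""
    time.sleep(delay_s)
    return (100.0 * client_id, 0.5, 1.0)


def collect_statuses(delays: Dict[int, float], timeout_s: float) -> Dict[int, Optional[Status]]:
    """Query every client in parallel; a client that misses the timeout maps to None."""
    pool = ThreadPoolExecutor(max_workers=max(1, len(delays)))
    futures = {cid: pool.submit(send_status, cid, d) for cid, d in delays.items()}
    done, _not_done = wait(list(futures.values()), timeout=timeout_s)
    # Return without blocking on stragglers (cancel_futures requires Python 3.9+).
    pool.shutdown(wait=False, cancel_futures=True)
    return {cid: (fut.result() if fut in done else None) for cid, fut in futures.items()}


if __name__ == "__main__":
    # Clients 1 and 2 answer at once; client 3 is slower than the 0.2 s timeout.
    print(collect_statuses({1: 0.0, 2: 0.0, 3: 0.5}, timeout_s=0.2))
```

A `None` entry plays the role of the "no reply until timeout" branch, after which the client can be given the lowest priority.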

4.1. Client Number Control by Lyapunov Optimization
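Step 1 of Algorithm 1 chooses, in every unit time, the client number that balances accuracy (weighted by V) against the current queue backlog. A minimal sketch, assuming the standard drift-plus-penalty rule k*(t) = argmax over k in the set of client numbers of [V·U(k) − Q(t)·a(k)]. The utility U(k) and arrival a(k) below are hypothetical placeholders (a concave, saturating utility and an arrival linear in k), not the functions used in the paper.

```python
import math
from typing import Callable, Iterable


def optimal_client_number(
    q: float,
    v: float,
    candidates: Iterable[int],
    utility: Callable[[int], float],
    arrival: Callable[[int], float],
) -> int:
    """Return argmax_k [V * U(k) - Q(t) * a(k)] over the candidate client numbers.

    Ties resolve to the later (larger) candidate in the scan.
    """
    best_k, best_score = None, -math.inf
    for k in candidates:
        score = v * utility(k) - q * arrival(k)
        if score >= best_score:
            best_k, best_score = k, score
    if best_k is None:
        raise ValueError("candidate set must not be empty")
    return best_k


def example_utility(k: int) -> float:
    """Hypothetical utility with diminishing returns in the number of clients."""
    return math.log(1 + k)


def example_arrival(k: int) -> float:
    """Hypothetical arrival: each selected client adds 10 units to the queue."""
    return 10.0 * k


if __name__ == "__main__":
    for backlog in (0.0, 1.0, 50.0):
        k = optimal_client_number(backlog, 100.0, range(1, 11), example_utility, example_arrival)
        print(backlog, k)
```

With V = 100 and these placeholder functions, an empty queue selects all ten clients, a backlog of 1 selects nine, and a backlog of 50 selects one: as the queue grows, the rule trades accuracy for stability.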

4.2. Client Selection
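Step 2 of Algorithm 1 ranks clients by priority and keeps the highest-priority ones, up to the chosen client number; a client with zero residual battery, or one that never replied before its timeout, gets priority 0. The sketch below assumes a hypothetical `ClientStatus` record holding the three quantities each client reports and a hypothetical priority function (`example_priority`), since the paper's priority formula is not reproduced here. It also skips zero-priority clients entirely, which is an assumption of this sketch.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass(frozen=True)
class ClientStatus:
    data_amount: float   # data amount of client k at t (hypothetical units)
    comm_quality: float  # communication quality of client k at t
    battery: float       # residual battery power of client k at t


def select_clients(
    statuses: Dict[int, Optional[ClientStatus]],
    k_star: int,
    priority: Callable[[ClientStatus], float],
) -> List[int]:
    """Return up to k_star client ids in descending order of priority.

    None means the client timed out. Timed-out clients and clients with an
    empty battery get priority 0 and, in this sketch, are never selected.
    """
    priorities = {}
    for cid, status in statuses.items():
        if status is None or status.battery <= 0:
            priorities[cid] = 0.0
        else:
            priorities[cid] = priority(status)
    ranked = sorted(priorities, key=lambda cid: priorities[cid], reverse=True)
    return [cid for cid in ranked if priorities[cid] > 0][:k_star]


def example_priority(s: ClientStatus) -> float:
    """Hypothetical priority: favour clients with more data and a better link."""
    return s.data_amount * s.comm_quality


if __name__ == "__main__":
    statuses = {
        1: ClientStatus(100, 0.9, 0.5),
        2: ClientStatus(300, 0.2, 0.8),
        3: ClientStatus(500, 0.9, 0.0),  # empty battery
        4: None,                         # no reply before timeout
        5: ClientStatus(200, 0.6, 0.3),
    }
    print(select_clients(statuses, 2, example_priority))  # [5, 1]
```

Passing the priority as a function keeps the ranking logic independent of whichever weighting of data amount, channel quality, and battery is actually used.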

5. Security and Privacy Discussions in FL

6. Performance Evaluation

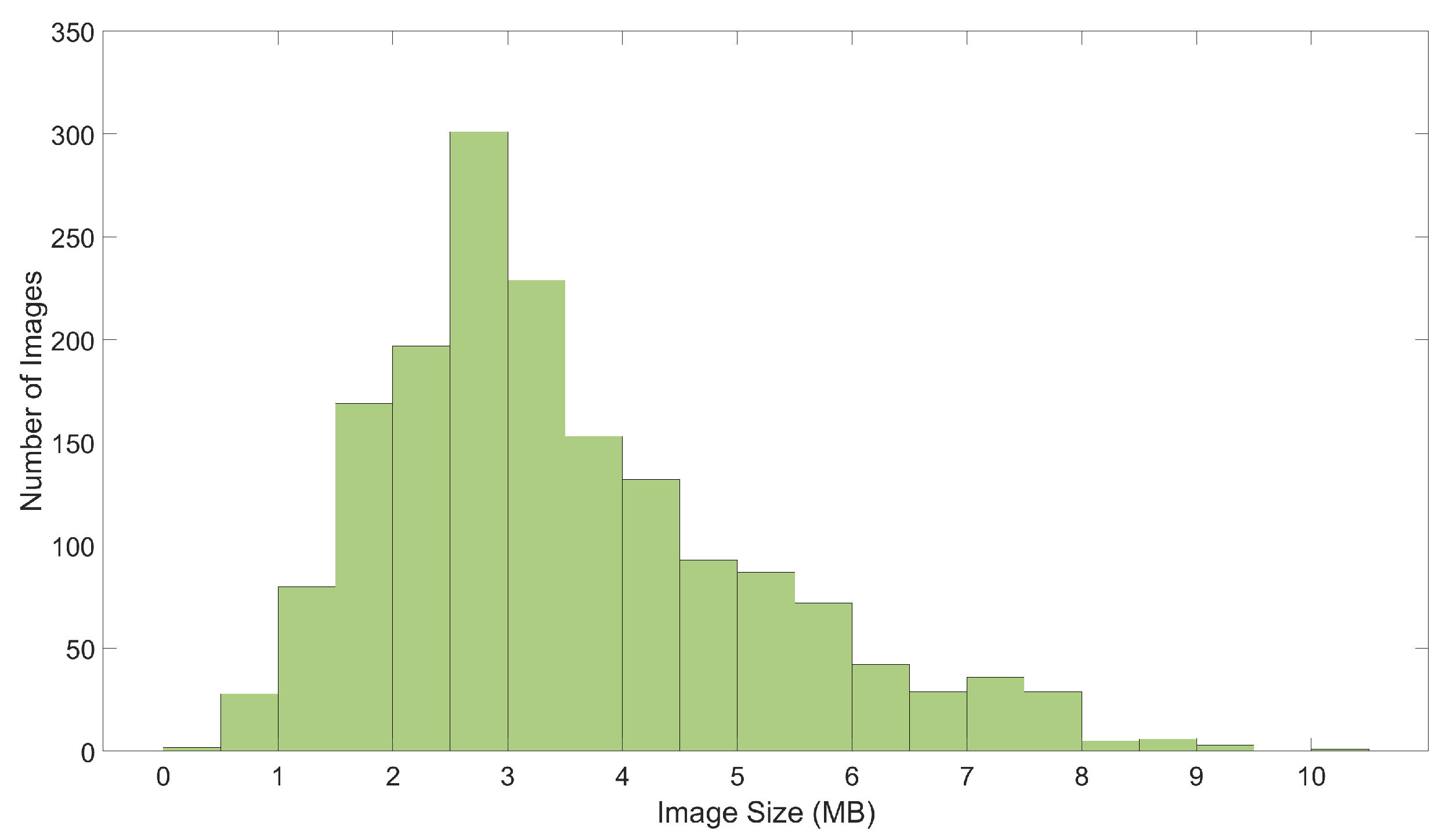

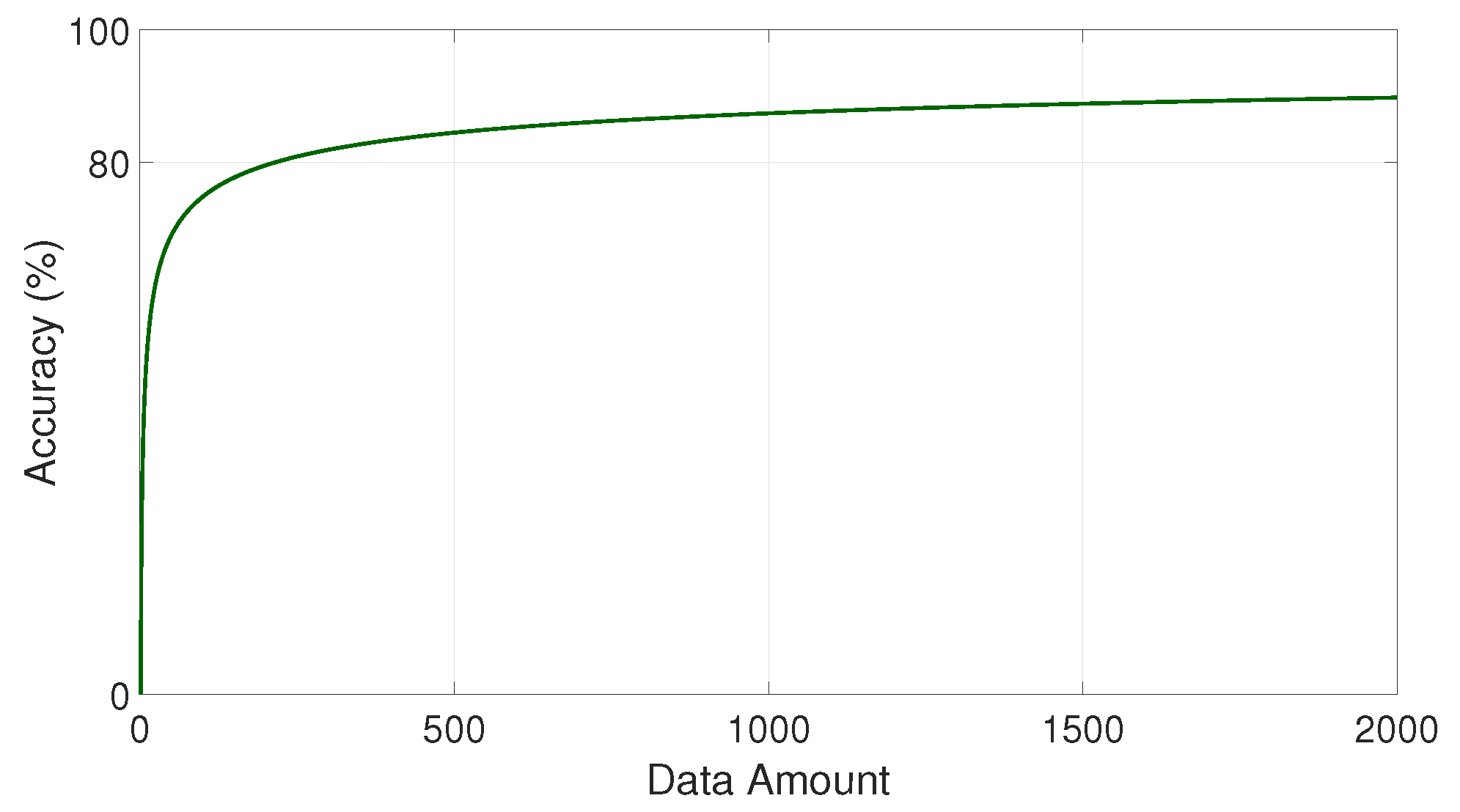

6.1. Experiment Setting

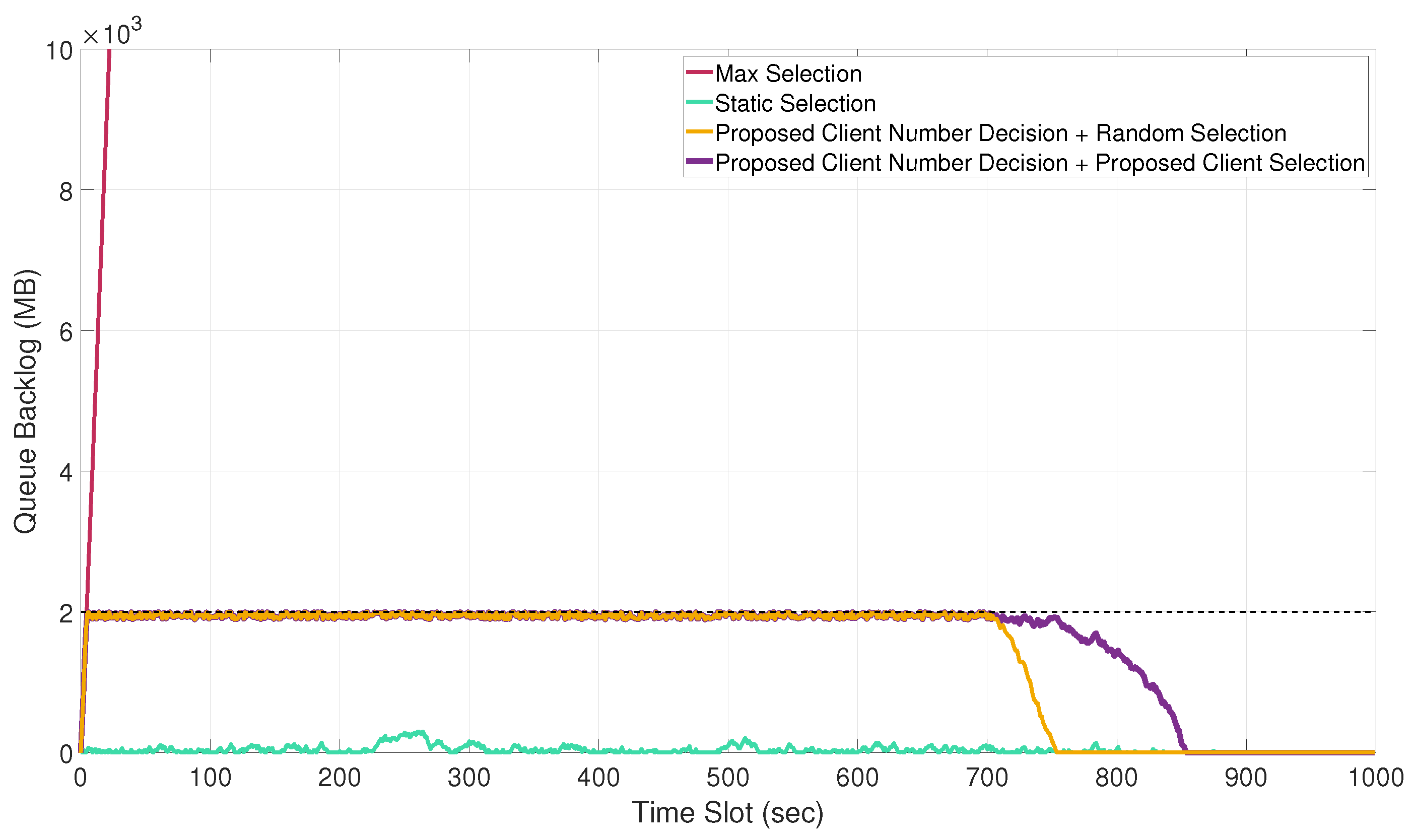

- Max Selection: The federated edge receives data from every client in every unit time.

- Static Selection: The federated edge selects the same number of clients in every unit time. In this evaluation, five clients transmit data in each unit time.

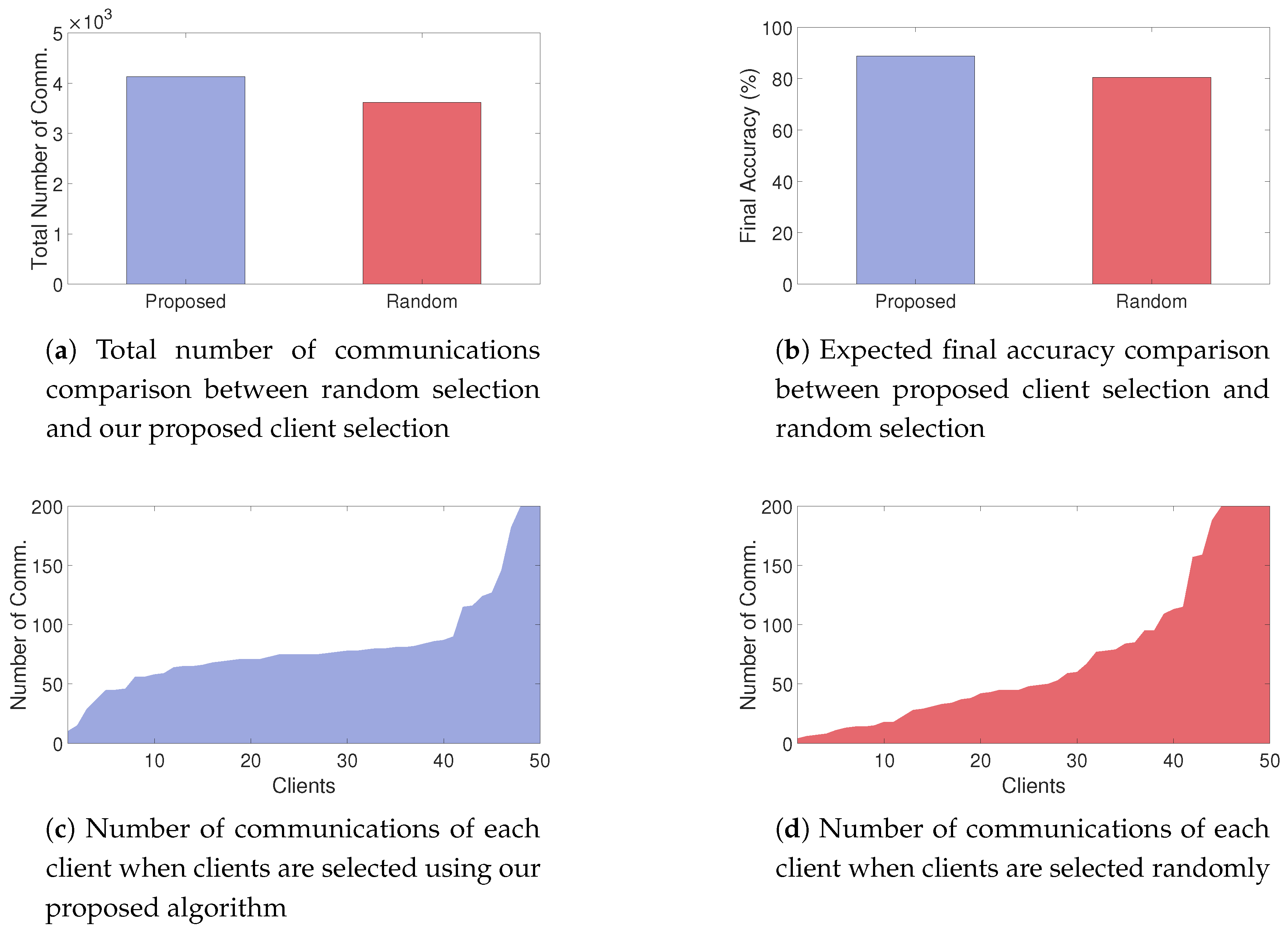

- Random Selection: The number of selected clients is decided in the same way as in our proposed algorithm, but the clients themselves are chosen at random, without considering their resources.
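The three baselines differ only in how many clients they pick and which ones. A hedged sketch, assuming client ids 1 to K; the Random baseline receives the client number k*(t) from the same decision as the proposed method, passed in here as a plain integer. The text does not say which five clients Static Selection uses, so drawing them at random is an assumption, and all function names are hypothetical.

```python
import random
from typing import List


def max_selection(num_clients: int) -> List[int]:
    """Max Selection: every client transmits in every unit time."""
    return list(range(1, num_clients + 1))


def static_selection(num_clients: int, rng: random.Random, count: int = 5) -> List[int]:
    """Static Selection: a fixed number of clients (five in this evaluation).

    Drawing the clients uniformly at random is an assumption of this sketch.
    """
    return sorted(rng.sample(range(1, num_clients + 1), min(count, num_clients)))


def random_selection(num_clients: int, k_star: int, rng: random.Random) -> List[int]:
    """Random Selection: k*(t) clients drawn uniformly, ignoring their resources."""
    return sorted(rng.sample(range(1, num_clients + 1), min(k_star, num_clients)))


if __name__ == "__main__":
    rng = random.Random(0)
    print(max_selection(10))
    print(static_selection(10, rng))
    print(random_selection(10, 3, rng))
```

Because Random Selection shares the client count with the proposed method, comparing the two isolates the effect of resource-aware client choice.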

6.2. Experimental Results

7. Concluding Remarks and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Parameter | Description |
|---|---|
| K | Total number of clients |
|  | Amount of data of client k |
|  | Communication quality of client k |
|  | Residual battery power of client k |
|  | Weight of client k |
|  | Time-averaged optimal client number |
|  | Possible client numbers at t |
|  | Set of client numbers |
| V | Trade-off factor between accuracy and queue backlog |
|  | Utility function for a given client number |
|  | Federated edge queue size at t |
|  | Arrival process for a given client number |
|  | Departure process at t |
| f | Carrier frequency |
|  | Transmission power |
|  | Path loss at t |
|  | Distance between client k and federated edge at time t |
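The parameter table lists a carrier frequency f, a transmission power, a path loss at t, and the client-to-edge distance, quantities that typically feed a link-quality estimate. As an illustration only, the sketch below assumes the free-space (Friis) path loss model, PL(dB) = 20·log10(d) + 20·log10(f) + 20·log10(4π/c), and computes received power as transmit power minus path loss with antenna gains ignored. The evaluation may well use a different (for example, measured indoor) model, and the function names are hypothetical.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def free_space_path_loss_db(distance_m: float, freq_hz: float) -> float:
    """Free-space path loss in dB, for distance in metres and frequency in hertz."""
    if distance_m <= 0 or freq_hz <= 0:
        raise ValueError("distance and frequency must be positive")
    return (20 * math.log10(distance_m)
            + 20 * math.log10(freq_hz)
            + 20 * math.log10(4 * math.pi / SPEED_OF_LIGHT))


def received_power_dbm(tx_power_dbm: float, distance_m: float, freq_hz: float) -> float:
    """Received power = transmit power - path loss (antenna gains ignored)."""
    return tx_power_dbm - free_space_path_loss_db(distance_m, freq_hz)


if __name__ == "__main__":
    # Hypothetical client 10 m from the edge, 2.4 GHz carrier, 20 dBm transmit power.
    print(round(free_space_path_loss_db(10.0, 2.4e9), 2))
    print(round(received_power_dbm(20.0, 10.0, 2.4e9), 2))
```

For a client 10 m away at 2.4 GHz this gives roughly 60.05 dB of path loss, so a 20 dBm transmission arrives at about −40.05 dBm; doubling the distance adds about 6 dB of loss.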

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Jeon, J.; Park, S.; Choi, M.; Kim, J.; Kwon, Y.-B.; Cho, S. Optimal User Selection for High-Performance and Stabilized Energy-Efficient Federated Learning Platforms. Electronics 2020, 9, 1359. https://doi.org/10.3390/electronics9091359
