Abstract

Federated learning enables edge devices to train a global model collaboratively by sharing model updates rather than their local data. However, exchanging models between many clients and a central server causes problems such as high latency and network congestion. Moreover, the battery consumption caused by local training may burden power-hungry clients. To tackle these issues, federated edge learning (FEEL) applies the network edge technologies of mobile edge computing. In this paper, we propose a novel control algorithm for a high-performance, queue-stabilized FEEL system. We consider a FEEL environment in which clients transmit data to their associated federated edges; these edges then locally update the global model, which is downloaded from the central server via a backhaul. Obtaining more local data from the clients enables a more accurate global model; however, the resulting substantial data arrivals from the clients may compromise queue stability at the edge. Therefore, the proposed algorithm varies the number of clients selected for transmission, with the aim of maximizing the time-averaged federated learning accuracy subject to queue stability. Given this number, the federated edge selects which clients transmit on the basis of their resource status.

1. Introduction

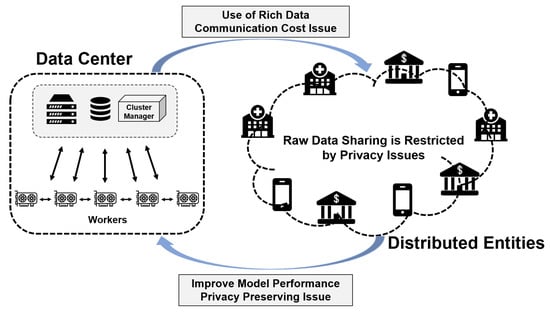

Deep neural networks have demonstrated strong performance in several machine learning tasks, including speech recognition, object detection, and natural language processing. Large quantities of training data and complex neural network architectures make it possible to generate high-quality models, which has pushed these systems into applications requiring more computing resources as well as larger and richer datasets. To handle these larger workloads, data centers have implemented distributed neural network training techniques []. To use high-performance computing (HPC) clusters efficiently for distributed training, techniques including synchronous and asynchronous updates [], compression and quantization [], and hierarchical systems [] have been considered. However, traditional distributed learning requires data from multiple entities to be collected and shared in a central data center. This requirement limits the application of deep learning algorithms in disciplines with data privacy concerns. For example, the data generated by distributed mobile devices can be as rich as the data gathered in a central data center, but these data are privacy sensitive, which may preclude integrating them into the central data center. In addition, disciplines such as medicine and finance have employed deep learning algorithms with remarkable success; however, the shortage of data caused by restrictions on sharing privacy-sensitive data between data sources remains a critical issue [], as shown in Figure 1.

Figure 1.

Learning in data centers and distributed domains highlights different aspects of the issue.

To train models on integrated data without centralizing it, several distributed learning techniques have been investigated. For example, split learning [,] is a novel technique for training deep neural networks across multiple data sources; it avoids sharing raw data by splitting the sequence of model layers between the client side (data sources) and the server side. However, split learning uses computing resources relatively inefficiently because the clients train sequentially []. Moreover, communication costs between the clients and the central server increase in proportion to the training dataset size. Although a parallel approach to split learning has been considered [], communication costs remain the dominant concern in the “cross-device” and “cross-silo” settings, unlike distributed processing within a single data center []. In contrast, federated learning (FL) [,] learns a shared model by aggregating local updates in a data center while leaving the training data on the distributed clients. FL is robust to unbalanced, non-independent and identically distributed (non-IID) data, and to systems in which a large number of clients participate []. Furthermore, FL can tolerate participant drop-outs arising from an unstable environment (e.g., battery exhaustion or unstable network status) []. Several recent studies have applied a mobile edge computing (MEC) structure to FL, resulting in an architecture referred to as federated edge learning (FEEL) [,,]. FEEL can alleviate the high communication costs of FL through its hierarchical architecture.

Motivation

Although FL trains deep learning models while leaving the data on the clients [], it still exhibits several problems, including high communication costs [], battery problems of client devices, and unbalanced datasets [,,]. In particular, the battery consumption incurred by clients as they compute local updates is a known constraint of FL procedures and should therefore be taken into account. In addition, although FL tolerates participating clients dropping out, including more clients is advantageous for training accuracy because it covers larger training datasets. To optimize resource allocation, the authors of [,] proposed a client-scheduling algorithm for communication-efficient FL; however, they did not consider the energy consumption of power-hungry devices.

In this study, we implement a federated learning edge architecture that accounts for communication and computation costs, and we propose a queueing algorithm for the federated edge. Instead of the clients, the federated edge performs local updates with the data transmitted from the clients; this reduces the battery consumption that local training would otherwise impose on the clients, as well as the communication burden on the cloud. In this structure, the edge acts as a buffer for data uploaded by associated clients; thus, the queueing system at the edge must be taken into consideration. Therefore, we propose an adaptive client-number decision algorithm for a stabilized, highly accurate, queue-equipped federated learning edge system using Lyapunov optimization. Furthermore, to cover the larger training datasets provided by clients with heterogeneous resources, we propose an energy-aware client-selection method. Our proposed algorithm first decides the number of clients and then selects the clients: the number is decided by considering the queue of the edge, and the clients are selected by considering their data amount, communication quality, and residual battery power.

The remainder of this paper is organized as follows. Section 2 introduces the background research on FL and an overview of the related work. Section 3 describes the system architecture of our proposed method, and Section 4 presents the proposed adaptive client-selection algorithm. Security and privacy in FL are briefly discussed in Section 5. In Section 6, we evaluate performance through simulations and discuss the results. Section 7 concludes the paper.

2. Federated Learning Edge

FL is a learning technique in which a central server trains a deep learning model using the data of distributed clients without collecting those data. By having the clients compute local updates to the global model and aggregating these updates in the central server, FL can utilize the computing resources of the distributed clients. Although FL can train a deep learning model from its distributed users, the process incurs large communication costs between the clients and the server []. Furthermore, computing local updates of the global model is a computationally intensive workload for the client and can therefore consume a large proportion of its battery. Moreover, the heterogeneity of clients in terms of computational power, data resources, and battery power leads to several issues in FL, such as the straggler problem and wasted resources. Although existing FL studies consider the issues of communication costs [] and resource allocation [,], most focus on environments in which the clients and the central server communicate directly.

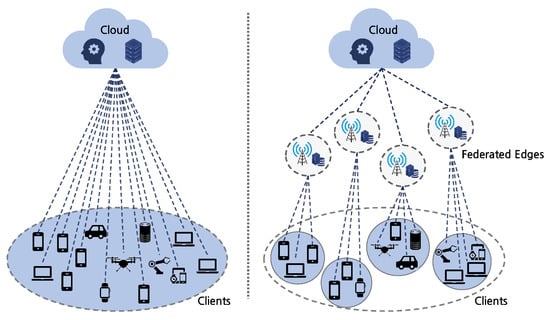

The communication burden on the server generated by updates from millions of clients causes significant bottlenecks when scaling up distributed training. To address this bottleneck, several techniques, including compression [,] and efficient client selection [,], have been considered. Various studies have aimed at reducing the communication costs between the clients and the server; among these approaches, edge computing offers an efficient and practical way to manage numerous clients [,,,], as illustrated in Figure 2.

Figure 2.

Comparison of the communication burden on the cloud in FL (top) and FEEL (bottom).

Several studies have applied an MEC structure to FL and proposed techniques to optimize the tradeoff between communication and computation overhead. In particular, Wang et al. [] proposed a cost-efficient method for federated learning across edge nodes. It optimizes the communication and computation costs of the edges through load balancing and data scheduling based on Lyapunov optimization; however, it trains on only a subset of the arrived data, and communication costs arise between the edges when dispatching training data. Xiaofei et al. [] proposed a framework that integrates deep reinforcement learning (DRL) and federated learning with a mobile edge system. DRL is suitable for optimizing the communication and computation resource usage of mobile devices in an MEC network; however, training DRL agents placed on mobile devices raises an energy consumption issue. To the best of our knowledge, this paper is the first work in which the edge node that performs the local update selects the optimal clients by considering their data amount and battery status, so as to maximize the time-averaged federated learning accuracy subject to queue stability.

3. System Model of Proposed Method

3.1. Clients of the Federated Learning Edge Platform

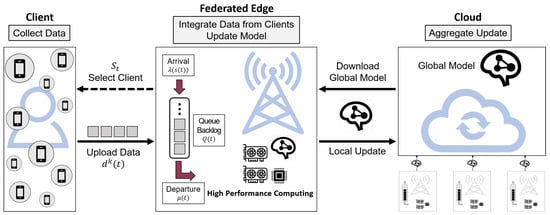

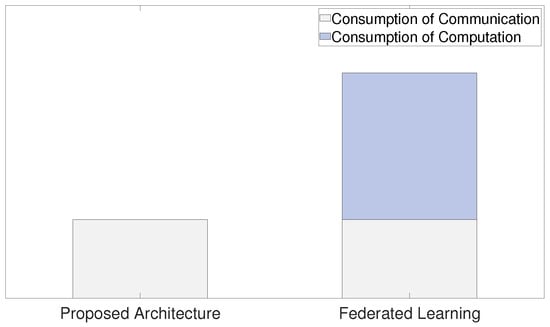

The system model of this work considers a federated learning edge environment that includes clients, federated edges, and a central cloud server, as illustrated in Figure 3. Clients communicate with their associated federated edge and, if selected by it, send it the collected data. Federated edges therefore require a high-performance computing system and a data queue. Whereas traditional FL considers an environment in which data-acquiring clients perform local updates of the global model (downloaded from the central server), we consider an environment in which the federated edge gathers data from the associated clients and performs the local updates with these data. Because the federated edge gathers data from the clients and uploads the local updates, it can relieve much of the communication burden on the central server and reduce the long delays incurred when clients directly upload local updates and download global models. Furthermore, performing local updates with the ample computation resources of a federated edge can alleviate both the battery problem, caused by clients consuming battery to compute local updates, and the straggler problem, caused by the heterogeneity of the clients' computation resources. Figure 4 shows the reduced battery consumption of the client in our system model.

Figure 3.

System model of federated learning edge in our proposed algorithm.

Figure 4.

Comparison of client power consumption between our system model and standard federated learning. The battery consumption caused by local update computation on the client can be reduced in our system model.

The central cloud server broadcasts the global model to the federated edges and aggregates the local updates they provide. Then, the cloud server updates the global model with these aggregated updates. Because the federated edges and the central cloud server are connected via a backhaul link, the federated edges upload the local updates to the cloud server if the communication quality of the backhaul is deemed sufficient for it.
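The aggregation rule used by the central cloud is not specified here. As a minimal sketch, assuming FedAvg-style aggregation in which each federated edge's update is weighted by the number of samples it trained on, the cloud-side step could look like the following; the function name `fedavg` and the flat list-of-floats parameter format are hypothetical.

```python
from typing import List, Sequence


def fedavg(updates: Sequence[Sequence[float]], num_samples: Sequence[int]) -> List[float]:
    """Hypothetical cloud-side aggregation: sample-weighted average of edge updates."""
    if not updates or len(updates) != len(num_samples):
        raise ValueError("need one sample count per update")
    total = sum(num_samples)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(updates[0])
    global_params = [0.0] * dim
    for params, n in zip(updates, num_samples):
        if len(params) != dim:
            raise ValueError("all updates must have the same length")
        for i, p in enumerate(params):
            global_params[i] += (n / total) * p  # edges with more data count more
    return global_params


# Two hypothetical edges; the second trained on three times as much data.
print(fedavg([[1.0, 0.0], [3.0, 4.0]], [100, 300]))  # [2.5, 3.0]
```

Weighting by sample count keeps an edge that drained many clients in a slot from being diluted by an edge that received little data.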

By using federated learning edge architecture to separate the data gathering and local update computation processes between clients and federated edges, our proposed platform can alleviate communication bottlenecks in the central cloud server and reduce the energy demand upon battery-constrained clients.

3.2. Queue-Equipped Federated Edge

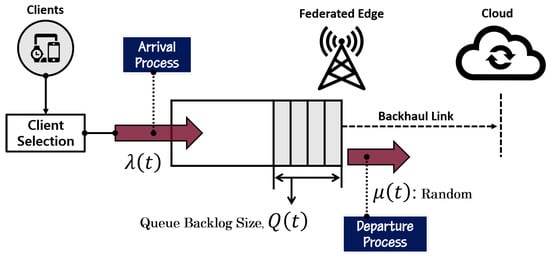

In this distributed architecture, the federated edge acts as a buffer, storing the data and computing the local update. Therefore, it is necessary to take into account the data queue existing in the federated edge and the subsequent transmission delays to the cloud server. A departure from the data queue represents the updating of the global model received from the central cloud server, and an arrival in the data queue represents a data transmission from a client, as shown in Figure 5. In the same way as the central server of traditional FL environments selects clients at random to perform the local update, the federated edge in the federated learning edge environment selects the clients which transmit data to it.

Figure 5.

Queue model of data queue in federated edge network.

In the queue-based system, the stability of the queue must be ensured. The accuracy of the global model increases as more data become available for local updates. However, as the queue backlog cannot grow indefinitely, queue overflow must be prevented. Moreover, as the queue depends on the arrival and departure processes, a stable queue can be achieved by ensuring that the departure rate exceeds the arrival rate. Because of the trade-off between accuracy and queue length, a time-averaged utility maximization procedure subject to queue stability can be designed based on stochastic optimization theory.

3.3. Client Selection of Federated Edge

In practical environments, the resources of clients are heterogeneous. Selecting clients at random to upload data to the federated edge can result in the inefficient use of client resources. Therefore, to utilize the heterogeneous resources of clients, our proposed method is designed such that the federated edge selects clients according to their resources, instead of the random selection procedures used by traditional FL methods. In this paper, we take into account the data quantities to be transmitted as well as the residual battery power and communication quality of the data-acquiring clients. After the federated edge has decided upon the number of clients to receive the data from, it selects the clients on the basis of priorities determined by the resources of each client.

4. Proposed Algorithm

In this section, the details of our proposed client selection algorithm are introduced. The algorithm includes two steps: deciding the number of clients, and selecting that many clients. The federated edge decides the number of clients to receive data from using a control-Lyapunov function for a stabilized data queue in the federated learning edge. Next, the edge selects the clients according to a weight calculated from the resource status of each client. Because we assume that each selected client transmits an equal amount of data to the federated edge, the number of clients can be decided using the data queue length of the federated edge. The notations for the parameters used in this paper, along with their descriptions, are summarized in Table 1. The procedures at the federated edge and at each client are presented in Algorithms 1 and 2, respectively. In each unit time t, the federated edge performs two steps: deciding the optimal number of clients and selecting the clients. Each client k executes the functions SendStatusk and SendDatak when they are called: SendStatusk sends the data amount, communication quality, and residual battery power to the federated edge, and SendDatak sends the training data to the federated edge.

| Algorithm 1 Procedure at the federated edge. |

| 1: K: Total number of clients |
| 2: : Federated edge queue size at t |
| 3: : The set of client numbers |
| 4: V: Trade-off factor between accuracy and queue-backlog |
| 5: : The set of selected clients at t |
| 6: : The array of priorities of clients at t |
| 7: : i-th element of array at t |
| 8: : Time-average optimal client number at t |
| 9: : Data transmitted from client k at t |
| 10: : Timeout value for receiving resource status from client k |
| 11: |
| 12: for each unit time 0, 1, 2, … do |
| 13:   Step 1: Optimal number of clients decision |
| 14:   Observe |
| 15: |
| 16:   for do |
| 17: |
| 18:     if then |
| 19: |
| 20:       // Optimal number of clients |
| 21:     end if |
| 22:   end for |
| 23:   Step 2: Client selection |
| 24:   Initialize and , where |
| 25:   for each client 1, 2, …, K in parallel do |
| 26:     Call SendStatusk of client k |
| 27:     Start timer() |
| 28:     Wait until(receipt of reply from client k OR timeout) |
| 29:     if receipt of reply from client k then |
| 30:       SendStatusk |
| 31:       if then // If residual battery power is 0 |
| 32: |
| 33:       else |
| 34:         // Priority value of client |
| 35:       end if |
| 36:     else // No reply from client k until timeout |
| 37: |
| 38:     end if |
| 39:     Reset timer() |
| 40:   end for |
| 41:   Sort in descending order |
| 42:   for each element , do // For selected clients |
| 43:     // is i-th element of sorted array |
| 44:   end for |
| 45:   for each client in parallel do |
| 46:     SendDatak |
| 47:   end for |
| 48:   max 1.6 |
| 49: end for |

| Algorithm 2 Procedure at each client k. |

| 1: SendStatusk : |
| 2:   (Data amount of client k at t) |
| 3:   (Communication quality of client k at t) |
| 4:   (Residual battery power of client k at t) |
| 5:   return () to federated edge |
| 6: SendDatak : |
| 7:   (Defined amount of data to send) |
| 8:   return to federated edge |

Table 1.

Notations.

4.1. Client Number Control by Lyapunov Optimization

The queue at the edge can be formulated as follows:

where , , and indicate the queue-backlog size at the edge at time t, the amount of data arriving from the associated clients at time t, and the amount of data departing to the cloud server at time t, respectively. In this case, the departure at time t (that is, ) is not controllable because the edge can only transmit data from the queue to the extent that the wireless channel (between the edge and the cloud server) permits it. The quantity of data arriving from the clients to the edge is controllable, being dependent on the number of selected clients.
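In the usual Lyapunov-optimization form, this queue evolves as Q(t+1) = max{Q(t) − b(t), 0} + a(t), where Q, a, and b are our own labels for the backlog, arrival, and departure (the paper's symbols may differ). A minimal sketch, assuming this standard form and that data arriving in slot t can only be served from slot t+1 onward:

```python
def queue_update(backlog: float, arrival: float, departure: float) -> float:
    """One slot of Q(t+1) = max(Q(t) - b(t), 0) + a(t) (assumed standard form)."""
    return max(backlog - departure, 0.0) + arrival


# Hypothetical trace in MB: arrivals depend on how many clients were selected,
# departures on what the edge-to-cloud channel allowed in that slot.
arrivals = [300.0, 300.0, 0.0, 150.0]
departures = [100.0, 200.0, 600.0, 50.0]
q = 0.0
for a, b in zip(arrivals, departures):
    q = queue_update(q, a, b)
    print(q)  # 300.0, 400.0, 0.0, 150.0 on successive lines
```

Only the arrival term is under the edge's control, which is why the client number is the decision variable.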

This section formulates the time-averaged FL accuracy maximization, which is subject to queue stability constraints; here our control action is the number of clients. The mathematical program for this time-averaged optimization can be formulated as follows:

where (3) represents the queue stability constraint. In (2), our utility function when client number is given is denoted as . The utility function can be expressed as follows, where stands for the expected accuracy of the learning model when client number is given.
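For reference, a time-averaged utility maximization of this kind is commonly written as below in Neely's framework. The symbols α(t) (client number at t), 𝒜 (set of client numbers), U (utility), and Q(t) (queue backlog) are our own labels and may differ from the paper's notation; this is a sketch of the standard form, not a reconstruction of (2) and (3).

```latex
\begin{aligned}
\max_{\{\alpha(t)\in\mathcal{A}\}}\quad & \lim_{T\to\infty}\frac{1}{T}\sum_{t=0}^{T-1}\mathbb{E}\bigl[U(\alpha(t))\bigr]\\
\text{s.t.}\quad & \limsup_{T\to\infty}\frac{1}{T}\sum_{t=0}^{T-1}\mathbb{E}\bigl[Q(t)\bigr]<\infty,\\
\text{with}\quad & U(\alpha)=\mathbb{E}\bigl[\text{accuracy}\mid\alpha\ \text{clients selected}\bigr].
\end{aligned}
```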

Following Neely [], the general form of the optimization can be expressed as follows:

where , , , V, , , , and stand for time-average optimal solution, possible solution, set of possible solutions, accuracy-delay tradeoff factor, utility function when solution x is given, queue backlog, arrival process when solution x is given, and departure process when x is given, respectively.

By applying the drift-plus-penalty (DPP) method of the Lyapunov optimization framework, this time-averaged optimization can be reformulated under the constant-gap approximation as follows []:

where , and represent the time-averaged optimal client number, the possible number of clients, the set of client numbers, the trade-off factor between accuracy and queue length, the utility function when client number is given, the queue length, the arrival process when client number is given, and the departure process, respectively.
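Using our own labels (α for the client number, 𝒜 for the candidate set, U for the utility, Q(t) for the backlog, a(α) for the arrival when α clients are selected, and b(t) for the departure), the drift-plus-penalty rule typically takes the following per-slot form. This is a sketch of the standard DPP structure, not necessarily the paper's exact expression.

```latex
\alpha^{*}(t)=\arg\max_{\alpha\in\mathcal{A}}
\Bigl[\,V\,U(\alpha)-Q(t)\bigl(a(\alpha)-b(t)\bigr)\Bigr]
```

Because b(t) does not depend on α, it does not change which α attains the maximum; a large V favors accuracy, while a large backlog Q(t) penalizes client numbers that bring in more data.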

In the aforementioned system model, the federated edge should receive data from data-acquiring clients after considering its data queue. Although the accuracy of the learning model increases when more data are used, queue stability should take higher priority. Under this condition, we can design a dynamic algorithm that selects, in each unit time, the number of clients from which to receive data so as to maximize the time-averaged accuracy subject to queue stability, as formulated in (5).

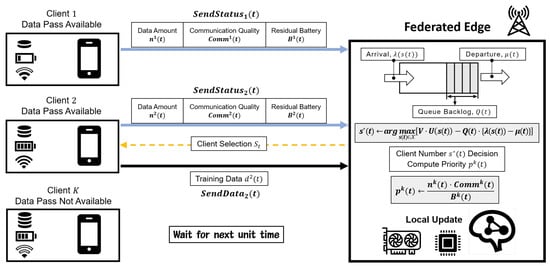

In every unit time, the clients which are available to send datasets notify the federated edge of their quantity of data, communication quality, and residual battery power. The condition of each client is determined internally according to its task priority. Then, the federated edge selects the number of clients to receive the data from, using a Lyapunov control-based time-averaged optimization function.

If the data queue of the federated edge contains a substantial amount of data, the optimization function selects fewer clients to transmit. Conversely, if the data queue is almost empty, the optimization function selects more clients, thereby improving the accuracy of the model with larger datasets. The decision of the optimal client number is described in Lines 13–22 of Algorithm 1. After observing the queue backlog in Line 14, the federated edge compares the value of the optimization function for all (Lines 15–22) and decides the optimal client number, which maximizes the time-averaged accuracy while stabilizing the queue.
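Lines 13–22 of Algorithm 1 can be sketched as an exhaustive search over the candidate client numbers. This assumes the drift-plus-penalty score V·U(α) − Q(t)·a(α) (the departure term is identical for every candidate and is omitted); the log-shaped utility, V = 1000, and the 30 MB-per-client arrival in the usage lines are illustrative assumptions, not the paper's learning curve.

```python
import math
from typing import Callable, Iterable, Optional


def decide_client_number(
    backlog: float,
    candidates: Iterable[int],
    utility: Callable[[int], float],
    arrival: Callable[[int], float],
    V: float,
) -> int:
    """Return the candidate alpha that maximizes V*U(alpha) - Q(t)*a(alpha).

    The Q(t)*b(t) term of the score is the same for every alpha, so it is
    omitted: it cannot change which alpha wins.
    """
    best_alpha: Optional[int] = None
    best_score = float("-inf")
    for alpha in candidates:
        score = V * utility(alpha) - backlog * arrival(alpha)
        if score > best_score:  # keep the first maximizer on ties
            best_alpha, best_score = alpha, score
    if best_alpha is None:
        raise ValueError("the candidate set is empty")
    return best_alpha


def utility(n: int) -> float:
    """Illustrative concave accuracy proxy (assumption)."""
    return math.log1p(n)


def arrival(n: int) -> float:
    """Illustrative arrival: 10 samples x 3 MB per selected client (assumption)."""
    return 30.0 * n


for q in (0.0, 5.0, 1e6):
    print(q, decide_client_number(q, range(51), utility, arrival, V=1000.0))
# 0.0 50
# 5.0 6
# 1000000.0 0
```

The loop visits each candidate once, so the cost per unit time grows linearly with the size of the candidate set.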

4.2. Client Selection

After the optimization function has decided the number of clients to receive data from, the federated edge selects the clients that will upload their data to the edge. Traditional FL selects clients at random for the update; however, this approach can lead to inefficient use of the network and of the heterogeneous resources of mobile clients. Therefore, our algorithm selects clients on the basis of their data amount, communication quality, and residual battery power, so that it operates efficiently by considering the heterogeneous resources of the clients. Because the available clients report their resource information, the federated edge can select the clients according to their resource statuses without additional information exchange. The client selection flow is illustrated in Figure 6.

Figure 6.

The federated edge selects the number of clients to receive the data from and selects these clients from amongst those available, according to each client’s resource status.

The weight of client k, which is the criterion for selecting the clients, can be formulated based on its resource status as follows:

where , , and represent the data amount, communication quality, and residual battery power of client k, respectively. The weight of a client increases when it has more data, better communication quality, or lower battery power. The weight is inversely proportional to the residual battery power because it is more effective to collect data from a client before its battery runs out, which increases the amount of training data and thus the accuracy of the learning model. The data amount is defined as the size of the data in bytes, the communication quality is defined by the channel state information, and the residual battery power can be expressed in watt-hours (Wh).
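The text specifies only the direction of each factor. One form consistent with it, and with the zero-battery branch at Lines 31–32 of Algorithm 1, is the product of data amount and communication quality divided by residual battery power; this exact form and the function name are our assumptions.

```python
def client_weight(data_bytes: float, comm_quality: float, battery_wh: float) -> float:
    """Hypothetical priority w_k = D_k * C_k / B_k.

    Grows with the data amount and the channel quality and shrinks as the
    residual battery grows, so clients about to run out are served first.
    A client reporting an empty battery gets weight 0 (Algorithm 1, Lines 31-32).
    """
    if battery_wh <= 0:
        return 0.0
    return data_bytes * comm_quality / battery_wh


print(client_weight(100.0, 0.5, 10.0))  # 5.0
print(client_weight(100.0, 0.5, 5.0))   # 10.0: same data and channel, less battery
```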

The procedure for selecting clients is described in Lines 23–49 of Algorithm 1. In Line 24, the federated edge initializes the set of selected clients at t to the empty set and the array of priorities of clients at t with each element . Then, the federated edge calls the SendStatusk function of all clients in Line 26 and starts a timer for each client k to detect a timeout in Line 27. If the federated edge receives the reply from client k before the timeout, it obtains , , which are the data amount, communication quality, and residual battery power of client k, respectively, returned by SendStatusk (Line 30). Even if the federated edge receives the resource status of client k, the returned residual battery power may be 0, in which case the weight of client k is set to 0 (Lines 31 and 32). Otherwise, the weight of client k is computed as (Line 34). If the federated edge does not receive a reply from client k before the timeout, the weight of client k is 0 (Line 37).

After the weight of each client is decided, the federated edge sorts the array of priorities in descending order (Line 41). Then, the set of selected clients is composed of the clients with the largest weights in array (Lines 42–44). Once the clients that will send data to the federated edge are selected, the federated edge calls the SendDatak function of each client k in set , and the returned data are (Lines 45–47). Then, the federated edge updates the queue backlog with the amount of data departing at t and the amount of data arriving at t, which is of (4.4) (Line 48).
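Lines 24–44 of Algorithm 1 can be sketched as follows, assuming that a missing reply is represented by `None` and that the weight is data amount times communication quality over residual battery power (our own guess, consistent with Section 4.2). Unlike Algorithm 1 as written, this sketch drops zero-weight clients, since a client with no reply or an empty battery cannot transmit; that filter is our design choice.

```python
from typing import Dict, List, Optional, Tuple

# (data amount, communication quality, residual battery) as reported by a client
Status = Tuple[float, float, float]


def select_clients(statuses: Dict[int, Optional[Status]], num_clients: int) -> List[int]:
    """Return up to num_clients client ids with the largest (positive) weights.

    statuses maps client id -> reported status, or None if the client did not
    reply before its timeout (weight 0, as in Algorithm 1, Line 37).
    """
    weights: Dict[int, float] = {}
    for k, status in statuses.items():
        if status is None:
            weights[k] = 0.0
            continue
        data, quality, battery = status
        weights[k] = 0.0 if battery <= 0 else data * quality / battery
    # Sort by weight, largest first, then keep only clients able to transmit.
    ranked = sorted(weights, key=lambda k: weights[k], reverse=True)
    return [k for k in ranked if weights[k] > 0][:num_clients]


statuses = {
    1: (100.0, 0.9, 50.0),  # weight 1.8
    2: (100.0, 0.9, 10.0),  # weight 9.0
    3: None,                # timed out
    4: (200.0, 0.5, 0.0),   # empty battery
    5: (50.0, 0.2, 5.0),    # weight 2.0
}
print(select_clients(statuses, 2))  # [2, 5]
```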

Client k executes the two functions SendStatusk and SendDatak when they are called by the federated edge, as shown in Algorithm 2. Function SendStatusk returns , , and , which are the data amount, communication quality, and residual battery power of client k at t, respectively (Lines 1–5). When SendDatak is called by the federated edge, it returns the defined amount of data to send (Lines 6–8).
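Algorithm 2 can be sketched as a small client object. The class name, field names, and the in-memory list of samples are hypothetical; the data amount is reported in bytes as in Section 4.2, and the default batch of 10 samples mirrors the simulation setting in Section 6.1.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Client:
    comm_quality: float                                  # channel-quality value reported to the edge
    battery_wh: float                                    # residual battery power (Wh)
    samples: List[bytes] = field(default_factory=list)  # collected, not yet sent
    batch_size: int = 10                                 # "defined amount of data to send"

    def send_status(self) -> Tuple[int, float, float]:
        """SendStatus_k: (data amount in bytes, communication quality, battery)."""
        data_bytes = sum(len(s) for s in self.samples)
        return data_bytes, self.comm_quality, self.battery_wh

    def send_data(self) -> List[bytes]:
        """SendData_k: hand over up to batch_size samples and drop them locally."""
        batch = self.samples[: self.batch_size]
        self.samples = self.samples[self.batch_size :]
        return batch


client = Client(comm_quality=0.8, battery_wh=3.0, samples=[b"ab"] * 25)
print(client.send_status())     # (50, 0.8, 3.0)
print(len(client.send_data()))  # 10
print(client.send_status())     # (30, 0.8, 3.0)
```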

After the clients have been prioritized according to their weights , the top clients send their data to the federated edge. The federated edge continues the FL process with the data in its data queue and the global model downloaded from the central cloud server. The entire process of our proposed federated learning edge system, with adaptive client selection for a stabilized and highly accurate edge platform, is described in Algorithms 1 and 2.

Our proposed algorithm, which is inspired by Lyapunov optimization, has low time complexity because it (i) computes (5) for each candidate in the given set and then (ii) selects the candidate that attains the optimal value of (5). Thus, its run-time computational complexity is .

5. Security and Privacy Discussions in FL

In FL, security and privacy are de facto problems that must be addressed. We use FL precisely because we do not want to gather all data in a single centralized storage; that is, we want to maintain a certain level of security and privacy. Thus, the related discussions are essential.

In our proposed system, each edge selects a certain number of clients for local deep neural network training. To do so, our proposed algorithm selects clients according to a predetermined priority. If we redefine this priority in terms of user privacy or security levels, we can rebuild the FL system with security and privacy in mind. Therefore, there is room to incorporate security levels into client selection.

Preserving data privacy should be achieved within the scope of a user or an organization, not of an individual data-acquiring device. Therefore, a user with multiple data-acquiring devices, or an organization with multiple data centers, could define privacy and security levels at the level of the user or organization rather than the device. For example, a user who owns several IoT devices, such as a smartphone, health care devices, and a smartwatch, could conduct local updates using a smart speaker, which receives a steady supply of power. The smart speaker receives the data from each IoT device, conducts the local update as the federated edge, and then uploads the update to the cloud. Although the data of each acquiring device are transmitted to the edge, the user's data privacy is preserved because the data remain within the user's own devices.

Essentially, if we conduct local model training and then aggregate the local training parameters to build the desired global model, privacy is already preserved to a degree, because the data themselves are not revealed. Beyond this, if we define a privacy-aware client selection, we can address further privacy and security concerns.

6. Performance Evaluation

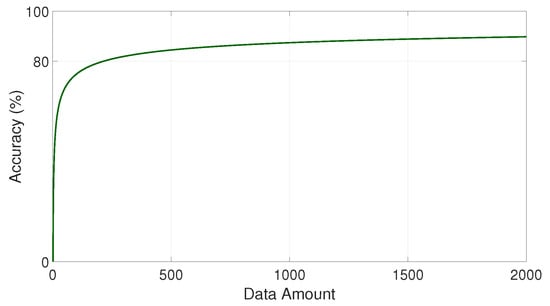

In this section, we evaluate our proposed adaptive client-selection method for stabilized and highly accurate federated learning edge platforms. We simulate the queue stability of the federated edge, with the expected accuracy of the model based on a learning curve [].

6.1. Experiment Setting

Our simulation is designed and implemented based on the environment described in Section 3. To evaluate whether our proposed algorithm stabilizes the data queue and achieves a high utility, we simulate the data queue of one federated edge and 50 associated clients that transmit data to it.

The federated edge trains the global model received from the central cloud, and the training data are fetched from the data queue of the federated edge. The collection of data, which represents a departure from the data queue, is determined by the FL protocol between the federated edge and the central cloud. Although several parameters that control the local update procedure are set by the central cloud, the communication between the federated edge and the central cloud is random. Therefore, we assume that departures from the data queue of the federated edge are independent and identically distributed random variables. An arrival to the data queue is determined by the quantities of data of the selected clients.

We assume one federated edge and a total of K = 50 clients in the simulation. Initially, the battery level of each client is set at random, and the residual battery of every client decreases in each unit time. Wireless communications between the federated edge and the clients are modeled based on LTE networks. The federated edge is located at the center of a cell with a radius of 100 m, and the 50 clients are distributed in the cell. The carrier frequency f, transmission power , and bandwidth are 2.5 GHz, 20 dBm, and 20 MHz, respectively. The communication quality between the federated edge and client k at time t is calculated as follows:

where and represent the path loss [] and the distance between the federated edge and client k at time t, respectively. The path loss is expressed in dB and follows a power law with exponent N = 3. The distance varies randomly in each unit time between 1 m and 100 m. Because noise and fading affect the received power, a random value between 0 and 1 is multiplied in (8). A value closer to 0 indicates poorer communication quality, and a value closer to 1 indicates better quality.
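Equation (8) itself is not reproduced here. As a hedged sketch, assuming a log-distance model with a free-space reference loss at 1 m, exponent N = 3, the 2.5 GHz carrier and 20 dBm transmit power given above, and a uniform random factor in [0, 1] for noise and fading, the received power could be computed as follows; the reference distance, function names, and the use of received power as the quality measure are our assumptions.

```python
import math
import random

SPEED_OF_LIGHT = 3.0e8  # m/s


def path_loss_db(distance_m: float, freq_hz: float = 2.5e9,
                 exponent: float = 3.0, ref_m: float = 1.0) -> float:
    """Log-distance path loss in dB: free-space loss at ref_m plus 10*N*log10(d/ref_m)."""
    fspl_ref = 20.0 * math.log10(4.0 * math.pi * ref_m * freq_hz / SPEED_OF_LIGHT)
    return fspl_ref + 10.0 * exponent * math.log10(distance_m / ref_m)


def received_power_mw(distance_m: float, tx_dbm: float = 20.0, factor: float = 1.0) -> float:
    """Received power in mW, scaled by a noise/fading factor in [0, 1]."""
    rx_dbm = tx_dbm - path_loss_db(distance_m)
    return factor * 10.0 ** (rx_dbm / 10.0)


rng = random.Random(0)
d = rng.uniform(1.0, 100.0)  # distance redrawn every unit time
print(round(path_loss_db(1.0), 1))  # 40.4
print(received_power_mw(d, factor=rng.random()))
```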

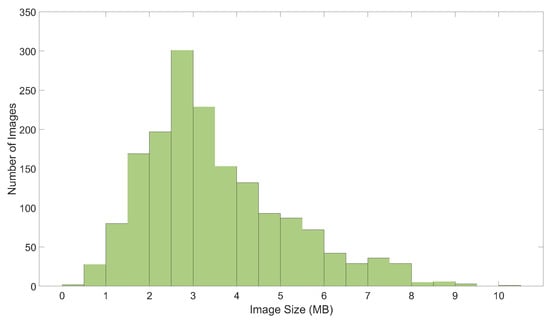

We assume 100,000 training samples, partitioned among the 50 clients so that each receives 2000 samples. The selected clients transmit 10 samples to the federated edge per unit time. Each sample is an image of 3 MB. The data size is based on the image size distribution on the author's mobile device, a Galaxy S8 equipped with a 12-megapixel camera and a 3000 mAh battery. Figure 7 shows the size distribution of 1700 images; their average size is 3 MB, which is used as the data size in the simulation. The initial values of V and are and . In practice, can be determined depending on the system, after which V can be selected appropriately. The utility function is assumed to be the expected accuracy when the number of clients is decided as . This expected accuracy is modeled by the learning curve [], as shown in Figure 8.

Figure 7.

Image size distribution on the author's mobile device.

Figure 8.

Expected accuracy under increasing amount of dataset.
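The simulation parameters above imply the following traffic volumes. This is simple arithmetic, assuming that the 10 samples per unit time are sent by each selected client (rather than by all selected clients together); the constant names are ours.

```python
TOTAL_SAMPLES = 100_000
NUM_CLIENTS = 50
SAMPLES_PER_CLIENT = TOTAL_SAMPLES // NUM_CLIENTS  # 2000 samples per client
SAMPLES_PER_SLOT = 10                              # sent per selected client per unit time
SAMPLE_MB = 3                                      # average image size

arrival_per_client_mb = SAMPLES_PER_SLOT * SAMPLE_MB                # 30 MB per selection
slots_until_client_empty = SAMPLES_PER_CLIENT // SAMPLES_PER_SLOT  # 200 selections
all_clients_arrival_mb = NUM_CLIENTS * arrival_per_client_mb        # 1500 MB per unit time

print(arrival_per_client_mb, slots_until_client_empty, all_clients_arrival_mb)  # 30 200 1500
```

Under these assumptions, selecting every client adds 1500 MB to the edge queue per unit time, so the backlog stays bounded only if departures keep pace.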

To evaluate our proposed algorithm, we compared it against three client-selection methods:

- Max Selection: The federated edge receives data from every client at every unit time.

- Static Selection: The federated edge selects the same number of clients in every unit time. In this evaluation, five clients transmit data in each unit time.

- Random Selection: The number of selected clients is decided in the same way as in our proposed algorithm; however, the clients are chosen at random, without considering their resources.
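The difference between the proposed and random selection can be sketched as follows. The paper ranks clients by a priority derived from their remaining data, communication quality, and residual battery; the exact priority formula is not given in this section, so the product used in `priority` below, the `MIN_BATTERY` cut-off, and the `Client` fields are hypothetical. Both selectors receive the same number of clients n, mirroring the Random Selection baseline.

```python
import random
from dataclasses import dataclass


@dataclass
class Client:
    cid: int
    battery: float    # residual battery, normalized to [0, 1]
    data_left: int    # samples not yet transmitted
    quality: float    # communication quality in [0, 1]


SAMPLES_PER_SLOT = 10   # from the simulation setup
TOTAL_DATA = 2000       # samples initially held by each client
MIN_BATTERY = 0.05      # hypothetical cut-off below which a client cannot transmit


def is_available(c: Client) -> bool:
    """A client can transmit if it has battery left and at least one batch of data."""
    return c.battery > MIN_BATTERY and c.data_left >= SAMPLES_PER_SLOT


def priority(c: Client) -> float:
    """Hypothetical priority: product of normalized battery, remaining data and link quality."""
    return c.battery * (c.data_left / TOTAL_DATA) * c.quality


def select_resource_aware(clients: list, n: int) -> list:
    """Pick the n available clients with the highest priority."""
    available = [c for c in clients if is_available(c)]
    return sorted(available, key=priority, reverse=True)[:n]


def select_random(clients: list, n: int, rng: random.Random) -> list:
    """Baseline: pick n available clients uniformly at random, ignoring their resource levels."""
    available = [c for c in clients if is_available(c)]
    return rng.sample(available, min(n, len(available)))


if __name__ == "__main__":
    clients = [Client(0, 0.9, 2000, 0.8), Client(1, 0.2, 2000, 0.9),
               Client(2, 0.01, 2000, 1.0), Client(3, 0.9, 5, 1.0)]
    print([c.cid for c in select_resource_aware(clients, 1)])  # [0]
```

Favoring clients with ample battery early keeps low-battery clients in reserve, which is one plausible reading of why the resource-aware variant keeps more clients available in later time slots.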

As a benchmark scheme, we consider an FL procedure that selects clients at random with a fixed client fraction []. Because the first two methods select a constant number of clients in each unit time, they serve as reference points for queue stability, against which we compare the longer queue backlog that our proposed algorithm sustains in exchange for a higher value of its utility function. The last method isolates the effect of the resource-aware client selection in our proposed algorithm: it decides the number of clients in the same manner as our proposed algorithm, but then selects clients at random without considering their available resources. Apart from max selection, which uses every client, the baselines select clients at random once the number of clients has been fixed. In contrast, our proposed algorithm decides the number of selected clients in each unit time according to the queue status, and selects the clients according to their resource status.
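The contrast among these policies can be illustrated with a small queue simulation. The sketch below assumes the standard drift-plus-penalty form from Neely's stochastic network optimization framework, in which the edge picks, in each unit time, the number of clients n that maximizes V * U(n) - Q(t) * a(n), with a(n) = 30n MB (10 samples of 3 MB per client). The concave utility `log1p`, the service rate of 300 MB per unit time, and V = 10^5 are hypothetical stand-ins; this is not claimed to be the paper's exact objective.

```python
import math

MB_PER_CLIENT = 10 * 3.0  # 10 samples of 3 MB per selected client per unit time
MAX_CLIENTS = 50


def utility(n: int) -> float:
    """Hypothetical concave stand-in for the expected-accuracy utility."""
    return math.log1p(n)


def decide_num_clients(queue_mb: float, v: float) -> int:
    """Drift-plus-penalty rule: choose n maximizing V*U(n) - Q(t)*a(n)."""
    return max(range(MAX_CLIENTS + 1),
               key=lambda n: v * utility(n) - queue_mb * n * MB_PER_CLIENT)


def simulate(policy, slots: int, service_mb: float) -> list:
    """Queue update Q(t+1) = max(Q(t) - b, 0) + a(t), with a(t) = n(t) * 30 MB."""
    q, history = 0.0, []
    for _ in range(slots):
        n = policy(q)
        q = max(q - service_mb, 0.0) + n * MB_PER_CLIENT
        history.append(q)
    return history


if __name__ == "__main__":
    service = 300.0  # hypothetical edge processing rate (MB per unit time)
    runs = {
        "max": simulate(lambda q: MAX_CLIENTS, 200, service),
        "static": simulate(lambda q: 5, 200, service),
        "drift-plus-penalty": simulate(lambda q: decide_num_clients(q, 1e5), 200, service),
    }
    for name, hist in runs.items():
        print(f"{name:>20}: final backlog = {hist[-1]:.0f} MB")
```

With these numbers, max selection adds 1200 MB per unit time more than the edge can process, so its backlog grows without bound; static selection settles at a 150 MB backlog; and the drift-plus-penalty rule settles at about ten clients per unit time, the rate the edge can serve. Raising V lets the backlog grow in exchange for higher utility, which is the trade-off that V controls.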

6.2. Experimental Results

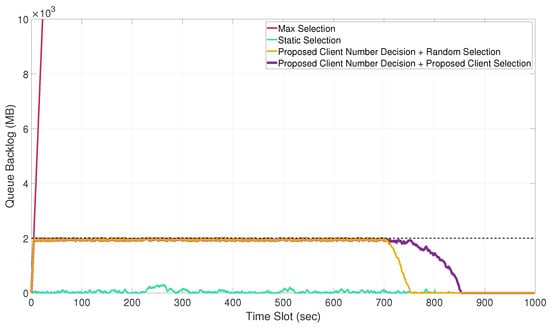

To evaluate our proposed algorithm, we plot the queue backlog against time t in Figure 9. Under max selection, the queue overflows and the federated edge system becomes unstable; under static selection, the queue remains extremely stable. However, because the main objective of our federated learning edge system is to maximize learning accuracy, our proposed algorithm and random selection, which share the same client-number decision, both select the optimal number of clients that keeps the queue stable. After , the queue backlog of random selection decreases slightly, and after , that of our proposed algorithm also decreases. As time passes, battery depletion and the lack of remaining data on the clients reduce the number of available clients below the number required to maximize utilization. Because random selection chooses clients without considering their resources, some clients with sufficient data but insufficient battery, or vice versa, become unavailable in later time slots. In contrast, our proposed algorithm considers the available resources (in particular, the clients' battery levels) and thereby achieves higher utilization by receiving more data from the entire set of clients.

Figure 9.

Queue backlog (y-axis) versus time slot (x-axis). The proposed algorithm and random selection decide the same number of clients; however, the proposed algorithm chooses which clients transmit using resource-aware client selection, whereas random selection chooses them at random.

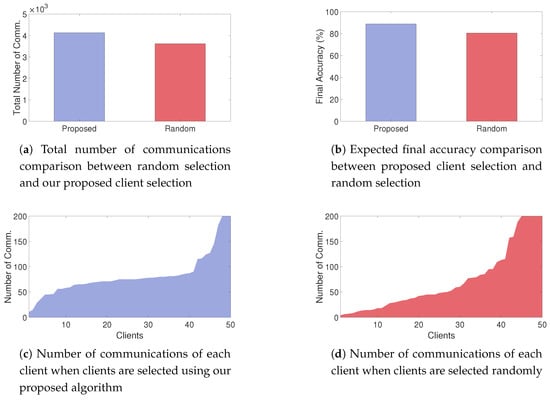

Figure 10a shows the total number of communications across all clients: 4133 for our proposed algorithm and 3621 for random selection. Because the total quantity of uploaded data is proportional to the number of communications, our proposed algorithm collected more data from the clients than random client selection, which leads to a more accurate trained model. Figure 10b compares the expected learning accuracy of the proposed and random client selection, based on the learning curve and the total amount of received data: the proposed algorithm and random selection achieve 88% and 80%, respectively.
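The communication counts translate into data volume with simple arithmetic. Assuming each counted communication is one client sending one 10-sample batch of 3 MB images in a unit time (an interpretation of the setup, not stated explicitly), the figures work out as follows:

```python
SAMPLES_PER_COMMUNICATION = 10  # samples per selected client per unit time (setup)
SAMPLE_SIZE_MB = 3              # average image size (Figure 7)
TOTAL_SAMPLES = 100_000         # training data across all 50 clients


def uploaded(communications: int) -> tuple:
    """Return (samples, megabytes) delivered, assuming one batch per communication."""
    samples = communications * SAMPLES_PER_COMMUNICATION
    return samples, samples * SAMPLE_SIZE_MB


proposed = uploaded(4133)
random_selection = uploaded(3621)
gain = proposed[0] / random_selection[0] - 1

print(proposed, random_selection)  # (41330, 123990) (36210, 108630)
print(f"{gain:.1%} more data; "
      f"{proposed[0] / TOTAL_SAMPLES:.1%} vs {random_selection[0] / TOTAL_SAMPLES:.1%} of the dataset")
```

Under this interpretation, the proposed algorithm delivers about 14% more samples than random selection (41.3% versus 36.2% of the 100,000-sample dataset).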

Figure 10.

(a) Total number of communications between the federated edge and its associated clients; (b) expected final accuracy based on the learning curve and the total amount of received data; (c,d) number of communications for each client when clients are selected using our proposed algorithm and at random, respectively, sorted by number of communications. The variance in the number of communications per client is 41.76 under our proposed algorithm and 62.7 under random client selection.

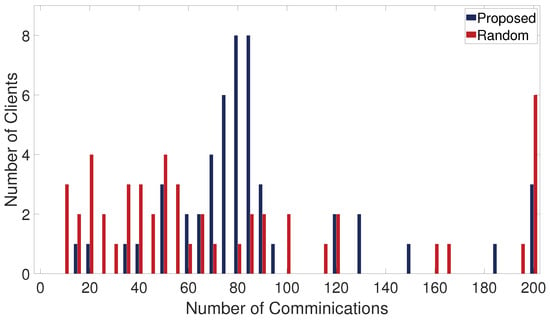

Our proposed algorithm also improves the fairness of client selection. Improved fairness can reduce the likelihood of overfitting, which can arise when clients contribute unbalanced amounts of training data. Figure 10c,d show the number of communications of each client when the clients are selected using our proposed algorithm and at random, respectively. Whereas random selection shows large differences in the number of communications between clients, our proposed client selection yields relatively even communication counts. The variance of the per-client communication counts is 41.76 under the proposed algorithm, compared with 62.7 under random selection. Figure 11 shows a histogram of the number of clients for each number of communications. Under random selection, the per-client communication counts are unbalanced: because they differ widely, some clients send large amounts of data while others send little. The top 10 clients under random selection, which make up 20% of all clients, account for 47% of all communications. These are clients that have more battery and more data but were not selected in the early time slots and were then selected frequently in the later time slots.
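The two fairness measures used here, the variance of per-client communication counts and the share of communications made by the top 20% of clients, can be computed as in the sketch below. Whether the paper reports population or sample variance is not stated, so using `statistics.pvariance` is an assumption, and the example counts are hypothetical rather than the paper's data.

```python
import statistics


def fairness_metrics(counts: list, top_fraction: float = 0.2) -> tuple:
    """Return (population variance of per-client counts, share of the top clients).

    Population variance is an assumption; the paper does not say which variant it uses.
    """
    variance = statistics.pvariance(counts)
    k = max(1, round(len(counts) * top_fraction))          # e.g. 10 of 50 clients
    top_share = sum(sorted(counts, reverse=True)[:k]) / sum(counts)
    return variance, top_share


if __name__ == "__main__":
    # Hypothetical per-client communication counts, NOT the paper's data.
    balanced = [10] * 50
    skewed = [40] * 10 + [5] * 40
    print(fairness_metrics(balanced))
    print(fairness_metrics(skewed))
```

With 50 clients, `top_fraction=0.2` selects the top 10 clients, the same grouping behind the 47% figure quoted for random selection; a perfectly balanced schedule would give a variance of 0 and a top share of 20%.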

Figure 11.

Number of clients for each number of communications.

In summary, our proposed algorithm not only improves the utilization of client resources while stabilizing the queue, but also improves the fairness of client selection.

7. Concluding Remarks and Future Work

In this paper, we propose an adaptive client-selection algorithm that stabilizes the data queue of a federated edge while achieving a highly accurate learning model in an energy-aware federated edge learning environment. Because local computation is performed at the federated edge rather than on power-constrained clients, the clients consume relatively little battery power, as they only need to transmit their data. In our proposed algorithm, the federated edge adaptively decides the number of clients from which to receive data, using a Lyapunov control-based time-averaged optimization. This decision maximizes the time-averaged accuracy subject to queue stability, balancing the trade-off between accuracy and stability. The federated edge then selects the clients that transmit data according to their priorities, which are determined by each client's quantity of data, communication quality, and residual battery power. The evaluation results confirm that our proposed algorithm maximizes the time-averaged utilization subject to stability. Specifically, by taking the clients' battery power into account, our algorithm exploits their heterogeneous resources: it collects more data than random client selection, which leads to a more accurate model, and it reduces the variance in per-client communication counts, indicating fairer client selection.

In future work, the federated edge could consider the scheduling of multiple data queues for multiple learning tasks. Furthermore, to employ the data of UAVs or vehicles, the federated edge could consider communication and computing resource allocation for clients with high mobility.

Author Contributions

J.J., M.C., J.K., and S.C. were the main researchers who initiated and organized the research reported in the paper, and all authors including S.P. and Y.-B.K. were responsible for analyzing the simulation results and writing the paper. S.P. designed a more realistic network scenario and a subsequent suitable algorithm modification. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Chung-Ang University Graduate Research Scholarship in 2018 (for Joohyung Jeon), by the Institute for Information & Communications Technology Promotion (IITP) grant funded by the Korea government (MSIT) under Grant 2017-0-00068, A Development of Driving Decision Engine for Autonomous Driving using Driving Experience Information, and also by Chung-Ang University Research Grant in 2017 for Young-Bin Kwon (1 September 2017–28 February 2018).

Acknowledgments

J.K., Y.-B.K. and S.C. are the corresponding authors of this paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Sze, V.; Chen, Y.H.; Yang, T.J.; Emer, J.S. Efficient Processing of Deep Neural Networks: A Tutorial and Survey. Proc. IEEE 2017, 105, 2295–2329.

- Zhang, W.; Gupta, S.; Lian, X.; Liu, J. Staleness-Aware Async-SGD for Distributed Deep Learning. arXiv 2015, arXiv:1511.05950.

- Han, S.; Mao, H.; Dally, W.J. Deep Compression: Compressing Deep Neural Networks with Pruning, Trained Quantization and Huffman Coding. arXiv 2015, arXiv:1510.00149.

- Gupta, S.; Zhang, W.; Wang, F. Model Accuracy and Runtime Tradeoff in Distributed Deep Learning: A Systematic Study. In Proceedings of the IEEE International Conference on Data Mining (ICDM), Barcelona, Spain, 12–15 December 2016.

- Jeon, J.; Kim, D.; Kim, J. Cyclic Parameter Sharing for Privacy-Preserving Distributed Deep Learning Platforms. In Proceedings of the International Conference on Artificial Intelligence in Information and Communication (ICAIIC), Okinawa, Japan, 11–13 February 2019.

- Gupta, O.; Raskar, R. Distributed Learning of Deep Neural Network over Multiple Agents. J. Netw. Comput. Appl. 2018, 116, 1–8.

- Jeon, J.; Kim, J.; Kim, J.; Kim, K.; Mohaisen, A.; Kim, J. Privacy-Preserving Deep Learning Computation for Geo-Distributed Medical Big-Data Platforms. In Proceedings of the IEEE/IFIP International Conference on Dependable Systems and Networks (DSN) Supplemental Volume, Portland, OR, USA, 24–27 June 2019.

- Jeon, J.; Kim, J. Privacy-Sensitive Parallel Split Learning. In Proceedings of the IEEE International Conference on Information Networking (ICOIN), Barcelona, Spain, 7–10 January 2020.

- Kairouz, P.; McMahan, H.B.; Avent, B.; Bellet, A.; Bennis, M.; Bhagoji, A.N.; Bonawitz, K.; Charles, Z.; Cormode, G.; Cummings, R.; et al. Advances and Open Problems in Federated Learning. arXiv 2019, arXiv:1912.04977.

- McMahan, H.B.; Moore, E.; Ramage, D.; Hampson, S.; Arcas, B.A. Communication-Efficient Learning of Deep Networks from Decentralized Data. In Proceedings of the International Conference on Artificial Intelligence and Statistics (AISTATS), Fort Lauderdale, FL, USA, 20–22 April 2017.

- Konečný, J.; McMahan, H.B.; Ramage, D. Federated Optimization: Distributed Optimization Beyond the Datacenter. In Proceedings of the NIPS Workshop on Optimization for Machine Learning, Montreal, QC, Canada, 11 December 2015.

- Li, T.; Sahu, A.K.; Talwalkar, A.; Smith, V. Federated Learning: Challenges, Methods, and Future Directions. IEEE Signal Process. Mag. 2020, 37, 50–60.

- Wang, S.; Tuor, T.; Salonidis, T.; Leung, K.K.; Makaya, C.; He, T.; Chan, K. When Edge Meets Learning: Adaptive Federated Learning in Resource Constrained Edge Computing Systems. IEEE J. Sel. Areas Commun. 2019, 37, 1205–1221.

- Zhu, G.; Wang, Y.; Huang, K. Broadband Analog Aggregation for Low-Latency Federated Edge Learning. IEEE Trans. Wirel. Commun. 2020, 19, 491–506.

- Amiri, M.M.; Gündüz, D. Machine Learning at the Wireless Edge: Distributed Stochastic Gradient Descent Over-the-Air. In Proceedings of the IEEE International Symposium on Information Theory (ISIT), Paris, France, 7–12 July 2019.

- Bonawitz, K.; Ivanov, V.; Kreuter, B.; Marcedone, A.; McMahan, H.B.; Patel, S.; Ramage, D.; Segal, A.; Seth, K. Practical Secure Aggregation for Privacy-Preserving Machine Learning. In Proceedings of the ACM SIGSAC Conference on Computer and Communications Security (CCS), Dallas, TX, USA, 30 October–3 November 2017.

- Bonawitz, K.; Eichner, H.; Grieskamp, W.; Huba, D.; Ingerman, A.; Ivanov, V.; Kiddon, C.; Konečný, J.; Mazzocchi, S.; McMahan, H.B.; et al. Towards Federated Learning at Scale: System Design. In Proceedings of the Conference on Systems and Machine Learning (SysML), Palo Alto, CA, USA, 31 March–2 April 2019.

- Sattler, F.; Wiedemann, S.; Müller, K.R.; Samek, W. Robust and Communication-Efficient Federated Learning from Non-IID Data. IEEE Trans. Neural Netw. Learn. Syst. 2019.

- Smith, V.; Chiang, C.K.; Sanjabi, M.; Talwalkar, A.S. Federated Multi-Task Learning. In Proceedings of the Conference on Neural Information Processing Systems (NIPS), Long Beach, CA, USA, 4–9 December 2017.

- Zhao, Y.; Li, M.; Lai, L.; Suda, N.; Civin, D.; Chandra, V. Federated Learning with Non-IID Data. arXiv 2018, arXiv:1806.00582.

- Nishio, T.; Yonetani, R. Client Selection for Federated Learning with Heterogeneous Resources in Mobile Edge. In Proceedings of the IEEE International Conference on Communications (ICC), Shanghai, China, 20–24 May 2019.

- Wadu, M.M.; Samarakoon, S.; Bennis, M. Federated Learning under Channel Uncertainty: Joint Client Scheduling and Resource Allocation. arXiv 2020, arXiv:2002.00802.

- Tran, N.H.; Bao, W.; Zomaya, A.; Nguyen, M.N.H.; Hong, C.S. Federated Learning over Wireless Networks: Optimization Model Design and Analysis. In Proceedings of the IEEE Conference on Computer Communications (INFOCOM), Paris, France, 29 April–2 May 2019.

- Jeong, E.; Oh, S.; Kim, H.; Park, J.; Bennis, M.; Kim, S. Communication-Efficient On-Device Machine Learning: Federated Distillation and Augmentation under Non-IID Private Data. arXiv 2018, arXiv:1811.11479.

- Samarakoon, S.; Bennis, M.; Saad, W.; Debbah, M. Distributed Federated Learning for Ultra-Reliable Low-Latency Vehicular Communications. IEEE Trans. Commun. 2019, 68, 1146–1159.

- Samarakoon, S.; Bennis, M.; Saad, W.; Debbah, M. Federated Learning for Ultra-Reliable Low-Latency V2V Communications. In Proceedings of the IEEE Global Communications Conference (GLOBECOM), Abu Dhabi, UAE, 9–13 December 2018.

- Konečný, J.; McMahan, H.B.; Yu, F.X.; Richtárik, P.; Suresh, A.T.; Bacon, D. Federated Learning: Strategies for Improving Communication Efficiency. arXiv 2016, arXiv:1610.05492.

- Lin, Y.; Han, S.; Mao, H.; Wang, Y.; Dally, W.J. Deep Gradient Compression: Reducing the Communication Bandwidth for Distributed Training. In Proceedings of the Conference on Neural Information Processing Systems (NIPS), Long Beach, CA, USA, 4–9 December 2017.

- Yang, H.H.; Arafa, A.; Quek, T.Q.S.; Poor, H.V. Age-Based Scheduling Policy for Federated Learning in Mobile Edge Networks. arXiv 2019, arXiv:1910.14648.

- Abad, M.S.H.; Ozfatura, E.; Gunduz, D.; Ercetin, O. Hierarchical Federated Learning Across Heterogeneous Cellular Networks. In Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020.

- Park, J.; Samarakoon, S.; Bennis, M.; Debbah, M. Wireless Network Intelligence at the Edge. Proc. IEEE 2019, 107, 2204–2239.

- Yang, Q.; Liu, Y.; Cheng, Y.; Kang, Y.; Chen, T.; Yu, H. Horizontal Federated Learning, Federated Learning Synthesis Lectures on Artificial Intelligence and Machine Learning; Morgan & Claypool: San Rafael, CA, USA, 2019; pp. 63–64.

- Wang, X.; Han, Y.; Wang, C.; Zhao, Q.; Chen, X.; Chen, M. In-Edge AI: Intelligentizing Mobile Edge Computing, Caching and Communication by Federated Learning. IEEE Netw. 2019, 33, 156–165.

- Zhou, Z.; Yang, S.; Pu, L.; Yu, S. CEFL: Online Admission Control, Data Scheduling and Accuracy Tuning for Cost-Efficient Federated Learning Across Edge Nodes. IEEE Internet Things J. 2020.

- Neely, M. Stochastic Network Optimization with Application to Communication and Queueing Systems; Morgan & Claypool: San Rafael, CA, USA, 2010.

- Kim, J.; Caire, G.; Molisch, A.F. Quality-Aware Streaming and Scheduling for Device-to-Device Video Delivery. IEEE/ACM Trans. Netw. 2016, 24, 2319–2331.

- Figueroa, R.L.; Zeng-Treitler, Q.; Kandula, S.; Ngo, L.H. Predicting Sample Size Required for Classification Performance. BMC Med. Inform. Decis. Mak. 2012, 12, 1–10.

- Liu, G.Y.; Chang, T.Y.; Chiang, Y.C.; Lin, P.C.; Mar, J. Path Loss Measurements of Indoor LTE System for the Internet of Things. Appl. Sci. 2017, 7, 537.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).