An Emotion-Aware Learning Analytics System Based on Semantic Task Automation

Abstract

1. Introduction

2. Background

2.1. Emotion Theories Applied to Education

2.2. Emotion Recognition Tools

2.3. Emotion Recognition in E-Learning

2.4. Learning Analytics

2.5. Semantic Task Automation

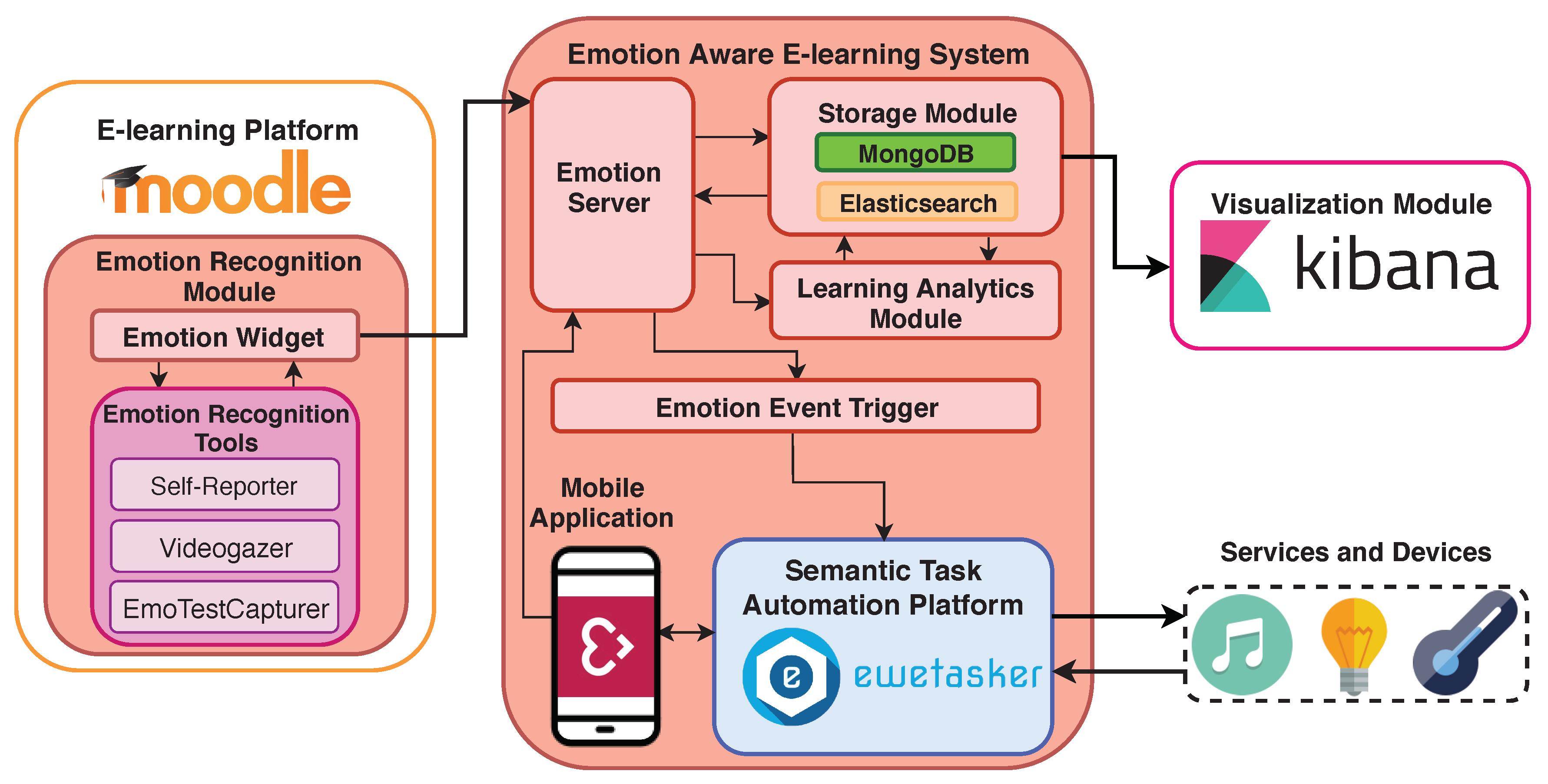

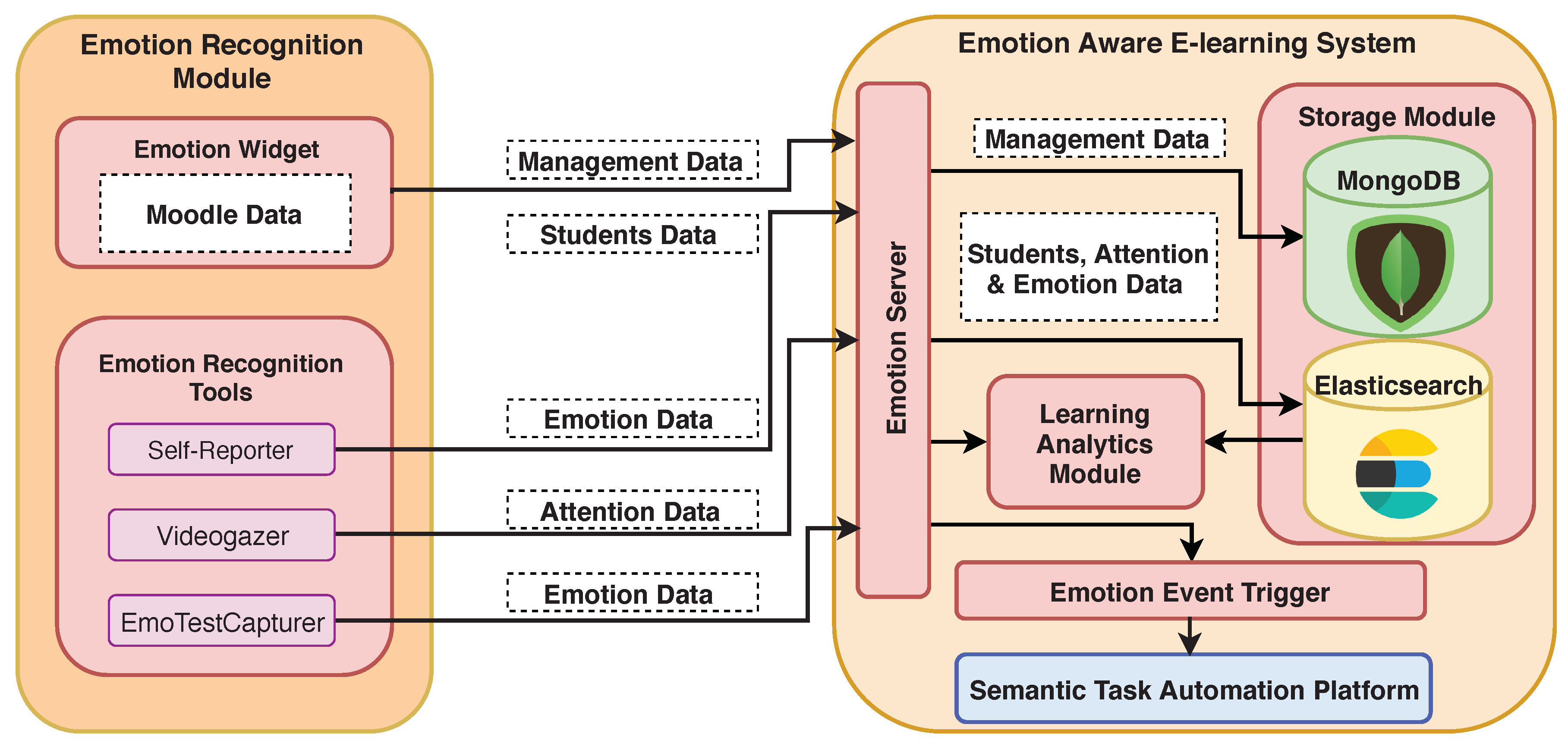

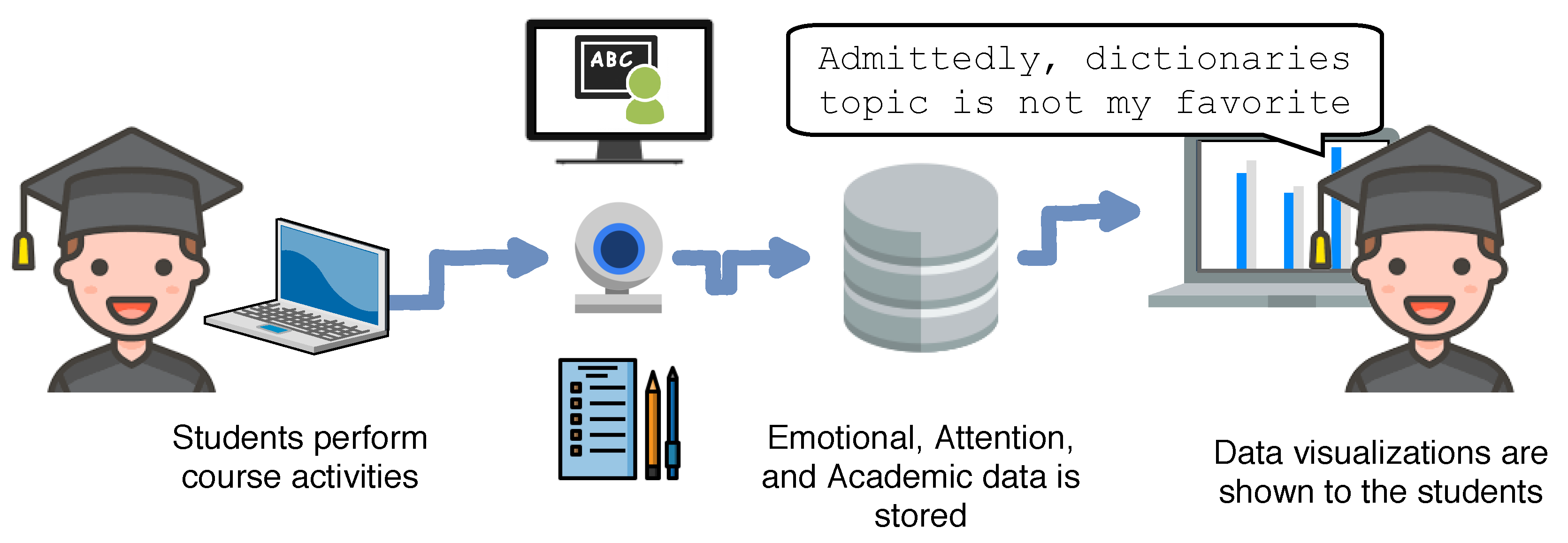

3. Emotion-Aware E-Learning Platform Architecture

3.1. Emotion Recognition Component

3.2. Emotion-Aware E-Learning Component

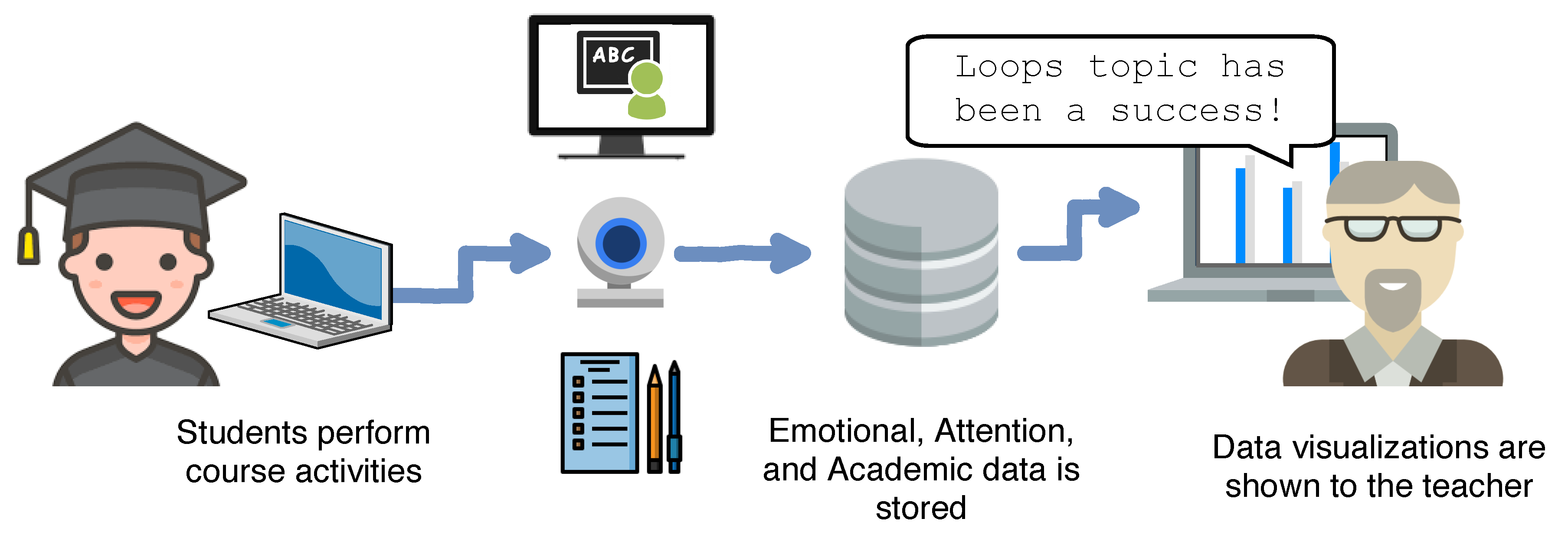

4. Case Study

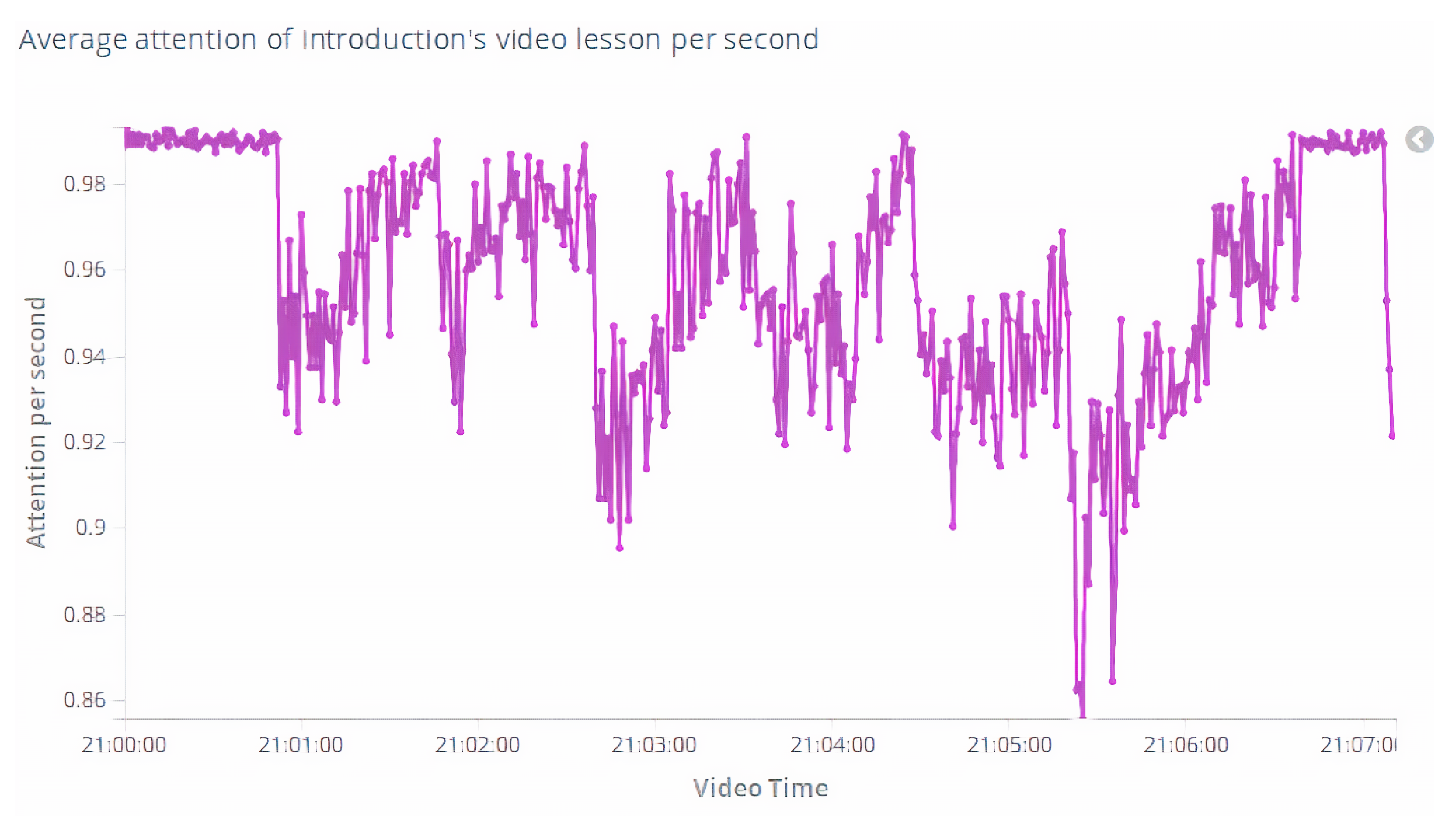

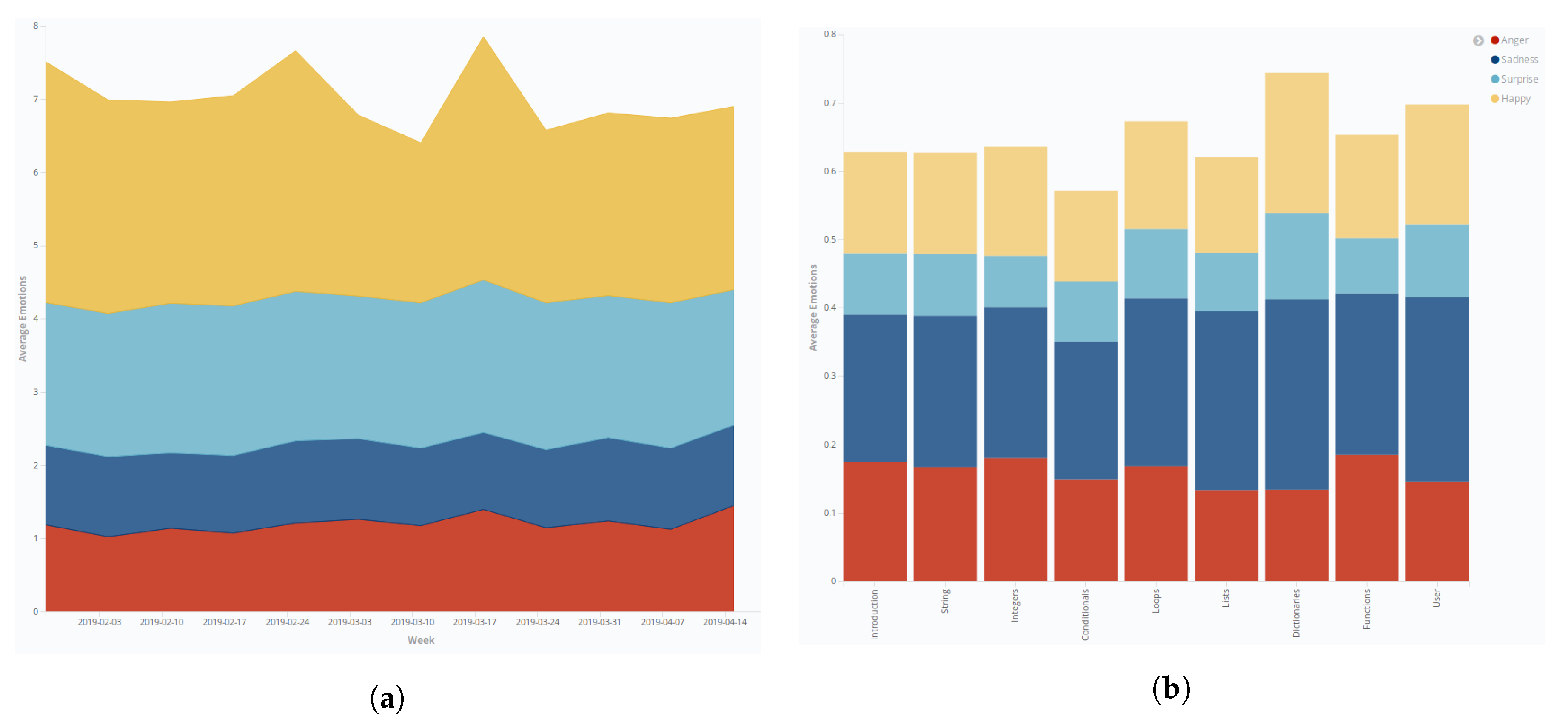

4.1. Academic and Emotional Dashboards for Teachers

4.2. Emotional Dashboard for Students

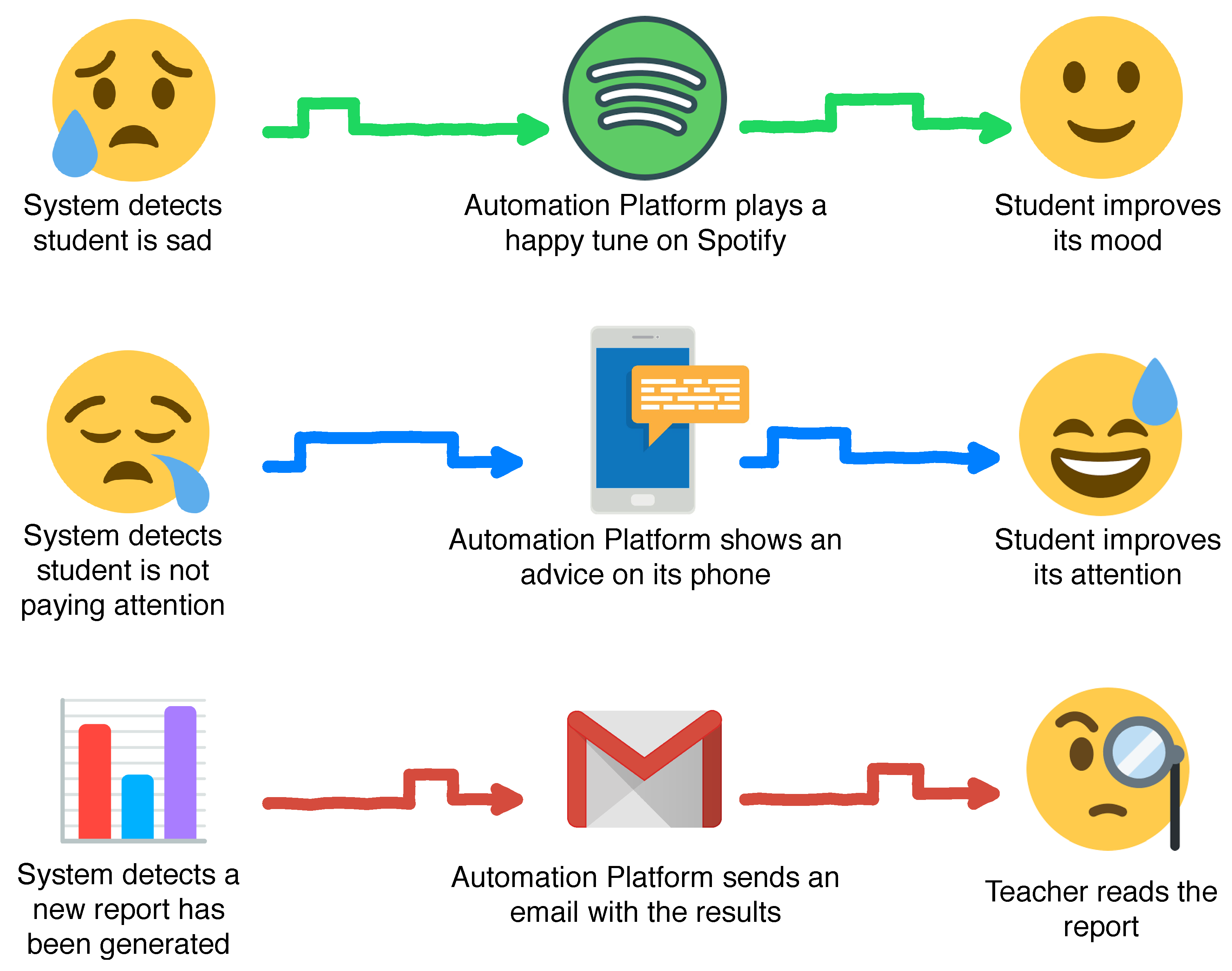

4.3. Smart Automation for Students and Teachers

5. Validation

5.1. Participants

5.2. Materials

- Camera (Gucee HD92), which feeds video to the emotion recognizer submodule.

- Room lighting (a WS2812B LED strip controlled by a WeMos ESP8266 board), used as an actuator on the room's light level and able to display several lighting patterns.

- Google Chromecast [70], which streams content over the local network.

- LG TV 49UJ651V, used to display images.

- Google Home, used by the system to play music.

5.3. Methodology

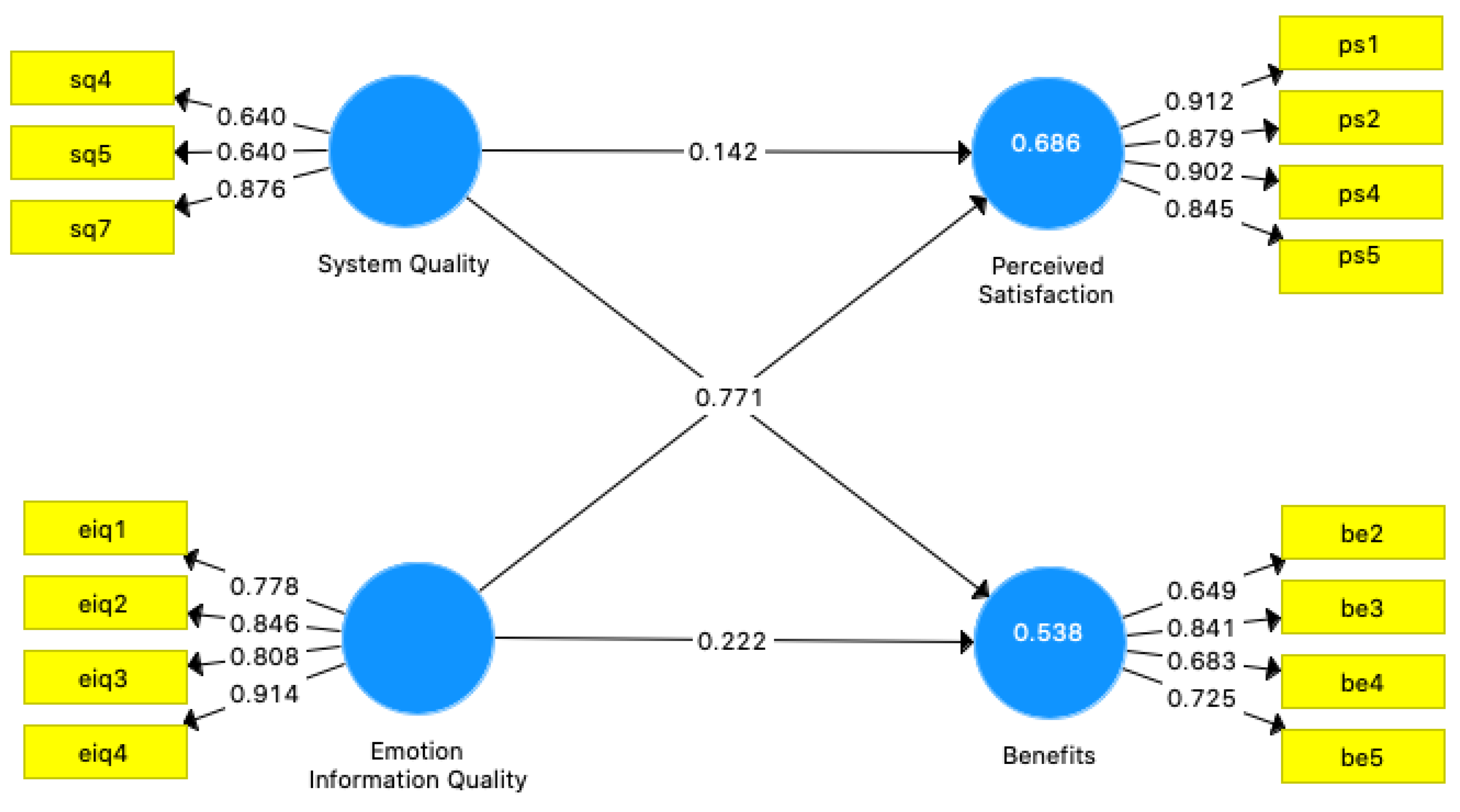

5.4. Results

- Assess the structural model for collinearity issues (VIF < 5).

- Assess the significance and relevance of the structural model relationships (t > 1.65).

- Assess the level of R² (cut-off levels: 0.190 weak, 0.333 moderate, and 0.670 substantial).

- Assess the level of Q² (cut-off point larger than zero).

- Assess the model’s fit.
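
The checklist above reduces to a few threshold tests. The following is a minimal Python sketch under stated assumptions: the function names are hypothetical, and treating a value exactly at an R² cut-off as reaching that level is a choice of this sketch, not something the text specifies. Model fit is left out because no criterion is given for it.

```python
"""Hypothetical helpers for the structural-model checklist above.

Thresholds are the ones quoted in the checklist; the function names and
the use of ">=" at each R² cut-off are assumptions of this sketch.
"""

# (cut-off, label) pairs, checked from the highest cut-off down.
R2_CUTOFFS = ((0.670, "substantial"), (0.333, "moderate"), (0.190, "weak"))


def classify_r2(r2: float) -> str:
    """Label an R² value by the highest cut-off it reaches."""
    for cutoff, label in R2_CUTOFFS:
        if r2 >= cutoff:
            return label
    return "below weak"


def check_path(vif: float, t_stat: float) -> dict:
    """Collinearity (VIF < 5) and significance (t > 1.65) for one path."""
    return {"no_collinearity": vif < 5.0, "significant": t_stat > 1.65}


def has_predictive_relevance(q2: float) -> bool:
    """Q² must be larger than zero."""
    return q2 > 0.0


if __name__ == "__main__":
    # The two R² values annotated in the results table of this section.
    for r2 in (0.538, 0.686):
        print(r2, classify_r2(r2))
```

Run as a script, it labels 0.538 as moderate and 0.686 as substantial, matching the annotations in the results table.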

5.5. Teacher Validation

6. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

References

- Moore, J.L.; Dickson-Deane, C.; Galyen, K. e-Learning, online learning, and distance learning environments: Are they the same? Internet High. Educ. 2011, 14, 129–135.

- Arkorful, V.; Abaidoo, N. The role of e-learning, advantages and disadvantages of its adoption in higher education. Int. J. Instr. Technol. Distance Learn. 2015, 12, 29–42.

- Garrison, D.R. E-Learning in the 21st Century: A Community of Inquiry Framework for Research and Practice; Routledge: London, UK, 2016.

- Welle-Strand, A.; Thune, T. E-learning policies, practices and challenges in two Norwegian organizations. Eval. Program Plan. 2003, 26, 185–192.

- Andersson, A.; Grönlund, Å. A conceptual framework for e-learning in developing countries: A critical review of research challenges. Electron. J. Inf. Syst. Dev. Ctries. 2009, 38, 1–16.

- Cantoni, V.; Cellario, M.; Porta, M. Perspectives and challenges in e-learning: Towards natural interaction paradigms. J. Vis. Lang. Comput. 2004, 15, 333–345.

- Mayer, R.E. Searching for the role of emotions in e-learning. Learn. Instr. 2019, 101213.

- Feidakis, M. A review of emotion-aware systems for e-learning in virtual environments. In Formative Assessment, Learning Data Analytics and Gamification; Elsevier: Amsterdam, The Netherlands, 2016; pp. 217–242.

- Siemens, G. Learning analytics: Envisioning a research discipline and a domain of practice. In Proceedings of the 2nd International Conference on Learning Analytics and Knowledge, Vancouver, BC, Canada, 29 April–2 May 2012; ACM: New York, NY, USA, 2012; pp. 4–8.

- Kelly III, J.E.; Hamm, S. Smart Machines: IBM’s Watson and the Era of Cognitive Computing; Columbia University Press: New York, NY, USA, 2013.

- Witten, I.H.; Frank, E. Data mining: Practical machine learning tools and techniques with Java implementations. ACM Sigmod Rec. 2002, 31, 76–77.

- Bermudez-Edo, M.; Elsaleh, T.; Barnaghi, P.; Taylor, K. IoT-Lite: A lightweight semantic model for the Internet of Things. In Proceedings of the 2016 International IEEE Conferences on Ubiquitous Intelligence & Computing, Advanced and Trusted Computing, Scalable Computing and Communications, Cloud and Big Data Computing, Internet of People, and Smart World Congress (UIC/ATC/ScalCom/CBDCom/IoP/SmartWorld), Toulouse, France, 18–21 July 2016; pp. 90–97.

- Palavalli, A.; Karri, D.; Pasupuleti, S. Semantic internet of things. In Proceedings of the 2016 IEEE Tenth International Conference on Semantic Computing (ICSC), Laguna Hills, CA, USA, 4–6 February 2016; pp. 91–95.

- Kort, B.; Reilly, R.; Picard, R.W. An affective model of interplay between emotions and learning: Reengineering educational pedagogy-building a learning companion. In Proceedings of the IEEE International Conference on Advanced Learning Technologies, Madison, WI, USA, 6–8 August 2001; pp. 43–46.

- Cook, D.J.; Augusto, J.C.; Jakkula, V.R. Ambient intelligence: Technologies, applications, and opportunities. Pervasive Mob. Comput. 2009, 5, 277–298.

- Muñoz, S.; Llamas, A.F.; Coronado, M.; Iglesias, C.A. Smart Office Automation Based on Semantic Event-Driven Rules. In Proceedings of the Intelligent Environments (Workshops), London, UK, 12–16 September 2016; pp. 33–42.

- Fornell, C.; Cha, J. Partial least squares. Adv. Methods Mark. Res. 1994, 6446, 52–78.

- Sylwester, R. How emotions affect learning. Educ. Leadersh. 1994, 52, 60–65.

- Pintrich, P.R. A motivational science perspective on the role of student motivation in learning and teaching contexts. J. Educ. Psychol. 2003, 95, 667–686.

- Värlander, S. The role of students’ emotions in formal feedback situations. Teach. High. Educ. 2008, 13, 145–156.

- Cano, F. Consonance and dissonance in students’ learning experience. Learn. Instr. 2005, 15, 201–223.

- de la Fuente Arias, J.; Justicia, F.J. The DEDEPRO [TM] Model for Regulating Teaching and Learning: Recent Advances. Electron. J. Res. Educ. Psychol. 2007, 5, 535–564.

- Pekrun, R. The impact of emotions on learning and achievement: Towards a theory of cognitive/motivational mediators. Appl. Psychol. 1992, 41, 359–376.

- Ranellucci, J.; Hall, N.C.; Goetz, T. Achievement goals, emotions, learning, and performance: A process model. Motiv. Sci. 2015, 1, 98.

- Seal, C.R.; Naumann, S.E.; Scott, A.N.; Royce-Davis, J. Social emotional development: A new model of student learning in higher education. Res. High. Educ. J. 2011, 10, 114–115.

- Frenzel, A.C.; Becker-Kurz, B.; Pekrun, R.; Goetz, T.; Lüdtke, O. Emotion transmission in the classroom revisited: A reciprocal effects model of teacher and student enjoyment. J. Educ. Psychol. 2018, 110, 628–639.

- Shan, C.; Gong, S.; McOwan, P.W. Beyond Facial Expressions: Learning Human Emotion from Body Gestures. In Proceedings of the BMVC, Warwick, UK, 10–13 September 2007; pp. 1–10.

- Wiedemann, G.; Rayki, O.; Feinstein, E.; Hahlweg, K. The Family Questionnaire: Development and validation of a new self-report scale for assessing expressed emotion. Psychiatry Res. 2002, 109, 265–279.

- Matlovic, T.; Gaspar, P.; Moro, R.; Simko, J.; Bielikova, M. Emotions detection using facial expressions recognition and EEG. In Proceedings of the 2016 11th International Workshop on Semantic and Social Media Adaptation and Personalization (SMAP), Thessaloniki, Greece, 20–21 October 2016; pp. 18–23.

- Araque, O.; Corcuera-Platas, I.; Sánchez-Rada, J.F.; Iglesias, C.A. Enhancing deep learning sentiment analysis with ensemble techniques in social applications. Expert Syst. Appl. 2017, 77, 236–246.

- Pantic, M.; Bartlett, M. Machine Analysis of Facial Expressions. In Face Recognition; I-Tech Education and Publishing: Seattle, WA, USA, 2007; pp. 377–416.

- Sebe, N.; Cohen, I.; Gevers, T.; Huang, T.S. Multimodal approaches for emotion recognition: A survey. Proc. SPIE 2005, 5670, 5670-1–5670-12.

- Mehta, D.; Siddiqui, M.F.H.; Javaid, A.Y. Facial Emotion Recognition: A Survey and Real-World User Experiences in Mixed Reality. Sensors 2018, 18, 416.

- Anagnostopoulos, C.N.; Iliou, T.; Giannoukos, I. Features and Classifiers for Emotion Recognition from Speech: A Survey from 2000 to 2011. Artif. Intell. Rev. 2015, 43, 155–177.

- Ayadi, M.E.; Kamel, M.S.; Karray, F. Survey on speech emotion recognition: Features, classification schemes, and databases. Pattern Recognit. 2011, 44, 572–587.

- Vinola, C.; Vimaladevi, K. A Survey on Human Emotion Recognition Approaches, Databases and Applications. ELCVIA Electron. Lett. Comput. Vis. Image Anal. 2015, 14, 24–44.

- Picard, R.W.; Healey, J. Affective wearables. Pers. Technol. 1997, 1, 231–240.

- Brouwer, A.M.; van Wouwe, N.; Mühl, C.; van Erp, J.; Toet, A. Perceiving blocks of emotional pictures and sounds: Effects on physiological variables. Front. Hum. Neurosci. 2013, 7, 295.

- Derick, L.; Sedrakyan, G.; Munoz-Merino, P.J.; Delgado Kloos, C.; Verbert, K. Evaluating emotion visualizations using AffectVis, an affect-aware dashboard for students. J. Res. Innov. Teach. Learn. 2017, 10, 107–125.

- Ruiz, S.; Charleer, S.; Urretavizcaya, M.; Klerkx, J.; Fernández-Castro, I.; Duval, E. Supporting learning by considering emotions: Tracking and visualization a case study. In Proceedings of the Sixth International Conference on Learning Analytics & Knowledge, Edinburgh, UK, 25–29 April 2016; ACM: New York, NY, USA, 2016; pp. 254–263.

- Ruiz, S.; Urretavizcaya, M.; Fernández-Castro, I.; López-Gil, J.M. Visualizing Students’ Performance in the Classroom: Towards Effective F2F Interaction Modelling. In Design for Teaching and Learning in a Networked World; Springer: Berlin/Heidelberg, Germany, 2015; pp. 630–633.

- GhasemAghaei, R.; Arya, A.; Biddle, R. A dashboard for affective e-learning: Data visualization for monitoring online learner emotions. In EdMedia+ Innovate Learning; Association for the Advancement of Computing in Education (AACE): Waynesville, NC, USA, 2016; pp. 1536–1543.

- Ez-Zaouia, M.; Lavoué, E. EMODA: A tutor oriented multimodal and contextual emotional dashboard. In Proceedings of the Seventh International Learning Analytics & Knowledge Conference, Vancouver, BC, Canada, 13–17 March 2017; ACM: New York, NY, USA, 2017; pp. 429–438.

- Robal, T.; Zhao, Y.; Lofi, C.; Hauff, C. Webcam-based Attention Tracking in Online Learning: A Feasibility Study. In Proceedings of the 23rd International Conference on Intelligent User Interfaces, Tokyo, Japan, 7–11 March 2018; ACM: New York, NY, USA, 2018; pp. 189–197.

- Papoutsaki, A.; Sangkloy, P.; Laskey, J.; Daskalova, N.; Huang, J.; Hays, J. WebGazer: Scalable Webcam Eye Tracking Using User Interactions. In Proceedings of the 25th International Joint Conference on Artificial Intelligence (IJCAI), New York, NY, USA, 9–15 July 2016; pp. 3839–3845.

- Chatti, M.A.; Dyckhoff, A.L.; Schroeder, U.; Thüs, H. A reference model for learning analytics. Int. J. Technol. Enhanc. Learn. 2013, 4, 318–331.

- Arnold, K.E.; Pistilli, M.D. Course signals at Purdue: Using learning analytics to increase student success. In Proceedings of the 2nd International Conference on Learning Analytics and Knowledge, Vancouver, BC, Canada, 29 April–2 May 2012; ACM: New York, NY, USA, 2012; pp. 267–270.

- Pardos, Z.A.; Baker, R.S.; San Pedro, M.O.; Gowda, S.M.; Gowda, S.M. Affective States and State Tests: Investigating How Affect and Engagement during the School Year Predict End-of-Year Learning Outcomes. J. Learn. Anal. 2014, 1, 107–128.

- Fidalgo-Blanco, Á.; Sein-Echaluce, M.L.; García-Peñalvo, F.J.; Conde, M.Á. Using Learning Analytics to improve teamwork assessment. Comput. Hum. Behav. 2015, 47, 149–156.

- Mennicken, S.; Vermeulen, J.; Huang, E.M. From today’s augmented houses to tomorrow’s smart homes: New directions for home automation research. In Proceedings of the 2014 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Seattle, WA, USA, 13–17 September 2014; pp. 105–115.

- Ovadia, S. Automate the internet with “if this then that” (IFTTT). Behav. Soc. Sci. Libr. 2014, 33, 208–211.

- Coronado, M.; Iglesias, C.A.; Serrano, E. Modelling rules for automating the Evented WEb by semantic technologies. Expert Syst. Appl. 2015, 42, 7979–7990.

- Muñoz López, S.; Fernández, A.; Coronado, M.; Iglesias, C.A. Smart Office Automation based on Semantic Event-Driven Rules. In Proceedings of Workshop on Smart Offices and Other Workplaces, Colocated with 12th International Conference on Intelligent Environments (IE'16); Ambient Intelligence and Smart Environments; IOS Press: Amsterdam, The Netherlands, 2016; Volume 21, pp. 33–42.

- Coronado, M.; Iglesias, C.A. Task automation services: Automation for the masses. IEEE Internet Comput. 2016, 20, 52–58.

- Horton, W. E-Learning by Design; John Wiley & Sons: Hoboken, NJ, USA, 2011.

- Bentley, T.; Johnston, L.; von Baggo, K. Putting some emotion into requirements engineering. In Proceedings of the 7th Australian Workshop on Requirements Engineering, Melbourne, Australia, 2–3 December 2002; pp. 227–244.

- Keller, J.; Suzuki, K. Learner motivation and e-learning design: A multinationally validated process. J. Educ. Media 2004, 29, 229–239.

- Øygard, A.M. clmtrackr, JavaScript Library for Precise Tracking of Facial Features via Constrained Local Models. Available online: https://github.com/auduno/clmtrackr (accessed on 14 April 2020).

- Banker, K. MongoDB in Action; Manning Publications Co.: Shelter Island, NY, USA, 2011.

- Dixit, B.; Kuc, R.; Rogozinski, M.; Chhajed, S. Elasticsearch: A Complete Guide; Packt Publishing Ltd.: Birmingham, UK, 2017.

- Srivastava, A. Kibana 7 Quick Start Guide: Visualize Your Elasticsearch Data with Ease; Packt Publishing Ltd.: Birmingham, UK, 2019.

- Muñoz, S.; Araque, O.; Sánchez-Rada, J.F.; Iglesias, C.A. An emotion aware task automation architecture based on semantic technologies for smart offices. Sensors 2018, 18, 1499.

- Berners-Lee, T.; Connolly, D.; Kagal, L.; Scharf, Y.; Hendler, J. N3logic: A logical framework for the world wide web. arXiv 2007, arXiv:0711.1533.

- McCarthy, C.; Pradhan, N.; Redpath, C.; Adler, A. Validation of the Empatica E4 wristband. In Proceedings of the 2016 IEEE EMBS International Student Conference (ISC), Ottawa, ON, Canada, 29–31 May 2016; pp. 1–4.

- Gilchrist, C. Learning iBeacon; Packt Publishing Ltd.: Birmingham, UK, 2014.

- Thompson, C. Python for Beginners. Available online: https://archive.moodle.net/mod/glossary/showentry.php?eid=93 (accessed on 14 April 2020).

- Borth, D.; Ji, R.; Chen, T.; Breuel, T.; Chang, S.F. Large-scale visual sentiment ontology and detectors using adjective noun pairs. In Proceedings of the 21st ACM International Conference on Multimedia, Barcelona, Spain, 21–25 October 2013; ACM: New York, NY, USA, 2013; pp. 223–232.

- Büchner, A. Moodle 3 Administration; Packt Publishing Ltd.: Birmingham, UK, 2016.

- UPM. Reglamento del Comité de Ética de Actividades de I+D+i de la Universidad Politécnica de Madrid. Available online: https://www.upm.es/sfs/Rectorado/Vicerrectorado%20de%20Investigacion/Servicio%20de%20Investigacion/Ayudas_y_Convocatorias/C.ETICA-REGLAMENTO.pdf (accessed on 17 September 2019).

- Williams, K. The Technology Ecosystem: Fueling Google’s Chromecast [WIE from Around the World]. IEEE Women Eng. Mag. 2014, 8, 30–32.

- Oualline, S.; Oualline, G. Web Browsing with Firefox. In Practical Free Alternatives to Commercial Software; Springer: Berlin/Heidelberg, Germany, 2018; pp. 1–26.

- Ringle, C.M.; Wende, S.; Becker, J.M. SmartPLS 3; SmartPLS: Bönningstedt, Germany, 2015.

- Hair, J.F., Jr.; Sarstedt, M.; Hopkins, L.; Kuppelwieser, V.G. Partial least squares structural equation modeling (PLS-SEM). Eur. Bus. Rev. 2014, 26, 106–121.

- Hair, J.F.; Anderson, R.E.; Babin, B.J.; Black, W.C. Multivariate Data Analysis: A Global Perspective; Pearson Education: London, UK, 2010; Volume 7.

- Urbach, N.; Ahlemann, F. Structural equation modeling in information systems research using partial least squares. J. Inf. Technol. Theory Appl. 2010, 11, 5–40.

- Fornell, C.; Larcker, D.F. Structural equation models with unobservable variables and measurement error: Algebra and statistics. J. Mark. Res. 1981, 18.

- Henseler, J.; Ringle, C.M.; Sarstedt, M. A new criterion for assessing discriminant validity in variance-based structural equation modeling. J. Acad. Mark. Sci. 2015, 43, 115–135.

- Duarte, P.A.O.; Raposo, M.L.B. A PLS model to study brand preference: An application to the mobile phone market. In Handbook of Partial Least Squares; Springer: Berlin/Heidelberg, Germany, 2010; pp. 449–485.

- Gefen, D.; Straub, D. A practical guide to factorial validity using PLS-Graph: Tutorial and annotated example. Commun. Assoc. Inf. Syst. 2005, 16, 91–109.

- Al-Fraihat, D.; Joy, M.; Sinclair, J.; Masa’deh, R. Evaluating E-learning systems success: An empirical study. Comput. Hum. Behav. 2020, 102, 67–86.

- Tenenhaus, M.; Vinzi, V.E.; Chatelin, Y.M.; Lauro, C. PLS path modeling. Comput. Stat. Data Anal. 2005, 48, 159–205.

- Vinzi, V.E.; Trinchera, L.; Amato, S. PLS path modeling: From foundations to recent developments and open issues for model assessment and improvement. In Handbook of Partial Least Squares; Springer: Berlin/Heidelberg, Germany, 2010; pp. 47–82.

- Wetzels, M.; Odekerken-Schröder, G.; Van Oppen, C. Using PLS path modeling for assessing hierarchical construct models: Guidelines and empirical illustration. MIS Q. 2009, 33, 177–195.

| No. | Question Formulation | Code |
|---|---|---|
| Q1 | The system is easy to use | SQ1 |
| Q2 | System reliability is correct | SQ2 |
| Q3 | The system provides interactive features between user and system | SQ3 |
| Q4 | The system provides custom information | SQ4 |
| Q5 | The system features are attractive for the user | SQ5 |
| Q6 | The system provides information in a rapid way | SQ6 |
| Q7 | System interface is clear and intuitive | SQ7 |
| Q8 | The system provides the needed information related to emotions | EIQ1 |
| Q9 | The information provided by the system is accessible at the required moment | EIQ2 |
| Q10 | The information related to emotions provided by the system is relevant for your task | EIQ3 |
| Q11 | The system provides enough information related to emotions | EIQ4 |
| Q12 | The information provided by the system is easy to understand | EIQ5 |
| Q13 | The system provides updated data of your emotions | EIQ6 |
| Q14 | The system enables me to accomplish the tasks more efficiently | US1 |
| Q15 | The system helps me learn effectively | US2 |
| Q16 | The system improves my learning performance | US3 |
| Q17 | The system features are improvements with regard to standard e-learning platforms | US4 |
| Q18 | Overall, the system is useful | US5 |
| Q19 | The system saves my time in searching for materials | BE1 |
| Q20 | The system has increased my knowledge and helped me to be successful in the course | BE2 |
| Q21 | The system makes communication easier with the teacher and other students | BE3 |
| Q22 | The system allows the teacher to adapt to students’ needs | BE4 |
| Q23 | The system has helped me to achieve the learning goals of the module | BE5 |

| Construct and Items | Outer Loading | Outer t-Statistic | CR | AVE |
|---|---|---|---|---|
| System quality | | | 0.767 | 0.529 |
| SQ4: The system provides custom information | 0.640 | 2.460 | | |
| SQ5: The system features are attractive for the user | 0.640 | 3.732 | | |
| SQ7: System interface is clear and intuitive | 0.876 | 2.603 | | |
| Emotion-related information quality | | | 0.904 | 0.703 |
| EIQ1: The system provides the needed information related to emotions | 0.778 | 3.986 | | |
| EIQ2: The information provided by the system is accessible at the required moment | 0.846 | 1.238 | | |
| EIQ3: The information related to emotions provided by the system is relevant for your task | 0.808 | 1.747 | | |
| EIQ4: The system provides enough information related to emotions | 0.914 | 1.243 | | |
| Perceived usefulness | | | 0.935 | 0.783 |
| US1: The system enables me to accomplish the tasks more efficiently | 0.912 | 1.577 | | |
| US2: The system helps me learn effectively | 0.879 | 1.186 | | |
| US4: The system features are improvements with regard to standard e-learning platforms | 0.902 | 1.194 | | |
| US5: Overall, the system is useful | 0.845 | 1.177 | | |
| Benefits | | | 0.817 | 0.530 |
| BE2: The system has increased my knowledge and helped me to be successful in the course | 0.649 | 3.420 | | |
| BE3: The system makes communication easier with the teacher and other students | 0.841 | 2.846 | | |
| BE4: The system allows the teacher to adapt to students’ needs | 0.683 | 3.292 | | |
| BE5: The system has helped me to achieve the learning goals of the module | 0.725 | 2.074 | | |
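
The CR and AVE columns follow directly from the outer loadings. A minimal Python sketch, assuming standardized loadings and the usual PLS-SEM formulas (AVE as the mean squared loading; composite reliability as (Σλ)² / ((Σλ)² + Σ(1 − λ²))), recomputes them for the retained items. The dictionary keys reuse the item-code prefixes (SQ, EIQ, US, BE); the helper names are hypothetical.

```python
"""Minimal sketch: composite reliability (CR) and AVE from outer loadings.

Assumes standardized outer loadings and the usual PLS-SEM formulas:
    AVE = sum(lam**2) / n
    CR  = (sum lam)**2 / ((sum lam)**2 + sum(1 - lam**2))
"""

from typing import Dict, List, Sequence, Tuple


def ave(loadings: Sequence[float]) -> float:
    """Average variance extracted: the mean of the squared loadings."""
    return sum(lam * lam for lam in loadings) / len(loadings)


def composite_reliability(loadings: Sequence[float]) -> float:
    """CR, treating 1 - lam**2 as the error variance of each indicator."""
    total = sum(loadings)
    error = sum(1.0 - lam * lam for lam in loadings)
    return total ** 2 / (total ** 2 + error)


# Outer loadings of the retained items, copied from the table above.
LOADINGS: Dict[str, List[float]] = {
    "SQ": [0.640, 0.640, 0.876],
    "EIQ": [0.778, 0.846, 0.808, 0.914],
    "US": [0.912, 0.879, 0.902, 0.845],
    "BE": [0.649, 0.841, 0.683, 0.725],
}

# Reported (CR, AVE) per construct, also copied from the table above.
REPORTED: Dict[str, Tuple[float, float]] = {
    "SQ": (0.767, 0.529),
    "EIQ": (0.904, 0.703),
    "US": (0.935, 0.783),
    "BE": (0.817, 0.530),
}

if __name__ == "__main__":
    for name, loadings in LOADINGS.items():
        cr_rep, ave_rep = REPORTED[name]
        print(f"{name}: CR {composite_reliability(loadings):.3f} (reported {cr_rep}), "
              f"AVE {ave(loadings):.3f} (reported {ave_rep})")
```

Because the inputs are the three-decimal loadings printed in the table, the recomputed values match the reported ones only to within about 0.001 (for example, the EIQ AVE comes out at 0.702 rather than 0.703).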

| Item | Emotion Information Quality | Benefits | System Quality | Perceived Satisfaction |
|---|---|---|---|---|
| EIQ1 | 0.778 | 0.428 | 0.466 | 0.664 |
| EIQ2 | 0.846 | 0.250 | 0.045 | 0.691 |
| EIQ3 | 0.808 | 0.387 | 0.261 | 0.575 |
| EIQ4 | 0.914 | 0.373 | 0.314 | 0.791 |
| BE2 | 0.078 | 0.649 | 0.479 | 0.048 |
| BE3 | 0.317 | 0.841 | 0.643 | 0.290 |
| BE4 | 0.527 | 0.683 | 0.460 | 0.269 |
| BE5 | 0.283 | 0.725 | 0.439 | 0.173 |
| SQ4 | 0.084 | 0.408 | 0.640 | 0.087 |
| SQ5 | 0.308 | 0.248 | 0.640 | 0.347 |
| SQ7 | 0.387 | 0.731 | 0.876 | 0.384 |
| PS1 | 0.730 | 0.200 | 0.302 | 0.912 |
| PS2 | 0.657 | 0.138 | 0.325 | 0.879 |
| PS4 | 0.743 | 0.313 | 0.302 | 0.902 |
| PS5 | 0.753 | 0.336 | 0.464 | 0.845 |
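
Cross-loadings are commonly read with the rule that each item should load more strongly on its own construct than on any other. The Python sketch below applies that rule to the values above; the helper name is hypothetical, and the emotion-information-quality items are keyed EIQ1 to EIQ4 to match the questionnaire codes.

```python
"""Sketch: cross-loading check for discriminant validity.

Rule assumed here: each item loads more strongly on its own construct
than on any other construct. Loadings are copied from the table above.
"""

from typing import Dict, List, Tuple

COLUMNS = ("EIQ", "BE", "SQ", "PS")

# item -> (own construct, loadings on EIQ, BE, SQ, PS)
CROSS_LOADINGS: Dict[str, Tuple[str, Tuple[float, ...]]] = {
    "EIQ1": ("EIQ", (0.778, 0.428, 0.466, 0.664)),
    "EIQ2": ("EIQ", (0.846, 0.250, 0.045, 0.691)),
    "EIQ3": ("EIQ", (0.808, 0.387, 0.261, 0.575)),
    "EIQ4": ("EIQ", (0.914, 0.373, 0.314, 0.791)),
    "BE2": ("BE", (0.078, 0.649, 0.479, 0.048)),
    "BE3": ("BE", (0.317, 0.841, 0.643, 0.290)),
    "BE4": ("BE", (0.527, 0.683, 0.460, 0.269)),
    "BE5": ("BE", (0.283, 0.725, 0.439, 0.173)),
    "SQ4": ("SQ", (0.084, 0.408, 0.640, 0.087)),
    "SQ5": ("SQ", (0.308, 0.248, 0.640, 0.347)),
    "SQ7": ("SQ", (0.387, 0.731, 0.876, 0.384)),
    "PS1": ("PS", (0.730, 0.200, 0.302, 0.912)),
    "PS2": ("PS", (0.657, 0.138, 0.325, 0.879)),
    "PS4": ("PS", (0.743, 0.313, 0.302, 0.902)),
    "PS5": ("PS", (0.753, 0.336, 0.464, 0.845)),
}


def cross_loading_violations(table: Dict[str, Tuple[str, Tuple[float, ...]]]) -> List[str]:
    """Items whose own-construct loading is not strictly the largest in the row."""
    violations = []
    for item, (own, loadings) in table.items():
        own_idx = COLUMNS.index(own)
        rivals = [v for i, v in enumerate(loadings) if i != own_idx]
        if loadings[own_idx] <= max(rivals):
            violations.append(item)
    return violations


if __name__ == "__main__":
    print(cross_loading_violations(CROSS_LOADINGS))  # [] for the values above
```

With the reported values no item is flagged; the narrowest margin is PS5 (0.845 on its own construct against 0.753 on EIQ).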

| Construct | EIQ (Fornell–Larcker) | BE (Fornell–Larcker) | SQ (Fornell–Larcker) | PS (Fornell–Larcker) | EIQ (HTMT) | BE (HTMT) | SQ (HTMT) | PS (HTMT) |
|---|---|---|---|---|---|---|---|---|
| Emotion Information Quality | 0.838 | | | | | | | |
| Benefits | 0.430 | 0.728 | | | 0.549 | | | |
| System Quality | 0.331 | 0.703 | 0.727 | | 0.513 | 0.883 | | |
| Perceived Satisfaction | 0.817 | 0.284 | 0.396 | 0.885 | 0.817 | 0.365 | 0.502 | |
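
In the Fornell–Larcker block the diagonal is the square root of each construct's AVE (for example, √0.703 ≈ 0.838 for EIQ), and the criterion requires it to exceed that construct's correlations with every other construct. HTMT ratios are instead compared with a fixed ceiling. The Python sketch below encodes both checks; the 0.85 and 0.90 ceilings are the commonly cited HTMT thresholds and are an assumption here, since this section does not state which one was applied. Function names are hypothetical.

```python
"""Sketch: Fornell-Larcker and HTMT discriminant-validity checks.

Values are copied from the table above; the HTMT ceilings passed to
htmt_flags() are assumptions, not thresholds stated in this section.
"""

import math
from typing import Dict, List, Tuple

Pair = Tuple[str, str]

AVE: Dict[str, float] = {"EIQ": 0.703, "BE": 0.530, "SQ": 0.529, "PS": 0.783}

# Off-diagonal latent-variable correlations (Fornell-Larcker block).
CORR: Dict[Pair, float] = {
    ("BE", "EIQ"): 0.430, ("SQ", "EIQ"): 0.331, ("SQ", "BE"): 0.703,
    ("PS", "EIQ"): 0.817, ("PS", "BE"): 0.284, ("PS", "SQ"): 0.396,
}

# Heterotrait-monotrait ratios (HTMT block).
HTMT: Dict[Pair, float] = {
    ("BE", "EIQ"): 0.549, ("SQ", "EIQ"): 0.513, ("SQ", "BE"): 0.883,
    ("PS", "EIQ"): 0.817, ("PS", "BE"): 0.365, ("PS", "SQ"): 0.502,
}


def fornell_larcker_ok(ave: Dict[str, float], corr: Dict[Pair, float]) -> Dict[str, bool]:
    """True where sqrt(AVE) exceeds every correlation involving that construct."""
    result = {}
    for construct, value in ave.items():
        others = [abs(r) for pair, r in corr.items() if construct in pair]
        result[construct] = math.sqrt(value) > max(others)
    return result


def htmt_flags(htmt: Dict[Pair, float], ceiling: float) -> List[Pair]:
    """Construct pairs whose HTMT ratio exceeds the given ceiling."""
    return sorted(pair for pair, ratio in htmt.items() if ratio > ceiling)


if __name__ == "__main__":
    print(fornell_larcker_ok(AVE, CORR))
    for ceiling in (0.85, 0.90):
        print(ceiling, htmt_flags(HTMT, ceiling))
```

With these numbers every construct satisfies the Fornell–Larcker criterion. The SQ–BE HTMT ratio of 0.883 lies below the 0.90 ceiling but above 0.85, so the outcome of the HTMT check depends on which ceiling is chosen.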

| Construct | R² | Q² |
|---|---|---|
| Emotion Information Quality | 0.538 (moderate level) | 0.244 |
| System Quality | 0.686 (substantial level) | 0.460 |

| No. | Question Formulation | Avg. |
|---|---|---|
| Q1 | The information provided by the system related to students’ emotion is useful | 4.2 |
| Q2 | The system features are improvements with regard to standard e-learning platforms | 4.8 |
| Q3 | The system allows the teacher to adapt the content to students’ needs | 4.6 |
| Q4 | The system makes communication easier with the teacher and other students | 3.8 |
| Q5 | Overall, the system is useful | 4.6 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Muñoz, S.; Sánchez, E.; Iglesias, C.A. An Emotion-Aware Learning Analytics System Based on Semantic Task Automation. Electronics 2020, 9, 1194. https://doi.org/10.3390/electronics9081194
