Abstract

In this paper, we present in depth the hardware components of a low-cost cognitive assistant. The aim is to detect the performance and the emotional state of elderly people when they perform exercises. Physical and cognitive exercises are a proven way of keeping elderly people active, healthy, and happy. Our goal is to bring to people at home (or in other unsupervised places) an assistant that motivates them to perform exercises and, concurrently, monitors them, observing their physical and emotional responses. We focus on the hardware parts and the deep learning models so that they can be reproduced by others. The platform is being tested at an elderly care facility, and its validation is in progress.

1. Introduction

We are currently facing a societal problem: the world’s population is aging fast [1]. While this could be great news (and it is), fast-shifting demographics bring intrinsic problems, and being unprepared for the growing health needs of elderly people is one of them.

Persons over the age of 65 are the fastest-growing age group: by 2050, 16% of the world population is expected to be over 65, compared with 9% in 2019 [1]. The trend is global; regions such as Northern Africa and Western Asia, Central and Southern Asia, Eastern and South-Eastern Asia, and Latin America and the Caribbean are expected to double their elderly population [1]. Furthermore, by 2050, 25% of the population in Europe will be 65 years or over, and, notably, in 2018, persons aged over 65 already outnumbered children under five years of age [1]. This rapid increase is mainly due to improved medical care, which lowers the mortality rate. Although people live longer, this is not without its problems: in the EU-28 area, the United Nations (UN) reports that people over 65 have, on average, only 10 more years before serious health problems start to appear [1].

In its latest World Population Prospects report, the UN identified an increasing shortage of employed people, which places high stress on social protection systems [1]. This is due to two factors: the shrinking working-age population and the socio-economic problems of individual countries. For instance, in Japan, the ratio of people aged 25–64 to those over age 65 is 1.8, while in most of Europe, the value is starting to fall below three. As a result, countries’ economies will be strongly affected: GDP will suffer from the shrinking labor market while being overburdened by the increasing costs of healthcare systems, pensions, and social protection.

Apart from the economic distress, there is the healthcare distress. Studies such as those by Licher et al. [2] and Jaul and Barron [3] show that maintaining a high quality of life while aging is complicated. There is no clear path towards a definitive medical solution, as most of these illnesses are incurable and have very complex pathologies. A possible alternative to medical treatment is backed by several studies [4,5,6], which show that it is possible to slow the progression of these illnesses by keeping the elderly physically and cognitively active through exercise. These exercises are often complex and require attention from a caregiver, either to help perform them or to correct the posture/strategy, which demands a large amount of monitoring time from another person.

Caregivers are often overburdened by the care and assistance work, as evidenced by several studies [7,8,9] (the most severe cases being caregivers without specialized training combined with highly dependent elders). This means that, often, caregivers put their own health at risk and the elder does not receive adequate care. In specialized facilities, like nursing homes, this is less accentuated; nonetheless, the lack of caregivers and the high number of residents may lead to a poorer experience in these facilities [10]. Furthermore, as previously stated, many elderly people are not economically prepared for the high cost that these facilities charge [11].

In short, in the near future, a large number of older people will be left alone in their homes while suffering from limiting and life-threatening diseases because they cannot afford nursing homes or home care services or because they are not able to have assistance from an informal caregiver.

A possible solution to these issues may be the usage of technology to help elderly people perform Activities of Daily Living (ADL) or attenuate their loneliness, while actively monitoring their health status. There are already some projects in this domain, explained in depth in Section 2. These projects shed some light on what the current approaches are, and more importantly, what the needs of elderly people are and how technology can improve their quality of life.

Our project goal is to enable elderly people to stay in their homes safely and under active supervision, while engaging them in personalized active exercises and exergames. Our platform achieves this goal by using a low-cost, easy-to-deploy sensor system that monitors these exercises, interacts with the elders, and sends reports to the informal/formal caregivers. The platform consists of two components: the sensors and the software (exercise evaluator, scheduler, information portal, and interactor). Our proposal follows a less-traveled path: the use of low-cost sensors (built from inexpensive commercial components and 3D printing) together with health-related software that gives personalized advice.

This paper is a continuation of the work presented in [12]. The main improvements in relation to it are the improved sensor systems and the learning models for information extraction. Additionally, the objective is that others are able to reproduce our platform with ease from the information presented in this paper.

2. Related Work

This project touches on prolific domains: emotion detection, human activity recognition, and cognitive assistants. Therefore, in this section, we present related work that belongs to those domains or, like this project, touches on all or part of them.

2.1. Cognitive Assistants

Cognitive assistants typically consist of a combination of software and hardware systems that help people (mostly cognitively impaired) in their ADL. The aim is to provide memory assistance (through reminders), visual/auditory cues, and physical assistance (through robots or smart home actuators) [13,14].

One example of this is the PHAROS project [15], whose goal is to use a friendly-looking robot to engage elderly people in playful activities, such as physical exercises or cognitive games. The aim is to maintain a conversation that subliminally encourages users to follow the system’s suggestions. Furthermore, using its sensors, the robot is able to detect and gauge exercise performance and pass this information to the caregivers, so they can assess whether the users are performing the exercises well and measure whether the users are losing abilities.

Castillo et al. [16] also used a friendly robot to interact with the user, in this case to guide users through therapy sessions for apraxia of speech. The robot captured the users’ mouth movements and evaluated whether they were correct, giving advice on how to perform the exercises and tips about mouth positions.

The CoME project [17] is an example of a cognitive assistant that does not have a robot counterpart. This project uses wearable sensors and smartphones to monitor the users, enabling interaction while concurrently collecting usage reports. The users receive information and have tutorials available on how to perform the planned activities, and the caregivers are able to access the users’ performance reports. The project is designed to be deployed in elderly care facilities, maximizing the number of care receivers that a caregiver is able to monitor.

The iGenda project [18,19,20] aims to provide assistance through ambient assisted living devices/environments, such as a smart home. The objective is to use IoT or other Internet-connected devices to convey information, and actuators to change the users’ environment. The social objective is to be a cognitive aid to people suffering from light to mild cognitive disabilities. iGenda’s core is an event management system that monitors the users’ tasks and shared activities and provides cues through screens and speakers to remind the users of upcoming activities. Furthermore, iGenda is able to interact with the users, using logical arguments to persuade them to perform certain activities. Apart from this, iGenda is able to monitor users outside their home using information from their smartphone, and thus verify whether they are leaving safe/common areas.

2.2. Human Activity Recognition

The domain of human activity recognition is experiencing a boom in development due to novel deep learning techniques that were not previously available. Several studies [21,22] showed that the majority of current projects and technologies in human activity recognition follow a clear pattern: deep learning combined with datasets. Because most models following this pattern already exceed 85% accuracy, development has advanced to the stage of micro-optimization.

One example is the work of Martinez-Martin et al. [23,24,25], who proposed a system to provide rehabilitation monitoring at home using a humanoid robot. The goal was to use the robot’s cameras to assess the user’s physical movements visually, using deep learning methods, and to correct them by conveying feedback through the robot’s screen and body. The robot was also able to navigate around the house and locate the user. The captured information (body movement measurements) was made available to healthcare professionals so that they could correct the user if needed, providing specialized attention.

The work of Vepakomma et al. [26] presented a framework that detected common home activities from wrist bracelets. They resorted to deep learning methods to classify the raw input and produce a result even from light gestures. Their framework was able to detect 22 distinct activities with an accuracy of 90%. The issue with this project was that it was too personalized: these results were achieved with only two persons, and the results were significantly lower with other users.

The work of Cao et al. [27] presented a novel classification method that achieved over 94% accuracy in detecting ADL. The method worked by creating associations between activities that captured how usual a sequence of events was, for example, rinsing the mouth with water after brushing teeth. Using these pre-established associations was faster than computing on real-time data. The downsides of this approach were its rigidity and the difficulty of detecting singular activities; moreover, the associations had to be input by a technician, as the system was unable to learn them on its own.
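To make the idea of pre-established associations concrete, the following minimal Python sketch scores how usual a sequence of activities is by multiplying hand-entered transition weights. The activity names, the weights in `ASSOCIATIONS`, and the `default` penalty for unknown transitions are hypothetical illustrations, not values or code from Cao et al. [27].

```python
# Hypothetical, hand-entered associations: weight that activity `b`
# directly follows activity `a`. As in the approach described above,
# these are supplied up front rather than learned from data.
ASSOCIATIONS = {
    ("brush_teeth", "rinse_mouth"): 0.9,
    ("rinse_mouth", "dry_face"): 0.7,
    ("make_tea", "drink_tea"): 0.8,
}


def sequence_score(sequence, associations=ASSOCIATIONS, default=0.01):
    """Multiply the weights of consecutive transitions; unknown pairs get `default`."""
    score = 1.0
    for prev, nxt in zip(sequence, sequence[1:]):
        score *= associations.get((prev, nxt), default)
    return score


def most_likely_next(prev, candidates, associations=ASSOCIATIONS, default=0.01):
    """Pick the candidate activity most strongly associated with `prev`."""
    return max(candidates, key=lambda c: associations.get((prev, c), default))


print(sequence_score(["brush_teeth", "rinse_mouth", "dry_face"]))  # usual sequence
print(sequence_score(["rinse_mouth", "brush_teeth"]))              # unusual order
print(most_likely_next("brush_teeth", ["drink_tea", "rinse_mouth"]))
```

The lookup is cheap compared with processing raw sensor data, but it also illustrates the rigidity noted above: any transition missing from the table falls back to the same low default, and a single isolated activity (a one-element sequence) always scores 1.0, carrying no information.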

2.3. Emotion Detection

Emotion detection is a novel domain in which computer systems identify human emotions using a combination of hardware and software. Several studies have reported various methods for human emotion recognition [28,29], which divide broadly into those using non-invasive sensors (like vital signs sensors) and those using cameras. We focused on advances in detection using body sensors, as used in this project, because of the privacy issues that arise from using cameras.

Brás et al. [30] reported 90% accuracy in detecting emotions using Electrocardiogram (ECG) sensors in a controlled environment. To achieve this high result, they developed a novel approach: a quantization method compared the incoming signal to a dataset to perform a meta-classification; the ECG meta-data were then compressed using an ECG dataset as a reference; finally, the probability that the ECG was classified correctly was used. This unorthodox process tightly coupled the models to the individuals that were used to train the system. The tests may also have introduced a bias in the results; for instance, it is reasonable to assume that people become scared and anxious when exposed to fearful situations. Fear is an intense emotion that regularly leads to an accelerated heartbeat, which is simple to identify in an ECG. The studies were designed to cause a strong emotional response, so the minimum threshold values remain unknown, as does whether muted emotions could be detected.

Using the matching pursuit algorithm and a probabilistic neural network, Goshvarpour et al. [31] detected emotional features from ECG and Galvanic Skin Response (GSR) signals. Nonetheless, only four emotions were detected in this work: scary, happy, sad, and peaceful (from the pleasure arousal dominance model). Music was used as the trigger on eleven students, and over 90% accuracy was reported. The study determined that GSR had little impact on emotion detection. Furthermore, the emotions were not detected equally well: strongly arousing emotions (such as happiness) were far simpler to detect than the others.

Naji et al. [32,33] combined ECG with forehead biosignals to achieve good accuracy in emotion identification. They found that facial movements (like frowning) were very useful for identifying emotions accurately. Since a headband was used, no camera was needed, and the privacy concerns were therefore minor.

Seoane et al. [34] used body sensors to detect the stress levels of military personnel (ATREC project). They established that placing the sensors (ECG and GSR) on the neck (throat area) provided a high level of accuracy in terms of valence markers and alert levels, which are directly related to stress levels. In contrast, speech, GSR (on the hands/arms), and skin temperature provided little accuracy for emotion detection.

As can be seen, there are different (even contradictory) approaches to classifying emotions with minimal intrusion. ECG is crucial for the detection and classification of emotions, and the use of various sensors can improve the accuracy of the classification or help to detect triggering events.

With this project, we aim to advance the state of the art by overcoming the issues of the projects presented in this section. Nonetheless, these projects were important hallmarks and should be regarded as such, as they established the pathway to newer advancements.

3. Low-Cost Cognitive Assistant

This section describes our proposal for a system that is a continuation of previous research presented in [12]. This new research incorporated a series of devices capable of detecting and classifying the movements carried out by elderly people and detecting their emotions when performing them.

With the emergence of wearable devices capable of counting daily steps and calculating the Heart Rate (HR), these devices have found many fields of application, the most common being sport. Nevertheless, many healthcare-related applications using these devices have also emerged. Examples include the Fitbit (https://www.fitbit.com/es/home) [35], which can be used to track physical activity, and the Apple Watch [36], which can be used to monitor people with cardiovascular diseases through heart rate measurements.

In recent years, new devices have appeared including communication protocols such as WiFi and Bluetooth. All these features are used to create applications that facilitate the monitoring of the elderly, allowing the acquisition of signals such as ECG, Photoplethysmography (PPG), respiratory rate, and GSR.

Our device was designed by integrating two elements, the emotion detection using bio-signals and the detection of movements in the lower and upper extremities through accelerometers.

To make this application possible, it was necessary to use different types of hardware that facilitated the acquisition of data and software tools that analyzed the information sent by the devices. This way, mixing these technologies, it was possible to recognize patterns, analyze images, analyze emotions, detect stress, etc.

3.1. Wristbands

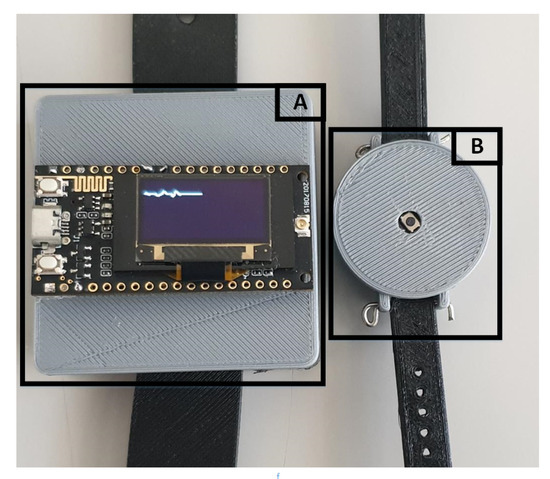

We devised a set of two wristband prototypes as shown in Figure 1 to be worn by the people being monitored. Wristband B detected motion, whilst Wristband A had a more complex composition, as can be observed in Figure 2. The goal pursued by using both of these wristbands was to detect not only the emotion of the people being monitored, but also if they were properly doing the exercises being suggested. The decision to manufacture our own devices was due to the fact that the data from the commercial wristbands were filtered and preprocessed, so they did not have the precision required for our platform.

Figure 1.

Wristband A and Wristband B (motion detector) prototypes.

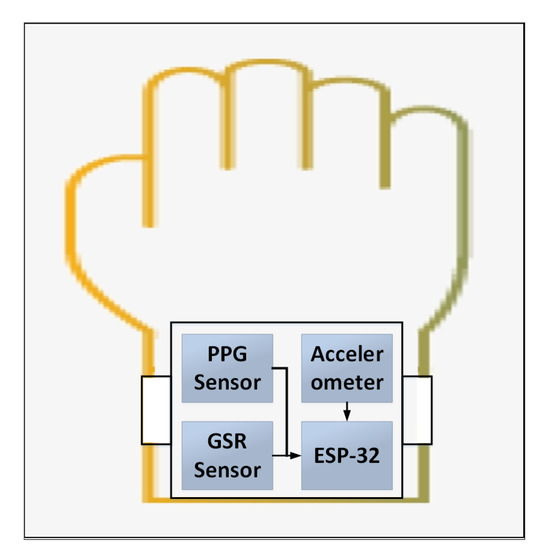

Figure 2.

Wristband A prototype composition.

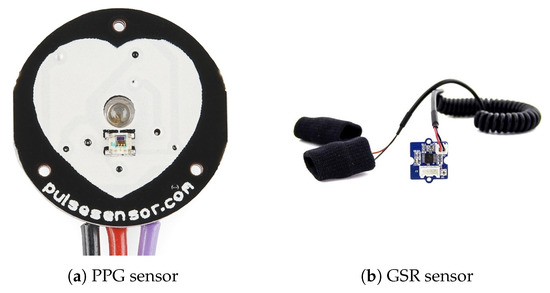

To perform emotion detection using bio-signals, specific hardware is needed to acquire these signals. We designed our own acquisition device so that we could control the tuning and access the raw signal. Our device captured two signals, the first of which was a PPG signal. This measurement was made by a sensor (Figure 3) that shone a light beam onto the skin to illuminate the subcutaneous vessels. Part of this beam was reflected onto a photosensor, which converted it into an equivalent voltage. Because the skin absorbed more than 90% of the light, the diode pair was accompanied by amplifiers and filters that ensured an adequate voltage.

Figure 3.

Photoplethysmography (PPG) and Galvanic Skin Response (GSR) sensors.
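Once the PPG voltage has been digitized, the heart rate can be estimated from the spacing of the pulse peaks. The Python sketch below shows one simple way of doing this; the mean-value threshold, the 200 bpm upper bound used as a refractory period, and the function names are assumptions for illustration, not necessarily the processing used by the platform.

```python
import math


def detect_peaks(signal, threshold, min_distance):
    """Indices of local maxima above `threshold`, at least `min_distance` samples apart."""
    peaks = []
    for i in range(1, len(signal) - 1):
        is_local_max = signal[i] >= signal[i - 1] and signal[i] > signal[i + 1]
        if signal[i] > threshold and is_local_max:
            # Refractory period: ignore peaks too close to the previous one.
            if not peaks or i - peaks[-1] >= min_distance:
                peaks.append(i)
    return peaks


def heart_rate_bpm(signal, fs, threshold=None, max_bpm=200):
    """Estimate heart rate (beats per minute) from a PPG signal sampled at `fs` Hz."""
    if not signal:
        return None
    if threshold is None:
        threshold = sum(signal) / len(signal)  # signal mean as a crude threshold
    min_distance = int(fs * 60 / max_bpm)      # shortest plausible beat interval
    peaks = detect_peaks(signal, threshold, min_distance)
    if len(peaks) < 2:
        return None
    intervals = [(b - a) / fs for a, b in zip(peaks, peaks[1:])]  # seconds per beat
    return 60.0 / (sum(intervals) / len(intervals))


# Usage: a synthetic 1.2 Hz (72 bpm) sine wave sampled at 100 Hz for 10 s.
fs = 100
ppg = [math.sin(2 * math.pi * 1.2 * n / fs) for n in range(10 * fs)]
print(round(heart_rate_bpm(ppg, fs), 1))
```

Real PPG recordings are noisier than this synthetic wave, so in practice the signal would usually be band-pass filtered before peak detection.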

The second signal captured by our system was skin resistance, i.e., the galvanic response of the skin. This resistance varies with the state of the skin’s sweat glands, which are regulated by the Autonomic Nervous System (ANS). When the sympathetic branch of the ANS is excited, the sweat glands increase their activity, modifying the conductance of the skin. The ANS is directly related to the regulation of emotional behavior in human beings. To capture these variations, a series of electronic devices equipped with sensors or electrodes in contact with the skin was used. When skin resistance varied, these devices registered this activity and returned an analog signal proportional to it. Figure 3 shows the device used to make this capture.
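The text above does not specify the acquisition circuit, so the following Python sketch assumes a common arrangement: the skin acts as one arm of a voltage divider between the supply and the ADC input, with a known reference resistor to ground, so that $V_{out} = V_{cc} R_{ref} / (R_{ref} + R_{skin})$. Solving for $R_{skin}$ turns the measured voltage into skin resistance and conductance. The topology, the 3.3 V supply, and the 100 kΩ reference value are placeholders.

```python
def skin_resistance_ohm(v_out, v_cc=3.3, r_ref=100_000.0):
    """Skin resistance from the divider output voltage (assumed topology).

    Assumes v_out = v_cc * r_ref / (r_ref + r_skin), i.e. the skin sits
    between the supply and the ADC node and r_ref goes to ground.
    """
    if not 0.0 < v_out < v_cc:
        raise ValueError("v_out must lie strictly between 0 V and v_cc")
    return r_ref * (v_cc - v_out) / v_out


def skin_conductance_us(v_out, v_cc=3.3, r_ref=100_000.0):
    """Skin conductance in microsiemens (1 / resistance, scaled by 1e6)."""
    return 1e6 / skin_resistance_ohm(v_out, v_cc, r_ref)


# Higher sweat-gland activity lowers skin resistance, which raises v_out
# and therefore the computed conductance.
for v in (0.5, 1.65, 2.5):
    print(v, round(skin_conductance_us(v), 2))
```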

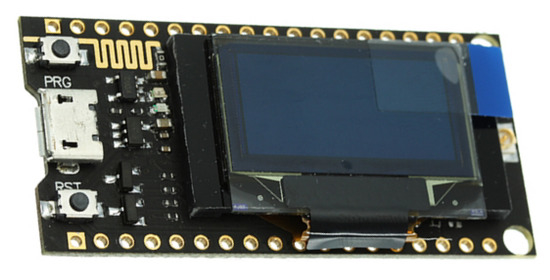

The analog signals returned by the sensors were digitized using the ESP-32’s analog-to-digital converter. Our system used an ESP-32 TTGO development board (Figure 4), which is widely used in IoT applications, mainly because it is easy to program and offers WiFi, LoRa, and Bluetooth Low Energy (BLE) communication with low power consumption. These features make this device an ideal tool for monitoring applications.

Figure 4.

ESP-32 TTGO developer board.

The digitized signals were then converted into the voltage equivalent to the acquired signal. To transmit the acquired data, one of the three communication protocols incorporated in the development board (WiFi, LoRa, and BLE) was used: the data were transferred to the server over WiFi using HTTP.
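The conversion from ADC counts to volts and the packaging of a reading for the HTTP upload can be sketched as follows. Firmware on the ESP-32 itself would normally be written in C/C++; this Python version only mirrors the arithmetic and one possible message layout. The 12-bit resolution matches the ESP-32's ADC, but the 3.3 V full-scale value (which in practice depends on the configured attenuation, and the ESP-32 ADC is not perfectly linear) and the JSON field names are assumptions.

```python
import json
import time

ADC_BITS = 12   # the ESP-32 ADC returns 12-bit codes (0..4095)
V_FULL = 3.3    # assumed full-scale voltage; depends on attenuation/calibration


def adc_to_voltage(raw, bits=ADC_BITS, v_full=V_FULL):
    """Linearly map a raw ADC code to volts (ignores ADC non-linearity)."""
    max_code = (1 << bits) - 1
    if not 0 <= raw <= max_code:
        raise ValueError(f"raw ADC value out of range: {raw}")
    return raw * v_full / max_code


def build_payload(device_id, ppg_raw, gsr_raw, timestamp=None):
    """Serialize one batch of readings as JSON (hypothetical field names)."""
    return json.dumps({
        "device": device_id,
        "timestamp": time.time() if timestamp is None else timestamp,
        "ppg_v": [round(adc_to_voltage(r), 4) for r in ppg_raw],
        "gsr_v": [round(adc_to_voltage(r), 4) for r in gsr_raw],
    })


print(build_payload("wristband-A", [0, 2048, 4095], [1024], timestamp=0))
```

A server-side handler would parse the same JSON with `json.loads` before storing or classifying the readings.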

3.2. Software

The sensor systems provided data about the human condition, from which we derived information about the exercises that the users performed and their emotional condition. This was performed by two modules, one identifying exercise performance and the other identifying emotions. Additionally, this information was made available to the users and caregivers so they were informed about their progression.

3.2.1. Emotion Classification

To perform the emotion detection using biosignals, it was necessary to calculate the biosignal values corresponding to each emotion for concrete individuals, as biosignals vary for each person. Therefore, a dataset was created to train an artificial neural network that gave us the emotion values of each individual using the biosignals as input.

To create the dataset, the GSR and PPG signals were acquired while the subjects observed a series of images intended to elicit emotions [37]. The experiments were performed by 20 test subjects using a database of 1182 images, divided into a training set of 900 images and a test set of 282 images.

Each experiment was composed of the following steps:

- Each test subject observed the set of training images (50), each image for 10 s. During these 10 s, the GSR, PPG, temperature, and heart rate signals were recorded and stored.

- At the same time, the subject was recorded using a camera in the monitoring system. The images recorded were used to detect the emotion expressed by the subject. This detection was performed using the Microsoft Detect Emotions Service, which detects the following emotions: anger, contempt, disgust, fear, happiness, neutral, sadness, and surprise.

- After 10 s of observing the stimulus, the subject had a further 10 s to respond to the SAM (Self-Assessment Manikin) test [38]. This test allowed us to know the emotional state of the individual in terms of PAD (Pleasure, Arousal, Dominance).
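Because each trial has a fixed structure (10 s of stimulus followed by 10 s for the SAM answer), a continuous recording can be cut into per-image segments by sample index. The Python sketch below assumes that trials follow each other with no gap and that all signals share one sampling rate `fs`; both are assumptions, as the protocol above does not state them, and the function name is hypothetical.

```python
def split_session(samples, fs, n_images, stimulus_s=10, response_s=10):
    """Cut a continuous recording into per-image stimulus windows.

    Assumes back-to-back trials of `stimulus_s` seconds of viewing followed
    by `response_s` seconds of SAM answering, all sampled at `fs` Hz.
    Only the stimulus window is kept, since that is when the signals
    associated with each image were recorded.
    """
    n_stim = int(stimulus_s * fs)
    trial_len = int((stimulus_s + response_s) * fs)
    if len(samples) < n_images * trial_len:
        raise ValueError("recording is shorter than n_images full trials")
    windows = []
    for k in range(n_images):
        start = k * trial_len
        windows.append(samples[start:start + n_stim])
    return windows


# Usage: 3 images at 2 Hz -> 40 samples per trial, 20 per stimulus window.
recording = list(range(3 * 40))
for w in split_session(recording, fs=2, n_images=3):
    print(w[0], w[-1], len(w))
```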

As a result, our dataset had two outputs: the first corresponding to the emotion detected through image processing, and the second to the emotion obtained from the SAM (Self-Assessment Manikin) test [39]. The SAM test is a technique for the pictorial evaluation of emotional states using three parameters, pleasure, arousal, and dominance, which are associated with a person’s emotional reaction. SAM is an inexpensive and easy method for quickly evaluating affective responses in many contexts. The output used to supervise the neural network training was the result obtained from the images; the SAM test gave us a qualitative description of the emotion associated with each image.

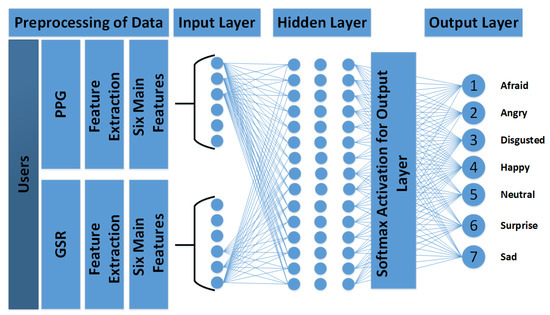

Once the dataset was built, the next step was to train the model. To do this, six features were extracted from each biosignal [40] using the six statistical equations presented by Picard [39] for extracting biological signal characteristics. These features, extracted from the PPG and GSR signals, served as input to the emotion classification algorithm, which used deep learning to perform the classification. Our classifier was a 1D CNN (1D Convolutional Neural Network), whose structure is shown in Figure 5.

Figure 5.

Structure of the 1D CNN used to classify emotions.
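The six statistical features commonly attributed to Picard et al. [39] are the mean, the standard deviation, the mean absolute first difference, the mean absolute second difference, and the latter two normalized by the standard deviation. The Python sketch below computes them for one signal and concatenates the PPG and GSR results into a 12-value input vector. It uses the sample standard deviation (N − 1); whether the original equations divide by N or N − 1 should be checked against [39].

```python
import statistics


def picard_features(x):
    """Six statistical features of a 1-D physiological signal.

    Returns [mean, std, mean |1st diff|, normalized mean |1st diff|,
             mean |2nd diff|, normalized mean |2nd diff|].
    """
    n = len(x)
    if n < 3:
        raise ValueError("need at least 3 samples")
    mu = statistics.fmean(x)
    sigma = statistics.stdev(x)  # sample standard deviation (N - 1)
    delta = sum(abs(x[i + 1] - x[i]) for i in range(n - 1)) / (n - 1)
    gamma = sum(abs(x[i + 2] - x[i]) for i in range(n - 2)) / (n - 2)
    # Dividing by sigma equals computing the differences on the z-scored signal.
    delta_norm = delta / sigma if sigma else 0.0
    gamma_norm = gamma / sigma if sigma else 0.0
    return [mu, sigma, delta, delta_norm, gamma, gamma_norm]


def feature_vector(ppg, gsr):
    """12 network inputs: six features for PPG followed by six for GSR."""
    return picard_features(ppg) + picard_features(gsr)


print(feature_vector([0.1, 0.5, 0.9, 0.4, 0.2], [2.0, 2.1, 2.3, 2.2, 2.4]))
```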

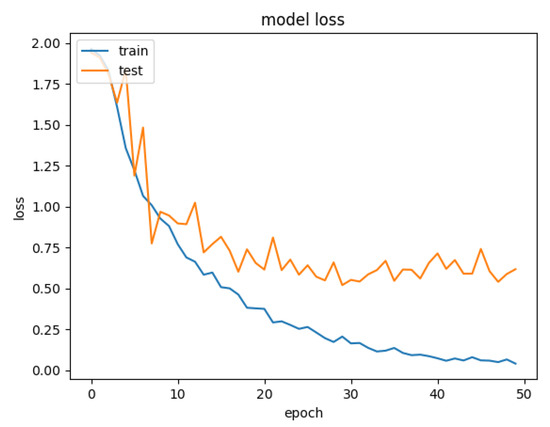

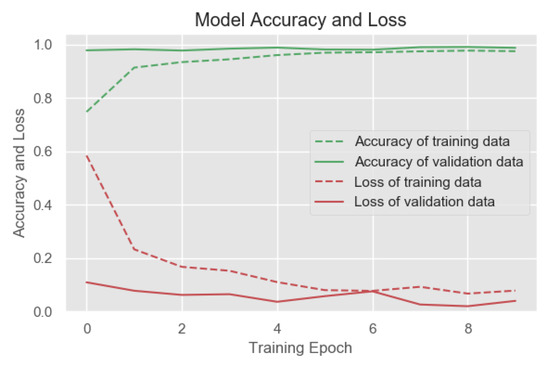

Figure 6 shows the model accuracy on the training and validation data, as well as the loss during the same process.

Figure 6.

Model accuracy and loss of emotion recognition.

The network had 12 neurons in the input layer, corresponding to the 12 characteristics extracted from the signals (six for each signal). The hyper-parameters of the 1D-CNN are shown in Table 1.

These hyper-parameters were used in the experiments carried out in [12]; due to the good results obtained there, we decided to use the same parameters for this paper.

Table 1.

1D-CNN’s hyper-parameters to classify emotions.
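Since the values of Table 1 are not reproduced in this text, the following pure-Python sketch only illustrates how a 1D CNN treats the 12-value feature vector as a short one-dimensional sequence: each convolution kernel slides along the features, a ReLU and global max pooling reduce each filter to one value, and a dense softmax layer produces class probabilities. The kernel size, number of filters, weights, pooling choice, and number of output classes are arbitrary placeholders, not the trained network of Figure 5.

```python
import math


def conv1d_relu(x, kernels, biases):
    """Valid (unpadded), stride-1 1-D convolution followed by ReLU.

    Computed as a cross-correlation, as in most deep learning libraries.
    One output channel per kernel, each of length len(x) - len(kernel) + 1.
    """
    channels = []
    for w, b in zip(kernels, biases):
        k = len(w)
        out = [max(0.0, b + sum(w[j] * x[i + j] for j in range(k)))
               for i in range(len(x) - k + 1)]
        channels.append(out)
    return channels


def global_max_pool(channels):
    """Reduce each channel to its maximum activation."""
    return [max(c) for c in channels]


def dense_softmax(features, weights, biases):
    """Fully connected layer followed by a numerically stable softmax."""
    logits = [b + sum(wi * f for wi, f in zip(w, features))
              for w, b in zip(weights, biases)]
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Placeholder weights: 2 filters of size 3 over a 12-value feature vector,
# and 3 output classes.
features = [0.5, 0.2, 0.1, 0.3, 0.0, 0.4, 1.2, 0.8, 0.6, 0.9, 0.1, 0.7]
kernels = [[0.2, -0.1, 0.3], [-0.3, 0.5, 0.1]]
channels = conv1d_relu(features, kernels, biases=[0.0, 0.1])
pooled = global_max_pool(channels)
probs = dense_softmax(pooled, weights=[[1.0, -0.5], [0.3, 0.8], [-0.2, 0.1]],
                      biases=[0.0, 0.0, 0.0])
print(len(channels[0]), [round(p, 3) for p in probs])
```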

3.2.2. Exercise Classification

Physical exercise has a direct impact on human health. Studies have shown that frequent exercise by older people [41] helps to reduce the risk of stroke or heart attack, decreased bone density, dementia, and common diseases, and boosts confidence and independence. In the vast majority of cases, these exercises require the supervision of a specialist, such as a physiotherapist or a sports expert, who suggests the exercises to be performed based on age, physical limitations, or injuries. In some cases, these professionals also have to follow up, determining whether the exercises are being performed correctly, a judgment they make based on experience.

We propose a device for remote monitoring that captures the wearer’s movements through two accelerometers and communicates via Bluetooth Low Energy. The data were sent to a smartphone, which was responsible for recognizing the activity using deep learning techniques. As there was no public database, we decided to build our own. This database contained five exercises, carried out by people aged between 30 and 50, who were accompanied by a physiotherapist responsible for determining whether each exercise was carried out correctly. Each of the exercises (chest stretch, arm raises, one-leg stand, bicep curls, and sideways walking) had a total of 31 participants, and a total of 1000 samples was collected per exercise.

The database contained 150,000 signals; note that these data were tripled, because the three axes of the accelerometer (X, Y, Z) were stored, giving a final database of 450,000 signals. This database was partitioned as follows for training, testing, and validating our model: training 80%, test 10%, and validation 10%.
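An 80/10/10 partition such as the one described can be produced with a seeded shuffle. The Python sketch below is a generic illustration; the seed and the function name are assumptions. Applied to 450,000 signals, these proportions give 360,000 training, 45,000 test, and 45,000 validation signals.

```python
import random


def split_dataset(samples, train=0.8, test=0.1, seed=42):
    """Shuffle reproducibly and split into (train, test, validation).

    Whatever remains after the train and test fractions goes to validation.
    """
    items = list(samples)
    random.Random(seed).shuffle(items)
    n_train = int(len(items) * train)
    n_test = int(len(items) * test)
    return (items[:n_train],
            items[n_train:n_train + n_test],
            items[n_train + n_test:])


train_set, test_set, val_set = split_dataset(range(1000))
print(len(train_set), len(test_set), len(val_set))  # 800 100 100
```

If recordings from the same participant land in both the training and test partitions, the test score can be optimistic; grouping the split by participant is a common alternative when generalization to new users matters.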

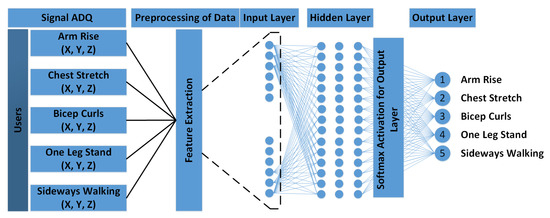

Figure 7 shows the different steps carried out for the classification of physical activities.

Figure 7.

Structure of the 1D CNN used to classify activities.

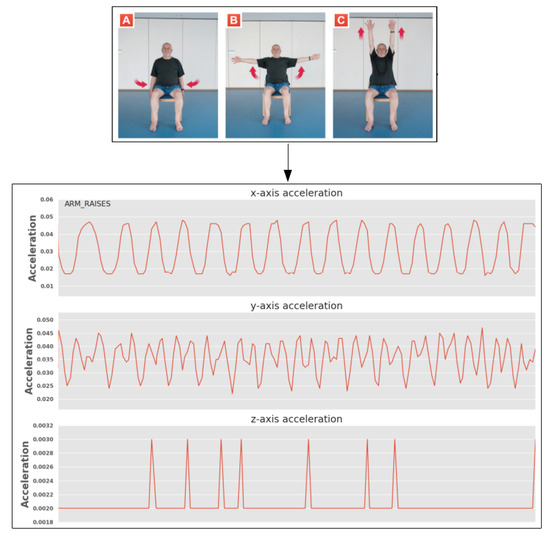

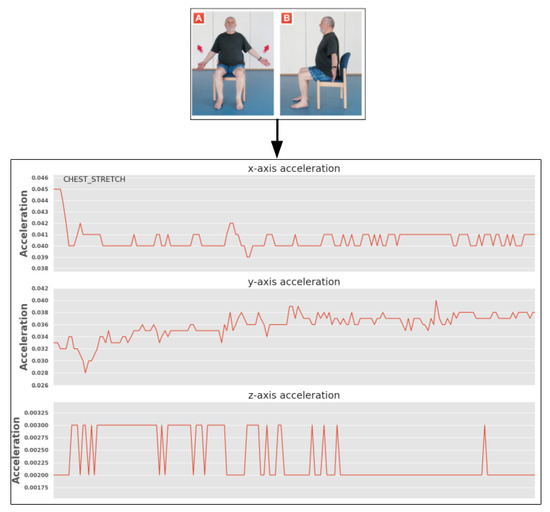

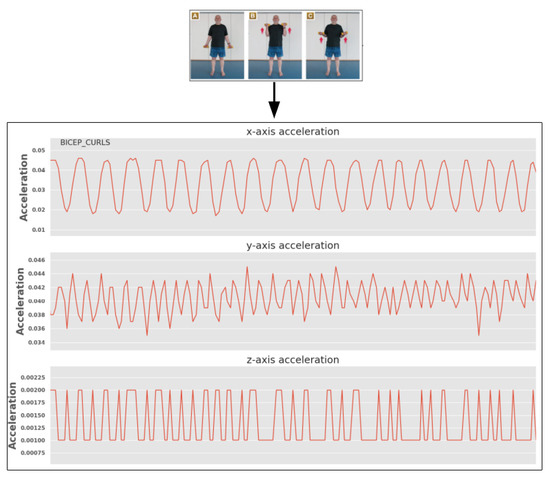

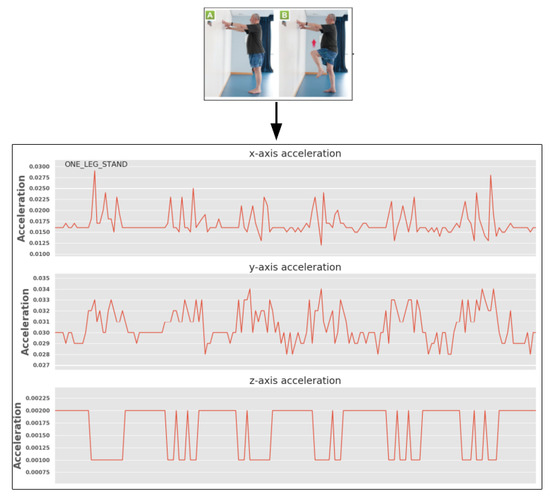

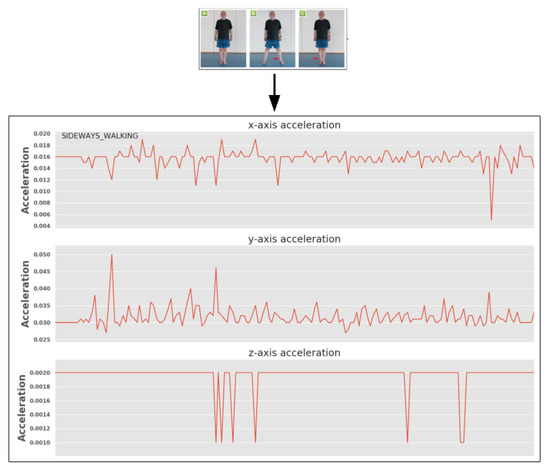

The inputs used to classify the activities were the accelerations along the three axes for each activity. The activities performed, as well as the signals captured for each of them, can be seen in Figure 8, Figure 9, Figure 10, Figure 11 and Figure 12 (the hyper-parameters of the 1D-CNN are shown in Table 2). Once the signals were obtained, they were reshaped into a 6415 × 150 matrix (Table 3), which was then sent to the neural network for classification.

Figure 8.

Arm raises.

Figure 9.

Chest stretch.

Figure 10.

Bicep curls.

Figure 11.

One leg stand.

Figure 12.

Sideways walking.

Table 2.

1D-CNN’s hyper-parameters to classify activities.

Table 3.

Input data from the network.
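The text does not spell out how the raw accelerations were reshaped into the 150-column matrix of Table 3. One plausible reading, used here purely as an assumption, is that each row holds 50 consecutive samples of the three axes interleaved (x0, y0, z0, x1, ...), giving 3 × 50 = 150 values. The Python sketch below builds such rows; the window length, step, axis ordering, and function name are hypothetical.

```python
def make_windows(x, y, z, window=50, step=50):
    """Cut synchronized X/Y/Z streams into fixed-length windows.

    Each window is flattened into one row of 3 * window values, interleaving
    the axes per time step: x0, y0, z0, x1, y1, z1, ...
    Incomplete windows at the end of the stream are dropped.
    """
    if not (len(x) == len(y) == len(z)):
        raise ValueError("axis streams must have equal length")
    rows = []
    for start in range(0, len(x) - window + 1, step):
        row = []
        for i in range(start, start + window):
            row.extend((x[i], y[i], z[i]))
        rows.append(row)
    return rows


# Usage with a short synthetic stream of 120 samples per axis.
n = 120
xs = [float(i) for i in range(n)]
ys = [float(-i) for i in range(n)]
zs = [9.81] * n
matrix = make_windows(xs, ys, zs)
print(len(matrix), len(matrix[0]))
```

Using a `step` smaller than `window` would produce overlapping windows and therefore more rows from the same recording.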

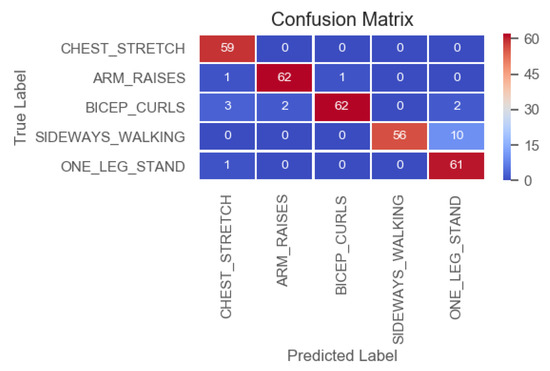

The results of the network are shown in Figure 13 and Figure 14. Figure 13 shows model accuracy and loss in the training phase.

Figure 13.

Model accuracy and loss.

Figure 14.

Confusion matrix.

Figure 14 shows the confusion matrix, which describes the correct predictions as well as the false positives and false negatives of our network. The information extracted from these graphs allowed us to determine that the model adequately recognized physical activities. The diagonal of this matrix shows the number of True Positives (TP) for each class, and the off-diagonal entries show the misclassifications, i.e., the False Negatives (FN) and False Positives (FP). Based on this matrix, our system obtained 59 TP for the first exercise, 62 TP for the second, 62 TP for the third, 56 TP for the fourth, and 61 TP for the fifth.
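Per-class figures such as the TP counts above can be read off a confusion matrix mechanically. The Python sketch below assumes the usual convention that rows are true classes and columns are predicted classes; because the off-diagonal counts of Figure 14 are not given in the text, the matrix in the usage example is a toy one, not our results.

```python
def per_class_metrics(cm):
    """Per-class TP/FP/FN, precision, and recall.

    cm[i][j] is the number of samples of true class i predicted as class j.
    """
    n = len(cm)
    results = []
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                       # class k predicted as something else
        fp = sum(cm[i][k] for i in range(n)) - tp  # other classes predicted as k
        results.append({
            "tp": tp, "fp": fp, "fn": fn,
            "precision": tp / (tp + fp) if tp + fp else 0.0,
            "recall": tp / (tp + fn) if tp + fn else 0.0,
        })
    return results


def accuracy(cm):
    """Fraction of all samples that lie on the diagonal."""
    total = sum(sum(row) for row in cm)
    return sum(cm[k][k] for k in range(len(cm))) / total if total else 0.0


toy = [[8, 2],
       [1, 9]]
for k, m in enumerate(per_class_metrics(toy)):
    print(k, m)
print(accuracy(toy))
```

Summing the five TP counts reported above gives 300 correctly classified samples; the overall accuracy would be that number divided by the total number of samples in the matrix, which the text does not state.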

3.2.3. Information Display

Currently, we are focused on extracting information from the available data and constructing reliable and accurate models that can be easily used in different scenarios. Nonetheless, users and caregivers are able to visualize the saved information in a simple web page. The objective is to build a multi-user/multi-level interface where the information is displayed in a personalized manner, showing different graphics to each type of user (the granularity of the information that should be displayed to the caregiver is very different from that displayed to the care receiver). Additionally, we will use the features of a previous project, iGenda [18,19,20], to manage the care receivers’ ADL and exercises intelligently, providing cognitive help by reminding the care receivers of events and giving them advice. The aim is to improve cognition by triggering actions that help the elders jog their memory, keeping them agile and active.

4. Conclusions

This paper presented the integration of non-invasive bio-signal monitoring for the detection and classification of human emotional states and physical activities using a low-cost, easy-to-deploy sensor system. The developed system was used to acquire data for models capable of detecting and classifying body movements and inferring the emotions that these exercises generated in patients. Therefore, it was possible to build a system that produced complex results at minimal cost.

This is an advancement of the current state of the art due to the combination of several software features on just two simple low-cost devices. As stated in Section 2, other projects work in this domain, but they tend to rely on off-the-shelf hardware and thus have only limited access to data that have already been filtered, whereas we had total control of the data. Finally, most projects use expensive solutions, which may be a barrier for many elderly people, while we used significantly less expensive solutions that may also shorten the time to market.

The proposed approach was partially validated by patients and workers of the daycare center Centro Social Irmandade de São Torcato. The validation consisted of patients performing simple exercises under the supervision of caregivers. Future work will focus on new tests with a larger number of users and on the complete version of the visual interface. These new tests will allow us to use the information obtained to improve our learning models for a better recognition of the different activities and tasks performed by the patients. We will also focus our future research on determining how accurately the patient performs each exercise, giving caregivers greater confidence when making corrective decisions.

Author Contributions

Conceptualization, A.C., J.A.R., V.J., P.N., C.C.; methodology, A.C., J.A.R., V.J., P.N., C.C.; software, A.C., J.A.R., V.J., P.N., C.C.; investigation, A.C., J.A.R., V.J., P.N., C.C.; writing, original draft preparation, A.C., J.A.R., V.J., P.N., C.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Acknowledgments

This work was partly supported by the FCT (Fundação para a Ciência e a Tecnologia) through the Post-Doc scholarship SFRH/BPD/102696/2014 (A. Costa), by the Generalitat Valenciana (PROMETEO/2018/002), and by the Spanish Government (RTI2018-095390-B-C31).

Conflicts of Interest

The authors declare no conflict of interest.

References

- United Nations, Department of Economic and Social Affairs, Population Division. World Population Prospects 2019; Volume I: Comprehensive Tables; United Nations: New York, NY, USA, 2019. [Google Scholar]

- Licher, S.; Darweesh, S.K.L.; Wolters, F.J.; Fani, L.; Heshmatollah, A.; Mutlu, U.; Koudstaal, P.J.; Heeringa, J.; Leening, M.J.G.; Ikram, M.K.; et al. Lifetime risk of common neurological diseases in the elderly population. J. Neurol. Neurosurg. Psychiatry 2018, 90, 148–156. [Google Scholar] [CrossRef] [PubMed]

- Jaul, E.; Barron, J. Age-Related Diseases and Clinical and Public Health Implications for the 85 Years Old and Over Population. Front. Public Health 2017, 5, 335.

- Brasure, M.; Desai, P.; Davila, H.; Nelson, V.A.; Calvert, C.; Jutkowitz, E.; Butler, M.; Fink, H.A.; Ratner, E.; Hemmy, L.S.; et al. Physical Activity Interventions in Preventing Cognitive Decline and Alzheimer-Type Dementia. Ann. Intern. Med. 2017, 168, 30.

- Iuliano, E.; di Cagno, A.; Cristofano, A.; Angiolillo, A.; D’Aversa, R.; Ciccotelli, S.; Corbi, G.; Fiorilli, G.; Calcagno, G.; Costanzo, A.D. Physical exercise for prevention of dementia (EPD) study: Background, design and methods. BMC Public Health 2019, 19, 659.

- Müllers, P.; Taubert, M.; Müller, N.G. Physical Exercise as Personalized Medicine for Dementia Prevention? Front. Physiol. 2019, 10.

- Del Carmen Pérez-Fuentes, M.; Linares, J.J.G.; Fernández, M.D.R.; del Mar Molero Jurado, M. Inventory of Overburden in Alzheimer’s Patient Family Caregivers with no Specialized Training. Int. J. Clin. Health Psychol. 2017, 17, 56–64.

- Berglund, E.; Lytsy, P.; Westerling, R. Health and wellbeing in informal caregivers and non-caregivers: A comparative cross-sectional study of the Swedish general population. Health Qual. Life Outcomes 2015, 13, 109.

- Peña-Longobardo, L.M.; Oliva-Moreno, J. Caregiver Burden in Alzheimer’s Disease Patients in Spain. J. Alzheimer’s Dis. 2014, 43, 1293–1302.

- Hoefman, R.J.; Meulenkamp, T.M.; Jong, J.D.D. Who is responsible for providing care? Investigating the role of care tasks and past experiences in a cross-sectional survey in the Netherlands. BMC Health Serv. Res. 2017, 17.

- Pearson, C.F.; Quinn, C.C.; Loganathan, S.; Datta, A.R.; Mace, B.B.; Grabowski, D.C. The Forgotten Middle: Many Middle-Income Seniors Will Have Insufficient Resources for Housing and Health Care. Health Aff. 2019, 38.

- Rincon, J.A.; Costa, A.; Novais, P.; Julian, V.; Carrascosa, C. Intelligent Wristbands for the Automatic Detection of Emotional States for the Elderly. In Intelligent Data Engineering and Automated Learning—IDEAL 2018; Springer International Publishing: Cham, Switzerland, 2018; pp. 520–530.

- Costa, A.; Novais, P.; Julian, V.; Nalepa, G.J. Cognitive assistants. Int. J. Hum.-Comput. Stud. 2018, 117, 1–3.

- Martinez-Martin, E.; del Pobil, A.P. Personal Robot Assistants for Elderly Care: An Overview. In Intelligent Systems Reference Library; Springer International Publishing: Cham, Switzerland, 2017; pp. 77–91.

- Costa, A.; Martinez-Martin, E.; Cazorla, M.; Julian, V. PHAROS—PHysical Assistant RObot System. Sensors 2018, 18, 2633.

- Castillo, J.C.; Álvarez-Fernández, D.; Alonso-Martín, F.; Marques-Villarroya, S.; Salichs, M.A. Social Robotics in Therapy of Apraxia of Speech. J. Healthc. Eng. 2018, 2018, 1–11.

- CoME. 2019. Available online: http://come-aal.eu/ (accessed on 12 June 2019).

- Costa, A.; Rincon, J.A.; Carrascosa, C.; Novais, P.; Julian, V. Activities suggestion based on emotions in AAL environments. Artif. Intell. Med. 2018, 86, 9–19.

- Costa, A.; Novais, P.; Simoes, R. A caregiver support platform within the scope of an ambient assisted living ecosystem. Sensors 2014, 14, 5654–5676.

- Costa, Â.; Heras, S.; Palanca, J.; Jordán, J.; Novais, P.; Julian, V. Using Argumentation Schemes for a Persuasive Cognitive Assistant System. In Multi-Agent Systems and Agreement Technologies; Springer International Publishing: Cham, Switzerland, 2017; pp. 538–546.

- Wang, J.; Chen, Y.; Hao, S.; Peng, X.; Hu, L. Deep learning for sensor-based activity recognition: A survey. Pattern Recognit. Lett. 2019, 119, 3–11.

- Nweke, H.F.; Teh, Y.W.; Al-garadi, M.A.; Alo, U.R. Deep learning algorithms for human activity recognition using mobile and wearable sensor networks: State of the art and research challenges. Expert Syst. Appl. 2018, 105, 233–261.

- Martinez-Martin, E.; Cazorla, M. A Socially Assistive Robot for Elderly Exercise Promotion. IEEE Access 2019, 7, 75515–75529.

- Martinez-Martin, E.; Cazorla, M. Rehabilitation Technology: Assistance from Hospital to Home. Comput. Intell. Neurosci. 2019, 2019, 1–8.

- Cruz, E.; Escalona, F.; Bauer, Z.; Cazorla, M.; García-Rodríguez, J.; Martinez-Martin, E.; Rangel, J.C.; Gomez-Donoso, F. Geoffrey: An Automated Schedule System on a Social Robot for the Intellectually Challenged. Comput. Intell. Neurosci. 2018, 2018, 1–17.

- Vepakomma, P.; De, D.; Das, S.K.; Bhansali, S. A-Wristocracy: Deep learning on wrist-worn sensing for recognition of user complex activities. In Proceedings of the 2015 IEEE 12th International Conference on Wearable and Implantable Body Sensor Networks (BSN), Cambridge, MA, USA, 9–12 June 2015; IEEE: Piscataway, NJ, USA, 2015.

- Cao, L.; Wang, Y.; Zhang, B.; Jin, Q.; Vasilakos, A.V. GCHAR: An efficient Group-based Context-aware human activity recognition on smartphone. J. Parallel Distrib. Comput. 2018, 118, 67–80.

- Marechal, C.; Mikołajewski, D.; Tyburek, K.; Prokopowicz, P.; Bougueroua, L.; Ancourt, C.; Wegrzyn-Wolska, K. Survey on AI-Based Multimodal Methods for Emotion Detection. In Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2019; pp. 307–324.

- Garcia-Garcia, J.M.; Penichet, V.M.R.; Lozano, M.D. Emotion detection. In Proceedings of the XVIII International Conference on Human Computer Interaction (Interacción’17), Cancun, Mexico, September 2017; ACM Press: New York, NY, USA, 2017.

- Brás, S.; Ferreira, J.H.T.; Soares, S.C.; Pinho, A.J. Biometric and Emotion Identification: An ECG Compression Based Method. Front. Psychol. 2018, 9.

- Goshvarpour, A.; Abbasi, A.; Goshvarpour, A. An accurate emotion recognition system using ECG and GSR signals and matching pursuit method. Biomed. J. 2017, 40, 355–368.

- Naji, M.; Firoozabadi, M.; Azadfallah, P. A new information fusion approach for recognition of music-induced emotions. In Proceedings of the IEEE-EMBS International Conference on Biomedical and Health Informatics (BHI), Chicago, IL, USA, 19–22 May 2019; IEEE: Piscataway, NJ, USA, 2014.

- Naji, M.; Firoozabadi, M.; Azadfallah, P. Emotion classification during music listening from forehead biosignals. Signal Image Video Process. 2015, 9, 1365–1375.

- Seoane, F.; Mohino-Herranz, I.; Ferreira, J.; Alvarez, L.; Buendia, R.; Ayllón, D.; Llerena, C.; Gil-Pita, R. Wearable Biomedical Measurement Systems for Assessment of Mental Stress of Combatants in Real Time. Sensors 2014, 14, 7120–7141.

- Diaz, K.M.; Krupka, D.J.; Chang, M.J.; Peacock, J.; Ma, Y.; Goldsmith, J.; Schwartz, J.E.; Davidson, K.W. Fitbit®: An accurate and reliable device for wireless physical activity tracking. Int. J. Cardiol. 2015, 185, 138–140.

- Falter, M.; Budts, W.; Goetschalckx, K.; Cornelissen, V.; Buys, R. Accuracy of Apple Watch Measurements for Heart Rate and Energy Expenditure in Patients with Cardiovascular Disease: Cross-Sectional Study. JMIR mHealth uHealth 2019, 7, e11889.

- Rincon, J.A.; Julian, V.; Carrascosa, C.; Costa, A.; Novais, P. Detecting emotions through non-invasive wearables. Log. J. IGPL 2018, 26, 605–617.

- Porcu, S.; Uhrig, S.; Voigt-Antons, J.N.; Möller, S.; Atzori, L. Emotional Impact of Video Quality: Self-Assessment and Facial Expression Recognition. In Proceedings of the 2019 Eleventh International Conference on Quality of Multimedia Experience (QoMEX), Berlin, Germany, 5–7 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–6.

- Tsonos, D.; Kouroupetroglou, G. A methodology for the extraction of reader’s emotional state triggered from text typography. In Tools in Artificial Intelligence; IntechOpen: London, UK, 2008.

- Rincon, J.A.; Costa, A.; Carrascosa, C.; Novais, P.; Julian, V. EMERALD—Exercise Monitoring Emotional Assistant. Sensors 2019, 19, 1953.

- Kannus, P.; Sievänen, H.; Palvanen, M.; Järvinen, T.; Parkkari, J. Prevention of falls and consequent injuries in elderly people. Lancet 2005, 366, 1885–1893.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).