Using Recurrent Neural Network to Optimize Electronic Nose System with Dimensionality Reduction

Abstract

1. Introduction

2. Related Work

2.1. The Related Applications

2.2. The Inherent Technologies

3. LLE-Based Dimensionality Reduction

3.1. Graph Construction

3.2. Weight Determination

3.3. Data Mapping

4. RNN-Based Pattern Recognition

4.1. LSTM-Based RNN

4.2. GA-Based Weight Assignment

5. Results

5.1. Dataset Collection

5.2. Experiment Method

5.3. RNN Performance Analysis

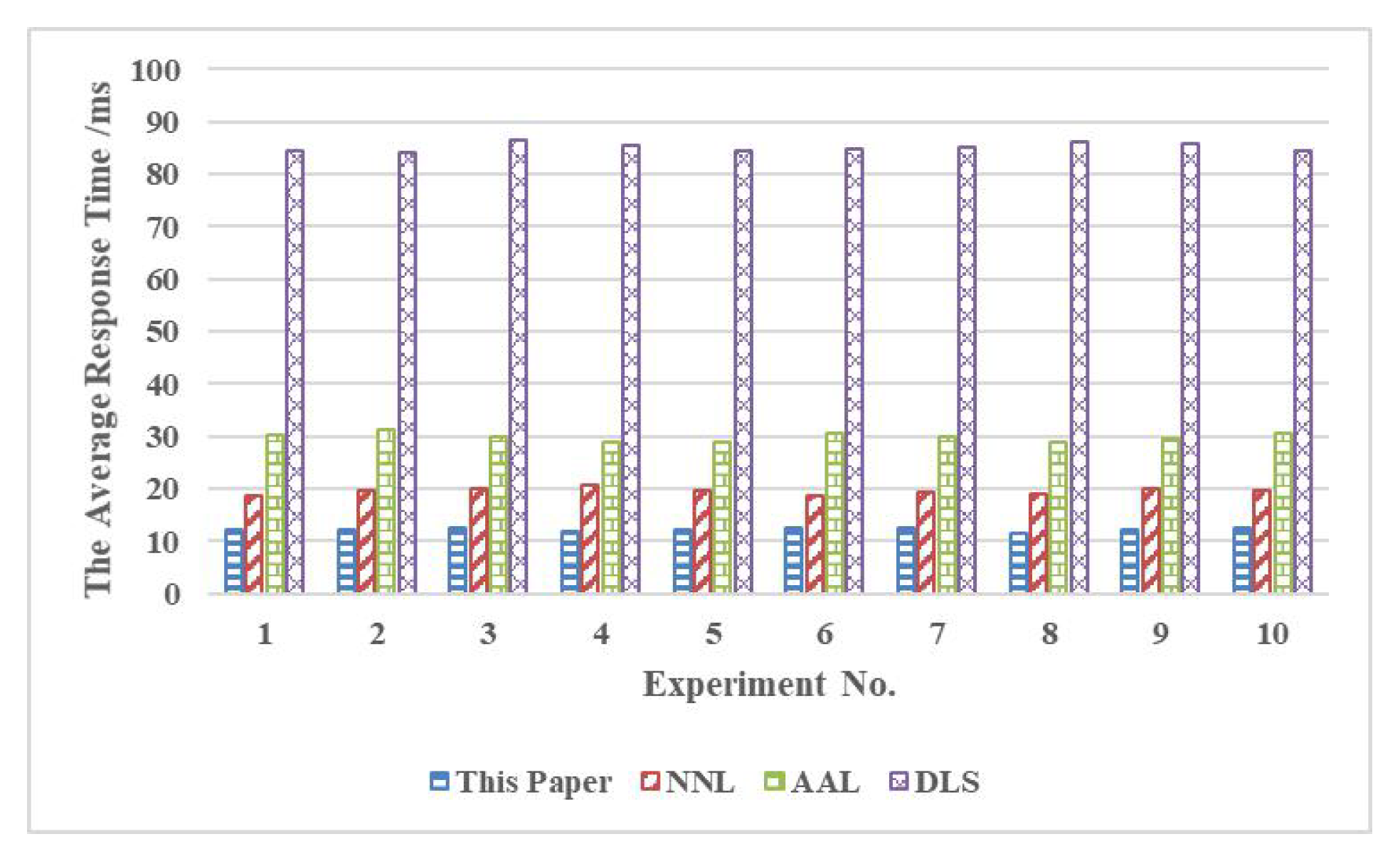

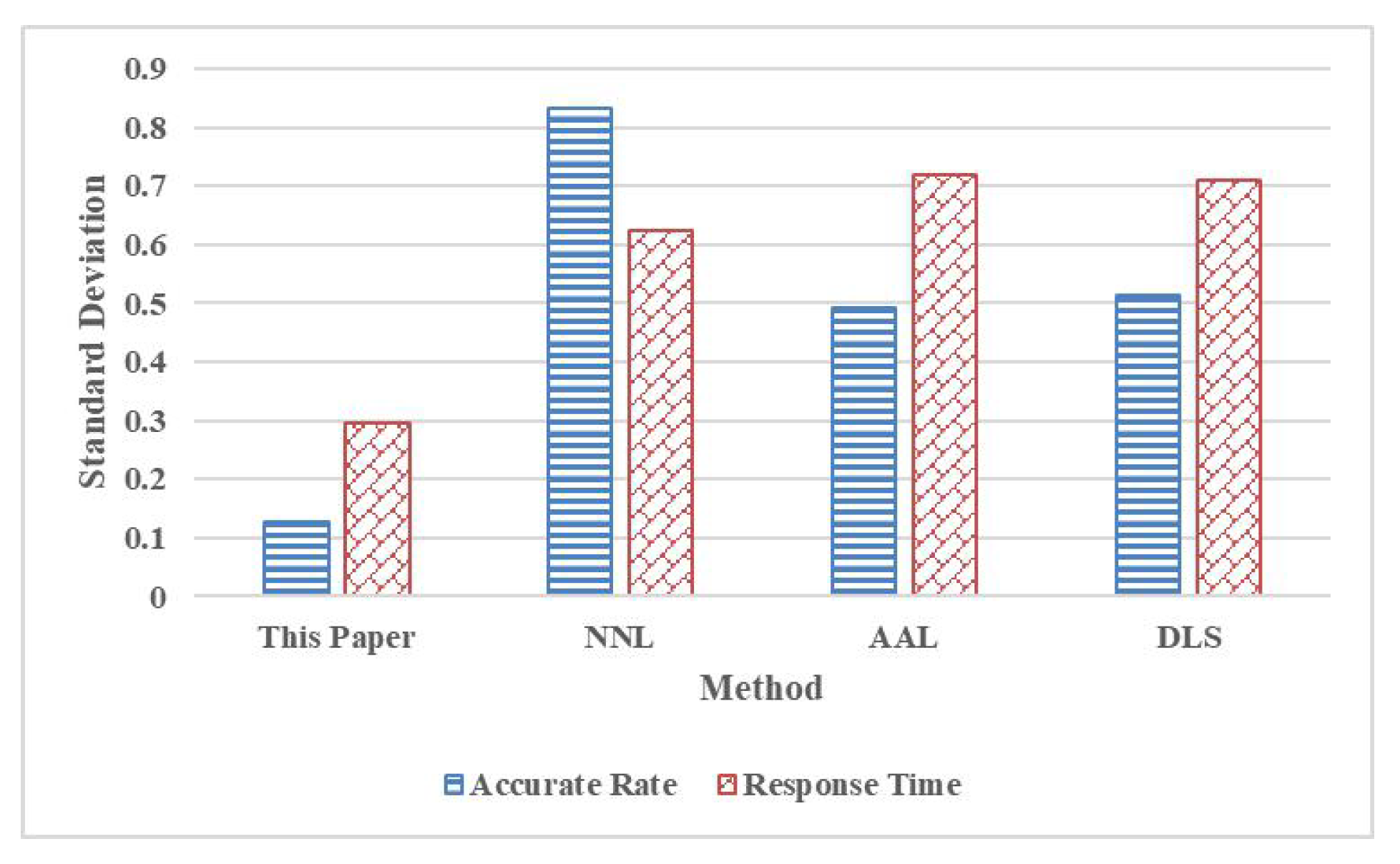

5.4. Comparison Analysis

5.5. Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| NN | Neural Network |
| ANN | Artificial NN |
| CNN | Convolutional NN |
| DNN | Deep NN |
| GNN | Graph NN |
| RNN | Recurrent Neural Network |
| LLE | Locally Linear Embedding |
| AI | Artificial Intelligence |
| PCA | Principal Component Analysis |
| LDA | Linear Discriminant Analysis |
| LE | Laplacian Eigenmaps |
| t-SNE | t-Distributed Stochastic Neighbor Embedding |
| GA | Genetic Algorithm |
| SVM | Support Vector Machine |
| KNN | K-Nearest Neighbor |
| LSTM | Long Short-Term Memory |
| NNL | Natural Neural Learning |
| AAL | Adaptive Active Learning |
| DLS | Deep Learning models and SVMs |


| Batch | Ammonia | Benzene | Carbon Monoxide | Formaldehyde | Nitrogen Dioxide | Toluene | Total |
|---|---|---|---|---|---|---|---|
| Master | 60 | 72 | 58 | 126 | 38 | 66 | 420 |
| Slave 1 | 81 | 108 | 98 | 108 | 107 | 106 | 608 |
| Slave 2 | 84 | 87 | 95 | 108 | 108 | 94 | 576 |
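As a quick consistency check on the sample counts above, the following minimal sketch recomputes the Total column and the per-gas sums. The counts are copied from the table; the names `GASES`, `counts`, `reported_totals`, `batch_totals`, and `gas_totals` are illustrative and do not come from the article.

```python
# Sample counts per batch, copied from the table above.
# All identifiers here are illustrative, not taken from the article.
GASES = ["Ammonia", "Benzene", "Carbon Monoxide",
         "Formaldehyde", "Nitrogen Dioxide", "Toluene"]

counts = {
    "Master":  [60, 72, 58, 126, 38, 66],
    "Slave 1": [81, 108, 98, 108, 107, 106],
    "Slave 2": [84, 87, 95, 108, 108, 94],
}

# Values from the Total column of the table.
reported_totals = {"Master": 420, "Slave 1": 608, "Slave 2": 576}


def batch_totals(table):
    """Sum the six gas counts for each batch."""
    return {batch: sum(row) for batch, row in table.items()}


def gas_totals(table):
    """Sum each gas column across all batches."""
    return {gas: sum(row[i] for row in table.values())
            for i, gas in enumerate(GASES)}


totals = batch_totals(counts)
print("row totals match the table:", totals == reported_totals)
print("samples per gas:", gas_totals(counts))
print("samples overall:", sum(totals.values()))
```

The per-gas sums make the class imbalance visible: formaldehyde has the most samples in the Master batch, and nitrogen dioxide the fewest.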

| Batch | Ammonia | Benzene | Carbon Monoxide | Formaldehyde | Nitrogen Dioxide | Toluene |
|---|---|---|---|---|---|---|
| Master | 98.26 | 97.38 | 98.29 | 97.68 | 98.16 | 98.22 |
| Slave 1 | 95.87 | 96.19 | 97.46 | 96.89 | 97.37 | 96.95 |
| Slave 2 | 96.76 | 97.13 | 96.55 | 97.61 | 97.08 | 98.33 |

| Batch | Ammonia | Benzene | Carbon Monoxide | Formaldehyde | Nitrogen Dioxide | Toluene |
|---|---|---|---|---|---|---|
| Master | 96.97 | 97.83 | 96.09 | 97.86 | 97.66 | 96.58 |
| Slave 1 | 96.75 | 95.28 | 98.12 | 99.03 | 97.64 | 98.59 |
| Slave 2 | 97.38 | 97.19 | 96.28 | 96.79 | 98.32 | 98.76 |

| Batch | Ammonia | Benzene | Carbon Monoxide | Formaldehyde | Nitrogen Dioxide | Toluene |
|---|---|---|---|---|---|---|
| Master | 97.61 | 97.60 | 97.18 | 97.77 | 97.91 | 97.39 |
| Slave 1 | 96.31 | 95.73 | 97.79 | 97.95 | 97.50 | 97.76 |
| Slave 2 | 97.07 | 97.16 | 96.41 | 97.20 | 97.70 | 98.54 |
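The captions of the three percentage tables above did not survive, so their labels are unknown here. Numerically, each entry of the third table matches the harmonic mean of the corresponding entries in the first two, rounded to two decimals. That pattern is what an F1 score computed from precision and recall would produce, but reading the tables that way (and deciding which of the first two is which) is an assumption, not something the text confirms. A minimal sketch of the check, with illustrative names (`first`, `second`, `third`, `harmonic_mean`, `max_deviation`):

```python
# Percentage tables copied from above, in order of appearance.
# Column order: Ammonia, Benzene, Carbon Monoxide, Formaldehyde,
# Nitrogen Dioxide, Toluene. All identifiers are illustrative.
first = {
    "Master":  [98.26, 97.38, 98.29, 97.68, 98.16, 98.22],
    "Slave 1": [95.87, 96.19, 97.46, 96.89, 97.37, 96.95],
    "Slave 2": [96.76, 97.13, 96.55, 97.61, 97.08, 98.33],
}
second = {
    "Master":  [96.97, 97.83, 96.09, 97.86, 97.66, 96.58],
    "Slave 1": [96.75, 95.28, 98.12, 99.03, 97.64, 98.59],
    "Slave 2": [97.38, 97.19, 96.28, 96.79, 98.32, 98.76],
}
third = {
    "Master":  [97.61, 97.60, 97.18, 97.77, 97.91, 97.39],
    "Slave 1": [96.31, 95.73, 97.79, 97.95, 97.50, 97.76],
    "Slave 2": [97.07, 97.16, 96.41, 97.20, 97.70, 98.54],
}


def harmonic_mean(a, b):
    """Harmonic mean of two positive numbers (the F1 formula)."""
    return 2 * a * b / (a + b)


def max_deviation(t1, t2, t3):
    """Largest absolute gap between t3 and the harmonic mean of t1 and t2."""
    return max(
        abs(harmonic_mean(a, b) - c)
        for batch in t1
        for a, b, c in zip(t1[batch], t2[batch], t3[batch])
    )


# A gap below 0.005 means every cell agrees after rounding to two decimals.
print("largest gap:", max_deviation(first, second, third))
```

The arithmetic mean does not fit as well: for the Master batch and carbon monoxide it gives 97.19, while the table reports 97.18.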

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zou, Y.; Lv, J. Using Recurrent Neural Network to Optimize Electronic Nose System with Dimensionality Reduction. Electronics 2020, 9, 2205. https://doi.org/10.3390/electronics9122205

Zou Y, Lv J. Using Recurrent Neural Network to Optimize Electronic Nose System with Dimensionality Reduction. Electronics. 2020; 9(12):2205. https://doi.org/10.3390/electronics9122205

Chicago/Turabian Style: Zou, Yanan, and Jianhui Lv. 2020. "Using Recurrent Neural Network to Optimize Electronic Nose System with Dimensionality Reduction" Electronics 9, no. 12: 2205. https://doi.org/10.3390/electronics9122205

APA Style: Zou, Y., & Lv, J. (2020). Using Recurrent Neural Network to Optimize Electronic Nose System with Dimensionality Reduction. Electronics, 9(12), 2205. https://doi.org/10.3390/electronics9122205