Optical Flow Filtering-Based Micro-Expression Recognition Method

Abstract

1. Introduction

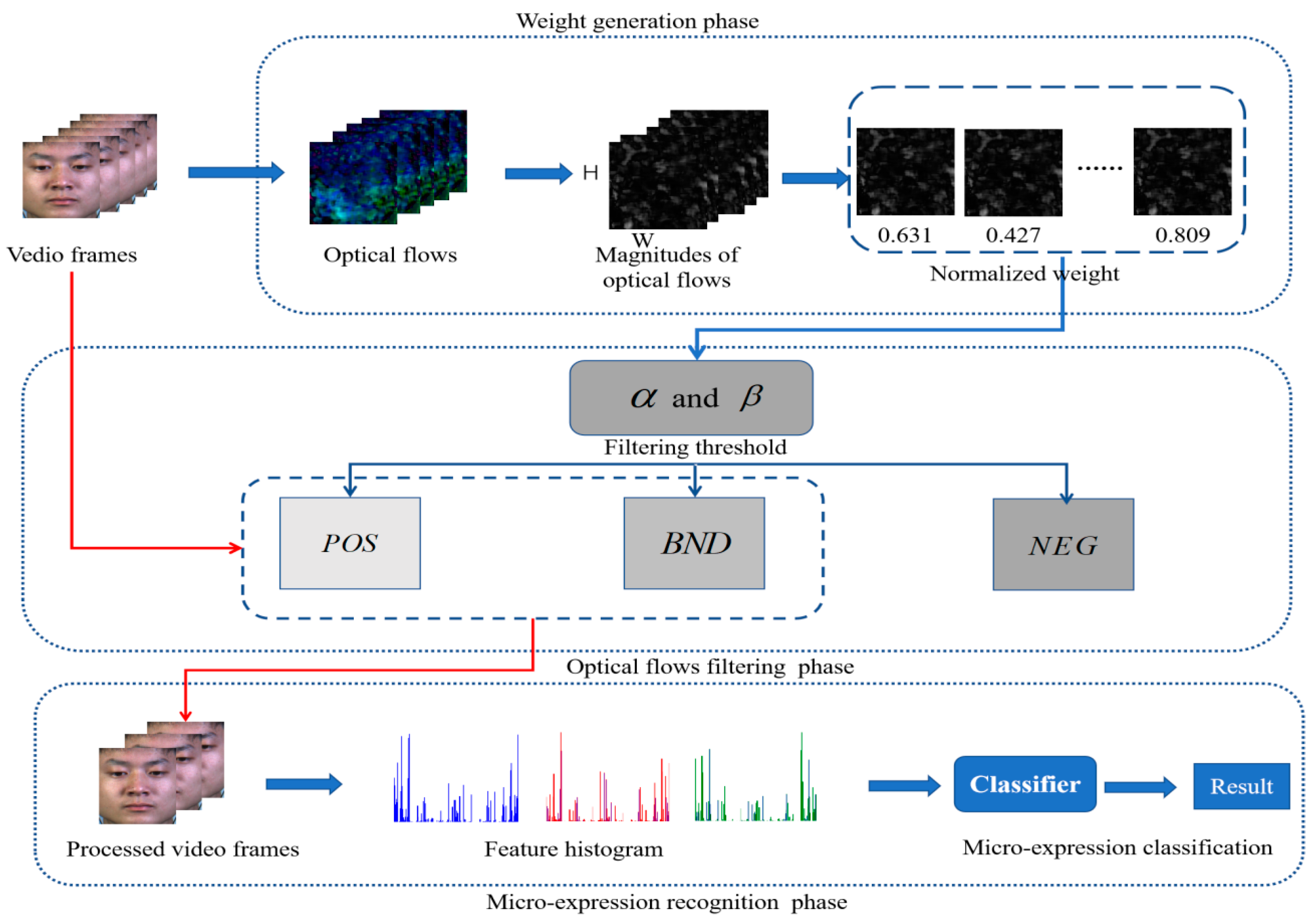

- From the perspective of morphological change, we propose a novel optical-flow-based image weighting method that assigns a semantic quality to the images in video clips;

- We propose two optical flow filtering algorithms, based on two-branch decisions and three-way decisions respectively, together with a micro-expression recognition framework, which establishes an interpretable and robust theory of micro-expression recognition;

- Experimental comparison with state-of-the-art solutions demonstrates the superiority of the method in both effectiveness and efficiency.

2. Related Works

3. Proposed Methods

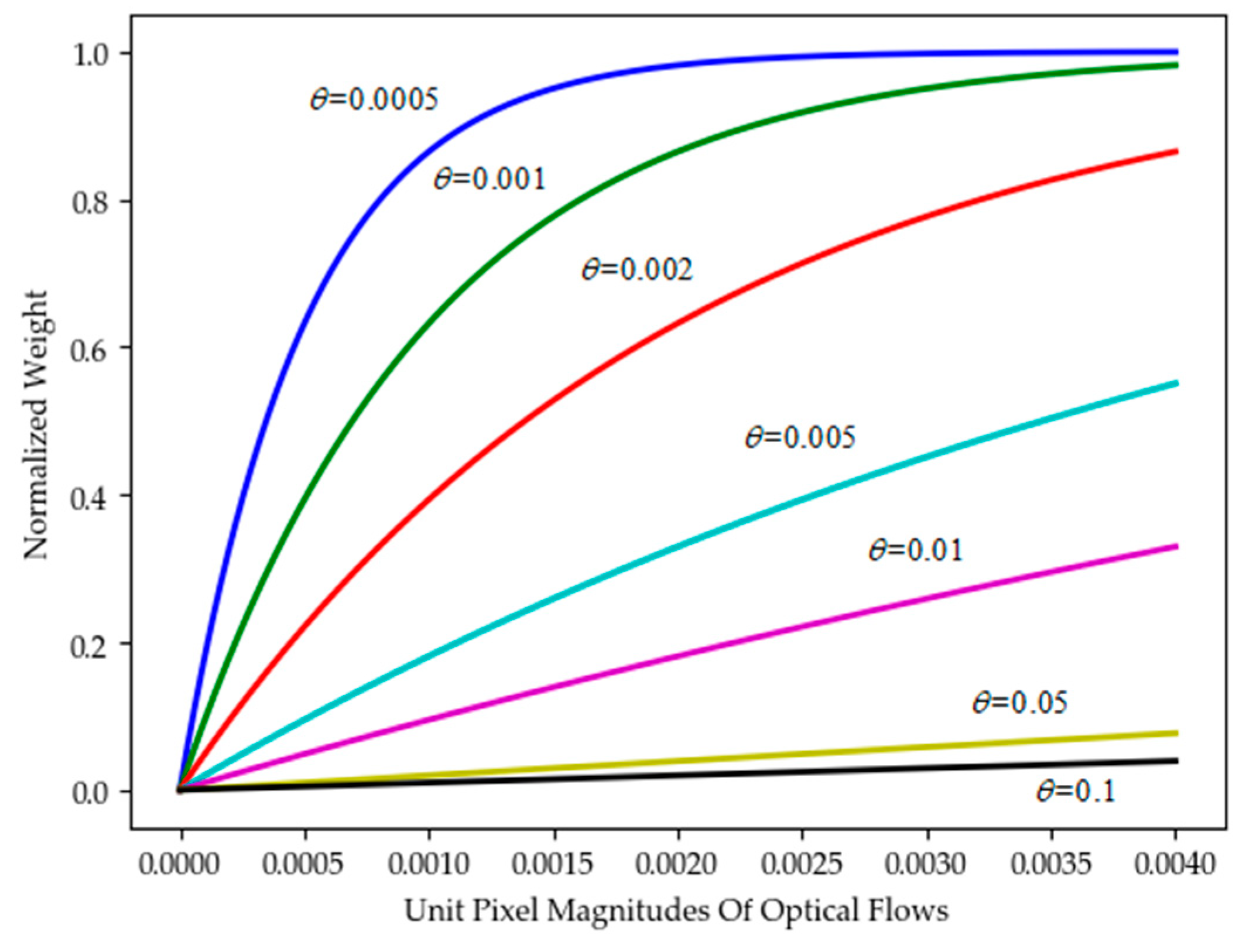

3.1. Weight Generation Phase

3.2. Optical Flows Filtering Phase

3.2.1. Optical Flows Filtering Algorithm Based on Two-Branch Decisions

Algorithm 1 Optical Flows Filtering Algorithm Based on Two-Branch Decisions (OFF2BD)

Input: video frames = {}, threshold δ

Output: High-quality semantic image set after optical flow filtering

1. For /*Get initial optical flow set */;
2. For ;
3. Generate optical flow for and ;
4. End for
5. End for
6. Obtain optical flow set ;
7. For /*Two-branch decisions iteration for optical flow in */;
8. Calculate the average optical flow motion intensity of optical flow w.r.t. Formulae (2) and (3);
9. Calculate the optical flow weight based on the average optical flow motion intensity w.r.t. Formula (4);
10. If then;
11. put into ;
12. Else;
13. put into ;
14. End if;
15. End for;
16. Delete the optical flows set of and update the optical flow set with ;
17. Update video set according to the updated optical flow set ;
18. End for;
19. Output high-quality semantic image set
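The two-branch decision in steps 7 to 15 can be illustrated with a minimal Python sketch. Formulae (2) to (4) are not reproduced in this text, so the sketch makes its own assumptions: the average motion intensity is taken as the mean vector magnitude of a flow field, the weight is a min-max normalisation of that intensity, and flows whose weight reaches the threshold are the ones kept. The function names (`mean_intensity`, `flow_weights`, `two_branch_filter`) are hypothetical, flow generation is skipped, and only a single filtering pass is shown.

```python
from math import hypot


def mean_intensity(flow):
    """Average magnitude of the (u, v) vectors of one optical flow field.

    Assumption: stands in for Formulae (2) and (3), which are not shown here.
    """
    return sum(hypot(u, v) for u, v in flow) / len(flow)


def flow_weights(flows):
    """Min-max normalise the mean intensities to [0, 1].

    Assumption: stands in for Formula (4); the paper's weighting may differ.
    """
    intensities = [mean_intensity(f) for f in flows]
    lo, hi = min(intensities), max(intensities)
    if hi == lo:  # all flows equally intense: give them equal weight
        return [1.0] * len(flows)
    return [(x - lo) / (hi - lo) for x in intensities]


def two_branch_filter(flows, delta):
    """Split flow indices into (kept, dropped) by comparing weights with delta."""
    weights = flow_weights(flows)
    kept = [i for i, w in enumerate(weights) if w >= delta]
    dropped = [i for i, w in enumerate(weights) if w < delta]
    return kept, dropped


# Toy flow fields, each a list of (u, v) displacement vectors.
example_flows = [
    [(0.0, 0.0), (0.0, 0.0)],
    [(3.0, 4.0), (3.0, 4.0)],
    [(6.0, 8.0), (6.0, 8.0)],
]
kept, dropped = two_branch_filter(example_flows, delta=0.5)
```

The frames associated with the kept indices would then form the filtered image set; how flows map back to frames depends on how the flow set was generated, which this sketch leaves open.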

3.2.2. Optical Flows Filtering Algorithm Based on Three-Way Decisions

Algorithm 2 Optical Flows Filtering Algorithm Based on Three-Way Decisions (OFF3WD)

Input: video frames ={ }, threshold , iteration coefficient ;

Output: High-quality semantic image set after optical flow filtering.

1. For /*Filtering iteration for video frames in set */;
2. For /*Get initial optical flow set */;
3. For ;
4. Generate optical flow for and ;
5. End for
6. End for
7. Obtain optical flow set ;
8. For /*Three-way decision iteration for optical flow in */;
9. Calculate the average optical flow motion intensity of optical flow w.r.t. Formulae (2) and (3);
10. Calculate the optical flow weight based on the average optical flow motion intensity w.r.t. Formula (4);
11. If ≥ then;
12. put into ;
13. If then;
14. put into ;
15. Else;
16. put into ;
17. End if;
18. When then; /*Reach the convergence condition*/
19. Combine and to update ;
20. Break;
21. Increase the threshold naturally and delete the optical flows set of ;
22. Combine and to update ;
23. Update video set according to the updated optical flow set ;
24. End for;
25. End for;
26. Obtain the video frame set according to the completed iteration condition or the converged optical flow set ;
27. Output high-quality semantic image set .
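The three-way decision loop can be sketched in the same spirit. Because the thresholds and the convergence condition are elided in the listing above, everything specific here is an assumption: an upper threshold `alpha` and a lower threshold `beta` split the weights into accept, defer, and reject regions; rejected flows are deleted each round; the lower threshold is raised by `step`; and the loop stops early when a round rejects nothing. The weights are precomputed and held fixed, which may differ from the paper. All names are hypothetical.

```python
def three_way_split(weights, alpha, beta):
    """Partition positions into accept / defer / reject regions by weight."""
    accept, defer, reject = [], [], []
    for i, w in enumerate(weights):
        if w >= alpha:
            accept.append(i)      # clearly informative flow
        elif w <= beta:
            reject.append(i)      # clearly uninformative flow
        else:
            defer.append(i)       # boundary region: decide in a later round
    return accept, defer, reject


def three_way_filter(weights, alpha, beta, step, max_rounds):
    """Iteratively drop rejected flows, raising the lower threshold each round.

    Returns the indices (into `weights`) of the flows that survive.
    """
    active = list(range(len(weights)))
    for _ in range(max_rounds):
        accept, defer, reject = three_way_split(
            [weights[i] for i in active], alpha, beta
        )
        if not reject:            # assumed convergence: nothing left to remove
            break
        # Combine the accept and defer regions to form the updated flow set.
        active = [active[j] for j in sorted(accept + defer)]
        beta = min(beta + step, alpha)
    return active


example_weights = [0.1, 0.3, 0.5, 0.9]
survivors = three_way_filter(example_weights, alpha=0.8, beta=0.2, step=0.2, max_rounds=2)
```

Capping the lower threshold at `alpha` keeps the three regions well defined; with enough rounds only the accepted flows remain, so the round limit controls how aggressively the boundary region is pruned.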

3.3. Micro-Expression Recognition Phase

3.3.1. Feature Extraction

3.3.2. Micro-Expression Recognition

4. Experiments and Discussion

4.1. Datasets

4.2. Experimental Setting

- Sensitivity of the parameter to normalization function .

- Sensitivity of the threshold to OFF2BD.

- Sensitivity of the threshold to OFF3WD.

- Comparison of performance scores between the refined algorithms (OFF2BD-LBPTOP, OFF3WD-LBPTOP, OFF2BD-LBPSIP, OFF3WD-LBPSIP, OFF2BD-STCLQP, OFF3WD-STCLQP, OFF2BD-STLBPIP, OFF3WD-STLBPIP) and the original algorithms (LBPTOP, LBPSIP, STCLQP, STLBPIP).

- The confusion matrices of recognition accuracy for each micro-expression on the CASME II and SMIC datasets.
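The accuracy and F1-score reported in these experiments can both be derived from a confusion matrix. The sketch below is illustrative only: it assumes rows are true classes and columns are predicted classes, and it assumes the F1-score is macro-averaged over classes, which the text does not state. The function names and the toy matrix are hypothetical.

```python
def accuracy(cm):
    """Fraction of samples on the diagonal of a square confusion matrix."""
    total = sum(sum(row) for row in cm)
    correct = sum(cm[k][k] for k in range(len(cm)))
    return correct / total


def macro_f1(cm):
    """Unweighted mean of per-class F1 = 2TP / (2TP + FP + FN).

    Assumption: rows are true classes, columns are predicted classes.
    """
    n = len(cm)
    scores = []
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                       # true k, predicted otherwise
        fp = sum(cm[r][k] for r in range(n)) - tp  # predicted k, true otherwise
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / n


# Toy two-class confusion matrix (not data from the paper).
toy_cm = [[5, 1],
          [2, 4]]
toy_acc = accuracy(toy_cm)
toy_f1 = macro_f1(toy_cm)
```

Macro averaging weights every emotion class equally, which matters on imbalanced micro-expression datasets where accuracy alone can be dominated by the largest class.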

4.3. Experiments and Analysis

4.3.1. Sensitivity of the Threshold to OFF2BD

4.3.2. Sensitivity of the Threshold to OFF3WD

4.3.3. Comparison with the State-of-the-Art Methods

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Ekman, P.; Friesen, W.V. Nonverbal leakage and clues to deception. Psychiatry Interpers. Biol. Process. 1969, 32, 88–106.
2. Ekman, R. What the Face Reveals: Basic and Applied Studies of Spontaneous Expression Using the Facial Action Coding System (FACS); Oxford University Press: New York, NY, USA, 1997.
3. Li, X.; Pfister, T.; Huang, X.; Zhao, G.; Pietikäinen, M. A spontaneous micro-expression database: Inducement, collection and baseline. In Proceedings of the 2013 10th IEEE International Conference and Workshops on Automatic Face and Gesture Recognition (FG), Shanghai, China, 22–26 April 2013; pp. 1–6.
4. Yan, W.J.; Li, X.; Wang, S.J.; Zhao, G.; Liu, Y.J.; Chen, Y.H.; Fu, X. CASME II: An improved spontaneous micro-expression database and the baseline evaluation. PLoS ONE 2014, 9, e86041.
5. Davison, A.K.; Lansley, C.; Costen, N.; Tan, K.; Yap, M.H. SAMM: A spontaneous micro-facial movement dataset. IEEE Trans. Affect. Comput. 2016, 9, 116–129.
6. Qu, F.; Wang, S.J.; Yan, W.J.; Li, H.; Wu, S.; Fu, X. CAS(ME)²: A Database for Spontaneous Macro-Expression and Micro-Expression Spotting and Recognition. IEEE Trans. Affect. Comput. 2017, 9, 424–436.
7. Pfister, T.; Li, X.B.; Zhao, G.Y.; Pietikäinen, M. Recognising spontaneous facial micro-expressions. In Proceedings of the 2011 IEEE International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 1449–1456.
8. Wang, S.J.; Yan, W.J.; Zhao, G.; Fu, X.; Zhou, C.G. Micro-Expression Recognition Using Robust Principal Component Analysis and Local Spatiotemporal Directional Features. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2014; pp. 325–338.
9. Wu, Q.; Shen, X.; Fu, X. The machine knows what you are hiding: An automatic micro-expression recognition system. In International Conference on Affective Computing and Intelligent Interaction; Springer: Berlin/Heidelberg, Germany, 2011; pp. 152–162.
10. Khor, H.Q.; See, J.; Phan, R.C.W.; Lin, W. Enriched long-term recurrent convolutional network for facial micro-expression recognition. In Proceedings of the 2018 13th IEEE International Conference on Automatic Face and Gesture Recognition (FG 2018), Xi’an, China, 15–19 May 2018; pp. 667–674.
11. Wang, S.J.; Li, B.J.; Liu, Y.J.; Yan, W.J.; Ou, X.; Huang, X.; Xu, F.; Fu, X. Micro-expression recognition with small sample size by transferring long-term convolutional neural network. Neurocomputing 2018, 312, 251–262.
12. Zhi, R.; Xu, H.; Wan, M.; Li, T. Combining 3D Convolutional Neural Networks with Transfer Learning by Supervised Pre-Training for Facial Micro-Expression Recognition. IEICE Trans. Inf. Syst. 2019, 102, 1054–1064.
13. Hill, A.; Cootes, T.F.; Taylor, C.J. Active shape models and the shape approximation problem. Image Vis. Comput. 1996, 14, 601–607.
14. Edwards, G.J.; Cootes, T.F.; Taylor, C.J. Advances in active appearance models. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Kerkyra, Greece, 20–25 September 1999; pp. 137–142.
15. Cristinacce, D.; Cootes, T. Automatic feature localisation with constrained local models. Pattern Recognit. 2008, 41, 3054–3067.
16. Yang, Z.; Leng, L.; Kim, B.G. StoolNet for Color Classification of Stool Medical Images. Electronics 2019, 8, 1464.
17. Leng, L.; Yang, Z.; Kim, C.; Zhang, Y. A Light-Weight Practical Framework for Feces Detection and Trait Recognition. Sensors 2020, 20, 2644.
18. Yang, Z.; Li, J.; Min, W.; Wang, Q. Real-Time Pre-Identification and Cascaded Detection for Tiny Faces. Appl. Sci. 2019, 9, 4344.
19. Zhang, K.; Zhang, Z.; Li, Z.; Qiao, Y. Joint face detection and alignment using multitask cascaded convolutional networks. IEEE Signal Process. Lett. 2016, 23, 1499–1503.
20. Sun, X.; Wu, P.; Hoi, S.C.H. Face detection using deep learning: An improved faster RCNN approach. Neurocomputing 2018, 299, 42–50.
21. Jiang, H.; Learned-Miller, E. Face Detection with the Faster R-CNN. In Proceedings of the 2017 12th IEEE International Conference on Automatic Face and Gesture Recognition (FG 2017), Washington, DC, USA, 30 May–3 June 2017; pp. 650–657.
22. Ojala, T.; Pietikäinen, M.; Maenpaa, T. Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 971–987.
23. Zhao, G.; Pietikäinen, M. Dynamic texture recognition using local binary patterns with an application to facial expressions. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 915–928.
24. Huang, X.; Wang, S.J.; Zhao, G.; Pietikäinen, M. Facial micro-expression recognition using spatiotemporal local binary pattern with integral projection. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Santiago, Chile, 11–18 December 2015; pp. 1–9.
25. Huang, X.; Zhao, G.; Hong, X.; Zheng, W.; Pietikäinen, M. Spontaneous facial micro-expression analysis using spatiotemporal completed local quantized patterns. Neurocomputing 2016, 175, 564–578.
26. Huang, X.; Zhao, G. Spontaneous facial micro-expression analysis using spatiotemporal local radon-based binary pattern. In Proceedings of the 2017 International Conference on the Frontiers and Advances in Data Science (FADS), Xi’an, China, 23–25 October 2017; pp. 159–164.
27. Guo, C.; Liang, J.; Zhan, G.; Liu, Z.; Pietikäinen, M.; Liu, L. Extended Local Binary Patterns for Efficient and Robust Spontaneous Facial Micro-Expression Recognition. IEEE Access 2019, 7, 174517–174530.
28. Wang, Y.; See, J.; Phan, W.; Oh, Y.H. LBP with Six Intersection Points: Reducing Redundant Information in LBP-TOP for Micro-expression Recognition. In Asian Conference on Computer Vision; Springer: Cham, Switzerland, 2014; pp. 525–537.
29. Guo, Y.; Xue, C.; Wang, Y.; Yu, M. Micro-expression recognition based on CBP-TOP feature with ELM. Optik 2015, 126, 4446–4451.
30. Jia, X.; Ben, X.; Yuan, H.; Kpalma, K.; Meng, W. Macro-to-micro transformation model for micro-expression recognition. J. Comput. Sci. 2018, 25, 289–297.
31. Yan, W.J.; Chen, Y.H. Measuring dynamic micro-expressions via feature extraction methods. J. Comput. Sci. 2018, 25, 318–326.
32. Wang, L.; Xiao, H.; Luo, S.; Zhang, J.; Liu, X. A weighted feature extraction method based on temporal accumulation of optical flow for micro-expression recognition. Signal Process. Image Commun. 2019, 78, 246–253.
33. Wang, S.J.; Yan, W.J.; Li, X.; Zhao, G.; Zhou, C.G.; Fu, X.; Yang, M.; Tao, J. Micro-expression recognition using color spaces. IEEE Trans. Image Process. 2015, 24, 6034–6047.
34. Liong, S.T.; See, J.; Wong, K.S.; Phan, C.W. Less is more: Micro-expression recognition from video using apex frame. Signal Process. Image Commun. 2018, 62, 82–92.
35. Li, Z.; Xie, N.; Huang, D.; Zhang, G. A three-way decision method in a hybrid decision information system and its application in medical diagnosis. Artif. Intell. Rev. 2020, 25, 1–30.
36. Liang, W.; Zhang, Y.; Xu, J.; Lin, D. Optimization of Basic Clustering for Ensemble Clustering: An Information-theoretic Perspective. IEEE Access 2019, 7, 179048–179062.
37. Happy, S.L.; Routray, A. Fuzzy Histogram of Optical Flow Orientations for Micro-Expression Recognition. IEEE Trans. Affect. Comput. 2019, 10, 394–406.
38. Xia, Z.; Peng, W.; Khor, H.Q.; Feng, X.; Zhao, G. Revealing the Invisible with Model and Data Shrinking for Composite-Database Micro-Expression Recognition. IEEE Trans. Image Process. 2020, 29, 8590–8605.
39. Lu, H.; Kpalma, K.; Ronsin, J. Motion descriptors for micro-expression recognition. Signal Process. Image Commun. 2018, 67, 108–117.
40. Xu, F.; Zhang, J.; Wang, J.Z. Micro-expression identification and categorization using a facial dynamics map. IEEE Trans. Affect. Comput. 2017, 8, 254–267.
41. Xia, Z.; Hong, X.; Gao, X.; Feng, X.; Zhao, G. Spatiotemporal Recurrent Convolutional Networks for Recognizing Spontaneous Micro-Expressions. IEEE Trans. Multimed. 2020, 22, 626–640.
42. Black, M.J.; Anandan, P. The Robust Estimation of Multiple Motions: Parametric and Piecewise-Smooth Flow Fields. Comput. Vis. Image Underst. 1996, 63, 75–104.
43. Xu, J.; Miao, D.; Zhang, Y.; Zhang, Z. A three-way decisions model with probabilistic rough sets for stream computing. Int. J. Approx. Reason. 2017, 88, 1–22.
44. Yao, Y.Y. An outline of a theory of three-way decisions. In Rough Sets and Current Trends in Computing; Springer: Berlin/Heidelberg, Germany, 2012; pp. 1–17.
45. Whitehill, J.; Littlewort, G.; Fasel, I.; Bartlett, M.; Movellan, J. Toward practical smile detection. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 31, 2106–2111.

| Methods | Description | Advantage |
|---|---|---|
| STCLQP [25] | Extracts sign, magnitude, and orientation component information and fuses them. | Works well for extracting the appearance and motion features of facial micro-expressions. |
| TAOF [32] | Calculates motion intensity by accumulating neighboring optical flow over a time interval, then weights the features locally. | Enhances the discrimination of the weighted features for micro-expression recognition. |
| BI-WOOF [34] | Introduces a new feature extractor that encodes the essential expressiveness of the apex frame. | Represents the apex frame in a discriminative manner that emphasizes facial motion information at both bin and block levels. |
| FHOFO [37] | Constructs suitable angular histograms from optical flow vector orientations and uses histogram fuzzification to encode the temporal pattern. | Insensitive to changes in illumination conditions among video clips; the extracted features are robust to variation in expression intensity. |
| RIMDS [38] | Explores a shallower architecture and lower-resolution input data, shrinking model and input complexity simultaneously for the composite database. | Achieves superior performance compared with other micro-expression recognition approaches. |
| FMBH [39] | Combines the horizontal and vertical components of the differential of optical flow, inspired by motion boundary histograms (MBH). | Removes unexpected motions caused by residual mis-registration between images cropped from different frames. |
| FDM [40] | Characterizes the movements of a micro-expression at different granularities. | Provides an effective and intuitive understanding of human facial expressions. |
| STRCN [41] | A deep recurrent convolutional network approach to micro-expression recognition that captures the spatiotemporal deformations of the micro-expression sequence. | Addresses the “limited and imbalanced training samples” problem and considers spatiotemporal deformations. |

| Feature | SMIC-HS | SMIC-VIS/NIR | CASME II |
|---|---|---|---|
| Subjects | 16 | 16 | 35 |
| Samples | 164 | 71 | 247 |
| Frames per second | 100 | 25 | 200 |
| Posed/spontaneous | Spontaneous | Spontaneous | Spontaneous |
| AU labels | No | No | Yes |
| Emotion classes | 3 (positive/negative/surprise) | 3 (positive/negative/surprise) | 5 (basic emotions) |
| Tagging | Emotion category | Emotion category | Emotion/FACS |

| | CASME II: Acc (%)/F1-Score | | | SMIC: Acc (%)/F1-Score | | |
|---|---|---|---|---|---|---|
| (1,1,2) | 43.43/0.34 | 46.88/0.38 | 45.82/0.38 | 49.42/0.48 | 50.48/0.50 | 45.58/0.45 |
| (1,1,3) | 45.27/0.36 | 47.04/0.39 | 46.98/0.39 | 48.62/0.48 | 49.28/0.49 | 47.85/0.48 |
| (1,1,4) | 46.91/0.39 | 47.65/0.41 | 48.24/0.42 | 47.57/0.47 | 46.04/0.45 | 43.25/0.42 |
| (2,2,2) | 45.14/0.36 | 45.32/0.37 | 46.24/0.39 | 43.61/0.43 | 47.32/0.46 | 49.54/0.51 |
| (2,2,3) | 45.43/0.33 | 44.92/0.32 | 47.15/0.40 | 47.76/0.49 | 51.83/0.51 | 44.38/0.47 |
| (2,2,4) | 42.15/0.32 | 46.31/0.38 | 44.31/0.37 | 45.17/0.43 | 49.55/0.47 | 43.47/0.45 |
| (3,3,2) | 43.47/0.35 | 43.28/0.34 | 45.69/0.37 | 46.04/0.47 | 44.71/0.42 | 47.85/0.49 |
| (3,3,3) | 46.38/0.37 | 46.93/0.39 | 43.74/0.35 | 46.41/0.48 | 42.52/0.41 | 46.12/0.48 |
| (3,3,4) | 47.22/0.41 | 45.82/0.35 | 45.49/0.36 | 45.79/0.44 | 43.45/0.45 | 44.91/0.44 |

| Method | CASME II Acc (%) | CASME II F1-Score | SMIC Acc (%) | SMIC F1-Score |
|---|---|---|---|---|
| LBPTOP (reproduced) | 48.24 | 0.42 | 51.83 | 0.51 |
| LBPSIP [28] | 49.73 | 0.47 | 47.42 | 0.46 |
| STCLQP [25] | 58.39 | 0.58 | 64.02 | 0.63 |
| STCLBP-IP [24] | 59.51 | - | 57.93 | - |
| BI-WOOF [34] | 58.46 | 0.60 | 61.29 | 0.61 |
| FHOFO [37] | 60.52 | 0.61 | 58.61 | 0.58 |
| OFF2BD-LBPTOP | 50.46 | 0.44 | 53.52 | 0.52 |
| OFF2BD-LBPSIP | 52.77 | 0.51 | 50.36 | 0.50 |
| OFF2BD-STCLQP | 60.87 | 0.62 | 64.84 | 0.63 |
| OFF2BD-STCLBP-IP | 61.57 | - | 59.18 | - |
| OFF3WD-LBPTOP | 51.68 | 0.46 | 54.35 | 0.54 |
| OFF3WD-LBPSIP | 53.45 | 0.51 | 51.57 | 0.52 |
| OFF3WD-STCLQP | 61.28 | 0.61 | 65.41 | 0.65 |
| OFF3WD-STCLBP-IP | 60.53 | - | 61.83 | - |
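To make the comparison easier to read, the CASME II accuracy gain of each filtered variant over its baseline can be computed directly from the table. The short Python sketch below copies the CASME II accuracy values from the table; the dictionary layout and the helper name `gains` are illustrative choices, not part of the original work.

```python
# CASME II accuracy (%) copied from the comparison table.
baseline_acc = {
    "LBPTOP": 48.24,
    "LBPSIP": 49.73,
    "STCLQP": 58.39,
    "STCLBP-IP": 59.51,
}
filtered_acc = {
    "OFF2BD": {"LBPTOP": 50.46, "LBPSIP": 52.77, "STCLQP": 60.87, "STCLBP-IP": 61.57},
    "OFF3WD": {"LBPTOP": 51.68, "LBPSIP": 53.45, "STCLQP": 61.28, "STCLBP-IP": 60.53},
}


def gains(filter_name):
    """Accuracy gain in percentage points of one filter over each baseline."""
    return {
        method: round(filtered_acc[filter_name][method] - base, 2)
        for method, base in baseline_acc.items()
    }


for name in filtered_acc:
    print(name, gains(name))
```

On these figures both filters improve every baseline on CASME II, with OFF3WD ahead of OFF2BD for all descriptors except STCLBP-IP.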


© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wu, J.; Xu, J.; Lin, D.; Tu, M. Optical Flow Filtering-Based Micro-Expression Recognition Method. Electronics 2020, 9, 2056. https://doi.org/10.3390/electronics9122056
