To evaluate the performance of the proposed DCT-CNN on HDR source forensics, we created several datasets with different types of HDR images and different sizes of image blocks. Performance is assessed by classification accuracy (Acc), the receiver operating characteristic (ROC) curve and the area under the curve (AUC), and is compared with six state-of-the-art forensics methods [19,20,21,22,24,27]. The classification accuracy (Acc) is defined as:

$$\mathrm{Acc} = \frac{TP + TN}{TP + TN + FP + FN}$$

where $TP$ (true positive) denotes an outcome in which the model correctly predicts the positive class, $TN$ (true negative) an outcome in which the model correctly predicts the negative class, $FP$ (false positive) an outcome in which the model incorrectly predicts the positive class, and $FN$ (false negative) an outcome in which the model incorrectly predicts the negative class. In the formula, each symbol stands for the number of test samples with that outcome. The ROC curve is a graphical plot that illustrates the diagnostic ability of a binary classifier as its discrimination threshold is varied, and the AUC is the area between the ROC curve and the horizontal (false positive rate) axis.
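The definitions above translate directly into code. The Python sketch below computes Acc from confusion counts, traces the ROC by sweeping the threshold over every distinct score, and integrates the curve with the trapezoidal rule to obtain the AUC. The function names and the toy labels and scores are hypothetical. This is one standard way to compute these quantities and is not necessarily the evaluation code used to produce the tables.

```python
from typing import List, Sequence, Tuple


def accuracy(tp: int, tn: int, fp: int, fn: int) -> float:
    """Acc = (TP + TN) / (TP + TN + FP + FN)."""
    return (tp + tn) / (tp + tn + fp + fn)


def roc_points(labels: Sequence[int], scores: Sequence[float]) -> List[Tuple[float, float]]:
    """Return (false positive rate, true positive rate) pairs obtained by
    sweeping the decision threshold from the highest score down to the lowest.

    labels: 1 for the positive class, 0 for the negative class.
    scores: classifier confidence that a sample belongs to the positive class.
    """
    pos = sum(1 for y in labels if y == 1)
    neg = len(labels) - pos
    points = [(0.0, 0.0)]  # threshold above every score: nothing predicted positive
    for t in sorted(set(scores), reverse=True):
        # A sample is predicted positive when its score is >= the threshold t.
        tp = sum(1 for y, s in zip(labels, scores) if s >= t and y == 1)
        fp = sum(1 for y, s in zip(labels, scores) if s >= t and y == 0)
        points.append((fp / neg, tp / pos))
    return points


def auc(points: List[Tuple[float, float]]) -> float:
    """Area under the ROC curve (down to the FPR axis) by the trapezoidal rule."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0
    return area


# Toy example (hypothetical labels and scores, not data from the paper).
labels = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
print(accuracy(tp=45, tn=40, fp=10, fn=5))  # 0.85
print(auc(roc_points(labels, scores)))      # approximately 0.889 (= 8/9)
```

A curve that hugs the upper-left corner (0, 1) gives an AUC close to 1, which is how the ROC comparison in Figure 5 is read.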

4.2. Forensics on Images without Anti-Forensics Attack

Table 3 summarizes, for all tested methods, the classification accuracy averaged over the test datasets with the smallest image size. The best results are marked in bold. Because small images contain less information related to forensics, experiments on them reveal how well each forensic method extracts features.

Table 3 indicates that HDR source forensics methods based on manually specified feature extraction perform worse than CNN-based methods, which learn features automatically. For instance, the highest classification accuracy of LHS is 88.59% on the M-A dataset, while the two CNN-based forensic methods reach 94.62% and 98.94%. Among the CNN-based methods, DCT-CNN, which operates in the frequency domain, outperforms HDR-CNN, which operates in the spatial domain. This result suggests that the decorrelation provided by the DCT helps the CNN extract the features most relevant to HDR source forensics. In this experiment, the proposed DCT-CNN performs best on all HDR datasets. Its classification accuracy is 10.35 percentage points higher than that of the manually specified feature extraction methods (98.94% versus 88.59% for LHS) and 4.32 percentage points higher than that of HDR-CNN, a CNN-based method built in the spatial domain (98.94% versus 94.62%). These results confirm that the proposed DCT-CNN, built in the DCT domain, achieves the desired forensic performance on small images. Table 3 also shows that the proposed DCT-CNN obtains the highest AUC of all methods on every dataset.
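The claim that DCT decorrelation helps the CNN can be illustrated with the transform's energy compaction, which is the usual practical sign of decorrelation. For a smooth, strongly correlated block, an orthonormal 2-D DCT preserves the total energy but concentrates almost all of it in a few low-frequency coefficients. The sketch below builds the DCT-II matrix in NumPy. The 8 × 8 ramp block is an illustrative assumption and is not an HDR image from the datasets.

```python
import numpy as np


def dct_matrix(n: int) -> np.ndarray:
    """Orthonormal DCT-II matrix C: C @ v is the 1-D DCT of a length-n vector v."""
    k = np.arange(n)[:, None]  # frequency index (rows)
    i = np.arange(n)[None, :]  # sample index (columns)
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    c[0, :] = np.sqrt(1.0 / n)  # the DC row uses the smaller normalisation
    return c


def dct2(block: np.ndarray) -> np.ndarray:
    """Separable 2-D DCT-II of a square block: C X C^T."""
    c = dct_matrix(block.shape[0])
    return c @ block @ c.T


# A smooth, strongly correlated 8 x 8 block: sum of a vertical and a horizontal ramp.
x = np.add.outer(np.linspace(0.0, 1.0, 8), np.linspace(0.0, 0.5, 8))
coeffs = dct2(x)

energy = coeffs ** 2
# The transform is orthonormal, so total energy is preserved (Parseval) ...
print(np.isclose(energy.sum(), (x ** 2).sum()))
# ... but it is concentrated in a handful of low-frequency coefficients.
print(energy[:2, :2].sum() / energy.sum())
```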

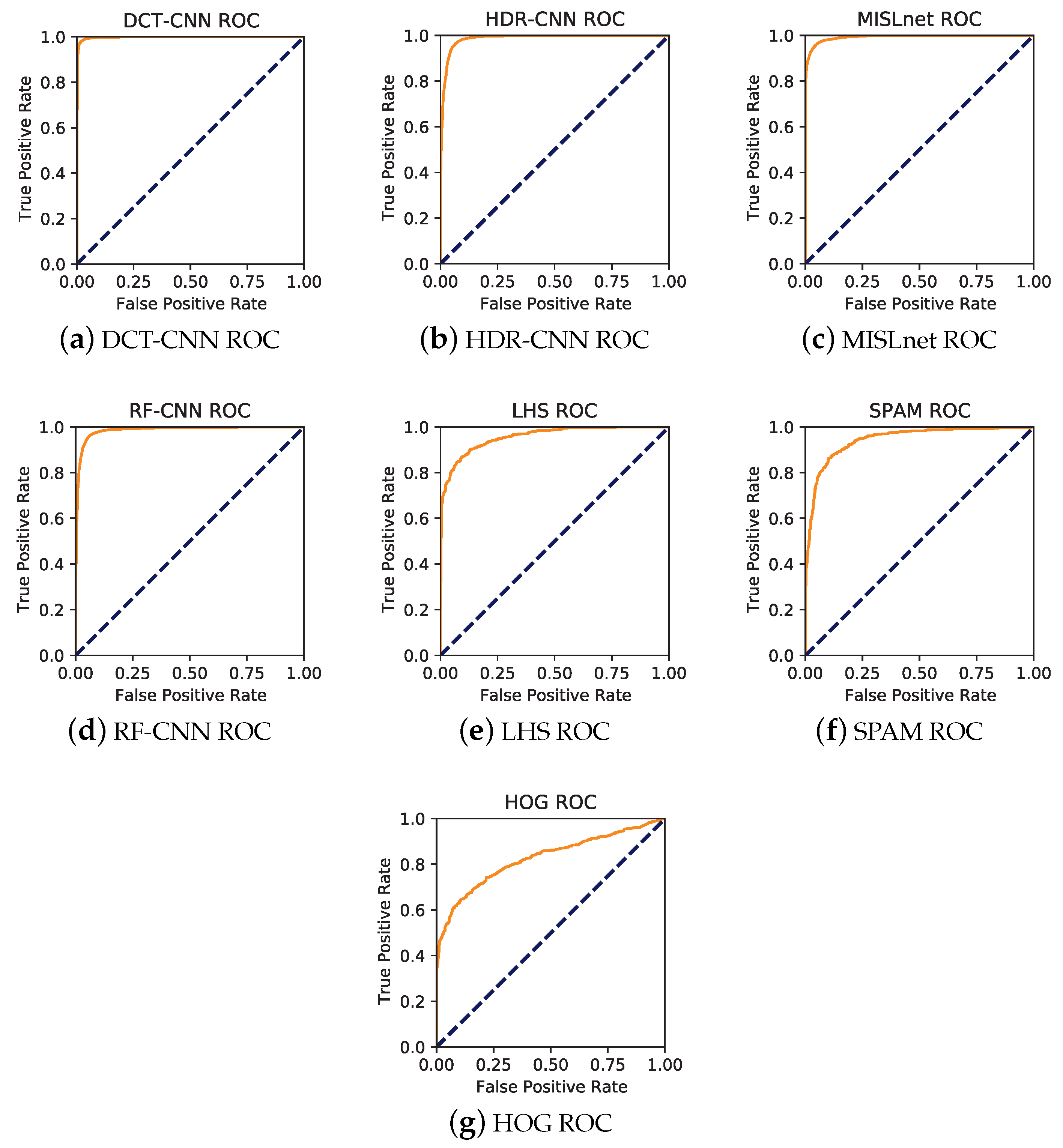

Figure 5 shows the ROC curves of the different methods. The curve of the proposed DCT-CNN lies closer to the point (0, 1), which indicates that DCT-CNN achieves better forensic performance than the other methods.

Table 4 summarizes, for all tested methods, the classification accuracy and AUC averaged over the test datasets with the intermediate image size. Compared with the results on the smaller images in Table 3, the accuracy of both the CNN-based forensics methods and the manually specified feature extraction methods improves to some extent. For example, LHS reaches 93.15% on the M-A dataset at this image size, compared with 88.59% on the smaller images. The accuracy of HDR-CNN also improves by 2.92–4.74% over its results on the smaller images. Because the proposed method already achieves high forensic accuracy on the smaller images, the accuracy of DCT-CNN increases by only 0.09–0.49% at this size. In this experiment, the proposed DCT-CNN again achieves the highest classification accuracy on all four datasets. It also achieves the highest AUC on every dataset, which confirms its forensic performance from another perspective.

Table 5 lists the classification accuracy and AUC averaged over the test datasets with the largest image size. A larger image contains more information related to forensics. Table 5 shows that both the manually specified feature extraction methods and the CNN-based forensics methods achieve higher classification accuracy at this size than on the smaller images in Table 3 and Table 4. In this experiment, the proposed DCT-CNN again achieves the highest classification accuracy and the highest AUC.

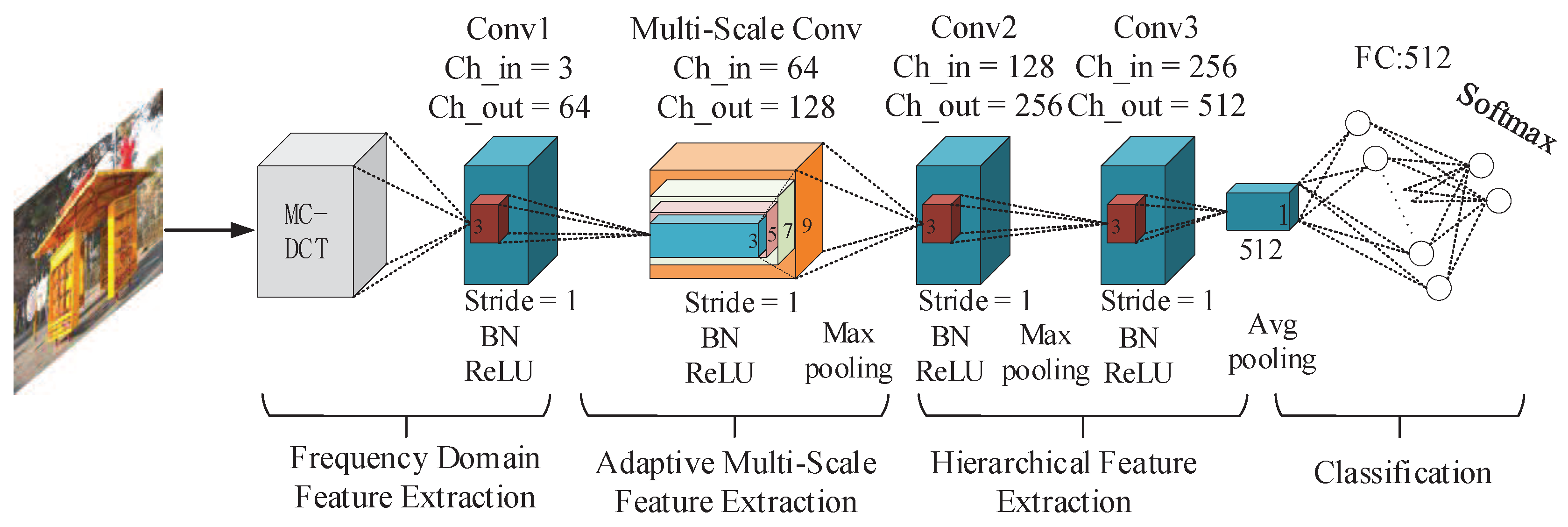

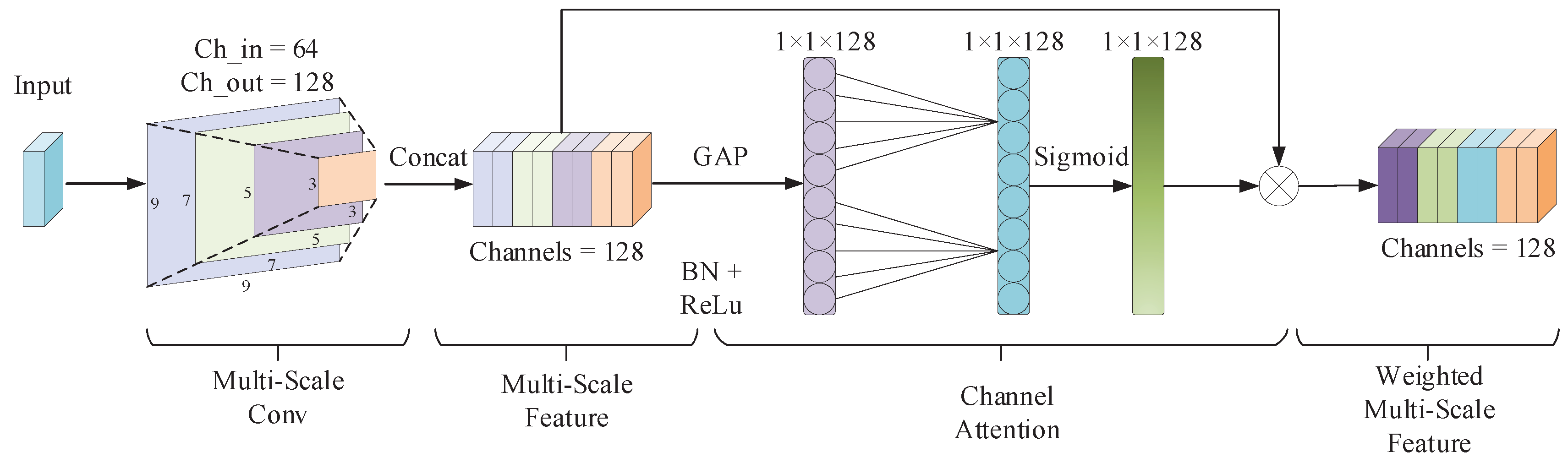

The experimental results show that larger images contain more information related to HDR source forensics. Note that RF-CNN and the proposed DCT-CNN are the two methods that operate in the DCT domain. The proposed DCT-CNN uses a multi-channel DCT to avoid information loss and an adaptive multi-scale module to extract multi-scale features, which gives it better forensic performance than RF-CNN.
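The section does not specify how the multi-channel DCT is constructed. One common way to present DCT coefficients to a CNN without discarding any of them is to take an 8 × 8 block DCT and regroup the coefficients so that each frequency forms its own channel. The sketch below does this for a single colour channel. The function names, the 8 × 8 block size and the channel layout are assumptions for illustration and may differ from the actual design of DCT-CNN.

```python
import numpy as np


def dct_matrix(n: int) -> np.ndarray:
    """Orthonormal DCT-II matrix C: C @ v is the 1-D DCT of a length-n vector v."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    c[0, :] = np.sqrt(1.0 / n)
    return c


def block_dct_channels(img: np.ndarray, b: int = 8) -> np.ndarray:
    """Hypothetical multi-channel DCT: tile a single-channel (H, W) image into
    non-overlapping b x b blocks, take the 2-D DCT of every block, and regroup
    the coefficients so that each of the b*b frequencies becomes one channel.

    Returns an array of shape (b*b, H // b, W // b); no coefficient is discarded.
    """
    h, w = img.shape
    if h % b or w % b:
        raise ValueError("image height and width must be multiples of the block size")
    c = dct_matrix(b)
    # (H, W) -> (H//b, b, W//b, b) -> (H//b, W//b, b, b): one block per grid cell
    blocks = img.reshape(h // b, b, w // b, b).transpose(0, 2, 1, 3)
    # C X C^T for every block at once; matmul broadcasts over the grid axes
    coeffs = c @ blocks @ c.T
    # frequency (u, v) of the block at grid cell (r, s) -> channel u*b + v at (r, s)
    return coeffs.reshape(h // b, w // b, b * b).transpose(2, 0, 1)


rng = np.random.default_rng(0)
img = rng.random((16, 24))          # stand-in for one colour channel of an image block
channels = block_dct_channels(img)
print(channels.shape)               # (64, 2, 3)
```

For an RGB HDR block, the function would be applied to each colour channel and the results concatenated along the channel axis. This is also an assumption. Because the transform is orthonormal, the channel stack is an invertible, lossless re-arrangement of the pixels.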

Table 3, Table 4 and Table 5 together show that the proposed method is not sensitive to image size. High classification accuracy and AUC are achieved even on low-resolution images, which demonstrates the robustness of DCT-CNN with respect to image size. In addition, the forensics methods built in the spatial domain perform less consistently across dataset types. For instance, with the smallest image size, HDR-CNN achieves accuracies between 90.49% and 94.62% on the different dataset types, a fluctuation of 4.13 percentage points. The fluctuation of SPAM, LHS and HOG across dataset types is 3.26–5.56 percentage points. In contrast, the accuracy of the proposed DCT-CNN fluctuates by less than 1 percentage point across dataset types, which indicates that DCT-CNN is robust to, and adapts well across, different HDR image types.
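Here, "fluctuation" is read as the gap between a method's best and worst accuracy over the dataset types $d$. This reading is an interpretation, but it is consistent with the HDR-CNN figures quoted above:

$$\Delta_{\text{HDR-CNN}} = \max_{d}\,\mathrm{Acc}_d - \min_{d}\,\mathrm{Acc}_d = 94.62\% - 90.49\% = 4.13\ \text{percentage points}$$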

4.3. Forensics on Images under Anti-Forensics Attack

Image anti-forensics comprises techniques that aim to make forensics algorithms fail by modifying images in a visually imperceptible way. Anti-forensics attacks invalidate forensics methods or degrade their performance, and in this experiment they are used to test the robustness of the forensics methods. Median filtering changes the distribution of image pixel values while preserving image content. For this reason, it is often used as an anti-forensics attack, and it is important to study how robust forensics methods are under median filtering. The median filter replaces each pixel by the median of all pixels in a neighborhood $w$:

$$y(m, n) = \operatorname{median}\{\, x(i, j) : (i, j) \in w \,\}$$

where $x$ is the input image, $y$ is the filtered image, and $w$ is a neighborhood centered on location $(m, n)$ in the image. We therefore chose median filtering as the anti-forensics attack for verifying the robustness of the forensics methods.

In this experiment, the image size is fixed to the size used for Table 3. Median filtering with two kernel sizes, 3 × 3 (MF3) and 5 × 5 (MF5), was applied to all HDR images, and the experiments were repeated on these post-processed datasets to verify the robustness of the HDR source forensics methods. The experimental results are shown in Table 6 and Table 7.

Comparing Table 6 with Table 3 shows that the performance of all forensics methods decreases under the median filtering attack, and that the accuracy of the methods built in the spatial domain decreases significantly. For instance, LHS performs best among the manually specified feature extraction methods, yet its accuracy on the M-A dataset drops by 7.85% relative to the accuracy without an attack. The CNN-based method HDR-CNN also drops by 8.13% on the M-K dataset. Under the median filtering attack, the proposed DCT-CNN still achieves the highest accuracy among all methods on all four datasets, which demonstrates that DCT-CNN is robust against median filtering attacks.

Comparing Table 6 and Table 7 shows that median filtering with a 5 × 5 kernel has a greater impact on the performance of the forensics methods than median filtering with a 3 × 3 kernel. Under this more intense attack, the proposed method still achieves the highest accuracy and the highest AUC on all datasets. Relative to the results under 3 × 3 median filtering, the accuracy of the forensics methods built in the spatial domain fluctuates by 2–11%, whereas that of DCT-CNN fluctuates by only 0.5–2.29%. This shows that the proposed DCT-CNN is highly robust to median filtering attacks.