Optimal Feature Search for Vigilance Estimation Using Deep Reinforcement Learning

Abstract

1. Introduction

Related Work

2. Material and Methods

2.1. Experiments of Vigilance Task

- Preparation: A subject was seated in front of a screen and equipped with EEG and ECG electrodes.

- Reaction time task: The subject was asked to press a push-button switch whenever a stimulus (a 3 × 3 mm black square) appeared on the screen. Each stimulus was presented for 1 s, and the interval between stimuli was random, ranging from 5 to 15 s.
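The timing of the reaction time task can be sketched in a few lines of Python. This is only an illustration of the protocol described above, not the authors' stimulus software: the function names, the uniform distribution of the interval, and the choice to measure the interval from the end of one stimulus to the start of the next are all assumptions.

```python
import random


def stimulus_schedule(n_stimuli, seed=None, min_gap=5.0, max_gap=15.0, duration=1.0):
    """Return a list of (onset, offset) times in seconds.

    Assumptions (not stated in the text): the gap is drawn uniformly from
    [min_gap, max_gap] and is measured from the previous stimulus offset.
    """
    rng = random.Random(seed)
    schedule = []
    t = 0.0
    for _ in range(n_stimuli):
        t += rng.uniform(min_gap, max_gap)  # random wait before the stimulus
        onset, offset = t, t + duration     # stimulus stays on screen for 1 s
        schedule.append((onset, offset))
        t = offset
    return schedule


def reaction_time(onset, press_time):
    """Reaction time in seconds: button press time minus stimulus onset."""
    return press_time - onset


if __name__ == "__main__":
    for onset, offset in stimulus_schedule(3, seed=0):
        print(f"stimulus on at {onset:.2f} s, off at {offset:.2f} s")
```

A longer reaction time, or a missed stimulus, would then be taken as a sign of lower vigilance; how the paper thresholds these values is not specified here.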

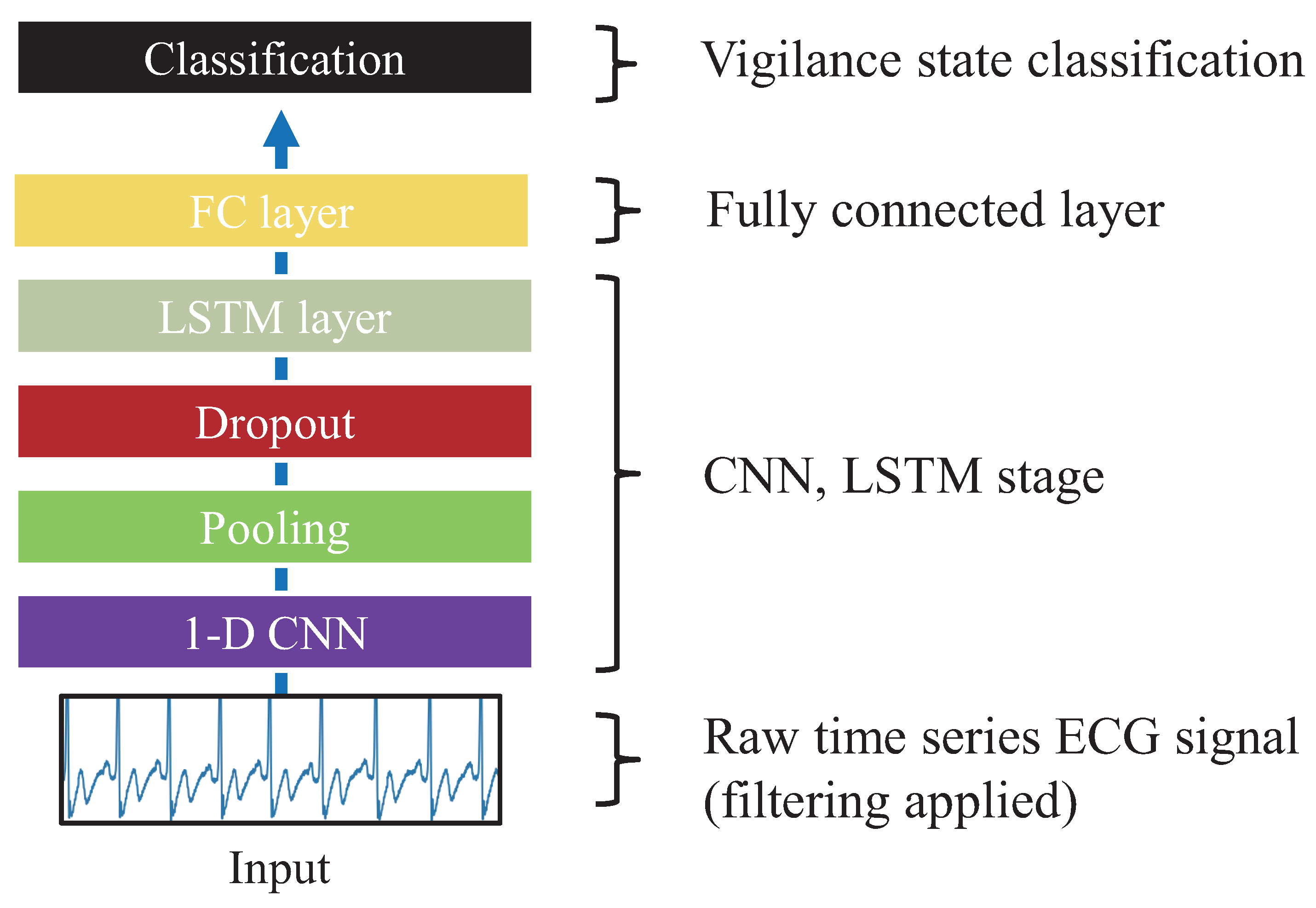

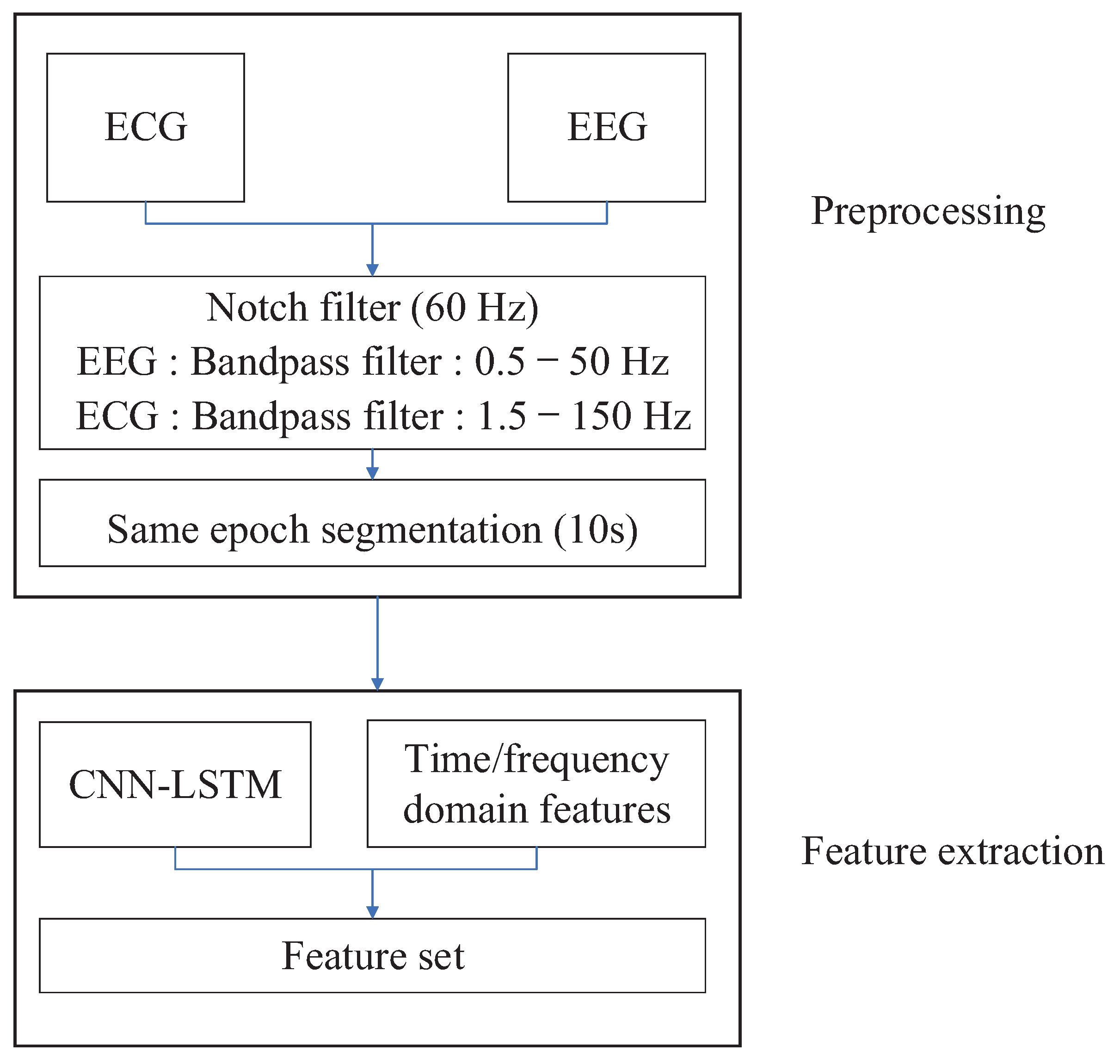

2.2. Feature Extraction for Assessment of Vigilance

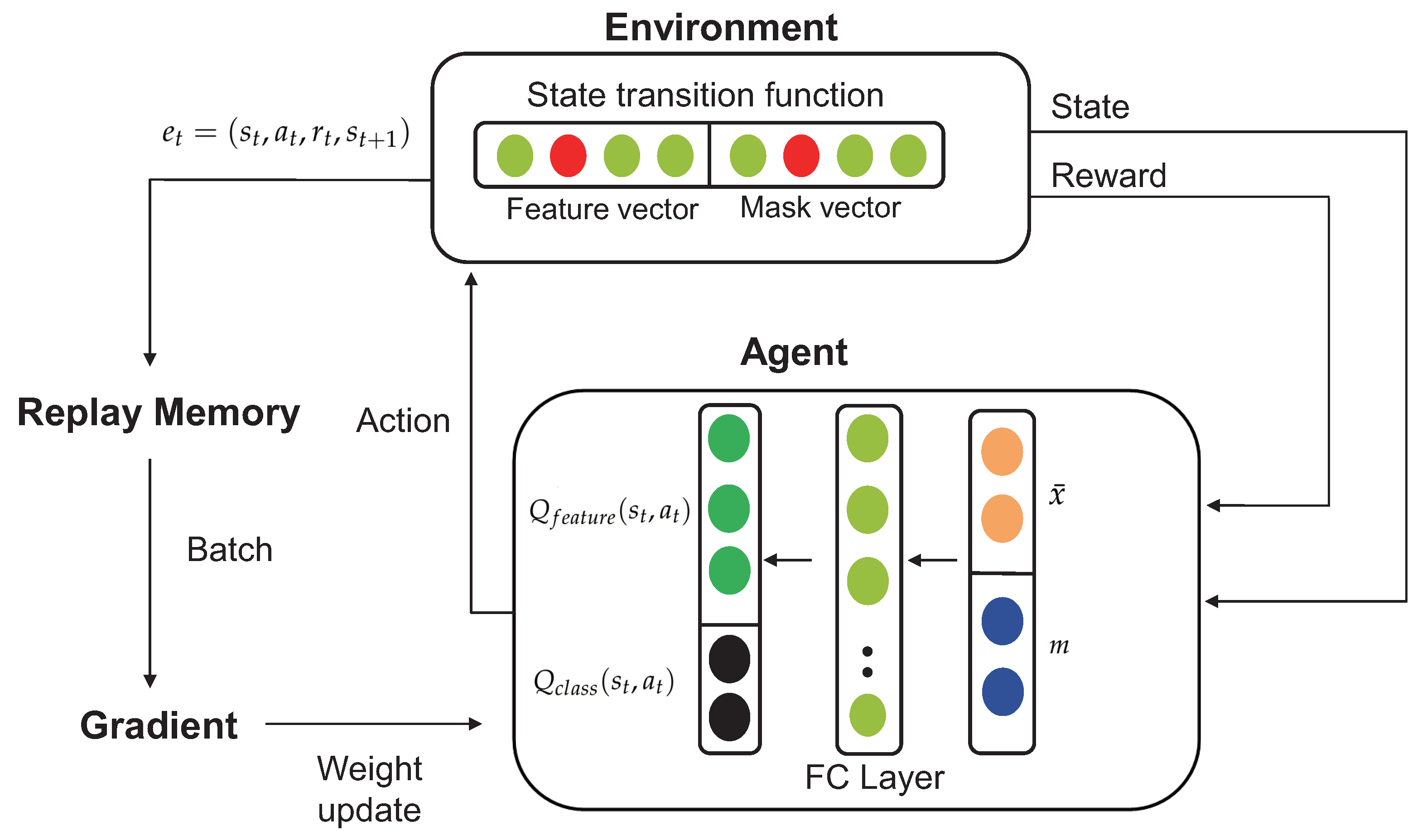

2.3. Redefinition of the Problem with Reinforcement Learning

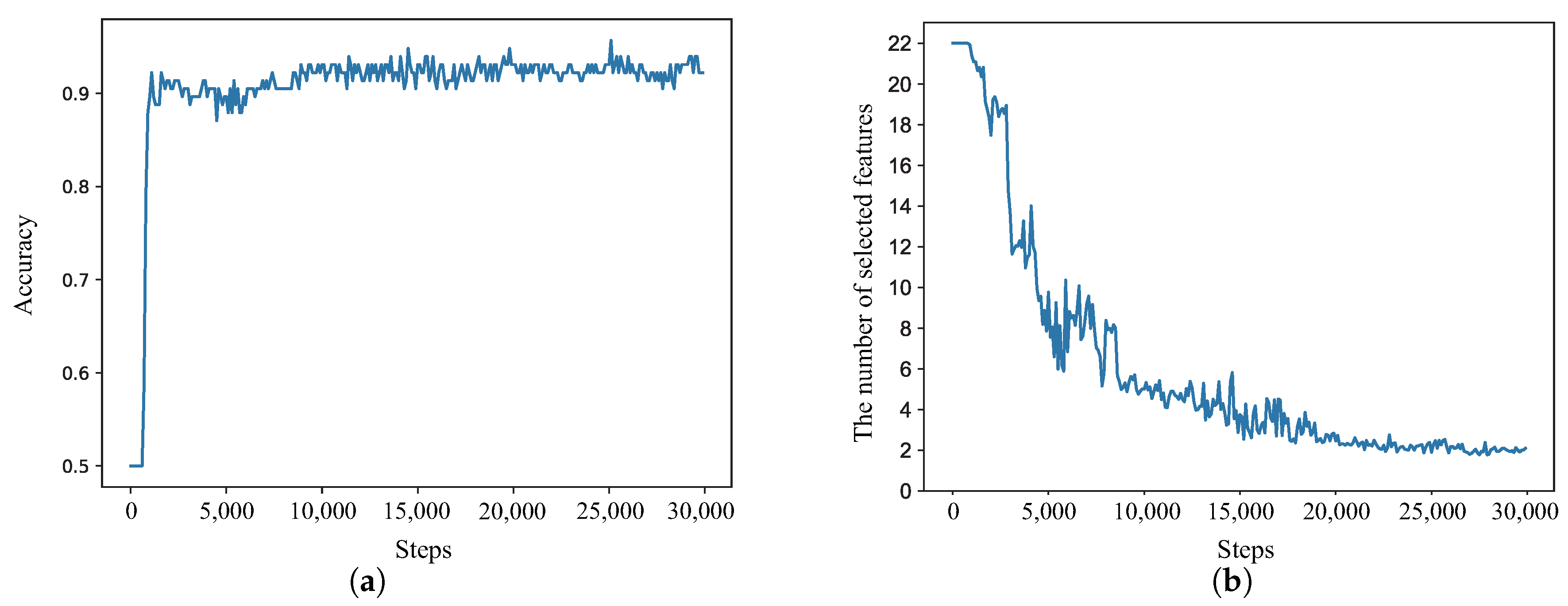

2.4. Deep Q-Learning

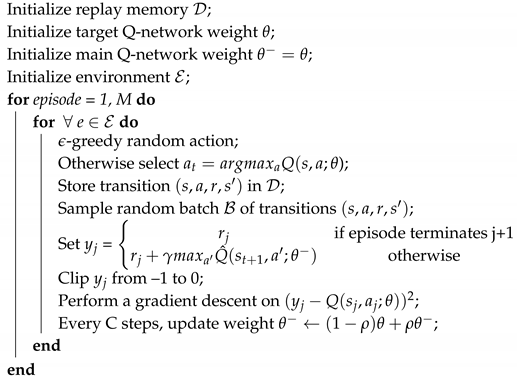

2.5. Training Algorithm

Algorithm 1: Agent training.

Algorithm 2: Environment.simulation.

3. Experimental Results

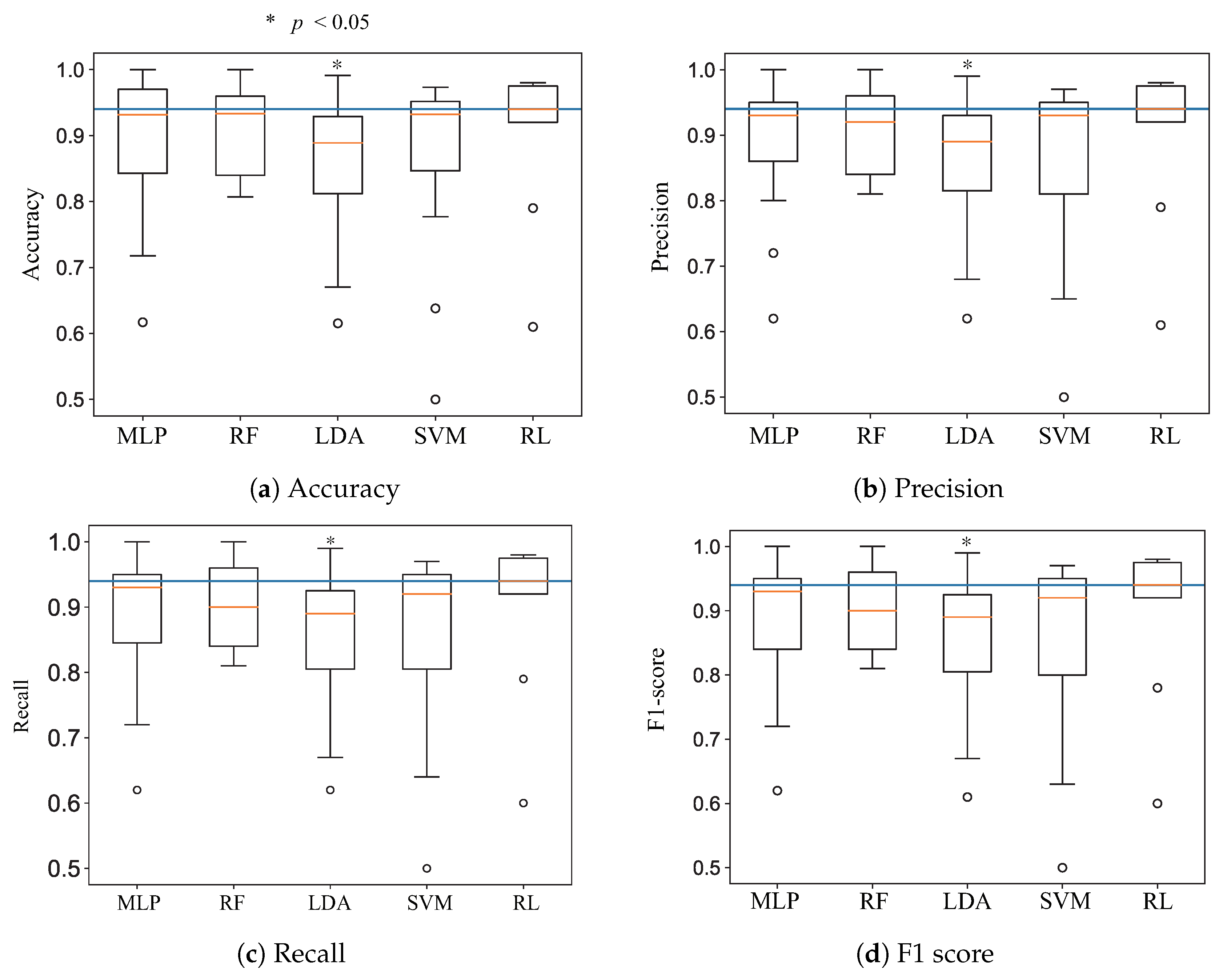

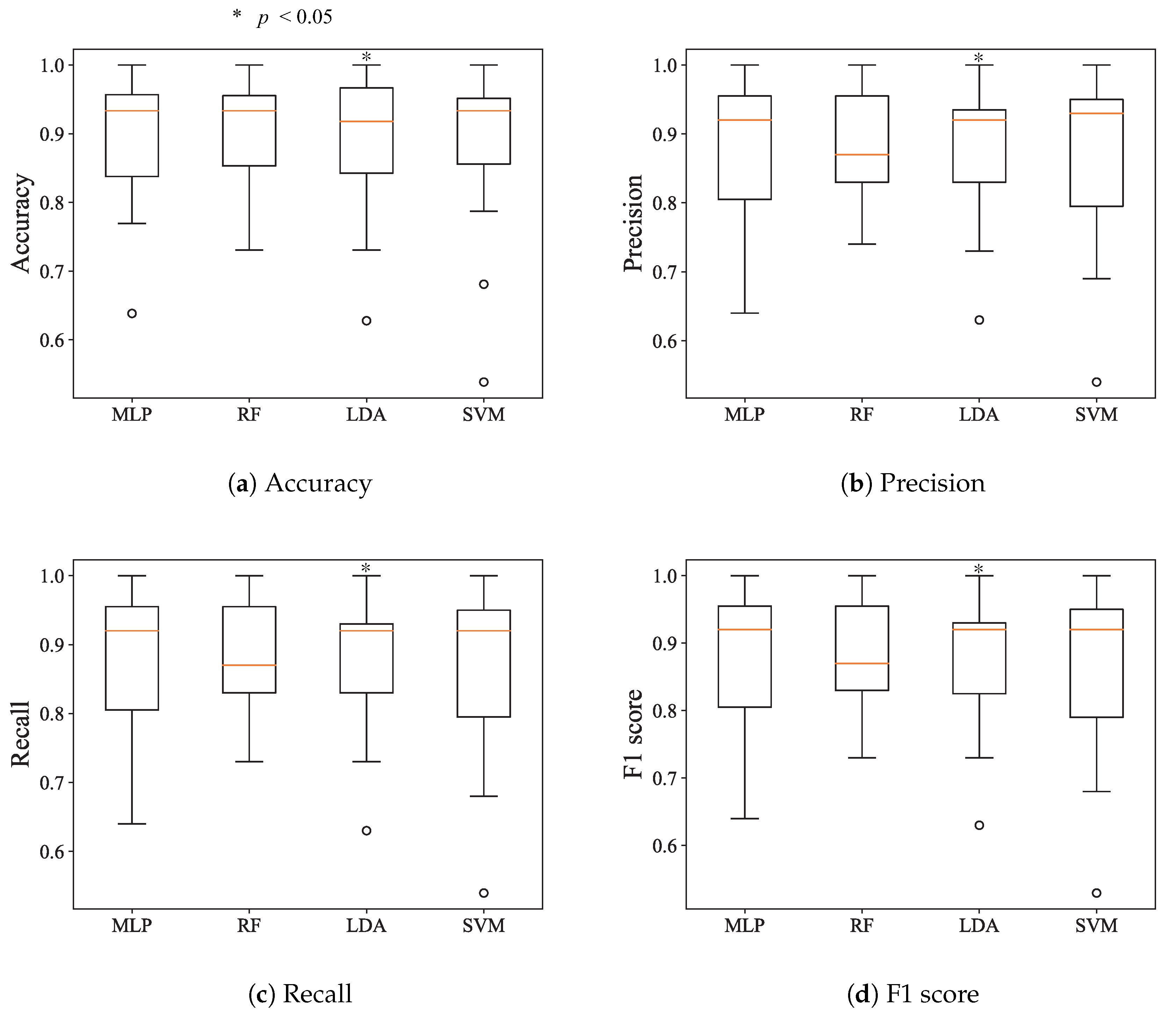

3.1. Classification for Assessment of Vigilance

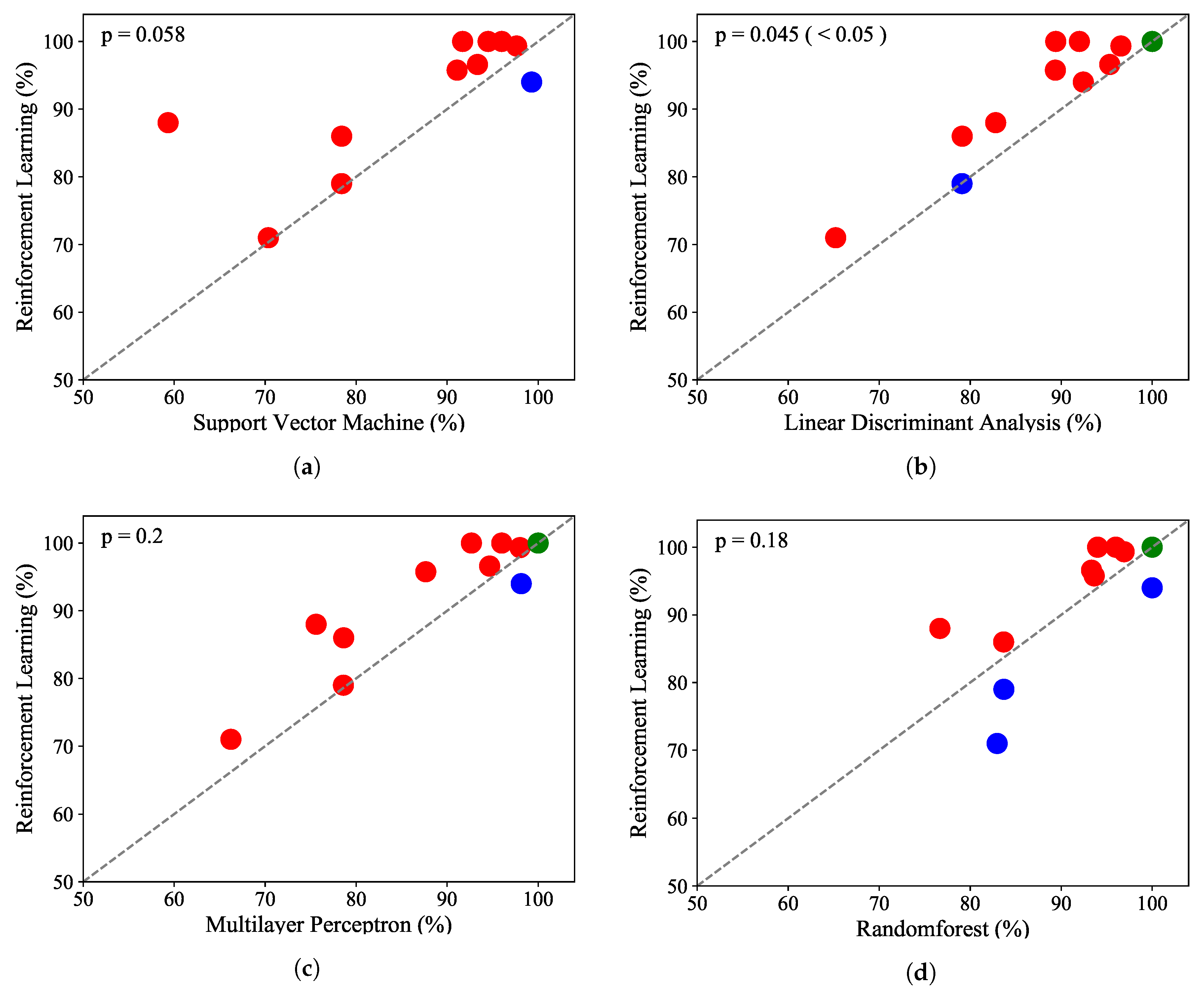

3.2. Comparison with Conventional Methods

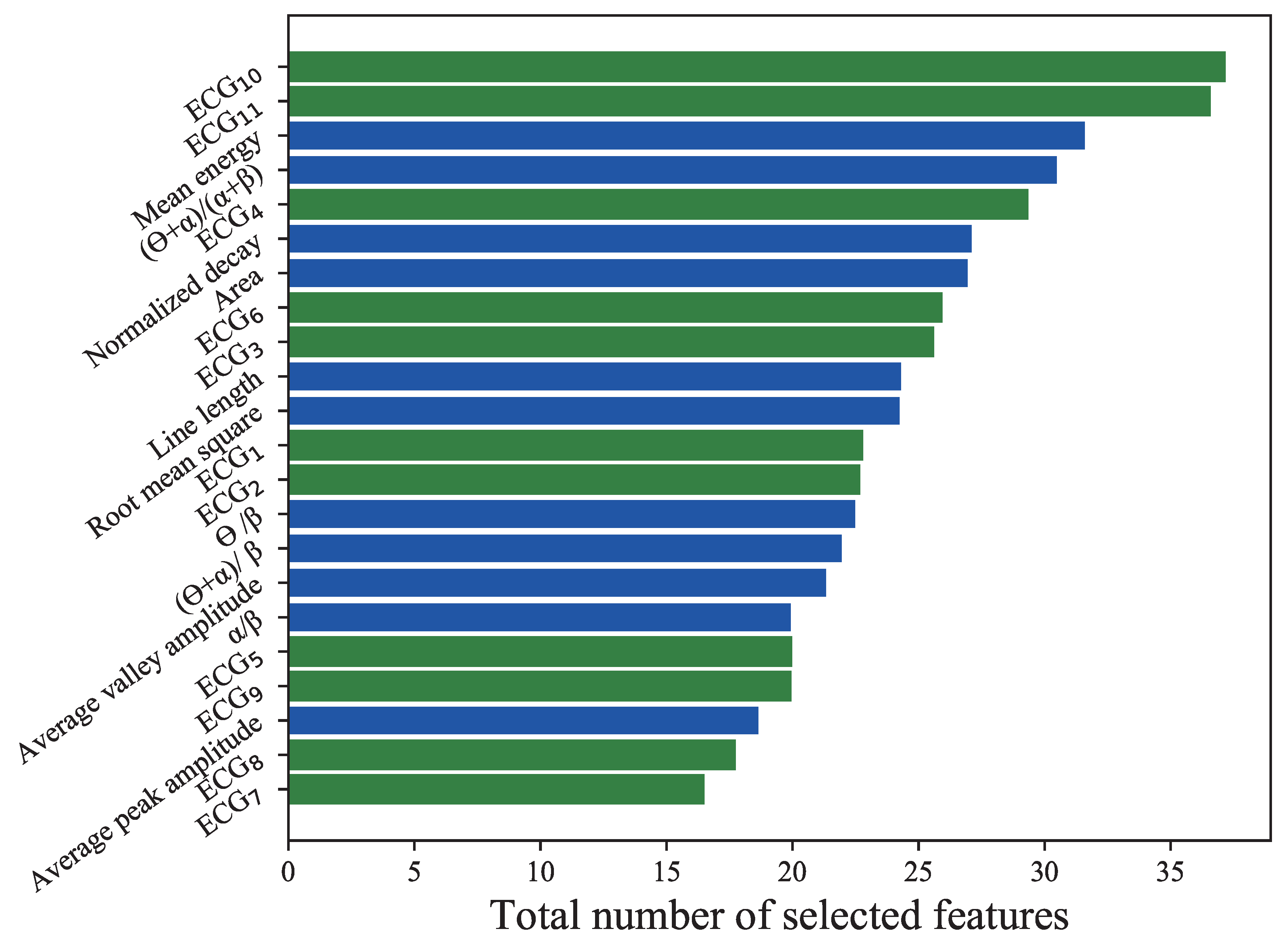

3.3. Feature Study

4. Discussion and Conclusions

Author Contributions

Funding

Conflicts of Interest


| Feature | Equation |
|---|---|
| Area | A = |
| Normalized decay | |
| Line length | |
| Mean energy | |
| Root mean square | |
| Average peak amplitude | |
| Average valley amplitude | |
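As a rough illustration of some of the features named in the table, the sketch below computes area, line length, mean energy, and root mean square for one signal segment. It assumes the commonly used textbook definitions of these quantities, which may differ from the paper's exact formulas (for example in normalisation). Normalized decay and the average peak and valley amplitudes are left out because their definitions vary between studies. All function names are hypothetical.

```python
import math


def area(x):
    """Sum of absolute sample values (assumed definition)."""
    return sum(abs(v) for v in x)


def line_length(x):
    """Sum of absolute differences between consecutive samples (assumed definition)."""
    return sum(abs(b - a) for a, b in zip(x, x[1:]))


def mean_energy(x):
    """Mean of the squared samples (assumed definition)."""
    return sum(v * v for v in x) / len(x)


def root_mean_square(x):
    """Square root of the mean energy."""
    return math.sqrt(mean_energy(x))


if __name__ == "__main__":
    segment = [1.0, -2.0, 3.0, -4.0]  # toy segment, not real EEG/ECG data
    print(area(segment), line_length(segment),
          mean_energy(segment), root_mean_square(segment))
```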

| Layers | Configurations |
|---|---|
| Convolution Layer | 1D conv, input channel: 1, output channel: 128, kernel size: 1000 |
| Pooling Layer | Max pool: 800, stride: 1 |
| LSTM Layer | Input channel: 128, hidden: 32, number of recurrent layers: 2 |
| Fully connected Layer | Input channel: 352, output: 22 |
| Classification Layer | Input channel: 22, output: 2 |
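The layer sizes in the table can be sanity-checked with the standard output-length formula for 1D convolution and pooling. The sketch below is one possible reading, not the authors' implementation. It assumes a convolution stride of 1, no padding, and that the 32-dimensional LSTM outputs of every time step are flattened before the fully connected layer. The input length of 1809 samples is hypothetical, chosen only because it reproduces the 352 inputs listed for the fully connected layer.

```python
def out_len(n_in, kernel, stride=1, padding=0):
    """Output length of a 1D convolution or pooling layer (dilation 1)."""
    return (n_in + 2 * padding - kernel) // stride + 1


# Hypothetical input length; the real segment length is not given in the table.
n_samples = 1809

after_conv = out_len(n_samples, kernel=1000)           # conv stride 1 is an assumption
after_pool = out_len(after_conv, kernel=800, stride=1)  # pooling values from the table
lstm_hidden = 32                                        # hidden size from the table

# Assumption: LSTM outputs at all time steps are flattened for the dense layer.
fc_inputs = after_pool * lstm_hidden

if __name__ == "__main__":
    print(after_conv, after_pool, fc_inputs)
```

Under these assumptions the sequence shrinks to 11 time steps after pooling, and 11 × 32 = 352 matches the fully connected layer's input size.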

| Subject | Accuracy | Precision | Recall | F1 Score |
|---|---|---|---|---|
| 1 | 0.98 | 0.98 | 0.98 | 0.98 |
| 2 | 0.79 | 0.79 | 0.79 | 0.78 |
| 3 | 0.95 | 0.95 | 0.95 | 0.95 |
| 4 | 0.98 | 0.98 | 0.98 | 0.98 |
| 5 | 0.60 | 0.61 | 0.60 | 0.60 |
| 6 | 0.92 | 0.92 | 0.92 | 0.92 |
| 7 | 0.98 | 0.98 | 0.98 | 0.98 |
| 8 | 0.92 | 0.92 | 0.92 | 0.92 |
| 9 | 0.94 | 0.94 | 0.94 | 0.94 |
| 10 | 0.97 | 0.97 | 0.97 | 0.97 |
| 11 | 0.93 | 0.93 | 0.93 | 0.93 |
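The per-subject accuracies in the table can be summarised with a few lines of Python. The summary values below are derived here from the table for illustration and are not figures reported in the table itself; the variable names are hypothetical.

```python
from statistics import mean

# Accuracy per subject, copied from the table above.
accuracy = {
    1: 0.98, 2: 0.79, 3: 0.95, 4: 0.98, 5: 0.60, 6: 0.92,
    7: 0.98, 8: 0.92, 9: 0.94, 10: 0.97, 11: 0.93,
}

mean_accuracy = mean(list(accuracy.values()))
worst_subject = min(accuracy, key=accuracy.get)  # subject with the lowest accuracy
best_accuracy = max(accuracy.values())

if __name__ == "__main__":
    print(f"mean accuracy: {mean_accuracy:.3f}")
    print(f"lowest accuracy: subject {worst_subject} ({accuracy[worst_subject]:.2f})")
    print(f"highest accuracy: {best_accuracy:.2f}")
```

The mean across the 11 subjects comes out at about 0.905, with subject 5 (0.60) well below the rest.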

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Seok, W.; Yeo, M.; You, J.; Lee, H.; Cho, T.; Hwang, B.; Park, C. Optimal Feature Search for Vigilance Estimation Using Deep Reinforcement Learning. Electronics 2020, 9, 142. https://doi.org/10.3390/electronics9010142
