1. Introduction

Globally, an estimated 1.1 billion hectares of land are affected by soil erosion, and of these, three million hectares are lost to agriculture each year. Soil erosion is a serious risk, traditionally associated with agriculture in tropical and semi-arid areas, and it matters because of its long-term effects on soil productivity and the sustainability of agriculture [1]. Soil protection using cover crops and pastures is of high interest in all agricultural areas of the world. Soil degradation by water and wind erosion is a serious problem because it results in the loss of nutrients, minerals and organic materials [2,3].

Conservation agriculture (CA), which is an integrated management tool, includes conservation tillage, crop rotations, cover crops and stubble management [4]. Soil conservation is essential to achieve sustainable production [5]. Conservation tillage avoids bare soil, always maintaining the presence of vegetal coverage on the soil surface. The retention of stubble is a key element in CA systems.

The critical level of coverage must be established for each specific site, according to the type of soil and the environmental conditions [6]. According to the Food and Agriculture Organization of the United Nations (FAO), to be considered as CA, the soil coverage must reach at least 30% of the surface, a limit below which the risk of erosive processes becomes more important. In the semiarid and subhumid region of the southwest of Buenos Aires province, Argentina, more than half of the farmer fields under a no-tillage (NT) system had a soil coverage below the 30% threshold [6]. NT could exceed that limit, with vertical tillage [7].

The soil quality, considering chemical, physical and biological fertility, depends on the vegetal coverage [2,3]. For this reason, it is crucial to have quantification methods in order to understand how the measured variables relate to the protective value of the vegetal material.

The thickness and density of the stubble layer determine the effectiveness of the soil vegetal coverage. Standing residue has important effects on the microclimatic characteristics of the soil surface by reducing wind speed, by up to 70%, and water loss by evaporation [8]. Therefore, a thick residue layer decreases air turbulence above the soil surface and water vapor transport. At this point, it is important to note that soil coverage is evaluated in at least two different and opposite conditions. The first is when the crop is green, and the coverage is useful to evaluate the evolution of the crop over time. The second is after the harvest, when the stubble is left to cover the soil and protect it from erosion. While in the first case measurements based on color differentiation achieve good precision with automatic or semiautomatic algorithms, in the second case the color of the stubble may be quite similar to that of the soil, making the differentiation more difficult. The methodology proposed in this paper is oriented to this latter case.

There are several methods to determine the soil coverage proposed in the literature [6]. The most widely used one in Argentina relies on a picture taken at 1.2 m with a digital camera over a 50 cm square frame. The image is later processed using an application developed by Instituto Nacional de Tecnología Agropecuaria (INTA) [6], named CobCal v2.1. In order to measure the vegetal coverage percentage, the CobCal software requires the user to tag the colors of the pixels that correspond to the coverage as positive pixels and the colors that correspond to the ground as negative pixels. Then, by setting a threshold value, the pixels are classified by Euclidean distance and the coverage percentage is calculated by counting the number of pixels that correspond to each group. The different parameters that this software uses are configured by the user, so the method is not completely objective: the results depend on the user’s perception and expertise.

The use of LiDAR technology has also been proposed in recent literature for different applications, such as measuring soil coverage and canopy height and plant phenotyping, among others [9,10,11,12]. Another option is to use unmanned aerial vehicles (UAV) to fly over the field taking several pictures for processing. There are basically two options in this case. In the first one, an RGB picture is taken; this can be processed with different software tools like the aforementioned CobCal or other algorithms. In general, these algorithms aim to obtain indexes that are related to the area of soil covered with vegetation, mostly with the purpose of estimating the development of crops, assessing their growth, and estimating yield. Some of these techniques were originally developed for satellite images, but have now been extended to handheld digital cameras or airborne or UAV-mounted ones. The Normalized Difference Vegetation Index (NDVI) [13] with its variations, the Green Normalized Difference Vegetation Index (GNDVI) and the Enhanced Normalized Difference Vegetation Index (ENDVI), the Normalized Excess Green Index (NExG) [14], the Normalized Green/Red Difference Index (NGRDI) [15] and, most recently, the Canopeo algorithm [16] are some of the most used ones in the analysis of vegetal coverage. Although the processing can be said to be almost automatic, with no intervention of an operator as in the case of CobCal, all these methods require the adjustment of certain parameters that must be tuned depending on the type of soil and coverage, since they are highly sensitive to the color histograms of the RGB images being analyzed and can be affected by light conditions. A comparison of these methods was performed in different publications [17,18]. To the best of the authors’ knowledge, these methods have not been implemented on crops cultivated to preserve and improve the characteristics and productive potential of the soil. Nevertheless, it is assumed that they can also be applied to these cases.

Finally, if the pictures are taken with more sophisticated cameras or sets of cameras in order to obtain 3D information, as in the case of stereoscopic vision systems, the cost of the equipment is substantially higher. Stereoscopic vision systems allow three-dimensional models to be obtained in a spherical coordinate system. Using photogrammetric processes, the soil coverage and canopy structure, among others, can be determined. This method can be implemented using a UAV with two digital cameras [19,20].

In some studies, the weight of the plant biomass is estimated from the coverage percentage, but this estimate is very sensitive to the structure of the plant. The 3D structure, volume and spatial density are not commonly taken into account due to their great complexity. Despite this, stubble height, architecture and/or disposition, understood as arrangement and/or spatial distribution, are crucial for reducing rain impact on the soil surface and for water conservation [21]. The photogrammetry technique can capture these properties.

All the methods mentioned are being studied and provide solutions with different precision and cost. In this paper, LiDAR technology is proposed as a good trade-off between quality, cost and practicability for small producers in developing countries like Argentina. The contribution of this paper is the design and implementation of a simple electromechanical device devoted to evaluating and characterizing the soil vegetal coverage.

The rest of the paper is organized as follows:

Section 2 presents and discusses previous related work.

Section 3 introduces the system we propose, its hardware, software and firmware.

Section 4 discusses the equipment setup and a 3D model analysis.

Section 5 presents the preliminary results and validation against a traditional method, and finally,

Section 6 presents the conclusions and future lines of research.

2. Background

LiDAR technology has many applications in surveying, meteorology, aerospace navigation, robotics and more. Some previous works combined this technology with image processing for 3D reconstruction of plant structure for phenotyping, farming robot guidance, yield estimation, disease detection or precise fertilizer and pesticide application.

In [22], the authors employed an airborne imaging system to increase the precision of the canopy height measurement in buckwheat fields in order to evaluate lodging and estimate yields. Image processing algorithms were used in contrast with terrain models to analyze lodging of the crops.

In [23], the authors measured the height of crops using a mid-range rotating LiDAR sensor. The measurement technique presented an error ranging between 1.8% and 8%, depending on the crop stem density.

In [24], the 3D model of the crop row structure was reconstructed using a simple digital camera and structure-from-motion algorithms.

Another outdoor mobile platform was developed by [25]. It is equipped with a LiDAR sensor to measure the bottom section of the crops and estimate the corn plant spacing and population.

In [26], the authors attempted to develop a low-cost 2D LiDAR scanner-based mobile ground sensing platform for 3D plant structure phenotyping that works indoors. The authors also compared prices and specifications of six different LiDAR-based scanners. Another low-cost system for phenotyping was presented in [27], where a chessboard pattern beacon array was used to track the position and attitude of the 3D cameras.

In [28], the goal was to identify urine patches in grazed pastures, which basically consist of regions of abrupt height variation of the fodder. The authors proposed the use of a mid-range LiDAR system and an RTK-GNSS navigation system.

The authors in [29,30] scanned maize plants in an indoor environment in order to get a precise model of the plants for applications such as phenotyping or stem positioning for automated plant care systems.

In other recent papers, LiDAR technology was used in combination with digital cameras [31], multispectral imaging (MI) [32,33] or hyperspectral imaging (HI) [33,34] for different purposes that require high-resolution models for measuring very large areas or volumes. In some cases, terrestrial LiDAR system (TLS) technology was used [33,35,36] and in other cases airborne sensors were mounted on unmanned aerial vehicles (UAV) [32,34].

3. Materials and Methods: Equipment Description

The availability and accessibility of modular electronic components in the market allow the development of projects with commercial off-the-shelf (COTS) devices. There is a wide variety of components devoted to solving all types of requirements, whether it is sensing or controlling physical variables, adapting or converting signals, processing or computing, among others.

The proposed system must be able to create a 3D model that allows the visualization of the distribution on the ground of the vegetation coverage with a reduced budget. In order to do this, the heights of the plants need to be measured in a given portion of soil. For this, a distance sensor must be moved along a plane parallel to the ground by sweeping over a sufficiently representative surface, but without being too extensive, since it is important to know the medium-scale characteristics of the residue and vegetation coverage, as it is assumed that the vertical arrangement of the same coverage remains relatively constant over the whole farm parcel.

There are not many options available in the market for low-cost distance sensors. Ultrasound-based technologies can measure the distance to certain objects or geometries, but the sensor’s wide detection cone makes it difficult to determine their structure or to differentiate thin shapes, so the geometry of vegetal coverage, for example, is hard to determine with acceptable precision.

Stereoscopic vision systems allow three-dimensional models to be obtained in a spherical coordinate system. Obtaining a 3D model of the vegetation cover from images has been considered, but this method requires two digital cameras and thus it has a considerable implementation cost. In addition, image processing methods also require greater computational resources and the design of complex algorithms.

Table 1 shows a comparison of material and operating costs and the obtained results for both the proposed LiDAR scanner and a generic UAV for image acquisition. The costs of the materials and operation of UAVs were averaged based on the results of [37], and the scanner’s operating costs include the hiring of an operator for an 8 h day and battery charging for the scanner and a laptop to perform 30 measurements along a 5 ha parcel.

Laser distance sensors have several advantages compared to the previously mentioned methods. Compared with ultrasound-based sensors, the vision cone is more favorable and the sampling rate is much higher. The advantage over a stereoscopic vision system is the cost, since there are short-distance laser sensor devices that are very accessible.

Table 2 shows a simplified evaluation of the different sensors. The symbols “−” and “+” represent a negative or positive aspect, respectively. The RGB and UAV plus RGB cases are marked “−/+” because the precision of the measurement depends on the expertise of the user.

3.1. Hardware: Mechanical System

In the previous section, different possible sensors were evaluated. In the authors’ opinion, the best option is the laser one. The commercial device selected is based on the VL53L0X chip [38], a Time-of-Flight (ToF) sensor. This sensor is controlled through an Inter-Integrated Circuit (I2C) interface. The sensor needs to be mounted on a mechanical structure capable of moving it along a predefined path to collect all the necessary values in the chosen square area.

The sensor moves over the rectangular area using a classical CNC mechanism. As the sensor has low weight and small dimensions, the mechanism requires little torque to move it. Stepper motors (model BYJ48) were used to move the sensor because they are very precise, with a reduction ratio of 1:64 and a stride angle of 5.625°. Although the angular speed is not high, when powered with 9–12 V the motors can reach 15 rpm at the output shaft.

The mechanism chosen to move the distance sensor over the horizontal plane is CoreXY, which is widely used in 3D printers from the Replicating Rapid-prototyper (RepRap) project [39].

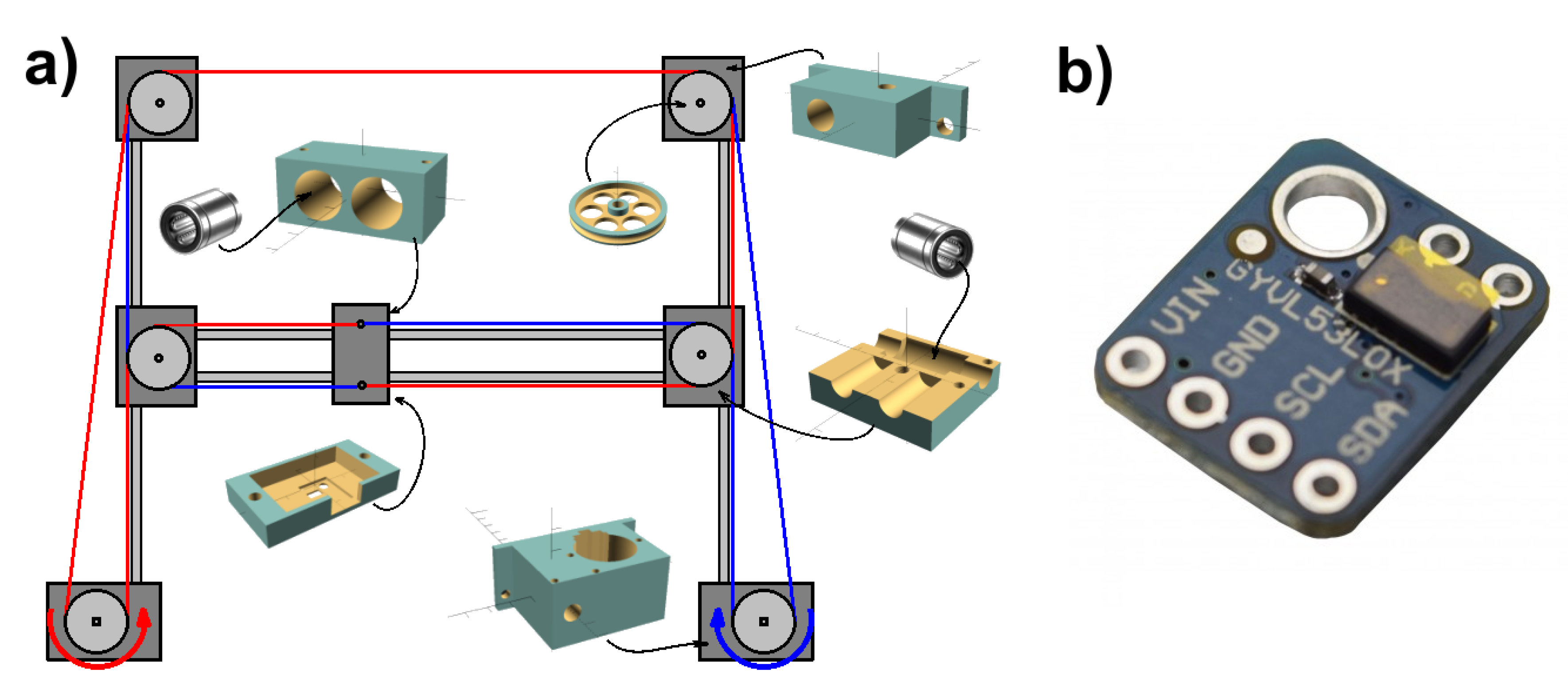

Figure 1 shows a basic schematic of the mechanical system as seen from above. The structure is based on four fixed supports: two of them hold the stepper motors and the other two hold the free pulleys. Four rectified steel rods of 8 × 540 mm were used. Two of them allow the sensor to move along the x-axis while the other two are used to mount the cart that moves along the y-axis. All the pieces were designed using the OpenSCAD software and were printed with polylactic acid (PLA) on a Prusa A8 3D printer.

The CoreXY mechanism has two independent pulley systems that allow movement in both directions, x and y, and are represented by red and blue lines in Figure 1. When they rotate in the same direction, the cart moves along the y-axis, and when they rotate in opposite directions, the sensor moves along the x-axis.

The cart for the y-axis movement has two supports, one at each end, which were fitted to the rods with linear bearings, with the corresponding pulleys located in the upper part. The cart that moves the sensor along the x-axis is also mounted on linear bearings. The ToF sensor was placed at the bottom of this cart.

The end-stop sensors at the end of both axes are used to position the cart initially at a known location from which the movement starts (Figure 1).

The number of samples per unit of measured area, and hence the resolution, can be configured by setting the sampling frequency of the sensor and the carriage advance of every step during the sweeping process. The latter is the one that affects the measurement duration, because the smaller the carriage advance is, the longer it takes to complete the process. Therefore, the aim is to reduce the model resolution as much as possible while keeping the model parameters within a reasonable error range.

The resolution is constrained by the error of the absolute position of the CNC mechanism that is estimated to be 0.5 mm due to string stretching, pulley sliding and mostly, due to the stepper gearbox play. To determine the pulleys’ sliding error, the mechanism was operated so that it traversed a square path around the central position. After a total travel of 52.5 m and the head being returned to the initial position, the error was measured using a ruler, and it was 5 mm. This means that the slip error was less than 0.001%, and therefore it can be neglected. A 16,000-sample model has around 3.5 mm between samples, which means around 14% error for the carriage real position. We can assume this value as the maximum achievable resolution. The measurement process to get this amount of samples takes about an hour.

3.2. Hardware: Electric System

A block diagram of the main system components is shown in Figure 2. The microcontroller is an ATMega328 mounted on an Arduino Nano prototyping board, which has the advantage of being small, low-power and cheap, with adequate computational power for the system requirements.

All system components are modular and compatible with the Arduino project. The BYJ48 motors require current amplifiers, preferably the ones integrated in the ULN2003 chip, which consists of an array of seven NPN (Negative–Positive–Negative) Darlington transistor pairs with common-cathode flyback diodes for inductive loads.

A Bluetooth 4.0 AT-09 Low Energy module (BLE) was used for wireless communication. This module was controlled by the microcontroller through a TTL (Transistor–Transistor Logic) serial interface.

The voltage regulation required by the microcontroller, the Bluetooth module, the distance sensor and the end-stop switches was achieved with a switching power supply based on the LM2576 integrated circuit. A thermistor in series and a varistor in parallel were added as overcurrent and overvoltage protections, respectively, along with a diode bridge to protect against reversed polarity from the power source.

The voltage supply for the phases of the stepper motors was controlled with the Darlington arrays fed with unregulated direct current, connected directly to the diode bridge output. The 1.4 V voltage drop of the bridge reduces the supply voltage of the motors, which have a nominal voltage of 5 V.

3.3. Firmware

The microcontroller drives the stepper motors controlling the mechanism position, it acquires the distance values measured through the laser sensor and it sends the data in the form of a three-dimensional vector by serial port or Bluetooth, depending on the interface used. Algorithm 1 describes the firmware architecture, which is composed of three Interrupt Service Routines (ISR) and an endless loop in the main function. The operation of the system is briefly detailed next.

| Algorithm 1 Firmware main procedure and functions. |

| 1: global variables | |

| 2: bool | ▹ Status of data acquisition from sensor |

| 3: int[2] | ▹ Current sensor position vector |

| 4: int[2] | ▹ Current setpoint or desired position vector |

| 5: Queue | ▹ Queue of setpoints to go |

| 6: CNC | ▹ Object for CNC mechanism control |

| 7: Serial | ▹ Object for serial communication |

| 8: end global variables | |

| 9: | |

| 10: procedure ISR() | ▹ ISR on end-stop 1 or 2 activation |

| 11: | ▹ Set the corresponding coordinate 1 or 2 to origin or 0 |

| 12: end procedure | |

| 13: | |

| 14: procedure ISR() | ▹ ISR on timer event |

| 15: | ▹ Calculate the error signal |

| 16: .Step(err) | ▹ Advance mechanism in the desired direction |

| 17: if then | ▹ If setpoint reached |

| 18: .Pop() | ▹ Go to the next setpoint |

| 19: end if | |

| 20: end procedure | |

| 21: | |

| 22: procedure ISR() | ▹ ISR on serial communication event |

| 23: .Read() | ▹ Received character |

| 24: if ‘a’ then | ▹ ‘a’ command for initialize scanning process |

| 25: | ▹ Generate setpoints for a sweeping movement |

| 26: | ▹ Starts data acquisition |

| 27: end if | |

| 28: if ‘b’ then | ▹ ‘b’ command for pause and hold |

| 29: | ▹ Stops data acquisition |

| 30: .Clear() | ▹ Delete all setpoints |

| 31: end if | |

| 32: if ‘c’ then | ▹ ‘c’ command for cancel scanning |

| 33: .Clear() | ▹ Delete setpoints |

| 34: .Push(0,0) | ▹ Go to origin |

| 35: end if | |

| 36: … | ▹ Other commands are not listed |

| 37: end procedure | |

| 38: | |

| 39: procedure MAIN() | ▹ Main function |

| 40: while true do | ▹ Endless loop |

| 41: if scanning then | ▹ Checks if data acquisition is enabled |

| 42: .Read() | ▹ Get height measurement from laser sensor |

| 43: .Print(pos[1],pos[2],z) | ▹ Sends (x,y,z) coordinates to computer/smartphone |

| 44: end if | |

| 45: end while | |

| 46: end procedure | |

To determine the action of the steppers, there is a queue of set-points, where each element is a vector that contains the (X, Y) coordinates to which the sliding carriage with the sensor should move. Each time a set-point is reached, it is removed from the queue and the procedure is repeated. When there are no set-points left, the motors are de-energized and the system stands by, awaiting new commands. Importantly, it is possible to pause the process at any time, resume it or return the carriage to its starting point.
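The set-point queue logic can be illustrated with a small simulation. The following is only a sketch written in Python for readability, not the actual firmware: the function names (`sweep_setpoints`, `timer_tick`, `run`), the unit-step movement per timer event and the back-and-forth sweep pattern are assumptions made for illustration.

```python
from collections import deque


def sweep_setpoints(width, height, step):
    """Generate (x, y) set-points for a back-and-forth sweep (hypothetical pattern)."""
    points = []
    for row, y in enumerate(range(0, height + 1, step)):
        # Alternate the sweep direction on every row.
        xs = (0, width) if row % 2 == 0 else (width, 0)
        points.extend((x, y) for x in xs)
    return points


def timer_tick(pos, queue):
    """One timer event: advance one step per axis toward the head of the queue."""
    if not queue:
        return False  # no set-points left: the motors would be de-energized
    target = queue[0]
    for axis in (0, 1):
        err = target[axis] - pos[axis]
        pos[axis] += (err > 0) - (err < 0)  # sign of the error: -1, 0 or +1
    if pos[0] == target[0] and pos[1] == target[1]:
        queue.popleft()  # set-point reached, go to the next one
    return True


def run(queue, pos=None):
    """Process timer events until the queue is empty; return final position and tick count."""
    pos = [0, 0] if pos is None else pos
    ticks = 0
    while timer_tick(pos, queue):
        ticks += 1
    return pos, ticks


if __name__ == "__main__":
    queue = deque(sweep_setpoints(4, 2, 2))
    print(run(queue))
```

In this sketch, cancelling a scan corresponds to clearing the queue and pushing the origin `(0, 0)` as the only remaining set-point.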

When the system starts, the position of the carriage is unknown, since no sensors are available for these variables. Therefore, the steppers are operated in such a way that the sensor carriage moves both horizontally and vertically until the end-stop switches are activated. Once the starting point is reached, the system is ready to start the scanning process.

Data acquisition of the sensor can be activated or deactivated at any time. While the measurement is being made, the microcontroller sends the current coordinates (X, Y) of the mechanism and the Z component, which is equivalent to the height being measured by the distance sensor, through the serial port.

The firmware allows the sampling frequency of the sensor, the speed of the stepper motors and the scan path to be configured, and all the configuration can be done from the graphical user interface, which is described in the following section.

3.4. Software

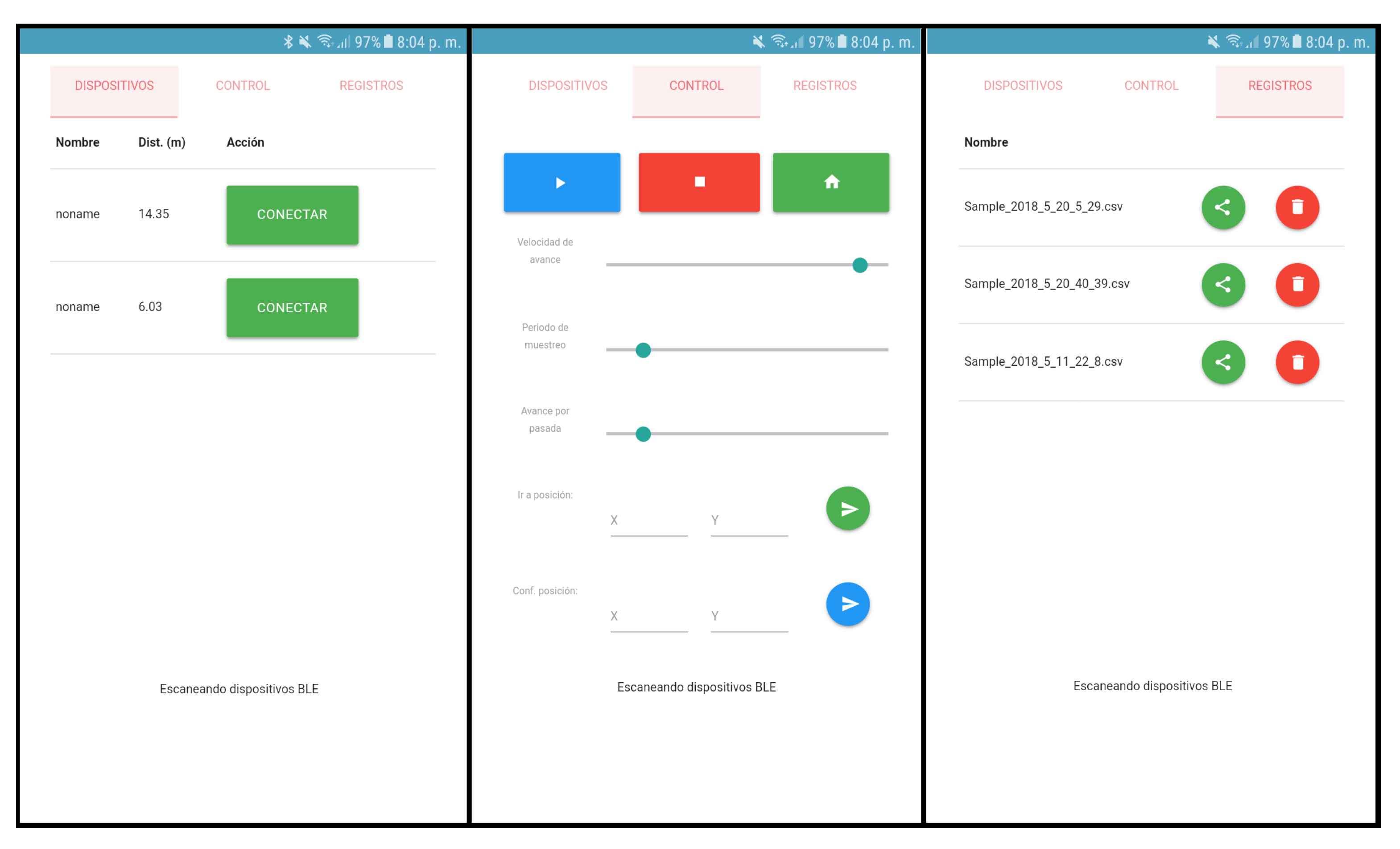

The user can command the system from a personal computer or using a smartphone. Different applications were developed for Windows, Linux and Mac, and Android and iOS platforms.

As mentioned before, the result of the measurement consists of a plain text file in CSV format.

Both the applications for personal computers (PC) and smartphones have simple interfaces with the basic commands for controlling the scanner: start, pause, resume or cancel the measurement process, and return the carriage to the starting point. There is also a space to configure the advancing speed of the carriage, the path of the sensor during the measurement and the sampling frequency of the sensor. The main purpose of the application is to acquire the measured data, so the graphical user interface was kept as simple as possible.

During the measurement process, the application must remain connected to the microcontroller either through the serial port for the case of the PC or by Bluetooth for the case of the smartphone for a continuous data acquisition. Once the measurement is finished, the user assigns a name to the output file and it is saved on the available storage medium, whether to open, modify or share it.

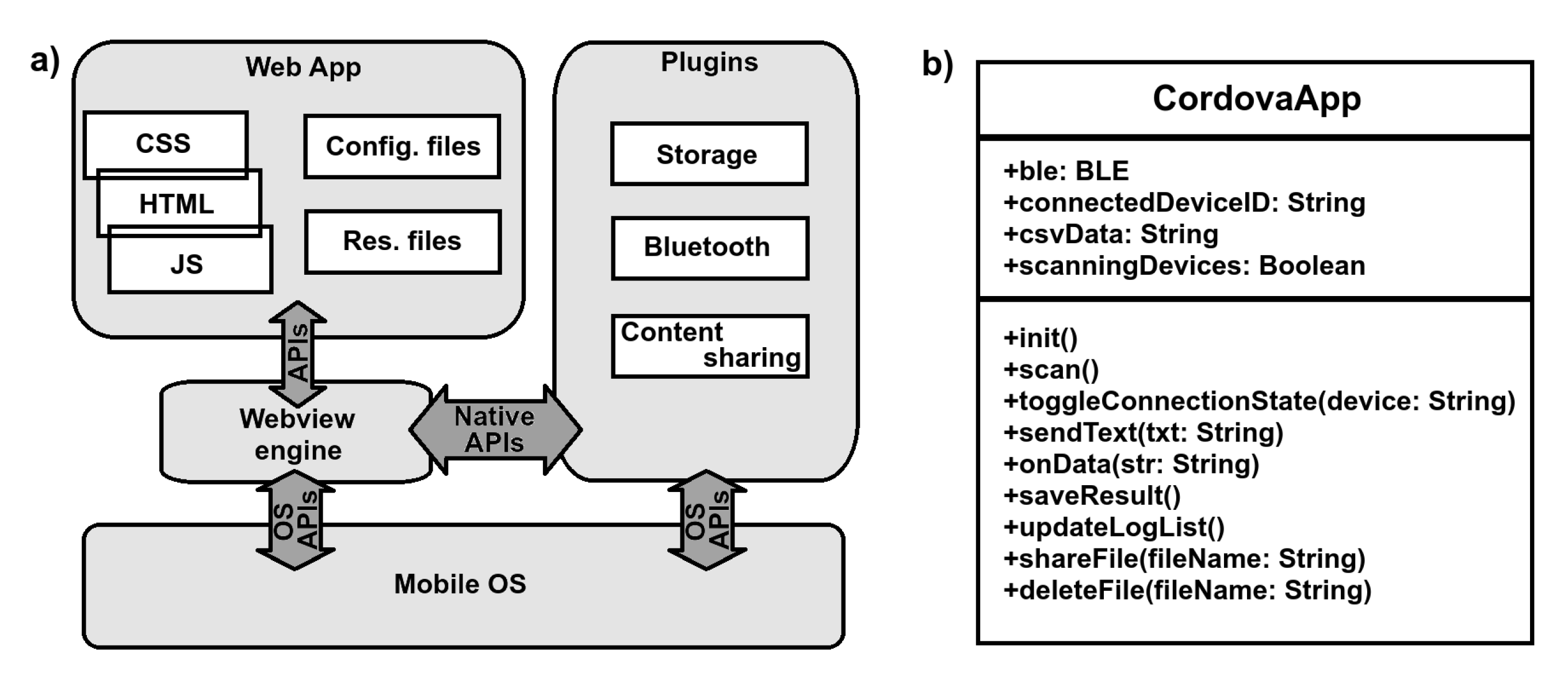

Figure 3a shows the overall architecture of the developed mobile software, which consists of a web application that, using the Apache Cordova framework, is implemented as a standalone, cross-platform app. The web application contains the graphical user interface (GUI) and, through the native Application Programming Interfaces (APIs), the app can access the mobile peripherals and tools, such as persistent storage, Bluetooth communications, and the content sharing dialogues. The web app main class attributes and functions are listed in Figure 3b.

Figure 4 shows three different screenshots of the GUI for the three main tools: Bluetooth devices, scanner control and file records.

The PC application was programmed in C++ using the Qt5 library with QtCreator.

3.5. Uncertainty and Error Evaluation

Any measurement has an associated uncertainty and an intrinsic error. In the case of the method proposed in this paper, there are basically two sources of error: the laser sensor and the position of the sensor. Both errors are independent.

In [38], the maximum error indicated for the distance is 6% when the sensor is used in indoor conditions with a grey reflecting surface. As the constructed instrument consists of a black box, the indoor condition is ensured. Vegetal cover is usually neither white nor grey, so taking the worst case provides an upper bound on the error. It is important to note that the error introduced by the laser sensor is specified for distances as large as 1.2 m, which is about twice the maximum height of the sensor above the ground, given the closed structure of the scanner. In fact, as shown later, the maximum distance measured is just below 0.6 m. The error curves presented in the datasheet of the sensor show that the measured distance is slightly larger than the real one and that the error increases with the distance, reaching 6% at 0.6 m for the grey surface. In the lower range of the scale the error is below 3%.

As the error is not constant and depends on factors such as the reflecting color, the size of the reflection area and its distance to the sensor, it is difficult to bound it appropriately. In Section 5.4, a linear regression is proposed to calibrate the scanner. The scanner is used to evaluate, for example, wheat coverage, and the real values are measured using a measuring tape. With this, a first-order function can be computed and the performance of the scanner is improved within the confidence interval provided by the regression.

The CNC mechanical movement has a maximum error of 14% in positioning the sensor for a reading, as explained in Section 3.1 for the maximum resolution, which is a grid with 3.5 mm spacing between samples. This error has a uniform distribution, as it depends mostly on the stretching of the strings, which varies randomly for each step. The actual mean is 0.25 mm, which is just 7% of the resolution, with a standard deviation of just 0.14 mm, which is 4% of the resolution. It is important to remark here that the proposed method is not intended to determine the absolute position of the vegetal cover but its distribution, and so the error introduced by the CNC positioning system is not statistically significant.
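The percentages quoted above follow from the moments of a uniform distribution. The short sketch below reproduces them under the assumption that the positioning error is uniformly distributed between 0 and the 0.5 mm maximum; the function name `uniform_error_stats` is hypothetical.

```python
import math


def uniform_error_stats(max_error_mm, spacing_mm):
    """Moments of an error assumed uniform on [0, max_error_mm], relative to the grid spacing."""
    mean = max_error_mm / 2               # mean of U(0, b) is b / 2
    std = max_error_mm / math.sqrt(12)    # standard deviation of U(0, b) is b / sqrt(12)
    return {
        "max_pct": 100 * max_error_mm / spacing_mm,
        "mean_mm": mean,
        "mean_pct": 100 * mean / spacing_mm,
        "std_mm": std,
        "std_pct": 100 * std / spacing_mm,
    }


if __name__ == "__main__":
    # 0.5 mm maximum error on a 3.5 mm grid, as stated in the text.
    for name, value in uniform_error_stats(0.5, 3.5).items():
        print(f"{name}: {value:.2f}")
```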

4. Coverage Crop Residues 3D Model

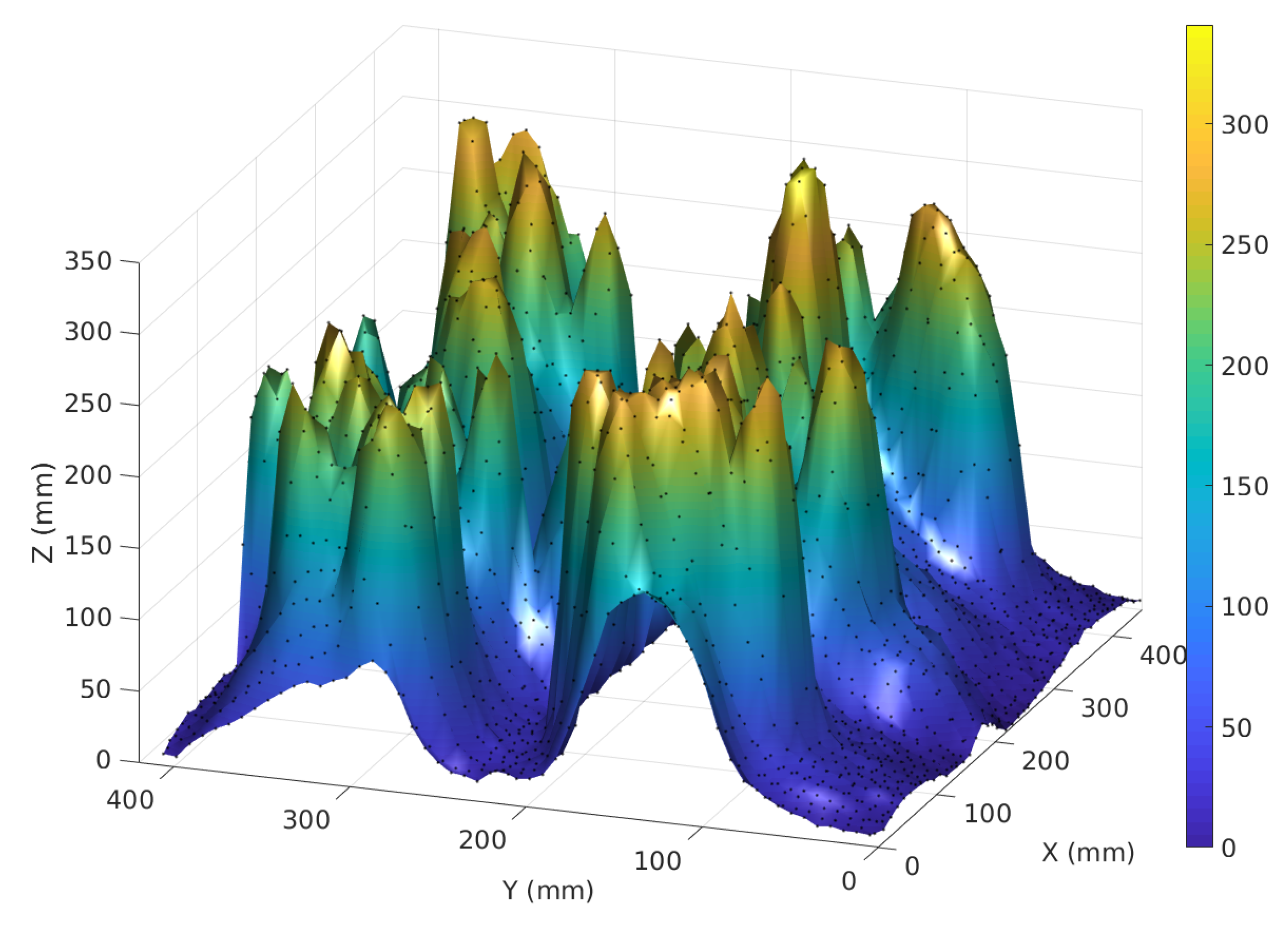

In order to test the instrument, a mock-up was used to mimic a portion of the soil with wheat stubble. The mock-up shown in Figure 5 consists of a polystyrene base covered with soil, where two rows of wheat plants were inserted 17.5 cm apart, which is a row spacing commonly used for winter crops by the agricultural producers in this region. The plants were cut at 35 cm, simulating the cut made by a combine harvester.

For each pair (X,Y) determined by the CNC mechanism, a sample is taken. Data obtained in this way are considered scattered, as they only sample the surface at a finite number of points and each reading is independent of the others. The basic statistical functions were run over the data set to obtain the minimum, maximum, mean, standard deviation, variance and median.

The coverage model was built as a function of the height for each coordinate pair in the (X,Y) position of the CNC mechanism. This allowed the representation of the surface as a function of two variables. The estimated volume of the crop residue coverage was computed taking the mean value of the heights multiplied by the total area of the base.
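As an illustration of these computations, the sketch below parses (x, y, z) samples and derives the basic statistics and the volume estimate (mean height times base area). It is not the authors' code: the comma separator, the millimetre units, the use of the bounding box of the samples as the base area, and the function names are all assumptions.

```python
import statistics


def parse_samples(text):
    """Parse lines of 'x,y,z' (assumed comma-separated) into float tuples."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if line:
            x, y, z = (float(v) for v in line.split(","))
            samples.append((x, y, z))
    return samples


def height_summary(samples):
    """Basic statistics of the measured heights and the mean-height volume estimate."""
    xs = [s[0] for s in samples]
    ys = [s[1] for s in samples]
    zs = [s[2] for s in samples]
    # Assumption: the scanned base is the bounding box of the sample positions.
    base_area = (max(xs) - min(xs)) * (max(ys) - min(ys))
    mean = statistics.fmean(zs)
    return {
        "min": min(zs),
        "max": max(zs),
        "mean": mean,
        "stdev": statistics.stdev(zs),      # sample standard deviation
        "variance": statistics.variance(zs),
        "median": statistics.median(zs),
        "volume": mean * base_area,         # mean height times base area
    }


if __name__ == "__main__":
    demo = "0,0,10\n100,0,30\n0,100,20\n100,100,40\n"  # made-up values in mm
    print(height_summary(parse_samples(demo)))
```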

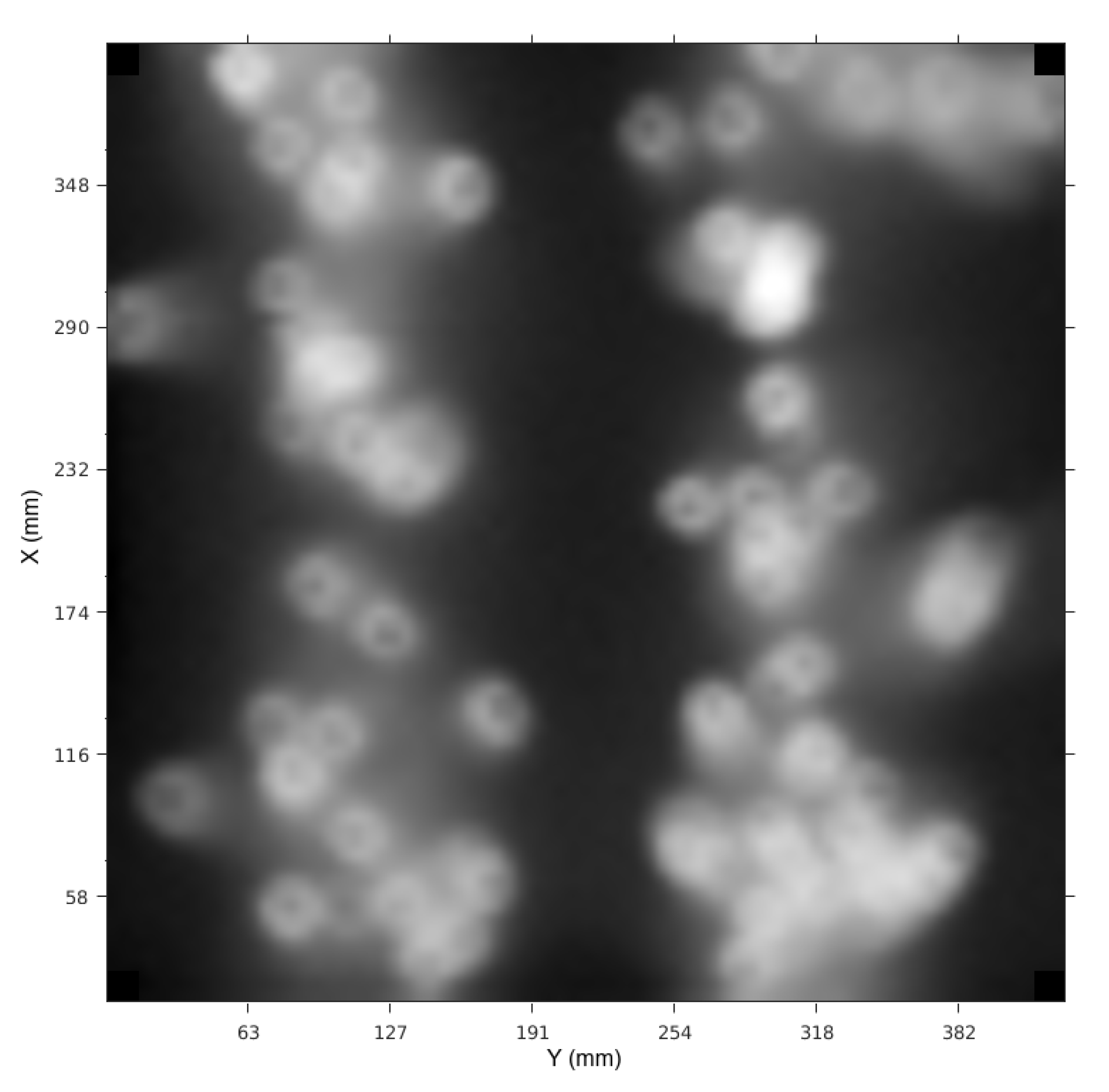

Data visualization can be done with different tools. The 3D plot shown in Figure 6 allows visualization of the general shape of the measured region and a visual comparison with the real structure. A different way is shown in Figure 7, using a gray-scale image. In this case, a regular grid for the (X,Y) coordinates was used so that each point was allocated to a pixel of an image; this was achieved using bilinear interpolation from the scattered data. The Z component was normalized to fit the range (0,1) and was represented as the gray level of the corresponding pixel.
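A simplified version of this gray-scale mapping is sketched below. It assumes the heights are already arranged on a coarse regular grid (at least 2 × 2), which is a simplification of the scattered-data interpolation described above; the helper names `bilinear`, `resample`, `normalize` and `to_gray` are hypothetical.

```python
def bilinear(grid, x, y):
    """Bilinear interpolation on grid[row][col]; x and y are in index units."""
    x0 = min(int(x), len(grid[0]) - 2)
    y0 = min(int(y), len(grid) - 2)
    tx, ty = x - x0, y - y0
    top = grid[y0][x0] * (1 - tx) + grid[y0][x0 + 1] * tx
    bottom = grid[y0 + 1][x0] * (1 - tx) + grid[y0 + 1][x0 + 1] * tx
    return top * (1 - ty) + bottom * ty


def resample(grid, rows, cols):
    """Resample the grid to rows x cols pixels (rows, cols >= 2)."""
    y_scale = (len(grid) - 1) / (rows - 1)
    x_scale = (len(grid[0]) - 1) / (cols - 1)
    return [[bilinear(grid, c * x_scale, r * y_scale) for c in range(cols)]
            for r in range(rows)]


def normalize(grid):
    """Scale all values linearly so that the minimum maps to 0 and the maximum to 1."""
    lo = min(min(row) for row in grid)
    hi = max(max(row) for row in grid)
    span = hi - lo
    return [[(v - lo) / span if span else 0.0 for v in row] for row in grid]


def to_gray(grid):
    """Convert normalized values to 8-bit gray levels."""
    return [[round(v * 255) for v in row] for row in grid]


if __name__ == "__main__":
    heights = [[0.0, 10.0], [20.0, 30.0]]  # made-up heights in mm
    print(to_gray(normalize(resample(heights, 3, 3))))
```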

5. Experimental Results

From the measurement process, an array of 3D coordinates of each capture was obtained. With this data, it was possible to obtain the maximum and average heights of the coverage, and by means of bilinear interpolation, the volume and the surface of the envelope. These variables were then used to obtain indices and evaluate their correlation with variables of agricultural interest.

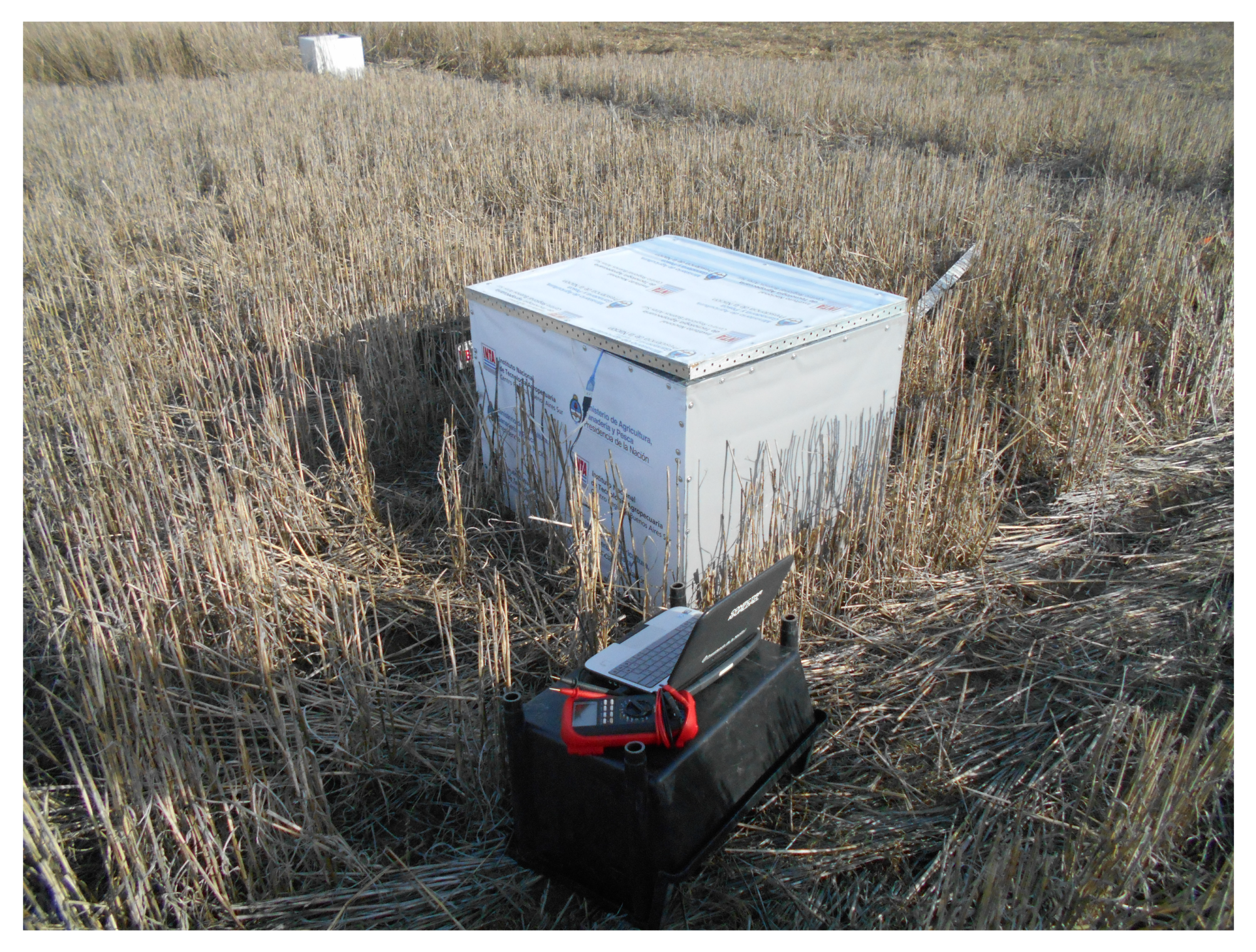

Figure 8 shows the scanner within the closed box while taking measurements in the field during one of the experiments.

5.1. Sampling Frequency

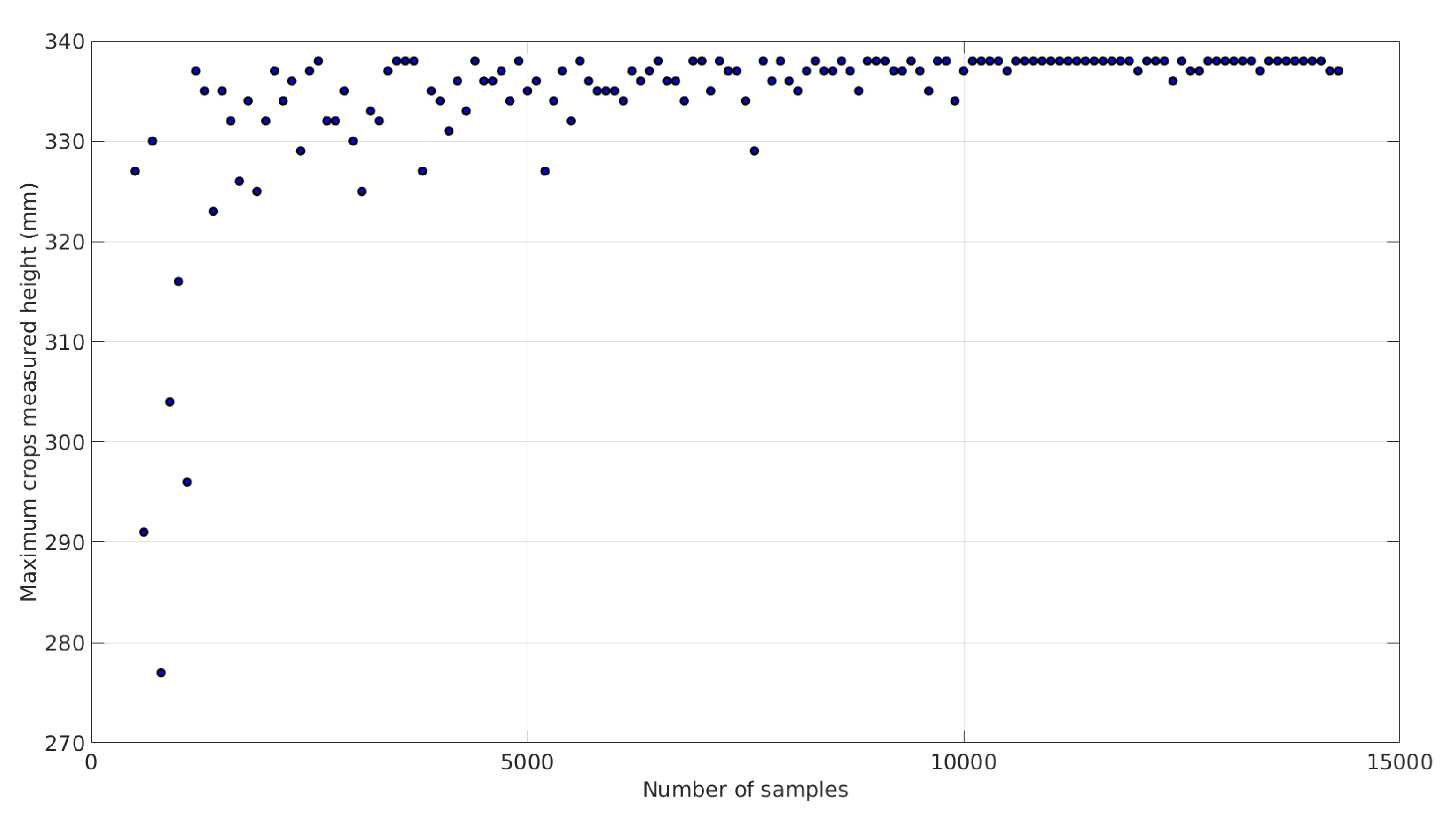

A high-resolution model of approximately 15,500 data samples, obtained by scanning the wheat stubble mock-up, was randomly subsampled without replacement to observe how the number of samples affects the main parameters.

Figure 9 shows the relationship between the maximum measured height and the number of samples of the model. Given the shape of the scanned crops, as the number of samples decreases, the probability of measuring the height of a wheat cane is lower. Taking the maximum height obtained from the high-resolution model as the real maximum height, the relative error is around 3% for 5000 samples and decreases to 1% for a model with 10,000 samples.

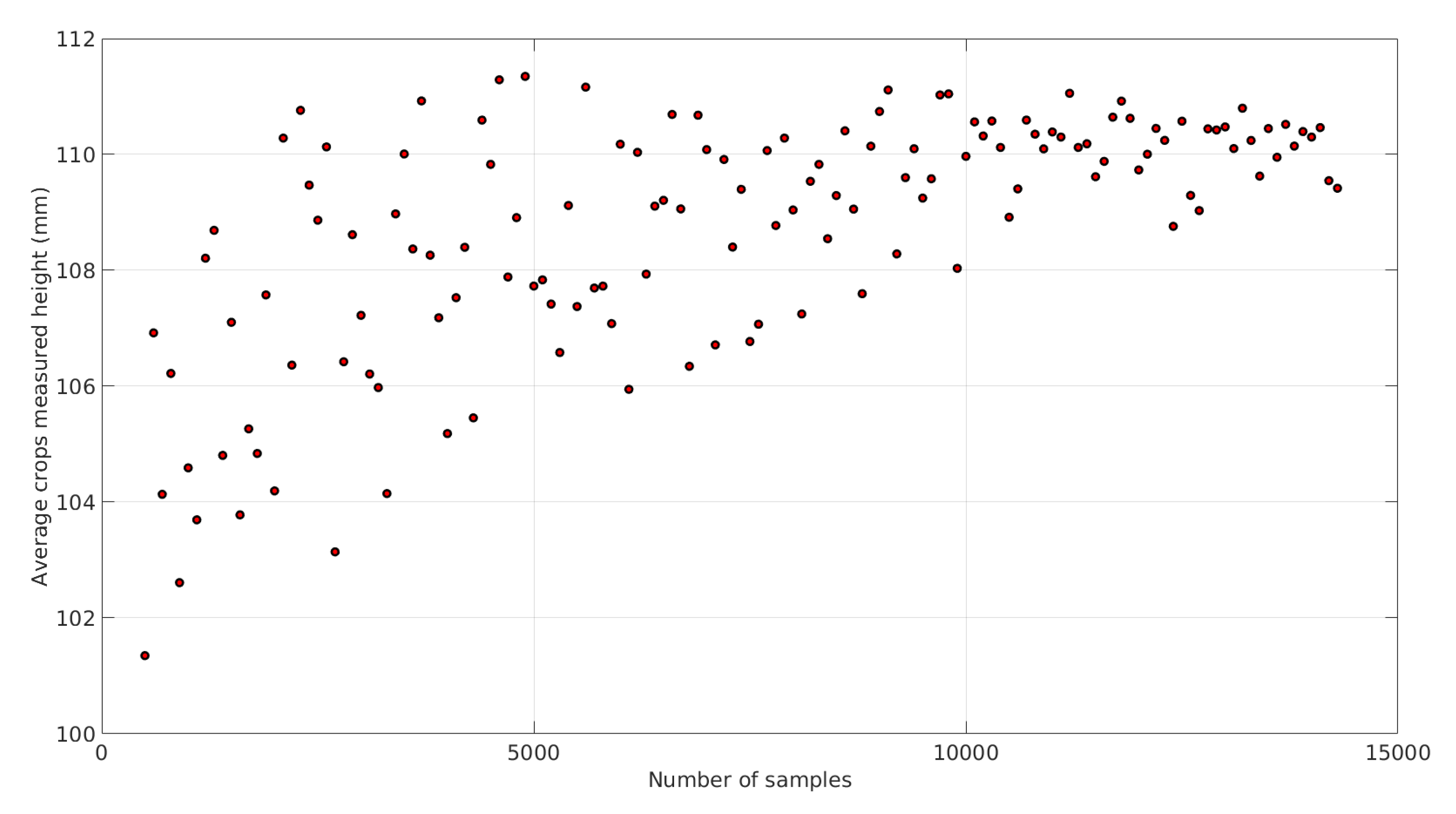

The dispersion of the average measured height is notably higher, as can be seen in Figure 10. However, the maximum relative error is around 9% and decreases to approximately 5% in a model with 5000 samples and 3% in a model with 10,000 samples.
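The subsampling experiment can be sketched as follows. This is an illustrative reconstruction rather than the authors' procedure: the number of trials, the fixed seed, the averaging of the relative errors over trials and the function name `subsample_errors` are assumptions, and the demo heights are made-up values.

```python
import random


def subsample_errors(heights, n, trials=100, seed=0):
    """Average relative error of the maximum and mean height over random subsamples of size n."""
    rng = random.Random(seed)
    ref_max = max(heights)
    ref_mean = sum(heights) / len(heights)
    max_err = 0.0
    mean_err = 0.0
    for _ in range(trials):
        subset = rng.sample(heights, n)  # random sampling without replacement
        max_err += abs(max(subset) - ref_max) / ref_max
        mean_err += abs(sum(subset) / n - ref_mean) / ref_mean
    return max_err / trials, mean_err / trials


if __name__ == "__main__":
    # Made-up model: mostly low residue with a few tall stems (heights in mm).
    model = [10.0] * 990 + [350.0] * 10
    for n in (50, 200, 1000):
        print(n, subsample_errors(model, n))
```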

5.2. Height

The laser sensor has a Field-Of-View (FOV) of 25 degrees. The area $A$ of the surface where the light is reflected for measuring the ToF can be expressed as a function of the distance $h$ being measured,

$$A = k\,h^{2},$$

where the value of the constant $k$ is $\pi \tan^{2}(\mathrm{FOV}/2) \approx 0.154$ for a circular reflection spot. The area of reflection increases proportionally to the square of the distance being measured. This represents a drawback when measuring pointed surfaces or geometries with small reflection areas, because the sensor does not detect them.
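To give a sense of scale, the sketch below evaluates the size of the reflection spot at several distances. It assumes a circular spot produced by a cone whose full opening angle equals the 25 degree FOV; the function names are hypothetical.

```python
import math

FOV_DEG = 25.0  # field of view of the sensor, as stated in the text


def footprint_constant(fov_deg=FOV_DEG):
    """Constant k in A = k * h**2, assuming a circular spot from a cone of full angle fov_deg."""
    return math.pi * math.tan(math.radians(fov_deg / 2)) ** 2


def footprint_area(h, fov_deg=FOV_DEG):
    """Area of the reflection spot at distance h (same length unit as h, squared)."""
    return footprint_constant(fov_deg) * h * h


def footprint_diameter(h, fov_deg=FOV_DEG):
    """Diameter of the reflection spot at distance h."""
    return 2 * h * math.tan(math.radians(fov_deg / 2))


if __name__ == "__main__":
    for h_mm in (150, 300, 600):
        print(h_mm, round(footprint_diameter(h_mm), 1), round(footprint_area(h_mm)))
```

Under these assumptions, the spot at a distance of 600 mm is roughly 266 mm wide, which is consistent with thin stems being hard to detect from a high sensor position.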

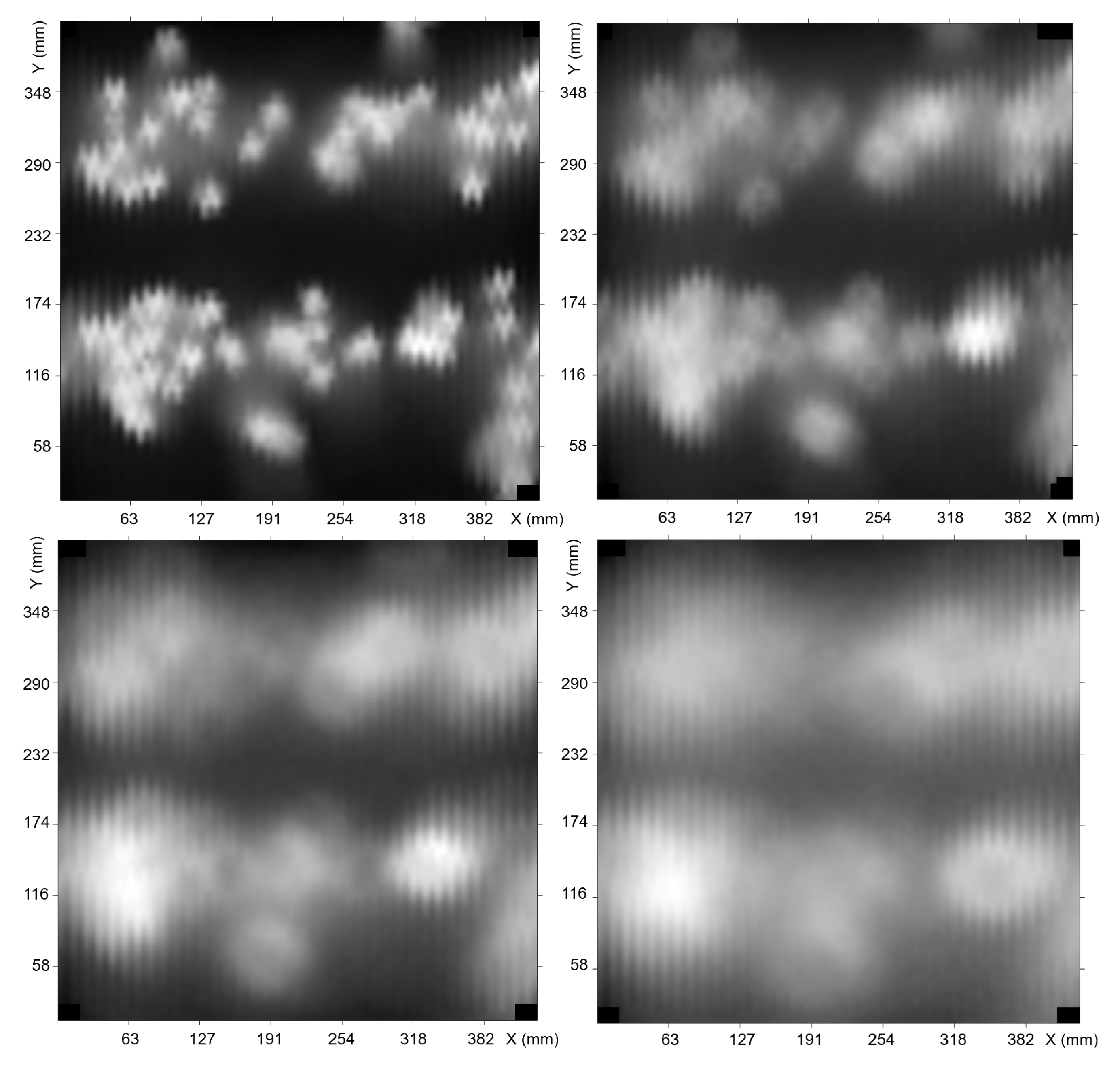

Figure 11 shows the grayscale images of data measured when the scanner is placed at different heights from the ground. The blurring effect occurs because the FOV of the sensor overlaps that of neighboring samples. Deblurring algorithms can be used to reconstruct the shape of the scanned object or to measure the real dimensions with reasonable precision, but in general they require some prior information about the geometry of the object or algorithms trained for the different cases.

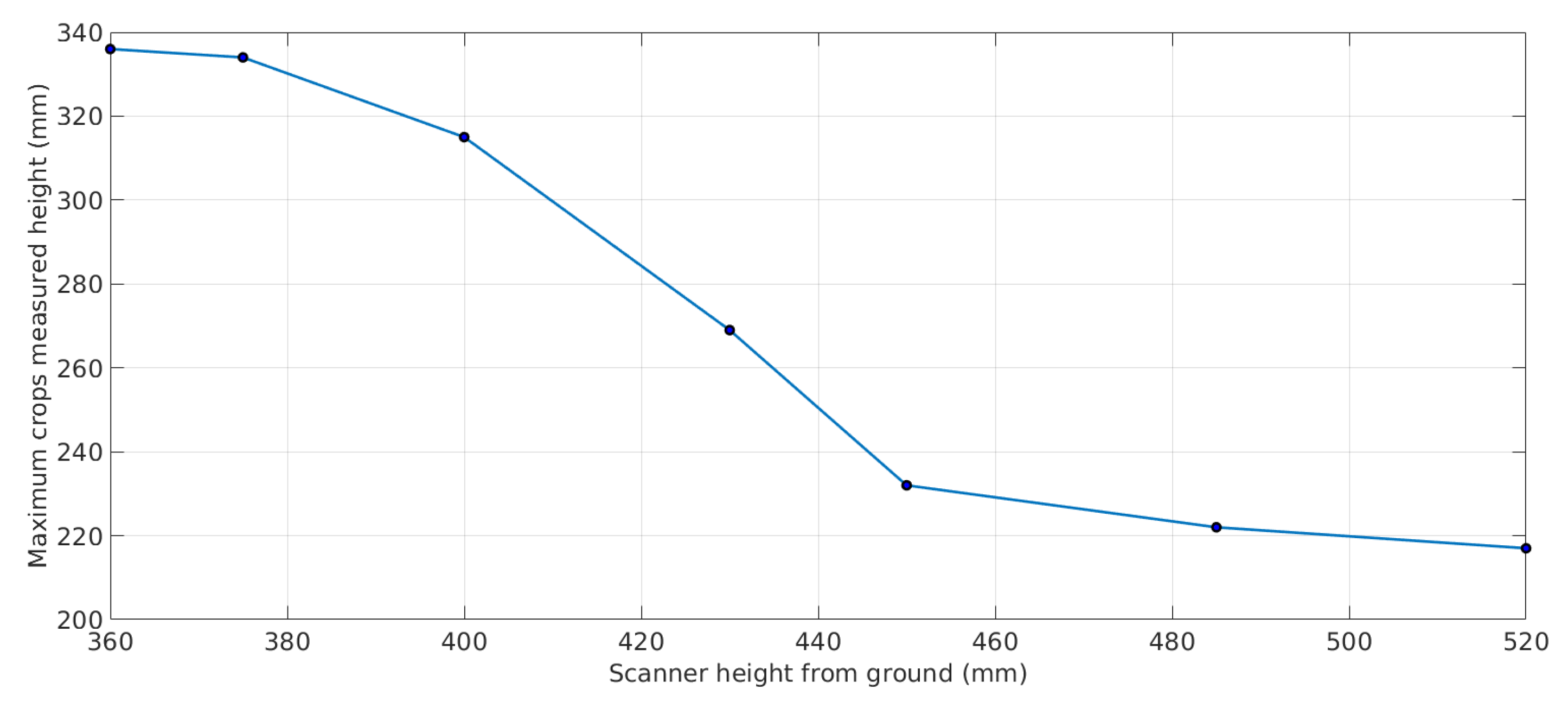

Figure 12 shows the relationship between the maximum measured height of the scanned crops and the ToF sensor altitude from the ground, while Figure 13 shows the relationship between the mean measured height and the ToF sensor altitude from the ground. As can be observed, the maximum height decreases as the scanner is positioned at a higher altitude. The shape of this curve may be different for different types of cover crops. The inverted sigmoid shape gives an idea of the measurement error, where the left end indicates a trend towards the real value of the maximum measured height and the right end tends towards an average value of the height of the model.

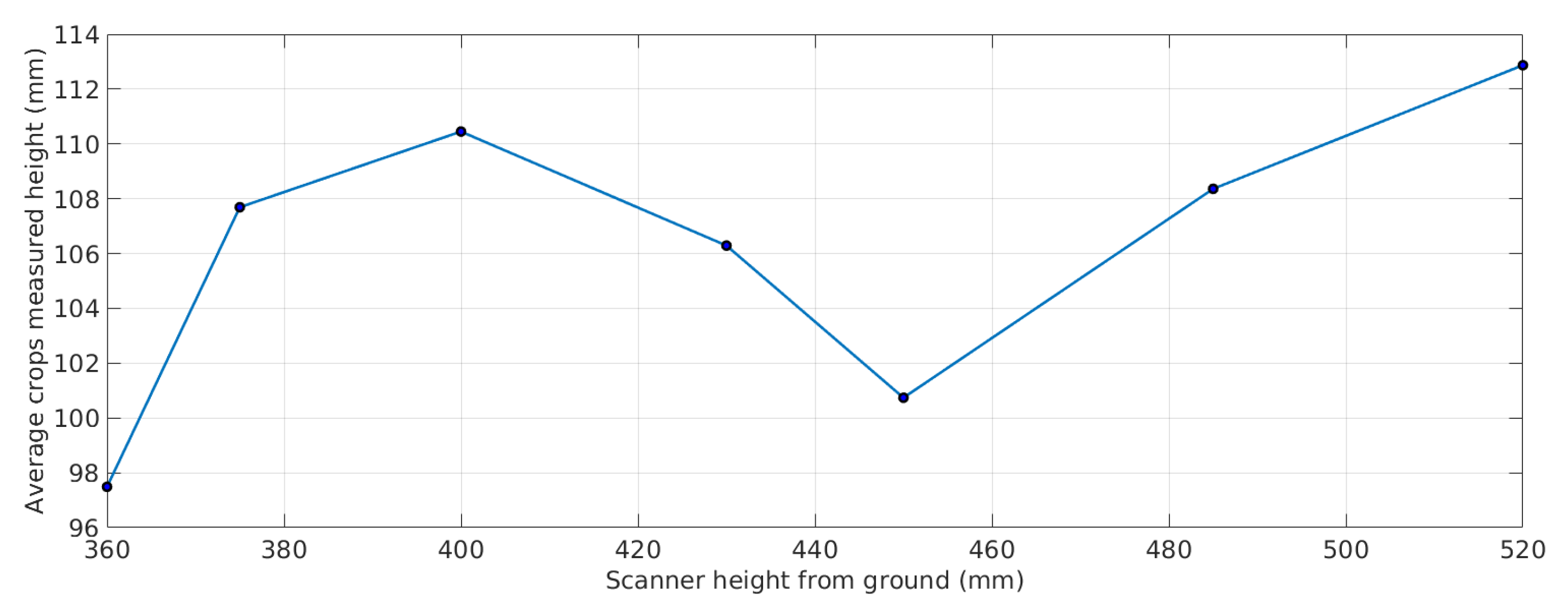

On the other hand, the average height of the measured crops does not change significantly if the scanner altitude is varied.

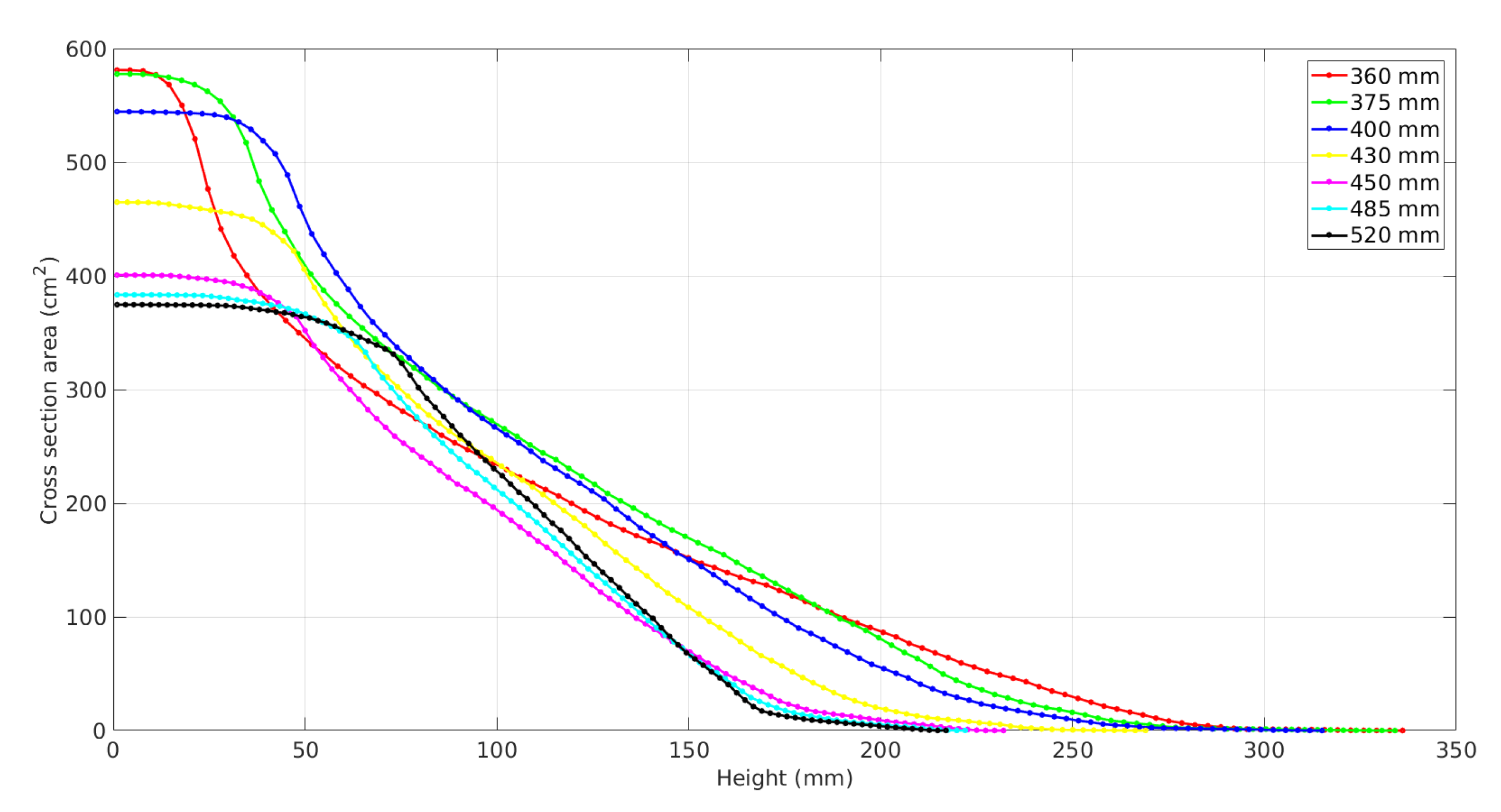

The cross section area functions shown in Figure 14 change as the scanner is located at different altitudes, indicating that the geometry of the measured data is different. In general, it is observed that the model is flattened in height and the edges are softened.

5.3. Time and Energy Efficiency

The instrument has a current draw of 750 mA, dominated by the motors that drive the mobile mechanism. At this load level, a small 55 Ah gel battery would support 58.67 h of continuous measurement without recharging.
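The autonomy estimate is a capacity-over-current calculation. Dividing the nominal 55 Ah by 0.75 A gives about 73.3 h, whereas the quoted 58.67 h corresponds to 44 Ah of usable charge. The sketch below reproduces the quoted figure by assuming that only 80% of the nominal capacity is used; this derating factor is an assumption and is not stated in the text.

```python
def runtime_hours(capacity_ah, current_a, usable_fraction=1.0):
    """Continuous runtime in hours for a given battery capacity and load current."""
    return capacity_ah * usable_fraction / current_a

CAPACITY_AH = 55.0      # from the text
LOAD_A = 0.75           # 750 mA, from the text
USABLE_FRACTION = 0.8   # assumption: depth-of-discharge limit, not given in the source

ideal = runtime_hours(CAPACITY_AH, LOAD_A)
derated = runtime_hours(CAPACITY_AH, LOAD_A, USABLE_FRACTION)
print(f"ideal: {ideal:.2f} h, with 80% usable capacity: {derated:.2f} h")
```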

The motors, fed at twice their nominal voltage, support a maximum stepping frequency of approximately 1.3 kHz. Since the stepper motors have 4096 steps per turn and use pulleys of 22 mm radius, the speed of the sensor head is estimated as approximately 44 mm/s. Experimentally, capturing a model of 8000 samples takes approximately 15 min.
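The head speed estimate follows from the step rate, the steps per turn and the pulley circumference. The sketch below uses only the values given in the text; the function name is hypothetical.

```python
import math

def head_speed_mm_s(step_hz, steps_per_turn, pulley_radius_mm):
    """Linear speed of the sensor head driven by a stepper motor and pulley."""
    turns_per_second = step_hz / steps_per_turn
    return turns_per_second * 2 * math.pi * pulley_radius_mm

speed = head_speed_mm_s(step_hz=1300, steps_per_turn=4096, pulley_radius_mm=22)
print(f"head speed: {speed:.1f} mm/s")

# Average acquisition rate implied by 8000 samples captured in 15 min
samples_per_second = 8000 / (15 * 60)
print(f"acquisition rate: {samples_per_second:.1f} samples/s")
```

The result is about 43.9 mm/s, in line with the 44 mm/s quoted above, and the reported capture time corresponds to roughly 9 samples per second on average.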

5.4. Calibrating the Scanner

When the scans were performed positioning the scanner at constant height from the ground, the measurement error was considerable.

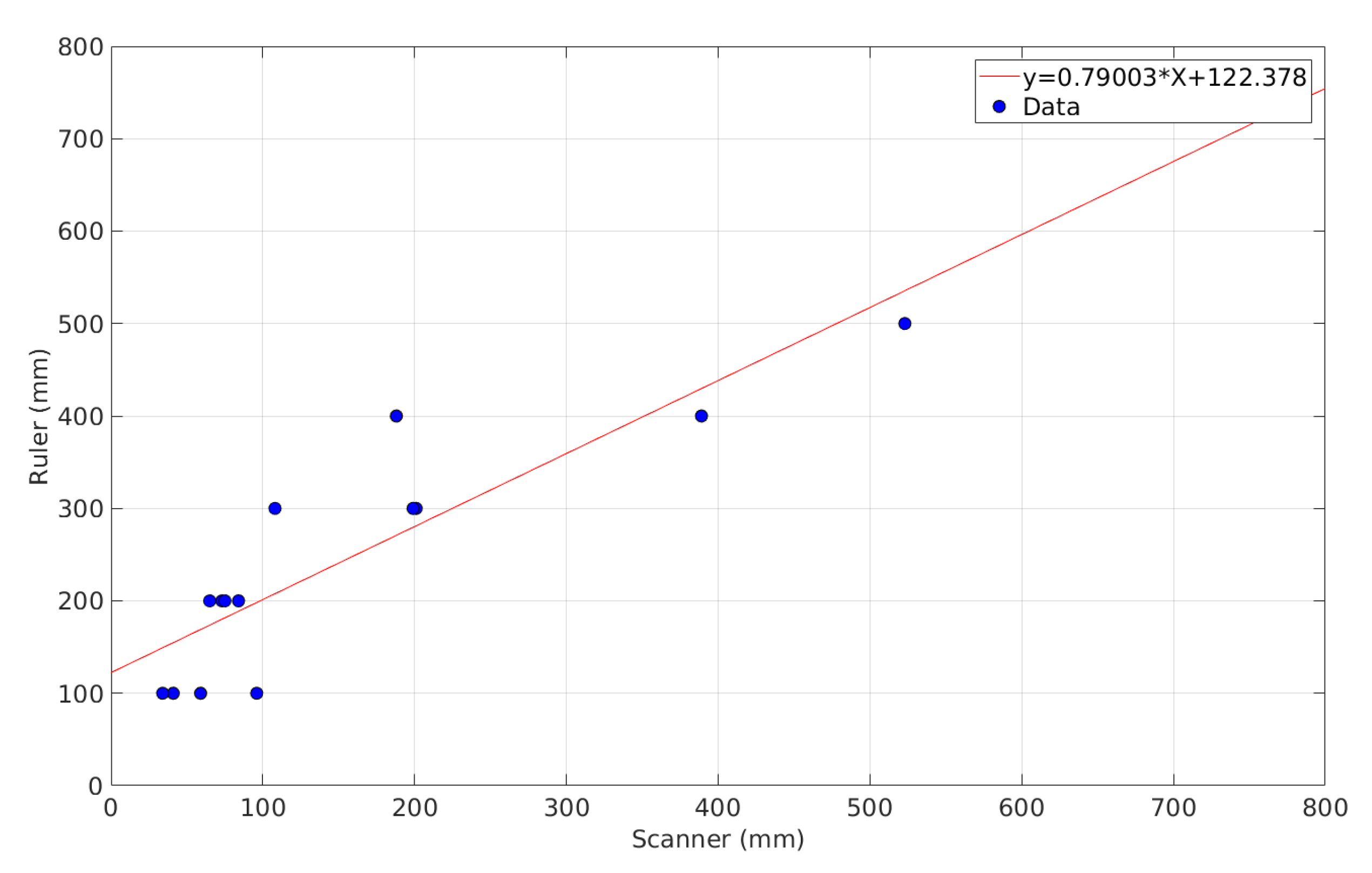

Figure 15 shows the comparison between the values measured with the traditional method, i.e., with a tape measure, and the values obtained with the scanner and the linear regression results. The experiment was carried out over a portion of soil with a vegetal coverage made up of mixed species and including varieties with broad and narrow leaves in order to avoid error when measuring different geometries.

This comparison was only made for the maximum height of the crop, since there are no other techniques available to measure other variables to validate the device. In this case, the standard error of the estimate was mm with a coefficient of determination .

Assuming a linear relationship, the crop height can be estimated from the maximum value measured with the scanner using Equation (2).
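The calibration described here is an ordinary least-squares fit of the tape-measure heights against the scanner maxima. The sketch below shows how the slope, intercept, standard error of the estimate and coefficient of determination could be computed with NumPy. The data pairs are hypothetical placeholders, not the measurements of Figure 15, and `fit_calibration` is a name introduced for this example.

```python
import numpy as np

def fit_calibration(scanner_mm, tape_mm):
    """Fit tape = slope * scanner + intercept; return fit quality metrics too."""
    x = np.asarray(scanner_mm, dtype=float)
    y = np.asarray(tape_mm, dtype=float)
    slope, intercept = np.polyfit(x, y, 1)
    residuals = y - (slope * x + intercept)
    ss_res = float(np.sum(residuals ** 2))
    ss_tot = float(np.sum((y - y.mean()) ** 2))
    # Standard error of the estimate with n - 2 degrees of freedom
    see = (ss_res / (len(x) - 2)) ** 0.5
    r2 = 1.0 - ss_res / ss_tot
    return slope, intercept, see, r2

# Hypothetical paired measurements in mm (placeholders, not data from the paper)
scanner = [120, 180, 240, 310, 400]
tape = [150, 230, 290, 380, 470]

slope, intercept, see, r2 = fit_calibration(scanner, tape)
print(f"height = {slope:.3f} * scanner + {intercept:.1f} mm")
print(f"standard error of the estimate: {see:.1f} mm, R^2: {r2:.3f}")
```

The fitted slope and intercept play the role of the coefficients in the linear calibration equation, and the same routine can be rerun for each scanner altitude or crop type.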

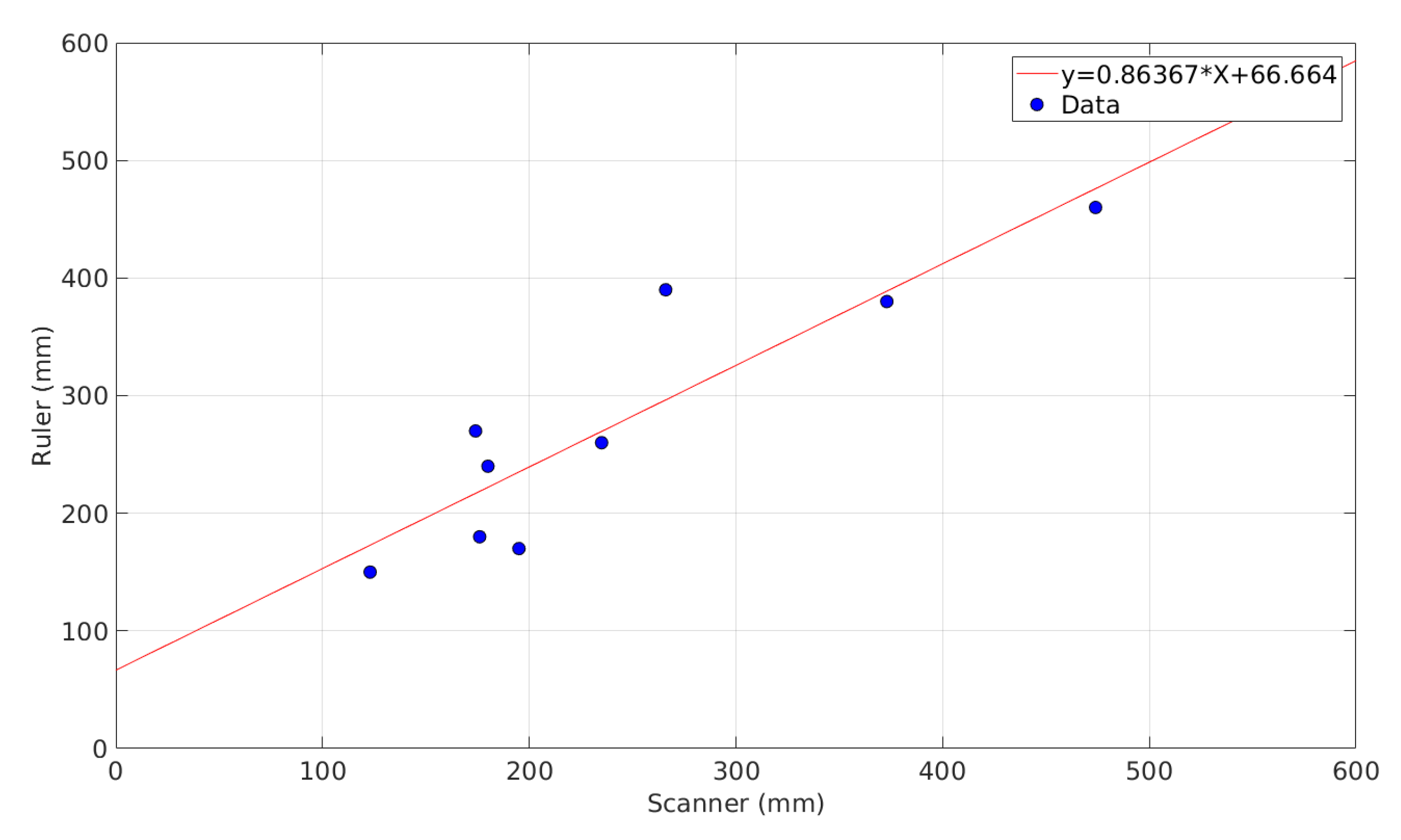

Figure 16 shows the comparison between the values measured with the traditional method and the values obtained with the scanner when placed at the lowest height, so as to minimize the error caused by overlapping reflection surfaces. The measured standard error of the estimate was 48.20 mm and the coefficient of determination was .

In this case, Equation (3) allowed a comparison between the values obtained with the scanner and the measurements performed with the traditional method.

The procedure can be repeated on different kinds of known vegetal coverage. In this way, the parameters can be computed for wheat, maize, soy or some other crop. The characterization can later be used to evaluate the coverage, with the kind of crop entered as a set-up parameter in the scanner.

These results show an approximate characterization of the measurement error of the scanner as an instrument to measure the height of vegetal coverage or cover crops. However, the main purpose of the instrument is to analyze the three-dimensional geometry of the crops by correlating characteristic parameters or indices of said geometry with variables of agronomic interest.

6. Final Remarks

This paper presented a structured process for the development, design and validation of a measuring instrument for empirical study in the field of agronomy, which aims to overcome the limitations found in traditional measurement methods.

It meets the premise of being a simple tool: the instrument is easy to build, its materials are available on the market, and it can be built by staff with minimal training. The device is simple to operate and the user applications are intuitive. The equipment can be easily carried by hand by one person and can also be transported in small vehicles.

From the obtained data, it was possible to calculate the density and spatial distribution of a vegetal coverage in a 2500 cm² area.

The sampling rate of the system does not significantly affect the accuracy of the model, so it was not necessary to obtain high-resolution models that take too long to measure and require the processing of heavy files. In order to determine the maximum height of the residue cover with the least possible approximation error, the measurements must be made at the lowest possible height with respect to the level of the coverage being scanned.

The proposed instrument is a proof of concept and consists of a prototype for experimental tests. It is possible to improve the model and achieve a measuring instrument for scientific use, with greater robustness and quality. In that sense, higher-speed stepper motors or a sensor array would make it possible to accelerate the mechanism or increase the acquisition speed, generating more accurate models with higher data density. In addition, better LiDAR sensors could be used to improve the detection of pointed geometries or surfaces with low reflection. In this way, the scalability of the system can be adjusted according to the study objective and the available budget.

Future lines of research will involve relating the estimated variables using the scanner with other variables of productive or scientific interest, such as biomass or the physical and chemical properties of the soil, that are altered according to the morphology of the vegetation cover.