3.1. Statistical Analysis

As noted in the previous subsection, some positive results can be drawn from inspecting the grades obtained by the students. However, a formal statistical analysis is necessary to obtain reliable implications between variables. The large number of enrolled students, as shown in Table 4, permits producing consistent statistical results. Please note that the experimental setup did not include control groups; these may be established as part of future work during the coming academic years.

In this context, Table 5 presents several statistical parameters in order to determine possible dependencies between the grades obtained and the total number of ICT resources used during each academic year. The variable $n$ represents the number of resources, from $n = 0$ in year 2013/14 to $n = 4$ in years 2016/17 and 2017/18 (see Table 2). Please note that the grades are still subdivided into ranks of achievement, but they are processed quantitatively as the marks obtained by the students, up to the fifth decimal.

To this purpose, Pearson’s and Spearman’s correlation coefficients have been computed [42]. It can be noticed that only the first rank of grades (80–100) shows a slight correlation (0.1784) with the number of ICT resources used during the course. Moreover, the $t$ test [42] is carried out in order to check whether there is a significant variation between samples. Specifically, the critical value for the test is $t_{\alpha/2,\,n-1} = 3.1824$, with a significance level of $\alpha = 0.05$, where $n = 4$ represents the maximum number of ICT resources included in the course for each academic year. The null hypothesis, $H_0$, assumes that there is no significant variation between the mean and the variance of the samples. Therefore, a linear dependence between grades within such ranks and the number of ICT resources would be confirmed. $H_0$ is validated by means of the $t$ test, whose values do not surpass the critical value of the test, that is, $t < t_{\alpha/2,\,n-1}$ (0.2560 < 3.1824, 0.1158 < 3.1824, etc.). In addition, the associated $p$-values for the computed $t$ values are greater than the significance level $\alpha$ (0.1842 > 0.05, 0.1540 > 0.05, etc.). As a result, this permits validating the null hypothesis $H_0$.
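As an illustration of the two correlation coefficients used in this analysis, the following minimal sketch computes Pearson’s and Spearman’s correlations in plain Python. The data and the function names (`pearson`, `average_ranks`, `spearman`) are hypothetical and are not taken from the study. Spearman’s coefficient is obtained here as Pearson’s correlation of the average ranks, which is one common way to handle ties; whether the study handled ties in this way is an assumption.

```python
from statistics import mean


def pearson(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def average_ranks(values):
    """Ranks starting at 1; tied values share the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # average of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical data: number of ICT resources used and the grade obtained.
n_resources = [0, 1, 2, 3, 4, 4]
grades = [55.0, 61.5, 58.0, 70.25, 74.0, 90.0]

print(f"Pearson:  {pearson(n_resources, grades):.4f}")
print(f"Spearman: {spearman(n_resources, grades):.4f}")
```

Both functions assume that neither sequence is constant, since a zero variance would make the coefficient undefined.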

The previous test, presented in Table 5, can be considered as initial evidence of dependency between the grades and the number of ICT resources used in the course. However, such dependencies are still slight. Consequently, we have produced a more consistent and general test for categorical variables, the $\chi^2$ test (chi-squared) [42]. In this case, the grades have been computed qualitatively over a contingency table: we registered the number of ICT resources used by each student within the same ranks of grades expressed in Table 5. Table 6 shows the computed $\chi^2$ values, as well as a set of parameters to measure the level of association. Here, the null hypothesis, $H_0$, refers to the independence of the variables, which are again the grades obtained within each rank and the number of ICT resources used during the course. This hypothesis is rejected, since the computed $\chi^2$ value is greater than the critical value and the corresponding $p$-value is lower than the significance level $\alpha$ (13.0236 > 7.8147 and 0.0021 < 0.05, respectively). This test confirms again the dependency between variables. The rest of the parameters listed in Table 6, which include the general contingency coefficient, $C$, its corrected version, and Cramér’s coefficient, $V$, show low levels of association. Ranges for these parameters are indicated inside brackets.
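A test of this kind can be sketched as follows. The contingency counts and the function names (`chi_squared`, `association`) are hypothetical. The formulas for $\phi$, $C$, the corrected $C$ and Cramér’s $V$ are the standard textbook definitions, and it is an assumption that they match the ones behind Table 6. The critical value 7.8147 is the one quoted in the text, which corresponds to 3 degrees of freedom at $\alpha = 0.05$.

```python
from math import sqrt


def chi_squared(table):
    """Pearson chi-squared statistic and degrees of freedom for a table of counts.

    Assumes every row and every column has a non-zero total.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / total
            stat += (observed - expected) ** 2 / expected
    dof = (len(row_totals) - 1) * (len(col_totals) - 1)
    return stat, dof


def association(stat, total, n_rows, n_cols):
    """Standard chi-squared-based association measures (assumed definitions)."""
    k = min(n_rows, n_cols)
    c = sqrt(stat / (stat + total))      # contingency coefficient C
    c_max = sqrt((k - 1) / k)            # upper bound of C for this table size
    return {
        "phi": sqrt(stat / total),
        "C": c,
        "C_corr": c / c_max,             # corrected C, rescaled to [0, 1]
        "V": sqrt(stat / (total * (k - 1))),
    }


# Hypothetical 2 x 4 table: two levels of resource use by four grade ranks.
counts = [[12, 30, 25, 8],
          [20, 28, 15, 4]]
stat, dof = chi_squared(counts)
total = sum(sum(row) for row in counts)

CRITICAL_3_DOF = 7.8147  # value quoted in the text (alpha = 0.05, 3 dof)
print(f"chi2 = {stat:.4f}, dof = {dof}, reject H0: {stat > CRITICAL_3_DOF}")
print(association(stat, total, len(counts), len(counts[0])))
```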

At this point, the statistical tests presented in Table 5 and Table 6 have only proved a low level of association and dependency between the grades obtained by the students and the number of ICT resources used during the course. This led us to devise more specific tests in order to check whether certain resources are more relevant than others. In this sense, Table 7 presents results similar to those of Table 5. Here, we have quantitatively analysed the grades obtained by the students who have used certain resources (activity assignment through the LMS, PBL sessions, and CSA assignments). Attendance has also been considered for this test. It is worth noting that the grades are now computed globally [0–100], without any particular classification within ranks.

Pearson’s and Spearman’s correlations demonstrate intermediate values (⪆0.3) between the grades and the rest of the variables considered. In particular, the use of CSA, with a correlation of 0.7606, provides the highest and most significant level of correlation. This is a more consistent result, which allows us to identify CSA as a relevant resource for the achievement of students, with a considerable linear dependency on the grades. Moreover, the analysis is completed by another $t$ test. In this case, the null hypothesis, $H_0$, implies independence between variables. The only variable that rejects $H_0$ is the use of CSA, since its $t$ value exceeds the critical value and its $p$-value is lower than $\alpha$ (9.2072 > 2.0518 and 9.1 × 10 < 0.05, respectively). Therefore, it is shown that, rather than using other resources or attending the sessions of the course, the use of CSA resources provides a significant and linear dependency on the grades of the students. Hence, we can preliminarily conclude that not all the ICT resources are equally relevant for the achievement of the students.

In addition, we have produced another qualitative test in order to evaluate the relationships between the use of the same resources presented in Table 7 and the qualitative grades: pass or fail. Accordingly, we have generated a contingency table with categorical grades, as a qualitative and dichotomous variable [0 = fail, 1 = pass]. The results of the $\chi^2$ test can be observed in Table 8. These data correspond to the last academic year, 2017/18, since it is the only year in which CSA has been used. In this manner, we intended to avoid possible biases resulting from the variables of other years. We assume $H_0$ as the null hypothesis for the independence of variables. It can be confirmed that this hypothesis is rejected by the variable associated with the use of CSA resources, since its $\chi^2$ value exceeds the critical value and its $p$-value is lower than $\alpha$ (23.7367 > 3.8415 and 5.7391 × 10 < 0.05, respectively). Again, the use of CSA proves to be more relevant for the students to pass the course, with the highest value of $\chi^2$, but also with the highest values for the rest of the association parameters (including $C$ and $V$). They all show a certain level of association between the use of CSA and the pass grade in the course.
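For a dichotomous pass/fail variable crossed with the use or non-use of a single resource, the contingency table is 2 × 2 and the test has one degree of freedom, which matches the critical value 3.8415 quoted in the text. The sketch below uses the closed-form 2 × 2 statistic and the exact upper-tail probability for one degree of freedom. The counts and the function names are hypothetical, and no continuity (Yates) correction is applied, which is an assumption about how the test was carried out.

```python
from math import erfc, sqrt


def chi_squared_2x2(a, b, c, d):
    """Chi-squared statistic for the 2 x 2 table [[a, b], [c, d]], no correction.

    Assumes every row and every column has a non-zero total.
    """
    n = a + b + c + d
    return n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))


def p_value_dof1(stat):
    """Upper-tail probability of a chi-squared variable with 1 degree of freedom.

    With one degree of freedom the statistic is the square of a standard
    normal variable, so P(X > stat) = P(|Z| > sqrt(stat)) = erfc(sqrt(stat / 2)).
    """
    return erfc(sqrt(stat / 2))


# Hypothetical counts: rows = used CSA / did not use CSA, columns = pass / fail.
stat = chi_squared_2x2(40, 5, 20, 25)
p = p_value_dof1(stat)

ALPHA = 0.05
CRITICAL_1_DOF = 3.8415  # value quoted in the text (alpha = 0.05, 1 dof)
print(f"chi2 = {stat:.4f}, p = {p:.3e}")
print("reject H0:", stat > CRITICAL_1_DOF and p < ALPHA)
```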

Finally, Table 9 depicts a set of statistics regarding the representative grades per academic year. These are computed with the grades obtained by those students who passed the course (>50). First, Table 9 presents the mean grade, its standard deviation (std), the standard error of the mean (sem), and the median grade. These values confirm the same deduction initially extracted from Table 4, in which a certain positive tendency in the grades may be observed during the last academic years. Here, the mean grade for the academic year 2017/18 proves to be the highest amongst the five academic years considered in the study. This might be interpreted as another positive outcome of the use of CSA, since this resource has only been used during the academic year 2017/18.

Additionally, the kurtosis coefficient [43] and Pearson’s skewness coefficient [44] have also been listed in the table. In general terms, the grade distributions for each academic year are quite similar. They may be assumed to be leptokurtic (kurtosis coefficient > 0), hence presenting tails which asymptotically decrease around the mean. Pearson’s skewness coefficient shows positive values for all the distributions. This implies that there exists a positive accumulation over the mean in the grade distributions. This fact can also be interpreted positively, since higher marks can be expected over the mean value.
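The descriptive statistics discussed for Table 9 can be sketched in plain Python as follows. The grades and the function name `describe` are hypothetical. Two definitions are assumptions, since the text does not state them: the kurtosis is taken as the excess kurtosis (fourth standardized moment minus 3, so that a normal distribution gives 0), and Pearson’s skewness is taken as the median-based coefficient, 3 (mean − median) / std.

```python
from math import sqrt
from statistics import mean, median, pstdev, stdev


def describe(grades):
    """Mean, std, sem, median, excess kurtosis and Pearson's median skewness."""
    n = len(grades)
    m = mean(grades)
    s = stdev(grades)        # sample standard deviation (n - 1 denominator)
    sigma = pstdev(grades)   # population standard deviation, used for moments
    fourth_moment = sum((g - m) ** 4 for g in grades) / n
    return {
        "mean": m,
        "std": s,
        "sem": s / sqrt(n),                       # standard error of the mean
        "median": median(grades),
        "kurtosis": fourth_moment / sigma ** 4 - 3,
        "skewness": 3 * (m - median(grades)) / s,
    }


# Hypothetical grades of students who passed the course (> 50).
passed = [52.0, 55.5, 58.0, 61.0, 63.5, 66.0, 70.0, 78.5, 91.0]
for name, value in describe(passed).items():
    print(f"{name:>9}: {value:.4f}")
```

The function needs at least two grades that are not all equal, since the standard deviation appears in the denominators.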

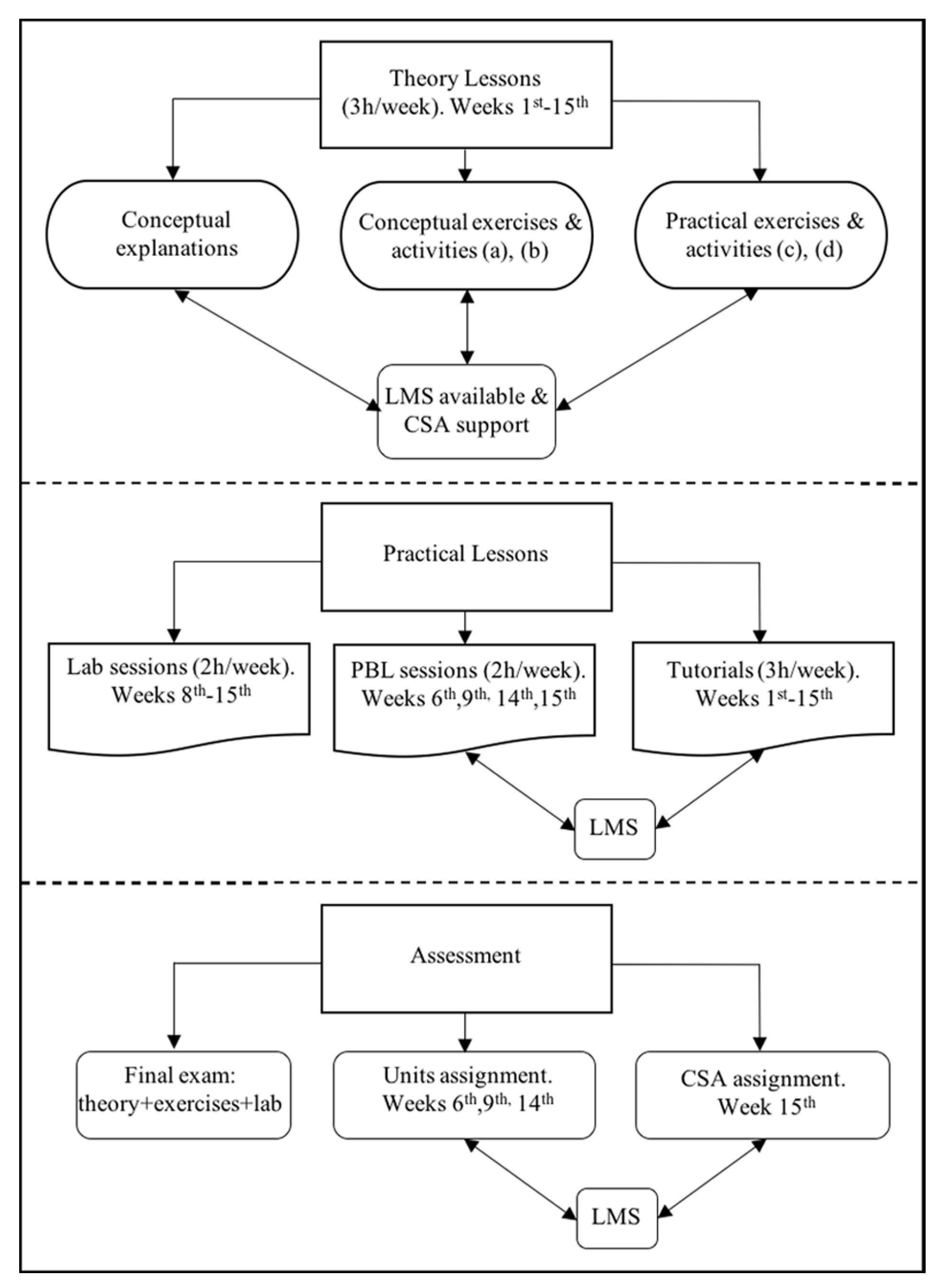

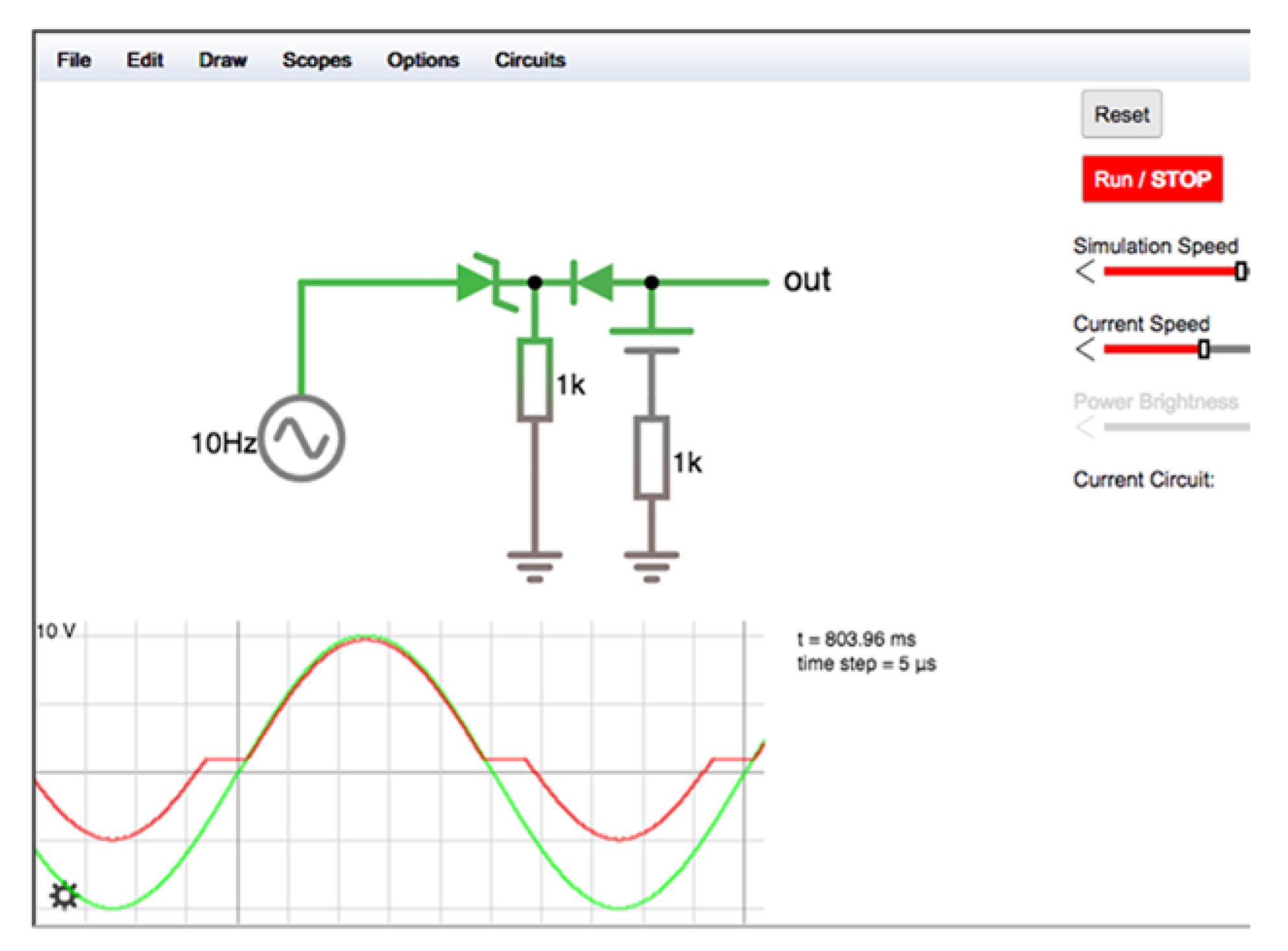

Despite the fact that the results confirm a certain correlation between the use of ICT resources and the grades of the students, it has also been shown that not all these technology-based resources are equally significant for the achievement of the students. In particular, the use of CSA is the resource which does provide a real enhancement of their skills, beyond their grades. This has to do with the type of activities designed with CSA, in accordance with the general learning program presented in Section 2, rather than exclusively with the grades.

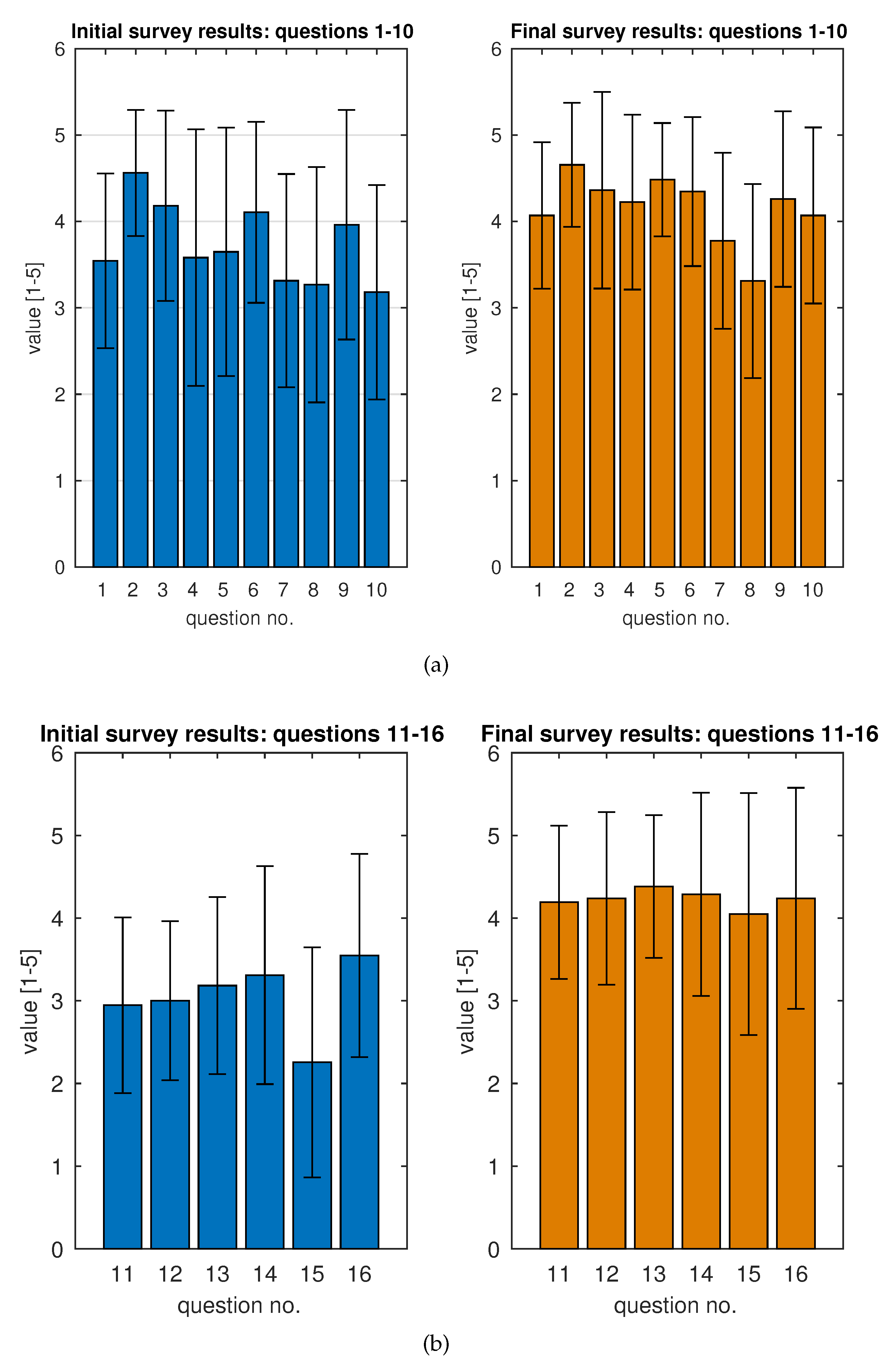

Figure caption (image 3): survey results acquired during the 1st week of the course and, on the right axes, survey results acquired during the 15th week of the course. Questions 1–10 (a). Questions 11–16 (b). Results are expressed with mean values and standard deviation.