Real-Time Detection and Recognition of Multiple Moving Objects for Aerial Surveillance

Abstract

1. Introduction

2. Materials and Method

2.1. Materials

2.2. The Proposed Method

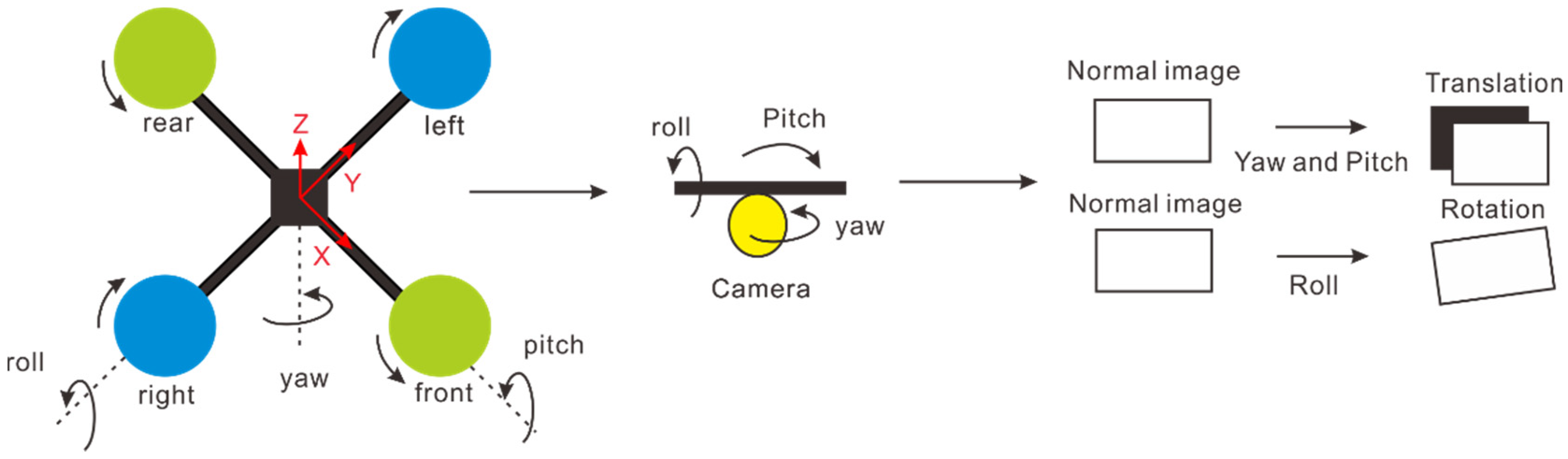

2.3. Step 1: Aerial Image Stabilization

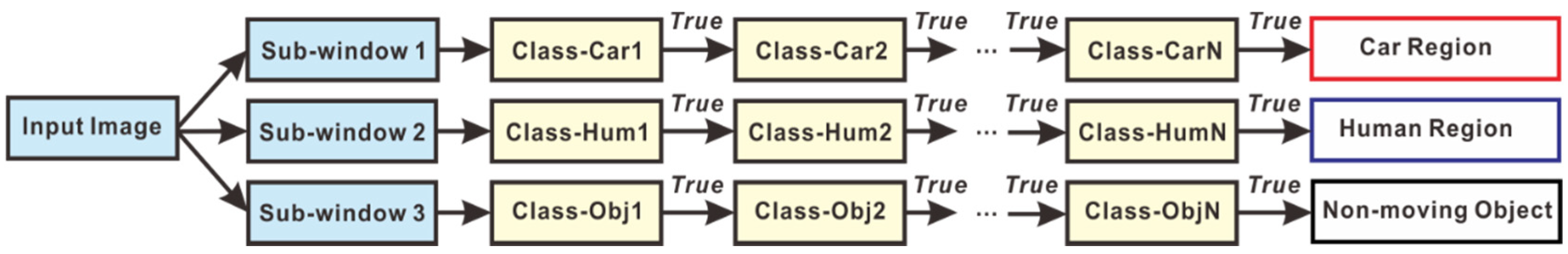
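The following is a minimal sketch of one common way to stabilize aerial frames. It assumes that camera motion between consecutive frames is a pure translation and that matched feature points are already available from some detector and matcher. RANSAC keeps the translation supported by the most matches, which is usually the static background, and the points are then shifted back by that amount. The names `estimate_translation_ransac`, `compensate`, `threshold` and `iterations` are hypothetical. The translation-only model is also a simplification, since practical stabilizers usually fit an affine or homography model.

```python
import random
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def estimate_translation_ransac(
    src: Sequence[Point],
    dst: Sequence[Point],
    threshold: float = 2.0,
    iterations: int = 100,
    seed: int = 0,
) -> Tuple[Point, List[int]]:
    """Estimate the global shift (dx, dy) that maps src points onto dst points.

    src[i] and dst[i] are one matched feature pair from consecutive frames
    (hypothetical input). Returns the shift and the inlier indices.
    """
    if len(src) != len(dst) or not src or iterations < 1:
        raise ValueError("need equal, non-empty point lists and iterations >= 1")
    rng = random.Random(seed)  # seeded so results are reproducible
    best_inliers: List[int] = []
    for _ in range(iterations):
        # A pure translation is fully determined by a single correspondence.
        i = rng.randrange(len(src))
        dx = dst[i][0] - src[i][0]
        dy = dst[i][1] - src[i][1]
        # Pairs whose displacement agrees with (dx, dy) within the threshold.
        inliers = [
            j
            for j in range(len(src))
            if abs(dst[j][0] - src[j][0] - dx) <= threshold
            and abs(dst[j][1] - src[j][1] - dy) <= threshold
        ]
        if len(inliers) > len(best_inliers):
            best_inliers = inliers
    # Least-squares refit on the consensus set: the mean displacement.
    n = len(best_inliers)
    dx = sum(dst[j][0] - src[j][0] for j in best_inliers) / n
    dy = sum(dst[j][1] - src[j][1] for j in best_inliers) / n
    return (dx, dy), best_inliers


def compensate(points: Sequence[Point], shift: Point) -> List[Point]:
    """Undo the estimated camera shift, mapping points back to the previous frame."""
    dx, dy = shift
    return [(x - dx, y - dy) for x, y in points]


if __name__ == "__main__":
    src = [(0, 0), (10, 0), (0, 10), (10, 10), (5, 5)]
    # Background pairs move by (5, -3) with the camera; the last pair is a moving object.
    dst = [(5, -3), (15, -3), (5, 7), (15, 7), (25, 15)]
    shift, inliers = estimate_translation_ransac(src, dst)
    print(shift, inliers, compensate(dst, shift))
```

After compensation, the background points return to their previous positions. The moving object keeps a residual displacement, and that residual is what later motion analysis can exploit.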

2.4. Step 2: Object Detection and Recognition

2.5. Step 3: Motion Vector Classification

Algorithm 1. The proposed classification for selecting moving objects.
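The sketch below shows how motion vectors can separate moving objects from static ones. It assumes a dense optical-flow field `flow[y][x] = (u, v)` computed on the stabilized frame, so that any remaining flow comes from object motion rather than camera motion. It also assumes axis-aligned detection boxes. Each detection's motion vector is the mean flow inside its box, and the detection is kept as a moving object when the vector's magnitude exceeds a hypothetical `min_speed` threshold in pixels per frame. This rule is not necessarily the one used in Algorithm 1, and the names `Detection`, `mean_motion_vector` and `select_moving` are illustrative.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Vector = Tuple[float, float]
Flow = Sequence[Sequence[Vector]]  # flow[y][x] = (u, v), hypothetical input


@dataclass
class Detection:
    label: str
    x: int  # left column of the bounding box
    y: int  # top row of the bounding box
    w: int
    h: int


def mean_motion_vector(flow: Flow, det: Detection) -> Vector:
    """Average (u, v) over the flow vectors inside the detection's bounding box."""
    su = sv = 0.0
    n = 0
    for row in flow[det.y:det.y + det.h]:
        for u, v in row[det.x:det.x + det.w]:
            su += u
            sv += v
            n += 1
    return (su / n, sv / n) if n else (0.0, 0.0)


def select_moving(
    flow: Flow, detections: Sequence[Detection], min_speed: float = 1.0
) -> List[Tuple[Detection, Vector, float]]:
    """Keep detections whose mean motion magnitude exceeds min_speed (px/frame)."""
    moving = []
    for det in detections:
        u, v = mean_motion_vector(flow, det)
        speed = math.hypot(u, v)
        if speed > min_speed:
            moving.append((det, (u, v), speed))
    return moving


if __name__ == "__main__":
    flow = [[(0.0, 0.0)] * 6 for _ in range(4)]
    for yy in (1, 2):
        for xx in (1, 2):
            flow[yy][xx] = (3.0, 4.0)  # a small region moving 5 px/frame
    dets = [Detection("car", 1, 1, 2, 2), Detection("person", 4, 0, 2, 2)]
    for det, vec, speed in select_moving(flow, dets):
        print(det.label, vec, speed)
```

Averaging over the box makes the vector less sensitive to noisy individual flow values. A real system might also check direction consistency across frames before accepting an object as moving.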

3. Results and Discussion

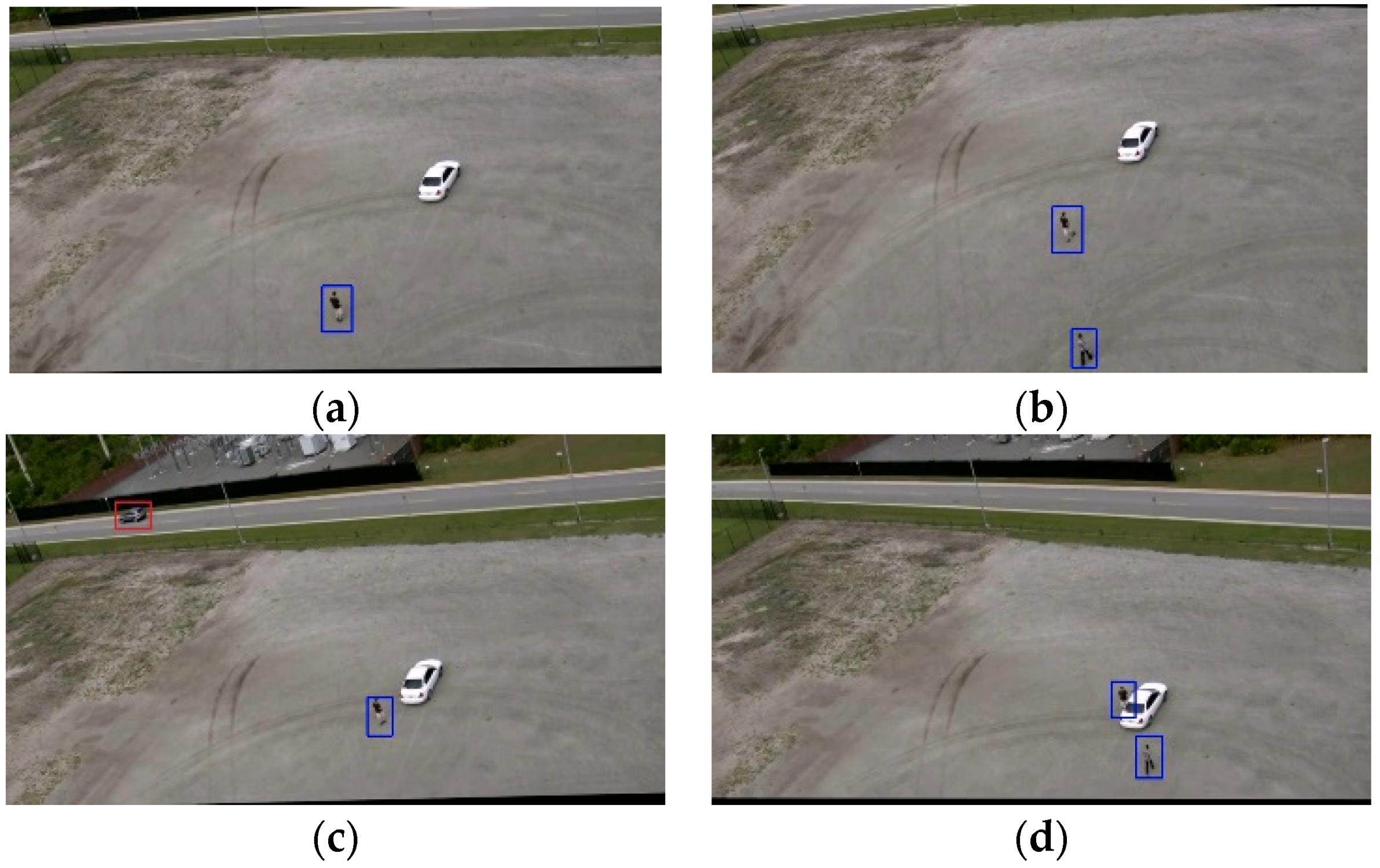

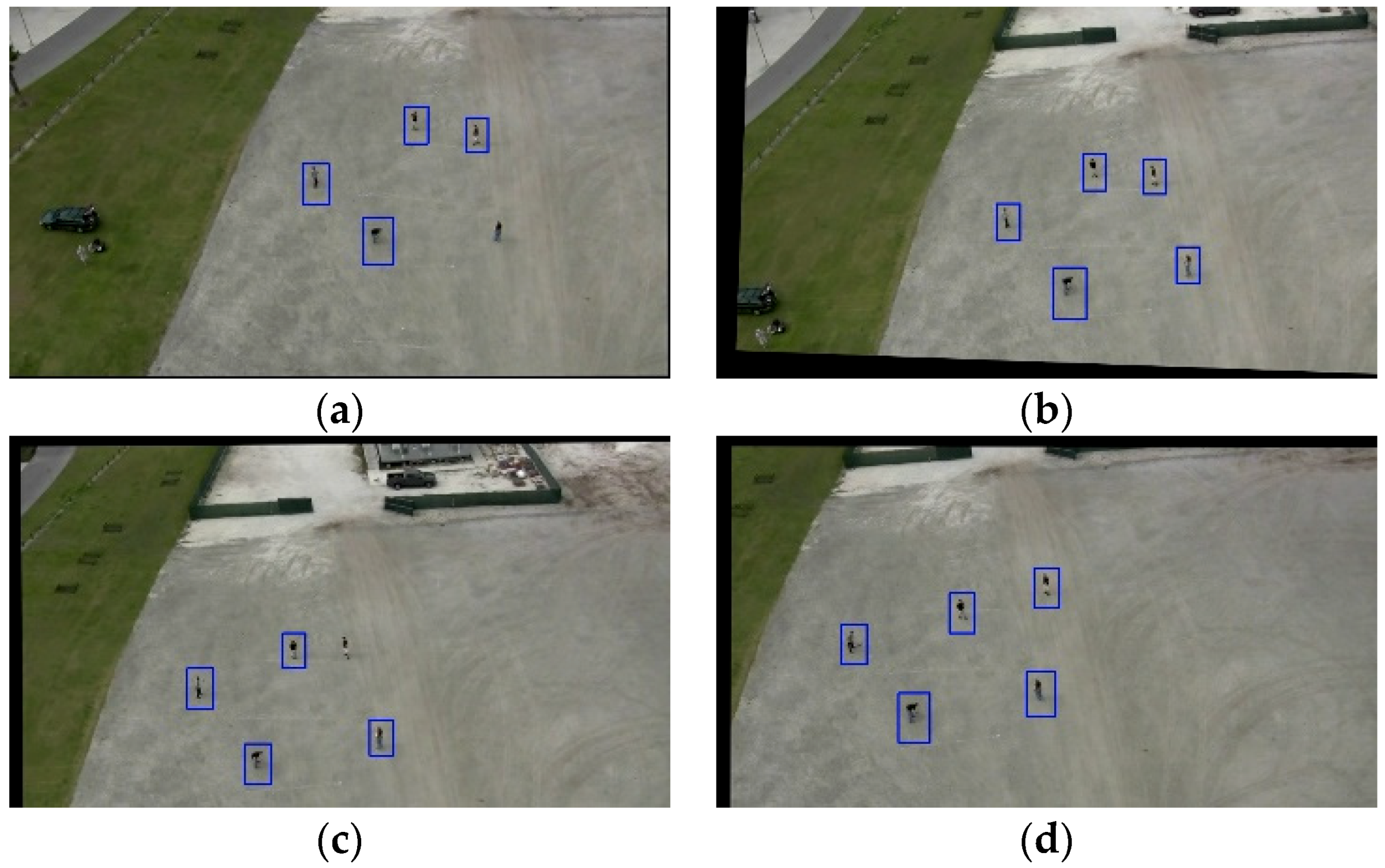

3.1. Result of Motion Vectors

3.2. Result of Moving Objects Detection

4. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Video Name | Average FPS |
|---|---|
| Action1 | 49.9 |
| Action2 | 42.16 |
| Action3 | 49.2 |
| Average | 47.08 |
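Within rounding, the Average row equals the arithmetic mean of the three per-video rates. The sketch below shows one way to measure a per-video figure like these. It assumes frame rate is defined as frames processed divided by wall-clock processing time. The exact timing protocol, for example whether video decoding is included, is not stated here, and `measure_fps`, `average_fps` and `process_frame` are hypothetical names.

```python
import time
from statistics import mean
from typing import Any, Callable, Iterable


def measure_fps(process_frame: Callable[[Any], object], frames: Iterable[Any]) -> float:
    """Run process_frame on every frame and return the average frames per second."""
    count = 0
    start = time.perf_counter()
    for frame in frames:
        process_frame(frame)
        count += 1
    elapsed = time.perf_counter() - start
    if count == 0:
        return 0.0
    # Guard against a zero interval on extremely fast dummy workloads.
    return count / elapsed if elapsed > 0 else float("inf")


def average_fps(per_video_fps: Iterable[float]) -> float:
    """Unweighted mean of per-video frame rates (the table's Average row matches this within rounding)."""
    return mean(per_video_fps)


if __name__ == "__main__":
    # Dummy workload standing in for the detection pipeline.
    fps = measure_fps(lambda frame: sum(range(10_000)), range(100))
    print(f"dummy pipeline: {fps:.1f} FPS")
    print(f"table average: {average_fps([49.9, 42.16, 49.2]):.2f} FPS")
```

An unweighted mean treats each video equally. If the videos differ greatly in length, total frames divided by total time would give a different overall figure.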

| Video Name | TP | FP | FN | Precision | Recall | F-Measure |
|---|---|---|---|---|---|---|
| Action1 | 124 | 7 | 6 | 0.95 | 0.95 | 0.95 |
| Action2 | 245 | 19 | 23 | 0.92 | 0.91 | 0.91 |
| Action3 | 184 | 12 | 25 | 0.94 | 0.88 | 0.90 |
| Average | | | | 0.94 | 0.91 | 0.92 |
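Assuming the standard definitions, the scores in the table follow from the raw counts as precision P = TP/(TP+FP), recall R = TP/(TP+FN), and F-measure F = 2PR/(P+R), which is the harmonic mean of P and R. A small sketch with illustrative names:

```python
from typing import NamedTuple


class Scores(NamedTuple):
    precision: float
    recall: float
    f_measure: float


def detection_scores(tp: int, fp: int, fn: int) -> Scores:
    """Precision, recall and F-measure (F1) from TP, FP and FN counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # Harmonic mean of precision and recall; equivalent to 2TP / (2TP + FP + FN).
    denom = precision + recall
    f_measure = 2 * precision * recall / denom if denom else 0.0
    return Scores(precision, recall, f_measure)


if __name__ == "__main__":
    counts = {"Action1": (124, 7, 6), "Action2": (245, 19, 23), "Action3": (184, 12, 25)}
    for name, (tp, fp, fn) in counts.items():
        s = detection_scores(tp, fp, fn)
        print(f"{name}: P={s.precision:.3f} R={s.recall:.3f} F={s.f_measure:.3f}")
```

Printing three decimals shows where the two-decimal entries come from. Depending on how intermediate values were rounded, the last digit of a recomputed value may differ from the table.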

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Rahmaniar, W.; Wang, W.-J.; Chen, H.-C. Real-Time Detection and Recognition of Multiple Moving Objects for Aerial Surveillance. Electronics 2019, 8, 1373. https://doi.org/10.3390/electronics8121373
