Artificial Neural Networks to Assess Emotional States from Brain-Computer Interface

Abstract

1. Introduction

2. Materials and Methods

2.1. Materials

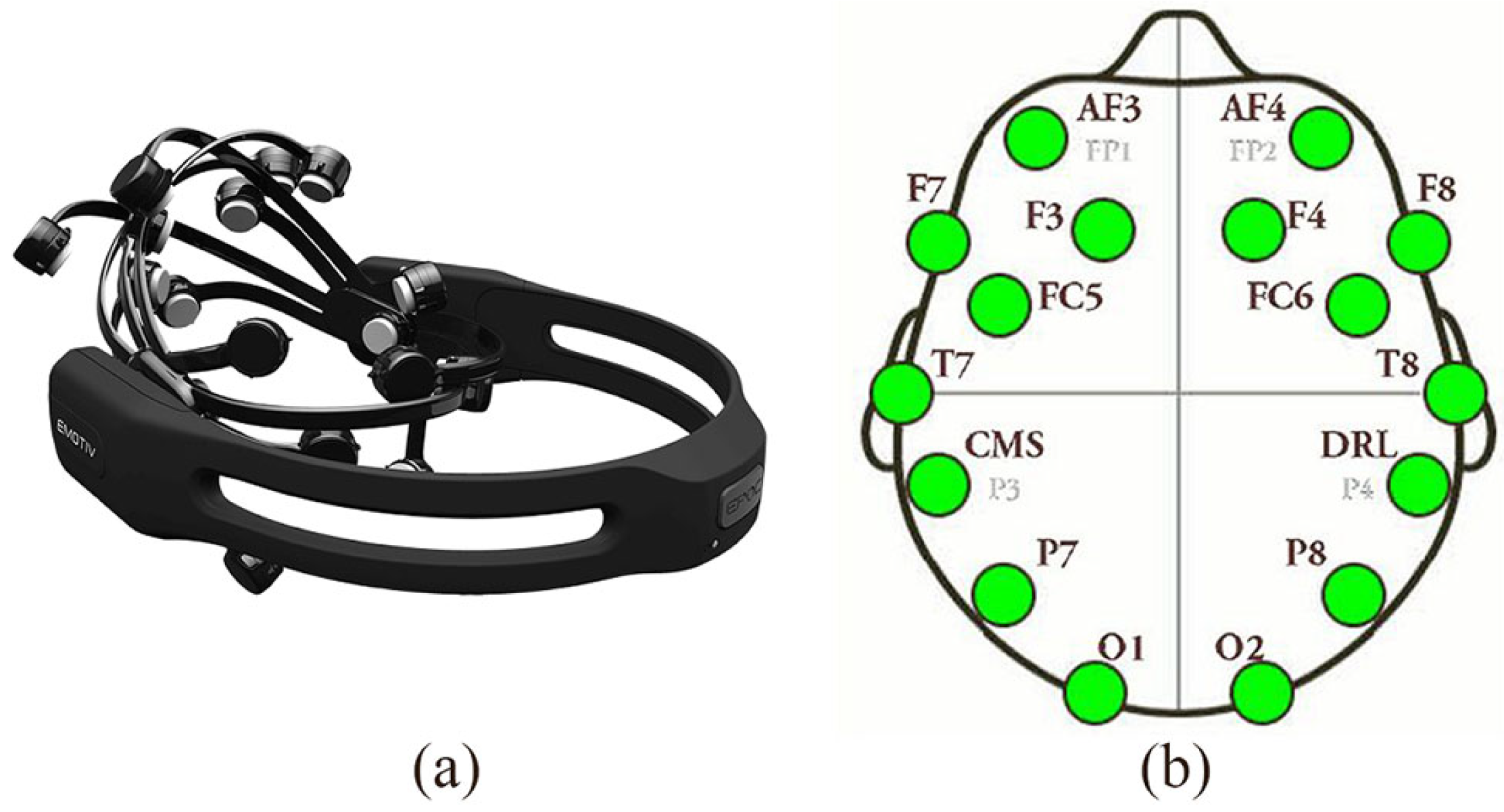

2.1.1. Emotiv EPOC+ Headset

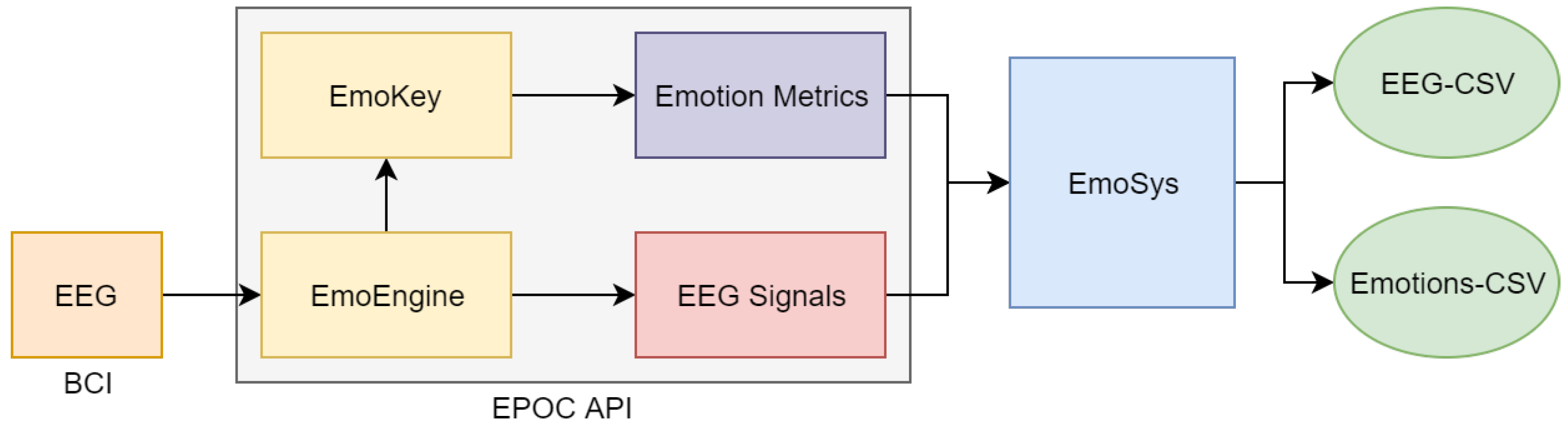

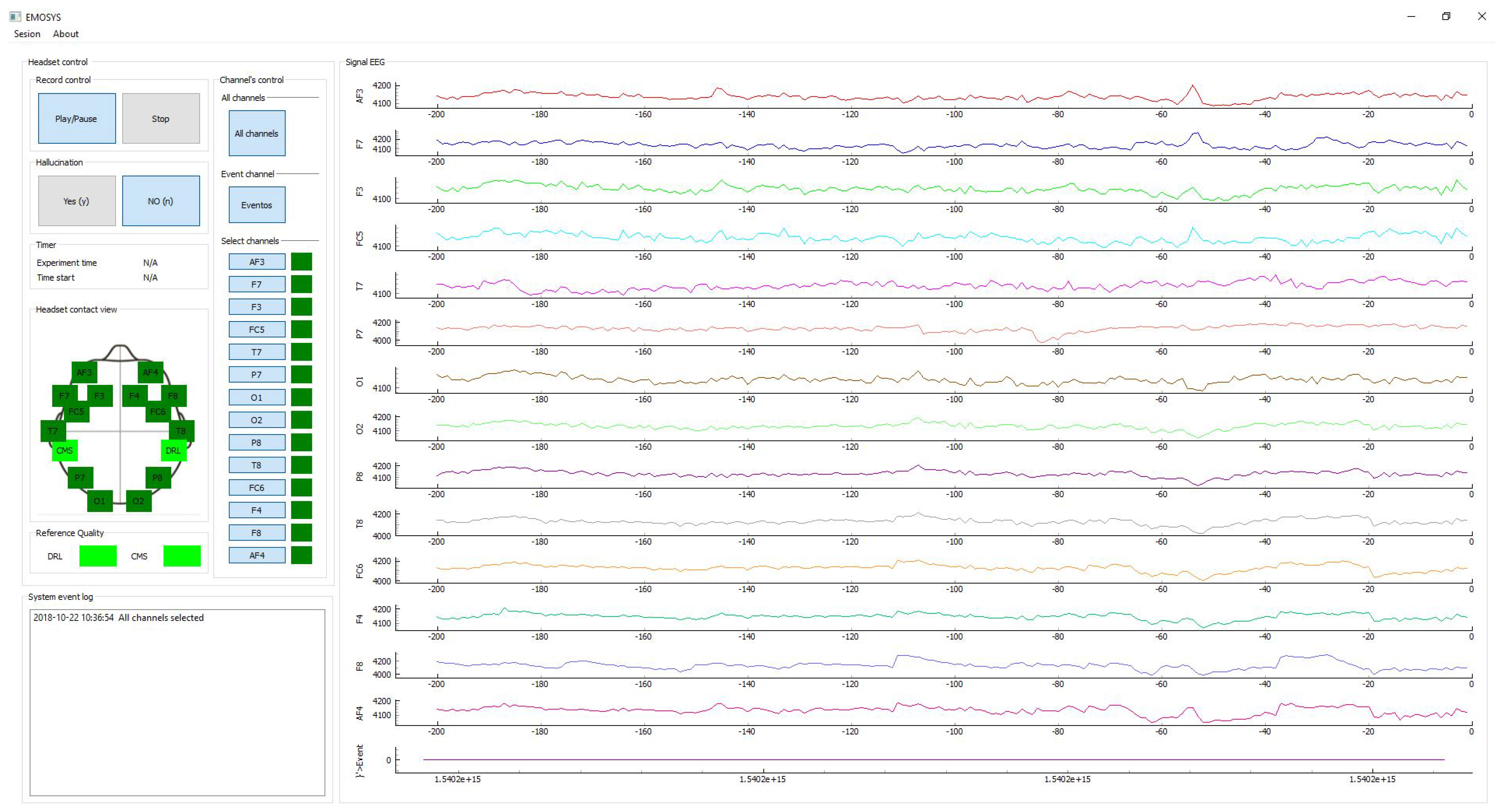

2.1.2. EmoSys Software Suite

2.2. Methods

2.2.1. Participants

2.2.2. E-Prime and IAPS

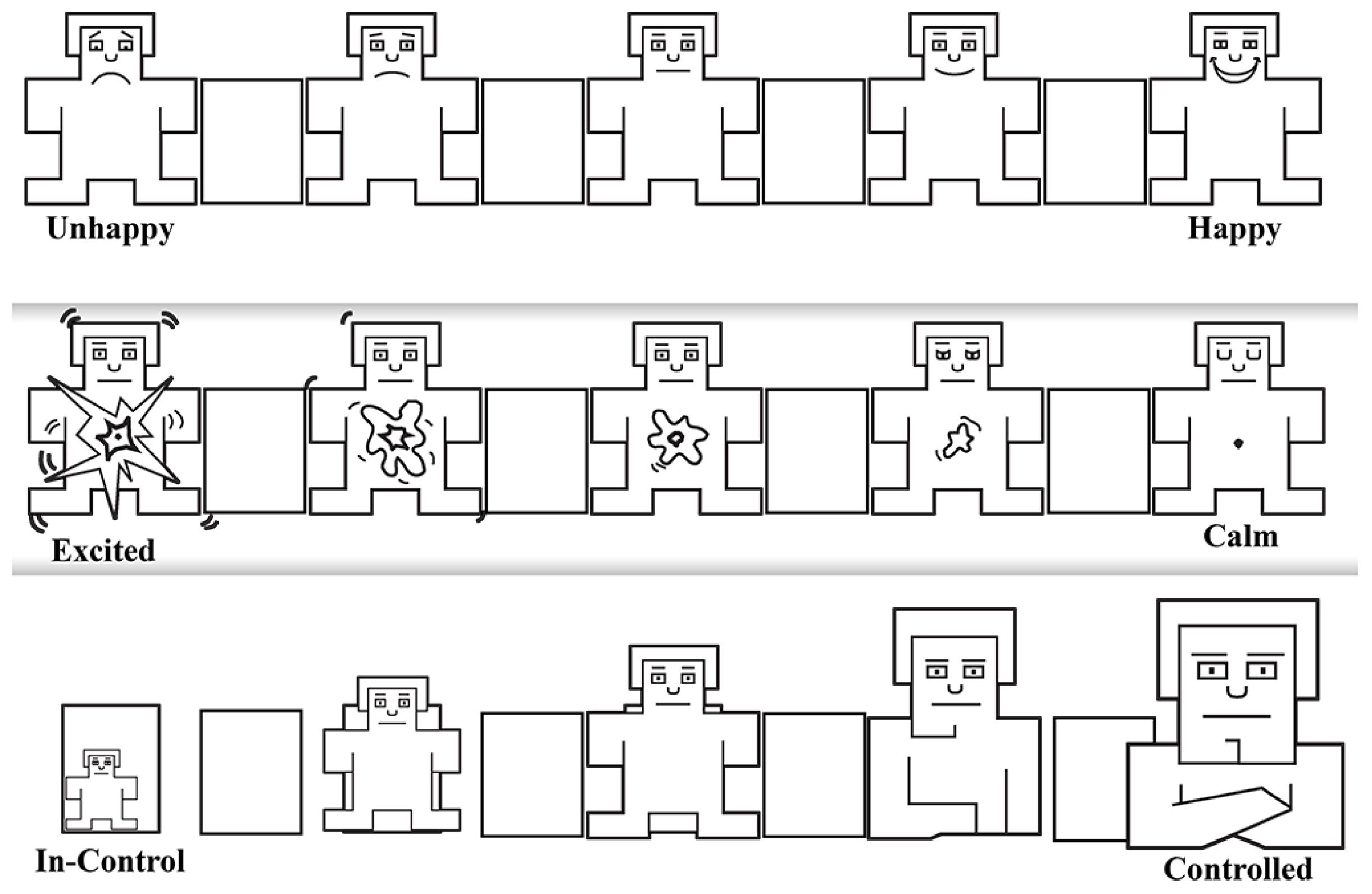

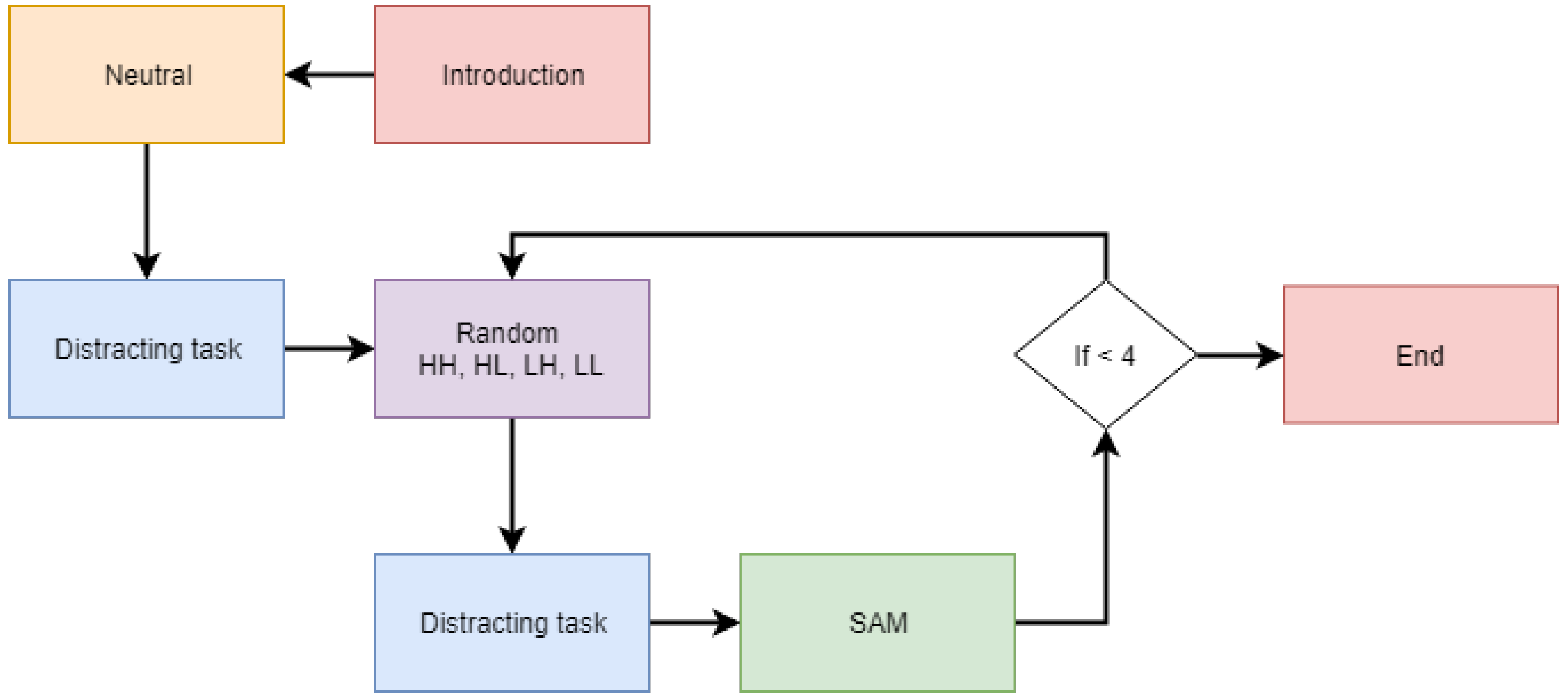

2.2.3. Experiment Design

2.2.4. Multilayer Perceptron Architecture

3. Results

3.1. Assessment of SAM Responses vs. IAPS Values

3.2. Different ANN Configurations to Compare Emotiv EPOC+ API Outcomes with IAPS Values

4. Conclusions and Discussion

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| API | Application Programming Interface |
| ANN | Artificial Neural Network |
| BCI | Brain-Computer Interface |
| EEG | Electroencephalography |
| EPOC | Emotiv EPOC |
| IAPS | International Affective Picture System |
| SAM | Self-Assessment Manikin |
| MLP | Multilayer Perceptron |
| L-M | Levenberg-Marquardt |
| BR | Bayesian Regularisation |
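
The two learning methods abbreviated above, L-M and BR, can be summarised in their standard textbook form. This is a general sketch, not the exact training settings used in the study. Levenberg-Marquardt updates the weight vector $\mathbf{w}$ using the Jacobian $\mathbf{J}$ of the network error vector $\mathbf{e}$ with respect to the weights and a damping factor $\mu$; Bayesian regularisation minimises a weighted sum of the squared errors $E_D$ and the squared weights $E_W$, with hyperparameters $\alpha$ and $\beta$ re-estimated during training:

$$
\Delta\mathbf{w} = -\left(\mathbf{J}^{\top}\mathbf{J} + \mu\,\mathbf{I}\right)^{-1}\mathbf{J}^{\top}\mathbf{e}
$$

$$
F(\mathbf{w}) = \beta E_D + \alpha E_W, \qquad E_D = \sum_{i=1}^{n} e_i^{2}, \qquad E_W = \sum_{j=1}^{m} w_j^{2}
$$

A large $\mu$ turns the L-M step into a small gradient-descent step of size $1/\mu$, while $\mu \to 0$ recovers the Gauss-Newton step. In BR, the $\alpha E_W$ term penalises large weights, which tends to favour smoother network mappings.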


| Experimental Condition | Valence | Arousal | Dominance |
|---|---|---|---|
| HH (high valence, high arousal) | 7.09 (1.61) | 6.43 (2.03) | 5.34 (2.19) |
| HL (high valence, low arousal) | 7.23 (1.54) | 3.26 (2.22) | 6.44 (2.10) |
| LH (low valence, high arousal) | 1.67 (1.21) | 6.93 (2.22) | 2.79 (2.11) |
| LL (low valence, low arousal) | 3.48 (1.51) | 3.65 (1.99) | 4.80 (2.08) |
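
The four conditions cross high and low valence with high and low arousal. A minimal sketch, assuming a cut-off at 5 (the midpoint of the 9-point IAPS rating scale; this threshold is an assumption, not a selection criterion stated in the table), checks that the condition values in the table fall in the expected quadrants. The helper `condition` is hypothetical.

```python
# Hypothetical helper: label a (valence, arousal) pair with one of the four
# experimental conditions. ASSUMPTION: the cut-off is 5, the midpoint of the
# 1-9 IAPS rating scale; it is not taken from the study.
MIDPOINT = 5.0


def condition(valence: float, arousal: float, midpoint: float = MIDPOINT) -> str:
    """Return 'HH', 'HL', 'LH' or 'LL' (valence level first, arousal second)."""
    v = "H" if valence > midpoint else "L"
    a = "H" if arousal > midpoint else "L"
    return v + a


# Valence and arousal values copied from the table above (standard deviations omitted).
TABLE = {
    "HH": (7.09, 6.43),
    "HL": (7.23, 3.26),
    "LH": (1.67, 6.93),
    "LL": (3.48, 3.65),
}

if __name__ == "__main__":
    for label, (v, a) in TABLE.items():
        print(label, "->", condition(v, a))
```

With these values every row is assigned to its own label; dominance is not used, since the conditions are defined only by valence and arousal.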

| Hidden Layer Configuration | Neurons per Hidden Layer | Learning Method |
|---|---|---|
| Single-Layer (L = 1) | N = 15 | L-M |
| Single-Layer (L = 1) | N = 15 | BR |
| Single-Layer (L = 1) | N = 30 | L-M |
| Single-Layer (L = 1) | N = 30 | BR |
| Multiple-Layer (L = 3) | N = 15-8-3 | L-M |
| Multiple-Layer (L = 3) | N = 15-8-3 | BR |
| Multiple-Layer (L = 3) | N = 30-8-3 | L-M |
| Multiple-Layer (L = 3) | N = 30-8-3 | BR |
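
The four architectures in the table differ only in the number and width of their hidden layers. A minimal forward-pass sketch makes that concrete. It assumes 5 input features and 3 outputs (for example valence, arousal and dominance), tanh hidden units and a linear output layer; all of these are illustrative assumptions, not details given in the table, and training by L-M or BR is not shown. `init_mlp`, `forward` and `n_params` are hypothetical helpers.

```python
import math
import random
from typing import List, Sequence, Tuple

Layer = Tuple[List[List[float]], List[float]]  # (weights[out][in], biases[out])

# Hidden-layer configurations from the table above.
CONFIGS = {
    "L = 1/N = 15": [15],
    "L = 1/N = 30": [30],
    "L = 3/N = 15-8-3": [15, 8, 3],
    "L = 3/N = 30-8-3": [30, 8, 3],
}
N_INPUTS = 5   # ASSUMPTION: hypothetical number of input features
N_OUTPUTS = 3  # ASSUMPTION: hypothetical outputs, e.g. valence, arousal, dominance


def init_mlp(n_in: int, hidden: Sequence[int], n_out: int, seed: int = 0) -> List[Layer]:
    """Randomly initialise a fully connected MLP with the given hidden sizes."""
    rng = random.Random(seed)
    sizes = [n_in, *hidden, n_out]
    layers: List[Layer] = []
    for fan_in, fan_out in zip(sizes[:-1], sizes[1:]):
        # One weight row per neuron in the next layer; biases start at zero.
        weights = [[rng.uniform(-0.5, 0.5) for _ in range(fan_in)] for _ in range(fan_out)]
        layers.append((weights, [0.0] * fan_out))
    return layers


def forward(layers: List[Layer], x: Sequence[float]) -> List[float]:
    """Apply tanh on every hidden layer and the identity on the output layer."""
    h = list(x)
    for i, (weights, biases) in enumerate(layers):
        z = [sum(w * v for w, v in zip(row, h)) + b for row, b in zip(weights, biases)]
        h = z if i == len(layers) - 1 else [math.tanh(v) for v in z]
    return h


def n_params(layers: List[Layer]) -> int:
    """Count trainable weights and biases."""
    return sum(len(w) * len(w[0]) + len(b) for w, b in layers)


if __name__ == "__main__":
    for name, hidden in CONFIGS.items():
        net = init_mlp(N_INPUTS, hidden, N_OUTPUTS)
        print(name, n_params(net), forward(net, [0.1] * N_INPUTS))
```

Under these assumptions the four configurations have 138, 273, 257 and 467 trainable parameters, so the three-layer 15-8-3 network is actually smaller than the single-layer network with 30 neurons.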

| Condition | Valence | Arousal | Dominance |
|---|---|---|---|
| HH | 93.75 | 81.25 | 87.50 |
| HL | 93.75 | 81.25 | 93.75 |
| LH | 75.00 | 87.50 | 56.25 |
| LL | 81.25 | 68.75 | 75.00 |
| Mean | 85.94 | 79.69 | 78.13 |

| | L = 1/N = 15, L-M | L = 1/N = 15, BR | L = 1/N = 30, L-M | L = 1/N = 30, BR | L = 3/N = 15-8-3, L-M | L = 3/N = 15-8-3, BR | L = 3/N = 30-8-3, L-M | L = 3/N = 30-8-3, BR |
|---|---|---|---|---|---|---|---|---|
| Training | 0.93 | 0.95 | 0.87 | 0.95 | 0.95 | 0.95 | 0.96 | 0.96 |
| Validation | 0.92 | – | 0.91 | – | 0.98 | – | 0.94 | – |
| Test | 0.94 | 0.91 | 0.90 | 0.93 | 0.96 | 0.91 | 0.89 | 0.90 |
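
The table does not name the metric it reports, so the following is only a bookkeeping sketch: it stores the scores above, with `None` for the "–" cells (no validation score is given for the BR runs), and returns the configuration with the highest score on a chosen row. The column labels and the helper `best_by` are illustrative.

```python
from typing import List, Optional, Sequence, Tuple

Column = Tuple[str, str]  # (architecture, learning method)

# Columns and scores copied from the table above; None marks a "–" cell.
COLUMNS: List[Column] = [
    ("L = 1/N = 15", "L-M"), ("L = 1/N = 15", "BR"),
    ("L = 1/N = 30", "L-M"), ("L = 1/N = 30", "BR"),
    ("L = 3/N = 15-8-3", "L-M"), ("L = 3/N = 15-8-3", "BR"),
    ("L = 3/N = 30-8-3", "L-M"), ("L = 3/N = 30-8-3", "BR"),
]
TRAINING = [0.93, 0.95, 0.87, 0.95, 0.95, 0.95, 0.96, 0.96]
VALIDATION: List[Optional[float]] = [0.92, None, 0.91, None, 0.98, None, 0.94, None]
TEST = [0.94, 0.91, 0.90, 0.93, 0.96, 0.91, 0.89, 0.90]


def best_by(scores: Sequence[Optional[float]]) -> Tuple[Column, float]:
    """Return (column, score) with the highest score, skipping missing cells.

    On ties, max() keeps the first column in table order.
    """
    valid = [(col, s) for col, s in zip(COLUMNS, scores) if s is not None]
    return max(valid, key=lambda item: item[1])


if __name__ == "__main__":
    print("best on validation:", best_by(VALIDATION))
    print("best on test:", best_by(TEST))
```

On these values, both the Validation row (0.98) and the Test row (0.96) peak at L = 3/N = 15-8-3 trained with L-M.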

| | L = 1/N = 15, L-M | L = 1/N = 15, BR | L = 1/N = 30, L-M | L = 1/N = 30, BR | L = 3/N = 15-8-3, L-M | L = 3/N = 15-8-3, BR | L = 3/N = 30-8-3, L-M | L = 3/N = 30-8-3, BR |
|---|---|---|---|---|---|---|---|---|
| Training | 0.70 | 0.44 | 0.71 | 0.41 | 0.72 | 0.85 | 0.80 | 0.90 |
| Validation | 0.60 | 0.43 | 0.87 | 0.42 | 0.72 | 0.85 | 0.79 | 0.87 |
| Test | 0.76 | 0.41 | 0.74 | 0.50 | 0.73 | 0.82 | 0.79 | 0.85 |
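
In this table the ranking of the two learning methods depends on network depth. A minimal sketch averages the Test row over the two neuron counts for each combination of depth and learning method; the values are copied from the table, while averaging over neuron counts is an illustrative summary, not one reported in the source. `summarise` and `best_method` are hypothetical helpers.

```python
from statistics import mean

# Test-row scores from the table above, keyed by (hidden layers L, method);
# each list holds the two neuron-count variants.
TEST = {
    (1, "L-M"): [0.76, 0.74],  # N = 15, N = 30
    (1, "BR"): [0.41, 0.50],
    (3, "L-M"): [0.73, 0.79],  # N = 15-8-3, N = 30-8-3
    (3, "BR"): [0.82, 0.85],
}


def summarise(scores):
    """Mean test score for each (L, method) pair."""
    return {key: mean(vals) for key, vals in scores.items()}


def best_method(summary, depth):
    """Learning method with the higher mean test score at the given depth."""
    candidates = {method: s for (d, method), s in summary.items() if d == depth}
    return max(candidates, key=candidates.get)


if __name__ == "__main__":
    summary = summarise(TEST)
    for (layers, method), score in sorted(summary.items()):
        print(f"L = {layers}, {method}: {score:.3f}")
    print("best at L = 1:", best_method(summary, 1))
    print("best at L = 3:", best_method(summary, 3))
```

With these values BR averages 0.455 with one hidden layer and 0.835 with three, while L-M averages 0.75 and 0.76, so L-M is ahead for the single-layer networks and BR for the three-layer ones.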

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Sánchez-Reolid, R.; García, A.S.; Vicente-Querol, M.A.; Fernández-Aguilar, L.; López, M.T.; Fernández-Caballero, A.; González, P. Artificial Neural Networks to Assess Emotional States from Brain-Computer Interface. Electronics 2018, 7, 384. https://doi.org/10.3390/electronics7120384
