Integrated Computer Vision and Type-2 Fuzzy CMAC Model for Classifying Pilling of Knitted Fabric

Abstract

1. Introduction

- A novel type-2 fuzzy CMAC (T2FCMAC) classifier is proposed. It embeds a type-2 fuzzy system within a traditional cerebellar model articulation controller (CMAC).

- An efficient learning algorithm, a hybrid of group strategy and artificial bee colony (HGSABC), is also proposed. HGSABC adjusts the parameters of the T2FCMAC classifier and helps keep the search from falling into a local optimum. Its group search strategy balances the search capabilities and improves the performance of the artificial bee colony algorithm.

- Experiments were conducted under both fixed and different illumination conditions. The results indicate that the proposed method achieved a higher average accuracy rate than the other methods.
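
To make the idea of a type-2 fuzzy system in the first point more concrete, the following is a minimal sketch of one common interval type-2 membership function: a Gaussian with an uncertain mean and a fixed width. This form is an assumption chosen for illustration and is not confirmed by the text above as the one used in T2FCMAC; the names `gaussian`, `interval_type2_membership`, `mean_low`, `mean_high`, and `sigma` are hypothetical.

```python
import math


def gaussian(x, mean, sigma):
    """Ordinary (type-1) Gaussian membership grade in (0, 1]."""
    return math.exp(-0.5 * ((x - mean) / sigma) ** 2)


def interval_type2_membership(x, mean_low, mean_high, sigma):
    """Return (lower, upper) grades for a Gaussian with uncertain mean.

    Assumption: the mean lies somewhere in [mean_low, mean_high] and the
    width sigma is fixed. The gap between the two grades is the
    footprint of uncertainty.
    """
    if mean_low > mean_high:
        raise ValueError("mean_low must not exceed mean_high")

    # Upper grade: the largest grade over all admissible means.
    if x < mean_low:
        upper = gaussian(x, mean_low, sigma)
    elif x > mean_high:
        upper = gaussian(x, mean_high, sigma)
    else:
        upper = 1.0

    # Lower grade: the smallest grade, reached at the mean farthest from x.
    midpoint = 0.5 * (mean_low + mean_high)
    if x <= midpoint:
        lower = gaussian(x, mean_high, sigma)
    else:
        lower = gaussian(x, mean_low, sigma)
    return lower, upper


lower, upper = interval_type2_membership(0.5, mean_low=0.4, mean_high=0.6, sigma=0.2)
print(f"lower={lower:.4f}, upper={upper:.4f}")
```

A type-1 fuzzy set would return a single grade for each input; the interval form returns a band, which is what lets a type-2 system represent uncertainty about the membership function itself.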

2. Image Preprocessing and Feature Extraction

2.1. Image Preprocessing

2.2. Feature Extraction

3. T2FCMAC for Pilling Classification of Knitted Fabric

3.1. T2FCMAC Classifier

3.2. Proposed Hybrid of Group Strategy and Artificial Bee Colony

- Step 1:

- Initialize

- Step 2:

- Calculating and ranking fitness values

- Step 3:

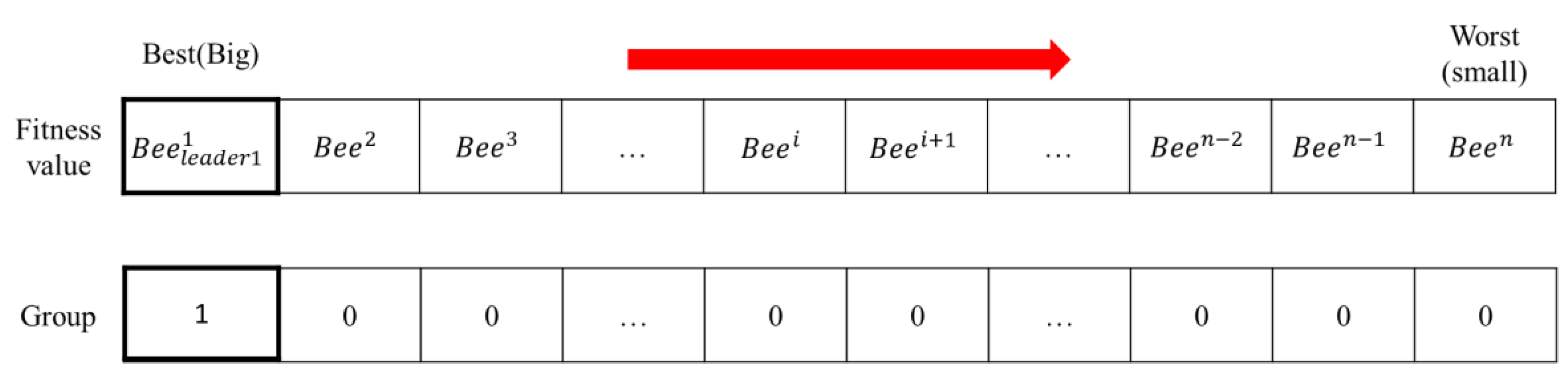

- Group strategy

- Step 4:

- Update bee position
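
The four steps above can be sketched as a small optimization loop. This is an illustrative outline under stated assumptions, not the authors' HGSABC: the grouping rule (ranked bees dealt round-robin into groups), the neighbor choice (a random bee from the same group), the greedy acceptance, and the `sphere` objective standing in for the classifier error are all hypothetical, as are the function and parameter names. Only the position update `x + phi * (x - x_k)` follows the standard artificial bee colony form.

```python
import random


def sphere(position):
    """Placeholder objective (lower is better); stands in for classifier error."""
    return sum(v * v for v in position)


def hgsabc_sketch(objective, dim=4, n_bees=12, n_groups=3, iterations=50,
                  bounds=(-1.0, 1.0), seed=0):
    """Hypothetical group-based ABC loop following the four listed steps."""
    if n_bees < 2 * n_groups:
        raise ValueError("each group needs at least two bees")
    rng = random.Random(seed)
    low, high = bounds

    # Step 1: initialize bee positions uniformly inside the bounds.
    bees = [[rng.uniform(low, high) for _ in range(dim)] for _ in range(n_bees)]
    costs = [objective(bee) for bee in bees]

    for _ in range(iterations):
        # Step 2: rank the bees by fitness (lowest cost first).
        order = sorted(range(n_bees), key=lambda i: costs[i])
        bees = [bees[i] for i in order]
        costs = [costs[i] for i in order]

        # Step 3: group strategy (assumption): deal the ranked bees
        # round-robin so every group mixes strong and weak bees.
        groups = [list(range(g, n_bees, n_groups)) for g in range(n_groups)]

        # Step 4: update each bee against a random neighbor from its group.
        for members in groups:
            for i in members:
                k = rng.choice([m for m in members if m != i])
                j = rng.randrange(dim)
                phi = rng.uniform(-1.0, 1.0)
                candidate = list(bees[i])
                moved = bees[i][j] + phi * (bees[i][j] - bees[k][j])
                candidate[j] = min(high, max(low, moved))
                cost = objective(candidate)
                if cost < costs[i]:  # greedy selection keeps improvements only
                    bees[i], costs[i] = candidate, cost

    best = min(range(n_bees), key=lambda i: costs[i])
    return bees[best], costs[best]


best_position, best_cost = hgsabc_sketch(sphere)
print(best_position, best_cost)
```

In a classifier setting, each position vector would hold the trainable parameters and the objective would return the training error, so the same loop would tune the model instead of minimizing the placeholder.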

4. Experimental Results

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Deng, Z.; Wang, L.; Wang, X. An integrated method of feature extraction and objective evaluation of fabric pilling. J. Text. Inst. 2010, 102, 1–13.

- Saharkhiz, S.; Abdorazaghi, M. The performance of different clustering methods in the objective assessment of fabric pilling. J. Eng. Fibers Fabr. 2012, 7, 35–41.

- Eldessouki, M.; Hassan, M.; Bukhari, H.A.; Qashqari, K. Integrated computer vision and soft computing system for classifying the pilling resistance of knitted fabrics. Fibres Text. East. Eur. 2014, 22, 106–112.

- Furferi, R.; Carfagni, M.; Governi, L.; Volpe, Y.; Bogani, P. Towards automated and objective assessment of fabric pilling. Int. J. Adv. Robot. Syst. 2014, 11.

- Yun, S.Y.; Kim, S.; Park, C.K. Development of an objective fabric pilling evaluation method. I. Characterization of pilling using image analysis. Fibers Polym. 2013, 14, 832–837.

- Techniková, L.; Tunák, M.; Janáček, J. Pilling evaluation of patterned fabrics based on a gradient field method. Indian J. Fibre Text. Res. 2016, 41, 97–101.

- Techniková, L.; Tunák, M.; Janáček, J. New objective system of pilling evaluation for various types of fabrics. J. Text. Inst. 2017, 108, 123–131.

- Lin, H.Y.; Lin, C.J. Using a hybrid of fuzzy theory and neural network filter for single image dehazing. Appl. Intell. 2017, 47, 1099–1114.

- Lee, C.L.; Lin, C.J.; Lin, H.Y. Smart robot wall-following control using a sonar behavior-based fuzzy controller in unknown environments. Smart Sci. 2017, 5, 160–166.

- Pal, M.; Foody, G.M. Feature selection for classification of hyperspectral data by SVM. IEEE Trans. Geosci. Remote Sens. 2010, 48, 2297–2307.

- Bruzzone, L. An approach to feature selection and classification of remote sensing images based on the Bayes rule for minimum cost. IEEE Trans. Geosci. Remote Sens. 2000, 38, 429–438.

- Eldessouki, M. Evaluation of Fabric Pilling as an End-use Quality and a Performance Measure for the Fabrics. In Chapter 7 of Applications of Computer Vision in Fashion and Textiles; Wong, W.J., Ed.; Woodhead Publishing-Elsevier: London, UK, 2018; ISBN 978-0-08-101218-5.

- Albus, J.S. A new approach to manipulator control: The cerebellar model articulation controller (CMAC). J. Dyn. Syst. Meas. Control 1975, 97, 220–227.

- Iiguni, Y. Hierarchical image coding via cerebellar model arithmetic computers. IEEE Trans. Image Process. 1996, 5, 1393–1401.

- Juang, J.G.; Lee, C.L. Applications of cerebellar model articulation controllers to intelligent landing system. J. Univ. Comp. Sci. 2009, 15, 2586–2607.

- Yu, W.; Rodriguez, F.O.; Moreno-Armendariz, M.A. Hierarchical fuzzy CMAC for nonlinear systems modeling. IEEE Trans. Fuzzy Syst. 2008, 16, 1302–1314.

- Chen, J.Y.; Tsai, P.S.; Wong, C.C. Adaptive design of a fuzzy cerebellar model arithmetic controller neural network. IEE Proc. Control Theory Appl. 2005, 152, 133–137.

- Lin, C.M.; Chen, L.Y.; Yeung, D.S. Adaptive filter design using recurrent cerebellar model articulation controller. IEEE Trans. Neural Netw. 2010, 21, 1149–1157.

- Lin, C.M.; Yang, M.S.; Chao, F.; Hu, X.M.; Zhang, J. Adaptive filter design using type-2 fuzzy cerebellar model articulation controller. IEEE Trans. Neural Netw. Learn. Syst. 2016, 27, 2084–2094.

- Kong, T.Y.; Rosenfeld, A. Digital topology: Introduction and survey. Comput. Vis. Graph. Image Process. 1989, 48, 357–393.

- Samet, H.; Tamminen, M. Efficient component labeling of images of arbitrary dimension represented by linear bintrees. IEEE Trans. Pattern Anal. Mach. Intell. 1988, 10, 579–586.

- Karaboga, D. An Idea Based on Honeybee Swarm for Numerical Optimization; Technical Report-TR06; Engineering Faculty, Computer Engineering Department, Erciyes University: Kayseri, Turkey, 2005.

- Huang, M.L.; Fu, C.C. Applying Image Processing to the Textile Grading of Fleece Based on Pilling Assessment. Fibers 2018, 6, 73.

- Jing, J.; Zhang, Z.; Kang, X.; Jia, J. Objective evaluation of fabric pilling based on wavelet transform and the local binary pattern. Text. Res. J. 2012, 82, 1880–1887.

- Eldessouki, M.; Hassan, M. Adaptive neuro-fuzzy system for quantitative evaluation of woven fabrics’ pilling resistance. Expert Syst. Appl. 2015, 42, 2098–2113.

| Samples |  |  |  |  |  |
|---|---|---|---|---|---|
| Pilling of knitted fabric | Very serious | Serious | Moderate | Slight | None |
| Grade | 1 | 2 | 3 | 4 | 5 |

| Data Set | Accuracy Rate |
|---|---|
| Data set 1 | 96.88% |
| Data set 2 | 98.44% |
| Data set 3 | 95.31% |
| Data set 4 | 93.75% |
| Data set 5 | 92.19% |
| Data set 6 | 100% |
| Data set 7 | 100% |
| Data set 8 | 100% |
| Data set 9 | 98.44% |
| Data set 10 | 98.44% |
| Average accuracy rate | 97.3% |
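
As a quick check on the table above, the reported average can be recomputed from the ten per-data-set accuracy rates. The snippet only restates the table values; the variable names are illustrative.

```python
# Accuracy rates (%) for data sets 1 to 10, copied from the table.
accuracies = [96.88, 98.44, 95.31, 93.75, 92.19,
              100.0, 100.0, 100.0, 98.44, 98.44]

# Arithmetic mean over the ten data sets.
average = sum(accuracies) / len(accuracies)
print(f"Average accuracy rate: {average:.1f}%")  # prints "Average accuracy rate: 97.3%"
```

The unrounded mean is 97.345%, which agrees with the reported 97.3% to one decimal place.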

| Average accuracy rate | Proposed Method | Saharkhiz and Abdorazaghi [2] | Eldessouki et al. [3] | Furferi et al. [4] | Huang and Fu [23] | Jing et al. [24] | Eldessouki and Hassan [25] |
|---|---|---|---|---|---|---|---|
| Fixed illumination | 97.3% | 94.8% | 87.5% | 94.3% | 96.6% | 95% | 85.8% |
| Different illumination | 94.6% | 90.2% | 81.3% | 88.4% | 92.4% | 91.3% | 80.6% |
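
The comparison table can also be read in terms of how much each method loses when the illumination varies. The short sketch below derives that drop, and the margin over the strongest baseline under fixed illumination, directly from the table values; no new measurements are introduced, and the variable names are illustrative.

```python
# Average accuracy rates (%) copied from the comparison table.
fixed = {
    "Proposed method": 97.3,
    "Saharkhiz and Abdorazaghi [2]": 94.8,
    "Eldessouki et al. [3]": 87.5,
    "Furferi et al. [4]": 94.3,
    "Huang and Fu [23]": 96.6,
    "Jing et al. [24]": 95.0,
    "Eldessouki and Hassan [25]": 85.8,
}
different = {
    "Proposed method": 94.6,
    "Saharkhiz and Abdorazaghi [2]": 90.2,
    "Eldessouki et al. [3]": 81.3,
    "Furferi et al. [4]": 88.4,
    "Huang and Fu [23]": 92.4,
    "Jing et al. [24]": 91.3,
    "Eldessouki and Hassan [25]": 80.6,
}

# Accuracy lost (percentage points) when moving to different illumination.
drop = {name: round(fixed[name] - different[name], 1) for name in fixed}

# Strongest baseline under fixed illumination and the proposed method's margin.
baselines = [name for name in fixed if name != "Proposed method"]
best_baseline = max(baselines, key=lambda name: fixed[name])
margin = round(fixed["Proposed method"] - fixed[best_baseline], 1)

for name, points in sorted(drop.items(), key=lambda item: item[1]):
    print(f"{name}: -{points} points")
print(f"Margin over {best_baseline} (fixed illumination): {margin} points")
```

On these figures the proposed method shows the smallest drop (2.7 percentage points) among the listed methods.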

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lee, C.-L.; Lin, C.-J. Integrated Computer Vision and Type-2 Fuzzy CMAC Model for Classifying Pilling of Knitted Fabric. Electronics 2018, 7, 367. https://doi.org/10.3390/electronics7120367
