Obstacle Avoidance Based-Visual Navigation for Micro Aerial Vehicles

Abstract

1. Introduction

2. Related Work

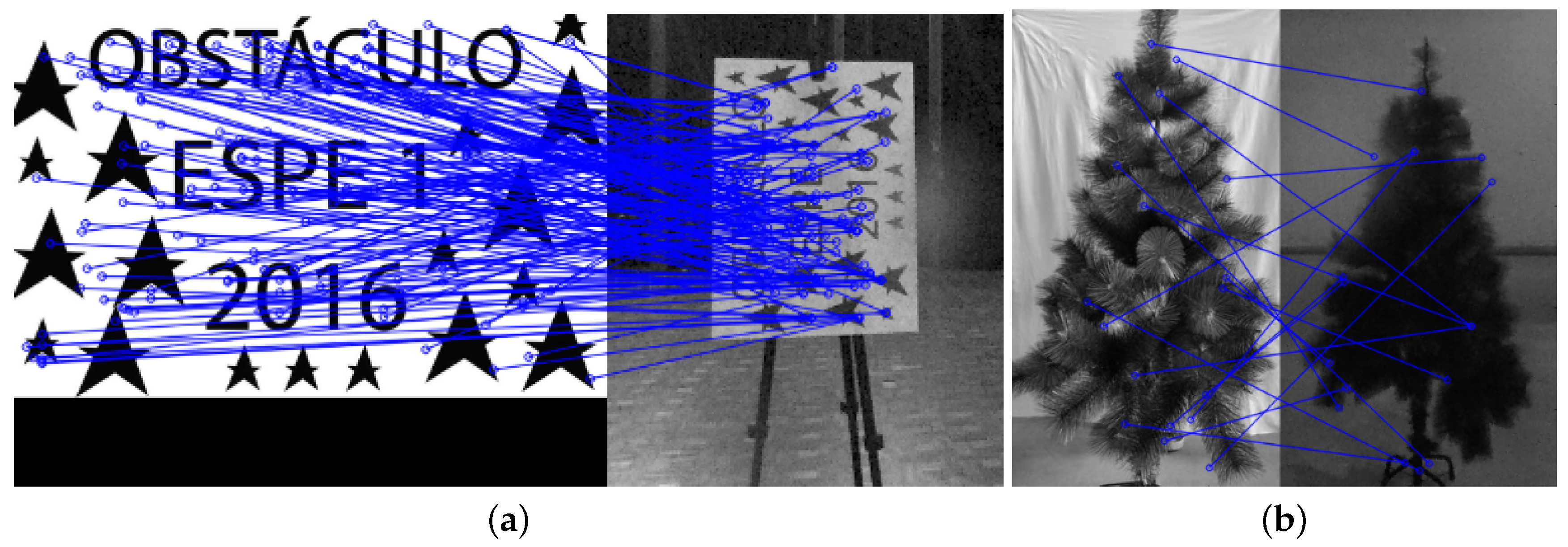

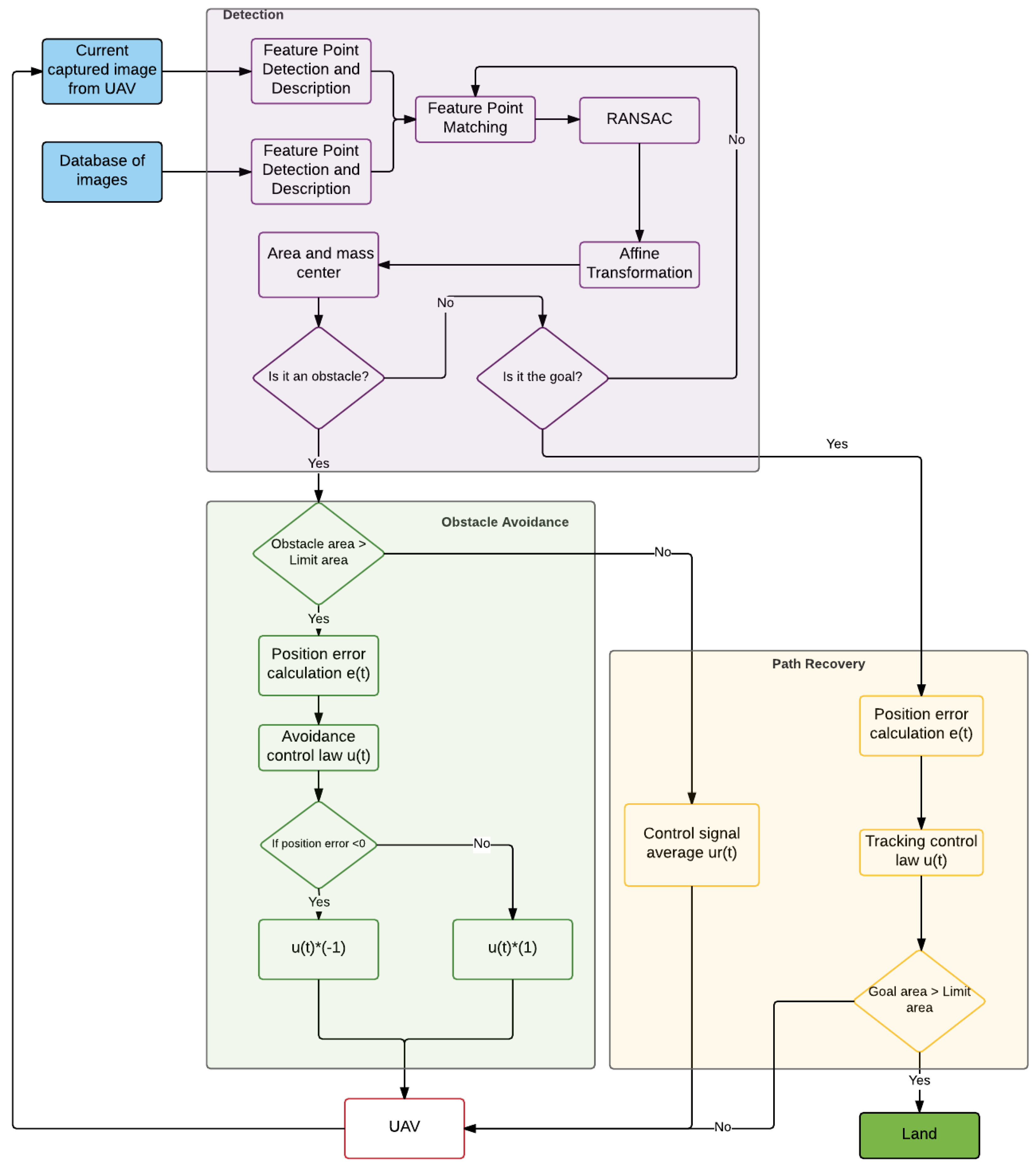

3. Obstacle Detection

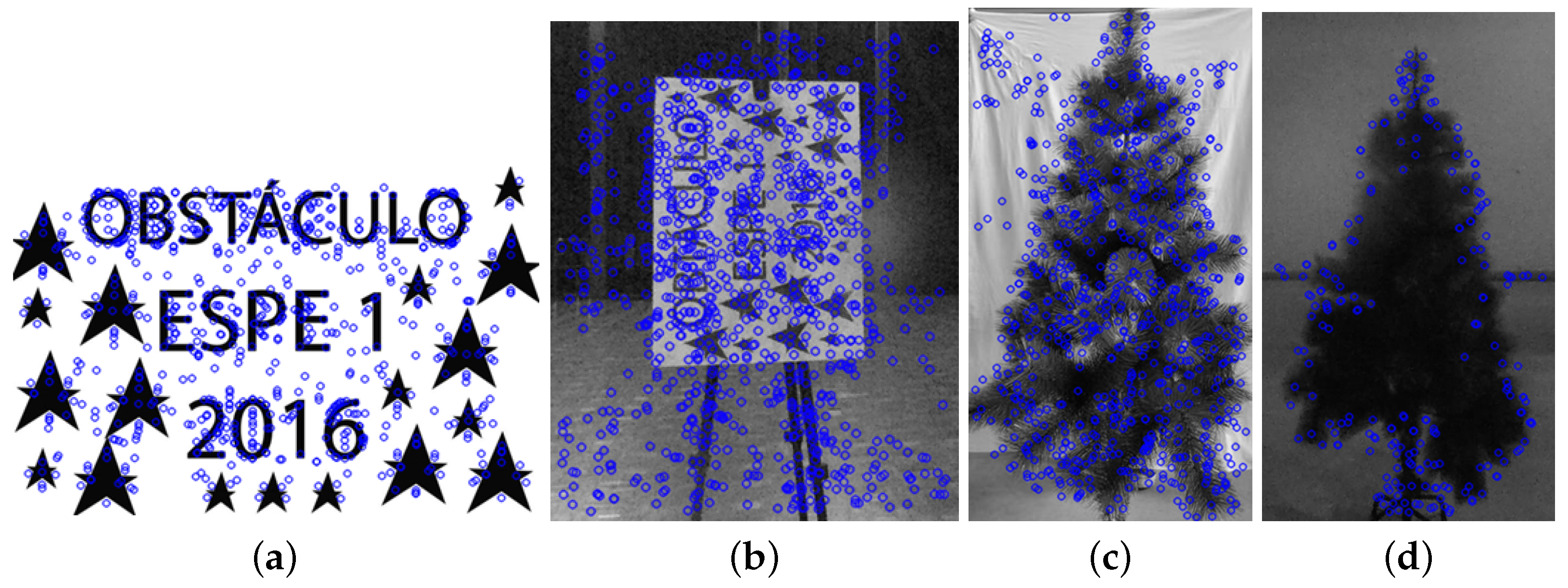

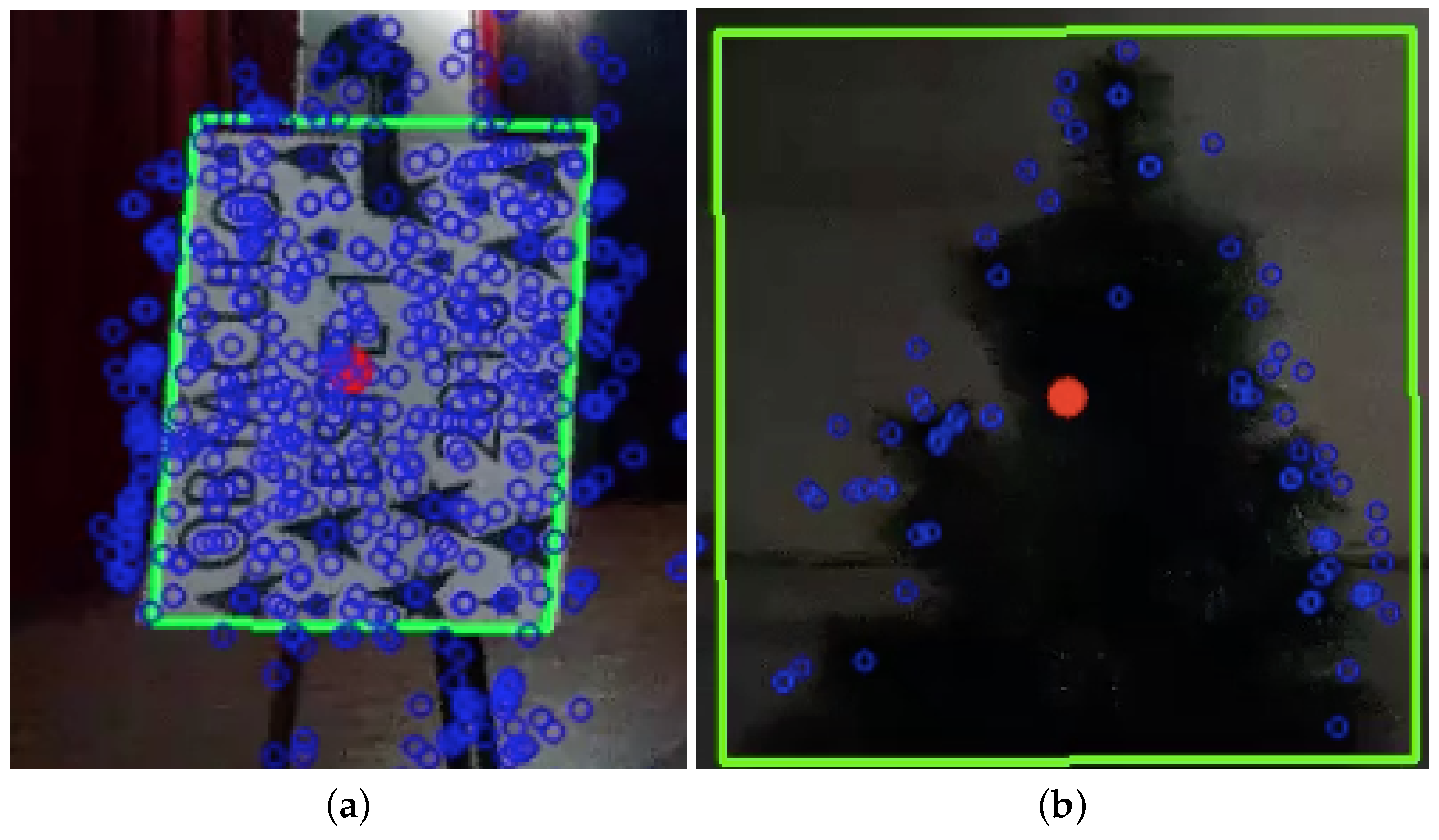

3.1. Feature Point

3.1.1. Feature Point Detection

3.1.2. Feature Point Description

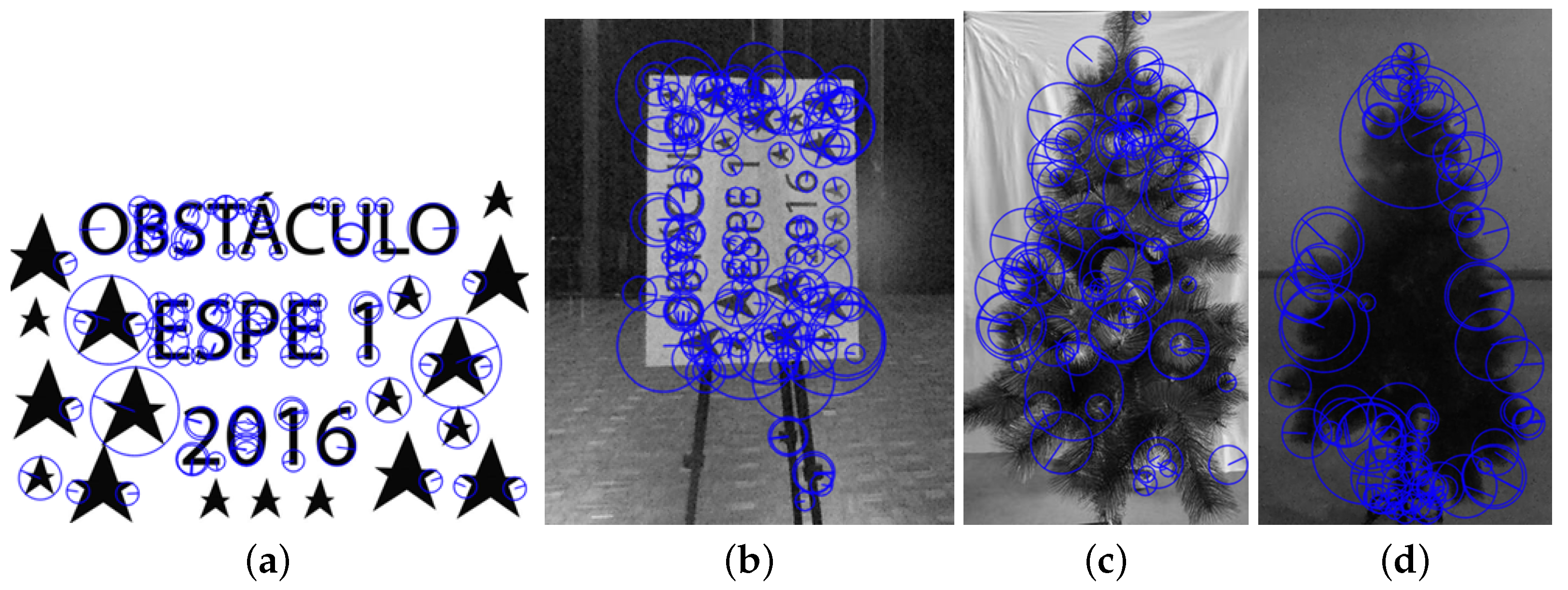

3.1.3. Feature Point Matching

3.2. Obstacle Area and Mass Center

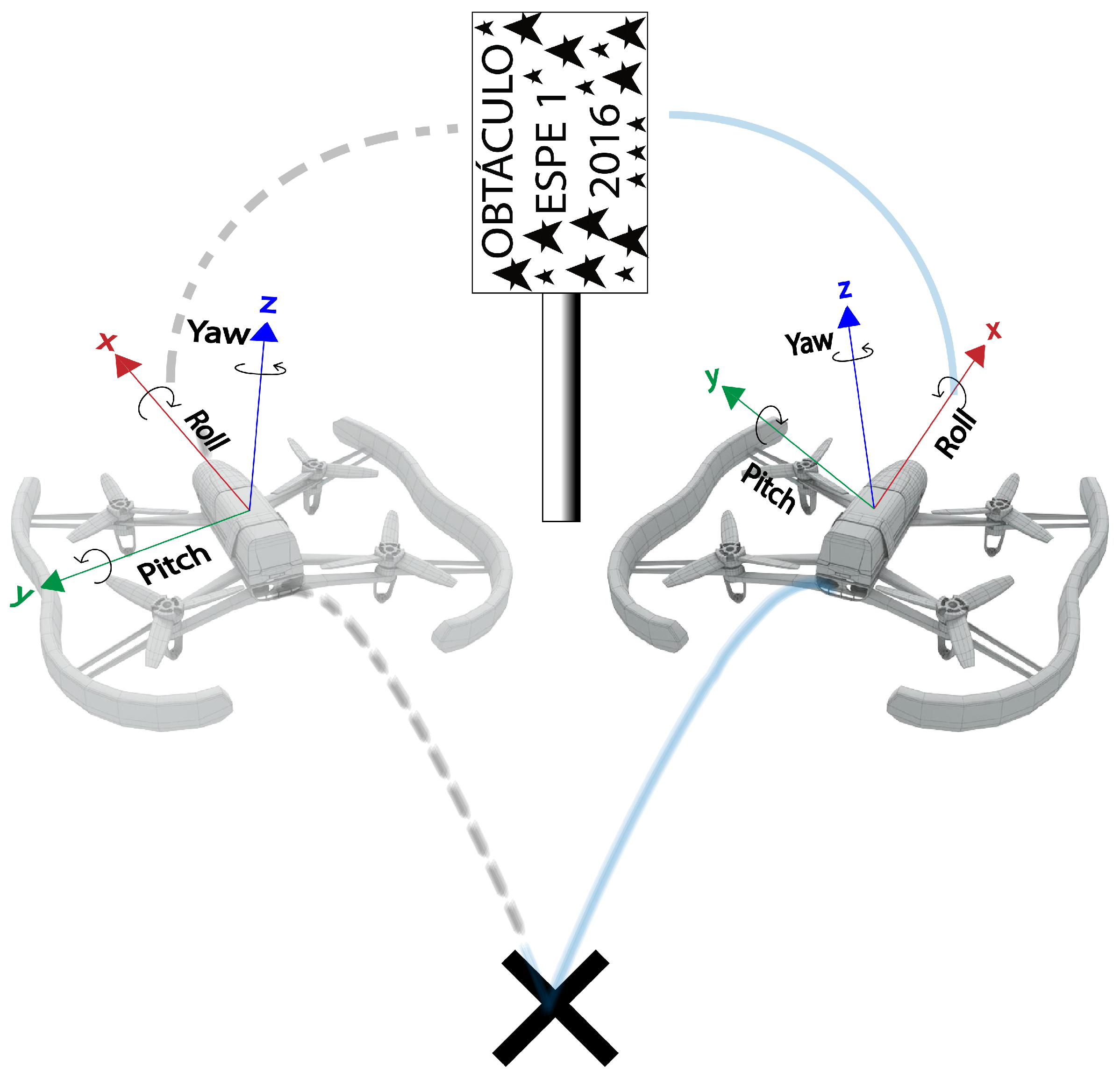

4. Obstacle Avoidance

- System identification,

- Controller design,

- Obstacle avoidance algorithm.

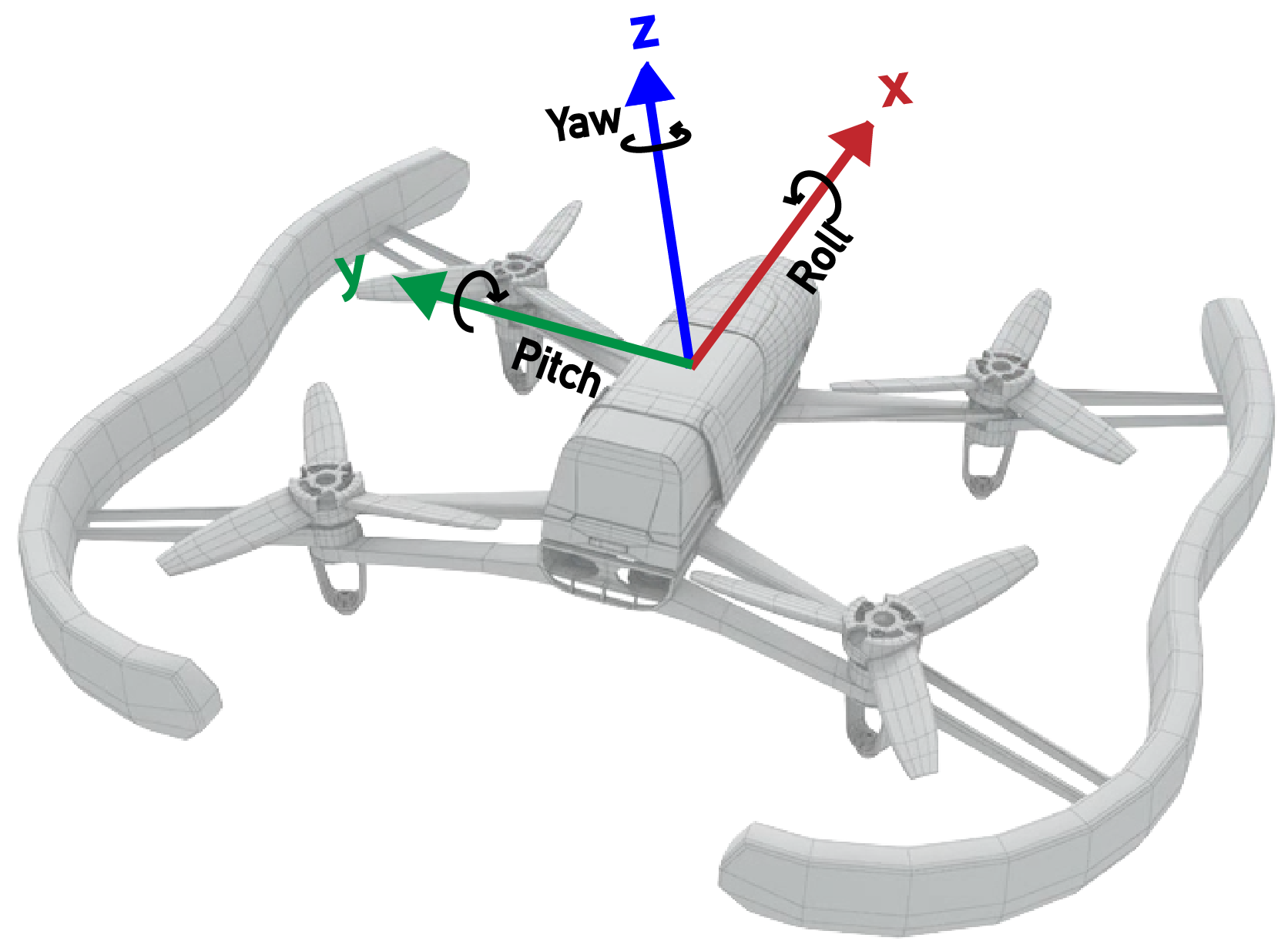

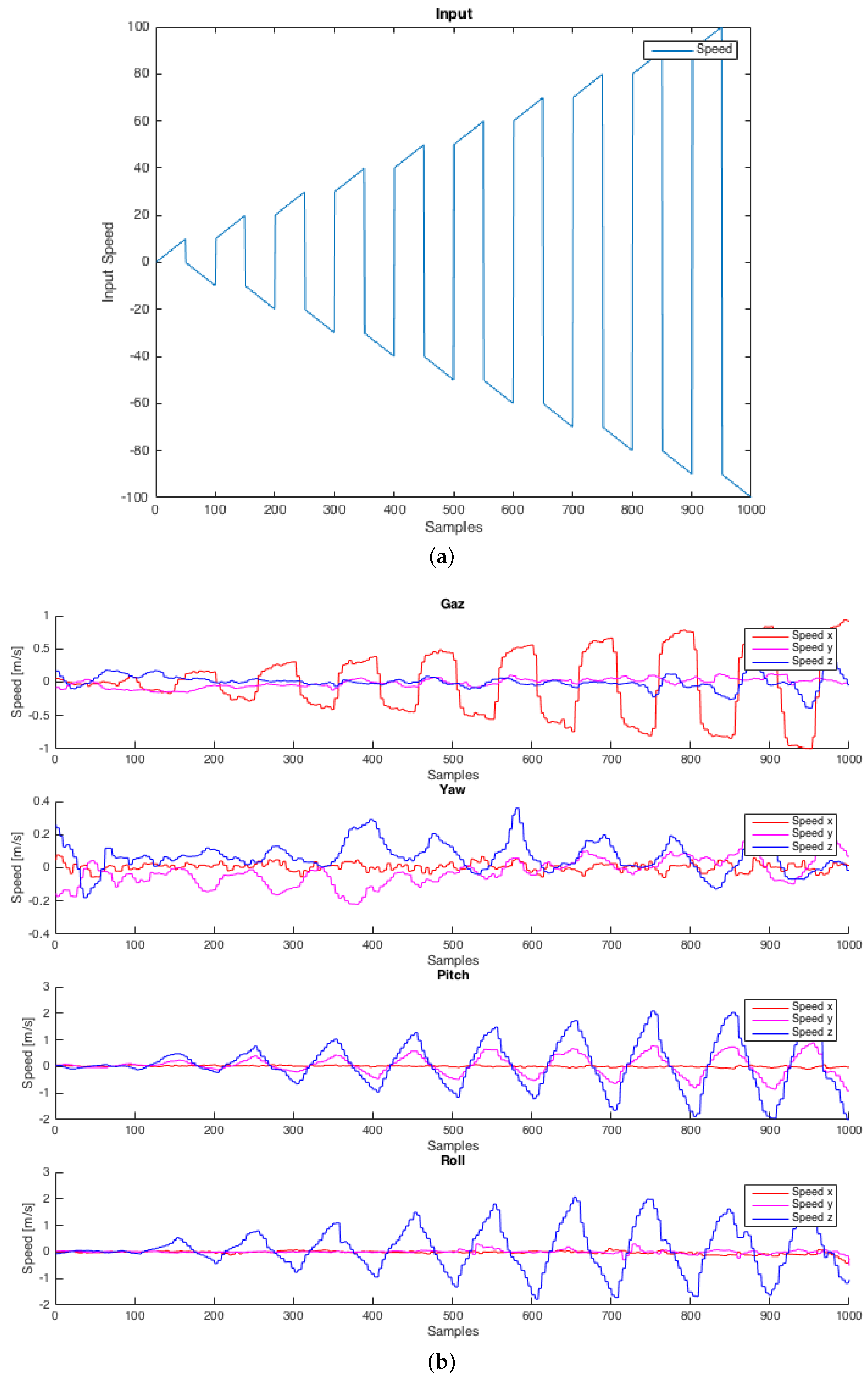

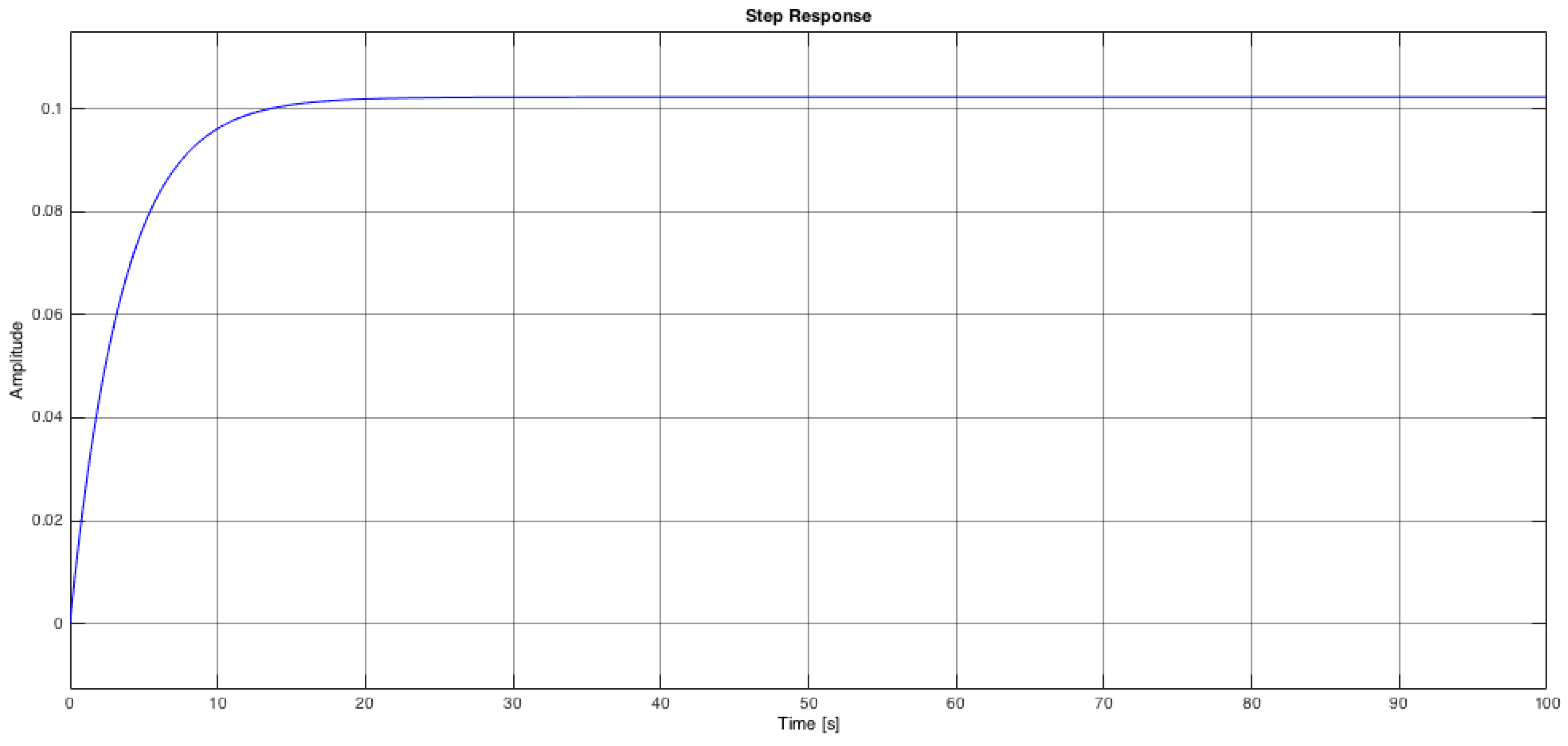

4.1. System Identification

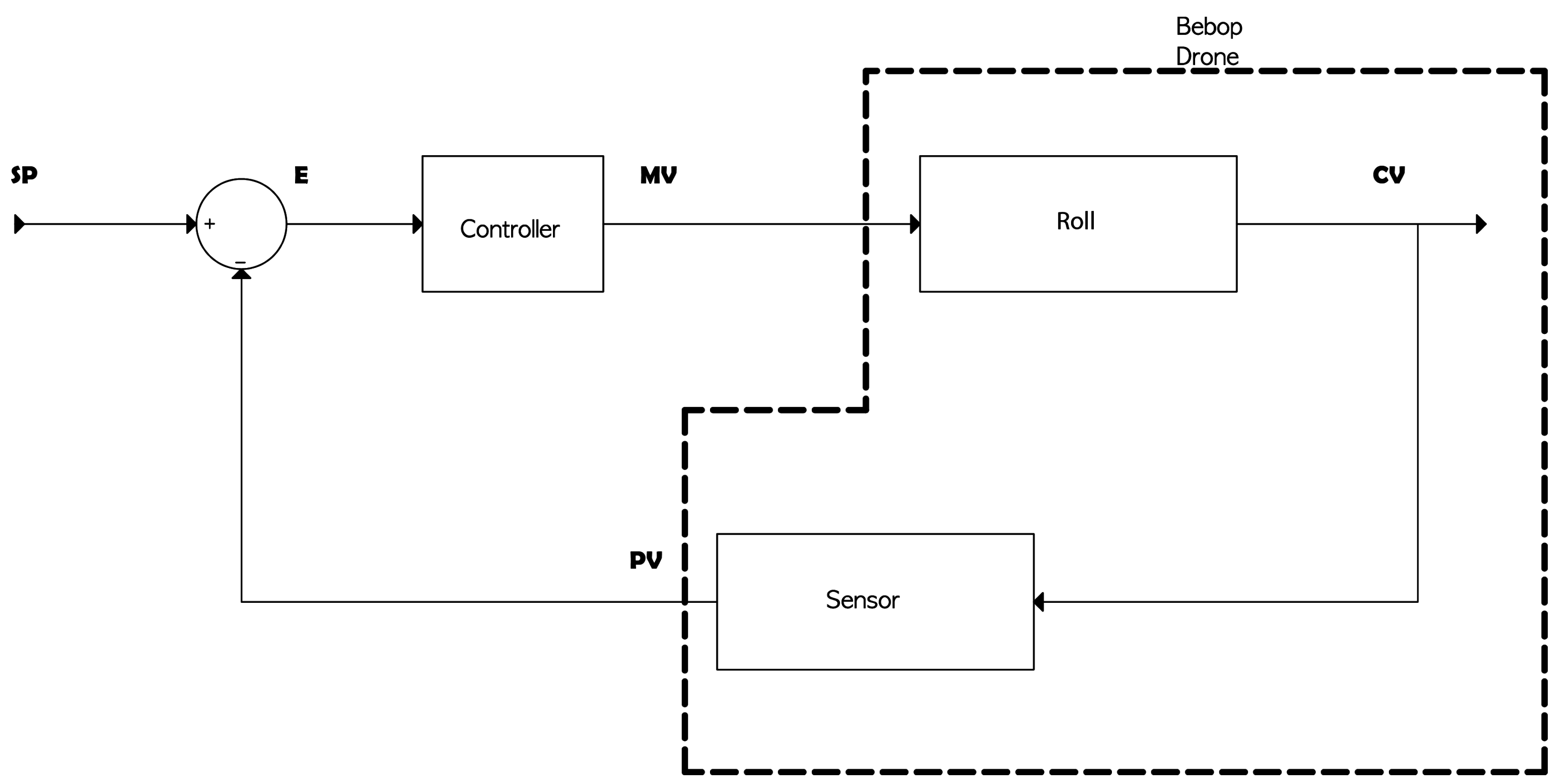

4.2. Controller Design

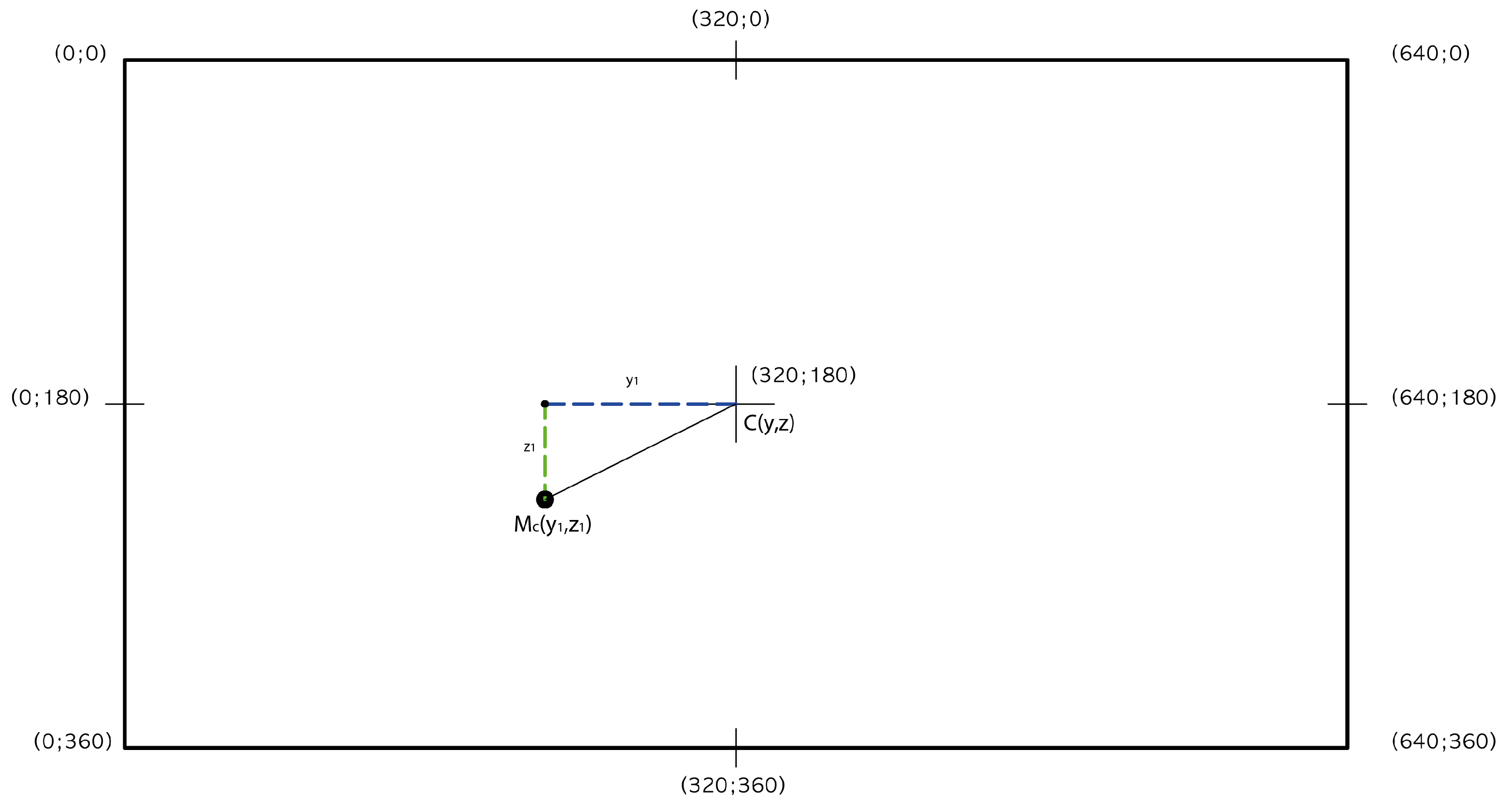

4.2.1. Position Error

4.2.2. Obstacle Area

4.2.3. Proportional Controller

4.2.4. Translation Compensation

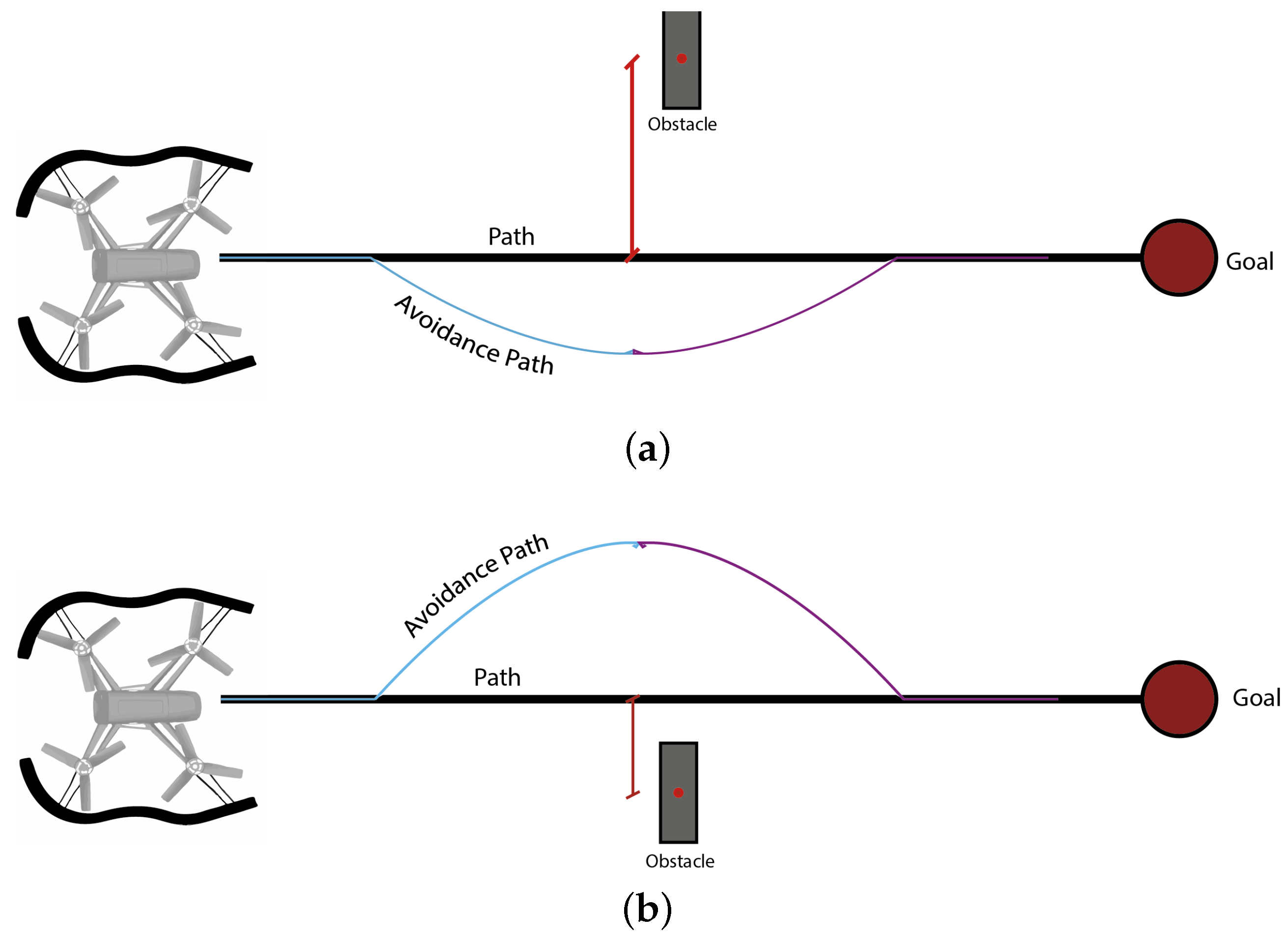

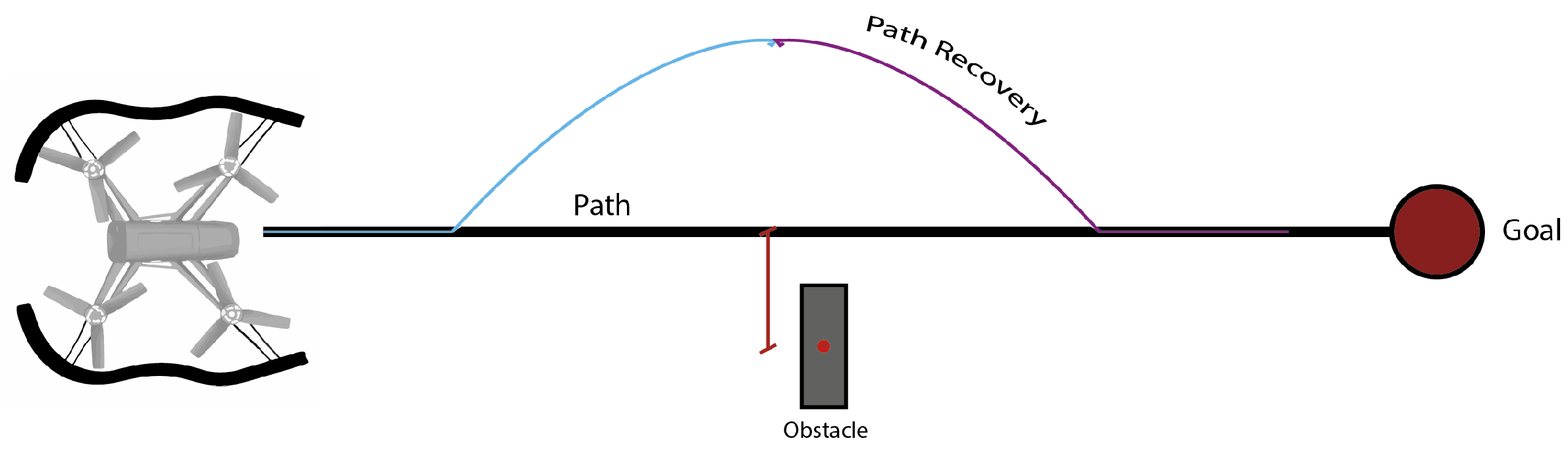

4.3. Obstacle Avoidance and Path Recovery Algorithm

Algorithm 1. Obstacle avoidance algorithm.

Parameters:

- obstacle area

- limit area

- position error between the path center and the mass center of the obstacle

- speed that will be sent to the MAV

- speed accumulated during the avoidance period

- number of speed samples saved

Algorithm 2. Path recovery algorithm.

Parameters:

- average speed

- wait time

- landing of the MAV
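The steps of Algorithms 1 and 2 are not reproduced in this text, so the sketch below is only one plausible reading of how the listed parameters could interact, not the authors' implementation. It assumes that avoidance starts when the obstacle area exceeds the limit area, that the lateral speed is proportional to the position error, and that recovery replays the negated average speed for a wait time equal to the avoidance duration. The names `AvoidanceState`, `avoidance_step`, `recovery_command`, `gain` and `frame_period` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AvoidanceState:
    """Bookkeeping kept while the MAV is avoiding an obstacle."""
    accumulated_speed: float = 0.0  # speed accumulated during the avoidance period
    saved_samples: int = 0          # number of speed samples saved


def avoidance_step(state, obstacle_area, limit_area, position_error, gain=0.5):
    """Return the lateral speed to send to the MAV for one frame.

    Assumption: position_error = path center - obstacle mass center, so a
    positive gain steers the MAV away from the obstacle.
    """
    if obstacle_area <= limit_area:
        # Obstacle still small in the image: no avoidance command.
        return 0.0
    speed = gain * position_error
    state.accumulated_speed += speed
    state.saved_samples += 1
    return speed


def recovery_command(state, frame_period):
    """Return (speed, wait_time) for path recovery.

    Assumption: the MAV flies back with the negated average avoidance speed
    for as long as the avoidance lasted, after which it can land.
    """
    if state.saved_samples == 0:
        return 0.0, 0.0
    average_speed = state.accumulated_speed / state.saved_samples
    wait_time = state.saved_samples * frame_period
    return -average_speed, wait_time
```

In this reading, the displacement commanded during recovery (average speed times wait time) cancels the displacement accumulated during avoidance, which is why both the accumulated speed and the sample count need to be stored.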

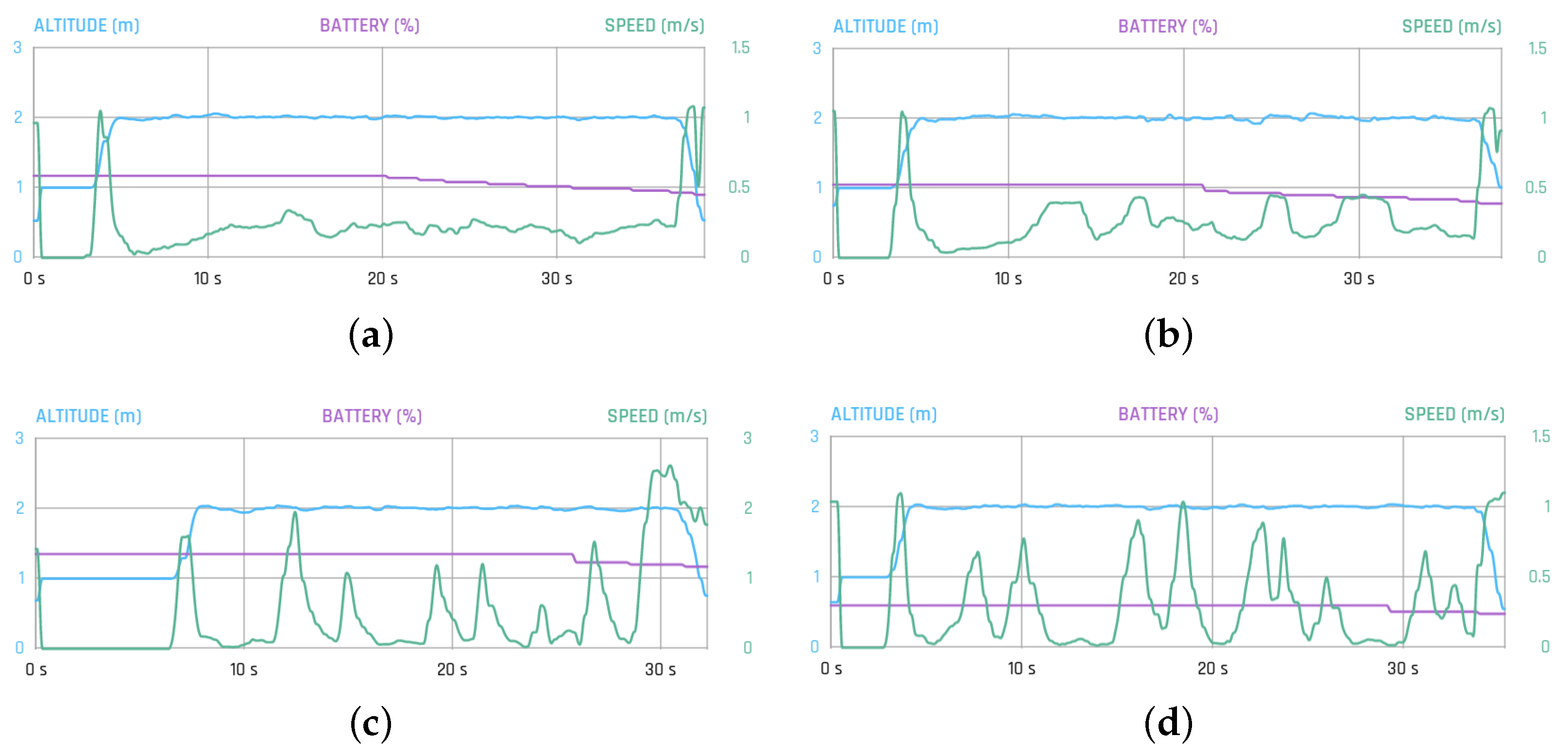

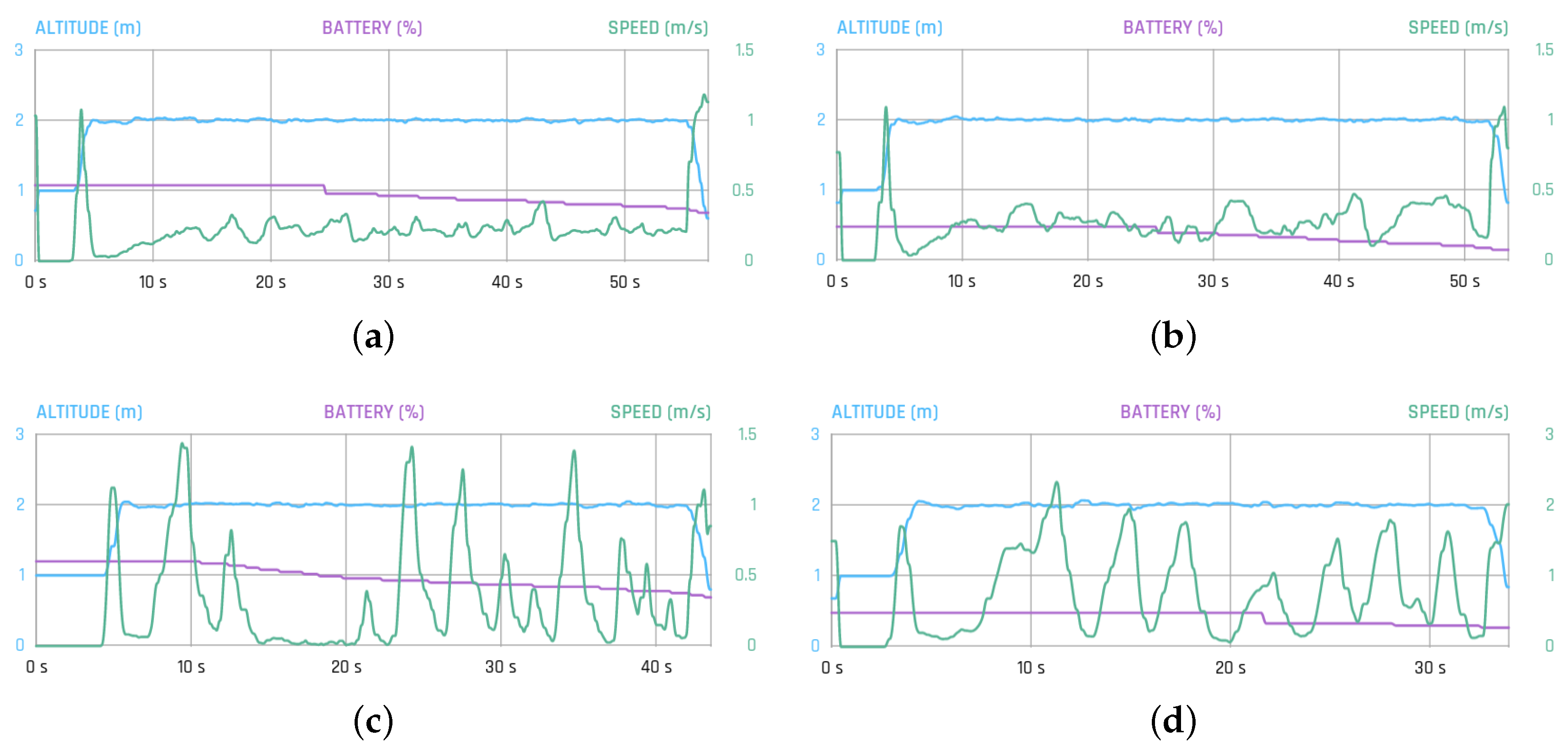

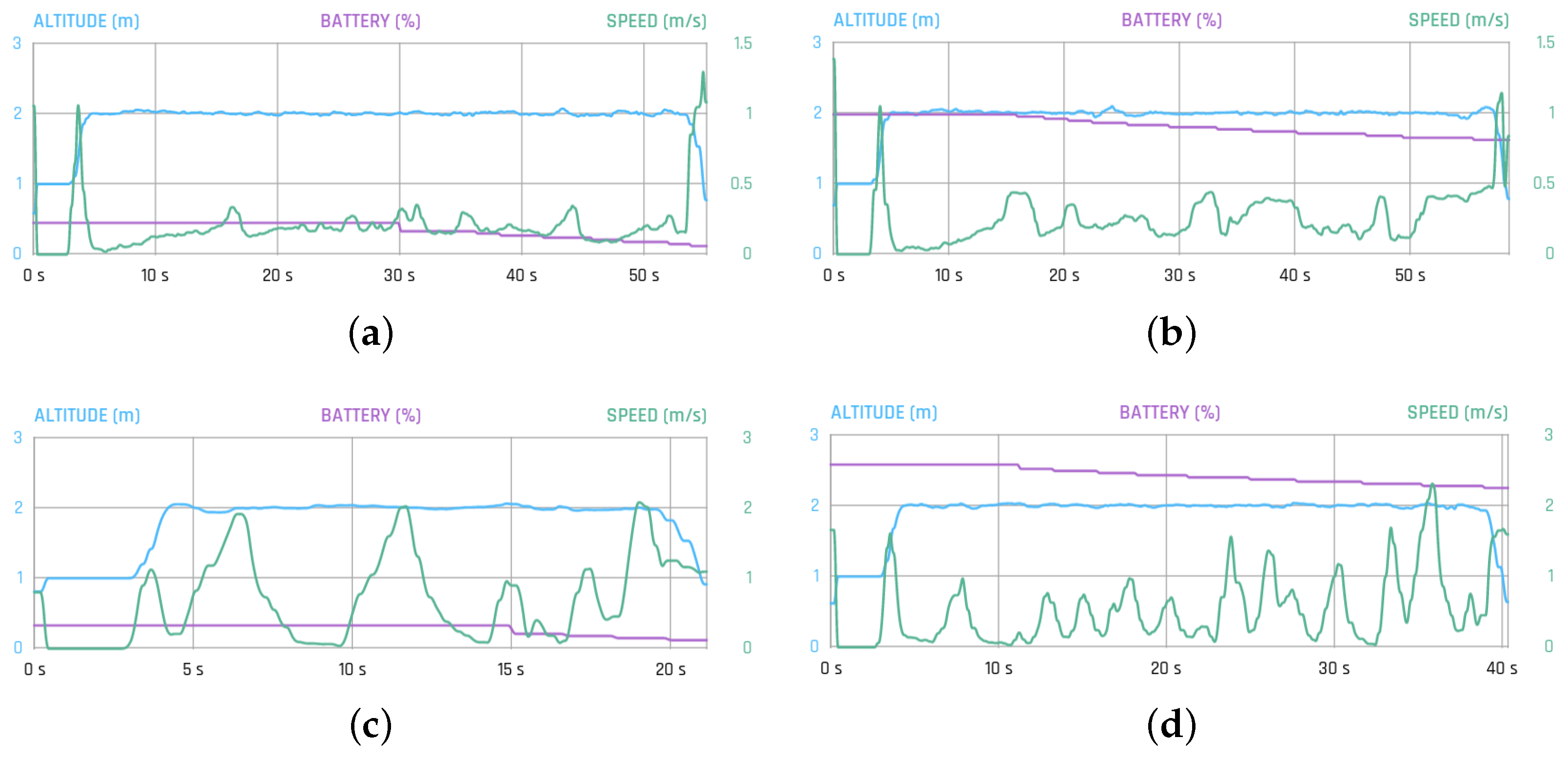

5. Experiments and Results

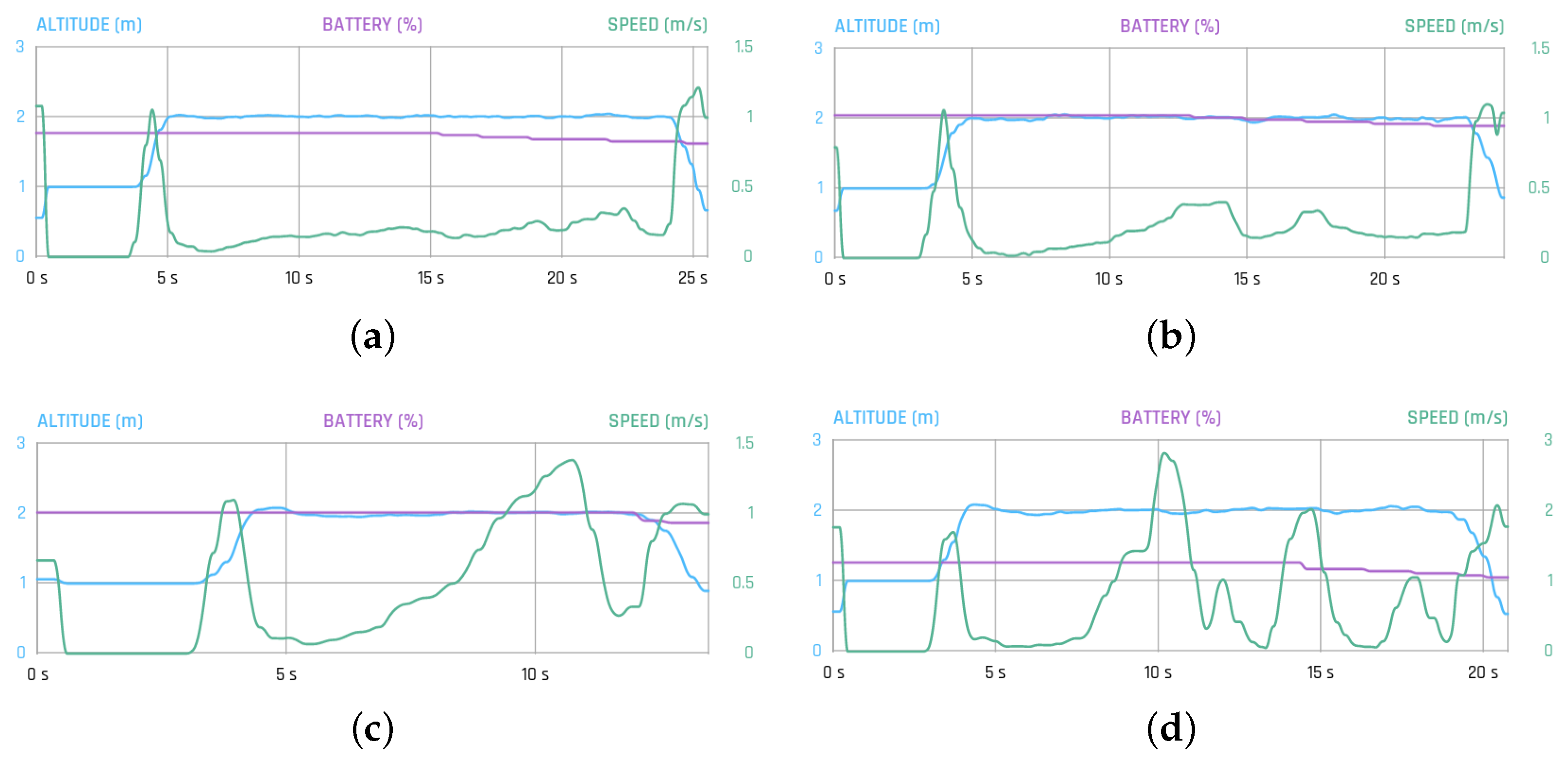

- Time: the time required to complete the path.

- Maximum speed: an indicator proportional to the maximum distance from the rectilinear path.

- Minimum speed: an indicator proportional to the minimum distance from the rectilinear path.

- Average speed: an indicator proportional to the average distance from the rectilinear path.

- Distance: the distance traveled during the flight.

- Battery: the percentage of battery charge consumed during the flight.

- Successful flights: the number of flights that completed the path without the MAV touching or hitting an obstacle.

- Unsuccessful flights: the number of flights that did not complete the path because the MAV touched or hit an obstacle.
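As an illustration of how the per-flight metrics above could be extracted from a flight log, the following minimal sketch assumes a hypothetical log made of `(timestamp in s, speed in m/s)` samples plus the battery level before and after the flight. The function name `flight_metrics`, the log format and the trapezoidal integration used for the distance are assumptions, not details taken from the experiments.

```python
def flight_metrics(samples, battery_start, battery_end):
    """Compute per-flight metrics from (time_s, speed_m_s) samples.

    battery_start and battery_end are battery levels in percent.
    """
    times = [t for t, _ in samples]
    speeds = [v for _, v in samples]
    # Distance: trapezoidal integration of speed over time.
    distance = sum(
        0.5 * (v0 + v1) * (t1 - t0)
        for (t0, v0), (t1, v1) in zip(samples, samples[1:])
    )
    return {
        "time_s": times[-1] - times[0],
        "max_speed": max(speeds),
        "min_speed": min(speeds),
        "avg_speed": sum(speeds) / len(speeds),
        "distance_m": distance,
        "battery_pct": battery_start - battery_end,
    }


# Usage with a made-up three-sample log.
metrics = flight_metrics([(0.0, 0.0), (1.0, 1.0), (2.0, 1.0)], 100.0, 95.0)
```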

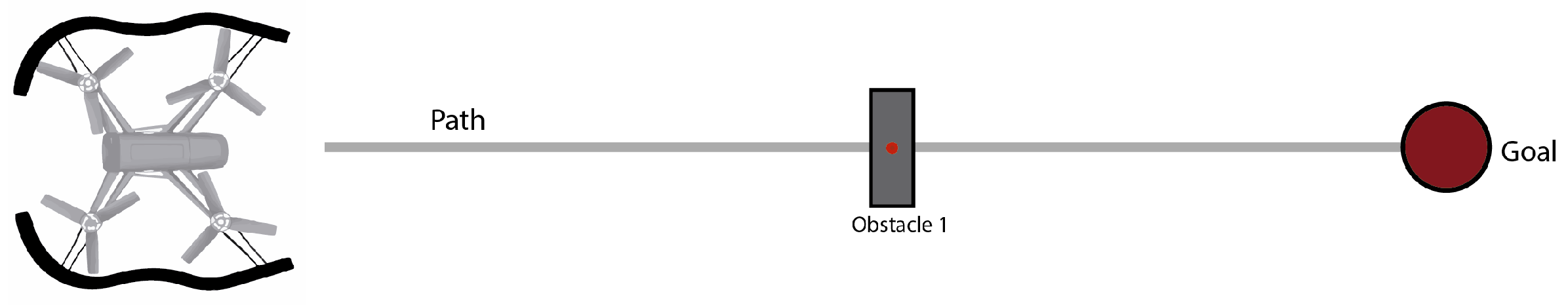

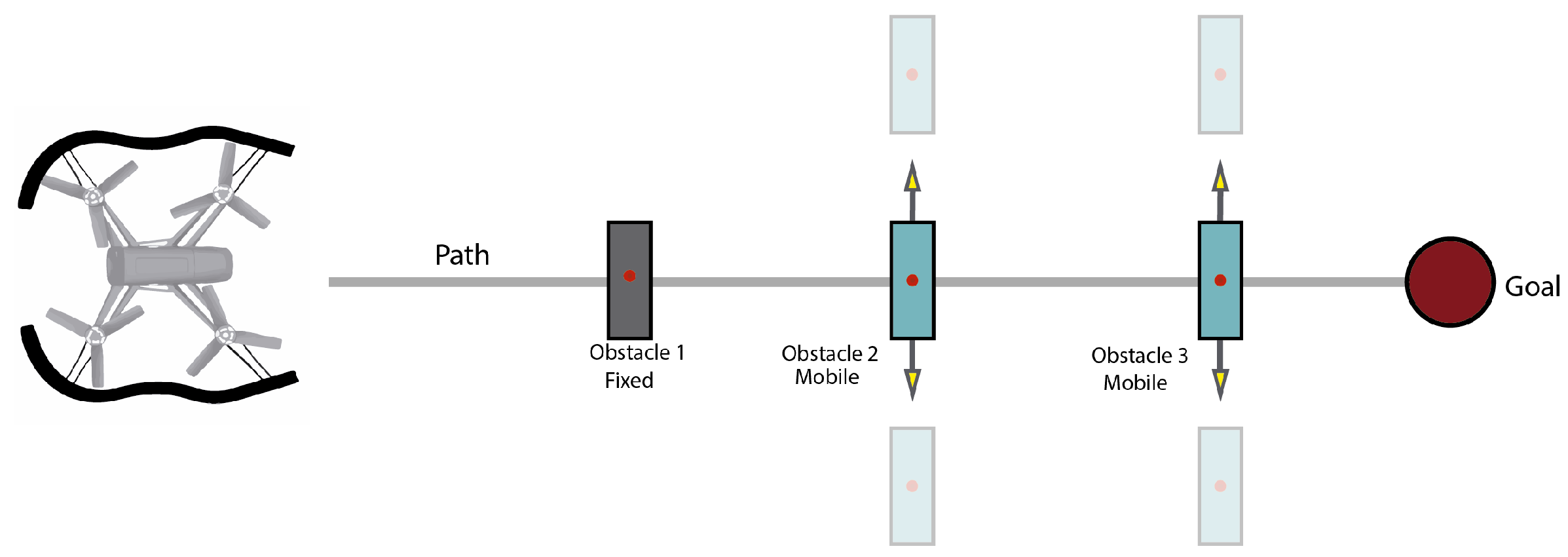

- One fixed obstacle.

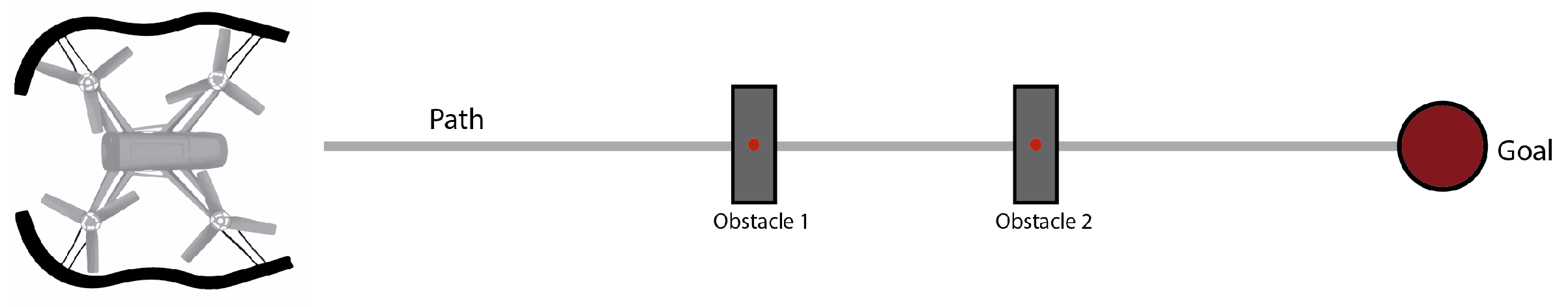

- Two fixed obstacles.

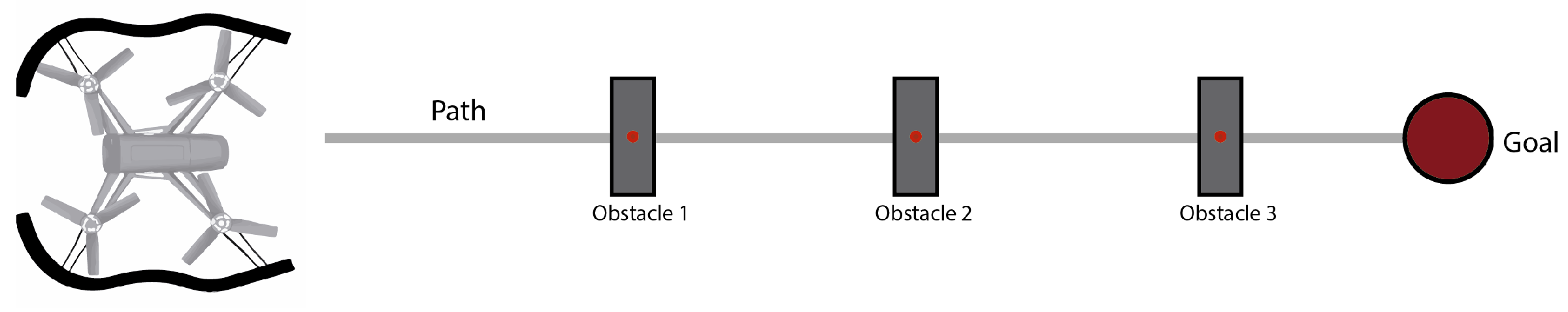

- Three fixed obstacles.

- One fixed obstacle and two mobile obstacles.

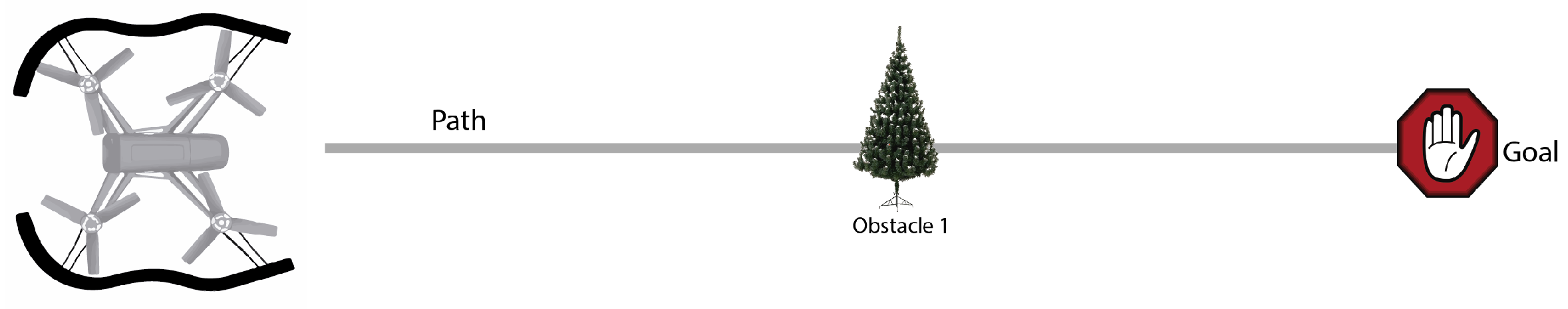

- One tree.

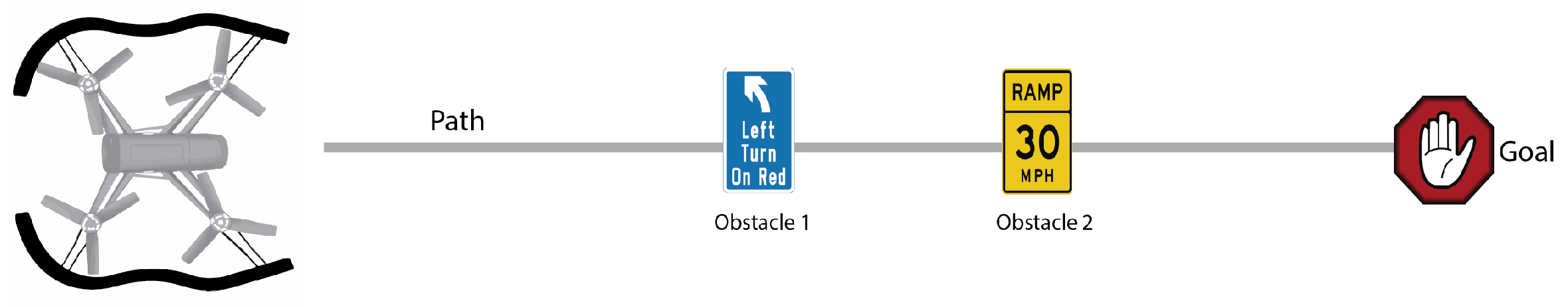

- Two traffic signs.

6. Conclusions and Future Work

Acknowledgments

Author Contributions

Conflicts of Interest


| Control | Maximum Speed (m/s) | Minimum Speed (m/s) | Average Speed (m/s) | Distance (m) | Time (s) | Battery (%) |
|---|---|---|---|---|---|---|
| Autonomous algorithm | 0.865 | 0.116 | 0.256 | 4.957 | 18.869 | 5.389 |
| Bang-Bang | 0.918 | 0.108 | 0.290 | 5.262 | 18.207 | 5.422 |
| Teleoperator with experience | 2.625 | 0.108 | 1.073 | 8.716 | 9.817 | 5.750 |
| Teleoperator without experience | 2.313 | 0.141 | 0.809 | 9.490 | 11.997 | 6.692 |

| Control | Maximum Speed (m/s) | Minimum Speed (m/s) | Average Speed (m/s) | Distance (m) | Time (s) | Battery (%) |
|---|---|---|---|---|---|---|
| Autonomous algorithm | 0.780 | 0.113 | 0.252 | 8.582 | 33.382 | 8.811 |
| Bang-Bang | 0.832 | 0.109 | 0.320 | 10.227 | 33.075 | 9.419 |
| Teleoperator with experience | 1.862 | 0.112 | 0.597 | 12.879 | 23.104 | 7.291 |
| Teleoperator without experience | 1.585 | 0.108 | 0.543 | 12.813 | 26.660 | 9.664 |

| Control | Maximum Speed (m/s) | Minimum Speed (m/s) | Average Speed (m/s) | Distance (m) | Time (s) | Battery (%) |
|---|---|---|---|---|---|---|
| Autonomous algorithm | 0.940 | 0.104 | 0.253 | 11.914 | 46.143 | 11.536 |
| Bang-Bang | 0.952 | 0.108 | 0.276 | 13.120 | 46.633 | 15.357 |
| Teleoperator with experience | 2.646 | 0.104 | 0.626 | 21.056 | 48.418 | 6.321 |
| Teleoperator without experience | 2.791 | 0.106 | 0.987 | 32.123 | 48.887 | 7.361 |

| Control | Maximum Speed (m/s) | Minimum Speed (m/s) | Average Speed (m/s) | Distance (m) | Time (s) | Battery (%) |
|---|---|---|---|---|---|---|
| Autonomous algorithm | 0.760 | 0.104 | 0.235 | 11.759 | 48.271 | 13.888 |
| Bang-Bang | 0.942 | 0.108 | 0.304 | 16.997 | 51.800 | 13.184 |
| Teleoperator with experience | 2.189 | 0.118 | 0.915 | 15.859 | 20.490 | 11.390 |
| Teleoperator without experience | 2.910 | 0.103 | 0.767 | 22.040 | 34.038 | 12.419 |

| Control | Total Number of Flights | Successful Flights | Unsuccessful Flights | Successful Flights Ratio (%) |
|---|---|---|---|---|
| Autonomous algorithm | 20 | 16 | 4 | 80 |
| Bang-Bang | 20 | 12 | 8 | 60 |
| Teleoperator with experience | 20 | 13 | 7 | 65 |
| Teleoperator without experience | 20 | 11 | 9 | 55 |
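The successful flights ratio in the table above is the number of successful flights divided by the total number of flights, expressed as a percentage. The short sketch below recomputes it from the tabulated counts; the function and variable names are illustrative only.

```python
def success_ratio(successful, total):
    """Percentage of flights that completed the path without contact."""
    return 100.0 * successful / total


# (successful, unsuccessful) flight counts copied from the table above.
flights = {
    "Autonomous algorithm": (16, 4),
    "Bang-Bang": (12, 8),
    "Teleoperator with experience": (13, 7),
    "Teleoperator without experience": (11, 9),
}

ratios = {
    control: success_ratio(ok, ok + failed)
    for control, (ok, failed) in flights.items()
}
```

Each control method was flown 20 times, so one additional successful flight changes the ratio by 5 percentage points.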

| Obstacle Type | Maximum Speed (m/s) | Minimum Speed (m/s) | Average Speed (m/s) | Distance (m) | Time (s) | Battery (%) |
|---|---|---|---|---|---|---|
| Pre-designed obstacle | 0.865 | 0.116 | 0.256 | 4.957 | 18.869 | 5.389 |
| Tree | 0.303 | 0.028 | 0.124 | 3.025 | 24.121 | 3.432 |
| Traffic signs | 0.634 | 0.052 | 0.241 | 5.928 | 27.620 | 5.625 |

| Obstacle Type | Total Number of Flights | Successful Flights | Unsuccessful Flights | Successful Flights Ratio (%) |
|---|---|---|---|---|
| Pre-designed obstacle | 10 | 8 | 2 | 80 |
| Tree | 10 | 4 | 6 | 40 |
| Traffic signs | 10 | 8 | 2 | 80 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license ( http://creativecommons.org/licenses/by/4.0/).

Aguilar, W.G.; Casaliglla, V.P.; Pólit, J.L. Obstacle Avoidance Based-Visual Navigation for Micro Aerial Vehicles. Electronics 2017, 6, 10. https://doi.org/10.3390/electronics6010010
