Abstract

Motion detection and tracking are relevant problems for mobile robots, both to avoid collisions in dynamic environments during navigation and in applications where service robots interact with humans. This paper presents a simple method to distinguish mobile obstacles from the environment, based on applying fuzzy threshold selection to consecutive two-dimensional (2D) laser scans previously matched with robot odometry. The proposed method has been tested indoors with the Auriga-α mobile robot to estimate the motion of nearby pedestrians.

1. Introduction

Motion detection can be performed by separating the foreground from the background in a sequence of images [1,2] or laser scans [3,4] taken from a static location. From a moving vehicle, the problem becomes more challenging because unmatched areas can correspond either to moving obstacles or to occlusions caused by the changing point of view [5]. In this case, motion sensing can be useful both for mobile robot navigation [6] and for driving assistance systems [7].

Different sensor configurations, such as a single camera [8], stereo vision [9], and 2D [10] or 3D [11] laser scanners, can be employed to assess motion. Mertz et al. present a comprehensive review of works on the detection and tracking of moving objects based on 2D laser scanners [12]. With this kind of sensor, moving objects can be reliably identified by searching for discrepancies between a scan and a previously known map of the environment [13]. Alternatively, the robot can first be driven around to build a background map of the environment [14,15], an updated local map around the robot can be employed [16,17], or motion detection can be integrated into SLAM [18]. Simpler procedures require only matched consecutive laser scans [5]. Pedestrians are usually regarded as dynamic obstacles that should be avoided [8], but they can also be followed at a certain distance [10,19].

A key process for motion detection is data clustering, which consists of grouping together the points of a laser scan that belong to the same potential mobile object. To distinguish them from static objects, geometric and statistical features [20], theoretical shape models [21], laser reflection intensity [22] or a threshold [16,17] can be used. The clustering threshold can be chosen empirically as a constant [17] or as a time-variant value [16].

In this paper, fuzzy threshold selection that takes into account both the curvature and the speed of the robot is applied to detect mobile objects. In this way, the number of clusters to be processed can be reduced. The proposed solution only employs two consecutive laser scans previously matched with robot odometry. This simple method has been tested indoors with a tracked mobile robot to estimate the motion of nearby pedestrians, and it has also been compared with constant threshold selection.

2. Motion Detection Procedure

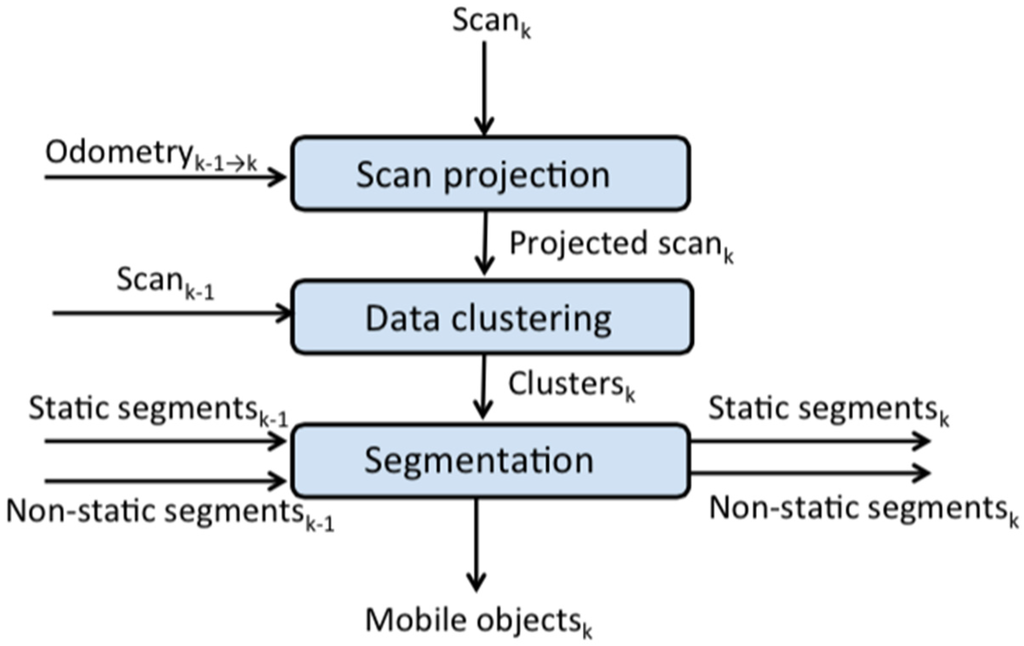

The procedure aims to obtain sets of consecutive laser points from a scan, called segments, that can correspond to mobile objects. An overview of the whole procedure is outlined in Figure 1.

In the first step, the laser points of the current scan whose ranges exceed a maximum distance d are discarded. This distance depends on M, the minimum number of points required to discern a mobile object of size Z, and on δ and Ф, the angular resolution and the field of view of the onboard laser scanner, respectively.
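One plausible form of the maximum distance, assuming small-angle geometry, follows from beam counting: an object of size Z at range d subtends roughly Z/d radians and is therefore hit by about Z/(dδ) beams, so requiring at least M beams gives d = Z/(Mδ), with δ in radians. This assumed form does not involve Ф, so it may differ from the authors' Equation (1), but it reproduces the 14 m reported in Section 4 for M = 4, Z = 0.5 m and δ = 0.5°. The helper names are hypothetical.

```python
import math

def max_detection_distance(M: int, Z: float, delta_deg: float) -> float:
    """Assumed small-angle form of the maximum distance: d = Z / (M * delta).

    An object of size Z at range d spans about Z / d radians, i.e. roughly
    Z / (d * delta) consecutive beams; asking for at least M beams gives d.
    """
    delta = math.radians(delta_deg)  # angular resolution in radians
    return Z / (M * delta)

def filter_by_range(ranges, d_max):
    """Mark ranges beyond d_max as None so beam indices stay aligned."""
    return [r if r <= d_max else None for r in ranges]

d = max_detection_distance(M=4, Z=0.5, delta_deg=0.5)
print(round(d, 2))  # → 14.32
```

Out-of-range points are marked rather than deleted so that the beam index j keeps its meaning when the scan is later compared with the previous one.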

The range scan at discrete time k−1 is a set of N Cartesian points indexed by j = 1, …, N. The current scan at time k is projected into the XY frame of the previous scan according to odometric data, and the correspondence index function J(j) [23] relates the points of both scans. Note that, with laser scanners with a limited field of view, some points may have no correspondence.
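The projection can be sketched as follows, assuming that odometry provides the displacement (tx, ty, Δθ) of the current laser frame expressed in the previous one, that beam i of a 180° scan points at −90° + iδ, and that correspondences are found by recomputing the bearing of each projected point. This nearest-beam rule is an illustrative assumption, not necessarily the correspondence function J(j) of [23], and the name project_scan is hypothetical.

```python
import math
from typing import List, Optional, Tuple

def project_scan(ranges: List[Optional[float]], pose: Tuple[float, float, float],
                 fov_deg: float = 180.0, res_deg: float = 0.5) -> List[Optional[float]]:
    """Project the current scan into the previous laser frame.

    pose = (tx, ty, dtheta): assumed displacement of the current laser frame
    expressed in the previous one. Returns, for every beam index j of the
    previous scan, the projected range falling on that bearing, or None if no
    point falls there. When several points land on the same beam, the closest
    one is kept because it would occlude the others.
    """
    tx, ty, dth = pose
    n = len(ranges)
    start = math.radians(-fov_deg / 2)
    res = math.radians(res_deg)
    projected: List[Optional[float]] = [None] * n
    for i, r in enumerate(ranges):
        if r is None:                      # point discarded by the range filter
            continue
        a = start + i * res                # beam bearing in the current frame
        px, py = r * math.cos(a), r * math.sin(a)
        # Rigid-body transform into the previous frame
        x = tx + math.cos(dth) * px - math.sin(dth) * py
        y = ty + math.sin(dth) * px + math.cos(dth) * py
        j = round((math.atan2(y, x) - start) / res)   # nearest previous beam
        if 0 <= j < n:                     # outside the field of view: no match
            rho = math.hypot(x, y)
            if projected[j] is None or rho < projected[j]:
                projected[j] = rho
    return projected

# Robot moved 1 m forward: an obstacle seen at 4 m straight ahead now maps to 5 m
out = project_scan([None] * 180 + [4.0] + [None] * 180, (1.0, 0.0, 0.0))
```

Keeping the closest candidate per beam mimics what the laser would actually have measured from the previous viewpoint.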

Figure 1.

Overview of the motion detection procedure.

In the second step, called clustering, the projected scan is compared with the previous one. The procedure assumes that, for static objects, the distances measured in consecutive scans differ by less than a given threshold σ. Applying σ to the matched consecutive scans yields a binary vector V of size N composed of zeros and ones.
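A minimal sketch of this comparison, assuming that a matched pair is the range stored on beam j of the previous scan and the projected range assigned to the same beam, with None marking a point without correspondence (the helper name motion_vector is hypothetical):

```python
from typing import List, Optional

def motion_vector(prev: List[Optional[float]], proj: List[Optional[float]],
                  sigma: float) -> List[int]:
    """Binary vector V: 1 where matched ranges differ by at least sigma.

    A point with no correspondence (None in either scan) yields 0, as does a
    static point whose ranges differ by less than sigma.
    """
    return [1 if p is not None and q is not None and abs(p - q) >= sigma else 0
            for p, q in zip(prev, proj)]

V = motion_vector([4.0, 4.0, 6.0, None], [4.1, 3.0, None, 2.0], sigma=0.7)
print(V)  # → [0, 1, 0, 0]
```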

A zero in V(j) means either that point j belongs to a stationary object or that it has no correspondence, whereas a one means that the point can be part of a potential mobile object. Every unbroken sequence of ones is called a cluster, and its length is its number of points. The set of clusters at discrete time k gathers all the T clusters included in V.
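Extracting the clusters amounts to finding the runs of ones in V. The sketch below (hypothetical helper name) returns each cluster as its starting index and its length, so T is simply the number of returned pairs:

```python
from typing import List, Tuple

def extract_clusters(V: List[int]) -> List[Tuple[int, int]]:
    """Return (start index, length) for every unbroken run of ones in V."""
    clusters = []
    start = None
    for j, v in enumerate(list(V) + [0]):   # sentinel 0 closes a trailing run
        if v == 1 and start is None:
            start = j                        # a new cluster begins
        elif v == 0 and start is not None:
            clusters.append((start, j - start))
            start = None
    return clusters

clusters = extract_clusters([0, 1, 1, 0, 1, 0, 1, 1, 1])
print(clusters)  # → [(1, 2), (4, 1), (6, 3)]
```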

The last step, called segmentation, distinguishes the clusters that may correspond to pedestrians. Because the clusters generated by threshold selection differ in size, they are transformed into segments with a more regular number of points by applying the following basic rules.

First, short clusters that are close to each other are grouped into a single segment, so that, for example, the two legs of a person are treated as one mobile object. Moreover, clusters with fewer than M points are discarded, and long clusters are divided into smaller segments. In addition, the segments classified as potential pedestrians in the previous scan but not confirmed (such as occluded parts of the environment or the first detection of a person) are also considered, together with the set of segments identified as static objects in previous scans. The final result is the set of segments that most probably correspond to pedestrians in the current scan, together with the updated sets of unconfirmed and static segments.
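The three basic rules can be sketched on clusters given as (start index, length) pairs. The parameters short_len, max_gap and max_len are hypothetical, closeness is measured here in beam indices rather than metric distance for simplicity, and the bookkeeping of unconfirmed and static segments across scans is omitted.

```python
import math
from typing import List, Tuple

Cluster = Tuple[int, int]  # (start beam index, number of beams)

def segment_clusters(clusters: List[Cluster], M: int, short_len: int,
                     max_gap: int, max_len: int) -> List[Cluster]:
    """Apply the three basic segmentation rules (illustrative parameters)."""
    # Rule 1: merge short clusters separated by at most max_gap beams
    merged: List[Cluster] = []
    for start, length in sorted(clusters):
        if merged:
            prev_start, prev_len = merged[-1]
            gap = start - (prev_start + prev_len)
            if prev_len <= short_len and length <= short_len and gap <= max_gap:
                merged[-1] = (prev_start, start + length - prev_start)
                continue
        merged.append((start, length))

    segments: List[Cluster] = []
    for start, length in merged:
        # Rule 2: discard clusters with fewer than M points
        if length < M:
            continue
        # Rule 3: split long clusters into nearly equal pieces of at most max_len beams
        pieces = math.ceil(length / max_len)
        base, extra = divmod(length, pieces)
        pos = start
        for p in range(pieces):
            size = base + (1 if p < extra else 0)
            segments.append((pos, size))
            pos += size
    return segments

segs = segment_clusters([(10, 3), (15, 3), (40, 2), (60, 25)],
                        M=4, short_len=5, max_gap=3, max_len=10)
print(segs)  # → [(10, 8), (60, 9), (69, 8), (77, 8)]
```

In this example, two 3-beam leg clusters separated by two beams are merged into one 8-beam segment that survives the M = 4 filter, whereas each leg alone would have been discarded.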

3. Fuzzy Threshold Selection

The number of clusters to be processed during navigation is a key parameter to be optimized. With a small value of σ, more clusters must be examined to find those that may correspond to mobile objects. Conversely, with a large value of σ, fewer clusters are produced, but relevant motion information can be lost.

Larger vehicle curvatures are usually associated with larger odometric errors. In that case, the threshold should be reduced so that more clusters are studied in detail. Conversely, at higher robot speeds, wrong motion detections can be reduced by increasing the threshold. A constant threshold cannot satisfy both situations at the same time.

Fuzzy logic can deal with this problem by means of approximate reasoning, and it has long been employed in mobile robot navigation, e.g., for path tracking [24]. A fuzzy algorithm can thus choose a suitable value of σ adaptively, according to the actual speed and curvature of the vehicle.

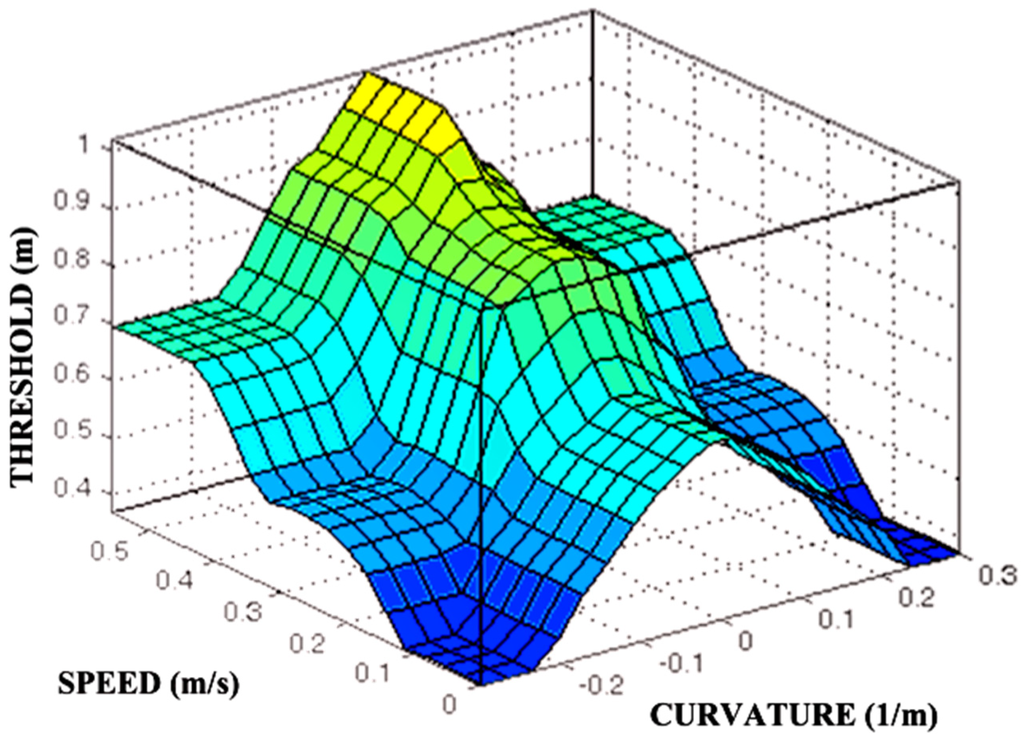

To formulate the fuzzy rules in the classical form IF <inputs> THEN <output>, the relationship between the inputs and the output must be established. When the absolute value of the curvature increases during turns, the threshold must decrease; conversely, when the speed increases, the threshold has to increase. In case of conflict between both inputs, curvature is more relevant than speed. The fuzzy sets and rules need to be adjusted experimentally for each mobile robot; in particular, they depend on the robot's odometry and on its maximum speed and curvature.

Fuzzy inference can be time-consuming during navigation. To speed up threshold selection, a fuzzy surface can be generated off-line; during navigation, the σ value to apply is then obtained simply by interpolating this surface at the measured inputs.
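The off-line surface and its on-line use can be sketched with a precomputed grid and bilinear interpolation. The grids are hypothetical, and stand_in is only a placeholder for the real fuzzy inference, chosen piecewise linear so that the interpolated value can be checked by hand.

```python
from bisect import bisect_right
from typing import Callable, List

def build_surface(f: Callable[[float, float], float], speeds: List[float],
                  curvs: List[float]) -> List[List[float]]:
    """Evaluate the (expensive) inference f off-line on a speed x curvature grid."""
    return [[f(v, c) for c in curvs] for v in speeds]

def _cell(axis: List[float], x: float) -> int:
    """Index i such that axis[i] <= x <= axis[i + 1], for x inside the grid."""
    return min(max(bisect_right(axis, x) - 1, 0), len(axis) - 2)

def interpolate(surface: List[List[float]], speeds: List[float],
                curvs: List[float], v: float, c: float) -> float:
    """Bilinear interpolation of the precomputed surface at (v, c)."""
    v = min(max(v, speeds[0]), speeds[-1])   # clamp inputs to the grid
    c = min(max(c, curvs[0]), curvs[-1])
    i, j = _cell(speeds, v), _cell(curvs, c)
    tv = (v - speeds[i]) / (speeds[i + 1] - speeds[i])
    tc = (c - curvs[j]) / (curvs[j + 1] - curvs[j])
    s00, s01 = surface[i][j], surface[i][j + 1]
    s10, s11 = surface[i + 1][j], surface[i + 1][j + 1]
    return ((1 - tv) * (1 - tc) * s00 + (1 - tv) * tc * s01
            + tv * (1 - tc) * s10 + tv * tc * s11)

# Hypothetical stand-in for the fuzzy inference, used only to exercise the lookup
def stand_in(v: float, c: float) -> float:
    return 0.6 + 0.2 * v - 0.3 * abs(c)

SPEEDS = [0.0, 0.25, 0.5, 0.75, 1.0]   # m/s
CURVS = [-1.0, -0.5, 0.0, 0.5, 1.0]    # normalized curvature (assumed range)
SURFACE = build_surface(stand_in, SPEEDS, CURVS)
sigma = interpolate(SURFACE, SPEEDS, CURVS, 0.5, 0.25)
```

Inputs outside the grid are clamped to its border, so the lookup always returns a threshold within the range computed off-line.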

4. Experimental Results

The experiments have been carried out with the tracked mobile robot Auriga-α, equipped with a 2D laser scanner (Sick LMS 200) mounted at the front of the vehicle, 0.55 m above the ground (see Figure 2). Because of its skid-steer locomotion, the top speed of 1 m/s can only be reached during straight-line motion, and it decreases as the demanded curvature increases.

Figure 2.

The mobile robot Auriga-α with its 2D laser scanner.

The field of view of this rangefinder is 180°, with an angular resolution of 0.5°, so each scan is composed of N = 361 points. Transmitting a complete new scan to the on-board computer takes 270 ms. However, the motion detection procedure is applied every 540 ms, i.e., one of every two successive scans is discarded.
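The scan size and processing period quoted above follow from simple arithmetic, where T_proc is a label introduced here for the processing period:

```latex
N = \frac{\Phi}{\delta} + 1 = \frac{180^\circ}{0.5^\circ} + 1 = 361,
\qquad
T_{\text{proc}} = 2 \times 270\ \text{ms} = 540\ \text{ms}
```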

Odometric data are obtained every 30 ms from encoders on both traction motors. The motion of this tracked vehicle is estimated using an approximate kinematic model for hard planar surfaces [25], and these data are employed for matching consecutive laser scans.
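Odometry must be accumulated between the two processed scans (18 samples of 30 ms per 540 ms period) to obtain the relative pose used for matching. The sketch below assumes that each sample has already been converted into a linear speed v and an angular rate ω; for a tracked vehicle, this conversion is where the approximate kinematic model of [25] would enter, and it is not reproduced here. The fixed offset between the robot reference point and the laser is also ignored, and integrate_odometry is a hypothetical name.

```python
import math
from typing import Iterable, Tuple

def integrate_odometry(samples: Iterable[Tuple[float, float]], dt: float = 0.03
                       ) -> Tuple[float, float, float]:
    """Compose (v, omega) samples into the pose (x, y, theta) reached at the end
    of the interval, expressed in the robot frame at its start."""
    x = y = th = 0.0
    for v, w in samples:
        # Midpoint heading reduces the error of plain Euler integration
        mid = th + 0.5 * w * dt
        x += v * dt * math.cos(mid)
        y += v * dt * math.sin(mid)
        th += w * dt
    return x, y, th

# 18 samples at 1 m/s in a straight line: 0.54 m travelled between scans
x, y, th = integrate_odometry([(1.0, 0.0)] * 18)
```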

Three triangular fuzzy sets have been defined for each input (speed and curvature) and for the output σ. Mamdani inference has been used, with the center of gravity for defuzzification. The resulting fuzzy surface is shown in Figure 3.
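A Mamdani system of this kind can be sketched with min for AND, max for aggregation and a discretized center of gravity. The universes, set breakpoints and the 3 × 3 rule table below are illustrative assumptions that only follow the qualitative relations stated in Section 3 (σ decreases with the absolute curvature, increases with speed, and curvature dominates); the actual sets tuned for Auriga-α are not given in the text.

```python
from typing import Dict, Tuple

Tri = Tuple[float, float, float]

def tri(x: float, a: float, b: float, c: float) -> float:
    """Triangular membership with feet a, c and peak b (a == b or b == c allowed)."""
    if x < a or x > c:
        return 0.0
    if x <= b:
        return 1.0 if b == a else (x - a) / (b - a)
    return 1.0 if c == b else (c - x) / (c - b)

# Hypothetical sets: speed in m/s (top speed 1 m/s), curvature as
# |kappa| / kappa_max in [0, 1], threshold sigma in metres.
SPEED: Dict[str, Tri] = {"LOW": (0.0, 0.0, 0.5), "MED": (0.0, 0.5, 1.0), "HIGH": (0.5, 1.0, 1.0)}
CURV: Dict[str, Tri] = dict(SPEED)
SIGMA: Dict[str, Tri] = {"LOW": (0.4, 0.4, 0.7), "MED": (0.4, 0.7, 1.0), "HIGH": (0.7, 1.0, 1.0)}

# Illustrative rules (curvature, speed) -> sigma: a high curvature overrides speed
RULES: Dict[Tuple[str, str], str] = {
    ("LOW", "LOW"): "MED", ("LOW", "MED"): "HIGH", ("LOW", "HIGH"): "HIGH",
    ("MED", "LOW"): "LOW", ("MED", "MED"): "MED", ("MED", "HIGH"): "MED",
    ("HIGH", "LOW"): "LOW", ("HIGH", "MED"): "LOW", ("HIGH", "HIGH"): "LOW",
}

def fuzzy_sigma(speed: float, curv_norm: float, steps: int = 301) -> float:
    """Mamdani inference followed by center-of-gravity defuzzification."""
    v = min(max(speed, 0.0), 1.0)
    c = min(abs(curv_norm), 1.0)
    # Firing strength of each output set: max over rules of min(antecedents)
    strength = {label: 0.0 for label in SIGMA}
    for (cl, sl), out in RULES.items():
        w = min(tri(c, *CURV[cl]), tri(v, *SPEED[sl]))
        strength[out] = max(strength[out], w)
    # Centroid of the clipped, aggregated output set on a discrete grid
    lo, hi = 0.4, 1.0
    num = den = 0.0
    for k in range(steps):
        y = lo + (hi - lo) * k / (steps - 1)
        mu = max(min(strength[o], tri(y, *SIGMA[o])) for o in SIGMA)
        num += y * mu
        den += mu
    return num / den if den > 0 else 0.5 * (lo + hi)
```

Evaluating fuzzy_sigma on a grid of speeds and curvatures is one way to produce the off-line fuzzy surface described in Section 3.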

The experiments took place in the hall of a building featuring a central pair of columns, stairs and a wall with several glass doors (see Figure 4). With the empirically chosen values M = 4 and Z = 0.5 m in Equation (1), the maximum distance for pedestrian detection is 14 m. The proposed approach has been tested using both simulated and real data, obtained by manually guiding the robot with a joystick.

Figure 3.

Fuzzy surface for threshold selection in Auriga-α.

Figure 4.

The experimental site.

4.1. Results with Simulated Data

Three experiments have been performed with an accurate simulator of the Auriga-α mobile robot. Pedestrians are simulated as circles of radius 0.2 m moving in a straight line at a constant speed of 0.8 m/s. In the first experiment, a person crosses behind the column and then passes through an open door. In the second and third experiments, a pedestrian walks parallel to a wall at a distance of 0.1 m and 0.4 m, respectively.

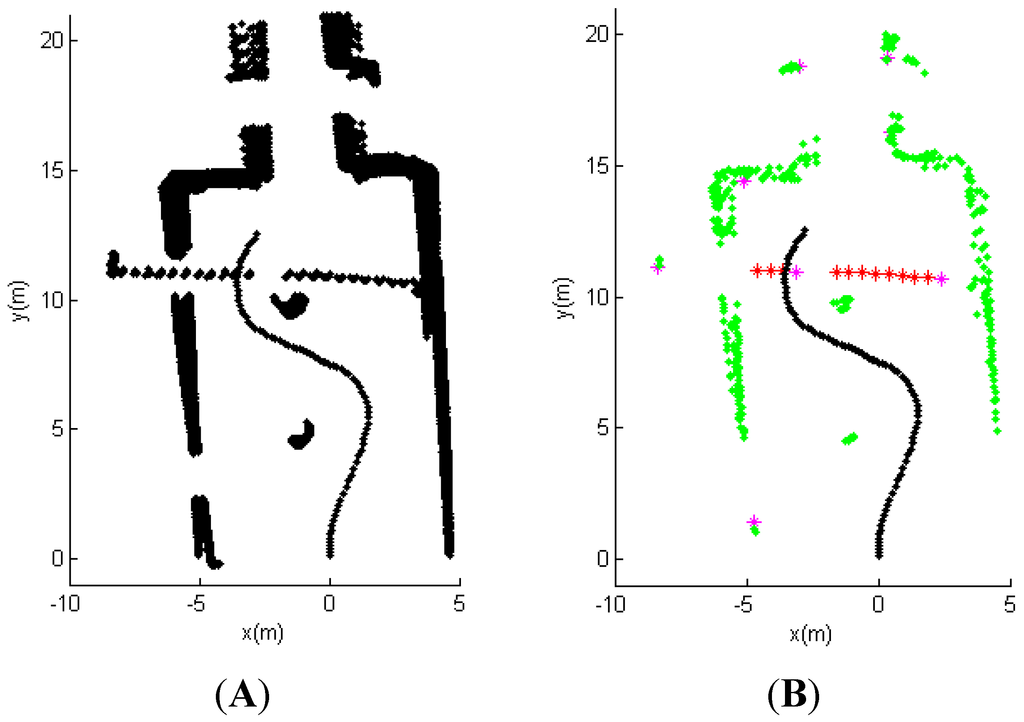

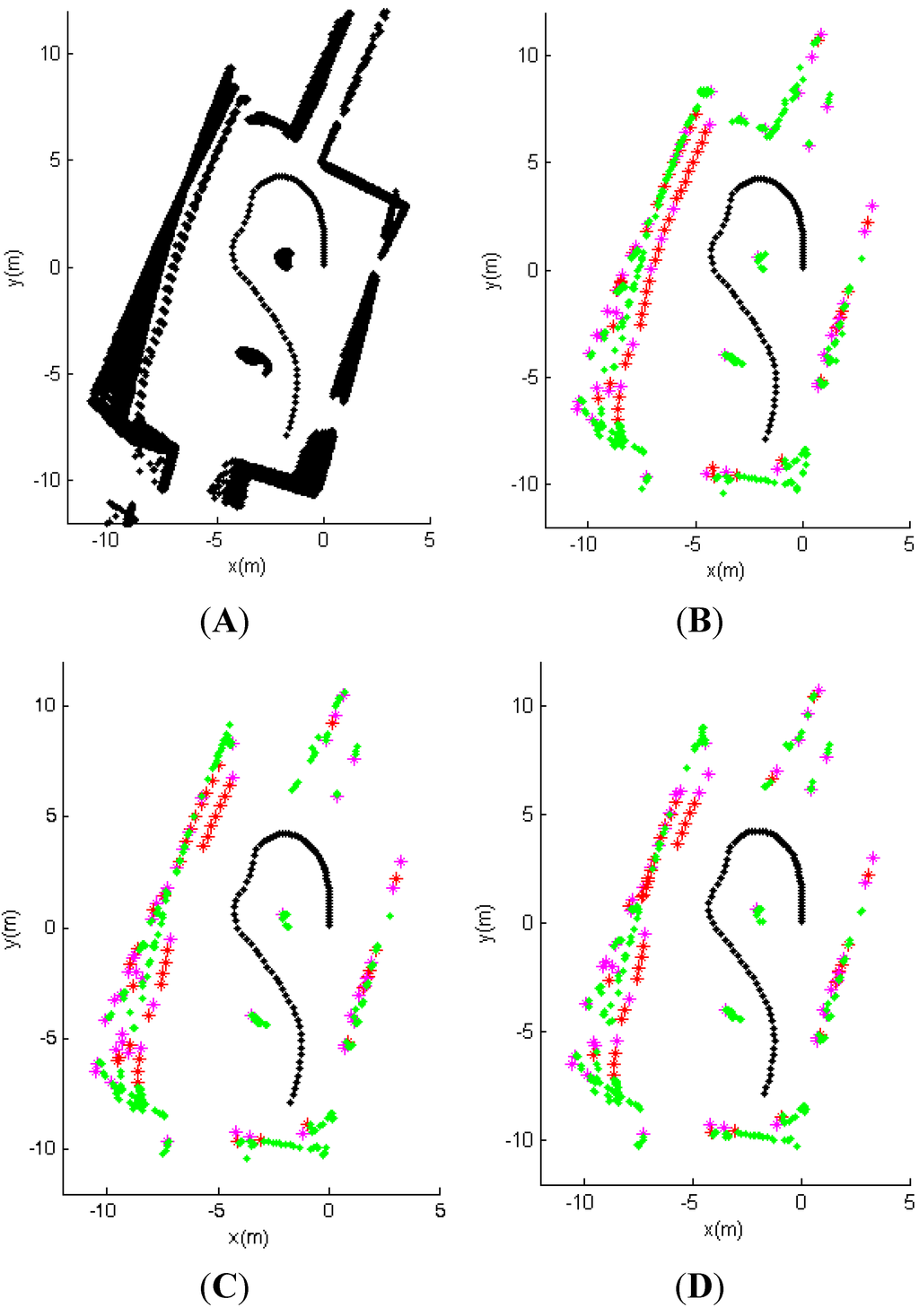

Figure 5.

Motion detection in the first simulated experiment: Raw matched data (A); and segmentation results (B).

The maps obtained by successive alignment of consecutive laser scans are shown in Figure 5A, Figure 6A and Figure 7A. These maps contain errors due to the accumulation of matching errors from robot odometry. The figures also show the trajectories followed by Auriga-α during the experiments.

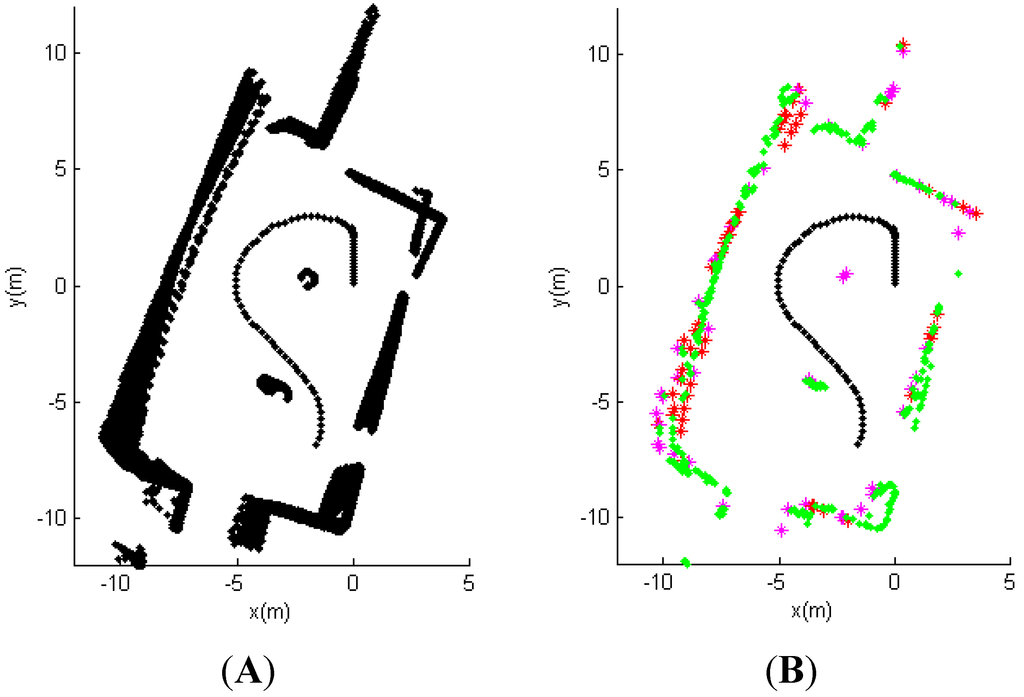

Figure 6.

Motion detection in the second simulated experiment: Raw matched data (A); and segmentation results (B).

The results of applying the motion detection procedure with fuzzy threshold selection are shown in Figure 5B, Figure 6B and Figure 7B. Each segment is represented by an asterisk plotted at the mean of the coordinates of its points. Red asterisks show the detected motion of a pedestrian, green asterisks denote static segments, and magenta asterisks represent potential but unconfirmed motion.

Figure 7.

Motion detection in the third simulated experiment: Raw matched data (A); fuzzy segmentation results (B); and results with constant thresholds σ = 0.7 m (C) and σ = 0.8 m (D).

In the first simulated experiment (see Figure 5), the proposed method correctly detects the pedestrian and is able to resume detection after the person crosses behind the column. However, tracking is interrupted when the person passes through the door. In the second experiment (see Figure 6), the proposed method usually fails to distinguish the person from the wall. This does not happen when the distance to the wall is larger, as shown in the third experiment (see Figure 7A,B). Moreover, Figure 7C,D shows that the fuzzy threshold performs better than a constant threshold.

4.2. Results with Real Data

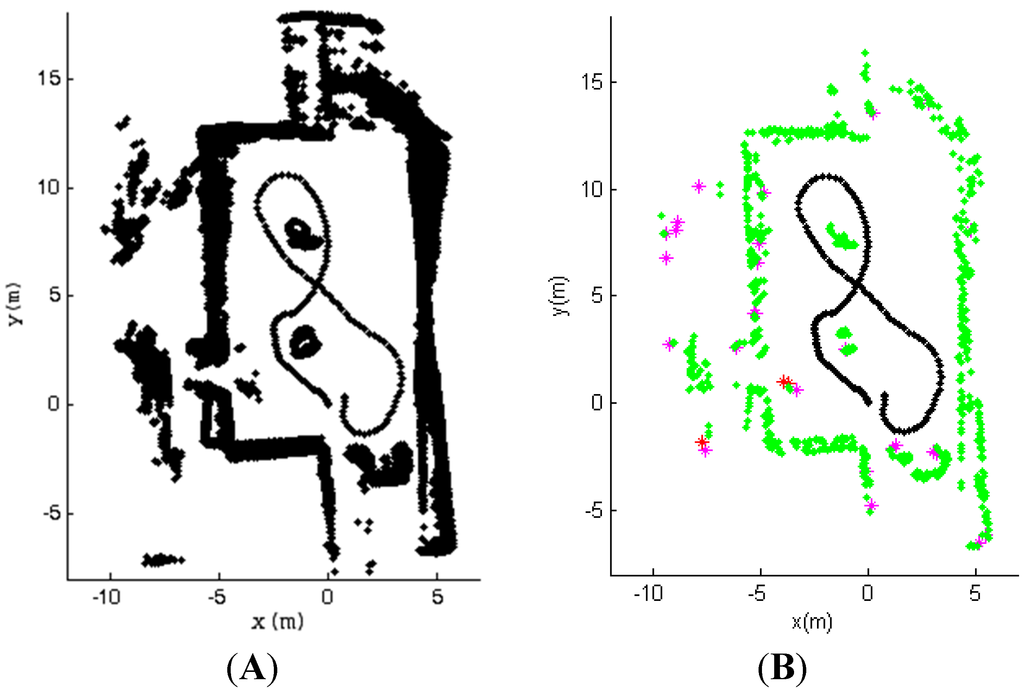

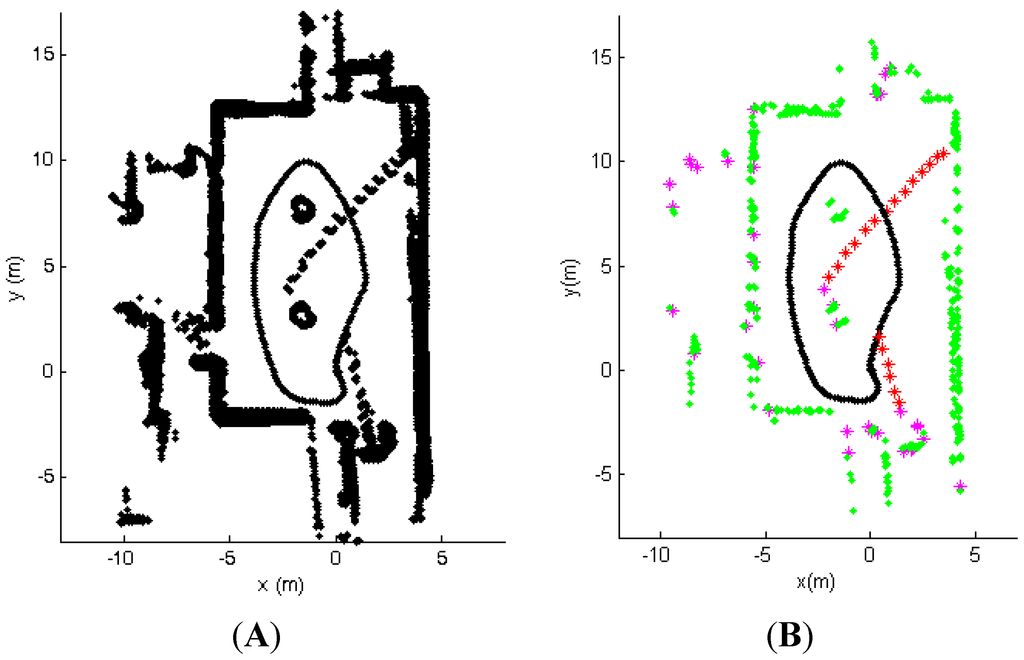

The maps obtained by successive alignment of consecutive laser scans are shown in Figure 8A and Figure 9A, together with the 8-shaped and O-shaped trajectories followed by Auriga-α in the two experiments.

The first experiment checks how well the procedure avoids wrong detections, since the pattern of a column partially captured by the laser is similar to the pattern of a person in a scan. During this experiment, only one person is in the hall, who walks from the rear of the mobile robot and enters the field of view of the laser rangefinder just for a moment. In the second experiment, two persons walked through the hall at different times and can be observed by the mobile robot.

The results of applying the motion detection procedure to the laser scans using fuzzy threshold selection are shown in Figure 8B and Figure 9B for the first and second real experiments, respectively. Figure 8B shows that the columns are correctly identified as static objects. Moreover, the person who entered the field of view of the laser scanner for a moment is also properly detected. In Figure 9B, the trajectories of two persons walking through the hall during the experiment are correctly detected.

Figure 8.

Motion detection in the first real experiment: Raw matched data (A); and segmentation results (B).

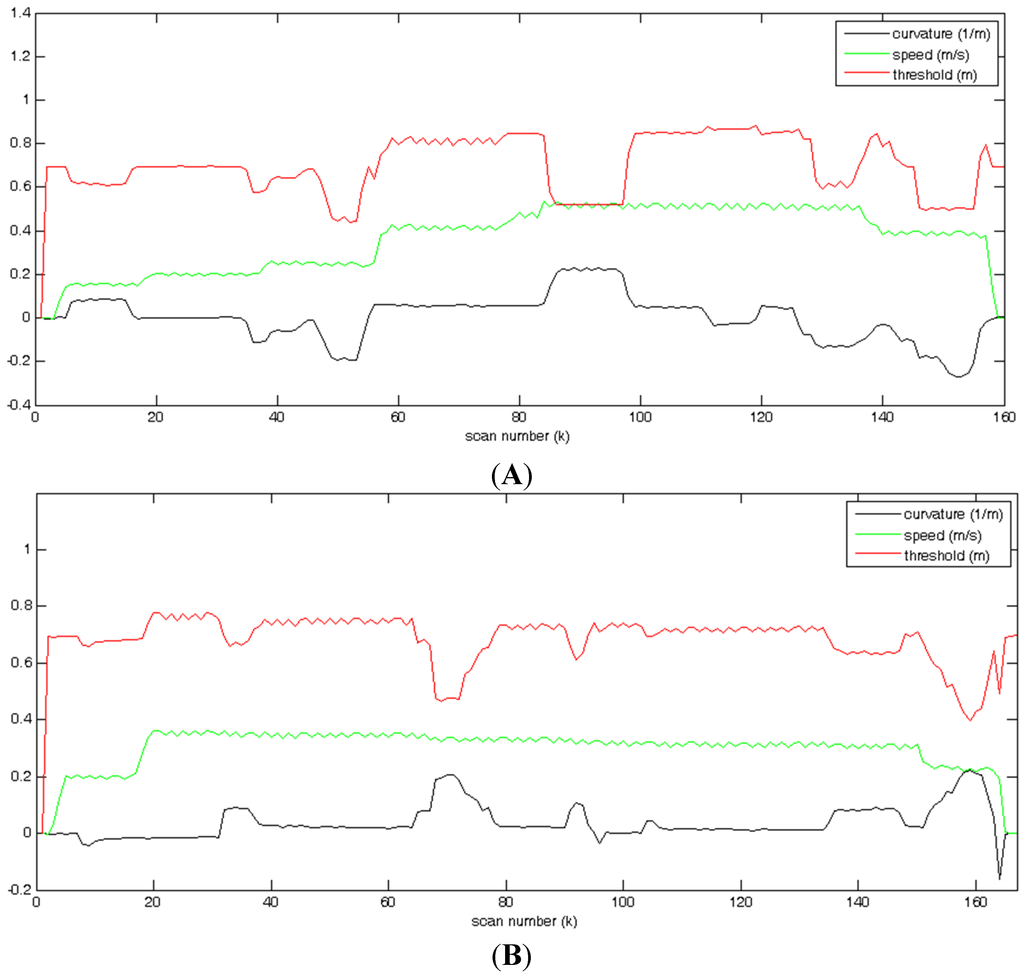

The thresholds applied with fuzzy selection during both experiments are shown in Figure 10. It can be observed that, at constant speed, the threshold decreases when the absolute value of the curvature increases. The mean values of σ are 0.703 m and 0.687 m for the first and second experiments, respectively, which are very similar. The corresponding standard deviations are 12.6 cm and 7.9 cm; the larger value for the first experiment is due to its greater variations in both speed and curvature.

Figure 9.

Motion detection in the second real experiment: Raw matched data (A); and segmentation results (B).

Figure 10.

Fuzzy thresholds obtained in the first (A); and second (B) real experiments.

The results obtained with different constant values of σ are compared with fuzzy threshold selection in Table 1 and Table 2 for the first and second experiments, respectively. The tables list the total number of clusters along each experiment, together with the total numbers of wrong detections and undetected motions over all executions of the motion detection procedure. With σ = 0.3 m, the number of clusters is the largest, which corresponds to the poorest filtering. In contrast, σ = 0.9 m yields the fewest clusters. Among constant thresholds, the best option would be σ = 0.7 m, but fuzzy selection provides equal or slightly better detection results while analyzing fewer clusters in both experiments.

Table 1.

Effect of threshold selection in the first real experiment.

| Threshold (σ) | 0.3 m | 0.6 m | 0.7 m | 0.8 m | 0.9 m | Fuzzy |
|---|---|---|---|---|---|---|
| Number of clusters | 2054 | 1506 | 1312 | 1172 | 1042 | 1179 |
| Wrong detections | 3 | 1 | 1 | 4 | 1 | 1 |
| Undetected motion | 0 | 0 | 0 | 0 | 0 | 0 |

Table 2.

Effect of threshold selection in the second real experiment.

| Threshold (σ) | 0.3 m | 0.6 m | 0.7 m | 0.8 m | 0.9 m | Fuzzy |
|---|---|---|---|---|---|---|
| Number of clusters | 1420 | 809 | 771 | 712 | 645 | 708 |
| Wrong detections | 0 | 0 | 1 | 2 | 2 | 0 |
| Undetected motion | 0 | 0 | 0 | 1 | 1 | 0 |
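The relative savings in processed clusters can be computed directly from the counts in Table 1 and Table 2; the dictionary layout and helper name below are illustrative only.

```python
# Cluster counts copied from Table 1 ("first") and Table 2 ("second")
clusters = {
    "first":  {0.3: 2054, 0.6: 1506, 0.7: 1312, 0.8: 1172, 0.9: 1042, "fuzzy": 1179},
    "second": {0.3: 1420, 0.6: 809, 0.7: 771, 0.8: 712, 0.9: 645, "fuzzy": 708},
}

def reduction(exp: str, baseline: float) -> float:
    """Percentage of clusters saved by fuzzy selection w.r.t. a constant sigma."""
    counts = clusters[exp]
    return 100.0 * (1 - counts["fuzzy"] / counts[baseline])

for exp in clusters:
    print(f"{exp}: {reduction(exp, 0.7):.1f}% fewer than sigma = 0.7 m, "
          f"{reduction(exp, 0.3):.1f}% fewer than sigma = 0.3 m")
# first: 10.1% fewer than sigma = 0.7 m, 42.6% fewer than sigma = 0.3 m
# second: 8.2% fewer than sigma = 0.7 m, 50.1% fewer than sigma = 0.3 m
```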

5. Conclusions

This work has proposed the use of adaptive threshold selection with fuzzy logic for the detection of mobile objects. The fuzzy threshold depends on both the actual speed and the curvature of the mobile robot. This simple method employs two successive laser scans matched with odometry and requires neither building a map of the environment nor searching for the best scan overlap. The experiments with the mobile robot Auriga-α show a reduction in the number of clusters to be processed in order to detect pedestrians.

Future work will include implementation of robot speed adjustment during autonomous navigation according to estimated person trajectories [26]. It would also be interesting to compare the proposed method with more complex motion detection methods that incorporate target identification and tracking [27,28].

Acknowledgments

This work was partially supported by the Spanish CICYT project DPI 2011-22443 and the Andalusian project PE-2010 TEP-6101.

Author Contributions

M.A. Martínez developed the motion detection method; J.L. Martínez and M.A. Martínez wrote the paper; illustrations were generated by M.A. Martínez; simulated and real experimental data were recorded by J. Morales; analysis of experiments was performed by M.A. Martínez, J.L. Martínez and J. Morales.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Cuevas, C.; Mohedano, R.; Jaureguizar, F.; García, N. High-quality real-time moving object detection by non-parametric segmentation. Electron. Lett. 2010, 46, 910–911.

- Fernández-Caballero, A.; López, M.T.; Serrano-Cuerda, J. Thermal-infrared pedestrian ROI extraction through thermal and motion information fusion. Sensors 2014, 14, 6666–6676.

- Panangadan, A.; Mataric, M.; Sukhatme, G.S. Tracking and modeling of human activity using laser rangefinders. Int. J. Soc. Robot. 2010, 2, 95–107.

- Glas, D.F.; Ferreri, F.; Miyashita, T.; Ishiguro, H.; Hagita, N. Automatic calibration of laser range finder positions for pedestrian tracking based on social group detections. Adv. Robot. 2014, 28, 573–588.

- Amarasinghe, D.; Mann, G.K.I.; Gosine, R.G. Moving object detection in indoor environments using laser range data. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Beijing, China, 9–15 October 2006.

- Ozaki, M.; Kakimuma, K.; Hashimoto, M.; Takahashi, K. Laser-based pedestrian tracking in outdoor environments by multiple mobile robots. Sensors 2012, 12, 14489–14507.

- Xu, Y.; Xu, D.; Lin, S.; Han, T.X.; Cao, X.; Li, X. Detection of sudden pedestrian crossings for driving assistance systems. IEEE Trans. 2012, 42, 729–739.

- Kim, J.; Park, J.; Do, Y. Pedestrian detection by fusing multiple techniques of a single color camera for a mobile robot. In Proceedings of the International Conference on Information Science, Electronics and Electrical Engineering, Sapporo, Japan, 26–28 April 2014.

- Liu, S.; Huang, Y.; Zhang, R. Obstacle recognition for ADAS using stereovision and snake models. In Proceedings of the IEEE International Conference on Intelligent Transportation Systems, Qingdao, China, 8–11 October 2014.

- Chen, C.L.; Chou, C.C.; Lian, F.L. Detecting and tracking of host people on a slave mobile robot for service-related tasks. In Proceedings of the SICE Annual Conference, Tokyo, Japan, 13–18 September 2011.

- Petrovskaya, A.; Thrun, S. Model based vehicle detection and tracking for autonomous urban driving. Auton. Robot. 2009, 26, 123–139.

- Mertz, C.; Navarro-Serment, L.E.; MacLachlan, R.; Rybski, P.; Steinfeld, A.; Suppé, A.; Urmson, C.; Vandapel, N.; Hebert, M.; Thorpe, C.; et al. Moving object detection with laser scanners. J. Field Robot. 2013, 30, 17–43.

- Montemerlo, M.; Thrun, S.; Whittaker, W. Conditional particle filters for simultaneous mobile robot localization and people-tracking. In Proceedings of the IEEE International Conference on Robotics and Automation, Washington, DC, USA, 11–15 May 2002.

- Hu, N.; Englebienne, G.; Kröse, B. Bayesian fusion of ceiling mounted camera and laser range finder on a mobile robot for people detection and localization. Hum. Behav. Underst. 2012, 7559, 41–51.

- Ballantyne, J.; Johns, E.; Valibeik, S.; Wong, C.; Yang, G.Z. Autonomous navigation for mobile robots with human-robot interaction. Robot Intel. 2010, 12, 245–268.

- Kondaxakis, P.; Kasderidis, S.; Trahanias, P. A multi-target tracking technique for mobile robots using a laser range scanner. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Nice, France, 22–26 September 2008.

- Vu, T.D.; Burlet, J.; Aycard, O. Grid-based localization and local mapping with moving object detection and tracking. Inform. Fusion 2011, 12, 58–69.

- Zhao, H.; Chiba, M.; Shibasaki, R.; Shao, X.; Cui, J.; Zha, H. SLAM in a dynamic large outdoor environment using a laser scanner. In Proceedings of the IEEE International Conference on Robotics and Automation, Pasadena, CA, USA, 19–23 May 2008.

- Morales, J.; Martínez, J.L.; Martínez, M.A.; Mandow, A. Pure-Pursuit reactive path tracking for non-holonomic mobile robots with a 2D laser-scanner. EURASIP J. Adv. Signal Process. 2009.

- Arras, K.O.; Martínez-Mozos, O.; Burgard, W. Using boosted features for the detection of people in 2D range data. In Proceedings of the IEEE International Conference on Robotics and Automation, Rome, Italy, 10–14 April 2007.

- Glas, D.F.; Miyashita, T.; Ishiguro, H.; Hagita, N. Laser-Based tracking of human position and orientation using parametric shape modeling. Adv. Robot. 2009, 23, 405–428.

- Carballo, A.; Ohya, A.; Yuta, S. Reliable people detection using range and intensity data from multiple layers of laser range finders on a mobile robot. Int. J. Soc. Robot. 2011, 3, 167–186.

- Martínez, J.L.; González, J.; Morales, J.; Mandow, A.; García-Cerezo, A.J. Mobile robot motion estimation by 2D scan matching with genetic and iterative closest point algorithms. J. Field Robot. 2006, 23, 21–34.

- Ollero, A.; García-Cerezo, A.; Martínez, J.L. Fuzzy supervisory path tracking of mobile robots. Control Engin. Pract. 1994, 2, 313–319.

- Martínez, J.L.; Mandow, A.; Morales, J.; Pedraza, S.; García-Cerezo, A. Approximating kinematics for tracked mobile robots. Int. J. Robot. Res. 2005, 24, 867–878.

- Fernández, R.; Muñoz, V.F.; Mandow, A.; García-Cerezo, A.; Martínez, J.L. Scanner-laser local navigation in dynamic worlds. In Proceedings of the IFAC Triennial World Congress, Beijing, China, 5–9 July 1999.

- Kondaxakis, P.; Baltzakis, H.; Trahanias, P. Learning moving objects in a multi-target tracking scenario for mobile robots that use laser range measurements. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, St. Louis, MO, USA, 11–15 October 2009.

- Kondaxakis, P.; Baltzakis, H. Multiple-target classification and tracking for mobile robots using a 2D laser range scanner. Int. J. Hum. Robot. 2012, 9, 1–24.

© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).