1. Introduction

The Satellite IoT market is rapidly evolving, driven by advancements in satellite communication technologies and the increasing need for global connectivity, particularly in remote and underserved regions. As traditional terrestrial networks struggle to reach isolated areas, LEO satellites are emerging as a transformative solution, offering low-latency, high-throughput communication capabilities that are ideal for IoT applications. According to [

1], a significant growth in the Satellite IoT Market is forecasted by 2027. The market is projected to increase from

$1.1 billion in 2022 to

$2.9 billion by 2027, at a CAGR of 21.9%. This growth is primarily driven by the increasing demand for global, low-latency connectivity, facilitated by LEO satellites. These satellites offer faster, more reliable communication compared to traditional geostationary satellites, making them ideal for IoT applications in remote and underserved areas. Key trends identified in the report include the reduction in satellite launch costs, which makes satellite IoT solutions more affordable, and the integration of IoT and AI technologies, which enable real-time data analytics and monitoring. The report also highlights the growing adoption of satellite IoT in sectors like agriculture, automotive, and defense, where reliable communication networks are essential. North America is expected to hold the largest share of the market, while Asia-Pacific is predicted to experience the fastest growth. However, challenges such as regulatory complexities and spectrum allocation could impact the rapid expansion of satellite IoT networks. The potential of LEO satellites emerges to provide low-cost, reliable communication for IoT applications, offering a strong outlook for the satellite IoT market over the next few years [

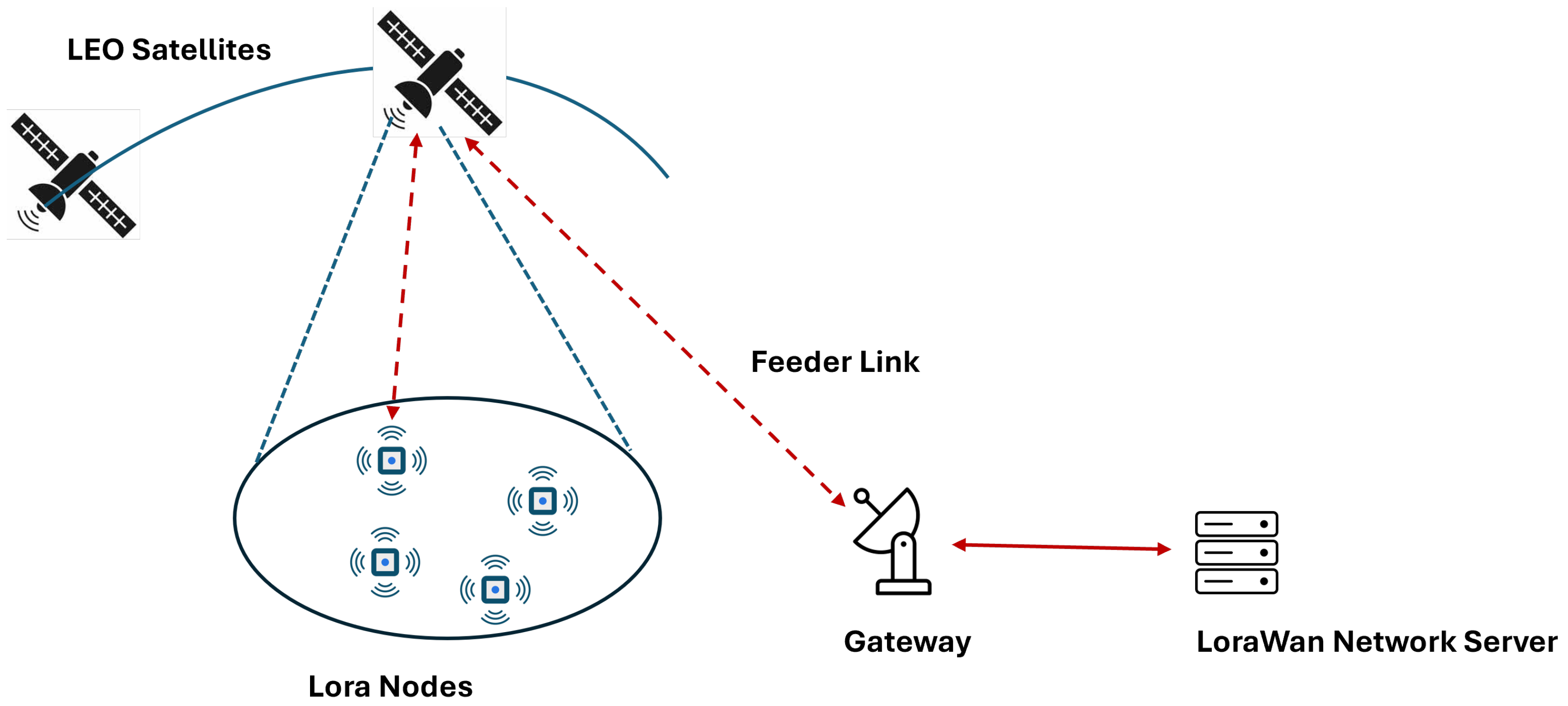

2]. In this context, the Lora/LoraWAN protocol has been updated for connections directly to the LEO satellite (with an altitude ranging from 500 km to 2000 km) [

3]. Nevertheless, LoRa and LoRaWAN were originally designed for terrestrial, low-mobility scenarios, and their direct application to satellite communications introduces several critical technical challenges that must be addressed. In particular, the high relative velocity between LEO satellites and ground terminals leads to severe Doppler shifts, which can significantly degrade synchronization and demodulation performance in conventional LoRa receivers. Moreover, the large free-space path loss associated with satellite-to-ground links, compared to terrestrial deployments, imposes stringent constraints on the link budget and receiver sensitivity. In addition, the limited adaptability of traditional LoRa modulation schemes reduces robustness against time-varying channel conditions, interference, and dynamic satellite pass characteristics [

4]. The paper presents a review of the papers on LoRa/LoRaWAN and LEO Satellite Communication. It also provides a performance analysis of the LoRa/LoRaWAN and LEO Satellite combined system.
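To give a sense of scale for the Doppler shifts mentioned above, the following minimal sketch estimates the magnitude of the Doppler shift seen by a fixed ground terminal during an overhead pass of a satellite in a circular orbit. It is only an illustration under stated assumptions: Earth rotation is neglected, the Earth is treated as a sphere, and the 868 MHz carrier is an assumed example value (a band commonly used by LoRaWAN in Europe), not a parameter taken from the cited works. The function names are hypothetical.

```python
import math

MU_EARTH = 3.986004418e14  # Earth's gravitational parameter, m^3/s^2
R_EARTH = 6.371e6          # mean Earth radius, m (spherical Earth assumed)
C = 299_792_458.0          # speed of light, m/s


def orbital_speed(altitude_m):
    """Speed of a satellite in a circular orbit at the given altitude."""
    return math.sqrt(MU_EARTH / (R_EARTH + altitude_m))


def doppler_shift_hz(altitude_m, elevation_deg, carrier_hz):
    """Magnitude of the Doppler shift for an overhead pass.

    For a circular orbit and a non-rotating spherical Earth, the range rate
    at elevation angle e is v * (R_E / (R_E + h)) * cos(e).
    """
    r = R_EARTH + altitude_m
    radial_speed = (orbital_speed(altitude_m) * (R_EARTH / r)
                    * math.cos(math.radians(elevation_deg)))
    return carrier_hz * radial_speed / C


if __name__ == "__main__":
    carrier = 868e6  # assumed example carrier frequency, Hz
    for h_km in (500, 2000):
        shift = doppler_shift_hz(h_km * 1e3, 0.0, carrier)
        print(f"h = {h_km} km: max |Doppler| = {shift / 1e3:.1f} kHz")
```

Under these assumptions the shift is largest near the horizon and vanishes at zenith, where the rate of change of the shift is instead at its highest.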

Addressing the aforementioned limitations is therefore essential to enable scalable and reliable Direct-to-Satellite (D2S) IoT communications, and represents a key motivation of this work. The paper is structured as follows. Section 2 reviews the current advancements in satellite IoT technologies, highlighting the role of LEO satellites and the increasing integration of satellite networks with terrestrial IoT systems. Section 3 focuses on the design and technical aspects of LoRa transceivers that enable D2S communication. It covers the challenges of frequency management, link budget, and the technical requirements for establishing direct communication between ground devices and satellite gateways. Section 4 introduces the Long-Range Frequency-Hopping Spread Spectrum (LR-FHSS) technique as an enhancement for LoRa communications. This technique is specifically conceived to overcome the limitations of traditional LoRa modulation in satellite environments, improving robustness to Doppler effects, scalability, and interference resilience. Section 5 provides a performance analysis of the D2S communication system. Section 6 presents the results of the performance analysis and compares LR-FHSS with traditional LoRa and other modulation schemes. Finally, the conclusions are drawn in Section 7.

2. State of the Art

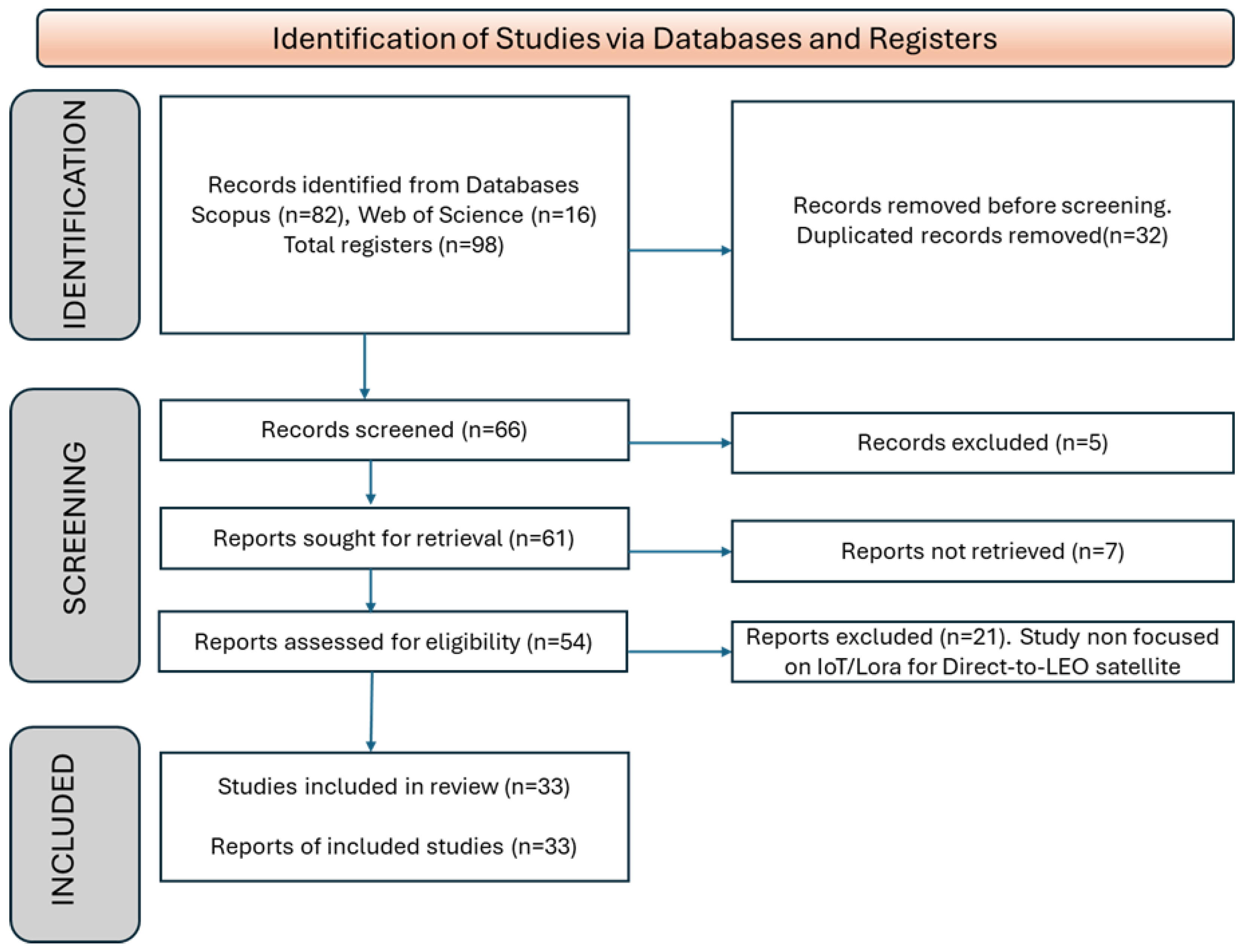

The systematic review was conducted following a PRISMA-based screening and selection process. The Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines ensure transparency, reproducibility, and methodological rigor in the study selection process, as shown in Figure 1.

The manuscripts were retrieved from the Scopus and Web of Science databases, using LoRa, LoRaWAN, LEO satellite, and IoT as the search keywords. The inclusion and exclusion criteria for articles are listed in Table 1 and Table 2, respectively.

The criteria were designed to ensure that only studies directly relevant to LoRa/LoRaWAN-based Direct-to-LEO satellite IoT were considered. Specifically, only peer-reviewed publications providing analytical, simulation-based, or experimental evaluation in the context of LEO satellites were included. The timeframe was restricted to publications from 2019 to 2025, and only articles with accessible full texts written in English were considered. These criteria allowed for a focused and rigorous selection of studies that contribute meaningful technical insights to the field.

Studies were excluded if they did not focus on Direct-to-LEO satellite IoT, were not based on LoRa or LoRaWAN technologies, or addressed non-LEO satellite scenarios. Additional exclusions were applied to purely conceptual papers lacking quantitative evaluation, duplicates, non-peer-reviewed sources, and articles whose full text could not be retrieved. These exclusion criteria ensured that the review only incorporated high-quality, relevant, and technically rigorous studies, thereby minimizing potential bias and maintaining the integrity of the systematic review process.

During the screening phase, a total of 66 records were screened based on title and abstract. Of these, 5 records were excluded as they were clearly not relevant to the scope of this review. The remaining 61 reports were considered potentially eligible and were therefore sought for full-text retrieval. Among the reports sought for retrieval, 7 full-text articles could not be retrieved, mainly due to access restrictions or unavailability. Consequently, 54 reports were successfully retrieved and assessed for eligibility through full-text examination. During the eligibility assessment, 21 reports were excluded because they did not specifically focus on IoT or LoRa/LoRaWAN communications in Direct-to-LEO satellite scenarios, which represents the core scope of this review. Following this rigorous selection process, 33 studies met all the inclusion criteria and were therefore included in the final review. Each included study corresponded to a single report; accordingly, the number of reports of included studies was also 33. This PRISMA-guided methodology ensured a systematic and unbiased identification of relevant literature, providing a solid foundation for the qualitative analysis of LoRa/LoRaWAN-based Direct-to-Satellite IoT solutions over LEO satellite constellations.
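The record counts reported for the screening flow can be cross-checked with a few subtractions. The short sketch below does this using only the figures stated in the text; the function name and the dictionary keys are hypothetical labels chosen for illustration.

```python
def prisma_flow(screened, excluded_at_screening, not_retrieved,
                excluded_at_eligibility):
    """Return the record counts at each stage of a PRISMA-style flow."""
    sought = screened - excluded_at_screening      # sought for retrieval
    assessed = sought - not_retrieved              # assessed in full text
    included = assessed - excluded_at_eligibility  # included in the review
    return {"sought": sought, "assessed": assessed, "included": included}


if __name__ == "__main__":
    # Counts taken from the screening description in the text.
    print(prisma_flow(screened=66, excluded_at_screening=5,
                      not_retrieved=7, excluded_at_eligibility=21))
```

With the reported inputs, the stages evaluate to 61 reports sought, 54 assessed, and 33 included, matching the numbers given in the text.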

Table 3 summarizes the key studies on the integration of LoRa/LoRaWAN with LEO satellite communications. The table provides a chronological overview of research contributions from 2019 to 2025, highlighting the evolution of network design, modulation techniques, multiple-access schemes, and performance optimization strategies. This compilation offers a structured reference framework for understanding trends, challenges, and emerging solutions in satellite-based IoT deployments, setting the stage for the detailed discussion of individual works presented in the following sections.

Ref. [5] explores the integration of LEO satellite constellations into 5G and beyond-5G communication systems. The authors employ mathematical modeling techniques, including network capacity models, signal propagation models, and orbital mechanics to simulate the behavior of LEO satellites in providing mobile broadband, massive machine-type communications (mMTC), and ultra-reliable low-latency communications (URLLC). The models account for satellite orbit parameters, satellite handovers, and coverage areas, while the formulae used include signal-to-noise ratio (SNR) calculations based on the Friis transmission equation and link budget analysis for satellite communication. Additionally, the paper uses models of interference from both satellite and terrestrial networks. The results of the mathematical simulations suggest that LEO satellites are crucial for expanding global network coverage, especially in underserved regions.

Ref. [6] focuses on optimizing the key parameters of LoRa communication for satellite-based IoT applications in the LEO environment. This paper develops a mathematical model to simulate LoRa’s communication behavior under different environmental and system conditions, such as Doppler shifts, signal degradation, and channel noise. The optimization approach is based on the analysis of key performance indicators such as energy efficiency and communication reliability, with the authors employing a multi-objective optimization framework. The formulae used include the computation of the signal-to-noise ratio (SNR) for each parameter configuration, the Shannon capacity formula for determining channel capacity, and energy consumption models derived from the transmission power and duty cycle. Simulation results demonstrate that optimized LoRa parameters can enhance communication reliability and energy efficiency, making it a viable solution for satellite IoT applications.

In [7], the authors introduce a novel mathematical model for ultra-dense LEO satellite constellations. The model is based on the queuing theory to represent satellite network traffic, the interference models to capture signal collisions, and the orbital mechanics models to simulate the deployment and movement of satellites in low Earth orbit. They apply models of Poisson processes to describe the traffic arrival rates and employ the Erlang-B formula to calculate the blocking probability for satellite channels. Additionally, interference management techniques are modeled using game theory, where the authors use Nash equilibrium solutions to model satellite resource sharing and interference mitigation. The results indicate that ultra-dense constellations significantly enhance system capacity, but advanced interference management is crucial to optimize network performance.

Ref. [8] investigates the performance of LoRa (Long Range) technology for Internet of Things (IoT) networks using LEO satellites. The authors conduct an in-depth analysis of LoRa’s feasibility in satellite IoT applications, focusing on the challenges posed by the satellite environment, including high mobility, Doppler shifts, and propagation conditions. The study uses both analytical models and simulation results to assess key performance metrics, such as packet delivery success, energy efficiency, and link reliability, under various channel conditions typical of LEO satellite communications. The authors examine how LoRa’s physical-layer characteristics, such as Chirp Spread Spectrum (CSS) modulation and long-range communication capabilities, enable reliable data transmission in satellite IoT networks, especially in remote or underserved areas. The paper also explores the trade-offs between spreading factor, transmission power, and network coverage in the context of LEO satellite IoT deployments. Key findings reveal that while LoRa performs well in terms of range and power efficiency, it requires optimization in terms of synchronization and Doppler shift compensation to effectively support large-scale satellite IoT networks.

Ref. [9] provides a comprehensive overview of the Long-Range Frequency-Hopping Spread Spectrum (LR-FHSS) modulation scheme, specifically designed to enhance the scalability and robustness of LoRa-based networks. LR-FHSS introduces frequency hopping to the conventional LoRa modulation, allowing for more efficient spectrum usage and reducing interference in densely deployed environments. The authors offer a detailed performance analysis of LR-FHSS, comparing it to traditional LoRa in terms of capacity, energy efficiency, and interference resilience. Through both theoretical modeling and simulation, the paper demonstrates that LR-FHSS can significantly increase network capacity (up to 40 times) without compromising the low power consumption that is characteristic of LoRa technology. Additionally, the study highlights that LR-FHSS can maintain reliable communication in the presence of narrowband interference and congestion, which are common challenges in large-scale IoT deployments. Key results show that LR-FHSS’s frequency diversity allows for reduced packet collisions and enhanced link reliability, making it an attractive solution for large-scale IoT networks, particularly in applications such as smart cities and industrial IoT.

Ref. [10] introduces a unified architecture that bridges terrestrial and space-based IoT infrastructures, aiming to enable global and ubiquitous connectivity. The authors classify IoT–satellite interactions into direct and indirect satellite access models and systematically assess their performance in terms of energy efficiency, coverage, and delay. Particular attention is given to Low-Power Wide-Area Network (LPWAN) technologies, specifically NB-IoT and LoRa/LoRaWAN, evaluating their physical and protocol-layer feasibility for Low Earth Orbit (LEO) satellite scenarios. Key challenges such as Doppler shift, timing synchronization, link intermittency, and contention in the access medium are analyzed in detail. The study highlights that although LoRa and NB-IoT offer strong potential for low-energy satellite IoT, protocol adaptations and cross-layer design improvements are critical to ensure robust operation in high-mobility satellite environments. This foundational work sets the stage for further development of hybrid terrestrial–satellite IoT systems.

In [11], the authors explore the application of differential modulation techniques within LoRa communication frameworks targeted at LEO satellite-based IoT networks. The primary objective is to mitigate synchronization and frequency offset challenges, which are prominent in satellite channels characterized by high Doppler shifts and rapid mobility. Differential modulation, by allowing symbol decoding without requiring explicit phase recovery, enhances the robustness of LoRa links in dynamic propagation environments. The study provides both theoretical analysis and simulation-based validation, demonstrating that differential LoRa modulation can maintain reliable connectivity at low SNRs and under varying Doppler conditions. Additionally, it retains the core advantages of LoRa, such as long-range communication and low power consumption, making it a viable candidate for energy-efficient, space-based IoT deployments. This research contributes to improving the link reliability and physical-layer adaptability of LoRa in non-terrestrial environments.

The paper [12] provides a review of dynamic communication techniques for LEO satellite networks. Although this paper focuses more on reviewing the existing literature, it references several key mathematical models, including beamforming models that use array gain calculations to optimize the directional communication of satellites. The authors also discuss frequency reuse models, interference mitigation using power control algorithms, and dynamic scheduling methods, with mathematical formulations for optimal frequency allocation and channel assignment. Furthermore, they refer to dynamic beamforming models that employ antenna gain and signal attenuation equations, and discuss adaptive algorithms to handle satellite mobility, including Markov chain models to represent handover and routing protocols in dynamic LEO networks.

Ref. [13] presents the design of a LoRa-like transceiver optimized for satellite IoT applications. The mathematical models used in this paper include link budget calculations, considering factors such as satellite altitude, path loss, and free-space propagation models. The paper also uses signal degradation models to predict the attenuation over long distances. The formulae applied include the path loss model derived from the Friis transmission equation, and power consumption models based on signal strength and modulation schemes. Additionally, the authors utilize the Shannon–Hartley theorem to assess the theoretical maximum data rates achievable under varying signal conditions. The optimization process focuses on minimizing energy consumption while maintaining acceptable communication reliability, and the results demonstrate that the LoRa-like transceiver provides an energy-efficient solution with good performance over long distances in satellite networks.

Ref. [14] examines how LoRaWAN combined with LEO satellites can facilitate mMTC in remote regions. The paper uses mathematical models for network coverage and reliability, incorporating satellite link budget equations and path loss models specific to LEO satellites. The authors also apply models of interference and fading, including the Ricean fading model to simulate the impact of environmental conditions on signal strength. Additionally, the paper employs stochastic geometry models to evaluate the spatial distribution of devices and network performance in terms of coverage, throughput, and scalability. Simulations show that integrating LoRaWAN with LEO satellites offers a cost-effective and reliable solution for mMTC in rural and isolated areas.

Ref. [15] explores the performance of the LoRaWAN protocol integrated with LR-FHSS modulation for direct communication to LEO satellites. The authors used a combination of analytical and simulation models to evaluate the feasibility and performance of this system in satellite communication scenarios. To assess packet delivery from ground nodes to LEO satellites, the authors developed mathematical models that consider various factors such as satellite mobility, channel characteristics, and potential signal collisions. These models focus on the integration of LR-FHSS within the LoRaWAN framework to improve network capacity and resilience, specifically addressing the challenges posed by the dynamic nature of satellite communication. The analytical model also takes into account the average inter-arrival times for different packet elements, including headers and payload fragments, and calculates the overall success probability for packet transmission. This success probability is influenced by the first (Ftx-1) payload fragments and the last fragment of a data packet. Additionally, the study incorporates the effects of Rician fading in the communication channel, considering the elevation angle of the satellite as a function of time, which reflects the rapid variations in the channel caused by the movement of the satellite. This dynamic modeling is crucial for accurately simulating the real-world conditions of satellite communication. The results indicate that the use of LR-FHSS significantly improves the potential for large-scale LoRaWAN networks in direct-to-satellite scenarios. The study also identifies important trade-offs between different LR-FHSS-based data rates, particularly for the European region, and highlights the causes of packet losses, such as insufficient header replicas or inadequate payload fragment reception. These findings emphasize the potential of LR-FHSS to enhance direct connectivity between IoT devices and LEO satellites, providing an effective solution for enabling IoT applications in remote and underserved areas.

Ref. [16] investigates the performance of LoRaWAN with Long-Range Frequency-Hopping Spread Spectrum (LR-FHSS) in direct-to-satellite communication scenarios. The paper uses mathematical models to simulate the impact of Doppler shifts and interference on LoRaWAN’s performance. The authors use Doppler shift models based on satellite velocity and orbital parameters to analyze the variation in signal frequency, and interference models that consider multiple access interference and the impacts of frequency hopping. Additionally, the authors use SNR-based formulas to evaluate the system’s performance under varying link conditions, such as changes in the relative positions of satellites and ground stations. Simulation results indicate that LR-FHSS in LoRaWAN enhances the system’s robustness against Doppler shifts and interference, ensuring reliable communication in direct-to-satellite scenarios.

Ref. [17] explores the scalability of LoRa for IoT satellite communication systems and evaluates its performance in multiple-access communication scenarios. The authors use analytical models to calculate the channel capacity and throughput of LoRa-based networks, applying queuing theory and slotted ALOHA for multiple-access schemes. The paper incorporates interference models based on the random arrival of packets and the effects of channel collisions. Additionally, the authors use the Shannon capacity formula to analyze the maximum achievable data rates in multiple-access satellite IoT networks. Their findings show that LoRa can be scaled for large IoT deployments, offering a low-power solution that ensures efficient multiple-access operation in satellite environments.

In [18], the authors model the uplink channel for LoRa in satellite-based IoT systems. The paper uses models based on link budget analysis to calculate the effective SNR and the associated BER for uplink transmissions. The authors apply the Shannon–Hartley theorem to evaluate the capacity of the uplink channel and use fading models, such as Rayleigh and Ricean fading, to simulate realistic satellite communication conditions. Additionally, the authors consider the impact of transmission power on energy consumption, employing optimization techniques to determine the optimal transmission power for minimizing energy consumption while maintaining sufficient communication reliability. The findings suggest that LoRa provides a viable solution for uplink transmission in satellite IoT networks, particularly when optimized for power and signal conditions.
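Several of the works above combine a free-space (Friis) path loss model, a link-budget SNR, and the Shannon–Hartley capacity bound. The sketch below chains these three standard formulas to show how they fit together. It is not the model of any cited paper: fading, atmospheric, pointing, and implementation losses are ignored, and all numerical inputs (14 dBm transmit power, 0 dBi antennas, 500 km slant range, 868 MHz carrier, 125 kHz bandwidth, 6 dB noise figure) are assumed example values.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def fspl_db(distance_m, freq_hz):
    """Free-space path loss in dB: 20 * log10(4 * pi * d * f / c)."""
    return 20.0 * math.log10(4.0 * math.pi * distance_m * freq_hz / C)


def noise_power_dbm(bandwidth_hz, noise_figure_db):
    """Thermal noise floor (-174 dBm/Hz at 290 K) plus receiver noise figure."""
    return -174.0 + 10.0 * math.log10(bandwidth_hz) + noise_figure_db


def link_snr_db(tx_power_dbm, tx_gain_dbi, rx_gain_dbi, distance_m,
                freq_hz, bandwidth_hz, noise_figure_db):
    """SNR of a free-space link; fading and other losses are ignored."""
    rx_power_dbm = (tx_power_dbm + tx_gain_dbi + rx_gain_dbi
                    - fspl_db(distance_m, freq_hz))
    return rx_power_dbm - noise_power_dbm(bandwidth_hz, noise_figure_db)


def shannon_capacity_bps(bandwidth_hz, snr_db):
    """Shannon-Hartley upper bound on the data rate of an AWGN channel."""
    return bandwidth_hz * math.log2(1.0 + 10.0 ** (snr_db / 10.0))


if __name__ == "__main__":
    # All values below are illustrative assumptions, not measured data.
    snr = link_snr_db(tx_power_dbm=14.0, tx_gain_dbi=0.0, rx_gain_dbi=0.0,
                      distance_m=500e3, freq_hz=868e6,
                      bandwidth_hz=125e3, noise_figure_db=6.0)
    print(f"FSPL     = {fspl_db(500e3, 868e6):.1f} dB")
    print(f"SNR      = {snr:.1f} dB")
    print(f"Capacity = {shannon_capacity_bps(125e3, snr) / 1e3:.1f} kbit/s")
```

With these example inputs the SNR is negative, which illustrates why the reviewed works concentrate on spread-spectrum modulations, receiver sensitivity, and transmit power optimization.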

Ref. [

19] presents a novel scheduling algorithm, SALSA, designed to manage data transmission from IoT devices to LEO satellites. The paper introduces an optimization model for dynamic traffic scheduling, where the authors use queuing models to prioritize data packets based on urgency and delay tolerance. The scheduling algorithm minimizes communication delays and optimizes throughput by dynamically adjusting the transmission window, considering factors such as satellite position and network load. Mathematical formulations used in this paper include the calculation of optimal scheduling intervals using linear programming techniques, and the analysis of queueing delay based on Poisson processes. The paper concludes that SALSA significantly enhances the efficiency of LoRa-based satellite communications by reducing latency and increasing throughput, improving the overall performance of IoT networks. Ref. [

20] provides an in-depth review of scalable LoRaWAN solutions for satellite IoT systems. The authors discuss scalability models that address network densification, including interference mitigation and synchronization techniques. They present mathematical models of LoRaWAN network traffic, using stochastic models and queuing theory to evaluate network scalability under different operational conditions. The paper also references interference models based on Gaussian noise and path loss equations specific to satellite communication. The authors apply models from game theory to address resource allocation and power control in large-scale IoT systems. The review concludes that LoRaWAN can effectively scale for massive IoT systems in satellite networks but suggests that further improvements in network management and interference mitigation are necessary to handle the increasing demands of such systems. Ref. [

21] investigates the applicability of quasisynchronous LoRa communication in LEO nanosatellites. The primary objective is to address the Doppler shift challenges typically encountered in such environments and to enhance the reliability of long-range, low-power communication. The authors conduct a series of simulations to test the robustness and efficiency of quasisynchronous transmission techniques. These simulations are designed to reduce Doppler-induced signal degradation while preserving energy efficiency. Mathematical modeling focuses on the analysis of symbol error rate (SER) under additive white Gaussian noise (AWGN) conditions, considering various spreading factors and chip waveform structures. The outcomes of these simulations demonstrate that quasisynchronous LoRa can significantly improve communication reliability for nanosatellites engaged in IoT operations, particularly where energy constraints are paramount. Ref. [

22] presents a system architecture for a cost-effective nanosatellite communication platform based on LoRa technology, targeting emerging IoT deployments. The study involves the design and prototyping of both hardware and software elements of the communication system. Performance evaluation includes link budget calculations and assessments of signal range and energy efficiency. The analysis employs received signal strength indicator (RSSI) metrics and estimates the maximum communication distance achievable, which exceeds 14 km. The findings suggest that LoRa-based nanosatellites offer a viable solution for long-range, low-power communication systems that are both scalable and economically sustainable for large-scale IoT applications. Ref. [

24] presents a novel transmission protocol that integrates Device-to-Device (D2D) communication with LoRaWAN LR-FHSS to enhance the performance of direct-to-satellite (D2S) IoT networks. The authors propose a D2D-assisted scheme in which IoT devices first exchange data using terrestrial LoRa links and subsequently transmit both the original and parity packets, generated through network coding, to a LEO satellite gateway using the LR-FHSS modulation. This approach is designed to mitigate the limitations of conventional LoRaWAN deployments in D2S scenarios, particularly those associated with limited capacity and high outage rates in dense environments. A comprehensive system model is developed, incorporating a realistic ground-to-satellite fading channel characterized by a shadowed-Rician distribution. The authors derive closed-form expressions for the outage probability of both the baseline and D2D-aided LR-FHSS systems, taking into account key system parameters including noise, channel fading, unslotted ALOHA scheduling, capture effect, IoT device spatial distribution, and the geometry of the satellite link. These analytical results are rigorously validated through computer-based simulations under various deployment scenarios. The study demonstrates that the proposed D2D-aided LR-FHSS scheme significantly enhances network capacity compared to the traditional LR-FHSS approach. Specifically, the network capacity improves by up to 249.9% for Data Rate 6 (DR6) and 150.1% for Data Rate 5 (DR5) while maintaining a typical outage probability of

. This performance gain is achieved at the cost of one to two additional transmissions per device per time slot due to the D2D cooperation. The D2D approach also exhibits strong performance in dense IoT environments, where packet collisions and interference are prominent. In [

23] the authors provide a comparative review of LPWAN technologies, with a particular emphasis on LoRaWAN, and evaluate their potential for direct-to-satellite IoT communication. The methodology involves the systematic comparison of LPWAN protocols based on their scalability, power consumption, and data throughput. The analytical framework includes interference modeling, latency evaluation, and throughput estimation under variable environmental and operational conditions. The survey concludes that LPWAN technologies, especially LoRaWAN, are well-positioned to support satellite IoT networks; however, persistent challenges such as high-latency communication and susceptibility to interference warrant further investigation. Ref. [

25] surveys recent technological trends in the integration of LPWAN, specifically LoRaWAN, within LEO satellite constellations. The authors conduct a thorough review of advancements in synchronization protocols, interference mitigation strategies, and system scalability. Analytical modeling is used to characterize interference dynamics, while synchronization models assess the temporal alignment of satellite communications. Furthermore, scalability is evaluated through network topology simulations and capacity forecasting. The paper concludes that although LPWAN presents numerous advantages for LEO constellations, including low power usage and wide coverage, the technology requires enhanced coordination mechanisms and interference control techniques to achieve full operational efficacy. In [

26] the focus is on quantifying and mitigating the impact of Doppler shifts in LoRa-based satellite communications. The authors develop a mathematical model to calculate Doppler shifts resulting from the relative velocity of LEO satellites and ground terminals. The study introduces frequency compensation algorithms aimed at counteracting the signal distortion caused by rapid satellite motion. Performance is assessed through simulations that incorporate SNR analyses and compare communication quality with and without Doppler compensation mechanisms. The results confirm that Doppler effects significantly impair LoRa signal integrity in direct-to-satellite communication, but also show that well-designed compensation techniques can restore acceptable levels of performance. Ref. [

27] investigates strategies for minimizing energy consumption in LoRaWAN-based communication systems deployed via LEO satellites. The central focus of the paper is the development of adaptive transmission techniques that dynamically adjust power levels and duty cycles based on real-time communication requirements and environmental conditions. The authors utilize simulation-based methods to compare multiple transmission schemes in terms of their energy efficiency and network reliability. The mathematical framework incorporates models for energy consumption as a function of packet size, transmission frequency, and satellite–ground link distance. Additionally, Markov models are used to simulate duty cycle transitions and assess network availability. The findings demonstrate that optimizing these parameters can significantly prolong the operational lifetime of IoT devices in satellite networks and make LoRaWAN a highly viable protocol for energy-constrained space-based IoT deployments. Ref. [

28] proposes a novel uplink scheduling strategy for LoRaWAN-based D2S IoT systems, termed Beacon-based Uplink LoRaWAN (BU-LoRaWAN). The method leverages the Class B synchronization framework of LoRaWAN, utilizing periodic beacons transmitted by satellites to synchronize ground devices. By aligning transmissions within beacon-defined time slots, the scheme significantly reduces packet collisions and improves spectral efficiency, particularly in contention-heavy, low-duty-cycle uplink scenarios. Simulation results demonstrate that BU-LoRaWAN outperforms standard ALOHA-based LoRaWAN protocols in terms of delivery probability and latency under typical LEO orbital conditions. The study also discusses practical implementation challenges such as beacon visibility duration, orbital scheduling, and ground terminal hardware constraints. The proposed protocol is shown to be especially advantageous for delay-tolerant IoT applications in remote and infrastructure-less regions, supporting scalability and efficient utilization of limited satellite resources. Ref. [

29] provides an in-depth analysis of the SALSA solution, which uses a first-come-first-served approach to assign time slots according to device visibility. Its performance degrades in dense deployments where many devices have limited visibility windows. The authors propose two methods to enhance uplink efficiency: exploiting multiple frequency channels and allowing transmissions to be reordered within a visibility period. The resulting scheduling strategy accounts for the availability of H orthogonal frequency channels. Simulation results show that uplink efficiency increases with the number of channels, reaching improvements of nearly 80%, and that combining both strategies maintains efficiency above 50% even under high-density conditions. Ref. [

30] focuses on LoRa communications as an energy-efficient and cost-effective solution for low-power wide-area networks. Conventional terrestrial LoRa networks, however, are limited in their ability to provide ubiquitous coverage, particularly in remote and rural areas. To address these limitations, LoRa-based LEO satellite IoT has emerged as a promising approach. The paper presents a novel analytical framework based on spherical stochastic geometry (SG) to characterize the uplink access probability in LoRa-based LEO satellite IoT systems. Multiple classes of end-devices are modeled as independent Poisson point processes. Both the unique features of LoRa networks and the channel characteristics of near-Earth satellite links are incorporated. Closed-form expressions for the uplink access probability are derived using the Laplace transform of the aggregated interference. In [

31] the optimization of the management of computational tasks in IoT networks supported by LEO satellites is analyzed. The idea is based on edge computing, which is able to reduce latency and improve energy efficiency. A methodological framework is proposed to strategically balance the communication–computation trade-off by distributing tasks across multiple satellites. Four allocation strategies are analyzed. Two fixed approaches, assigning all tasks either to the nearest satellite or to a terrestrial cloud infrastructure, serve as benchmarks. Two adaptive strategies exploit satellite capacity information to optimize task distribution along the path between IoT devices and the cloud. Numerical results demonstrate that the adaptive strategies significantly enhance system efficiency compared to conventional methods, while providing flexibility and scalability.

7. Simulations and Performance Analysis

7.1. Simulations

Table 4 summarizes the key input parameters used to simulate a LoRa-based D2S communication link. The values are chosen to reflect realistic constraints and configurations of current LEO satellite communication systems, particularly for IoT and LPWAN. A carrier frequency of 2.1 GHz is selected, which falls within the S-band, a band often used in satellite-based IoT applications owing to its favorable propagation characteristics, including lower atmospheric attenuation than higher frequencies [38]. The simulated distance range spans from 500 km to 2000 km, reflecting realistic LEO satellite altitudes. The LoRa bandwidth is fixed at 125 kHz, as this setting provides a good trade-off between coverage and energy efficiency and is supported by commercial LoRa transceivers such as the Semtech LR1121. Spreading Factors (SF) from 7 to 12 are considered to assess the trade-off between coverage and data rate. As the SF increases, the symbol duration becomes longer, which improves the link budget but reduces the bitrate [

39]. The simulation uses a standard free-space path loss model to evaluate signal attenuation as a function of distance and frequency. Thermal noise power is calculated using the well-established formula $N = kTB$, where $k$ is Boltzmann's constant, $T$ is the system noise temperature set at 290 K, and $B$ is the receiver bandwidth. The transmit power is assumed to be +22 dBm, which aligns with the maximum allowed power output of many commercial LoRa modules operating in satellite uplink scenarios [

40].
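As a rough illustration of how these parameters combine, the sketch below evaluates the thermal noise floor $N = kTB$ for T = 290 K and B = 125 kHz and the resulting SNR for a given path loss. The function names are hypothetical, and the antenna gains and receiver noise figure are assumed to be 0 dB, which the setup described here does not specify.

```python
import math

BOLTZMANN = 1.380649e-23  # J/K, exact SI value


def thermal_noise_dbm(temperature_k: float, bandwidth_hz: float) -> float:
    """Thermal noise power N = k*T*B, converted from watts to dBm."""
    noise_w = BOLTZMANN * temperature_k * bandwidth_hz
    return 10 * math.log10(noise_w) + 30  # dBW -> dBm


def snr_db(tx_power_dbm: float, path_loss_db: float, noise_dbm: float,
           antenna_gains_db: float = 0.0) -> float:
    """Received SNR in dB; antenna_gains_db lumps Tx and Rx gains (assumed 0 dB)."""
    return tx_power_dbm + antenna_gains_db - path_loss_db - noise_dbm


noise = thermal_noise_dbm(290.0, 125e3)
print(f"noise floor: {noise:.2f} dBm")
print(f"SNR for 152.87 dB path loss: {snr_db(22.0, 152.87, noise):.2f} dB")
```

Under these assumptions the noise floor is about -123.0 dBm, and a +22 dBm transmitter facing 152.87 dB of path loss yields an SNR of roughly -7.9 dB. Any antenna gain or noise figure would shift this value accordingly.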

7.2. Analysis of Results

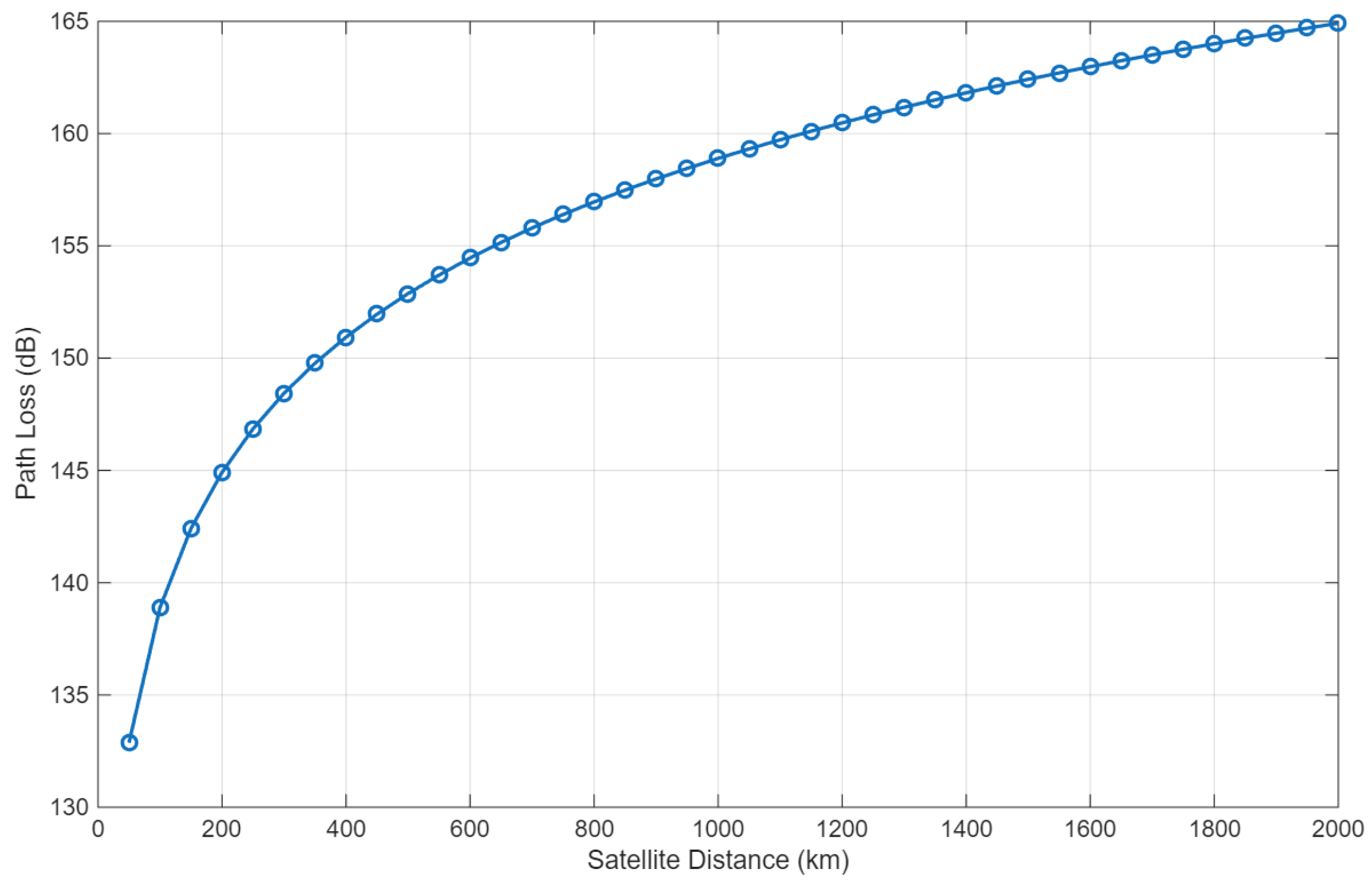

Figure 3 illustrates the relationship between Path Loss (PL) and satellite distance, based on the free-space path loss model.

Path loss increases logarithmically with distance, which is typical for radio wave propagation in free space. The model considers factors such as the transmission frequency (2.1 GHz in this case), the speed of light, and the distance between the transmitter and receiver.

Figure 3 shows the free-space path loss (PL) as a function of the satellite-to-receiver distance, ranging from 0 km up to 2000 km at a carrier frequency of 2.1 GHz. As expected, the path loss increases monotonically with distance, reflecting the inverse-square law of free-space propagation. As the distance approaches 0 km, the path loss tends to $-\infty$ dB, which corresponds to the singularity of the logarithmic path loss formula. At 50 km, the path loss is approximately 132.87 dB, at 500 km it reaches 152.87 dB, and at the maximum simulated distance of 2000 km it is about 164.91 dB. These high path loss values quantitatively demonstrate the significant signal attenuation encountered in long-range satellite links. Such attenuation directly impacts the transmit power, antenna gain, and receiver sensitivity required to maintain reliable communication. Because the dependence is logarithmic, each tenfold increase in distance adds 20 dB of loss, so the received signal remains strongly sensitive to distance. As the path loss grows, the received signal strength diminishes, which degrades the signal quality and can significantly affect the reliability of the communication link. The smooth logarithmic increase of path loss with distance is consistent with the free-space path loss model for line-of-sight satellite channels, in which multipath effects, shadowing, and atmospheric losses are assumed negligible. The obtained values are crucial for system design, as they feed link budget calculations, the selection of spreading factors in LoRa LR-FHSS, and the estimation of the maximum achievable communication range at an acceptable BER.
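The quoted path loss values can be checked with a minimal sketch of the standard free-space formula, $PL = 20\log_{10}(4\pi d f / c)$. The function name is hypothetical, and returning $-\infty$ at zero distance is a choice made here to mirror the singularity mentioned above.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def fspl_db(distance_m: float, frequency_hz: float) -> float:
    """Free-space path loss 20*log10(4*pi*d*f/c) in dB; -inf at d = 0."""
    if distance_m == 0:
        return -math.inf
    return 20 * math.log10(4 * math.pi * distance_m * frequency_hz / SPEED_OF_LIGHT)


for d_km in (50, 500, 2000):
    print(f"{d_km:5d} km -> {fspl_db(d_km * 1e3, 2.1e9):.2f} dB")
```

At 2.1 GHz this reproduces 132.87 dB, 152.87 dB, and 164.91 dB for 50 km, 500 km, and 2000 km, respectively.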

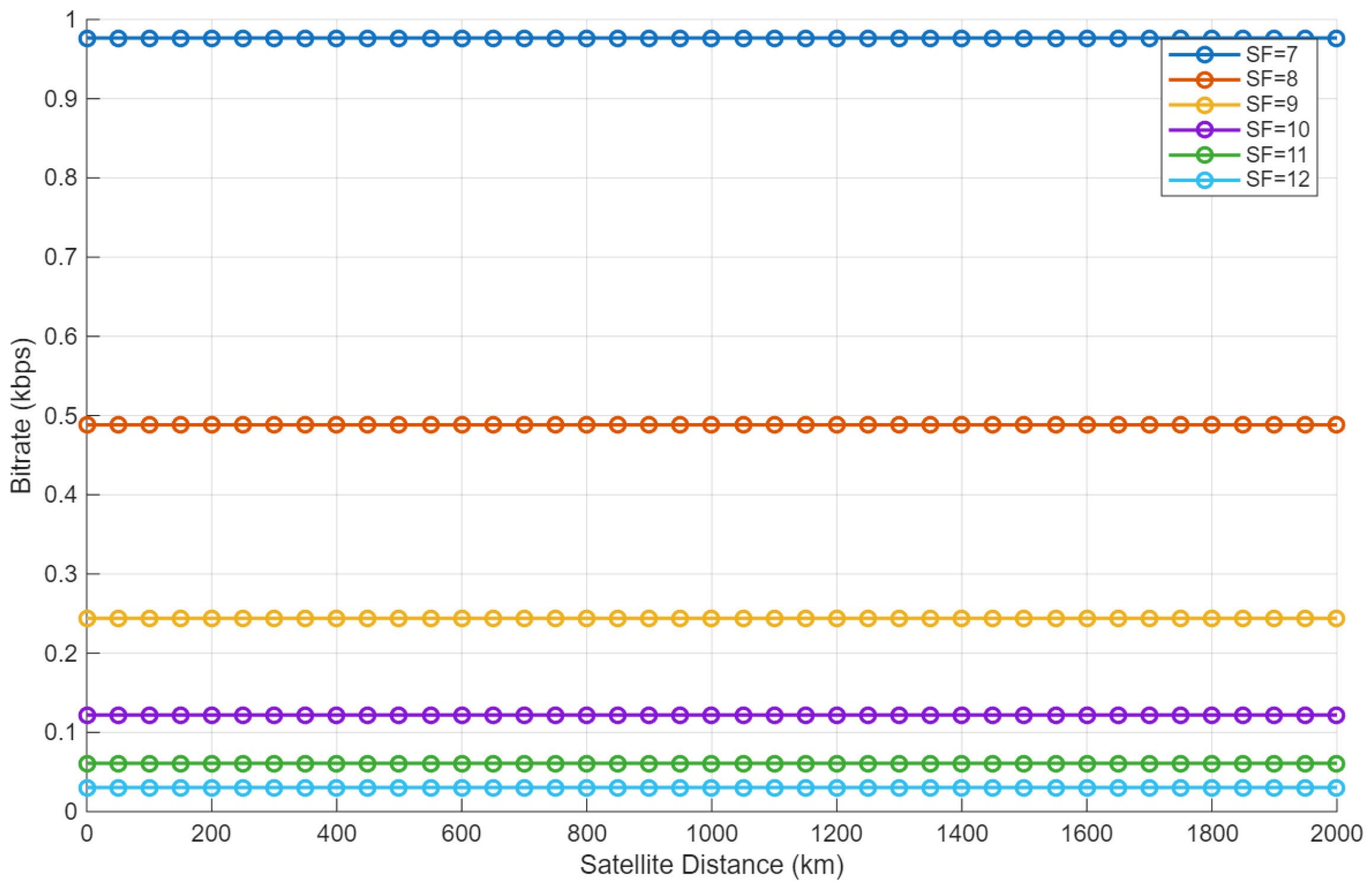

Figure 4 demonstrates the theoretical achievable bitrate for different spreading factors (SFs) as a function of satellite distance.

The figure shows that the bitrate decreases as the spreading factor increases, a key characteristic of LoRa communication systems. As expected, the decrease is exponential in the SF, reflecting the trade-off inherent in LoRa modulation between data rate and link robustness. Specifically, SF7 provides the highest bitrate of approximately 0.98 kbps, while SF12 achieves only 0.03 kbps. This reduction in bitrate with higher SFs is due to the increased symbol duration and greater spreading, which enhance resilience against noise and interference but reduce spectral efficiency. Therefore, higher SFs are typically used for longer satellite-to-receiver distances or for scenarios with lower SNR, ensuring reliable communication, whereas lower SFs can be employed at shorter distances where the SNR is sufficiently high to maintain data integrity. Higher SFs thus improve the robustness of the signal at the cost of a lower data rate. This trade-off is essential for designing LoRa-based satellite systems, where the demand for high bitrate must be balanced against the need for long-range, low-power communication, and it makes the choice of SF for a given operational distance a critical design decision.
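The exponential dependence on SF can be made concrete with a short sketch. The values of 0.98 kbps for SF7 and 0.03 kbps for SF12 coincide with $BW/2^{SF}$ at 125 kHz, which is the LoRa symbol rate; that this is the expression behind Figure 4 is an inference, not something stated in the text. The commonly cited raw LoRa bit rate additionally multiplies by SF bits per symbol and by the coding rate, which is left at 1 here as an assumption. Function names are hypothetical.

```python
BANDWIDTH_HZ = 125e3  # LoRa bandwidth used in the simulation setup


def symbol_rate(sf: int, bandwidth_hz: float = BANDWIDTH_HZ) -> float:
    """LoRa symbol rate BW / 2^SF in symbols per second."""
    return bandwidth_hz / 2 ** sf


def raw_bit_rate(sf: int, bandwidth_hz: float = BANDWIDTH_HZ,
                 coding_rate: float = 1.0) -> float:
    """Commonly cited LoRa bit rate SF * BW / 2^SF * CR in bits per second."""
    return sf * symbol_rate(sf, bandwidth_hz) * coding_rate


for sf in range(7, 13):
    print(f"SF{sf}: {symbol_rate(sf) / 1e3:.2f} ksym/s, "
          f"{raw_bit_rate(sf) / 1e3:.2f} kbps raw")
```

Each increment of the SF halves the symbol rate, so going from SF7 to SF12 reduces it by a factor of 32.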

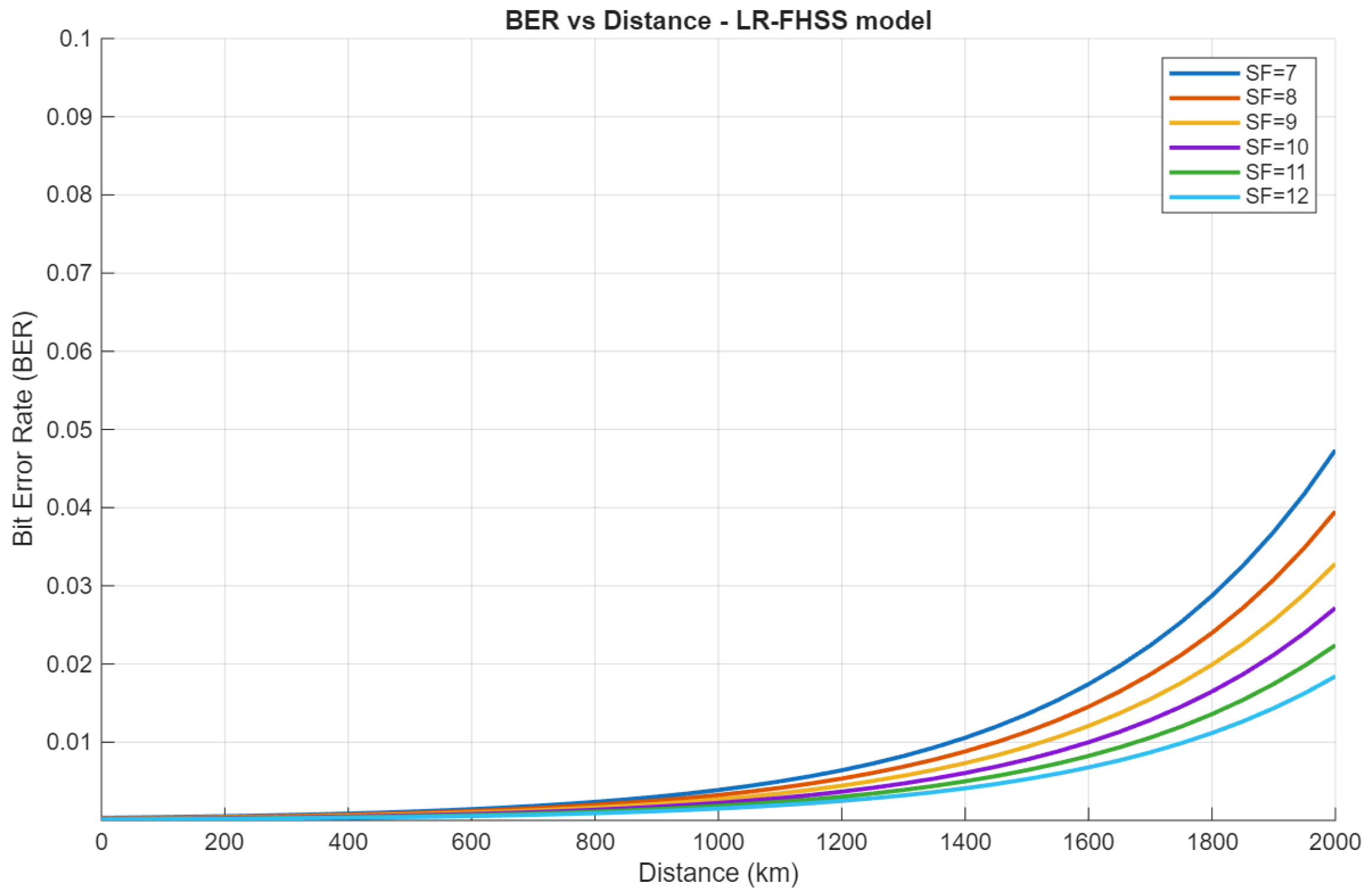

Figure 5 illustrates the BER of LR-FHSS communication links as a function of satellite distance (from 0 to 2000 km) for various SFs.

BER is a critical performance metric in communication systems, representing the fraction of incorrectly received bits relative to the total number of transmitted bits. As the satellite distance increases, the quality of the received signal deteriorates, leading to a higher BER. A consistent trend is observable: the BER increases with distance for all SFs, reflecting the progressive signal degradation due to path loss, interference, and other channel impairments. For short distances (0–200 km), the BER values are very low, indicating reliable communication across all SFs. As the distance increases, the BER rises almost linearly on the logarithmic scale up to 2000 km. This growth is more pronounced for lower Spreading Factors (SF7–SF9), which are associated with higher data rates but reduced resilience to noise and fading. Conversely, higher Spreading Factors (SF10–SF12) exhibit consistently lower BER at the same distance, confirming their stronger robustness against channel impairments, albeit at the cost of reduced spectral efficiency. Quantitatively, at a distance of 1000 km, moving from SF7 to SF12 reduces the BER by nearly a factor of 2–3 under the same channel conditions. The trend continues across the entire distance range: at 2000 km the BER of SF12 remains below that of SF7, demonstrating the effectiveness of larger spreading factors in maintaining communication reliability over long distances. The results are consistent with the underlying LR-FHSS model. In this context, the increase in BER with distance reflects the reduction of the received SNR below the SF-specific threshold. Higher Spreading Factors correspond to lower threshold values, which explains their superior performance at longer distances.
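The role of an SF-specific SNR threshold can be sketched as a simple lookup. The thresholds below are the demodulator SNR limits commonly quoted in Semtech LoRa transceiver datasheets; they are an assumption for illustration and are not taken from this study's BER model. The function names are hypothetical.

```python
# Commonly quoted LoRa demodulator SNR limits in dB (assumed, not from this study).
SNR_THRESHOLD_DB = {7: -7.5, 8: -10.0, 9: -12.5, 10: -15.0, 11: -17.5, 12: -20.0}


def link_margin_db(snr_db: float, sf: int) -> float:
    """Margin of the received SNR above the threshold of the given SF."""
    return snr_db - SNR_THRESHOLD_DB[sf]


def lowest_usable_sf(snr_db: float):
    """Return the smallest SF whose threshold is met, or None if none is."""
    for sf in sorted(SNR_THRESHOLD_DB):
        if link_margin_db(snr_db, sf) >= 0:
            return sf
    return None


for snr in (0.0, -7.9, -19.9, -25.0):
    print(f"SNR {snr:6.1f} dB -> lowest usable SF: {lowest_usable_sf(snr)}")
```

With these assumed limits, each SF step buys 2.5 dB of additional tolerance, which is why the higher SFs remain usable at distances where the lower ones fall below threshold.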

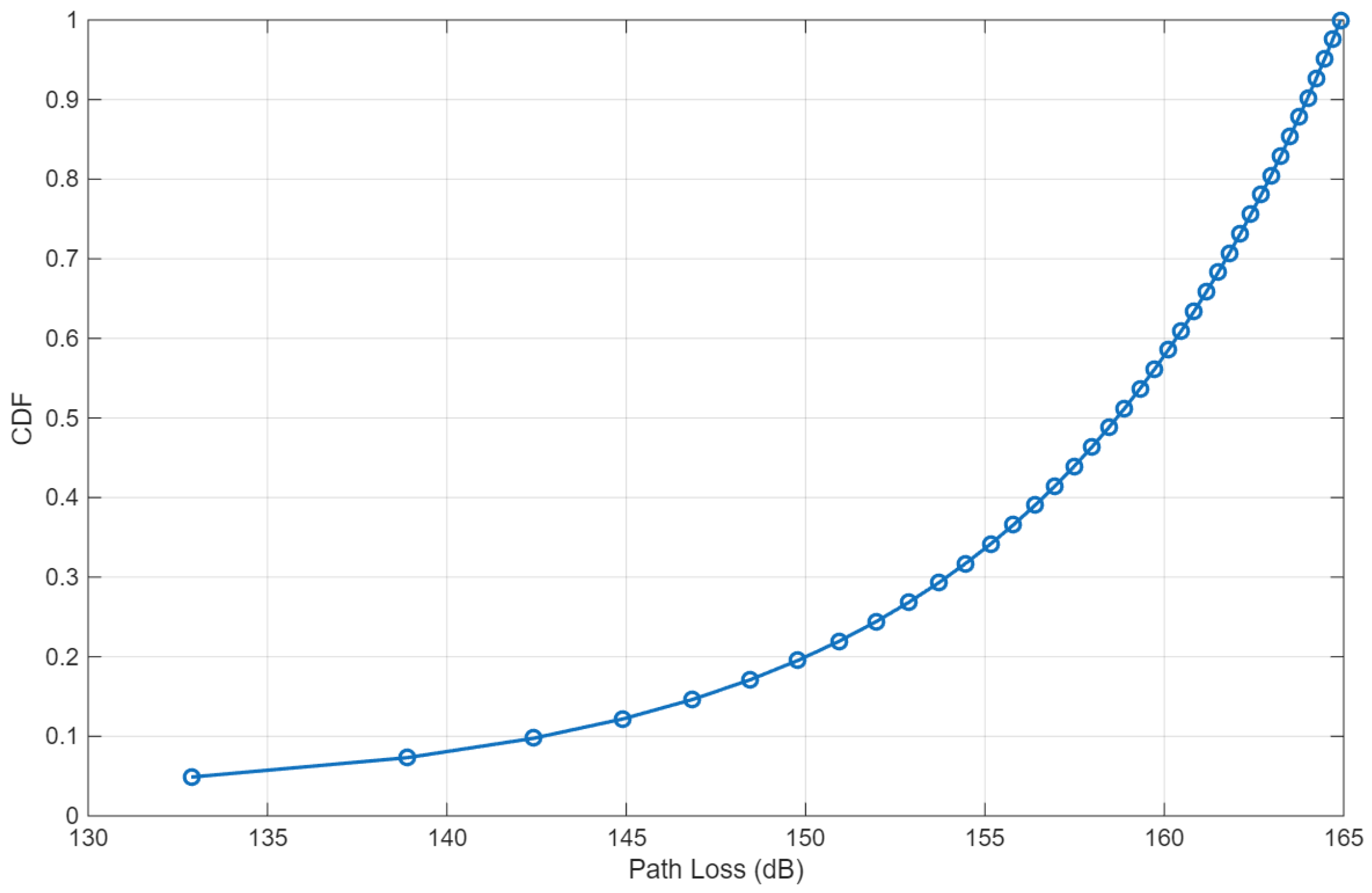

Figure 6 presents the Cumulative Distribution Function (CDF) of the path loss as a function of satellite distance.

The CDF describes the distribution of path loss values over the simulated distances, indicating how likely it is that the path loss stays at or below a given value. As the satellite distance increases, the path loss shifts to higher values. The CDF increases monotonically from 0 to 1, as expected for a cumulative probability distribution. At zero distance, the path loss is singular ($-\infty$ dB), corresponding to the logarithmic singularity in the free-space path loss formula. From 50 km onward, the path loss reaches approximately 132.87 dB at 50 km, 152.87 dB at 500 km, and 164.91 dB at 2000 km. These values reflect the significant attenuation encountered in long-range satellite links. The CDF values give the probability of encountering a given path loss in the system. For instance, a path loss of 157.48 dB corresponds to a cumulative probability of 0.4390, indicating that approximately 44% of the distance samples experience a path loss at or below this threshold. Similarly, 164.00 dB corresponds to a cumulative probability of 0.9024, meaning that about 90% of the distance samples result in a path loss at or below this level. The plot also emphasizes the need for robust error correction and power management strategies, particularly at greater distances where path loss becomes more severe.
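The cumulative probabilities quoted above are consistent with an empirical CDF taken over a uniform distance grid. The sketch below assumes 41 samples from 0 to 2000 km in 50 km steps; this grid is inferred from the quoted probabilities (18/41 and 37/41) and is not stated in the text. Function names are hypothetical.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def fspl_db(distance_m: float, frequency_hz: float) -> float:
    """Free-space path loss in dB; -inf at zero distance."""
    if distance_m == 0:
        return -math.inf
    return 20 * math.log10(4 * math.pi * distance_m * frequency_hz / SPEED_OF_LIGHT)


def empirical_cdf(samples):
    """Pair each sorted sample with its cumulative probability rank / N."""
    ordered = sorted(samples)
    n = len(ordered)
    return [(value, (rank + 1) / n) for rank, value in enumerate(ordered)]


# Assumed grid: 0 to 2000 km in 50 km steps (41 samples).
losses = [fspl_db(d_km * 1e3, 2.1e9) for d_km in range(0, 2001, 50)]
cdf = empirical_cdf(losses)

for index in (17, 36):  # samples at 850 km and 1800 km
    value, prob = cdf[index]
    print(f"PL <= {value:.2f} dB with probability {prob:.4f}")
```

On this grid the sample at 850 km gives 157.48 dB with a cumulative probability of 0.4390, and the sample at 1800 km gives 164.00 dB with 0.9024, matching the values in the text.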

Figure 7 presents the CDF of the BER for various SFs at different satellite distances.

This plot illustrates how the error rates are distributed across the simulated distances for each SF. The CDF of the BER for LoRa LR-FHSS transmissions at different spreading factors (SF = 7–12) gives the probability of experiencing a given error rate across the satellite link distances considered. For SF = 7, the CDF increases smoothly from 0 to 1 over the observed BER range. The lowest BER values occur only at short distances and therefore account for a small share of the samples, while higher BER values dominate at larger distances, reflecting the expected degradation of link quality with increasing path loss. Similar trends are observed for SF = 8, which shows slightly improved performance compared to SF = 7 due to the increased spreading gain. As the spreading factor increases (SF = 9–12), the minimum BER decreases further. The corresponding CDF curves demonstrate a more gradual increase, indicating that lower BER values are more probable and reflecting the enhanced error resilience associated with higher spreading factors. This trend confirms the well-known trade-off in LoRa modulation: a higher SF provides stronger processing gain and lower BER, but at the cost of a reduced data rate. Quantitatively, the median BER (CDF = 0.5) decreases from SF = 7 to SF = 12, so that for the more robust SFs half of the distance samples experience a lower BER, implying more reliable communication over long-range satellite links. These CDF results are crucial for system design, as they allow estimation of the probability of achieving a target BER for a given SF and distance, supporting link budget analysis and the selection of appropriate SFs to maintain reliable connectivity under varying propagation conditions.