1. Introduction

As the modern automotive industry continues to thrive, cars have firmly established themselves as an essential mode of daily transportation. However, this progress has been accompanied by a growing concern for traffic safety, especially due to the alarming rise in traffic accidents caused by distracted driving. These accidents have emerged as a significant threat to road safety, even surpassing those caused by drunk driving and speeding. Distracted driving encompasses visual, cognitive, and manual distractions, all of which severely diminish the driver’s focus on the road, thereby increasing the likelihood of accidents. In response to this challenge, the effective detection of distracted driving behaviors has become a focal point of research. Traditional detection methods, which often rely on sensors such as eye trackers and head motion sensors, are hindered by their high costs and complex installation requirements, limiting their practical application.

In recent years, deep learning-based computer vision techniques have achieved remarkable advances in distracted driving detection. YOLO-based networks, known for their high computational efficiency and strong detection performance, have become a dominant approach in this area. Nevertheless, existing methods for distracted driving detection still face certain limitations. For instance, the deep learning-based method proposed by Li Bo et al. [1] attains high detection accuracy but suffers from high model complexity, making it difficult to deploy on resource-limited embedded devices. Qi Kang et al. [2] used an enhanced YOLOv4-tiny model to reduce computational complexity, at the expense of detection accuracy. Renxiang Chen et al. [3] improved the YOLOv5 model to boost detection accuracy, yet the model retains a high parameter count and high computational complexity, and struggles to meet real-time detection demands. Zhihui Mao et al. [4] reviewed YOLO-based object detection research for autonomous driving, highlighting the need for further optimization in balancing model lightness with accuracy. Current comparisons often do not consider recent multi-modal or gaze-integrated detection approaches, which could further enhance distracted driving recognition beyond visual cues alone (e.g., combining gaze cues and step recognition for assembly [5]). Although this paper focuses on visual detection using YOLOv8, such multi-modal extensions offer a promising direction for future research. To tackle these issues, this paper introduces a lightweight distracted driving detection method based on YOLOv8. By incorporating the FasterNet architecture, the PConv module, the NAM attention mechanism, and the Focal-EIoU loss function, the proposed method substantially reduces the model's parameter count and computational complexity while preserving high detection accuracy. The primary contributions of this paper are as follows:

A lightweight distracted driving detection model based on YOLOv8, named YOLOv8s-FPNE, which significantly diminishes the model’s parameter size and computational complexity.

The integration of FasterNet, PConv, NAM attention mechanism, and Focal-EIoU loss function to enhance detection accuracy and efficiency.

A comprehensive series of experiments conducted on publicly available datasets to validate the effectiveness and superiority of the proposed method.

2. Introduction to YOLO

YOLOv8 is a recent generation of the YOLO series of object detection algorithms. It builds upon the strengths of its predecessors, such as fast inference and high detection accuracy, while introducing enhancements that improve performance in complex scenarios. YOLOv8 controls model complexity through depth and width scaling factors, offering several versions (YOLOv8-N, YOLOv8-S, YOLOv8-M, YOLOv8-L, and YOLOv8-X) to suit different computational budgets.

The YOLOv8 architecture is composed of four main components: the input layer, the backbone network, the neck network, and the detection head. YOLOv8 accepts input images of fixed dimensions (typically 640 × 640 × 3) and performs preprocessing tasks such as resizing to standard dimensions and normalization. This ensures that the input data is compatible with the model’s structure and parameter settings, thereby maintaining both detection efficiency and accuracy.
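As a rough illustration of this preprocessing step, the sketch below computes letterbox geometry (scale the image to fit 640 × 640 while keeping its aspect ratio, then pad the remainder) and normalizes pixel values to [0, 1]. Letterboxing with centered padding is an assumption about the preprocessing pipeline, and the function names are hypothetical; the exact rounding and padding rules of a given YOLOv8 implementation may differ.

```python
def letterbox_geometry(src_w: int, src_h: int, dst: int = 640):
    """Scale factor, resized size, and (left, top, right, bottom) padding
    for fitting a src_w x src_h image into a dst x dst square (assumed centered)."""
    scale = min(dst / src_w, dst / src_h)          # preserve aspect ratio
    new_w, new_h = round(src_w * scale), round(src_h * scale)
    pad_w, pad_h = dst - new_w, dst - new_h        # leftover area to fill
    left, top = pad_w // 2, pad_h // 2             # center the resized image
    return scale, (new_w, new_h), (left, top, pad_w - left, pad_h - top)


def normalize_pixels(row):
    """Map 8-bit pixel values to the [0, 1] range."""
    return [v / 255.0 for v in row]


if __name__ == "__main__":
    print(letterbox_geometry(640, 480))  # (1.0, (640, 480), (0, 80, 0, 80))
    print(normalize_pixels([0, 255]))    # [0.0, 1.0]
```

For a 640 × 480 frame, no rescaling is needed and 80 rows of padding are added above and below the image.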

YOLOv8's backbone builds on the CSPNet (Cross-Stage Partial Network) design to minimize redundant computation while preserving high detection accuracy. The backbone's primary role is to extract multi-level feature maps through convolution operations. In the neck, YOLOv8 employs FPN (Feature Pyramid Network) and PAN (Path Aggregation Network) structures, which fuse multi-scale features so that the network can detect objects of various sizes and remain robust for small objects and complex backgrounds.

In the detection head, YOLOv8 introduces an anchor-free detection approach, dispensing with the traditional anchor-based mechanism to make detection more flexible and efficient. The head is responsible for predicting the locations and categories of objects based on the feature maps output by the neck, directly yielding the detection results.
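To make the anchor-free idea concrete, the sketch below decodes one prediction in the style commonly used by YOLOv8 implementations: the center of each grid cell acts as an anchor point, the head predicts the distances to the left, top, right, and bottom box edges (in grid units) as a discrete distribution over reg_max = 16 bins (Distribution Focal Loss), and the expected distance is scaled by the feature-map stride. The bin count, function names, and single-box scope are assumptions for illustration.

```python
import math


def dfl_expectation(logits):
    """Expected distance from a discrete distribution over bins 0..len(logits)-1."""
    m = max(logits)                                   # subtract max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return sum(i * e / total for i, e in enumerate(exps))


def decode_box(grid_x, grid_y, stride, ltrb_logits):
    """Turn per-side bin logits at one grid cell into an (x1, y1, x2, y2) box in pixels."""
    ax, ay = grid_x + 0.5, grid_y + 0.5               # anchor point = cell center (grid units)
    l, t, r, b = (dfl_expectation(side) for side in ltrb_logits)
    return ((ax - l) * stride, (ay - t) * stride, (ax + r) * stride, (ay + b) * stride)


def peaked(bin_idx, reg_max=16):
    """Hypothetical helper: logits sharply peaked at one bin."""
    return [50.0 if i == bin_idx else 0.0 for i in range(reg_max)]


if __name__ == "__main__":
    # cell (10, 5) on a stride-8 feature map, distances 2, 1, 2, 1 grid units
    print(decode_box(10, 5, 8, [peaked(2), peaked(1), peaked(2), peaked(1)]))
```

No anchor box shapes are involved: the box is recovered entirely from the cell position, the stride, and the four predicted distances.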

3. YOLO Algorithm Improvements

To meet the need for a low parameter count and low computational complexity in distracted driving detection, this paper presents an enhanced version of YOLOv8s with improvements in four key areas. First, the FasterBlock [6] replaces the Bottleneck in C2f. This modification extracts spatial features efficiently by reducing redundant computation and memory access, significantly lowering the parameter count and computational complexity. Second, PConv is integrated into the detection head. By convolving only part of the input channels and following this with PWConv (pointwise convolution), the head still uses information from the entire feature map while reducing computational and memory overhead. Third, the NAM [7] attention mechanism is incorporated, which applies a sparsity penalty to the weights of the attention module. This makes the computation more efficient while preserving the model's performance. Finally, the Focal-EIoU [8] loss function replaces the CIoU loss function of the original model, accelerating convergence and improving detection accuracy.

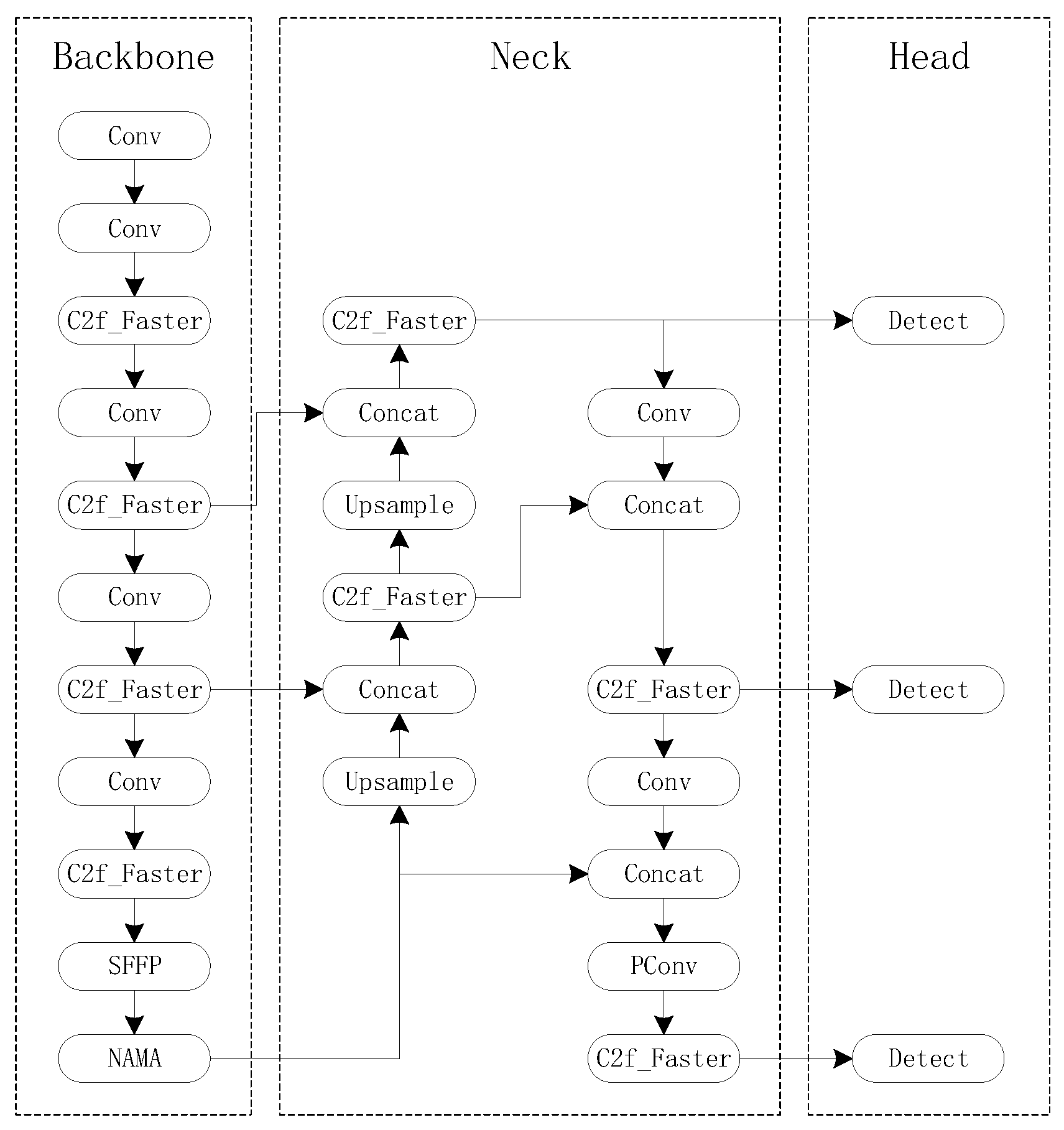

The network architecture of the improved YOLOv8s-FPNE model is depicted in Figure 1.

3.1. Integration of FasterNet

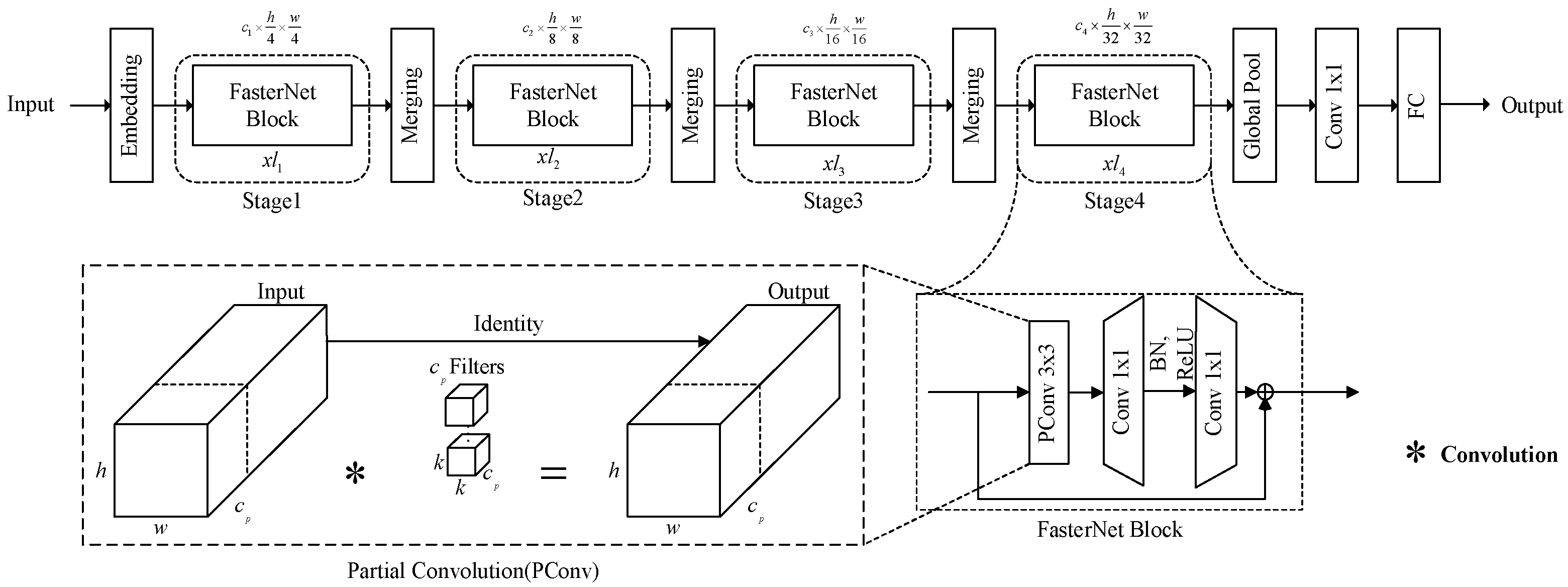

FasterNet is a lightweight network architecture designed to improve the efficiency of neural networks by reducing redundant computation, as illustrated in Figure 2. It is particularly suitable for resource-constrained settings, such as edge computing devices and embedded systems.

Conventional lightweight networks generally rely on depthwise convolutions (DWConv) or group convolutions (GConv) to extract spatial features. Although these approaches reduce FLOPs (floating-point operations), they often incur additional memory access overhead. MicroNet [9] goes further by decomposing and sparsifying the network to drive FLOPs down to extremely low levels. However, despite this breakthrough in FLOP reduction, its computational efficiency in practice is suboptimal, and it often requires additional data manipulations such as concatenation, shuffling, and pooling, which substantially slow down small models at runtime. In contrast, FasterNet optimizes the computational pathway by introducing PConv, which reduces both redundant computation and memory access and achieves strong performance across classification, detection, and segmentation tasks, with lower latency and higher throughput.

Compared with traditional convolution, PConv substantially reduces computational overhead while preserving the quality of feature extraction. Deep convolutional neural networks (CNNs) typically demand considerable computational resources when processing images, because a standard convolution processes every input channel at every spatial position, which produces heavy computation and memory-access loads. In real-time applications such as distracted driving detection, each frame must be analyzed quickly to keep the system responsive. FasterNet is designed to minimize superfluous computation without compromising accuracy, enabling fast and efficient processing of the input.

FasterNet employs PConv to curtail computation. The principal distinction between PConv and traditional convolution lies in the fact that PConv conducts convolution operations solely on a subset of the input channels. Traditional convolution operations apply the same convolution to all input channels, implying that even if the features of certain channels exert minimal influence on subsequent detection, computational resources are still squandered. PConv adeptly diminishes redundant computation by selectively applying convolution operations.

PConv is motivated by the observation that feature maps are highly redundant across channels: different channels often carry very similar information. In FasterNet [6], PConv therefore applies a standard convolution to only a contiguous subset of c_p input channels (the first or last ones, with c_p = c/4 by default) and leaves the remaining channels unchanged as an identity mapping. For a k × k kernel on an h × w feature map, this lowers the FLOPs from h × w × k² × c² to h × w × k² × c_p², i.e., to 1/16 of a regular convolution when c_p = c/4, and reduces memory access to roughly a quarter. Fewer convolution computations and fewer memory accesses together improve the model's computational efficiency.
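The cost comparison from the FasterNet paper [6] can be checked with simple arithmetic. The sketch below counts operations the way [6] does (h × w × k² × c² multiply-accumulates for a regular convolution with c input and c output channels) and estimates memory access as feature-map reads and writes plus kernel weights. The feature-map size (80 × 80 × 128) is an arbitrary example rather than a layer taken from YOLOv8s-FPNE, and the function names are hypothetical.

```python
def conv_flops(h: int, w: int, k: int, c: int) -> int:
    """Multiply-accumulates of a regular k x k conv with c input and c output channels."""
    return h * w * k * k * c * c


def conv_memory_access(h: int, w: int, k: int, c: int) -> int:
    """Read + write one h x w x c feature map, plus the k x k x c x c kernel."""
    return h * w * 2 * c + k * k * c * c


def pconv_costs(h: int, w: int, k: int, c: int, ratio: float = 0.25):
    """PConv applies the regular conv to only c_p = ratio * c channels;
    the other channels are passed through untouched and are not counted here."""
    c_p = int(c * ratio)
    return conv_flops(h, w, k, c_p), conv_memory_access(h, w, k, c_p)


if __name__ == "__main__":
    h = w = 80
    k, c = 3, 128
    p_flops, p_mem = pconv_costs(h, w, k, c)
    print(p_flops / conv_flops(h, w, k, c))          # 0.0625, i.e. 1/16
    print(p_mem / conv_memory_access(h, w, k, c))    # roughly 0.23, close to 1/4
```

The FLOP ratio is exactly (c_p/c)², while the memory-access ratio only approaches c_p/c because the kernel weights add a small extra term.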

The C2f module in YOLOv8, a variant of the Cross-Stage Partial design, serves as the feature extraction module in the original structure. C2f reduces redundant computation by splitting the feature channels and reusing feature information across stages. Although it performs well in YOLOv8, its computational complexity remains relatively high, especially with high-resolution inputs, which substantially increases the model's FLOPs (floating-point operations).

In this work, FasterNet blocks replace the Bottleneck blocks inside YOLOv8's C2f modules (denoted C2f-Faster). By exploiting FasterNet's lightweight architecture and the efficient computation of PConv, this substitution markedly reduces the model's FLOPs and memory bandwidth consumption, at the cost of only a slight decrease in detection accuracy when used alone (see the ablation study in Section 4.4), enabling the model to run efficiently on embedded devices and in edge computing scenarios.

3.2. PConv

PConv (Partial Convolution) is an optimized convolution operation designed to reduce redundant computation in convolutional neural networks, and it is especially suitable for high-resolution image processing tasks such as object detection and distracted driving detection. Whereas traditional convolution performs identical computations across every input channel, PConv confines its convolution to a subset of the input channels. This effectively reduces computation and memory bandwidth consumption while maintaining strong feature extraction capability.

When convolutional neural networks process images, they produce multi-channel feature maps whose channels are often highly similar, so performing the same convolution on all channels causes significant redundant computation. PConv avoids this by applying a standard convolution to only a subset of the channels of the input feature map, while the remaining channels are passed through unchanged; a subsequent pointwise (1 × 1) convolution then mixes information across all channels.
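A toy forward pass may help make this concrete. The sketch below, written in plain Python on nested lists so that it runs without a deep learning framework, applies a 3 × 3 convolution to the first c_p channels only, copies the remaining channels unchanged, and then applies a pointwise (1 × 1) convolution that mixes all channels, which is the PConv-then-PWConv pattern used in FasterNet blocks. The tensor layout ([channel][row][col]), function names, and weights are illustrative assumptions; real implementations use framework tensors, batch normalization, and activations.

```python
from typing import List

Tensor = List[List[List[float]]]  # [channel][row][col], batch dimension omitted


def conv3x3(x: Tensor, weight) -> Tensor:
    """Dense 3x3 conv, stride 1, zero padding, no bias. weight[out][in][dy][dx]."""
    h, w = len(x[0]), len(x[0][0])
    out = [[[0.0] * w for _ in range(h)] for _ in weight]
    for o, w_o in enumerate(weight):
        for i, ch in enumerate(x):
            for y in range(h):
                for xc in range(w):
                    for dy in range(3):
                        for dx in range(3):
                            yy, xx = y + dy - 1, xc + dx - 1
                            if 0 <= yy < h and 0 <= xx < w:
                                out[o][y][xc] += w_o[i][dy][dx] * ch[yy][xx]
    return out


def pconv(x: Tensor, weight, c_p: int) -> Tensor:
    """Convolve the first c_p channels; copy the remaining channels unchanged."""
    untouched = [[row[:] for row in ch] for ch in x[c_p:]]
    return conv3x3(x[:c_p], weight) + untouched


def pwconv(x: Tensor, weight) -> Tensor:
    """1x1 conv: each output channel is a weighted sum of all input channels. weight[out][in]."""
    h, w = len(x[0]), len(x[0][0])
    return [[[sum(w_o[i] * x[i][y][xc] for i in range(len(x))) for xc in range(w)]
             for y in range(h)] for w_o in weight]


if __name__ == "__main__":
    # 4 channels of 3x3 features; PConv touches only channel 0 (c_p = 1)
    x = [[[float(c * 9 + r * 3 + col) for col in range(3)] for r in range(3)] for c in range(4)]
    doubling_kernel = [[[[0, 0, 0], [0, 2, 0], [0, 0, 0]]]]  # 1 out, 1 in: scale by 2
    y = pconv(x, doubling_kernel, c_p=1)
    z = pwconv(y, [[1.0, 1.0, 1.0, 1.0]])                  # sum over all 4 channels
    print(y[0][1][1], z[0][0][0])                           # 8.0 54.0
```

Only channel 0 is convolved, yet every output of the pointwise stage still depends on all four channels, which is how the block keeps using the whole feature map.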

In the YOLOv8s-FPNE model, PConv is predominantly employed in the detection head. The detection head shoulders the responsibility of classifying and localizing objects based on the extracted feature maps. Traditional detection heads customarily apply multiple convolutional layers to execute convolution calculations on all input channels. This practice incurs a hefty computational load, especially when grappling with high-resolution images. By downsizing the number of convolution operations, PConv significantly slashes FLOPs (Floating Point Operations) and empowers the model to conduct inference more expeditiously when processing high-resolution images.

3.3. NAM Attention

The Normalization-based Attention Module (NAM) is a lightweight and efficient attention mechanism. It is designed to strengthen a model's focus on important features while keeping the computational load low. Attention mechanisms are widely used in computer vision, particularly in object detection, where they help models focus on the significant regions of an image and thereby improve detection accuracy.

In neural networks, convolution operations play a pivotal role in extracting spatial and channel features from input images. Nevertheless, a common drawback of these operations is that they generally treat all features equally. This egalitarian approach may impede the model’s ability to differentiate between primary and secondary features.

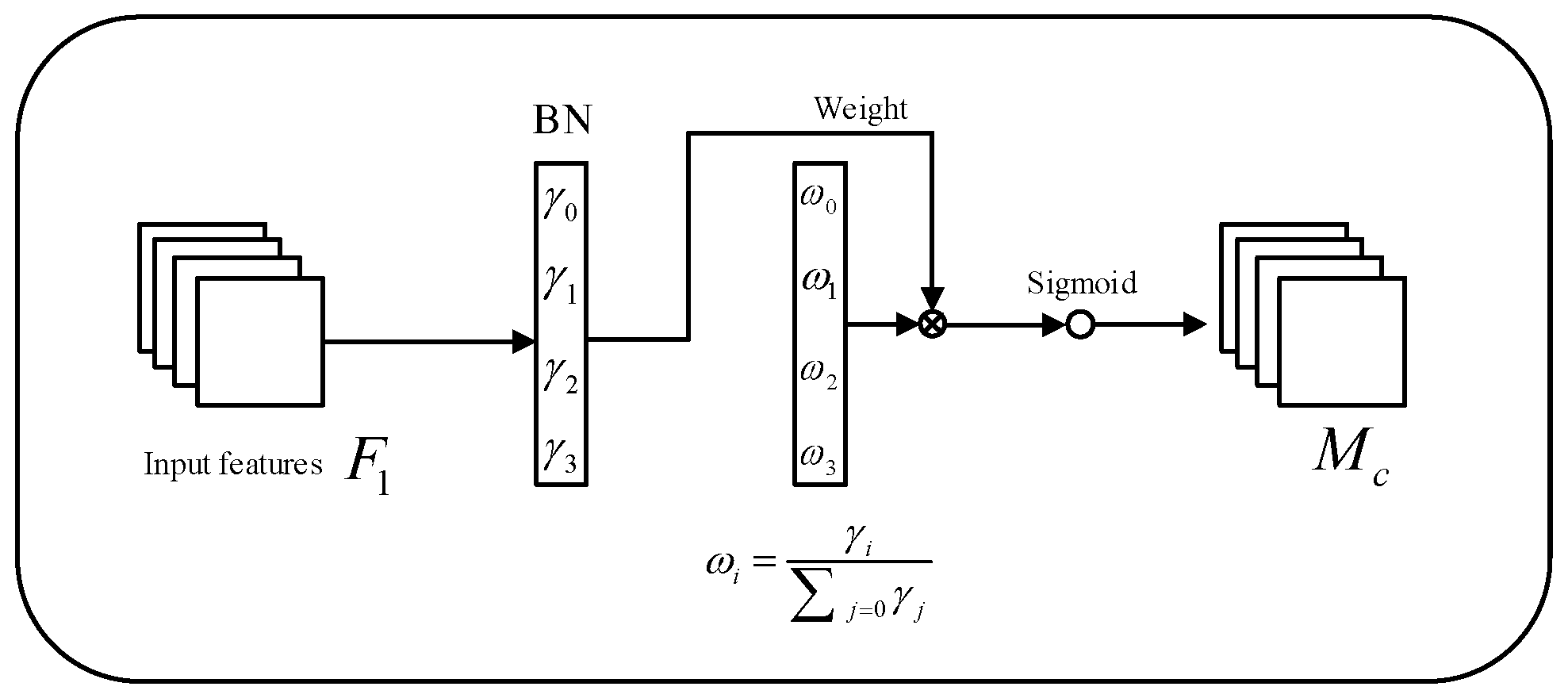

The essence of an attention mechanism is to assign different weights to features so that the model can concentrate on critical areas. NAM (Normalization-based Attention Module) applies this idea along two dimensions by integrating both channel and spatial attention (as illustrated in Figure 3), which allows the model to adapt its attention dynamically across regions and channels.

Channel attention is primarily employed to fine-tune the significance of different channels within the feature map. Meanwhile, spatial attention zeroes in on identifying the most crucial regions in the spatial dimension of the feature map.

Compared with traditional attention mechanisms such as CBAM (Convolutional Block Attention Module) and SE (Squeeze-and-Excitation), NAM offers a better balance between computational complexity and performance. Its key idea is to reuse the scaling factors of batch normalization as importance weights: a large scaling factor indicates a channel whose responses vary strongly, which is treated as more informative. Because these weights come from normalization layers, NAM does not need the additional fully connected or convolutional layers used by SE and CBAM, which avoids redundant computation while preserving the model's ability to focus on important features.
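The channel branch of NAM can be sketched as follows, based on the published formulation [7]: the feature map is batch-normalized, each channel's normalized response is multiplied by the weight w_i = |γ_i| / Σ_j |γ_j| derived from its BN scaling factor γ_i, passed through a sigmoid, and used to rescale the original input. An L1 penalty on the scaling factors, added to the training loss, encourages sparsity so that unimportant channels are suppressed. This plain-Python version normalizes each channel with the statistics of a single sample (an assumption standing in for batch statistics), covers only the channel branch, and uses a hypothetical penalty coefficient; it is not the implementation used in YOLOv8s-FPNE.

```python
import math


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def nam_channel_attention(x, gammas, betas, eps=1e-5):
    """x: [channel][row][col]. gammas/betas: BN scale and shift per channel."""
    total = sum(abs(g) for g in gammas)
    out = []
    for ch, g, b in zip(x, gammas, betas):
        weight = abs(g) / total                         # importance from the BN scaling factor
        vals = [v for row in ch for v in row]
        mean = sum(vals) / len(vals)                    # single-sample statistics (assumption)
        var = sum((v - mean) ** 2 for v in vals) / len(vals)
        out.append([[v * sigmoid(weight * (g * (v - mean) / math.sqrt(var + eps) + b))
                     for v in row] for row in ch])      # input rescaled by its attention value
    return out


def sparsity_penalty(gammas, p=1e-4):
    """L1 penalty on BN scaling factors, added to the task loss during training."""
    return p * sum(abs(g) for g in gammas)


if __name__ == "__main__":
    x = [[[2.0, 2.0], [2.0, 2.0]],                    # flat channel
         [[1.0, 3.0], [1.0, 3.0]]]                    # varying channel
    out = nam_channel_attention(x, gammas=[1.0, 3.0], betas=[0.0, 0.0])
    print(out[0][0][0])                               # 1.0 (flat channel: sigmoid(0) * 2)
    print(round(out[1][0][1], 3), round(out[1][0][0], 3))
```

In the varying channel, which also has the larger scaling factor, above-average responses are kept almost intact while below-average ones are strongly attenuated.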

In the YOLOv8s-FPNE model, the NAM attention mechanism is seamlessly integrated into multiple network modules. By appending the NAM module after the convolution layers, the model can dynamically modulate its attention across different channels and spatial regions during feature extraction. As a result, YOLOv8s-FPNE can more precisely detect distracted driving behavior in complex scenarios. Especially under multi-task conditions or challenging lighting situations, NAM substantially boosts the model’s performance.

3.4. Loss Function

In object detection, the loss function largely determines how well a model learns to localize objects. YOLO models such as YOLOv5 and YOLOv8 commonly adopt CIoU (Complete Intersection over Union) as their bounding box regression loss. CIoU considers not only the Intersection over Union (IoU) between the predicted and ground-truth boxes but also the distance between their centers and their aspect ratios. Nevertheless, CIoU has limitations in complex scenarios: for example, with hard-to-detect objects, the model often converges slowly and achieves limited localization accuracy.

To overcome these challenges, this paper adopts the Focal-EIoU loss function [8], which combines a focal weighting mechanism with the EIoU (Efficient IoU) loss. This combination enables more effective feature learning and localization when handling difficult samples.

CIoU improves detection accuracy by penalizing the distance between the center points of the predicted and ground-truth boxes, as well as differences in their aspect ratios. However, it does not address the imbalance between high-quality and low-quality training samples, and its aspect-ratio term does not directly reflect differences in width and height. In distracted driving detection, CIoU converges slowly and localizes less precisely in certain complex scenarios; in particular, localization errors tend to be relatively large for fine-grained driver actions, such as holding a phone or looking up at the mirror.

The Focal-EIoU loss function adds a focal weighting term to EIoU. In the formulation of [8], the EIoU loss is multiplied by IoU^γ, which prevents the many low-overlap (low-quality) predicted boxes from producing excessively large gradients and dominating training, so that regression concentrates on high-quality boxes. The resulting more precise localization benefits complex distracted driving behaviors, including simultaneous distractions and subtle actions.

EIoU builds on CIoU by refining the geometric relationship between the predicted and ground-truth boxes. Like CIoU, it penalizes low IoU and the distance between box centers, but it replaces CIoU's aspect-ratio term with separate penalties on the width and height differences, each normalized by the width or height of the smallest enclosing box. This allows the model to converge faster and maintain higher prediction accuracy in demanding detection scenarios.
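The difference between the two geometric penalties shows up clearly when a predicted box has the right shape but the wrong size. The sketch below compares CIoU's aspect-ratio term, v = (4/π²)(arctan(w_gt/h_gt) − arctan(w/h))², with EIoU's separate width and height terms, (w − w_gt)²/C_w² and (h − h_gt)²/C_h², where C_w and C_h are the width and height of the smallest enclosing box. The box sizes are made-up example values and the function names are hypothetical.

```python
import math


def ciou_aspect_term(pred_wh, gt_wh):
    """CIoU's aspect-ratio consistency term v (depends only on width/height ratios)."""
    (w, h), (wg, hg) = pred_wh, gt_wh
    return 4 / math.pi ** 2 * (math.atan(wg / hg) - math.atan(w / h)) ** 2


def eiou_size_terms(pred_wh, gt_wh, enclose_wh):
    """EIoU's width and height penalties, normalized by the enclosing box size."""
    (w, h), (wg, hg), (cw, ch) = pred_wh, gt_wh, enclose_wh
    return (w - wg) ** 2 / cw ** 2, (h - hg) ** 2 / ch ** 2


if __name__ == "__main__":
    # concentric boxes: prediction 2 x 1 inside ground truth 4 x 2 (same 2:1 shape)
    print(ciou_aspect_term((2, 1), (4, 2)))            # 0.0 -> no shape penalty from CIoU's v
    print(eiou_size_terms((2, 1), (4, 2), (4, 2)))     # (0.25, 0.25) -> EIoU still penalizes size
```

Because v depends only on ratios, it gives no gradient toward the correct size here, whereas the EIoU terms push the width and height directly toward the ground truth.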

The calculation formula for the Focal-EIoU loss function is as follows:

In this context, IoU represents the Intersection over Union between the predicted and ground-truth boxes, while α and γ are weight parameters that regulate the penalties related to the distance and aspect ratio. The focal term reweights each box's loss according to its IoU, so that well-localized, high-quality predictions are not overwhelmed by the large gradients of low-quality ones.
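For reference, the sketch below implements Focal-EIoU as published in [8], L = IoU^γ · L_EIoU with L_EIoU = 1 − IoU + ρ²(b, b_gt)/c² + (w − w_gt)²/C_w² + (h − h_gt)²/C_h², where ρ is the distance between box centers, c is the diagonal of the smallest enclosing box, and C_w, C_h are its width and height. It assumes (x1, y1, x2, y2) boxes and uses γ = 0.5 as an example value; it follows the published form rather than reproducing the exact parameterization with α used in this paper, and the function name is hypothetical.

```python
def focal_eiou(pred, target, gamma=0.5, eps=1e-7):
    """Return (Focal-EIoU loss, IoU) for one predicted and one ground-truth box (x1, y1, x2, y2)."""
    px1, py1, px2, py2 = pred
    tx1, ty1, tx2, ty2 = target
    pw, ph, tw, th = px2 - px1, py2 - py1, tx2 - tx1, ty2 - ty1

    # IoU
    inter_w = max(0.0, min(px2, tx2) - max(px1, tx1))
    inter_h = max(0.0, min(py2, ty2) - max(py1, ty1))
    inter = inter_w * inter_h
    iou = inter / (pw * ph + tw * th - inter + eps)

    # smallest enclosing box: width, height, squared diagonal
    cw = max(px2, tx2) - min(px1, tx1)
    ch = max(py2, ty2) - min(py1, ty1)
    c2 = cw ** 2 + ch ** 2 + eps

    # squared distance between box centers
    rho2 = ((px1 + px2 - tx1 - tx2) / 2) ** 2 + ((py1 + py2 - ty1 - ty2) / 2) ** 2

    eiou = (1 - iou) + rho2 / c2 + (pw - tw) ** 2 / (cw ** 2 + eps) + (ph - th) ** 2 / (ch ** 2 + eps)
    return iou ** gamma * eiou, iou   # focal weighting by IoU**gamma


if __name__ == "__main__":
    loss, iou = focal_eiou((0, 0, 2, 2), (1, 0, 3, 2))  # box shifted by half its width
    print(round(iou, 4), round(loss, 4))                # 0.3333 0.4293
```

Note that a prediction with no overlap (IoU = 0) receives zero loss under this weighting, which illustrates how strongly the IoU^γ factor suppresses low-quality boxes.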

Within the YOLOv8s-FPNE model, the Focal-EIoU loss function takes the place of the original CIoU loss function. This substitution leads to significant improvements, enabling the model to exhibit superior convergence and stability when confronted with complex scenarios.

In distracted driving detection in particular, the Focal-EIoU loss function improves the localization accuracy of subtle driver actions, such as using a phone, drinking water, or smoking, even under complex lighting conditions.

4. Experimental Setup

4.1. Experimental Environment

The input image resolution for this experiment was set to 640 × 480 pixels. Training parameters included a batch size of 16, 100 training epochs, and an initial learning rate of 0.001. Detailed experimental configurations are shown in Table 1.

4.2. Dataset

The dataset employed in this research was collected from online sources. It contains 5147 images covering three common distracted driving behaviors: drinking water, using a mobile phone, and smoking. The images were captured from many different angles, giving the dataset a rich variety of visual perspectives. Compared with the dataset used by Shen Qian et al. [10], this dataset is more diverse, which supports better generalization and robustness of the trained model. To reduce the risk of overfitting and make good use of the data, the dataset was partitioned into training, test, and validation sets at a ratio of 7:2:1.

Specifically, the training set consists of 3602 images, which serve as the primary material for the model to learn the patterns and features associated with distracted driving behaviors. The test set, containing 1029 images, is employed to evaluate the model’s performance after training. The validation set, with 516 images, plays a crucial role in fine-tuning the model during the training process, ensuring its generalization ability.
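The reported subset sizes are consistent with truncating the 70% and 20% shares and assigning the remainder to the validation set. The sketch below reproduces them; the rounding rule, the fixed shuffling seed, and the function name are assumptions, since the text does not specify how the split was drawn.

```python
import random


def split_dataset(items, train_ratio=0.7, test_ratio=0.2, seed=0):
    """Shuffle and split into (train, test, val); the remainder goes to validation."""
    items = list(items)
    random.Random(seed).shuffle(items)   # reproducible shuffle
    n_train = int(len(items) * train_ratio)
    n_test = int(len(items) * test_ratio)
    train = items[:n_train]
    test = items[n_train:n_train + n_test]
    val = items[n_train + n_test:]
    return train, test, val


if __name__ == "__main__":
    train, test, val = split_dataset(range(5147))
    print(len(train), len(test), len(val))  # 3602 1029 516
```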

All images in the dataset have a standardized resolution of 640 × 640. Annotations, consisting of bounding boxes that delineate the location of each distracted behavior and class labels that identify the behavior type, were created manually using the LabelImg tool (version 1.8.6). This careful manual annotation helps ensure the reliability and quality of the dataset for subsequent training and evaluation.

4.3. Model Evaluation Metrics

To evaluate the lightweight YOLOv8-based model for distracted driving detection, commonly used metrics were adopted to assess both accuracy and efficiency: mean Average Precision (mAP), the number of parameters, and the number of floating-point operations (FLOPs). Precision, recall, and mAP are computed as follows:

$$p = \frac{TP}{TP + FP}, \qquad r = \frac{TP}{TP + FN}$$

$$AP = \int_{0}^{1} p(r)\,dr, \qquad mAP = \frac{1}{N}\sum_{i=1}^{N} AP_i$$

where TP denotes true positives, FP false positives, FN false negatives, p precision, r recall, and N the number of classes (three in this study). Higher mAP values indicate better overall detection performance. The number of parameters is the total number of learnable weights in the model; fewer parameters indicate a more lightweight model suitable for deployment on resource-constrained devices. FLOPs quantify the computational cost of a forward pass; lower values generally, though not always, indicate faster inference.
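A minimal sketch of how AP and mAP can be computed from ranked detections is shown below. It assumes detections have already been matched to ground truth (for mAP@0.5, a detection is a true positive if its IoU with a still-unmatched ground-truth box of the same class is at least 0.5) and uses all-point interpolation of the precision-recall curve; evaluation toolkits differ in their interpolation scheme (some sample 101 recall points), so values may not match a particular library exactly. The function names and example detections are hypothetical.

```python
def average_precision(detections, num_gt):
    """AP for one class. detections: list of (confidence, is_true_positive)."""
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for _, is_tp in ranked:
        tp += int(is_tp)
        fp += int(not is_tp)
        recalls.append(tp / num_gt)            # r = TP / (TP + FN)
        precisions.append(tp / (tp + fp))      # p = TP / (TP + FP)
    # precision envelope: make precision non-increasing as recall grows
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # area under the step-shaped precision-recall curve
    ap, prev_r = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_r) * p
        prev_r = r
    return ap


def mean_average_precision(per_class):
    """per_class: list of (detections, num_gt) pairs, one per class."""
    aps = [average_precision(d, n) for d, n in per_class]
    return sum(aps) / len(aps)


if __name__ == "__main__":
    phone = [(0.9, True), (0.8, False), (0.7, True)]   # 2 ground-truth boxes
    smoking = [(0.95, True)]                           # 1 ground-truth box
    print(average_precision(phone, 2))                 # 0.8333... (= 5/6)
    print(mean_average_precision([(phone, 2), (smoking, 1)]))
```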

4.4. Ablation Studies

To assess the influence of each improvement module on detection accuracy, a series of ablation experiments was carried out on the modified YOLOv8s-FPNE model. These experiments analyze the contribution of each module by introducing it into the baseline model. The experimental settings are summarized as follows:

Baseline Model: The unaltered YOLOv8s model served as the reference point for comparison.

Integration of FasterNet Module (C2f-Faster): The Bottleneck blocks in the original C2f modules were replaced with FasterNet blocks to reduce the parameter count and computational cost.

Inclusion of PConv Module: The PConv module was added to the detection head, with the intention of improving the model’s detection capabilities.

Incorporation of NAM Attention Mechanism: The NAM attention mechanism was embedded into each network block, enabling the model to better focus on relevant features.

Loss Function Replacement: The CIoU loss function was replaced with the Focal-EIoU loss function, with the expectation of optimizing the model’s learning process.

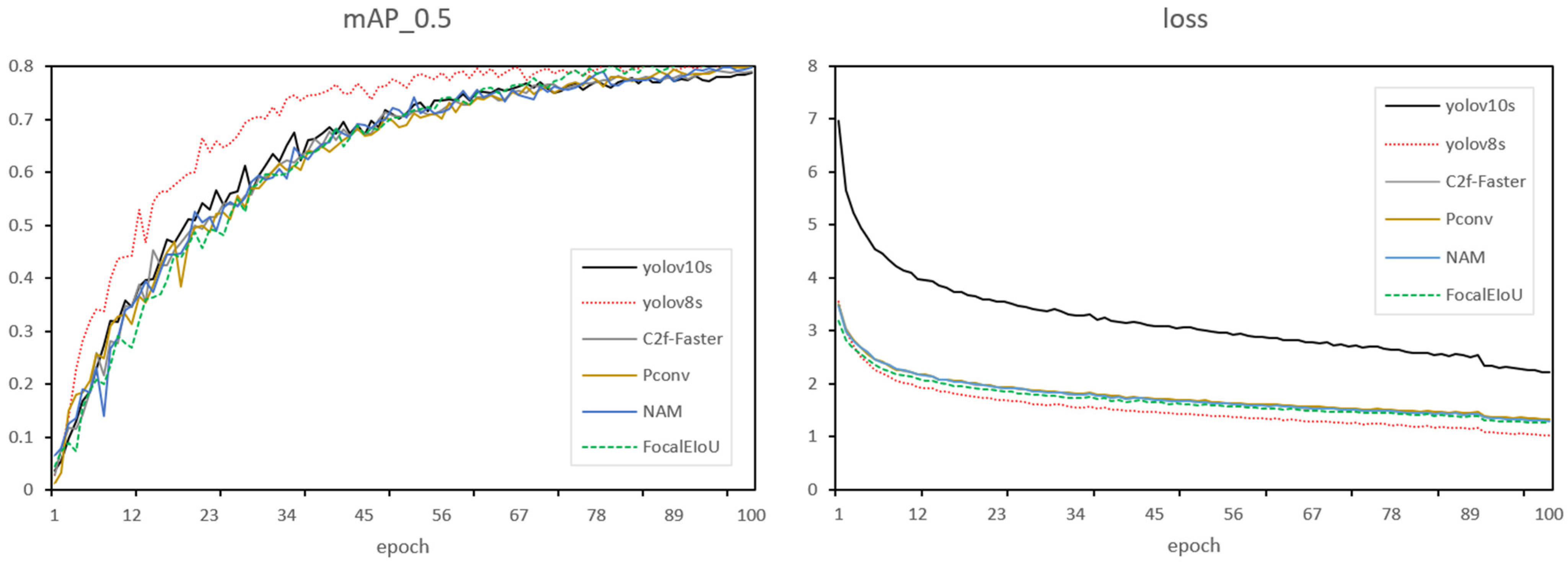

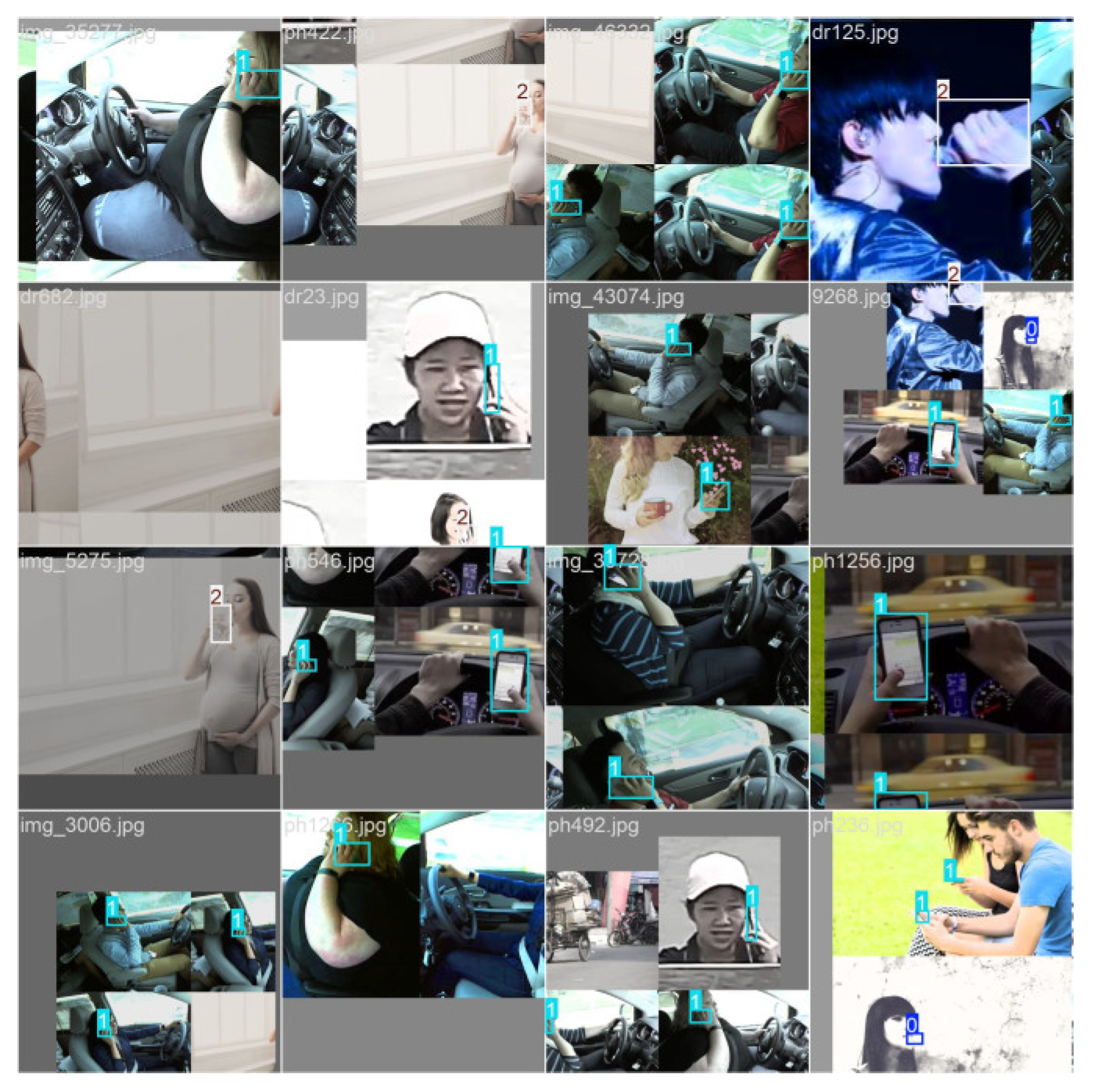

The experimental results, shown in Table 2, indicate that integrating the FasterNet module substantially reduces the number of parameters and the computational complexity, at the cost of a slight decrease in mean Average Precision (mAP). In contrast, introducing the PConv module and the NAM attention mechanism both improve mAP. A detailed comparison of mAP and loss across the different models is presented in Figure 4. Among these modules, the NAM attention mechanism yields the most favorable results, achieving an mAP of 81.6% and a recall of 76.0%. Additionally, Figure 5 compares the models on real-world detection examples.

4.5. Comparison of Network Structures

To identify the optimal network structure for lightweight modeling, a comparative experiment was conducted with mainstream lightweight networks, including MobileNetV3 [11], ShuffleNetV2 [12], and C2f-Faster. Results are presented in Table 3.

The C2f-Faster structure achieved the best balance between parameter reduction and accuracy, making it suitable for distracted driving detection tasks.

4.6. Comparison of Various Loss Functions

To identify the optimal loss function, this study compares several mainstream loss functions, including CIoU Loss, Focal-EIoU Loss, EIoU Loss, GIoU Loss [13], DIoU Loss [14], SIoU Loss [15], and WIoU Loss [16]. The experimental results are summarized in Table 4.

4.7. Multiple Attention Mechanisms

Introducing an attention mechanism can effectively improve detection accuracy at little additional cost. To select the best attention mechanism, this study compares several lightweight attention mechanisms: Triplet Attention [17], MLCA [18], iRMB [19], ACmix [20], ECA [21], NAM, SGE [22], and EMA [23]. The experimental results are shown in Table 5.

The results reveal notable differences among the models in parameter count, computational complexity, and mean average precision (mAP). Triplet Attention increases the parameter count and complexity yet lowers mAP, which makes it unsuitable for practical use. MLCA and iRMB both require excessively long training times, which makes them less practical to adopt.

ACmix increases complexity to some degree, yet its mAP is slightly lower than that of the baseline model, so it offers no substantial performance gain. ECA keeps complexity and parameter count low, but its mAP is also marginally below the baseline, indicating limited effectiveness.

SGE and EMA both improve accuracy. However, NAM performs best, achieving an mAP of 81.6% and significantly improving detection accuracy without a substantial increase in parameter count or complexity. This supports the effectiveness of NAM for distracted driving detection, and NAM is therefore chosen as the final attention mechanism.

4.8. Comparison of Parameters and Complexity

This section presents a comparison of YOLOv8s, YOLOv8s-FPNE, and several DETR-based detectors in terms of parameter count and computational complexity.

As summarized in Table 6, the baseline YOLOv8s model contains 11.13 M parameters and requires 28.4 GFLOPs, while the proposed YOLOv8s-FPNE reduces these to 8.72 M parameters and 21.7 GFLOPs, indicating a lighter and more efficient design.

In contrast, transformer-based detectors such as Vanilla DETR and Deformable DETR have significantly higher computational costs, with 42.13 M parameters/69.1 GFLOPs and 37.46 M parameters/46.3 GFLOPs, respectively. Even Lightweight DETR still has 13.62 M parameters and 24.6 GFLOPs, both higher than those of YOLOv8s-FPNE.
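The relative savings implied by the Table 6 figures quoted above can be checked directly. The sketch below computes how much smaller YOLOv8s-FPNE (8.72 M parameters, 21.7 GFLOPs) is than each compared model, in percent; it only re-derives numbers already stated in the text, and the names are hypothetical.

```python
# (parameters in millions, GFLOPs) as quoted from Table 6 in the text
MODELS = {
    "YOLOv8s": (11.13, 28.4),
    "Vanilla DETR": (42.13, 69.1),
    "Deformable DETR": (37.46, 46.3),
    "Lightweight DETR": (13.62, 24.6),
}
FPNE = (8.72, 21.7)  # YOLOv8s-FPNE


def percent_reduction(reference: float, improved: float) -> float:
    """How much smaller `improved` is than `reference`, in percent."""
    return 100.0 * (reference - improved) / reference


if __name__ == "__main__":
    for name, (params, gflops) in MODELS.items():
        print(f"{name:17s} params -{percent_reduction(params, FPNE[0]):5.1f}%  "
              f"FLOPs -{percent_reduction(gflops, FPNE[1]):5.1f}%")
```

Relative to the YOLOv8s baseline, this gives reductions of about 21.7% in parameters and 23.6% in FLOPs.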

These results demonstrate that the proposed YOLOv8s-FPNE model achieves superior compactness and computational efficiency, making it particularly suitable for real-time deployment on resource-constrained platforms such as embedded systems and edge devices.

4.9. Comparison of Detection Accuracy

In terms of detection accuracy, the YOLOv8s-FPNE model attains an mAP@0.5 of 0.816, outperforming the baseline YOLOv8s (0.804) despite its lighter structure and reduced computational burden.

When compared with DETR-based architectures, Vanilla DETR achieves 0.795, Deformable DETR reaches 0.806, and Lightweight DETR obtains 0.792 in mAP@0.5.

These findings indicate that YOLOv8s-FPNE achieves a more favorable balance between accuracy and efficiency. While DETR variants exhibit strong representational power through self-attention mechanisms, their higher computational costs make them less practical for real-time or embedded applications.

Overall, the YOLOv8s-FPNE model delivers competitive accuracy with markedly lower complexity, highlighting its strong potential for deployment in real-world distracted driving detection systems.

4.10. Inference Speed Evaluation

To further verify the deployment feasibility of the proposed model, we evaluate the inference efficiency of YOLOv8s-FPNE on a realistic hardware configuration (AMD Ryzen 9 6900HX, RTX 3070 Ti Laptop GPU, PyTorch 2.1, CUDA 12.6). As shown in Table 7, the model achieves a median model-side inference latency of 9.0 ms per frame (about 111 FPS for the network alone). Including decoding and NMS, the end-to-end latency is 13.7 ms, corresponding to approximately 73 FPS, which still comfortably meets real-time requirements.
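The FPS figures follow from FPS = 1000 / latency in milliseconds. The sketch below shows one way to measure a median per-frame latency for any inference callable, excluding warm-up runs, and to convert it to FPS. The `infer` callable and the run counts are hypothetical; when timing a GPU model in PyTorch, the device should also be synchronized (for example with torch.cuda.synchronize()) before each clock read, which this framework-free sketch does not do.

```python
import statistics
import time


def median_latency_ms(infer, frame, warmup=10, runs=100):
    """Median wall-clock latency of infer(frame), in milliseconds."""
    for _ in range(warmup):                 # let caches and lazy initialization settle
        infer(frame)
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(frame)
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(samples)


def fps(latency_ms: float) -> float:
    """Frames per second for a given per-frame latency in milliseconds."""
    return 1000.0 / latency_ms


def dummy_detector(frame):
    """Stand-in workload for a real detector (hypothetical)."""
    return sum(range(1000))


if __name__ == "__main__":
    for label, ms in [("model-side", 9.0), ("end-to-end", 13.7)]:
        print(f"{label}: {ms} ms -> {fps(ms):.1f} FPS")  # 111.1 and 73.0 FPS
    print(median_latency_ms(dummy_detector, None) >= 0.0)
```

Using the median rather than the mean keeps occasional scheduling hiccups from inflating the reported latency.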

Compared with the baseline YOLOv8s, whose model-side latency is 11.5 ms and end-to-end latency is 16.0 ms, YOLOv8s-FPNE delivers faster and more consistent inference while using fewer parameters and less computation, as summarized in Table 7. Although some ablation variants (e.g., the C2f-Faster or PConv-enhanced versions) show comparable or slightly lower model-only latency (8.6 to 9.0 ms), their end-to-end performance remains inferior, and they do not achieve the accuracy improvement of the final integrated YOLOv8s-FPNE design.

In contrast, DETR-based detectors incur substantially higher latency, mainly because of the cost of multi-head self-attention in their transformer encoder and decoder (the bipartite matching used by DETR is needed only during training). Under similar hardware conditions, Vanilla DETR typically requires 35 to 50 ms or more per frame, making it unsuitable for real-time, safety-critical driver monitoring.

In summary, the key inference indicators presented in Table 7 demonstrate that YOLOv8s-FPNE achieves a more advantageous speed–accuracy trade-off than both the baseline and the ablation variants. Its sub-14 ms end-to-end latency, together with strong detection accuracy, confirms the model's practical suitability for real-time and resource-constrained distracted-driving detection systems.

5. Discussion

This research presents a streamlined approach for distracted driving detection, leveraging the YOLOv8 framework. The proposed method integrates the FasterNet module, PConv, the NAM attention mechanism, and the Focal-EIoU loss function.

The experimental results show that the refined YOLOv8s-FPNE model substantially reduces both the parameter count and the computational complexity while slightly improving detection accuracy (mAP@0.5 of 0.816 versus 0.804 for the baseline). This makes the model well suited for deployment on resource-limited devices, where efficiency and performance must be carefully balanced.

Future research will focus on several directions. First, the model structure will be further optimized. Second, advanced techniques will be explored to improve the model's accuracy in identifying distracted driving behaviors. Third, additional lightweight strategies will be investigated to further reduce resource requirements without sacrificing effectiveness. Finally, the model will be tested in real-world driving scenarios, which will provide broader validation of its performance and open up new avenues for practical application in various driving contexts.