Cross-Modality Guided Super-Resolution for Weak-Signal Fluorescence Imaging via a Multi-Channel SwinIR Framework

Abstract

1. Introduction

- (1)

- We propose a cross-modality guided super-resolution framework for weak-signal fluorescence imaging, in which a high-SNR auxiliary channel provides a structural prior to improve the reconstruction of the target weak-signal channel.

- (2)

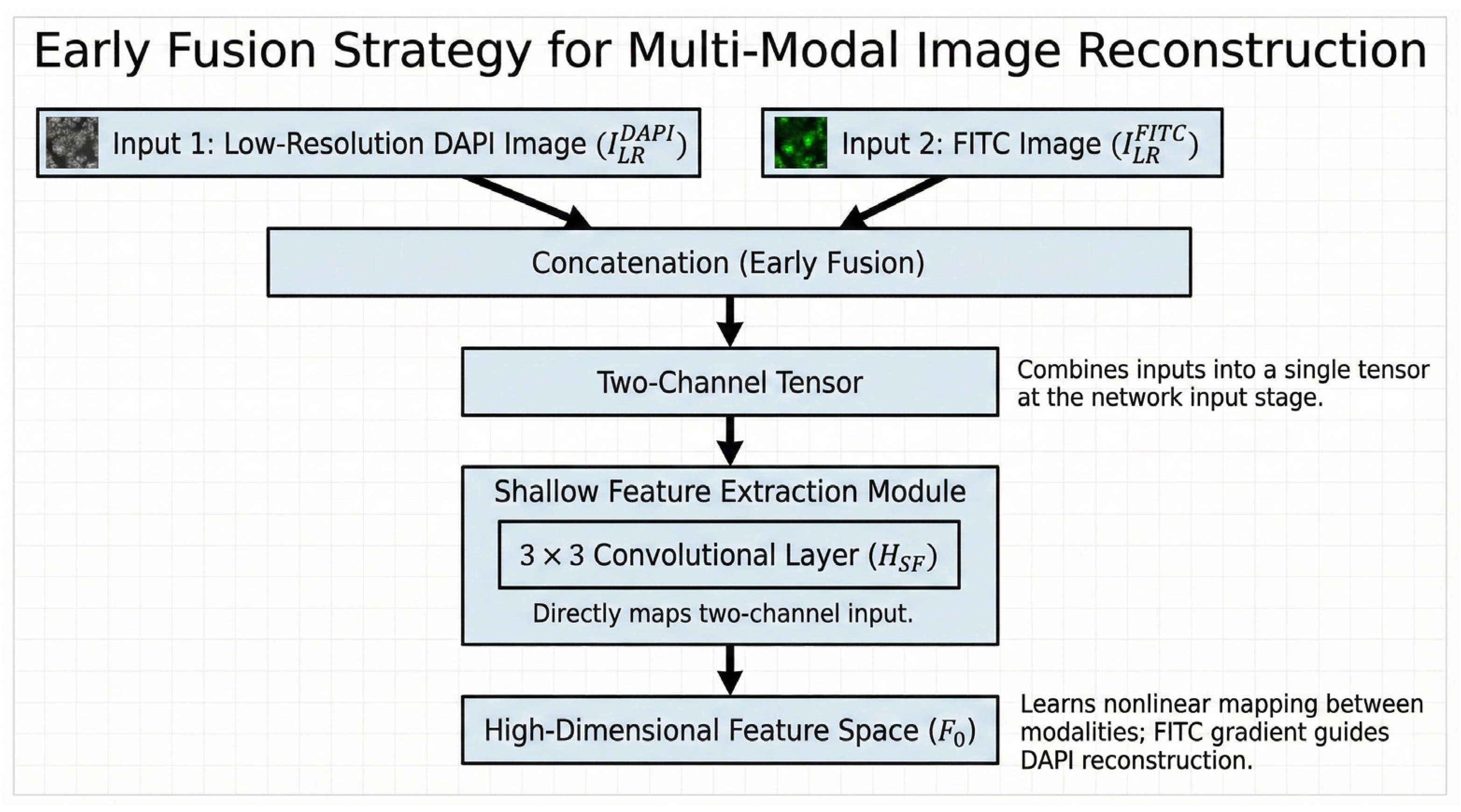

- We adapt SwinIR to a dual-channel early-fusion input setting and analyze the role of window-based attention in enabling cross-channel feature interaction for structure-aware restoration.

- (3)

- We design a hybrid objective by combining pixel-, structure-, and frequency-domain consistency constraints, and validate the proposed method against bicubic interpolation, Real-ESRGAN, and single-channel SwinIR using both quantitative metrics and ROI-based qualitative comparisons.

2. Materials and Methods

2.1. Problem Formulation: Physics-Guided Image Restoration

2.2. Data Acquisition and Preprocessing

2.2.1. Imaging and 16-Bit High Dynamic Range Processing

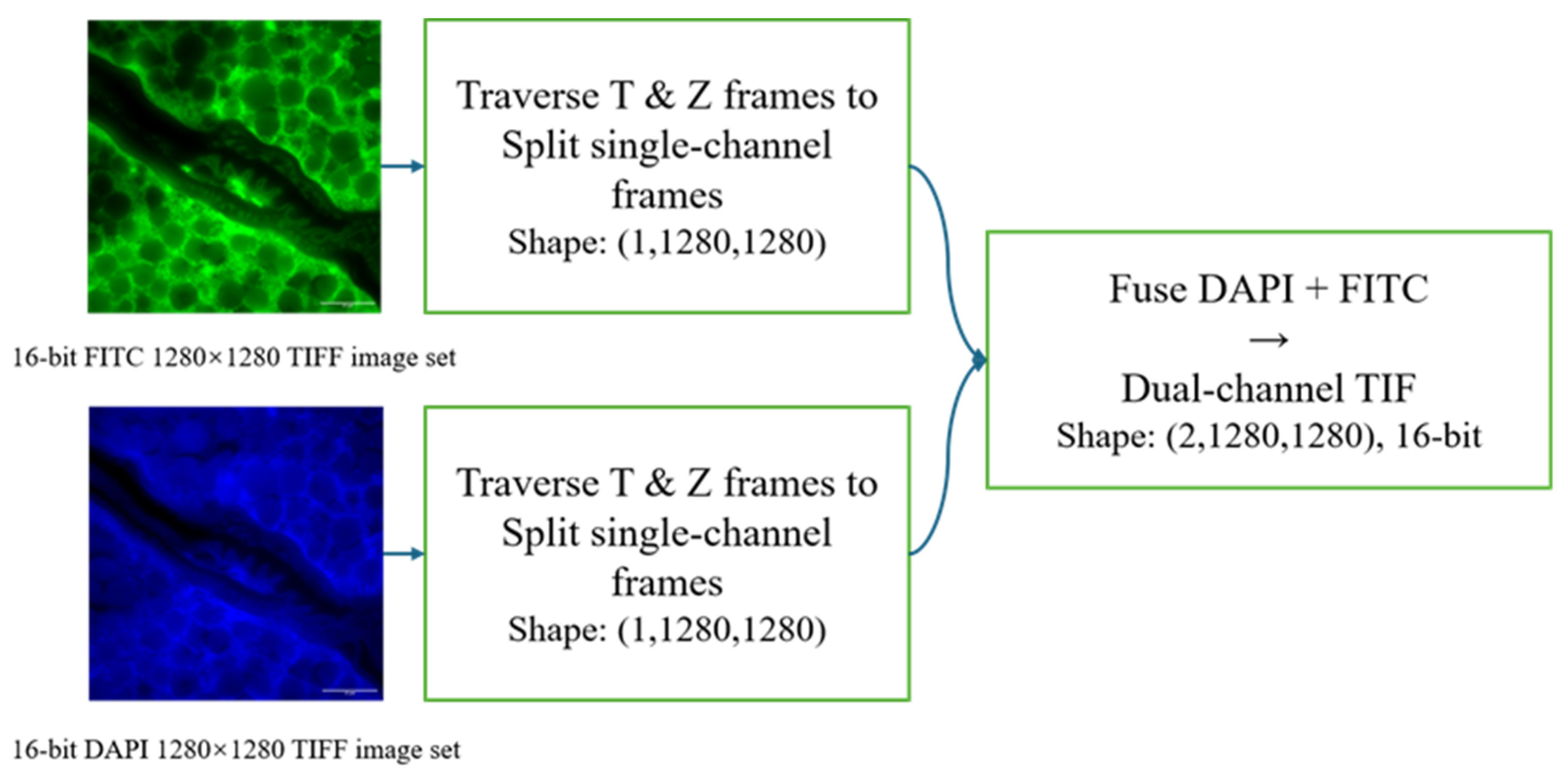

2.2.2. Cross-Modality Alignment and Dataset Construction

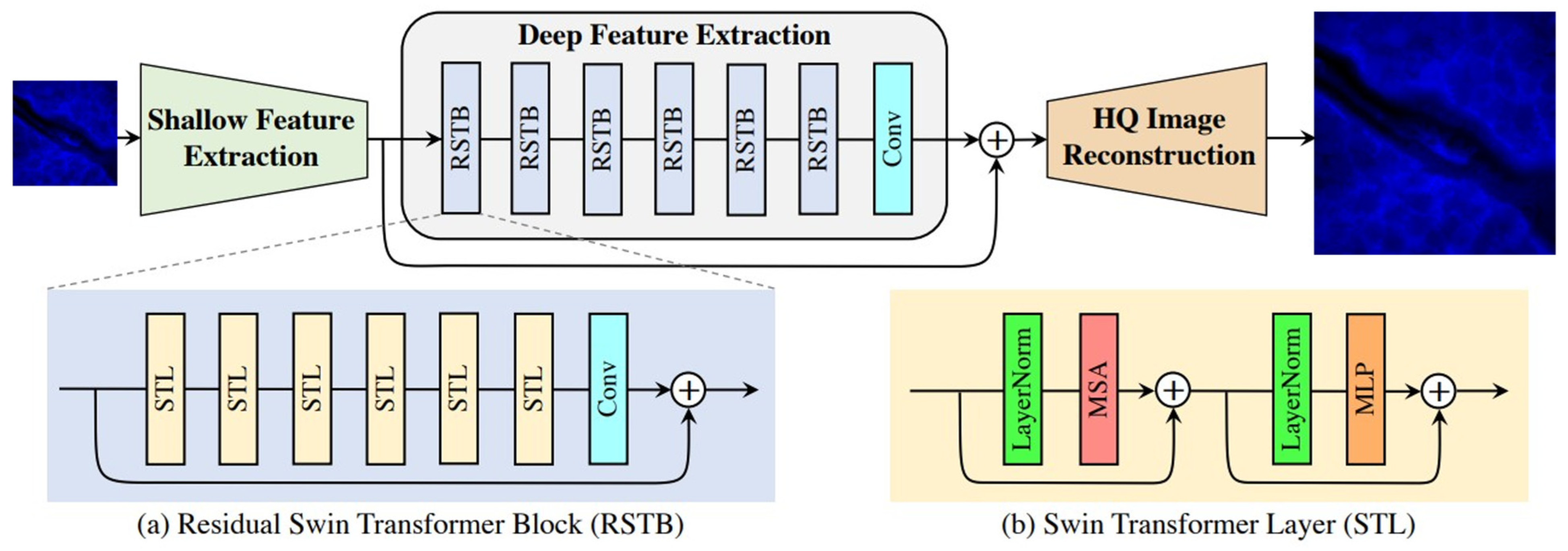

2.3. Network Architecture: Multi-Channel Guided SwinIR

2.3.1. Early Fusion Strategy

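| Algorithm 1. Overall training and inference pipeline. |
|---|
| Input: paired HR images (DAPI target, FITC guide); scale factor s = 4; two loss weights; Adam optimizer hyperparameters; total iterations T. |
| Output: trained parameters θ; predicted SR DAPI image. |
| Dataset preparation: for each paired HR sample (DAPI, FITC), generate LR DAPI ← BicubicDownsample(HR DAPI, s) and LR FITC ← BicubicDownsample(HR FITC, s). Randomly split the samples into training/validation/test sets with a ratio of 7:2:1 (test set: 74 pairs). |
| Training: initialize model parameters θ. |
| For t = 1 to T do: |
| Sample a mini-batch of paired patches (LR DAPI, LR FITC, HR DAPI) from the training set. |
| Early fusion: concatenate LR DAPI and LR FITC channel-wise into a two-channel input. |
| Forward: predict the SR DAPI patch from the fused input with the network parameterized by θ. |
| Joint loss: compute the weighted combination of the Charbonnier, SSIM, and frequency-domain consistency losses (Section 2.4) between the SR and HR DAPI patches. |
| Update: θ ← AdamUpdate(θ, gradient of the joint loss). |
| Validation (periodically): evaluate PSNR/SSIM on the validation set and save the best checkpoint. |
| End for |
| Inference/testing: load the best checkpoint. For each test sample (LR DAPI, LR FITC), concatenate the two channels and predict the SR DAPI image. Report the average PSNR/SSIM on the test set (74 pairs). |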
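The dataset-preparation and early-fusion steps of Algorithm 1 can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' code: an antialiased Keys cubic kernel (a = -0.5, in the style of MATLAB's imresize) stands in for BicubicDownsample, borders are handled by renormalizing the kernel weights rather than by padding, 16-bit frames are assumed to be scaled to [0, 1] by dividing by 65535, and all helper names (to_float, bicubic_downsample, early_fusion, split_7_2_1) are hypothetical.

```python
import numpy as np


def _cubic(x):
    """Keys cubic convolution kernel with a = -0.5."""
    ax = np.abs(x)
    ax2, ax3 = ax ** 2, ax ** 3
    inner = (1.5 * ax3 - 2.5 * ax2 + 1.0) * (ax <= 1)
    outer = (-0.5 * ax3 + 2.5 * ax2 - 4.0 * ax + 2.0) * ((ax > 1) & (ax <= 2))
    return inner + outer


def _bicubic_matrix(n_in, s):
    """Row-normalized (n_in // s, n_in) matrix for antialiased 1/s cubic resampling."""
    idx = np.arange(n_in)
    mat = np.zeros((n_in // s, n_in))
    for o in range(n_in // s):
        center = (o + 0.5) * s - 0.5      # output pixel centre in input coordinates
        w = _cubic((idx - center) / s)    # kernel stretched by s to suppress aliasing
        mat[o] = w / w.sum()              # renormalize; also compensates at borders
    return mat


def to_float(frame_u16):
    """Map a 16-bit frame to float64 in [0, 1] (assumed normalization)."""
    return frame_u16.astype(np.float64) / 65535.0


def bicubic_downsample(img, s=4):
    """Separable bicubic downsampling of a 2-D image by an integer factor s."""
    h, w = img.shape
    return _bicubic_matrix(h, s) @ img @ _bicubic_matrix(w, s).T


def early_fusion(lr_dapi, lr_fitc):
    """Channel-wise concatenation: channel 0 = DAPI target, channel 1 = FITC guide."""
    return np.stack([lr_dapi, lr_fitc], axis=0)


def split_7_2_1(n, seed=0):
    """Random 7:2:1 train/val/test split of n sample indices (integer arithmetic)."""
    perm = np.random.default_rng(seed).permutation(n)
    n_train, n_val = n * 7 // 10, n * 2 // 10
    return perm[:n_train], perm[n_train:n_train + n_val], perm[n_train + n_val:]


# Usage on one synthetic 16-bit pair (random data, for shape checking only).
rng = np.random.default_rng(0)
hr_dapi = to_float(rng.integers(0, 4096, size=(256, 256), dtype=np.uint16))
hr_fitc = to_float(rng.integers(0, 4096, size=(256, 256), dtype=np.uint16))
x = early_fusion(bicubic_downsample(hr_dapi), bicubic_downsample(hr_fitc))
print(x.shape)  # (2, 64, 64)
```

With a 7:2:1 ratio, 74 test pairs implies roughly 740 pairs in total; for exactly 740 samples, split_7_2_1(740) yields 518/148/74 indices. Integer arithmetic is used for the split sizes because expressions such as int(0.7 * 740) can round down to 517 in floating point.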

2.3.2. Deep Feature Extraction with RSTB
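The window-based self-attention inside each Swin Transformer layer can be illustrated with a single-head NumPy sketch. It is a simplification for illustration only: shifted windows, multi-head splitting, relative position bias, layer normalization, and the MLP of a real STL/RSTB are omitted, the projection matrices are random stand-ins for learned weights, and the window size M = 8 and embedding width are assumed values. Because early fusion places DAPI- and FITC-derived features in every token's feature vector, attention within a window lets neighbouring positions exchange information from both channels, while tokens in different windows do not interact within a single (unshifted) layer.

```python
import numpy as np


def window_partition(x, m):
    """(H, W, C) feature map -> (num_windows, m*m, C) token groups."""
    h, w, c = x.shape
    x = x.reshape(h // m, m, w // m, m, c).transpose(0, 2, 1, 3, 4)
    return x.reshape(-1, m * m, c)


def window_reverse(windows, m, h, w):
    """Inverse of window_partition: (num_windows, m*m, C) -> (H, W, C)."""
    c = windows.shape[-1]
    x = windows.reshape(h // m, w // m, m, m, c).transpose(0, 2, 1, 3, 4)
    return x.reshape(h, w, c)


def window_attention(x, m, wq, wk, wv):
    """Single-head scaled dot-product attention restricted to non-overlapping m x m windows."""
    h, w, _ = x.shape
    tokens = window_partition(x, m)                        # (nW, N, C)
    q, k, v = tokens @ wq, tokens @ wk, tokens @ wv        # linear Q/K/V projections
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(q.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)           # numerically stable softmax
    attn = np.exp(scores)
    attn /= attn.sum(axis=-1, keepdims=True)
    return window_reverse(attn @ v, m, h, w)


rng = np.random.default_rng(0)
c = 16                                                     # assumed embedding width
feats = rng.standard_normal((32, 32, c))                   # e.g. fused shallow features
wq, wk, wv = (rng.standard_normal((c, c)) / np.sqrt(c) for _ in range(3))
out = window_attention(feats, 8, wq, wk, wv)
print(out.shape)  # (32, 32, 16)
```

Perturbing one token changes outputs only inside its own 8×8 window, which is the locality that the shifted-window scheme of the full Swin design is meant to relax in alternating layers.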

2.3.3. Image Reconstruction
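For classical super-resolution, SwinIR's reconstruction module upsamples with convolution followed by pixel shuffle (sub-pixel rearrangement); whether this paper keeps the same upsampler is not stated in this section, so the NumPy sketch below is an assumption. It shows only the rearrangement step, which turns C·r² low-resolution channels into C channels at r times the spatial size, using the same index convention as PyTorch's PixelShuffle. The function name pixel_shuffle is hypothetical.

```python
import numpy as np


def pixel_shuffle(x, r):
    """(C*r*r, H, W) -> (C, H*r, W*r): out[c, h*r+i, w*r+j] = x[c*r*r + i*r + j, h, w]."""
    c_r2, h, w = x.shape
    c = c_r2 // (r * r)
    x = x.reshape(c, r, r, h, w).transpose(0, 3, 1, 4, 2)   # -> (c, h, i, w, j)
    return x.reshape(c, h * r, w * r)


# A 16-channel 1x1 map becomes a single 4x4 patch for s = 4.
demo = pixel_shuffle(np.arange(16).reshape(16, 1, 1), 4)
print(demo[0])  # equals np.arange(16).reshape(4, 4)
```

SwinIR itself realizes ×4 as two consecutive ×2 convolution + pixel-shuffle stages rather than a single ×4 rearrangement; the index bookkeeping per stage is the same as above.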

2.4. Loss Function Formulation

2.4.1. Charbonnier Loss
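A minimal NumPy sketch of the Charbonnier (smooth L1) penalty, mean of sqrt((prediction − target)² + ε²), is shown below. The value of ε is an assumption; this section does not state the one used, and published SR work uses small values, typically between about 1e-6 and 1e-3.

```python
import numpy as np


def charbonnier_loss(pred, target, eps=1e-3):
    """Mean of sqrt((pred - target)^2 + eps^2); eps = 1e-3 is an assumed default."""
    diff = np.asarray(pred, dtype=np.float64) - np.asarray(target, dtype=np.float64)
    return float(np.mean(np.sqrt(diff * diff + eps * eps)))


x = np.zeros((8, 8))
print(charbonnier_loss(x, x))        # floor value eps for a perfect prediction
print(charbonnier_loss(x + 0.5, x))  # about 0.5, i.e. L1-like for large errors
```

The penalty behaves quadratically for residuals much smaller than ε and linearly for large residuals, and unlike plain L1 it is differentiable at zero.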

2.4.2. SSIM Loss
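An SSIM loss is typically taken as 1 − SSIM. The NumPy sketch below follows the standard SSIM definition of Wang et al. (11×11 Gaussian window with σ = 1.5, K1 = 0.01, K2 = 0.03); those constants, the "valid" filtering at borders, and the [0, 1] data range are assumptions rather than settings confirmed for this paper.

```python
import numpy as np


def _gaussian_kernel(size=11, sigma=1.5):
    x = np.arange(size) - (size - 1) / 2.0
    g = np.exp(-x ** 2 / (2.0 * sigma ** 2))
    return g / g.sum()


def _filter(img, k):
    """Separable Gaussian filtering with 'valid' borders (no padding)."""
    rows = np.apply_along_axis(lambda r: np.convolve(r, k, mode="valid"), 1, img)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="valid"), 0, rows)


def ssim(x, y, data_range=1.0):
    """Mean SSIM over all valid 11x11 Gaussian-weighted windows."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    k = _gaussian_kernel()
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2
    mu_x, mu_y = _filter(x, k), _filter(y, k)
    var_x = _filter(x * x, k) - mu_x ** 2
    var_y = _filter(y * y, k) - mu_y ** 2
    cov_xy = _filter(x * y, k) - mu_x * mu_y
    num = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    den = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(np.mean(num / den))


def ssim_loss(pred, target, data_range=1.0):
    return 1.0 - ssim(pred, target, data_range)


rng = np.random.default_rng(0)
gt = rng.random((64, 64))
noisy = np.clip(gt + 0.1 * rng.standard_normal(gt.shape), 0.0, 1.0)
print(ssim_loss(gt, gt), ssim_loss(noisy, gt))
```

With "valid" filtering the SSIM map shrinks by 10 pixels along each axis, so training patches must be at least 11×11.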

2.4.3. Frequency-Domain Consistency Loss
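The exact form of the frequency-domain term is not given in this section. One common choice, sketched below as an assumption, compares the 2-D FFT spectra of the prediction and the ground truth with an L1 distance. The flag compare_complex (a hypothetical parameter) switches between comparing amplitudes only and comparing the complex coefficients, which also penalizes phase (that is, positional) errors.

```python
import numpy as np


def frequency_loss(pred, target, compare_complex=False):
    """L1 distance between orthonormal 2-D FFTs of pred and target.

    compare_complex=False compares amplitude spectra only (phase ignored);
    compare_complex=True compares complex coefficients (amplitude and phase).
    """
    f_pred = np.fft.fft2(np.asarray(pred, dtype=np.float64), norm="ortho")
    f_target = np.fft.fft2(np.asarray(target, dtype=np.float64), norm="ortho")
    if compare_complex:
        return float(np.mean(np.abs(f_pred - f_target)))
    return float(np.mean(np.abs(np.abs(f_pred) - np.abs(f_target))))


rng = np.random.default_rng(0)
img = rng.random((32, 32))
shifted = np.roll(img, 3, axis=1)       # circular shift: same amplitudes, new phase
print(frequency_loss(shifted, img))                         # near zero
print(frequency_loss(shifted, img, compare_complex=True))   # clearly > 0
```

An amplitude-only term is blind to circular shifts, which is one reason such a term is usually paired with pixel-domain terms that do constrain where structures appear.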

2.5. Implementation Details and Evaluation Metrics

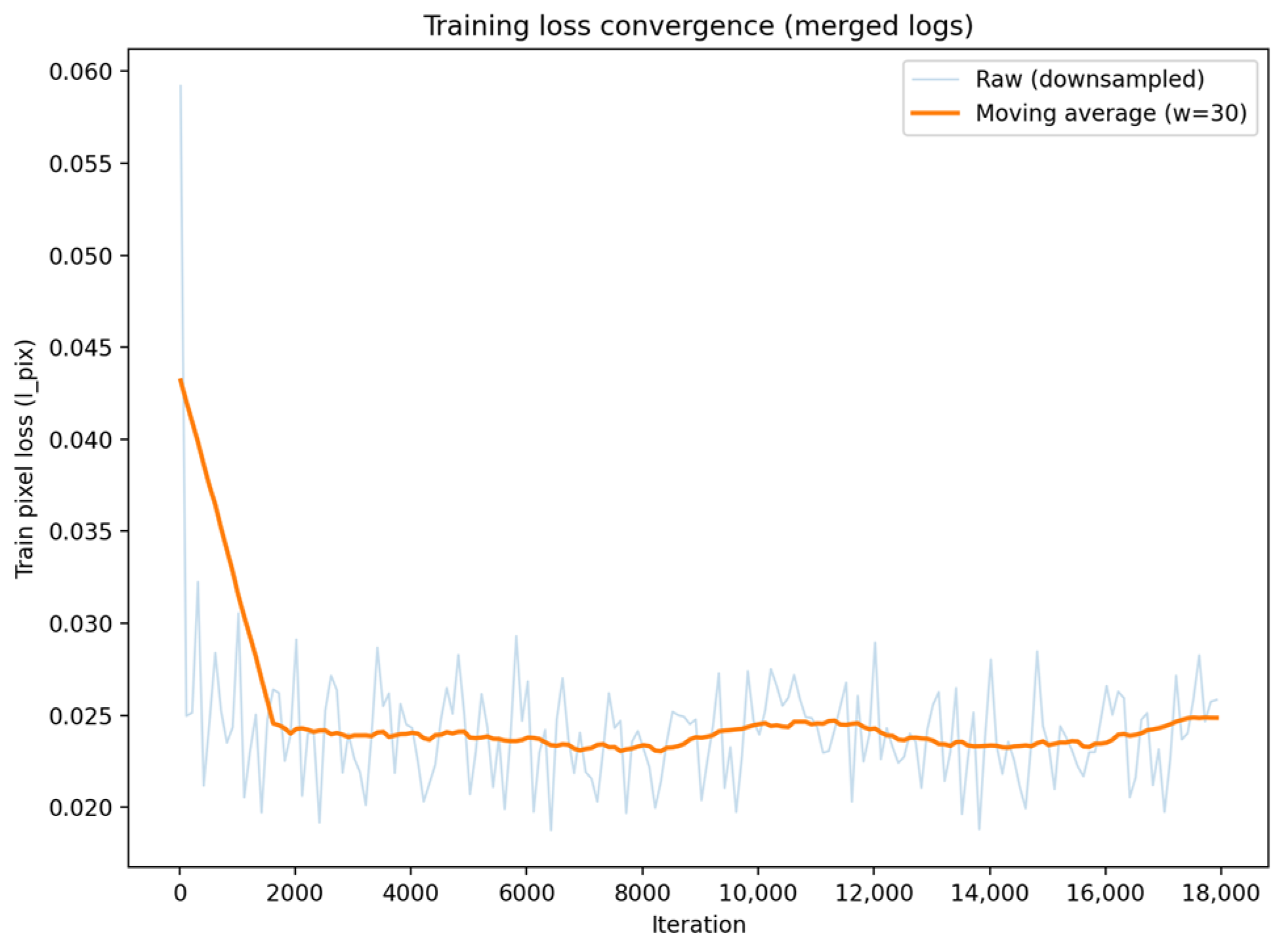

2.5.1. Experimental Setup

2.5.2. Evaluation Metrics
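PSNR, reported in dB in the results table, is 10·log10(MAX²/MSE). In this sketch, data_range plays the role of MAX; whether evaluation uses [0, 1]-normalized images or raw 16-bit values (MAX = 65535) is not stated in this section, so both are shown. The function name psnr is illustrative.

```python
import numpy as np


def psnr(pred, target, data_range=1.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(data_range**2 / MSE)."""
    diff = np.asarray(pred, dtype=np.float64) - np.asarray(target, dtype=np.float64)
    mse = float(np.mean(diff ** 2))
    if mse == 0.0:
        return float("inf")                  # identical images
    return float(10.0 * np.log10(data_range ** 2 / mse))


gt = np.zeros((16, 16))
pred = np.full((16, 16), 0.1)                # MSE = 0.01 on a [0, 1] scale
print(psnr(pred, gt))                        # about 20 dB
print(psnr(pred * 65535, gt * 65535, data_range=65535.0))   # same value on a 16-bit scale
```

Because PSNR is logarithmic, each 10 dB gain corresponds to a tenfold reduction in MSE at a fixed data range, and the value is unchanged when both images and data_range are rescaled together.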

3. Results

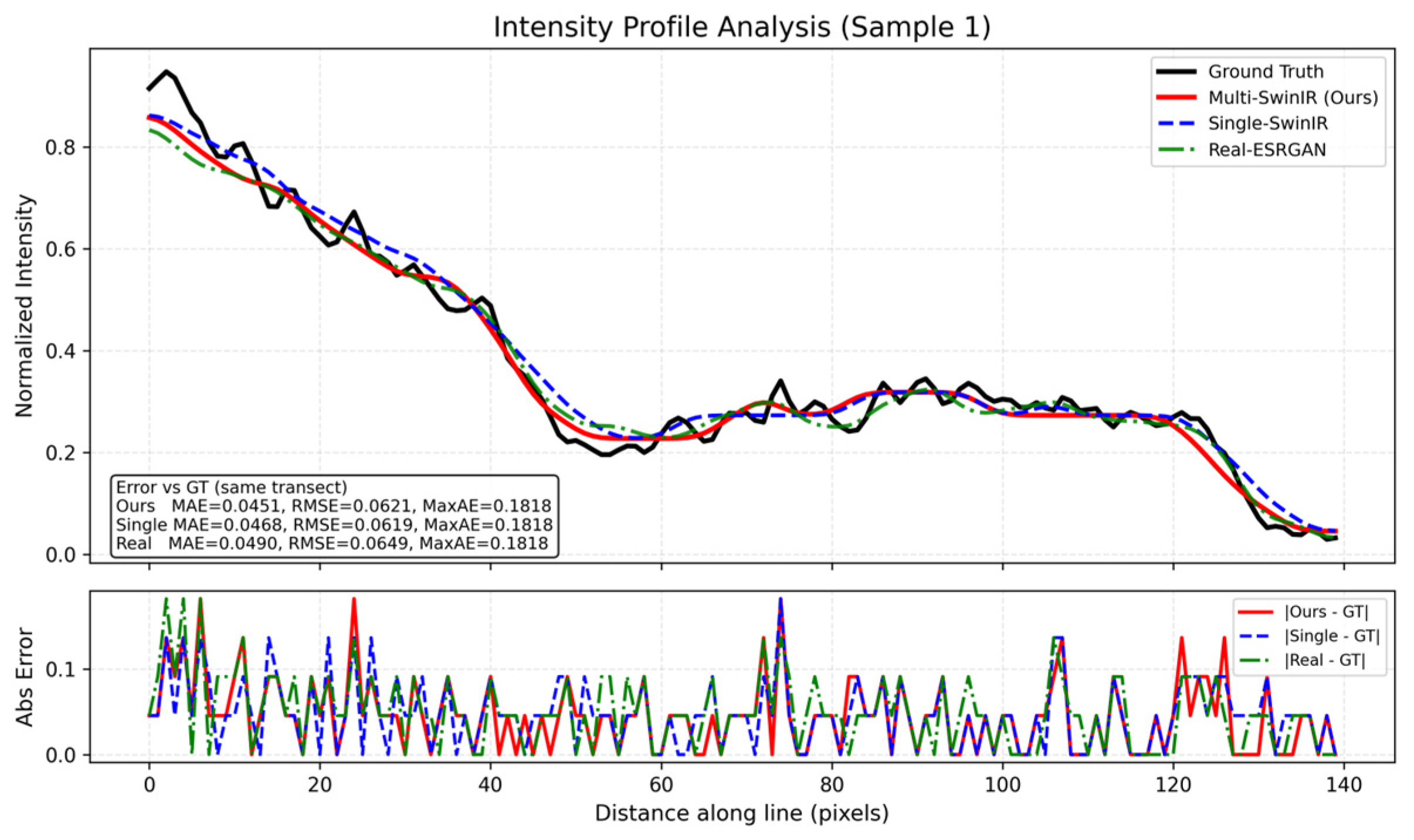

3.1. Quantitative Evaluation

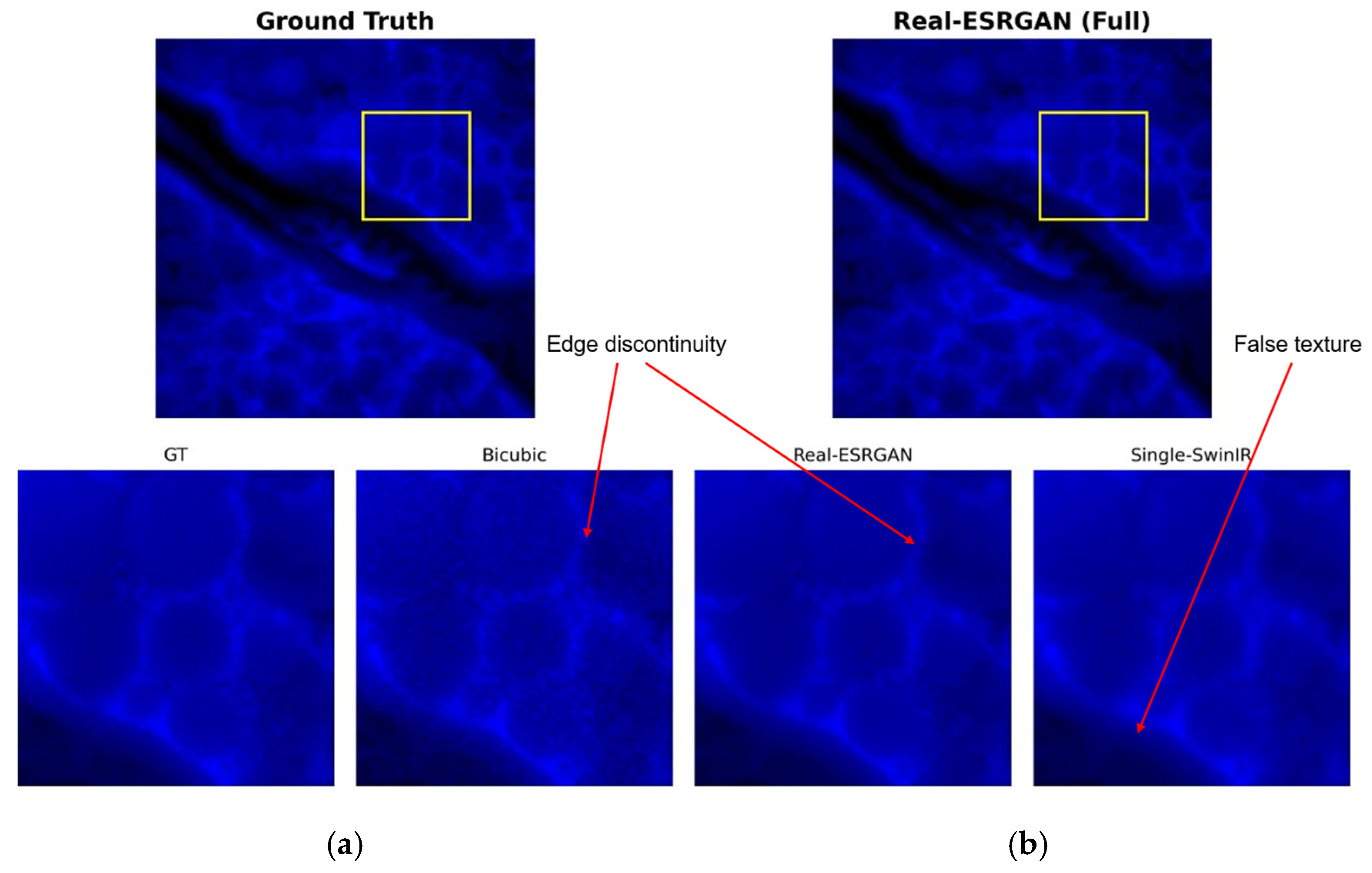

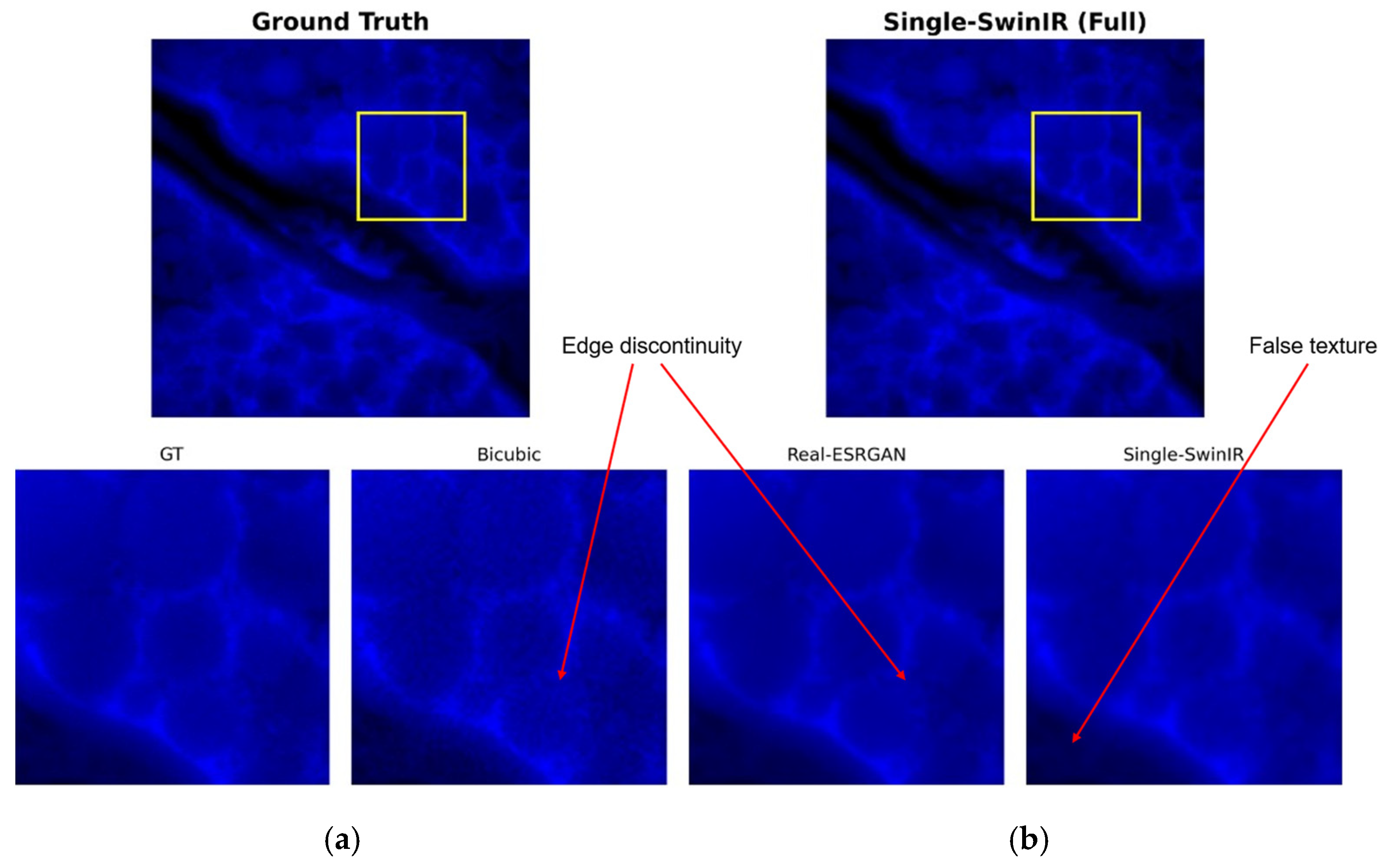

3.2. Analysis of Single-Channel Reconstruction Defects

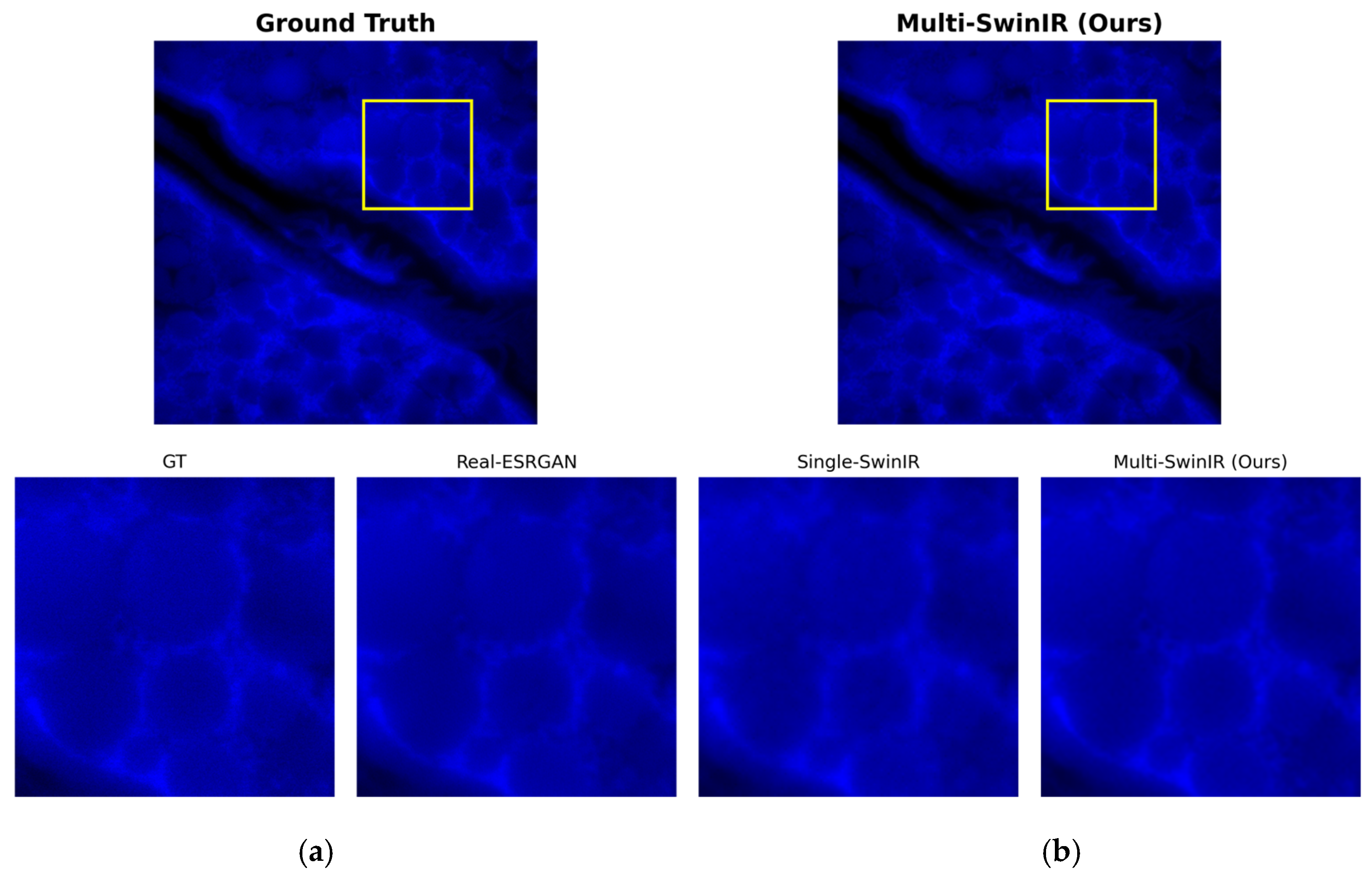

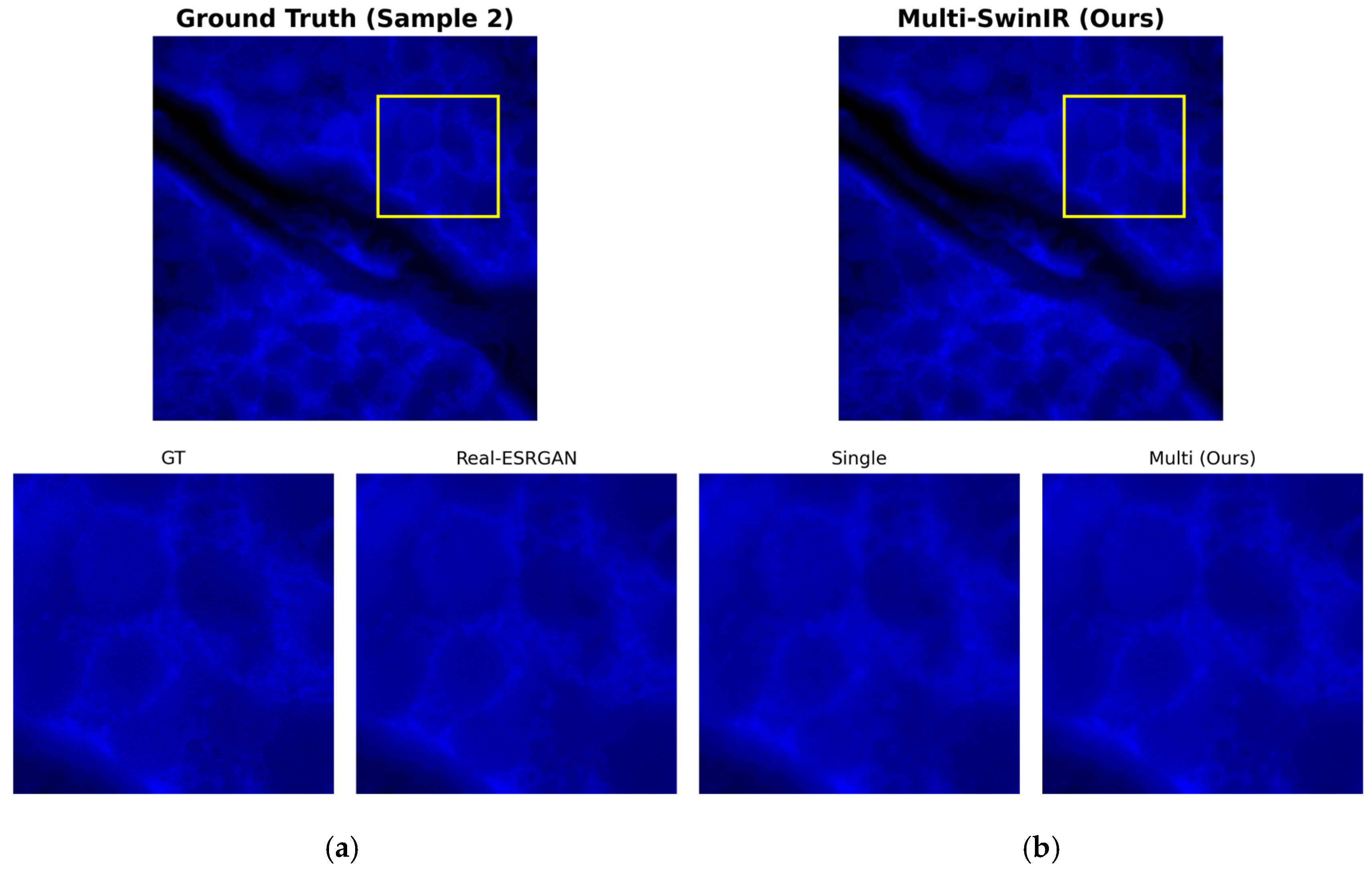

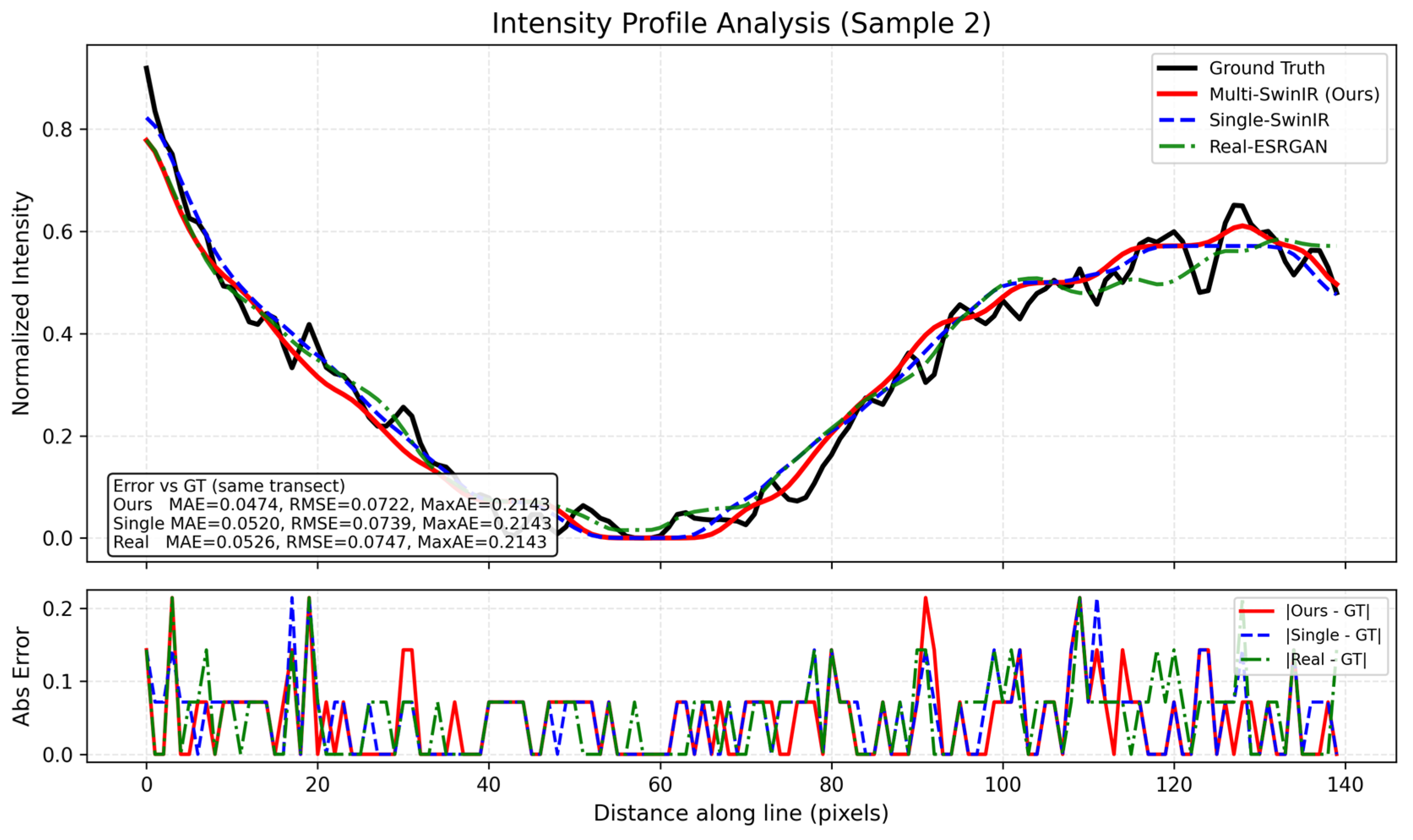

3.3. Dual-Channel Reconstruction Performance in Challenging Scenarios

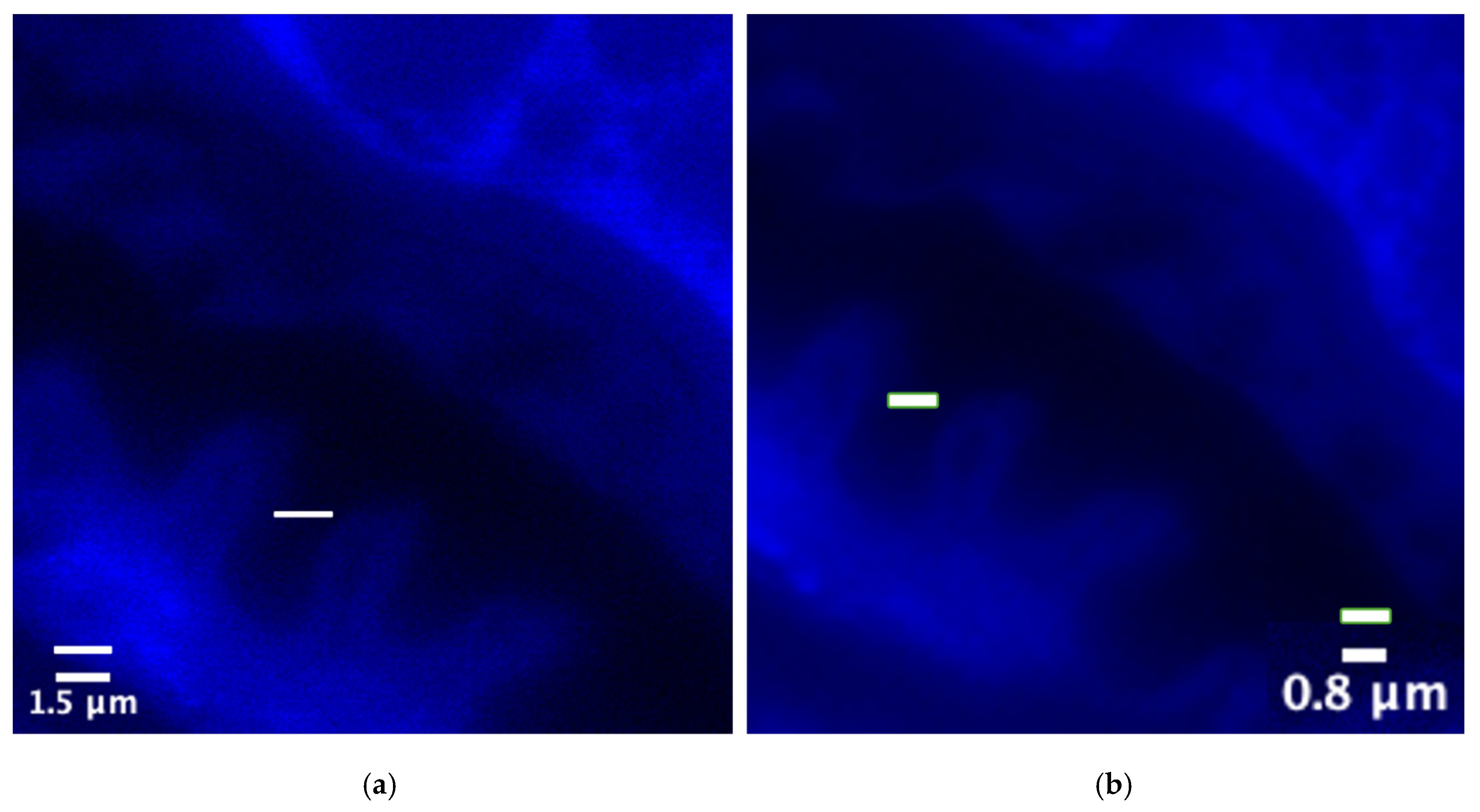

3.4. Improvement in Minimum Resolvable Structure Size

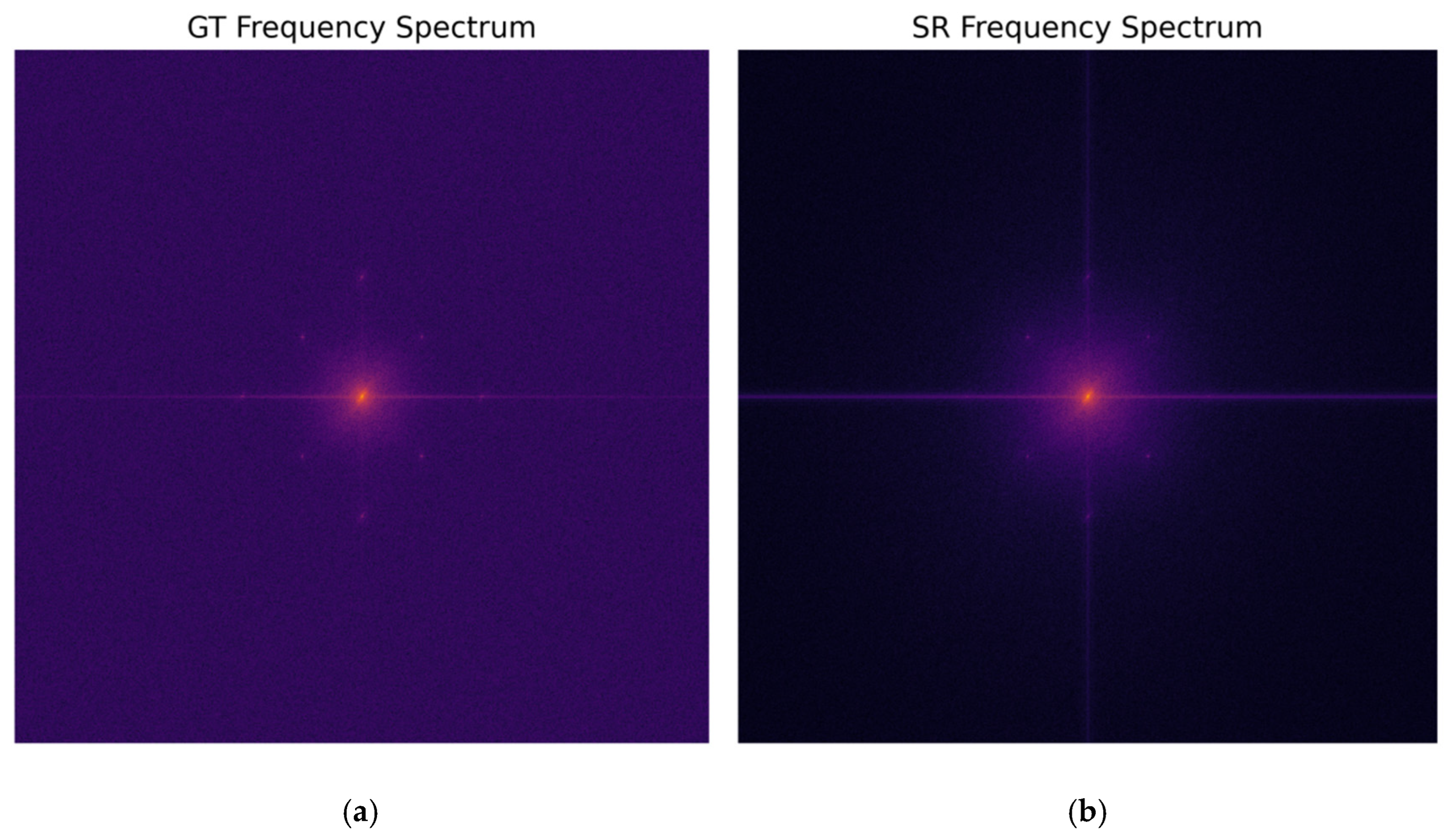

3.5. Validation of Frequency-Domain Consistency
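One common way to inspect frequency-domain consistency (an assumption here; the procedure is not specified in this section) is to compare radially averaged power spectra of the SR output and the ground truth. The NumPy sketch below bins |FFT|² by integer radial frequency; a reconstruction that loses fine detail shows reduced power in the high-radius bins. The function name radial_power_spectrum and the box-blur stand-in are hypothetical.

```python
import numpy as np


def radial_power_spectrum(img):
    """Mean |FFT|^2 in integer-radius rings around the (shifted) zero frequency."""
    img = np.asarray(img, dtype=np.float64)
    h, w = img.shape
    power = np.abs(np.fft.fftshift(np.fft.fft2(img))) ** 2
    yy, xx = np.indices((h, w))
    radius = np.hypot(yy - h // 2, xx - w // 2).astype(int)   # ring index per pixel
    sums = np.bincount(radius.ravel(), weights=power.ravel())
    counts = np.bincount(radius.ravel())
    return sums / np.maximum(counts, 1)       # guard against empty rings


rng = np.random.default_rng(0)
sharp = rng.random((64, 64))
# 2x2 circular box blur: a low-pass filter standing in for a detail-losing reconstruction
blurred = (sharp + np.roll(sharp, 1, 0) + np.roll(sharp, 1, 1)
           + np.roll(np.roll(sharp, 1, 0), 1, 1)) / 4.0
p_sharp, p_blur = radial_power_spectrum(sharp), radial_power_spectrum(blurred)
print(p_sharp[-10:].mean() > p_blur[-10:].mean())   # True: high frequencies attenuated
```

In practice the spectra are often plotted on a logarithmic scale, and the curves of the SR output and the ground truth are compared bin by bin.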

4. Discussion

4.1. Mechanism: Cross-Channel Information Compensation via Structural Priors

4.2. Physics: Overcoming the Ill-Posed Nature of Short-Wavelength Imaging

4.3. Improving Effective Resolution Under Low-SNR Conditions

4.4. Limitations and Future Scope

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| Adam | Adaptive Moment Estimation |
| CNN | Convolutional Neural Network |
| DAPI | 4′,6-diamidino-2-phenylindole |
| FFT | Fast Fourier Transform |
| FITC | Fluorescein isothiocyanate |
| GT | Ground Truth |
| HR | High Resolution |
| LR | Low Resolution |
| MSE | Mean Squared Error |
| PSNR | Peak Signal-to-Noise Ratio |
| Q/K/V | Query/Key/Value |
| ROI | Region of Interest |
| RSTB | Residual Swin Transformer Block |
| SIM | Structured Illumination Microscopy |
| SNR | Signal-to-Noise Ratio |
| SR | Super-Resolution |
| SSIM | Structural Similarity Index Measure |
| STL | Swin Transformer Layer |
| W-MSA | Window-Based Multi-Head Self-Attention |


| Input Type | Model | PSNR (dB, mean) | SSIM (mean) |
|---|---|---|---|
| Single-channel blue DAPI input | Bicubic interpolation | 30.79 | 0.799 |
| Single-channel blue DAPI input | Real-ESRGAN | 25.43 | 0.742 |
| Single-channel blue DAPI input | SwinIR-1channel (baseline) | 27.05 | 0.763 |
| Multi-channel blue DAPI with green FITC input | SwinIR-2channel | 44.98 | 0.960 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.


Huang, H.; Abbas, H. Cross-Modality Guided Super-Resolution for Weak-Signal Fluorescence Imaging via a Multi-Channel SwinIR Framework. Electronics 2026, 15, 204. https://doi.org/10.3390/electronics15010204


Chicago/Turabian Style: Huang, Haoxuan, and Hasan Abbas. 2026. "Cross-Modality Guided Super-Resolution for Weak-Signal Fluorescence Imaging via a Multi-Channel SwinIR Framework." Electronics 15, no. 1: 204. https://doi.org/10.3390/electronics15010204
