Abstract

Image forgery detection, an essential technique for assessing image credibility, has advanced significantly in recent years. However, its performance still falls short of practical requirements. This is partly attributable to the limited availability of pixel-level annotated forgery samples and the insufficient exploitation of forgery traces. We address these issues from three aspects: training data, network design, and training strategy. For training data, we introduce iterative self-supervision, which generates a large collection of pixel-level labeled single or composite forgery samples through one or more rounds of random copy-move, splicing, and inpainting, alleviating the shortage of forgery samples. For network design, since characteristic anomalies are generally apparent at the boundary between authentic and forged regions, which often aligns with image edges, we propose a new edge-guided learning module to effectively capture forgery traces at image edges. For training strategy, we introduce progressive self-adversarial training, which dynamically generates adversarial samples by gradually increasing the frequency and intensity of adversarial training. This raises the detection difficulty, driving the detector to identify forgery traces in harder samples while keeping the computational cost low. Comprehensive experiments show that the proposed method surpasses the leading competing methods, improving image-level forgery identification by 6.6% (from 73.8% to 80.4% in average F1 score) and pixel-level forgery localization by 15.2% (from 59.1% to 74.3% in average F1 score).

1. Introduction

The rapid advancement of image editing technology, coupled with the widespread availability of low-cost imaging equipment, has significantly improved the quality and efficiency of image creation and editing. However, images containing misleading or harmful content can be used for illegal purposes, such as spreading fake news, interfering with political elections, extorting money, or undermining government credibility. To detect the malicious use and dissemination of fake images, image forgery detection has received extensive attention and developed rapidly in recent years.

Image forgery detection can typically be divided into image-level and pixel-level detection. Image-level detection determines whether a given image has been tampered with, essentially distinguishing between real and fake images. Pixel-level detection, also known as forgery localization, determines whether each pixel has been tampered with, segmenting the image into authentic and forged regions. In terms of versatility, detection methods are either specialized or universal. Specialized methods target specific types of forgery, such as copy-move [1,2], splicing [3,4], and inpainting [5,6]. Universal methods [7,8,9,10,11,12,13,14,15,16], in contrast, learn to detect traces common to various forgeries, enabling them to identify multiple forgery types. The proposed method is designed to detect multiple types of forgery and performs both image-level and pixel-level forgery detection.

Image forgery detection faces multiple challenges. First, while real (authentic) images are widely available in real-world scenarios, acquiring forged images with pixel-level annotations is costly, leading to the relative scarcity of forged samples in terms of both image-level and pixel-level quantity (i.e., there are fewer fake images and pixels compared to real ones). Second, the boundaries between real and forged regions often coincide with the image edges, making it extremely challenging to learn genuine forgery traces from these edges. Third, detectors often overfit to specific yet non-essential forgery traces during training, which can impair the performance of forgery detection.

We address the above challenges from three aspects: training data, network module design, and training strategy. For training data, we propose an improved self-supervised approach for generating both single and multiple forged images, effectively mitigating the shortage of forged samples. For network module design, we propose a simple yet effective multi-scale edge-guided artifacts capturing (MEAC) module that learns forgery traces from image edges, enhancing detection accuracy. For the training strategy, we propose a progressive self-adversarial training approach that encourages the detector to learn more distinctive features from increasingly difficult adversarial samples, while adjusting the frequency and intensity of adversarial training to avoid excessive computational demands. The main contributions of this paper are summarized as follows.

- We propose an iterative self-supervised forgery image generation approach that produces diverse forged images by iteratively applying random splicing, copy-move, or inpainting. This approach not only increases the number of forged samples, mitigating the pixel-level label imbalance in which forged pixels are far fewer than real ones, but also enriches the diversity of forged samples through single and multiple tampering.

- We design a simple yet effective multi-scale edge-guided artifacts capturing (MEAC) module to characterize forgery artifacts at the edges of image content. The basic idea is that the transition zones between authentic and forged regions place inconsistent artifacts in close proximity, and this sharp contrast makes forgery traces easier to reveal. Based on this idea, we first use an edge extractor such as the Laplacian operator to generate multi-scale edge maps of the image. These maps guide shallow stacked convolutional layers in capturing the forgery artifacts. The parameters of the MEAC module are optimized with a multi-scale edge loss.

- We propose a progressive self-adversarial training strategy that intersperses the adversarial training stage within the standard training stage. This approach mitigates the issue of forgery detectors excessively focusing on specific traces, which can impair detection accuracy. The frequency and intensity of adversarial training gradually increase: in the early stages, when the detector’s capability is limited, both frequency and intensity are low, allowing the detector to focus on learning forgery traces. As the detector’s capabilities improve, both the frequency and intensity of the adversarial training are gradually raised, enabling the detector to learn from more difficult adversarial samples and thereby facilitating the learning of implicit forgery traces, enhancing detection performance. Unlike the self-adversarial training in [11], which fixes a high frequency for self-adversarial training and thus incurs an extremely high computational cost, our progressive self-adversarial strategy allows for more flexible adjustments of adversarial frequency and intensity, with a lower computational cost.

- We conduct extensive experiments including ablation studies and comparisons in both image-level and pixel-level forgery detection, validating the effectiveness and superiority of the proposed method over the compared methods in both detection accuracy and robustness against post-processing.

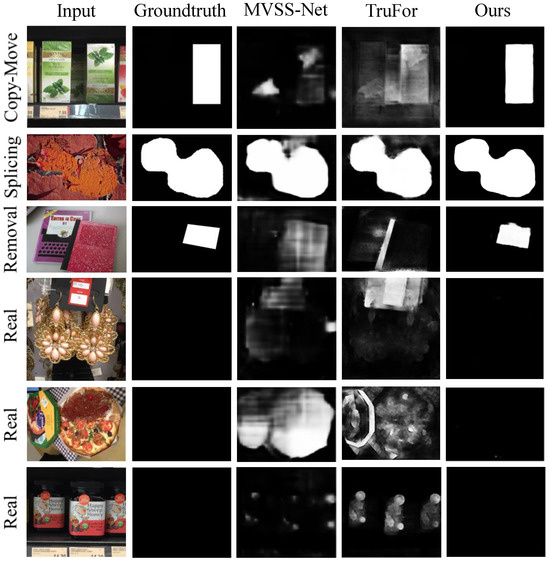

Figure 1 exemplifies the pixel-level forgery detection performance of the proposed method compared to MVSS-Net [13] and TruFor [17] on authentic images and forged images by copy-move, splicing, and inpainting (removal). It is evident that the proposed method precisely locates the forged regions and has a lower false alarm rate on authentic images.

Figure 1.

Demonstration of the proposed method’s performance on the pixel-level forgery detection compared with MVSS-Net [13] and TruFor [17]. The proposed method precisely locates the forged regions and has a lower false alarm rate on authentic images.

2. Related Works

2.1. Specialized Forgery Detection

Image forgery takes three basic forms: copy-move, splicing, and inpainting (also known as removal). Each introduces characteristic, detectable artifacts. In copy-move forgery, a region of an image is copied and pasted to another location within the same image, creating two highly similar regions, which serve as a critical clue for copy-move detection [1,2,18,19]. Splicing pastes one or more regions from source image(s) onto a target image, so the result contains regions originating from two or more different images. Disparities in camera fingerprints, compression quality, and blur level between these regions can be characterized to detect splicing [3,4]. Inpainting forgery fills a region to be removed using content from its neighborhood, thereby eliminating the original characteristics and introducing a high correlation with surrounding regions [5,6]. Generally, prior knowledge of a specific forgery method aids in accurately detecting that forgery, but it may limit adaptability to other forgery methods.

2.2. Universal Forgery Detection

In recent years, considerable effort has been devoted to universal forgery detection. Table 1 lists representative works and their attributes: publication venue, whether real images are used during training, whether self-supervised data augmentation (Self-sup.) or self-adversarial training (Self-adv.) is used, the terms in their loss functions (pixel-level, edge-level, and image-level loss), and the forensic task(s) addressed (forgery detection and/or localization).

Table 1.

A concise summary of the existing representative methods for forgery detection and localization. ✓ indicates available; ✗ indicates unavailable.

ManTra-Net [15] integrates SRM [21], BayarConv [22], and Conv2D layers, followed by a fully connected module, to improve detection and localization performance across various types of forgery. However, its detection accuracy declines sharply as JPEG compression strength increases. SPAN (spatial pyramid attention network) [12] extends ManTra-Net's architecture, further modeling spatial correlation through self-attention blocks and pyramid propagation. Noiseprint [10] approaches tampering detection from a different angle, learning camera-model fingerprints to detect low-level artifacts; this line of work was later extended by TruFor [17], a Transformer-based fusion architecture that extracts both high-level and low-level traces. CR-CNN [9] proposes a coarse-to-fine architecture, Constrained R-CNN, which uses constrained convolution to learn manipulation cues directly from data for complete and accurate image forensics. MVSS-Net [13] uses the Sobel operator to extract edge features from the outputs of network blocks and proposes a two-branch architecture with an edge supervision block (ESB) and noise supervision, combining pixel-level, edge-level, and image-level losses to learn tampering traces. PSCC-Net [8] uses multi-scale features and a progressive mechanism for image forgery detection. SATFL [11] introduces a self-adversarial training strategy to forgery detection, dynamically augmenting training data to improve model robustness. CAT-Net [7] proposes a CNN that learns the distribution of discrete cosine transform (DCT) coefficients to classify and locate tampered regions, and is significantly more robust to JPEG compression than conventional deep neural network-based approaches. Wu et al. [16] propose IF-OSN, consisting of a network that approximates the noise introduced by social platforms and a tamper detection network built on the spatial and channel squeeze-and-excitation (SCSE) module; both use a U-Net-like architecture and are robust to images transmitted through social media. ObjectFormer [14] leverages a Vision Transformer (ViT) for precise image tampering detection and localization. IML-ViT [20] verifies the importance of three core elements of image tampering detection: high resolution, multi-scale features, and edge supervision. It also demonstrates the effectiveness of self-attention in capturing non-semantic artifacts.

As Table 1 shows, only three methods [13,14,17] perform image-level forgery detection; the other methods perform forgery localization only on fake images, so the detector learns only to locate forgeries. As a result, these detectors tend to segment every image into real and fake regions, producing many false positives on real images. Moreover, most of these methods use neither self-supervised data augmentation nor self-adversarial training, which may limit their detection performance. In addition, forgery edge features are an important clue that should be fully exploited, yet only MVSS-Net uses supervision at all three levels (pixel, edge, and image), while the other methods use only one or two loss functions. Our method uses all three levels of supervision together with both training enhancement strategies, and performs forgery detection at both the image and pixel levels.

3. Proposed Method

We start by presenting the overall framework of the proposed method, followed by detailed descriptions of its important components, including iterative self-supervised forgery image generation, the proposed multi-scale edge artifact capturing module, the progressive self-adversarial training strategy, and the loss function.

3.1. The Overall Framework

Figure 2 shows the overall framework of the proposed method, which has five components. Iterative self-supervised forgery image generation provides a diverse range of single and multiple forgery samples for training the forgery detector. The forgery detection network has a single-encoder, dual-decoder architecture. The encoder extracts multi-scale features from the input image. One decoder predicts a pixel-level forgery mask, while the other, the edge decoder, applies edge detection operators to derive edge information from the image and, guided by this information, predicts an edge mask through shallow stacked convolutional layers. Direct connections between the encoder, the decoder, and the edge decoder at the same scale enhance the integration of low-level and high-level features, allowing the network to attend to both global information and local details in the image.

Figure 2.

The overall framework of the proposed method.

The encoder uses ConvNeXt V2 [23] as its backbone because of its robust and stable feature extraction capabilities, which allow it to learn forgery traces effectively for precise detection and localization. ConvNeXt V2 incorporates a global response normalization (GRN) layer, which boosts the network's discriminative power and field of view and facilitates more effective processing of detailed and global information in feature maps. The encoder's backbone block depicted in Figure 2 follows the design in [23].
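To make the GRN step concrete, the following NumPy sketch mirrors the GRN formulation published with ConvNeXt V2 [23] for a channels-last feature map: each channel's L2 norm over all spatial positions is divided by the mean of these norms across channels, and the result rescales the input through a learnable residual. The names `gamma` and `beta` follow the ConvNeXt V2 reference code; this is an illustration of the normalization only, not the encoder used in this work.

```python
import numpy as np

def global_response_norm(x, gamma, beta, eps=1e-6):
    """GRN on a channels-last feature map x of shape (H, W, C).

    gamma and beta are learnable per-channel vectors of shape (C,).
    """
    # Global feature aggregation: L2 norm of each channel over all spatial positions.
    gx = np.sqrt((x ** 2).sum(axis=(0, 1), keepdims=True))  # shape (1, 1, C)
    # Divisive normalization: relate each channel's response to the mean response.
    nx = gx / (gx.mean(axis=-1, keepdims=True) + eps)        # shape (1, 1, C)
    # Feature calibration with a residual connection back to the input.
    return gamma * (x * nx) + beta + x
```

Because ConvNeXt V2 initializes `gamma` and `beta` to zero, GRN starts out as an identity mapping and learns how strongly to recalibrate channels during training.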

The decoder and the edge decoder have similar structures. The decoder is built on UperNet [24], which effectively combines a feature pyramid network (FPN) and a pyramid pooling module (PPM) to decode the prediction mask from the collected multi-scale features. For the edge decoder, we developed the multi-scale edge artifact capturing (MEAC) module to detect underlying forgery traces under the guidance of image edges. The features extracted by MEAC at different scales are concatenated channel-wise and convolved to produce the edge mask prediction. Finally, the forgery edge predictions at each scale, together with the fused edge prediction, are all used to compute the edge loss.
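The fusion step described above (channel-wise concatenation followed by a convolution) can be sketched as follows. This is a minimal illustration under stated assumptions: the per-scale MEAC outputs are taken to have strides 4, 8, 16, and 32 (a hypothetical choice matching a four-stage encoder), they are resized to the finest scale by nearest-neighbour upsampling, and the fusing convolution is assumed to be 1×1, written as a matrix product. The weight `w` and bias `b` stand in for learned parameters; the function names are hypothetical.

```python
import numpy as np

def upsample_nearest(feat, factor):
    """Nearest-neighbour upsampling of a (C, H, W) map by an integer factor."""
    return feat.repeat(factor, axis=1).repeat(factor, axis=2)

def fuse_edge_features(feats, strides, w, b):
    """Concatenate multi-scale (C_i, H_i, W_i) maps and apply a 1x1 convolution.

    feats[i] has stride strides[i]; all maps are resized to the finest stride.
    w has shape (C_out, sum(C_i)) and b has shape (C_out,). Returns logits.
    """
    finest = min(strides)
    resized = [upsample_nearest(f, s // finest) for f, s in zip(feats, strides)]
    stacked = np.concatenate(resized, axis=0)             # (sum C_i, H, W)
    # A 1x1 convolution mixes channels independently at every pixel.
    return np.einsum("oc,chw->ohw", w, stacked) + b[:, None, None]
```

Applying a sigmoid to the returned logits would give the fused edge-mask probabilities.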

3.2. Iterative Self-Supervised Forgery Image Generation

Table A1 reveals an obvious imbalance between the numbers of forged and authentic pixels. Real images are widely available, yet acquiring pixel-level annotated forged samples is expensive, which limits the precision of forgery detection. To address this issue, we propose an iterative self-supervised method for generating forged images, as described in Algorithm 1.

This method uses copy-move, splicing, and inpainting as its three primary forgery operations. Given the number of iterations k, we randomly select a forged image and its corresponding forgery mask from an existing dataset. Each iteration applies a randomly chosen operation to the image produced by the previous iteration, and the process continues until k iterations have been performed. Self-supervision here means that the pixel-level mask of each generated sample is inferred automatically from the input forged image and its mask, without manual annotation. This approach not only yields a variety of single, double, and multiple forgery samples of different forgery types, but also substantially increases the number of forged pixels, helping to mitigate the impact of the severe imbalance between authentic and forged pixels on forgery localization.

Algorithm 1. Iterative Self-Supervised Forgery Image Generation

Require: A forgery image I and the number of iterations k.
Ensure: The generated forgery image.

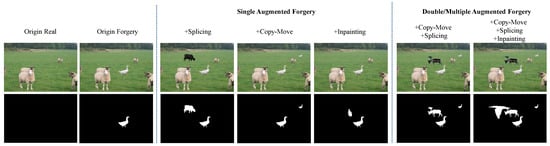

Figure 3 illustrates the generation of progressively complex forged samples over three iterations. The two leftmost columns present real and forged images originally taken from an existing dataset, with the latter showing one instance of splicing forgery. After the first iteration, single augmented forgeries are created, adding an extra forgery to the original splicing, resulting in double splicing forgeries, splicing combined with copy-move forgery, and splicing combined with inpainting forgery, respectively. As the iterations advance, increasingly diverse and sophisticated forgery samples emerge, such as double or multiple augmented forgeries. For a mathematical analysis of the relationship between sample scale and classification performance, please refer to [25]. These augmented forged samples can significantly improve the performance of forgery detection, as evidenced in the subsequent ablation study.

Figure 3.

Examples of single, double, and multiple forgeries created by iterative self-supervised forgery image generation.
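The iterative generation loop can be sketched in NumPy as below. This is a simplified illustration, not the authors' implementation: all function names are hypothetical, forged regions are random rectangles, splicing donors are assumed to have the same size as the target, inpainting is replaced by a crude mean-colour fill (a real pipeline would use an actual inpainting method, for example OpenCV's `cv2.inpaint`), and the new mask is assumed to be the union of the previous mask and the newly modified region.

```python
import numpy as np

def _random_box(rng, h, w, min_frac=0.1, max_frac=0.3):
    """Sample a rectangle (y, x, bh, bw) that fits inside an h x w image."""
    bh = max(1, int(h * rng.uniform(min_frac, max_frac)))
    bw = max(1, int(w * rng.uniform(min_frac, max_frac)))
    y = int(rng.integers(0, h - bh + 1))
    x = int(rng.integers(0, w - bw + 1))
    return y, x, bh, bw

def copy_move(img, mask, rng):
    h, w = mask.shape
    y, x, bh, bw = _random_box(rng, h, w)
    y2, x2 = int(rng.integers(0, h - bh + 1)), int(rng.integers(0, w - bw + 1))
    img[y2:y2 + bh, x2:x2 + bw] = img[y:y + bh, x:x + bw].copy()
    mask[y2:y2 + bh, x2:x2 + bw] = True  # the pasted target region is forged

def splice(img, mask, donor, rng):
    h, w = mask.shape  # assumes donor has the same spatial size as img
    y, x, bh, bw = _random_box(rng, h, w)
    y2, x2 = int(rng.integers(0, h - bh + 1)), int(rng.integers(0, w - bw + 1))
    img[y2:y2 + bh, x2:x2 + bw] = donor[y:y + bh, x:x + bw]
    mask[y2:y2 + bh, x2:x2 + bw] = True

def inpaint(img, mask, rng):
    h, w = mask.shape
    y, x, bh, bw = _random_box(rng, h, w)
    # Crude stand-in for inpainting: fill with the mean colour outside the box.
    outside = np.ones((h, w), dtype=bool)
    outside[y:y + bh, x:x + bw] = False
    img[y:y + bh, x:x + bw] = img[outside].mean(axis=0)
    mask[y:y + bh, x:x + bw] = True

def generate_forgery(img, mask, k, donors, rng):
    """Apply k rounds of random copy-move, splicing, or inpainting.

    img: (H, W, 3) float array; mask: (H, W) bool array (True = forged).
    The returned mask is derived automatically, without manual labelling.
    """
    img, mask = img.copy(), mask.astype(bool).copy()
    for _ in range(k):
        op = int(rng.integers(0, 3))
        if op == 0:
            copy_move(img, mask, rng)
        elif op == 1:
            splice(img, mask, donors[int(rng.integers(0, len(donors)))], rng)
        else:
            inpaint(img, mask, rng)
    return img, mask
```

Each round builds on the output of the previous one, so k = 1 yields single augmented forgeries on top of the original manipulation and larger k yields the double and multiple forgeries shown in Figure 3.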

3.3. Multi-Scale Edge Artifact Capturing Module

Statistical inconsistency is a key clue in image forgery detection. Within a real or forged region, statistical consistency is generally well maintained; at the transition zones between real and forged regions, however, statistical inconsistency becomes pronounced, which helps expose forgery traces. Based on this idea, we designed the Multi-Scale Edge Artifact Capturing (MEAC) module, whose structure is shown in the dashed box in the lower left corner of Figure 2. It first detects edges with the Laplacian operator, and the resulting edge maps guide the subsequent convolutional layers in discovering forgery traces. The Laplacian is a second-order differential operator and is therefore more sensitive to subtle intensity variations and high-frequency signals than first-order gradient operators such as Sobel. It can detect fine-grained, weak, and non-directional edges, making it well suited to the smooth, irregular transitions that frequently occur at the boundaries of tampered regions, especially in local manipulations. Unlike directional operators such as Sobel, which emphasize edge orientation, the Laplacian is omnidirectional and better exposes inconsistent or noisy edge patterns, which are critical for accurate forgery localization. Because forgery traces are low-level anomalies, and to save computational resources, the subsequent convolutional layers use a shallow stacked structure, which filters and refines the responses of the edge operator to discern genuine forgery edges. The predicted edge masks at multiple scales and the fused edge mask are then compared with the ground-truth forgery edges to optimize the learnable parameters of the MEAC module.
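The edge-extraction step can be illustrated with the standard 4-neighbour Laplacian kernel applied to a grayscale image at several scales. In this sketch the scales are obtained by average pooling with strides 4, 8, 16, and 32, a hypothetical choice that matches a four-stage encoder; the text above does not specify how the multi-scale maps are produced, and the learnable convolutional layers that follow the edge operator are omitted.

```python
import numpy as np

# Standard 4-neighbour discrete Laplacian (second-order, omnidirectional).
LAPLACIAN = np.array([[0, 1, 0], [1, -4, 1], [0, 1, 0]], dtype=np.float64)

def laplacian(gray):
    """Apply the 3x3 Laplacian to a 2-D array with edge padding."""
    h, w = gray.shape
    p = np.pad(gray.astype(np.float64), 1, mode="edge")
    out = np.zeros((h, w), dtype=np.float64)
    for dy in range(3):
        for dx in range(3):
            out += LAPLACIAN[dy, dx] * p[dy:dy + h, dx:dx + w]
    return out

def avg_pool(gray, f):
    """Non-overlapping f x f average pooling (trailing rows/cols are dropped)."""
    h2, w2 = gray.shape[0] // f, gray.shape[1] // f
    return gray[:h2 * f, :w2 * f].reshape(h2, f, w2, f).mean(axis=(1, 3))

def multiscale_edges(gray, strides=(4, 8, 16, 32)):
    """Absolute Laplacian responses at each (hypothetical) encoder stride."""
    return [np.abs(laplacian(avg_pool(gray, s))) for s in strides]
```

A flat region produces a zero response, while an abrupt intensity change produces a non-zero response on both sides of the transition, which is the cue the shallow convolutional layers are meant to refine.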

3.4. Progressive Self-Adversarial Training

Adversarial samples are intentionally crafted to mislead targeted detectors into making erroneous decisions. During the training of forgery detectors, adversarial samples created based on the current model parameters of the detectors are integrated into the training process. Adversarial training helps toughen the detectors under more challenging conditions, enabling them to learn better forgery detection features. The previous work SATFL [11] investigated the benefits of alternating between standard and adversarial training from the beginning, which improves detection accuracy but also significantly raises computational demands. Starting adversarial training too early, when the detector’s ability to identify forgeries is still underdeveloped, can impede the detector’s progress. As the detector’s performance in identifying forgeries advances, it is advantageous to gradually increase the frequency and intensity of adversarial training to boost the detector’s performance.

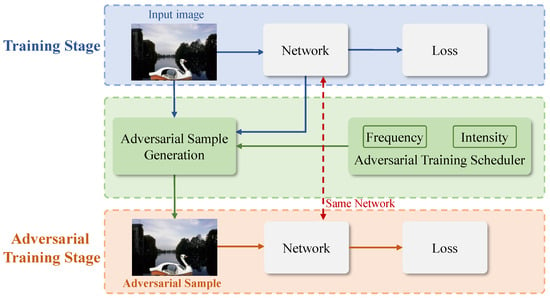

Based on these ideas, we propose a progressive self-adversarial training strategy, as depicted in Figure 4. This strategy consists of two phases: a training phase and an adversarial training phase. During the training phase, the network model is trained with samples from the training set. In the adversarial training phase, adversarial samples are created using the samples from the training set, combined with the current detector parameters, under the control of the Adversarial Training Scheduler. The Adversarial Training Scheduler acts to progressively increase the frequency and intensity of adversarial training, allowing the forgery detector to improve its performance against increasingly difficult samples.

Figure 4.

Progressive self-adversarial training.

In this paper, the FGSM [26] method is used for generating adversarial samples. The frequency of adversarial training is defined as:

where k denotes the number of training stages, i denotes the current training stage number (starting from 0), t denotes the current epoch number, T denotes the total number of epochs in the entire training process, is a hyperparameter controlling the interval between two self-adversarial training iterations, and means that, for every standard iterations in stage i, one self-adversarial training iteration is performed.

In our setting (, , ), the four-stage frequencies are as follows:

- Stage 0 (Epoch 0–4): (i.e., one self-adversarial training iteration is inserted after every 5 standard training batches);

- Stage 1 (Epoch 5–9): ;

- Stage 2 (Epoch 10–14): ;

- Stage 3 (Epoch 15–19): .

Taking the above setting as an example, the regular training consists of 20 epochs, while the additional training introduced by progressive self-adversarial training is epochs. Compared with the SATFL [11] training scheme (where one adversarial training epoch is added after each standard training epoch, leading to 20 additional training epochs), our progressive self-adversarial scheme reduces the number of training epochs by about .
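The scheduling logic can be sketched as follows. The sketch assumes that the T epochs are split into k equal stages and that stage i inserts one self-adversarial iteration after every `intervals[i]` standard batches; only the Stage 0 interval of 5 comes from the setting above, so the function takes the per-stage intervals as an argument. The cost estimate is a simplification that counts one adversarial iteration as one standard iteration, ignoring the extra forward and backward pass that FGSM needs to craft each sample. All names are hypothetical.

```python
def stage_index(t, T, k):
    """Stage of epoch t (0-based) when T epochs are split into k equal stages."""
    return min(k - 1, (t * k) // T)

def is_adversarial_step(batch_idx, interval):
    """True after every `interval` standard batches (batch_idx is 0-based)."""
    return (batch_idx + 1) % interval == 0

def extra_epoch_cost(T, k, intervals):
    """Adversarial iterations added, expressed in epochs of standard training."""
    return sum(1.0 / intervals[stage_index(t, T, k)] for t in range(T))
```

For example, with T = 20, k = 4, and a placeholder interval of 5 in every stage, `extra_epoch_cost(20, 4, (5, 5, 5, 5))` is about 4 extra epochs, compared with the 20 extra epochs of a scheme that adds one adversarial epoch after each standard epoch.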

The intensity of adversarial training is randomly sampled from the numerical range , whose upper limit gradually increases. The randomness prevents the detector from overfitting to adversarial perturbations of a specific intensity.

where denotes the intensity of adversarial training at epoch t, and is a hyperparameter controlling how quickly the adversarial intensity increases.
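FGSM [26] perturbs an input x by a single signed-gradient step, x_adv = x + ε · sign(∇_x L(x, y)). The sketch below applies it to a toy logistic-regression "detector" so that the input gradient of the BCE loss has the closed form (p − y)·w and no autodiff library is needed; in this work the attacked model is the full detection network. The `sample_intensity` helper draws ε uniformly from [0, ε_max(t)] with a linearly growing upper limit, which is a hypothetical schedule standing in for the growth rule above; `eps_cap` and `rate` are illustrative parameters.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def bce(p, y, eps=1e-7):
    p = np.clip(p, eps, 1 - eps)
    return float(-(y * np.log(p) + (1 - y) * np.log(1 - p)))

def fgsm_logistic(x, y, w, b, eps):
    """FGSM against a toy detector p = sigmoid(w . x + b) with BCE loss."""
    p = sigmoid(np.dot(w, x) + b)
    grad_x = (p - y) * w  # analytic input gradient of the BCE loss
    # One signed-gradient step of size eps, kept inside the valid pixel range.
    return np.clip(x + eps * np.sign(grad_x), 0.0, 1.0)

def sample_intensity(rng, t, T, eps_cap, rate):
    """Hypothetical schedule: eps ~ U(0, eps_max(t)), eps_max growing with t."""
    eps_max = eps_cap * min(1.0, rate * (t + 1) / T)
    return float(rng.uniform(0.0, eps_max))
```

Sampling ε from a range, rather than fixing it, reflects the stated goal of preventing the detector from overfitting to perturbations of a single intensity.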

3.5. Loss Function

The loss function is composed of image-level loss (classification loss), pixel-level loss (localization loss) and edge-level loss.

- The image-level loss is to drive the detector to accurately classify real and fake images. We use the binary cross-entropy loss for image-level loss, denoted by .

- The pixel-level loss measures forgery localization performance. It combines the Dice loss [27] and the binary cross-entropy (BCE) loss: $\mathcal{L}_{pix} = \mathcal{L}_{Dice} + \alpha\,\mathcal{L}_{BCE}$, where the BCE term serves an auxiliary role and is therefore weighted by the coefficient $\alpha$.

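- The edge-level loss measures the quality of edge prediction at each scale and of their fused version. It has the same form as the pixel-level loss, with one term per scale and a fusion term: $\mathcal{L}_{edge} = \sum_{s=1}^{S} \ell(\hat{E}_s, E_s) + \ell(\hat{E}_f, E)$, where $\ell$ denotes the combination of Dice loss and weighted BCE loss, $\hat{E}_s$ and $E_s$ are the predicted and ground-truth forgery edge maps at scale $s$, $\hat{E}_f$ is the fused edge prediction with ground truth $E$, and $S = 4$, corresponding to four edge maps at four scales.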
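The pixel-level and edge-level losses can be sketched in NumPy as follows. The BCE weight `alpha` and the Dice smoothing constant `smooth` are hypothetical parameters (their values are not given here), and the per-scale edge terms are assumed to be weighted equally.

```python
import numpy as np

def dice_loss(pred, target, smooth=1.0):
    """Soft Dice loss between a probability map and a binary mask."""
    inter = (pred * target).sum()
    return float(1.0 - (2.0 * inter + smooth) / (pred.sum() + target.sum() + smooth))

def bce_loss(pred, target, eps=1e-7):
    """Mean binary cross-entropy with clipping to avoid log(0)."""
    p = np.clip(pred, eps, 1.0 - eps)
    return float(-(target * np.log(p) + (1 - target) * np.log(1 - p)).mean())

def dice_bce_loss(pred, target, alpha):
    """Dice loss plus an auxiliary BCE term weighted by alpha."""
    return dice_loss(pred, target) + alpha * bce_loss(pred, target)

def edge_loss(scale_preds, scale_targets, fused_pred, fused_target, alpha):
    """Sum of per-scale Dice+BCE terms plus one term for the fused edge map."""
    per_scale = sum(dice_bce_loss(p, g, alpha) for p, g in zip(scale_preds, scale_targets))
    return per_scale + dice_bce_loss(fused_pred, fused_target, alpha)
```

The Dice term is insensitive to the large number of authentic pixels, which is why it carries the main weight under heavy class imbalance, while the BCE term adds a per-pixel gradient signal.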

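The total loss function is the weighted sum of the three losses: $\mathcal{L} = \lambda_{cls}\,\mathcal{L}_{cls} + \lambda_{pix}\,\mathcal{L}_{pix} + \lambda_{edge}\,\mathcal{L}_{edge}$, where $\mathcal{L}_{cls}$, $\mathcal{L}_{pix}$, and $\mathcal{L}_{edge}$ are the image-level, pixel-level, and edge-level losses, and the $\lambda$ terms are their weights.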

In practical scenarios, if a task places greater emphasis on image-level authenticity classification, the weight of the classification loss can be increased accordingly. Conversely, when precise localization of tampered regions is the priority, higher weights can be assigned to the pixel-level and edge-level loss terms.

In this work, however, we do not presume any specific application context. Our objective is balanced performance between image-level classification and pixel-level forgery localization, so we empirically assign equal weights to all three loss components. This setting facilitates stable training and consistently yields favorable results for both subtasks.

4. Experimental Evaluation

In this section, we evaluate our method at both the image level and the pixel level on four mainstream forgery detection datasets, compare it with existing methods, assess its robustness, and conduct ablation studies.

4.1. Experiment Setup

(1) Datasets: We use the same training dataset as CAT-Net v2 [7]. For testing, we employ four widely recognized public datasets: NIST (specifically NIST+, the same test set used by TruFor, containing both real and forged images), Coverage [28], Columbia [29], and CASIA v1+ [30,31] (which replaces the real images in CASIA v1, following MVSS-Net). These datasets cover three types of tampering operations: splicing, copy-move, and inpainting. There is no overlap between the training and test datasets.

(2) Evaluation metrics: We evaluate pixel-level localization with binary F1, Area Under the Receiver Operating Characteristic Curve (AUC), and Intersection over Union (IoU) scores. For image-level classification, we use binary F1, AUC, and accuracy (ACC). A standard threshold of 0.5 is used for the F1 metric.
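For reference, the metrics can be computed as in the following sketch. It binarizes scores at the 0.5 threshold (assuming scores at exactly 0.5 count as forged), computes F1 = 2TP / (2TP + FP + FN) and IoU = TP / (TP + FP + FN), and computes AUC as the probability that a random positive scores higher than a random negative, with ties counting half. The `zero_division` value returned when a metric is undefined (for example, an authentic image with no predicted forged pixels) is a convention the caller must choose; the paper's convention is not stated here.

```python
import numpy as np

def f1_and_iou(scores, labels, threshold=0.5, zero_division=0.0):
    """Binary F1 and IoU for scores (probabilities) and 0/1 labels of any shape."""
    pred = np.asarray(scores) >= threshold
    gt = np.asarray(labels).astype(bool)
    tp = int(np.sum(pred & gt))
    fp = int(np.sum(pred & ~gt))
    fn = int(np.sum(~pred & gt))
    f1 = 2 * tp / (2 * tp + fp + fn) if (2 * tp + fp + fn) else zero_division
    iou = tp / (tp + fp + fn) if (tp + fp + fn) else zero_division
    return f1, iou

def auc(scores, labels):
    """P(random positive outscores random negative); ties count half."""
    s = np.asarray(scores, dtype=float)
    y = np.asarray(labels).astype(bool)
    diff = s[y][:, None] - s[~y][None, :]
    return float((diff > 0).mean() + 0.5 * (diff == 0).mean())
```

The pairwise AUC is quadratic in the number of samples, which is fine for image-level evaluation; for pixel-level AUC over millions of pixels, a rank-based implementation such as scikit-learn's `roc_auc_score` is more practical.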

(3) Implementation details: All experiments were conducted on a server equipped with 4 NVIDIA A100 80GB GPUs (NVIDIA Corporation; Santa Clara, CA, USA), using the PyTorch v1.13.0 framework. Models were trained with a batch size of 32, an initial learning rate of 1 × 10−4, and the AdamW optimizer with a weight decay of 1 × 10−5. A ReduceLROnPlateau scheduler was employed to dynamically adjust the learning rate, reducing it by if the validation loss did not improve for two consecutive epochs. The training process comprised 20 epochs, with model checkpoints saved based on validation performance. The validation set was used for both early stopping and learning rate adjustment, while the test set was strictly reserved for final performance evaluation.

4.2. Performance Comparison with Existing Methods

The performance comparison involves six leading learning-based methods for image forgery detection, including PSCC [8], ObjectFormer [14], MVSS-Net [13], IF-OSN [16], CAT-Net v2 [7], and TruFor [17].

For pixel-level forgery localization, as shown in Table 2, our method achieves the highest F1, AUC, and IoU scores on all four test datasets. On average, it outperforms the second-best method by 15.2% in F1, 4.3% in AUC, and 17.1% in IoU, demonstrating its superiority in forgery localization. Among the other methods, TruFor performs best on the NIST and Coverage datasets, while CAT-Net v2 excels on CASIA v1+ with an F1 score of 0.715. With the proposed techniques, our method achieves F1 gains of 14.6%, 14.8%, 14.8%, and 16.7% on NIST, Coverage, Columbia, and CASIA v1+, respectively.

Table 2.

Pixel-level binary F1, Area Under the Receiver Operating Characteristic Curve (AUC), and Intersection over Union (IoU) scores of the compared methods. F1 and IoU use a fixed threshold of 0.5. The best results are in bold; the second-best results are underlined.

As shown in Table 3, our method demonstrates significant advantages in image-level forgery detection across the four benchmark datasets. On average, it achieves the best results on all three metrics (F1, AUC, and ACC), outperforming the second-best method by 6.6%, 11.4%, and 9.9%, respectively. On the Coverage and CASIA v1+ datasets, our method leads on all metrics: it surpasses competitors by 9.4% in F1 and 21.4% in AUC on Coverage, and by 12.7% and 11.1% on CASIA v1+. On the Columbia dataset, our method trails TruFor slightly, with F1 and AUC scores 4.6% and 0.2% lower, respectively; given this dataset's limited sample size, the gap should be interpreted with caution. Overall, the experiments show that our approach delivers consistent performance improvements over current state-of-the-art methods.

Table 3.

Image-level binary-F1, AUC, Accuracy (ACC) scores of the comparative methods. The best results are in bold. The second-best results are underlined.

To further assess the classification performance, we additionally present examples of the precision–recall (PR) curve and the receiver operating characteristic (ROC) curve for the image-level authenticity classification task. As some datasets contain a limited number of samples—potentially leading to unstable or less representative ROC and PR curves—we perform this analysis on the CASIA v1+ dataset, which offers a relatively larger sample size (800 real and 920 forged images).

Specifically, we compare our method with two representative baselines, TruFor and MVSS-Net, both of which support image-level binary classification. As shown in Figure 5, our method consistently outperforms these baselines in both ROC and PR curves, further demonstrating its superior classification accuracy.

Figure 5.

ROC and PR curves of our method, TruFor, and MVSS-Net on CASIA v1+.

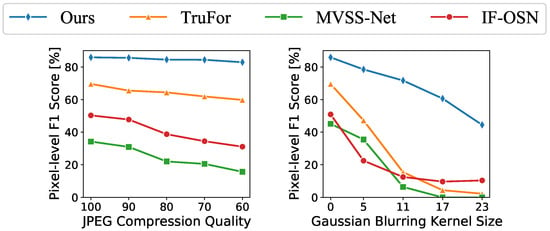

4.3. Robustness Study

We perform robustness analyses on two prevalent image post-processing operations: JPEG compression and Gaussian blurring. Our method demonstrates superior resistance to both JPEG compression and Gaussian blurring, consistently outperforming the other three methods, as illustrated in Figure 6. In the JPEG compression test, our method exhibits minimal performance degradation. In the Gaussian blurring test, while the performance of the other methods significantly degrades with larger kernel sizes, resulting in near-zero F1 scores, our method still maintains high performance. This confirms our method’s robustness.

Figure 6.

Robustness comparison of three leading methods in forgery localization.

4.4. Ablation Study

In this subsection, we evaluate the proposed loss function and the individual components of our approach through ablation experiments. These pixel-level forgery localization experiments are conducted on both real and forged images.

(1) Ablation study on loss functions: We evaluate the impact of the localization loss and the edge loss on the performance of our method. The forgery localization results are shown in Table 4, and the binary classification results are provided in Table A2 in Appendix B. DiceLoss alone proves less effective for localizing tampered regions. The composite loss function DiceBCELoss, which combines DiceLoss with a weighted BCELoss, significantly enhances performance and raises the average F1 score by 28.7%. Adding the edge loss further boosts the model's localization capability, raising the average F1 score by 5% and the IoU score by 6.6%. These results underscore the efficacy of our edge decoder and the integrated multi-supervised loss function.
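To make the compared loss terms concrete, the following sketch implements a soft DiceLoss, a BCE loss with up-weighted forged pixels, and their sum as a DiceBCELoss. It is a minimal illustration on flattened probability lists, not the authors' implementation. The smoothing constant, the `pos_weight` value, and the `dice_weight` balance are assumptions. The edge loss is omitted because its formulation is not given here. One plausible choice for `pos_weight` is the authentic-to-forged pixel ratio, which exceeds 10 for three of the five test datasets in Appendix A.

```python
import math
from typing import List

def dice_loss(probs: List[float], targets: List[float], smooth: float = 1.0) -> float:
    """Soft Dice loss over flattened pixel probabilities, using the
    squared-denominator form of V-Net (Milletari et al.) plus a smoothing term."""
    inter = sum(p * t for p, t in zip(probs, targets))
    denom = sum(p * p for p in probs) + sum(t * t for t in targets)
    return 1.0 - (2.0 * inter + smooth) / (denom + smooth)

def weighted_bce(probs: List[float], targets: List[float],
                 pos_weight: float, eps: float = 1e-7) -> float:
    """Mean binary cross-entropy with forged pixels (target 1) up-weighted
    by `pos_weight` to counter the forged/authentic pixel imbalance."""
    total = 0.0
    for p, t in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total -= pos_weight * t * math.log(p) + (1.0 - t) * math.log(1.0 - p)
    return total / len(probs)

def dice_bce_loss(probs: List[float], targets: List[float],
                  pos_weight: float, dice_weight: float = 1.0) -> float:
    """Hypothetical DiceBCELoss: weighted sum of Dice and weighted BCE."""
    return dice_weight * dice_loss(probs, targets) + weighted_bce(probs, targets, pos_weight)
```

In a PyTorch training loop, the weighted BCE term corresponds to `torch.nn.BCEWithLogitsLoss(pos_weight=...)`, which takes logits rather than probabilities.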

Table 4.

Pixel-level forgery localization performance (F1 and IoU) under different loss functions on authentic and forged images. The loss columns indicate whether DiceLoss, DiceBCELoss (combining DiceLoss and weighted BCELoss), and the edge loss are used. ✓ indicates the loss is used; ✗ indicates it is not used. The best results are in bold. The second-best results are underlined.

(2) Ablation study on training methods: We evaluate the effectiveness of iterative self-supervised forgery image generation and progressive self-adversarial training, and we analyze the impact of training with authentic images. The forgery localization results are shown in Table 5, and the binary classification results are provided in Table A3 in Appendix C. Training solely on forged images is the least effective setting. Incorporating authentic images significantly improves the results, increasing the localization F1 score by 9.3%, which underscores the importance of including authentic images in training. Introducing the binary classification loss yields an additional 1.1% improvement in the localization F1 score. Integrating the iterative self-supervised forgery image generation strategy further improves the F1 score by 3.2%. Finally, applying progressive self-adversarial training boosts the F1 score by an additional 1.2%.
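Progressive self-adversarial training gradually increases how often and how strongly adversarial samples are generated during training. The sketch below illustrates one way to realize such a schedule, but it is not the authors' procedure. The linear ramp, the maxima `max_prob` and `max_eps`, and the use of a single FGSM step (Goodfellow et al.) as the attack are all assumptions. The sketch also takes the gradient from the detector's own loss, which is how it interprets "self-adversarial". The function names are hypothetical.

```python
import random
from typing import List, Tuple

def adversarial_schedule(epoch: int, total_epochs: int,
                         max_prob: float = 0.5,
                         max_eps: float = 4 / 255) -> Tuple[float, float]:
    """Hypothetical linear ramp: both the probability of attacking a sample
    (frequency) and the perturbation size (intensity) grow from 0 to their
    maxima over training."""
    progress = min(max(epoch / max(total_epochs - 1, 1), 0.0), 1.0)
    return max_prob * progress, max_eps * progress

def fgsm_step(image: List[float], grad: List[float], eps: float) -> List[float]:
    """One FGSM step: move every pixel by eps in the direction of the sign
    of the loss gradient, then clip to the valid [0, 1] range."""
    def sign(g: float) -> int:
        return (g > 0) - (g < 0)
    return [min(max(x + eps * sign(g), 0.0), 1.0) for x, g in zip(image, grad)]

def maybe_perturb(image: List[float], grad: List[float], epoch: int,
                  total_epochs: int, rng: random.Random) -> Tuple[List[float], bool]:
    """Return (possibly perturbed image, whether an attack was applied).
    `grad` is assumed to be the gradient of the detector's own loss with
    respect to the input image."""
    prob, eps = adversarial_schedule(epoch, total_epochs)
    if rng.random() < prob:
        return fgsm_step(image, grad, eps), True
    return image, False
```

A single-step attack keeps the extra cost to roughly one additional backward pass per attacked sample, which fits the goal of keeping the computational overhead low.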

Table 5.

Pixel-level forgery localization performance (F1 and IoU) under different training strategies on authentic and forged images. The strategy columns indicate the use of authentic images, the classification loss, our proposed iterative self-supervised forgery image generation (Self-sup.), and our proposed progressive self-adversarial training strategy (Self-adv.). ✓ indicates the strategy is used; ✗ indicates it is not used. The best results are in bold. The second-best results are underlined.

To empirically validate the effectiveness of the iterative self-supervised forgery image generation strategy, we conducted an ablation study on the number of augmentation iterations. As shown in Table 6, increasing the number of iterations improves pixel-level localization performance, as measured by IoU, across four datasets.
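The iterative generation process can be sketched as repeated rounds of random copy-move, splicing, and inpainting. The pixel-level mask accumulates across rounds, so composite forgeries stay fully labeled. The version below is a simplified illustration on grayscale grids, not the authors' generator. The box sizes, the uniform choice of operation, and the same-size donor image are assumptions. So is the mean-intensity fill, which serves only as a crude stand-in for a learned inpainting model.

```python
import random
from typing import List, Tuple

Image = List[List[float]]  # grayscale image, rows of intensities
Mask = List[List[int]]     # 1 = forged pixel

def _box(h: int, w: int, rng: random.Random, frac: float = 0.25) -> Tuple[int, int, int, int]:
    """Random (top, left, height, width) box spanning at most `frac` of each side."""
    bh = rng.randint(1, max(1, int(h * frac)))
    bw = rng.randint(1, max(1, int(w * frac)))
    return rng.randint(0, h - bh), rng.randint(0, w - bw), bh, bw

def _crop(img: Image, top: int, left: int, bh: int, bw: int) -> Image:
    return [row[left:left + bw] for row in img[top:top + bh]]  # slices are copies

def _paste(img: Image, mask: Mask, patch: Image, top: int, left: int) -> None:
    """Write `patch` into `img` and mark the same pixels as forged in `mask`."""
    for i, row in enumerate(patch):
        for j, v in enumerate(row):
            img[top + i][left + j] = v
            mask[top + i][left + j] = 1

def iterative_forgery(img: Image, donor: Image, rounds: int,
                      rng: random.Random) -> Tuple[Image, Mask]:
    """Apply `rounds` random forgeries; the mask accumulates across rounds."""
    img = [row[:] for row in img]  # leave the caller's image untouched
    h, w = len(img), len(img[0])
    mask = [[0] * w for _ in range(h)]
    for _ in range(rounds):
        op = rng.choice(["copy_move", "splicing", "inpainting"])
        top, left, bh, bw = _box(h, w, rng)
        if op == "copy_move":    # duplicate a region of the same image
            sy, sx = rng.randint(0, h - bh), rng.randint(0, w - bw)
            patch = _crop(img, sy, sx, bh, bw)
        elif op == "splicing":   # region taken from a donor image of equal size
            patch = _crop(donor, top, left, bh, bw)
        else:                    # crude stand-in for a learned inpainting model
            fill = sum(map(sum, img)) / (h * w)
            patch = [[fill] * bw for _ in range(bh)]
        _paste(img, mask, patch, top, left)
    return img, mask
```

Each extra round can add a new forged region or overwrite an earlier one. The number of rounds therefore controls how often single and composite forgeries, and different forged-area sizes, appear in the generated training data.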

Table 6.

IoU scores under different numbers of self-supervised iterations. The best results are in bold. The second-best results are underlined.

5. Conclusions

In this study, we introduced an image tampering detection and localization algorithm that integrates self-supervised data augmentation with progressive adversarial training. By incorporating an edge decoder module, our method attends more precisely to the details of forged edges, which improves its accuracy in pinpointing tampered areas. Additionally, the proposed iterative self-supervised forgery image generation technique makes effective use of the forgery information in the dataset, thereby improving the method's fitting capability. The progressive self-adversarial training strategy further enhances the robustness and stability of the method. Our scheme achieves strong performance in both forgery localization and binary classification, effectively identifying forged regions while significantly reducing the false alarm rate.

5.1. Limitations

We acknowledge that our method still leaves room for improvement on specific datasets. For example, on the Columbia dataset, it achieved a suboptimal AUC that marginally trails the current best result.

5.2. Future Work

Our future work will involve collecting and enhancing larger-scale forgery datasets from real-world scenarios, as well as investigating more robust forgery detection frameworks to better address real-world challenges.

Author Contributions

Conceptualization, J.Z. and J.Y.; Methodology, H.Z., J.Z. and J.Y.; Validation, H.Z.; Formal analysis, H.Z. and J.Y.; Investigation, H.Z.; Resources, J.Z. and J.Y.; Data curation, H.Z.; Writing—original draft, H.Z.; Writing—review & editing, H.Z., J.Z. and J.Y.; Visualization, H.Z. and J.Y.; Project administration, J.Y.; Funding acquisition, J.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded in part by Shenzhen Science and Technology Program under Grant JCYJ20210324102204012, Grant JCYJ20230807111207015, Grant 202206193000001 and Grant 20220816225523001; and in part by Guangdong Basic and Applied Basic Research Foundation under Grant 2023A1515030032.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

Author Jishen Zeng was employed by the company Alibaba Group. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Appendix A. Test Datasets

Table A1 provides statistics for the five prevalent datasets used in the experiments. It details the number of authentic and forged images, the forgery types involved (splicing, copy-move, and inpainting), and the average percentage of forged pixels per forged image in each dataset. Note that the NIST dataset contains only forged images with no authentic counterparts. In contrast, the NIST+, Coverage, Columbia, and CASIA v1+ datasets include both forged and authentic images, with relatively balanced numbers of each class. At the pixel level, even the Columbia dataset, which has the highest share, contains only 26.4% forged pixels, corresponding to a forged-to-authentic pixel ratio of approximately 1:3. In three of the datasets (NIST, NIST+, and CASIA v1+), forged pixels account for less than 9% of each forged image on average, corresponding to a ratio below 1:10. This severe imbalance poses a major challenge for pixel-level forgery detection. Regarding forgery types, the NIST and NIST+ datasets cover all three basic types, while the other three datasets cover only one or two, resulting in an imbalance in the number of images across forgery types. These imbalances motivated our iterative self-supervised forgery image generation. This strategy can alleviate the small proportion of forged pixels relative to authentic pixels and provide a richer variety of single and composite forged samples.

Table A1.

Sample number and forgery type of the test datasets. ✓ indicates the dataset contains this forgery type. ✗ indicates absence of this forgery type.


| Dataset | Authentic Samples | Forged Samples | Splicing | Copy-Move | Inpainting | Forged Pixel Percentage |
|---|---|---|---|---|---|---|
| NIST | 0 | 564 | ✓ | ✓ | ✓ | 7.5% |
| NIST+ | 160 | 160 | ✓ | ✓ | ✓ | 6.2% |
| Coverage | 100 | 100 | ✗ | ✓ | ✗ | 11.3% |
| Columbia | 183 | 180 | ✓ | ✗ | ✗ | 26.4% |
| CASIA v1+ | 800 | 920 | ✓ | ✓ | ✗ | 8.7% |
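The forged-pixel statistics in Table A1 can be computed from ground-truth masks as sketched below. The per-image forged share is averaged over the forged images of a dataset. A percentage p then converts to a forged-to-authentic ratio of 1:(100 - p)/p. The helper names are hypothetical. The percentages in the usage loop are taken from Table A1.

```python
from typing import List

Mask = List[List[int]]  # 1 = forged pixel, 0 = authentic pixel

def forged_pixel_percentage(masks: List[Mask]) -> float:
    """Mean, over forged images, of the per-image share of forged pixels (%)."""
    if not masks:
        raise ValueError("need at least one forged image")
    shares = []
    for m in masks:
        total = sum(len(row) for row in m)
        shares.append(sum(sum(row) for row in m) / total)
    return 100.0 * sum(shares) / len(shares)

def forged_to_authentic_ratio(pct: float) -> float:
    """Express a forged-pixel percentage as 1:N and return N."""
    return (100.0 - pct) / pct

for name, pct in [("NIST", 7.5), ("NIST+", 6.2), ("Coverage", 11.3),
                  ("Columbia", 26.4), ("CASIA v1+", 8.7)]:
    print(f"{name}: about 1:{forged_to_authentic_ratio(pct):.1f}")
```

Averaging per-image shares weights every forged image equally. Pooling all pixels across a dataset would instead weight large forgeries and large images more heavily, so the two conventions can give different percentages.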

Appendix B. Ablation Study on Loss Functions (Image-Level Forgery Detection)

In the main text, we demonstrate the impact of different loss functions on pixel-level forgery detection (forgery localization). Here, we supplement that analysis by examining their effects on image-level forgery detection (binary classification between authentic and forged images). As shown in Table A2, DiceLoss performs well in binary classification, with an average AUC of 87.1% and an average ACC of 81.1%, although it performs poorly in pixel-level forgery detection (see Table 4 in the main text). The localization loss (DiceBCELoss) slightly decreases binary classification performance compared with DiceLoss. However, it significantly improves forgery localization, with a 4.5% increase in F1 score and a 5.8% increase in IoU (see Table 4 in the main text). Adding the edge loss to the localization loss achieves the best or near-best results in most binary classification tests, increasing the average AUC by 2.8% and the average ACC by 2%. Taken together, Table A2 and Table 4 show that the edge loss considerably enhances both image-level classification and pixel-level localization performance, validating the effectiveness of our proposed multi-scale edge decoder.

Table A2.

Image-level forgery detection performance (AUC and ACC) under different loss functions. The first three columns indicate whether DiceLoss, DiceBCELoss (combining DiceLoss and weighted BCELoss), and the edge loss are used. ✓ indicates the loss is used; ✗ indicates it is not used. The best results are in bold. The second-best results are underlined.


| DiceLoss | DiceBCELoss | Edge Loss | NIST+ AUC | NIST+ ACC | Coverage AUC | Coverage ACC | Columbia AUC | Columbia ACC | CASIA v1+ AUC | CASIA v1+ ACC | Mean AUC | Mean ACC |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| ✓ | ✗ | ✗ | 0.718 | 0.659 | 0.787 | 0.695 | 0.994 | 0.967 | 0.986 | 0.922 | 0.871 | 0.811 |
| ✗ | ✓ | ✗ | 0.693 | 0.656 | 0.737 | 0.685 | 0.990 | 0.956 | 0.987 | 0.927 | 0.852 | 0.806 |
| ✗ | ✓ | ✓ | 0.759 | 0.688 | 0.786 | 0.700 | 0.997 | 0.981 | 0.981 | 0.934 | 0.880 | 0.826 |

Appendix C. Ablation Study on Training Methods (Image-Level Forgery Detection)

In the main text, we demonstrate the impact of different training methods on pixel-level forgery detection performance. Here, we supplement that analysis with their effects on image-level forgery detection performance. We conduct ablation experiments to assess the impact of training the detector with authentic images, the binary classification loss, iterative self-supervised forgery image generation (+Self-sup.), and progressive self-adversarial training (+Self-adv.). As shown in Table A3, training solely with forged images yields the poorest detector performance. Introducing authentic images into training significantly increases the binary classification AUC by 29.8%. This highlights the necessity of incorporating authentic samples to reduce the risk of misclassifying authentic images. Adding the binary classification loss further improves the AUC by 16.9%. Incorporating iterative self-supervised forgery image generation (+Self-sup.) yields a modest increase of 0.3% in AUC and 2% in ACC. Although this gain is small for image-level forgery detection, the strategy significantly enhances pixel-level forgery detection performance. Finally, progressive self-adversarial training (+Self-adv.) achieves the best results on most pixel-level forgery localization metrics, as presented in Table 5, together with the highest average binary classification AUC of 85.5%. Overall, each added component improves the average AUC, validating the effectiveness of the proposed training methods.

Table A3.

Image-level forgery detection performance (AUC and ACC) under different training strategies. The first four columns indicate the use of authentic images, the classification loss, our proposed iterative self-supervised forgery image generation (Self-sup.), and our proposed progressive self-adversarial training strategy (Self-adv.). ✓ indicates the strategy is used; ✗ indicates it is not used. The best results are in bold. The second-best results are underlined.


| +Authentic Images | +Classification Loss | +Self-sup. | +Self-adv. | NIST+ AUC | NIST+ ACC | Coverage AUC | Coverage ACC | Columbia AUC | Columbia ACC | CASIA v1+ AUC | CASIA v1+ ACC | Mean AUC | Mean ACC |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| ✗ | ✗ | ✗ | ✗ | 0.517 | 0.497 | 0.445 | 0.500 | 0.068 | 0.154 | 0.498 | 0.645 | 0.382 | 0.404 |
| ✓ | ✗ | ✗ | ✗ | 0.633 | 0.572 | 0.478 | 0.490 | 0.896 | 0.777 | 0.712 | 0.661 | 0.680 | 0.625 |
| ✓ | ✓ | ✗ | ✗ | 0.772 | 0.659 | 0.669 | 0.605 | 0.995 | 0.984 | 0.959 | 0.897 | 0.849 | 0.786 |
| ✓ | ✓ | ✓ | ✗ | 0.693 | 0.656 | 0.737 | 0.685 | 0.990 | 0.956 | 0.987 | 0.927 | 0.852 | 0.806 |
| ✓ | ✓ | ✓ | ✓ | 0.705 | 0.641 | 0.733 | 0.670 | 0.990 | 0.948 | 0.992 | 0.934 | 0.855 | 0.798 |

References

- Wang, C.; Huang, Z.; Qi, S.; Yu, Y.; Shen, G.; Zhang, Y. Shrinking the semantic gap: Spatial pooling of local moment invariants for copy-move forgery detection. IEEE Trans. Inf. Forensics Secur. 2023, 18, 1064–1079.

- Liu, Y.; Xia, C.; Zhu, X.; Xu, S. Two-stage copy-move forgery detection with self deep matching and proposal superglue. IEEE Trans. Image Process. 2021, 31, 541–555.

- Tan, Y.; Li, Y.; Zeng, L.; Ye, J.; Wang, W.; Li, X. Multi-scale Target-Aware Framework for Constrained Splicing Detection and Localization. In Proceedings of the 31st ACM International Conference on Multimedia, Ottawa, ON, Canada, 29 October–3 November 2023; pp. 8790–8798.

- Hadwiger, B.C.; Riess, C. Deep metric color embeddings for splicing localization in severely degraded images. IEEE Trans. Inf. Forensics Secur. 2022, 17, 2614–2627.

- Wu, H.; Zhou, J. IID-Net: Image Inpainting Detection Network via Neural Architecture Search and Attention. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 1172–1185.

- Zhang, Y.; Fu, Z.; Qi, S.; Xue, M.; Cao, X.; Xiang, Y. PS-Net: A Learning Strategy for Accurately Exposing the Professional Photoshop Inpainting. IEEE Trans. Neural Networks Learn. Syst. 2023, 35, 13874–13886.

- Kwon, M.J.; Nam, S.H.; Yu, I.J.; Lee, H.K.; Kim, C. Learning JPEG Compression Artifacts for Image Manipulation Detection and Localization. Int. J. Comput. Vis. 2022, 130, 1875–1895.

- Liu, X.; Liu, Y.; Chen, J.; Liu, X. PSCC-Net: Progressive Spatio-Channel Correlation Network for Image Manipulation Detection and Localization. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 7505–7517.

- Yang, C.; Li, H.; Lin, F.; Jiang, B.; Zhao, H. Constrained R-CNN: A general image manipulation detection model. In Proceedings of the 2020 IEEE International Conference on Multimedia and Expo (ICME), London, UK, 6–10 July 2020.

- Cozzolino, D.; Verdoliva, L. Noiseprint: A CNN-based camera model fingerprint. arXiv 2018, arXiv:1808.08396.

- Zhuo, L.; Tan, S.; Li, B.; Huang, J. Self-Adversarial Training Incorporating Forgery Attention for Image Forgery Localization. IEEE Trans. Inf. Forensics Secur. 2022, 17, 819–834.

- Hu, X.; Zhang, Z.; Jiang, Z.; Chaudhuri, S.; Yang, Z.; Nevatia, R. SPAN: Spatial Pyramid Attention Network for Image Manipulation Localization. In Computer Vision–ECCV 2020, Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2020; pp. 312–328.

- Dong, C.; Chen, X.; Hu, R.; Cao, J.; Li, X. MVSS-Net: Multi-View Multi-Scale Supervised Networks for Image Manipulation Detection. arXiv 2021, arXiv:2112.08935.

- Wang, J.; Wu, Z.; Chen, J.; Han, X.; Shrivastava, A.; Lim, S.N.; Jiang, Y.G. ObjectFormer for Image Manipulation Detection and Localization. arXiv 2022, arXiv:2203.14681.

- Wu, Y.; AbdAlmageed, W.; Natarajan, P. ManTra-Net: Manipulation Tracing Network for Detection and Localization of Image Forgeries With Anomalous Features. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

- Wu, H.; Zhou, J.; Tian, J.; Liu, J.; Qiao, Y. Robust Image Forgery Detection against Transmission over Online Social Networks. IEEE Trans. Inf. Forensics Secur. 2022, 17, 443–456.

- Guillaro, F.; Cozzolino, D.; Sud, A.; Dufour, N.; Verdoliva, L. TruFor: Leveraging all-round clues for trustworthy image forgery detection and localization. arXiv 2022, arXiv:2212.10957.

- He, Y.; Li, Y.; Chen, C.; Li, X. Image Copy-Move Forgery Detection via Deep Cross-Scale PatchMatch. In Proceedings of the 2023 IEEE International Conference on Multimedia and Expo (ICME), Brisbane, Australia, 10–14 July 2023; pp. 2327–2332.

- Islam, A.; Long, C.; Basharat, A.; Hoogs, A. DOA-GAN: Dual-Order Attentive Generative Adversarial Network for Image Copy-Move Forgery Detection and Localization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

- Ma, X.; Du, B.; Liu, X.; Hammadi, A.; Zhou, J. IML-ViT: Image Manipulation Localization by Vision Transformer. arXiv 2023, arXiv:2307.14863.

- Fridrich, J.; Kodovsky, J. Rich Models for Steganalysis of Digital Images. IEEE Trans. Inf. Forensics Secur. 2012, 7, 868–882.

- Bayar, B.; Stamm, M.C. Constrained Convolutional Neural Networks: A New Approach Towards General Purpose Image Manipulation Detection. IEEE Trans. Inf. Forensics Secur. 2018, 13, 2691–2706.

- Woo, S.; Debnath, S.; Hu, R.; Chen, X.; Liu, Z.; Kweon, I.; Xie, S.; Ai, M. ConvNeXt V2: Co-designing and Scaling ConvNets with Masked Autoencoders. arXiv 2023, arXiv:2301.00808.

- Xiao, T.; Liu, Y.; Zhou, B.; Jiang, Y.; Sun, J. Unified Perceptual Parsing for Scene Understanding. In Computer Vision–ECCV 2018, Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2018; pp. 432–448.

- Salazar, A.; Vergara, L.; Vidal, E. A proxy learning curve for the bayes classifier. Pattern Recognit. 2023, 136, 109240.

- Goodfellow, I.; Shlens, J.; Szegedy, C. Explaining and Harnessing Adversarial Examples. arXiv 2014, arXiv:1412.6572.

- Milletari, F.; Navab, N.; Ahmadi, S.A. V-Net: Fully Convolutional Neural Networks for Volumetric Medical Image Segmentation. In Proceedings of the 2016 Fourth International Conference on 3D Vision (3DV), Stanford, CA, USA, 25–28 October 2016.

- Wen, B.; Zhu, Y.; Subramanian, R.; Ng, T.T.; Shen, X.; Winkler, S. COVERAGE—A novel database for copy-move forgery detection. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016.

- Hsu, Y.F.; Chang, S.F. Detecting Image Splicing using Geometry Invariants and Camera Characteristics Consistency. In Proceedings of the 2006 IEEE International Conference on Multimedia and Expo, Toronto, ON, Canada, 9–12 July 2006.

- Guan, H.; Kozak, M.; Robertson, E.; Lee, Y.; Yates, A.N.; Delgado, A.; Zhou, D.; Kheyrkhah, T.; Smith, J.; Fiscus, J. MFC Datasets: Large-Scale Benchmark Datasets for Media Forensic Challenge Evaluation. In Proceedings of the 2019 IEEE Winter Applications of Computer Vision Workshops (WACVW), Waikoloa Village, HI, USA, 7–11 January 2019.

- Dong, J.; Wang, W.; Tan, T. CASIA Image Tampering Detection Evaluation Database. In Proceedings of the 2013 IEEE China Summit and International Conference on Signal and Information Processing, Beijing, China, 6–10 July 2013.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).