Abstract

Probability calibration and decision threshold selection are fundamental aspects of risk prediction and classification, respectively. A strictly proper loss function is used in clinical risk prediction applications to encourage a model to predict calibrated class-posterior probabilities or risks. Recent studies have shown that training with focal loss can improve the discriminatory power of gradient-boosted decision trees (GBDT) for classification tasks with an imbalanced or skewed class distribution. However, the focal loss function is not strictly proper. Therefore, the output of GBDT trained using focal loss is not an accurate estimate of the true class-posterior probability. This study addresses the poor calibration of GBDT trained using focal loss in the context of clinical risk prediction applications. The methodology uses a closed-form transformation of the confidence scores of GBDT trained with focal loss to estimate calibrated risks. The closed-form transformation relates the focal loss minimizer to the true class-posterior probability. Algorithms based on Bayesian hyperparameter optimization are provided to choose the focal loss parameter that optimizes discriminatory power and calibration, as measured by the Brier score. We assess how the calibration of the confidence scores affects the selection of a decision threshold that optimizes the balanced accuracy, defined as the arithmetic mean of sensitivity and specificity. The effectiveness of the proposed strategy was evaluated using lung transplant data extracted from the Scientific Registry of Transplant Recipients (SRTR) for predicting post-transplant cancer, and using data from the Behavioral Risk Factor Surveillance System (BRFSS) for predicting diabetes status.
Probability calibration plots, the calibration slope and intercept, and the Brier score show that the approach improves calibration while maintaining the same discriminatory power, as measured by the area under the receiver operating characteristic curve (AUROC) and the H-measure. For predicting the 10-year cancer risk, the calibrated focal-aware XGBoost achieved an AUROC, Brier score, and calibration slope of 0.700, 0.128, and 0.968, respectively. The miscalibrated focal-aware XGBoost achieved the same AUROC but a worse Brier score and calibration slope (0.140 and 1.579). The proposed method compared favorably with the standard XGBoost trained using cross-entropy loss (AUROC of 0.755 versus 0.736 in predicting the 1-year risk of cancer). Comparable performance was observed with other risk prediction models in the diabetes prediction task.

1. Introduction

Risk prediction is one of the most important tasks in clinical research. It consists of estimating the probability that an individual will experience an event or outcome of interest. A model estimates the probability of the event from features such as age, sex, body mass index, blood type, or previous history of malignancy. Estimated probabilities are useful for grouping patients into risk strata, such as low or high risk. Patients in the high-risk group can receive preemptive treatment. For example, solid organ transplant recipients may be closely monitored if they are at risk of post-transplant malignancy [1]. They may also receive antiviral medications to prevent serious adverse events arising from viral infections [2]. A solid organ donor can be matched to a specific recipient on the waiting list to improve survival after transplantation [3]. Heart failure assessment is another example of a clinical risk prediction application. Individuals with genetic risk factors can undergo non-invasive imaging to check for heart disease [4]. Individuals at high risk of heart failure can be treated preemptively with medications to manage blood pressure [5]. Preemptive treatment can reduce heart failure diagnoses and save money for the healthcare system [6].

Risk prediction models are assessed by their discrimination, i.e., their ability to separate high-risk patients from low-risk patients. A model with perfect discriminatory power assigns higher probabilities to all patients with a medical condition or “event” than to patients who do not have the condition or event. The area under the receiver operating characteristic curve (AUROC) and the H-measure are evaluation metrics used to assess the discriminatory power of risk prediction models and probabilistic classifiers [7]. Clinical risk prediction models are also expected to be calibrated [8]. Estimated probabilities or risks are calibrated if they match the observed frequency of the event. For example, if a model provides an estimated probability of 0.2 given a set of patient variables (features), then about 20 out of 100 similar patients should experience the event. The evaluation of probability calibration has generally received less attention than discriminatory power, although it is important for clinical decision-making [9,10]. A miscalibrated model can underestimate or overestimate risks. Underestimation may prevent patients from receiving necessary medical treatment, while overestimation can lead to unnecessary and costly treatment. Calibration curves and the calibration slope and intercept are commonly used in the risk prediction literature to assess probability calibration [8,10]. The Brier score summarizes both discrimination and calibration and is considered an overall accuracy metric [9].
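To make the definition of calibration concrete, the following minimal Python sketch groups predicted risks into equal-width bins and compares the mean predicted risk with the observed event frequency in each bin, which is the information a calibration curve plots. The function name `reliability_table` and the five-bin default are illustrative choices, not part of the original study.

```python
def reliability_table(probs, labels, n_bins=5):
    """Compare mean predicted risk with observed event frequency per probability bin."""
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, labels):
        # Equal-width bins on [0, 1]; p == 1.0 is placed in the last bin.
        idx = min(int(p * n_bins), n_bins - 1)
        bins[idx].append((p, y))
    table = []
    for members in bins:
        if not members:
            continue  # skip empty bins
        mean_pred = sum(p for p, _ in members) / len(members)
        observed = sum(y for _, y in members) / len(members)
        table.append((mean_pred, observed, len(members)))
    return table


# Toy example: 100 similar patients, each assigned an estimated risk of 0.2,
# of whom 20 experience the event, so the single bin is well calibrated.
probs = [0.2] * 100
labels = [1] * 20 + [0] * 80
for mean_pred, observed, count in reliability_table(probs, labels):
    print(f"predicted {mean_pred:.2f}  observed {observed:.2f}  n={count}")
```

A miscalibrated model would show bins where the mean predicted risk and the observed frequency diverge.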

One of the most commonly used models in clinical risk prediction applications is logistic regression. The logistic regression model assumes that the logit (log-odds) of the estimated posterior probability is a linear function of the features. Logistic regression offers a straightforward interpretation for clinical professionals. More complex machine learning models, such as gradient-boosted decision trees (GBDT), have attracted attention in medical fields, where large electronic health records are commonly used. GBDT is an iterative method that adds decision trees one at a time to improve the performance of an ensemble of trees [11]. Several attributes make GBDT well suited to electronic health records. GBDT can handle the heterogeneous (numerical and categorical) features available in electronic health records and identify non-linear interactions automatically. GBDT variants also offer regularization techniques to prevent overfitting to noisy electronic health records [12]. Efficient implementations of GBDT include extreme gradient boosting (XGBoost) [13], light gradient-boosting machine (LightGBM) [14], CatBoost [15], and PaloBoost [12].

GBDT models offer the flexibility to optimize a smooth and differentiable loss function to guide the decision tree training process. Each decision tree in the ensemble is fitted to the pseudo-residuals or negative gradient of the loss function. The standard loss function for probability estimation and classification in GBDT is the cross-entropy loss function (also known as log loss or negative log likelihood). Recent studies related to class-imbalanced data classification have combined GBDT with the focal loss function to improve classification performance [16,17,18,19,20,21,22,23]. The focal loss adds a modulating factor to the cross-entropy loss function. This factor modulates the relative loss of the samples according to the accuracy of the estimated confidence scores [24]. This modulation effect guides the training process to focus on the samples with large losses (“hard-to-classify” samples). This effect may be desirable in applications such as clinical risk prediction and object detection because it allows the training process to focus on rare and difficult samples [25,26]. Previous studies that combined GBDT with focal loss were primarily concerned with classification tasks and evaluated predictive performance based on discrimination and classification metrics. Training GBDT with focal loss leads to miscalibrated confidence scores because the loss function is not strictly proper [27]. Hence, these confidence scores cannot be interpreted as reliable class-posterior probabilities. In class probability estimation, the expected loss of a strictly proper loss function is minimized if and only if the estimated conditional distribution of the outcome variable is equal to the true distribution [28]. Therefore, strictly proper loss functions are fundamental for prediction tasks that require accurate probabilities.

In this study, we investigate the focal loss function and GBDT in the context of risk prediction and classification of clinical data with a binary label or event. A recent theoretical study on focal loss for class-posterior probability estimation proposed a closed-form transformation of confidence scores to recover the true class-posterior probabilities [27]. This transformation is known as the $\Psi^{\gamma}$ transformation, where $\gamma$ is the focusing parameter of the focal loss. It relates the focal loss minimizer to the true class-posterior probability and can be applied to binary and multi-class prediction tasks that require calibrated class-posterior probabilities.

The contributions of this study are the following: (1) We investigate the application of the transformation to GBDT trained using focal loss and evaluate the calibration of confidence scores for clinical risk prediction. (2) We propose two algorithms based on Bayesian hyperparameter optimization and K-fold cross-validation to choose the focal loss parameter and optimize discriminatory power and calibration (as measured by the Brier score). (3) We investigate the adjustment of the decision threshold in combination with GBDT, focal loss, and the transformation. Specifically, we choose a decision threshold that optimizes the arithmetic mean of sensitivity and specificity (balanced accuracy) for classification. (4) We investigate the performance of these techniques in two important clinical risk prediction tasks: prediction of cancer in lung transplant recipients and prediction of diabetes. We use real datasets extracted from the Scientific Registry of Transplant Recipients (SRTR) and the Behavioral Risk Factor Surveillance System (BRFSS). We provide comparisons with GBDT trained using the standard cross-entropy loss and with unregularized and regularized logistic regression. We also provide comparisons with GBDT calibrated with Platt scaling and isotonic regression.

The paper is organized as follows: Section 2 discusses previous studies on GBDT for clinical risk prediction, custom loss functions for GBDT, and probability calibration. We describe gaps in previous studies that modified the loss function of GBDT. Section 3 describes the methodology. It is divided into five subsections. Section 3.1 introduces the cross-entropy loss, focal loss, strictly proper losses, and the transformation for risk prediction tasks. Section 3.2 introduces the training objective of GBDT and regularized GBDT. Section 3.3 introduces Bayesian hyperparameter optimization. This section describes two algorithms to tune the GBDT and choose the focal loss parameter. We also provide a detailed discussion of hyperparameter values and distributions. Section 3.4 discusses the decision threshold that will be used to calculate the classification metrics. The section also discusses the importance of calibrated probabilities in optimizing the balanced accuracy metric for classification tasks. Section 3.5 introduces the relevant metrics for risk prediction and classification, including discrimination, calibration, and classification metrics. Section 4 describes the experiments. It is divided into three subsections. Section 4.1 describes the datasets from the SRTR and BRFSS databases. Section 4.2 describes the design of the experiments. Section 4.3 presents the results of the experiments. Section 5 provides a discussion of the study and the main results. Finally, the conclusion summarizes the study and suggests future directions for researchers.

2. Related Works

2.1. GBDT in Clinical Risk Prediction

Seto et al. studied the probability calibration of LightGBM for the prediction of diabetes using the Kokuho database from Japan. The authors reported that LightGBM produced more accurate probabilistic predictions (measured by the expected calibration error) than logistic regression once the sample size exceeded a certain threshold [29]. Ma et al. applied task-wise split gradient boosting trees (TSGB) to predict venous thromboembolism (VTE) across different hospital departments [30]. TSGB is based on GBDT and showed superior discriminatory power (measured by the AUROC) compared with logistic regression. However, the study did not assess the calibration of the proposed TSGB model. Another study found that XGBoost achieved the best performance among various models in predicting graft survival after kidney transplantation [31]. The study used discrimination and classification metrics, including AUROC, accuracy, sensitivity, specificity, and F1-measure, but did not assess the calibration of the model. Other studies, however, have found that GBDT and logistic regression perform comparably in some clinical risk prediction tasks [32,33]. Bae et al. compared logistic regression and XGBoost in predicting kidney transplant outcomes using the Scientific Registry of Transplant Recipients (SRTR). They found that the AUROC and overall accuracy (measured by the Brier score) were comparable in different tasks, such as predicting delayed graft function and acute rejection [34]. The AUROC of the models was in the range 0.717–0.723 for the prediction of delayed graft function. Austin et al. performed simulations using two large cardiovascular datasets to compare machine learning and statistical models [35]. They found that conventional logistic regression and GBDT had superior performance across a variety of data-generating processes. The authors used metrics such as AUROC, Brier score, calibration slope, and intercept. Miller et al. compared machine learning and statistical models and concluded that performance depended on the type of cross-validation used [36]. The authors used data from the United Network for Organ Sharing (UNOS) to predict mortality after heart transplantation. XGBoost obtained a better AUROC than logistic regression in shuffled cross-validation (0.820 vs. 0.661). However, in rolling cross-validation, the AUROC values decreased to 0.657 and 0.641 for XGBoost and ridge logistic regression, respectively. These studies used GBDT trained with the standard cross-entropy loss function and did not consider other loss functions such as focal loss.

2.2. GBDT and Custom Loss Functions

GBDT can handle the optimization of custom smooth loss functions. Several studies have combined GBDT with focal loss and other loss functions, mainly to address problems in classifying data with an imbalanced or skewed class distribution. Wang et al. compared XGBoost trained with different loss functions, such as cross-entropy loss, weighted cross-entropy loss, and focal loss, in Parkinson’s disease classification [16]. They reported that XGBoost combined with focal loss performed better than standard XGBoost according to the F1-score. Liu et al. combined a cost-sensitive version of LightGBM with focal loss to classify imbalanced credit scoring datasets [18]. The authors found that the combination of cost-sensitive learning and focal loss was superior or competitive on four different credit scoring tasks. Boldini et al. compared weighted cross-entropy loss, focal loss, logit-adjusted loss, label-distribution-aware margin loss, and equalization loss for training LightGBM on imbalanced bioassay classification [17]. The authors reported that logit-adjusted loss and label-distribution-aware margin loss achieved the most promising results in different experiments. Label-distribution-aware margin loss and logit-adjusted loss are designed to optimize classification metrics appropriate for class-imbalanced data. Cao et al. proposed the label-distribution-aware margin loss function to optimize a balanced generalization error bound [37]. Logit-adjusted loss was originally proposed as a loss function to minimize the balanced error in deep long-tail learning [38]. Mushava et al. proposed a unified focal loss combined with flexible link functions, such as link functions based on the generalized extreme value (GEV) and exponentiated-exponential logistic (EEL) distributions [19,20]. The authors used their proposed loss function to train XGBoost on the Freddie Mac mortgage loan database. They reported improvements in discriminatory power according to the H-measure. Rao et al. combined cost-sensitive CatBoost with focal loss for imbalanced customer churn prediction. The authors also used a modified adaptive synthetic (ADASYN) resampling approach to handle class-imbalanced data [22]. Luo et al. compared different loss functions for training LightGBM and XGBoost [23]. The authors compared weighted cross-entropy loss, focal loss, and asymmetric losses on binary, multi-class, and multi-label imbalanced datasets. They reported improvements in F1-score when training XGBoost with weighted cross-entropy and asymmetric losses. Fan et al. proposed combining XGBoost with triage loss to diagnose vibration sensor failure [39]. The triage loss is a modification of the focal loss that further increases the weight of the hard-to-classify samples during training. The authors reported that XGBoost combined with triage loss outperformed XGBoost trained with cross-entropy and focal losses.

The previous studies that combined GBDT with custom losses were primarily concerned with classification tasks, so they did not assess the calibration of predicted probabilities or discuss whether the loss functions were strictly proper.

2.3. Probability Calibration

Probability calibration is concerned with the agreement between the estimated probabilities and the observed fraction of positive events. The assessment of probability calibration generally receives less attention than the assessment of discriminatory power and classification accuracy [10]. The topic has gained popularity in recent years in the deep learning literature [40]. Studies in this area have focused on the properties of loss functions that encourage calibration, on heuristic calibration techniques, and on appropriate methods and metrics for evaluating performance. The properties of loss functions that encourage accurate probability estimation have been studied in [25,28,41,42,43,44]. Loss functions that encourage accurate probability estimation are known as strictly proper loss functions. The expected value of a strictly proper loss function is minimized if and only if the estimated class-posterior probabilities are equal to the ground-truth probabilities. Examples of these loss functions are the cross-entropy loss and the Brier score loss. Note, however, that minimizing a strictly proper loss function encourages calibration but is not sufficient to guarantee good calibration [44]. Strictly proper loss functions are also used in heuristic techniques for post hoc probability calibration. An example of such a heuristic technique is the Platt scaling method proposed in [45]. In Platt scaling, a sigmoid function is applied to the predictions of a miscalibrated model. The parameters of the sigmoid function are found by minimizing the strictly proper cross-entropy loss over a calibration set or by performing K-fold cross-validation over the training set. Temperature scaling is a variant of Platt scaling that uses a single parameter [40,46]. Temperature scaling is effective in improving the calibration of deep neural networks. Another method of calibrating probabilities is isotonic regression [47]. The isotonic regression method finds a step-wise non-decreasing function that maps the miscalibrated confidence scores to calibrated probabilities. Isotonic regression can perform poorly when the sample size is small. Beta calibration is a technique that calibrates confidence scores using calibration maps based on the beta distribution [48]. Continuous model update strategies have also been studied to maintain calibration after deployment in a clinical setting [10]. Continuous update strategies are useful when the distribution of the population changes over time [49]. Many recent studies on probability calibration also address evaluation methods, such as reliability diagrams, and appropriate metrics for risk prediction applications [8,50,51].
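As an illustration of the two post hoc methods above, the following sketch fits Platt scaling (a sigmoid of the score, fitted by Newton's method on the cross-entropy loss) and isotonic regression (the pool-adjacent-violators algorithm) in plain Python. It is a simplified sketch under stated assumptions: it omits the smoothed targets of Platt's original method [45], it fits and evaluates on the same toy scores, and the helper names (`fit_platt`, `fit_isotonic`, `predict_isotonic`) are hypothetical.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_platt(scores, labels, n_iter=50):
    """Platt scaling: fit p = sigmoid(a * s + b) by Newton's method on cross-entropy."""
    a, b = 1.0, 0.0
    for _ in range(n_iter):
        ga = gb = haa = hab = hbb = 0.0
        for s, y in zip(scores, labels):
            p = sigmoid(a * s + b)
            r, w = p - y, p * (1.0 - p)
            ga += r * s
            gb += r
            haa += w * s * s
            hab += w * s
            hbb += w
        det = haa * hbb - hab * hab
        if det <= 1e-12:
            break
        # Newton step: solve [[haa, hab], [hab, hbb]] @ step = [ga, gb].
        da = (hbb * ga - hab * gb) / det
        db = (haa * gb - hab * ga) / det
        a, b = a - da, b - db
        if abs(da) + abs(db) < 1e-10:
            break
    return a, b


def fit_isotonic(scores, labels):
    """Isotonic regression via pool-adjacent-violators (non-decreasing step function)."""
    # Pool tied scores first so that equal scores always receive equal outputs.
    agg = {}
    for s, y in zip(scores, labels):
        total, count = agg.get(s, (0.0, 0))
        agg[s] = (total + y, count + 1)
    blocks = []  # each block: [sum of labels, count, largest score in block]
    for s in sorted(agg):
        total, count = agg[s]
        blocks.append([total, count, s])
        # Merge adjacent blocks while their means violate monotonicity.
        while len(blocks) > 1 and blocks[-2][0] / blocks[-2][1] > blocks[-1][0] / blocks[-1][1]:
            total, count, right = blocks.pop()
            blocks[-1][0] += total
            blocks[-1][1] += count
            blocks[-1][2] = right
    return [blk[2] for blk in blocks], [blk[0] / blk[1] for blk in blocks]


def predict_isotonic(model, s):
    """Value of the first block whose right edge is at or above s."""
    edges, values = model
    for edge, value in zip(edges, values):
        if s <= edge:
            return value
    return values[-1]


scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
labels = [0, 0, 1, 0, 1, 0, 1, 1]
a, b = fit_platt(scores, labels)
iso = fit_isotonic(scores, labels)
for s in scores:
    print(f"score {s:.1f}  platt {sigmoid(a * s + b):.3f}  isotonic {predict_isotonic(iso, s):.3f}")
```

Platt scaling imposes a smooth parametric shape with two parameters, whereas isotonic regression only enforces monotonicity, which is why the latter needs more data to avoid overfitting.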

3. Methods

This section describes the machine learning methodology for clinical risk prediction and for the classification of data with an imbalanced or skewed class distribution. It consists of GBDT trained using focal loss, calibration, and Bayesian optimization. The proposed approach is also combined with an empirical decision threshold to optimize the arithmetic mean of sensitivity and specificity (balanced accuracy). We consider the problem of risk prediction using data with a binary class label. We also consider the classification of binary class-imbalanced data through decision threshold adjustment. Let $\mathcal{D} = \{(\mathbf{x}_i, y_i)\}_{i=1}^{n}$ be a training set consisting of n samples, where $\mathbf{x}_i \in \mathbb{R}^{p}$ is the p-dimensional feature vector and $y_i \in \{0, 1\}$ is the binary class label. The first goal is to learn a risk prediction model, $f: \mathbb{R}^{p} \to [0, 1]$. The risk prediction model must provide a reliable estimate of the true class-posterior probability $\eta(\mathbf{x}) = P(y = 1 \mid \mathbf{x})$. The second goal is to learn a binary classification model $\hat{c}: \mathbb{R}^{p} \to \{0, 1\}$ that approximates the true (unknown) labeling function. We assign a sample to one of the two classes by thresholding the class-posterior probability using a decision threshold estimated from the training data.
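One simple way to estimate such a threshold from training data, shown here as an assumption rather than the exact procedure of this study, is to evaluate every distinct predicted probability as a candidate cut-off and keep the one with the highest balanced accuracy (the arithmetic mean of sensitivity and specificity). The function names and toy data below are hypothetical.

```python
def balanced_accuracy(probs, labels, threshold):
    """Mean of sensitivity and specificity when predicting positive if p >= threshold."""
    tp = fn = tn = fp = 0
    for p, y in zip(probs, labels):
        predicted_positive = p >= threshold
        if y == 1:
            tp += predicted_positive
            fn += not predicted_positive
        else:
            fp += predicted_positive
            tn += not predicted_positive
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return 0.5 * (sensitivity + specificity)


def best_threshold(probs, labels):
    """Exhaustive search over the distinct predicted probabilities."""
    candidates = sorted(set(probs))
    return max(candidates, key=lambda t: balanced_accuracy(probs, labels, t))


# Toy training-set risks with a minority positive class (2 of 6 samples).
probs = [0.05, 0.10, 0.15, 0.20, 0.30, 0.60]
labels = [0, 0, 0, 1, 0, 1]
t = best_threshold(probs, labels)
print(t, balanced_accuracy(probs, labels, t))
```

Here the selected cut-off (0.2) is well below 0.5, which is typical for rare outcomes when sensitivity and specificity are weighted equally.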

3.1. Focal Loss for Risk Prediction

The most common loss function used for risk prediction is the binary cross-entropy loss (also known as log loss or negative log-likelihood). The cross-entropy loss is a strictly proper loss function and is used to estimate the true class-posterior probability. It is also used as a surrogate loss function for classification. The cross-entropy loss is shown in Equation (1). For simplicity, we denote the confidence score (estimated probability of the positive class) for a sample as $q \in (0, 1)$ and its class label as $y \in \{0, 1\}$:

$$\ell_{\mathrm{CE}}(q, y) = -y \log q - (1 - y) \log(1 - q). \tag{1}$$

A loss function previously applied to classification tasks with imbalanced class labels is the focal loss, $\ell_{\mathrm{FL}}$. The focal loss was proposed in [24] for the object detection task in computer vision. It adds a modulating factor to the cross-entropy loss. The modulating factor is tuned by the focusing parameter, $\gamma \geq 0$. The binary focal loss is given by

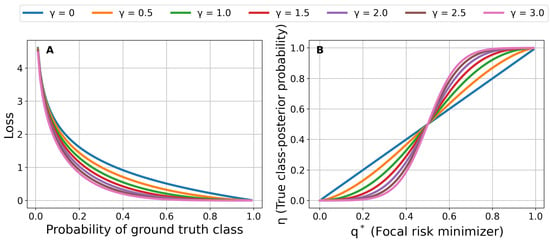

A modulating factor with $\gamma = 0$ reduces the focal loss to the cross-entropy loss. A modulating factor with $\gamma > 0$ decreases the loss for “easy” samples. For example, suppose that a training sample has the class label $y = 1$. The modulating factor $(1 - q)^{\gamma}$ decreases to 0 as the estimated confidence score $q$ for this sample approaches 1. Therefore, the relative loss of the sample decreases to 0, and the training process shifts its focus to samples with erroneous confidence scores. The modulating effect of focal loss is stronger for higher values of the focusing parameter $\gamma$. Figure 1A shows the modulation effect caused by the focusing parameter $\gamma$.
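The following minimal Python sketch evaluates the binary focal loss for a single sample, assuming the standard modulating factors $(1-q)^{\gamma}$ for $y = 1$ and $q^{\gamma}$ for $y = 0$ from [24]. It checks that $\gamma = 0$ recovers the cross-entropy loss and shows that, for $\gamma = 2$, the loss of an easy positive sample ($q = 0.9$) is scaled by $(1 - 0.9)^2 = 0.01$, while a hard positive sample ($q = 0.1$) keeps 81% of its cross-entropy loss.

```python
import math


def cross_entropy(q, y):
    """Binary cross-entropy for confidence score q in (0, 1) and label y in {0, 1}."""
    return -y * math.log(q) - (1 - y) * math.log(1 - q)


def focal_loss(q, y, gamma):
    """Binary focal loss: cross-entropy of the true class scaled by a modulating factor."""
    if y == 1:
        return -((1 - q) ** gamma) * math.log(q)  # factor (1 - q)^gamma
    return -(q ** gamma) * math.log(1 - q)        # factor q^gamma


for q in (0.1, 0.5, 0.9):
    ce = cross_entropy(q, 1)
    print(f"q={q}: CE={ce:.4f}  FL(gamma=0)={focal_loss(q, 1, 0):.4f}  "
          f"FL(gamma=2)={focal_loss(q, 1, 2):.4f}  ratio={focal_loss(q, 1, 2) / ce:.2f}")
```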

Figure 1.

(A) Focal loss for different values of the focusing parameter $\gamma$. (B) Relationship between the focal risk minimizer and the true class-posterior probability for different values of the focusing parameter $\gamma$.

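Unlike the binary cross-entropy loss, focal loss is not a strictly proper loss function, and its estimated confidence scores cannot be interpreted as reliable class-posterior probabilities [27]. A loss function $\ell$ is proper if $\eta \in \arg\min_{q \in [0, 1]} \mathbb{E}_{y \sim \mathrm{Bernoulli}(\eta)}\left[\ell(q, y)\right]$ for every $\eta \in [0, 1]$, where $\eta$ denotes the true class-posterior probability [28,41,42]. Furthermore, it is strictly proper if $\eta$ is the unique minimizer.

The expected loss of a sample is the binary pointwise conditional risk given the prediction $q$ and the true class-posterior probability $\eta$:

$$R_{\ell}(q; \eta) = \eta\, \ell_{1}(q) + (1 - \eta)\, \ell_{0}(q),$$

where $\ell_{1}(q) = \ell(q, 1)$ and $\ell_{0}(q) = \ell(q, 0)$ are partial losses. The true posterior probability $\eta$ can be recovered from the binary pointwise conditional risk if the focal risk minimizer is available [27]. Let $q^{*}$ be the focal risk minimizer. Since focal loss is classification-calibrated, $q^{*}$ preserves the order of $\eta$ with respect to the decision boundary: $q^{*} > 1/2$ if and only if $\eta > 1/2$. Furthermore, the binary pointwise conditional risk with respect to focal loss is differentiable. To keep expressions simple, we denote it as $R(q)$:

$$R(q) = -\eta\, (1 - q)^{\gamma} \log q - (1 - \eta)\, q^{\gamma} \log(1 - q).$$

To find an expression for the class-posterior probability, the derivative of $R(q)$ with respect to $q$ is set equal to 0:

$$\frac{\partial R(q)}{\partial q} = \eta \left[\gamma (1 - q)^{\gamma - 1} \log q - \frac{(1 - q)^{\gamma}}{q}\right] + (1 - \eta) \left[\frac{q^{\gamma}}{1 - q} - \gamma q^{\gamma - 1} \log(1 - q)\right] = 0.$$

Finally, the class-posterior probability given the focal risk minimizer is given by the following expression:

$$\eta = \Psi^{\gamma}(q^{*}) = \frac{h^{\gamma}(q^{*})}{h^{\gamma}(q^{*}) + h^{\gamma}(1 - q^{*})}, \qquad h^{\gamma}(v) = \frac{v}{(1 - v)^{\gamma} - \gamma v (1 - v)^{\gamma - 1} \log v}. \tag{5}$$

According to Equation (5), $\Psi^{\gamma}(q) = q$ if $\gamma = 0$. Hence, the focal loss is strictly proper if and only if $\gamma = 0$, i.e., when it is equal to the cross-entropy loss. However, $\Psi^{\gamma}(q) \neq q$ in general if $\gamma > 0$, i.e., the focal loss is not strictly proper if $\gamma > 0$. The closed-form transformation $\Psi^{\gamma}$ serves to calibrate a model trained via focal loss. The discriminatory power attained through focal loss minimization (often measured by the AUROC) is also maintained, since the transformation is a strictly increasing function. Therefore, the model gains calibration without losing discriminatory power. Figure 1B shows the relationship between the true class-posterior probability and the focal risk minimizer for different values of the focusing parameter $\gamma$. It should be emphasized that [27] initially introduces the transformation for the multiclass classification problem, $\Psi^{\gamma}(\mathbf{q})_{k} = h^{\gamma}(q_{k}) / \sum_{l=1}^{K} h^{\gamma}(q_{l})$, where K is the number of classes, $\mathbf{q}$ is a K-dimensional simplex vector, and $h^{\gamma}$ is defined as in Equation (5). These equations are the result of a constrained convex program that optimizes the pointwise conditional risk for the multiclass case. Equation (5) can be obtained from the multiclass equations by considering the case $K = 2$ and $\mathbf{q} = (q, 1 - q)$.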
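The transformation can be checked numerically. The sketch below is an illustration of the binary case under the form of $\Psi^{\gamma}$ given in [27], not a reference implementation: it minimizes the focal conditional risk for a known $\eta$ by golden-section search (the risk has a single minimum in $q$ because $\Psi^{\gamma}$ is strictly increasing) and then applies $\Psi^{\gamma}$ to the minimizer to recover $\eta$. The function names are hypothetical.

```python
import math


def h(v, gamma):
    """Helper h^gamma(v) of the closed-form transformation, for 0 < v < 1."""
    return v / ((1 - v) ** gamma - gamma * v * (1 - v) ** (gamma - 1) * math.log(v))


def psi(q, gamma):
    """Map a focal-risk minimizer q to the class-posterior probability eta."""
    hq, hc = h(q, gamma), h(1 - q, gamma)
    return hq / (hq + hc)


def focal_risk(q, eta, gamma):
    """Binary pointwise conditional risk of focal loss."""
    return -eta * (1 - q) ** gamma * math.log(q) - (1 - eta) * q ** gamma * math.log(1 - q)


def focal_risk_minimizer(eta, gamma, lo=1e-9, hi=1 - 1e-9, iters=100):
    """Golden-section search for the minimizer of the (unimodal) focal risk in q."""
    inv_phi = (math.sqrt(5) - 1) / 2
    a, b = lo, hi
    c, d = b - inv_phi * (b - a), a + inv_phi * (b - a)
    for _ in range(iters):
        if focal_risk(c, eta, gamma) < focal_risk(d, eta, gamma):
            b, d = d, c  # minimum lies in [a, d]
            c = b - inv_phi * (b - a)
        else:
            a, c = c, d  # minimum lies in [c, b]
            d = a + inv_phi * (b - a)
    return (a + b) / 2


for gamma in (0.0, 1.0, 2.0):
    q_star = focal_risk_minimizer(0.9, gamma)
    print(f"gamma={gamma}: q*={q_star:.4f}  psi(q*)={psi(q_star, gamma):.4f}")
```

For $\gamma > 0$ the minimizer is less extreme than $\eta$ (for example, below 0.9 when $\eta = 0.9$), which is the under-confidence that the transformation corrects, while $\Psi^{\gamma}(q^{*})$ returns $\eta$ up to numerical precision.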

Applying the transformation is a necessary post-processing step to obtain the true class-posterior probability from the focal risk minimizer. Hence, the transformation is fundamental when focal loss is used in risk prediction applications. However, statistical and machine learning models are trained to minimize a loss function over finite training datasets, so the exact loss function minimizer may not be obtained. In practice, even models such as logistic regression that minimize a strictly proper loss function may be miscalibrated [44]. Studies suggest adding a regularization term to the loss function to encourage better calibration of class-posterior probabilities [43,52].

3.2. Regularized GBDT

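We employ regularized gradient-boosted decision trees (GBDT) to minimize a loss function. At iteration $t$, the training objective of the original GBDT is given by [11]:

$$\mathcal{L}^{(t)} = \sum_{i=1}^{n} l\left(y_i, \hat{y}_i^{(t-1)} + f_t(\mathbf{x}_i)\right), \tag{6}$$

where $l$ is a loss function, $f_t$ is the $t$-th decision tree, and $\hat{y}_i^{(t-1)}$ is the raw prediction of the ensemble model from iteration $t-1$ for sample $\mathbf{x}_i$. Regularized GBDT implementations such as XGBoost [13], LightGBM [14], and CatBoost [15] use a second-order Taylor approximation to minimize the training objective:

$$\mathcal{L}^{(t)} \approx \sum_{i=1}^{n} \left[ l\left(y_i, \hat{y}_i^{(t-1)}\right) + g_i f_t(\mathbf{x}_i) + \frac{1}{2} h_i f_t^{2}(\mathbf{x}_i) \right] + \Omega(f_t), \tag{7}$$

where $\Omega(f_t)$ is a regularization term that controls the complexity of the decision tree, and $g_i$ and $h_i$ are the first- and second-order derivatives of the loss function with respect to $\hat{y}_i^{(t-1)}$, respectively. The first- and second-order derivatives are known as the gradient and Hessian, respectively. Up to terms that do not depend on $f_t$, the right-hand side of Equation (7) can be rewritten as the weighted square loss $\sum_{i=1}^{n} \frac{1}{2} h_i \left(f_t(\mathbf{x}_i) - (-g_i / h_i)\right)^{2}$, with labels $-g_i / h_i$ and weights $h_i$, regularized by $\Omega(f_t)$. The goal in the $t$-th iteration is to find the decision tree $f_t$ that minimizes this weighted loss. A class-posterior probability is obtained by applying the sigmoid function $\sigma(z) = 1 / (1 + e^{-z})$ to the raw prediction $z$ of the ensemble model. The gradient and Hessian of focal loss are derived in [16,18].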

3.3. Bayesian Hyperparameter Optimization

Previous studies have suggested that Bayesian optimization outperforms random search, grid search, and manual search for tuning the hyperparameters of gradient-boosted decision trees [53]. Bayesian optimization performs an informed search of hyperparameters to tune a machine learning model efficiently. Hence, in this study, we use the Bayesian optimization method known as sequential model-based optimization (SMBO) to tune hyperparameters [54,55]. SMBO uses a cheap surrogate model to approximate an expensive black-box objective, $g$. Hyperparameters, $\boldsymbol{\lambda}$, and losses, $l$, are stored as elements in a trajectory set, $\mathcal{H}$, to carry out an informed search of hyperparameters. In this study, we use a Gaussian process (GP) as a prior of $g$. An acquisition function is optimized using the posterior distribution of $g$ given the trajectory set $\mathcal{H}$ to find the next hyperparameters to evaluate [56,57]. The acquisition function used in this study is the expected improvement (EI):

$$\mathrm{EI}(\boldsymbol{\lambda}) = \mathbb{E}\left[\max\left(g(\boldsymbol{\lambda}^{+}) - g(\boldsymbol{\lambda}),\, 0\right) \mid \mathcal{H}\right],$$

where $\boldsymbol{\lambda}^{+}$ is the best hyperparameter combination obtained before the current iteration and $\boldsymbol{\lambda}$ is a candidate hyperparameter combination; the next combination evaluated by SMBO is the one that maximizes EI. This expectation is computed using the predictive mean and predictive variance of the GP posterior of $g$ [57].
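Under a GP surrogate, the predictive distribution of $g$ at a candidate is Gaussian with mean $\mu$ and standard deviation $\sigma$, and the expected improvement for a minimization problem has the well-known closed form $(g^{+} - \mu)\Phi(z) + \sigma\phi(z)$ with $z = (g^{+} - \mu)/\sigma$, where $g^{+}$ is the best loss observed so far and $\Phi$, $\phi$ are the standard normal CDF and PDF. The sketch below computes it with the Python standard library; the candidate means and standard deviations are made-up numbers, not outputs of a fitted GP.

```python
import math


def expected_improvement(mu, sigma, best_loss):
    """Closed-form EI for minimization when g(lambda) ~ Normal(mu, sigma^2)."""
    improvement = best_loss - mu
    if sigma <= 0.0:
        return max(improvement, 0.0)  # no uncertainty: improvement is deterministic
    z = improvement / sigma
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))         # standard normal CDF
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)  # standard normal PDF
    return improvement * cdf + sigma * pdf


# Hypothetical GP predictions (mean, standard deviation) of the cross-validated loss
# for two candidate hyperparameter combinations; the best loss observed so far is 0.40.
candidates = {"A": (0.42, 0.01), "B": (0.45, 0.08)}
best_loss = 0.40
scores = {name: expected_improvement(mu, sd, best_loss) for name, (mu, sd) in candidates.items()}
print(scores, "next:", max(scores, key=scores.get))
```

Candidate B is preferred despite its worse predicted mean because its larger uncertainty leaves more room for improvement, which is how EI balances exploration and exploitation.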

Unlike previous studies on focal-aware gradient-boosted decision trees that used a balanced version of the focal loss and tuned hyperparameters to optimize g-mean or H-measure [18,19,20], we seek to tune hyperparameters to minimize the K-fold cross-validated focal loss on the training set. The reason is that the focal loss minimizer is necessary to obtain calibrated posterior probabilities after applying the transformation [27]. The Bayesian hyperparameter optimization procedure is shown in Algorithm 1:

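Algorithm 1 SMBOFocalGBDT
Input: training set, T, hyperparameter search space, focusing parameter $\gamma$, K
Output: hyperparameter combination with minimum K-fold cross-validated focal loss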
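The following is a sketch, under stated assumptions, of the black-box objective $g$ that Algorithm 1 minimizes: the mean out-of-fold focal loss over K folds of the training set. The `fit` argument is a hypothetical callable standing in for GBDT training; the toy model `smoothed_base_rate`, its `alpha` hyperparameter, and the unshuffled contiguous folds are illustrative simplifications, not part of the study.

```python
import math


def focal_loss(q, y, gamma):
    """Binary focal loss for one sample (q clipped away from 0 and 1)."""
    q = min(max(q, 1e-12), 1.0 - 1e-12)
    if y == 1:
        return -((1.0 - q) ** gamma) * math.log(q)
    return -(q ** gamma) * math.log(1.0 - q)


def kfold_indices(n, k):
    """Split range(n) into k contiguous folds (no shuffling, for simplicity)."""
    folds, start = [], 0
    for i in range(k):
        size = n // k + (1 if i < n % k else 0)
        folds.append(list(range(start, start + size)))
        start += size
    return folds


def cv_focal_loss(X, y, params, fit, gamma, k=5):
    """Black-box objective g(params): mean out-of-fold focal loss over k folds."""
    total, count = 0.0, 0
    for val_idx in kfold_indices(len(y), k):
        held_out = set(val_idx)
        train_idx = [i for i in range(len(y)) if i not in held_out]
        predict = fit([X[i] for i in train_idx], [y[i] for i in train_idx], params)
        for i in val_idx:
            total += focal_loss(predict(X[i]), y[i], gamma)
            count += 1
    return total / count


def smoothed_base_rate(X_train, y_train, params):
    """Hypothetical stand-in for GBDT training: a smoothed constant risk."""
    alpha = params["alpha"]
    rate = (sum(y_train) + 0.5 * alpha) / (len(y_train) + alpha)
    return lambda x: rate


X = list(range(20))
y = [1 if i % 4 == 0 else 0 for i in range(20)]
for alpha in (0.0, 4.0, 40.0):
    print(alpha, cv_focal_loss(X, y, {"alpha": alpha}, smoothed_base_rate, gamma=2.0))
```

In an SMBO loop, each call to `cv_focal_loss` would produce one (hyperparameters, loss) pair for the trajectory set, with the focusing parameter held fixed.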

Algorithm 1 depends on the selection of a focusing parameter $\gamma$. Since risk prediction models are evaluated in terms of discrimination and calibration, we seek the focusing parameter that gives the GBDT the best K-fold cross-validated Brier score on the training set. The Brier score, $\mathrm{BS} = \frac{1}{n}\sum_{i=1}^{n}(q_i - y_i)^2$, where $q_i$ is the predicted probability and $y_i$ the class label of sample $i$, is a strictly proper scoring rule and can be decomposed into discrimination and calibration components [9]. Therefore, the Brier score is a good metric for choosing the focusing parameter. We provide a second algorithm that describes the process of choosing the focusing parameter and selecting the final GBDT for risk prediction. Algorithm 2 trains different GBDTs via focal loss minimization with different values of $\gamma$ and then selects the GBDT, calibrated by the transformation, with the best K-fold cross-validated Brier score. Note that this method differs from adjusting the focal loss parameter to directly optimize the Brier score. Here, we tune several models to minimize focal loss with predefined values of $\gamma$ and then select the final clinical risk prediction model based on the Brier score.

Algorithm 2 Learning and Prediction Stages
Input: training set, T, hyperparameter search space, candidate focusing parameters $\gamma$, K, test sample
Output: Prediction for test sample
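The selection step of Algorithm 2 can be sketched as follows, assuming out-of-fold confidence scores have already been produced for each candidate $\gamma$: apply the closed-form transformation (written `psi` below, following the binary form given in [27]) to each set of scores, compute the Brier score, and keep the $\gamma$ with the lowest value. The scores below are made up to keep the example short, and the helper names are hypothetical.

```python
import math


def psi(q, gamma):
    """Closed-form transformation from a focal-loss score to a calibrated probability."""
    def h(v):
        return v / ((1 - v) ** gamma - gamma * v * (1 - v) ** (gamma - 1) * math.log(v))
    return h(q) / (h(q) + h(1 - q))


def brier_score(probs, labels):
    """Mean squared difference between predicted probabilities and 0/1 labels."""
    return sum((p - y) ** 2 for p, y in zip(probs, labels)) / len(labels)


def select_gamma(oof_scores, labels):
    """Return the gamma whose psi-calibrated out-of-fold scores have the lowest Brier score."""
    results = {
        gamma: brier_score([psi(q, gamma) for q in scores], labels)
        for gamma, scores in oof_scores.items()
    }
    return min(results, key=results.get), results


# Made-up out-of-fold scores from models trained with two candidate gammas.
labels = [1, 1, 1, 0, 0, 0]
oof_scores = {
    0.0: [0.7, 0.7, 0.7, 0.3, 0.3, 0.3],
    2.0: [0.6, 0.6, 0.6, 0.4, 0.4, 0.4],
}
best_gamma, results = select_gamma(oof_scores, labels)
print(best_gamma, results)
```

The $\gamma = 2$ scores look less confident, but after the transformation they map to about 0.75 and 0.25, which yields the lower Brier score in this toy case.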

GBDTs such as XGBoost and LightGBM provide a wide range of hyperparameters to prevent overfitting. Great differences in performance can be seen in GBDT with different hyperparameters due to the large number of possible combinations. Careful tuning of these hyperparameters is important in the context of clinical risk prediction applications. Large clinical databases pose several challenges, such as inaccurate data, noisy class labels, and high dimensionality (large number of features) [12]. Decision trees can easily overfit irrelevant patterns in clinical databases due to these problems. Decision trees are also biased towards numerical features. Numerical features provide more split values, leading to overfitting. We address these issues by carefully limiting the range of possible values for each hyperparameter. Nine hyperparameters are considered for XGBoost. These hyperparameters are learning rate, maximum tree depth, number of trees, fraction of samples in each tree, fraction of features in each tree, fraction of features in each tree level, l1 regularization, l2 regularization, and minimum sum of sample weights (Hessians) in each child node. Bayesian hyperparameter optimization starts with a distribution of possible values for each hyperparameter. We limit the learning rate to values between and . We choose these values to reduce the weights of each tree in the ensemble. However, small learning rates can possibly lead the Bayesian hyperparameter tuning process to increase the number of trees to overfit the validation dataset. Therefore, we also limit the total number of decision trees to the range [20, 300]. We use between 55% and 85% of the features in each decision tree to deal with high dimensionality. We also limit the total number of samples in each decision tree to the range [55%, 85%]. Random sampling of observations can decrease the variance of the ensemble and improve performance [58]. We limit the tree depth to values between 1 (decision stump) and 8. 
The l1 and l2 regularization parameters shrink the leaf values. We search for each of these parameters within a bounded range (Appendix B). Larger values shrink the leaf scores more strongly, and the l1 penalty additionally encourages sparsity. The last hyperparameter is related to the sum of sample weights (Hessians) in a child node. The Hessian is the weight of each sample in the weighted squared loss form of Equation (7). This hyperparameter, known as the “minimum child weight”, stops tree growth if the sum of Hessians in a leaf is less than a threshold. We search for an appropriate threshold within a bounded range (Appendix B). Note that higher thresholds help prevent overfitting.
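To make the role of the Hessian concrete, the following minimal sketch computes a leaf score for the logistic (cross-entropy) loss, assuming the usual second-order GBDT leaf formula: the l1 penalty soft-thresholds the gradient sum, the l2 penalty is added to the Hessian sum, and a child whose Hessian sum falls below the minimum child weight is rejected. It is a simplified illustration rather than XGBoost's internal code, it uses the cross-entropy Hessian p(1 − p) instead of the focal-loss Hessian, and the function names are hypothetical.

```python
import math


def logistic_grad_hess(margin, label):
    """Gradient and Hessian of the logistic loss w.r.t. the raw margin (log-odds)."""
    p = 1.0 / (1.0 + math.exp(-margin))
    return p - label, p * (1.0 - p)


def soft_threshold(g_sum, alpha):
    """l1 penalty: shrink the gradient sum toward zero by alpha."""
    if g_sum > alpha:
        return g_sum - alpha
    if g_sum < -alpha:
        return g_sum + alpha
    return 0.0


def leaf_weight(margins, labels, reg_alpha=0.0, reg_lambda=1.0, min_child_weight=1.0):
    """Second-order leaf score, or None if the child is too 'light' to keep.

    Samples whose current prediction is close to 0 or 1 have a Hessian p(1 - p)
    close to 0, so they contribute little to the minimum-child-weight check.
    """
    g_sum = h_sum = 0.0
    for m, y in zip(margins, labels):
        g, h = logistic_grad_hess(m, y)
        g_sum += g
        h_sum += h
    if h_sum < min_child_weight:
        return None  # sum of Hessians below the threshold: child not allowed
    return -soft_threshold(g_sum, reg_alpha) / (h_sum + reg_lambda)


# Eight positives at margin 0 (p = 0.5): G = -4 and H = 2, so w = 4 / (2 + 1).
print(leaf_weight([0.0] * 8, [1] * 8))
# Three samples give H = 0.75 < 1, so the child is rejected.
print(leaf_weight([0.0] * 3, [1] * 3))
```

Because confidently classified samples have Hessians close to zero, a node containing many easy negatives can still fail the minimum-child-weight check, which is one way this threshold limits overfitting.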

Different distributions can be used in the Bayesian hyperparameter search. We select a discrete uniform distribution for the number of trees and the maximum tree depth. Uniform distributions are used for the fraction of samples per tree, the fraction of features per tree, and the fraction of features per level. Log-uniform distributions are chosen for the remaining hyperparameters (learning rate, l1 and l2 regularization, and minimum child weight). These distributions are partly based on those of the experimental setting described in [15], which was designed to compare XGBoost, LightGBM, and CatBoost. Appendix B provides the distribution of each hyperparameter used in the experiments.
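The distributions above can be sketched as a sampler over a search space. In this sketch, the ranges for the number of trees, tree depth, and per-tree sample and feature fractions follow the text; the per-level feature fraction, learning rate, l1 and l2 penalties, and minimum child weight use hypothetical placeholder bounds, because their actual values are given in Appendix B. Drawing from these priors is only the starting point of a Bayesian search (for example, its initial random points); in scikit-optimize the same priors would be expressed with `skopt.space.Integer` and `skopt.space.Real(..., prior="log-uniform")`.

```python
import math
import random

# Hyperparameter priors written with XGBoost's scikit-learn parameter names.
# Bounds marked "text" come from the section above; bounds marked
# "PLACEHOLDER" are hypothetical stand-ins for the values in Appendix B.
SEARCH_SPACE = {
    "n_estimators": ("int_uniform", 20, 300),          # text
    "max_depth": ("int_uniform", 1, 8),                # text
    "subsample": ("uniform", 0.55, 0.85),              # text
    "colsample_bytree": ("uniform", 0.55, 0.85),       # text
    "colsample_bylevel": ("uniform", 0.55, 0.85),      # PLACEHOLDER
    "learning_rate": ("log_uniform", 1e-3, 1e-1),      # PLACEHOLDER
    "reg_alpha": ("log_uniform", 1e-3, 10.0),          # PLACEHOLDER
    "reg_lambda": ("log_uniform", 1e-3, 10.0),         # PLACEHOLDER
    "min_child_weight": ("log_uniform", 1e-1, 10.0),   # PLACEHOLDER
}


def sample_config(space, rng):
    """Draw one configuration from the priors (e.g., an initial random point)."""
    config = {}
    for name, (kind, low, high) in space.items():
        if kind == "int_uniform":
            config[name] = rng.randint(low, high)  # inclusive on both ends
        elif kind == "uniform":
            config[name] = rng.uniform(low, high)
        elif kind == "log_uniform":
            # Uniform in log space: each order of magnitude is equally likely.
            config[name] = math.exp(rng.uniform(math.log(low), math.log(high)))
        else:
            raise ValueError(f"unknown distribution: {kind}")
    return config


rng = random.Random(0)
print(sample_config(SEARCH_SPACE, rng))
```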

3.4. Class-Imbalanced Classification Using Calibrated Focal-Aware GBDT

Rare outcomes and imbalanced class labels are ubiquitous in real clinical datasets. Common approaches to solving this problem include resampling to balance the frequency of class labels. Resampling approaches include oversampling observations from the minority class [59] and undersampling observations from the majority class. Potential problems with these techniques include miscalibrated posterior probabilities [60], the generation of synthetic minority samples that are likely to belong to the majority class [61], and the loss of information in the case of undersampling. In cases where the imbalance is extreme (e.g., malignancies after solid organ transplantation), resampling techniques would have to generate or discard a very large number of samples. Furthermore, these techniques do not optimize any specific classification metric or the cost of clinical intervention.

A simple approach to dealing with class imbalance is to introduce class weights in the loss function [24]. For example, the per-class losses can be multiplied by class weights equal to the inverse of the class frequencies. Another approach is to add margins (biases) to the logits in the loss function. For example, with $\pi$ denoting the prior of the positive class, adding margins equal to $\log \pi$ and $\log(1 - \pi)$ to the logits of the positive and negative classes, respectively, in the logistic loss leads to Fisher consistency for the balanced error [38]. Fisher consistency for the balanced error guarantees an optimal balanced error in the large-sample limit. Minimizing this logit-adjusted loss therefore encourages the model to optimize the balanced error. In recent years, logit-adjusted losses have led to better classification performance in long-tailed deep learning [26] and in GBDT for the modeling of imbalanced bioassay data [17]. However, these methods incorporate misclassification costs into the training process and are not designed for risk prediction problems, where the true class-posterior probabilities must be estimated.
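A minimal sketch of a binary logit-adjusted logistic loss, assuming log-prior margins: with logits (f, 0) for the positive and negative classes and margins τ log π and τ log(1 − π), the loss depends only on the adjusted score f + τ log(π/(1 − π)). The scale `tau` and the function names are illustrative additions; τ = 1 corresponds to the margins discussed above, and τ = 0 recovers the ordinary logistic loss.

```python
import math


def softplus(z):
    """Numerically stable log(1 + exp(z))."""
    return z + math.log1p(math.exp(-z)) if z > 0 else math.log1p(math.exp(z))


def logit_adjusted_logistic_loss(f, y, prior_pos, tau=1.0):
    """Binary logit-adjusted logistic loss.

    f: raw model score (log-odds of the positive class)
    y: label in {0, 1}
    prior_pos: prior pi of the positive class
    tau: margin scale (tau = 1 uses margins log(pi) and log(1 - pi))
    """
    # Difference of the adjusted logits: (f + tau*log(pi)) - (0 + tau*log(1 - pi)).
    z = f + tau * math.log(prior_pos / (1.0 - prior_pos))
    # Cross-entropy of the two-class softmax over the adjusted logits.
    return softplus(-z) if y == 1 else softplus(z)


# With a 1% prior, a positive sample scored at f = 0 is penalized far more
# than under the ordinary logistic loss, which gives log 2 for f = 0.
print(logit_adjusted_logistic_loss(0.0, 1, 0.01))
print(logit_adjusted_logistic_loss(0.0, 1, 0.01, tau=0.0))
```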

One way to derive a classification rule from a clinical risk prediction model is threshold (or logit) adjustment after training. Threshold adjustment addresses the classification of class-imbalanced data by choosing a decision threshold that optimizes a classification metric or the cost of clinical intervention; the estimated posterior probabilities are then converted into binary labels [62]. Setting the decision threshold to the prior of the positive class, $\pi = P(y = 1)$, optimizes the arithmetic mean of sensitivity and specificity [60,63,64], a metric known as the balanced accuracy. The prior $\pi$ is estimated by the relative frequency of the positive class, $\hat{\pi} = n_1/n$, where $n_1$ is the number of samples in the positive class and $n$ is the total number of samples in the training set. According to Theorem 1 in [60], the success of probability thresholding depends on obtaining accurate class-posterior probabilities. For completeness, we state the theorem for the binary class-imbalanced problem:

Theorem 1

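([60]). Let $\pi = P(y = 1)$ be the prior of class 1, and let $\eta(x) = P(y = 1 \mid x)$ be the true class-posterior probability. If the proportion of the minority class label in the testing set is equal to the proportion of the minority class label in the training set, then the classifier

$$\hat{y}(x) = \begin{cases} 1, & \text{if } \dfrac{\eta(x)}{\pi} > \dfrac{1 - \eta(x)}{1 - \pi}, \\ 0, & \text{otherwise}, \end{cases} \qquad (9)$$

maximizes the balanced accuracy.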

Theorem 1 provides a method to maximize the balanced accuracy (or, equivalently, minimize the balanced error) after training. With $\eta(x) = P(y = 1 \mid x)$ and $\pi = P(y = 1)$ as in Theorem 1, Equation (9) is a cost-weighted Bayes rule, $\hat{y}(x) = 1$ if $c_{\mathrm{FN}}\,\eta(x) > c_{\mathrm{FP}}\,(1 - \eta(x))$, with misclassification costs $c_{\mathrm{FN}} = 1/\pi$ and $c_{\mathrm{FP}} = 1/(1 - \pi)$. In this expression, $c_{\mathrm{FN}}$ is the cost of classifying a positive sample as negative, and $c_{\mathrm{FP}}$ is the cost of classifying a negative sample as positive [63,65]. When the class labels are highly imbalanced ($\pi \ll 1$), this classifier therefore assigns a much higher cost to misclassifying positive samples. Rearranging the condition in (9) gives the optimal decision rule for the balanced accuracy, $\eta(x) > \pi$, that is, a decision threshold equal to $\pi$. In practice, we only have the model's estimated class-posterior probabilities and the relative frequency of the positive class in the training set, $\hat{\pi}$. The goal is therefore to estimate accurate class-posterior probabilities in order to optimize the balanced accuracy. Collell et al. previously investigated the combination of threshold adjustment with bagging classifiers [60]. In this study, we investigate the performance of GBDT trained via focal loss combined with the empirical threshold equal to the relative frequency of the positive class in the training set:

$$\hat{y}(x) = \begin{cases} 1, & \text{if } \tilde{q}(x) > \hat{\pi}, \\ 0, & \text{otherwise}, \end{cases} \qquad (10)$$

where $\tilde{q}(x)$ is the calibrated confidence score of a model trained by minimizing the focal loss (5). This method has advantages in the context of clinical risk prediction. If the focal loss minimizer is learned, the transformed scores are calibrated class-posterior probabilities, and Equation (10) then maximizes the balanced accuracy (the mean of sensitivity and specificity) without any threshold optimization. The classifier depends solely on accurate estimates of the class-posterior probabilities and a consistent estimator of the prior probability of the minority class. Other decision thresholds can be used, provided the class-posterior probabilities are well calibrated; for example, different thresholds may minimize the costs associated with clinical interventions [9] or optimize classification metrics such as the F1-score [66].
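The decision rule in Equation (10) needs only the training labels and the calibrated scores. The sketch below, written with plain Python lists and hypothetical function names, estimates the prevalence n_1/n and thresholds the scores. The second call illustrates why the rule requires calibrated scores: inflated scores, as produced by a miscalibrated focal-loss model, all exceed the prevalence and are labeled positive.

```python
def estimate_prior(y_train):
    """Relative frequency of the positive class, n_1 / n."""
    return sum(y_train) / len(y_train)


def prevalence_threshold_classifier(scores, y_train):
    """Label a sample positive when its calibrated score exceeds the training prevalence."""
    pi_hat = estimate_prior(y_train)
    return [1 if q > pi_hat else 0 for q in scores]


y_train = [1] * 3 + [0] * 97          # prevalence 0.03
calibrated = [0.01, 0.02, 0.05, 0.20]
inflated = [0.08, 0.09, 0.15, 0.40]   # overestimated risks
print(prevalence_threshold_classifier(calibrated, y_train))
print(prevalence_threshold_classifier(inflated, y_train))
```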

3.5. Evaluation Metrics

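This section introduces the evaluation metrics used in this study. We describe discrimination, calibration, and classification metrics to evaluate the risk prediction and classification models. The discrimination metrics used in this study are the AUROC and the H-measure. The receiver operating characteristic (ROC) curve displays the true positive rate (TPR) against the false positive rate (FPR) for different decision thresholds. The AUROC is 1 when the model ranks all positive samples higher than all negative samples, and 0.5 when the model cannot separate positive samples from negative samples, that is, when it performs no better than random guessing. The AUROC is given by the following expression:

$$\mathrm{AUROC} = \frac{1}{n_1 n_0} \sum_{i:\, y_i = 1} \sum_{j:\, y_j = 0} \left( \mathbb{1}\left[q_i > q_j\right] + \tfrac{1}{2}\,\mathbb{1}\left[q_i = q_j\right] \right),$$

where $n_1$ and $n_0$ are the numbers of positive and negative samples, $q_i$ is the predicted probability for sample $i$, and $\mathbb{1}[\cdot]$ is the indicator function.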

The H-measure is also defined over the ROC curve and accounts for misclassification costs by incorporating a misclassification severity distribution [7,67]. We also report the average precision, which summarizes the precision–recall curve and is useful for comparing classifiers trained on class-imbalanced data. The precision–recall curve shows precision and recall (TPR) for different decision thresholds. The average precision is the weighted mean of the precision values obtained at the different decision thresholds, where each weight is the increase in recall relative to the recall at the previous threshold: $\mathrm{AP} = \sum_k (R_k - R_{k-1})\,P_k$, where $P_k$ and $R_k$ are the precision and recall at the $k$-th threshold.
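The two ranking metrics can be sketched in plain Python. The AUROC is computed here by comparing every positive-negative pair (quadratic cost, so only suitable for illustration), and the average precision follows the step-wise definition above, treating tied scores as a single threshold; to our understanding this matches the definition used by scikit-learn's `average_precision_score`. The H-measure is omitted, and the function names are illustrative.

```python
def auroc(scores, labels):
    """Probability that a random positive is ranked above a random negative (ties count 1/2)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = 0.0
    for sp in pos:
        for sn in neg:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def average_precision(scores, labels):
    """Sum over thresholds of (increase in recall) x (precision at that threshold)."""
    n_pos = sum(labels)
    ranked = sorted(zip(scores, labels), key=lambda t: -t[0])
    ap = tp = fp = 0.0
    prev_recall = 0.0
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Samples with equal scores share one threshold.
        while i < len(ranked) and ranked[i][0] == threshold:
            tp += ranked[i][1]
            fp += 1 - ranked[i][1]
            i += 1
        recall = tp / n_pos
        precision = tp / (tp + fp)
        ap += (recall - prev_recall) * precision
        prev_recall = recall
    return ap


scores = [0.9, 0.8, 0.7, 0.6]
labels = [1, 0, 1, 0]
print(auroc(scores, labels), average_precision(scores, labels))
```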

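To assess calibration, we used the Brier score. The Brier score is the mean squared error of the predicted probabilities, and it is a strictly proper scoring rule:

$$\mathrm{BS} = \frac{1}{n} \sum_{i=1}^{n} \left(q_i - y_i\right)^2,$$

where $q_i$ is the predicted probability of the positive class for sample $i$ and $y_i \in \{0, 1\}$ is its label.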

The best possible Brier score is 0. Because the Brier score reflects both calibration and discrimination, it serves as an overall accuracy metric rather than a pure calibration measure. Therefore, we also use calibration plots to visualize the calibration of the predicted probabilities. We use quantile binning to assign predicted probabilities to groups: the predicted probabilities are sorted and grouped into 10 bins, each with the same number of samples, and the fraction of positive samples is plotted against the mean predicted probability for each bin. For a perfectly calibrated model, the calibration curve lies on the line of identity. We also report the calibration slope and intercept to quantify calibration. They are extracted from a logistic regression model that uses the logit of the predicted posterior probabilities as the independent variable and the binary outcome as the dependent variable; the fitted coefficient and intercept are the calibration slope and the calibration intercept, respectively. A risk prediction model is calibrated if the calibration slope and intercept are equal to 1 and 0, respectively, in which case the predicted posterior probabilities agree with the positive label frequencies in the test data. Deviations from these values indicate miscalibration. The calibration slope and intercept are commonly used to assess models in the clinical risk prediction literature [8,10,35,68].
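A minimal sketch of the calibration measures described above, assuming predicted probabilities strictly between 0 and 1: the Brier score, a calibration curve with equal-size quantile bins, and the calibration slope and intercept obtained by fitting a two-parameter logistic regression on logit(q) with Newton's method. In practice a statistics package (the study lists statsmodels) would fit the logistic regression; the plain Newton loop here only illustrates the computation, and the function names are hypothetical.

```python
import math


def brier_score(probs, labels):
    """Mean squared difference between predicted probabilities and 0/1 outcomes."""
    return sum((q - y) ** 2 for q, y in zip(probs, labels)) / len(labels)


def quantile_calibration_curve(probs, labels, n_bins=10):
    """(mean predicted probability, fraction of positives) for equal-size bins."""
    order = sorted(range(len(probs)), key=lambda i: probs[i])
    n = len(order)
    curve = []
    for b in range(n_bins):
        idx = order[b * n // n_bins:(b + 1) * n // n_bins]
        if idx:
            mean_pred = sum(probs[i] for i in idx) / len(idx)
            frac_pos = sum(labels[i] for i in idx) / len(idx)
            curve.append((mean_pred, frac_pos))
    return curve


def calibration_slope_intercept(probs, labels, n_iter=50, tol=1e-10):
    """Fit P(y = 1) = sigmoid(a + b * logit(q)) by Newton's method; return (b, a)."""
    x = [math.log(q / (1.0 - q)) for q in probs]  # requires 0 < q < 1
    a = b = 0.0
    for _ in range(n_iter):
        # Gradient (ga, gb) and negative Hessian [[haa, hab], [hab, hbb]].
        ga = gb = haa = hab = hbb = 0.0
        for xi, yi in zip(x, labels):
            p = 1.0 / (1.0 + math.exp(-(a + b * xi)))
            w = p * (1.0 - p)
            ga += yi - p
            gb += (yi - p) * xi
            haa += w
            hab += w * xi
            hbb += w * xi * xi
        det = haa * hbb - hab * hab
        da = (hbb * ga - hab * gb) / det
        db = (haa * gb - hab * ga) / det
        a += da
        b += db
        if abs(da) + abs(db) < tol:
            break
    return b, a  # (calibration slope, calibration intercept)


# Outcome frequencies match the predictions in each group (slope 1, intercept 0).
probs = [0.2] * 5 + [0.5] * 4 + [0.8] * 5
labels = [1] + [0] * 4 + [1, 1, 0, 0] + [1] * 4 + [0]
print(brier_score(probs, labels))
print(calibration_slope_intercept(probs, labels))
# Doubling the log-odds makes the same predictions overconfident (slope 0.5).
overconfident = [1.0 / (1.0 + math.exp(-2.0 * math.log(q / (1.0 - q)))) for q in probs]
print(calibration_slope_intercept(overconfident, labels))
```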

To assess classification performance, we used sensitivity (recall or TPR), specificity or true negative rate (TNR), and balanced accuracy, the arithmetic mean of sensitivity and specificity. Sensitivity and specificity measure the proportion of correctly classified samples within the positive and negative classes, respectively. The metrics are expressed as follows:

$$\text{Sensitivity} = \frac{TP}{TP + FN}, \qquad \text{Specificity} = \frac{TN}{TN + FP}, \qquad \text{Balanced accuracy} = \frac{1}{2}\left(\frac{TP}{TP + FN} + \frac{TN}{TN + FP}\right),$$

where TP stands for true positives, TN for true negatives, FP for false positives, and FN for false negatives. These metrics depend on the choice of a decision threshold to convert the class-posterior probabilities into class labels.
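The threshold-dependent metrics follow directly from the confusion counts. The sketch below (hypothetical function names) also illustrates why balanced accuracy suits imbalanced data: labeling every sample positive yields a balanced accuracy of 0.5 regardless of the class proportions.

```python
def confusion_counts(y_true, y_pred):
    """Return (TP, TN, FP, FN) for 0/1 labels and predictions."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, tn, fp, fn


def classification_metrics(y_true, y_pred):
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    sensitivity = tp / (tp + fn)  # TPR, recall
    specificity = tn / (tn + fp)  # TNR
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "balanced_accuracy": 0.5 * (sensitivity + specificity),
    }


y_true = [1, 1, 0, 0, 0, 0]
print(classification_metrics(y_true, [1, 0, 0, 0, 1, 0]))
print(classification_metrics(y_true, [1] * 6))  # everything labeled positive
```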

This study used data from the Scientific Registry of Transplant Recipients (SRTR) [69]. The SRTR data system includes data on all donors, wait-listed candidates, and transplant recipients in the US, submitted by members of the Organ Procurement and Transplantation Network (OPTN). The Health Resources and Services Administration (HRSA), US Department of Health and Human Services, provides oversight to the activities of the OPTN and SRTR contractors.

4. Experiments

This section describes lung transplantation data from the Scientific Registry of Transplant Recipients (SRTR) and the Behavioral Risk Factor Surveillance System (BRFSS) of the Centers for Disease Control and Prevention (CDC). The first application consists of cancer prediction in lung transplant recipients. The second application consists of the prediction of diabetes based on survey responses from residents of the United States. The experimental settings and results are described in detail.

4.1. Datasets

4.1.1. Lung Transplant Data

SRTR has stored data on solid organ transplant candidates, recipients, and donors in the United States since 1987. Its primary data source is the Organ Procurement and Transplantation Network (OPTN). The registry covers heart, lung, kidney, pancreas, liver, and intestine transplants. SRTR files provide features related to demographics, medical history, immunosuppression, histocompatibility, donor–recipient matching, transplant follow-up, and outcomes including death and malignancy after transplantation.

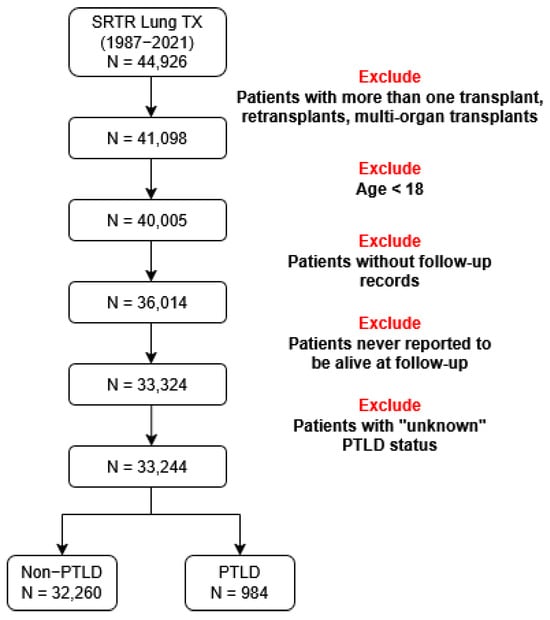

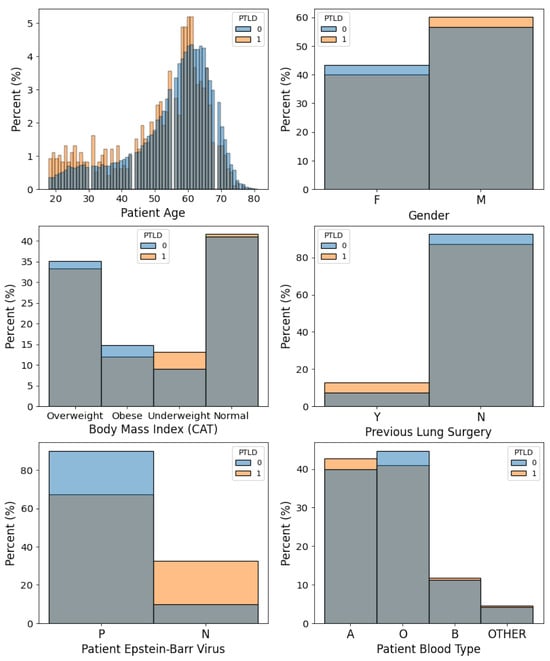

The initial lung transplant file contains 44,926 patients from 16 October 1987 to 1 June 2021. We extract lung transplant recipients from the SRTR and filter the data using the exclusion criteria shown in Figure 2: we exclude patients with retransplantation, patients under 18 years of age, patients without follow-up records, and patients with unknown cancer status. The final dataset includes 33,244 patients from 1 January 1988 to 28 November 2020. The binary outcome is post-transplant lymphoproliferative disorder (PTLD), a malignancy that occurs in solid organ transplant recipients [70]. Among lung transplant recipients, it is one of the most common malignancies after non-melanoma skin cancer, and the mortality of lung transplant recipients who develop PTLD ranges from 20% to 50% [71]. The status and type of malignancy are extracted from the SRTR malignancy file. We calculate the time to PTLD by subtracting the date of transplantation from the date of PTLD diagnosis. Of the 33,244 patients, 32,260 (97.04%) did not develop PTLD and 984 (2.96%) developed PTLD. We then generate datasets with binary outcomes for five time horizons: 1, 3, 5, 8, and 10 years. Patients who were not followed up or who died during the time horizon were excluded. Patients who developed PTLD before the end of the time horizon are assigned an outcome of 1, and patients who did not develop PTLD before the end of the time horizon are assigned an outcome of 0. The resulting datasets contain 28,883, 19,501, 13,351, 7507, and 5159 patients for 1, 3, 5, 8, and 10 years after transplantation, respectively. The datasets are highly class-imbalanced: the relative frequencies of the event are 1.14%, 2.92%, 5.19%, 10.87%, and 17.02% for 1, 3, 5, 8, and 10 years after transplantation, respectively.
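The horizon-specific outcomes can be sketched as follows. The record fields are hypothetical (they are not SRTR variable names), a year is approximated as 365.25 days, and a recipient without PTLD whose follow-up ends before the horizon (including death) is excluded, which is our reading of the exclusion rule above.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional, Tuple


@dataclass
class Recipient:
    # Hypothetical fields for illustration; these are not SRTR column names.
    transplant_date: date
    ptld_date: Optional[date]  # date of PTLD diagnosis, None if never diagnosed
    last_seen_date: date       # date of last follow-up or death


def years_between(start: date, end: date) -> float:
    return (end - start).days / 365.25  # assumption: 365.25-day years


def horizon_label(r: Recipient, horizon_years: float) -> Optional[int]:
    """1 if PTLD occurs within the horizon, 0 if followed PTLD-free through it,
    None (excluded) if follow-up ends before the horizon without PTLD."""
    if r.ptld_date is not None:
        # Time to PTLD = diagnosis date minus transplant date.
        if years_between(r.transplant_date, r.ptld_date) <= horizon_years:
            return 1
    if years_between(r.transplant_date, r.last_seen_date) >= horizon_years:
        return 0
    return None


def build_horizon_dataset(recipients: List[Recipient], horizon_years: float) -> List[Tuple[Recipient, int]]:
    labelled = [(r, horizon_label(r, horizon_years)) for r in recipients]
    return [(r, y) for r, y in labelled if y is not None]


cohort = [
    Recipient(date(2010, 1, 1), date(2010, 6, 1), date(2015, 1, 1)),  # PTLD after ~5 months
    Recipient(date(2010, 1, 1), None, date(2016, 1, 1)),              # PTLD-free for ~6 years
    Recipient(date(2010, 1, 1), None, date(2012, 1, 1)),              # follow-up ends at ~2 years
]
for h in (1, 3, 10):
    print(h, [y for _, y in build_horizon_dataset(cohort, h)])
```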
We consider features including demographics, immunosuppression during transplantation and early post-transplantation period (medications for induction and anti-rejection), viral infections prior to transplantation, and some of the most common human leukocyte antigens (HLA) among lung recipients. A total of 54 features are used to predict PTLD in lung transplant recipients. Feature names and basic statistics are shown in Table A1 and Table A2 of Appendix A. Two of the 54 features are numerical. The remaining features are categorical. We perform a Wilcoxon rank sum test to compare numerical features of cancer and non-cancer patients. The Wilcoxon rank sum test is a non-parametric statistical test that compares the locations of two distributions. The p-values of the statistical test are available in Table A1 of Appendix A. We also perform a chi-square test to compare categorical features of cancer and non-cancer patients. The test is performed to assess whether there is an association between categorical features and the outcome. The contingency tables and p-values are available in Table A2 of Appendix A. These statistical tests are performed as preliminary analyses of the features. Figure 3 shows the distributions of six features used to predict PTLD.
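As an illustration of the preliminary analysis, the sketch below implements a two-sided Wilcoxon rank sum test with the large-sample normal approximation, using average ranks for ties and no tie correction to the variance; to our understanding, `scipy.stats.ranksums` uses the same approximation. The chi-square test for categorical features is not shown, and the function name is hypothetical.

```python
import math


def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank sum test (normal approximation); returns (z, p)."""
    combined = sorted([(v, 0) for v in x] + [(v, 1) for v in y])
    ranks = [0.0] * len(combined)
    i = 0
    while i < len(combined):
        # Find the run of tied values and give each the average 1-based rank.
        j = i
        while j + 1 < len(combined) and combined[j + 1][0] == combined[i][0]:
            j += 1
        avg_rank = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[k] = avg_rank
        i = j + 1
    w = sum(r for r, (_, group) in zip(ranks, combined) if group == 0)
    n1, n2 = len(x), len(y)
    mean_w = n1 * (n1 + n2 + 1) / 2.0
    sd_w = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)
    z = (w - mean_w) / sd_w
    p = math.erfc(abs(z) / math.sqrt(2.0))  # equals 2 * (1 - Phi(|z|))
    return z, p


print(rank_sum_test([1, 2, 3], [1, 2, 3]))
print(rank_sum_test(list(range(1, 11)), list(range(11, 21))))
```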

Figure 2.

Lung transplantation patients extracted from the Scientific Registry of Transplant Recipients (SRTR).

Figure 3.

Examples of features used to predict risk of post-transplant lymphoproliferative disorder (PTLD) in lung transplant recipients.

4.1.2. Diabetes Data

We also use data from the Behavioral Risk Factor Surveillance System (BRFSS) [72]. The BRFSS is a survey data collection system established by the Centers for Disease Control and Prevention (CDC) in 1984. It collects data from residents of the United States on demographics, risky health behaviors, healthcare coverage, and chronic and infectious diseases. More than 400,000 surveys are conducted by telephone across the 50 US states every year. We utilize the pre-processing pipeline available in [73], which extracts 253,680 survey responses without missing values from the 2015 file available in [74]. We obtained 229,474 samples after removing duplicate rows.

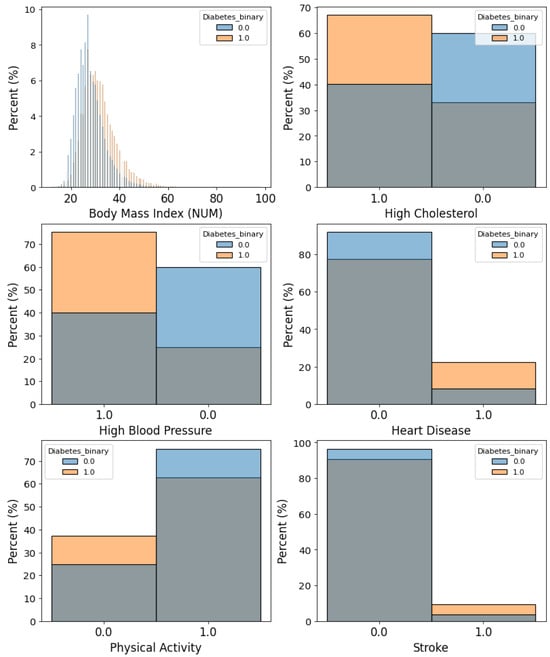

The binary outcome for the BRFSS dataset is diabetes (including pre-diabetes), with a relative frequency of 15.29%. There are 21 features in total, extracted from the survey responses; each survey response represents a row (sample). Three of the 21 features are numerical: body mass index, number of days with poor mental health (in a 30-day window), and number of days with poor physical health (in a 30-day window). The remaining features are categorical and relate to high blood pressure, high cholesterol, smoking, stroke, alcohol consumption, heart disease, demographics (sex, age group, education, and income), healthcare coverage, and eating habits (fruit and vegetable consumption per day). A Wilcoxon rank sum test was performed to compare the numerical features of residents with and without diabetes, and a chi-square test was performed to compare the categorical features. Feature names, means, standard deviations, and categories are shown in Table A3 and Table A4. Figure 4 shows the distributions of six features used to predict diabetes.

Figure 4.

Examples of features used to predict risk of diabetes. Features are based on survey responses collected by the Centers for Disease Control and Prevention (CDC).

4.2. Design of Experiments

Each dataset is split into a training set (70%) and a testing set (30%). Median and mode imputation are performed for continuous and categorical features with missing values, respectively. We consider several statistical and machine learning models for comparison, including logistic regression (LR) without regularization, LR with least absolute shrinkage and selection operator (LASSO) regularization, LR with ridge regularization, LightGBM, XGBoost, LightGBM-Focal, and XGBoost-Focal. LR models are chosen because they are commonly used in clinical risk prediction applications. We compare the performance of focal-aware GBDT before and after applying the transformation. We also apply Platt scaling [45] and isotonic regression [47] to the predictions of GBDT trained using focal loss. For these heuristic techniques, we use 10-fold cross-validation over the training set to generate a new set consisting of out-of-fold miscalibrated predictions and the true class labels, and we then fit sigmoid and isotonic regression models to this new set. For the Platt scaling method [45], the miscalibrated predictions are converted into logits, and the true labels are replaced by Platt's smoothed targets, $(N_+ + 1)/(N_+ + 2)$ for positive samples and $1/(N_- + 2)$ for negative samples, where $N_+$ and $N_-$ are the numbers of positive and negative samples. The sigmoid transformation used in Platt scaling is:

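$$\hat{q} = \frac{1}{1 + \exp\left(A \operatorname{logit}(q) + B\right)},$$

where $\operatorname{logit}(q) = \log\left(q / (1 - q)\right)$ is the logarithmic odds of prediction $q$, and $A$ and $B$ are parameters fitted by maximum likelihood on the cross-validated predictions to improve calibration. The output $\hat{q}$ is the calibrated probabilistic prediction.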
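A minimal sketch of Platt scaling as described above, assuming out-of-fold predictions strictly between 0 and 1: the predictions are converted to logits, the labels are replaced by Platt's smoothed targets, and A and B are fitted by plain Newton iterations on the cross-entropy. Platt's original procedure adds safeguards (such as a line search) that are omitted here, and the function names are hypothetical.

```python
import math


def platt_targets(labels):
    """Platt's smoothed targets instead of hard 0/1 labels."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    t_pos = (n_pos + 1.0) / (n_pos + 2.0)
    t_neg = 1.0 / (n_neg + 2.0)
    return [t_pos if y == 1 else t_neg for y in labels]


def fit_platt(probs, labels, n_iter=100, tol=1e-10):
    """Fit A, B of q_hat = 1 / (1 + exp(A * logit(q) + B)) by Newton's method."""
    f = [math.log(q / (1.0 - q)) for q in probs]
    t = platt_targets(labels)
    # Work with u = -(A * f + B) = c1 * f + c0, an ordinary logistic model.
    c1 = c0 = 0.0
    for _ in range(n_iter):
        g1 = g0 = h11 = h10 = h00 = 0.0
        for fi, ti in zip(f, t):
            p = 1.0 / (1.0 + math.exp(-(c1 * fi + c0)))
            w = p * (1.0 - p)
            g1 += (ti - p) * fi
            g0 += ti - p
            h11 += w * fi * fi
            h10 += w * fi
            h00 += w
        det = h11 * h00 - h10 * h10
        d1 = (h00 * g1 - h10 * g0) / det
        d0 = (h11 * g0 - h10 * g1) / det
        c1 += d1
        c0 += d0
        if abs(d1) + abs(d0) < tol:
            break
    return -c1, -c0  # A, B


def apply_platt(probs, A, B):
    return [1.0 / (1.0 + math.exp(A * math.log(q / (1.0 - q)) + B)) for q in probs]


# Groups whose outcome rates match the predictions: expect A close to -1, B close to 0.
probs = [0.2] * 500 + [0.5] * 400 + [0.8] * 500
labels = [1] * 100 + [0] * 400 + [1] * 200 + [0] * 200 + [1] * 400 + [0] * 100
A, B = fit_platt(probs, labels)
print(A, B)
print(apply_platt([0.1, 0.5, 0.9], A, B))
```

When A < 0 the fitted mapping is increasing in q, so the ranking of the scores, and hence the AUROC, is unchanged.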

All models are tuned with Bayesian hyperparameter optimization. Hyperparameters are selected to minimize the 10-fold cross-validated cross-entropy loss for all models except those trained with focal loss. The focusing parameter of the focal loss is first set to 0.1, and the search then continues over values from 0.25 to 2.5 with a step size of 0.25. The distributions of the other hyperparameters are provided in Appendix B. The scikit-learn [75], LightGBM, XGBoost, scikit-optimize, statsmodels, and hmeasure packages are used for model development, hyperparameter tuning, and evaluation. Algorithms 1 and 2 are used to train all models that use focal loss as the loss function.
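The candidate values of the focusing parameter can be written out explicitly. In this minimal sketch, an exhaustive loop stands in for the Bayesian search of Algorithms 1 and 2 (which are not reproduced here), and `evaluate` is a hypothetical callback, for example one that trains a focal-loss GBDT with the given parameter and returns a cross-validated loss such as the Brier score.

```python
def focusing_parameter_grid():
    """Candidate focusing parameters: 0.1, then 0.25 to 2.5 in steps of 0.25."""
    return [0.1] + [0.25 * k for k in range(1, 11)]


def select_focusing_parameter(evaluate, candidates=None):
    """Return the candidate with the lowest value of `evaluate` (lower is better)."""
    if candidates is None:
        candidates = focusing_parameter_grid()
    return min(candidates, key=evaluate)


print(focusing_parameter_grid())
# Toy stand-in for a cross-validated loss, minimized at 1.0.
print(select_focusing_parameter(lambda gamma: (gamma - 1.0) ** 2))
```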

We use the relative frequency of the minority class label (prevalence) as the decision threshold for calibrated models. In addition, we also use an optimized decision threshold for the miscalibrated GBDT models trained using focal loss. An optimized threshold is used because the posterior probabilities produced by these models are not accurate. Therefore, the prevalence threshold will not optimize balanced accuracy according to Theorem 1 in Section 3.4. To find the optimized threshold, we train the model with optimal hyperparameters and perform 10-fold cross-validation again. For each iteration of 10-fold cross-validation, we find the best decision threshold that optimizes the balanced accuracy in the validation fold. Finally, the mean of these ten thresholds is used as the final decision threshold to perform the classification in the test set.
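The threshold-optimization step can be sketched as follows, assuming the validation-fold labels and scores from the 10-fold cross-validation are already available and that each fold contains both classes. Candidate thresholds are the observed scores (a sample is labeled positive when its score is at least the threshold), and the final threshold is the mean of the per-fold optima. Function names are hypothetical.

```python
def balanced_accuracy_at(y_true, scores, threshold):
    """Balanced accuracy when samples with score >= threshold are labeled positive."""
    tp = fn = tn = fp = 0
    for y, s in zip(y_true, scores):
        pred = 1 if s >= threshold else 0
        if y == 1:
            tp += pred
            fn += 1 - pred
        else:
            fp += pred
            tn += 1 - pred
    return 0.5 * (tp / (tp + fn) + tn / (tn + fp))


def best_threshold(y_true, scores):
    """Search the observed scores for the threshold with the highest balanced accuracy."""
    candidates = sorted(set(scores))
    return max(candidates, key=lambda t: balanced_accuracy_at(y_true, scores, t))


def cross_validated_threshold(validation_folds):
    """Mean of the per-fold optimal thresholds.

    validation_folds: list of (y_valid, scores_valid) pairs, one per CV fold.
    """
    thresholds = [best_threshold(y, s) for y, s in validation_folds]
    return sum(thresholds) / len(thresholds)


folds = [
    ([0, 0, 1, 1], [0.1, 0.2, 0.3, 0.4]),
    ([0, 1, 0, 1], [0.2, 0.5, 0.3, 0.6]),
]
print(cross_validated_threshold(folds))
```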

Predicted risks are plotted to show the calibration of models trained using focal loss, using calibration plots based on quantile binning with 10 bins. The described experiment is repeated 10 times for the PTLD dataset using different random seeds. For the prediction of diabetes using the BRFSS, we performed only three repetitions because of the large size of the dataset, and we report the mean performance over these three cross-validation experiments.

4.3. Results

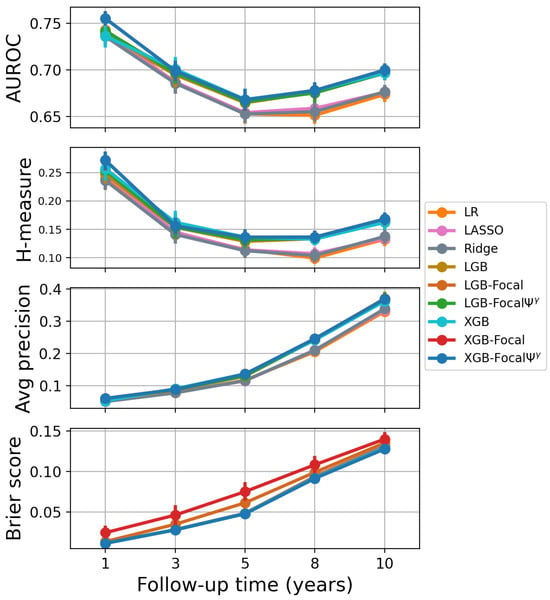

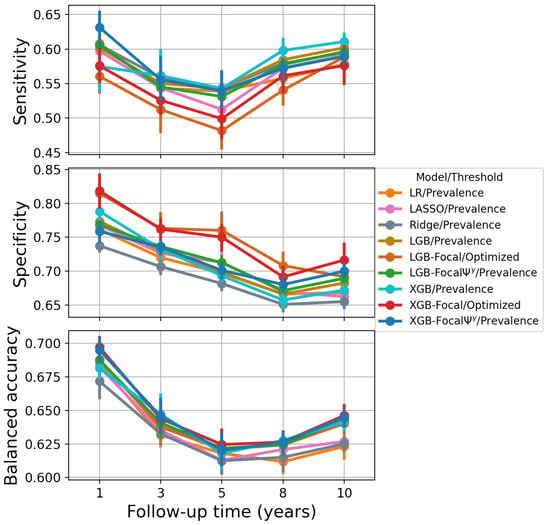

The mean values of the focusing parameter over the 10 experiments are 1.49, 1.34, 1.63, 1.10, and 0.92 for the XGBoost-Focal models and 0.60, 0.85, 1.05, 0.65, and 0.61 for the LightGBM-Focal models for the prediction of the risk of PTLD at 1, 3, 5, 8, and 10 years, respectively. The focusing parameter for each repetition of shuffled cross-validation is selected automatically by Bayesian hyperparameter optimization (Algorithms 1 and 2). Higher values of the focusing parameter worsen calibration. Tables 1–3 and Figures 5 and 6 show the model performance for the prediction of PTLD in lung transplant recipients. Table 1 shows that the AUROC, H-measure, and average precision are the same for GBDT-Focal before and after applying the transformation. GBDT-Focal combined with Platt scaling also preserves the same discriminatory power. This result is expected because both the closed-form transformation and the sigmoid transformation used in Platt scaling preserve the order of the confidence scores. Therefore, ranking metrics such as the AUROC, H-measure, and average precision remain unchanged.
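The argument that ranking metrics are unchanged can be checked directly: any strictly increasing transformation of the scores leaves their ordering, and therefore the AUROC, H-measure, and average precision, unchanged. The sketch below uses an illustrative sigmoid recalibration with made-up parameters A = −0.8 and B = 0.3 and contrasts it with a non-monotone transformation; the function names are hypothetical.

```python
import math


def ranking(scores):
    """Indices of samples sorted by score (ties broken by index)."""
    return sorted(range(len(scores)), key=lambda i: (scores[i], i))


def platt_like(q, A=-0.8, B=0.3):
    # Sigmoid recalibration; with A < 0 the output is increasing in q.
    return 1.0 / (1.0 + math.exp(A * math.log(q / (1.0 - q)) + B))


scores = [0.91, 0.15, 0.42, 0.67, 0.08, 0.33]
recalibrated = [platt_like(q) for q in scores]
print(ranking(scores) == ranking(recalibrated))

folded = [(q - 0.5) ** 2 for q in scores]  # not monotone in q
print(ranking(scores) == ranking(folded))
```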

Table 1.

Model performance according to AUROC, H-measure, and average precision for PTLD prediction in lung transplant recipients. The best models are underlined.

Table 2.

Model performance according to Brier score for PTLD prediction in lung transplant recipients. The best models are underlined.

Table 3.

Model performance according to classification metrics (sensitivity, specificity and balanced accuracy) for PTLD risk prediction in lung transplant recipients. The best models are underlined.

Figure 5.

Performance based on unthresholded metrics for PTLD prediction. AUROC, H-measure and average precision show equal performance for calibrated and miscalibrated GBDT-Focal models. LightGBM-Focal and XGBoost-Focal have worse Brier scores due to miscalibration of the posterior probabilities.

Figure 6.

Performance based on classification metrics for PTLD prediction. Optimizing the decision threshold for balanced accuracy provides lower sensitivity and higher specificity compared to the prevalence threshold.

Table 2 and Figure 5 show that the transformation improves the Brier score of both XGBoost-Focal and LightGBM-Focal. All calibrated XGBoost-Focal models achieve a better Brier score, and hence better overall accuracy, than their miscalibrated counterparts. The Brier scores of the miscalibrated XGBoost-Focal are 0.0242, 0.0460, 0.0749, 0.1082, and 0.1400 for years 1, 3, 5, 8, and 10, respectively, whereas those of XGBoost-Focal calibrated using the transformation are 0.0110, 0.0277, 0.0476, 0.0914, and 0.1278. Since discrimination remains unchanged, the improvement in the Brier score can be attributed to improved calibration of the confidence scores.

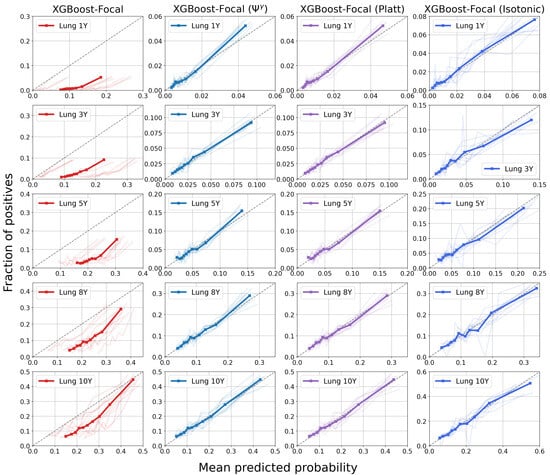

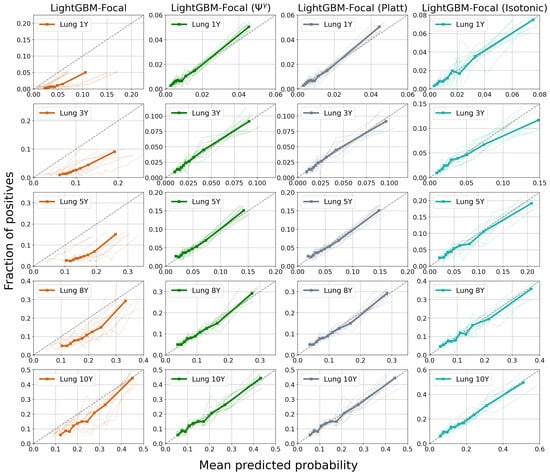

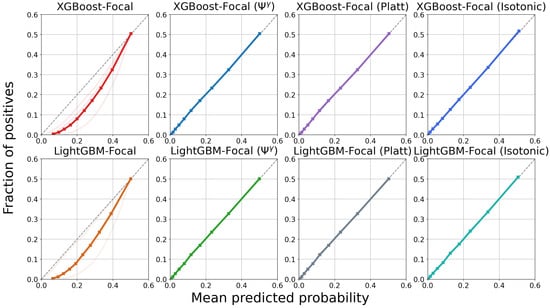

Figures 7 and 8 display calibration plots for different time horizons. As seen in the leftmost columns, both XGBoost and LightGBM trained via focal loss minimization overestimate the risk of PTLD after transplantation across all time horizons. For example, the group with the lowest risk during the first year after transplantation (first bin in the first subplot of XGBoost-Focal in Figure 7) has a mean predicted probability of 0.0758, while its observed risk is 0.002. Similarly, the group with the highest risk during the first year has a mean predicted probability of 0.185, while its observed risk is 0.052. In contrast, the calibration of XGBoost trained via focal loss is greatly improved across all time horizons after applying the transformation: the mean predicted probabilities of the lowest- and highest-risk groups for the first year become 0.0035 and 0.044, respectively. All post-processing methods improve calibration relative to the raw GBDT trained with focal loss. However, GBDT calibrated using isotonic regression performs worse than the other post-processing methods, as reflected by its higher Brier score in Table 2. This might be due to the small number of positive samples available in the lung transplant dataset.

Figure 7.

Calibration plots for XGBoost trained using focal loss for the prediction of post-transplant lymphoproliferative disorder (PTLD) in lung transplant recipients. Each row displays the model calibration for each of the five time horizons. There are 10 curves in each subplot representing 10 different cross-validation experiments. The thick bold curve is the mean. The dotted diagonal line (line of identity) represents perfect calibration, where the mean predicted probabilities match the fraction of positives. Confidence scores are miscalibrated before applying methods such as the transformation, Platt scaling, and isotonic regression.

Figure 8.

Calibration plots for LightGBM trained using focal loss for the prediction of post-transplant lymphoproliferative disorder (PTLD) in lung transplant recipients. Each row displays the model calibration for each of the five time horizons. There are 10 curves in each subplot representing 10 different cross-validation experiments. The thick bold curve is the mean. The dotted diagonal line (line of identity) represents perfect calibration, where the mean predicted probabilities match the fraction of positives. Confidence scores are miscalibrated before applying methods such as the transformation, Platt scaling, and isotonic regression.

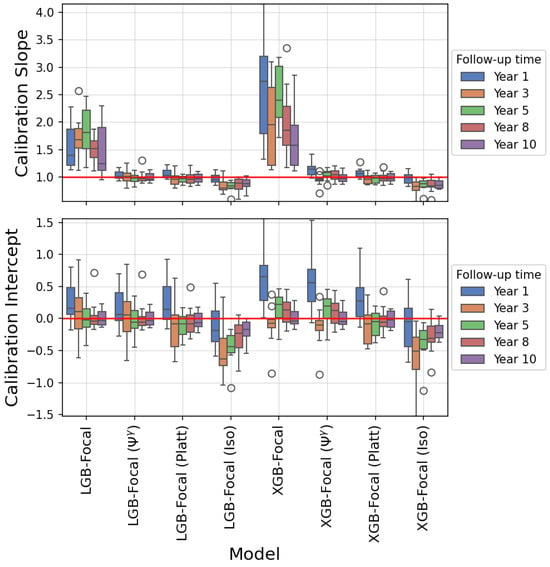

The calibration slope and intercept were also calculated to quantify the calibration plots and are shown as box plots in Figure 9. The ideal values of the calibration slope and intercept are 1 and 0, respectively (shown as red horizontal lines in both subplots). For the prediction of the 1-year risk of PTLD, the median calibration slope and intercept of XGBoost-Focal are 2.744 (interquartile range (IQR): 1.787 to 3.196) and 0.654 (IQR: 0.282 to 0.829), respectively, indicating that XGBoost-Focal is severely miscalibrated. The corresponding values for XGBoost-Focal calibrated using the transformation are 1.138 (IQR: 1.041 to 1.192) and 0.563 (IQR: 0.262 to 0.772); using Platt scaling, 1.066 (IQR: 1.009 to 1.113) and 0.272 (IQR: 0.032 to 0.486); and using isotonic regression, 0.986 (IQR: 0.892 to 1.043) and −0.048 (IQR: −0.436 to 0.160). For the prediction of the 10-year risk of PTLD, the median calibration slope and intercept of XGBoost-Focal are 1.579 (IQR: 1.217 to 1.941) and −0.040 (IQR: −0.081 to 0.104), respectively. The corresponding values for XGBoost-Focal calibrated using the transformation are 0.968 (IQR: 0.917 to 1.040) and −0.045 (IQR: −0.088 to 0.099); using Platt scaling, 0.969 (IQR: 0.932 to 1.058) and −0.026 (IQR: −0.137 to 0.114); and using isotonic regression, 0.848 (IQR: 0.802 to 0.935) and −0.224 (IQR: −0.297 to −0.110). The calibration slopes and intercepts for the remaining time horizons are shown in Figure 9.

Figure 9.

Calibration slope and intercept of GBDT trained using the focal loss function (prediction of post-transplant lymphoproliferative disorder). The calibration slope and intercept were extracted from a logistic regression model with the logit of the predicted class-posterior probabilities as the independent variable and the binary outcome as the dependent variable. The box plots show results for 10 repetitions of shuffled cross-validation. Deviations from a slope of 1 and an intercept of 0 (red horizontal lines) indicate miscalibration of the class-posterior probabilities. XGBoost-Focal shows worse miscalibration than LightGBM-Focal because it was trained using larger values of the focusing parameter. Applying calibration methods such as the transformation, Platt scaling, and isotonic regression pushes the calibration slope towards 1 (better calibration).

XGBoost trained using focal loss achieved better discrimination than standard XGBoost for predicting cancer one year after lung transplantation: the AUROC values are 0.755 and 0.736, and the H-measure values are 0.271 and 0.257, for XGBoost-Focal and standard XGBoost, respectively (Table 1 and Figure 5). XGBoost-Focal calibrated using the transformation achieved the best sensitivity (0.631) for predicting cancer one year after transplantation, as shown in Table 3 and Figure 6. In the remaining time periods, the discriminatory power and overall accuracy (in terms of the Brier score) of XGBoost-Focal and XGBoost trained using cross-entropy loss are comparable. The differences in AUROC between the best GBDT model and the best logistic regression model are in the range 0.007–0.023 for all risk prediction tasks (including diabetes prediction). Differences in the H-measure and average precision are more prominent: 0.013–0.031 for the H-measure and 0.006–0.035 for the average precision. XGBoost also tended to perform slightly better than LightGBM in all prediction tasks.

In summary, the XGBoost-Focal models are as competitive as or better than the other models according to the AUROC, H-measure, average precision, and Brier score across all time horizons. As the time horizon increases, GBDT models increasingly outperform the logistic regression models (unregularized and regularized), as evidenced by their H-measure and average precision. Figure 5 displays the performance of the risk prediction models as the time horizon changes.

Table 3 provides the thresholded classification metrics of all models, including sensitivity and specificity. Miscalibrated models trained with focal loss overestimate the probability of the event. As a result, the prevalence threshold converts most of their confidence scores into positive labels, leading to perfect or nearly perfect sensitivity but low specificity. This problem is solved either by using an optimized threshold or by calibrating the confidence scores before using the prevalence as the decision threshold, as discussed in Section 3.4 (Equation (10)). Optimized decision thresholds provide comparable or slightly better balanced accuracy, but they trade lower sensitivity for higher specificity compared with the prevalence threshold. We observe this trend for both LightGBM-Focal and XGBoost-Focal across the different time horizons in the PTLD prediction task (see Table 3 and Figure 6). Lower sensitivity is undesirable because misclassifying positive samples is associated with higher costs in clinical risk prediction applications. Therefore, the prevalence threshold is more appropriate than the optimized balanced accuracy threshold in the PTLD prediction task.

Figure 10 shows the calibration plots for the diabetes prediction task. The mean values of the focusing parameter across the three shuffled cross-validation experiments are 1.03 for LightGBM-Focal and 1.08 for XGBoost-Focal, and the two models show comparable miscalibration. All post-processing methods successfully calibrate the predicted probabilities, as shown in Figure 10 (the mean predicted probabilities and the fractions of positives in the test set match). Isotonic regression performs almost as well as the other calibration methods owing to the larger sample size. Table 4 provides the AUROC, H-measure, average precision, and Brier score for the diabetes prediction task. The calibrated XGBoost-Focal and the standard XGBoost have the same mean Brier score (0.1052). In general, the differences between GBDT trained using focal loss and GBDT trained using cross-entropy loss are less prominent in the diabetes prediction task. The classification metrics for the diabetes prediction task are provided in Table A5 in Appendix C.

Figure 10.

Calibration plots for prediction of diabetes. Shuffled cross-validation was repeated three times using different random seeds. The mean focusing parameters are 1.03 and 1.08 for LightGBM-Focal and XGBoost-Focal, respectively. The thick bold curve is the mean calibration curve. The dotted diagonal line (line of identity) represents perfect calibration, where the mean predicted probabilities match the fraction of positives. We compare the miscalibrated models with models calibrated using different techniques (the closed-form transformation, Platt scaling, and isotonic regression).

Table 4.

Performance according to discrimination and overall accuracy metrics (AUROC, H-measure, average precision and Brier score) for the prediction of diabetes. The best models are underlined.

5. Discussion

This study investigated the impact of focal loss in the context of clinical risk prediction. Although existing studies have combined GBDT with custom loss functions [16,17,18,19,20,21,22,23,39], few have addressed probability calibration. Probability calibration assesses the accuracy of the confidence scores provided by a model, which represent the predictive uncertainty of the model. Miscalibrated posterior probabilities can be misleading in decision-making tasks [10] because they do not represent the fraction of positive observations for a given set of features. We proposed a method, based on the transformation proposed in [27] and Bayesian hyperparameter optimization, to select the focusing parameter of the focal loss function so as to optimize discrimination and calibration. Our study also uses calibration plots to assess the effect of the transformation and the Brier score to assess the “overall accuracy” (discrimination plus calibration). Probability calibration plots and the Brier score are popular in the clinical risk prediction literature [8,9] and have also attracted attention in recent deep learning research on calibration [44,76].
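For readers implementing these metrics, the following is a minimal NumPy sketch of the Brier score and of the calibration intercept and slope. It assumes one common definition, in which the observed outcome is regressed on the logit of the predicted risk by logistic regression (fitted here with Newton-Raphson); the function names and the simulated data are hypothetical and only illustrate the computation.

```python
import numpy as np

def brier_score(y_true, p):
    """Mean squared difference between predicted risk and the 0/1 outcome."""
    y = np.asarray(y_true, dtype=float)
    p = np.asarray(p, dtype=float)
    return float(np.mean((p - y) ** 2))

def calibration_intercept_slope(y_true, p, eps=1e-12, max_iter=50):
    """Fit logit Pr(y=1) = a + b * logit(p) by Newton-Raphson; return (a, b).

    A slope b < 1 suggests risks that are too extreme, b > 1 risks that are
    too moderate. (Calibration-in-the-large is sometimes estimated instead
    with the slope fixed at 1.)
    """
    y = np.asarray(y_true, dtype=float)
    p = np.clip(np.asarray(p, dtype=float), eps, 1.0 - eps)
    X = np.column_stack([np.ones_like(p), np.log(p / (1.0 - p))])
    beta = np.zeros(2)
    for _ in range(max_iter):
        mu = 1.0 / (1.0 + np.exp(-X @ beta))
        grad = X.T @ (y - mu)                          # gradient of log-likelihood
        hess = X.T @ (X * (mu * (1.0 - mu))[:, None])  # Fisher information
        step = np.linalg.solve(hess, grad)
        beta += step
        if np.max(np.abs(step)) < 1e-10:
            break
    return float(beta[0]), float(beta[1])

# Hypothetical example: outcomes drawn from known risks.
rng = np.random.default_rng(1)
p_true = rng.uniform(0.05, 0.95, size=100_000)
y = rng.random(p_true.size) < p_true
logit = np.log(p_true / (1.0 - p_true))
p_extreme = 1.0 / (1.0 + np.exp(-2.0 * logit))   # risks pushed toward 0 and 1

a1, b1 = calibration_intercept_slope(y, p_true)
a2, b2 = calibration_intercept_slope(y, p_extreme)
print(f"calibrated: a={a1:.3f} b={b1:.3f} Brier={brier_score(y, p_true):.4f}")
print(f"too extreme: a={a2:.3f} b={b2:.3f} Brier={brier_score(y, p_extreme):.4f}")
```

In the simulated "too extreme" case the logit of the predicted risk is exactly twice the true logit, so the fitted slope should be close to 0.5.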

Since focal loss is not strictly proper, its minimizer is not the true class-posterior probability. Therefore, confidence scores should not be interpreted as accurate clinical risks unless a transformation is applied after training. The transformation applied in the experiments directly relates the focal loss minimizer to the true class-posterior probability. Such a transformation may not exist for all loss functions. For example, class-posterior probability estimation is not possible for some asymmetric losses [52] or for the hinge loss [45,77]. Models trained using these losses require heuristic techniques such as Platt scaling and isotonic regression, which increase computational time and require a calibration dataset or cross-validation to prevent overfitting. Isotonic regression can also produce poor calibration for small sample sizes.
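To make the idea of a closed-form transformation concrete, the sketch below derives one from first principles; it is not the code used in this study. It assumes the unweighted binary focal loss, ℓ(q, 1) = −(1 − q)^γ log q and ℓ(q, 0) = −q^γ log(1 − q), where q is the model's confidence score. Setting the derivative of the conditional risk R(q) = −η(1 − q)^γ log q − (1 − η) q^γ log(1 − q) to zero and solving for the true risk η gives η = h(q) / (h(q) + h(1 − q)), with h(v) = v^γ / (1 − v) − γ v^(γ−1) log(1 − v). We expect this to agree with the transformation of [27], but the form given in Section 3 is authoritative; `focal_to_posterior` and `focal_conditional_risk` are hypothetical names.

```python
import numpy as np

def _h(v, gamma):
    # h(v) = v^gamma / (1 - v) - gamma * v^(gamma - 1) * log(1 - v)
    return v ** gamma / (1.0 - v) - gamma * v ** (gamma - 1.0) * np.log1p(-v)

def focal_to_posterior(q, gamma, eps=1e-7):
    """Map focal-loss confidence scores q in (0, 1) to estimated risks.

    Solves the first-order condition of the conditional focal risk for eta:
    eta = h(q) / (h(q) + h(1 - q)). For gamma = 0 this is the identity.
    """
    q = np.clip(np.asarray(q, dtype=float), eps, 1.0 - eps)
    h_q, h_not_q = _h(q, gamma), _h(1.0 - q, gamma)
    return h_q / (h_q + h_not_q)

def focal_conditional_risk(q, eta, gamma):
    """Expected focal loss of predicting q when the true risk is eta."""
    return -eta * (1.0 - q) ** gamma * np.log(q) - (1.0 - eta) * q ** gamma * np.log1p(-q)

scores = np.array([0.1, 0.3, 0.5, 0.7])
print(focal_to_posterior(scores, gamma=0.0))  # unchanged up to rounding
print(focal_to_posterior(scores, gamma=1.0))  # low scores shrink, high scores grow
```

Because the map is strictly increasing, applying it after training changes calibration but not the ranking of patients, so AUROC and similar ranking metrics are preserved.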

In our experiments, we observed that miscalibrated GBDTs trained via focal loss minimization produce overconfident predictions. The overconfidence can be seen in the mean predicted probabilities of XGBoost-Focal and LightGBM-Focal in Figure 7, Figure 8 and Figure 10: the mean predicted probabilities before calibration (horizontal axis) are greater than the fractions of positives (vertical axis) in the test dataset. The plotted bins lie below the identity line, indicating overestimated risks [10]. However, models trained using focal loss can also produce underconfident predictions. This phenomenon was first observed in deep neural networks trained using focal loss [76] and was later formalized in [27]. Mukhoti et al. used this property to propose a method for finding focusing parameters that calibrate overconfident deep neural networks [76].
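The reading of a calibration plot described above can be reproduced numerically. This sketch, on hypothetical simulated risks, computes equal-width reliability bins (mean predicted probability versus observed fraction of positives) and flags bins that lie below the identity line, that is, bins with overestimated risks. The function name `reliability_bins` is our own; scikit-learn's `calibration_curve` offers similar functionality.

```python
import numpy as np

def reliability_bins(y_true, p, n_bins=10):
    """Mean predicted probability and fraction of positives per equal-width bin.

    Empty bins are skipped, so the returned arrays may be shorter than n_bins.
    """
    y = np.asarray(y_true, dtype=float)
    p = np.asarray(p, dtype=float)
    inner_edges = np.linspace(0.0, 1.0, n_bins + 1)[1:-1]
    bin_idx = np.digitize(p, inner_edges)        # values in 0 .. n_bins - 1
    mean_pred, frac_pos = [], []
    for b in range(n_bins):
        in_bin = bin_idx == b
        if in_bin.any():
            mean_pred.append(p[in_bin].mean())
            frac_pos.append(y[in_bin].mean())
    return np.array(mean_pred), np.array(frac_pos)

# Hypothetical example: predictions that overestimate every true risk.
rng = np.random.default_rng(7)
eta = rng.uniform(0.01, 0.5, size=50_000)        # true risks
y = rng.random(eta.size) < eta
overestimated = np.sqrt(eta)                     # predicted risk > true risk

mp, fp = reliability_bins(y, overestimated)
below_identity = fp < mp                         # True where risk is overestimated
print(np.round(mp, 3))
print(np.round(fp, 3))
print("all bins below identity line:", bool(below_identity.all()))
```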

The use of isotonic regression resulted in worse performance than the closed-form transformation or Platt scaling in the prediction of PTLD (see the higher Brier scores in Table 2 and the lower average precision in Table 1 for 5-, 8-, and 10-year cancer prediction). The most likely explanation is that isotonic regression is sensitive to class imbalance and that the small number of patients with cancer leads to poor performance. Furthermore, isotonic regression did not maintain the discriminatory power of the model because it fits a piecewise-constant, non-decreasing function instead of a strictly increasing function [47]. This introduces ties between similar probabilities and changes ranking metrics such as the AUROC, the H-measure, and the average precision. Unlike isotonic regression, the closed-form transformation and the sigmoid function used in Platt scaling are strictly increasing. Hence, they do not alter the discrimination of the model, as shown by the AUROC and H-measure in Table 1 and Table 4. Isotonic regression achieved performance comparable to the other calibration methods in the prediction of diabetes (see the Brier scores in Table 4). Note that the diabetes dataset contains more positive observations than the lung transplant dataset.
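The effect of strict monotonicity on ranking metrics can be checked directly. In the following scikit-learn sketch on hypothetical simulated data, Platt scaling (a logistic regression on the score) leaves the AUROC unchanged because it is strictly increasing in the score, whereas isotonic regression collapses many distinct scores into tied values. Both calibrators are fit and evaluated on the same data only for brevity; a separate calibration set or cross-validation would be required in practice.

```python
import numpy as np
from sklearn.isotonic import IsotonicRegression
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score

# Hypothetical data: ~10% prevalence and monotonically distorted scores.
rng = np.random.default_rng(42)
n = 400
eta = rng.beta(1, 9, size=n)
y = (rng.random(n) < eta).astype(int)
scores = np.sqrt(eta)                        # miscalibrated but correctly ranked

X = scores.reshape(-1, 1)
platt = LogisticRegression().fit(X, y)
p_platt = platt.predict_proba(X)[:, 1]       # sigmoid(a + b * score), increasing if b > 0

iso = IsotonicRegression(out_of_bounds="clip").fit(scores, y)
p_iso = iso.predict(scores)                  # piecewise constant, so ties appear

auc_raw = roc_auc_score(y, scores)
auc_platt = roc_auc_score(y, p_platt)
auc_iso = roc_auc_score(y, p_iso)            # may differ from auc_raw
print(f"AUROC raw={auc_raw:.4f} Platt={auc_platt:.4f} isotonic={auc_iso:.4f}")
print("distinct values:", np.unique(scores).size, np.unique(p_platt).size, np.unique(p_iso).size)
```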

An advantage of choosing focal loss over the strictly proper cross-entropy loss in clinical risk prediction applications is its flexibility. Thanks to its focusing parameter, focal loss forces the training process to “focus” on hard-to-classify samples. This parameter can prove useful when dealing with large medical databases with imbalanced classes or rare samples (samples with large losses). For example, we found that XGBoost and LightGBM trained using focal loss achieved better discrimination than standard XGBoost and LightGBM for cancer prediction one year after lung transplantation. XGBoost-Focal calibrated using the closed-form transformation also achieved a higher sensitivity than the other models for the same time horizon, as shown in Table 3. However, it is important to emphasize that focal loss might not always enhance performance. GBDT trained with focal loss and with cross-entropy loss had equal Brier scores and almost equal AUROC in the diabetes prediction application (Table 4). Therefore, forcing the training process to focus on rare samples is not necessary for every dataset or application. The focusing parameter can be tuned together with the other GBDT hyperparameters using Algorithms 1 and 2. A focusing parameter of zero, which reduces focal loss to cross-entropy loss, handles cases for which the cross-entropy loss would lead to better performance. In this case, the transformation is no longer necessary because the loss is strictly proper, as explained in Section 3.1.
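The “focusing” behavior can be seen from the per-sample loss. The sketch below assumes the standard binary focal loss without a class-weighting term, −(1 − p_t)^γ log p_t, where p_t is the probability the model assigns to the observed class; the function name `focal_loss` is hypothetical. With γ = 0 it reduces to cross-entropy, and with γ = 2 an easy, confidently correct sample is down-weighted far more than a hard one.

```python
import numpy as np

def focal_loss(y_true, q, gamma, eps=1e-12):
    """Per-sample binary focal loss -(1 - p_t)^gamma * log(p_t), no alpha weight."""
    y = np.asarray(y_true)
    q = np.clip(np.asarray(q, dtype=float), eps, 1.0 - eps)
    p_t = np.where(y == 1, q, 1.0 - q)   # probability assigned to the observed class
    return -((1.0 - p_t) ** gamma) * np.log(p_t)

y = np.array([1, 1, 0])
q = np.array([0.95, 0.10, 0.50])         # easy positive, hard positive, uncertain negative
ce = focal_loss(y, q, gamma=0.0)         # gamma = 0 recovers cross-entropy
fl = focal_loss(y, q, gamma=2.0)
print(fl / ce)                           # modulating factor (1 - p_t)^2: about 0.0025, 0.81, 0.25
```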

This study also explored threshold adjustment in combination with GBDT trained using focal loss. Many applied machine learning studies assume a default decision threshold of 0.5. In this setting, minimizing a weighted cross-entropy loss or a logit-adjusted loss is necessary to maximize balanced accuracy. Boldini et al. previously studied these loss functions in combination with GBDT [17]. A model trained using weighted cross-entropy loss or logit-adjusted loss provides balanced class-posterior probabilities [38]. Note that balanced posterior probabilities overestimate the risks (true posterior probabilities) of the minority class. Therefore, these loss functions are mainly applicable to classification problems rather than risk prediction. We specifically used the prevalence threshold to maximize balanced accuracy after applying the closed-form transformation. The choice of decision threshold is based on Theorem 1 provided by Collell et al. in [60]. This decision threshold optimizes the balanced accuracy in the large-sample limit, given accurate posterior probabilities. Without a calibration step, this threshold would fail to optimize the balanced accuracy because the probabilities estimated using focal loss are not reliable. Therefore, an optimized threshold is needed when neither the transformation nor a heuristic calibration technique is applied. Note that estimating the optimized threshold increases the computational burden because it requires K-fold cross-validation to prevent overfitting.
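Why the prevalence threshold works with accurate risks, and fails without them, can be seen from a short plug-in argument. It is sketched here under the assumption of exact posterior probabilities; the notation η(x) = Pr(y = 1 | x) for the true risk, π = Pr(y = 1) for the prevalence, and {ŷ = 1} for the set of feature vectors classified as positive is ours, and [60] gives the formal statement. Writing the balanced accuracy of a classifier ŷ in terms of η and π gives:

```latex
\begin{aligned}
\mathrm{BA}(\hat{y}) &= \tfrac{1}{2}\left[\Pr(\hat{y}=1 \mid y=1) + \Pr(\hat{y}=0 \mid y=0)\right] \\
&= \tfrac{1}{2}\left[\int_{\{\hat{y}=1\}} \frac{\eta(x)\,p(x)}{\pi}\,dx
   + 1 - \int_{\{\hat{y}=1\}} \frac{\bigl(1-\eta(x)\bigr)\,p(x)}{1-\pi}\,dx\right] \\
&= \tfrac{1}{2} + \tfrac{1}{2}\int_{\{\hat{y}=1\}} p(x)
   \left[\frac{\eta(x)}{\pi} - \frac{1-\eta(x)}{1-\pi}\right] dx .
\end{aligned}
```

The integral is maximized by predicting the positive class exactly where the bracketed term is positive, that is, where η(x)(1 − π) > π(1 − η(x)), or equivalently η(x) > π. The rule thresholds the true risk η(x), so applying it to uncalibrated focal-loss scores does not in general maximize balanced accuracy.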

A limitation observed in the experiments is that the selected focusing parameter may differ across cross-validation repetitions with different random seeds. This is evident in Figure 7, Figure 8 and Figure 10, where the background curves show different degrees of miscalibration caused by training XGBoost-Focal and LightGBM-Focal with different focusing parameters. These focusing parameters were chosen automatically in the Bayesian hyperparameter tuning stage, as described in Section 3.3. A possible explanation is that the heterogeneity of the patient groups available in the SRTR database (including patient groups from different transplant centers and time periods) causes different focusing parameters to be selected in different cross-validation repetitions. Problems such as label noise may also cause hyperparameter values to fluctuate across cross-validation runs. These observations are consistent with studies showing that the hyperparameter tuning of GBDT is sensitive to noise in electronic health records [12]. For model deployment, we recommend estimating this parameter using the full dataset after assessing its stability through repeated cross-validation runs.

A limitation of our experimental setting is that we did not consider GBDT models other than XGBoost and LightGBM, such as CatBoost [15] and PaloBoost [12]. PaloBoost is a variant of the original stochastic GBDT model [58] designed to be less sensitive to hyperparameter settings. CatBoost introduces an algorithm to handle categorical features automatically. Because clinical databases such as the SRTR typically contain many categorical and ordinal features, CatBoost can be used as an alternative to XGBoost and LightGBM in clinical risk prediction applications. We also did not consider recent hyperparameter optimization techniques for tuning GBDT [78]. A future research direction is to explore CatBoost and novel hyperparameter optimization techniques in combination with custom loss functions.

6. Conclusions

This paper investigated focal loss in clinical risk prediction problems. We applied a closed-form transformation to calibrate the confidence scores of XGBoost and LightGBM trained using focal loss. Algorithms based on Bayesian hyperparameter tuning were proposed to select the focal loss parameter to optimize discriminatory power and calibration. Adjustment of the decision threshold was also investigated to optimize the balanced accuracy classification metric. We considered two real-life applications related to lung transplantation and diabetes prediction.

The methods and experiments presented in this study can support the adoption of the focal loss function to train clinical risk prediction models. The practical implications of improving clinical risk prediction include providing data-driven models to support clinical professionals, standardizing patient care, accelerating clinical decision making to prioritize treatment, and improving patient outcomes through preventive interventions. Despite the benefits of machine learning techniques for clinical risk prediction, there are ethical concerns that require attention. Although gradient-boosted decision trees can potentially improve predictive performance compared to conventional statistical approaches, they can also inherit biases present in the training data, and these biases are more difficult to detect in complex models such as GBDT than in simpler linear models. Therefore, extensive validation is essential to detect and minimize biases. Interpretable approaches also need to be thoroughly explored to support the adoption of GBDT and new loss functions in high-stakes clinical decision making.

A future research direction is to explore the application of focal loss in the field of fair machine learning. Focal loss can be applied to improve predictive performance in tasks with underrepresented or minority groups in electronic health records. The focal loss parameter can be used to reduce the loss of a sample of a minority group in a medical database. Custom loss functions can be developed to correct biases in GBDT for clinical risk prediction. Another direction for future research is to use focal loss and logit-adjusted losses to train GBDT for multiclass and multilabel classification.

Author Contributions

Conceptualization, N.N. and D.D.; Methodology, H.J. and D.D.; Validation, H.J.; Formal analysis, H.J.; Data curation, N.N.; Writing—original draft, H.J.; Writing—review & editing, D.D.; Supervision, N.N. and D.D.; Project administration, D.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The Scientific Registry of Transplant Recipients (SRTR) database is publicly available through the SRTR (https://www.srtr.org); access is subject to a data use agreement. Behavioral Risk Factor Surveillance System (BRFSS) files are publicly available through the Centers for Disease Control and Prevention (CDC). The files can be accessed via https://www.cdc.gov/brfss/annual_data/annual_data.htm (accessed on 24 July 2024). The diabetes dataset is available at: https://www.kaggle.com/datasets/alexteboul/diabetes-health-indicators-dataset/data (accessed on 24 July 2024).

Acknowledgments

The data reported here have been supplied by the Hennepin Healthcare Research Institute (HHRI) as the contractor for the Scientific Registry of Transplant Recipients (SRTR). The interpretation and reporting of these data are the responsibility of the author(s) and in no way should be seen as an official policy of or interpretation by the SRTR or the U.S. Government.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Feature Description