Autism Spectrum Disorder Diagnosis Based on Attentional Feature Fusion Using NasNetMobile and DeiT Networks

Abstract

1. Introduction

- High-Level Representation via NasNetMobile: NasNetMobile is employed to extract high-level abstract patterns from the input images, utilizing its deep architecture to generate robust and discriminative features.

- Fine-Grained Feature Extraction using DeiT Network: The DeiT network focuses on capturing fine-grained facial characteristics, enriching the overall representation with detailed local information essential for precise analysis.

- Attentional Feature Fusion: The extracted features are fused using an adaptive attention-based mechanism that assigns importance to the most discriminative features, leading to improved feature representation and robustness.

- Robust Classification Strategy: We utilize a bagging-based SVM classifier with a polynomial kernel, enhancing generalization capabilities and mitigating overfitting issues.
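The attentional fusion step listed above can be illustrated with a minimal NumPy sketch. It is a simplified gate in the spirit of attentional feature fusion, not the authors' implementation: the layer sizes, the random untrained weights, and the assumption that both feature vectors have already been projected to a common dimension are all hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def attentional_fusion(feat_a, feat_b, w1, w2):
    """Blend two (batch, dim) feature arrays with a per-channel gate.

    A small bottleneck MLP scores the summed features; its sigmoid output is a
    weight in (0, 1) that decides how much of each input reaches the output.
    """
    summed = feat_a + feat_b
    hidden = np.maximum(summed @ w1, 0.0)      # ReLU bottleneck
    gate = sigmoid(hidden @ w2)                # shape (batch, dim)
    return gate * feat_a + (1.0 - gate) * feat_b

rng = np.random.default_rng(0)
batch, dim, bottleneck = 4, 8, 2               # hypothetical sizes
cnn_feats = rng.normal(size=(batch, dim))      # stand-in for NASNetMobile features
vit_feats = rng.normal(size=(batch, dim))      # stand-in for DeiT features
w1 = rng.normal(scale=0.1, size=(dim, bottleneck))   # untrained, illustrative weights
w2 = rng.normal(scale=0.1, size=(bottleneck, dim))

fused = attentional_fusion(cnn_feats, vit_feats, w1, w2)
print(fused.shape)
```

Because the gate lies strictly between 0 and 1, every fused value is a convex combination of the two inputs; in a trained model the gate weights would be learned jointly with the classifier objective.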

2. Related Work

| Paper | Methodology | Dataset | Strengths | Limitations | Evaluation Metrics (%) |
|---|---|---|---|---|---|
| Akter et al. [29] (2021) | MobileNet-V1 | Autism Image Data [39] | Improved MobileNet-V1 outperforms other methods with higher accuracy | Limited images and low quality | Accuracy: 92.10 |
| Li et al. [30] (2023) | MobileNetV2 and MobileNetV3 | Autism Image Data [39] | Suitable for mobile devices | Low accuracy | Accuracy: 90.5; Recall: 92.33; F1-score: 90.67 |
| Melinda et al. [31] (2024) | DeepLabV3 | Autism Image Data [39] | The integration of DeepLabV3 improves accuracy | Limited dataset | Accuracy: 85.9; Recall: 90; Precision: 85.9; F1-score: 87 |
| Ahmad et al. [32] (2024) | ResNet34, ResNet50, AlexNet, MobileNetV2, VGG16, and VGG19 | Autism Image Data [39] | Efficient use of transfer learning | Low accuracy | Accuracy: 92 |
| Fahaad et al. [33] (2024) | ViT model | Autism Image Data [39] | ViT models capture both local and global features | Limited dataset | Accuracy: 77 |
| Reddy et al. [34] (2024) | EfficientNetB0 | Autism Image Data [39] | Lightweight deep learning | Low accuracy | Accuracy: 87.9 |
| Mahamood et al. [35] (2023) | A sequencer-based patch-wise local feature extractor combined with a global feature extractor | Autism Image Data [39] | Combines local and global features for improved classification | Limited dataset | Accuracy: 94.7; Recall: 95.3; Precision: 94; F1-score: 94.6 |
| Mujeeb et al. [36] (2022) | Five CNN models (MobileNet, Xception, EfficientNetB0, EfficientNetB1, EfficientNetB2) for feature extraction and a DNN for classification | Autism Image Data [39] | Strong features | Limited dataset | Accuracy: 90; Recall: 88.46; Precision: 92 |
| Alam et al. [37] (2025) | Xception | Autism Image Data [39] | Effectively handles domain differences | Limited dataset | Accuracy: 91; Recall: 91; Precision: 91; F1-score: 91 |
| Hossain et al. [38] (2025) | DenseNet121 | Autism Image Data [39] | Used explainable AI techniques for interpretability | Low accuracy | Accuracy: 90.33; Recall: 92; Precision: 92; F1-score: 90 |

3. Proposed Methodology

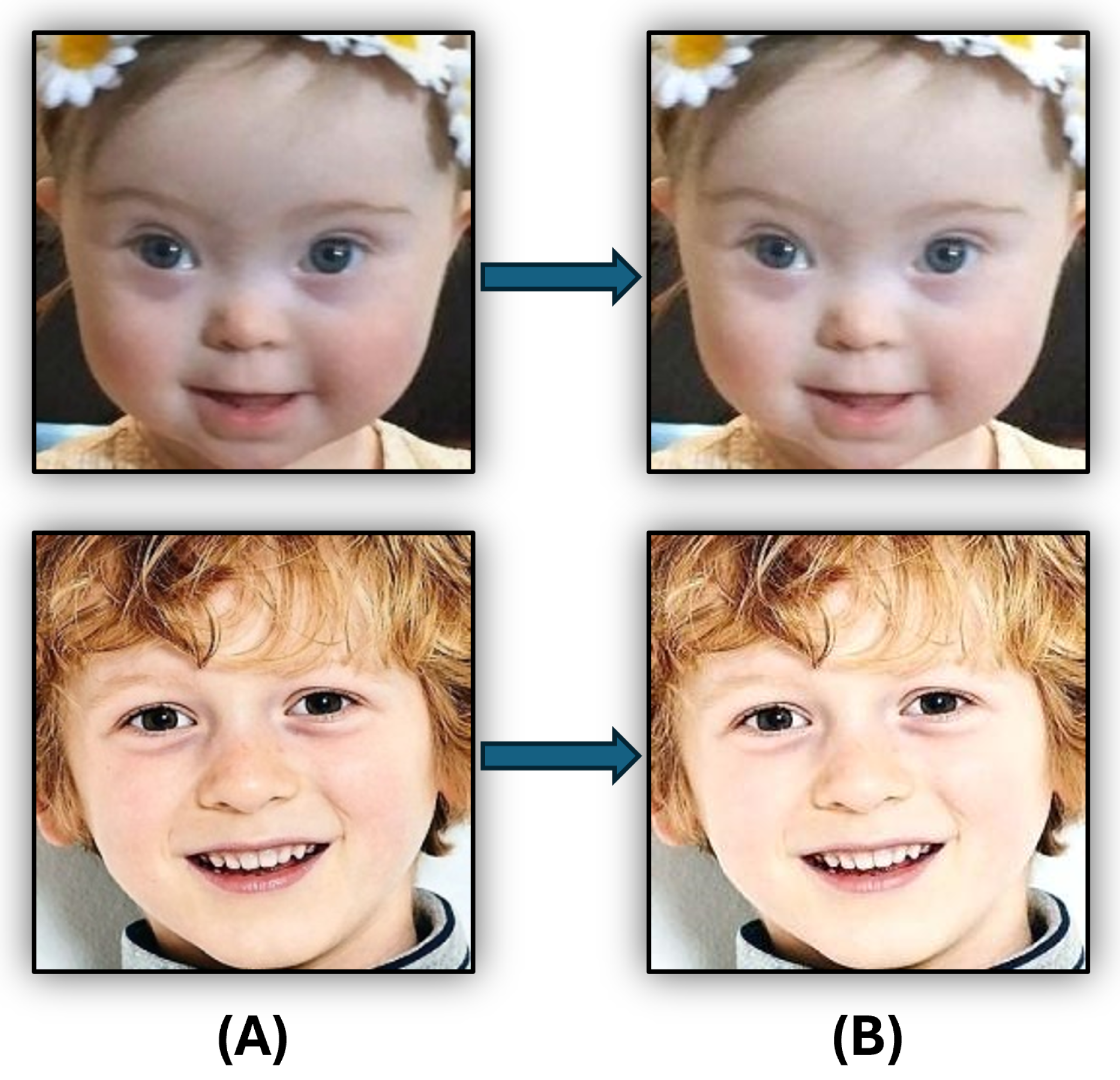

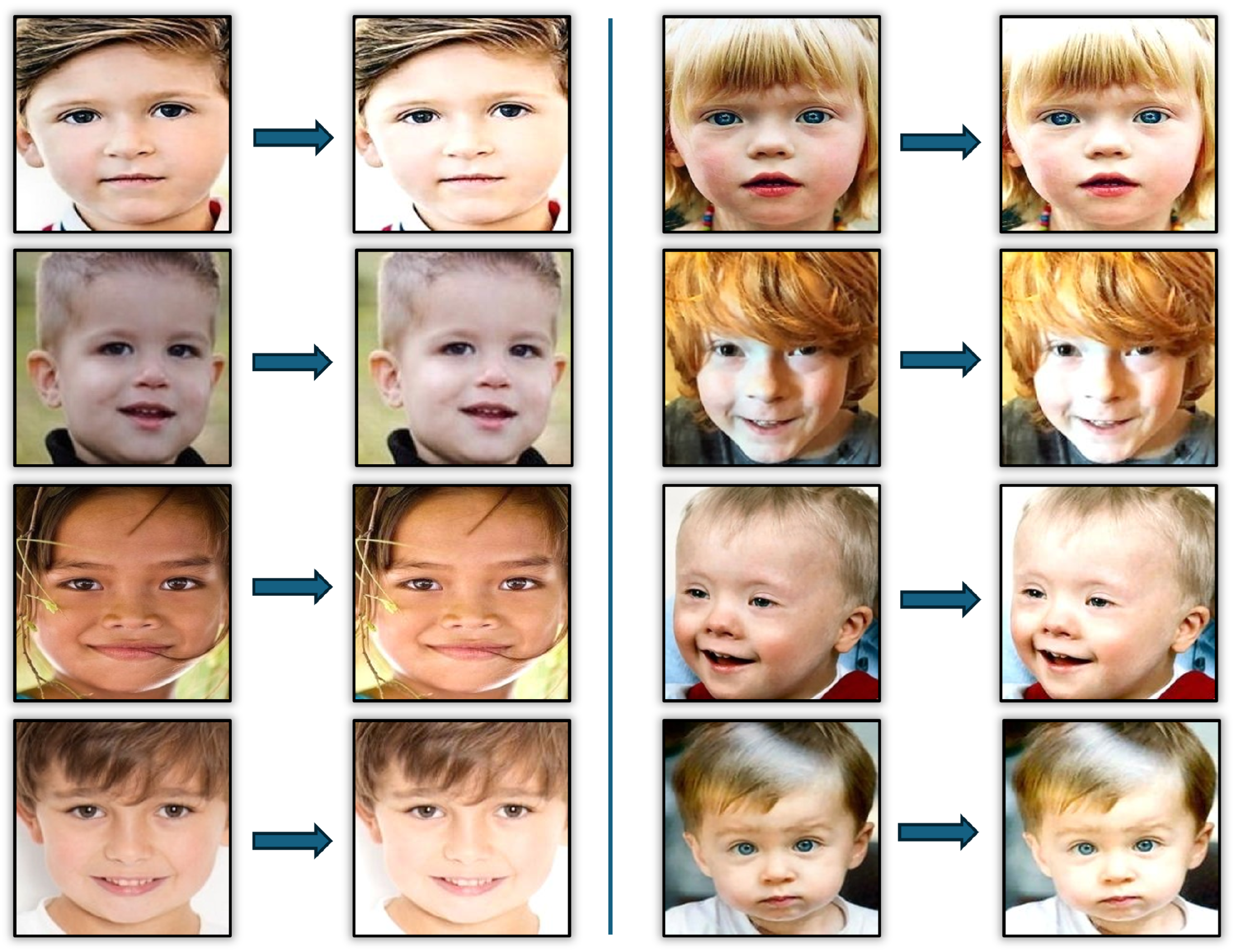

3.1. Input Images

3.2. Images Pre-Processing

3.3. Features Extraction

3.3.1. NASNetMobile DL Model

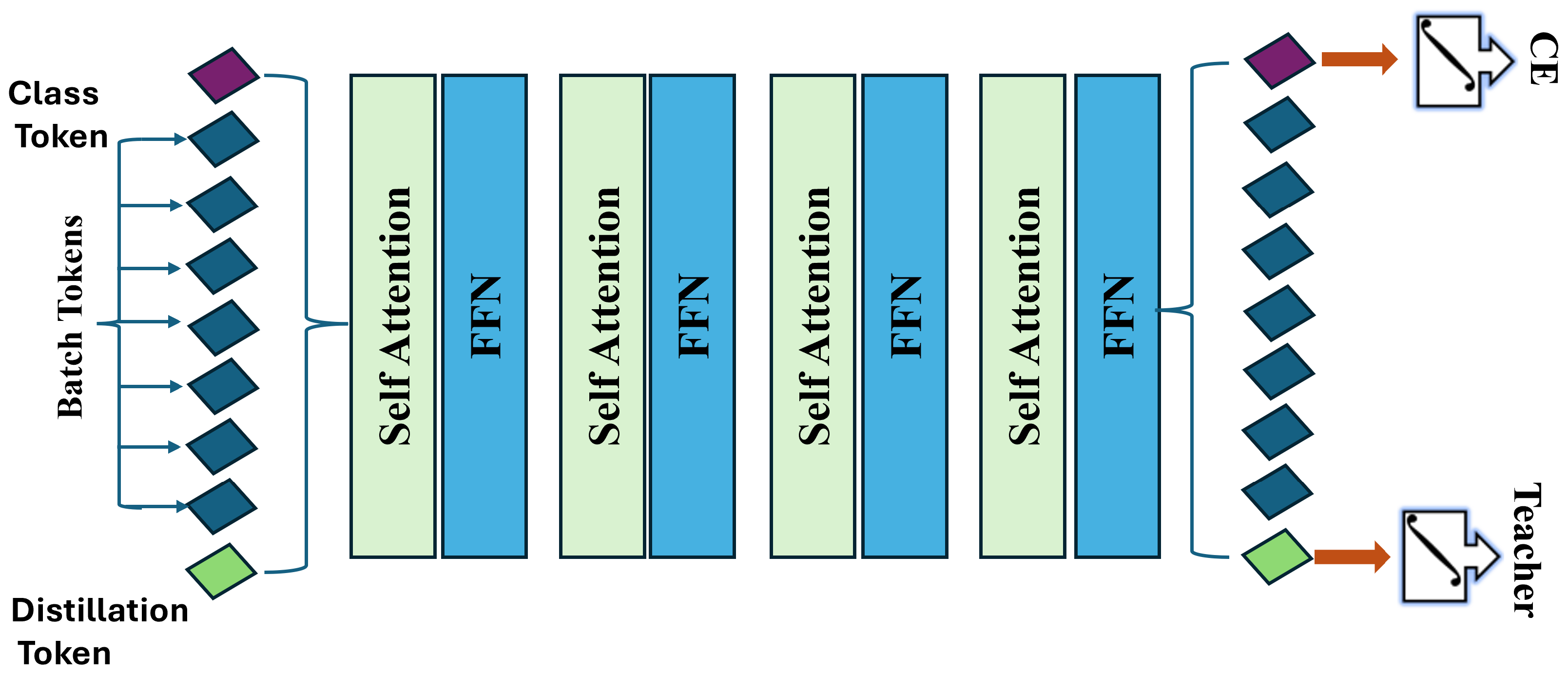

3.3.2. Data-Efficient Image Transformer (DeiT)

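- Patch Embedding: The image $x \in \mathbb{R}^{H \times W \times C}$ is split into a sequence of $N$ patches of size $P \times P$, where $N = HW / P^{2}$.

- Linear Embedding: Each patch is linearly embedded into a vector of dimension $d$, resulting in $z_0 = [x_{\text{class}};\, x_p^{1}E;\, x_p^{2}E;\, \dots;\, x_p^{N}E] + E_{\text{pos}}$, where $x_{\text{class}}$ is a class token, $E$ is the embedding matrix, and $E_{\text{pos}}$ represents the position embeddings.

- Self-Attention Mechanism: The self-attention layer is defined as $\text{Attention}(Q, K, V) = \text{softmax}\left(\frac{QK^{T}}{\sqrt{d_k}}\right)V$, where $Q$, $K$, and $V$ are the query, key, and value matrices and $d_k$ is the key dimension.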
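The three DeiT steps listed above can be traced in a short NumPy sketch. It follows the standard vision-transformer formulation with a single attention head; the image size, patch size, embedding width, and random weights are hypothetical, and DeiT's additional distillation token is omitted for brevity.

```python
import numpy as np

def patchify(image, patch):
    """Split an (H, W, C) image into N = H*W / patch**2 flattened patches."""
    h, w, c = image.shape
    rows, cols = h // patch, w // patch
    x = image.reshape(rows, patch, cols, patch, c)
    x = x.transpose(0, 2, 1, 3, 4)             # (rows, cols, patch, patch, C)
    return x.reshape(rows * cols, patch * patch * c)

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)    # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(z, wq, wk, wv):
    """Single-head scaled dot-product attention over the token sequence z."""
    q, k, v = z @ wq, z @ wk, z @ wv
    scores = q @ k.T / np.sqrt(k.shape[-1])
    return softmax(scores) @ v

rng = np.random.default_rng(0)
image = rng.random((32, 32, 3))                # hypothetical small input
patch, d = 8, 16                               # hypothetical patch size and width

patches = patchify(image, patch)               # N = 16 patches of length 192
E = rng.normal(scale=0.02, size=(patches.shape[1], d))        # embedding matrix
cls_token = np.zeros((1, d))                                  # class token
pos = rng.normal(scale=0.02, size=(patches.shape[0] + 1, d))  # position embeddings
z0 = np.concatenate([cls_token, patches @ E], axis=0) + pos

wq, wk, wv = (rng.normal(scale=0.1, size=(d, d)) for _ in range(3))
out = self_attention(z0, wq, wk, wv)
print(patches.shape, z0.shape, out.shape)
```

The sequence length is N + 1 because the class token is prepended before the position embeddings are added; its output row is what a classification head would normally consume.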

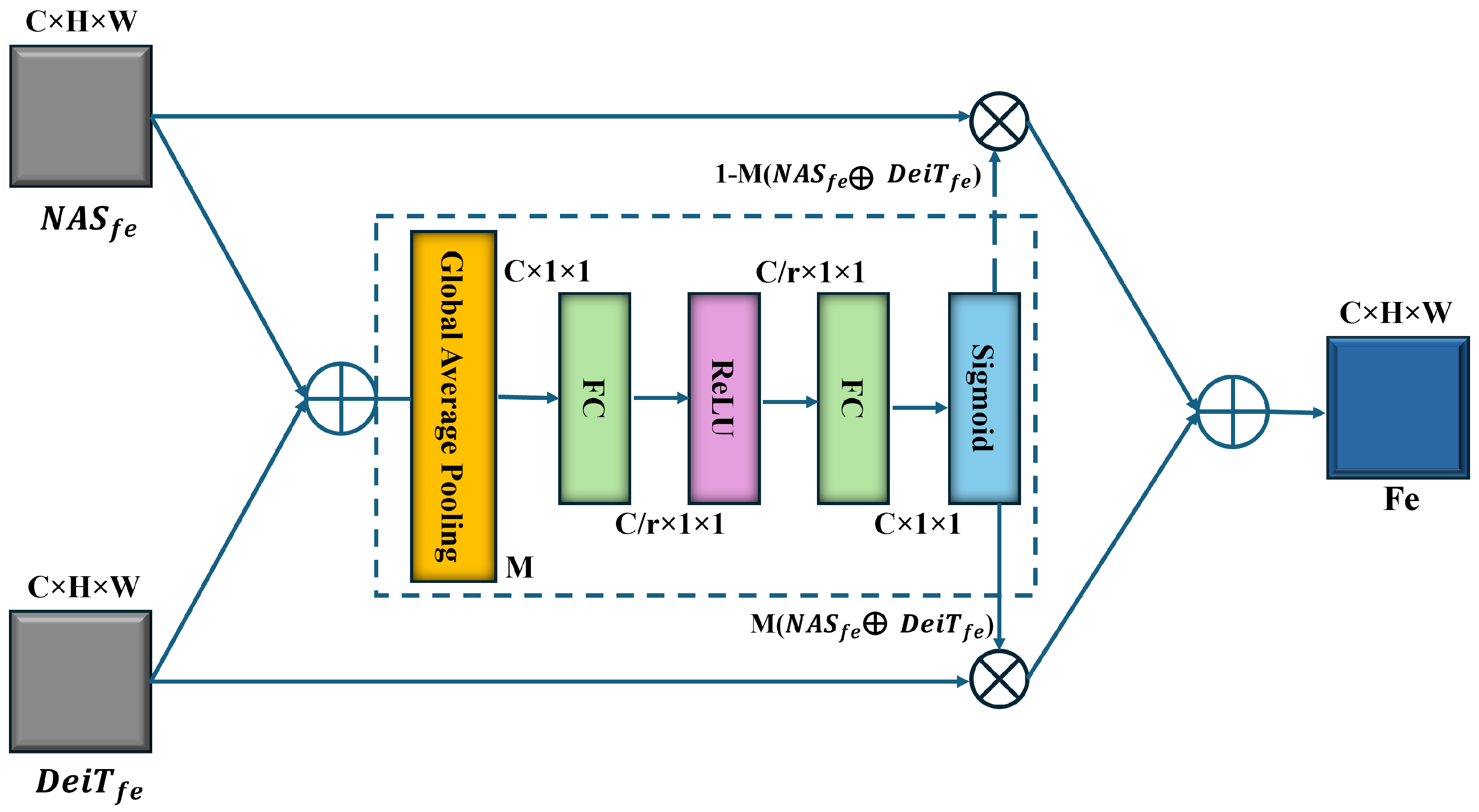

3.4. Feature Fusion

3.5. Classification

4. Experimental Results

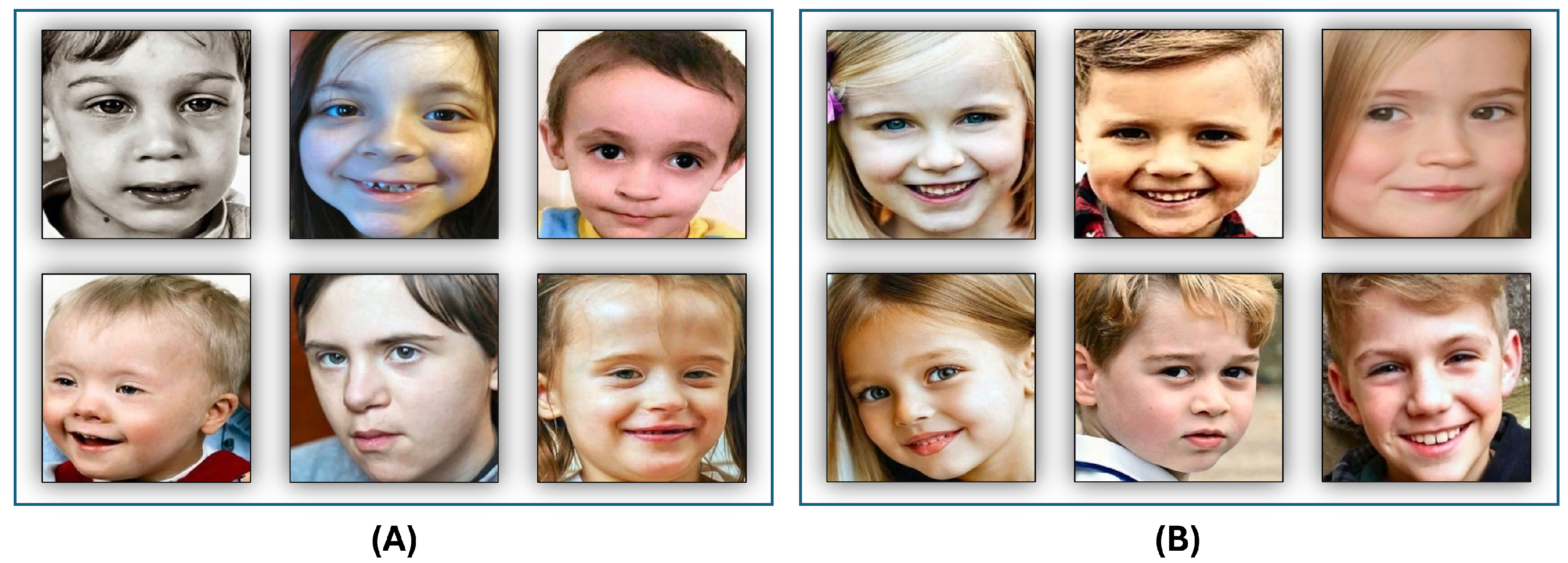

4.1. Dataset Description

4.2. Evaluation Metrics

- p-values: Quantify how likely an improvement at least as large as the one observed would be if the proposed model and the baseline did not truly differ. A p-value < 0.05 is treated as statistically significant.

- Mann–Whitney U test: A non-parametric test that compares the distribution of our model's accuracy against that of the baseline; it is especially useful when the data are not normally distributed.
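As a rough illustration of the test described above, the following pure-Python sketch computes the U statistic by pairwise comparison and a two-sided p-value from the normal approximation. The accuracy lists are hypothetical placeholders, not results from the paper, and the sketch applies neither a tie correction nor a continuity correction.

```python
import math

def mann_whitney_u(sample_a, sample_b):
    """Two-sided Mann-Whitney U test using the normal approximation.

    Each pair (a, b) adds 1 to U when a > b and 0.5 when a == b.
    No tie or continuity correction is applied, so treat the p-value
    as approximate.
    """
    n_a, n_b = len(sample_a), len(sample_b)
    u_a = 0.0
    for a in sample_a:
        for b in sample_b:
            if a > b:
                u_a += 1.0
            elif a == b:
                u_a += 0.5
    mean_u = n_a * n_b / 2.0
    std_u = math.sqrt(n_a * n_b * (n_a + n_b + 1) / 12.0)
    z = (u_a - mean_u) / std_u
    p_value = math.erfc(abs(z) / math.sqrt(2.0))   # two-sided tail probability
    return u_a, p_value

# Hypothetical per-run accuracies (NOT values reported in the paper).
proposed = [0.95, 0.96, 0.94, 0.96, 0.95, 0.97, 0.95, 0.96]
baseline = [0.91, 0.92, 0.90, 0.92, 0.91, 0.93, 0.92, 0.91]

u_stat, p = mann_whitney_u(proposed, baseline)
print(f"U = {u_stat}, p = {p:.4f}, significant = {p < 0.05}")
```

With every proposed run above every baseline run, U reaches its maximum of 8 × 8 = 64 and the p-value falls well below 0.05. For real experiments a library routine with exact small-sample handling would be preferable.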

5. Results and Discussion

5.1. A Comparison of ML Classifiers to Pre-Trained DL Models

5.2. A Comparison of ML Classifiers on Fusion Between DL Models with DeiT Transformer

5.3. Comparison with the State-of-the-Art Techniques

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition | Abbreviation | Definition |
|---|---|---|---|
| ASD | Autism Spectrum Disorder | NGS | Next-generation sequencing |
| ID | Intellectual disabilities | ADHD | Attention-deficit/hyperactivity disorder |
| EEG | Electroencephalogram | SOR | Sensory Over-Responsivity |
| AOs | Anger outbursts | DSM | Diagnostic and Statistical Manual of Mental Disorders |
| GI | Gastrointestinal | AI | Artificial intelligence |
| DL | Deep learning | EGs | Experimental groups |
| CGs | Control groups | TD | Typically developing |
| LSTM | Long short-term memory | DWT | Discrete wavelet transform |
| KNN | k-nearest neighbors | XGB | Extreme gradient boosting |
| AUC | Area under curve | | |
| NLP | Natural language processing | Bi-LSTM | Bidirectional LSTM |
| ML | Machine learning | DeiT | Data-efficient Image Transformer |
| NAS | Neural architecture search | ViT | Vision transformer |
| ANNs | Artificial neural networks | SVMs | Support vector machines |
| DTs | Decision trees | RF | Random forest |
| TP | True positive | FP | False positive |
| TN | True negative | FN | False negative |

References

1. Wankhede, N.L.; Kale, M.B.; Shukla, M.; Nathiya, D.; Roopashree, R.; Kaur, P.; Goyanka, B.; Rahangdale, S.R.; Taksande, B.G.; Upaganlawar, A.B.; et al. Leveraging AI for the diagnosis and treatment of autism spectrum disorder: Current trends and future prospects. Asian J. Psychiatry 2024, 101, 104241.
2. Liloia, D.; Zamfira, D.A.; Tanaka, M.; Manuello, J.; Crocetta, A.; Keller, R.; Cozzolino, M.; Duca, S.; Cauda, F.; Costa, T. Disentangling the role of gray matter volume and concentration in autism spectrum disorder: A meta-analytic investigation of 25 years of voxel-based morphometry research. Neurosci. Biobehav. Rev. 2024, 164, 105791.
3. Choi, L.; An, J.Y. Genetic architecture of autism spectrum disorder: Lessons from large-scale genomic studies. Neurosci. Biobehav. Rev. 2021, 128, 244–257.
4. Khogeer, A.A.; AboMansour, I.S.; Mohammed, D.A. The role of genetics, epigenetics, and the environment in ASD: A mini review. Epigenomes 2022, 6, 15.
5. Sandin, S.; Lichtenstein, P.; Kuja-Halkola, R.; Larsson, H.; Hultman, C.M.; Reichenberg, A. The familial risk of autism. JAMA 2014, 311, 1770–1777.
6. Sandin, S.; Schendel, D.; Magnusson, P.; Hultman, C.; Surén, P.; Susser, E.; Grønborg, T.; Gissler, M.; Gunnes, N.; Gross, R.; et al. Autism risk associated with parental age and with increasing difference in age between the parents. Mol. Psychiatry 2016, 21, 693–700.
7. Frans, E.; Lichtenstein, P.; Hultman, C.; Kuja-Halkola, R. Age at fatherhood: Heritability and associations with psychiatric disorders. Psychol. Med. 2016, 46, 2981–2988.
8. Ronald, A.; Pennell, C.E.; Whitehouse, A.J. Prenatal maternal stress associated with ADHD and autistic traits in early childhood. Front. Psychol. 2011, 1, 223.
9. Rijlaarsdam, J.; Pappa, I.; Walton, E.; Bakermans-Kranenburg, M.J.; Mileva-Seitz, V.R.; Rippe, R.C.; Roza, S.J.; Jaddoe, V.W.; Verhulst, F.C.; Felix, J.F.; et al. An epigenome-wide association meta-analysis of prenatal maternal stress in neonates: A model approach for replication. Epigenetics 2016, 11, 140–149.
10. Hughes, H.K.; Onore, C.E.; Careaga, M.; Rogers, S.J.; Ashwood, P. Increased monocyte production of IL-6 after toll-like receptor activation in children with autism spectrum disorder (ASD) is associated with repetitive and restricted behaviors. Brain Sci. 2022, 12, 220.
11. Al-Beltagi, M. Autism medical comorbidities. World J. Clin. Pediatr. 2021, 10, 15.
12. Hustyi, K.M.; Ryan, A.H.; Hall, S.S. A scoping review of behavioral interventions for promoting social gaze in individuals with autism spectrum disorder and other developmental disabilities. Res. Autism Spectr. Disord. 2023, 100, 102074.
13. Summers, J.; Shahrami, A.; Cali, S.; D’Mello, C.; Kako, M.; Palikucin-Reljin, A.; Savage, M.; Shaw, O.; Lunsky, Y. Self-injury in autism spectrum disorder and intellectual disability: Exploring the role of reactivity to pain and sensory input. Brain Sci. 2017, 7, 140.
14. Cummings, K.K.; Jung, J.; Zbozinek, T.D.; Wilhelm, F.H.; Dapretto, M.; Craske, M.G.; Bookheimer, S.Y.; Green, S.A. Shared and distinct biological mechanisms for anxiety and sensory over-responsivity in youth with autism versus anxiety disorders. J. Neurosci. Res. 2024, 102, e25250.
15. Thiele-Swift, H.N.; Dorstyn, D.S. Anxiety prevalence in Youth with Autism: A systematic review and Meta-analysis of Methodological and Sample moderators. Rev. J. Autism Dev. Disord. 2024, 11, 1–14.
16. Ambrose, K.; Adams, D.; Simpson, K.; Keen, D. Exploring profiles of anxiety symptoms in male and female children on the autism spectrum. Res. Autism Spectr. Disord. 2020, 76, 101601.
17. Townsend, A.N.; Guzick, A.G.; Hertz, A.G.; Kerns, C.M.; Goodman, W.K.; Berry, L.N.; Kendall, P.C.; Wood, J.J.; Storch, E.A. Anger outbursts in youth with ASD and anxiety: Phenomenology and relationship with family accommodation. Child Psychiatry Hum. Dev. 2024, 55, 1259–1268.
18. Wang, J.; Ma, B.; Wang, J.; Zhang, Z.; Chen, O. Global prevalence of autism spectrum disorder and its gastrointestinal symptoms: A systematic review and meta-analysis. Front. Psychiatry 2022, 13, 963102.
19. Piven, J.; Harper, J.; Palmer, P.; Arndt, S. Course of behavioral change in autism: A retrospective study of high-IQ adolescents and adults. J. Am. Acad. Child Adolesc. Psychiatry 1996, 35, 523–529.
20. Seltzer, M.M.; Krauss, M.W.; Shattuck, P.T.; Orsmond, G.; Swe, A.; Lord, C. The symptoms of autism spectrum disorders in adolescence and adulthood. J. Autism Dev. Disord. 2003, 33, 565–581.
21. Beadle-Brown, J.; Murphy, G.; Wing, L.; Gould, J.; Shah, A.; Holmes, N. Changes in skills for people with intellectual disability: A follow-up of the Camberwell Cohort. J. Intellect. Disabil. Res. 2000, 44, 12–24.
22. Kohli, M.; Kar, A.K.; Sinha, S. The role of intelligent technologies in early detection of autism spectrum disorder (asd): A scoping review. IEEE Access 2022, 10, 104887–104913.
23. Zhang, X.; Quan, L.; Yang, Y. SAPDA: Significant areas preserved data augmentation. Int. J. Mach. Learn. Cybern. 2024, 15, 5107–5118.
24. Biswas, M.; Buckchash, H.; Prasad, D.K. pNNCLR: Stochastic pseudo neighborhoods for contrastive learning based unsupervised representation learning problems. Neurocomputing 2024, 593, 127810.
25. Wang, J.; Li, X.; Ma, Z. Multi-Scale Three-Path Network (MSTP-Net): A new architecture for retinal vessel segmentation. Measurement 2025, 250, 117100.
26. Zhao, Y.; Li, X.; Zhou, C.; Peng, H.; Zheng, Z.; Chen, J.; Ding, W. A review of cancer data fusion methods based on deep learning. Inf. Fusion 2024, 108.
27. Yin, W.; Mostafa, S.; Wu, F.X. Diagnosis of autism spectrum disorder based on functional brain networks with deep learning. J. Comput. Biol. 2021, 28, 146–165.
28. Ding, Y.; Zhang, H.; Qiu, T. Deep learning approach to predict autism spectrum disorder: A systematic review and meta-analysis. BMC Psychiatry 2024, 24, 739.
29. Akter, T.; Ali, M.H.; Khan, M.I.; Satu, M.S.; Uddin, M.J.; Alyami, S.A.; Ali, S.; Azad, A.; Moni, M.A. Improved transfer-learning-based facial recognition framework to detect autistic children at an early stage. Brain Sci. 2021, 11, 734.
30. Li, Y.; Huang, W.C.; Song, P.H. A face image classification method of autistic children based on the two-phase transfer learning. Front. Psychol. 2023, 14, 1226470.
31. Melinda, M.; Aqif, H.; Junidar, J.; Oktiana, M.; Basir, N.B.; Afdhal, A.; Zainal, Z. Image segmentation performance using Deeplabv3+ with Resnet-50 on autism facial classification. JURNAL INFOTEL 2024, 16, 441–456.
32. Ahmad, I.; Rashid, J.; Faheem, M.; Akram, A.; Khan, N.A.; Amin, R.u. Autism spectrum disorder detection using facial images: A performance comparison of pretrained convolutional neural networks. Healthc. Technol. Lett. 2024, 11, 227–239.
33. Fahaad Almufareh, M.; Tehsin, S.; Humayun, M.; Kausar, S. Facial Classification for Autism Spectrum Disorder. J. Disabil. Res. 2024, 3, 20240025.
34. Reddy, P. Diagnosis of Autism in Children Using Deep Learning Techniques by Analyzing Facial Features. Eng. Proc. 2024, 59, 198.
35. Mahamood, M.N.; Uddin, M.Z.; Shahriar, M.A.; Alnajjar, F.; Ahad, M.A.R. Autism Spectrum Disorder Classification via Local and Global Feature Representation of Facial Image. In Proceedings of the 2023 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Honolulu, HI, USA, 1–4 October 2023; pp. 1892–1897.
36. Mujeeb Rahman, K.; Subashini, M.M. Identification of autism in children using static facial features and deep neural networks. Brain Sci. 2022, 12, 94.
37. Alam, S.; Rashid, M.M. Enhanced Early Autism Screening: Assessing Domain Adaptation with Distributed Facial Image Datasets and Deep Federated Learning. IIUM Eng. J. 2025, 26, 113–128.
38. Hossain, S.S.; Al-Islam, F.; Islam, M.R.; Rahman, S.; Parvej, M.S. Autism Spectrum Disorder Identification from Facial Images Using Fine Tuned Pre-trained Deep Learning Models and Explainable AI Techniques. Semarak Int. J. Appl. Psychol. 2025, 5, 29–53.
39. Gerry. Autistic Children Data Set. 2020. Available online: https://www.kaggle.com/cihan063/autism-image-data (accessed on 2 July 2021).
40. Chaudhury, S.; Raw, S.; Biswas, A.; Gautam, A. An integrated approach of logarithmic transformation and histogram equalization for image enhancement. In Proceedings of the Fourth International Conference on Soft Computing for Problem Solving: SocProS 2014, Silchar, Assam, India, 27–29 December 2014; Springer: New Delhi, India, 2015; Volume 1, pp. 59–70.
41. Manikpuri, U.; Yadav, Y. Image enhancement through logarithmic transformation. Int. J. Innov. Res. Adv. Eng. (IJIRAE) 2014, 1, 357–362.
42. Bhosale, A. Log Transform. MATLAB Central File Exchange. 2023. Available online: https://www.mathworks.com/matlabcentral/fileexchange/50286-log-transform (accessed on 22 October 2023).
43. Alsakar, Y.M.; Sakr, N.A.; Elmogy, M. An enhanced classification system of various rice plant diseases based on multi-level handcrafted feature extraction technique. Sci. Rep. 2024, 14, 30601.
44. Guyon, I.; Elisseeff, A. An introduction to feature extraction. In Feature Extraction: Foundations and Applications; Springer: Berlin/Heidelberg, Germany, 2006; pp. 1–25.
45. Nader, N.; El-Gamal, F.E.Z.A.; Elmogy, M. Enhanced kinship verification analysis based on color and texture handcrafted techniques. Vis. Comput. 2024, 40, 2325–2346.
46. Mutlag, W.K.; Ali, S.K.; Aydam, Z.M.; Taher, B.H. Feature extraction methods: A review. J. Phys. Conf. Ser. 2020, 1591, 012028.
47. Addagarla, S.K.; Chakravarthi, G.K.; Anitha, P. Real time multi-scale facial mask detection and classification using deep transfer learning techniques. Int. J. 2020, 9, 4402–4408.
48. He, X.; Zhao, K.; Chu, X. AutoML: A survey of the state-of-the-art. Knowl.-Based Syst. 2021, 212, 106622.
49. Zoph, B.; Vasudevan, V.; Shlens, J.; Le, Q.V. Learning transferable architectures for scalable image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8697–8710.
50. Touvron, H.; Cord, M.; Douze, M.; Massa, F.; Sablayrolles, A.; Jégou, H. Training data-efficient image transformers & distillation through attention. In Proceedings of the 38th International Conference on Machine Learning (ICML), Virtual Event, 18–24 July 2021; PMLR: Birmingham, UK, 2021; Volume 139, pp. 10347–10357.
51. Wang, W.; Zhang, J.; Cao, Y.; Shen, Y.; Tao, D. Towards data-efficient detection transformers. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Cham, Switzerland, 2022; pp. 88–105.
52. Dai, Y.; Gieseke, F.; Oehmcke, S.; Wu, Y.; Barnard, K. Attentional feature fusion. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Virtual Event, 5–9 January 2021; pp. 3560–3569.
53. Shahzad, I.; Khan, S.U.R.; Waseem, A.; Abideen, Z.U.; Liu, J. Enhancing ASD classification through hybrid attention-based learning of facial features. Signal Image Video Process. 2024, 18, 475–488.
54. Dong, X.; Qin, Y.; Gao, Y.; Fu, R.; Liu, S.; Ye, Y. Attention-based multi-level feature fusion for object detection in remote sensing images. Remote Sens. 2022, 14, 3735.
55. An, L.; Wang, L.; Li, Y. HEA-Net: Attention and MLP hybrid encoder architecture for medical image segmentation. Sensors 2022, 22, 7024.
56. Fan, X.; Li, X.; Yan, C.; Fan, J.; Chen, L.; Wang, N. Converging Channel Attention Mechanisms with Multilayer Perceptron Parallel Networks for Land Cover Classification. Remote Sens. 2023, 15, 3924.
57. Dong, S.; Liu, J.; Han, B.; Wang, S.; Zeng, H.; Zhang, M. UMAP-Based All-MLP Marine Diesel Engine Fault Detection Method. Electronics 2025, 14, 1293.
58. Li, W.; Deng, Y.; Ding, M.; Wang, D.; Sun, W.; Li, Q. Industrial data classification using stochastic configuration networks with self-attention learning features. Neural Comput. Appl. 2022, 34, 22047–22069.
59. Du, W.; Fan, Z.; Yan, Y.; Yu, R.; Liu, J. AFMUNet: Attention Feature Fusion Network Based on a U-Shaped Structure for Cloud and Cloud Shadow Detection. Remote Sens. 2024, 16, 1574.
60. Alsakar, Y.M.; Elazab, N.; Nader, N.; Mohamed, W.; Ezzat, M.; Elmogy, M. Multi-label dental disorder diagnosis based on MobileNetV2 and swin transformer using bagging ensemble classifier. Sci. Rep. 2024, 14, 25193.
61. Arjunagi, S.; Patil, N. Texture based leaf disease classification using machine learning techniques. Int. J. Eng. Adv. Technol. (IJEAT) 2019, 9, 2249–8958.
62. Bonidia, R.P.; Sampaio, L.D.H.; Lopes, F.M.; Sanches, D.S. Feature extraction of long non-coding rnas: A fourier and numerical mapping approach. In Proceedings of the Iberoamerican Congress on Pattern Recognition, Havana, Cuba, 28–31 October 2019; Springer: Cham, Switzerland, 2019; pp. 469–479.
63. Wang, B.; Zhang, C.; Du, X.x.; Zhang, J.f. lncRNA-disease association prediction based on latent factor model and projection. Sci. Rep. 2021, 11, 19965.
64. Chowdhury, M.E.; Rahman, T.; Khandakar, A.; Ayari, M.A.; Khan, A.U.; Khan, M.S.; Al-Emadi, N.; Reaz, M.B.I.; Islam, M.T.; Ali, S.H.M. Automatic and reliable leaf disease detection using deep learning techniques. AgriEngineering 2021, 3, 294–312.
65. Weiss, K.; Khoshgoftaar, T.M.; Wang, D. A survey of transfer learning. J. Big Data 2016, 3, 9.
66. Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A. Inception-v4, inception-resnet and the impact of residual connections on learning. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; Volume 31.
67. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.
68. Tan, M.; Le, Q. Efficientnet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning (PMLR), Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.
69. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520.
70. Pereira, F.; Mitchell, T.; Botvinick, M. Machine learning classifiers and fMRI: A tutorial overview. Neuroimage 2009, 45, S199–S209.
71. Cristianini, N.; Shawe-Taylor, J. An Introduction to Support Vector Machines and Other Kernel-Based Learning Methods; Cambridge University Press: Cambridge, UK, 2000.
72. Safavian, S.R.; Landgrebe, D. A survey of decision tree classifier methodology. IEEE Trans. Syst. Man Cybern. 1991, 21, 660–674.
73. Sandika, B.; Avil, S.; Sanat, S.; Srinivasu, P. Random forest based classification of diseases in grapes from images captured in uncontrolled environments. In Proceedings of the 2016 IEEE 13th International Conference on Signal Processing (ICSP), Chengdu, China, 6–10 November 2016; pp. 1775–1780.
74. Chen, J.; Zeb, A.; Nanehkaran, Y.A.; Zhang, D. Stacking ensemble model of deep learning for plant disease recognition. J. Ambient Intell. Humaniz. Comput. 2023, 14, 12359–12372.
75. Vo, H.T.; Quach, L.D.; Hoang, T.N. Ensemble of deep learning models for multi-plant disease classification in smart farming. Int. J. Adv. Comput. Sci. Appl. 2023, 14, 1045–1054.

| Attribute | Value |
|---|---|
| Total Number of Images | 2936 |
| Number of Training Images | 2536 |
| Number of Validation Images | 100 |
| Number of Test Images | 300 |
| Age Range | 2 to 14 years old (mostly 2 to 8 years old) |

| Model | Classifier | Class | Recall (%) | Precision (%) | F1-Score (%) | Accuracy (%) | Overall Accuracy (%) |
|---|---|---|---|---|---|---|---|
| NASNetMobile | SVM (Linear) | Non_Autistic | 85 | 85 | 85 | 85 | 84.67 |
| | | Autistic | 85 | 85 | 85 | 85 | |
| | SVM (Poly) | Non_Autistic | 86 | 88 | 87 | 86 | 87 |
| | | Autistic | 88 | 86 | 87 | 88 | |
| | SVM (RBF) | Non_Autistic | 82 | 84 | 83 | 82 | 83.33 |
| | | Autistic | 85 | 82 | 84 | 85 | |
| | KNN | Non_Autistic | 85 | 75 | 79 | 85 | 78 |
| | | Autistic | 71 | 82 | 76 | 71 | |
| | DT | Non_Autistic | 83 | 81 | 82 | 83 | 81.33 |
| | | Autistic | 80 | 82 | 81 | 80 | |
| | RF | Non_Autistic | 89 | 92 | 90 | 89 | 90.33 |
| | | Autistic | 92 | 89 | 90 | 92 | |
| | Bagging | Non_Autistic | 91 | 92 | 92 | 91 | 91.67 |
| | | Autistic | 92 | 91 | 92 | 92 | |
| DeiT | SVM (Linear) | Non_Autistic | 85 | 85 | 85 | 85 | 85 |
| | | Autistic | 85 | 85 | 85 | 85 | |
| | SVM (Poly) | Non_Autistic | 91 | 88 | 90 | 91 | 89.67 |
| | | Autistic | 88 | 91 | 89 | 88 | |
| | SVM (RBF) | Non_Autistic | 85 | 87 | 86 | 85 | 86.33 |
| | | Autistic | 87 | 86 | 86 | 87 | |
| | KNN | Non_Autistic | 93 | 77 | 84 | 93 | 82.67 |
| | | Autistic | 72 | 92 | 81 | 72 | |
| | DT | Non_Autistic | 86 | 85 | 86 | 86 | 85.67 |
| | | Autistic | 85 | 86 | 86 | 85 | |
| | RF | Non_Autistic | 90 | 94 | 92 | 90 | 92.33 |
| | | Autistic | 95 | 90 | 93 | 95 | |
| | Bagging | Non_Autistic | 92 | 93 | 93 | 92 | 92.67 |
| | | Autistic | 93 | 92 | 93 | 93 | |
| InceptionResNetV2 | SVM (Linear) | Non_Autistic | 89 | 88 | 88 | 89 | 88 |
| | | Autistic | 87 | 89 | 88 | 87 | |
| | SVM (Poly) | Non_Autistic | 87 | 87 | 87 | 87 | 87 |
| | | Autistic | 87 | 87 | 87 | 87 | |
| | SVM (RBF) | Non_Autistic | 79 | 81 | 80 | 79 | 80.33 |
| | | Autistic | 81 | 80 | 81 | 81 | |
| | KNN | Non_Autistic | 69 | 84 | 76 | 69 | 78 |
| | | Autistic | 87 | 74 | 80 | 87 | |
| | DT | Non_Autistic | 87 | 84 | 86 | 87 | 85.33 |
| | | Autistic | 84 | 86 | 85 | 84 | |
| | RF | Non_Autistic | 93 | 89 | 91 | 93 | 90 |
| | | Autistic | 88 | 92 | 90 | 88 | |
| | Bagging | Non_Autistic | 91 | 90 | 91 | 91 | 90.66 |
| | | Autistic | 90 | 91 | 91 | 90 | |
| VGG16 | SVM (Linear) | Non_Autistic | 89 | 81 | 85 | 89 | 84 |
| | | Autistic | 79 | 88 | 83 | 79 | |
| | SVM (Poly) | Non_Autistic | 91 | 88 | 90 | 91 | 89.33 |
| | | Autistic | 87 | 91 | 89 | 87 | |
| | SVM (RBF) | Non_Autistic | 84 | 84 | 84 | 84 | 84 |
| | | Autistic | 84 | 84 | 84 | 84 | |
| | KNN | Non_Autistic | 92 | 73 | 81 | 92 | 78.67 |
| | | Autistic | 65 | 89 | 75 | 65 | |
| | DT | Non_Autistic | 83 | 84 | 84 | 83 | 83.67 |
| | | Autistic | 85 | 83 | 84 | 85 | |
| | RF | Non_Autistic | 85 | 86 | 86 | 85 | 86 |
| | | Autistic | 87 | 86 | 86 | 87 | |
| | Bagging | Non_Autistic | 91 | 88 | 90 | 91 | 89.33 |
| | | Autistic | 87 | 91 | 89 | 87 | |
| EfficientNetB0 | SVM (Linear) | Non_Autistic | 87 | 92 | 89 | 87 | 89.33 |
| | | Autistic | 92 | 87 | 90 | 92 | |
| | SVM (Poly) | Non_Autistic | 85 | 88 | 86 | 85 | 86.33 |
| | | Autistic | 88 | 85 | 87 | 88 | |
| | SVM (RBF) | Non_Autistic | 83 | 83 | 83 | 83 | 83.33 |
| | | Autistic | 83 | 83 | 83 | 83 | |
| | KNN | Non_Autistic | 66 | 85 | 74 | 66 | 77.33 |
| | | Autistic | 89 | 72 | 80 | 89 | |
| | DT | Non_Autistic | 83 | 86 | 84 | 83 | 84.67 |
| | | Autistic | 86 | 84 | 85 | 86 | |
| | RF | Non_Autistic | 89 | 86 | 88 | 89 | 87.33 |
| | | Autistic | 86 | 88 | 87 | 86 | |
| | Bagging | Non_Autistic | 85 | 88 | 86 | 85 | 86.33 |
| | | Autistic | 88 | 85 | 87 | 88 | |
| MobileNetV2 | SVM (Linear) | Non_Autistic | 88 | 87 | 88 | 87 | 87.67 |
| | | Autistic | 87 | 88 | 88 | 88 | |
| | SVM (Poly) | Non_Autistic | 89 | 89 | 89 | 89 | 89.33 |
| | | Autistic | 89 | 89 | 89 | 89 | |
| | SVM (RBF) | Non_Autistic | 85 | 88 | 86 | 85 | 86.67 |
| | | Autistic | 88 | 86 | 87 | 88 | |
| | KNN | Non_Autistic | 91 | 79 | 85 | 91 | 83.33 |
| | | Autistic | 75 | 90 | 82 | 75 | |
| | DT | Non_Autistic | 87 | 86 | 86 | 87 | 86.33 |
| | | Autistic | 86 | 87 | 86 | 86 | |
| | RF | Non_Autistic | 85 | 91 | 88 | 85 | 88 |
| | | Autistic | 91 | 86 | 88 | 91 | |
| | Bagging | Non_Autistic | 89 | 89 | 89 | 89 | 89.33 |
| | | Autistic | 89 | 89 | 89 | 89 | |

| Model | Classifier | Metric | Classes Name | Overall Accuracy (%) | |||

|---|---|---|---|---|---|---|---|

| Recall (%) | Precision (%) | F1-Score (%) | Accuracy (%) | ||||

| InceptionResNetV2 + DeiT | SVM (Linear) | Non_Autistic | 93 | 90 | 91 | 93 | 91.33 |

| Autistic | 90 | 92 | 91 | 90 | |||

| SVM (Poly) | Non_Autistic | 93 | 92 | 93 | 93 | 92.67 | |

| Autistic | 92 | 93 | 93 | 92 | |||

| SVM (RBF) | Non_Autistic | 91 | 90 | 91 | 91 | 90.67 | |

| Autistic | 90 | 91 | 91 | 90 | |||

| KNN | Non_Autistic | 67 | 89 | 77 | 67 | 79.33 | |

| Autistic | 91 | 74 | 82 | 91 | |||

| DT | Non_Autistic | 87 | 82 | 84 | 87 | 84 | |

| Autistic | 81 | 86 | 84 | 81 | |||

| RF | Non_Autistic | 94 | 90 | 92 | 94 | 91.67 | |

| Autistic | 89 | 94 | 91 | 89 | |||

| Bagging | Non_Autistic | 95 | 93 | 94 | 95 | 94 | |

| Autistic | 93 | 95 | 94 | 93 | |||

| VGG16 + DeiT | SVM (Linear) | Non_Autistic | 88 | 90 | 89 | 88 | 89 |

| Autistic | 90 | 88 | 89 | 90 | |||

| SVM (Poly) | Non_Autistic | 87 | 92 | 90 | 87 | 90 | |

| Autistic | 93 | 88 | 90 | 93 | |||

| SVM (RBF) | Non_Autistic | 88 | 91 | 89 | 88 | 89.67 | |

| Autistic | 91 | 88 | 90 | 91 | |||

| KNN | Non_Autistic | 88 | 82 | 85 | 88 | 84.33 | |

| Autistic | 81 | 87 | 84 | 81 | |||

| DT | Non_Autistic | 87 | 87 | 87 | 87 | 87 | |

| Autistic | 87 | 87 | 87 | 87 | |||

| RF | Non_Autistic | 96 | 91 | 94 | 96 | 93.33 | |

| Autistic | 91 | 96 | 93 | 91 | |||

| Bagging | Non_Autistic | 90 | 95 | 92 | 90 | 92.67 | |

| Autistic | 95 | 91 | 93 | 95 | |||

| EfficientNetV2B0 + DeiT | SVM (Linear) | Non_Autistic | 88 | 91 | 89 | 88 | 89.67 |

| Autistic | 91 | 88 | 90 | 91 | |||

| SVM (Poly) | Non_Autistic | 91 | 90 | 90 | 91 | 90.33 | |

| Autistic | 90 | 91 | 90 | 90 | |||

| SVM (RBF) | Non_Autistic | 93 | 91 | 92 | 93 | 92 | |

| Autistic | 91 | 93 | 92 | 91 | |||

| KNN | Non_Autistic | 67 | 96 | 79 | 67 | 82.33 | |

| Autistic | 97 | 75 | 85 | 97 | |||

| DT | Non_Autistic | 89 | 87 | 88 | 89 | 87.67 | |

| Autistic | 87 | 88 | 88 | 87 | |||

| RF | Non_Autistic | 96 | 90 | 93 | 96 | 92.67 | |

| Autistic | 89 | 96 | 92 | 89 | |||

| Bagging | Non_Autistic | 93 | 93 | 93 | 93 | 93 | |

| Autistic | 93 | 93 | 93 | 93 | |||

| MobileNetV2 + DeiT | SVM (Linear) | Non_Autistic | 90 | 91 | 90 | 90 | 90.33 |

| Autistic | 91 | 90 | 90 | 91 | |||

| SVM (Poly) | Non_Autistic | 92 | 91 | 91 | 92 | 91.33 | |

| Autistic | 91 | 92 | 91 | 91 | |||

| SVM (RBF) | Non_Autistic | 92 | 92 | 92 | 92 | 92 | |

| Autistic | 92 | 92 | 92 | 92 | |||

| KNN | Non_Autistic | 72 | 91 | 80 | 72 | 82.33 | |

| Autistic | 93 | 77 | 84 | 93 | |||

| DT | Non_Autistic | 87 | 86 | 86 | 87 | 86.33 | |

| Autistic | 86 | 87 | 86 | 86 | |||

| RF | Non_Autistic | 97 | 91 | 94 | 97 | 93.33 | |

| Autistic | 90 | 96 | 93 | 90 | |||

| Bagging | Non_Autistic | 95 | 93 | 94 | 95 | 94 | |

| Autistic | 93 | 95 | 94 | 93 | |||

| NASNetMobile + DeiT | SVM (Linear) | Non_Autistic | 93 | 90 | 91 | 93 | 91.33 |

| Autistic | 90 | 92 | 91 | 90 | |||

| SVM (Poly) | Non_Autistic | 93 | 91 | 92 | 93 | 92 | |

| Autistic | 91 | 93 | 92 | 91 | |||

| SVM (RBF) | Non_Autistic | 91 | 90 | 90 | 91 | 90.33 | |

| Autistic | 89 | 91 | 90 | 89 | |||

| KNN | Non_Autistic | 82 | 91 | 86 | 82 | 87 | |

| Autistic | 92 | 84 | 88 | 92 | |||

| DT | Non_Autistic | 87 | 87 | 87 | 87 | 86.67 | |

| Autistic | 87 | 87 | 87 | 87 | |||

| RF | Non_Autistic | 95 | 90 | 93 | 95 | 92.33 | |

| Autistic | 90 | 94 | 92 | 90 | |||

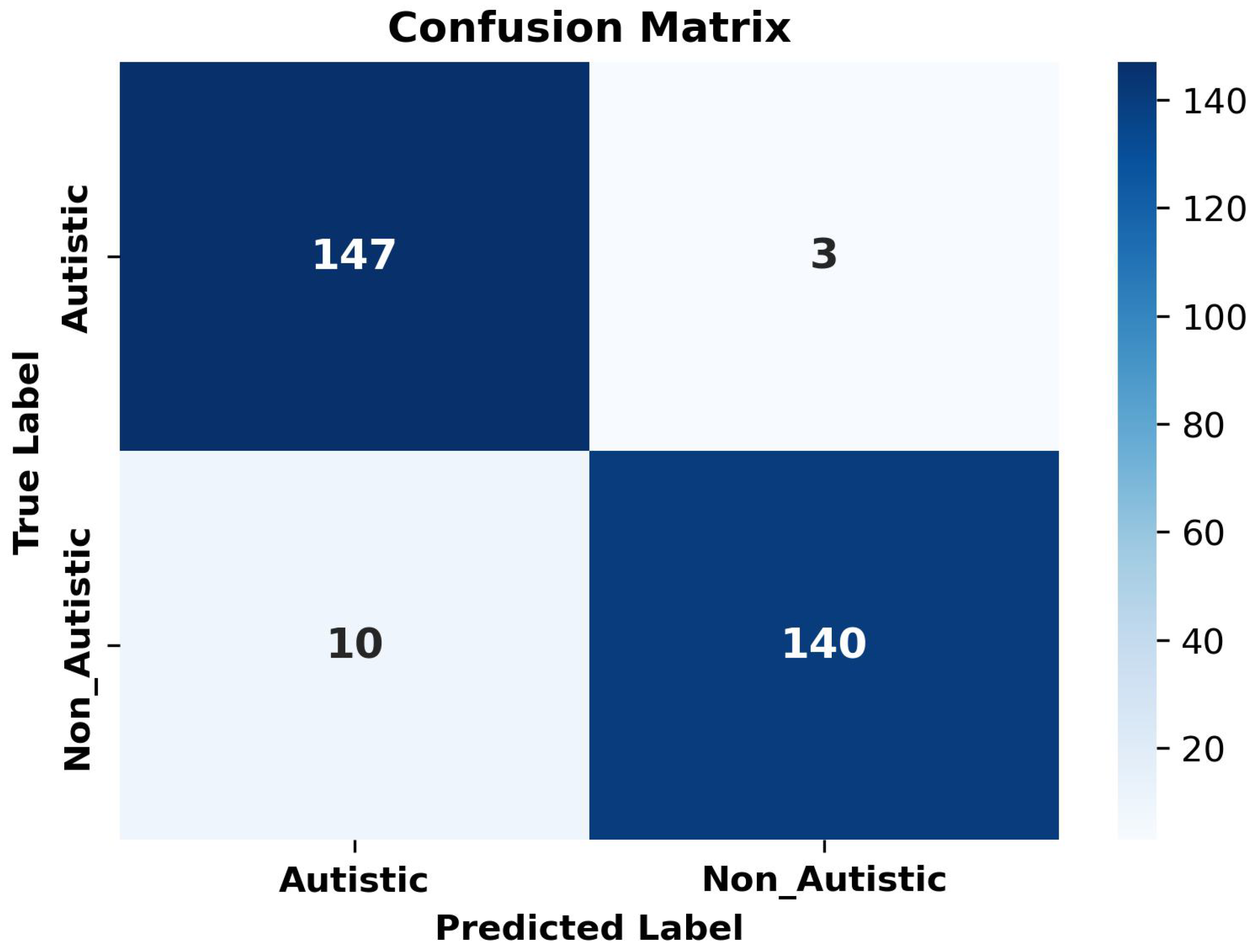

| Bagging | Non_Autistic | 98 | 94 | 96 | 98 | 95.67 | |

| Autistic | 93 | 98 | 96 | 93 | |||

| Metric | Value |
|---|---|
| Class-Specific Accuracy | |
| Autistic | 0.9800 |
| Non-Autistic | 0.9300 |
| Average Metrics | |
| Precision | 0.9577 |
| Recall | 0.9567 |
| F1-Score | 0.9566 |
| Overall Accuracy | 0.9567 |
| Statistical Significance | |
| Mann–Whitney U p-value | <0.0001 |
| 95% CI for Accuracy | [0.9300, 0.9800] |
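The averaged metrics in this table can be related to confusion-matrix counts with a short worked example. The counts below are an assumption rather than reported data: they suppose the 300 test images are split evenly into 150 per class, with 147 and 140 correct predictions respectively, and the class names `a` and `b` are placeholders.

```python
def per_class_metrics(tp, fp, fn):
    """Return (precision, recall, F1) for one class from its confusion counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1

# Assumed counts (not reported in the source): 150 test images per class.
correct_a, wrong_a = 147, 3     # class a: 3 images predicted as class b
correct_b, wrong_b = 140, 10    # class b: 10 images predicted as class a

# A false positive for one class is a misclassified image of the other class.
p_a, r_a, f_a = per_class_metrics(tp=correct_a, fp=wrong_b, fn=wrong_a)
p_b, r_b, f_b = per_class_metrics(tp=correct_b, fp=wrong_a, fn=wrong_b)

macro_precision = (p_a + p_b) / 2
macro_recall = (r_a + r_b) / 2
macro_f1 = (f_a + f_b) / 2
total = correct_a + wrong_a + correct_b + wrong_b
accuracy = (correct_a + correct_b) / total

print(f"{macro_precision:.4f} {macro_recall:.4f} {macro_f1:.4f} {accuracy:.4f}")
# prints: 0.9577 0.9567 0.9566 0.9567
```

Under these assumed counts the macro-averaged precision, recall, and F1-score and the overall accuracy agree with the tabulated values to four decimal places, which suggests the reported averages are unweighted means over the two classes.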

| Paper | Year | Recall (%) | Precision (%) | F1-Score (%) | Accuracy (%) |
|---|---|---|---|---|---|
| Akter et al. [29] | 2021 | - | - | - | 92.10 |
| Li et al. [30] | 2023 | 92.33 | - | 90.67 | 90.5 |
| Melinda et al. [31] | 2024 | 90 | 85.9 | 87 | 85.9 |
| Ahmad et al. [32] | 2024 | - | - | - | 92 |
| Fahaad et al. [33] | 2024 | - | - | - | 77 |
| Reddy et al. [34] | 2024 | - | - | - | 87.9 |
| Mahamood et al. [35] | 2023 | 95.3 | 94 | 94.6 | 94.7 |
| Mujeeb et al. [36] | 2022 | 88.46 | 92 | - | 90 |
| Alam et al. [37] | 2025 | 91 | 91 | 91 | 91 |
| Hossain et al. [38] | 2025 | 92 | 92 | 90 | 90.33 |
| Proposed Methodology | 2025 | 95.67 | 95.77 | 95.66 | 95.67 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Altomi, Z.A.; Alsakar, Y.M.; El-Gayar, M.M.; Elmogy, M.; Fouda, Y.M. Autism Spectrum Disorder Diagnosis Based on Attentional Feature Fusion Using NasNetMobile and DeiT Networks. Electronics 2025, 14, 1822. https://doi.org/10.3390/electronics14091822
