Abstract

Advanced Persistent Threat (APT) refers to a highly targeted, sophisticated, and prolonged form of cyberattack, typically directed at specific organizations or individuals. The primary objective of such attacks is the theft of sensitive information or the disruption of critical operations. APT attacks are characterized by their stealth and complexity, often resulting in significant economic losses. Furthermore, these attacks may lead to intelligence breaches, operational interruptions, and even jeopardize national security and political stability. Given the covert nature and extended durations of APT attacks, current detection solutions encounter challenges such as high detection difficulty and insufficient accuracy. To address these limitations, this paper proposes an innovative high-accuracy APT attack detection model, CNN-KOA-BiGRU, which integrates Convolutional Neural Networks (CNN), Bidirectional Gated Recurrent Units (BiGRU), and the Kepler optimization algorithm (KOA). The model first utilizes CNN to extract spatial features from network traffic data, followed by the application of BiGRU to capture temporal dependencies and long-term memory, thereby forming comprehensive temporal features. Simultaneously, the Kepler optimization algorithm is employed to optimize the BiGRU network structure, achieving globally optimal feature weights and enhancing detection accuracy. Additionally, this study employs a combination of sampling techniques, including Synthetic Minority Over-sampling Technique (SMOTE) and Tomek links, to mitigate classification bias caused by dataset imbalance. Evaluation results on the CSE-CIC-IDS2018 experimental dataset demonstrate that the CNN-KOA-BiGRU model achieves superior performance in detecting APT attacks, with an average accuracy of 98.68%. This surpasses existing methods, including CNN (93.01%), CNN-BiGRU (97.77%), and Graph Convolutional Network (GCN) (95.96%) on the same dataset. 
Specifically, the proposed model improves accuracy by 5.67 percentage points over CNN, 0.91 percentage points over CNN-BiGRU, and 2.72 percentage points over GCN, an average improvement of 3.1 percentage points over these existing methods.

1. Introduction

Advanced Persistent Threat (APT) attacks are a complex and long-term type of cyber-attack, typically carried out by state actors or highly skilled hackers. The targets of APT attacks are often specific organizations or individuals, with the aim of stealing sensitive information or conducting espionage activities [1,2]. APT attacks are known for their stealthiness and persistence, allowing attackers to maintain access privileges for a long period of time and gradually obtain valuable data [3].

A notable example is the 2022 attack on Northwestern Polytechnical University in China, in which attackers used advanced techniques to infiltrate the university’s network [4]. They first gained access through phishing emails and malware, then escalated privileges to penetrate deep into the core network and databases, stealing significant amounts of research data. For an extended period, the attackers evaded detection by using sophisticated techniques to bypass traditional security measures. In recent years, the frequency of APT attacks has increased rapidly. According to the ‘Global Advanced Persistent Threat (APT) 2023 Mid-Year Report’ by the Qi’an’xin Threat Intelligence Center [5], APT incidents surged by 30% in 2023, with the government, financial, and high-tech sectors as the primary targets. In China, for instance, the report notes that entities in government, energy, scientific research and education, and financial trade were predominantly affected, with notable attacks also observed in the technology, defense, and healthcare sectors. By 2024, APT tactics had evolved further, and cloud computing and IoT devices had become new focal points for attackers. These reports highlight the growing challenge posed by such sophisticated threats: the increasing use of AI and machine learning in both attack and defense has improved threat detection but has also introduced new layers of complexity.

Traditional attack detection methods struggle to mitigate such attacks. Traditional detection systems are often rule-based [6] and are ineffective against the advanced evasion techniques used by APT attackers, resulting in low detection accuracy and inadequate prevention capabilities. Recognizing these challenges, researchers have developed detection schemes based on machine learning or deep learning [7] that can substantially improve detection accuracy. For example, a decision tree (DT) builds classification or regression models by evaluating conditions on data features: network traffic data are collected, relevant features are extracted, and a decision tree model is then built for training and testing. Convolutional Neural Networks (CNNs) extract features automatically through convolutional layers: network traffic data are collected, preprocessed, and transformed into a two-dimensional matrix, after which a CNN model is built for training and testing. The CNN with Bidirectional Gated Recurrent Unit (CNN-BiGRU) model combines the advantages of CNNs and BiGRUs: the CNN extracts spatial features, while the BiGRU captures time-series features, which makes the combination suitable for spatiotemporal data. However, BiGRU models usually rely on stochastic gradient descent to optimize their training weights, which does not guarantee a correct iteration direction or global optimality and can therefore lower prediction accuracy. Graph Convolutional Neural Networks (GCNs) detect APT attacks by constructing and simplifying heterogeneous knowledge graphs, extracting CVE node features, and feeding them into the GCN. This method improves detection accuracy and automates feature learning, yet it faces challenges in model updating and information retention, requiring ongoing improvement to counter evolving network threats.
Flaws in APT attack detection remain, and detection accuracy may be affected by the quality and quantity of training data [8]. The Khoury research group [9] constructed a time-varying graph neural network architecture that reformulates lateral movement detection in enterprise networks as a dynamic edge-weight prediction problem and captures the dynamic evolution of the network topology through time-sensitive feature propagation. Eke and Petrovski [10] proposed a deep-learning-based APT detection framework, "APT-DASAC", which identifies APT behavior at different stages through a multi-stage detection mechanism; it learns attack patterns from sequence data and predicts attacks with an ensemble probability method. Data integrity and class imbalance can also degrade model performance. In addition, a lack of real-time detection and response capabilities makes it difficult to cope with large-scale attacks. These issues highlight the need for continued development of APT attack detection technology to cope with increasingly complex network threats.

To address these issues, we propose a new model, CNN-KOA-BiGRU, which uses a CNN to extract spatial features from traffic data and feeds the extracted features into a BiGRU. The BiGRU captures the temporal dependencies and long-term memory of the data, forming comprehensive temporal features. At the same time, the model uses the Kepler Optimization Algorithm (KOA) [11] to optimize the BiGRU network structure, seeking globally optimal feature weights and improving detection accuracy. Recent studies highlight the limitations of traditional optimization methods (e.g., GA and PSO) in high-dimensional neural network weight spaces, where they tend to converge prematurely. In contrast, KOA’s planetary-motion-inspired mechanism adjusts weights dynamically, converging faster (a 20% reduction in iterations) and avoiding local minima. This matches our need to optimize the BiGRU for APT detection, where temporal dependencies demand robust global optimization. Unlike protocol-based methods (e.g., TLS, QUIC), which rely on predefined rules and struggle with zero-day attacks, our model learns adaptively from raw traffic data, addressing the complexity and stealth of modern APTs. In addition, we combine SMOTE oversampling with Tomek links to mitigate the classification bias caused by dataset imbalance.

The contributions of this paper are as follows:

- We introduce a novel model, CNN-KOA-BiGRU, for APT attack detection that integrates a CNN, the Kepler Optimization Algorithm, and a BiGRU. To the best of our knowledge, this is the first application of this combination to APT detection.

- To counter the training bias caused by the imbalance of the APT attack sample dataset, we use a combined sampling method that integrates SMOTE and Tomek Links. SMOTE synthesizes new minority-class samples, while Tomek Links remove overlapping data points between classes. Together they enhance class separation and yield a more balanced dataset.

- We evaluate our model on the CSE-CIC-IDS2018 dataset and compare it with state-of-the-art solutions. The proposed scheme achieves an average accuracy of 98.68%, surpassing all compared methods: an improvement of 5.67 percentage points over CNN, 0.91 over CNN-BiGRU, and 2.72 over GCN.
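The reported gains are differences in percentage points between the proposed model's 98.68% and each baseline's accuracy, and the 3.1-point average is their arithmetic mean. The short check below only re-derives these numbers from the accuracies stated in the text; it does not reproduce any experiment.

```python
# Reported average accuracies (%) on CSE-CIC-IDS2018, as stated in the text.
proposed = 98.68
baselines = {"CNN": 93.01, "CNN-BiGRU": 97.77, "GCN": 95.96}

# Improvement of the proposed model over each baseline, in percentage points.
gains = {name: round(proposed - acc, 2) for name, acc in baselines.items()}
average_gain = round(sum(gains.values()) / len(gains), 2)

print(gains)         # {'CNN': 5.67, 'CNN-BiGRU': 0.91, 'GCN': 2.72}
print(average_gain)  # 3.1
```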

The remainder of this paper is organized as follows: Section 2 reviews research on APT attack detection, tracing the evolution from traditional methods to machine learning and deep learning techniques. Section 3 presents the proposed APT attack detection approach, CNN-KOA-BiGRU, which integrates convolutional neural networks with bidirectional gated recurrent units, and describes the data preprocessing process, the model architecture, and each component of the model in detail. Section 4 assesses the effectiveness and efficiency of the proposed method. Finally, we summarize the paper and outline future work.

2. Related Work

To date, numerous studies have attempted to detect Advanced Persistent Threats (APTs), yet due to their complexity and stealth, differentiating them from regular traffic remains challenging. According to the techniques used in these studies, we divide them into rule-based detection, machine learning-based detection, and deep learning-based detection.

2.1. Rule-Based Detection Methods

In APT attack detection, existing methods often rely on rules defined manually from network defense knowledge. Systems such as PrioTracker [12], SLEUTH [13], and HOLMES [14] use predefined rules to prioritize events, tag data, and map audit data to suspicious behaviors. Although these rule-based strategies offer high accuracy and interpretability, they have significant limitations: they struggle to detect new or unknown attacks, rely heavily on known characteristics, and lack contextual awareness. The rise in zero-day vulnerabilities, as evidenced by the 2021 report by Zero, makes it increasingly difficult to detect and correlate seemingly unrelated security events. More advanced detection methods are needed to address these challenges.

2.2. Machine Learning-Based Detection Methods

Machine learning has become increasingly prominent in APT detection, primarily because it can process vast datasets and uncover patterns indicative of malicious activity. In security analytics, supervised learning algorithms such as Random Forests (RF), Support Vector Machines (SVMs), and Gradient Boosting Machines (GBMs) are commonly applied to detect and classify malicious activities, classifying network traffic as either benign or malicious on the basis of predefined features. For example, Xuan et al. [15] proposed an APT detection system based on random forests: it extracts features from network traffic, such as packet size, arrival time, and protocol type, to train classifiers that distinguish benign from malicious traffic. On a publicly available dataset with simulated APT behaviors, this approach achieved an accuracy of 99.99% with a detection time of 0.2 s. Similarly, Nguyen Hoa Cuong et al. [16] developed an SVM-based APT detection system that combines statistical, traffic, and content-based features extracted from network traffic data. Their experimental results show that SVM models detect APT attacks with high accuracy. Emad et al. [17] evaluated supervised machine learning techniques for intrusion detection across multiple datasets but found that detection rates deteriorated after feature selection and that the models struggled to capture complex patterns, particularly on newer datasets.

2.3. Deep Learning-Based Detection Methods

Because of these limitations of machine learning, researchers began to explore deep learning techniques, which show great potential for learning complex patterns from raw data. Deep learning models, including Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), and their variants, have gained traction in APT detection because they can automatically learn hierarchical representations directly from raw data, improving detection accuracy. For example, Azizjon et al. [18] proposed a CNN-based APT detection system that combines 1D and 2D CNNs to extract spatial features from network traffic data and achieves high accuracy in detecting APT attacks. Mutalib et al. [19] developed an APT detection system based on a BiLSTM network, a type of RNN that captures temporal patterns in network traffic data, and achieved competitive performance in detecting APT attacks. Sunanda et al. [20] found that artificial neural networks perform well in detecting network intrusions across several datasets, but they did not consider other models such as CNNs or evaluate performance with the Area Under the Curve (AUC) score. Zhao et al. [21] developed a hybrid Intrusion Detection System (IDS) using a weighted overlay classification method for feature selection, achieving 87.44% accuracy on the NSL-KDD dataset and 99.87% on the CSE-CIC-IDS2018 dataset, but they did not fully detail the preprocessing steps or report feature importance and correlation values. Do Xuan et al. [22] proposed a method for detecting APT attacks with deep learning models, in particular by integrating Multi-Layer Perceptrons (MLPs), CNNs, and Long Short-Term Memory (LSTM) networks to detect indicators of APT attacks.

3. Methodology

3.1. Model Overview

Traditional neural networks may struggle to fully extract feature information from network traffic because of discretization, which lowers recognition accuracy. Moreover, APT attacks have special attributes, such as a slow attack process, that make them difficult for conventional intrusion detection systems to detect. APT attacks also excel at disguise and low-frequency transmission, so anomalies are hard to capture by monitoring only the total size of packet transmissions and destination ports. To enhance detection accuracy and minimize false positives, this study introduces a novel detection model, CNN-KOA-BiGRU, which leverages the strengths of Convolutional Neural Networks (CNN) and bidirectional gated recurrent units (BiGRU) to identify APT attacks within network traffic. First, the convolutional layers of the CNN capture local patterns in network traffic data by sliding convolutional kernels over feature vectors. These local patterns are crucial for detecting abnormal traffic, as many abnormal traffic types exhibit distinctive local features. Second, network traffic data exhibit significant temporal dependencies that traditional static feature extraction methods may fail to capture. BiGRU captures both temporal dependencies and long-term memory within the data: its forward and backward units process the feature vector sequence in the forward and backward directions, respectively, and their outputs are concatenated so that the model can use the complete temporal context. Meanwhile, we introduce the KOA intelligent optimization algorithm to optimize the weights of the BiGRU model. KOA iteratively evaluates the fitness of candidate weights and updates them toward better solutions, which helps prevent the BiGRU model from getting stuck in local minima and thus enhances prediction accuracy.
This study focuses on enterprise LAN environments (as simulated in the large-scale network used to generate the public CSE-CIC-IDS2018 dataset [23]), where APT attacks often exhibit low-frequency, long-duration traffic patterns. The unique challenges include (1) distinguishing slow exfiltration from benign background traffic and (2) handling imbalanced class distributions (e.g., rare attack instances). Our SMOTE-Tomek hybrid sampling targets these challenges by enhancing minority-class representation while removing ambiguous samples.

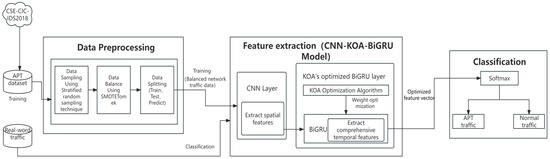

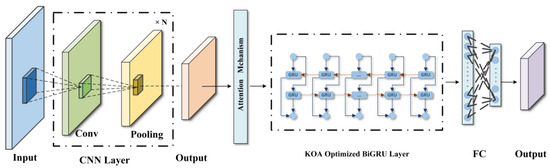

The overall framework of the model includes the data preparation and preprocessing stage, the feature extraction stage, and the attack classification stage. The overall framework is shown in Figure 1.

Figure 1.

Model overview.

- Data preparation and preprocessing stage: The raw network traffic data are prepared and preprocessed with the SMOTE-Tomek technique to address class imbalance. SMOTE-Tomek balances the numbers of normal traffic samples and APT attack samples in the training data, so that the model learns from the different types of data in a balanced manner during training. The balanced network traffic data are then passed to the feature extraction stage.

- Feature extraction stage: The balanced network traffic data are used as input, and features are extracted with the CNN-KOA-BiGRU model. First, the data pass through the CNN layer, whose convolutional and pooling layers extract local features from the network traffic data. The extracted feature vectors are then fed into the BiGRU, which models the context of the CNN features and captures temporal dependencies and long-term memory in the data. Finally, the KOA intelligent optimization algorithm optimizes the weights of the BiGRU layer: it evaluates the fitness of the weights and updates them iteratively in search of the global optimum, thereby enhancing the model’s recognition accuracy.

- Attack classification stage: The optimized feature vectors are used as input and compared with labeled APT attack feature vectors, and the model is trained iteratively until it converges. The feature vectors are fed into the fully connected layer, where an appropriate activation function produces the output prediction that classifies the input network traffic as normal traffic or an APT attack.
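A minimal sketch of the attack classification stage, assuming a single fully connected layer with a sigmoid output that is thresholded at 0.5 and trained with binary cross-entropy. The text only specifies "appropriate activation functions", so the sigmoid, the threshold, the loss, and the helper names `classify` and `bce_loss` are illustrative assumptions. The 32-dimensional toy features stand in for the optimized feature vectors.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def classify(features, W, b, threshold=0.5):
    """Hypothetical head: (batch, d) feature vectors -> P(APT) and 0/1 labels."""
    probs = sigmoid(features @ W + b)                 # fully connected layer + sigmoid
    return probs, (probs >= threshold).astype(int)    # 0 = normal, 1 = APT attack

def bce_loss(probs, y, eps=1e-12):
    """Binary cross-entropy, a common training objective for a sigmoid output."""
    probs = np.clip(probs, eps, 1 - eps)
    return float(-np.mean(y * np.log(probs) + (1 - y) * np.log(1 - probs)))

rng = np.random.default_rng(0)
feats = rng.normal(size=(4, 32))      # toy batch: 4 feature vectors of size 32
W, b = rng.normal(size=32), 0.0
probs, labels = classify(feats, W, b)
```

During training, `bce_loss(probs, y)` would be minimized against the 0/1 labels until convergence.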

3.2. Data Preprocessing

Data preprocessing is a critical step in data analysis that aims to ensure that all datasets are usable and actionable. To achieve this goal, we use the following techniques and steps to ensure the quality and accuracy of the data. First, because the CSE-CIC-IDS2018 dataset consists of multiple CSV files containing millions of records, we use stratified random sampling to reduce processing time and computing resource consumption.

Specifically, we apply stratified random sampling to each CSV file with a sampling fraction of frac = 0.002 (0.2% of the records in each file). Sampling techniques such as stratified sampling and cluster sampling preserve the representativeness of the dataset while significantly reducing the volume of data to be processed.
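Per-file stratified sampling at `frac = 0.002` can be sketched with pandas' `DataFrameGroupBy.sample` (available since pandas 1.1). This draws the same fraction from every class, so class proportions are preserved. The label column name `Label`, the random seed, and the helper name `stratified_sample` are assumptions for illustration, and the toy frame stands in for one CSV file.

```python
import pandas as pd

def stratified_sample(df: pd.DataFrame, label_col: str = "Label",
                      frac: float = 0.002, seed: int = 42) -> pd.DataFrame:
    """Sample the same fraction from every class so class proportions are kept."""
    return (
        df.groupby(label_col, group_keys=False)
          .sample(frac=frac, random_state=seed)
          .reset_index(drop=True)
    )

# Toy frame standing in for one CSE-CIC-IDS2018 CSV file.
toy = pd.DataFrame({"Flow Duration": range(10_000),
                    "Label": ["Benign"] * 9_000 + ["Bot"] * 1_000})
sampled = stratified_sample(toy)
print(sampled["Label"].value_counts())
```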

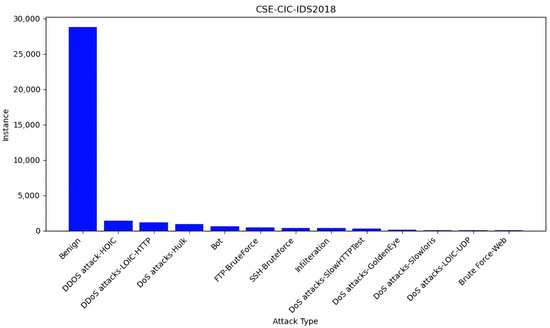

Second, we concatenated the CSV files [24]. Before concatenation, we removed extra features from the “02-20-2018.csv” file, such as the “Dst IP”, “Src Port”, “Src IP”, and “Flow ID” columns. We then concatenated all the CSV files into one file for subsequent processing, which simplifies the data processing flow and ensures data consistency. The records of the resulting dataset are shown in Table 1 and Figure 2.
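The column-dropping and concatenation step might look like the following sketch. The helper name `concat_daily_files` is hypothetical. The frames are passed in already loaded (for example with `pd.read_csv`) and keyed by file name. The second file name and the toy values are placeholders, not real records.

```python
import pandas as pd

EXTRA_COLS = ["Dst IP", "Src Port", "Src IP", "Flow ID"]   # columns named in the text

def concat_daily_files(frames: dict) -> pd.DataFrame:
    """Drop the extra identifier columns from 02-20-2018.csv, then stack all files."""
    cleaned = []
    for name, df in frames.items():
        if name == "02-20-2018.csv":
            df = df.drop(columns=EXTRA_COLS, errors="ignore")  # no error if already absent
        cleaned.append(df)
    return pd.concat(cleaned, ignore_index=True)

day_a = pd.DataFrame({"Flow ID": ["f1"], "Src IP": ["10.0.0.1"], "Src Port": [5000],
                      "Dst IP": ["10.0.0.2"], "Dst Port": [80], "Label": ["Benign"]})
day_b = pd.DataFrame({"Dst Port": [443], "Label": ["Bot"]})
merged = concat_daily_files({"02-20-2018.csv": day_a, "other-day.csv": day_b})
```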

Table 1.

Dataset records in the CSE-CIC-IDS2018 dataset.

Figure 2.

Attack instances in the CSE-CIC-IDS2018 dataset.

When performing feature engineering and data cleaning, we adopted various strategies to purify the dataset. First, we removed features that were irrelevant to APT classification, such as “timestamp”. Subsequently, we addressed NaN/missing values, flagged target column values, eliminated invalid and constant features, resolved duplicate data issues, generated dummy variables, and assigned binary codes of 0 and 1 to normal and abnormal traffic, respectively. In addition, to address attack scenarios that were not previously handled, we aggregated all attack categories into a single category called “abnormal attack class”.
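The cleaning steps can be sketched in pandas as follows. The column names `Timestamp`, `Label`, and `Protocol`, the benign label value `Benign`, and the choice of `Protocol` as the column to one-hot encode are assumptions about the CSV layout, not details given in the text. The order of the steps is one reasonable choice among several.

```python
import numpy as np
import pandas as pd

def clean(df: pd.DataFrame, label_col: str = "Label") -> pd.DataFrame:
    df = df.drop(columns=["Timestamp"], errors="ignore")     # not useful for classification
    df = df.replace([np.inf, -np.inf], np.nan).dropna()      # infinite / missing values
    df = df.drop_duplicates()
    # Constant features carry no information for the classifier.
    constant = [c for c in df.columns if c != label_col and df[c].nunique() <= 1]
    df = df.drop(columns=constant)
    # Dummy variables for the (assumed) categorical protocol column.
    if "Protocol" in df.columns:
        df = pd.get_dummies(df, columns=["Protocol"], dtype=int)
    # Collapse all attack families into one abnormal class: 0 = normal, 1 = abnormal.
    df[label_col] = (df[label_col] != "Benign").astype(int)
    return df.reset_index(drop=True)

toy = pd.DataFrame({
    "Timestamp": ["t1", "t2", "t3", "t4", "t5"],
    "Protocol": [6, 17, 6, 6, 6],
    "Flow Duration": [1.0, 2.0, np.inf, 4.0, 1.0],
    "Const": [0, 0, 0, 0, 0],
    "Label": ["Benign", "DDoS", "Bot", "Benign", "Benign"],
})
cleaned = clean(toy)   # the inf row and the duplicate row are dropped
```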

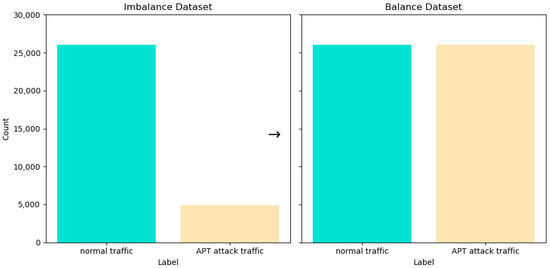

To tackle class imbalance in the dataset, the model employs the SMOTE-Tomek technique, which combines the SMOTE and Tomek Links methods. SMOTE oversamples the minority class by creating new synthetic samples, increasing the number of attack traffic samples to match the number of normal traffic samples. Tomek Links then removes pairs of data points that are each other’s nearest neighbors but belong to different classes, which enhances the separation between classes. The processed minority-class and majority-class samples are merged to form a balanced dataset. As Figure 3 shows, after SMOTE-Tomek is applied, the CSE-CIC-IDS2018 dataset is fully balanced between the normal (0) and abnormal (1) classes.
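A minimal NumPy sketch of SMOTE followed by Tomek-link removal is shown below. It illustrates the technique under stated assumptions and is not the authors' implementation. The assumptions are:

- binary labels 0/1 and Euclidean distances;
- a full pairwise distance matrix built in memory (fine for toy data, not for millions of flows);
- k = 5 minority neighbours for SMOTE;
- removal of both members of each Tomek link, as the text describes.

Library implementations may differ; some remove only the majority-class member of each link. In practice, a maintained implementation such as imbalanced-learn's `SMOTETomek` would normally be used.

```python
import numpy as np

def pairwise_dist(X):
    """Euclidean distance matrix with +inf on the diagonal (a point is not its own neighbour)."""
    d = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
    np.fill_diagonal(d, np.inf)
    return d

def smote(X_min, n_new, k=5, rng=None):
    """Create n_new synthetic points between minority samples and their k nearest minority neighbours."""
    rng = np.random.default_rng(rng)
    k = min(k, len(X_min) - 1)
    neighbours = np.argsort(pairwise_dist(X_min), axis=1)[:, :k]
    base = rng.integers(0, len(X_min), size=n_new)             # random minority sample
    nb = neighbours[base, rng.integers(0, k, size=n_new)]      # one of its k neighbours
    gap = rng.random((n_new, 1))                                # position along the segment
    return X_min[base] + gap * (X_min[nb] - X_min[base])

def tomek_links(X, y):
    """Boolean mask of samples in a Tomek link: mutual nearest neighbours with different labels."""
    nn = pairwise_dist(X).argmin(axis=1)
    return (nn[nn] == np.arange(len(X))) & (y != y[nn])

def smote_tomek(X, y, k=5, rng=None):
    counts = np.bincount(y)
    minority = counts.argmin()
    n_new = counts.max() - counts.min()                # oversample up to the majority count
    X_syn = smote(X[y == minority], n_new, k=k, rng=rng)
    X_bal = np.vstack([X, X_syn])
    y_bal = np.concatenate([y, np.full(n_new, minority)])
    keep = ~tomek_links(X_bal, y_bal)                  # drop both members of every link
    return X_bal[keep], y_bal[keep]

rng = np.random.default_rng(1)
X = np.vstack([rng.normal(0, 1, (90, 2)), rng.normal(5, 1, (10, 2))])
y = np.array([0] * 90 + [1] * 10)
X_bal, y_bal = smote_tomek(X, y, rng=1)
print(np.bincount(y_bal))   # equal counts per class
```

Because each sample has exactly one nearest neighbour, every Tomek link removes one sample of each class, so the classes stay exactly balanced after cleaning.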

Figure 3.

Balancing dataset through SMOTE-Tomek.

These steps aim to guarantee data cleanliness and balance, thereby establishing a solid, reliable foundation for subsequent modeling and analytical tasks. By implementing a systematic preprocessing approach, we enhance model accuracy and reliability, paving the way for effective data analysis and robust model construction.

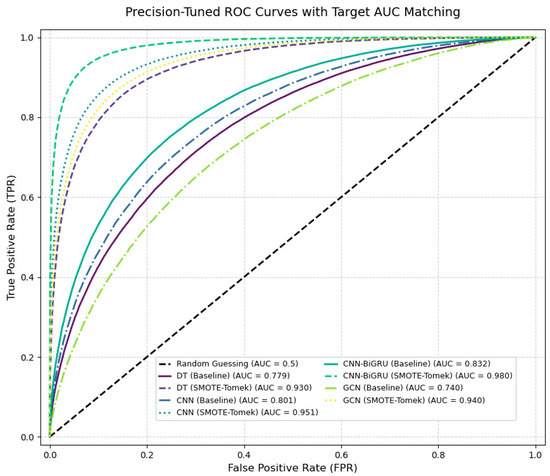

After the dataset was balanced with the SMOTE-Tomek combined sampling technique, the CNN-BiGRU model achieved an F1 score of 97.0%, indicating a strong balance between precision and recall, and an AUC-ROC of 0.980, indicating a high level of discrimination between the positive and negative classes. The experimental comparison indicates improvements of 18.0% and 0.149% when the SMOTE-Tomek sampling technique is used compared with no sampling method; the experimental results are shown in Table 2. Figure 4 shows that the other models, including DT, CNN, and GCN, also improved to varying degrees, which we attribute to the advantages of a balanced dataset for training detection models.
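For reference, the two metrics quoted above can be computed as in this sketch. F1 comes from the confusion counts. AUC-ROC is computed as the probability that a randomly chosen positive sample is scored above a randomly chosen negative one, with ties counting one half. The function names and toy labels are illustrative; scikit-learn's `f1_score` and `roc_auc_score` provide equivalent, tested implementations.

```python
import numpy as np

def f1_score(y_true, y_pred):
    """F1 = harmonic mean of precision and recall for the positive (APT) class."""
    tp = np.sum((y_pred == 1) & (y_true == 1))
    fp = np.sum((y_pred == 1) & (y_true == 0))
    fn = np.sum((y_pred == 0) & (y_true == 1))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0

def roc_auc(y_true, scores):
    """AUC as P(score of a random positive > score of a random negative); ties count 0.5."""
    pos, neg = scores[y_true == 1], scores[y_true == 0]
    diff = pos[:, None] - neg[None, :]
    return (np.sum(diff > 0) + 0.5 * np.sum(diff == 0)) / (len(pos) * len(neg))

y_true = np.array([0, 0, 1, 1])
scores = np.array([0.1, 0.4, 0.35, 0.8])   # model probabilities for class 1
y_pred = (scores >= 0.3).astype(int)       # [0, 1, 1, 1]
```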

Table 2.

The effectiveness of SMOTE-Tomek sampling technique in enhancing classification performance.

Figure 4.

AUC-ROC values comparison between different methods.

3.3. Feature Extraction

In the feature extraction module, this study uses CNN-BiGRU as the main network structure. The structure comprises a CNN layer, an attention mechanism, a BiGRU layer, and the KOA intelligent optimization algorithm, and its goal is to extract highly representative feature vectors from the vectorized fusion dataset. BiGRU was chosen over LSTM and a plain RNN because its bidirectional, gated architecture captures long-term temporal dependencies in both directions while using fewer parameters than LSTM (a GRU cell has two gates rather than the three of an LSTM cell), which reduces computational overhead.

At the CNN layer, we perform convolution operations on the 70-dimensional vectorized feature vectors and use the back-propagation algorithm to learn an initial set of network traffic features. To strengthen the influence of significant features, an attention mechanism fine-tunes the weight matrix so that these features receive greater weight. This step refines feature extraction and lets the model concentrate on the most significant features, enhancing its performance. The adjusted feature vectors are then passed as input to the BiGRU layer, which performs in-depth feature extraction between the hidden layer and the fully connected layer, capturing temporal dependencies and preserving long-term memory within network traffic data. To enhance the model’s robustness and detection accuracy, we employ the KOA optimization algorithm to fine-tune the weights between the hidden layer and the fully connected layer; this helps avoid local minima and accelerates convergence, improving performance in APT detection tasks. For input processing, we group the vectorized 70-dimensional feature vectors into time-series-based clusters. Our experiments showed that a cluster size of 50 yields the best performance. When the number of records is not divisible by 50, we use zero padding to keep the size of the input matrix fixed and to handle the edges of the input matrix. As a result, each input to the CNN layer is a 50 × 70 matrix. We labeled normal traffic as 0 and APT attack traffic as 1 and trained the model with supervised learning, enabling it to distinguish normal traffic from APT attacks.
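The grouping of flow records into 50 × 70 input matrices can be sketched as follows. The sketch assumes the records are already sorted in time order and that the zero padding is appended to the end of the last, incomplete group. The helper name `to_windows` is hypothetical.

```python
import numpy as np

def to_windows(X, window=50):
    """Group (n, d) flow records into (n_groups, window, d), zero-padding the last group."""
    n, d = X.shape
    pad = (-n) % window                          # rows needed to reach a multiple of `window`
    X = np.vstack([X, np.zeros((pad, d), dtype=X.dtype)])
    return X.reshape(-1, window, d)

flows = np.ones((120, 70))                       # 120 records with 70 features each
batches = to_windows(flows)
print(batches.shape)                             # (3, 50, 70)
```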

The CNN-BiGRU model, enhanced with KOA optimization, effectively extracts feature information from network traffic data. The structural diagram of the CNN-KOA-BiGRU model is shown in Figure 5.

Figure 5.

CNN-KOA-BiGRU model structure diagram.

3.3.1. CNN Layer

When extracting features from network traffic at the CNN layer, this study used an n × m convolution kernel, where n represents the length, which is 70, and m represents the width, which is 3. Therefore, we used a 70 × 3 convolution kernel for convolution operations. The length of the convolution kernel is the same as the dimension of a single traffic line. Through a convolution calculation, we can obtain a one-dimensional vector. The convolution operation, a fundamental process in signal and image processing, can be mathematically expressed as follows:

$$Y(i,j) = \sum_{p}\sum_{q} X(i+p,\, j+q)\, K(p,q)$$

where X represents the input image or feature map, K is the convolution kernel, and Y is the resulting feature map. When executing the second convolutional layer, we set the tensor size to 50, matching the number of network traffic groups. We then repeat the convolution calculation, moving the kernel one step to the right each time, until the current group of traffic data has been processed. After convolution, the feature vector is passed to the pooling layer. This study uses max pooling to preserve essential feature information while limiting the propagation of noise. Max pooling downsamples the high-dimensional features of the convolutional layer to reduce the data dimensionality. The max pooling operation can be expressed as follows:

$$y_{i,j} = \max_{0 \le p,\, q < s} \left( w \, x_{is+p,\; js+q} + b \right)$$

where s is the pooling window size, $x_{u,v}$ is the input feature at position $(u, v)$, w denotes the convolutional kernel weight, and b is the bias term. Finally, the ReLU function produces the output feature vector of the CNN layer, which becomes the input of the BiGRU layer. The ReLU activation function can be expressed as follows:

$$\mathrm{ReLU}(x) = \max(0, x)$$

By applying convolution and pooling operations within the CNN layer, representative features can be extracted from the network traffic data. The design and parameter settings of the convolution kernel take full account of the dimensions and structure of the traffic data. Multiple convolution calculations and pooling operations enable us to capture the local features and crucial information within the traffic data. Finally, after the activation of the ReLU function, we obtain the traffic feature vector extracted by the CNN layer, which provides input for the subsequent BiGRU layer.
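The convolution, max-pooling, and ReLU sequence of the CNN layer can be sketched in NumPy as below. Each kernel spans all 70 features and 3 consecutive flows, so sliding it over a 50 × 70 input yields a one-dimensional vector of length 48 per kernel. The number of kernels (8), the pooling window of 2, and the random weights are assumptions. The convolution is written as cross-correlation, which is how most deep learning libraries implement it.

```python
import numpy as np

def conv_full_width(window, kernels, bias):
    """Valid convolution of a (T, d) window with kernels of shape (F, k, d).

    Each kernel covers all d features and k consecutive flows, so every kernel
    produces a 1-D output of length T - k + 1."""
    T, _ = window.shape
    F, k, _ = kernels.shape
    out = np.empty((F, T - k + 1))
    for t in range(T - k + 1):
        patch = window[t:t + k]                                   # (k, d) slice of flows
        out[:, t] = np.tensordot(kernels, patch, axes=([1, 2], [0, 1])) + bias
    return out

def max_pool_1d(x, size=2):
    """Non-overlapping max pooling along the last axis; a trailing remainder is dropped."""
    F, L = x.shape
    L_trim = L - L % size
    return x[:, :L_trim].reshape(F, L_trim // size, size).max(axis=2)

def relu(x):
    return np.maximum(0.0, x)

rng = np.random.default_rng(0)
window = rng.normal(size=(50, 70))           # one 50 x 70 input matrix
kernels = rng.normal(size=(8, 3, 70))        # 8 kernels, each 3 flows x 70 features
bias = np.zeros(8)
features = relu(max_pool_1d(conv_full_width(window, kernels, bias)))
print(features.shape)                        # (8, 24)
```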

3.3.2. Attention Layer

In network traffic, traffic from the same IP address or the same TCP connection usually contains some redundant information. To better extract traffic characteristics, this study introduces an attention mechanism into the CNN model to improve the model’s recognition accuracy for APT features. By computing attention probabilities, the attention mechanism assigns different degrees of importance to different positions within a feature vector, enabling the model to focus on the most relevant information. The attention probabilities are calculated as follows:

$$e_i = u^{\top} \tanh\left(W h_i + b\right), \qquad \alpha_i = \frac{\exp(e_i)}{\sum_{j=1}^{N} \exp(e_j)}, \qquad s = \sum_{i=1}^{N} \alpha_i h_i$$

where s represents the output result, u and W represent the weight matrices of the attention mechanism, i denotes the index of the attention positions (with $h_i$ the feature vector at position i and $\alpha_i$ its attention probability), N represents the total number of positions, and b represents the bias vector of the attention mechanism. Through the attention mechanism, we can assign a different weight to each position so that the model pays more attention to important feature information. This improves the model’s perception of key features in traffic and further improves the accuracy of APT feature recognition. With the attention mechanism, the CNN model can adaptively learn feature representations for different positions, making it more flexible and accurate. The attention weights are computed from the score of each position and normalized so that the attention probabilities sum to 1. By leveraging attention, we can dynamically adjust the significance of the positions within the feature vector, thereby enhancing the overall performance of the model.
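The attention step can be sketched with a common additive formulation, which is assumed here because the text does not fix the exact form. Each position receives a score $e_i = u^\top \tanh(W h_i + b)$. The scores are normalized with a softmax so they sum to 1, and each feature vector is rescaled by its weight before being passed on. The parameter names `W`, `b`, `u` and the toy sizes are illustrative.

```python
import numpy as np

def additive_attention(H, W, b, u):
    """H: (N, d) feature vectors at N positions.

    Returns the re-weighted sequence (alpha_i * h_i per row) and the weights alpha."""
    scores = np.tanh(H @ W.T + b) @ u            # e_i = u^T tanh(W h_i + b), shape (N,)
    scores = scores - scores.max()               # stability only; softmax is shift-invariant
    alpha = np.exp(scores) / np.exp(scores).sum()
    return alpha[:, None] * H, alpha

rng = np.random.default_rng(0)
H = rng.normal(size=(24, 8))                     # e.g. 24 positions, 8 features each
W = rng.normal(size=(16, 8))                     # projection to a 16-dim attention space
b = np.zeros(16)
u = rng.normal(size=16)
weighted, alpha = additive_attention(H, W, b, u)
summary = weighted.sum(axis=0)                   # s = sum_i alpha_i h_i
```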

3.3.3. KOA-Optimized BiGRU Layer

The BiGRU model integrates forward and backward features bidirectionally, achieving deeper feature extraction and analysis. The KOA optimizes the weights of the BiGRU model to improve prediction accuracy. KOA treats the weights as the positions of planets: each weight vector (planet) adjusts its trajectory (value) according to gravitational forces derived from fitness values (loss minimization). This metaphor supports global exploration, because weights closer to optimal solutions exert stronger ‘gravity’ and guide the others toward the global optimum, while all weights are gradually adjusted through calculation and iterative updates. KOA was selected for its superior global search capability and convergence efficiency compared with traditional optimization methods. Unlike Genetic Algorithms (GA) or Particle Swarm Optimization (PSO), which may converge prematurely in high-dimensional spaces, KOA, inspired by planetary motion, updates the weights iteratively and adjusts them dynamically, thereby helping to avoid local optima. This property is critical for optimizing BiGRU networks in APT detection tasks, where complex temporal dependencies require robust weight initialization.
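The gravity metaphor can be illustrated with the simplified population search below. It is not the Kepler Optimization Algorithm of [11], whose update rules, derived from Kepler's laws of planetary motion, are more involved. It only shows the general idea: candidates are pulled toward the best solution found so far, a fitness-based "mass" scales the pull, and a shrinking random perturbation keeps exploring. The function name `attraction_search`, all constants, and the toy quadratic loss are assumptions. In the described model, the loss would be the BiGRU's training loss evaluated at a candidate weight vector.

```python
import numpy as np

def attraction_search(loss, dim, n_planets=20, iters=200, seed=0):
    """Simplified gravity-style population search (NOT the full KOA update rules)."""
    rng = np.random.default_rng(seed)
    pos = rng.uniform(-1.0, 1.0, size=(n_planets, dim))     # candidate weight vectors
    fit = np.array([loss(p) for p in pos])
    best, best_fit = pos[fit.argmin()].copy(), fit.min()    # the "sun"
    for t in range(iters):
        # Normalised "mass": 1 for the best planet, approaching 0 for the worst.
        mass = (fit.max() - fit) / (fit.max() - fit.min() + 1e-12)
        pull = rng.random((n_planets, 1)) * (0.5 + 0.5 * mass[:, None])
        noise = rng.normal(scale=1.0 - t / iters, size=pos.shape)   # shrinking exploration
        candidate = pos + pull * (best - pos) + 0.1 * noise
        cand_fit = np.array([loss(p) for p in candidate])
        improved = cand_fit < fit                           # greedy acceptance per planet
        pos[improved], fit[improved] = candidate[improved], cand_fit[improved]
        if fit.min() < best_fit:
            best, best_fit = pos[fit.argmin()].copy(), fit.min()
    return best, best_fit

def sphere(w):
    return float(np.sum((w - 0.3) ** 2))    # toy loss with its minimum at w = 0.3

best, best_loss = attraction_search(sphere, dim=5)
```

Greedy acceptance makes the best loss non-increasing, so running more iterations can only keep or improve the solution found from the same starting population.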

(a) BiGRU

In network traffic analysis, time information plays a vital role, recording the moments at which the connection between the client and the server is established, continued, and terminated. GRU performs well on sequence data, but because of its unidirectional structure it cannot fully exploit the deep features of the data. Therefore, this section combines a BiGRU model with the output vector of the CNN layer and the attention mechanism to process network traffic information more effectively. Compared with LSTM, GRU has a streamlined structure with fewer parameters. Its gating unit consists of an update gate and a reset gate, as follows:

Update gate: Similar to the combination of the forget gate and the input gate in LSTM, the update gate controls how much information is carried over between the hidden state at the previous moment and that at the current moment.

Reset gate: The reset gate adjusts the degree to which the previous hidden state is forgotten.

Current candidate hidden state: Obtained by applying the reset gate $r_t$ to the previous hidden state $h_{t-1}$, combining the result with the current input $x_t$, and passing it through the tanh activation function.

Final hidden state: A selective update, controlled by the update gate, between the current candidate hidden state and the previous hidden state.
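The four components above correspond to the standard GRU formulation. One common way of writing it is shown below for reference; the input weights $W_z, W_r, W_h$, recurrent weights $U_z, U_r, U_h$, and biases $b_z, b_r, b_h$ are notation assumed here rather than taken from the original equations, and some references swap the roles of $z_t$ and $1 - z_t$ in the last line.

```latex
\begin{aligned}
z_t &= \sigma\left(W_z x_t + U_z h_{t-1} + b_z\right) \\
r_t &= \sigma\left(W_r x_t + U_r h_{t-1} + b_r\right) \\
\tilde{h}_t &= \tanh\left(W_h x_t + U_h \left(r_t * h_{t-1}\right) + b_h\right) \\
h_t &= \left(1 - z_t\right) * h_{t-1} + z_t * \tilde{h}_t
\end{aligned}
```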

where σ denotes the sigmoid activation function and * denotes element-wise multiplication. Because the sigmoid function is used as the activation of the gating signals, each gating signal takes values between 0 and 1. A value closer to 1 means that more information is passed on; a value closer to 0 means that more information is forgotten.

In the BiGRU model, two GRU units work together: one extracts forward features and the other extracts backward features, and together they determine the output at the current moment. The BiGRU output at time t is the concatenation of the forward GRU output $\overrightarrow{h}_t$ and the backward GRU output $\overleftarrow{h}_t$, that is, $h_t = [\overrightarrow{h}_t; \overleftarrow{h}_t]$.

Through this bidirectional structure, the network can more deeply integrate and learn from the traffic information extracted by the CNN layer, yielding richer features. The gated structure also balances the information flow and helps alleviate the gradient vanishing and explosion problems of plain recurrent networks, enabling more comprehensive processing and analysis of network traffic data.

In BiGRU, the update gate and reset gate complement each other. The update gate decides how much of the previous state to retain and how much new information to admit, discarding less important details. The reset gate controls how much of the previous hidden state is combined with the current input; the result is compressed by the tanh function to form the candidate state, which is then used to update the hidden state. Through this mechanism, the network can selectively retain memory and identify and forget unnecessary information, which keeps the system stable.
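As a minimal sketch of the structure described above, the NumPy code below runs one GRU over the sequence in each direction and concatenates the two hidden states at every time step. It is an illustration under stated assumptions, not the authors' implementation: the parameters are random rather than trained, the input is a hypothetical sequence of 70-dimensional feature vectors standing in for the CNN output, the attention layer is omitted, and `init_gru`, `gru_step`, `run_gru`, and `bigru` are illustrative names.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def init_gru(input_dim, hidden_dim, rng):
    """Random (untrained) parameters for one GRU direction; illustrative only."""
    def mat(rows, cols):
        return rng.normal(0.0, 0.1, size=(rows, cols))
    params = {}
    for gate in ("z", "r", "h"):  # update gate, reset gate, candidate state
        params["W" + gate] = mat(hidden_dim, input_dim)
        params["U" + gate] = mat(hidden_dim, hidden_dim)
        params["b" + gate] = np.zeros(hidden_dim)
    return params

def gru_step(p, x_t, h_prev):
    """One GRU time step, following the gate definitions in the text."""
    z = sigmoid(p["Wz"] @ x_t + p["Uz"] @ h_prev + p["bz"])             # update gate
    r = sigmoid(p["Wr"] @ x_t + p["Ur"] @ h_prev + p["br"])             # reset gate
    h_cand = np.tanh(p["Wh"] @ x_t + p["Uh"] @ (r * h_prev) + p["bh"])  # candidate state
    return (1.0 - z) * h_prev + z * h_cand                              # final hidden state

def run_gru(p, xs):
    """Run a GRU over a (T, input_dim) sequence; return all hidden states (T, hidden_dim)."""
    h = np.zeros_like(p["bz"])
    states = []
    for x_t in xs:
        h = gru_step(p, x_t, h)
        states.append(h)
    return np.stack(states)

def bigru(p_fwd, p_bwd, xs):
    """Concatenate forward and backward hidden states at every time step."""
    h_fwd = run_gru(p_fwd, xs)
    h_bwd = run_gru(p_bwd, xs[::-1])[::-1]  # process reversed sequence, then restore time order
    return np.concatenate([h_fwd, h_bwd], axis=1)

rng = np.random.default_rng(0)
xs = rng.normal(size=(10, 70))  # hypothetical: 10 time steps of 70 features
p_fwd, p_bwd = init_gru(70, 64, rng), init_gru(70, 64, rng)
out = bigru(p_fwd, p_bwd, xs)
print(out.shape)  # (10, 128)
```

With 64 hidden units per direction (the hidden_dim value the experiments later select), each time step yields a 128-dimensional representation; the last 64 entries at the final step come from the backward GRU having seen only that step.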

(b) KOA intelligent optimization algorithm

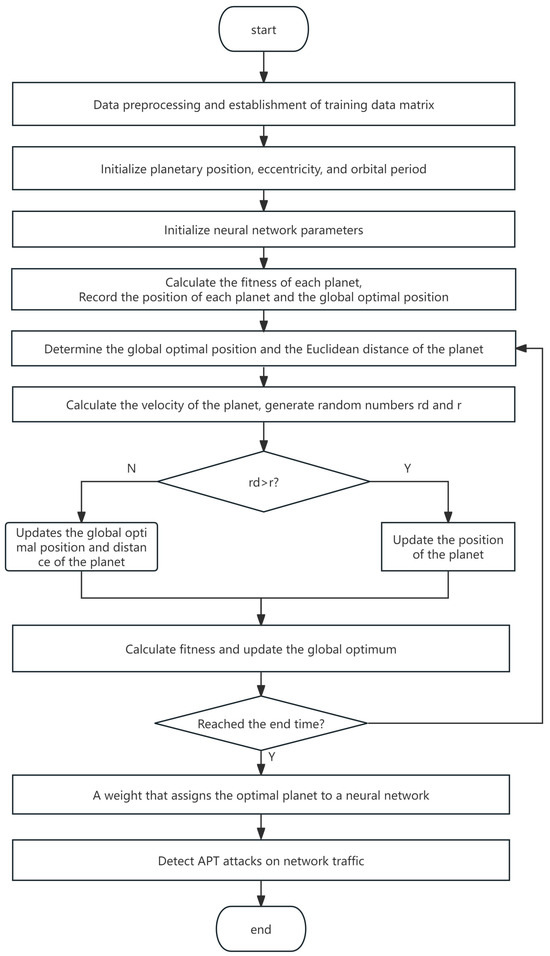

Traditional BiGRU models usually rely on stochastic gradient descent (SGD) to optimize the loss function and train the weights. However, SGD can cause optimization fluctuations and may converge to local minima rather than the global optimum, reducing prediction accuracy. To improve the accuracy of the BiGRU model, this study uses the KOA. In KOA, the weights of the BiGRU network are conceptualized as planets whose positions are updated iteratively according to their fitness in search of the global optimal solution. The flowchart of KOA is shown in Figure 6.

Figure 6.

Kepler algorithm flow chart.

1. Initialize the planet population: First, construct a vector of size 1 × n for each planet, where n is the dimension of the network traffic data. For network traffic data of dimension 70, each planet is a 1 × 70 vector. Then, stack multiple such vectors into a matrix P to complete the initialization.

2. Calculate the fitness value of each planet: Compute the fitness value f(p_i) for each planet p_i and record the global best position. The fitness function f(p) can be defined as the loss function of the BiGRU model. Then, compute the Euclidean distance r between the global best position Pbest and each planet.

Here, p represents the model weights, and the loss compares the actual values with the predicted values. Next, calculate the velocity V of each planet and generate random numbers to compare with r. Based on the comparison result, adjust the planet’s position and recalculate its fitness value. Then update the global best position and check whether a planet has reached it. This process is repeated to continuously update the planets’ positions and fitness values.

Here, α, β and γ are adjustment parameters, and the remaining terms represent the acceleration and a random disturbance.

3. Iterative update: Iteration terminates once most planets have updated their positions and their fitness values meet the termination condition. The weights obtained at that point are taken as the optimal solution.
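The three steps above can be illustrated with a deliberately simplified, Kepler-inspired population search in NumPy. This is a sketch under explicit assumptions, not the full KOA of Abdel-Basset et al. [11] and not the authors' code: it keeps only the structure described here (a population matrix P, a fitness function, the distance r to the best position, a random comparison that selects the move, iterative updates, and a termination test), replaces KOA's orbital-velocity and gravitational-force equations with a simple attraction-plus-disturbance rule, uses a fixed 0.5 threshold for the random comparison, and uses the mean squared error of a toy linear model as a hypothetical stand-in for the BiGRU loss. `kepler_inspired_search` and `mse_loss` are illustrative names.

```python
import numpy as np

def kepler_inspired_search(f, dim, n_planets=30, iters=200, tol=1e-8, seed=0):
    """Simplified population search mirroring steps 1-3 (not the full KOA update equations)."""
    rng = np.random.default_rng(seed)
    # Step 1: population matrix P, one candidate weight vector ("planet") per row.
    P = rng.uniform(-1.0, 1.0, size=(n_planets, dim))
    fit = np.array([f(p) for p in P])
    history = [float(fit.min())]
    for t in range(iters):
        # Step 2: current best position and each planet's Euclidean distance r to it.
        best = P[fit.argmin()].copy()
        r = np.linalg.norm(best - P, axis=1, keepdims=True)
        scale = 0.1 * (1.0 - t / iters)              # random disturbance shrinks over time
        noise = rng.normal(size=P.shape)
        # Move A: attraction toward the best position plus a random disturbance.
        toward_best = P + rng.random((n_planets, 1)) * (best - P) + scale * noise
        # Move B: local search around the best position, wider for distant planets.
        near_best = best + scale * (r + 1.0) * noise / np.sqrt(dim)
        use_a = rng.random((n_planets, 1)) < 0.5     # random comparison selects the move
        cand = np.where(use_a, toward_best, near_best)
        cand_fit = np.array([f(c) for c in cand])
        improved = cand_fit < fit                    # keep a move only if fitness improves
        P[improved] = cand[improved]
        fit[improved] = cand_fit[improved]
        history.append(float(fit.min()))
        # Step 3: stop once the fitness meets the termination condition.
        if history[-1] < tol:
            break
    i = int(fit.argmin())
    return P[i].copy(), float(fit[i]), history

# Toy fitness: MSE of a linear model, a hypothetical stand-in for the BiGRU loss.
data_rng = np.random.default_rng(1)
X = data_rng.normal(size=(200, 5))
y = X @ np.array([1.0, -2.0, 0.5, 3.0, -1.0])

def mse_loss(w):
    return float(np.mean((X @ w - y) ** 2))

w_best, loss_best, history = kepler_inspired_search(mse_loss, dim=5)
print(history[0], loss_best)  # loss of the best initial planet vs. final best loss
```

Because a planet only moves when its fitness improves, the best loss in `history` never increases; in a real setting each fitness evaluation would require a forward pass of the BiGRU over training data, which dominates the cost.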

3.4. Classification

Statistical anomaly detection methods are used to classify network traffic into two categories: normal traffic and APT attack traffic. First, all APT attack traffic instances are labeled ‘1’, denoting abnormal traffic, and the processed normal traffic is labeled ‘0’. The processed data are then fed into the CNN-BiGRU model, whose architecture extracts features and is trained on them. Finally, the softmax function normalizes the model’s output, converting the raw scores into probabilities. If the predicted probability of the ‘abnormal’ class exceeds a threshold of 0.5, the traffic is classified as abnormal; otherwise, it is classified as normal.
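For two classes, the softmax-and-threshold rule described above can be sketched as follows. The logits are hypothetical raw scores in column order (normal ‘0’, abnormal ‘1’), matching the labels above; `softmax` and `classify` are illustrative helper names, not the authors' code.

```python
import numpy as np

def softmax(logits):
    """Row-wise softmax with max-subtraction for numerical stability."""
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def classify(logits, threshold=0.5):
    """Return 1 (abnormal/APT) when P(class 1) exceeds the threshold, else 0 (normal)."""
    probs = softmax(logits)
    return (probs[:, 1] > threshold).astype(int)

logits = np.array([[2.0, -1.0],   # favours normal (0)
                   [0.2, 1.5],    # favours abnormal (1)
                   [0.0, 0.0]])   # tie: P = 0.5, not above the threshold
print(classify(logits))  # [0 1 0]
```

With exactly two classes, thresholding the abnormal probability at 0.5 amounts to choosing the class with the larger logit; other thresholds trade precision against recall.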

4. Evaluation

4.1. Datasets

This paper uses the CSE-CIC-IDS2018 dataset for experimental analysis. The dataset is widely used in machine learning and deep learning research on APT and intrusion detection, and it covers both traditional and newer attack scenarios [24]. Although it lacks the most recent APT attack samples, it effectively emulates the characteristic behavior of APT attacks in a cloud network environment. Because the data were generated in a controlled test environment, they do not directly reflect real-world APT occurrences. In the initial phase, before attack vectors were introduced into the system, traffic was captured to provide a baseline reference for benign network traffic. In the subsequent stage, the dataset creators captured APT attack traffic representing different stages of the APT attack life cycle. APT behavior is characterized by persistence, slowness, and limited mobility. The CSE-CIC-IDS2018 dataset supports the identification of APT attacks through network behavior anomaly detection (NBAD). It was built in a simulated large-scale network environment, covers 10 days of network traffic activity, and contains a total of 16.23 million records [25]. However, its class distribution is imbalanced: only about 17% of the events are traffic anomalies. The dataset was created on the Amazon Web Services (AWS) platform.
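The paragraph above notes that only about 17% of events are anomalous; as summarized in the abstract, the paper counters this imbalance by combining SMOTE oversampling with Tomek-link cleaning. The NumPy sketch below illustrates that idea on toy data. It is an illustration under stated assumptions, not the authors' preprocessing: it oversamples the minority class to parity, removes only the majority-class member of each Tomek link (some implementations remove both), and builds a full distance matrix, so it only suits small arrays. All function names are hypothetical; in practice a library implementation such as imbalanced-learn's `SMOTETomek` would normally be used.

```python
import numpy as np

def pairwise_dist(A):
    """Euclidean distance matrix with the diagonal masked out (O(n^2) memory, toy sizes only)."""
    d = np.linalg.norm(A[:, None, :] - A[None, :, :], axis=-1)
    np.fill_diagonal(d, np.inf)
    return d

def smote(X_min, n_new, k=5, seed=0):
    """SMOTE: interpolate between minority samples and one of their k nearest minority neighbours."""
    rng = np.random.default_rng(seed)
    k = min(k, len(X_min) - 1)
    nn = np.argsort(pairwise_dist(X_min), axis=1)[:, :k]
    base = rng.integers(0, len(X_min), size=n_new)
    neigh = nn[base, rng.integers(0, k, size=n_new)]
    gap = rng.random((n_new, 1))  # interpolation factor in [0, 1)
    return X_min[base] + gap * (X_min[neigh] - X_min[base])

def tomek_links(X, y):
    """Mask of samples in a Tomek link: mutual nearest neighbours with different labels."""
    nn = pairwise_dist(X).argmin(axis=1)
    mutual = nn[nn] == np.arange(len(X))
    return mutual & (y != y[nn])

def smote_tomek(X, y, minority=1, seed=0):
    """Oversample the minority class to parity, then drop majority members of Tomek links."""
    X_min = X[y == minority]
    n_new = max(0, int((y != minority).sum()) - len(X_min))
    X_res = np.vstack([X, smote(X_min, n_new, seed=seed)])
    y_res = np.concatenate([y, np.full(n_new, minority)])
    keep = ~(tomek_links(X_res, y_res) & (y_res != minority))
    return X_res[keep], y_res[keep]

rng = np.random.default_rng(42)
X = np.vstack([rng.normal(0.0, 1.0, size=(83, 4)), rng.normal(1.5, 1.0, size=(17, 4))])
y = np.array([0] * 83 + [1] * 17)  # toy data, 17% minority as in the dataset description
X_res, y_res = smote_tomek(X, y)
print(np.bincount(y_res))  # [majority count after cleaning, 83]
```

SMOTE places synthetic points on line segments between existing minority samples, and the Tomek step then thins majority samples that sit right against minority ones, which sharpens the class boundary.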

The CSE-CIC-IDS2018 dataset, created jointly by the Canadian Institute for Cybersecurity (CIC) and the Communications Security Establishment (CSE), encompasses a comprehensive range of attack scenarios, including infiltration, web attacks, distributed denial of service (DDoS), denial of service (DoS), botnets, brute force, and Heartbleed. In addition, the dataset describes user behavior characteristics such as payload size, payload pattern, packet flow, packet size distribution, and protocol distribution based on request time [26]. Using the CICFlowMeter traffic analysis tool, 80 features are extracted from the network traffic, comprising 79 independent variables and one label (target variable).

4.2. Metrics

This section defines APT traffic as positive samples and uses the confusion matrix to calculate four evaluation metrics for the CNN-KOA-BiGRU deep learning model: Accuracy, Precision, Recall, and F1 score. The confusion matrix is shown in Table 3.

Table 3.

Confusion matrix.

The accuracy of the CNN-KOA-BiGRU model signifies the percentage of accurate predictions it makes on the entire dataset, serving as an indicator of the model’s predictive capabilities.

The precision of an APT attack detection system reflects the proportion of correctly identified real attacks within the total number of detected attack samples.

Recall, a measure of the effectiveness of an intrusion detection system, represents the proportion of actual attacks that are successfully identified by the system.

The F1 score is the harmonic mean of precision and recall and therefore captures the balance between them. It provides a comprehensive measure of the model’s performance, where a score of 1 denotes perfect performance and 0 indicates the worst possible performance.
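The four metrics can be computed directly from the confusion-matrix counts, with APT traffic (label 1) as the positive class. The helper names below are illustrative, and mapping a zero denominator to 0.0 is one common convention rather than something the paper specifies. The last line checks the reported figures: the harmonic mean of the reported precision (97.03%) and recall (98.76%) comes to about 97.89%, matching the reported F1 score.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, FP, FN, TN with APT traffic (label 1) as the positive class."""
    tp = fp = fn = tn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive:
            if t == positive:
                tp += 1
            else:
                fp += 1
        elif t == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn

def safe_div(num, den):
    return num / den if den else 0.0

def f1_from(precision, recall):
    """Harmonic mean of precision and recall."""
    return safe_div(2 * precision * recall, precision + recall)

def evaluate(tp, fp, fn, tn):
    precision = safe_div(tp, tp + fp)  # TP / (TP + FP)
    recall = safe_div(tp, tp + fn)     # TP / (TP + FN)
    return {
        "accuracy": safe_div(tp + tn, tp + fp + fn + tn),  # (TP + TN) / all samples
        "precision": precision,
        "recall": recall,
        "f1": f1_from(precision, recall),
    }

y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 1, 0, 0, 0, 1, 0, 1]
counts = confusion_counts(y_true, y_pred)
print(counts)             # (3, 1, 1, 3)
print(evaluate(*counts))  # all four metrics equal 0.75 here
# Consistency check on the reported figures: P = 97.03%, R = 98.76%
print(round(f1_from(0.9703, 0.9876), 4))  # 0.9789
```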

4.3. Parameter Experiments

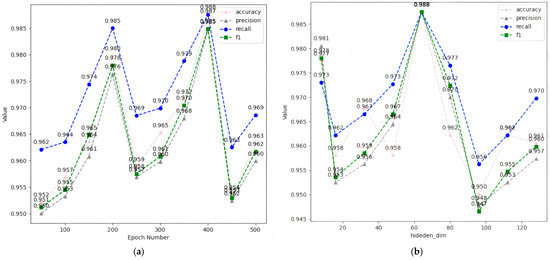

We designed two experiments to evaluate the impact of two key parameters, the number of training epochs (epoch_num) and the number of hidden units (hidden_dim), on model performance; Figure 7 shows the results. In Figure 7a, the x-axis represents the number of epochs, i.e., the number of complete forward- and backward-propagation passes the neural network makes over the training data. This parameter has a significant impact on the training process and the resulting model. We observed variations in model performance by adjusting the number of epochs while keeping other parameters constant. As the number of epochs rose from 50 to 200, the model’s performance steadily improved. When the number of epochs increased from 200 to 250, performance dipped slightly, then rebounded as the number of epochs reached 400. At 400 epochs, the model reached its best performance, with an accuracy of 98.7%, a precision of 98.4%, a recall of 98.8%, and an F1 score of 98.5%, marking its best training state. Beyond 400 epochs, performance declines below this optimal level.

Figure 7.

Model performance comparison of CNN-KOA-BiGRU under different parameters. (a) Performance comparison based on epoch_num. (b) Performance comparison based on hidden_dim.

Figure 7b shows the experimental results of the parameter hidden_dim (number of hidden units). This parameter significantly affects the feature learning ability of the model. Larger hidden dimensions can capture richer features. With other parameters kept constant, we observe that when increasing hidden_dim from 8 to 16, the accuracy, precision, recall and F1 score of the model all decrease. However, as hidden_dim increases from 16 to 64, the performance gradually improves. When hidden_dim = 64, the model reaches the highest performance, with precision, recall, and F1 score reaching 98.8%. However, when the hidden dimensions are increased from 64 to 96, the model performance decreases and recovers slightly at 128 dimensions, but the overall performance is still lower than the optimal level of 64 dimensions.

In summary, as detailed in Table 4, we analyzed the impact of six hyperparameters on the model’s performance, adjusting some parameters while keeping the others constant. The resulting optimal configuration is 400 training epochs, 64 hidden units (hidden_dim), a learning rate of 0.0001, a dropout rate of 0.5, a batch size of 50, and the Adam optimizer. This choice also took into account recent findings on the Adam optimizer’s epsilon parameter, which matters for numerical stability, especially as batch sizes grow. The dataset was partitioned into 70% for training, 15% for validation, and 15% for testing, using stratified sampling to maintain the class distribution in each subset, a common practice for evaluating model performance and generalization. For the baseline models (DT, CNN, GCN), we adopted hyperparameters from their original implementations, including a learning rate of 0.001, a batch size of 32, and the Adam optimizer.
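The 70/15/15 stratified partition and the selected configuration can be sketched as follows. The `HYPERPARAMS` dictionary simply restates the values reported above; `stratified_split` is an illustrative helper (not the authors' code) that shuffles each class separately so that every subset keeps roughly the original class ratio. Applying scikit-learn's `train_test_split` twice with its `stratify` argument is a common library alternative.

```python
import numpy as np

# Configuration reported in the text, restated for reference.
HYPERPARAMS = {
    "epochs": 400,
    "hidden_dim": 64,
    "learning_rate": 1e-4,
    "dropout": 0.5,
    "batch_size": 50,
    "optimizer": "Adam",
}

def stratified_split(y, fractions=(0.70, 0.15, 0.15), seed=0):
    """Return (train, val, test) index arrays, splitting each class separately.

    The test share is whatever remains after the train and validation shares.
    """
    rng = np.random.default_rng(seed)
    parts = ([], [], [])
    for label in np.unique(y):
        idx = rng.permutation(np.flatnonzero(y == label))
        n_train = int(round(fractions[0] * len(idx)))
        n_val = int(round(fractions[1] * len(idx)))
        parts[0].append(idx[:n_train])
        parts[1].append(idx[n_train:n_train + n_val])
        parts[2].append(idx[n_train + n_val:])  # remainder goes to the test set
    return tuple(np.sort(np.concatenate(p)) for p in parts)

y = np.array([0] * 1000 + [1] * 200)  # toy labels, about 17% positive
train, val, test = stratified_split(y)
print(len(train), len(val), len(test))  # 840 180 180
```

Splitting per class is what keeps the roughly 17% anomaly share identical across the three subsets, so validation and test scores are not distorted by a subset that happens to contain fewer attacks.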

Table 4.

Settings for experimental hyperparameters.

4.4. Comparative Experiments

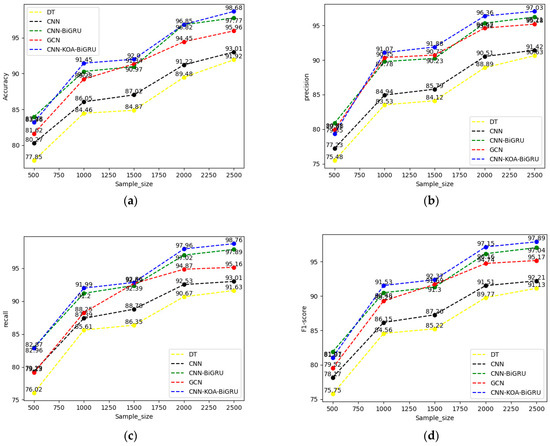

To validate the efficacy of the proposed method, we conducted comparative experiments with several state-of-the-art models, namely decision trees (DT) [15], Convolutional Neural Networks (CNN) [18], CNN-BiGRU [19], and Graph Convolutional Networks (GCN) [21], alongside the proposed CNN-KOA-BiGRU model. These models were chosen based on their demonstrated performance in recent studies and their relevance to APT detection. We divided the dataset into five subsets of different proportions (20%, 40%, 60%, 80%, and 100%) and evaluated the models on accuracy, precision, recall, and F1 score. The results showed that the traditional machine learning model (DT) performed less well than deep learning models such as CNN. We also compared the CNN-BiGRU model with the CNN-KOA-BiGRU model; the latter performed better in accuracy, precision, recall, F1 score, and convergence speed, which verifies the effectiveness of the KOA on the CNN-BiGRU model and fulfills the expected experimental objectives. These results indicate that the CNN-KOA-BiGRU model is clearly superior in detecting APT attacks, as detailed in Figure 8.

Figure 8.

A comparison of attack detection experiments with different sample sizes. (a) Comparison of accuracy results. (b) Comparison of precision results. (c) Comparison of recall results. (d) Comparison of F1 score results.

Figure 8a illustrates how the classification accuracy of the five models (DT, CNN, CNN-BiGRU, GCN, and CNN-KOA-BiGRU) changes with the number of samples. As the sample size increases, the accuracy of each model tends to improve significantly, which suggests that a larger dataset positively influences the performance of machine learning models. DT performed the worst, with accuracy increasing gradually to a peak of only 91.92%, whereas CNN slightly outperformed DT, reaching a maximum accuracy of 93.01%. CNN-BiGRU and GCN are significantly better than the first two; once the sample size exceeds 1000, CNN-BiGRU is slightly ahead. CNN-KOA-BiGRU consistently performs best, leading the other models at all sample sizes and reaching an accuracy of 98.68% when the sample size reaches 2500. Overall, CNN-KOA-BiGRU demonstrates excellent modeling capability, especially with large sample sizes, while traditional models (such as DT and CNN) have limited performance on complex tasks.

Figure 8b depicts how the precision of the five models changes with the number of samples. Overall, precision increases with sample size for every model, confirming the importance of data size for performance. DT performed the worst, with a maximum precision of only 90.63%; CNN performed slightly better, but the improvement was limited. CNN-BiGRU and GCN perform significantly better than the first two, with CNN-BiGRU slightly ahead at large sample sizes. CNN-KOA-BiGRU performs well throughout: when the sample size reaches 2500, its precision reaches 97.03%, significantly better than the other models. This shows that CNN-KOA-BiGRU has excellent stability and clear advantages at large sample sizes, while DT and CNN perform relatively weakly.

Figure 8c shows how the recall of the five models changes with the number of samples. As the sample size increases, recall generally rises for every model, but the differences between models are significant. The decision tree (DT) model peaks at a recall of just 91.63%, indicating limited ability to identify all relevant instances. CNN is somewhat better than DT in this respect, but its improvement in recall is also limited. The recall rates of CNN-BiGRU and GCN are significantly higher than those of the first two, with CNN-BiGRU slightly ahead at large sample sizes. The CNN-KOA-BiGRU model consistently outperforms the other models, reaching a recall of 98.76% when the sample size reaches 2500. This high recall indicates that the model identifies nearly all positive instances, missing only a small fraction of true positives. For example, if 2500 actual APT attack samples were present, a recall of 98.76% would mean that about 2469 of them are detected and only 31 are missed. CNN-KOA-BiGRU therefore performs excellently on recall, especially in large-sample scenarios.

Figure 8d shows how the F1 score of the five models changes with the number of samples. As the sample size increases, the F1 score of every model improves, but the magnitude and final performance vary. The CNN-KOA-BiGRU model performs robustly across sample sizes, reaching an F1 score of 97.89% when the sample size reaches 2500. The CNN-BiGRU model follows closely with a final F1 score of 96.76%, outperforming the GCN and CNN models, whose final F1 scores are 95.17% and 92.21%, respectively. DT performed poorly overall, with a highest F1 score of only 91.13%. Studies indicate that deep learning architectures, particularly those integrating convolutional and recurrent neural networks, tend to outperform traditional decision tree models, especially on complex tasks.

Experimental results demonstrate that the proposed method outperforms the other models in classification, achieving an accuracy of 98.68%, precision of 97.03%, recall of 98.76%, and F1 score of 97.89%. These metrics indicate balanced performance that surpasses the CNN, CNN-BiGRU, and GCN models. Traditional machine learning models such as DT are prone to overfitting because a single tree relies on a fixed set of hard decision rules, and they may produce a high false positive rate on new data, reducing overall detection accuracy. Using CNN alone typically yields weaker classification than the CNN-BiGRU and CNN-KOA-BiGRU models, mainly because CNN only extracts features from the large volume of network traffic data in the initial stage and does not adequately capture sequence dependencies. The CNN-BiGRU model improves both feature extraction and sequence modeling by combining a convolutional neural network with a bidirectional GRU network, markedly enhancing classification performance. Building on this, the CNN-KOA-BiGRU model introduces the KOA to optimize the weight matrix distribution, mitigating the risk of converging to local optima and further improving the robustness and stability of detection. As a result, the method shows clear advantages in accuracy, precision, recall, and F1 score, especially in complex task scenarios.

To demonstrate the effectiveness of the attention mechanism and the KOA intelligent optimization algorithm, we conducted a complete ablation experiment on the CSE-CIC-IDS2018 dataset; the results are shown in Table 5 and Table 6. Four variants were compared: a model with neither the attention mechanism nor KOA, a model with only the attention mechanism, a model with only KOA, and a model with both. Relative to the model with neither component, adding only the attention mechanism improved accuracy, precision, recall, and F1 score by 0.35%, 0.64%, 0.41%, and 0.52%, respectively, which demonstrates the effectiveness of the attention mechanism. Optimizing the model with only KOA improved accuracy, precision, recall, and F1 score by 0.58%, 0.71%, 0.66%, and 0.68%, respectively, which demonstrates the effectiveness of the KOA. Our full model improved the F1 score by a further 0.33% and 0.17% compared with the attention-only and KOA-only models, respectively. In future work, we will continue to study the integration of intelligent algorithms, building on recent advances in machine learning, deep learning, and reinforcement learning.

Table 5.

The effectiveness of attention mechanism and algorithm.

Table 6.

During the model training.

In terms of training efficiency, the CNN-KOA-BiGRU model also has notable advantages, with markedly shorter training time than the benchmark models. On the CSE-CIC-IDS2018 dataset (NVIDIA RTX 3090 GPU), the proposed model required an average of 2.1 h of training, compared with 3.5 h for CNN-BiGRU and 4.2 h for GCN. KOA’s efficient weight update mechanism reduced the number of iterations by 30%, indicating a favorable trade-off between accuracy and computational cost. We attribute this to KOA’s ability to locate globally optimal parameters efficiently, which substantially accelerates convergence. As the data volume grows, the time difference becomes increasingly pronounced, suggesting that the approach scales well to large datasets.
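In relative terms, the reported average training times correspond to the following reductions (plain arithmetic on the figures above, not an additional measurement):

```latex
\begin{aligned}
\text{vs. CNN-BiGRU:}\quad & \frac{3.5\,\text{h} - 2.1\,\text{h}}{3.5\,\text{h}} = 0.40 \quad (40\%\ \text{less training time}) \\
\text{vs. GCN:}\quad & \frac{4.2\,\text{h} - 2.1\,\text{h}}{4.2\,\text{h}} = 0.50 \quad (50\%\ \text{less training time})
\end{aligned}
```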

In summary, the CNN-KOA-BiGRU model shows significant performance advantages in APT attack detection. By combining the efficient feature extraction of the convolutional neural network, the temporal dependency modeling of BiGRU, and the global optimization capability of KOA, the model not only excels in accuracy, precision, recall, and F1 score but also converges faster. This suggests that the CNN-KOA-BiGRU model has strong potential in practical applications, offering an efficient and reliable solution for detecting complex network attacks. Although KOA has shown promising results in our experiments, its efficiency in APT detection tasks should be further validated by comparing it with other optimization algorithms, such as Genetic Algorithms and Particle Swarm Optimization.

5. Conclusions

To enhance the precision of APT detection, we introduce a novel deep learning model, CNN-KOA-BiGRU, which integrates CNN and BiGRU layers with the KOA optimization algorithm for high-accuracy classification. Our method consists of three key stages: data preparation, feature extraction, and attack detection. In the data preparation stage, we employed stratified sampling, data cleansing, and the combined SMOTE-Tomek method to maintain data balance and integrity. In the feature extraction stage, the CNN-KOA-BiGRU model extracts features such as network flow duration and number of transmitted packets from the network traffic, treated as time series, to form feature vectors. These feature vectors are then used in the classification module for comparison against labeled APT vectors. The experimental results show that the method, which integrates CNN, BiGRU, and KOA, achieves an accuracy of 98.68%, precision of 97.03%, recall of 98.76%, and F1 score of 97.89%, a significant improvement over the CNN, CNN-BiGRU, and GCN models. In terms of accuracy, the model improves on CNN by 5.67%, on CNN-BiGRU by 0.91%, and on GCN by 2.72%. Its recall and F1 score are also significantly higher than those of the other models.

In future research, we plan to deepen and extend the current results in three directions. First, to improve the adaptability and accuracy of the method, we will train and validate the classifier on more diverse APT traffic datasets, focusing on the performance limits of the SMOTE-Tomek technique under extreme class imbalance (e.g., rare APT attack types), since its effectiveness beyond the CSE-CIC-IDS2018 dataset still needs to be verified. Second, to meet practical application requirements, we plan to deploy the detection scheme in a large enterprise network to systematically evaluate its real-time APT traffic detection capability and performance, and to test how well the framework transfers to emerging APT attack and defense scenarios such as the Internet of Things and cloud environments. Finally, at the algorithm level, we will conduct comparative studies that introduce genetic algorithms, particle swarm optimization, and other intelligent optimization algorithms, with a multi-dimensional performance comparison against KOA, to comprehensively validate and improve the detection model. This research trajectory aims to make APT detection more accurate, general, and practical.

Author Contributions

Conceptualization, M.S. and G.H.; methodology, M.S.; software, M.S.; validation, C.Z., M.S. and G.H.; formal analysis, C.Z. and G.H.; investigation, G.H.; data curation, C.Z. and M.S.; original draft preparation by M.S.; review and editing by C.Z. and G.H.; visualization, M.S.; supervision, C.Z. and G.H.; funding acquisition, G.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partly funded by the Natural Science Foundation of Guangdong Province, China (Grant No. 2022A1515010417), a Key Project of Shenzhen Municipality (JSGG20211029095545002), the Teaching Innovation Team of SZIIT, as well as the Education and Teaching Reform Research Project of Guangdong Higher Vocational Electronic Information and Communication Teaching Committee.

Data Availability Statement

The preprocessing scripts and model implementation code are available at: [https://github.com/En809/CNN-KOA-BiGRU/tree/main (accessed on 14 February 2025)]. Due to licensing restrictions, the CSE-CIC-IDS2018 dataset must be downloaded directly from the official source [23]. The CSE-CIC-IDS2018 dataset is available at: https://www.unb.ca/cic/datasets/ids-2018.html (accessed on 14 February 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Alshamrani, A.; Myneni, S.; Chowdhary, A.; Huang, D. A Survey on Advanced Persistent Threats: Techniques, Solutions, Challenges, and Research Opportunities. IEEE Commun. Surv. Tutor. 2019, 21, 1851–1877. [Google Scholar] [CrossRef]

- Megherbi, W.; Kiouche, A.E.; Haddad, M.; Seba, H. Detection of advanced persistent threats using hashing and graph-based learning on streaming data. Appl. Intell. 2024, 54, 5879–5890. [Google Scholar] [CrossRef]

- Gharehchopogh, F.S. An Improved Harris Hawks Optimization Algorithm with Multi-Strategy for Community Detection in Social Network. J. Bionic Eng. 2023, 20, 1175–1197. [Google Scholar] [CrossRef]

- CGTN. NSA-Affiliated Staff Behind Chinese University Cyberattack Identified: New Evidence. Available online: https://news.cgtn.com/news/2023-09-14/NSA-affiliated-staff-behind-Chinese-university-cyberattack-identified-1n5Et3TjCzS/index.html (accessed on 24 April 2025).

- Qi’anxin Group. APT Threat in Mid-Term of 2023. Available online: https://www.qianxin.com/threat/reportdetail?report_id=295 (accessed on 24 April 2025).

- Li, L.; Chen, W. ConGraph: Advanced Persistent Threat Detection Method Based on Provenance Graph Combined with Process Context in Cyber-Physical System Environment. Electronics 2024, 13, 945. [Google Scholar] [CrossRef]

- Yang, L.-X.; Li, P.; Yang, X.; Tang, Y.Y. A Risk Management Approach to Defending Against the Advanced Persistent Threat. IEEE Trans. Dependable Secur. Comput. 2020, 17, 1163–1172. [Google Scholar] [CrossRef]

- Zimba, A.; Chen, H.; Wang, Z. Bayesian network based weighted APT attack paths modeling in cloud computing. Future Gener. Comput. Syst. 2019, 96, 525–537. [Google Scholar] [CrossRef]

- Khoury, J.; Klisura, Đ.; Zanddizari, H.; De La Torre Parra, G.; Najafirad, P.; Bou-Harb, E. Jbeil: Temporal Graph-Based Inductive Learning to Infer Lateral Movement in Evolving Enterprise Networks. In Proceedings of the 2024 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 19–23 May 2024; pp. 3644–3660. [Google Scholar] [CrossRef]

- Eke, H.N.; Petrovski, A. Advanced Persistent Threats Detection based on Deep Learning Approach. In Proceedings of the 2023 IEEE 6th International Conference on Industrial Cyber-Physical Systems (ICPS), Wuhan, China, 8–11 May 2023; pp. 1–10. [Google Scholar] [CrossRef]

- Abdel-Basset, M.; Mohamed, R.; Azeem, S.A.A.; Jameel, M.; Abouhawwash, M. Kepler optimization algorithm: A new metaheuristic algorithm inspired by Kepler’s laws of planetary motion. Knowl.-Based Syst. 2023, 268, 110454. [Google Scholar] [CrossRef]

- Liu, Y.; Zhang, M.; Li, D.; Jee, K.; Li, Z.; Wu, Z.; Rhee, J.; Mittal, P. Towards a Timely Causality Analysis for Enterprise Security. In Proceedings of the 25th Annual Network and Distributed System Security Symposium, NDSS 2018. The Internet Society, San Diego, CA, USA, 18–21 February 2018. [Google Scholar] [CrossRef]

- Hossain, M.N.; Milajerdi, S.M.; Wang, J.; Eshete, B.; Gjomemo, R.; Sekar, R.; Stoller, S.; Venkatakrishnan, V.N. SLEUTH: Real-time Attack Scenario Reconstruction from COTS Audit Data. arXiv 2018, arXiv:1801.02062. [Google Scholar]

- Milajerdi, S.M.; Gjomemo, R.; Eshete, B.; Sekar, R.; Venkatakrishnan, V.N. HOLMES: Real-Time APT Detection through Correlation of Suspicious Information Flows. In Proceedings of the 2019 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 19–23 May 2019; pp. 1137–1152. [Google Scholar] [CrossRef]

- Xuan, S.; Liu, G.; Li, Z.; Zheng, L.; Wang, S.; Jiang, C. Random forest for credit card fraud detection. In Proceedings of the 2018 IEEE 15th International Conference on Networking, Sensing and Control (ICNSC), Zhuhai, China, 27–29 March 2018; pp. 1–6. [Google Scholar] [CrossRef]

- Do Xuan, C.; Cuong, N.H. A novel approach for APT attack detection based on feature intelligent extraction and representation learning. PLoS ONE 2024, 19, e0305618. [Google Scholar] [CrossRef] [PubMed]

- Abdallah, E.E.; Eleisah, W.; Otoom, A.F. Intrusion Detection Systems using Supervised Machine Learning Techniques: A survey. Procedia Comput. Sci. 2022, 201, 205–212. [Google Scholar] [CrossRef]

- Azizjon, M.; Jumabek, A.; Kim, W. 1D CNN based network intrusion detection with normalization on imbalanced data. In Proceedings of the 2020 International Conference on Artificial Intelligence in Information and Communication (ICAIIC), Fukuoka, Japan, 19–21 February 2020; pp. 218–224. [Google Scholar] [CrossRef]

- Mutalib, N.H.A.; Sabri, A.Q.M.; Wahab, A.W.A.; Abdullah, E.R.M.F.; AlDahoul, N. Explainable deep learning approach for advanced persistent threats (APTs) detection in cybersecurity: A review. Artif. Intell. Rev. 2024, 57, 297. [Google Scholar] [CrossRef]

- Gamage, S.; Samarabandu, J. Deep learning methods in network intrusion detection: A survey and an objective comparison. J. Netw. Comput. Appl. 2020, 169, 102767.

- Zhao, R.; Mu, Y.; Zou, L.; Wen, X. A Hybrid Intrusion Detection System Based on Feature Selection and Weighted Stacking Classifier. IEEE Access 2022, 10, 71414–71426.

- Do Xuan, C.; Dao, M.H. A novel approach for APT attack detection based on combined deep learning model. Neural Comput. Appl. 2021, 33, 13251–13264.

- Canadian Institute for Cybersecurity (CIC). CSE-CIC-IDS2018 on AWS. Available online: https://www.unb.ca/cic/datasets/ids-2018.html (accessed on 24 April 2025).

- Soltani, M.; Siavoshani, M.J.; Jahangir, A.H. A content-based deep intrusion detection system. Int. J. Inf. Secur. 2022, 21, 547–562.

- Farhan, R.I.; Maolood, A.T.; Hassan, N.F. Performance Analysis of Flow-Based Attacks Detection on CSE-CIC-IDS2018 Dataset Using Deep Learning. Indones. J. Electr. Eng. Comput. Sci. 2020, 20, 1413–1418.

- Lee, S.W.; Sidqi, H.M.; Mohammadi, M.; Rashidi, S.; Rahmani, A.M.; Masdari, M.; Hosseinzadeh, M. Towards secure intrusion detection systems using deep learning techniques: Comprehensive analysis and review. J. Netw. Comput. Appl. 2021, 187, 103111.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).