Abstract

Self-supervised learning (SSL) is vulnerable to backdoor attacks, and downstream classifiers built on SSL models inevitably inherit these backdoors, even when they are trained on clean samples. Although several backdoor defenses have been proposed, few can effectively defend against diverse backdoor attacks while maintaining high model performance. In this paper, based on the discovery that unlearning any trigger enhances the overall backdoor robustness of the model, a novel, efficient, and straightforward approach is proposed to address the most advanced backdoor attacks. This method involves two stages. First, a backdoor is actively implanted in the model with a custom trigger. Second, the model is fine-tuned to unlearn the custom trigger. Through these two stages, not only is the implanted backdoor removed, but the unknown backdoors implanted by attackers are also effectively mitigated. Extensive experiments on multiple datasets against current state-of-the-art attacks show that the proposed method requires only a small amount of clean data (approximately 1%) to reduce the success rate of backdoor attacks effectively while ensuring minimal impact on model performance.

1. Introduction

Self-supervised learning (SSL) not only facilitates the learning of rich representations from massive uncurated datasets but also achieves performance comparable to supervised learning in many applications [1,2,3,4]. For this reason, it has attracted increasing attention from researchers. The application of SSL typically involves two stages. In the first stage, the SSL method is used to train an encoder on massive unlabeled datasets. In the second stage, the trained encoder is used to build downstream classifiers with a small amount of labeled data [5,6,7]. However, related studies also indicate that SSL is vulnerable to backdoor attacks [8,9,10,11].

A backdoored SSL encoder is highly sensitive to the triggers specified by the attacker. It maps any trigger-embedded sample to features similar to those of the attacker-chosen target class, as a result of which the downstream classifiers misclassify any trigger-embedded sample into that class. The backdoor attacks aimed at SSL encoders mainly involve two scenarios: (1) The attacker poisons the training dataset, so that any SSL encoder trained on this dataset is implanted with a backdoor [8,10]; (2) The supplier of the SSL encoder is also the attacker, who often manipulates the training process to improve the success rate of the attack [9,11].

The backdoor attacks on SSL pose significant risks due to their highly covert nature. Since backdoored encoders usually perform well on clean samples, users are likely to use a backdoored encoder unknowingly to train their downstream classifiers. Furthermore, once a trained SSL encoder is shared or deployed, the threat is amplified as these backdoors are propagated across multiple downstream classifiers. Training an SSL encoder requires substantial data and computational resources, which makes it impractical to retrain a new encoder when a backdoor is discovered. A better solution is to remove the backdoor effectively without any decline in model performance, although this is highly challenging.

Despite the prior research on backdoor removal for supervised learning [12,13,14,15,16,17], significant gaps remain in addressing the backdoor attacks on SSL. Currently, there is still no method that proves fully effective in protecting SSL against various backdoor attacks. In this paper, a backdoor mitigation method, abbreviated as BMAIU (Backdoor Mitigation through Active Implantation and Unlearning), is proposed to remove the current state-of-the-art backdoors. As shown in Figure 1, a trigger is first customized to implant a backdoor in the encoder. Then, the encoder is fine-tuned to unlearn the trigger. Meanwhile, the unknown triggers implanted by the attacker are also rendered ineffective. The proposed method is validated experimentally across various attack methods, datasets, backbones, and SSL methods. The experimental results demonstrate that the proposed method requires only a small amount of clean data and removes the backdoor effectively with limited decline in model performance. The contributions of this paper can be summarized as follows:

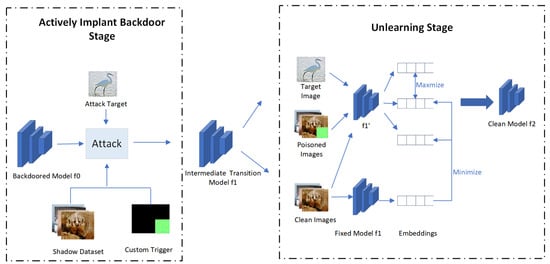

Figure 1.

Overview of BMAIU. BMAIU involves two stages. In the first stage, a target class is randomly selected, and a custom trigger is used to implant a backdoor into the model, which leads to the intermediate transition model. In the second stage, the model is trained to unlearn the custom trigger, which leads to the clean model.

- (1) Based on our research on multi-target attacks, it is found that unlearning any one of the triggers can deactivate other triggers simultaneously. As revealed by conducting further experiments, unlearning a specific trigger generally reduces the overall backdoor capability of the encoder.

- (2) A backdoor mitigation method based on active attack is proposed. Firstly, a backdoor is actively implanted using a custom trigger. Then, the trigger is unlearned. During this process, the unknown triggers used by the attacker are also rendered ineffective.

- (3) Experiments are conducted to validate the proposed method of defense based on the current state-of-the-art backdoor attack methods. The experimental results demonstrate that this method is effective in removing backdoors while maintaining the original level of model performance.

2. Background and Related Work

2.1. Self-Supervised Learning

Self-supervised learning (SSL) has emerged as an effective approach in deep learning, particularly in scenarios where labeled data is limited or costly to obtain. Unlike traditional supervised learning, which requires large labeled datasets, SSL leverages a considerable amount of unlabeled data by generating labels through automatically defined pretext tasks. With the recent progress in SSL, contrastive learning methods that take instance discrimination as the pretext task have emerged, such as SimCLR [5], MoCo [7], and BYOL [6]. These methods have significantly improved the performance of SSL models. A typical application of SSL involves training an image encoder on unlabeled data so that it produces similar embeddings for samples of the same class. Then, a small amount of labeled data is used to train downstream classifiers on top of this image encoder [1,2,3,4,5,6,7]. In many applications, SSL has achieved performance comparable to supervised learning.

2.2. Backdoor Attack on SSL

Backdoor attacks involve manipulating a model so that it associates a specific trigger with a target class. A trigger can be a specific pattern, perturbation, or input modification that an attacker embeds into the input data to activate the backdoor behavior in a compromised model. For example, consider an image classification task where an attacker adds a small flower symbol to every image of a cat in the training dataset. During training, the model learns to associate the flower symbol with the cat class. As a result, during inference, any input image—regardless of its original content—that contains the specified flower symbol will be classified as a cat. This illustrates how backdoor triggers can exploit learned associations in a model to manipulate its predictions.

In general, the backdoor attacks on SSL can be categorized into two types: data-poisoning-based backdoor attacks [8,10] and non-data-poisoning-based backdoor attacks [9,11,18]. In data-poisoning-based backdoor attacks [8,10], attackers poison the training data by adding a trigger to samples of the target class. In this way, samples of the target class co-occur with the trigger, which causes a model trained on this dataset to learn an incorrect association between the target category and the trigger. As the earliest data-poisoning-based backdoor attack, SSL Backdoor was proposed by Saha et al. [8], with a patch-based trigger involved. However, SSL Backdoor performs relatively poorly in success rate and trigger invisibility. In contrast, another data-poisoning method, namely CTRL [10], improves both the success rate and the stealthiness of the trigger through optical triggers. In non-data-poisoning-based backdoor attacks [9,11,18], the attackers can manipulate the training process, which often leads to a higher success rate. The typical methods of attack in this scenario include ESTAS [11] and BadEncoder [18].

2.3. Limitations of Related Backdoor Defense

Protection against backdoor attacks in SSL involves three main aspects: dataset purification [19,20], backdoor detection [21,22], and backdoor mitigation [16,17,21]. Dataset purification refers to the process of filtering out poisoned data from the dataset before the final SSL model is trained. The typical methods of dataset purification include PatchSearch [19] and ASSET [20]. However, these methods are only applicable when the defender is the model trainer and the complete training data is accessible. In other cases, the challenge lies in how to remove backdoors from a model that has already been trained by an external party or derived from an unknown source.

Backdoor detection methods are intended to determine whether there is a backdoor in a model. The commonly used detection techniques are all based on synthetic triggers [21,22], such as DECREE [22] and SSL_Cleanse [21]. These methods decide whether a model has been implanted with a backdoor according to the size of the synthesized trigger.

Backdoor removal, which aims to eliminate the influence of the backdoor in the model, can be categorized in general into three main approaches: pruning methods [16], knowledge distillation methods [8], and trigger synthesis methods [17,21]. Pruning, represented by the CLP method [16], focuses on pruning the abnormal channels within a neural network. However, pruning inevitably compromises model performance to some extent, and a stronger defense requires a further compromise on performance. Knowledge distillation faces a similar trade-off between model performance and defense, and it often fails to fully mitigate the backdoor while maintaining the same level of model accuracy. The trigger synthesis methods, such as SSL_Cleanse [21], synthesize a potential trigger for each cluster. Then, the model is fine-tuned to unlearn the synthetic trigger. However, large datasets contain numerous clusters, which makes this approach highly time-consuming and computationally intensive. Each of these methods has its own advantages in mitigating backdoor threats, but each must also strike a balance between defense effectiveness, model performance, and resource consumption. In this paper, a simple and efficient method is proposed to balance defense effectiveness with model performance.

3. BMAIU: Backdoor Mitigation in Self-Supervised Learning Through Active Implantation and Unlearning

In this section, it is first shown that, in a multi-target backdoor attack with multiple triggers, training the backdoored model to unlearn one of the triggers has a significant effect on the other triggers. This counterintuitive phenomenon is accounted for as follows. During the process of unlearning a trigger, a deep neural network does not simply forget the specified trigger pattern but generalizes this effect, thereby reducing the overall sensitivity of the model to all triggers. On this basis, it is proposed in this paper to actively implant a trigger into the model and then train the model to unlearn this known trigger. In the process, the unknown triggers are also made ineffective.

3.1. Threat Model and Defense Assumptions

It is assumed that the attacker has complete control over the training process and has access to the entire training dataset. The attacker aims to implant a backdoor in the SSL model, allowing any downstream classifier based on the model to classify the sample embedded with the trigger to the target class and maintain model performance on clean samples simultaneously. It is also assumed that the defender has access to only a small proportion of the training data, which is approximately 10%. The defender aims to remove the backdoor from the SSL model while minimizing the negative impact on model performance.

3.2. Observations and Intuitions

Trigger reconstruction is a typical approach to backdoor defense [23]. Such methods synthesize a trigger for a particular target class. Since the synthesized trigger is a point within the distribution of valid triggers [24], fine-tuning the model to unlearn this trigger can invalidate the genuine trigger used by the attacker. Trigger reconstruction has become a classic method in backdoor removal, widely accepted by researchers as an effective defense mechanism. However, the theoretical basis behind this approach remains underexplored, and several aspects still lack clarity. The effectiveness of this method hinges on the assumption that the synthesized trigger can substitute for the genuine trigger, so that unlearning the synthesized trigger is effectively equivalent to unlearning the genuine trigger. However, the backdoor removal method proposed in I-BAU [17], which is also based on trigger synthesis, challenges this assumption. Unlike conventional approaches, I-BAU does not assume any specific target class or number of triggers. In this context, the synthesized trigger is not bound to a particular attack class, while genuine triggers are typically tied to specific classes. This implies that there is no inherent connection between the synthesized trigger and the genuine trigger; instead, the synthesized trigger functions merely as a different, independent trigger. Despite this, I-BAU achieves strong defensive performance. Based on this observation, we tentatively propose a new hypothesis: the effectiveness of backdoor defenses based on trigger synthesis may not rely on the synthesized trigger directly substituting for the true trigger. Rather, unlearning any trigger appears to enhance the model’s overall robustness against backdoors, effectively neutralizing unknown triggers as well.

To verify this hypothesis, we conduct experiments to investigate whether unlearning a synthetic trigger for one class in a multi-target attack scenario with multiple triggers also significantly impacts the other triggers. Our experiments are based on the CIFAR-10 dataset [25], where we first train a clean ResNet18 encoder using SimCLR as the SSL method. The training is performed for 500 epochs with a batch size of 512 and a learning rate of 0.06.

After obtaining the clean encoder, we implant backdoors using the BadEncoder method. Specifically, we adopt 10 different triggers from the Hidden Trigger Backdoor Attack (HTBA) [26], each corresponding to a distinct class. These triggers, denoted as AT0, AT1, …, and AT9, are resized to 8 × 8. The objective of the BadEncoder attack is to ensure that when a given sample is modified with trigger ATi, its encoded features resemble those of the target class i, thereby achieving the backdoor effect. The backdoor implantation process is carried out for 20 epochs with a batch size of 64 and a learning rate of 0.01.

To evaluate attack success rates, we train a downstream classifier by appending a fully connected (FC) layer (512 × 10) to the ResNet18 encoder. During training, only the parameters of the FC layer are updated, while the ResNet18 encoder remains frozen. The classifier is trained for 10 epochs with a batch size of 64 and a learning rate of 0.1. We then compute the attack success rate by adding each trigger AT0, AT1, …, and AT9 to the 10,000 test samples from CIFAR-10 and measuring the fraction of triggered samples classified into the corresponding target class. This methodology is consistently used in subsequent experiments to assess attack effectiveness.

For trigger synthesis, we adopt the SSL_Cleanse method to generate synthetic triggers for each class. The synthesis process iteratively adds perturbations to the samples until the similarity between the modified samples and the target class reaches 0.9 (measured using cosine similarity). This process results in a set of synthesized triggers, denoted as ST0, ST1, …, ST9. Subsequently, we apply the SSL_Cleanse forgetting algorithm to remove each synthesized trigger individually, yielding a set of unlearned models, labeled as M0, M1, …, M9. Finally, we evaluate the attack success rate of each actual trigger (ATi) on each model (Mj) and report the results in Table 1.
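The stopping rule for trigger synthesis described above can be illustrated with a minimal sketch. It assumes that the similarity is averaged over the batch of modified samples and compared against a single target-class embedding; the function names `cosine` and `trigger_is_sufficient` are hypothetical and are not taken from SSL_Cleanse.

```python
import math

def cosine(u, v):
    """Cosine similarity between two embedding vectors given as lists of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def trigger_is_sufficient(triggered_embs, target_emb, threshold=0.9):
    """Return True once the mean similarity to the target class reaches the threshold.

    Assumption: the mean over the batch is used; a per-sample rule is equally plausible.
    """
    mean_sim = sum(cosine(e, target_emb) for e in triggered_embs) / len(triggered_embs)
    return mean_sim >= threshold

# Toy 2-D embeddings: the first batch points almost exactly at the target direction.
close_batch = [[1.0, 0.0], [1.0, 0.1]]
far_batch = [[0.0, 1.0], [1.0, 0.0]]
target = [1.0, 0.0]
print(trigger_is_sufficient(close_batch, target))
print(trigger_is_sufficient(far_batch, target))
```

In an actual synthesis loop, the perturbation would keep being updated until this check passes.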

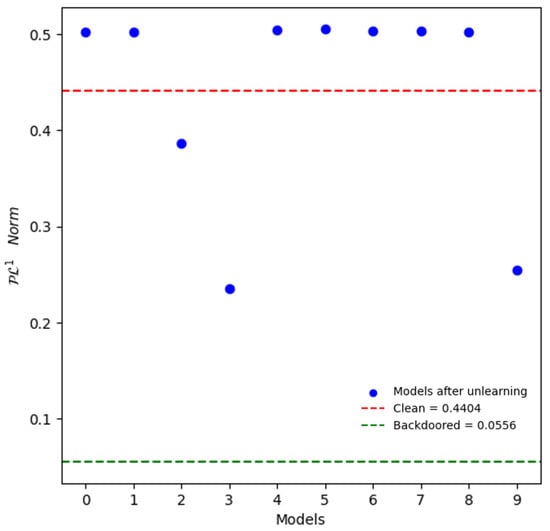

The experimental results are shown in Table 1. The results indicate that fine-tuning the model to unlearn any synthesized trigger has a significant effect on all genuine triggers, which aligns with our hypothesis. We also take the norm metric proposed in DECREE as the metric to evaluate the model’s backdoor robustness. A smaller norm value indicates weaker backdoor robustness. As shown in Figure 2, the model’s norm increases severalfold after unlearning any of the triggers, indicating a significant improvement in the model’s backdoor robustness, which supports our hypothesis.

Table 1.

The attack success rate (%) for each class after each type of synthesized trigger (ST) is unlearned separately.

Figure 2.

The norm value obtained for the unlearned models with each synthesized trigger (ST). The green dashed line represents the norm value of the original backdoored model, while the red dashed line represents the norm value of the clean model. Clearly, unlearning any synthesized trigger (ST) leads to a significant increase in the norm value, indicating a considerable improvement in the backdoor robustness of the model.

Since unlearning one trigger reduces a deep neural network’s overall tendency to cluster trigger-embedded samples, it is expected that fine-tuning the model to unlearn any one of the actual triggers (AT) also produces a considerable defensive effect against all triggers. Experiments were conducted to verify this point. As shown in Table 2, training the model to unlearn any one trigger (AT) exerts a significant defensive effect on all triggers, which reaffirms our hypothesis.

Table 2.

The attack success rate (%) for each class in the model after each type of genuine trigger (AT) is unlearned separately.

When one trigger is unlearned, all triggers can be made ineffective. According to Table 1 and Table 2, unlearning the genuine trigger leads to a more consistent performance. It is suggested that if one of the triggers used by an attacker is obtained, all other triggers can be effectively neutralized. However, in practice, it is often impossible to obtain the exact trigger used by an attacker. To address this challenge, an alternative approach is proposed in this paper. Instead of seeking to discover the trigger used by the attacker, a custom trigger is actively implanted into the model.

It is proposed to actively implant a backdoor using a custom trigger and then fine-tune the model to unlearn the custom trigger. Compared to synthesizing triggers, actively implanting triggers is clearly advantageous. (1) Synthesizing triggers requires a balance between the size of the perturbation and the effectiveness of the synthesized trigger, which necessitates more iterations, thus consuming more computational resources and increasing the time costs of computation. (2) The final size of the perturbation is uncertain. If the perturbation of the reversed trigger is too large, model performance is affected more significantly after unlearning such a synthesized trigger. (3) If the synthesized trigger fails to cluster sufficiently, the effectiveness of backdoor mitigation is affected. In summary, synthesizing triggers involves many uncertainties and increases the consumption of computational resources. Comparatively, it is easy to implant a backdoor into the model. By customizing the size of the trigger, it is easy to obtain an effective backdoor trigger.

3.3. Theoretical Insight into the Unlearning Effect

During backdoor attacks, the trigger typically occupies only a small portion of the input information, yet it has a disproportionately dominant influence on the model’s decision-making. Prior work [16] has shown that certain neurons in deep neural networks tend to become overly activated by trigger patterns, forming shortcut associations in the latent space. This leads to highly memorized and compressed representations of backdoor samples. During the unlearning phase, the model gradually reduces its sensitivity to custom-designed triggers. This process may lead to a redistribution of neuron activation pathways, thereby suppressing the representations that were previously highly sensitive to backdoor triggers. As training progresses, these backdoor-related features are gradually overridden or “erased”, allowing the model to retain more generalizable and semantically meaningful representations.

3.4. Proposed BMAIU Method

The defense method proposed in this paper involves two stages. The first stage is active attack, with a custom trigger used to carry out a backdoor attack. In the second stage, the model is fine-tuned to unlearn the custom trigger. Meanwhile, the genuine triggers used by the attacker are also rendered ineffective. The shadow dataset is denoted as D, and the custom trigger is denoted as e. The initial backdoored model is represented by , the model processed after the first stage is expressed as , and the model processed after the second stage is represented by . The overall framework is shown in Figure 1.

3.4.1. Active Implant Backdoor

During the phase of active attack, the aim is to implant a backdoor in the model as preparation for the next phase, that is, unlearning. The design of our active attack method is based on BadEncoder. The proposed method of active attack consists of two parts. Firstly, the effectiveness of the backdoor is ensured, which means that the features of the backdoor samples are similar to those of the target class. Secondly, the consistency of clean samples is maintained.

Attack Effectiveness: This is aimed at enabling the custom triggers to effectively attack the model. For any sample x belonging to the dataset D, its corresponding backdoored sample is supposed to have a similar pattern of embedding with the target class belonging to the reference dataset R. It can be expressed as

where denotes a sample randomly selected from the reference dataset R.

Feature Consistency: The features of clean samples are expected to remain consistent before and after our active attack, so that model performance on clean data is preserved. It can be expressed as

To achieve these two objectives simultaneously, the above loss functions are combined into a single comprehensive optimization objective. The combined optimization problem can be expressed as
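One plausible instantiation of the two losses and their combination, written in the style of BadEncoder, is sketched below. The notation is an assumption made for illustration rather than the paper's own: $f_b$ is the initial backdoored encoder (kept frozen), $f_i$ is the encoder being trained in this stage, $x \oplus e$ is sample $x$ stamped with the custom trigger $e$, $x_r$ is a sample drawn from the reference dataset $R$, $s(\cdot,\cdot)$ is cosine similarity, and $\lambda$ is a hypothetical balancing weight.

```latex
% Sketch under the assumptions stated above; not the paper's verbatim equations.
\begin{aligned}
\mathcal{L}_{\mathrm{att}} &= -\frac{1}{|D|} \sum_{x \in D} s\big(f_i(x \oplus e),\; f_i(x_r)\big), \\
\mathcal{L}_{\mathrm{con}} &= -\frac{1}{|D|} \sum_{x \in D} s\big(f_i(x),\; f_b(x)\big), \\
\min_{f_i}\ \mathcal{L}_{\mathrm{stage1}} &= \mathcal{L}_{\mathrm{att}} + \lambda\, \mathcal{L}_{\mathrm{con}}.
\end{aligned}
```

Minimizing $\mathcal{L}_{\mathrm{att}}$ pulls triggered samples toward the target class, while minimizing $\mathcal{L}_{\mathrm{con}}$ keeps clean embeddings close to those of the original encoder.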

The algorithm flow in the active implant backdoor stage is detailed in Algorithm 1.

Algorithm 1. Stage 1: Active Attack for Backdoor Implantation
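As a rough illustration of the per-batch objective that the implantation stage would minimize, the following sketch computes an attack-effectiveness term and a feature-consistency term from precomputed embeddings. It assumes cosine similarity as the similarity measure and a hypothetical weight `lam`; the function `implant_loss` and its argument names are illustrative and do not come from the paper or from the BadEncoder code.

```python
import math

def cosine(u, v):
    """Cosine similarity between two embedding vectors given as lists of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def implant_loss(trig_embs, ref_embs, clean_embs, clean_embs_frozen, lam=1.0):
    """Stage 1 objective on one batch (lower is better).

    trig_embs:         trained encoder applied to samples stamped with the custom trigger
    ref_embs:          trained encoder applied to reference samples of the target class
    clean_embs:        trained encoder applied to the clean samples
    clean_embs_frozen: frozen original encoder applied to the same clean samples
    lam:               hypothetical weight on the consistency term
    """
    n = len(trig_embs)
    # Attack effectiveness: triggered samples should look like the target class.
    attack = -sum(cosine(t, r) for t, r in zip(trig_embs, ref_embs)) / n
    # Feature consistency: clean samples should keep their original features.
    consistency = -sum(cosine(c, c0) for c, c0 in zip(clean_embs, clean_embs_frozen)) / n
    return attack + lam * consistency

# Toy check: a fully implanted backdoor with unchanged clean features gives the minimum.
ref = [[1.0, 0.0], [0.0, 2.0]]
clean = [[1.0, 1.0], [3.0, 0.0]]
print(implant_loss(ref, ref, clean, clean))
```

In a real training loop these embeddings would be produced by the encoder on each mini-batch, and the loss would be backpropagated with a standard optimizer.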

3.4.2. Unlearning Triggers

In the stage of trigger unlearning, our aim is to render the triggers ineffective. This method involves two parts as well. Firstly, the effectiveness of the unlearning is ensured, which means that the features of the backdoor samples should move away from the target class and remain similar to those of the corresponding clean samples. Secondly, the consistency of clean samples is maintained.

Unlearning Effectiveness: The aim is to render the backdoor samples ineffective, which involves two parts. On the one hand, the features of the backdoored samples are kept similar to those of the clean samples x. On the other hand, the features of the backdoor samples are driven away from those of the target category. It can be expressed as

Feature Consistency: To maintain the performance of the model, the features of clean samples are kept consistent before and after unlearning. It can be expressed as

Also, the final optimization problem can be expressed as
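One plausible instantiation of the unlearning objective is sketched below. The notation is an assumption made for illustration rather than the paper's own: $f_i$ is the encoder obtained after active implantation (kept frozen), $f_c$ is the encoder being fine-tuned in this stage, $x \oplus e$ is sample $x$ stamped with the custom trigger $e$, $x_r$ is a sample of the target class from the reference dataset $R$, $s(\cdot,\cdot)$ is cosine similarity, and $\lambda$ is a hypothetical balancing weight.

```latex
% Sketch under the assumptions stated above; not the paper's verbatim equations.
\begin{aligned}
\mathcal{L}_{\mathrm{unl}} &= -\frac{1}{|D|} \sum_{x \in D} s\big(f_c(x \oplus e),\; f_c(x)\big)
  + \frac{1}{|D|} \sum_{x \in D} s\big(f_c(x \oplus e),\; f_c(x_r)\big), \\
\mathcal{L}_{\mathrm{con}} &= -\frac{1}{|D|} \sum_{x \in D} s\big(f_c(x),\; f_i(x)\big), \\
\min_{f_c}\ \mathcal{L}_{\mathrm{stage2}} &= \mathcal{L}_{\mathrm{unl}} + \lambda\, \mathcal{L}_{\mathrm{con}}.
\end{aligned}
```

The first term of $\mathcal{L}_{\mathrm{unl}}$ pulls a triggered sample back toward its own clean embedding, and the second term pushes it away from the target class.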

The algorithm flow in the stage of unlearning is detailed in Algorithm 2.

Algorithm 2. Stage 2: Trigger Unlearning for Backdoor Removal
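As a rough illustration of the per-batch objective that the unlearning stage would minimize, the sketch below combines a pull term (triggered sample toward its clean counterpart), a push term (triggered sample away from the target class), and a consistency term. Cosine similarity, the weight `lam`, and the name `unlearn_loss` are assumptions made for illustration, not details confirmed by the paper.

```python
import math

def cosine(u, v):
    """Cosine similarity between two embedding vectors given as lists of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def unlearn_loss(trig_embs, clean_embs, ref_embs, clean_embs_frozen, lam=1.0):
    """Stage 2 objective on one batch (lower is better).

    trig_embs:         fine-tuned encoder applied to samples stamped with the custom trigger
    clean_embs:        fine-tuned encoder applied to the corresponding clean samples
    ref_embs:          fine-tuned encoder applied to reference samples of the target class
    clean_embs_frozen: frozen stage-1 encoder applied to the same clean samples
    lam:               hypothetical weight on the consistency term
    """
    n = len(trig_embs)
    # Pull: a triggered sample should embed like its own clean version.
    pull = -sum(cosine(t, c) for t, c in zip(trig_embs, clean_embs)) / n
    # Push: a triggered sample should no longer resemble the target class.
    push = sum(cosine(t, r) for t, r in zip(trig_embs, ref_embs)) / n
    # Consistency: clean features should stay where they were.
    consistency = -sum(cosine(c, c0) for c, c0 in zip(clean_embs, clean_embs_frozen)) / n
    return pull + push + lam * consistency

# Toy comparison: the loss is lower once the trigger no longer moves the embedding.
clean = [[1.0, 0.0], [2.0, 0.0]]
target = [[0.0, 1.0], [0.0, 3.0]]
still_backdoored = unlearn_loss(target, clean, target, clean)
unlearned = unlearn_loss(clean, clean, target, clean)
print(still_backdoored, unlearned)
```

The toy comparison shows the intended direction of optimization: an encoder that still maps triggered samples onto the target class scores worse than one that ignores the trigger.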

4. Experiments

Datasets: For experimentation, the following datasets were used: CIFAR-10 [25] and ImageNet-100 [27]. The CIFAR-10 dataset involves 10 categories, with each category comprised of 6000 32 × 32 RGB images, totaling 60,000 images. The ImageNet-100 dataset is randomly selected from the ImageNet dataset to include 100 categories, with each category comprised roughly of 1100 images. These datasets encompass a variety of category numbers and image complexities, creating an extensive testing environment for validating the methods of backdoor defense.

Attack methods: Tests were conducted on the following methods of backdoor attack: SSL Backdoor, CTRL, ESTAS, and BadEncoder. SSL Backdoor and CTRL are data-poisoning-based backdoor attacks, while BadEncoder and ESTAS manipulate the training process. As these attack methods are highly representative, the effectiveness of our defense method can be thoroughly evaluated.

Backbone Architecture: The backbone architecture used for the CIFAR-10 dataset is ResNet18 [28], and the backbone architecture used for ImageNet-100 is EfficientNet V2 Small [29].

Defense methods: The method proposed in this paper was compared with several other methods to evaluate their effectiveness in performing downstream classification tasks. The comparison methods selected in this paper include CLP, I-BAU, and SSL_Cleanse. CLP is a data-free pruning-based method of backdoor removal. Based on trigger reconstruction, I-BAU unlearns the backdoors to remove them from downstream classifiers, which involves clean labeled data. SSL_Cleanse is another method based on trigger reconstruction to unlearn the backdoors. As an advanced method of backdoor removal for SSL, it does not require the use of labeled data. For CLP, its parameter u was set to 3. For I-BAU, SSL_Cleanse, and our BMAIU method, a dataset size that is 10% of the training set was used for the defense process. Also, the number of epochs for unlearning in both SSL_Cleanse and our BMAIU method was set to 20. In the active attack phase, for the CIFAR-10 dataset, the customized trigger was a randomly generated patch. For the ImageNet-100 dataset, the trigger size was . During the backdoor injection process, these customized triggers were randomly embedded into arbitrary positions within the input images.
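The random-patch trigger and its random placement can be sketched as follows. This is a minimal illustration on images stored as nested lists of RGB pixels; the patch size of 4 and the helper names `random_patch` and `embed_trigger` are hypothetical, since the exact trigger sizes are not given here.

```python
import random

def random_patch(size, rng):
    """Generate a size x size RGB patch with uniformly random pixel values."""
    return [[[rng.randrange(256) for _ in range(3)] for _ in range(size)]
            for _ in range(size)]

def embed_trigger(image, patch, rng):
    """Return a copy of `image` with `patch` pasted at a random position.

    `image` is a list of rows, each row a list of [R, G, B] pixels.
    The original image is left unmodified.
    """
    height, width = len(image), len(image[0])
    p = len(patch)
    top = rng.randrange(height - p + 1)
    left = rng.randrange(width - p + 1)
    stamped = [[list(pixel) for pixel in row] for row in image]
    for i in range(p):
        for j in range(p):
            stamped[top + i][left + j] = list(patch[i][j])
    return stamped, (top, left)

rng = random.Random(0)  # fixed seed so the same custom trigger can be regenerated
image = [[[0, 0, 0] for _ in range(32)] for _ in range(32)]  # CIFAR-10-sized dummy image
patch = random_patch(4, rng)  # hypothetical 4 x 4 trigger
stamped, position = embed_trigger(image, patch, rng)
print(position)
```

Keeping the patch fixed while varying only its position means the same custom trigger is implanted and later unlearned.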

Metrics: To validate the methods of backdoor defense, the following metrics were used: BA (Benign Accuracy), PA (Poison Accuracy), and ASR (Attack Success Rate). BA represents the classification accuracy of the model on clean samples. ASR indicates the success rate of the attack, that is, the proportion of poisoned samples classified into the target category. PA represents the classification accuracy of the model on poisoned samples; a backdoor-free model is expected to have a high PA, indicating that the trigger is ignored by the model. This metric guards against a defense that merely diverts backdoor samples away from the target class without restoring their correct class. A successful backdoor defense is expected to classify poisoned samples into their correct class, rather than simply avoiding the target class. Together, these metrics reflect how the model performs on both clean and backdoor samples, and how effective the defense methods are in different attack scenarios.
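The three metrics can be computed from downstream predictions as in the sketch below. It assumes that all poisoned samples are counted in the ASR, including those whose true label already equals the target class; some evaluations exclude them. The function name `defense_metrics` is hypothetical.

```python
def defense_metrics(clean_preds, clean_labels, poison_preds, poison_labels, target):
    """Return (BA, PA, ASR) as percentages.

    clean_preds / clean_labels:   predictions and true labels on clean test samples
    poison_preds / poison_labels: predictions and true labels on the same kind of
                                  samples after the trigger has been added
    target:                       the attacker-chosen target class
    """
    ba = 100.0 * sum(p == y for p, y in zip(clean_preds, clean_labels)) / len(clean_labels)
    pa = 100.0 * sum(p == y for p, y in zip(poison_preds, poison_labels)) / len(poison_labels)
    asr = 100.0 * sum(p == target for p in poison_preds) / len(poison_preds)
    return ba, pa, asr

# Toy example with four samples and target class 1.
ba, pa, asr = defense_metrics(
    clean_preds=[0, 1, 2, 2], clean_labels=[0, 1, 2, 3],
    poison_preds=[1, 1, 2, 3], poison_labels=[0, 1, 2, 3],
    target=1,
)
print(ba, pa, asr)  # 75.0 75.0 50.0
```

A defense that only lowers the ASR while the PA stays low has diverted poisoned samples to other wrong classes, which is why both values are reported.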

4.1. Experimental Results

The proposed BMAIU method was compared with three other methods of defense across various attack scenarios. The experimental results are presented in Table 3, Table 4 and Table 5. Specifically, Table 3 shows the results of single-target attacks on the CIFAR-10 dataset, while Table 4 presents the results of single-target attacks on the ImageNet-100 dataset. Additionally, Table 5 reports the results of multi-target attacks on the CIFAR-10 dataset. The results show that the proposed BMAIU method has a clear advantage: it causes the smallest accuracy loss for the model while providing effective protection against various backdoor attacks. For example, on the CIFAR-10 dataset, our defense method reduces the average attack success rate (ASR) from 68.87% to 9.05%, while the BA loss is merely 1.74%. Even against stubborn backdoor attacks such as BadEncoder and ESTAS, the ASR is reduced to below 15%. Similarly, on the ImageNet-100 dataset, our method reduces the ASR from 46.10% to below 2% with an average BA loss of 1.3%. In multi-target attacks, our defense method also performs well, reducing the ASR from 89.63% to 11.42% with a BA loss of 0.44%.

Table 3.

The effectiveness of our BMAIU method and three other defense methods with 10% of the training data against four attack methods on CIFAR-10 with ResNet-18 as the backbone. All values are percentages (%).

Table 4.

The effectiveness of our BMAIU method and three other defense methods with 10% of the training data against three attack methods on ImageNet-100 with EfficientNet V2 as the backbone. All values are percentages (%).

Table 5.

The effectiveness of our BMAIU method and three other defense methods with 10% of the training data against multi-target attack on CIFAR-10 with ResNet-18 as the backbone. All values are percentages (%).

CLP produces promising effects against various backdoor attacks like SSL Backdoor, CTRL, and ESTAS on the CIFAR-10 dataset. However, such effectiveness is quite limited in the scenario of the BadEncoder attack. On the ImageNet dataset, the effectiveness of CLP is even more limited, indicating the poor performance of this method in adapting to different datasets. CLP aims to balance model performance with the effectiveness of backdoor attack defenses. However, experimental results show that even with a more significant compromise on model accuracy, its ASR remains higher compared to the proposed BMAIU method.

I-BAU is a trigger reconstruction-based method of backdoor removal for supervised learning. Experimental results demonstrate that this method has a significant advantage in reducing the success rate of backdoor attack. In some cases, it even outperforms the BMAIU method proposed in this paper. However, it also has some notable downsides. Firstly, compared to the BMAIU method, I-BAU affects model accuracy more significantly. Secondly, as a method of supervised learning, I-BAU relies on the use of labeled samples and requires the training of a downstream classifier. In contrast, our method of backdoor removal only involves unlabeled data, which means the need for a downstream classifier is eliminated.

SSL_Cleanse is an advanced method of backdoor removal for SSL. However, its defensive effect is quite limited. Even with a further compromise on model performance, it still fails to achieve the optimal defensive effect. Moreover, SSL_Cleanse requires the synthesis of a trigger for each cluster individually. When there are many categories in the dataset, the number of clusters increases significantly, which results in substantial time and computational costs. In contrast, our BMAIU method neither requires further processing nor incurs additional time costs as the number of dataset categories increases.

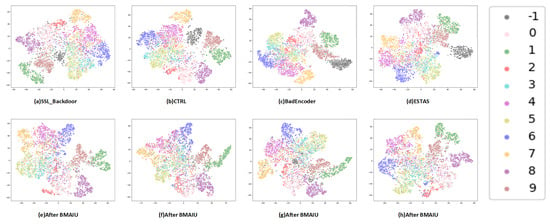

To compare the feature distributions produced by the different attack methods with those obtained after BMAIU defense, t-SNE [30] was used for visualization, as illustrated in Figure 3. After a backdoor attack, the backdoored samples cluster together in the feature space, which is the main reason the attack succeeds. After BMAIU is applied, the backdoored samples are distributed more uniformly in the feature space rather than concentrated in one region. This indicates that our method adjusts the feature distribution of backdoored samples and thereby renders the backdoor attack ineffective.
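The t-SNE plots give a qualitative view of clustering. As a numerical companion, the following minimal sketch computes the mean pairwise cosine similarity of a set of feature vectors, where a value near 1 suggests a tight cluster and lower values suggest dispersion. This measure is an illustrative assumption and is not used in the paper; the function names and the toy vectors are hypothetical.

```python
import math
from itertools import combinations


def cosine(u, v):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def mean_pairwise_cosine(features):
    """Average cosine similarity over all unordered pairs of feature vectors."""
    pairs = list(combinations(features, 2))
    if not pairs:
        raise ValueError("need at least two feature vectors")
    return sum(cosine(u, v) for u, v in pairs) / len(pairs)


# Toy 2-D features (made up for illustration, not experimental data).
clustered = [[1.0, 0.0], [0.9, 0.1], [1.0, 0.05]]   # points in nearly the same direction
dispersed = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]   # points spread over directions

print(mean_pairwise_cosine(clustered))
print(mean_pairwise_cosine(dispersed))
```

Under this assumed measure, a successful mitigation would be expected to lower the value computed on the backdoored samples, in line with the dispersion visible in the plots.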

Figure 3.

Embedding space distributions. Black points, labeled −1, represent the backdoored samples, while the ten other colors, corresponding to labels 0 through 9, represent clean samples from ten different categories. Panels (a–d) show the feature distributions after the SSL_Backdoor, CTRL, BadEncoder, and ESTAS attacks, respectively. Panels (e–h) show the corresponding feature distributions after applying the BMAIU backdoor mitigation method. The backdoored model tends to cluster backdoored samples in the feature space. After BMAIU is applied, this clustering is significantly reduced, and the backdoored samples are more dispersed in the feature space.

4.2. Ablation Studies

4.2.1. Defense Effectiveness with Different Ratios of Dataset

The impact of the dataset ratio on the defense performance of our BMAIU method was evaluated against the BadEncoder attack under the SimCLR training framework. Tests were conducted at three dataset proportions (1%, 5%, and 10%), as detailed in Table 6. Even with only 1% of the dataset available, our BMAIU method keeps the ASR at an extremely low level, with only a slight decrease in BA and PA. These results underscore the robustness of BMAIU, demonstrating that it remains an effective defense even with minimal data.
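For readers who wish to set up a comparable experiment, the sketch below shows one way to draw a reproducible subset at a given ratio. It assumes that the subset is sampled uniformly at random from the 50,000-image CIFAR-10 training set; the sampling procedure actually used in the experiments is not specified here, and the helper name `sample_fraction` is hypothetical.

```python
import random


def sample_fraction(items, ratio, seed=0):
    """Return a reproducible uniform random subset containing `ratio` of `items`."""
    if not 0.0 < ratio <= 1.0:
        raise ValueError("ratio must lie in (0, 1]")
    pool = list(items)
    subset_size = max(1, round(len(pool) * ratio))
    rng = random.Random(seed)  # local generator so the global random state is untouched
    return rng.sample(pool, subset_size)


# Indices of the CIFAR-10 training set (50,000 images).
train_indices = range(50_000)

for ratio in (0.01, 0.05, 0.10):
    subset = sample_fraction(train_indices, ratio)
    print(f"ratio={ratio:.2f} -> {len(subset)} samples")
```

Fixing the seed keeps the 1%, 5%, and 10% subsets reproducible across runs, which makes comparisons between ratios easier to interpret.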

Table 6.

The effectiveness of defense by our BMAIU method on the datasets with different data ratios against the BadEncoder attack. All the values are expressed in percentage (%).

4.2.2. Defense Effectiveness on Different Architectures

To validate the generalizability of our method across model architectures, the ESTAS attack was applied to several commonly used network architectures of different depths, including VGG11 [31], VGG16 [32], ResNet18 [28], ResNet34 [33], SENet18, and SENet34 [34]. These experiments use the CIFAR-10 dataset with SimCLR as the self-supervised learning method. The effectiveness of our BMAIU method was then evaluated against these backdoor attacks. The experimental results are shown in Table 7. Our BMAIU method remains effective across the different architectures, which confirms its generalizability.

Table 7.

Performance of our BMAIU method under different model architectures. Experimental results demonstrate that even against stubborn attacks like ESTAS, our BMAIU method still performs well in defense. All the values are expressed in percentage (%).

4.2.3. Impact of Loss Terms

Our BMAIU defense method incorporates three key parameters, including , , and . To investigate their impact on the effectiveness of our defense method, we conduct controlled experiments under a fixed experimental setup. Specifically, we use the CIFAR-10 dataset and adopt SimCLR as the SSL method. The encoder architecture is based on ResNet-18, and the attack method is ESTAS. We systematically analyze how varying , , and affects the defense performance.

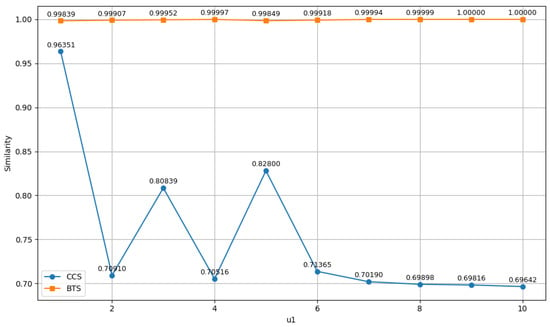

Parameter plays a critical role in the active attack stage, as it directly influences the effectiveness of backdoor injection. Therefore, we first conduct experiments to investigate the impact of on the success of the backdoor implantation. Specifically, we measure two types of feature similarities, and the similarity is measured using cosine similarity: (1) the similarity between the features of backdoored samples and their target class in model , denoted as BTS (Backdoor-to-Target Similarity), and (2) the similarity between the features of clean samples in model and the corresponding clean samples in the original model , denoted as CCS (Clean-to-Clean Similarity). These metrics respectively reflect the strength of backdoor injection and the degree to which the clean model performance is retained.
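The two similarity metrics can be sketched as follows. This is a minimal illustration under stated assumptions: the target class is represented by a single reference feature vector, both metrics are averaged over samples, and the feature vectors have already been extracted from the respective encoders. The function names and toy vectors are hypothetical and do not come from the paper.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def backdoor_to_target_similarity(backdoored_feats, target_feat):
    """BTS: mean cosine similarity between backdoored-sample features and the target feature."""
    sims = [cosine(f, target_feat) for f in backdoored_feats]
    return sum(sims) / len(sims)


def clean_to_clean_similarity(clean_feats_new, clean_feats_orig):
    """CCS: mean cosine similarity between each clean sample's features in the
    backdoor-injected model and in the original model (paired by position)."""
    sims = [cosine(a, b) for a, b in zip(clean_feats_new, clean_feats_orig)]
    return sum(sims) / len(sims)


# Toy features (made up for illustration, not experimental data).
target_feat = [0.0, 3.0]
backdoored_feats = [[0.0, 1.0], [0.0, 2.0]]      # aligned with the target direction
clean_feats_orig = [[1.0, 0.0], [1.0, 0.0]]
clean_feats_new = [[1.0, 0.0], [1.0, 1.0]]       # second sample has drifted

print(backdoor_to_target_similarity(backdoored_feats, target_feat))
print(clean_to_clean_similarity(clean_feats_new, clean_feats_orig))
```

In this reading, a high BTS indicates a strongly implanted backdoor, while a CCS close to 1 indicates that clean-sample features have changed little relative to the original model.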

The experimental results are shown in Figure 4. As increases, the BTS values show limited improvement, indicating that the backdoor injection does not become significantly more effective. However, the CCS values exhibit a clear decline, suggesting a noticeable degradation in the performance of clean samples. Therefore, should not be set too high. In practice, setting is sufficient to achieve effective backdoor injection while maintaining acceptable performance on clean data.

Figure 4.

Line plot illustrating the impact of varying the balancing parameter on the Backdoor-to-Target Similarity (BTS) and Clean-to-Clean Similarity (CCS).

Parameters and play a critical role in the unlearning stage. Their values directly affect the effectiveness of backdoor removal. To investigate their influence, we design a set of controlled experiments.

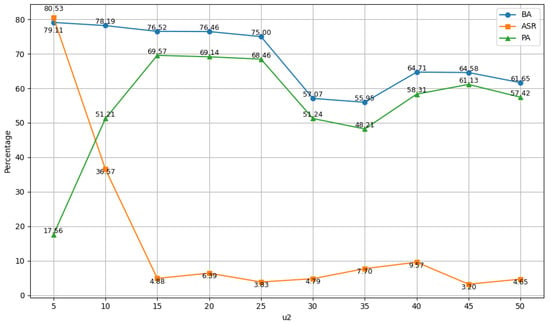

We first fix and and examine how varying impacts the defense performance. The experimental results are shown in Figure 5. As increases, the attack success rate (ASR) decreases significantly, indicating effective backdoor mitigation. In contrast, the benign accuracy (BA) remains relatively stable for small values of and only starts to decline noticeably when becomes large. These findings suggest that the model’s performance is relatively insensitive to changes in , while the ASR is highly sensitive. This implies that by gradually increasing , it is possible to remove backdoors effectively without severely compromising the model’s clean performance.
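For reference, the two metrics discussed here can be computed from classifier predictions as in the sketch below. It assumes a common convention in which ASR is measured only on triggered samples whose true class differs from the target class; the exact evaluation protocol of the experiments may differ, and the function names and toy predictions are hypothetical.

```python
def benign_accuracy(clean_predictions, labels):
    """BA (%): share of clean inputs classified as their true label."""
    correct = sum(p == y for p, y in zip(clean_predictions, labels))
    return 100.0 * correct / len(labels)


def attack_success_rate(triggered_predictions, labels, target_class):
    """ASR (%): share of triggered inputs classified as the target class.

    Assumption: samples that already belong to the target class are excluded,
    since predicting the target class for them is not evidence of an attack.
    """
    hits = [
        p == target_class
        for p, y in zip(triggered_predictions, labels)
        if y != target_class
    ]
    if not hits:
        raise ValueError("no samples outside the target class")
    return 100.0 * sum(hits) / len(hits)


# Toy predictions (made up for illustration, not experimental data).
labels = [0, 1, 2, 3, 0]
clean_predictions = [0, 1, 2, 1, 0]
triggered_predictions = [0, 0, 2, 0, 0]

print(benign_accuracy(clean_predictions, labels))
print(attack_success_rate(triggered_predictions, labels, target_class=0))
```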

Figure 5.

Impact of on Defense Performance in Terms of BA, ASR, and PA.

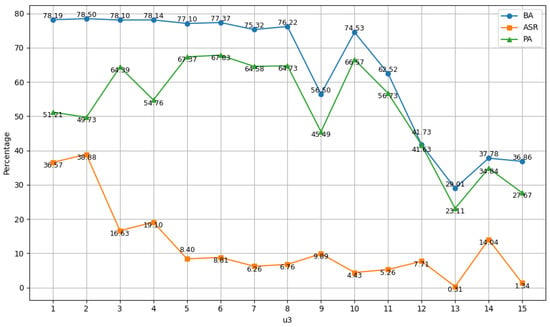

serves as an enhancement term for the unlearning trigger during the unlearning stage. With and fixed, we investigate how varying affects the defense performance. The experimental results are shown in Figure 6. As increases, the attack success rate (ASR) continues to decrease, demonstrating enhanced backdoor removal. Meanwhile, the benign accuracy (BA) initially remains stable, but starts to decline when becomes too large. These results indicate that a properly chosen can further suppress backdoors with minimal impact on clean performance. In practice, selecting a moderate value for offers a good trade-off between robustness and accuracy.
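One simple way to turn such a sweep into a concrete choice is to pick the setting with the lowest ASR among those whose BA drop stays within a tolerance. The sketch below illustrates this rule; it is an assumption made for illustration rather than the selection procedure used in the paper, and the helper name, the tolerance, and all numbers are hypothetical.

```python
def pick_weight(sweep, baseline_ba, max_ba_drop=1.0):
    """Choose the sweep entry with the lowest ASR whose BA drop is within tolerance.

    `sweep` is a list of dicts with keys "weight", "ba", and "asr" (BA and ASR in %).
    Ties in ASR are broken in favour of the smaller weight.
    """
    admissible = [r for r in sweep if baseline_ba - r["ba"] <= max_ba_drop]
    if not admissible:
        raise ValueError("no setting keeps BA within the allowed drop")
    return min(admissible, key=lambda r: (r["asr"], r["weight"]))


# Toy sweep (made-up numbers for illustration, not experimental results).
toy_sweep = [
    {"weight": 0.5, "ba": 85.0, "asr": 20.0},
    {"weight": 1.0, "ba": 84.6, "asr": 5.0},
    {"weight": 2.0, "ba": 84.2, "asr": 2.0},
    {"weight": 4.0, "ba": 80.0, "asr": 1.0},   # lowest ASR, but BA drops too far
]

best = pick_weight(toy_sweep, baseline_ba=85.0, max_ba_drop=1.0)
print(best["weight"])
```

The tolerance makes the trade-off explicit: a looser `max_ba_drop` admits larger weights with lower ASR, while a stricter one favours accuracy.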

Figure 6.

Impact of on Defense Performance in Terms of BA, ASR, and PA.

To investigate the role of each component in the unlearning process, concise names were assigned to the key components. The entire framework of unlearning consists of three main components. Firstly, the Feature Consistency part, corresponding to Equation (5) (denoted as ), ensures that the features of clean samples are maintained throughout the process. Next, the Unlearning Effectiveness part is further divided into two sub-components: the term in Equation (4) (denoted as ) that ensures the features of backdoor samples remain similar to those of clean samples, and the term (denoted as ) that drives the backdoor sample features away from the target class features. Table 8 presents the experimental results obtained by removing one of the components. It illustrates the impact of excluding each of these components (, , or ) on the overall performance of BMAIU.
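To make the role of the three components concrete, the sketch below evaluates one plausible form of such an objective on precomputed feature vectors. It is not a reproduction of Equations (4) and (5): the use of cosine similarity in every term, the additive combination, and the weights `w_consistency`, `w_pull`, and `w_push` are all assumptions made for illustration. A practical implementation would compute these terms on batches inside an automatic differentiation framework.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def unlearning_objective(clean_new, clean_ref, triggered_new, target_feat,
                         w_consistency=1.0, w_pull=1.0, w_push=1.0):
    """Scalar value of an assumed three-term unlearning objective (lower is better).

    clean_new      features of a clean sample in the model being fine-tuned
    clean_ref      features of the same clean sample in the reference model
    triggered_new  features of the triggered sample in the model being fine-tuned
    target_feat    reference feature of the target class
    """
    consistency = 1.0 - cosine(clean_new, clean_ref)   # keep clean features unchanged
    pull = 1.0 - cosine(triggered_new, clean_new)      # triggered features should match clean ones
    push = cosine(triggered_new, target_feat)          # triggered features should leave the target
    return w_consistency * consistency + w_pull * pull + w_push * push


# Toy 2-D features (made up for illustration, not experimental data).
clean = [1.0, 0.0]
target = [0.0, 1.0]

before = unlearning_objective(clean, clean, triggered_new=[0.0, 1.0], target_feat=target)
after = unlearning_objective(clean, clean, triggered_new=[1.0, 0.0], target_feat=target)
print(before, after)
```

In this sketch, removing a component as in the ablation corresponds to setting its weight to zero.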

Table 8.

Effectiveness of our BMAIU method after removal of each component in the unlearning process. All the values are expressed in percentage (%).

The experimental results indicate that plays a crucial role in maintaining the accuracy of the model. When is absent, the model shows a significant decrease in BA. Both and contributed significantly to reducing the effectiveness of backdoor attacks. is the fundamental component of the unlearning process, while is a key enhancement term. Without , there is a sharp decline in the performance of the model in mitigating backdoor attacks.

5. Conclusions

In this paper, a simple and efficient method named BMAIU is proposed to mitigate backdoors in SSL models. The method is based on the observation that, in a backdoor attack with multiple triggers, unlearning one of the triggers also affects the others, rendering all triggers ineffective. Accordingly, BMAIU actively implants a backdoor using a custom trigger and then unlearns it. The method is validated under various attack strategies, SSL methods, and backbone architectures, and it is shown to reduce the success rate of backdoor attacks with only a small amount of clean data and without a significant compromise on model accuracy.

6. Limitations and Future Work

While our proposed BMAIU method shows strong backdoor defense performance, the theoretical basis behind the observed phenomenon has not yet been fully established, and future work could explore deeper theoretical insights. Our experiments are based on standard datasets like CIFAR-10 and ImageNet-100, but the exploration of real-world scenarios remains limited. Future research could focus on validating our method in more practical and complex environments. Given the current limitations in existing research, where adaptive backdoor attacks in self-supervised learning remain largely unexplored, our study does not evaluate the performance of BMAIU against such adversaries. Therefore, a promising direction for future work is to examine whether the proposed method can be adapted or enhanced to remain effective under adaptive attack scenarios.

Author Contributions

Formal analysis, X.C.; methodology, F.Z.; software, J.L.; writing—review and editing, W.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the Self-Initiated Research Project “Research on Endogenous Security Basic Theory and Tool Chain” (Grant No. ZL042401) funded by the Jiangsu Provincial Department of Science and Technology.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

We use a publicly available open source dataset.

Data Availability Statement

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

Conflicts of Interest

The authors declare that they have no competing financial interests.

References

1. Krishnan, R.; Rajpurkar, P.; Topol, E.J. Self-supervised learning in medicine and healthcare. Nat. Biomed. Eng. 2022, 6, 1346–1352.

2. Wang, Y.; Albrecht, C.M.; Braham, N.A.A.; Mou, L.; Zhu, X.X. Self-supervised learning in remote sensing: A review. IEEE Geosci. Remote Sens. Mag. 2022, 10, 213–247.

3. Shurrab, S.; Duwairi, R. Self-supervised learning methods and applications in medical imaging analysis: A survey. PeerJ Comput. Sci. 2022, 8, e1045.

4. Goyal, P.; Mahajan, D.; Gupta, A.; Misra, I. Scaling and benchmarking self-supervised visual representation learning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6391–6400.

5. Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G. A simple framework for contrastive learning of visual representations. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 13–18 July 2020; pp. 1597–1607.

6. Grill, J.B.; Strub, F.; Altché, F.; Tallec, C.; Richemond, P.; Buchatskaya, E.; Doersch, C.; Avila Pires, B.; Guo, Z.; Gheshlaghi Azar, M.; et al. Bootstrap your own latent-a new approach to self-supervised learning. Adv. Neural Inf. Process. Syst. 2020, 33, 21271–21284.

7. Chen, X.; Fan, H.; Girshick, R.; He, K. Improved baselines with momentum contrastive learning. arXiv 2020, arXiv:2003.04297.

8. Saha, A.; Tejankar, A.; Koohpayegani, S.A.; Pirsiavash, H. Backdoor attacks on self-supervised learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 13337–13346.

9. Liu, H.; Jia, J.; Gong, N.Z. PoisonedEncoder: Poisoning the Unlabeled Pre-training Data in Contrastive Learning. In Proceedings of the 31st USENIX Security Symposium (USENIX Security 22), Boston, MA, USA, 10–12 August 2022; pp. 3629–3645.

10. Li, C.; Pang, R.; Xi, Z.; Du, T.; Ji, S.; Yao, Y.; Wang, T. An embarrassingly simple backdoor attack on self-supervised learning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 4367–4378.

11. Xue, J.; Lou, Q. Estas: Effective and stable trojan attacks in self-supervised encoders with one target unlabelled sample. arXiv 2022, arXiv:2211.10908.

12. Li, Y.; Lyu, X.; Koren, N.; Lyu, L.; Li, B.; Ma, X. Anti-backdoor learning: Training clean models on poisoned data. Adv. Neural Inf. Process. Syst. 2021, 34, 14900–14912.

13. Huang, K.; Li, Y.; Wu, B.; Qin, Z.; Ren, K. Backdoor defense via decoupling the training process. arXiv 2022, arXiv:2202.03423.

14. Chen, W.; Wu, B.; Wang, H. Effective backdoor defense by exploiting sensitivity of poisoned samples. Adv. Neural Inf. Process. Syst. 2022, 35, 9727–9737.

15. Wang, B.; Yao, Y.; Shan, S.; Li, H.; Viswanath, B.; Zheng, H.; Zhao, B.Y. Neural cleanse: Identifying and mitigating backdoor attacks in neural networks. In Proceedings of the 2019 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 19–23 May 2019; pp. 707–723.

16. Zheng, R.; Tang, R.; Li, J.; Liu, L. Data-free backdoor removal based on channel lipschitzness. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 175–191.

17. Zeng, Y.; Chen, S.; Park, W.; Mao, Z.M.; Jin, M.; Jia, R. Adversarial unlearning of backdoors via implicit hypergradient. arXiv 2021, arXiv:2110.03735.

18. Jia, J.; Liu, Y.; Gong, N.Z. Badencoder: Backdoor attacks to pre-trained encoders in self-supervised learning. In Proceedings of the 2022 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 26 May 2022; pp. 2043–2059.

19. Tejankar, A.; Sanjabi, M.; Wang, Q.; Wang, S.; Firooz, H.; Pirsiavash, H.; Tan, L. Defending against patch-based backdoor attacks on self-supervised learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 12239–12249.

20. Pan, M.; Zeng, Y.; Lyu, L.; Lin, X.; Jia, R. ASSET: Robust backdoor data detection across a multiplicity of deep learning paradigms. In Proceedings of the 32nd USENIX Security Symposium (USENIX Security 23), Anaheim, CA, USA, 9–11 August 2023; pp. 2725–2742.

21. Zheng, M.; Xue, J.; Wang, Z.; Chen, X.; Lou, Q.; Jiang, L.; Wang, X. Ssl-cleanse: Trojan detection and mitigation in self-supervised learning. arXiv 2023, arXiv:2303.09079.

22. Feng, S.; Tao, G.; Cheng, S.; Shen, G.; Xu, X.; Liu, Y.; Zhang, K.; Ma, S.; Zhang, X. Detecting backdoors in pre-trained encoders. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 16352–16362.

23. Cinà, A.E.; Grosse, K.; Demontis, A.; Vascon, S.; Zellinger, W.; Moser, B.A.; Oprea, A.; Biggio, B.; Pelillo, M.; Roli, F. Wild patterns reloaded: A survey of machine learning security against training data poisoning. ACM Comput. Surv. 2023, 55, 1–39.

24. Qiao, X.; Yang, Y.; Li, H. Defending neural backdoors via generative distribution modeling. Adv. Neural Inf. Process. Syst. 2019, 32, 1–10.

25. Ho-Phuoc, T. CIFAR10 to compare visual recognition performance between deep neural networks and humans. arXiv 2018, arXiv:1811.07270.

26. Saha, A.; Subramanya, A.; Pirsiavash, H. Hidden trigger backdoor attacks. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 11957–11965.

27. Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Li, F.-F. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

28. Chen, Z.; Jiang, Y.; Zhang, X.; Zheng, R.; Qiu, R.; Sun, Y.; Zhao, C.; Shang, H. ResNet18DNN: Prediction approach of drug-induced liver injury by deep neural network with ResNet18. Briefings Bioinform. 2022, 23, bbab503.

29. Tan, M.; Le, Q. Efficientnetv2: Smaller models and faster training. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 18–24 July 2021; pp. 10096–10106.

30. Van der Maaten, L.; Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

31. Iglovikov, V.; Shvets, A. Ternausnet: U-net with vgg11 encoder pre-trained on imagenet for image segmentation. arXiv 2018, arXiv:1801.05746.

32. Theckedath, D.; Sedamkar, R. Detecting affect states using VGG16, ResNet50 and SE-ResNet50 networks. SN Comput. Sci. 2020, 1, 79.

33. Koonce, B.; Koonce, B. ResNet 34. Convolutional Neural Networks with Swift for Tensorflow: Image Recognition and Dataset Categorization; Apress: New York, NY, USA, 2021; pp. 51–61.

34. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).