A Supervised System Integrating Image Processing and Machine Learning for the Staging of Chronic Hepatic Diseases

Abstract

1. Introduction

2. Related Work

3. Materials and Methods

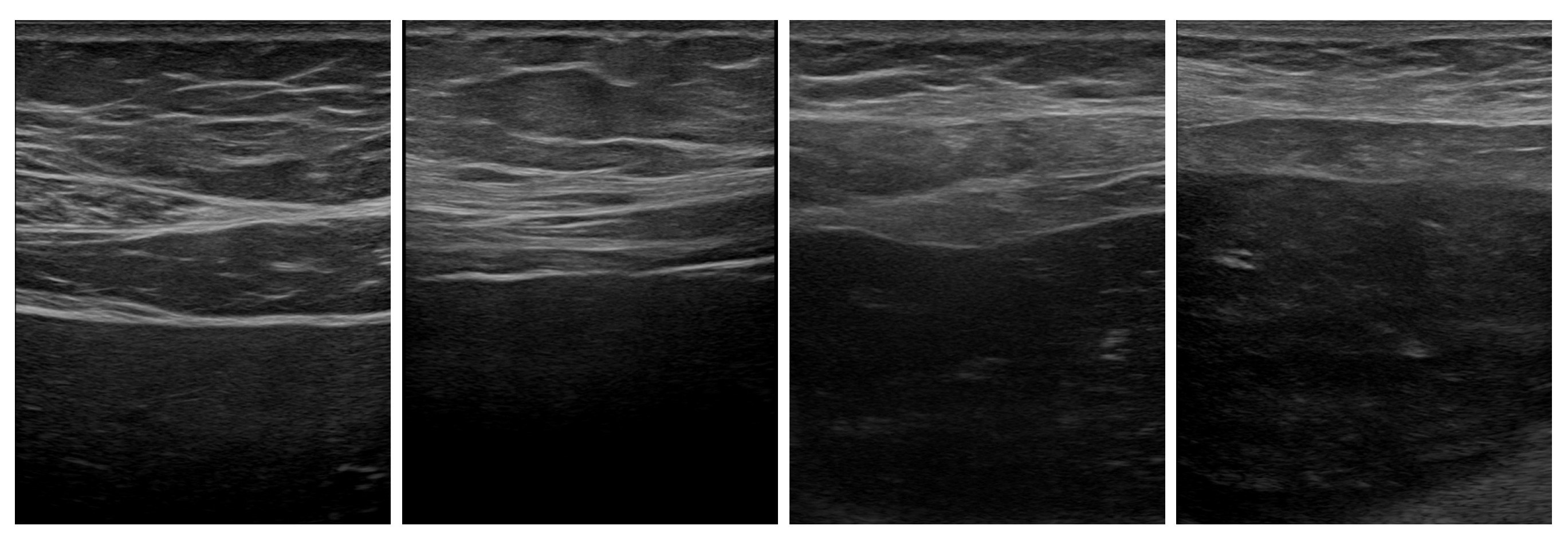

3.1. Available Data

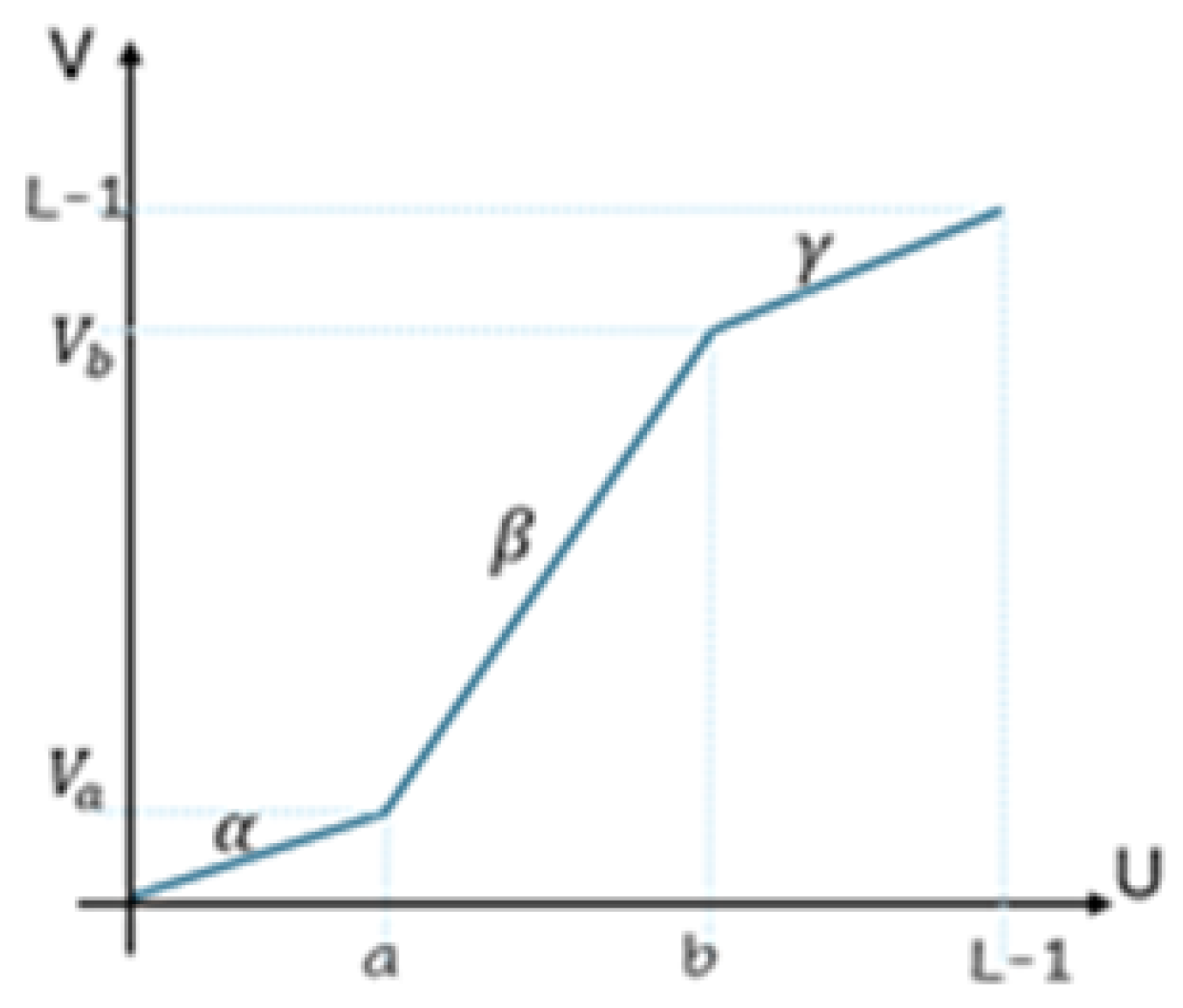

3.2. Image Processing

3.3. Fibrosis Staging

3.4. Quantitative Evaluation

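- Mean absolute error of the predicted classes: $\mathrm{MAE} = \frac{1}{N}\sum_{i=1}^{N} \lvert y_i - \hat{y}_i \rvert$, where $y_i$ is the correct class number of the $i$-th element, $\hat{y}_i$ is its predicted class number, and $N$ is the size of the test set, as defined in the Image Processing Subsection of the Materials and Methods Section.

- Overall accuracy: $\mathrm{OA} = \frac{1}{N}\sum_{p} C(p,p)$ is the ratio between the trace of the confusion matrix $C$ and the total number of tested cases (the proportion of correct predictions).

- Precision for each class $p$ is $\mathrm{Precision}_p = \frac{TP_p}{TP_p + FP_p}$, where $TP_p$ is the true-positive value from the confusion matrix (i.e., $TP_p = C(p,p)$) and $FP_p$ is the false-positive occurrence, that is, the number of cases predicted as class $p$ whose correct class is different.

- Recall measures how many of the cases that truly belong to a class $p$ are correctly predicted, relative to all cases in that class: $\mathrm{Recall}_p = \frac{TP_p}{TP_p + FN_p}$, where $FN_p$ is the false-negative occurrence, that is, the number of cases of class $p$ predicted as another class.

- F1-score combines precision and recall as their harmonic mean: $F1_p = \frac{2 \cdot \mathrm{Precision}_p \cdot \mathrm{Recall}_p}{\mathrm{Precision}_p + \mathrm{Recall}_p}$.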
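The metrics listed above can be computed directly from the true and predicted class numbers. The following is a minimal sketch in plain Python, not the authors' implementation. It assumes a confusion matrix stored with rows as correct classes and columns as predicted classes (the source does not state the orientation), and it assumes that the macro-averaged values reported in the tables are unweighted means of the per-class values. All function names are hypothetical.

```python
from typing import List, Sequence, Tuple


def confusion_matrix(y_true: Sequence[int], y_pred: Sequence[int],
                     n_classes: int) -> List[List[int]]:
    """Count matrix C with C[t][p] = cases of correct class t predicted as p.

    Assumption: rows are correct classes, columns are predicted classes.
    """
    C = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        C[t][p] += 1
    return C


def mean_absolute_error(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Average absolute distance between correct and predicted class numbers."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def overall_accuracy(C: List[List[int]]) -> float:
    """Trace of the confusion matrix divided by the total number of cases."""
    trace = sum(C[k][k] for k in range(len(C)))
    total = sum(sum(row) for row in C)
    return trace / total


def per_class_metrics(C: List[List[int]], k: int) -> Tuple[float, float, float]:
    """Precision, recall, and F1-score for class k."""
    tp = C[k][k]
    fp = sum(C[r][k] for r in range(len(C))) - tp  # predicted k, correct class differs
    fn = sum(C[k]) - tp                            # correct class k, predicted otherwise
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


def macro_average(C: List[List[int]]) -> Tuple[float, float, float]:
    """Unweighted mean of per-class precision, recall, and F1-score."""
    n = len(C)
    per_class = [per_class_metrics(C, k) for k in range(n)]
    return tuple(sum(m[i] for m in per_class) / n for i in range(3))


if __name__ == "__main__":
    # Toy labels for illustration only; these are not data from the study.
    y_true = [0, 0, 1, 1, 2, 2]
    y_pred = [0, 1, 1, 1, 2, 0]
    C = confusion_matrix(y_true, y_pred, n_classes=3)
    print("MAE:", mean_absolute_error(y_true, y_pred))
    print("Overall accuracy:", overall_accuracy(C))
    print("Macro precision, recall, F1:", macro_average(C))
```

Because the class numbers are ordinal (a higher number is a more advanced stage), the MAE penalizes a prediction two stages away twice as much as one that is a single stage away, whereas overall accuracy treats every misclassification equally.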

4. Results

4.1. Without Focus-of-Attention Mechanism

4.2. With Focus-of-Attention Mechanism

4.3. Model Evaluation

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


Classification Results

| Class Type | Metrics | Training | Validation | Test |
|---|---|---|---|---|
| 2-Class | Accuracy | 0.8950 | 0.9123 | 0.8602 |
| 2-Class | MAE | 0.1203 | 0.1154 | 0.1956 |
| 3-Class | Accuracy | 0.8192 | 0.8333 | 0.5789 |
| 3-Class | MAE | 0.1734 | 0.1644 | 1.1933 |

Classification Results

| Class Type | Metrics | Training | Validation | Test |
|---|---|---|---|---|
| 2-Class | Accuracy | 0.9650 | 0.9523 | 0.9402 |
| 2-Class | MAE | 0.1003 | 0.1024 | 0.1736 |
| 3-Class | Accuracy | 0.9214 | 0.8873 | 0.7010 |
| 3-Class | MAE | 0.1020 | 0.1181 | 0.2233 |

Binary Classification (No Disease vs. Disease)

| Overall Accuracy | Precision (Macro) | Recall (Macro) | Model |
|---|---|---|---|
| 0.80 | 0.75 | 0.78 | ResNet |
| 0.85 | 0.82 | 0.84 | VGG16 |
| 0.99 | 0.95 | 0.92 | DenseNet |
| 0.94 | 0.91 | 0.97 | Proposed Model |

Low/Advance/Cirrhotic Stage

| Overall Accuracy | Precision (Macro) | Recall (Macro) | Model |
|---|---|---|---|
| 0.5894 | 0.6243 | 0.6408 | ResNet |
| 0.7263 | 0.7238 | 0.6663 | VGG16 |
| 0.6526 | 0.6460 | 0.6818 | DenseNet |
| 0.701 | 0.712 | 0.91 | Proposed Model |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Iaconi, G.; Wehbe, A.; Borro, P.; Macciò, M.; Dellepiane, S. A Supervised System Integrating Image Processing and Machine Learning for the Staging of Chronic Hepatic Diseases. Electronics 2025, 14, 1534. https://doi.org/10.3390/electronics14081534