Abstract

Multimodal knowledge graph link prediction models integrate entity features from multiple modalities, such as text and images, and use these fused features to infer potential links between entities in the knowledge graph. This process depends heavily on the fitting and generalization capabilities of deep learning models, which allow them to capture complex semantic and relational patterns accurately. However, this same reliance makes the decision-making mechanisms and prediction bases of these models a black box that is difficult to understand intuitively. This black-box nature not only restricts the adoption of multimodal knowledge graph link prediction technology in practical applications but also hinders our understanding of the model's internal working mechanism. This paper therefore investigates the explainability of multimodal knowledge graph link prediction models and proposes MMExplainer, a post hoc, model-independent, multi-granularity explanation method for multimodal link prediction tasks. We learn the importance of each modality through modal separation, use textual semantics to guide a heuristic search that filters candidate explanation triples, and use textual masks to obtain the explanation phrases that play an important role in prediction. Experimental results show that MMExplainer provides coarse-grained explanations at the modality level and fine-grained explanations in the structural and textual modalities, and that its explanations are more relevant to the model's decisions than those of the baseline methods.

1. Introduction

Knowledge graphs (KGs) are large-scale semantic networks whose nodes are entities or concepts and whose edges are the semantic relationships between them. Building on traditional knowledge graphs, multimodal knowledge graphs (MKGs) incorporate images, text, and other modal data, combining textual information with information from other modalities to represent real-world entities and the relations between them more comprehensively. Knowledge graphs have been widely used in many intelligent systems, including natural language understanding, information retrieval, recommendation, and question answering [1,2]. As data modalities become increasingly diverse, traditional unimodal knowledge graphs can no longer meet user needs, and multimodal knowledge graph embeddings capture richer semantic information than unimodal ones. In downstream applications, a complete and accurate multimodal knowledge graph supplies this richer semantic information and thus improves the performance and effectiveness of these applications [3,4]. Multimodal knowledge graph link prediction is an important means of mining latent knowledge and completing multimodal knowledge graphs.

Translation models, tensor decomposition, convolutional neural networks, graph neural networks, and their extensions are widely used for multimodal link prediction [5,6]. However, these embedding-based methods make predictions by learning statistical regularities in the training data and generalizing to similar samples. They therefore perform only shallow reasoning, cannot provide the full range of logical deduction, and offer poor explainability. In domains where system decisions have significant consequences, such as finance and healthcare [7], the lack of explainability in neural networks makes it hard to justify prediction results and to trace them back to their causes. Investigating how a model integrates and uses information from different modalities makes it possible to interpret its results from several modal perspectives and thus provides a more multidimensional basis for explaining its final output. Building trust between users and inference models is critical for improving both the credibility of the models and users' confidence in them [8]. Therefore, explanations of multimodal knowledge graph link predictions should draw on both the information in the knowledge graph and the properties of the multimodal data to provide rational post hoc explanations of the model's prediction decisions.

Multimodal knowledge graph link prediction uses the known entities and relations in the graph, together with multimodal features such as textual descriptions and images, to predict missing triples. When the different modalities jointly carry more of the information needed for link prediction, multimodal features not only enrich the semantic information of entities and relations but also significantly improve prediction accuracy. Related studies have shown that multimodal models clearly outperform models relying on a single modality on link prediction evaluation metrics [9,10], which demonstrates that effectively integrating multimodal features yields more accurate predictions. Current multimodal knowledge graph link prediction models fall into two main categories: transformer-based models [11,12,13,14] and embedding-based models [15,16,17,18]. Transformer-based models process and fuse multimodal data with the transformer architecture: they extract features with modality-specific encoders and compute symmetric hybrid key-values for these features with the transformer's self-attention mechanism, effectively capturing the interactions and dependencies between modalities and improving the accuracy and robustness of link prediction. Embedding-based link prediction models, by contrast, offer structural simplicity, intuitive feature representations, and computational efficiency, which make them more suitable for post hoc explanation.
Transformer models usually contain a large number of parameters, and their complex structure makes their decision-making processes very difficult to understand. Their training and inference also require significant computing resources, which limits the practicality of explaining them in real applications. We therefore choose an embedding-based link prediction model as the target of explanation.

Driven by growing computational power and massive datasets, multimodal link prediction models have developed rapidly in scenarios that involve multimodal data fusion and complex reasoning. This progress has also brought challenges, such as high computational complexity, potential redundancy in multimodal data, and the difficulty of acquiring high-quality multimodal data. In addition, the use of multimodal information makes the models' black-box behavior even harder to explain. Research on explainable link prediction has therefore emerged and falls into two main directions: ante hoc and post hoc explanation [19]. Ante hoc explanation designs link prediction models that are inherently explainable, so the models are no longer black boxes. Post hoc explanation explains a black-box link prediction model after the fact: although the model remains a black box, its predictions can be understood using post hoc explanation techniques. Ante hoc models are usually self-explaining models with transparent, easy-to-understand structures, such as decision trees and logic rules [20,21,22,23], but they usually fail to achieve excellent prediction performance or to scale to complex knowledge graphs. Post hoc explanation methods are highly flexible and can be applied to a variety of complex models, so in this paper we perform post hoc explanation of embedding-based multimodal knowledge graph link prediction models.

Most existing work on explaining knowledge graph link predictions focuses on a single modality and mainly uses the structural information within the knowledge graph, such as node neighborhoods and path characteristics, to explain the model [24]. Although unimodal explanation methods have achieved significant results in specific scenarios, they are limited when dealing with multimodal data. Multimodal data contain more information and more complex association patterns, and the complementarity between modalities offers new perspectives for explaining link predictions, so multimodal explanation is becoming increasingly important in knowledge graph link prediction. This paper therefore proposes a multi-granularity explanation method for multimodal knowledge graph link prediction, which explains prediction results after the fact and analyzes them in terms of both global modality-level features and local features. The main contributions of this paper are as follows:

(1) We propose a post hoc, model-independent explanation method for multimodal knowledge graph link prediction models. It learns the importance of each modality through modal separation and thereby provides coarse-grained explanations at the modality level.

(2) We propose a text-semantics-enhanced heuristic search algorithm that uses the textual semantic information of entities to guide the search and reduce the number of candidate explanation triples, and we refine a multimodal evaluator to quantitatively assess the predictive relevance of the explored explanations. This improves explanation efficiency and provides fine-grained explanations in the structural modality in the form of important triples.

(3) We provide fine-grained lexical explanations in the textual modality by performing mask learning on entity text descriptions to find the words that have the greatest impact on the target prediction. Experimental results on four general-purpose multimodal knowledge graph datasets demonstrate that the approach obtains meaningful multi-granularity explanations.

To describe our approach, we first present related work in Section 2, formalize the problem in Section 3, and describe our solution in Section 4. Section 5 presents extensive experiments on four general-purpose link prediction knowledge graphs. Finally, Section 6 concludes the paper and discusses future work.

2. Related Work

Embedding-based methods are an important technical approach to multimodal link prediction. They usually employ various embedding techniques, such as text and image embeddings, to map data from different modalities into a unified vector space, and then use these embedding vectors to compute the similarity between entities and infer possible links between them. According to the stage at which fusion happens, these methods are divided into early feature fusion and late decision fusion.

Early feature fusion models map entities and relations, along with multimodal information, into a shared low-dimensional continuous vector space. In this space, a relation is treated as a translation operation applied after the multimodal information has been fused, and the plausibility of a triple is measured by the distance or similarity between the head entity, the tail entity, and the fused multimodal feature vector. By learning a joint representation of the different modal features, the model captures rich semantic information about entities and relations and improves link prediction accuracy. Representative models include IKRL [15], which uses a TransE-based multimodal scoring function for link prediction, and RSME [16], which introduces a gating mechanism for selecting modal information. Late decision fusion models first extract features and perform link prediction independently for each modality and then integrate these independent predictions at the decision layer to form the final result. Representative models include MoSE [17], which trains three link prediction models on structural, textual, and visual data and combines them with an ensemble strategy, and IMF [18], which proposes an interactive model to make robust predictions.

Although the explainability of multimodal link prediction results has not been studied extensively, research on post hoc explainability for unimodal link prediction and graph neural networks remains an important reference, providing theoretical foundations and methodological guidance for understanding and improving the explainability of multimodal link prediction. Post hoc explanation methods commonly attribute changes in model outputs to specific changes in the inputs; they are mainly gradient-based, surrogate-based, or perturbation-based.

Gradient-based approaches look for explanations by computing the gradient of a neural network's output with respect to its input features. A representative model, CRIAGE [25], proposes adversarial modifications to the knowledge graph and uses a gradient-based algorithm to identify the most influential modifications. Surrogate-based approaches replace the original model with an interpretable model and derive explanations from it. A representative model, GraphLIME [26], is a local interpretable model explanation framework that identifies the most representative features as explanations in a nonlinear manner, and RelEx [27] uses interpretable models as local surrogates for GNNs and then obtains explanations from the surrogate models. Perturbation-based approaches apply small modifications to the inputs and derive explanations by measuring how these changes affect the model's outputs. KE-X [28] extracts the most valuable subgraph explanations for link predictions by running an improved message passing mechanism on masked knowledge graphs and maximizing the knowledge information gain. Kelpie [29] approximates the effect on prediction scores of removing existing facts from the knowledge graph and searches for the fact triples that are most important for explaining the target prediction. PGExplainer [30] applies a masking approach to explain link prediction. SubgraphX [31] uses Monte Carlo tree search to explore different subgraphs and selects the most important ones as explanations for the prediction. OrphicX [32] identifies causal factors in the latent space of the graph by maximizing an information flow measure, thus generating causal explanations for the results. All of the above models aim to find the most influential input changes, in the form of input features or sets of features considered highly relevant to the model predictions being explained.

This paper focuses on the post hoc explainability of multimodal link prediction, which requires richer and more complex explanations than the traditional post hoc explanation methods for unimodal link prediction [15,16,17,18,25,26,27,28,29,30,31,32]. Specifically, we no longer rely only on the structural information within the knowledge graph or on the attribute information of a single modality to explain predictions; instead, we examine how multimodal data are fused and interact, aiming to reveal the importance and contribution of each modality in the link prediction process and to provide multi-granularity explanations from the global to the local level.

3. Preliminaries

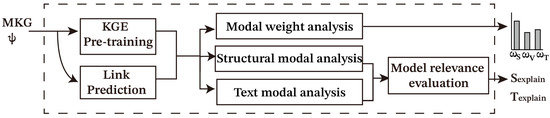

Multimodal knowledge graph link prediction plays a crucial role in mining missing triples from existing knowledge graphs, and explaining multimodal link predictions is equally important for improving the credibility and transparency of link prediction models. This paper focuses on how to analyze embedding-based multimodal link prediction models and open up their black box, so as to obtain evidence that explains their predictions and to identify the fact triples that explain them. The task is described in Figure 1. Given an embedding-based multimodal link prediction model and a multimodal knowledge graph, we analyze the importance of each modality and extract modality-level explanations. We then analyze the structural modality to extract the triples that play an important role in the prediction, and the textual modality to extract the key phrases in the prediction.

Figure 1. Task description.

The following definitions are provided for the symbols and concepts used in this text.

Definition 1.

The symbols related to the multimodal knowledge graph. A traditional knowledge graph is usually defined as a directed graph $\mathcal{G} = (E, R, \zeta)$, where $E$ and $R$ denote the sets of entities and relations, respectively, and $\zeta \subseteq E \times R \times E$ is the set of triples, each denoted as $(h, r, t)$. A multimodal knowledge graph extends the traditional knowledge graph by attaching data from different modalities to each entity and is usually defined as $MKG = (E, R, \zeta, V, T)$, where $E$, $R$, and $\zeta$ are defined as in the traditional knowledge graph, $V$ is the set of images corresponding to all entities, and $T$ is the set of texts corresponding to all entities.
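
As a minimal sketch, the tuple $MKG = (E, R, \zeta, V, T)$ from Definition 1 can be held in a small Python container. The class name, field names, the `neighborhood` helper, and the choice to store image paths and one description string per entity are illustrative assumptions, not the authors' data format.

```python
from dataclasses import dataclass, field

Triple = tuple[str, str, str]  # (head, relation, tail)


@dataclass
class MultimodalKG:
    """Hypothetical container for MKG = (E, R, zeta, V, T)."""
    entities: set[str] = field(default_factory=set)             # E
    relations: set[str] = field(default_factory=set)            # R
    triples: set[Triple] = field(default_factory=set)           # zeta
    images: dict[str, list[str]] = field(default_factory=dict)  # V: entity -> image paths
    texts: dict[str, str] = field(default_factory=dict)         # T: entity -> description

    def add_triple(self, h: str, r: str, t: str) -> None:
        """Insert (h, r, t) into zeta and register its entities and relation."""
        self.entities.update((h, t))
        self.relations.add(r)
        self.triples.add((h, r, t))

    def neighborhood(self, e: str) -> set[Triple]:
        """All triples in which entity e appears as head or tail."""
        return {tr for tr in self.triples if e in (tr[0], tr[2])}


mkg = MultimodalKG()
mkg.add_triple("paris", "capital_of", "france")
mkg.add_triple("lyon", "located_in", "france")
mkg.texts["paris"] = "Paris is the capital of France."
print(sorted(mkg.neighborhood("france")))
```

The `neighborhood` view is what the structural explanation step later perturbs: the triples around the head entity of a target prediction.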

Definition 2.

Multimodal knowledge graph link prediction. A multimodal link prediction model predicts missing links between entities. Given a query consisting of an entity-relation pair, $(h, r, ?)$ or $(?, r, t)$, multimodal link prediction aims to find the target entity $t$ or $h$ such that $(h, r, t)$ belongs to the multimodal knowledge graph MKG, and the likelihood that the link exists is obtained by computing the score of the completed triple $(h, r, t)$. The multimodal knowledge graph link prediction model to be explained is denoted as the target link prediction model ψ.
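
Answering a tail query $(h, r, ?)$ amounts to scoring every candidate entity with ψ and sorting. The sketch below takes the score function as a plain callable; the toy scores are made up for illustration, and `rank_of` (the 1-based rank of the true tail, from which Hits@1 and MRR are computed) is a hypothetical helper name.

```python
from typing import Callable, Iterable

ScoreFn = Callable[[str, str, str], float]  # psi(h, r, t): higher = more plausible


def rank_tails(psi: ScoreFn, h: str, r: str, entities: Iterable[str]) -> list[tuple[str, float]]:
    """Answer (h, r, ?) by scoring every candidate tail and sorting best-first."""
    scored = [(t, psi(h, r, t)) for t in entities]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored


def rank_of(psi: ScoreFn, h: str, r: str, t: str, entities: Iterable[str]) -> int:
    """1-based rank of the true tail t among all candidates."""
    ranked = [e for e, _ in rank_tails(psi, h, r, entities)]
    return ranked.index(t) + 1


toy_scores = {"france": 2.0, "italy": 0.5, "spain": -1.0}
psi = lambda h, r, t: toy_scores[t]  # stand-in for a trained target model
print(rank_tails(psi, "paris", "capital_of", toy_scores))
# [('france', 2.0), ('italy', 0.5), ('spain', -1.0)]
```

Head queries $(?, r, t)$ work the same way with the roles of head and tail swapped.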

Definition 3.

Target explanation triple and predictive relevance. The target explanation triple $(h, r, t)$ is selected from the triples predicted by the trained target link prediction model, and a modality is denoted as $m \in \{S, V, T\}$, where S stands for the structural modality, V for the image modality, and T for the textual modality. The words in an entity's text are denoted as $w_i$. Separate predictive relevance scores are defined for the structural and textual modalities. The explanations are collected into three sets: a set of coarse-grained modality-level explanations, a set of fine-grained explanations under the structural modality, and a set of fine-grained explanations under the textual modality.

4. Methodology

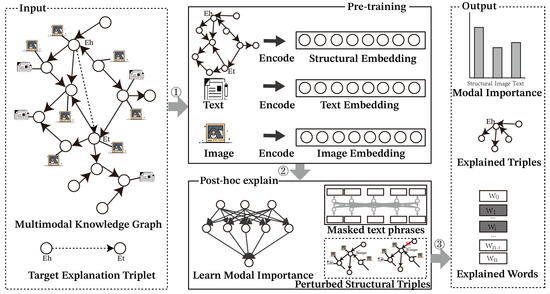

In this paper, we propose MMExplainer, a multi-granularity explanation method for multimodal link prediction, as illustrated in Figure 2. MMExplainer explains a target link prediction in three steps. First, it learns modal importance by analyzing, through modal separation learning, how relevant each modality is to the decision. Second, it perturbs structural triples and identifies the important triples in the structural modality through model post-training. Finally, it masks textual phrases and uses mask learning to find the words in the textual modality that play a key role in link prediction.

Figure 2. Model structure of MMExplainer. The red “×” indicates that the triple's connection is deleted.

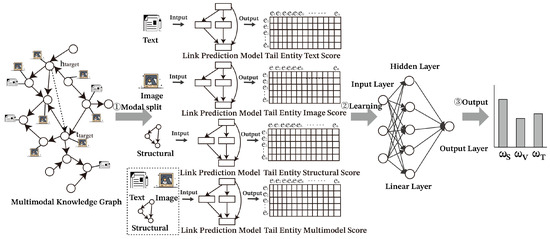

4.1. Learning Modal Importance Coarse-Grained Explanation

Multimodal knowledge graph link prediction models often adopt an encoder-decoder framework. In the encoding phase, entities and relations are represented as embeddings, with pre-trained encoders such as VGG extracting image features and BERT extracting features from entity text descriptions. Embedding-based approaches typically freeze these encoders during training and use the extracted features to initialize the modal embeddings of entities. To fully capture how much each modality contributes to the prediction results, we first apply a modality-independent pre-training strategy to the target multimodal link prediction model, as shown in Figure 3. In this strategy, the model is pre-trained separately on each modality's data so that it learns the distinctive features and internal regularities of that modality, which provides independent modal representations for the subsequent modal importance analysis and explanation tasks.

Figure 3. Learning modal importance coarse-grained explanation.

First, the different modal information in the multimodal dataset is separated so that the data of each modality can be processed and analyzed independently. For a multimodal knowledge graph containing text, images, and structured data, the text descriptions, image features, and structural triples of the graph are extracted separately. The structural triples, visual information, textual information, and fused multimodal data are each used as inputs to train the target multimodal link prediction model individually, so the embedding representation of each modality is independent. Embedding training produces a representation for each modality, and the score function of the target multimodal link prediction model decodes each independent modal representation into the corresponding score matrix.
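
Section 5.3 states that ComplEx is used as the decoder, so one way to picture the per-modality score matrices is the sketch below: each modality keeps its own complex-valued entity and relation tables, and the ComplEx score $\mathrm{Re}(\langle \mathbf{h}, \mathbf{r}, \bar{\mathbf{t}} \rangle)$ is evaluated against every candidate tail. The tables here are random placeholders standing in for separately trained embeddings, and the modality keys (`S`, `T`, `V`, plus `F` for the fused input) and helper names are illustrative assumptions.

```python
import random


def complex_score(h: list[complex], r: list[complex], t: list[complex]) -> float:
    """ComplEx score: real part of sum_i h_i * r_i * conj(t_i)."""
    return sum(hi * ri * ti.conjugate() for hi, ri, ti in zip(h, r, t)).real


def random_table(keys, dim, rng):
    """Placeholder embeddings; in practice each modality's table is trained separately."""
    return {k: [complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(dim)] for k in keys}


def modality_score_vectors(ent_tables, rel_tables, h, r, candidates):
    """For every modality m, score (h, r, e) for each candidate tail e using only
    that modality's embeddings. Returns {m: [score per candidate]}."""
    return {
        m: [complex_score(ent_tables[m][h], rel_tables[m][r], ent_tables[m][e]) for e in candidates]
        for m in ent_tables
    }


rng = random.Random(0)
entities = ["paris", "france", "italy", "spain"]
modalities = ["S", "T", "V", "F"]  # structure, text, vision, fused
ent_tables = {m: random_table(entities, 8, rng) for m in modalities}
rel_tables = {m: random_table(["capital_of"], 8, rng) for m in modalities}
scores = modality_score_vectors(ent_tables, rel_tables, "paris", "capital_of", entities)
print({m: [round(s, 2) for s in v] for m, v in scores.items()})
```

Because each modality has its own tables, the score vectors are independent, which is what lets the next step weigh modalities against each other.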

Second, a multilayer perceptron (MLP) with one hidden layer serves as a modal meta-learner that combines the tail entity scores of the target triple in each modality to approximate the tail entity predicted by the trained target model. For a target triple $(h, r, t)$, the tail entity scores in each modality are fed into the modal meta-learner, whose weighted scores are trained to fit the tail entity predicted by the target link prediction model, as shown in Equation (1). The learned weights represent the relative importance of each modality in the link prediction task.

To quantify the contribution of each modality to the prediction results, we learn the modal weights by minimizing a cross-entropy loss, given in Equation (2), computed on the tail entity scores predicted in the mixed (fused) mode. The weights of the structural, textual, and image modalities represent the relative importance of those modalities in the link prediction task. The larger a modality's weight, the more important its role in the target link prediction model: modalities with larger weights have a greater impact on the final prediction, and the information they provide is considered more critical to the prediction task. These weights form the coarse-grained modality-level explanation of the multimodal link prediction model, given in Equation (3).
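
The weight-learning step can be sketched in plain Python. As a deliberate simplification of the one-hidden-layer MLP described above, the hypothetical `learn_modality_weights` below combines the per-modality tail score vectors linearly with softmax-normalized weights and fits them by gradient descent on the cross-entropy against the tail predicted by the target model. The linear form is our assumption, chosen so that the learned weights read directly as modality importance; the step count and learning rate are arbitrary.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)                                  # subtract max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def learn_modality_weights(score_vectors: dict[str, list[float]], target_idx: int,
                           steps: int = 200, lr: float = 0.5) -> dict[str, float]:
    """Fit softmax-normalized modality weights w so that the combined logits
    z_j = sum_m w_m * s_m[j] put high probability on target_idx (cross-entropy)."""
    names = list(score_vectors)
    k = len(names)
    n = len(score_vectors[names[0]])
    a = [0.0] * k                                # unnormalized weight parameters
    for _ in range(steps):
        w = softmax(a)
        z = [sum(w[m] * score_vectors[names[m]][j] for m in range(k)) for j in range(n)]
        p = softmax(z)
        dz = [p[j] - (1.0 if j == target_idx else 0.0) for j in range(n)]  # dL/dz_j
        g = [sum(dz[j] * score_vectors[names[m]][j] for j in range(n)) for m in range(k)]  # dL/dw_m
        g_bar = sum(w[m] * g[m] for m in range(k))
        # Chain rule through the weight softmax: dL/da_m = w_m * (g_m - g_bar).
        a = [a[m] - lr * w[m] * (g[m] - g_bar) for m in range(k)]
    return dict(zip(names, softmax(a)))


# Toy per-modality tail scores over five candidate tails; index 0 is the tail
# predicted by the target model.
scores = {
    "S": [5.0, 0.0, 0.0, 0.0, 0.0],   # structure singles out the predicted tail
    "T": [0.0] * 5,                   # text is uninformative here
    "V": [0.0, 5.0, 0.0, 0.0, 0.0],   # vision favors a different tail
}
weights = learn_modality_weights(scores, target_idx=0)
print({m: round(v, 3) for m, v in weights.items()})
```

In this toy case the structural weight grows while the textual and visual weights shrink, which is the kind of modality-level reading the coarse-grained explanation provides.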

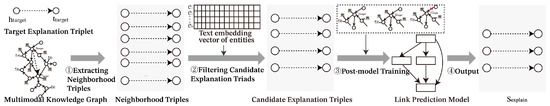

4.2. Perturbing Structural Triples to Obtain Fine-Grained Structural Modal Explanation

To provide fine-grained structural explanations for the target link prediction model, we use a structural triple perturbation strategy, as shown in Figure 4, which perturbs the structural inputs of the multimodal knowledge graph and measures the influence of each structural triple from the outputs of the link prediction model. First, a textual semantic heuristic search reduces the initial search space: the semantic similarity of entity texts guides the search for candidate explanation triples, which reduces their number and improves both their relevance and the explanation efficiency.
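
The text-guided filtering can be sketched as a top-k selection. The text does not specify which entities' texts are compared, so this sketch assumes, hypothetically, that each triple around the head entity is ranked by the cosine similarity between the text embedding of its other entity and that of the target tail. `text_emb` stands in for text encoder outputs, and the default k = 10 follows the setting chosen in Section 5.3.

```python
import math


def cosine(u: list[float], v: list[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def semantic_candidates(target, train_triples, text_emb, k=10):
    """Keep the k triples around the target's head entity whose other entity is
    textually closest (cosine similarity) to the target tail entity."""
    h, _, t = target
    around_head = [tr for tr in train_triples if h in (tr[0], tr[2]) and tr != target]

    def other(tr):
        return tr[2] if tr[0] == h else tr[0]

    around_head.sort(key=lambda tr: cosine(text_emb[other(tr)], text_emb[t]), reverse=True)
    return around_head[:k]


text_emb = {"h": [1.0, 0.0], "t": [1.0, 0.0], "a": [0.9, 0.1], "b": [0.0, 1.0], "c": [-1.0, 0.0]}
train = [("h", "r1", "a"), ("c", "r3", "h"), ("h", "r2", "b"), ("x", "r4", "y")]
print(semantic_candidates(("h", "r", "t"), train, text_emb, k=2))
# [('h', 'r1', 'a'), ('h', 'r2', 'b')]
```

Only the k surviving triples are passed to the more expensive post-training evaluation, which is where the efficiency gain comes from.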

Figure 4. Perturbed structural triples to obtain fine-grained structural modal explanation. The red “×” indicates that the triple's connection is deleted.

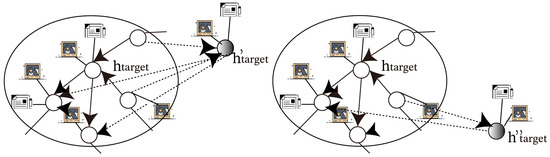

Second, to avoid the cost of retraining the model after every perturbation, we extend the post-training method proposed by Kelpie [29] to multiple modalities so that it can be applied to explaining multimodal link prediction. Multimodal post-training adds homologous and non-homologous simulation entities to the trained model, as shown in Figure 5. A homologous simulation entity has the same neighbor structure as the head entity of the target triple. A non-homologous simulation entity has the same neighbor structure as the head entity except that the candidate explanation triple is deleted. Both kinds of simulation entities are given the same text and image features as the head entity of the target triple. All other embeddings and parameters are frozen, and the predictive relevance of each candidate triple is computed according to Equation (4). The triples with high predictive relevance in the structural modality form the structural-modality explanation of the target triple, as shown in Equation (5).
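
A stripped-down version of the homologous versus non-homologous comparison is sketched below. To keep it short, we swap in a DistMult scorer for ComplEx, initialize the simulated entity at zero, train it on positive facts only (no negative sampling or regularization), omit the copied text and image features, and define relevance as the drop in the target triple's score when a fact is removed. All of these are our assumptions, not the paper's Equation (4). Only the simulated entity's embedding is updated, mirroring the frozen-parameter setup described above.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def distmult(h, r, t):
    """DistMult score sum_i h_i * r_i * t_i (used here in place of ComplEx)."""
    return sum(a * b * c for a, b, c in zip(h, r, t))


def post_train_mimic(facts, ent, rel, dim, epochs=100, lr=0.1):
    """Learn a fresh embedding for a simulated head entity from its own
    (relation, tail) facts; all entity and relation embeddings stay frozen."""
    h = [0.0] * dim
    for _ in range(epochs):
        for r, t in facts:
            u = [a * b for a, b in zip(rel[r], ent[t])]             # d score / d h
            step = 1.0 - sigmoid(sum(a * b for a, b in zip(h, u)))  # from -log sigmoid(score)
            h = [hi + lr * step * ui for hi, ui in zip(h, u)]
    return h


def structural_relevance(target, facts, ent, rel, dim, **kw):
    """Relevance of each fact = target score with the homologous mimic (all facts)
    minus the score with the non-homologous mimic (that fact deleted)."""
    _, r_q, t_q = target
    homologous = post_train_mimic(facts, ent, rel, dim, **kw)
    base = distmult(homologous, rel[r_q], ent[t_q])
    relevance = {}
    for fact in facts:
        rest = [f for f in facts if f != fact]
        non_homologous = post_train_mimic(rest, ent, rel, dim, **kw)
        relevance[fact] = base - distmult(non_homologous, rel[r_q], ent[t_q])
    return relevance


ent = {"t_q": [1.0, 1.0, 0.0, 0.0], "e2": [1.0, 1.0, 0.0, 0.0], "e3": [0.0, 0.0, 1.0, 1.0]}
rel = {"r_q": [1.0] * 4, "r2": [1.0] * 4, "r3": [1.0] * 4}
facts = [("r2", "e2"), ("r3", "e3")]
scores = structural_relevance(("h", "r_q", "t_q"), facts, ent, rel, dim=4)
print(scores)
```

In the toy example the fact aligned with the target direction gets positive relevance, while the orthogonal one gets zero; post-training a single entity this way avoids retraining the whole model for every candidate.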

Figure 5. Homologous simulation entities and non-homologous simulation entities.

4.3. Masking Text Phrases to Obtain Fine-Grained Text Modal Explanation

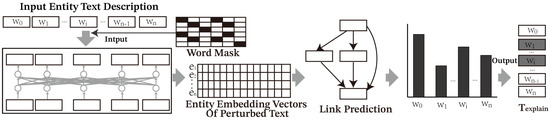

To provide fine-grained textual explanations for the target link prediction model, we propose a word masking strategy, as shown in Figure 6. First, the words in the entity's textual description are masked one at a time, and each masked text is passed to the text encoder of the target link prediction model, which extracts a high-dimensional vector representation from it.

Figure 6. Masked text phrases to obtain fine-grained text modal explanation.

Second, the entity's text features in the multimodal link prediction model are replaced with the features of the masked text, and post-training prediction is performed to measure the impact of each masked word on the prediction results. The predictive relevance of each word, which measures its contribution to the prediction, is computed using Equation (6). The n words with the highest relevance scores form the explanation output, as shown in Equation (7).
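
The leave-one-word-out masking and top-n selection can be sketched as follows. The encoder and the re-scoring step are passed in as callables; the bag-of-words encoder and hand-set word weights in the example are hypothetical stand-ins for the frozen text encoder (e.g., BERT) and for post-training prediction. Defining relevance as the score drop after masking, and the `[MASK]` token itself, are our assumptions about Equation (6).

```python
from collections import Counter
from typing import Callable


def mask_variants(words: list[str], mask_token: str = "[MASK]"):
    """Yield (i, words with position i replaced by the mask token)."""
    for i in range(len(words)):
        yield i, words[:i] + [mask_token] + words[i + 1:]


def word_relevance(description: str, encode: Callable, rescore: Callable, n: int = 3):
    """Rank words by how much masking each one lowers the target triple's score."""
    words = description.split()
    base = rescore(encode(words))
    relevance = [(words[i], base - rescore(encode(masked))) for i, masked in mask_variants(words)]
    relevance.sort(key=lambda pair: pair[1], reverse=True)  # stable: ties keep text order
    return relevance[:n]


# Toy stand-ins: bag-of-words "features" and a linear score over them.
weights = {"capital": 2.0, "france": 1.0}
encode = Counter
rescore = lambda feats: sum(weights.get(w, 0.0) * c for w, c in feats.items())
print(word_relevance("paris is the capital of france", encode, rescore, n=2))
# [('capital', 2.0), ('france', 1.0)]
```

Masking one word at a time costs one encoder pass and one re-scoring per word, so the cost grows linearly with the description length.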

In summary, MMExplainer is described in Algorithm 1. The target triple is the model input. First, we pre-train the multimodal link prediction model to obtain multimodal embeddings of entities and relations, and we obtain the modal weights by learning from the tail entity prediction scores in each modality. Next, we compute the predictive relevance of the triples containing the head entity and obtain the triple explanations in the structural modality. Finally, we compute the predictive relevance of the words in the head entity's text description to obtain the words that play an important role in link prediction under the textual modality. The final outputs are the weights of each modality, the set of explanation triples, and the set of explanation words.

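| Algorithm 1 Multi-Granularity Interpretation for MKG Link Prediction |
|---|
| **Input:** the target prediction triple $(h, r, t)$; the multimodal knowledge graph MKG; the multimodal link prediction model ψ; the number of explanation triples m; the number of explanation words n |
| **Output:** multi-granularity explanations for the target link prediction, including the modal weights, the set of explanation triples S, and the set of explanation words T |
| # Model pre-training to obtain entity structure, text, and image embedding vectors. |
| Step 1: pre-train ψ separately on the structural, visual, textual, and fused inputs |
| # Learn the importance of each modality. |
| Step 2: train the modal meta-learner on the per-modality tail entity scores and output the modal importance scores |
| # Model post-training to obtain structural triple explanations. |
| Step 3: filter the list of semantically similar candidate triples |
| for each candidate triple in the list do |
| compute its predictive relevance by post-training (Equation (4)) |
| select the m triples with the highest relevance as S (Equation (5)) |
| # Mask text to obtain keywords. |
| Step 4: build the word list of the head entity's text description |
| for each word in the list do |
| mask the word and compute its predictive relevance (Equation (6)) |
| select the n words with the highest relevance as T (Equation (7)) |
| # Output multi-granularity explanation results for the target link prediction. |
| Return the modal weights, S, and T |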

5. Experiments

The original training and explanation experiments for the multimodal knowledge graph link prediction model were run on a single server configured with an Intel(R) Core(TM) i9-14900 CPU (Manufacturer: Intel Corporation, Santa Clara, CA, USA), 64 GB of RAM (Manufacturer: Kingston Technology Company, Fountain Valley, CA, USA), and an NVIDIA GeForce RTX 3070 (Manufacturer: NVIDIA Corporation, Santa Clara, CA, USA). The operating system was Ubuntu 22.04 with CUDA version 12.2 and PyTorch 2.2.1.

5.1. Datasets

We conducted experiments on four datasets, FB15K, FB15K-237, WN18, and WN18RR, which cover knowledge graphs of diverse domains and scales to ensure the breadth and representativeness of our results; their statistics are given in Table 1. FB15K and FB15K-237 are subsets of Freebase that contain knowledge base relation triples between entity pairs together with textual mentions. WN18 and WN18RR are subsets of WordNet and cover a broad range of English lexical-semantic knowledge. For WN18 and WN18RR, we use synset definitions as entity descriptions. For all datasets, we use the entity images provided by MMKG [33].

Table 1. The statistics of the datasets.

5.2. Evaluation Metrics

The predictive performance of a link prediction model is commonly measured with two metrics: Mean Reciprocal Rank (MRR) and Hits@k (here k = 1). To evaluate the effectiveness of an explanation model, we measure its impact on the Hits@1 and MRR of the target link prediction model, denoted as ΔHits@1 and ΔMRR. These metrics quantify the difference in link prediction performance between the original model and the model retrained after the explanatory facts are removed from the dataset. A larger absolute value of ΔHits@1 and ΔMRR indicates a more effective explanation.
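
For concreteness, the two metrics and their deltas can be computed from the rank of the true tail before and after the explanation facts are removed and the model is retrained. The sign convention (after minus before, so stronger explanations give more negative values) and the function names are our assumptions; only the absolute values matter for the comparison.

```python
def mrr(ranks: list[int]) -> float:
    """Mean Reciprocal Rank of the true tails."""
    return sum(1.0 / r for r in ranks) / len(ranks)


def hits_at(ranks: list[int], k: int = 1) -> float:
    """Fraction of true tails ranked within the top k."""
    return sum(1 for r in ranks if r <= k) / len(ranks)


def explanation_effect(ranks_before, ranks_after, k=1):
    """(Delta Hits@k, Delta MRR) = metric after removing the explanation facts and
    retraining minus the metric of the original model."""
    return (hits_at(ranks_after, k) - hits_at(ranks_before, k),
            mrr(ranks_after) - mrr(ranks_before))


# Targets are correctly predicted, so every rank starts at 1.
before = [1, 1, 1, 1]
after = [1, 2, 4, 1]          # ranks after retraining without the explanations
print(explanation_effect(before, after))   # (-0.5, -0.3125)
```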

5.3. Evaluation Settings

To validate the explanation model, we trained the multimodal link prediction model with ComplEx as the decoder on four general-purpose multimodal knowledge graph datasets and randomly selected 100 correctly predicted triples from the model's predictions as target triples to explain. After each explanation model extracted its explanations, the explanation triples were removed from the training set and the link prediction model was retrained to verify the explanations. Because the targets are triples that the link prediction model predicts correctly, their initial Hits@1 and MRR values are both 1.

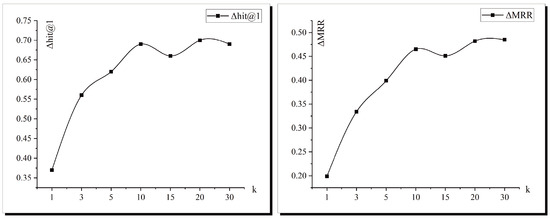

To improve explanation efficiency, we used the textual semantic heuristic search strategy to find k candidate explanation triples, which narrows the candidate explanation space to the k most promising combinations of facts. We therefore analyzed how the number of candidate explanation triples affects the explanation results by varying k. Figure 7 shows the explanation effectiveness for different numbers of candidate triples k on the multimodal dataset FB15K-237. The horizontal axis is the number of candidate triples k, and the vertical axis is the explanation performance metric; the left panel shows ΔHits@1 and the right panel shows ΔMRR. The results indicate that when k exceeds 10, the absolute values of both ΔHits@1 and ΔMRR level off, so increasing k beyond 10 does not significantly improve these metrics. Setting k to 10 therefore achieves good explanation performance.

Figure 7.

Parameter study on the number k of candidate explanation triples. The plots show the absolute values of the effectiveness metrics ΔH@1 and ΔMRR of the link prediction model for different values of k.
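The candidate filtering step of the textual semantic heuristic search can be sketched as a similarity ranking. The exact heuristic is not given in this section, so the scoring rule below is an assumption: among training facts that mention the target's head entity, keep the k facts whose other entity has a text embedding closest (by cosine similarity) to the target tail's. The 2-D vectors are hypothetical stand-ins for embeddings of entity descriptions, and the entity names loosely mirror the case study in Section 5.5.

```python
import math
from typing import Dict, List, Sequence, Tuple

Triple = Tuple[str, str, str]


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def top_k_candidates(target: Triple, train: List[Triple],
                     text_emb: Dict[str, List[float]], k: int = 10) -> List[Triple]:
    """Keep the k training facts around the target head whose other entity is
    textually closest to the target tail (a hypothetical heuristic)."""
    head, _, tail = target
    # Candidate facts: training triples that mention the target's head entity.
    candidates = [f for f in train if head in (f[0], f[2]) and f != target]

    def similarity(fact: Triple) -> float:
        other = fact[2] if fact[0] == head else fact[0]
        return cosine(text_emb[other], text_emb[tail])

    return sorted(candidates, key=similarity, reverse=True)[:k]


# Hypothetical text embeddings and training facts.
emb = {"48th": [1.0, 0.0], "46th": [0.95, 0.05], "44th": [0.9, 0.1], "Jazz": [0.0, 1.0]}
target = ("Album", "award", "48th")
train = [("Album", "award", "44th"), ("Album", "award", "46th"),
         ("Album", "genre", "Jazz"), ("Other", "award", "48th")]
print(top_k_candidates(target, train, emb, k=2))
```

Only the k surviving facts are passed to the more expensive explanation search, which is why k directly controls the cost discussed next.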

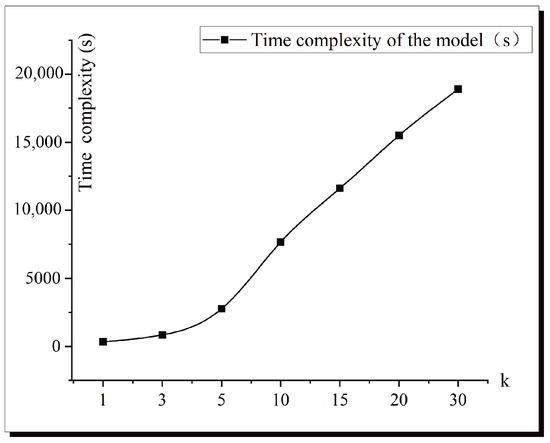

Figure 8 shows the running time of the explanation model on the multimodal dataset FB15K-237. The horizontal axis represents the number of candidate triples k, and the vertical axis represents the running time. The running time increases exponentially with k. Although more candidate explanation triples may bring some performance improvement, they also substantially increase the computational cost, which affects the efficiency and feasibility of the experiments. Weighing performance improvement against computational cost, we set the number of candidate explanation triples k to 10, which keeps the explanation model efficient and practical.

Figure 8.

The plots show the running time of the explanation model for different values of k.
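One way to see why the cost grows exponentially in k: if an explanation may be any non-empty combination of the k candidate facts, there are 2^k − 1 combinations to evaluate. The sketch below assumes exhaustive enumeration purely for illustration; the enumeration scheme MMExplainer actually uses is not described in this section. The optional `max_size` parameter (hypothetical) caps the number of facts per combination.

```python
from math import comb
from typing import Optional


def num_candidate_combinations(k: int, max_size: Optional[int] = None) -> int:
    """Count non-empty combinations of at most max_size facts drawn from k candidates."""
    limit = k if max_size is None else min(k, max_size)
    # Sum of C(k, i) for i = 1..limit; equals 2**k - 1 when limit == k.
    return sum(comb(k, i) for i in range(1, limit + 1))


for k in (5, 10, 15, 20):
    print(k, num_candidate_combinations(k), num_candidate_combinations(k, max_size=2))
# 5 31 15
# 10 1023 55
# 15 32767 120
# 20 1048575 210
```

Capping the combination size turns exponential growth into polynomial growth (O(k²) for pairs), at the price of never considering larger explanations; fixing k at a small value is the complementary way to bound the cost.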

5.4. Experimental Results

To verify whether the model can find the key influences in the target link prediction model, we compare it with the following baseline explanation models on the ComplEx-based link prediction model. Data Poisoning (DP) [34] performs data poisoning attacks on knowledge graph embeddings, selecting the perturbations with the largest gain against a target prediction and thereby identifying the individual facts most relevant to that link prediction. Criage [25] uses an influence function based on a Taylor approximation to estimate the change in a prediction's score caused by deleting a fact. Kelpie [29] explains a prediction by identifying a subset of training facts through post-training; it comes in two versions: Kelpie, which explains with combinations of multiple facts, and K1, which explains with a single fact.

The effectiveness of the explanation methods was measured with ΔH@1 and ΔMRR, and the results are presented in Table 2. We used ΔMRR to evaluate the overall change in the ranking of link prediction results: our method outperformed the strongest baseline by 1% on WN18RR, 2% on WN18, 3% on FB15K, and 17% on FB15K-237. ΔH@1 also improved on all four datasets. These results indicate that the textual semantic heuristic search strategy is effective at identifying the triples most likely to explain a link prediction, particularly explanatory triples in the structural modality. The better explanation results on WN18 than on FB15K may be attributed to the simpler relation types in WN18, which make it easier for the model to identify key explanatory facts; the more complex relations in FB15K limit the explanatory effect.

Table 2.

Comparative experiments on post hoc explanation of the ComplEx-based link prediction model.

5.5. Case Study

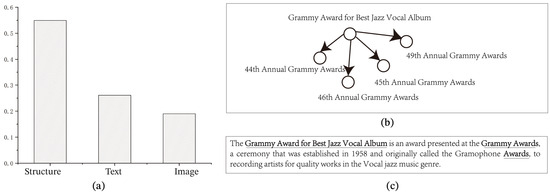

On the FB15K-237 dataset, we perform a post hoc multi-granularity explanation analysis of the prediction results to obtain the contribution of each modality to the link prediction task. As shown in Figure 9, structural modal information contributes most to the prediction results, with a contribution rate of 0.549. This result supports the importance of the structural modality in revealing potential relationships among entities in the knowledge graph and its central role in link prediction. The textual modality contributes 0.261; it plays an important supporting role by providing rich semantic information that helps the model capture correlations among entities more deeply. The image modality contributes relatively little, at 0.19. Compared with structural and textual information, image information is more complex and abstract, and part of the useful information may be lost during preprocessing and feature extraction. Noise in the image data may further reduce its contribution.

Figure 9.

An example of post hoc multi-granularity interpretation for a multimodal link prediction task. (a) Contribution of each modality to link prediction results in FB15K-237 dataset. (b) <Grammy Award for Best Jazz Vocal Album, award, 48th Annual Grammy Awards> structural explanation. (c) <Grammy Award for Best Jazz Vocal Album, award, 48th Annual Grammy Awards> textual explanation.

When explaining the target triple <Grammy Award for Best Jazz Vocal Album, award, 48th Annual Grammy Awards>, the model returns the explanation triples <Grammy Award for Best Jazz Vocal Album, award, 44th Annual Grammy Awards>, <Grammy Award for Best Jazz Vocal Album, award, 46th Annual Grammy Awards>, <Grammy Award for Best Jazz Vocal Album, award, 49th Annual Grammy Awards>, and <Grammy Award for Best Jazz Vocal Album, award, 45th Annual Grammy Awards>. These results show that the explanation model captures the semantic information in the target triple and can find triples in the knowledge graph whose entities are semantically similar to those of the target triple.

In the head entity's text description, “The Grammy Award for Best Jazz Vocal Album is an award presented at the Grammy Awards, a ceremony that was established in 1958 and originally called the Gramophone Awards, to record artists for quality works in the Vocal jazz music genre.”, the model identified the key phrases ‘Grammy Award for Best Jazz Vocal Album’ and ‘Grammy Awards’. ‘Grammy Award for Best Jazz Vocal Album’ is the subject of the description, and ‘Grammy Awards’, another key concept closely related to the head entity, indicates the award series to which it belongs. Together, these two phrases provide important context for the target triple <Grammy Award for Best Jazz Vocal Album, award, 48th Annual Grammy Awards>. By recognizing them as the key information used by the link prediction model, MMExplainer provides a fine-grained explanation of the link prediction model in the textual modality.
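MMExplainer learns textual masks to find the phrases a prediction depends on. As a simpler stand-in (a swapped-in technique, not the paper's method), the sketch below uses occlusion: each phrase is replaced by a mask token in turn, and its importance is the resulting drop in the target triple's score. `toy_score` and its weights are hypothetical, chosen only to mirror the case study above; in practice the score would come from the link prediction model evaluated on the masked head description.

```python
from typing import Callable, List, Tuple


def phrase_importance(phrases: List[str],
                      score_fn: Callable[[List[str]], float],
                      mask_token: str = "[MASK]") -> List[Tuple[str, float]]:
    """Occlusion importance: score drop when each phrase alone is masked, highest first."""
    base = score_fn(phrases)
    drops = []
    for i, phrase in enumerate(phrases):
        masked = phrases[:i] + [mask_token] + phrases[i + 1:]
        drops.append((phrase, base - score_fn(masked)))
    return sorted(drops, key=lambda item: item[1], reverse=True)


# Hypothetical scorer standing in for the link prediction model's score of the
# target triple given the (partially masked) head description.
WEIGHTS = {"Grammy Award for Best Jazz Vocal Album": 0.5, "Grammy Awards": 0.3}


def toy_score(phrases: List[str]) -> float:
    return sum(WEIGHTS.get(p, 0.0) for p in phrases)


description = ["Grammy Award for Best Jazz Vocal Album", "an award", "Grammy Awards",
               "established in 1958", "Gramophone Awards", "Vocal jazz music genre"]
for phrase, drop in phrase_importance(description, toy_score)[:2]:
    print(f"{phrase}: {drop:.2f}")
# Grammy Award for Best Jazz Vocal Album: 0.50
# Grammy Awards: 0.30
```

Occlusion needs one extra model evaluation per phrase and ignores interactions between phrases; a learned mask, as used in MMExplainer, can instead optimize over all phrases jointly.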

6. Conclusions

In this paper, we propose a post hoc, model-independent explanation model for multimodal link prediction. Through modal separation pre-training, structural perturbation, and text mask learning, our model provides both coarse-grained explanations at the modality level and fine-grained explanations in the structural and textual modalities. For coarse-grained modality-level explanations, we propose a modal separation learning strategy. For structural explanations, we introduce a heuristic search for explanation triples guided by the semantic information in entity text. For textual explanations, we apply masked learning to entity text descriptions and then retrain the model. Experimental results on the multimodal datasets FB15K, FB15K-237, WN18, and WN18RR show that our method performs well in identifying key modal information, significantly improving the explainability of the model and providing important support for understanding the behavior of link prediction models.

Experiments on multiple benchmark datasets show that our method can identify the modal information that plays a key role in the model's predictions, which is important for understanding the behavior of embedding-based multimodal link prediction models. Because the structural and textual modalities play the major role in link prediction, MMExplainer provides fine-grained explanations only for these two modalities. However, the image modality, as another important source of information, may also provide valuable clues for link prediction. In addition, the complexity of domain datasets can affect explanation performance. Future work will therefore analyze the image modality in depth and evaluate the method's practical usability in real-world applications.

Author Contributions

X.H.: writing—original draft preparation, software, methodology, and conceptualization. X.Z.: writing—review and editing, supervision, and formal analysis. H.W.: validation and software. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Hebei Natural Science Foundation (under grant F2022208002) and the Shijiazhuang Science and Technology Plan Project (under grant 241790867A).

Data Availability Statement

The original data presented in this study are openly available: FB15K at https://github.com/mniepert/mmkb (accessed on 30 January 2025), FB15K-237 at https://github.com/rollben/kg-bert/tree/master/data/FB15k-237 (accessed on 30 January 2025), WN18RR at https://github.com/rollben/kg-bert/tree/master/data/WN18RR (accessed on 30 January 2025), and WN18 at https://github.com/rollben/kg-bert/tree/master/data/WN18 (accessed on 30 January 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Peng, J.; Hu, X.; Huang, W.; Yang, J. What is a multi-modal knowledge graph: A survey. Big Data Res. 2023, 32, 100380.

2. Peng, C.; Xia, F.; Naseriparsa, M.; Osborne, F. Knowledge graphs: Opportunities and challenges. Artif. Intell. Rev. 2023, 56, 13071–13102.

3. Zhu, X.; Li, Z.; Wang, X.; Jiang, X.; Sun, P.; Wang, X.; Xiao, Y.; Yuan, N.J. Multi-modal knowledge graph construction and application: A survey. IEEE Trans. Knowl. Data Eng. 2022, 36, 715–735.

4. Zhu, X.; Li, Z.; Wang, X.; Jiang, X.; Sun, P.; Wang, X.; Xiao, Y.; Yuan, N.J. Fusing visual and textual content for knowledge graph embedding via dual-track model. Appl. Soft Comput. 2022, 128, 109524.

5. Rossi, A.; Barbosa, D.; Firmani, D.; Matinata, A.; Merialdo, P. Knowledge graph embedding for link prediction: A comparative analysis. ACM Trans. Knowl. Discov. Data (TKDD) 2021, 15, 1–49.

6. Li, Y.; Zhang, X.; Wang, F.; Zhang, B.; Huang, F. Comprehensive Survey on Knowledge Graph Embedding. Comput. Eng. Appl. 2022, 58, 30–50.

7. Xu, G.; Chen, H.; Li, F.L.; Sun, F.; Shi, Y.; Zeng, Z.; Zhou, W.; Zhao, Z.; Zhang, J. AliMe MKG: A multi-modal knowledge graph for live-streaming e-commerce. In Proceedings of the 30th ACM International Conference on Information & Knowledge Management, Gold Coast, Australia, 1–5 November 2021; pp. 4808–4812.

8. Rodis, N.; Sardianos, C.; Radoglou-Grammatikis, P.; Sarigiannidis, P.; Varlamis, I.; Papadopoulos, G.T. Multimodal explainable artificial intelligence: A comprehensive review of methodological advances and future research directions. IEEE Access 2024, 12, 159794–159820.

9. Han, T.; Wang, P.; Niu, S.; Li, C. Modality matches modality: Pretraining modality-disentangled item representations for recommendation. In Proceedings of the ACM Web Conference 2022, Virtual Event, 25–29 April 2022; pp. 2058–2066.

10. Shu, D.; Chen, T.; Jin, M.; Zhang, C.; Du, M.; Zhang, Y. Knowledge graph large language model (KG-LLM) for link prediction. arXiv 2024, arXiv:2403.07311.

11. Xie, X.; Zhang, N.; Li, Z.; Deng, S.; Chen, H.; Xiong, F.; Chen, M.; Chen, H. From discrimination to generation: Knowledge graph completion with generative transformer. In Proceedings of the WWW ’22: Companion Proceedings of the Web Conference 2022, Virtual Event, 25–29 April 2022; pp. 162–165.

12. Chen, X.; Zhang, N.; Li, L.; Deng, S.; Tan, C.; Xu, C.; Huang, F.; Si, L.; Chen, H. Hybrid transformer with multi-level fusion for multimodal knowledge graph completion. In Proceedings of the 45th International ACM SIGIR Conference on Research and Development in Information Retrieval, Madrid, Spain, 7 July 2022; pp. 904–915.

13. Liang, K.; Zhou, S.; Liu, Y.; Meng, L.; Liu, M.; Liu, X. Structure guided multi-modal pre-trained transformer for knowledge graph reasoning. arXiv 2023, arXiv:2307.03591.

14. Wang, L.; Zhao, W.; Wei, Z.; Liu, J. SimKGC: Simple contrastive knowledge graph completion with pre-trained language models. In ACL (1); Association for Computational Linguistics: Stroudsburg, PA, USA, 2022; pp. 4281–4294.

15. Xie, R.; Liu, Z.; Luan, H.; Sun, M. Image-embodied knowledge representation learning. In Proceedings of the 26th International Joint Conference on Artificial Intelligence, Melbourne, Australia, 19–25 August 2017; pp. 3140–3146.

16. Wang, M.; Wang, S.; Yang, H.; Zhang, Z.; Chen, X.; Qi, G. Is visual context really helpful for knowledge graph? A representation learning perspective. In Proceedings of the 29th ACM International Conference on Multimedia, Chengdu, China, 20–24 October 2021; pp. 2735–2743.

17. Zhao, Y.; Cai, X.; Wu, Y.; Zhang, H.; Zhang, Y.; Zhao, G.; Jiang, N. MoSE: Modality Split and Ensemble for Multimodal Knowledge Graph Completion. In Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing, Abu Dhabi, United Arab Emirates, 7–11 December 2022; pp. 10527–10536.

18. Li, X.; Zhao, X.; Xu, J.; Zhang, Y.; Xing, C. IMF: Interactive multimodal fusion model for link prediction. In Proceedings of the ACM Web Conference 2023, Austin, TX, USA, 30 April–4 May 2023; pp. 2572–2580.

19. Sun, S.; An, W.; Tian, F.; Nan, F.; Liu, Q.; Liu, J.; Shah, N.; Chen, P. A review of multimodal explainable artificial intelligence: Past, present and future. arXiv 2024, arXiv:2412.14056.

20. Pan, Y.; Liu, J.; Zhang, L.; Huang, Y. Incorporating logic rules with textual representations for interpretable knowledge graph reasoning. Knowl.-Based Syst. 2023, 277, 110787.

21. Lin, F.; Li, D.; Zhang, W.; Shi, D.; Jiao, Y.; Chen, Q.; Lin, Y.; Zhu, W. Multi-modal knowledge graph inference via media convergence and logic rule. CAAI Trans. Intell. Technol. 2024, 9, 211–221.

22. Liu, Y.; Lai, Y.; Chen, C. Applying Logical Rules to Reinforcement Learning for Interpretable Knowledge Graph Reasoning. In Proceedings of the 2024 International Joint Conference on Neural Networks (IJCNN), Yokohama, Japan, 30 June–5 July 2024; pp. 1–9.

23. Sapkota, R.; Köhler, D.; Heindorf, S. EDGE: Evaluation Framework for Logical vs. Subgraph Explanations for Node Classifiers on Knowledge Graphs. In Proceedings of the 33rd ACM International Conference on Information and Knowledge Management, Boise, ID, USA, 21–25 October 2024; pp. 4026–4030.

24. Chen, Z.; Wang, X.; Wang, C.; Li, J. Explainable link prediction in knowledge hypergraphs. In Proceedings of the 31st ACM International Conference on Information & Knowledge Management, Atlanta, GA, USA, 17–21 October 2022; pp. 262–271.

25. Pezeshkpour, P.; Tian, Y.; Singh, S. Investigating Robustness and Interpretability of Link Prediction via Adversarial Modifications. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; Volume 1 (Long and Short Papers), pp. 3336–3347.

26. Huang, Q.; Yamada, M.; Tian, Y.; Singh, D.; Chang, Y. GraphLIME: Local interpretable model explanations for graph neural networks. IEEE Trans. Knowl. Data Eng. 2022, 35, 6968–6972.

27. Zhang, Y.; Defazio, D.; Ramesh, A. RelEx: A model-agnostic relational model explainer. In Proceedings of the 2021 AAAI/ACM Conference on AI, Ethics, and Society, Virtually, 19–21 May 2021; pp. 1042–1049.

28. Zhao, D.; Wan, G.; Zhan, Y.; Wang, Z.; Ding, L.; Zheng, Z.; Du, B. KE-X: Towards subgraph explanations of knowledge graph embedding based on knowledge information gain. Knowl.-Based Syst. 2023, 278, 110772.

29. Rossi, A.; Firmani, D.; Merialdo, P.; Teofili, T. Explaining link prediction systems based on knowledge graph embeddings. In Proceedings of the 2022 International Conference on Management of Data, Copenhagen, Denmark, 6–8 July 2022; pp. 2062–2075.

30. Luo, D.; Cheng, W.; Xu, D.; Yu, W.; Zong, B.; Chen, H.; Zhang, X. Parameterized explainer for graph neural network. Adv. Neural Inf. Process. Syst. 2020, 33, 19620–19631.

31. Yuan, H.; Yu, H.; Wang, J.; Li, K.; Ji, S. On explainability of graph neural networks via subgraph explorations. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 18–24 July 2021; pp. 12241–12252.

32. Lin, W.; Lan, H.; Wang, H.; Li, B. OrphicX: A causality-inspired latent variable model for interpreting graph neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 13729–13738.

33. Liu, Y.; Li, H.; Garcia-Duran, A.; Niepert, M.; Onoro-Rubio, D.; Rosenblum, D.S. MMKG: Multi-modal knowledge graphs. In Proceedings of the Semantic Web: 16th International Conference, ESWC 2019, Portorož, Slovenia, 2–6 June 2019; Springer International Publishing: Berlin/Heidelberg, Germany, 2019; pp. 459–474.

34. Zhang, H.; Zheng, T.; Gao, J.; Miao, C.; Su, L.; Li, Y.; Ren, K. Data poisoning attack against knowledge graph embedding. In Proceedings of the 28th International Joint Conference on Artificial Intelligence, Macao, China, 10–16 August 2019; pp. 4853–4859.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).