Abstract

Road safety for vulnerable road users, particularly cyclists, remains a critical global issue. This study explores the potential of multimodal visual and haptic interaction technologies to improve cyclists’ perception of and responsiveness to their surroundings. Through a systematic evaluation of various visual displays and Haptic Feedback mechanisms, this research aims to identify effective strategies for recognizing and localizing potential traffic hazards. Study 1 examines the design and effectiveness of Visual Feedback, focusing on factors such as feedback type, traffic scenarios, and target locations. Study 2 investigates the integration of Haptic Feedback through wearable vests to enhance cyclists’ awareness of peripheral vehicular activities. By conducting experiments in realistic traffic conditions, this research seeks to develop safety systems that are intuitive, cognitively efficient, and tailored to the needs of diverse user groups. This work advances multimodal interaction design for road safety and aims to contribute to a global reduction in traffic incidents involving vulnerable road users. The findings offer empirical insights for designing effective assistance systems for cyclists and other non-motorized vehicle users, thereby ensuring their safety within complex traffic environments.

1. Introduction

Road safety has emerged as a significant global issue in contemporary society. According to the World Health Organization (WHO), approximately 1.19 million individuals lose their lives each year due to road traffic collisions. Additionally, there is a substantial number of non-fatal injuries resulting from these incidents, estimated to range from 20 to 50 million annually, with a considerable proportion leading to long-term disabilities [1]. Notably, over half of the fatalities associated with road traffic incidents involve vulnerable road users, such as pedestrians, cyclists, and motorcyclists [1]. These groups occupy a marginalized status within the traffic ecosystem, making them particularly susceptible to accidents, especially in areas with high-speed traffic. The disparity in safety provisions for non-motorized versus motorized vehicles further exacerbates the risks faced by these vulnerable populations.

In the field of traffic safety, peripheral information perception technology is a crucial tool for enhancing driving safety and reducing the incidence of accidents. A wealth of research has introduced various visual guidance solutions aimed at improving users’ peripheral perception efficiency, particularly those based on head-mounted displays (HMDs) and portable augmented reality (AR) devices, which are widely utilized in medicine [2], tourism [3], and industry [4]. Furthermore, within HMD-based visual guidance solutions, numerous studies have proposed different strategies to enhance users’ efficiency in locating targets, including Arrow [5], Halo [6], Wedge [7], and Box [8].

In addition to visual guidance, other modalities, such as Haptic Feedback, have been extensively applied across various scenarios, including surgical robots [9]. Research has demonstrated that high-fidelity Haptic Feedback facilitates more natural interactions between surgical tools and tissues, thereby improving the outcomes of remote surgeries. In virtual reality (VR) gaming [10], Haptic Feedback effectively expands the range of interactions and enhances user perception. Studies on traffic signal alerts [11] have shown that haptic alerts with appropriate frequency and amplitude can assist users in perceiving traffic signals. Additional application areas include mobile commerce [12], parent-child learning [13], and controlling personal jet packs [14].

Drivers must continuously monitor both the road ahead and the situation behind them while driving. In complex information environments, the challenge of receiving information from multiple directions and conducting risk assessments is particularly demanding. Numerous studies have focused on enhancing users’ efficiency in perceiving their surroundings and reducing their stress levels. For instance, experiments have demonstrated that heads-up displays (HUDs) significantly improve driving safety and the overall driving experience, with effects that are more pronounced among women, students, and elderly user groups [15]. Furthermore, research has indicated that unpredictable stimuli that suddenly appear in peripheral vision (e.g., hazards displayed on the HUD) can lead users to overlook unexpected events, resulting in longer response times [16]. Both the position of the visual interface within the field of view and the type of visual interface are crucial factors influencing user experience.

Many studies have proposed solutions for visual interfaces on HUDs, such as virtual shadows, which project virtual shadows near obstacles on augmented reality (AR) HUDs to warn users of collision risks [17]. Additionally, research has shown that compared to virtual shadow AR-HUDs, arrow AR-HUDs effectively reduce visual interference and improve the efficiency of visual information acquisition; however, the effectiveness of these two interfaces varies across different traffic scenarios [18]. Therefore, the design of visual prompt interfaces on HUDs must be tailored to the specific user group and usage scenario.

Given the heightened risks faced by non-motorized vehicle users within complex and variable traffic environments, as well as the limitations of existing sensory-assistance methods in high-noise or visually obstructed situations, this article explores the potential application of multimodal visual and haptic interaction technologies to enhance the safety of these vulnerable road users. This study systematically assesses the effectiveness of various visual displays and Haptic Feedback mechanisms in aiding the recognition and localization of potential traffic hazards. Its aim is to demonstrate how multimodal interaction design can effectively alleviate cognitive load and improve users’ perception and responsiveness to their surroundings. In addressing the pressing challenges in traffic safety, this paper seeks not only to provide empirical, research-based guidance for designing safer and more effective assistance systems for non-motorized vehicles but also to contribute to the global reduction in traffic incident rates, ensuring the safety of all road users.

2. Related Works

A considerable body of research has examined visual, haptic, and multimodal feedback mechanisms in traffic settings, primarily to enhance safety and hazard detection for vulnerable road users. This work has given these users a more intuitive perception of their environment and more effective alert systems. It has also laid the groundwork for visual and haptic guidance frameworks for target identification in complex settings, helping vulnerable road users recognize potential dangers and risk factors. This section briefly reviews the existing research relevant to this field.

2.1. Information Awareness in Transportation

Driving is predominantly a visual task [19], and effective visual search is crucial for maintaining safety on the road [20]. In 37% of traffic accidents, vulnerable road users such as cyclists are implicated due to their failure to adequately monitor their environment, leading to delayed recognition and response to potential hazards [21]. Researchers have been actively investigating methodologies to assist these individuals in becoming more attuned to traffic dangers.

Waldner et al. demonstrated that strategic highlighting can effectively direct a user’s gaze to crucial information points or objects [22]. Dziennus et al. introduced ambient lighting on the edges of a simulated car’s interior, utilizing variable lighting patterns to alert drivers of the presence and status of other road users, which could facilitate timely acceleration, lane changes, and overtaking maneuvers [23].

Van Veen et al. examined the impact of peripheral vision lights on glasses worn by drivers engaged in reading tasks within an autonomous driving context, employing light variations to signal changes in the traffic environment [24]. While this ambient light effectively enhances the driver’s situational awareness, it was noted that its continued use could lead to visual fatigue, diminishing its effectiveness over time.

Von Sawitzky et al. addressed the risk posed by suddenly opening car doors by incorporating distance cues into their system. By equipping cyclists with Microsoft HoloLens 2, their study provided visual prompts in the form of icons, indicating the direction and proximity of an opening car door [25]. Although these notifications could potentially reduce the cyclist’s field of vision, they are intuitive and visually appealing and may help cyclists navigate traffic more safely.

Schinke et al. developed a mobile augmented reality application for smartphones, utilizing embedded arrows to guide users’ attention to specified viewpoints [26]. Warden et al. emphasized the value of head-mounted displays in environmental perception, particularly highlighting the effectiveness of augmented reality arrows in isolating and drawing attention to potential hazards [27].

Renner et al. explored three novel visual guidance techniques: a combination of peripheral flicker with a static display, an integration of arrow cues with peripheral flicker, and guidance through dynamic changes in spherical wave velocities [5]. Among these, the arrow-guidance technique stood out as the most efficient and was also highly rated by the participants. Wieland et al. proposed three distinct types of visual cues for augmented reality (AR) interfaces: the world-referenced arrow, the screen-referenced icon, and the screen-referenced minimap [28]. Their research demonstrated that arrow cues, in particular, significantly enhanced the efficiency of target localization when used with headset devices.

Woodworth et al. examined a variety of visual cues aimed at directing or redirecting attention to objects of interest. Their research indicated that arrow cues were particularly effective in reducing distractions [29]. Matviienko et al. provided a meticulous comparison of cueing mechanisms, specifically within automated cycling contexts, noting that helmet-mounted ambient lights and head-up display arrows were more efficacious than handlebar vibrations and auditory cues in facilitating timely observations and appropriate reactions to environmental changes [30].

Hence, a diverse array of solutions has been shown to effectively direct the attention of vulnerable road users to their surroundings through visual stimuli, including ambient light, icons, arrows, and other graphic cues. Empirical evidence suggests that arrow indicators are particularly effective in navigational guidance, with curvilinear cues serving as a potent alternative for visual direction [28]. Additionally, ambient lighting, particularly when presented in the periphery of one’s field of view, emerges as a viable cueing mechanism to augment users’ awareness of traffic conditions [31]. However, the placement of lighting directly in front of users can be obstructive and lead to inferior guidance outcomes; there is a discernible preference for cues positioned laterally [32].

In outdoor environments, the visibility of visual cues, including but not limited to lights and arrows, needs amplification to ensure these cues are reflective and discernible under varying lighting conditions. The central placement of visual signals, if disproportionately large [25], can impede the timely observation of complex road scenarios. Therefore, designers should carefully consider the scale of visual cues in the design process. Furthermore, the integration of traffic-related data into visual guidance systems, such as the proximities of vehicles [33], can considerably enhance the overall safety of road users. With existing technologies capable of recognizing elements in complex scenes [34], overlaying Visual Feedback cues can be particularly effective. Implementing such data-centric visual cues could provide a more informed and responsive navigational experience for the user.

2.2. Haptic Displays in Traffic Environments

In traffic environments, driver attention is principally engaged with the road ahead [19]. When secondary tasks excessively capture visual focus, cognitive fatigue may ensue [35], elevating the risk of vehicular accidents. Indeed, statistical analyses suggest that visual distractions contribute to approximately 60% of traffic accidents. One mitigation strategy to decrease the diversion of visual attention from the road—and subsequently reduce the incidence of traffic accidents—is the utilization of non-visual cues, such as Haptic Feedback [36].

Haptic Feedback is recognized as an efficacious conduit for information transfer. Empirical research indicates that this modality of feedback does not adversely affect either reaction time or perceptual workload [37]. Moreover, the transport sector has shown considerable interest in vibrotactile feedback technology, with numerous automotive manufacturers incorporating it to generate tactile warnings. The primary objective of these warnings is to alert drivers to potential safety hazards [38].

The application of vibrotactile feedback extends beyond the confines of vehicular operation; it is also increasingly adopted to enhance the safety of vulnerable road users. This technology serves as a critical tool in the broader effort to mitigate risks and safeguard individuals within the traffic ecosystem [39].

There are two primary applications for vibrotactile feedback: as navigational aids and within the realms of health and sports [40]. Focusing on the former, Poppinga et al. pioneered the Tacticycle, which integrates vibrotactile sensors on a bicycle’s handlebars, thereby providing navigational cues to cyclists en route to their destinations [41]. Building on this, Pielot et al. expanded the functionality of the Tacticycle by incorporating variable vibratory frequencies, thereby not only guiding cyclists but also encouraging the exploration of their surroundings at differentiated vibratory rates [42].

Furthermore, the application of vibrotactile feedback has been extended to wearable devices such as gloves [43], vests [44], and shoes [40], aimed at assisting vulnerable road users. Diverging from the conventional, Steltenpohl et al. innovated by attaching vibrators to waistbands, offering lumbar-region vibrations to signal directional changes for cyclists [45]. This novel approach has sparked a surge of research into traffic safety strategies tailored for vulnerable road users.

Kiss et al. contributed to this research trajectory by providing motorcyclists with lumbar vibrations through navigational aids, thereby mitigating risks associated with turning maneuvers [46] and advancing traffic safety strategies for this group of vulnerable road users.

Matviienko et al. have taken vibrotactile feedback a step further by investigating its potential for child cyclists. They have explored handlebar-mounted vibrations that enable children to communicate traffic signals to others [47], as well as vibrotactile cues for collision avoidance strategies for motorized skateboard riders [39].

More recently, Vo et al. examined the implementation of vibrotactile feedback in cyclists’ helmets, designed to alert the wearer to upcoming obstacles and facilitate evasive decision-making. Their findings revealed that participants could accurately identify up to 91% of proximity cues and up to 85% of directional cues [48], demonstrating the efficacy of vibrotactile feedback in perceptual tasks.

In driving contexts, operators are frequently required to manage the challenges of multitasking, with the inherent complexity of such tasks often leading to distraction [49], thereby elevating the potential for risk. Nevertheless, dynamic Haptic Feedback can aid drivers by sharpening their focus, enhancing their reaction times [50], and steering their actions at pivotal moments. This is particularly crucial in autonomous driving scenarios, where drivers must be prepared to reassume control of the vehicle [51], ensuring their safety within the traffic system.

Petermeijer et al. demonstrated that vibrotactile feedback can effectively augment visual and auditory displays and holds promise for facilitating take-over tasks [52]. In further research, they explored directional vibrotactile feedback as a means of instructing drivers on when and how to execute left or right lane changes [53].

Huang et al. differentiated between informative and instructive vibrotactile feedback specific to the take-over driving task and developed 13 coded messaging strategies to aid drivers in comprehending the traffic environment and responding to unfolding traffic conditions. Their findings indicated that vibration of the seat back was perceived as the most efficacious and satisfactory form of feedback [54]. Collectively, these studies underscore the efficacy of vibrotactile feedback in reorienting drivers’ attention, enabling them to accurately perceive the traffic milieu and take over the driving task when necessary.

2.3. Multimodal Displays in Traffic Environments

Multimodal feedback methods have achieved significant prominence in driving environments to the extent that they are often considered the method of choice in numerous automotive applications [55]. Prewett et al. have corroborated in their research that feedback derived from a combination of multiple modalities surpasses unimodal feedback in terms of reaction time, task completion time, and error rate [56].

Within traffic environments, Matviienko et al. provided evidence in prior research that multimodal feedback is not only suitable for alerting vulnerable road users but is also effective in averting collisions between motor vehicles and cyclists, particularly for the demographic of children on bicycles [57]. Concurrently, their experimental findings revealed that multimodal feedback significantly lowered the incidence of accidents in a simulated traffic setting for vulnerable road users.

In contemporary traffic environments, the principal applications of multimodal feedback can be categorized into combinations of visual-auditory [58], visual-tactile [59], tactile-auditory [60], and visual-tactile-auditory [61] forms. For example, Wang et al. employed a synthesis of 3D sound with visual traffic information systems, enabling drivers to discern peripheral traffic orientation using various color zones to signify levels of urgency [58]. Matviienko et al. also demonstrated that the amalgamation of visual lights with auditory feedback can effectively aid child cyclists in distinguishing between left and right [47]. In a subsequent study, they investigated the use of helmet light cues combined with bicycle handlebar vibrations [62]. Their results indicate that multimodal feedback is more noticeable to child cyclists in complex traffic scenarios.

The research by Müller et al. focused on a multimodal feedback configuration involving LED strips embedded in vehicle doors for visual cues, seat cushion vibrators for tactile feedback, and in-vehicle acoustic alerts. Their findings suggest that multimodal feedback yields the quickest response times in both critical and non-critical traffic situations, and it was highly rated by users [63]. These observations are not entirely aligned with the conclusions of another study [64], which posited that multimodal feedback is favored in high-urgency scenarios, while a single modality is often the preferred choice in low-urgency and information processing contexts. This discrepancy may imply that the deployment of two or more forms of modal feedback could provide users with a more diversified set of interaction cues, potentially making it more adaptable to a broader range of traffic situations.

When incorporating multimodal feedback to create early warning cues for vulnerable road users, it is imperative to take into account the variable factors inherent in each modality to prevent cognitive overload in combined usage, which may lead to traffic hazards. Variables such as the color of lights [65], flashing frequency [31], and motion dynamics [66] of visual peripheral indicators significantly influence users’ perception of the traffic context and provide corresponding interactive guidance [24]. By strategically designing these elements, the synergy between the driver and multimodal interactions can be optimized, thereby reducing cognitive demands during driving.

Simultaneously, research indicates that drivers exhibit a preference for multimodal cueing methods in emergent situations, whereas single-modal cues are deemed adequate for routine driving tasks or when the required information is straightforward. This suggests that an intelligent selection and amalgamation of cueing methods, contingent upon the specificities of varying traffic contexts, could be helpful [67]. The judicious application of these modalities has the potential not only to mitigate the occurrence of traffic incidents involving vulnerable road users but, in some cases, to prevent them entirely.

Research on multimodal feedback, with a focus on visual and haptic modalities, has offered significant insights into the enhancement of user performance within intricate settings. Nonetheless, there remains a need for a more refined understanding of the deployment of these modalities in scenarios that require multitasking and concurrent goal-oriented searches. Moreover, the nuances of design and the intermodal interactions during such multitasking scenarios are not yet fully understood, particularly with regard to the impacts of dynamic visual and haptic guidance across varied tasks. Hence, it is imperative to enrich our understanding of multimodal feedback involving visual and haptic inputs in contexts that necessitate multitasking in pursuit of specific goals. This avenue of research not only contributes to the expansion of theoretical knowledge but also paves the way for practical applications in the design of interfaces and systems that are more conducive to user engagement and ease of use.

The objective of this study is to enhance the perception of vulnerable road users regarding peripheral vehicular activities within a traffic context by employing visual cues, such as directional arrows, and Haptic Feedback delivered through vests. This examination of multimodal interaction aims not only to expand our understanding of the application of visual and Haptic Feedback in complex multitasking environments but also to aid in the development of safety systems that are both more intuitive and impose a reduced cognitive burden. Through further experiments and research, we seek to explore the integration and interplay of different feedback mechanisms in real-world traffic scenarios, assessing their effectiveness in improving the safety of vulnerable road users and tailoring these systems for optimal alignment with the unique needs of diverse user groups.

3. Study 1: Visual Feedback for Enhancing Cyclist Awareness

3.1. Study Design

This section details the experimental evaluation used to examine key design factors for visual guidance, namely the type of Visual Feedback, the traffic scenario, and the target location, together with the system implementation and experimental procedure.

3.1.1. Visual Feedback Design

Augmented Visual Feedback has potential utility in hazardous scenarios, including those involving turning vehicles [68] and anticipated collisions at intersections [69]. Kim and Gabbard [70] examined how AR elements that give drivers different informational cues about pedestrian crossings affect driver distraction. Notably, the “virtual shadow” feature they developed was found to increase driver awareness of pedestrians while maintaining unimpaired perception of other vehicular elements [71], benefit driver performance [17], and lead to lower cognitive workload and better usability [72].

Arrows have also proven to be a straightforward and effective method for directing user attention [5]. Studies have demonstrated the efficacy of arrows as visual cues in various settings, including finding out-of-view objects [28], cueing targets in manual assembly assistance systems [5], performing 180-degree visual search tasks [27], and searching for locations on a digital map [26,29]. Arrow guidance can also effectively reduce visual interference and improve the efficiency of acquiring visual information [18]. Therefore, in our first study, we opted to use Arrow and Shadow as the visual guidance techniques. We also explored a combined approach integrating both methods to provide complementary spatial information. This combination aims to leverage arrows for clear directional guidance while using shadows for enhanced depth perception and spatial context in driving environments.

3.1.2. Experimental Settings

In our study, the Microsoft HoloLens 2 served as the experimental platform. The experimental software was developed using Unity 2019.4 in conjunction with Mixed Reality Toolkit (MRTK) version 2.6 and Microsoft Visual Studio 2019 (version 16.9.4) on a personal computer running the Windows 10 operating system.

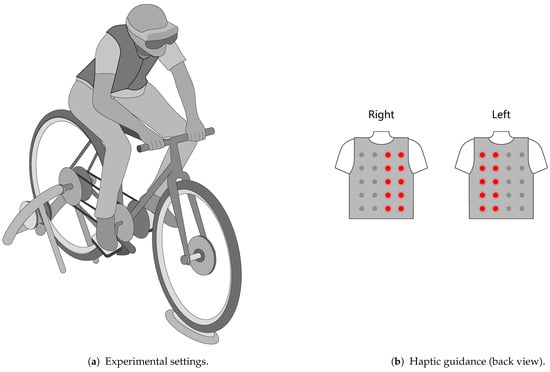

During the experiment, participants rode a 24-inch bike mounted on a Decathlon In’Ride 500 Fluid Braking Bike Trainer (Decathlon China, Shanghai, China). The experimental settings and target layout are illustrated in Figure 1a.

Figure 1. Experimental settings and Visual Feedback design.

When participants had identified the vehicle, they pressed a button mounted on the bike handlebar, and the confirmation data were broadcast using the MQTT (Message Queuing Telemetry Transport, https://mqtt.org/ (accessed on 25 March 2025)) communication standard. The MQTT server, hosted on Tencent Cloud (https://cloud.tencent.com/product/lighthouse (accessed on 25 March 2025)), ran EMQX V4.0.4 (https://www.emqx.io (accessed on 25 March 2025)) on a CentOS 7 operating system.
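As an illustration of this logging path, the sketch below shows one way a button press could be packaged as an MQTT message. The topic name, the field names, and the helper `build_confirmation` are hypothetical, since the payload format used in the study is not specified. The publish step itself is left out; any MQTT client could send the resulting topic and payload to the broker.

```python
import json
import time

# Hypothetical topic; the study does not state the topic actually used.
TOPIC = "study1/confirmation"


def build_confirmation(participant_id, session, video_id, timestamp=None):
    """Return (topic, payload) for one button press, payload as JSON text."""
    if timestamp is None:
        timestamp = time.time()  # seconds since the epoch
    message = {
        "participant": participant_id,
        "session": session,      # e.g. "Arrow", "Shadow", "Arrow-Shadow"
        "video": video_id,
        "pressed_at": timestamp,
    }
    # sort_keys gives a stable field order, which simplifies later log parsing.
    return TOPIC, json.dumps(message, sort_keys=True)


topic, payload = build_confirmation(3, "Arrow", 5, timestamp=12.5)
print(topic, payload)
```

Timestamping on the sending side, as assumed here, keeps the recorded press time independent of network delay to the broker.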

3.1.3. Independent Variables

There were three independent variables in the first study:

- Visual Feedback (3 levels): Arrow, Shadow, and Arrow-Shadow;

- Traffic Scenario (4 levels): 1 (Taxi); 2 (Heavy Vehicle); 3 (Bike/Motorbike); 4 (Sports Car);

- Vehicle Distance (2 levels): Near (less than 4 m); Far (4–8 m).

3.1.4. Experimental Design

The research adopted a within-subjects repeated measures design. It comprised three sessions aligned with Visual Feedback designs: Arrow, Shadow, and a combined Arrow-Shadow feedback, elaborated in Figure 1b. To control for order effects, the sequence of the session presentation was counterbalanced across participants. Sessions were separated by a five-minute break. During each session, there were eight traffic videos, and participants were exposed to each traffic video once. Prior to the commencement of testing, a five-minute introduction period was provided to allow participants to acquaint themselves with the experimental setup. Across all sessions, each participant completed a total of 24 experimental trials.
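The trial count follows directly from the factor levels: 4 traffic scenarios × 2 vehicle distances give 8 videos per session, and 3 sessions give 24 trials. The sketch below enumerates this design. The counterbalancing scheme shown (cycling through all six session orders by participant index) is an assumption, as the exact scheme is not stated; the within-session randomization of video order is not modeled.

```python
from itertools import permutations, product

FEEDBACK = ["Arrow", "Shadow", "Arrow-Shadow"]
SCENARIOS = ["Taxi", "Heavy Vehicle", "Bike/Motorbike", "Sports Car"]
DISTANCES = ["Near", "Far"]

# One video per Traffic Scenario x Vehicle Distance combination (4 x 2 = 8).
VIDEOS = list(product(SCENARIOS, DISTANCES))

# All 3! = 6 possible orders of the three feedback sessions.
ORDERS = list(permutations(FEEDBACK))


def session_order(participant_index):
    """Assumed scheme: cycle through the six orders by participant index."""
    return ORDERS[participant_index % len(ORDERS)]


def trials_for(participant_index):
    """List every (feedback, scenario, distance) trial for one participant."""
    return [
        (feedback, scenario, distance)
        for feedback in session_order(participant_index)
        for scenario, distance in VIDEOS
    ]


print(len(VIDEOS), len(trials_for(0)))
```

Under this assumed scheme, 12 participants would cover each of the six session orders exactly twice.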

3.1.5. Participants and Procedure

The study recruited 12 volunteers from a university campus, comprising 8 females and 4 males, with a mean age of 21.92 years. All participants were right-handed and had experience cycling in urban traffic environments. A total of 10 participants had prior exposure to three-dimensional (3D) gaming, while half reported experience with virtual reality (VR) technology. Throughout the experiment, each participant was instructed to keep a standard cycling position on a bike trainer, maintaining relaxation and comfort.

Simulated real-world traffic scenarios were created using recorded panoramic videos, with the task for participants being to identify potential hazard vehicles via Visual Feedback.

During each experimental session, participants engaged in a hazard identification exercise involving eight videos presented in a randomized sequence. Each video depicted a potential traffic hazard involving a vehicle that might emerge from the blind spots on the user’s left rear or right rear. Participants were tasked with identifying the vehicle by pressing a specific button located on the bicycle’s right handlebar. Upon successful detection of the vehicle and pressing the button, video playback would stop, indicating the task had been completed. Participants then started the next video task by pressing the same button again.

The videos were systematically divided into four sets, each representing one hazard vehicle scenario (Figure 1c). Visual cues were triggered when the vehicle came within a predefined distance of the participant (a close-range hazard within 4 m or a distant hazard between 4 and 8 m). The onset time of the Visual Feedback varied across videos, and each Visual Feedback cue lasted 4 s.
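The distance levels and cue duration above can be expressed as a small rule. The sketch below is only illustrative: the function names are hypothetical, and it assumes a vehicle distance value is available at runtime, whereas the study used recorded videos in which cue onsets were presumably fixed per video.

```python
CUE_DURATION_S = 4.0  # each Visual Feedback cue lasted 4 s
NEAR_LIMIT_M = 4.0    # Near: less than 4 m
FAR_LIMIT_M = 8.0     # Far: 4 m to 8 m


def distance_level(distance_m):
    """Map a vehicle distance in meters to 'Near', 'Far', or None (no cue)."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    if distance_m < NEAR_LIMIT_M:
        return "Near"
    if distance_m <= FAR_LIMIT_M:
        return "Far"
    return None


def cue_visible(now_s, trigger_s):
    """True while the cue triggered at trigger_s is still within its 4 s window."""
    return trigger_s <= now_s < trigger_s + CUE_DURATION_S


print(distance_level(3.2), distance_level(6.0), distance_level(9.5))
```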

The directional interface design incorporated both directional information and details regarding the vehicle type and distance (see Figure 1b). The vehicle icon, together with the distance text, spanned a width of 11.5 cm and a height of 4.5 cm. A yellow square arrow icon measuring 4.5 cm in length and a semi-transparent orange arc (80% transparency) measuring 20 cm in both width and height were employed as the Arrow and Shadow Visual Feedback to indicate the direction of a hazard vehicle. The Visual Feedback was positioned 35 cm in front of the participants and 30 cm below their eye level.
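To relate these dimensions to what the participant sees, the sketch below converts them to approximate visual angles. It assumes the stated sizes are physical extents on a plane facing the viewer and that the viewing distance is the straight line from the eye to a point 35 cm forward and 30 cm down. Both are assumptions made for illustration, not measurements reported in the study.

```python
import math

FORWARD_CM = 35.0    # feedback placed 35 cm in front of the participant
BELOW_EYE_CM = 30.0  # and 30 cm below eye level


def visual_angle_deg(size_cm, viewing_distance_cm):
    """Visual angle subtended by an object of the given size, in degrees."""
    return math.degrees(2 * math.atan(size_cm / (2 * viewing_distance_cm)))


# Straight-line distance from the eye to the feedback, and how far below
# the horizontal line of sight the feedback sits.
line_of_sight_cm = math.hypot(FORWARD_CM, BELOW_EYE_CM)
depression_deg = math.degrees(math.atan2(BELOW_EYE_CM, FORWARD_CM))

print(f"line of sight: {line_of_sight_cm:.1f} cm, {depression_deg:.1f} deg below eye level")
for name, size_cm in [("arrow", 4.5), ("shadow arc", 20.0), ("icon and text width", 11.5)]:
    print(f"{name}: {visual_angle_deg(size_cm, line_of_sight_cm):.1f} deg")
```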

Upon concluding the experimental tasks, the participants were requested to fill out a questionnaire evaluating their Visual Feedback preferences, rated on a scale from 1 (strong dislike) to 9 (strong preference). They were also prompted to offer further qualitative insights via commentary and group discussions about the various interactive designs encountered. The entire experimental procedure spanned an approximate total of 30 min.

3.2. Results

Performance metrics, including reaction time, search time, and user behavioral data, such as head rotation and head movement, were recorded to evaluate user performance. A repeated-measures analysis of variance (ANOVA) across Visual Feedback, Traffic Scenario, and Vehicle Distance was utilized to analyze these performance measurements.
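For readers unfamiliar with the method, the sketch below computes the F statistic of a repeated-measures ANOVA for a single within-subjects factor. It is a deliberately simplified illustration: the analysis reported here involved three factors, no sphericity correction is included, and the numbers are toy values rather than study data.

```python
def rm_anova_one_way(data):
    """One-factor repeated-measures ANOVA.

    data[s][c] is the score of subject s under condition c.
    Returns (F, df_condition, df_error).
    """
    n = len(data)      # number of subjects
    k = len(data[0])   # number of conditions
    grand = sum(sum(row) for row in data) / (n * k)
    cond_means = [sum(row[c] for row in data) / n for c in range(k)]
    subj_means = [sum(row) / k for row in data]

    # Partition the total variability into condition, subject, and error parts.
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_error = ss_total - ss_cond - ss_subj

    df_cond = k - 1
    df_error = (k - 1) * (n - 1)
    f_value = (ss_cond / df_cond) / (ss_error / df_error)
    return f_value, df_cond, df_error


# Toy data: 3 subjects x 3 conditions (not values from the study).
toy = [[1, 2, 3], [2, 3, 5], [3, 5, 6]]
f_value, df_cond, df_error = rm_anova_one_way(toy)
print(f"F({df_cond}, {df_error}) = {f_value:.2f}")
```

Removing the between-subject sum of squares from the error term is what distinguishes this from a between-subjects ANOVA: each participant serves as their own control.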

3.2.1. Reaction Time, Search Time, and User Behavior Data in the Primary Task of Study 1

Reaction Time

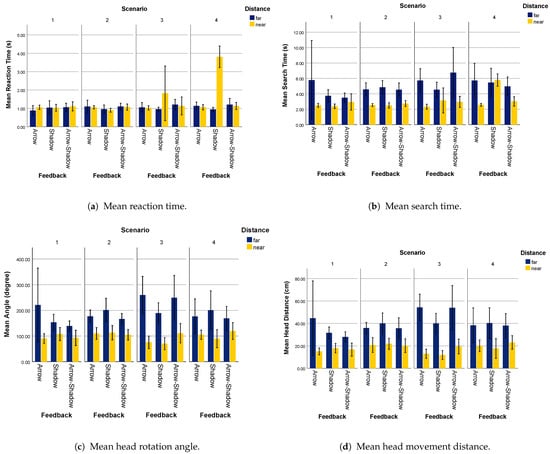

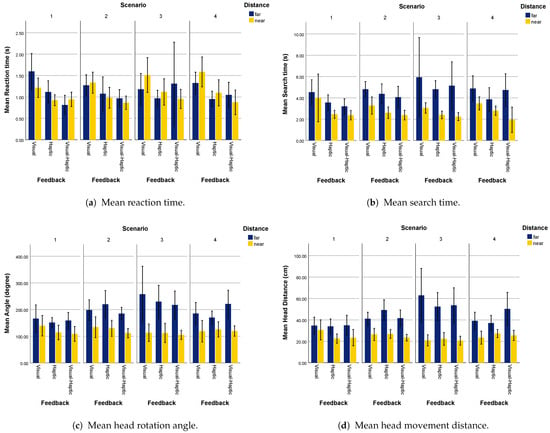

Main effects were found for Visual Feedback, Traffic Scenario (the sphericity assumption was not met, so the Greenhouse-Geisser correction was applied; the corrected degrees of freedom are shown), and Vehicle Distance. Interaction effects were found for Visual Feedback × Traffic Scenario, Visual Feedback × Vehicle Distance, and Traffic Scenario × Vehicle Distance. The mean reaction time across Visual Feedback, Traffic Scenario, and Vehicle Distance is illustrated in Figure 2a.

Figure 2. Mean reaction time, search time, head rotation angle, and head movement distance results for Study 1.

Post-hoc Bonferroni pairwise comparisons showed that the reaction time with Arrow (1.05 s) was significantly shorter than with Shadow (1.43 s), that the reaction times in traffic scenario 1 (1.03 s) and scenario 2 (1.03 s) were each significantly shorter than in scenario 4 (1.55 s), and that the reaction time at far distance (1.05 s) was significantly shorter than at near distance (1.35 s).
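The Bonferroni procedure used for these comparisons multiplies each raw p-value by the number of comparisons and caps the result at 1. A minimal sketch follows; the raw p-values are hypothetical placeholders, not values from the study.

```python
def bonferroni(p_values):
    """Return Bonferroni-adjusted p-values: min(1, p * number of comparisons)."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Hypothetical raw p-values for the three pairwise comparisons among the
# Visual Feedback levels; these are NOT results from the study.
raw = {
    "Arrow vs Shadow": 0.004,
    "Arrow vs Arrow-Shadow": 0.03,
    "Shadow vs Arrow-Shadow": 0.5,
}
adjusted = dict(zip(raw, bonferroni(list(raw.values()))))
for pair, p in adjusted.items():
    print(f"{pair}: adjusted p = {p:.3f}")
```

The correction is conservative: with three comparisons, a raw p-value must fall below roughly 0.0167 to remain significant at the 0.05 level.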

Search Time

Main effects were found for Traffic Scenario and Vehicle Distance. An interaction effect was found for Visual Feedback × Vehicle Distance. No other main effect or interaction effect was found. The mean search time across Visual Feedback, Traffic Scenario, and Vehicle Distance is illustrated in Figure 2b. Post-hoc Bonferroni pairwise comparisons showed that the search time in scenario 2 (3.63 s) was significantly shorter than in scenario 4 (4.59 s), and that the search time at near vehicle distance (2.95 s) was significantly shorter than at far distance (5.02 s).

Head Rotation Angle

A main effect was found for Vehicle Distance. An interaction effect was found for Traffic Scenario × Vehicle Distance. No other main effect or interaction effect was found. The mean head rotation angle across Visual Feedback, Traffic Scenario, and Vehicle Distance is illustrated in Figure 2c. Post-hoc Bonferroni pairwise comparisons showed that the head rotation angle for the near vehicle distance (14.78 degrees) was significantly smaller than for the far vehicle distance (99.07 degrees).

Head Movement Distance

A main effect was found for Vehicle Distance. An interaction effect was found for Traffic Scenario × Vehicle Distance. No other main effect or interaction effect was found. The mean head movement distance across Visual Feedback, Traffic Scenario, and Vehicle Distance is illustrated in Figure 2d. Post-hoc Bonferroni pairwise comparisons showed that the head movement distance for the far vehicle distance (40.05 cm) was significantly longer than for the near vehicle distance (18.11 cm).

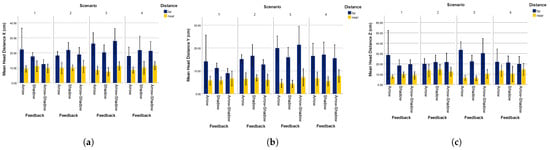

We also recorded the head movement distance along the X, Y, and Z dimensions. For the X dimension, a main effect was found for Vehicle Distance. An interaction effect was found for Traffic Scenario × Vehicle Distance. No other main effect or interaction effect was found. Post-hoc Bonferroni pairwise comparisons showed that the head movement distance along the X dimension for the far vehicle distance (20.53 cm) was significantly longer than for the near vehicle distance (9.9 cm).

For the Y dimension, main effects were found for Traffic Scenario and Vehicle Distance. An interaction effect was found for Traffic Scenario × Vehicle Distance. No other main effect or interaction effect was found. Post-hoc Bonferroni pairwise comparisons showed that the head movement distance along the Y dimension in traffic scenario 1 (8.75 cm) was significantly shorter than in scenario 3 (12.20 cm), and that the head movement distance along the Y dimension for the far vehicle distance (15.42 cm) was significantly longer than for the near vehicle distance (6.14 cm).

For the Z dimension, a main effect was found for Vehicle Distance. An interaction effect was found for Traffic Scenario × Vehicle Distance. No other main effect or interaction effect was found. Post-hoc Bonferroni pairwise comparisons showed that the head movement distance along the Z dimension for the far vehicle distance (23.24 cm) was significantly longer than for the near vehicle distance (10.67 cm).

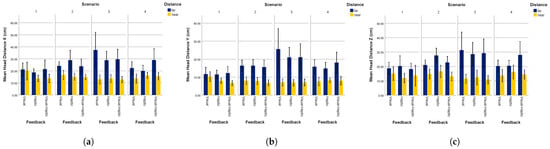

The mean head movement distance along different dimensions across Visual Feedback, Traffic Scenario, and Vehicle Distance is illustrated in Figure 3.

Figure 3.

Mean head movement distance along different dimensions for Study 1. (a) Mean head movement distance along X. (b) Mean head movement distance along Y. (c) Mean head movement distance along Z.
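To make the relationship between the overall and per-axis head movement distances concrete, the sketch below assumes that the overall distance is the accumulated Euclidean path length between consecutive head position samples and that each per-axis distance is the accumulated absolute displacement along that axis. This is our illustrative reading rather than a restatement of the logging code, and the sample trace is hypothetical.

```python
from math import dist


def head_movement(samples):
    """Return (total path length, per-axis travel) for head positions.

    `samples` is a sequence of (x, y, z) positions in centimetres.
    """
    total = 0.0
    per_axis = [0.0, 0.0, 0.0]
    for prev, curr in zip(samples, samples[1:]):
        total += dist(prev, curr)  # Euclidean length of this step
        for axis in range(3):
            per_axis[axis] += abs(curr[axis] - prev[axis])
    return total, tuple(per_axis)


# Hypothetical trace: a diagonal move followed by a move back along X.
trace = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0), (0.0, 4.0, 0.0)]
total, (dx, dy, dz) = head_movement(trace)
```

Under this assumption, the per-axis distances can sum to more than the overall path length, which is consistent with the reported far-distance means (20.53 cm + 15.42 cm + 23.24 cm along X, Y, and Z versus 40.05 cm overall).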

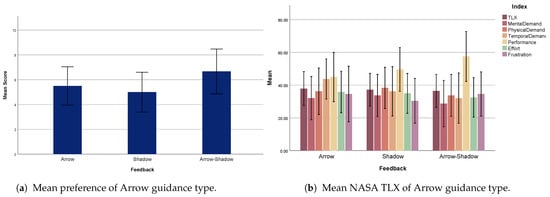

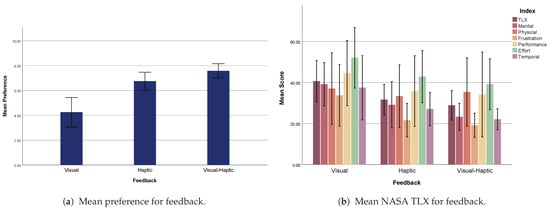

3.2.2. User Preference and Task Load

After completing the test, participants filled out preference and raw NASA Task Load Index (TLX) questionnaires [73,74,75] and shared their feedback and observations. A one-way repeated-measures ANOVA revealed no significant effect on user preference, as shown in Figure 4a, and no significant effect on NASA TLX scores, as illustrated in Figure 4b.

Figure 4.

Mean user preference and NASA TLX for Study 1.
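The one-way repeated-measures ANOVA used for the questionnaire data partitions the total variability into a condition component, a participant component, and a residual. The following minimal sketch computes the resulting F statistic; the function name and the ratings are hypothetical and are not the questionnaire data collected in this study.

```python
def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA.

    `data[s][c]` is the score of participant s under condition c.
    Returns (F, df_condition, df_error).
    """
    n, k = len(data), len(data[0])
    grand = sum(sum(row) for row in data) / (n * k)
    cond_means = [sum(row[c] for row in data) / n for c in range(k)]
    subj_means = [sum(row) / k for row in data]

    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    # Residual variability after removing condition and participant effects.
    ss_error = ss_total - ss_cond - ss_subj

    df_cond, df_error = k - 1, (k - 1) * (n - 1)
    f_value = (ss_cond / df_cond) / (ss_error / df_error)
    return f_value, df_cond, df_error


# Hypothetical ratings: 3 participants x 3 conditions.
ratings = [
    [1, 2, 3],
    [2, 3, 5],
    [3, 5, 6],
]
f_value, df_cond, df_error = rm_anova_oneway(ratings)
```

Obtaining a p-value additionally requires the F distribution, which is available in standard statistics packages but is omitted here to keep the sketch self-contained.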

The participants reported that Arrow was effective and straightforward, facilitating the identification of potential hazards. Its design was prominent without overwhelming their field of view. In contrast, Shadow was perceived as less visible, particularly against bright or complex backgrounds, often requiring additional effort to discern. Some participants expressed concerns that Shadow could be misleading, erroneously suggesting the imminent proximity of hazardous vehicles, which could increase stress levels. To improve visibility, several participants suggested changing the color of Shadow to provide better contrast across varying environmental conditions while avoiding colors that could induce stress.

Interestingly, participants with experience in first-person 3D shooting games appeared to acclimate to Shadow feedback more readily, whereas two individuals lacking this background did not immediately recognize Shadow as a directional indicator. This observation suggests that gamers, due to their familiarity with similar visual elements in 3D environments, may adapt more quickly to Shadow feedback. Furthermore, this finding indicates a potentially steeper learning curve for interpreting Shadow compared to Arrow.

Additionally, while some participants acknowledged the utility of Visual Feedback in identifying potential hazards, they expressed concerns that constant vigilance for these cues could lead to fatigue, particularly in real-world scenarios. Recognizing that reliance on a single Visual Feedback modality may contribute to sensory overload and increased fatigue, our subsequent research will investigate alternative or supplementary feedback mechanisms beyond visual modalities.

4. Study 2: Visual-Haptic Guidance Design and Evaluation

To reduce cognitive burden and to effectively assist users in the detection of potential hazards, this section details the incorporation of Haptic Feedback alongside visual guidance. We devised a wearable system that delivers haptic sensations to the user’s upper body and subsequently executed an experimental evaluation. The aim was to evaluate the effectiveness of Haptic Feedback in improving user performance during tasks that require the identification of traffic-related hazards.

4.1. Haptic Feedback Design for Target Searching Task

The utility of Haptic Feedback extends across numerous user scenarios, including the detection of objects outside the field of view [76,77,78], navigation in search tasks [79], the enhancement of multimedia experiences [80,81], and support in automotive operations [82]. Haptic Feedback could also improve user performance in complex driving scenarios [83]. Building on the findings from Study 1, we examined the effectiveness of adding Haptic Feedback to visual guidance. Earlier research indicates that users can interpret multiple tactile elements as a unified location [84,85]. Leveraging this insight, we mapped directional cues to the user’s back using a haptic vest with an array of tactile elements, as illustrated in Figure 5b.

Figure 5.

Experimental settings and haptic guidance design.

4.2. Study Design

In accordance with the methods employed in Study 1, we continued to use the Microsoft HoloLens 2 (Microsoft Corporation, Redmond, WA, USA) as the experimental device, utilizing the same development tools. For the wearable Haptic Feedback component, we incorporated bHaptics TactSuit X40 (bHaptics Inc., Seoul, Republic of Korea), which features 40 tactile feedback units distributed across the upper body.

4.2.1. Experimental Settings

Consistent with Study 1, we used Microsoft HoloLens 2. The 40 tactile feedback units of the bHaptics TactSuit X40 are split evenly between the chest (20 units) and the back (20 units). Directional haptic guidance was generated using tactile elements positioned on the left and right sides of the wearer’s back, as illustrated in Figure 5b. The haptic system was controlled through a custom application developed in Unity 2019.4, incorporating the bHaptics Haptic Plugin. This application ran on a Windows 11 desktop computer. Drawing on findings from a prior study [86], the Haptic Feedback was programmed to deliver four pulses per second, with each pulse lasting 100 ms. The haptic control software subscribed to the MQTT topic for directional guidance and activated the Haptic Feedback at the onset of the vehicle identification task.
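The pulse timing described above can be sketched as follows. This is an illustration in Python rather than the Unity application itself: it makes no calls to the bHaptics plugin or to an MQTT client, and the function names and the `"left"`/`"right"` message values are hypothetical.

```python
def pulse_schedule(duration_ms, pulses_per_second=4, pulse_ms=100):
    """Return (on, off) times in milliseconds for each vibration pulse."""
    period_ms = 1000 // pulses_per_second  # 250 ms between pulse onsets
    return [(start, start + pulse_ms)
            for start in range(0, duration_ms, period_ms)
            if start + pulse_ms <= duration_ms]


def back_side(direction):
    """Map a guidance message to the side of the vest's back panel."""
    if direction not in ("left", "right"):
        raise ValueError(f"unsupported direction: {direction!r}")
    return f"back-{direction}"


# One second of guidance toward a vehicle on the rider's left.
schedule = pulse_schedule(1000)
target = back_side("left")
```

With four pulses per second and 100 ms per pulse, each 250 ms period consists of 100 ms of vibration followed by 150 ms of rest.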

4.2.2. Independent Variables

For Study 2, we applied the optimal user experience settings derived from the findings of Study 1. Consequently, Arrow was utilized as Visual Feedback, which was then compared with Haptic Feedback and a combined Visual-Haptic Feedback approach. The independent variables include the following:

- Feedback Modality (3 levels): Visual Feedback, Haptic Feedback, and Visual-Haptic Feedback;

- Traffic Scenario (4 levels): 1 (Taxi); 2 (Heavy Vehicle); 3 (Bike/Motorbike); 4 (Sports Car);

- Vehicle Distance (2 levels): Near (less than 4 m); Far (4–8 m).
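The three independent variables listed above combine into a full factorial set of conditions, which can be enumerated as in the following sketch. The variable names are our own shorthand.

```python
from itertools import product

FEEDBACK = ["Visual", "Haptic", "Visual-Haptic"]
SCENARIOS = ["Taxi", "Heavy Vehicle", "Bike/Motorbike", "Sports Car"]
DISTANCES = ["Near", "Far"]

# Every combination of feedback modality, traffic scenario, and distance.
conditions = list(product(FEEDBACK, SCENARIOS, DISTANCES))
per_modality = len(SCENARIOS) * len(DISTANCES)
```

This yields 3 × 4 × 2 = 24 conditions in total, or 8 scenario-distance combinations per feedback modality.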

4.2.3. Experimental Design

Study 2 utilized a within-subjects repeated measures design and comprised three phases, each employing a different type of feedback: Visual Feedback, Haptic Feedback, and Visual-Haptic Feedback. The order of the phases was counterbalanced, with a five-minute rest period between each phase. The experimental setup for each phase was consistent with that of Study 1, and each participant completed a total of 24 trials across all phases.
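One way to counterbalance three phases is to cycle through all six possible phase orders. The sketch below assumes this full counterbalancing scheme for illustration; the assignment procedure is not specified beyond the statement that the order was counterbalanced, and the function name is hypothetical.

```python
from collections import Counter
from itertools import permutations

MODALITIES = ["Visual", "Haptic", "Visual-Haptic"]


def assign_orders(n_participants):
    """Cycle through every possible phase order, one per participant."""
    orders = list(permutations(MODALITIES))  # 3! = 6 orders
    return [orders[i % len(orders)] for i in range(n_participants)]


plan = assign_orders(12)
usage = Counter(plan)
```

With 12 participants, each of the six orders is used exactly twice under this scheme, so every modality appears equally often in each phase position.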

4.2.4. Participants and Procedure

The study recruited 12 participants (5 men and 7 women) from the university campus. Their mean age was 25.5 years. All participants had prior experience cycling in urban traffic environments. Additionally, all participants were familiar with 3D gaming, and four had previous experience with virtual reality (VR). The total duration of the experimental procedure was approximately 30 min.

4.3. Results

As in Study 1, we documented metrics, including reaction time and search time, as well as user behavior data, such as head rotation and movement, to assess performance. To analyze these user performance metrics, we employed a repeated-measures ANOVA, considering the factors of Feedback Modality, Traffic Scenario, and Vehicle Distance. The definitions for all user performance measurements were consistent with those established in Study 1.

4.3.1. Reaction Time, Search Time, and User Behavior Data for Study 2

Reaction Time

A main effect was found for Feedback Modality. No other main effect or interaction effect was found. The mean reaction time across Feedback Modality, Traffic Scenario, and Vehicle Distance is illustrated in Figure 6a.

Figure 6.

Mean reaction time, search time, head rotation angle, and head movement distance results for Study 2.

Post-hoc Bonferroni pairwise comparisons showed that the reaction time with Visual Feedback (1.38 s) was significantly slower than with Haptic Feedback (1.03 s) and Visual-Haptic Feedback (0.97 s).

Search Time

Main effects were found for Feedback Modality and Vehicle Distance. No other main effect or interaction effect was found. The mean search time across Feedback Modality, Traffic Scenario, and Vehicle Distance is illustrated in Figure 6b. Post-hoc Bonferroni pairwise comparisons showed that the search time with Haptic Feedback (3.36 s) was significantly shorter than with Visual Feedback (4.25 s), and that the search time for a vehicle near the user (2.75 s) was significantly shorter than for a vehicle far from the user (4.50 s).

Head Rotation Angle

A main effect was found for Vehicle Distance. An interaction effect was found for Traffic Scenario × Vehicle Distance. The mean head rotation angle across Feedback Modality, Traffic Scenario, and Vehicle Distance is illustrated in Figure 6c. Post-hoc Bonferroni pairwise comparisons showed that the head rotation angle for a vehicle near the user (119.41 degrees) was significantly smaller than for a vehicle far from the user (196.91 degrees).

Head Movement Distance

A main effect was found for Vehicle Distance. An interaction effect was found for Traffic Scenario × Vehicle Distance. The mean head movement distance across Feedback Modality, Traffic Scenario, and Vehicle Distance is illustrated in Figure 6d. Post-hoc Bonferroni pairwise comparisons showed that the head movement distance for a vehicle far from the user (44.21 cm) was significantly longer than for a vehicle near the user (24.42 cm).

We also recorded the head movement distance along the X, Y, and Z dimensions. For the X dimension, a main effect was found for Vehicle Distance. Interaction effects were found for Feedback Modality × Traffic Scenario and Traffic Scenario × Vehicle Distance. Post-hoc Bonferroni pairwise comparisons showed that the head movement distance along the X dimension for a vehicle far from the user (25.44 cm) was significantly longer than for a vehicle near the user (14.87 cm).

For the Y dimension, main effects were found for Traffic Scenario and Vehicle Distance. An interaction effect was found for Traffic Scenario × Vehicle Distance. Post-hoc Bonferroni pairwise comparisons showed that head movement along the Y dimension in scenario 2 (11.84 cm) and scenario 4 (12.09 cm) was significantly longer than in scenario 1 (10.06 cm), and that head movement along the Y dimension for a vehicle far from the user (16.66 cm) was significantly longer than for a vehicle near the user (7.71 cm).

For the Z dimension, a main effect was found for Vehicle Distance. An interaction effect was found for Traffic Scenario × Vehicle Distance. Post-hoc Bonferroni pairwise comparisons showed that the head movement distance along the Z dimension for a vehicle far from the user (23.83 cm) was significantly longer than for a vehicle near the user (13.65 cm).

The mean head movement distance along different dimensions across Feedback Modality, Traffic Scenario, and Vehicle Distance is illustrated in Figure 7.

Figure 7.

Mean head movement distance along different dimensions for Study 2. (a) Mean head movement distance along X. (b) Mean head movement distance along Y. (c) Mean head movement distance along Z.

4.3.2. User Preference and Task Load

Following the test, the participants rated their preferences and filled out a NASA TLX questionnaire to measure their workload. A one-way repeated-measures ANOVA on Feedback Modality revealed a significant effect on user preference, as illustrated in Figure 8a. Post-hoc Bonferroni pairwise comparisons indicated that the preference for Visual Feedback (4.25) was significantly lower than that for Haptic Feedback (6.75) and Visual-Haptic Feedback (7.58). Additionally, a one-way repeated-measures ANOVA on the NASA TLX scores found a significant effect on workload, as shown in Figure 8b. Post-hoc Bonferroni pairwise comparisons revealed that the workload associated with Visual Feedback (40.69) was significantly higher than that for Haptic Feedback (31.68) and Visual-Haptic Feedback (28.89).

Figure 8.

Mean user preference and NASA TLX for Study 2.

Feedback from users indicated that Haptic Feedback elicited quicker responses than visual cues, thereby reducing the time users spent identifying and following visual Arrow indicators. The participants reported that Haptic Feedback was immediate, facilitating swift reactions with a lower cognitive burden, which, in turn, diminished mental and visual demands. Notably, users also observed an increased response speed when relying solely on Haptic Feedback. In contrast, Visual Feedback presented several disadvantages, including the constant redirection of attention, potential occlusion of the user’s view, and the effects of visual engagement and fatigue.

In our study, Haptic Feedback was limited to left and right directional signals. The participants suggested that more detailed information about the target’s location would be beneficial. Some reported that the area of vibration was excessively broad, causing delays in determining the intended direction. Consequently, users expressed a desire for a more confined area of vibration to enhance the precision of directional discernment. Furthermore, users generally preferred more pronounced Haptic Feedback intensities.

5. Discussion

In this section, we explore the implications of guidance design, drawing on the experimental findings from our studies.

5.1. Effect of Visual Guidance

Our research underscores the significant influence of visual interface design on users’ reaction times. The experimental findings indicate that Arrow feedback effectively reduces users’ reaction times. This improvement may be attributed to the greater visibility of Arrow feedback and the ease with which it is learned. In contrast, Shadow feedback may introduce ambiguity regarding its intended purpose. Moreover, shadows used as directional cues may be obscured by environmental factors, which could hinder their immediate recognition by users. Arrow feedback, by comparison, promotes a rapid and intuitive understanding of the intended direction, thereby supporting more efficient information processing.

Additionally, our study revealed that participants exhibited faster reaction times yet longer search durations for distant vehicles compared to nearby vehicles. This phenomenon may stem from some users not fully processing information about distant vehicles, prompting them to turn their heads immediately to locate the target. However, they encountered challenges in identifying potential hazards over a broader area, resulting in extended search times. Consequently, distant vehicle scenarios often necessitate greater head rotation angles and longer head travel distances.

Based on these findings, we recommend that designers consider refining the Arrow-Shadow combination by establishing a clearer functional distinction between these elements. Arrow could remain the primary directional indicator given its visibility advantages, while Shadow could be redesigned with enhanced color contrast and be adapted to convey proximity and hazard severity information. This approach might address the visibility challenges identified in complex backgrounds and accommodate the observed learning curve differences among users. Future experimental evaluation would be necessary to determine if such improvements to the Arrow-Shadow method effectively enhance visual guidance while addressing participant concerns about fatigue during extended cycling sessions.

5.2. Effect of Haptic Feedback

Study 2 demonstrates that Haptic Feedback significantly shortens users’ reaction times. The improvements in reaction time, user preference, and task load index from Visual Feedback to Visual-Haptic Feedback are summarized in Table 1. This improvement is likely attributable to Haptic Feedback providing directional cues through an additional sensory channel, which reduces visual cognitive load, facilitates more efficient information processing, and enables quicker responses. Furthermore, participant feedback indicated that Haptic Feedback was perceived as more immediate and responsive than Visual Feedback, suggesting that it enables users to detect and comprehend information more effectively, thereby shortening reaction times.

Table 1.

Comparison of Visual Feedback and Visual-Haptic Feedback for secondary tasks in Study 2.
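As a worked illustration, the relative change from Visual Feedback to Visual-Haptic Feedback can be derived from the means reported in Sections 4.3.1 and 4.3.2. The sketch below uses only those reported means; the helper name `percent_change` is our own, and the output is a derived illustration rather than a reproduction of Table 1.

```python
def percent_change(baseline, value):
    """Relative change of `value` with respect to `baseline`, in percent."""
    return (value - baseline) / baseline * 100.0


# (Visual Feedback mean, Visual-Haptic Feedback mean) as reported above.
reported = {
    "reaction time (s)": (1.38, 0.97),
    "user preference": (4.25, 7.58),
    "NASA TLX": (40.69, 28.89),
}

changes = {name: round(percent_change(visual, visual_haptic), 1)
           for name, (visual, visual_haptic) in reported.items()}
```

On these means, reaction time falls by about 29.7%, the preference rating rises by about 78.4%, and the NASA TLX score falls by about 29.0%.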

Additionally, search times were shorter with Haptic Feedback than with Visual Feedback. Coupled with feedback from participants who reported difficulties with orientation, it is plausible that the distinct and memorable nature of Haptic Feedback enhances user confidence. Users who feel assured in their interpretation of cues may hesitate less, which promotes smoother interactions.

The choice of feedback device location is another key factor affecting interaction quality. While our study used a vibration vest, other research has explored handlebar vibration feedback [30,32,47] and helmet vibration [48]. Notably, Matviienko et al. found that inherent bicycle vibrations during riding may interfere with tactile signal perception on handlebars, causing some signals to be missed [30]. Vo et al. explored head-based tactile feedback with TactiHelm, although this approach faces difficulties with signal recognition on the back of the head due to hair and helmet fit issues [48]. In comparison, vibration vests provide larger body contact areas and higher recognition rates [87], potentially making them more suitable for directional warning information. Future research should directly compare these feedback locations while weighing the advantages and disadvantages of each solution in terms of comfort and practicality.

Therefore, we recommend that designers of cyclist danger feedback systems leverage the complementary nature of Visual-Haptic Feedback. While both signals are delivered simultaneously, our findings suggest cyclists often process haptic signals more immediately due to the intuitive nature of the tactile channel. This allows cyclists to quickly identify danger direction through tactile cues while simultaneously receiving contextual details through Visual Feedback. This multimodal approach enhances information processing efficiency while providing comprehensive danger information without increasing cognitive load. Visual feedback works in concert with haptic alerts by offering richer contextual details and reinforcing directional information through intuitive visual cues. Future research should examine these multimodal interactions under more demanding scenarios, such as high-speed cycling or complex traffic environments, to determine if sensory conflicts might emerge when cognitive resources are further constrained and to optimize feedback design accordingly.

5.3. Effect of Traffic Environment

The traffic environment can significantly influence how users respond to potential hazards. For instance, in Study 1, reaction times and search times in scenario 2 were considerably shorter than in scenario 4. This difference may be attributed to the fact that heavy vehicles, being larger and more visually prominent, are easier to detect. Furthermore, the presence of a heavy vehicle, associated with a higher risk of severe accidents, may increase users’ vigilance, leading to quicker reactions.

In both studies, greater vehicle distances resulted in longer search times, as well as larger head movement angles and distances. This may be due to users needing to scan a broader area to locate potential hazards, which diminished their search efficiency. Therefore, when designing traffic alert systems, it is essential to carefully select the appropriate alert distance based on vehicle type and distance. For example, for heavy vehicles, which pose a greater danger and are easier to detect, alerts could be issued to cyclists earlier. Conversely, for smaller and less hazardous targets, the system could issue alerts when users are closer, thereby minimizing the time cyclists spend scanning their surroundings.
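The recommendation above could be expressed as a simple per-vehicle-type alert threshold, as in the following sketch. All threshold values, category names, and the function name are hypothetical placeholders chosen only to illustrate the idea; they are not derived from our data and would need to be determined empirically.

```python
# Hypothetical alert thresholds in metres; placeholders, not study results.
ALERT_DISTANCE_M = {
    "heavy_vehicle": 8.0,   # alert earlier: larger and more hazardous
    "bike_motorbike": 4.0,  # alert later: keeps the search area small
}
DEFAULT_ALERT_DISTANCE_M = 6.0  # hypothetical fallback for other types


def should_alert(vehicle_type, distance_m):
    """Return True once the vehicle is within its alert threshold."""
    threshold = ALERT_DISTANCE_M.get(vehicle_type, DEFAULT_ALERT_DISTANCE_M)
    return distance_m <= threshold


# At 7 m, only the heavy vehicle would trigger an alert in this sketch.
alerts = {kind: should_alert(kind, 7.0)
          for kind in ("heavy_vehicle", "bike_motorbike", "taxi")}
```

Keeping the thresholds in a lookup table makes it straightforward to tune each vehicle type separately once suitable distances have been evaluated.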

Environmental factors beyond traffic conditions should also be considered in alert system design. Research indicates that strong sunlight can reduce visual cue visibility [32,47], while cold weather may decrease sensitivity to vibration feedback [62], particularly when multiple clothing layers are worn. Future studies should also control for variables such as ambient noise levels and environmental distractions during experiments to enhance methodological rigor and provide more reliable assessments of system effectiveness. While our current work offers valuable preliminary insights into system application, environmental variables were not comprehensively evaluated in this phase; future research would benefit from extending testing to diverse real-world cycling conditions to more thoroughly assess system efficacy across various practical settings.

6. Conclusions

This research investigates the application of multimodal visual and haptic interaction technologies to enhance the road safety of vulnerable users, particularly cyclists. Through a systematic assessment of various visual displays and Haptic Feedback mechanisms, we identified effective strategies for aiding the recognition and localization of potential traffic hazards. The findings from Study 1 underscore the significance of designing intuitive and visually salient cues, such as directional arrows, to direct cyclists’ attention to critical areas within the traffic environment. Study 2 demonstrates the potential of integrating Haptic Feedback, delivered through vests, to further enhance cyclists’ awareness of peripheral vehicular activities without imposing additional cognitive burden. Based on participant feedback, the placement and delivery of tactile signals should be optimized to better meet user preferences.

The experiments conducted in realistic traffic scenarios provide valuable insights into the efficacy of multimodal interaction design in improving road safety. The results indicate that tailoring visual and haptic cues to the specific needs and preferences of diverse user groups can significantly enhance the usability and effectiveness of safety systems. By reducing the cognitive load associated with monitoring complex traffic environments, these multimodal assistance systems can enable cyclists to navigate more safely and confidently. The insights gained from this research contribute to the broader goal of creating safer and more inclusive traffic environments for all road users. Future studies should include larger and more diverse participant samples across age groups and cycling experience levels to improve generalizability. In our subsequent research, we will further explore the types of information most effectively communicated through Haptic Feedback and investigate optimal methods for its integration with Visual Feedback, along with examining long-term user habituation effects for practical applicability.

Author Contributions

Conceptualization, G.R., J.-H.L. and G.W.; methodology, G.R. and T.H.; software, G.R.; validation, T.H. and J.-H.L.; formal analysis, W.L. and N.M.; investigation, Z.H.; resources, G.W.; data curation, W.L. and N.M.; writing—original draft preparation, G.R., Z.H. and W.L.; writing—review and editing, Z.H., J.-H.L. and G.W.; visualization, W.L.; supervision, G.W.; project administration, G.R. and G.W.; funding acquisition, G.W. and J.-H.L. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by (1) the Korea Institute of Police Technology (KIPoT; Police Lab 2.0 program) grant funded by MSIT (RS-2023-00281194), (2) a Research Grant (2024-0035) funded by HAII Corporation, (3) the Fujian Province Social Science Foundation Project (No. FJ2025MGCA042), and (4) the 2024 Fujian Provincial Lifelong Education Quality Improvement Project (No. ZS24005).

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki and approved by the Institutional Review Board of the School of Design Arts, Xiamen University of Technology (Approval Date: 4 March 2024).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

All data are contained within the manuscript. Raw data are available from the corresponding author upon request.

Acknowledgments

We appreciate all participants who took part in the studies.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| VR | Virtual reality |
| HMDs | Head-mounted displays |
| MRTK | Mixed Reality Toolkit |
| 3D | Three-dimensional |

References

- World Health Organization. Global Status Report on Road Safety 2023; Technical Report; World Health Organization: Geneva, Switzerland, 2023.

- Xie, D.; Duan, X.; Ma, L.; Zhao, M.; Lu, J.; Li, C. Mixed Reality Assisted Orbital Reconstruction Navigation System for Reduction Surgery of Orbital Fracture. In Proceedings of the 2022 IEEE International Conference on Real-time Computing and Robotics (RCAR), Kunming, China, 17–21 July 2022; pp. 316–321.

- Hammady, R.; Ma, M.; AL-Kalha, Z.; Strathearn, C. A framework for constructing and evaluating the role of MR as a holographic virtual guide in museums. Virtual Real. 2021, 25, 895–918.

- Xiao, J.; Qian, Y.; Du, W.; Wang, Y.; Jiang, Y.; Liu, Y. VR/AR/MR in the Electricity Industry: Concepts, Techniques, and Applications. In Proceedings of the 2023 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Shanghai, China, 25–26 March 2023; pp. 82–88.

- Renner, P.; Pfeiffer, T. Attention guiding techniques using peripheral vision and eye tracking for feedback in augmented-reality-based assistance systems. In Proceedings of the 2017 IEEE Symposium on 3D User Interfaces (3DUI), Los Angeles, CA, USA, 18–19 March 2017; pp. 186–194.

- Baudisch, P.; Rosenholtz, R. Halo: A technique for visualizing off-screen objects. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI ’03), Fort Lauderdale, FL, USA, 5–10 April 2003; Association for Computing Machinery: New York, NY, USA, 2003; pp. 481–488.

- Gruenefeld, U.; Ali, A.E.; Heuten, W.; Boll, S. Visualizing out-of-view objects in head-mounted augmented reality. In Proceedings of the 19th International Conference on Human-Computer Interaction with Mobile Devices and Services (MobileHCI ’17), Vienna, Austria, 4–7 September 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 1–7.

- Bolton, A.; Burnett, G.; Large, D.R. An investigation of augmented reality presentations of landmark-based navigation using a head-up display. In Proceedings of the 7th International Conference on Automotive User Interfaces and Interactive Vehicular Applications (AutomotiveUI ’15), Nottingham, UK, 1–3 September 2015; Association for Computing Machinery: New York, NY, USA, 2015; pp. 56–63. [Google Scholar] [CrossRef]

- Patel, R.V.; Atashzar, S.F.; Tavakoli, M. Haptic Feedback and Force-Based Teleoperation in Surgical Robotics. Proc. IEEE 2022, 110, 1012–1027. [Google Scholar] [CrossRef]

- Jeong, S.; Yun, H.H.; Lee, Y.; Han, Y. Glow the Buzz: A VR Puzzle Adventure Game Mainly Played Through Haptic Feedback. In Proceedings of the CHI ’23: CHI Conference on Human Factors in Computing Systems, Hamburg, Germany, 23–28 April 2023. [Google Scholar]

- Jeong, C.; Kim, E.; Hwang, M.H.; Cha, H.R. Enhancing Drivers’ Perception of Traffic Lights through Haptic Alerts on the Brake Pedal. In Proceedings of the 2023 IEEE 6th International Conference on Knowledge Innovation and Invention (ICKII), Jeju Island, Republic of Korea, 27–30 July 2023; pp. 131–135. [Google Scholar] [CrossRef]

- Racat, M.; Plotkina, D. Sensory-enabling Technology in M-commerce: The Effect of Haptic Stimulation on Consumer Purchasing Behavior. Int. J. Electron. Commer. 2023, 27, 354–384. [Google Scholar] [CrossRef]

- Beheshti, E.; Borgos-Rodriguez, K.; Piper, A.M. Supporting Parent-Child Collaborative Learning through Haptic Feedback Displays. In Proceedings of the 18th ACM International Conference on Interaction Design and Children (IDC ’19), Boise, ID, USA, 12–15 June 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 58–70. [Google Scholar] [CrossRef]

- Pacchierotti, C.; Magdanz, V.; Medina-Sánchez, M.; Schmidt, O.G.; Prattichizzo, D.; Misra, S. Intuitive control of self-propelled microjets with haptic feedback. J. Micro-Bio Robot. 2015, 10, 37–53. [Google Scholar] [CrossRef]

- Luzuriaga, M.; Aydogdu, S.; Schick, B. Boosting Advanced Driving Information: A Real-world Experiment About the Effect of HUD on HMI, Driving Effort, and Safety. Int. J. Intell. Transp. Syst. Res. 2022, 20, 181–191. [Google Scholar] [CrossRef]

- Chen, W.; Song, J.; Wang, Y.; Wu, C.; Ma, S.; Wang, D.; Yang, Z.; Li, H. Inattentional blindness to unexpected hazard in augmented reality head-up display assisted driving: The impact of the relative position between stimulus and augmented graph. Traffic Inj. Prev. 2023, 24, 344–351. [Google Scholar] [CrossRef]

- Kim, H.; Isleib, J.D.; Gabbard, J.L. Virtual Shadow: Making Cross Traffic Dynamics Visible through Augmented Reality Head Up Display. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2016, 60, 2093–2097. [Google Scholar] [CrossRef]

- Jing, C.; Shang, C.; Yu, D.; Chen, Y.; Zhi, J. The impact of different AR-HUD virtual warning interfaces on the takeover performance and visual characteristics of autonomous vehicles. Traffic Inj. Prev. 2022, 23, 277–282. [Google Scholar] [CrossRef]

- Irwin, C.; Mollica, J.A.; Desbrow, B. Sensitive and Reliable Measures of Driver Performance in Simulated Motor-Racing. Int. J. Exerc. Sci. 2019, 12, 971. [Google Scholar] [CrossRef]

- Carsten, O.; Brookhuis, K. Issues arising from the HASTE experiments. Transp. Res. Part F Traffic Psychol. Behav. 2005, 8, 191–196. [Google Scholar] [CrossRef]

- Hwang, J. A Study on the Location of Bicycle Crossing considering Safety of Bicycle Users at Intersection. J. Korean Soc. Road Eng. 2014, 16, 91–98. [Google Scholar] [CrossRef]

- Waldner, M.; Le Muzic, M.; Bernhard, M.; Purgathofer, W.; Viola, I. Attractive Flicker—Guiding Attention in Dynamic Narrative Visualizations. IEEE Trans. Vis. Comput. Graph. 2014, 20, 2456–2465. [Google Scholar] [CrossRef] [PubMed]

- Dziennus, M.; Kelsch, J.; Schieben, A. Ambient light based interaction concept for an integrative driver assistance system—A driving simulator study. In Proceedings of the 2015 IEEE 18th International Conference on Intelligent Transportation Systems (ITSC), Las Palmas, Spain, 15–18 September 2015. [Google Scholar]

- Van Veen, T.; Karjanto, J.; Terken, J. Situation Awareness in Automated Vehicles through Proximal Peripheral Light Signals. In Proceedings of the 9th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Oldenburg, Germany, 24–27 September 2017; ACM: New York, NY, USA, 2017; pp. 287–292. [Google Scholar] [CrossRef]

- Von Sawitzky, T.; Grauschopf, T.; Riener, A. “Attention! A Door Could Open.”—Introducing Awareness Messages for Cyclists to Safely Evade Potential Hazards. Multimodal Technol. Interact. 2021, 6, 3. [Google Scholar] [CrossRef]

- Schinke, T.; Henze, N.; Boll, S. Visualization of off-screen objects in mobile augmented reality. In Proceedings of the 12th International Conference on Human Computer Interaction With Mobile Devices and Services, Lisbon, Portugal, 7–10 September 2010; ACM: New York, NY, USA, 2010; pp. 313–316. [Google Scholar] [CrossRef]

- Warden, A.C.; Wickens, C.D.; Mifsud, D.; Ourada, S.; Clegg, B.A.; Ortega, F.R. Visual Search in Augmented Reality: Effect of Target Cue Type and Location. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2022, 66, 373–377. [Google Scholar] [CrossRef]

- Wieland, J.; Garcia, R.C.H.; Reiterer, H.; Feuchtner, T. Arrow, Bézier Curve, or Halos?—Comparing 3D Out-of-View Object Visualization Techniques for Handheld Augmented Reality. In Proceedings of the 2022 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Singapore, 17–21 October 2022; IEEE: Singapore, 2022; pp. 797–806. [Google Scholar] [CrossRef]

- Woodworth, J.W.; Yoshimura, A.; Lipari, N.G.; Borst, C.W. Design and Evaluation of Visual Cues for Restoring and Guiding Visual Attention in Eye-Tracked VR. In Proceedings of the 2023 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Shanghai, China, 25–26 March 2023; pp. 442–450, ISBN 9798350348392. [Google Scholar] [CrossRef]

- Matviienko, A.; Mehmedovic, D.; Müller, F.; Mühlhäuser, M. “Baby, You can Ride my Bike”: Exploring Maneuver Indications of Self-Driving Bicycles using a Tandem Simulator. Proc. ACM Hum.-Comput. Interact. 2022, 6, 1–21. [Google Scholar] [CrossRef]

- Tseng, H.Y.; Liang, R.H.; Chan, L.; Chen, B.Y. LEaD: Utilizing Light Movement as Peripheral Visual Guidance for Scooter Navigation. In Proceedings of the 17th International Conference on Human-Computer Interaction with Mobile Devices and Services, Copenhagen, Denmark, 24–27 August 2015; pp. 323–326. [Google Scholar] [CrossRef]

- Matviienko, A.; Ananthanarayan, S.; Brewster, S.; Heuten, W.; Boll, S. Comparing unimodal lane keeping cues for child cyclists. In Proceedings of the 18th International Conference on Mobile and Ubiquitous Multimedia, Pisa, Italy, 27–30 November 2019; pp. 1–11, ISBN 9781450376242. [Google Scholar] [CrossRef]

- Von Sawitzky, T.; Grauschopf, T.; Riener, A. No Need to Slow Down! A Head-up Display Based Warning System for Cyclists for Safe Passage of Parked Vehicles. In Proceedings of the 12th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Virtual Event, 21–22 September 2020; pp. 1–3, ISBN 9781450380669. [Google Scholar] [CrossRef]

- Chen, H.; Zendehdel, N.; Leu, M.C.; Yin, Z. Fine-grained activity classification in assembly based on multi-visual modalities. J. Intell. Manuf. 2024, 35, 2215–2233. [Google Scholar] [CrossRef]

- Herslund, M.B.; Jørgensen, N.O. Looked-but-failed-to-see-errors in traffic. Accid. Anal. Prev. 2003, 35, 885–891. [Google Scholar] [CrossRef]

- Shakeri, G.; Ng, A.; Williamson, J.H.; Brewster, S.A. Evaluation of Haptic Patterns on a Steering Wheel. In Proceedings of the 8th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Ann Arbor, MI, USA, 24–26 October 2016; pp. 129–136, ISBN 9781450345330. [Google Scholar] [CrossRef]

- Van Erp, J.B.; Van Veen, H.A. Vibrotactile in-vehicle navigation system. Transp. Res. Part F Traffic Psychol. Behav. 2004, 7, 247–256. [Google Scholar] [CrossRef]

- Birrell, S.A.; Young, M.S.; Weldon, A.M. Vibrotactile pedals: Provision of haptic feedback to support economical driving. Ergonomics 2013, 56, 282–292. [Google Scholar] [CrossRef]

- Matviienko, A.; Müller, F.; Schön, D.; Fayard, R.; Abaspur, S.; Li, Y.; Mühlhäuser, M. E-ScootAR: Exploring Unimodal Warnings for E-Scooter Riders in Augmented Reality. In Proceedings of the CHI Conference on Human Factors in Computing Systems Extended Abstracts (CHI ’22), New Orleans, LA, USA, 30 April–5 May 2022; pp. 1–7. [Google Scholar] [CrossRef]

- Bial, D.; Appelmann, T.; Rukzio, E.; Schmidt, A. Improving Cyclists Training with Tactile Feedback on Feet. In Haptic and Audio Interaction Design; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2012; Volume 7468, pp. 41–50. [Google Scholar] [CrossRef]

- Poppinga, B.; Pielot, M.; Boll, S. Tacticycle: A tactile display for supporting tourists on a bicycle trip. In Proceedings of the 11th International Conference on Human-Computer Interaction with Mobile Devices and Services, Bonn, Germany, 15–18 September 2009; ACM: New York, NY, USA, 2009; pp. 1–4. [Google Scholar] [CrossRef]

- Pielot, M.; Poppinga, B.; Heuten, W.; Boll, S. Tacticycle: Supporting exploratory bicycle trips. In Proceedings of the 14th International Conference on Human-Computer Interaction With Mobile Devices and Services (MobileHCI ’12), San Francisco, CA, USA, 21–24 September 2012; pp. 369–378, ISBN 9781450311052. [Google Scholar] [CrossRef]

- Bial, D.; Kern, D.; Alt, F.; Schmidt, A. Enhancing outdoor navigation systems through vibrotactile feedback. In Proceedings of the CHI ’11 Extended Abstracts on Human Factors in Computing Systems, Vancouver, BC, Canada, 7–12 May 2011; pp. 1273–1278, ISBN 9781450302685. [Google Scholar] [CrossRef]

- Prasad, M.; Taele, P.; Goldberg, D.; Hammond, T.A. HaptiMoto: Turn-by-turn haptic route guidance interface for motorcyclists. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI ’14), Toronto, ON, Canada, 26 April–1 May 2014; pp. 3597–3606. [Google Scholar] [CrossRef]

- Steltenpohl, H.; Bouwer, A. Vibrobelt: Tactile navigation support for cyclists. In Proceedings of the 2013 International Conference on Intelligent User Interfaces, Santa Monica, CA, USA, 19–22 March 2013; ACM: New York, NY, USA, 2013; pp. 417–426. [Google Scholar] [CrossRef]

- Kiss, F.; Boldt, R.; Pfleging, B.; Schneegass, S. Navigation Systems for Motorcyclists: Exploring Wearable Tactile Feedback for Route Guidance in the Real World. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (CHI ’18), Montreal, QC, Canada, 21–26 April 2018; pp. 1–7, ISBN 9781450356206. [Google Scholar] [CrossRef]

- Matviienko, A.; Ananthanarayan, S.; El Ali, A.; Heuten, W.; Boll, S. NaviBike: Comparing Unimodal Navigation Cues for Child Cyclists. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (CHI ’19), Glasgow, UK, 4–9 May 2019; pp. 1–12, ISBN 9781450359702. [Google Scholar] [CrossRef]

- Vo, D.B.; Saari, J.; Brewster, S. TactiHelm: Tactile Feedback in a Cycling Helmet for Collision Avoidance. In Proceedings of the Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems (CHI ’21), Yokohama, Japan, 8–13 May 2021; pp. 1–5, ISBN 9781450380959. [Google Scholar] [CrossRef]

- Geitner, C.; Biondi, F.; Skrypchuk, L.; Jennings, P.; Birrell, S. The comparison of auditory, tactile, and multimodal warnings for the effective communication of unexpected events during an automated driving scenario. Transp. Res. Part F Traffic Psychol. Behav. 2019, 65, 23–33. [Google Scholar] [CrossRef]

- Ho, C.; Gray, R.; Spence, C. Reorienting Driver Attention with Dynamic Tactile Cues. IEEE Trans. Haptics 2014, 7, 86–94. [Google Scholar] [CrossRef] [PubMed]

- Wu, C.; Wu, H.; Lyu, N.; Zheng, M. Take-Over Performance and Safety Analysis Under Different Scenarios and Secondary Tasks in Conditionally Automated Driving. IEEE Access 2019, 7, 136924–136933. [Google Scholar] [CrossRef]

- Petermeijer, S.M.; Cieler, S.; De Winter, J.C.F. Comparing spatially static and dynamic vibrotactile take-over requests in the driver seat. In Proceedings of the 9th International Conference on Automotive User Interfaces and Interactive Vehicular Applications (AutomotiveUI ’17), Oldenburg, Germany, 24–27 September 2017; pp. 287–292. [Google Scholar] [CrossRef]

- Petermeijer, S.; Bazilinskyy, P.; Bengler, K.; De Winter, J. Take-over again: Investigating multimodal and directional TORs to get the driver back into the loop. Appl. Ergon. 2017, 62, 204–215. [Google Scholar] [CrossRef] [PubMed]

- Huang, G.; Pitts, B.J. To Inform or to Instruct? An Evaluation of Meaningful Vibrotactile Patterns to Support Automated Vehicle Takeover Performance. IEEE Trans. Hum.-Mach. Syst. 2023, 53, 678–687. [Google Scholar] [CrossRef]

- Petermeijer, S.M.; De Winter, J.C.F.; Bengler, K.J. Vibrotactile Displays: A Survey With a View on Highly Automated Driving. IEEE Trans. Intell. Transp. Syst. 2016, 17, 897–907. [Google Scholar] [CrossRef]

- Prewett, M.S.; Elliott, L.R.; Walvoord, A.G.; Coovert, M.D. A Meta-Analysis of Vibrotactile and Visual Information Displays for Improving Task Performance. IEEE Trans. Syst. Man Cybern. Part C (Appl. Rev.) 2012, 42, 123–132. [Google Scholar] [CrossRef]

- Matviienko, A.; Ananthanarayan, S.; Borojeni, S.S.; Feld, Y.; Heuten, W.; Boll, S. Augmenting bicycles and helmets with multimodal warnings for children. In Proceedings of the 20th International Conference on Human-Computer Interaction with Mobile Devices and Services (MobileHCI ’18), Barcelona, Spain, 3–6 September 2018; pp. 1–13, ISBN 9781450358989. [Google Scholar] [CrossRef]

- Wang, M.; Liao, Y.; Lyckvi, S.L.; Chen, F. How drivers respond to visual vs. auditory information in advisory traffic information systems. Behav. Inf. Technol. 2020, 39, 1308–1319. [Google Scholar] [CrossRef]

- Pietra, A.; Vazquez Rull, M.; Etzi, R.; Gallace, A.; Scurati, G.W.; Ferrise, F.; Bordegoni, M. Promoting eco-driving behavior through multisensory stimulation: A preliminary study on the use of visual and haptic feedback in a virtual reality driving simulator. Virtual Real. 2021, 25, 945–959. [Google Scholar] [CrossRef]

- Fitch, G.M.; Kiefer, R.J.; Hankey, J.M.; Kleiner, B.M. Toward Developing an Approach for Alerting Drivers to the Direction of a Crash Threat. Hum. Factors J. Hum. Factors Ergon. Soc. 2007, 49, 710–720. [Google Scholar] [CrossRef]

- Yu, D.; Park, C.; Choi, H.; Kim, D.; Hwang, S.H. Takeover Safety Analysis with Driver Monitoring Systems and Driver–Vehicle Interfaces in Highly Automated Vehicles. Appl. Sci. 2021, 11, 6685. [Google Scholar] [CrossRef]

- Matviienko, A.; Ananthanarayan, S.; Kappes, R.; Heuten, W.; Boll, S. Reminding child cyclists about safety gestures. In Proceedings of the 9th ACM International Symposium on Pervasive Displays, Virtual Event, 4–5 June 2020; pp. 1–7. [Google Scholar] [CrossRef]

- Müller, A.; Ogrizek, M.; Bier, L.; Abendroth, B. Design concept for a visual, vibrotactile and acoustic take-over request in a conditional automated vehicle during non-driving-related tasks. In Proceedings of the 10th International Conference on Automotive User Interfaces and Interactive Vehicular Applications (AutomotiveUI ’18), Toronto, ON, Canada, 23–25 September 2018; pp. 210–220. [Google Scholar] [CrossRef]

- Bazilinskyy, P.; Petermeijer, S.; Petrovych, V.; Dodou, D.; De Winter, J. Take-over requests in highly automated driving: A crowdsourcing survey on auditory, vibrotactile, and visual displays. Transp. Res. Part F Traffic Psychol. Behav. 2018, 56, 82–98. [Google Scholar] [CrossRef]

- Cobus, V.; Meyer, H.; Ananthanarayan, S.; Boll, S.; Heuten, W. Towards reducing alarm fatigue: Peripheral light pattern design for critical care alarms. In Proceedings of the 10th Nordic Conference on Human-Computer Interaction, Oslo, Norway, 29 September–3 October 2018; pp. 654–663. [Google Scholar] [CrossRef]

- Gruenefeld, U.; Stratmann, T.C.; Jung, J.; Lee, H.; Choi, J.; Nanda, A.; Heuten, W. Guiding Smombies: Augmenting Peripheral Vision with Low-Cost Glasses to Shift the Attention of Smartphone Users. In Proceedings of the 2018 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Munich, Germany, 16–20 October 2018; pp. 127–131. [Google Scholar] [CrossRef]

- Yun, H.; Yang, J.H. Multimodal warning design for take-over request in conditionally automated driving. Eur. Transp. Res. Rev. 2020, 12, 34. [Google Scholar] [CrossRef]

- Calvi, A.; D’Amico, F.; Ferrante, C.; Bianchini Ciampoli, L. Evaluation of augmented reality cues to improve the safety of left-turn maneuvers in a connected environment: A driving simulator study. Accid. Anal. Prev. 2020, 148, 105793. [Google Scholar] [CrossRef]