1. Introduction

Facial emotions hold significant importance in the fields of computer vision and artificial intelligence, as they are a crucial means of conveying intentions through non-verbal communication. Emotions, whether openly expressed or not, play an important role in our interactions with others [1]. To detect and identify symptoms regardless of their visibility, trained doctors must be equipped with the right early detection and analysis tools [2]. Emotion recognition technology, especially emotion recognition based on R-CNN classification through deep learning, has been widely studied in fields such as medicine, human–computer interfaces, urban noise perception, and animation. Deep learning, and CNNs in particular, has demonstrated the ability to extract meaningful features in many neural network applications, including facial analysis and healthcare environments [3,4,5,6,7,8]. With the advancement of artificial intelligence (AI) technology and the growing demand for applications in the era of big data, experts expect facial emotion recognition (FER) solutions to work effectively in complex environments with occlusions, multiple viewpoints, and many large objects [9]. This technology has been used to diagnose children with autism spectrum disorders and to keep them safe in various situations. Emotion recognition can draw on various modalities, such as electroencephalography (EEG), facial emotion (FE), text analysis, and speech processing [10,11,12,13,14,15,16,17]. Among these modalities, facial emotions are widely recognized and offer several advantages, including their high visibility, ease of recognition, and the availability of large facial datasets for training [18,19]. Consequently, collecting the most relevant data under optimal conditions is crucial for accurately training and classifying with facial emotion (FE) classifiers [20,21,22]. Traditionally, FER systems preprocess input images by performing face detection. Although several areas of the face can be used for FE recognition, the most commonly used areas are the nose and mouth, while other areas, such as the cheeks, forehead, and eyes, provide additional insight into the different types of facial emotions [23]. Recent studies have even explored the detection of facial emotions using data collected from the ear and hair [24].

Given the significance of the mouth and eyes in detecting facial emotions, machine vision models should give these regions the greatest weight and treat other facial areas as less informative. In this research, we propose a CNN-based framework for human facial emotion recognition (HFER) that incorporates the findings and observations mentioned above [25]. Specifically, an attention mechanism is employed to focus on crucial facial features. With attentional convolutional networks, it is possible to achieve competitive accuracy even with relatively few layers (50 or fewer) [26]. This approach enhances the performance and efficiency of the proposed HFER model.
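To make the idea of "focusing on crucial facial features" concrete, the sketch below shows a generic softmax spatial attention over a CNN feature map. It is an illustrative assumption, not necessarily the mechanism used in [26]: the names `spatial_attention` and `score_weights` are hypothetical, and in a trained model the scoring weights would be learned.

```python
import numpy as np

def spatial_attention(features, score_weights):
    """Softmax attention over the spatial positions of an (H, W, C) feature map.

    Returns the (H, W) attention map and the attention-weighted (C,) descriptor.
    """
    h, w, c = features.shape
    flat = features.reshape(h * w, c)
    scores = flat @ score_weights                  # one relevance score per position
    scores = scores - scores.max()                 # numerical stability
    attn = np.exp(scores) / np.exp(scores).sum()   # weights over all H*W positions sum to 1
    pooled = (flat * attn[:, None]).sum(axis=0)    # emphasize high-scoring regions
    return attn.reshape(h, w), pooled
```

On a 4 × 4 map in which a single cell (standing in for, say, the mouth region) carries strong activations, almost all of the attention mass lands on that cell, so the pooled descriptor is dominated by it.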

In intelligent medical systems, accurately recording and understanding human emotional states is essential for personalized and effective care. Existing solutions based on traditional machine learning or simple CNN architectures have had some success but often fail to handle the complexity and diversity of human emotions, resulting in limited accuracy and performance. Despite considerable advances in deep learning, challenges remain, such as overfitting, high computational costs, and limited generalization. To address these problems, we propose an advanced human emotion recognition (HER) model that integrates four state-of-the-art CNN architectures: DenseNet, ResNet-50, Enhanced Inception-V3, and Inception-V2. By extracting high-dimensional features from these models and combining them into a single extended feature vector, our method substantially improves the accuracy and robustness of emotion recognition. In particular, the Inception-V3 framework, known for its powerful image recognition capabilities, addresses the demanding requirements of identifying complex emotional states. Although previous studies have demonstrated the potential of CNN-based models, they often fail to achieve the precision and efficiency required for practical use because of overfitting and high computational requirements. Our model seeks to overcome these limits by leveraging the combined advantages of several state-of-the-art CNN architectures, although challenges such as data diversity, model interpretability, and real-time application still require further research.

The current study addresses this gap by proposing a new method called HFER, which combines advanced feature extraction with powerful machine learning algorithms to improve the accuracy and efficiency of HER. The method aims to achieve real-time recognition of facial emotions in challenging environments, with a view toward smart healthcare systems that improve the efficiency of care.

This work focuses on developing deep learning models based on deep ensemble classification and an improved version of Faster R-CNN for real-time monitoring of patients’ emotions, designed to be coupled with mobile robots in healthcare environments.

The main contributions of this work are detailed research on human facial emotion recognition (HFER) in video and image formats using a customized Faster R-CNN method for accurate facial region detection, transfer learning with state-of-the-art CNN models, verification on rigorous reference datasets, and the use of data augmentation techniques to improve model accuracy.

Overall, this study proposes a comprehensive human facial emotion recognition model aimed at smart healthcare systems, with promising results in accurately capturing and understanding human emotional states. The remainder of this article covers related work, materials and methods, experimental results, and a conclusion that also outlines future research directions.

2. Related Work

This section provides a comprehensive overview of existing work in emotion recognition. Six primary emotions, namely joy, fear, anger, sadness, disgust, and surprise, along with the neutral state, have been widely recognized and studied [27]. Notably, Ekman introduced the Facial Action Coding System (FACS) [28], which has since become the standard for emotion recognition research. As research progressed, the neutral expression was incorporated as a seventh basic category in many emotion datasets.

Figure 1 illustrates sample images depicting different emotions from notable reference datasets such as FER-2013, EMOTIC, CK+, and AffectNet. These datasets cover a range of emotions, including happiness, anger, disgust, fear, sadness, surprise, and contempt.

By analyzing and synthesizing the findings from these reference datasets, researchers have made significant strides in understanding and identifying different emotional states, and the availability of these datasets has facilitated the development of robust and accurate emotion recognition systems. The study of human emotions plays an important role in applications such as medical diagnosis, affective computing, and human–computer interaction. However, to progress in this area and address the associated challenges, it is crucial to study and evaluate the effectiveness of existing methods and techniques. This review examines these efforts from different perspectives and summarizes the state of the art in emotion recognition across domains.

Early research on emotion recognition used a two-stage machine learning pipeline: image features were extracted in the first stage and classified into emotions in the second. Feature extraction was typically handcrafted, using methods such as local binary patterns (LBP), Gabor wavelets, Haar features, and edge histogram descriptors. These methods are limited on datasets with extensive intra-class variability.
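As an illustration of the first (handcrafted) stage, the sketch below computes basic 3 × 3 LBP codes and their normalized 256-bin histogram, which would then be passed to a separate classifier in the second stage. This is the textbook LBP variant, assumed here for illustration; the function name `lbp_histogram` is hypothetical.

```python
import numpy as np

def lbp_histogram(gray):
    """Basic 3x3 local binary pattern (LBP) codes and their normalized 256-bin histogram."""
    g = np.asarray(gray, dtype=float)
    h, w = g.shape
    center = g[1:-1, 1:-1]
    # Eight neighbours, clockwise from top-left; each contributes one bit of the code.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros(center.shape, dtype=np.int64)
    for bit, (dy, dx) in enumerate(offsets):
        neighbour = g[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        codes |= (neighbour >= center).astype(np.int64) << bit
    hist = np.bincount(codes.ravel(), minlength=256)
    return codes, hist / hist.sum()
```

Because the histogram discards where each pattern occurred, large intra-class variation in pose or occlusion changes the descriptor in ways a simple second-stage classifier struggles to absorb, which is the limitation noted above.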

Figure 1 also illustrates some of the challenges, such as partial occlusion of the face and blurred facial features.

Figure 1 shows sample pictures of various emotions from the four state-of-the-art datasets used in this study (CK+, FER-2013, EMOTIC, and AffectNet). The primary emotions are anger, disgust, fear, happiness, contempt, sadness, and surprise. Significant progress has been made in neural networks, deep learning, image classification, and vision challenges. The work in [28] demonstrated the effectiveness of convolutional neural networks (CNNs) in emotion recognition using datasets such as the Toronto Face Dataset (TFD) and the Cohn–Kanade dataset (CK+). Similarly, other studies focused on deep learning for transforming human images into animated faces and on network architectures for facial emotion recognition. State-of-the-art accuracy on the CK+ and EMOTIC datasets was achieved using Boosted Deep Belief Networks (BDBNs), while discriminant neurons were used to enhance spontaneous facial emotion recognition [29,30,31,32,33,34,35,36,37,38,39,40].

Researchers have proposed end-to-end network topologies and self-healing algorithms to handle unclear and blurry facial images, while the identity-aware CNN (IA-CNN) aimed to reduce the influence of identity-related variations when learning expression representations [41,42,43,44,45]. The Region Attention Network (RAN) addressed pose variations and occlusions in facial emotion recognition. Several studies, including Deep Learning Attention Networks, Multi-Attention Networks for Facial Emotion Recognition, and A Review of Emotion Recognition Using Facial Emotion Recognition, reported significant improvements in emotion recognition. However, identifying the facial regions essential for emotion perception remains challenging [46,47,48].

The approach described in [49,50] introduces a framework based on attentional convolutional networks, specifically designed to focus on the facial regions crucial for accurate emotion perception. By addressing the limitations of previous approaches and leveraging attention mechanisms, the authors aim to improve the effectiveness of emotion recognition in facial analysis.

In a recent study [51], the ENCASE framework was introduced, which integrates expert features and deep neural networks (DNNs) for the classification of electrocardiograms (ECG). Researchers have explored deep feature extraction techniques [52] in various fields, such as statistics, signal processing, and medicine. The same study presents an algorithm that identifies and extracts the central wave, a prominent feature of long-term ECG recordings; the extracted features are then combined and grouped for classification.

Another sophisticated technique has been proposed for remote sensing scene classification [53,54]. It employs an ensemble of deep rule-based (DRB) classifiers, each trained with a different level of spatial awareness. The DRB classifiers use prototype-based zero-order fuzzy rules that are transparent, parallelizable, and human-interpretable. According to the authors, the DRB ensemble uses pre-trained neural networks as feature descriptors to achieve excellent performance while keeping training transparent and parallel [55,56]. Numerical examples on reference datasets demonstrate the high accuracy of the method, which also produces human-interpretable fuzzy rules [57,58,59,60,61,62].

An extensive review of the literature shows that numerous researchers have focused on improving the performance of CNN models on human facial emotion datasets that include realistic facial emotions, real-world images, and in-laboratory training pictures. There remains a need for robust models that accurately classify human emotions and extract significant image features by integrating deep learning fusion techniques. In our study, we propose a CNN-based DenseNet model. We evaluated HFER models and found that the DenseNet model achieved high performance and accuracy. Furthermore, a comprehensive evaluation is performed on several FER datasets, including EMOTIC, CK+, FER-2013, and AffectNet, systematically demonstrating the superior performance of the proposed model.

2.1. Transfer Learning in Pre-Trained CNNs

The following subsections discuss transfer learning for our proposed framework in detail.

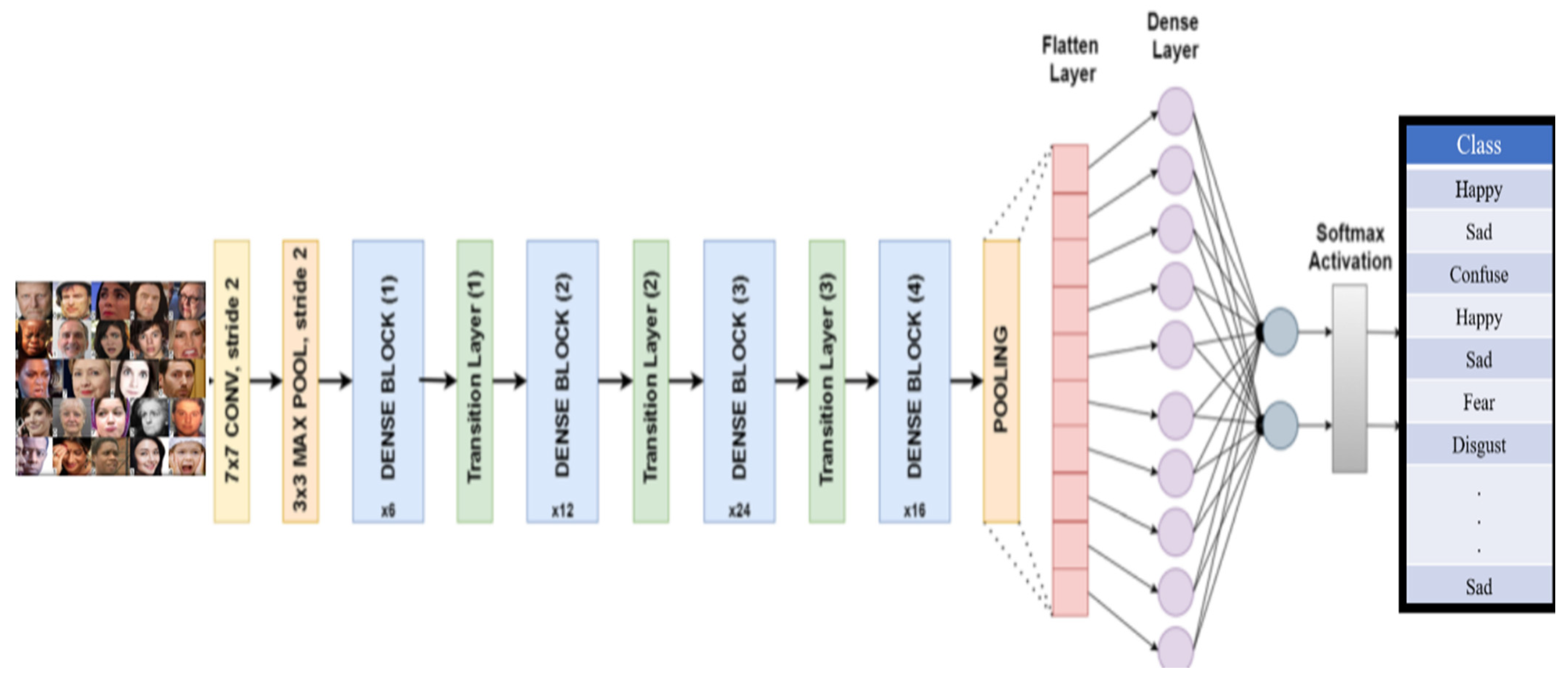

2.1.1. DenseNet

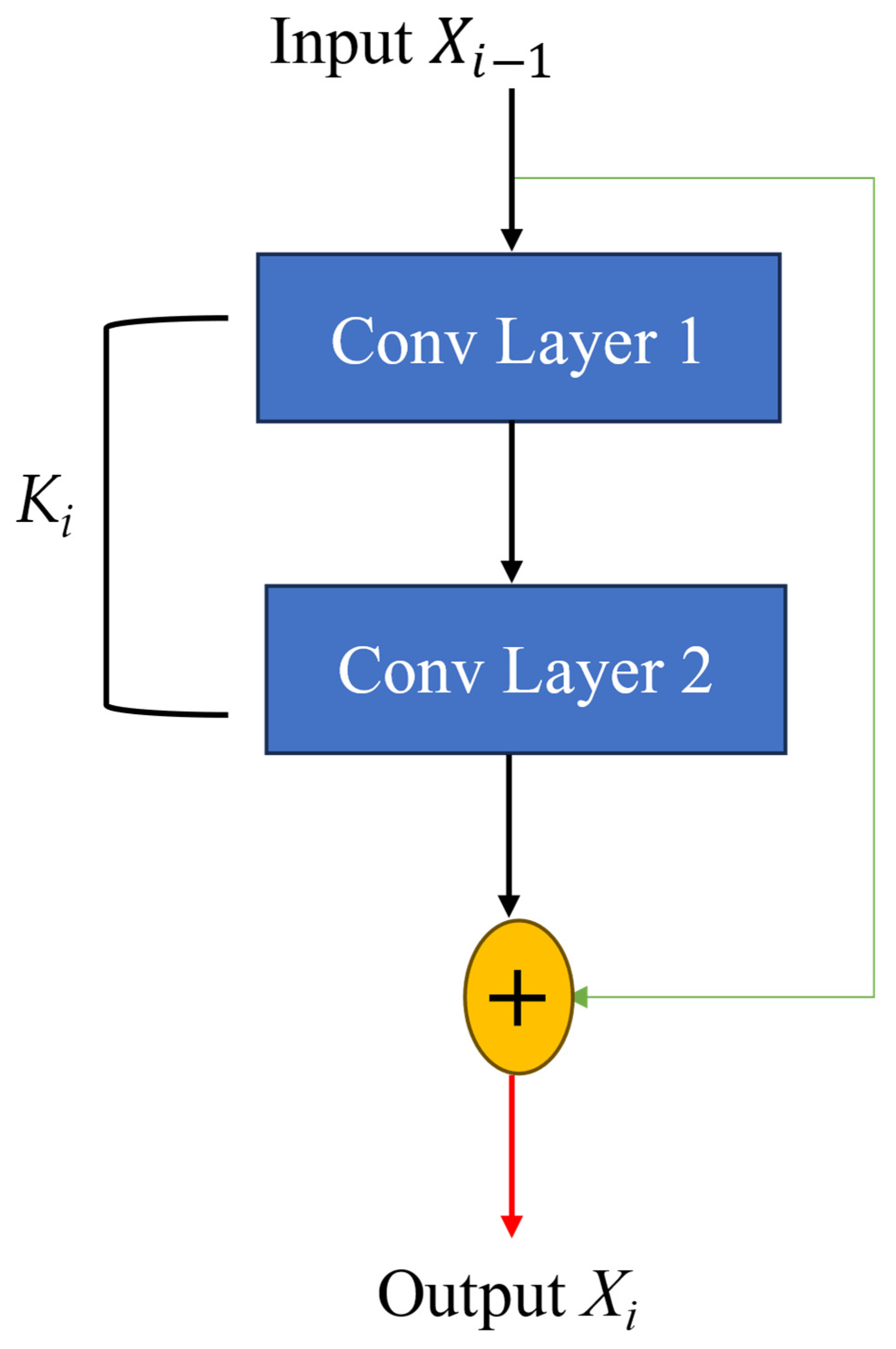

The ResNet structure, as depicted in Figure 2, illustrates how convolutional layers are used in training the CNN. The intermediate values $X_i$ and $K_i$ are passed to the subsequent CNN stage. In a conventional CNN, each layer is connected only to the next one, as represented by Equation (1); ResNet addresses the difficulty of training deep networks by adding skip connections. These connections bypass at least two layers, mitigating issues such as vanishing or exploding gradients. Through these shortcut connections, ResNet introduces residual learning: the output of the convolutional layers is added to the block's input, yielding the overall output defined by Equation (2).

DenseNet takes a distinctive approach by concatenating feature maps. The arrow colors in Figure 2 represent specific functional pathways within the ResNet block: blue arrows indicate the standard forward propagation of features through convolutional layers; green arrows represent shortcut connections that skip at least two layers, addressing vanishing gradients and enabling residual learning; and red arrows highlight operations that sum or concatenate outputs to integrate them across layers. DenseNet's merging process is represented by Equation (3), in which the feature maps of all preceding layers are concatenated instead of summed. With this strategy, DenseNet creates direct connections between all layers, facilitating the propagation of information throughout the network [61].
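The text refers to Equations (1)–(3) without reproducing them. Written in this section's notation ($X_i$ for the output of level $i$, $K_i$ for its nonlinear operation), the standard plain, residual, and dense connectivity rules from the ResNet and DenseNet literature are given below; treat them as an assumption about what those equations express. Here $[\cdot]$ denotes channel-wise concatenation.

```latex
\begin{align}
X_i &= K_i\left(X_{i-1}\right) && \text{plain CNN, cf. Equation (1)}\\
X_i &= K_i\left(X_{i-1}\right) + X_{i-1} && \text{ResNet, cf. Equation (2)}\\
X_i &= K_i\left(\left[X_0, X_1, \ldots, X_{i-1}\right]\right) && \text{DenseNet, cf. Equation (3)}
\end{align}
```

The only structural difference between the last two rows is summation versus concatenation: summation keeps the number of feature maps fixed, whereas concatenation makes it grow with every layer, which is why DenseNet needs bottleneck and transition layers.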

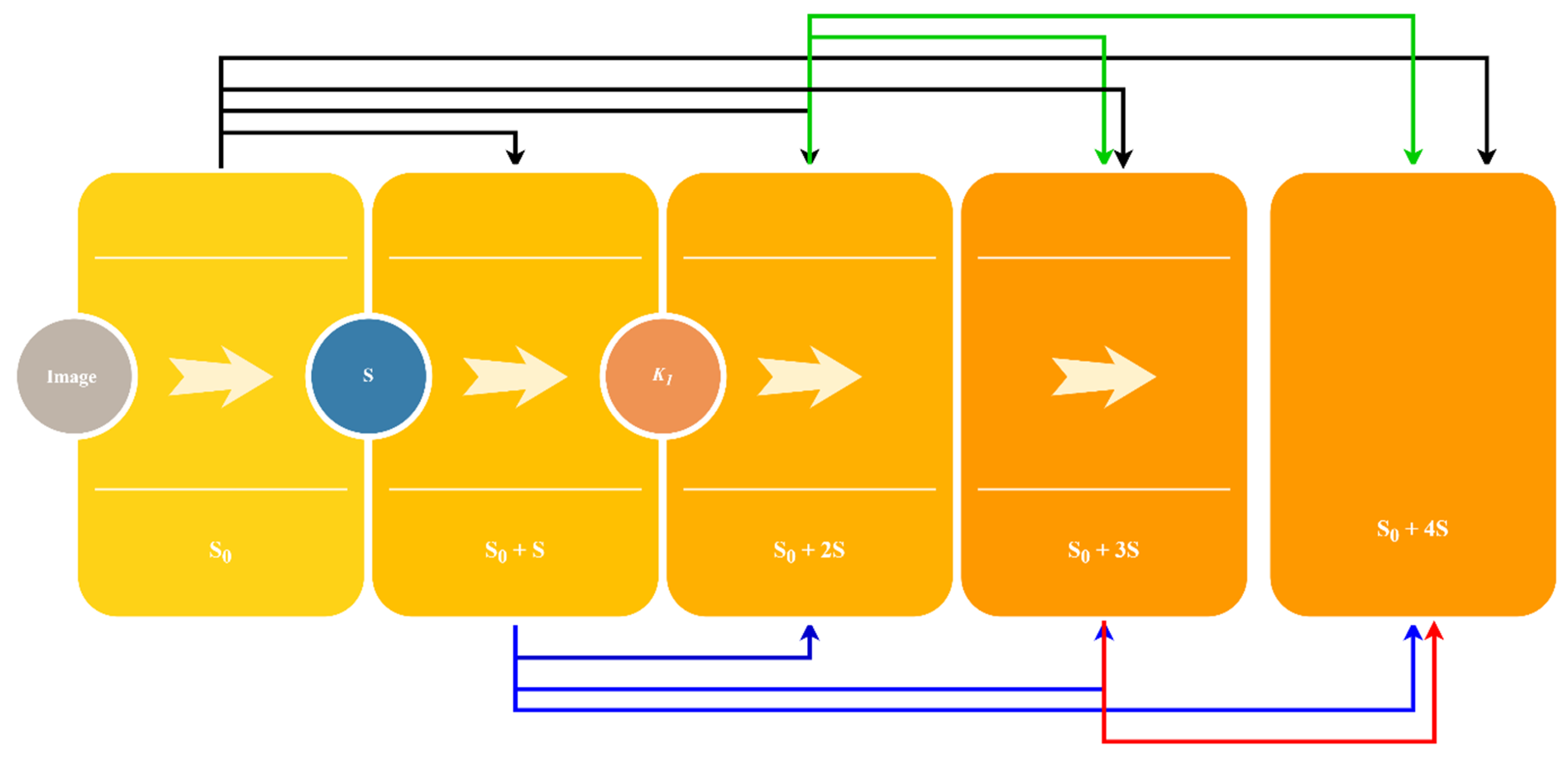

In the proposed DenseNet architecture [63], the layer index is denoted $i$, the nonlinear operation $K$, and the feature map of the $i$-th layer $X_i$. A block diagram of DenseNet is presented in Figure 3. By considering Equation (2), DenseNet connects each layer to all previous layers by reusing their feature maps. The term "collect" denotes the aggregation (concatenation) of feature maps, which are then used to generate a new feature map. This approach offers several advantages, including alleviating vanishing gradients and promoting feature reuse. However, certain modifications are necessary for the DenseNet structure to be feasible: because concatenation requires feature maps of the same spatial size, downsampling is performed between blocks. The overall connectivity pattern depicted in Figure 3 progresses from left to right, increasing by $S + 1$ at each step. Here, $S$ represents the actual modulus, and the additional values, such as $S + 2$, $S + 3$, and $S + 4$, correspond to patch tabs within the structure.

The levels are linked through a total of 10 direct connections in the DenseNet block. As Figure 4 illustrates, each layer, which generates $S$ feature maps, is associated with one operation $K_i$, giving a total of 5 tiers. Each layer adds $S$ feature maps, so the top layer receives $S_0 + 4S$ feature maps, where $S_0$ is the number of feature maps provided by the preceding layer. In this study, the default value of $S$ is 32. Because the number of inputs to later layers grows large, DenseNet places a "bottleneck" layer before each convolutional layer; this bottleneck reduces computational cost by reducing the number of feature maps. Additionally, transition layers downsample the feature maps between dense blocks while preserving the model’s accuracy [62]. Each layer of a dense block generates $S$ feature maps, and a compression factor limits the number of feature maps passed on by the transition layers, keeping the number of feature maps under control throughout the network. For further insight into the relationship between dense blocks and transition layers, refer to Figure 4.
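The channel bookkeeping described above can be checked with a few lines of arithmetic. This sketch assumes the standard DenseNet conventions: growth rate $S$ (32 here, as in the text), a compression factor of 0.5 (the DenseNet-BC default), and 64 input feature maps, a value borrowed from DenseNet-121 purely for illustration. The function names are hypothetical.

```python
def dense_block_channels(s0, growth, num_layers):
    """Input width seen by each layer of a dense block, plus the block's output width."""
    # Layer l (1-indexed) sees the block input plus the outputs of the l-1 earlier layers.
    inputs = [s0 + growth * (l - 1) for l in range(1, num_layers + 1)]
    return inputs, s0 + growth * num_layers

def direct_connections(num_tiers):
    """Direct connections when every tier feeds every later tier."""
    return num_tiers * (num_tiers - 1) // 2

def transition_channels(channels, compression=0.5):
    """Feature maps kept by a transition layer with the given compression factor."""
    return int(compression * channels)

# Five tiers (block input plus four layers) give 10 direct connections,
# and whatever follows the block receives S0 + 4S feature maps.
print(direct_connections(5))               # 10
print(dense_block_channels(64, 32, 4))     # ([64, 96, 128, 160], 192)
print(transition_channels(256))            # 128
```

The linear growth of the input width is exactly what the bottleneck layer is meant to contain, and the transition layer's compression halves the width before the next dense block.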

Figure 4 illustrates the overall structure and process of DenseNet, which comprises input layers, dense blocks, transition layers, and Global Average Pooling (GAP) layers, all of which contribute to seamless transitions between different layers. The transition layers, composed of batch normalization, a convolutional layer, and a pooling layer, keep the layers consistent. The GAP layer works like conventional pooling but places a stronger emphasis on feature reduction: it reduces each feature map to a single numerical value by averaging over all spatial positions, producing a condensed feature representation.
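A minimal sketch of global average pooling, assuming channels-last feature maps of shape (H, W, C) or (N, H, W, C). The 7 × 7 × 1024 example size is illustrative only (it is the final feature-map size of common DenseNet-121 configurations).

```python
import numpy as np

def global_average_pool(feature_maps):
    """Collapse each channel's H x W map to its spatial mean.

    Accepts (H, W, C) or batched (N, H, W, C) arrays (channels last).
    """
    return np.asarray(feature_maps, dtype=float).mean(axis=(-3, -2))

x = np.arange(8).reshape(2, 2, 2)   # channel 0 holds 0, 2, 4, 6; channel 1 holds 1, 3, 5, 7
print(global_average_pool(x))       # [3. 4.]
```

Unlike flattening, GAP has no trainable parameters and its output size depends only on the number of channels, which is why it is a convenient bridge between a pre-trained convolutional trunk and a new classifier.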

2.1.2. Feature Weight Optimization

To optimize the CNNs and the training procedure, we evaluated each pre-trained model separately. Based on the results, we used DenseNet’s pre-trained CNN, which offered unique image enhancement capabilities. In addition, we employed augmentation techniques to generate additional training data. This approach addresses overfitting by enlarging the training data used with the CNN model during training. For our study, we applied random vertical and horizontal shifts of up to 10% of the original image dimensions, complemented by random rotations of up to 20°, which diversified and expanded the dataset. Horizontal flipping was also used to augment the dataset. To adapt each network, we removed all fully connected layers and kept only the convolutional parts of the model architectures.
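The augmentation settings above can be sketched with plain NumPy. This is an illustrative implementation under stated assumptions, not the authors' pipeline: shifts are sampled uniformly within ±10% of each dimension, the rotation limit is read as ±20 degrees, flips happen with probability 0.5, and resampling uses nearest-neighbour inverse mapping with zero fill. The function name `random_augment` is hypothetical.

```python
import numpy as np

def random_augment(img, rng, max_shift=0.10, max_rot_deg=20.0, p_flip=0.5):
    """Random shift, rotation and horizontal flip of an (H, W) or (H, W, C) image.

    Nearest-neighbour inverse mapping; pixels that map from outside the image become 0.
    """
    h, w = img.shape[:2]
    dy = rng.uniform(-max_shift, max_shift) * h    # vertical offset in pixels
    dx = rng.uniform(-max_shift, max_shift) * w    # horizontal offset in pixels
    theta = np.deg2rad(rng.uniform(-max_rot_deg, max_rot_deg))
    flip = rng.random() < p_flip

    yy, xx = np.mgrid[0:h, 0:w].astype(float)
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    # For every output pixel: undo the shift, then undo the rotation about the centre.
    y0, x0 = yy - dy - cy, xx - dx - cx
    c, s = np.cos(theta), np.sin(theta)
    src_y = np.rint(c * y0 - s * x0 + cy).astype(int)
    src_x = np.rint(s * y0 + c * x0 + cx).astype(int)

    valid = (src_y >= 0) & (src_y < h) & (src_x >= 0) & (src_x < w)
    out = np.zeros_like(img)
    out[valid] = img[src_y[valid], src_x[valid]]
    return out[:, ::-1] if flip else out

rng = np.random.default_rng(0)
augmented = random_augment(np.ones((48, 48, 3)), rng)   # a fresh random variant per call
```

Because a new set of parameters is drawn on every call, each training epoch sees a different variant of every image, which is what makes augmentation act as a regularizer.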

Lastly, we add a global average pooling layer after the convolutional layers, followed by a SoftMax classification layer. We used 50 iterations of stochastic gradient descent (SGD) with a learning rate of 0.0001 and a decay rate of 0.90 to maximize the network’s performance. We use cross-entropy squared as our loss function, and the validation set is used to tune the hyperparameters carefully. Distinct input pipelines are used for each network to ensure clarity and consistency. During the initial stage of data preparation, all images are scaled to match the specific model inputs and then saved in various file formats. A uniform initialization rule and learning rate are used to train both models so that the process is consistent, much like laying a standard foundation for a building in which every layer is built with the same materials and precision.
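The classification head described above (pooled features feeding a SoftMax layer trained by SGD) can be sketched on frozen features. Assumptions: the loss is standard categorical cross-entropy, training is plain mini-batch SGD, and the learning rate of 0.1 suits the toy data rather than matching the reported 0.0001. The reported "decay rate of 0.90" is not modeled because the text does not specify whether it is a learning-rate decay or momentum. Function names are hypothetical.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)           # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def train_softmax_head(feats, labels, num_classes, lr=0.1, epochs=50, batch=16, seed=0):
    """Train a linear SoftMax layer on frozen pooled CNN features with mini-batch SGD.

    Returns weights, bias and the full-data cross-entropy after each epoch.
    """
    rng = np.random.default_rng(seed)
    n, d = feats.shape
    W, b = np.zeros((d, num_classes)), np.zeros(num_classes)
    onehot = np.eye(num_classes)[labels]
    history = []
    for _ in range(epochs):
        order = rng.permutation(n)
        for start in range(0, n, batch):
            idx = order[start:start + batch]
            p = softmax(feats[idx] @ W + b)
            grad = (p - onehot[idx]) / len(idx)    # gradient of mean CE w.r.t. the logits
            W -= lr * feats[idx].T @ grad
            b -= lr * grad.sum(axis=0)
        p_all = softmax(feats @ W + b)
        history.append(-np.mean(np.log(p_all[np.arange(n), labels] + 1e-12)))
    return W, b, history
```

Keeping the convolutional trunk frozen and training only this layer is the cheapest form of transfer learning; fine-tuning deeper layers would follow the same loop with gradients propagated further back.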

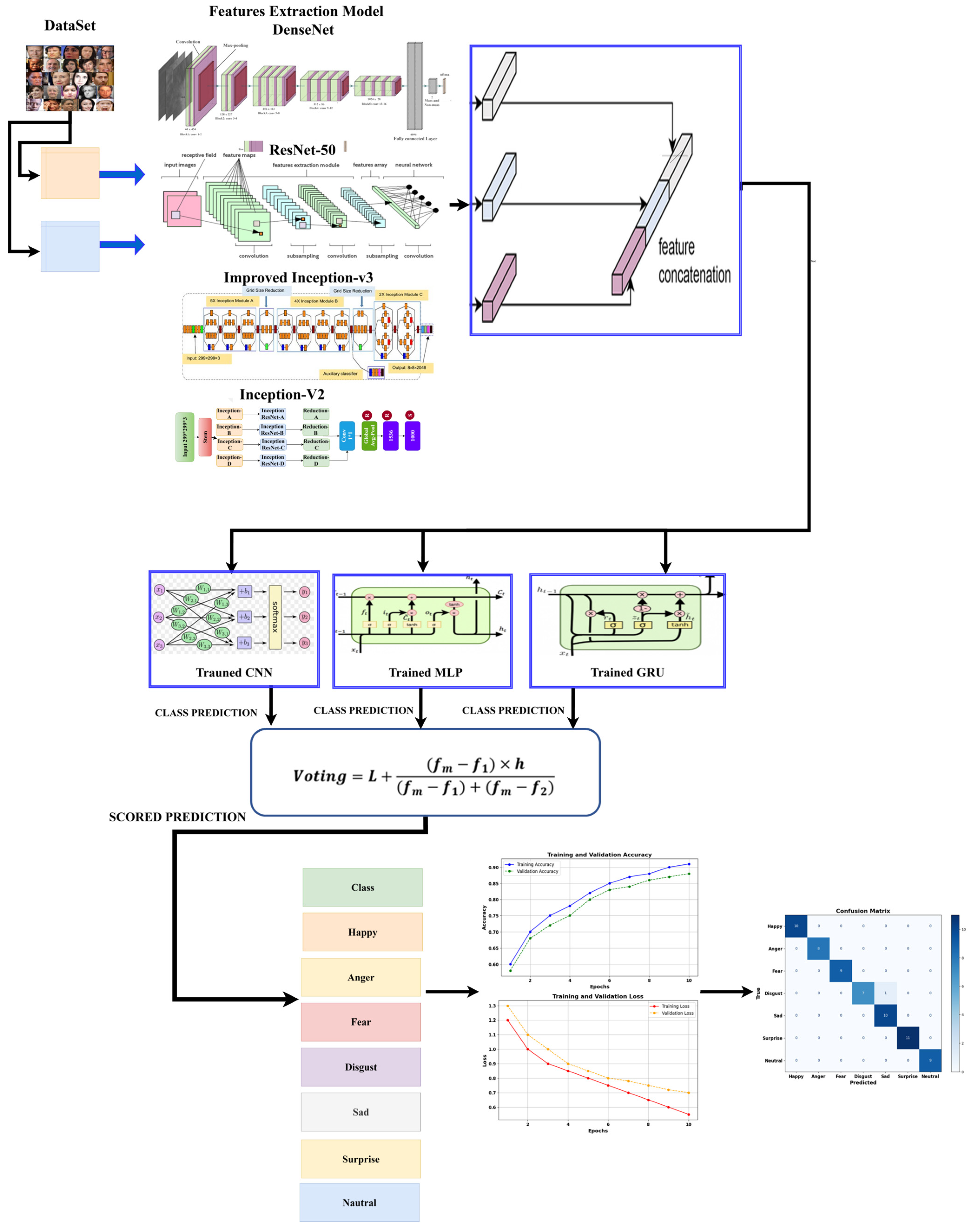

3. Materials and Methods

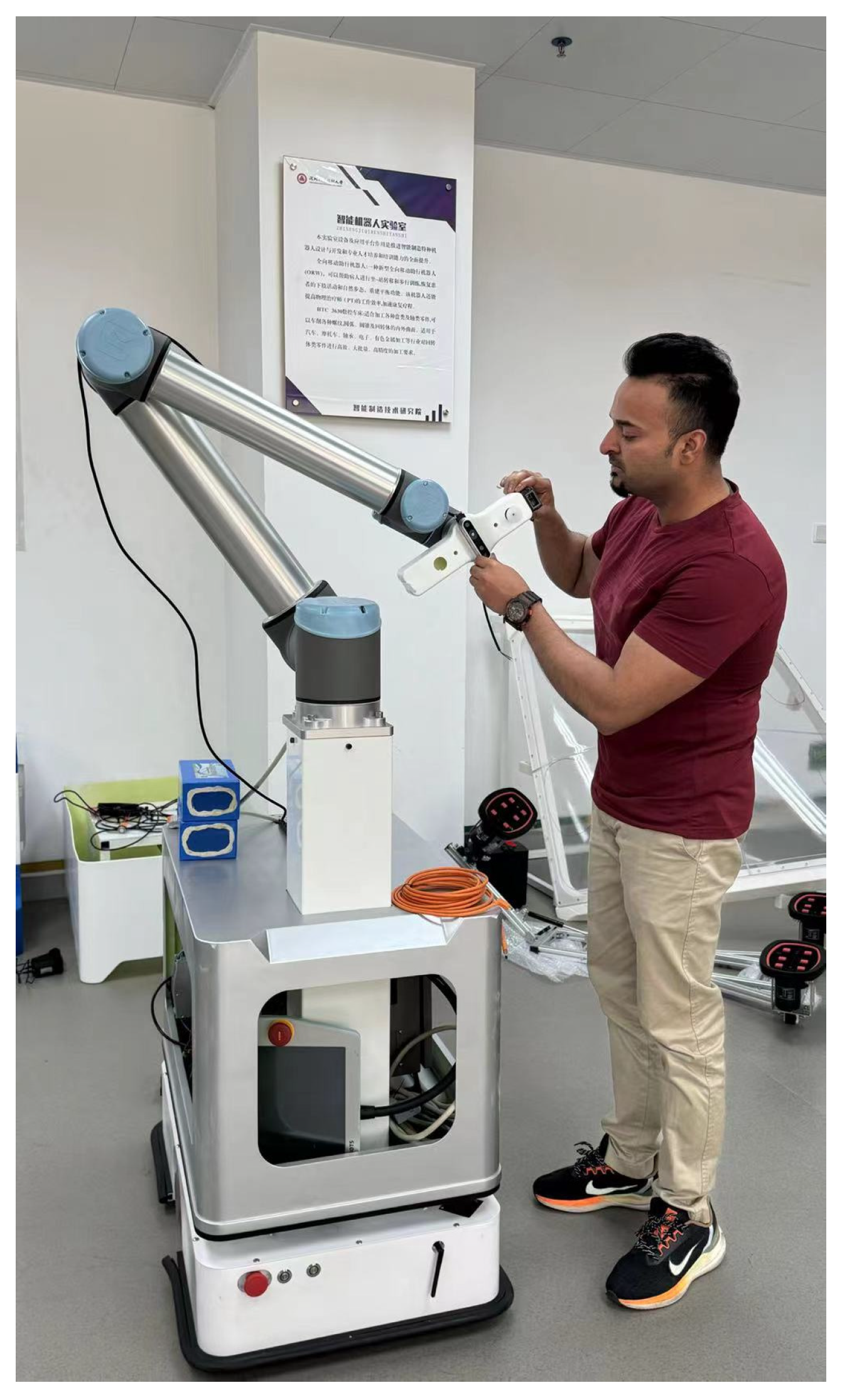

In this study, we propose an ensemble learning framework comprising a diverse range of multi-level classifier combinations trained on distinct feature sets. The hardware structure of our mobile robot is shown in Figure 5, and an overview of the overall process of this framework is shown in Figure 6. Various feature sets are prepared through different feature extraction techniques, as illustrated in the feature preparation levels of Figure 6. Feature sets obtained through a specific method are further refined using diverse feature selection techniques, resulting in different subsets of features. Our approach leverages four advanced CNN models, namely DenseNet, ResNet-50, the Improved Inception-V3 deep architecture, and Inception-V2, to extract high-dimensional features from training and validation images. These extracted features are subsequently fused to form a single consolidated feature vector.

The Inception-v3 network adopted in this study is a sophisticated deep learning framework engineered for the demands of high-performance image recognition.

Figure 5 shows a methodically structured model that begins with an input block leading into a series of three Inception modules (A, B, and C). Two grid-size reduction blocks progressively reduce the spatial dimensions between these modules, and an auxiliary classifier and a final output block are integral components of the system.

Enhancements such as the RMSProp optimizer, batch normalization, and label smoothing have been incorporated, each contributing substantial improvements over its predecessors. These innovations mitigate overfitting and refine the network’s loss function, improving the model’s effectiveness. The Inception-v3 network is 42 layers deep and accepts input images of 299 × 299 × 3. Its inception modules use factorized convolutions to reduce parameter counts while preserving efficiency: Module A replaces a single 5 × 5 convolution with a pair of 3 × 3 convolutions, and Module B decomposes a 3 × 3 convolution into a 1 × 3 convolution followed by a 3 × 1 convolution, saving both computation and parameters. Module C builds on the efficiency of Module B to improve high-dimensional representations.
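The parameter savings of the two factorizations can be verified with simple arithmetic. This sketch assumes the same number of channels $c$ at the input, the intermediate layer, and the output, and ignores biases; under that assumption the savings are 28% and one third, independent of $c$.

```python
def conv_weights(kh, kw, c_in, c_out):
    """Weight count of one kh x kw convolution (bias ignored)."""
    return kh * kw * c_in * c_out

def factorization_savings(c):
    """Relative weight savings of the Module A and Module B factorizations."""
    five = conv_weights(5, 5, c, c)
    two_threes = 2 * conv_weights(3, 3, c, c)                    # 5x5 -> 3x3 then 3x3
    three = conv_weights(3, 3, c, c)
    asym = conv_weights(1, 3, c, c) + conv_weights(3, 1, c, c)   # 3x3 -> 1x3 then 3x1
    return 1 - two_threes / five, 1 - asym / three

print(factorization_savings(64))   # roughly (0.28, 0.333)
```

The stacked form also inserts an extra nonlinearity between the two smaller convolutions, so the factorization saves parameters without reducing the receptive field.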

Additionally, Inception-v3’s grid-size reduction block compresses feature maps efficiently, a step that models such as AlexNet and VGGNet also perform, by reducing the spatial size while increasing the number of filters (the depth), which lowers computational cost. The architecture incorporates pooling operations to further reduce computational requirements. Inception-v3’s auxiliary classifier sits on top of the last 17 × 17 layer and acts as a regularizer, promoting more robust training and convergence. This carefully designed architecture pushes the capabilities of convolutional networks and sets a high benchmark for efficiency and effectiveness in sophisticated image-processing tasks.
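The effect of a grid-size reduction block on spatial size and channel count can be sketched as follows. The block is modeled, as an assumption, as three parallel stride-2 branches (a 3 × 3 convolution, a stack of convolutions ending in a stride-2 3 × 3, and a 3 × 3 max-pool) whose outputs are concatenated. The 288/384/96 channel figures follow common Inception-v3 implementations and are illustrative only; the function names are hypothetical.

```python
def conv_output_size(size, kernel, stride, padding=0):
    """Output side length of a convolution or pooling window."""
    return (size + 2 * padding - kernel) // stride + 1

def reduction_block(size, c_in, n_conv, n_stack):
    """Spatial size and channels after a grid-size reduction block.

    The pooling branch passes its c_in channels through unchanged, so the
    concatenated output has n_conv + n_stack + c_in channels.
    """
    return conv_output_size(size, kernel=3, stride=2), n_conv + n_stack + c_in

print(reduction_block(35, 288, 384, 96))   # (17, 768): 35x35 grid -> 17x17
print(conv_output_size(17, 3, 2))          # 8: 17x17 grid -> 8x8
```

Running the convolution and pooling branches in parallel lets the grid shrink while the channel count grows, instead of pooling first and creating a representational bottleneck.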

Furthermore, a classification ensemble is built from three deep learning classifiers: a CNN, a Gated Recurrent Unit (GRU), and a Multi-layer Perceptron (MLP). The final output is determined by a voting scheme based on the class labels predicted by the majority of the classifiers. In real-world scenarios, ensemble learning allows more flexible configurations than the one shown in Figure 6.
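A minimal sketch of plain majority (hard) voting over the labels predicted by the base classifiers. The tie rule used here (the tied label predicted first, i.e. by classifier order) is a simplifying assumption for illustration; the function name is hypothetical.

```python
from collections import Counter

def majority_vote(predictions):
    """Hard voting: return the label predicted by the most classifiers.

    Ties go to the tied label that appears first in `predictions`.
    """
    if not predictions:
        raise ValueError("need at least one prediction")
    counts = Counter(predictions)
    best = max(counts.values())
    for label in predictions:
        if counts[label] == best:
            return label

print(majority_vote(["happy", "sad", "happy"]))   # happy
```

Hard voting ignores how confident each classifier is; weighting by confidence is the natural refinement when the classifiers also output probabilities.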

The proposed framework serves as a systematic approach to structured thinking and presents a solution to the HFER problem. For instance, a primary ensemble can be created by combining several base classifiers trained on the same feature set, and it can then be enriched by incorporating other base classifiers to create subsets. This multi-level ensemble strategy enables different levels of integration. In this context, granular computing can be viewed as a philosophical strategy for structured thinking that addresses the challenge of perceiving human emotions. Because each ensemble comprises multiple classifiers, it can be treated as a single unified model within the ensemble learning framework. To account for varying image sizes, the concept of grain size is introduced, in which the image size is proportional to the model size, thereby improving class-wise performance. Because images and representations can vary significantly in length and width, the proposed framework treats each level of classifier fusion as a distinct level of granularity, capturing different levels of detail. MLP models are well-established supervised learning tools widely used in computational neuroscience and parallel distributed computing. GRU models, a type of recurrent neural network (RNN), are a more efficient alternative to Long Short-Term Memory (LSTM) networks, which makes them well suited to scenarios where data efficiency is critical. Although LSTM models generally perform better on large datasets, GRUs retain advantages in efficiency and effectiveness across many applications. Each model processes data through its own architecture and capabilities, such as combinations and correlations tailored to specific tasks, to accurately recognize early human facial emotions in healthcare systems.
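To show where the GRU's efficiency comes from, the sketch below implements one standard GRU step and compares parameter counts with an LSTM of the same size. It is a generic textbook GRU, not the authors' trained model. The text does not say how the fused feature vector is presented to the GRU, so the usage assumes, hypothetically, that it is split into equal chunks treated as time steps.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gru_step(x, h, p):
    """One GRU step; p holds input weights W*, recurrent weights U* and biases b*."""
    z = sigmoid(p["Wz"] @ x + p["Uz"] @ h + p["bz"])               # update gate
    r = sigmoid(p["Wr"] @ x + p["Ur"] @ h + p["br"])               # reset gate
    h_tilde = np.tanh(p["Wh"] @ x + p["Uh"] @ (r * h) + p["bh"])   # candidate state
    return (1.0 - z) * h + z * h_tilde

def init_gru(d_in, d_hidden, rng):
    p = {}
    for g in "zrh":
        p["W" + g] = rng.normal(scale=0.1, size=(d_hidden, d_in))
        p["U" + g] = rng.normal(scale=0.1, size=(d_hidden, d_hidden))
        p["b" + g] = np.zeros(d_hidden)
    return p

def rnn_param_count(d_in, d_hidden, gates):
    """GRU has 3 gate blocks, LSTM has 4 (one bias vector per block)."""
    return gates * (d_hidden * (d_in + d_hidden) + d_hidden)

# Hypothetical usage: a 512-dim fused vector read as 8 steps of 64 features.
rng = np.random.default_rng(0)
params = init_gru(64, 16, rng)
h = np.zeros(16)
for step in rng.normal(size=512).reshape(8, 64):
    h = gru_step(step, h, params)
```

With the same input and hidden sizes, a GRU has three quarters of the LSTM's parameters, which is the efficiency advantage mentioned above.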

This approach enables a higher level of integration, leading to enhanced performance. Moreover, combining these classifiers yields second-level ensemble results. It is also possible to create second-level ensembles from any set of features and to combine various first-level (lowest-level) ensembles to produce comprehensive ensemble results. The model processes tabular data to estimate and create a confusion matrix, and simulation plots are overlaid on this matrix to demonstrate the model’s predictive performance and verify its effectiveness in real-time emotion recognition scenarios. To train the proposed CNN ensemble classifier, we use the AffectNet, CK+, FER-2013, and EMOTIC benchmark datasets; separate models were trained for each dataset. Separate test and validation sets are used to tune the model parameters and evaluate class-wise performance. The resulting confusion matrix contains the true positive (TP), false positive (FP), false negative (FN), and true negative (TN) counts, from which precision, recall, and F1-score are calculated to assess the model’s performance.
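The step from a confusion matrix to per-class metrics can be sketched as follows, assuming (as a convention, since the text does not state one) that rows are true classes and columns are predicted classes. Classes with no predictions or no samples get a score of 0 instead of a division error.

```python
import numpy as np

def per_class_metrics(cm):
    """TP, FP, FN, TN, precision, recall and F1 per class (rows = true, cols = predicted)."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp   # predicted as class k but actually another class
    fn = cm.sum(axis=1) - tp   # actually class k but predicted as another class
    tn = cm.sum() - tp - fp - fn
    zeros = np.zeros_like(tp)
    precision = np.divide(tp, tp + fp, out=zeros.copy(), where=(tp + fp) > 0)
    recall = np.divide(tp, tp + fn, out=zeros.copy(), where=(tp + fn) > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=zeros.copy(), where=denom > 0)
    return {"tp": tp, "fp": fp, "fn": fn, "tn": tn,
            "precision": precision, "recall": recall, "f1": f1}

m = per_class_metrics([[8, 2], [1, 9]])
print(m["f1"])   # about [0.842, 0.857], i.e. 16/19 and 6/7
```

Reporting the metrics per class rather than as a single accuracy matters for emotion datasets, where classes such as contempt or disgust are often much rarer than happiness.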

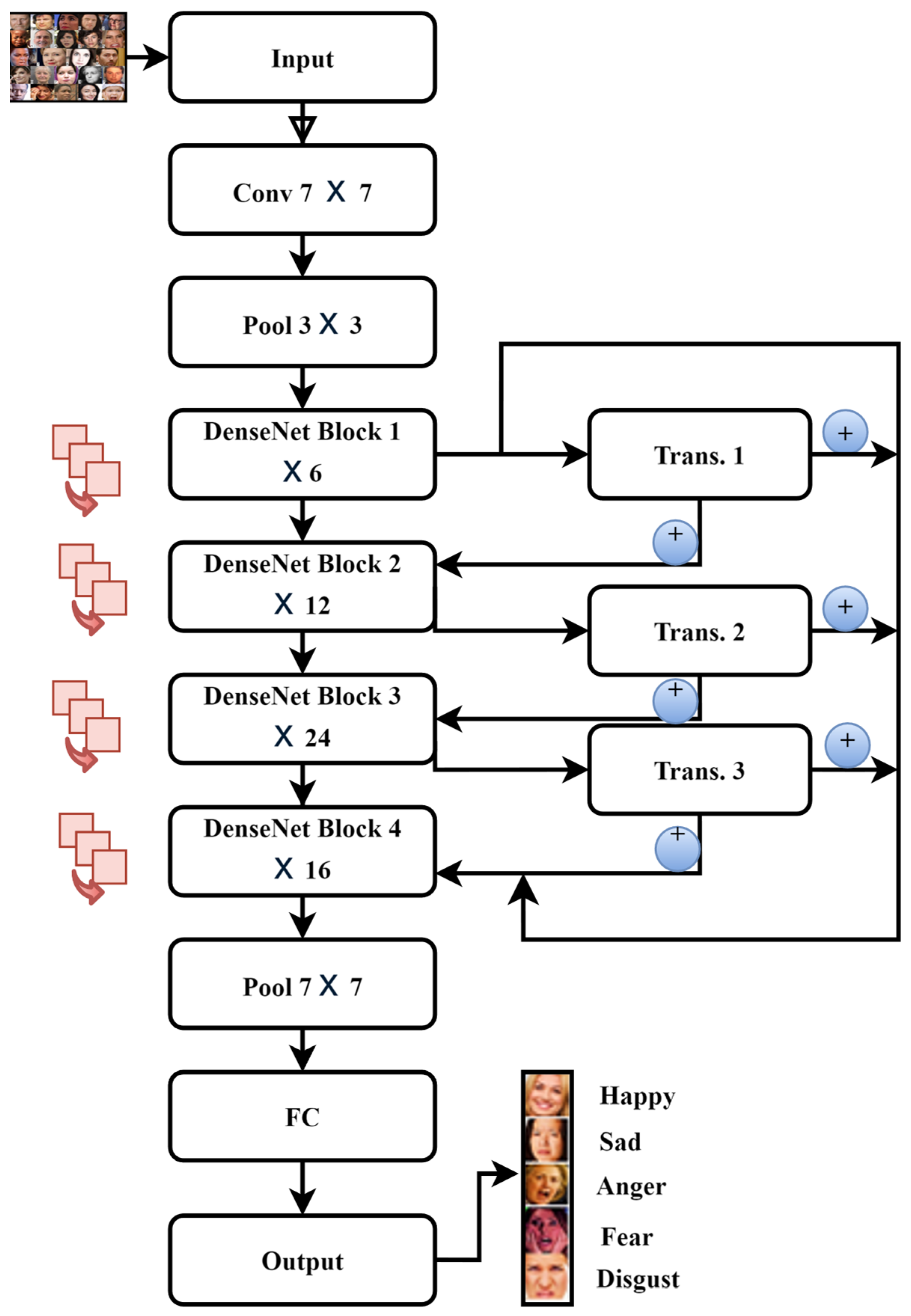

The proposed system integrates multiple components for emotion recognition. First, the face detection system (Figure 7) identifies regions of interest in the input images. Next, these regions are processed through the ensemble framework (Figure 6), where features are extracted, fused, and classified using CNN, GRU, and MLP models. Figure 8 illustrates the CNN component, showing the probability outputs for each emotion class that contribute to the final ensemble decision.

3.1. Detailed Overview of the Proposed Ensemble Classification Model HFER

Dataset Input: Images of human faces are collected from multiple benchmark datasets (e.g., EMOTIC, CK+, FER-2013, and AffectNet). These datasets consist of images representing various emotions, such as happy, sad, confused, fear, and disgust. To compare the performance of the proposed system, we use four datasets, each randomly divided into 70% training data and 30% validation data.
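A minimal sketch of the random 70/30 split, applied independently to each dataset. It splits sample indices without stratification, matching the "randomly divided" description; a stratified split would additionally preserve per-emotion proportions. The function name and seed are hypothetical.

```python
import numpy as np

def split_70_30(n_samples, seed=0, train_frac=0.7):
    """Random train/validation index split (no stratification)."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_samples)
    n_train = int(round(train_frac * n_samples))
    return order[:n_train], order[n_train:]

train_idx, val_idx = split_70_30(1000)
print(len(train_idx), len(val_idx))   # 700 300
```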

Preprocessing Stage: Before feeding the images into the model, images are resized to a standard resolution to ensure uniformity across the input. Normalization techniques are applied to enhance image quality and remove noise. Data augmentation (e.g., rotation, flipping, scaling) is used to improve model generalization and performance, especially for imbalanced datasets.

Feature Extraction Stage: After preprocessing, images are input into four pre-trained CNN models: DenseNet, ResNet-50, Improved Inception-V3, and Inception-V2. These models are used to extract high-dimensional features from the images. Each model extracts a different set of features, capturing diverse patterns and representations (e.g., facial texture, edges, and spatial details).

Feature Concatenation: The extracted features from all four models are fused together through a feature concatenation process to form a unified, comprehensive feature vector. This step combines the strengths of all CNN models and ensures that the most relevant information from the images is retained.
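The concatenation step can be sketched as follows. The per-backbone feature widths are assumptions for illustration (typical global-average-pooled widths of DenseNet-121, BN-Inception, Inception-v3, and ResNet-50); the text does not state which variants or widths were used. Backbones are concatenated in a fixed (sorted) order so that each column of the fused vector always comes from the same model.

```python
import numpy as np

# Hypothetical pooled feature widths per backbone (illustrative assumption).
FEATURE_DIMS = {"densenet": 1024, "resnet50": 2048, "inception_v3": 2048, "inception_v2": 1024}

def fuse_features(per_model):
    """Concatenate per-backbone (N, d_k) feature matrices into one (N, sum of d_k) matrix."""
    names = sorted(per_model)                          # fixed order keeps columns aligned
    if len({per_model[k].shape[0] for k in names}) != 1:
        raise ValueError("all backbones must describe the same images")
    return np.concatenate([per_model[k] for k in names], axis=1), names

rng = np.random.default_rng(0)
feats = {k: rng.normal(size=(5, d)) for k, d in FEATURE_DIMS.items()}
fused, order = fuse_features(feats)
print(fused.shape)   # (5, 6144)
```

Under these assumed widths the fused vector has 6144 dimensions per image, which is why the downstream classifiers need regularization or feature selection to avoid overfitting.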

Furthermore, the two flows in the architecture are as follows:

Flow 1: Feature Extraction Flow: In this flow, images from the dataset are processed by the four CNN models (DenseNet, ResNet-50, Improved Inception-V3, and Inception-V2). Each CNN extracts distinct features from the images. These features are then concatenated into a single feature vector.

This flow ensures that the model captures multiple levels of features (e.g., low-level edges, mid-level textures, and high-level semantic patterns) for robust facial expression representation.

Flow 2: Classification Flow: The concatenated feature vector is input to an ensemble of three trained classifiers: a CNN for convolutional processing, an MLP (multi-layer perceptron) for feature fusion and classification, and a GRU (gated recurrent unit) for sequence learning. Each classifier independently predicts the class label (emotion) for the input image. The outputs of these classifiers are combined using a weighted voting scheme, given by Equation (4) and shown in Figure 6. Equation (4) bases the final prediction on the majority vote while weighting the confidence scores of the individual classifiers. This ensemble approach improves the robustness, accuracy, and generalizability of the model, since each classifier contributes to the final prediction.

Voting serves three purposes:

Improved accuracy: Voting combines the strengths of multiple classifiers, reducing the risk of errors from any single model.

Robust predictions: The weighted scheme gives more importance to classifiers with higher confidence in their predictions.

Flexibility: The voting mechanism adapts to different levels of confidence from each classifier, making the ensemble approach dynamic and reliable.

The voting mechanism aggregates predictions from CNN, MLP, and GRU classifiers using a weighted formula. The class with the highest combined score is selected as the final prediction, ensuring robust and accurate human facial emotion recognition. The ensemble classification model uses a weighted voting scheme to make the final prediction based on the outputs of three trained classifiers: CNN, MLP, and GRU. This approach ensures that the final decision incorporates contributions from all classifiers while prioritizing more confident predictions.

where

is the feature score of the current model (e.g., CNN, MLP, or GRU).

are the feature scores of other models in the ensemble.

$h$ is the weight assigned based on the classifier’s performance or confidence, and

$L$ is a baseline threshold or reference value that ensures stable scoring.

The voting scheme used in the proposed ensemble model works as follows.

Feature scores from classifiers: Each classifier (CNN, MLP, and GRU) outputs a class prediction with an associated confidence score or probability for each class.

Weighting confidence scores: The confidence scores (feature scores) of the classifiers are compared. The weighted voting formula adjusts the influence of each classifier’s score based on its relative difference from the others, so classifiers with higher confidence or better alignment with the ensemble’s overall performance receive more weight.

Combining predictions: The scores are combined using the weighted voting formula. The result reflects the contribution of all classifiers but prioritizes those with higher confidence.

Final class decision: The final class label (e.g., happy, sad, or fear) is assigned to the class with the highest aggregated score after voting. If two classes receive equal scores, a tie-breaking mechanism (e.g., following the classifier with the highest confidence) is used.
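The Python sketch below walks through the four steps above using a generic weighted soft-voting rule. It is not Equation (4) itself, whose exact form is given by the authors. Each classifier's probability vector is scaled by a per-classifier weight (a stand-in for h, for example validation accuracy), and the scaled vectors are summed. The class with the highest total wins, and ties go to the class that a single classifier supports most confidently. The label set, weights, and probabilities are hypothetical.

```python
from typing import Dict, Sequence

# Hypothetical label set; the real one depends on the dataset.
CLASSES = ["angry", "happy", "sad", "fear"]


def weighted_vote(probs: Dict[str, Sequence[float]],
                  weights: Dict[str, float],
                  tol: float = 1e-9) -> str:
    """Weighted soft voting over per-classifier class probabilities.

    probs maps a classifier name (e.g. "CNN", "MLP", "GRU") to its
    probability vector over CLASSES; weights maps the same names to a
    non-negative weight. Generic stand-in, not the paper's Equation (4).
    """
    n = len(CLASSES)
    scores = [0.0] * n
    for name, p in probs.items():
        for c in range(n):
            scores[c] += weights[name] * p[c]

    best = max(scores)
    tied = [c for c in range(n) if best - scores[c] <= tol]
    # Tie-break: among tied classes, prefer the one that some single
    # classifier supports with the highest probability.
    winner = max(tied, key=lambda c: max(p[c] for p in probs.values()))
    return CLASSES[winner]


if __name__ == "__main__":
    probs = {"CNN": [0.1, 0.6, 0.2, 0.1],
             "MLP": [0.2, 0.3, 0.4, 0.1],
             "GRU": [0.1, 0.5, 0.3, 0.1]}
    weights = {"CNN": 0.5, "MLP": 0.2, "GRU": 0.3}
    print(weighted_vote(probs, weights))  # happy (0.51 vs. 0.27 for sad)
```

Even though the MLP alone prefers "sad", the larger weights on the CNN and GRU tip the ensemble toward "happy". This is the intended effect of letting more reliable classifiers dominate.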

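The CNN framework for HFER employs several key techniques and mathematical formulations (Equations (5)–(8)) to achieve high accuracy and efficiency. The convolution operation, which is the backbone of feature extraction in our CNN models, is defined as:

$$(I * K)(i, j) = \sum_{m} \sum_{n} I(i + m,\, j + n)\, K(m, n) \tag{5}$$

where $I$ is the input feature map and $K$ is the convolution kernel. To introduce non-linearity, the ReLU activation function, $f(x) = \max(0, x)$, is applied. Pooling layers, specifically max pooling, are used to reduce spatial dimensions, as described by

$$y_{i,j} = \max_{(m,\,n) \in \mathcal{R}_{i,j}} x_{m,n} \tag{6}$$

where $\mathcal{R}_{i,j}$ is the pooling window associated with output position $(i, j)$.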

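In the face detection component, the RPN generates object proposals with a loss function that combines classification and regression losses:

$$L(\{p_i\}, \{t_i\}) = \frac{1}{N_{cls}} \sum_{i} L_{cls}(p_i, p_i^{*}) + \lambda \frac{1}{N_{reg}} \sum_{i} p_i^{*} L_{reg}(t_i, t_i^{*}) \tag{7}$$

where $p_i$ is the predicted probability that anchor $i$ contains an object, $p_i^{*}$ is its ground-truth label, $t_i$ and $t_i^{*}$ are the predicted and ground-truth box parameters, and $\lambda$ balances the two terms. The RoI pooling mechanism ensures consistent input sizes for subsequent layers, defined by

$$y_{r,k} = \max_{(m,\,n) \in \mathrm{bin}_{r,k}} x_{m,n} \tag{8}$$

where $\mathrm{bin}_{r,k}$ is the $k$-th sub-window of region $r$ on the feature map.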

DenseNet’s architecture [61,62,63,64], critical for feature extraction, is characterized by dense connectivity, where each layer receives inputs from all preceding layers, $x_l = H_l([x_0, x_1, \ldots, x_{l-1}])$, with $[\cdot]$ denoting channel-wise concatenation. The ensemble model integrates a multi-layer perceptron (MLP), with each layer described by $h^{(l)} = \sigma(W^{(l)} h^{(l-1)} + b^{(l)})$, enhancing the system’s classification robustness. These formulas are applied in the feature extraction and face detection stages of our architecture to achieve robust and accurate HFER. The novelty of this study lies in the integration of these standard components within the ensemble classification framework, leading to improved performance and classification accuracy.
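The following minimal pure-Python sketch applies the convolution, ReLU, and max-pooling operations described above to a single-channel input. It assumes stride 1 and no padding for the convolution, computed as cross-correlation as deep learning libraries do. It also assumes 2 × 2 max pooling with stride 2. These settings are illustrative and are not the paper's layer configuration.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """(I * K)(i, j) = sum_m sum_n I(i + m, j + n) K(m, n); stride 1, no padding."""
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [sum(image[i + m][j + n] * kernel[m][n]
             for m in range(kh) for n in range(kw))
         for j in range(ow)]
        for i in range(oh)
    ]


def relu(x: Matrix) -> Matrix:
    """Element-wise f(x) = max(0, x)."""
    return [[max(0.0, v) for v in row] for row in x]


def max_pool(x: Matrix, size: int = 2, stride: int = 2) -> Matrix:
    """Each output is the maximum over a size x size window."""
    oh = (len(x) - size) // stride + 1
    ow = (len(x[0]) - size) // stride + 1
    return [
        [max(x[i * stride + m][j * stride + n]
             for m in range(size) for n in range(size))
         for j in range(ow)]
        for i in range(oh)
    ]


if __name__ == "__main__":
    img = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    print(conv2d_valid(img, [[1, 0], [0, 1]]))  # [[6, 8], [12, 14]]
    print(max_pool(relu([[1, -2], [3, 4]])))    # [[4]]
```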

3.2. Emotion Detection Network

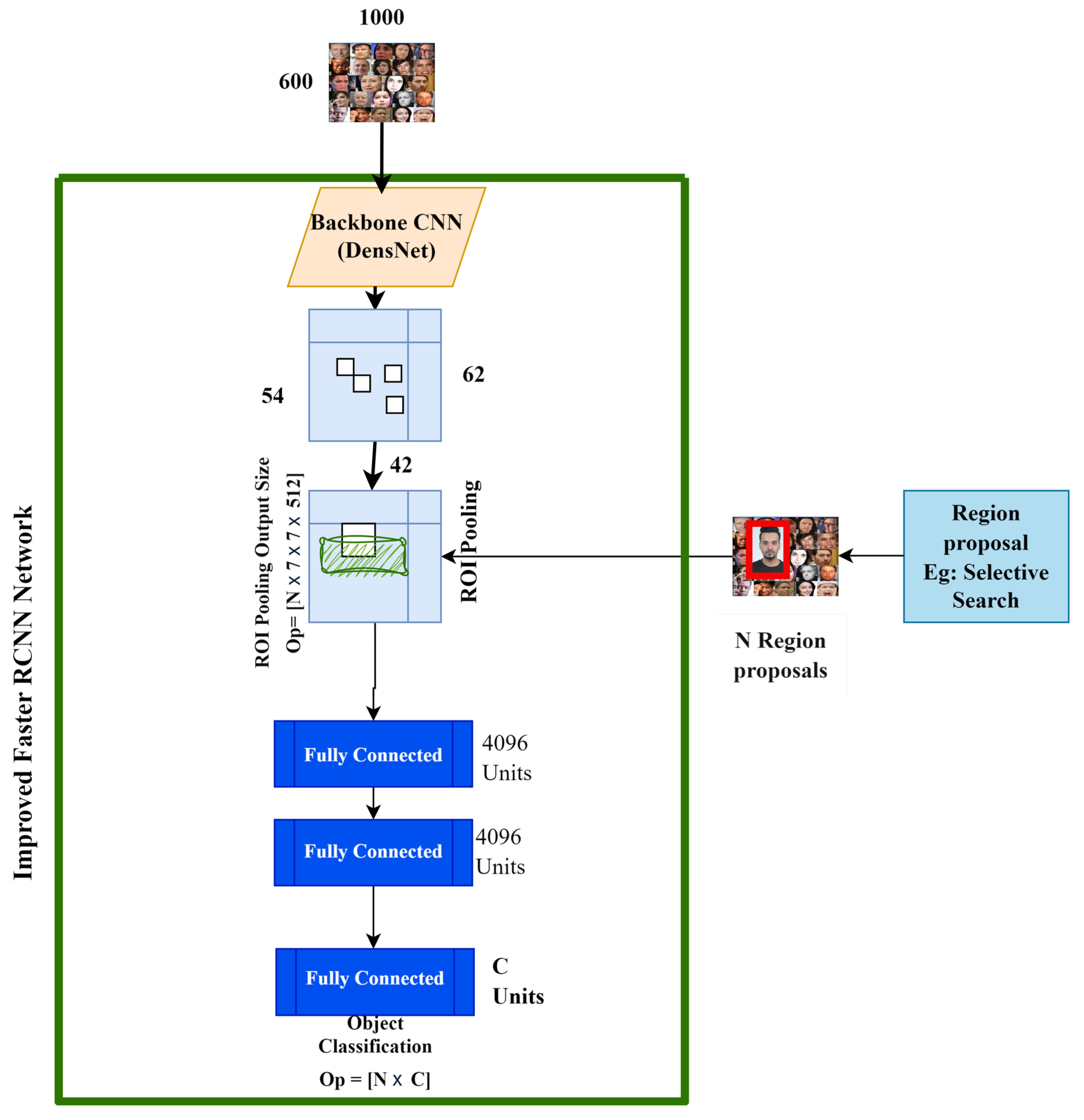

Emotion detection networks apply a face recognition process to images, using existing datasets for detection. In our research, we integrate R-CNN and deep learning techniques with CNN functions to analyze images and patterns within rectangular regions. The Faster R-CNN model operates in two distinct phases. As shown in Figure 3, the first stage identifies regions of the target image that contain relevant features, and the second stage classifies these features across all identified regions. Furthermore, Figure 4 shows how the face recognition system is improved by replacing the Faster R-CNN training block with a modified CNN block (Inception V3). R-CNN carries high-precision CNNs over from classification to face detection, primarily by transferring a supervised, pre-trained image representation from image classification to face detection. The proposed face detector, an improved Faster R-CNN, performs emotion identification in two stages. In the first stage, the detector processes the full input image and then applies multiple layers to the segmented images and the central image regions. The system extracts features by scanning the regions of interest in the input image, and these locations and visual representations are compared with the dataset for further analysis and detection. In the second stage, the enhanced Faster R-CNN model is applied to the image regions.

Figure 7 shows the segmented output image and the original test image, illustrating how the facial recognition system works. These modifications aim to substantially increase the precision and overall effectiveness of face recognition tasks in intelligent medical systems.

The proposed framework uses deep learning techniques to improve facial emotion recognition accuracy, which is important for smart medical systems. DenseNet (Densely Connected Convolutional Networks) efficiently propagates features and gradients and captures the fine details required for accurate emotion recognition. The workflow starts with an input image, from which DenseNet extracts robust features. The region proposal process then identifies promising areas of the image that may contain faces or facial features, allowing the network to focus on the most important elements. The 600 × 1000 dimensions denote the input image size used by the improved Faster R-CNN network. This input typically contains multiple faces (regions of interest), which are analyzed by the backbone CNN (DenseNet) for feature extraction. Standardizing the dimensions keeps different input images consistent and compatible with the network’s architecture. The choice of 600 × 1000 follows standard object detection pipelines, in which the aspect ratio is preserved while the image is resized to meet computational and memory constraints. The “Region Proposal” block in the diagram generates multiple candidate ROIs using methods such as Selective Search or Region Proposal Networks (RPNs). Each selected region of interest (ROI) is normalized to a uniform size (typically 7 × 7 × 512) so that subsequent layers receive inputs of consistent size, and the ROIs are then processed by the backbone CNN and subsequent layers to classify the detected regions. For example, in an emotion recognition task, the network may detect faces and classify them into predefined emotion categories, such as “happy”, “angry”, or “neutral”.

This standardization is important for maintaining system consistency and improving computational efficiency in facial emotion recognition tasks for smart medical systems. The system detects faces or objects within the input image and classifies their associated attributes (e.g., emotion or identity). Although the current example shows a face with a bounding box, the system can generalize to other tasks depending on the dataset and training objectives.
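The text states that the 600 × 1000 setting preserves the aspect ratio. Many Faster R-CNN code bases implement this by scaling the shorter side to 600 pixels while capping the longer side at 1000 pixels. The sketch below computes the resized dimensions under that assumed rule. The paper does not state which exact rule it uses, and the function name is hypothetical.

```python
from typing import Tuple


def faster_rcnn_resize(height: int, width: int,
                       short_side: int = 600,
                       max_long_side: int = 1000) -> Tuple[int, int]:
    """Aspect-preserving resize: shorter side -> short_side, longer side capped.

    Assumed rule (common in Faster R-CNN implementations), not taken from the paper.
    """
    scale = short_side / min(height, width)
    if max(height, width) * scale > max_long_side:
        # Shrink further so that the longer side fits within max_long_side.
        scale = max_long_side / max(height, width)
    return round(height * scale), round(width * scale)


if __name__ == "__main__":
    print(faster_rcnn_resize(480, 640))   # (600, 800): cap not reached
    print(faster_rcnn_resize(500, 2000))  # (250, 1000): cap applied
```

For a 1080 × 1920 frame the cap applies, so the longer side becomes 1000 pixels and the shorter side about 562 pixels. The aspect ratio is preserved rather than the image being stretched to exactly 600 × 1000.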

The normalized ROIs are processed by two fully connected layers with 4096 units each to obtain a high-level feature abstraction for accurate classification and localization. The output of the fully connected layers is then split into two branches: one for bounding box regression and one for object classification. In this architecture, a multi-layer perceptron (MLP) is integrated into the deep ensemble model to improve system accuracy. The ensemble model combines the predictions of multiple neural networks into an accurate classification system for the early recognition of human facial emotions in smart medical systems. The approach addresses challenges such as changing illumination, occlusion, and facial dynamics, providing a solution for advanced human–computer interaction in medical environments. The system improves detection accuracy and classification efficiency and operates effectively in real-time scenarios, highlighting its importance in modern medical technology.
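The normalization of each ROI to a fixed 7 × 7 grid before the 4096-unit fully connected layers can be illustrated with a single-channel RoI max-pooling sketch in the style of Fast/Faster R-CNN. It assumes integer ROI coordinates that have already been projected onto the feature map, with exclusive end coordinates. Each of the 512 channels would be pooled independently in the same way. The function name and box convention are hypothetical.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def roi_max_pool(fmap: Matrix, roi: Tuple[int, int, int, int],
                 out_h: int = 7, out_w: int = 7) -> Matrix:
    """Max-pool roi = (y0, x0, y1, x1) of fmap into an out_h x out_w grid."""
    y0, x0, y1, x1 = roi
    rh, rw = y1 - y0, x1 - x0
    if rh <= 0 or rw <= 0:
        raise ValueError("empty RoI")
    out = []
    for i in range(out_h):
        # Bin i covers rows [floor(i*rh/out_h), ceil((i+1)*rh/out_h)),
        # which is never empty, even when the RoI is smaller than the grid.
        ys = y0 + math.floor(i * rh / out_h)
        ye = y0 + math.ceil((i + 1) * rh / out_h)
        row = []
        for j in range(out_w):
            xs = x0 + math.floor(j * rw / out_w)
            xe = x0 + math.ceil((j + 1) * rw / out_w)
            row.append(max(fmap[y][x] for y in range(ys, ye)
                           for x in range(xs, xe)))
        out.append(row)
    return out


if __name__ == "__main__":
    fmap = [[4 * y + x for x in range(4)] for y in range(4)]
    print(roi_max_pool(fmap, (0, 0, 4, 4), 2, 2))  # [[5, 7], [13, 15]]
```

Whatever the ROI size, the output always has the same shape. This fixed shape is what allows the following fully connected layers to have a fixed input width.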

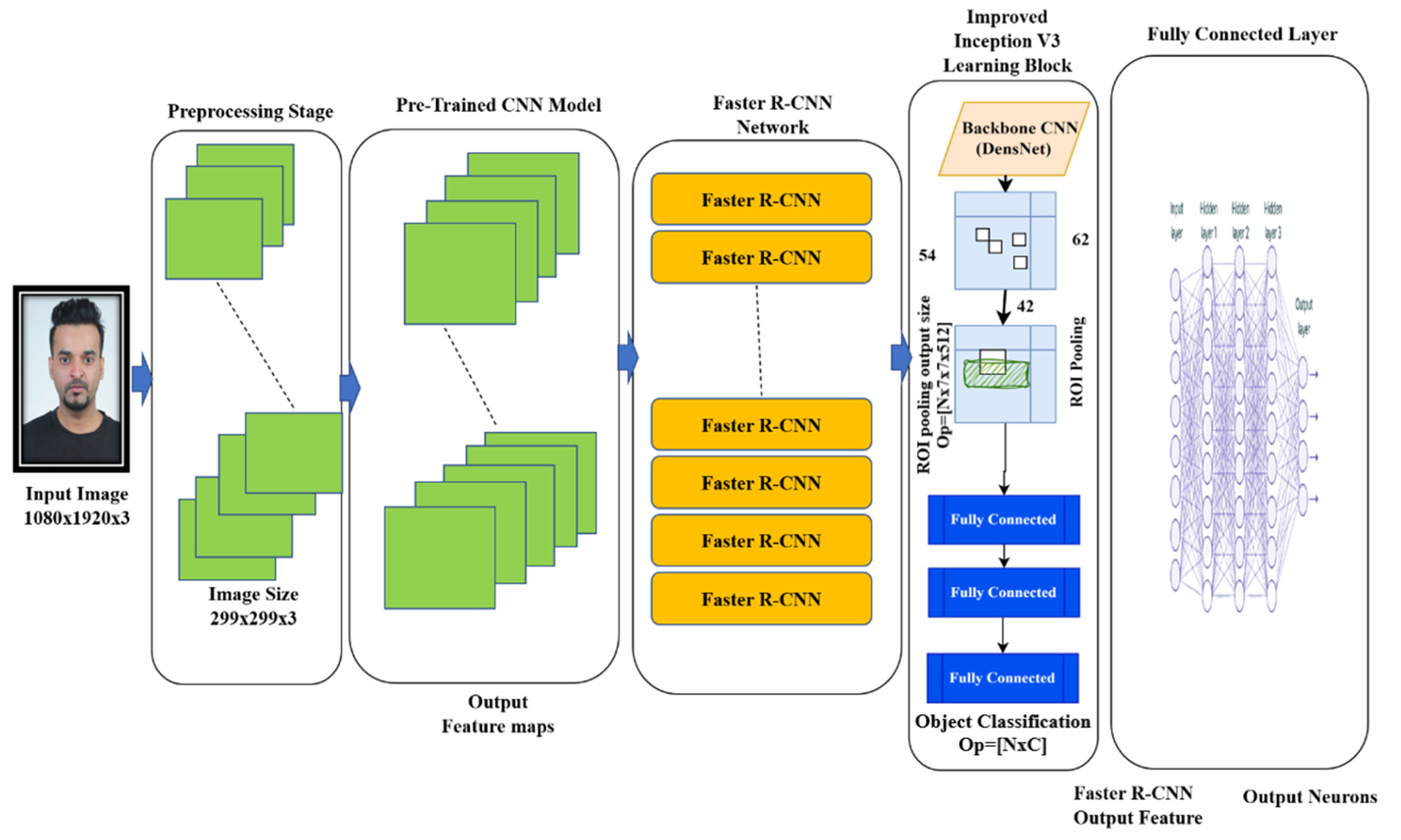

Figure 8 illustrates the high-level integration of the Faster R-CNN model and deep learning approaches. The process starts with an input image (typically 1080 × 1920 × 3), which is preprocessed and downsampled to 299 × 299 × 3 for consistency and compatibility with the subsequent neural network layers. The resized images are fed into trained CNNs (DenseNet, ResNet-50, the improved Inception-V3 deep architecture, and Inception-V2), which are trained on various datasets to extract important features, create feature maps, and capture the facial features required for accurate emotion recognition. This capability is enhanced by integrating improved Inception V3 building blocks with multiple convolutional layers. The ROI standardization step is important for preserving spatial information. The pooled ROIs are then passed through fully connected layers of 4096 units each, which provide a high level of feature abstraction for the final training stage. The output of these layers is divided into two branches: the first performs bounding box regression, adjusting the coordinates to localize faces accurately, and the second performs object classification, assigning each ROI to a facial emotion class. In the final stage, the output layer contains neurons that represent the different facial emotions, enabling a detailed classification of human emotions. This approach improves the accuracy of detection and classification and provides a reliable framework for advanced human–computer interaction.

3.3. Regional-CNN

The customized Faster R-CNN method uses a bounding box to define the ROI in the input image during the image processing step. The system applies scaling and cropping to keep the regions accurate and aligned in subsequent processing stages. Given the size and structure of the image region as input, the CNN component uses its learned features with SVM classification to generate accurate classification results.
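The cropping and scaling step can be sketched as follows. The bounding box is cut out of the image and then resized to a fixed size with nearest-neighbour sampling. The (y0, x0, y1, x1) box convention, the nearest-neighbour interpolation, and the helper names are assumptions for illustration. The same resize function also covers downsampling a full frame (e.g., 1080 × 1920) to a fixed network input such as 299 × 299.

```python
from typing import Any, List, Tuple

Grid = List[List[Any]]  # H x W grid of pixels (ints, RGB tuples, ...)


def crop(image: Grid, box: Tuple[int, int, int, int]) -> Grid:
    """Cut out box = (y0, x0, y1, x1); end coordinates are exclusive."""
    y0, x0, y1, x1 = box
    return [row[x0:x1] for row in image[y0:y1]]


def resize_nearest(image: Grid, out_h: int, out_w: int) -> Grid:
    """Nearest-neighbour resize to out_h x out_w (up- or downsampling)."""
    in_h, in_w = len(image), len(image[0])
    return [[image[(i * in_h) // out_h][(j * in_w) // out_w]
             for j in range(out_w)]
            for i in range(out_h)]


def crop_and_resize(image: Grid, box: Tuple[int, int, int, int],
                    out_h: int, out_w: int) -> Grid:
    """Crop the bounding box, then scale it to the fixed input size."""
    return resize_nearest(crop(image, box), out_h, out_w)


if __name__ == "__main__":
    img = [[4 * y + x for x in range(4)] for y in range(4)]
    print(crop_and_resize(img, (0, 0, 2, 2), 4, 4))
```

Nearest-neighbour sampling keeps the sketch dependency-free. Real pipelines usually prefer bilinear interpolation from an image library, which produces smoother downsampled faces.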

3.4. Data Augmentation

Data augmentation extends and enriches existing datasets by applying different image processing techniques to a single image, thereby increasing the amount and variety of training data. This approach is especially useful when the original dataset is limited, because it helps avoid overfitting and improves model generalization. A versatile and extensible dataset can be created with techniques such as changing RGB color channels, applying affine transformations, performing rotations and other geometric transformations, adjusting contrast and brightness, adding and removing noise, sharpening, flipping, cropping, and resizing. These transformations help the model recognize emotions accurately under different conditions and distortions. In smart healthcare systems, such enriched datasets enable deep learning models to classify facial emotions reliably and accurately, thereby enhancing patient interaction and care.

Figure 9 illustrates the diversity of the applied transformations, highlighting how each technique contributes to a robust dataset that improves the performance and resilience of emotion recognition models in real-world healthcare applications.
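Several of the transformations listed above are sketched below in plain Python: flipping, rotation, contrast and brightness adjustment, and additive noise. The image is a grayscale picture stored as nested lists. The parameter values (contrast factor 1.2, brightness offset 30, noise standard deviation 5) and the helper names are hypothetical choices for illustration. Production pipelines would normally use an image library instead.

```python
import random
from typing import List

Gray = List[List[int]]  # grayscale image, pixel values in [0, 255]


def clip(v: float) -> int:
    """Round and clamp a pixel value to the valid [0, 255] range."""
    return max(0, min(255, int(round(v))))


def hflip(img: Gray) -> Gray:
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def rotate90(img: Gray) -> Gray:
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def adjust(img: Gray, contrast: float = 1.0, brightness: float = 0.0) -> Gray:
    """out = contrast * (p - 128) + 128 + brightness, clipped to [0, 255]."""
    return [[clip(contrast * (p - 128) + 128 + brightness) for p in row]
            for row in img]


def add_noise(img: Gray, sigma: float, rng: random.Random) -> Gray:
    """Add zero-mean Gaussian noise with standard deviation sigma."""
    return [[clip(p + rng.gauss(0.0, sigma)) for p in row] for row in img]


def augment(img: Gray, rng: random.Random) -> List[Gray]:
    """Return several augmented variants of one image."""
    return [
        hflip(img),
        rotate90(img),
        adjust(img, contrast=1.2),
        adjust(img, brightness=30),
        add_noise(img, sigma=5.0, rng=rng),
    ]


if __name__ == "__main__":
    face = [[100, 250], [0, 128]]
    for variant in augment(face, random.Random(0)):
        print(variant)
```

Clipping after every photometric change keeps the pixel values valid. Passing an explicit random.Random instance makes the noisy variants reproducible.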

3.5. Transfer Learning Based on Human Emotion Recognition (HER)

As part of our approach, we leverage deep learning models and transfer learning strategies to improve performance on one-to-many tasks. Although transfer learning has predominantly been used in recognition domains such as speech recognition and computer vision-based image recognition [50], its applicability extends to diverse domains. In this study, we apply it to analyze and evaluate high-resolution images captured by the detector, which forms the basis for subsequent assessments. To facilitate this process, we use a reference dataset for training and apply a data augmentation strategy. Transfer learning removes the need to train an entirely new model from scratch, reducing the computational burden of extensive training epochs.

3.6. Transfer Learning

Transfer learning is a widely used technique that proceeds through several processes and stages, as illustrated in Figure 10. Each stage plays a distinct role in the overall transfer learning methodology.

In the first stage, the data are loaded into a pre-trained network for analysis. The weights assigned to the network depend on the availability of data. Next, the last layer is replaced with a variant that has fewer output channels, which speeds up learning. A new layer is then added and trained on 100 images covering 7 classes. The network’s predictions are subsequently evaluated for accuracy, and finally, the results are reported and the relevant outputs are generated.

In this study, transfer learning was applied to improve the efficiency and accuracy of the proposed HFER. Transfer learning allows us to leverage pre-trained CNN models such as DenseNet (201 layers), ResNet-50, and Improved Inception-V3, which have already been trained on large-scale datasets (e.g., ImageNet). The rationale behind using transfer learning is to exploit the robust feature extraction capabilities of these models while avoiding the need to train deep neural networks from scratch, which is computationally expensive and time-consuming. DenseNet was chosen specifically due to its dense connectivity property, where each layer receives inputs from all preceding layers. This architecture ensures feature reuse, mitigates the vanishing gradient problem, and improves gradient flow through the network. DenseNet generates more compact feature maps by concatenating feature outputs rather than summing them, which makes it ideal for extracting rich and high-dimensional features from facial expression images.
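Because DenseNet concatenates feature maps instead of summing them, its channel count grows linearly inside each dense block. The sketch below works this out for both the 121-layer and 201-layer variants named in this section. It uses the standard published DenseNet configurations: growth rate 32, 64 initial channels, and transition compression 0.5. These are the reference ImageNet settings and are assumed here rather than taken from this study.

```python
from typing import List, Sequence


def densenet_channels(block_layers: Sequence[int],
                      growth_rate: int = 32,
                      init_channels: int = 64,
                      compression: float = 0.5) -> List[int]:
    """Number of channels at the output of each dense block.

    Inside a block, every layer appends growth_rate new feature maps to
    the concatenated input; the transition layer between two blocks
    multiplies the channel count by compression.
    """
    channels = init_channels
    outputs = []
    for b, n_layers in enumerate(block_layers):
        channels += n_layers * growth_rate  # concatenation, not summation
        outputs.append(channels)
        if b < len(block_layers) - 1:
            channels = int(channels * compression)
    return outputs


if __name__ == "__main__":
    print(densenet_channels((6, 12, 24, 16)))  # DenseNet-121: [256, 512, 1024, 1024]
    print(densenet_channels((6, 12, 48, 32)))  # DenseNet-201: [256, 512, 1792, 1920]
```

The final values (1024 for DenseNet-121 and 1920 for DenseNet-201) are the widths of the feature vectors that Global Average Pooling produces for each backbone.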

Steps for the transfer learning and DenseNet model

Dataset Preprocessing: Images were collected from benchmark datasets (FER-2013, CK+, EMOTIC, and AffectNet). Preprocessing steps included resizing images to match the input dimensions of the pre-trained CNN models, normalizing pixel values, and performing data augmentation (e.g., rotation, flipping, and contrast adjustment) to increase dataset variability and reduce overfitting.

Feature Extraction with Transfer Learning: Pre-trained DenseNet, ResNet-50, Improved Inception-V3, and Inception V2 models were employed as feature extractors. The convolutional base of each model was retained to extract high-level features from input images. Fully connected layers were removed, and a Global Average Pooling (GAP) layer was added at the end of the convolutional stage to obtain a compact feature representation. DenseNet extracted concatenated feature maps, leveraging its efficient layer connections to capture fine-grained facial details critical for HFER.

Feature Fusion and Concatenation: The features extracted from the four CNN models (DenseNet, ResNet-50, Improved Inception-V3, and Inception V2 models) were fused and concatenated to form a single, unified feature vector. This fusion process integrates diverse representations of facial features, enhancing the robustness of the system by capturing complementary information from each model.

Classification: The feature vector was passed to an ensemble classification model consisting of CNN, GRU (gated recurrent unit), and MLP (multi-layer perceptron) classifiers. Each classifier produced predictions for HFER, and the final class label was determined using a weighted voting mechanism, as shown in Figure 6.
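The Global Average Pooling and concatenation steps can be sketched as follows. The per-backbone output widths in ASSUMED_GAP_DIMS are those of the standard ImageNet versions of these networks: DenseNet-121, ResNet-50, Inception-V3, and BN-Inception taken as Inception-V2. They are assumptions, because the improved Inception-V3 used here may differ. The fixed concatenation order is likewise a hypothetical choice.

```python
from typing import Dict, List

FeatureMap = List[List[List[float]]]  # C x H x W

# Assumed GAP output widths of standard ImageNet backbones (hypothetical here).
ASSUMED_GAP_DIMS = {
    "DenseNet-121": 1024,
    "ResNet-50": 2048,
    "Inception-V3": 2048,
    "Inception-V2": 1024,
}


def global_average_pool(fmap: FeatureMap) -> List[float]:
    """Average each channel over all spatial positions -> length-C vector."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in fmap]


def fuse(features: Dict[str, List[float]], order: List[str]) -> List[float]:
    """Concatenate per-backbone GAP vectors in a fixed order."""
    fused: List[float] = []
    for name in order:
        fused.extend(features[name])
    return fused


if __name__ == "__main__":
    print(global_average_pool([[[1, 2], [3, 4]], [[0, 0], [0, 8]]]))  # [2.5, 2.0]
    print(sum(ASSUMED_GAP_DIMS.values()))  # 6144-dimensional fused vector
```

The concatenation order must be identical for every image. Otherwise, a given position in the fused vector would refer to different backbone features from one sample to the next, and the downstream CNN, GRU, and MLP classifiers could not learn a consistent mapping.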

By using DenseNet and other pre-trained models through transfer learning, we reduced the computational cost and training time while achieving high accuracy. DenseNet’s dense connections allowed the model to effectively utilize features from earlier layers, which are crucial for recognizing subtle facial expressions that convey HFER. Transfer learning provided a generalizable solution, as the models were fine-tuned on domain-specific datasets without requiring extensive retraining.

Transfer Learning Process

The proposed model leverages transfer learning with pretrained models, including DenseNet-121, ResNet-50, Inception-V3, and Inception-V2, to extract robust and diverse features for HFER. The pretrained models were chosen due to their proven ability to learn high-quality feature representations from large-scale datasets such as ImageNet.

DenseNet-121: Dense connections ensure feature reuse and improve gradient flow, capturing fine-grained details for robust feature extraction.

ResNet-50: Residual connections mitigate the vanishing gradient problem, allowing the model to learn deep hierarchical features.

Inception-V3 and Inception-V2: Multi-scale parallel convolutional filters capture features at different scales, which is critical for identifying subtle facial expressions.

Feature Extraction: For each pretrained model, the convolutional layers were retained to serve as fixed feature extractors.

Fine-Tuning: The fully connected layers of the pretrained models were replaced with task-specific layers (e.g., dense layers with a SoftMax classifier for emotion categories).

Selected layers from the convolutional base of the pretrained models were fine-tuned to adapt the feature extraction process to the emotion recognition task.

Ensemble Integration: Features from the pretrained models were concatenated to form a unified feature vector, which was fed into the ensemble of classifiers (CNN, GRU, MLP).

Learning Rate: A learning rate of 0.0001 was used for fine-tuning the convolutional layers, while a learning rate of 0.001 was used for training the fully connected layers.

Batch Size: A batch size of 32 was selected to balance computational efficiency and memory usage.

Optimizer: The Adam optimizer was employed for its ability to handle sparse gradients and adapt the learning rate dynamically.

Regularization: Dropout (rate = 0.5) was applied to prevent overfitting in fully connected layers. L2 weight decay regularization (λ = 0.0001) was used to penalize large weights.

The training process involved freezing the early convolutional layers of the pretrained models during the initial epochs to preserve learned features from ImageNet and gradually unfreezing these layers to fine-tune the feature extraction for emotion recognition.

Data augmentation techniques, such as horizontal flipping, random rotation, and brightness adjustments, were applied to improve generalization.
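The hyperparameters listed above can be collected into a single configuration, and the gradual unfreezing schedule can be expressed as a function of the epoch. The sketch below is framework-agnostic plain Python. Several details are hypothetical because the text does not specify them: the field names, how the backbone is divided into stages, how many stages are trainable from the start, and the unfreezing interval.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class FineTuneConfig:
    """Hyperparameters reported in the text; field names are hypothetical."""
    lr_conv: float = 1e-4        # fine-tuning rate for convolutional layers
    lr_head: float = 1e-3        # rate for the new fully connected layers
    batch_size: int = 32
    optimizer: str = "adam"
    dropout: float = 0.5         # applied in the fully connected layers
    weight_decay: float = 1e-4   # L2 coefficient (lambda)


def trainable_stages(epoch: int, n_stages: int,
                     initially_trainable: int = 1,
                     epochs_per_stage: int = 5) -> List[int]:
    """Indices of backbone stages that receive gradient updates at `epoch`.

    Stages are numbered 0 (earliest) to n_stages - 1 (deepest). Early
    stages start frozen; one more stage is unfrozen every
    `epochs_per_stage` epochs, moving from the deepest stage backwards.
    """
    k = min(n_stages, initially_trainable + epoch // epochs_per_stage)
    return list(range(n_stages - k, n_stages))


def l2_penalty(weights: Sequence[float], lam: float) -> float:
    """L2 weight-decay term lam * sum(w^2) added to the training loss."""
    return lam * sum(w * w for w in weights)


if __name__ == "__main__":
    cfg = FineTuneConfig()
    for epoch in (0, 5, 10, 15):
        print(epoch, trainable_stages(epoch, n_stages=4))
```

Unfreezing from the deepest stage backwards reflects the usual reasoning behind this schedule. Early layers encode generic edges and textures that transfer well from ImageNet, while deeper layers are the most task-specific and benefit first from the smaller fine-tuning rate.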

4. Results

The ensemble learning architecture incorporates a diverse range of multi-level classifier combinations trained on a variety of feature sets. First, we produce distinct feature sets using distinct feature extraction approaches, as described in the feature preparation stages. Our methodology uses four CNN models to extract high-dimensional features from training and validation images: DenseNet, ResNet-50, the improved Inception-V3 deep architecture, and Inception-V2. We then fuse the extracted features into a single, consolidated feature vector. This section assesses the performance of the proposed HFER approach on the benchmark datasets. We conducted a thorough analysis comparing the methods currently in use with the Residual Ensemble System (RES) employed in this investigation, using both qualitative and quantitative analyses of the collected data. To evaluate the proposed system, each of the four datasets is randomly divided into 70% training data and 30% validation data. The simulations were run in MATLAB R2021a on an NVIDIA RTX 2080 Ti GPU with 4 GB of GDDR4 memory and a 352-bit bus.
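The random 70/30 split can be sketched with Python's standard random module. The fixed seed is an assumption added for reproducibility, because the text does not state whether the split was seeded or stratified by class. The function name is hypothetical.

```python
import random
from typing import Iterable, List, Tuple, TypeVar

T = TypeVar("T")


def random_split(items: Iterable[T], train_frac: float = 0.7,
                 seed: int = 0) -> Tuple[List[T], List[T]]:
    """Shuffle the items and split them into (train, validation) lists."""
    if not 0.0 < train_frac < 1.0:
        raise ValueError("train_frac must be in (0, 1)")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = int(round(train_frac * len(shuffled)))
    return shuffled[:n_train], shuffled[n_train:]


if __name__ == "__main__":
    train, val = random_split(range(1000))
    print(len(train), len(val))  # 700 300
```

A stratified variant, which applies the same split to each emotion class separately, would keep the class proportions identical in both subsets. That would be a reasonable alternative for imbalanced datasets such as FER-2013.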

4.1. Datasets

Evaluating the HFER method proposed in this study requires considering several factors, including workload and time constraints and the need for a comprehensive evaluation of algorithm performance. This study uses the FER-2013, CK+, AffectNet, and EMOTIC datasets. Combining these datasets covers a wide range of emotions and enables a robust, detailed assessment of the model’s performance in different scenarios, highlighting its advantages over existing methods on various benchmarks. The detailed results of this evaluation, which demonstrate the capabilities and potential of the proposed HFER approach, are discussed in the following subsections.

4.1.1. FER-2013 Dataset

The FER-2013 dataset covers these important aspects of facial recognition well, whereas other datasets may have limitations such as low contrast or occlusion of facial features. Figure 11 shows example images from the FER-2013 dataset. To extend the dataset, we used Google’s application programming interfaces (APIs) to automatically retrieve additional images from Google Images. Combining FER-2013 with these additional images provides a comprehensive and diverse set of facial emotions and a solid foundation for evaluating the proposed method. In the following paragraphs, we analyze the model’s performance in detail, explain its functionality, and present the facial emotion recognition results obtained in different situations.

Our study makes extensive use of the FER-2013 dataset to analyze and evaluate the proposed method. The dataset consists of 35,887 images, all normalized to a resolution of 48 × 48 pixels; most of the images were captured in real-world environments, which makes them representative of real-world scenarios. For the training and testing phases, we assigned 28,709 images to the training set and 3589 images to the test set. An important aspect of our study is the effective identification of people’s neutral emotions as a baseline reference for sentiment analysis.

4.1.2. CK+ Dataset

The CK+ (Extended Cohn–Kanade) dataset consists of facial emotion images specifically designed for HFER [46]. It is a publicly available dataset for HFE detection and analysis and a valuable resource for activity-based identification. The data are provided in a standardized format to enable comprehensive analysis. The dataset contains a total of 593 image sequences from 123 subjects. In our study, we extracted the final image of each sequence, which shows the peak expression; this frame has been widely used in previous studies as a reference for FER.

Figure 12 shows a representative example of seven images from this dataset, giving an overview of their content and illustrating the different representations captured. This standardized approach ensures consistency and reliability when evaluating the proposed method.

4.1.3. EMOTIC Dataset

The EMOTIC dataset (from Emotions in Context) is a large collection of images of people in real environments, each annotated with the person’s apparent emotional state. Perceiving the emotions of others is very important in daily life, because people often change their behavior based on the emotions they perceive when interacting with others. Automatic emotion recognition has therefore been used in various applications, including human–machine interaction, monitoring, robotics, gaming, and entertainment. Emotions are modeled either as distinct categories or as points in a continuous space of emotional dimensions. The dataset contains 23,571 images with 34,320 annotated people, making it a rich resource for facial emotion recognition research, with images showing people doing different activities in different environments.

Figure 13 shows example images from the EMOTIC dataset. Annotators labeled each image based on their perception of the person’s emotional state. This approach exploits the empathy, common sense, and natural ability of human observers to infer emotions from visual cues, and it provides a solid basis for evaluating our HFER method.

4.1.4. AffectNet Dataset

The AffectNet dataset is of great importance in the field of emotion recognition systems (ERS) [48] and provides a large number of annotated facial images from real scenes. AffectNet offers a comprehensive dataset for studying facial emotions (FE), with over 1 million facial images collected from the internet by querying 3 popular search engines with 1250 emotion-related keywords in 6 languages. A major subset of about 440,000 images was annotated with seven different emotional attributes. As a result, AffectNet is the most comprehensive publicly available dataset for evaluating emotion prediction (EP), valence, and arousal, and it enables automated research in the field of facial emotion recognition.

This study used deep neural networks to identify images and predict emotional intensity and valence through a hierarchical model with two primary branches. As seen in Figure 14, the eight emotion categories used for the accuracy rating are happiness, surprise, sadness, fear, disgust, anger, contempt, and neutrality. This enables a thorough assessment of the model’s identification and analysis performance across the different emotions, each of which is associated with specific facial expressions.

4.2. Experimental Evaluation

As discussed in the previous section, the effectiveness of the proposed HFER method was extensively evaluated using several standard datasets that many researchers have used for multiple purposes; in this study, we used them specifically for human emotion recognition. We carefully analyzed the collected data and compared the proposed technique with current state-of-the-art RES techniques to demonstrate its superiority. The results were evaluated using both quantitative and qualitative measures. The proposed system uses four benchmark datasets, each randomly split into a training set and a test set; the training set is much larger than the test set, which supports a robust training process. The average accuracy is calculated as follows:

$$\text{Average Accuracy} = \frac{1}{n} \sum_{i=1}^{n} \text{Accuracy}_i$$

Here, $\text{Accuracy}_i$ represents the accuracy of the $i$-th class, and $n$ is the total number of classes. The summation runs over all classes, adding the accuracy of each, and the result is divided by the total number of classes to obtain the average accuracy. Similarly, the average loss is calculated as

$$\text{Average Loss} = \frac{1}{n} \sum_{i=1}^{n} \text{Loss}_i$$

where $\text{Loss}_i$ represents the loss of the $i$-th class. The sum runs over all classes, adding each loss, and the result is divided by the total number of classes to obtain the average loss.
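The two class-averaged metrics above can be computed directly from a confusion matrix and a list of per-class losses. The sketch assumes that the accuracy of the i-th class is the fraction of class-i samples classified correctly, that is, the diagonal entry divided by the row sum. The text does not define it further, and the function names are hypothetical.

```python
from typing import List, Sequence


def per_class_accuracy(confusion: Sequence[Sequence[int]]) -> List[float]:
    """confusion[i][j] = number of class-i samples predicted as class j.

    Accuracy of class i is taken as confusion[i][i] / (row i total), i.e.
    the fraction of class-i samples classified correctly.
    """
    accs = []
    for i, row in enumerate(confusion):
        total = sum(row)
        if total == 0:
            raise ValueError(f"class {i} has no samples")
        accs.append(row[i] / total)
    return accs


def class_average(values: Sequence[float]) -> float:
    """(1/n) * sum of per-class values: average accuracy or average loss."""
    return sum(values) / len(values)


if __name__ == "__main__":
    cm = [[8, 2], [1, 9]]
    print(per_class_accuracy(cm))                 # [0.8, 0.9]
    print(class_average(per_class_accuracy(cm)))  # about 0.85
```

Averaging per class, rather than over all samples, gives every emotion the same influence on the metric. This matters for imbalanced datasets in which categories such as "happy" dominate.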

4.2.1. Evaluation of the EMOTIC Dataset

The experiments on the EMOTIC dataset used a random split strategy. In the initial phase, the augmented EMOTIC dataset was introduced, adding 6237 training images to further improve the model’s performance, and 792 images were used for model validation.

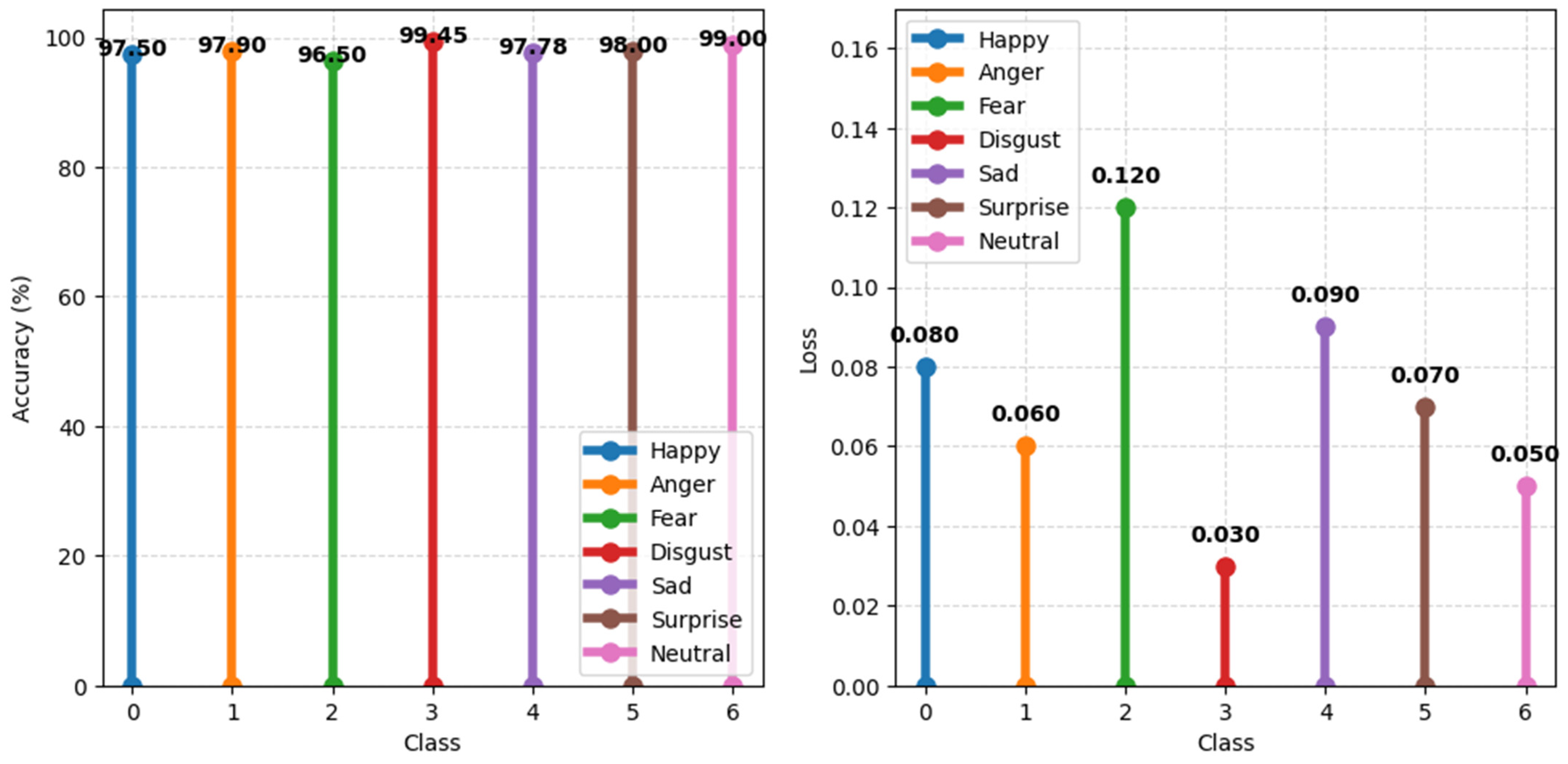

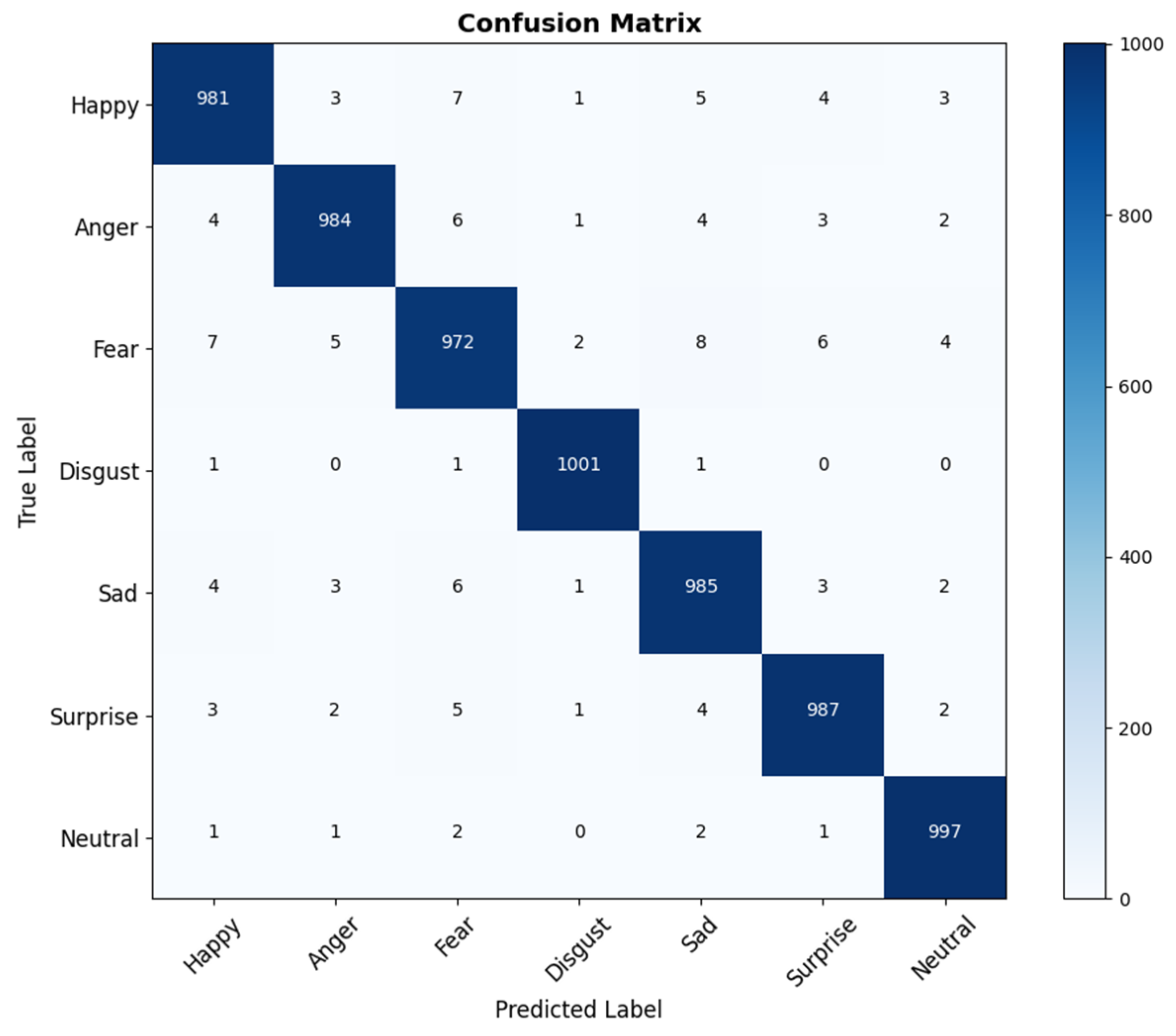

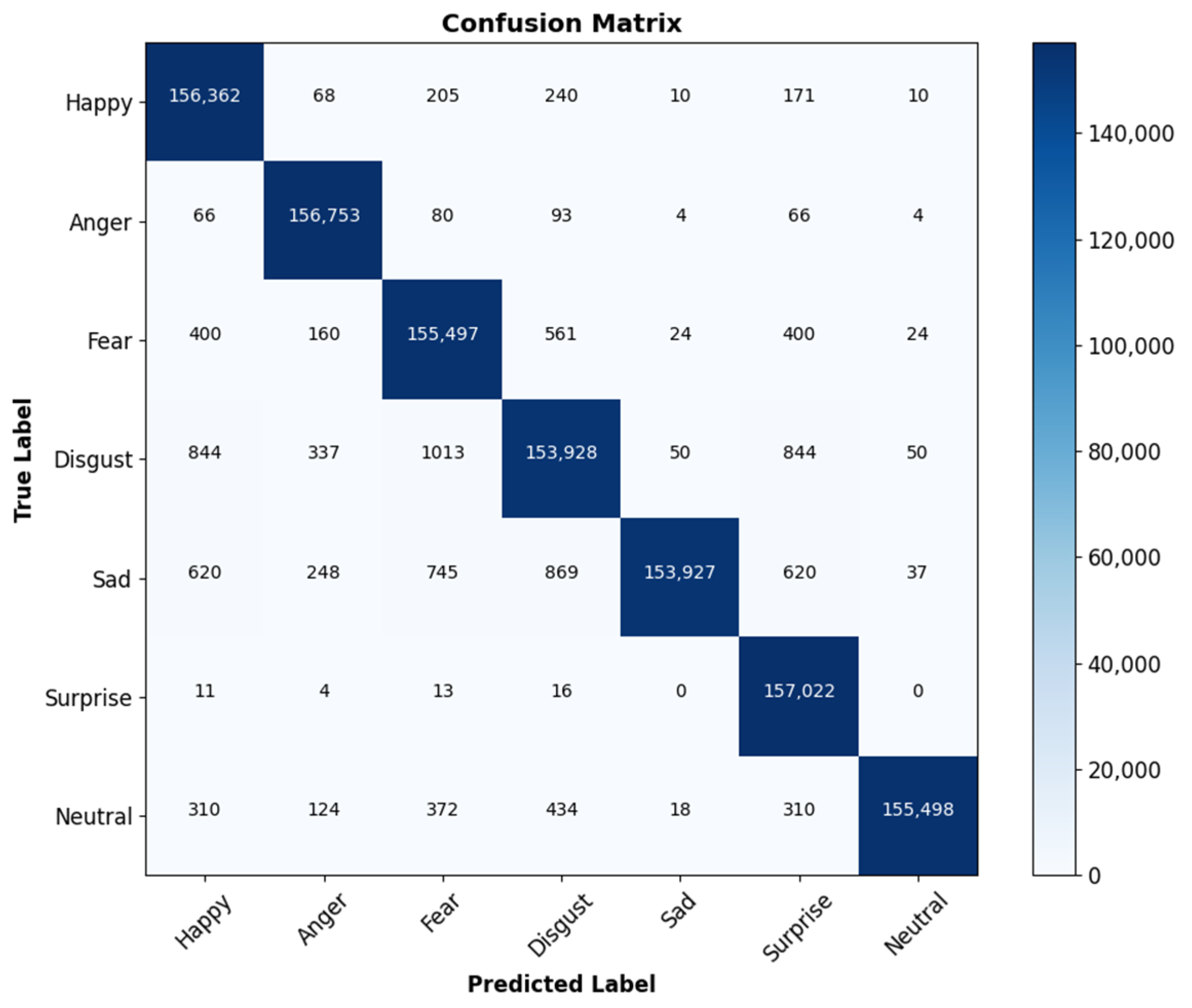

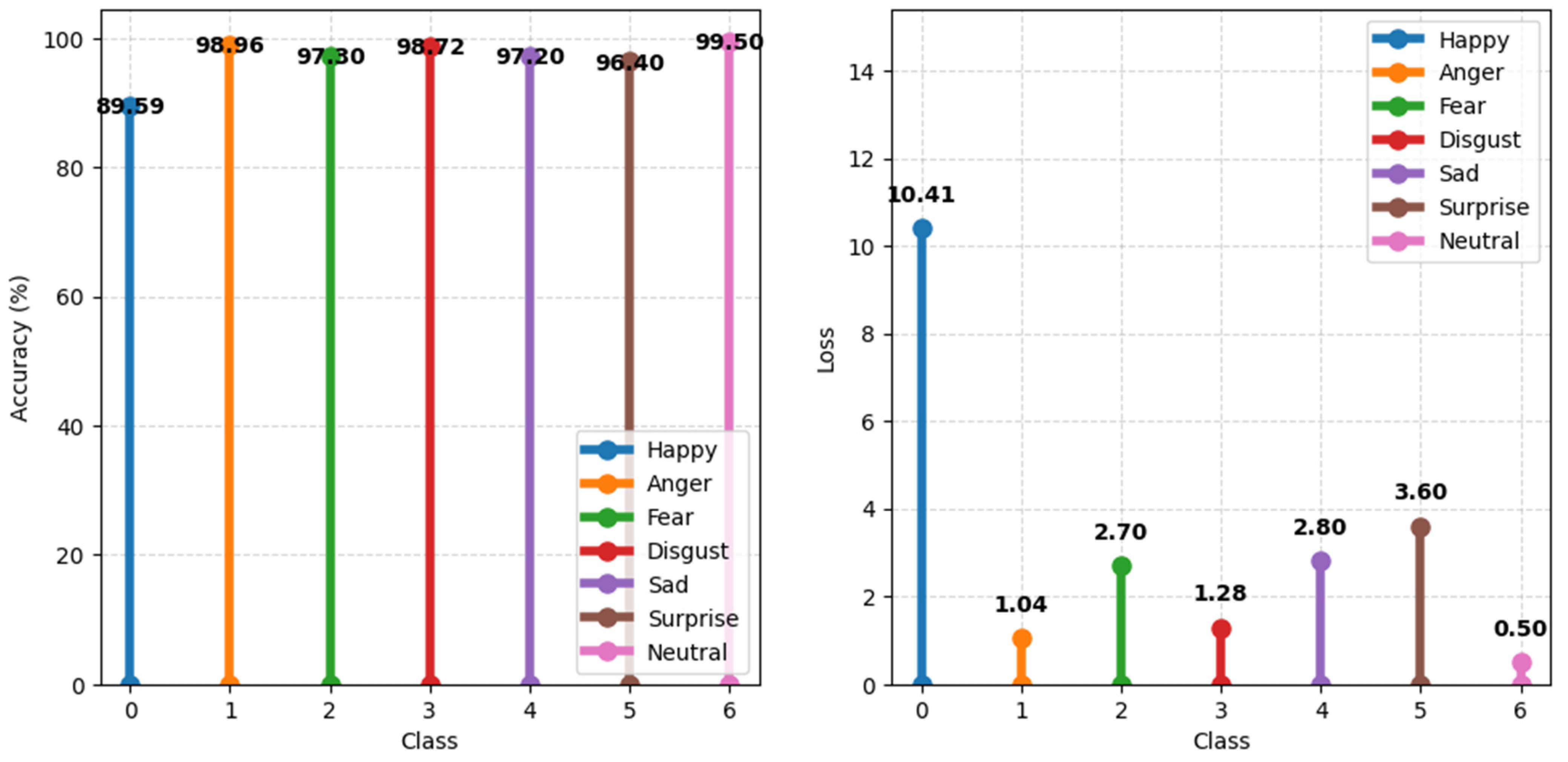

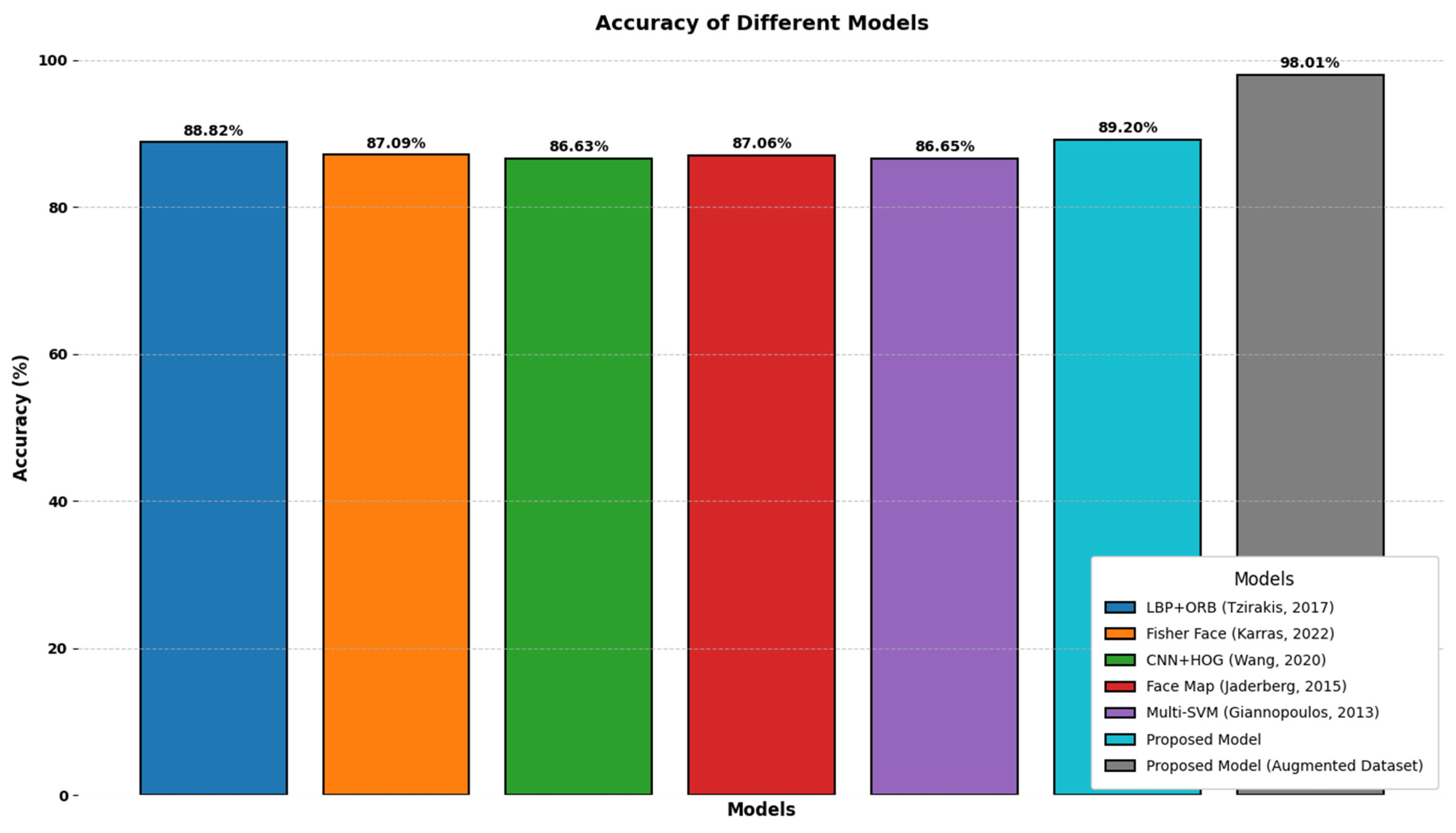

Figure 15 shows the class-wise performance of the DenseNet-CNN model trained on the augmented EMOTIC dataset, which achieves an average class-wise accuracy of 98.01%, and Figure 16 shows the corresponding confusion matrix. We also observed that the proposed DenseNet-CNN model scaled linearly with the number of epochs, particularly for large datasets.

4.2.2. Evaluation on the CK+ Dataset

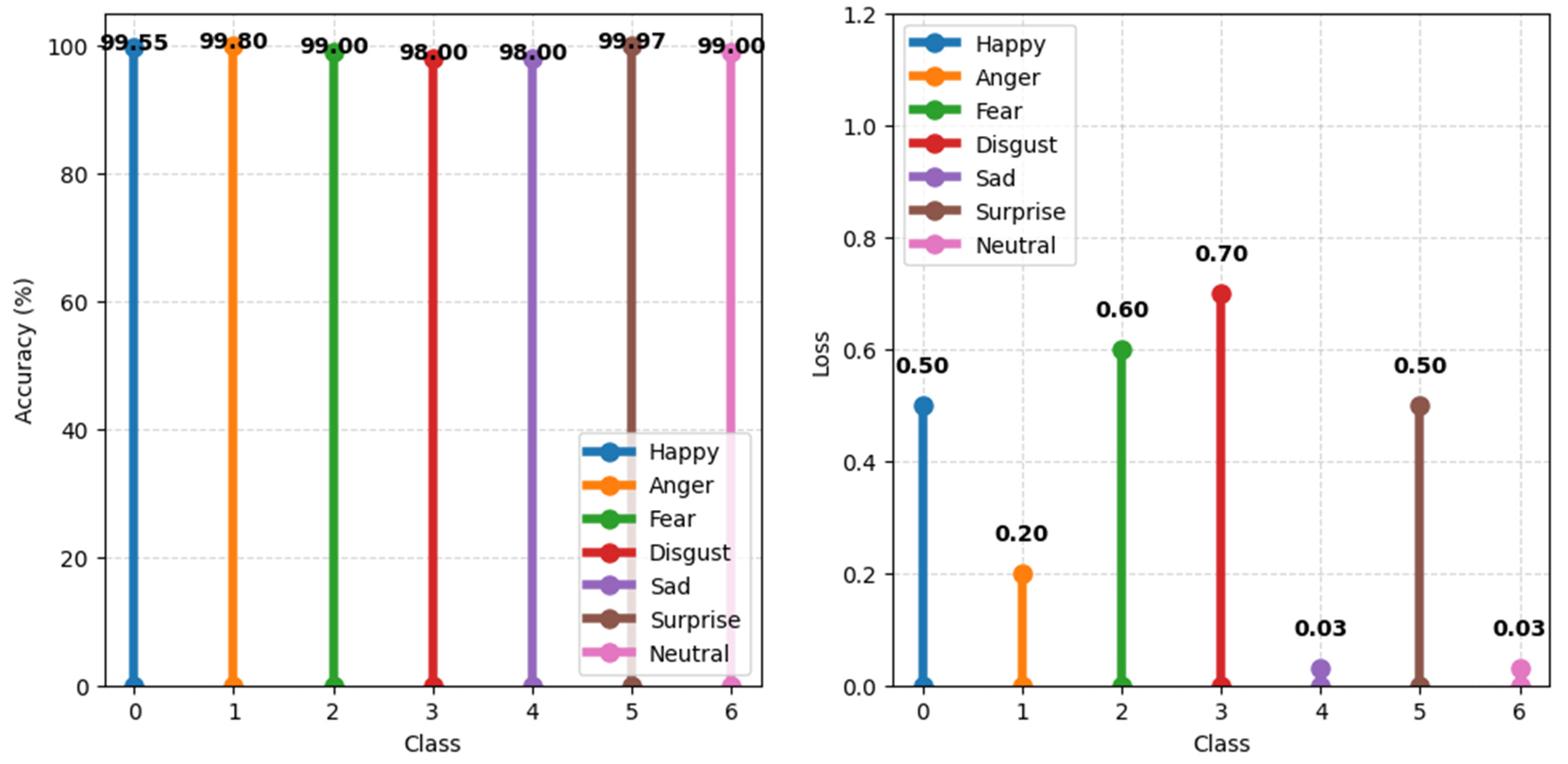

During the training and validation stages, we used the augmented CK+ dataset to evaluate the class-wise accuracy and loss of the DenseNet-CNN model, with a random split to ensure robust experimentation. The augmented CK+ dataset comprises 14,652 training images and 6336 validation images.

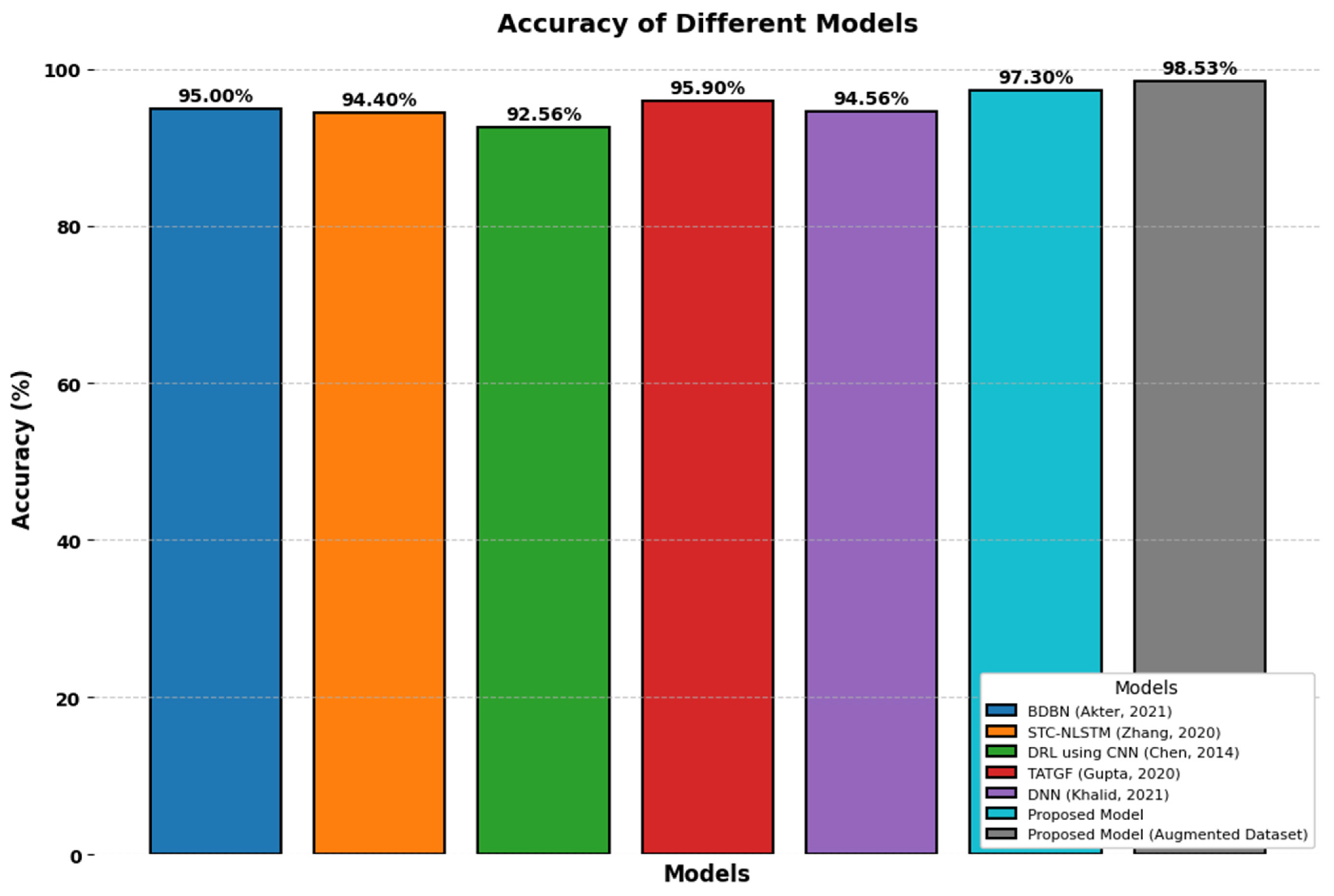

Figure 17 shows the class-wise accuracy and loss plots of the DenseNet-CNN model on the CK+ dataset, and Figure 18 shows the confusion matrix. On the augmented CK+ dataset, the model achieves an average accuracy of 98.53% across all classes, and the accompanying loss graph further demonstrates the efficacy of the DenseNet-CNN model.

4.2.3. Evaluation on the FER-2013 Dataset

Figure 19 shows the class-wise accuracy and loss plots for the training and validation stages of the DenseNet-CNN model. To ensure a dependable analysis, we used random data splits. We added a large number of new images to the augmented FER-2013 dataset, which comprises 777,777 training images and 321,691 validation images in total. On the augmented FER-2013 dataset, the model achieves an overall average class-wise accuracy of 99.32% (Figure 19). The validation loss plot of the DenseNet-CNN model provides an additional check of how well the proposed method works on the FER-2013 dataset, and Figure 20 shows the confusion matrix.

4.2.4. Evaluation of the AffectNet Dataset

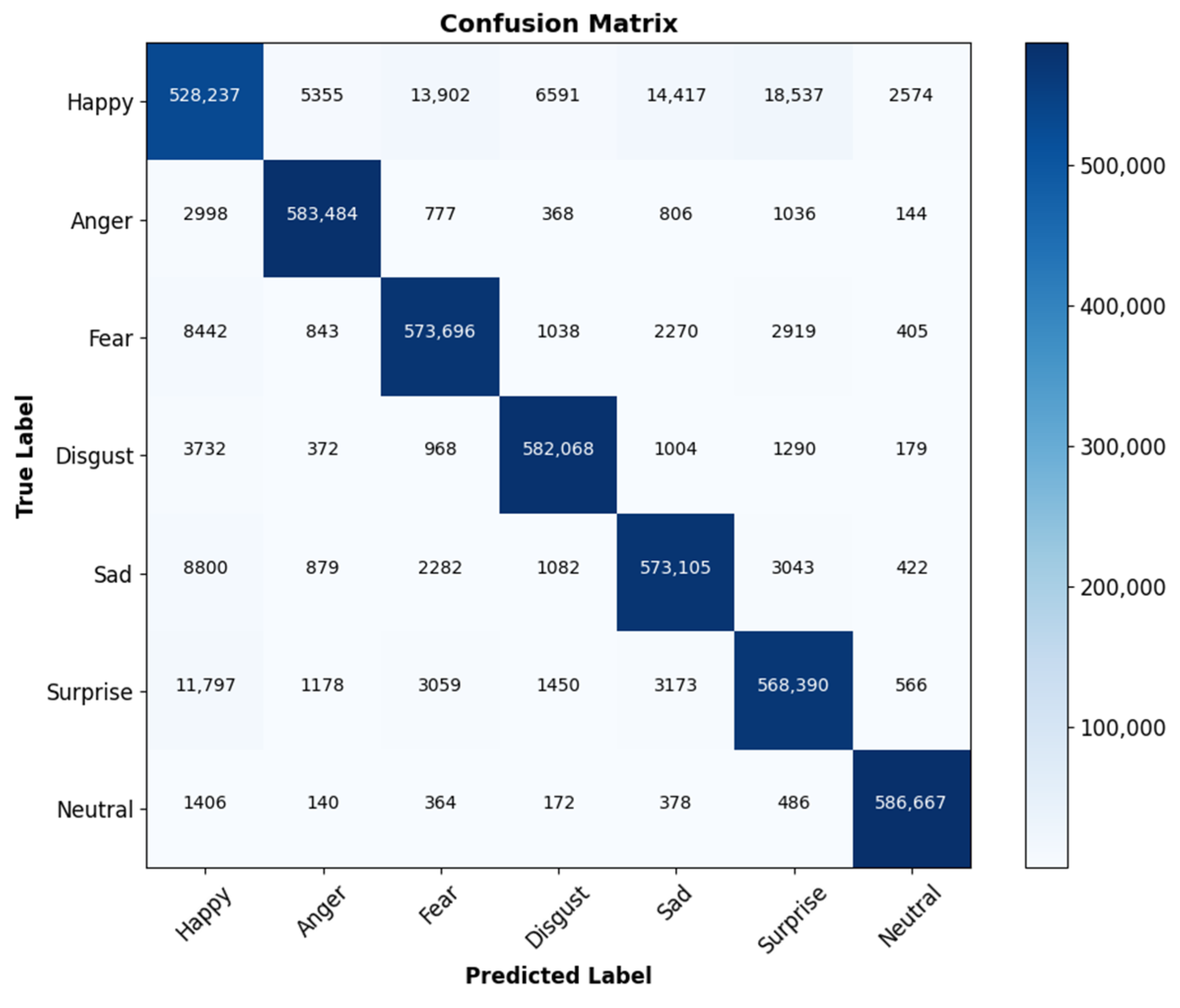

Figure 21 shows the results of the performance evaluation of the DenseNet-CNN model on the augmented AffectNet dataset, including training and validation accuracy. We used random data splits to ensure a robust analysis. The augmented AffectNet data comprise 2,817,105 training images (approximately 70%) and 1,310,190 validation images (approximately 30%). The DenseNet-CNN model performed strongly on this dataset.

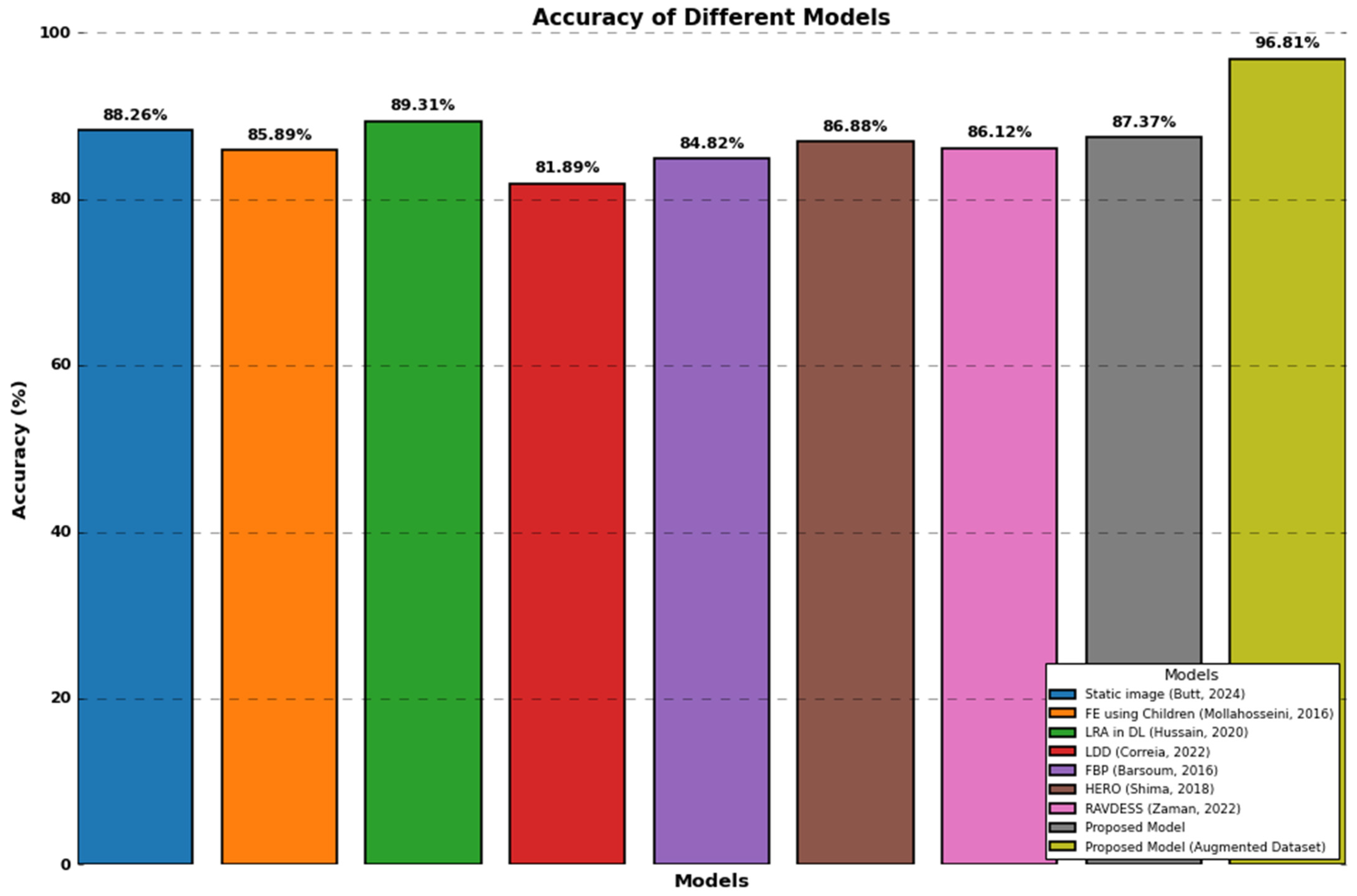

Figure 21 also shows the effect of augmenting the AffectNet dataset with additional samples generated by augmentation techniques, which raised the average accuracy across all classes to 96.81%; the loss graph of the DenseNet-CNN model reflects this improvement. These results confirm the effectiveness of the proposed method in identifying emotions in the AffectNet dataset, and Figure 22 shows the confusion matrix.

4.2.5. Computational Efficiency Analysis

The analysis compares the computational performance of this architecture (which uses DenseNet, ResNet-50, Improved Inception-v3, and Inception-v2) with traditional models (e.g., ResNet-50 or VGG16). The detailed analysis is shown in Table 1.

Training Time: The proposed architecture requires slightly more time per epoch compared to ResNet-50 due to the ensemble feature extraction process and concatenation. However, the ensemble’s combined feature representation justifies this trade-off, as it enhances overall accuracy and robustness.

Inference Time: Inference for a single image takes 19 ms, which is slightly higher than ResNet-50 but competitive with VGG16. The ensemble classifiers (CNN, MLP, GRU) contribute to this increase, but they also provide improved classification performance.

Memory Usage: The architecture uses 8.1 GB of memory during training, higher than ResNet-50 due to the simultaneous use of multiple feature extraction models (DenseNet, ResNet-50, Inception-v3, and Inception-v2). During inference, memory usage is 3.3 GB, which is within acceptable limits for high-performance architectures.

FLOPs (Computational Cost): The proposed model requires 9.2 billion FLOPs per forward pass. This is higher than both ResNet-50 and VGG16 due to the ensemble of multiple feature extractors and classifiers, but the increased accuracy and robustness justify this computational cost.

Feature Extraction Advantage: By combining DenseNet, ResNet-50, and two versions of Inception models, the proposed architecture captures multi-scale, diverse features that significantly enhance performance, particularly for subtle and complex emotional states.

Ensemble Classifiers: Using CNN, GRU, and MLP classifiers as an ensemble improves classification accuracy by leveraging different decision mechanisms. A weighted voting mechanism further ensures robust predictions; the hypothetical results are shown in Table 2.

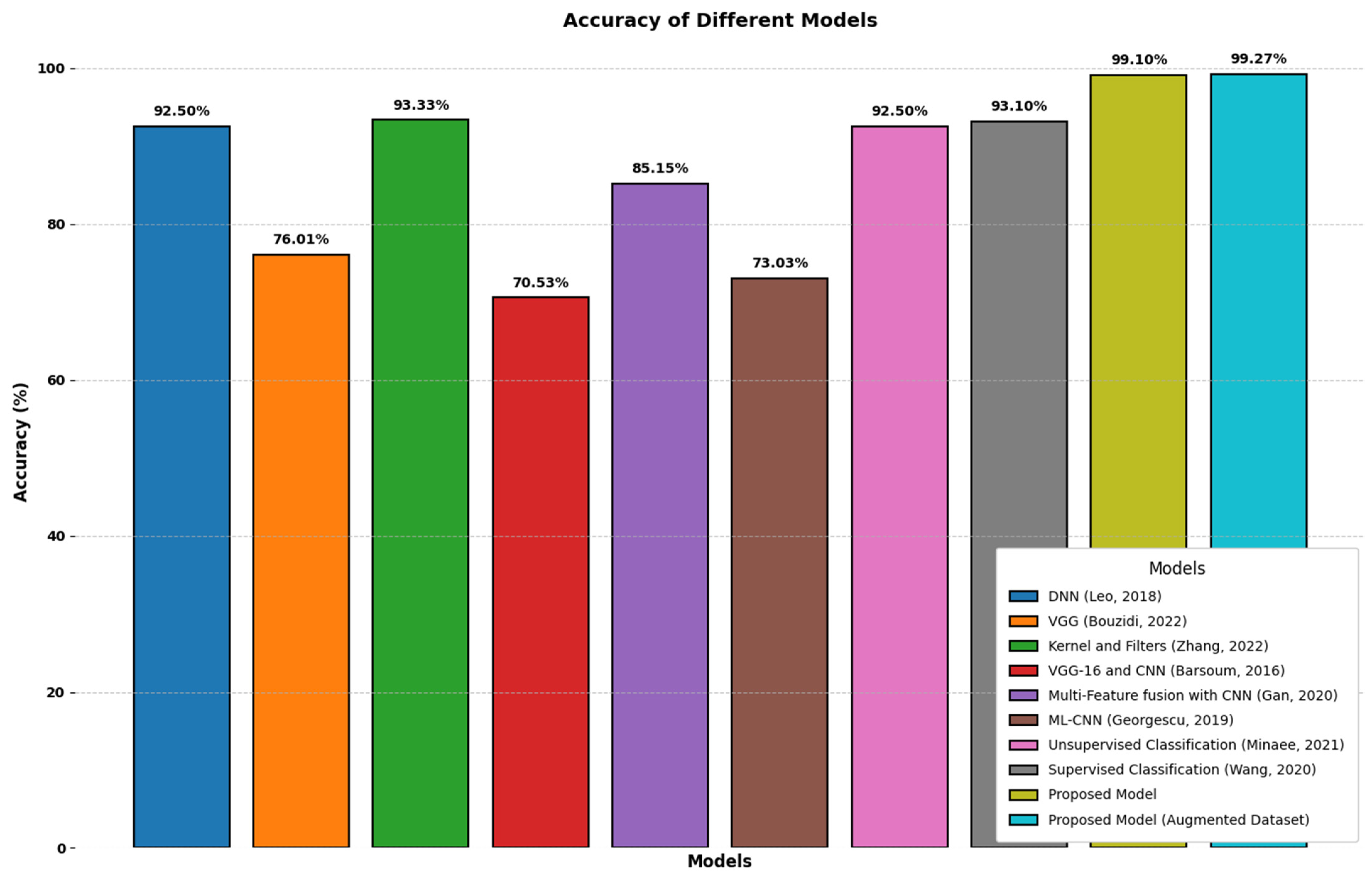
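The weighted voting over the CNN, GRU, and MLP outputs can be sketched as weighted soft voting: each classifier's class probabilities are scaled by a per-classifier weight, averaged, and the highest-scoring class wins. The text does not give the weights or say whether probabilities or hard labels are combined, so the soft-voting rule, the function name `weighted_vote`, and the toy numbers below are assumptions.

```python
import numpy as np


def weighted_vote(probs: dict, weights: dict) -> np.ndarray:
    """Weighted soft voting over classifiers.

    probs[name] holds class probabilities of shape (batch, n_classes);
    weights[name] is that classifier's (hypothetical) vote weight.
    Returns the index of the winning class for each sample.
    """
    total = sum(weights[name] for name in probs)
    combined = sum(weights[name] * probs[name] for name in probs) / total
    return combined.argmax(axis=1)


# Toy 3-class outputs for one image (made-up numbers).
probs = {
    "cnn": np.array([[0.7, 0.2, 0.1]]),
    "gru": np.array([[0.2, 0.5, 0.3]]),
    "mlp": np.array([[0.1, 0.6, 0.3]]),
}
pred_weighted = weighted_vote(probs, {"cnn": 0.5, "gru": 0.25, "mlp": 0.25})
pred_equal = weighted_vote(probs, {"cnn": 1.0, "gru": 1.0, "mlp": 1.0})
print(pred_weighted, pred_equal)  # [0] [1]
```

With these toy numbers the CNN's larger weight decides the vote, whereas with equal weights the GRU and MLP outvote it; this is why the choice of weights matters in a weighted ensemble.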

4.3. Comparison of Experimental Analysis

In this study, we perform a comprehensive evaluation of the proposed HFER model on four datasets: EMOTIC, FER-2013, CK+, and AffectNet. We rigorously assess model performance through training and validation accuracy and loss analysis. Our goal is to demonstrate the effectiveness of the proposed model on these datasets while tracking both validation loss and accuracy. Before presenting the evaluation, we outline the training procedure, which keeps the same architecture and hyperparameters for the training and test datasets. The network weights are initialized from a zero-mean Gaussian distribution with a standard deviation of 0.05. We use an Adam-type optimizer, an adaptive variant of stochastic gradient descent, with a learning rate of 0.005; through experiments, we found that reducing the learning rate to 0.001 gave better results. Additionally, an improved version of L2 regularization was implemented to improve model performance.
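A minimal NumPy sketch of the training setup described above, assuming that the initialization means zero-mean Gaussian weights with a standard deviation of 0.05 and that the final learning rate is 0.001. The "improved" L2 variant is not specified, so plain L2 regularization (added to the gradient) stands in for it, and its coefficient `l2` is a hypothetical value. The `Adam` class is a hand-written illustration of the standard Adam update, not a library API.

```python
import numpy as np


def init_weights(shape, std=0.05, seed=0):
    """Zero-mean Gaussian initialization (std=0.05 is our reading of the text)."""
    return np.random.default_rng(seed).normal(0.0, std, size=shape)


class Adam:
    """Hand-written Adam optimizer with a plain L2 penalty folded into the gradient."""

    def __init__(self, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8, l2=1e-4):
        self.lr, self.beta1, self.beta2, self.eps, self.l2 = lr, beta1, beta2, eps, l2
        self.m = None  # first-moment (mean) estimate of the gradient
        self.v = None  # second-moment (uncentered variance) estimate
        self.t = 0     # step counter used for bias correction

    def step(self, w, grad):
        grad = grad + self.l2 * w  # gradient of the penalty (l2 / 2) * ||w||^2
        if self.m is None:
            self.m, self.v = np.zeros_like(w), np.zeros_like(w)
        self.t += 1
        self.m = self.beta1 * self.m + (1 - self.beta1) * grad
        self.v = self.beta2 * self.v + (1 - self.beta2) * grad**2
        m_hat = self.m / (1 - self.beta1**self.t)
        v_hat = self.v / (1 - self.beta2**self.t)
        return w - self.lr * m_hat / (np.sqrt(v_hat) + self.eps)


# Usage: minimize ||w - 3||^2 on a toy vector. A larger step size than the
# 0.001 in the text is used only so that the demo converges quickly.
w = init_weights((5,))
opt = Adam(lr=0.05, l2=0.0)
for _ in range(3000):
    w = opt.step(w, 2 * (w - 3.0))
print(np.round(w, 3))
```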

Our proposed model performs well on the FER-2013, EMOTIC, CK+, and AffectNet datasets. During training, we also apply oversampling to address data imbalance, especially for classes with few images. This method effectively alleviates the imbalance problem and promotes better generalization, allowing meaningful models to be trained across all categories of the dataset. The public availability of FER-2013 further increases access to facial emotion recognition data. However, some categories, such as neutral and happy, have more examples than others, and this imbalance between emotional categories poses a research challenge.
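Random oversampling, one common way to implement the oversampling mentioned above, duplicates randomly chosen samples of minority classes until every class matches the largest one. The text does not say which oversampling variant was used, so this NumPy sketch and the function name `random_oversample` are assumptions. It returns indices so that the same resampling can be applied to images and labels together.

```python
import numpy as np


def random_oversample(labels: np.ndarray, seed: int = 0) -> np.ndarray:
    """Return sample indices in which every class is as frequent as the largest one.

    Each original index appears once; minority classes get extra indices drawn
    with replacement from their own samples.
    """
    rng = np.random.default_rng(seed)
    classes, counts = np.unique(labels, return_counts=True)
    target = counts.max()
    picked = []
    for cls, count in zip(classes, counts):
        cls_idx = np.flatnonzero(labels == cls)
        # Draw (target - count) positions with replacement; empty for the majority class.
        extra = cls_idx[rng.integers(0, len(cls_idx), size=target - count)]
        picked.append(np.concatenate([cls_idx, extra]))
    return np.concatenate(picked)


# Usage: an imbalanced toy label vector (4 / 2 / 1 samples per class).
labels = np.array([0, 0, 0, 0, 1, 1, 2])
idx = random_oversample(labels)
print(np.bincount(labels[idx]))  # [4 4 4]
```

Oversampling only duplicates existing images, so it is usually paired with augmentation to reduce the risk of overfitting to the repeated minority samples.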

To validate the proposed model, we used a training set of 28,709 images. Of these, 3500 images were used for validation and 3589 images for testing. Our results provide strong evidence of the effectiveness of the proposed facial emotion recognition model on multiple datasets and demonstrate its potential for accurate and efficient facial emotion recognition. Future research should explore techniques that address emotion category imbalance in the dataset and further improve model performance. The performance of the proposed DenseNet model is evaluated on the various datasets and compared with benchmark results. First, the model was tested on the FER-2013 dataset and achieved an accuracy of 99.10%. Applying the model to the improved FER-2013 dataset raised the average accuracy further, to 99.27% in the simulated environment. The accuracy graph in Figure 23 clearly shows these improvements.

Next is the EMOTIC dataset. We used a training set of 120 images and a validation set of 23 images, and reserved 70 images for testing. The overall accuracy obtained on the EMOTIC dataset is 89.20%. However, as shown in Figure 24, the model trained on the augmented EMOTIC dataset improved significantly, achieving 98.01% accuracy compared with the base dataset. These results highlight the strong performance of the proposed DenseNet model on FER-2013, and the accuracy reached on the augmented EMOTIC dataset exceeds that achieved on the original dataset. The augmentation techniques applied to these datasets were effective in improving the model's accuracy and demonstrated its ability to generalize and classify facial emotions more accurately.
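The specific augmentation techniques are not listed in this section. As an illustration only, the sketch below applies three common transforms (random horizontal flip, a small translation, and brightness scaling) to grayscale images with values in [0, 1] and appends the augmented copies to the original set; the choice of transforms, their ranges, and the helper names `augment` and `augment_dataset` are assumptions, not the authors' pipeline.

```python
import numpy as np


def augment(image: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Return a randomly transformed copy of a grayscale image with values in [0, 1]."""
    out = image.copy()
    if rng.random() < 0.5:
        out = out[:, ::-1]  # horizontal flip
    dy, dx = rng.integers(-3, 4, size=2)
    out = np.roll(out, shift=(dy, dx), axis=(0, 1))  # small shift; pixels wrap at borders
    out = out * rng.uniform(0.8, 1.2)  # brightness scaling
    return np.clip(out, 0.0, 1.0)


def augment_dataset(images, labels, copies=2, seed=0):
    """Append `copies` augmented versions of every image; labels are repeated to match."""
    rng = np.random.default_rng(seed)
    aug_x = [augment(img, rng) for img in images for _ in range(copies)]
    aug_y = [lab for lab in labels for _ in range(copies)]
    return np.concatenate([images, np.stack(aug_x)]), np.concatenate([labels, aug_y])


# Usage with random 48x48 "images" (the FER-2013 image size) and 5 labels.
images = np.random.default_rng(1).random((5, 48, 48))
labels = np.arange(5)
X, y = augment_dataset(images, labels)
print(X.shape, y.shape)  # (15, 48, 48) (15,)
```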

Figure 25 visually compares the results on the proposed and reference datasets. The simulation environment used a training set of 120 images, and validation was performed on 23 images from the CK+ dataset, with 70 images reserved exclusively for testing. The accuracy on the original CK+ dataset reached 97.30%, while the proposed model achieved a higher accuracy of 98.53% on the extended CK+ dataset. These results show that the proposed model performs better on the extended data than on the standard benchmark dataset in a simulated environment. They further demonstrate the effectiveness of the proposed dataset in improving the performance of facial emotion recognition models, making it a valuable asset for smart medical systems.

We assess the performance of our proposed model on the most recent AffectNet dataset in a simulated setting, using 70 images for training and a subset of 23 AffectNet images for validation. The overall accuracy on the AffectNet dataset was 87.37%. After adjustments to the AffectNet dataset, however, the accuracy of the proposed model rose to 98.81%.

The results in Figure 26 offer strong evidence that our model attains higher accuracy on the adjusted datasets than on the existing ones. This evaluation emphasizes the advances made in FER and how critical it is to incorporate high-quality datasets to enhance model performance. The findings support the validity of the proposed dataset and, by highlighting the model's superior performance, advance research on intelligent healthcare systems.

4.4. Statistics and Visualization

Statistical analysis is complemented by visualizations that allow a comprehensive examination of model performance and of changes in the dataset. Model performance can be evaluated effectively by analyzing the distribution of degrees of freedom, the sum of squares, and the mean square, and by relating them to the F-value and p-value, in order to assess the validity and reliability of the proposed HFER framework. This paper proposes an emotion recognition system based on FER that can greatly improve human–computer interaction. Seven basic emotions derived from the acoustic processing of interpreted emotions were tested to create a feature set sufficient to distinguish the seven emotions. To assess the importance of each emotion feature, a statistical analysis was performed, and an artificial neural network was trained to classify emotions based on 30 input vectors providing information about human prosody throughout the sentence. This analysis is important for showing the statistical significance of the model and its performance on different datasets. This comprehensive approach highlights the potential of the proposed method to recognize facial emotions accurately, thus making a significant contribution to smart medical systems. Detailed statistical insights support the model's robustness and applicability to real-world scenarios, highlighting its value in improving patient care through accurate emotion recognition.

4.4.1. Classification Report

A major challenge in developing emotion recognition systems is extracting appropriate features that effectively represent different emotions. Since pattern recognition methods are rarely domain-independent, appropriate feature selection is believed to have a significant impact on classification performance. After the model is trained on the dataset, its classification performance is evaluated comprehensively using metrics such as the confusion matrix shown in Figure 27 and Cohen's kappa coefficient. The confusion matrix details the correct and incorrect predictions for all classes, giving insight into per-class model performance.
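For reference, a confusion matrix can be built by counting (true, predicted) label pairs. This NumPy sketch uses rows for true classes and columns for predicted classes, the same convention as scikit-learn's `confusion_matrix`; the function name and the toy labels are illustrative.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes):
    """Count (true, predicted) pairs: rows are true classes, columns are predictions."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    # np.add.at accumulates correctly even when the same (row, col) pair repeats.
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm


y_true = [0, 1, 2, 2, 1]
y_pred = [0, 2, 2, 2, 1]
cm = confusion_matrix(y_true, y_pred, n_classes=3)
print(cm)
print("correct predictions:", np.trace(cm), "of", cm.sum())
```

The diagonal holds the correct predictions for each class, and every off-diagonal entry (here, one class-1 sample predicted as class 2) shows which pair of emotions the model confuses.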

The HFER consists of two stages: (1) a front-end processing unit that extracts relevant features from the available (emotion) data and (2) a classifier that determines the dominant emotion. In fact, most current research on ER focuses on the first stage because it represents the interface between the problem domain and the classification methods; in contrast, mostly traditional classifiers have been used for the second stage. Additionally, precision, recall, and F1 scores are calculated for each category and displayed in the classification report. Cohen's kappa measures the agreement between actual and predicted labels, providing a reliable assessment of how effectively the model classifies facial emotions. This comprehensive evaluation framework highlights the model's capabilities and potential utility in developing intelligent healthcare systems through accurate emotion recognition.

A comprehensive classification report details the evaluation of the DenseNet model on the AffectNet dataset. The report includes precision, recall, F1 score, and support for each class. Precision measures the proportion of correct positive predictions among all predicted positives for a class, whereas recall measures the proportion of a class's actual samples that are correctly predicted. The F1 score is the harmonic mean of precision and recall, providing a balanced measure of overall model performance, and support is the number of true samples in each class. The DenseNet classifier was trained and tested on the AffectNet dataset, which consists of emotional images with corresponding category labels. This dataset includes seven main emotion categories: happy, sad, anger, disgust, fear, neutral, and surprise. To evaluate the classification system in general terms, we first measure its performance using metrics such as accuracy and loss. This detailed statistical analysis helps evaluate the robustness and effectiveness of the DenseNet model in accurately classifying facial emotions in the context of smart health systems.
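The per-class metrics in the classification report follow directly from a confusion matrix whose rows are true classes and whose columns are predicted classes. A NumPy sketch, with the function name `per_class_report` and the toy matrix as illustrative assumptions; scikit-learn's `classification_report` reports the same four quantities. Here a metric whose denominator is zero is reported as 0.

```python
import numpy as np


def per_class_report(cm):
    """Precision, recall, F1 and support per class from a confusion matrix.

    Rows of cm are true classes and columns are predicted classes.
    """
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm).copy()
    predicted = cm.sum(axis=0)  # how often each class was predicted
    support = cm.sum(axis=1)    # how many true samples each class has
    precision = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    recall = np.divide(tp, support, out=np.zeros_like(tp), where=support > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    return precision, recall, f1, support.astype(int)


cm = [[1, 0, 0], [0, 1, 1], [0, 0, 2]]
precision, recall, f1, support = per_class_report(cm)
for c in range(3):
    print(f"class {c}: P={precision[c]:.2f} R={recall[c]:.2f} F1={f1[c]:.2f} n={support[c]}")
```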

4.4.2. Cohen’s Kappa

This study applies Cohen's kappa coefficient. Emotions are used as stimuli to test a mixed emotion model composed of factually labeled emotion categories. Cohen's kappa coefficient, a measure of agreement between predicted and actual labels, was calculated as 1.0, indicating perfect agreement for this assessment. It is obtained by determining the degree of inter-rater reliability across the seven selected emotion categories: happy, fearful, sad, disgusted, neutral, surprised, and angry. These values indicate an excellent degree of agreement. The labeled emotion categories are selected based on the distribution of human emotions. Additionally, an analysis of variance (ANOVA) test was performed to assess whether there was a statistically significant difference between the predicted label mean and the actual label mean. This statistical analysis is important for understanding the performance and variability of models that predict human facial emotions in smart medical systems.
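Cohen's kappa compares the observed agreement p_o between true and predicted labels with the agreement p_e expected by chance from the label frequencies: κ = (p_o − p_e) / (1 − p_e). A NumPy sketch of that formula follows; the function name and toy labels are illustrative, and scikit-learn's `cohen_kappa_score` computes the same statistic.

```python
import numpy as np


def cohens_kappa(y_true, y_pred, n_classes):
    """Cohen's kappa between two label sequences over classes 0..n_classes-1."""
    cm = np.zeros((n_classes, n_classes))
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    n = cm.sum()
    p_o = np.trace(cm) / n                                # observed agreement
    p_e = (cm.sum(axis=0) * cm.sum(axis=1)).sum() / n**2  # agreement expected by chance
    if p_e == 1:
        return float("nan")  # undefined: both sequences use a single class
    return (p_o - p_e) / (1 - p_e)


kappa_toy = cohens_kappa([0, 1, 2, 2, 1], [0, 2, 2, 2, 1], n_classes=3)
kappa_perfect = cohens_kappa([0, 1, 2], [0, 1, 2], n_classes=3)
print(round(kappa_toy, 4), kappa_perfect)  # 0.6875 1.0
```

In the toy case, 4 of 5 labels agree (p_o = 0.8) but chance alone would give p_e = 0.36, so kappa is noticeably lower than raw accuracy. A kappa of exactly 1.0, as reported above, is obtained only when every prediction matches its label.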

4.4.3. ANOVA Results

We identify seven primary emotions (happiness, sorrow, fear, anger, surprise, disgust, and neutrality) using a feature selection framework, in which ANOVA is used to eliminate unimportant features.
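ANOVA-based feature selection scores each feature by the one-way F-statistic of its values across the k emotion classes, F = (SS_between / (k − 1)) / (SS_within / (n − k)), and keeps the highest-scoring features. The NumPy sketch below illustrates this under stated assumptions (the text gives neither a threshold nor the number of features kept, and the synthetic data are made up); scikit-learn's `f_classif` combined with `SelectKBest` implements the same score.

```python
import numpy as np


def anova_f_scores(X: np.ndarray, y: np.ndarray) -> np.ndarray:
    """One-way ANOVA F-statistic of every feature (column of X) across the classes in y."""
    classes = np.unique(y)
    n, k = X.shape[0], len(classes)
    grand_mean = X.mean(axis=0)
    ss_between = np.zeros(X.shape[1])
    ss_within = np.zeros(X.shape[1])
    for c in classes:
        Xc = X[y == c]
        mean_c = Xc.mean(axis=0)
        ss_between += len(Xc) * (mean_c - grand_mean) ** 2  # spread of class means
        ss_within += ((Xc - mean_c) ** 2).sum(axis=0)        # spread inside each class
    return (ss_between / (k - 1)) / (ss_within / (n - k))


def select_top_k(X: np.ndarray, y: np.ndarray, n_features: int) -> np.ndarray:
    """Indices of the n_features features with the largest F-scores, best first."""
    return np.argsort(anova_f_scores(X, y))[::-1][:n_features]


# Usage on synthetic data: only feature 2 depends on the class label.
rng = np.random.default_rng(0)
y = np.repeat(np.arange(3), 50)
X = rng.normal(size=(150, 4))
X[:, 2] += y
print(select_top_k(X, y, n_features=1))  # [2]
```

Because each feature is scored independently of any classifier, this is a filter-based method: it is cheap to compute but cannot detect features that are only useful in combination.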

Table 3 displays the results of the ANOVA test for the predictions generated by the DenseNet model on the AffectNet dataset. The purpose of this statistical test is to determine whether the actual and predicted label means differ in a statistically significant way. The table includes degrees of freedom (DF), sum of squares (SS), mean squares (MS), F-statistics (F), and p-values (PR > F). The tests underlying the table demonstrate the monotonicity and consistency of filter-based techniques, and Figure 28 shows that ANOVA is the most effective of the filter-based methods. The results reflect how the model translates the observed variance into predictive power. In the context of smart medical systems, this thorough assessment helps validate the model's efficacy in predicting human facial emotions.

Figure 28 shows a series of subplots depicting the results of the ANOVA tests performed on the DenseNet model with the AffectNet dataset. This visualization shows the distributions of degrees of freedom (df), sum of squares (sum_sq), and mean square (mean_sq), which are important for understanding the mean and variance of the data population. To assess the model's performance, one scatter plot relates the F-value to the mean square (mean_sq), and another relates the F-value to the p-value (PR(>F)) to determine statistical significance. Line plots show how the sum of squares (sum_sq) and mean square (mean_sq) change with degrees of freedom (df), revealing their dynamics under different dataset conditions. Together, the subplots give a comprehensive overview of the statistical relationships and characteristics of the dataset, which are important for verifying the predictive accuracy of HFER models in smart health applications. The figure also provides a comprehensive graph for dataset analysis, including a histogram, a distribution plot, and a line plot to visualize the distribution, relationships, and trends in the data.
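The ANOVA quantities discussed above (df, sum_sq, mean_sq, F) can be computed for the two-group comparison of actual versus predicted labels with a one-way ANOVA. This NumPy sketch is an illustration, not the authors' code: the output keys mirror the column names of the statsmodels `anova_lm` table, the function name is hypothetical, and the p-value is left to an F-distribution routine such as `scipy.stats.f.sf`.

```python
import numpy as np


def one_way_anova_table(*groups):
    """One-way ANOVA summary with df, sum_sq, mean_sq and F for two or more groups."""
    groups = [np.asarray(g, dtype=float) for g in groups]
    if len(groups) < 2:
        raise ValueError("need at least two groups")
    values = np.concatenate(groups)
    grand = values.mean()
    ss_b = sum(len(g) * (g.mean() - grand) ** 2 for g in groups)  # between-group SS
    ss_w = sum(((g - g.mean()) ** 2).sum() for g in groups)       # within-group SS
    df_b, df_w = len(groups) - 1, len(values) - len(groups)
    ms_b, ms_w = ss_b / df_b, ss_w / df_w
    f = ms_b / ms_w if ms_w > 0 else float("nan")
    # PR(>F) would be the upper tail of F(df_b, df_w), e.g. scipy.stats.f.sf(f, df_b, df_w).
    return {
        "between": {"df": df_b, "sum_sq": ss_b, "mean_sq": ms_b, "F": f},
        "residual": {"df": df_w, "sum_sq": ss_w, "mean_sq": ms_w},
    }


actual = [0, 1, 2, 3, 4]
predicted = [0, 1, 2, 3, 4]
table = one_way_anova_table(actual, predicted)
print(table["between"])
toy = one_way_anova_table([1, 2, 3], [4, 5, 6])
print(toy["between"]["F"])  # 13.5
```

As the first example shows, identical predictions give F = 0. A non-significant F only indicates that the two label means agree; per-sample agreement between predictions and labels is what Cohen's kappa measures.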

The statistical tests conducted, including ANOVA and descriptive statistics, aim to evaluate the variability and consistency in DenseNet’s performance across different datasets. This analysis provides insights into the model’s robustness and adaptability to varying data characteristics, such as dataset imbalance and emotion category diversity. For example, the ANOVA results indicate significant performance improvements when data augmentation strategies are employed, emphasizing the model’s capacity to adapt to augmented datasets while maintaining generalization. Descriptive statistics further confirm this by highlighting consistent accuracy and low variance across classes. While this study focuses on applying DenseNet to emotion recognition using visual data, future research could explore integrating multimodal inputs, such as audio signals and textual data. Combining visual data with these modalities could further enhance emotion recognition performance, particularly in scenarios requiring a contextual understanding of emotions.

Nonetheless, the present work provides a comprehensive analysis of DenseNet’s capabilities in visual emotion recognition, laying a strong foundation for potential multimodal expansions. The statistical evaluation confirms that DenseNet achieves robust performance across diverse datasets, with significant improvements observed when employing augmentation techniques. These findings not only validate the effectiveness of the model but also provide a quantitative basis for its application in real-world scenarios.