WLAM Attention: Plug-and-Play Wavelet Transform Linear Attention

Abstract

1. Introduction

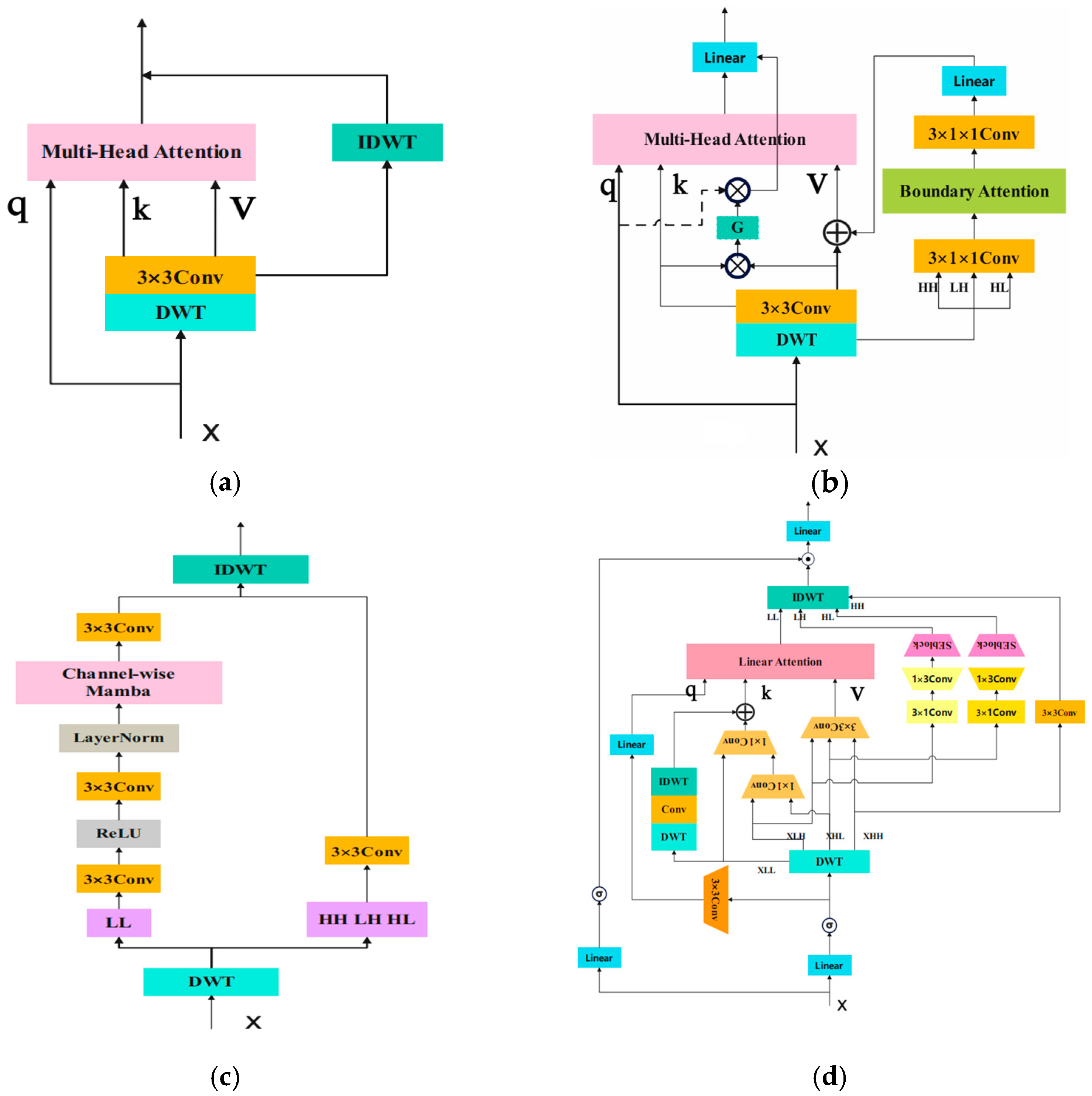

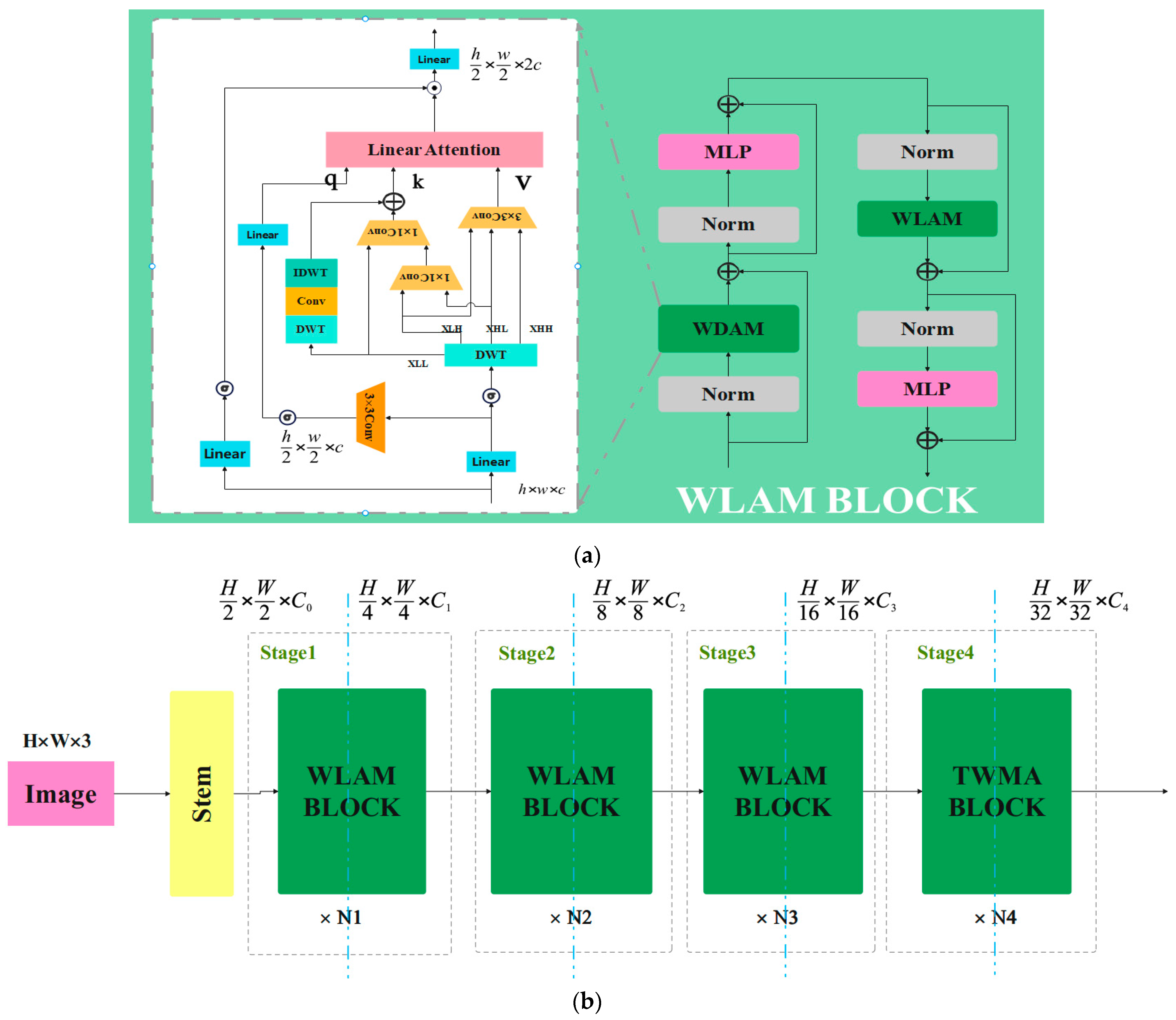

- Integration of Discrete Wavelet Transform (DWT) with Linear Attention: The proposed method incorporates the DWT into the attention mechanism by decomposing the input features for different attention constructs. Specifically, the input features are utilized to generate the attention queries (Q), low-frequency information is employed to generate the attention keys (K), and high-frequency information, processed through convolution, is used to generate the values (V). This approach effectively enhances the model’s ability to capture both local and global features, improving the perception of details and overall structure.

- Multi-Scale Processing of Wavelet Coefficients: The high-frequency wavelet coefficients are processed through convolutional layers with varying kernel sizes to extract features at different scales. This is complemented by the Squeeze-and-Excitation (SE) module, which enhances the selectivity of the features. An inverse discrete wavelet transform (IDWT) is utilized to reintegrate the multi-scale decomposed information back into the spatial domain, compensating for the limitations of linear attention in handling multi-scale and local information.

- Structural Mimicry of Mamba Network: The proposed wavelet linear attention incorporates elements from the Mamba network, including a forget gate and a modified block design. This adaptation retains the core advantages of Mamba, making it more suitable for visual tasks compared to the original Mamba model.

- Wavelet Downsampling Attention (WDSA) Module: By exploiting the lossless downsampling property of wavelet transforms, we introduce the WDSA module, which combines downsampling and attention mechanisms. This integration reduces the network size and computational load while minimizing information loss caused by downsampling.

2. Related Work

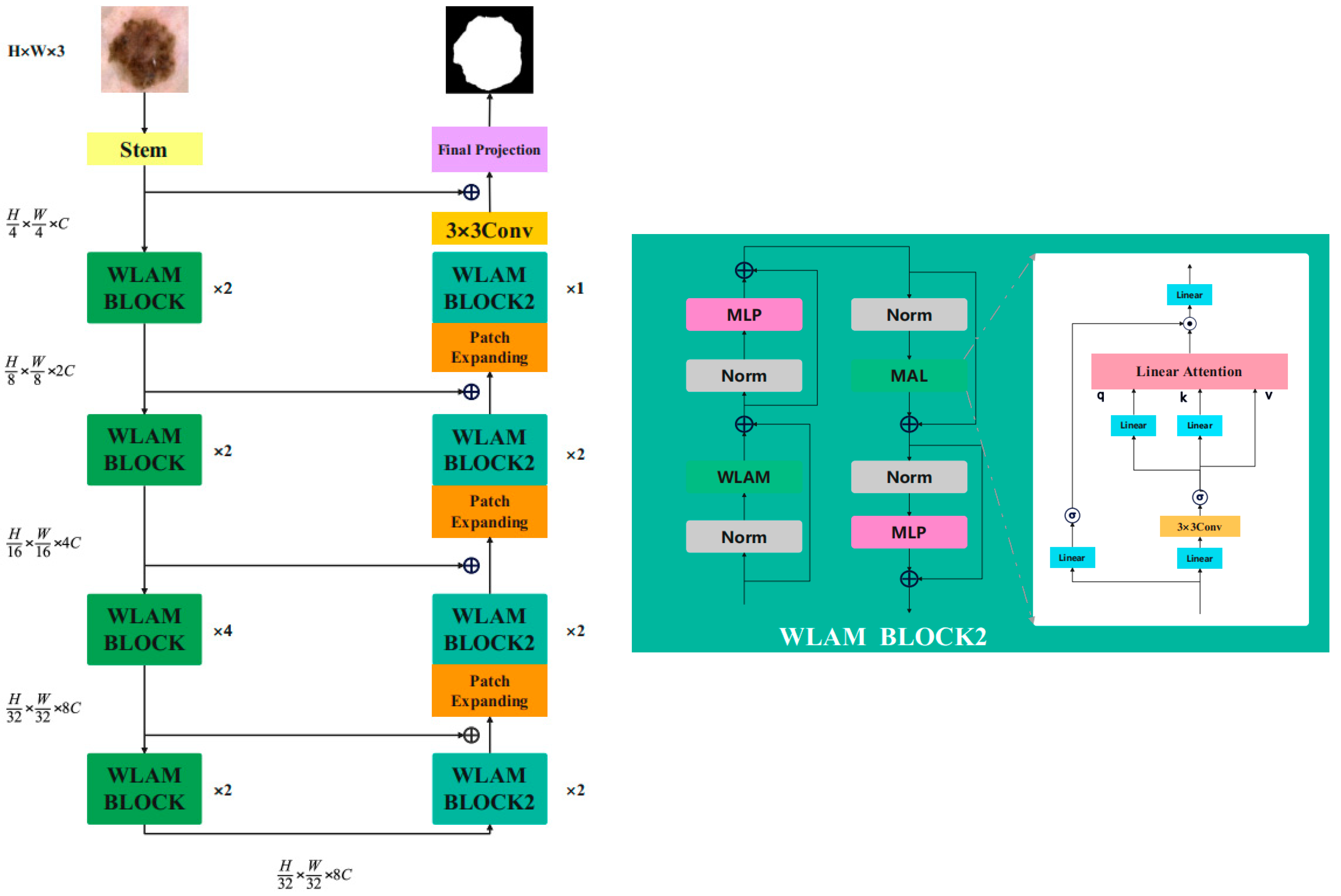

3. Our Work

3.1. Plug-and-Play WLAM Attention Module

- This sub-band preserves most of the image’s energy and structural information, making it the richest in content. Typically, the primary structures and general shapes of the image are contained within this sub-band.

- This sub-band represents the horizontal details of the image, capturing high-frequency components such as horizontal edges or textures. However, it contains relatively less information, primarily focusing on changes in the horizontal direction.

- This sub-band captures the vertical details of the image, including vertical edges and textures. However, it contains relatively less information, primarily focusing on variations in the vertical direction.

- This sub-band represents the finest details of the image, encompassing diagonal features such as diagonal edges. It contains high-frequency noise and very subtle details, resulting in the least amount of information.

- We emulate Mamba’s forget gate. The forget gate provides the model with two key attributes: local bias and positional information. In this paper, we replace the forget gate with RoPE, modifying it to integrate positional information into the vector representation of the sum, allowing the attention scores to inherently carry positional relationships. This modification offers a 0.8% accuracy gain while only reducing throughput by 3%.

- Inspired by Mamba, we incorporate a learnable shortcut mechanism into the linear attention framework. This enhancement results in an accuracy improvement of 0.2%.

3.2. Lossless Downsampling Attention Module

3.3. Macro Architecture Design

4. Experiments

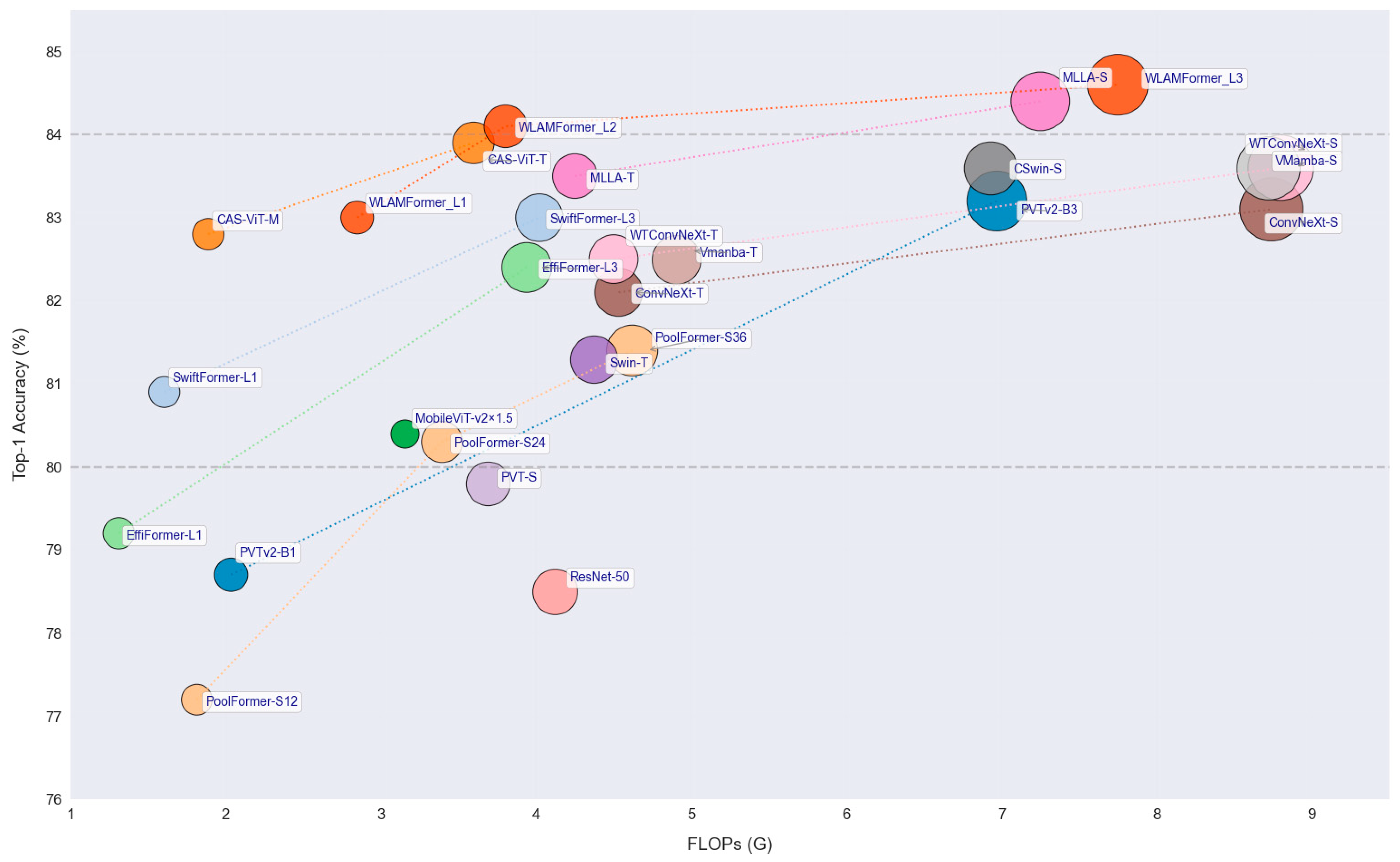

4.1. Image Classification

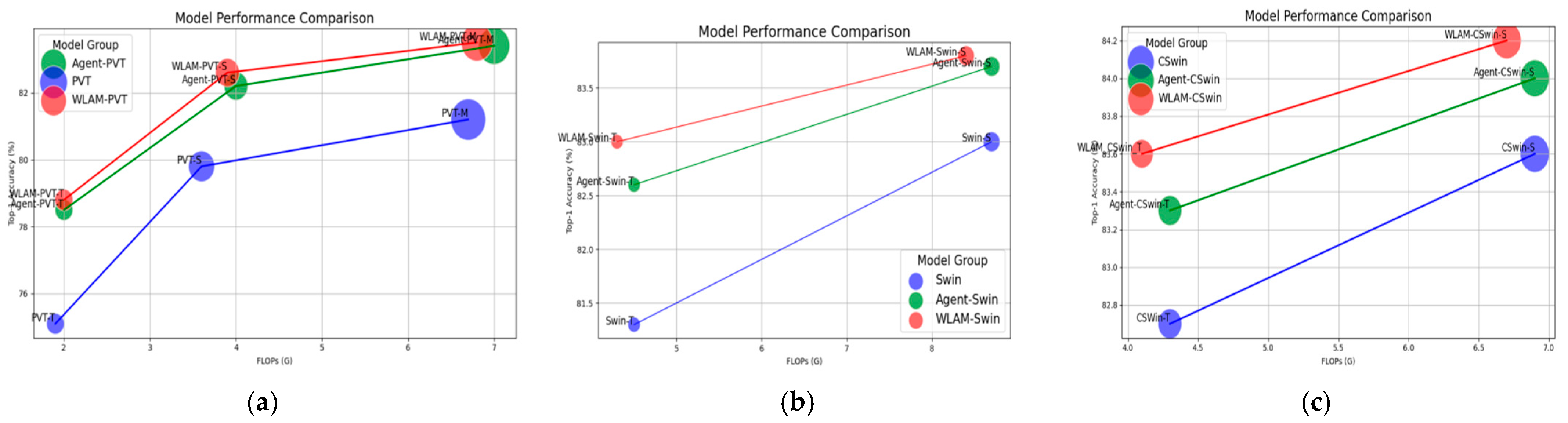

- Performance Improvement on the PVT Architecture

- Performance Improvement on the Swin Architecture

- Performance Improvement on the CSwin Architecture

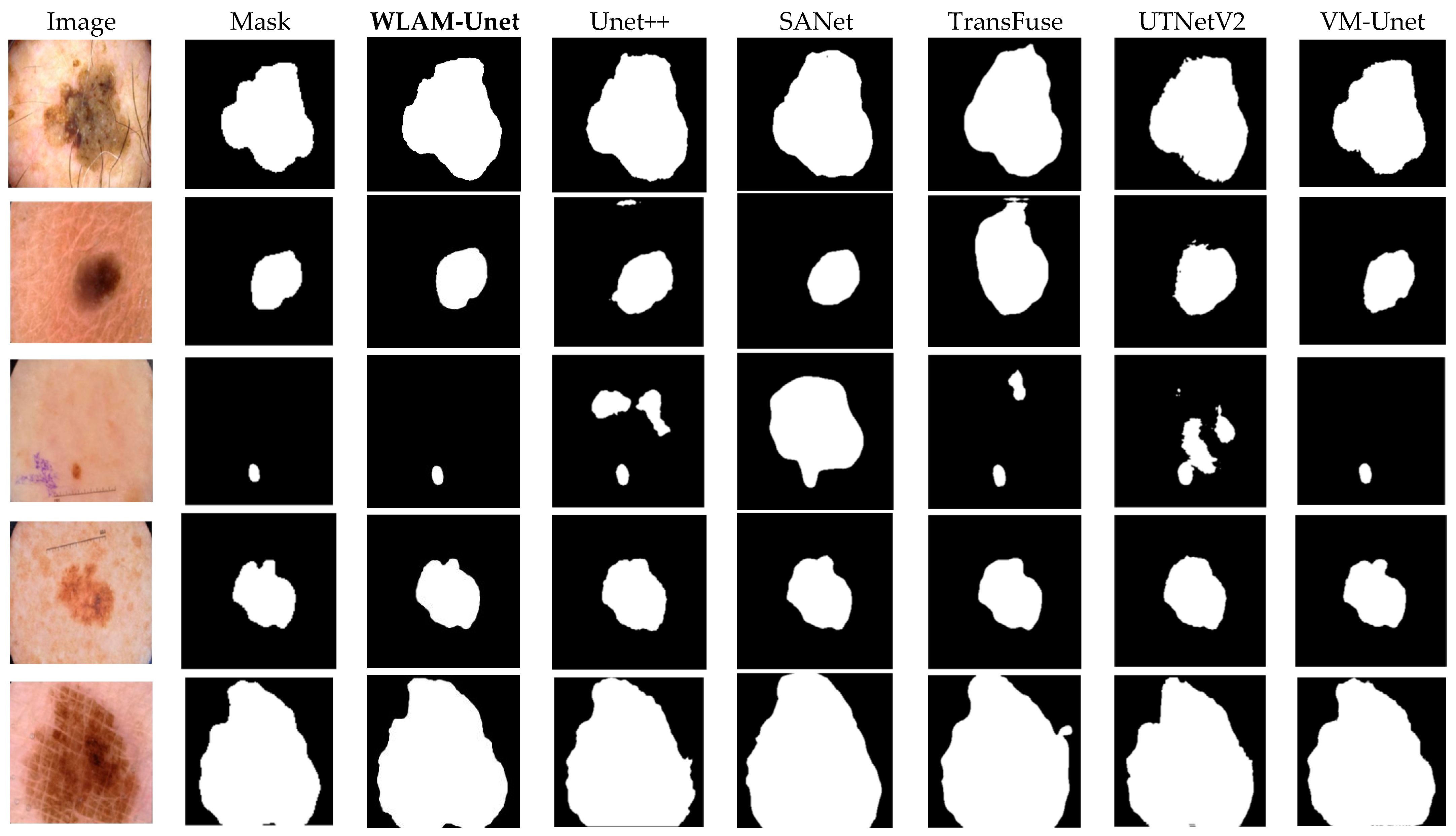

4.2. Image Segmentation

4.3. Ablation Study

- We removed the structure that mimics Mamba, while keeping all other components unchanged.

- We discontinued the use of the structure that imitates MobileNetV3 for processing high-frequency sub-bands; instead, we employed a single 3 × 3 convolution for high-frequency sub-bands, similar to the approach outlined in [36].

- We eliminated the multi-resolution input from the attention module, following the methodology of [36], and solely utilized the low-frequency components as inputs for linear attention.

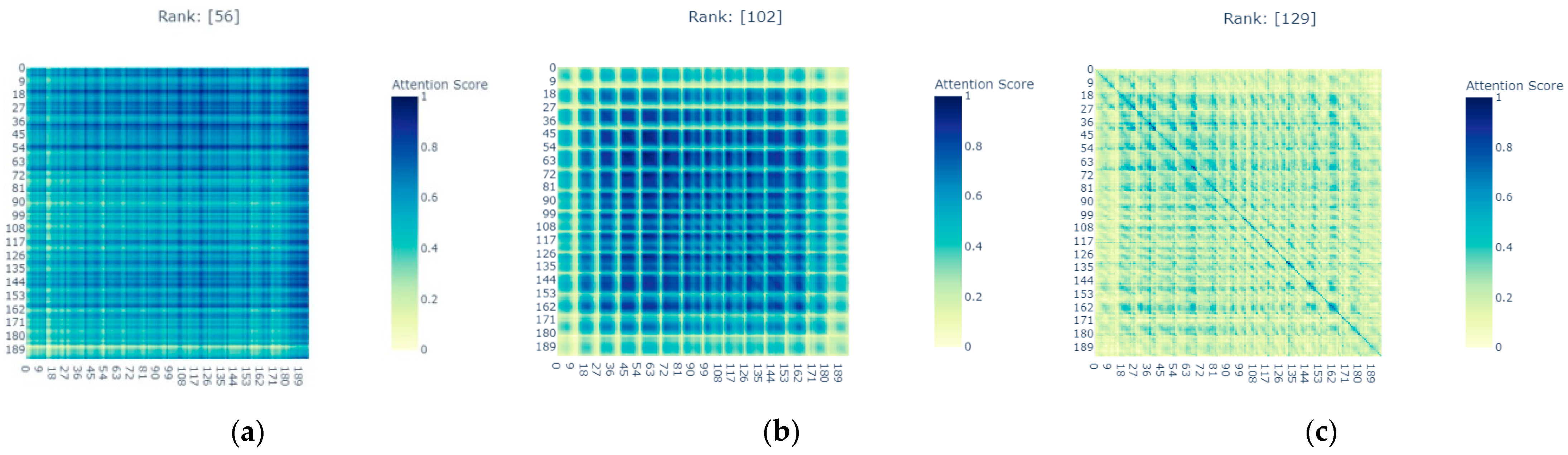

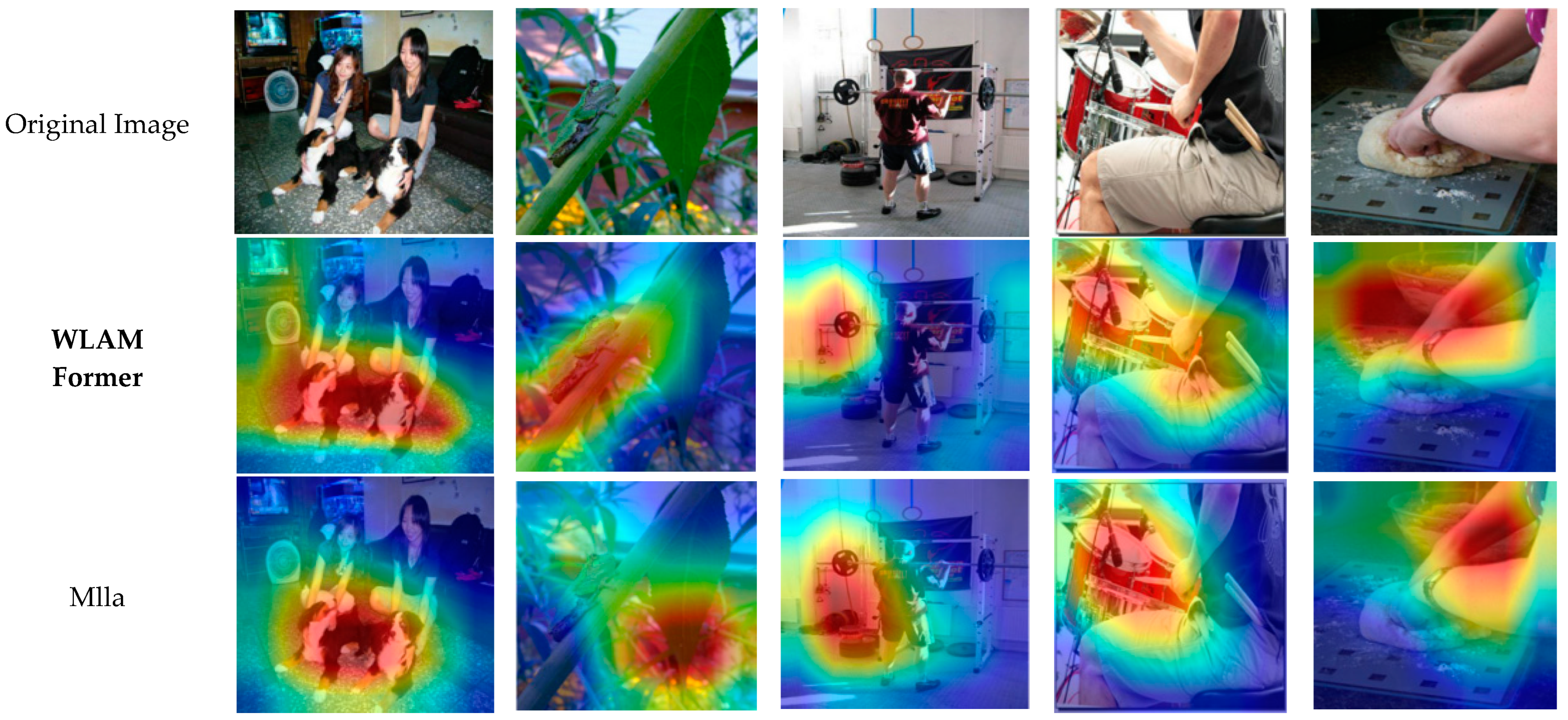

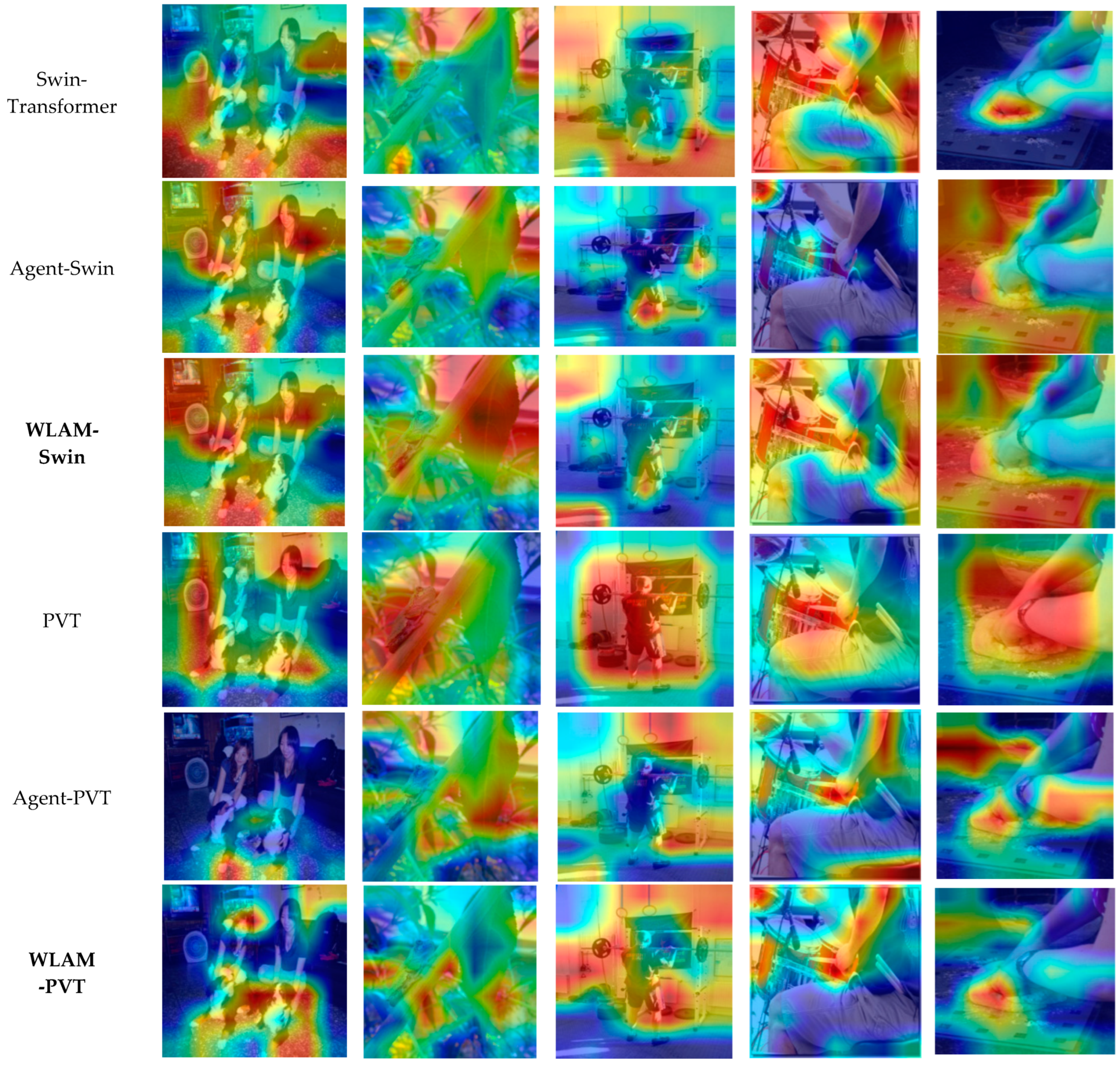

4.4. Network Visualization

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

| Stage | Output | WLAM_Swin_T | WLAM_Swin_S | WLAM_Swin_B | |||

|---|---|---|---|---|---|---|---|

| WLAM_Block | Swin_Blocck | WLAM_Block | Swin_Blocck | WLAM_Block | Swin_Blocck | ||

| 1 | 56 × 56 | Concat 4 × 4, 96, LN | Concat 4 × 4, 96, LN | Concat 4 × 4128, LN | |||

| 2 | 28 × 28 | Concat 4 × 4192, LN | Concat 4 × 4192, LN | Concat 4 × 4256, LN | |||

| 3 | 14 × 14 | Concat 4 × 4384, LN | Concat 4 × 4384, LN | Concat 4 × 4512, LN | |||

| × 3 | × 3 | × 9 | × 9 | × 9 | × 9 | ||

| 4 | 7 × 7 | Concat 4 × 4768, LN | Concat 4 × 4768, LN | Concat 4 × 41024, LN | |||

| None | × 2 | None | × 2 | None | × 2 | ||

| Stage | Output | WLAM_CSwin_T | WLAM_CSwin_S | WLAM_CSwin_B | |||

| WLAM_Block | CSwin_Blocck | WLAM_Block | CSwin_Blocck | WLAM_Block | CSwin_Blocck | ||

| 1 | 56 × 56 | Concat 7 × 7, stride = 4, 64, LN | Concat 7 × 7, stride = 4, 64, LN | Concat 7 × 7, stride = 4, 96, LN | |||

| 2 | 28 × 28 | Concat 7 × 7, stride = 4128, LN | Concat 7 × 7, stride = 4128, LN | Concat 7 × 7, stride = 4192, LN | |||

| 3 | 14 × 14 | Concat 7 × 7, stride = 4256, LN | Concat 7 × 7, stride = 4256, LN | Concat 7 × 7, stride = 4384, LN | |||

| × 9 | × 9 | × 15 | × 14 | × 15 | × 14 | ||

| 4 | 7 × 7 | Concat 7 × 7, stride = 4512, LN | Concat 7 × 7, stride = 4512, LN | Concat 7 × 7, stride = 4768, LN | |||

| None | × 1 | None | × 2 | None | × 2 | ||

Appendix B

| Stage | Output | WLAMFormer_L1 | WLAMFormer_L2 | WLAMFormer_L3 | |||

|---|---|---|---|---|---|---|---|

| WLAM_Block | Liner_Blocck | WLAM_Block | Liner_Blocck | WLAM_Block | Liner_Blocck | ||

| 1 | 56 × 56 | stem, 32 | stem, 32 | stem, 42 | |||

| ownSampling, 64 | ownSampling, 64 | ownSampling, 84 | |||||

| None | None | None | |||||

| 2 | 28 × 28 | ownSampling, 128 | ownSampling, 128 | ownSampling, 168 | |||

| None | None | None | |||||

| 3 | 14 × 14 | ownSampling, 256 | ownSampling, 256 | ownSampling, 336 | |||

| × 3 | × 2 | × 4 | × 3 | × 6 | × 5 | ||

| 4 | 7 × 7 | ownSampling, 512 | ownSampling, 512 | ownSampling, 672 | |||

| None | × 2 | None | × 3 | None | × 3 | ||

Appendix C

References

- Alexey, D. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929. [Google Scholar]

- Zhao, S.; Yang, J.; Wu, N.; Wu, Y.; Zhang, T. Pyramid vision transformer: A versatile backbone for dense prediction without convolutions. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 568–578. [Google Scholar]

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 10012–10022. [Google Scholar]

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Guo, B. Swin transformer v2: Scaling up capacity and resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 12009–12019. [Google Scholar]

- Xia, Z.; Pan, X.; Song, S.; Li, L.E.; Huang, G. Vision transformer with deformable attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 4794–4803. [Google Scholar]

- Zhou, H.; Zhang, Y.; Guo, H.; Liu, C.; Zhang, X.; Xu, J.; Gu, J. Neural architecture transformer. arXiv 2021, arXiv:2106.04247. [Google Scholar]

- Zhu, L.; Wang, X.; Ke, Z.; Zhang, W.; Lau, R.W. Biformer: Vision transformer with bi-level routing attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 10323–10333. [Google Scholar]

- Wang, S.; Li, B.Z.; Khabsa, M.; Fang, H.; Ma, H. Linformer: Self-attention with linear complexity. arXiv 2020, arXiv:2006.04768. [Google Scholar]

- Qin, Z.; Sun, W.; Deng, H.; Li, D.; Wei, Y.; Lv, B.; Zhong, Y. cosformer: Rethinking softmax in attention. arXiv 2022, arXiv:2202.08791. [Google Scholar] [CrossRef]

- Ma, X.; Kong, X.; Wang, S.; Zhou, C.; May, J.; Ma, H.; Zettlemoyer, L. Luna: Linear unified nested attention. Adv. Neural Inf. Process. Syst. 2021, 34, 2441–2453. [Google Scholar]

- Shen, Z.; Zhang, M.; Zhao, H.; Yi, S.; Li, H. Efficient attention: Attention with linear complexities. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Virtual, 5–9 January 2021; pp. 3531–3539. [Google Scholar]

- Gao, Y.; Chen, Y.; Wang, K. SOFT: A simple and efficient attention mechanism. arXiv 2021, arXiv:2104.02544. [Google Scholar]

- Xiong, Y.; Zeng, Z.; Chakraborty, R.; Tan, M.; Fung, G.; Li, Y.; Singh, V. Nyströmformer: A nyström-based algorithm for approximating self-attention. Proc. AAAI Conf. Artif. Intell. 2021, 35, 14138–14148. [Google Scholar] [CrossRef]

- You, H.; Xiong, Y.; Dai, X.; Wu, B.; Zhang, P.; Fan, H.; Vajda, P.; Lin, Y. Castling-vit: Compressing self-attention via switching towards linear-angular attention at vision transformer inference. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 14431–14442. [Google Scholar]

- Han, D.; Pan, X.; Han, Y.; Song, S.; Huang, G. Flatten transformer: Vision transformer using focused linear attention. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 5961–5971. [Google Scholar]

- Han, D.; Ye, T.; Han, Y.; Xia, Z.; Pan, S.; Wan, P.; Huang, G. Agent attention. In European Conference on Computer Vision 2024; Springer Nature: Cham, Switzerland, 2024; pp. 124–140. [Google Scholar]

- Xu, Z.; Wu, D.; Yu, C.; Chu, X.; Sang, N.; Gao, C. SCTNet: Single-Branch CNN with Transformer Semantic Information for Real-Time Segmentation. Proc. AAAI Conf. Artif. Intell. 2024, 38, 6378–6386. [Google Scholar] [CrossRef]

- Jiang, J.; Zhang, P.; Luo, Y.; Li, C.; Kim, J.B.; Zhang, K.; Kim, S. AdaMCT: Adaptive mixture of CNN-transformer for sequential recommendation. In Proceedings of the 32nd ACM International Conference on Information and Knowledge Management, Birmingham, UK, 21–25 October 2023; pp. 976–986. [Google Scholar] [CrossRef]

- Lou, M.; Zhou, H.Y.; Yang, S.; Yu, Y. TransXNet: Learning both global and local dynamics with a dual dynamic token mixer for visual recognition. arXiv 2023, arXiv:2310.19380. [Google Scholar] [CrossRef]

- Yoo, J.; Kim, T.; Lee, S.; Kim, S.H.; Lee, H.; Kim, T.H. Enriched cnn-transformer feature aggregation networks for super-resolution. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 2–7 January 2023; pp. 4956–4965. [Google Scholar]

- Maaz, M.; Shaker, A.; Cholakkal, H.; Khan, S.; Zamir, S.W.; Anwer, R.M.; Shahbaz Khan, F. Edgenext: Efficiently amalgamated cnn-transformer architecture for mobile vision applications. In European Conference on Computer Vision 2022; Springer Nature: Cham, Switzerland, 2022; pp. 3–20. [Google Scholar]

- Wu, H.; Xiao, B.; Codella, N.; Liu, M.; Dai, X.; Yuan, L.; Zhang, L. Cvt: Introducing convolutions to vision transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 22–31. [Google Scholar]

- Mehta, S.; Rastegari, M. Separable self-attention for mobile vision transformers. arXiv 2022, arXiv:2206.02680. [Google Scholar] [CrossRef]

- Wadekar, S.N.; Chaurasia, A. MobileViTv3: Mobile-friendly vision transformer with simple and effective fusion of local, global, and input features. arXiv 2022, arXiv:2209.15159. [Google Scholar] [CrossRef]

- Han, D.; Wang, Z.; Xia, Z.; Han, Y.; Pu, Y.; Ge, C.; Huang, G. Demystifying Mamba in Vision: A Linear Attention Perspective. arXiv 2024, arXiv:2405.16605. [Google Scholar] [CrossRef]

- Bae, W.; Yoo, J.; Chul, Y.J. Beyond deep residual learning for image restoration: Persistent homology-guided manifold simplification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 145–153. [Google Scholar]

- Fujieda, S.; Takayama, K.; Hachisuka, T. Wavelet convolutional neural networks. arXiv 2018, arXiv:1805.08620. [Google Scholar] [CrossRef]

- Yao, T.; Pan, Y.; Li, Y.; Ngo, C.W.; Mei, T. Wave-vit: Unifying wavelet and transformers for visual representation learning. In European Conference on Computer Vision 2022; Springer Nature: Cham, Switzerland, 2022; pp. 328–345. [Google Scholar]

- Li, J.; Cheng, B.; Chen, Y.; Gao, G.; Shi, J.; Zeng, T. EWT: Efficient Wavelet-Transformer for single image denoising. Neural Netw. 2024, 177, 106378. [Google Scholar] [CrossRef] [PubMed]

- Azad, R.; Kazerouni, A.; Sulaiman, A.; Bozorgpour, A.; Aghdam, E.K.; Jose, A.; Merhof, D. Unlocking fine-grained details with wavelet-based high-frequency enhancement in transformers. In International Workshop on Machine Learning in Medical Imaging; Springer Nature: Cham, Switzerland, 2023; pp. 207–216. [Google Scholar]

- Gao, X.; Qiu, T.; Zhang, X.; Bai, H.; Liu, K.; Huang, X.; Liu, H. Efficient multi-scale network with learnable discrete wavelet transform for blind motion deblurring. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 2733–2742. [Google Scholar]

- Roy, A.; Sarkar, S.; Ghosal, S.; Kaplun, D.; Lyanova, A.; Sarkar, R. A wavelet guided attention module for skin cancer classification with gradient-based feature fusion. In Proceedings of the 2024 IEEE International Symposium on Biomedical Imaging (ISBI), Athens, Greece, 27–30 May 2024; pp. 1–4. [Google Scholar]

- Tan, J.; Pei, S.; Qin, W.; Fu, B.; Li, X.; Huang, L. Wavelet-based Mamba with Fourier Adjustment for Low-light Image Enhancement. In Proceedings of the Asian Conference on Computer Vision, Hanoi, Vietnam, 8–12 December 2024; pp. 3449–3464. [Google Scholar]

- Finder, S.E.; Amoyal, R.; Treister, E.; Freifeld, O. Wavelet convolutions for large receptive fields. In European Conference on Computer Vision 2024; Springer Nature: Cham, Switzerland, 2024; pp. 363–380. [Google Scholar]

- Loshchilov, I.; Hutter, F. Sgdr: Stochastic gradient descent with warm restarts. In Proceedings of the International Conference on Learning Representations, San Juan, Puerto Rico, 2–4 May 2016. [Google Scholar]

- Koonce, B.; Koonce, B.E. Convolutional Neural Networks with Swift for Tensorflow: Image Recognition and Dataset Categorization; Apress: New York, NY, USA, 2021; pp. 109–123. [Google Scholar]

- Zhu, L.; Liao, B.; Zhang, Q.; Wang, X.; Liu, W.; Wang, X. Vision mamba: Efficient visual representation learning with bidirectional state space model. arXiv 2024, arXiv:2401.09417. [Google Scholar] [CrossRef]

- Dong, X.; Bao, J.; Chen, D.; Zhang, W.; Yu, N.; Yuan, L.; Guo, B. Cswin transformer: A general vision transformer backbone with cross-shaped windows. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 12124–12134. [Google Scholar]

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer: Cham, Switzerland, 2015; pp. 234–241. [Google Scholar]

- Wang, W.; Xie, E.; Li, X.; Fan, D.P.; Song, K.; Liang, D.; Shao, L. Pvt v2: Improved baselines with pyramid vision transformer. Comput. Vis. Media 2022, 8, 415–424. [Google Scholar] [CrossRef]

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Li, F.F. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255. [Google Scholar]

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826. [Google Scholar]

- Cubuk, E.D.; Zoph, B.; Shlens, J.; Le, Q.V. Randaugment: Practical automated data augmentation with a reduced search space. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 702–703. [Google Scholar]

- Shaker, A.; Maaz, M.; Rasheed, H.; Khan, S.; Yang, M.H.; Khan, F.S. Swiftformer: Efficient additive attention for transformer-based real-time mobile vision applications. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 17425–17436. [Google Scholar]

- Zhang, T.; Li, L.; Zhou, Y.; Liu, W.; Qian, C.; Ji, X. Cas-vit: Convolutional additive self-attention vision transformers for efficient mobile applications. arXiv 2024, arXiv:2408.03703. [Google Scholar]

- Yu, W.; Luo, M.; Zhou, P.; Si, C.; Zhou, Y.; Wang, X.; Yan, S. Metaformer is actually what you need for vision. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 10819–10829. [Google Scholar]

- Woo, S.; Debnath, S.; Hu, R.; Chen, X.; Liu, Z.; Kweon, I.S. Convnext v2: Co-designing and scaling convnets with masked autoencoders. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 16133–16142. [Google Scholar]

- Huang, T.; Pei, X.; You, S.; Wang, F.; Qian, C.; Xu, C. Localmamba: Visual state space model with windowed selective scan. arXiv 2024, arXiv:2403.09338. [Google Scholar]

- Liu, Y.; Tian, J. Probabilistic Attention Map: A Probabilistic Attention Mechanism for Convolutional Neural Networks. Sensors 2024, 24, 8187. [Google Scholar] [CrossRef]

- Liu, X.; Peng, H.; Zheng, N.; Yang, Y.; Hu, H.; Yuan, Y. Efficientvit:Memory efficient vision transformer with cascaded group attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 14420–14430. [Google Scholar]

- Krizhevsky, A.; Hinton, D. Learning Multiple Layers of Features from Tiny Images; Technical Report; University of Toronto: Toronto, ON, Canada, 2009. [Google Scholar]

- Pan, J.; Bulat, A.; Tan, F.; Zhu, X.; Dudziak, L.; Li, H.; Tzimiropoulos, G.; Martinez, B. Edgevits: Competing light-weight cnns on mobile devices with vision transformers. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2022; pp. 294–311. [Google Scholar]

- Available online: https://challenge.isic-archive.com/data/#2017 (accessed on 15 January 2025).

- Available online: https://challenge.isic-archive.com/data/#2018 (accessed on 15 January 2025).

- Gao, Y.; Zhou, M.; Liu, D.; Metaxas, D. A multi-scale transformer for medical image segmentation: Architectures, model efficiency, and benchmarks. arXiv 2022, arXiv:2203.00131. [Google Scholar]

- Zhang, Y.; Liu, H.; Hu, Q. Transfuse: Fusing transformers and cnns for medical image segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer: Cham, Switzerland, 2021; pp. 14–24. [Google Scholar]

- Ruan, J.; Xiang, S.; Xie, M.; Liu, T.; Fu, Y. Malunet: A multi-attention and light-weight unet for skin lesion segmentation. In Proceedings of the 2022 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Las Vegas, NV, USA, 6–8 December 2022; pp. 1150–1156. [Google Scholar]

- Ruan, J.; Li, J.; Xiang, S. Vm-unet: Vision mamba unet for medical image segmentation. arXiv 2024, arXiv:2402.02491. [Google Scholar]

- Zhou, Z.; Siddiquee, M.M.R.; Tajbakhsh, N.; Liang, J. Unet++: A nested u-net architecture for medical image segmentation. In Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support; Springer: Cham, Switzerland, 2018; pp. 3–11. [Google Scholar]

- Oktay, O.; Schlemper, J.; Folgoc, L.L.; Lee, M.; Heinrich, M.; Misawa, K.; Mori, K.; McDonagh, S.; Hammerla, N.Y.; Kainz, B.; et al. Attention u-net: Learning where to look for the pancreas. arXiv 2018, arXiv:1804.03999. [Google Scholar]

- Wei, J.; Hu, Y.; Zhang, R.; Li, Z.; Zhou, S.K.; Cui, S. Shallow attention network for polyp segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer: Cham, Switzerland, 2021; pp. 699–708. [Google Scholar]

| Model | Par. ↓ (M) | Flops ↓ (G) | Throughput (A100) | Type | Top-1 ↑ |

|---|---|---|---|---|---|

| PVTv2-B1 [40] | 14.02 | 2.034 | 1945 | Transformer | 78.7 |

| SwiftFormer-L1 [44] | 12.05 | 1.604 | 5051 | Hybrid | 80.9 |

| CAS_ViT_M [45] | 12.42 | 1.887 | 2254 | Hybrid | 82.8 |

| PoolFormer-S12 [46] | 11.9 | 1.813 | 3327 | Pool | 77.2 |

| MobileViT-v2 × 1.5 [23] | 10.0 | 3.151 | 2356 | Hybrid | 80.4 |

| EffiFormer-L1 [11] | 12.28 | 1.310 | 5046 | Hybrid | 79.2 |

| WLAMFormer_L1 | 13.5 | 2.847 | 2296 | DWT-Transformer | 83.0 |

| ResNet-50 | 25.5 | 4.123 | 4835 | ConvNet | 78.5 |

| PoolFormer-S24 [46] | 21.35 | 3.394 | 2156 | Pool | 80.3 |

| PoolFormer-S36 [46] | 32.80 | 4.620 | 1114 | Pool | 81.4 |

| SwiftFormer-L3 [44] | 28.48 | 4.021 | 2896 | Hybrid | 83.0 |

| Swin-T [3] | 28.27 | 4.372 | 1246 | Transformer | 81.3 |

| PVT-S [2] | 24.10 | 3.687 | 1156 | Transformer | 79.8 |

| ConvNeXt-T [47] | 29.1 | 4.532 | 3235 | ConvNet | 82.1 |

| CAS-ViT-T [45] | 21.76 | 3.597 | 1084 | Hybrid | 83.9 |

| EffiFormer-L3 [11] | 31.3 | 3.940 | 2691 | Hybrid | 82.4 |

| Vmanba-T [48] | 30.2 | 4.902 | 1686 | Mamba | 82.5 |

| MLLA-T [25] | 25.12 | 4.250 | 1009 | mlla | 83.5 |

| WTConvNeXt-T [34] | 30 M | 4.5 G | 2514 | DWT-ConvNet | 82.5 |

| WLAMFormer_L2 | 25.07 | 3.803 | 1280 | DWT-Transformer | 84.1 |

| ConvNeXt-S [47] | 50.2 | 8.74 | 1255 | ConvNet | 83.1 |

| PVTv2-B3 [40] | 45.2 | 6.97 | 403 | Transformer | 83.2 |

| CSwin-S [38] | 35.4 | 6.93 | 625 | Transformer | 83.6 |

| VMamba-S [48] | 50.4 | 8.72 | 877 | Mamba | 83.6 |

| MLLA-S [25] | 47.6 | 8.13 | 851 | mlla | 84.4 |

| WTConvNeXt-S [34] | 54.2 | 8.8 G | 1045 | DWT-ConvNet | 83.6 |

| WLAMFormer_L3 | 46.6 | 7.75 | 861 | DWT-Transformer | 84.6 |

| Model | Par. ↓ (M) | Flops ↓ (G) | REs | Top-1 ↑ |

|---|---|---|---|---|

| PVT-T | 11.2 | 1.9 | 224 × 224 | 75.1 |

| Agent-PVT-T | 11.6 | 2.0 | 224 × 224 | 78.5 |

| WLAM-PVT-T | 11.8 | 2.0 | 224 × 224 | 78.8 |

| PVT-S | 24.5 | 3.6 | 224 × 224 | 79.8 |

| Agent-PVT-S | 20.6 | 4.0 | 224 × 224 | 82.2 |

| WLAM-PVT-S | 20.8 | 3.9 | 224 × 224 | 82.6 |

| PVT-M | 44.2 | 6.7 | 224 × 224 | 81.2 |

| Agent-PVT-M | 35.9 | 7.0 | 224 × 224 | 83.4 |

| WLAM-PVT-M | 35.6 | 6.8 | 224 × 224 | 83.5 |

| Swin-T | 29 | 4.5 | 224 × 224 | 81.3 |

| Agent-Swin-T | 29 | 4.5 | 224 × 224 | 82.6 |

| WLAM-Swin-T | 27 | 4.3 | 224 × 224 | 83.0 |

| Swin-S | 50 | 8.7 | 224 × 224 | 83.0 |

| Agent-Swin-S | 50 | 8.7 | 224 × 224 | 83.7 |

| WLAM-Swin-S | 49 | 8.4 | 224 × 224 | 83.8 |

| CSWin-T | 23 | 4.3 | 224 × 224 | 82.7 |

| Agent-CSwin-T | 23 | 4.3 | 224 × 224 | 83.3 |

| WLAM_CSwin_T | 21 | 4.1 | 224 × 224 | 83.6 |

| CSwin-S | 35 | 6.9 | 224 × 224 | 83.6 |

| Agent-CSwin-S | 35 | 6.9 | 224 × 224 | 84.0 |

| WLAM-CSwin-S | 34 | 6.7 | 224 × 224 | 84.2 |

| Model | Par. ↓ (M) | Flops ↓ (G) | Type | Top-1 ↑ (Cifar10) | Top-1 ↑ (Cifar100) |

|---|---|---|---|---|---|

| MobileViT-v2 × 1.5 | 10.0 | 3.151 | Hybrid | 96.2 | 79.5 |

| EfficientFormer-L1 [50] | 12.3 | 2.4 | Hybrid | 97.5 | 83.2 |

| EdgeViT-S [52] | 11.1 | 1.1 | Transformer | 97.8 | 81.2 |

| EdgeViT-M [52] | 13.6 | 2.3 | Transformer | 98.2 | 82.7 |

| PVT-Tiny | 11.2 | 1.9 | Transformer | 95.8 | 77.6 |

| WLAM-PVT-T | 11.8 | 2.0 | DWT-Transformer | 96.9 | 82.1 |

| WLAMFormer_L1 | 13.5 | 2.8 | DWT-Transformer | 97.7 | 84.5 |

| PVT-Small | 24.5 | 3.8 | Transformer | 96.5 | 79.8 |

| WLAM-PVT-S | 20.8 | 3.9 | DWT-Transformer | 98.4 | 84.8 |

| PoolFormer-S24 | 21 | 3.5 | Pool | 96.8 | 81.8 |

| EfficientFormer-L3 [50] | 31.9 | 5.3 | Hybrid | 98.2 | 85.7 |

| ConvNeXt | 28 | 4.5 | ConvNet | 98.7 | 87.5 |

| ConvNeXt V2-Tiny | 28 | 4.5 | ConvNet | 99.0 | 90.0 |

| EfficientNetV2-S | 24 | 8.8 | ConvNet | 98.1 | 90.3 |

| WLAMFormer_L2 | 23 | 3.8 | DWT-Transformer | 98.2 | 87.1 |

| Dataset | Model | mIoU (%)↑ | DSC (%)↑ | Acc (%)↑ | Spe (%)↑ | Sen (%)↑ |

|---|---|---|---|---|---|---|

| ISIC17 | UNet [39] | 79.98 | 86.99 | 95.65 | 97.43 | 86.82 |

| UTNetV2 [55] | 77.35 | 87.23 | 95.84 | 98.05 | 84.85 | |

| TransFuse [56] | 79.21 | 88.40 | 96.17 | 97.98 | 87.14 | |

| MALUNet [57] | 78.78 | 88.13 | 96.18 | 98.47 | 84.78 | |

| VM-UNet [58] | 80.23 | 89.03 | 96.29 | 97.58 | 89.90 | |

| WLAM-UNet | 80.41 | 89.23 | 96.45 | 97.55 | 90.10 | |

| ISIC18 | UNet [55] | 77.86 | 87.55 | 94.05 | 96.69 | 85.86 |

| UNet++ [59] | 78.31 | 87.83 | 94.02 | 95.75 | 88.65 | |

| Att-UNet [60] | 78.43 | 87.91 | 94.13 | 96.23 | 87.60 | |

| UTNetV2 [55] | 78.91 | 88.25 | 94.32 | 96.48 | 87.60 | |

| SANet [61] | 79.52 | 88.59 | 94.39 | 95.97 | 89.46 | |

| TransFuse [56] | 80.33 | 89.27 | 94.66 | 95.74 | 91.28 | |

| MALUNet [57] | 80.25 | 89.04 | 94.62 | 96.19 | 89.74 | |

| VM-UNet [58] | 80.35 | 89.71 | 94.91 | 96.13 | 91.12 | |

| WLAM-UNet | 80.43 | 89.84 | 95.00 | 96.20 | 91.22 |

| Model | Par. ↓ (M) | Flops ↓ (G) | Throughput (A100) | Top-1 ↑ | Difference |

|---|---|---|---|---|---|

| 1 | 25.0 | 3.8 | 1389 | 82.9 | −1.2 |

| 2 | 24.9 | 4.2 | 1266 | 83.3 | −0.8 |

| 3 | 25.6 | 4.0 | 1401 | 82.1 | −2.0 |

| 4 | 25.2 | 4.2 | 1050 | 83.8 | −0.3 |

| WLAMFormer_L2 | 25.0 | 3.8 | 1280 | 84.1 | - |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Feng, B.; Xu, C.; Li, Z.; Liu, S. WLAM Attention: Plug-and-Play Wavelet Transform Linear Attention. Electronics 2025, 14, 1246. https://doi.org/10.3390/electronics14071246

Feng B, Xu C, Li Z, Liu S. WLAM Attention: Plug-and-Play Wavelet Transform Linear Attention. Electronics. 2025; 14(7):1246. https://doi.org/10.3390/electronics14071246

Chicago/Turabian StyleFeng, Bo, Chao Xu, Zhengping Li, and Shaohua Liu. 2025. "WLAM Attention: Plug-and-Play Wavelet Transform Linear Attention" Electronics 14, no. 7: 1246. https://doi.org/10.3390/electronics14071246

APA StyleFeng, B., Xu, C., Li, Z., & Liu, S. (2025). WLAM Attention: Plug-and-Play Wavelet Transform Linear Attention. Electronics, 14(7), 1246. https://doi.org/10.3390/electronics14071246