1. Introduction

As a core component of the power supply architecture, the distribution system efficiently delivers electric power from the transmission network to end-users to meet their diverse power needs. Within it, distribution line insulators provide electrical insulation and mechanical support for the lines, ensuring the safe transmission of electricity, and are an indispensable component of the distribution network [1,2]. However, during operation, insulators may be damaged or corroded by overvoltage, thermal stress, environmental corrosion, pollution, and improper maintenance, which degrades their performance [3,4] and can even lead to line collapse and unstable power transmission, posing critical operational risks to distribution networks. To address the safety hazards caused by insulator defects in a timely manner, grid line inspection robots have been developed and deployed in the grid system [5,6].

Current distribution line insulator defect detection relies primarily on manual inspection of robot-captured imagery, a process prone to inefficiency and oversight [7,8]. Advances in imaging technology have enabled automated solutions, with early research employing traditional machine learning methods (morphological and geometric feature analysis) for defect identification [9,10,11]. However, these approaches have limited effectiveness in complex operational scenarios. The emergence of deep learning-based computer vision frameworks has transformed surface anomaly detection, offering better adaptability to diverse defect patterns and environmental conditions [12,13].

For example, Kanika Bhalla et al. [14] developed a defect segmentation approach for TFT-LCD panels using Singular Value Decomposition (SVD) and Kernelized Neutrosophic Entropy. Integrating fuzzy membership functions with SVD (FSVD) enables adaptive image contrast enhancement, effectively mitigating uneven lighting and color distortion, and Gaussian kernel functions with nonlinear neutrosophic entropy thresholding (GKNE) further improve defect detection accuracy in complex backgrounds. However, the reliance on manually designed fuzzy and kernel functions in FSVD and GKNE restricts their generalization to diverse defect patterns. Pin Ning et al. [15] enhanced the Faster R-CNN architecture by incorporating a Feature Pyramid Network (FPN), improving detection performance; however, the added computational overhead slowed processing, making it difficult to meet the real-time demands of high-frequency power line inspection. Qinggang Wu et al. [16] introduced a texture extraction technique for insulators using localized operators within the Beltrami framework, enhancing the detection of uneven surface textures, although the dynamic adjustment of its weighting parameters remains suboptimal and requires further refinement. Ruihai Li et al. [17] enhanced the SSD framework with a multi-branch architecture and dilated convolution, boosting detection precision in simple backgrounds at the cost of performance in complex scenarios. MD. Faiyaz Ahmed et al. [18] used deep learning to detect and identify breakdown defects in transmission line insulators, but the model is too large: it suits the large equipment used for transmission line inspection and is difficult to deploy on the lightweight, small equipment used for distribution line inspection. Tomaszewski et al. [19] proposed a framework using color intensity profile analysis and ensemble learning for insulator disk fault detection, identifying damaged disks through spectral analysis of color intensity profiles extracted from insulator images. However, its reliance on color features alone, without deep learning-based multi-modal fusion, limits its ability to capture critical discriminative features.

Among object detection frameworks, YOLO-series models are highly effective at identifying insulator defects across diverse environments [20,21,22]. For example, Chuanyang Liu et al. [23] proposed an enhanced YOLO-based framework for insulator defect detection in aerial imagery; by integrating Cross-Stage Partial Dense (CSPD) blocks to refine feature propagation and reuse, the method achieves superior accuracy. However, its scope is restricted to missing-cap faults in glass insulators, overlooking critical defects such as cracks, contamination, and mechanical degradation in distribution line insulators, which limits its practical applicability. Gujing Han et al. [24] proposed an improved YOLOv5-based insulator fracture detection method that enhances feature discriminability in complex backgrounds with the ECA-Net attention mechanism, preserves small-target features with the bidirectional feature pyramid Bi-FPN, and improves the detection of overlapping targets with the Soft-NMS algorithm. ECA-Net uses a local cross-channel interaction strategy, which reduces computational cost but may neglect long-range channel dependencies. Jun Su et al. [25] developed a lightweight insulator defect detection framework by integrating the Triplet Attention module into YOLOv8n, aiming to mitigate complex background interference, missed detection of small targets, and excessive computational complexity. However, the Slim-neck structure in their design directly reuses the existing GSConv module without a spatial-channel decoupled convolution tailored to the slender morphology of insulators, which may cause localized feature loss. In addition, Chengyin Ru et al. [26] proposed ECA-YOLOX-Tiny, a lightweight framework for detecting self-explosion defects in UAV-captured insulator images. Integrating the Efficient Channel Attention (ECA) module into the YOLOX-Tiny backbone improves feature discriminability for small defect regions; however, although ECA adds few parameters, its lack of spatial attention leaves feature focus suboptimal for densely occluded insulators.

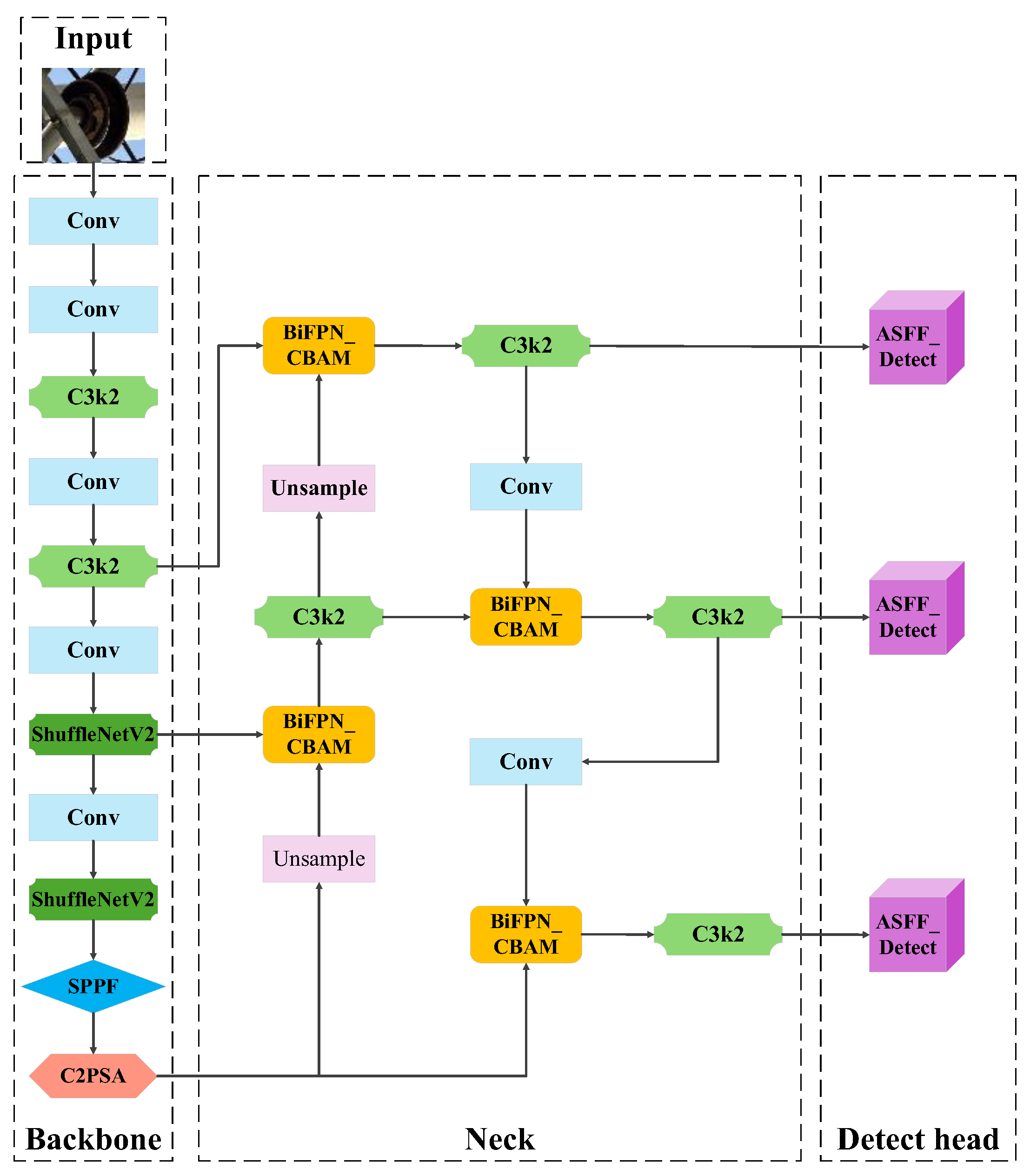

Building on prior research in insulator defect detection, this study proposes an enhanced YOLO11-based algorithm for detecting defects in multiple types of distribution line insulators in complex environments, with the aim of improving detection accuracy. Specifically, the backbone, neck, and detection head of the original YOLO11 architecture are structurally optimized. The main improvements are as follows:

- (1)

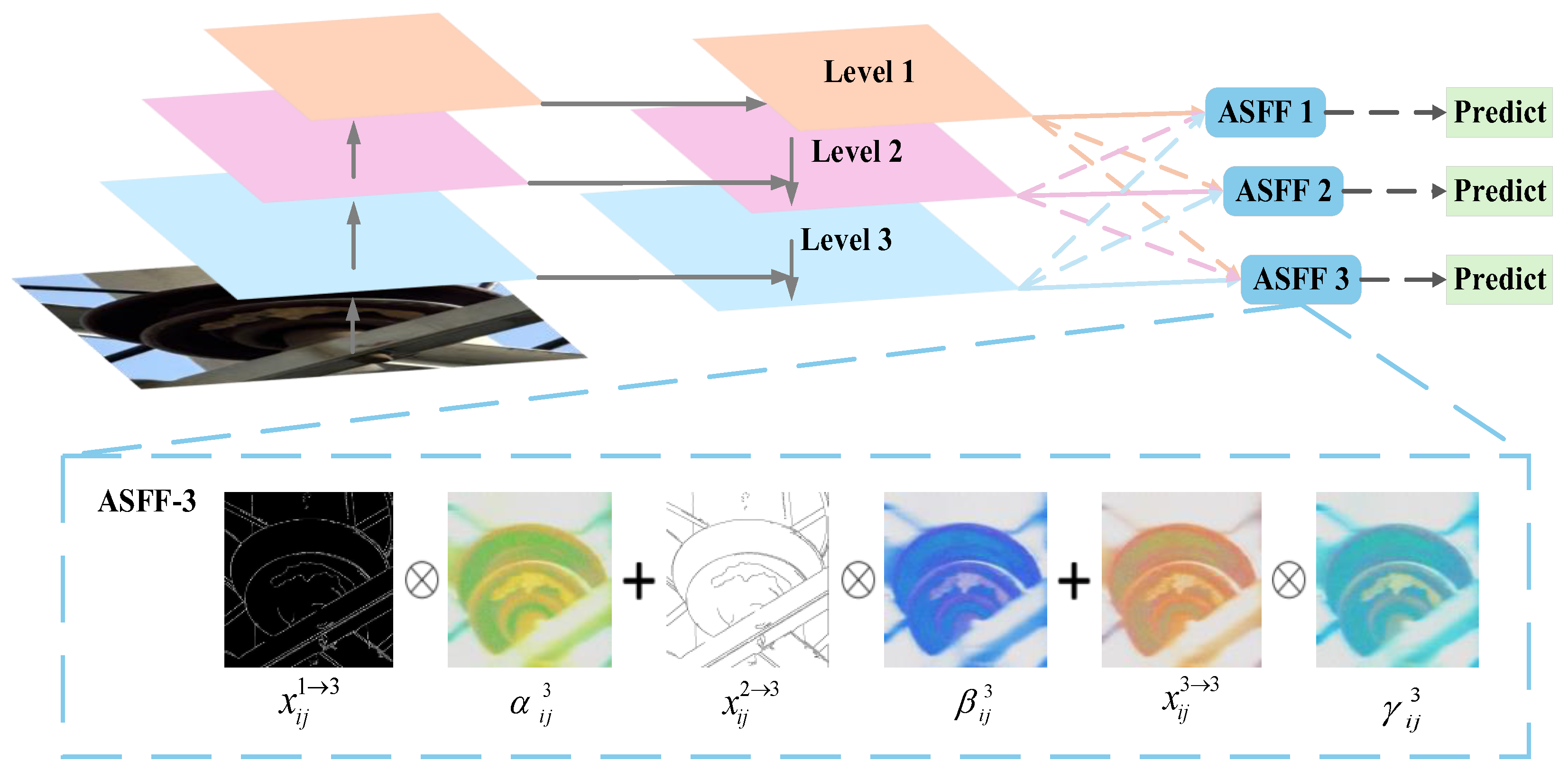

To address the scale inconsistency that arises when recognizing defects of different forms, this paper embeds the Adaptively Spatial Feature Fusion (ASFF) module in the original detection head. This strengthens the detection head's ability to fuse features across multi-resolution hierarchies, improving multi-scale target detection as well as the algorithm's accuracy and robustness.

- (2)

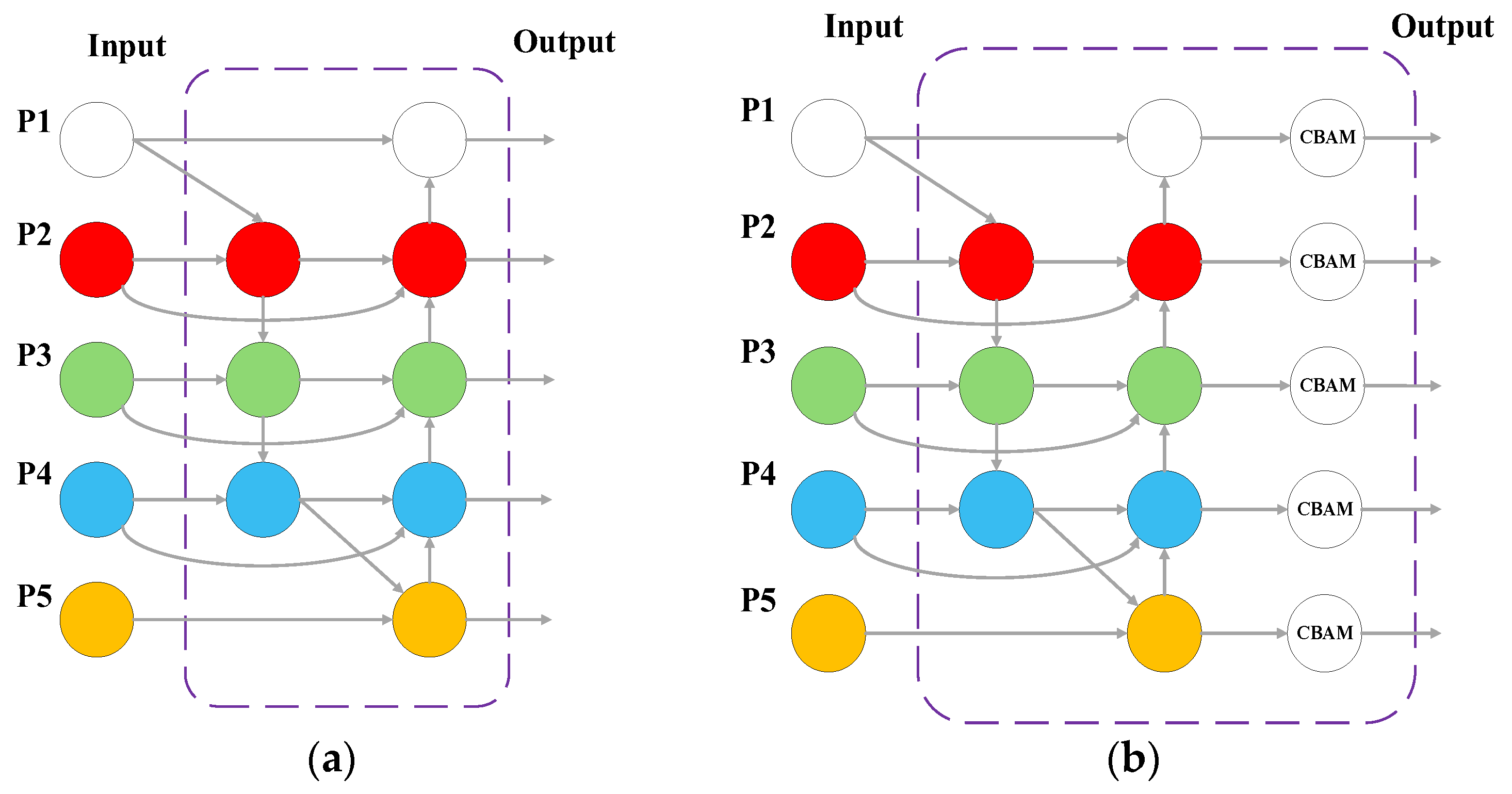

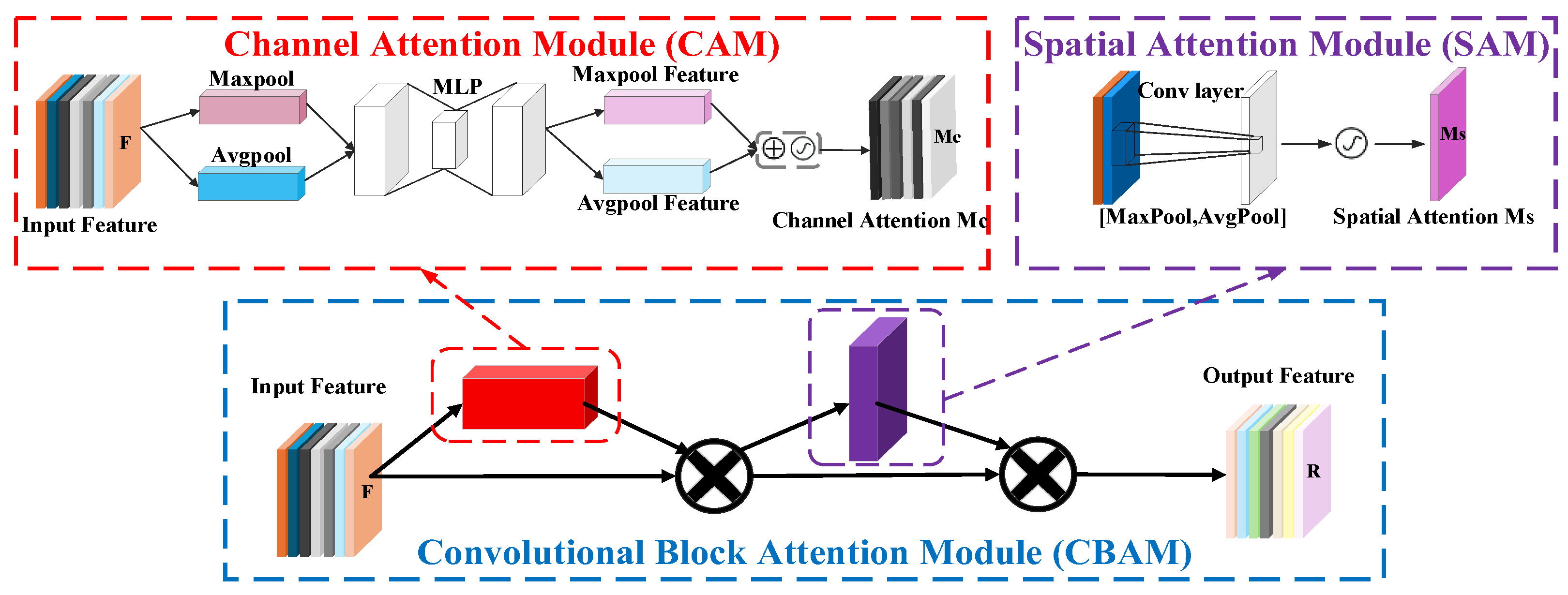

To enhance contextual information flow and enable weighted fusion of multi-scale defect features, this study replaces the original FPN + PAN neck with a Bidirectional Feature Pyramid Network (BiFPN), improving the efficiency of cross-scale feature fusion in the neck. Meanwhile, to prioritize insulator defect features in complex recognition environments, the Convolutional Block Attention Module (CBAM) is integrated into the BiFPN output layer. This mitigates background interference during urban and rural distribution line inspections and strengthens feature representation learning, thereby improving detection precision.

- (3)

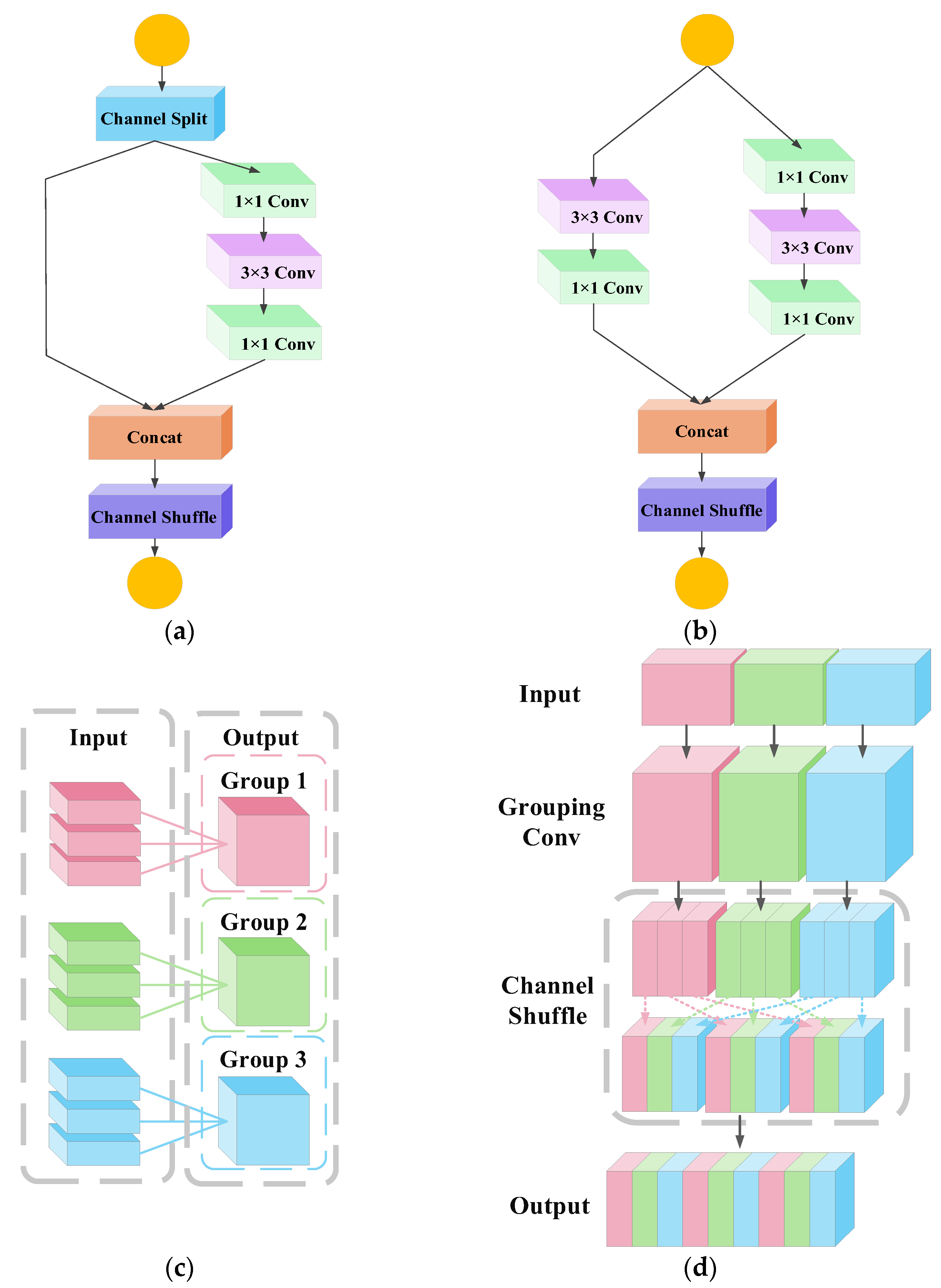

To offset the increase in model parameters introduced by the above improvements, improve detection efficiency, and facilitate deployment on small distribution inspection devices, we replace the large-parameter C3k2 module at the end of the backbone network with a ShuffleNetV2 module. This reduces the number of model parameters without excessive loss of detection accuracy.
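The parameter savings of ShuffleNetV2 come largely from its channel split and channel shuffle operations, which let cheap branch convolutions still exchange information across channel groups. The sketch below illustrates only that data movement on a list of channel labels; it is not the authors' code, and the `branch` argument is a hypothetical stand-in for the unit's 1x1 conv, 3x3 depthwise conv, and 1x1 conv sequence.

```python
from typing import Callable, List, TypeVar

T = TypeVar("T")


def channel_shuffle(channels: List[T], groups: int) -> List[T]:
    """Reshape to (groups, C // groups), transpose, and flatten back to C."""
    if len(channels) % groups != 0:
        raise ValueError("channel count must be divisible by groups")
    per_group = len(channels) // groups
    # Emit slot k of every group before moving on to slot k + 1.
    return [channels[g * per_group + k] for k in range(per_group) for g in range(groups)]


def shufflenetv2_unit(channels: List[T], branch: Callable[[List[T]], List[T]]) -> List[T]:
    """Stride-1 ShuffleNetV2 unit: split, transform one half, concatenate, shuffle."""
    half = len(channels) // 2
    identity, transformed = channels[:half], branch(channels[half:])
    return channel_shuffle(identity + transformed, groups=2)


print(channel_shuffle(list(range(6)), 2))
```

Because half of the channels pass through untouched and the shuffle mixes the two halves before the next unit, each unit needs convolutions on only half of its input channels.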

3. Experimental Verification

3.1. Experimental Software and Hardware Configuration

The proposed model was implemented with the PyTorch deep learning framework.

The hardware and software used in the experiments were as follows: Windows 10 operating system; 13th Gen Intel(R) Core(TM) i5-13600KF @ 3.5 GHz CPU; NVIDIA RTX 4070 GPU (12 GB); PyTorch 2.1.2; CUDA 11.8.

3.2. Evaluation Metrics

To holistically evaluate target detection performance, this study employs five key metrics: precision, recall, mean average precision (mAP), F1-score, and AUC–ROC. These indicators quantify model capabilities across critical dimensions of detection reliability and generalization.

Precision is the proportion of true positives among all samples the model classifies as positive, and therefore indicates how well the model avoids false positive predictions. It is the number of correctly identified positives divided by the total number of predicted positives (true plus false positives):

$$P = \frac{TP}{TP + FP}$$

where TP (true positive) is the number of true positive samples and FP (false positive) is the number of false positive samples.

Recall is the proportion of actually positive samples that the model correctly predicts as positive. It reflects the completeness of the detection results, i.e., how many of the actual positive samples the model detects. Recall is calculated as

$$R = \frac{TP}{TP + FN}$$

where FN (false negative) is the number of false negative samples.

Mean average precision (mAP) is a composite metric that summarizes the model's precision across different recall levels. The average precision of each category is obtained by integrating its precision–recall curve, and mAP is the mean of these values over all categories:

$$AP_i = \int_0^1 P_i(R)\,dR, \qquad mAP = \frac{1}{N}\sum_{i=1}^{N} AP_i$$

where $AP_i$ is the average precision of the $i$-th category, $P_i(R)$ is its precision as a function of recall, and $N$ is the total number of categories.
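As a sketch of how the $AP_i$ term can be computed in practice, the function below integrates a precision–recall curve with all-point interpolation, first taking the precision envelope so that precision never increases with recall. It assumes each detection has already been matched to ground truth (for example by an IoU threshold) and labeled TP or FP. Detection toolkits differ in the interpolation they use (some sample a fixed set of recall points), so values may not match the paper's tooling exactly.

```python
from typing import Dict, List, Tuple


def average_precision(dets: List[Tuple[float, bool]], num_gt: int) -> float:
    """All-point interpolated AP for one class.

    dets: (confidence, is_true_positive) per detection, TP/FP already decided
    by IoU matching (assumed done elsewhere). num_gt: ground-truth objects.
    """
    if num_gt == 0:
        return 0.0
    dets = sorted(dets, key=lambda d: d[0], reverse=True)  # most confident first
    tp = fp = 0
    recalls: List[float] = []
    precisions: List[float] = []
    for _, is_tp in dets:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Precision envelope: best precision achievable at this recall or higher.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_r = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_r) * p  # area of each recall step
        prev_r = r
    return ap


def mean_average_precision(ap_per_class: Dict[str, float]) -> float:
    return sum(ap_per_class.values()) / len(ap_per_class)


print(average_precision([(0.9, True), (0.8, False), (0.7, True)], num_gt=2))
```

In the three-detection example, the false positive at 0.8 lowers precision before the second true positive is found, so AP is 5/6 rather than 1.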

The F1-score is the harmonic mean of precision and recall and balances the trade-off between the two. When the data are class-imbalanced, the F1-score gives a more comprehensive measure of a model's ability to identify minority classes. It is calculated as

$$F1 = \frac{2 \times P \times R}{P + R}$$

When precision and recall differ substantially, the F1-score is notably lower than their arithmetic mean, indicating that the model's classification boundaries need further optimization.
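The three count-based metrics above can be computed together from TP, FP, and FN counts. This is a minimal sketch; returning 0.0 when a denominator is zero is an assumed convention, not one stated in the text.

```python
from typing import Tuple


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall, and F1 from raw counts (0.0 when undefined)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # Harmonic mean: dominated by the smaller of the two values.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


print(precision_recall_f1(9, 1, 81))
```

With 9 TPs, 1 FP, and 81 FNs, precision is 0.9 and recall is 0.1, so F1 is 0.18, far below their arithmetic mean of 0.5. This illustrates the penalty for a large precision–recall gap described above.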

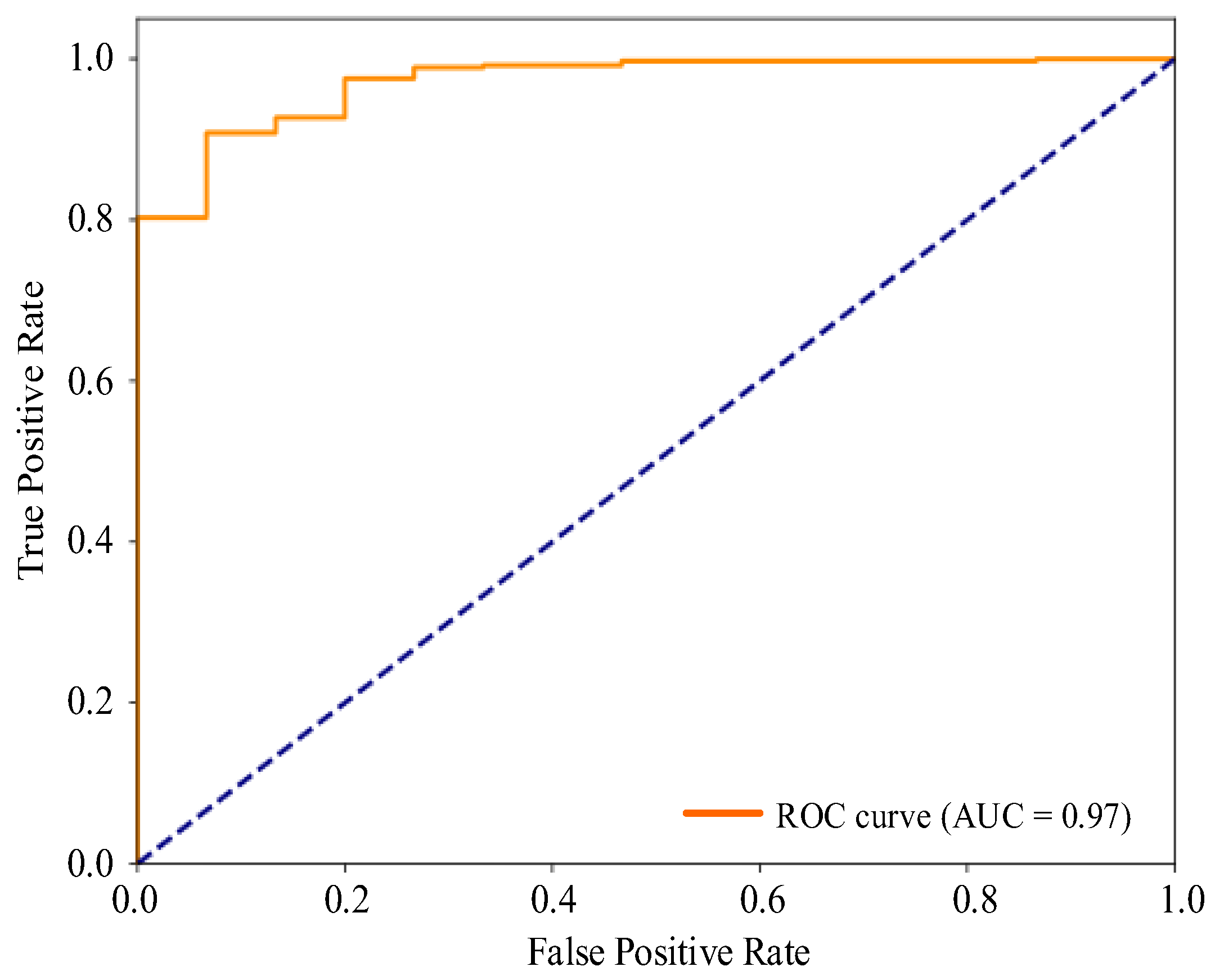

AUC–ROC (area under the ROC curve) evaluates a model's overall performance across classification thresholds by calculating the area under the ROC curve. The ROC curve plots the FPR (false positive rate) on the x-axis against the TPR (true positive rate) on the y-axis; the closer the AUC is to 1, the better the model distinguishes positive from negative samples. With $TPR = \frac{TP}{TP + FN}$ and $FPR = \frac{FP}{FP + TN}$, where TN (true negative) is the number of true negative samples, the AUC is expressed as

$$AUC = \int_0^1 TPR \, d(FPR)$$

Unlike metrics computed at a single threshold, AUC–ROC summarizes performance over all possible thresholds. Because TPR and FPR are each normalized within their own class, it is insensitive to class proportions, which makes it useful in class-imbalanced scenarios, although the P-R curve is often more informative when positives are rare.
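The integral above is usually approximated with the trapezoidal rule over the ROC points obtained by sweeping the decision threshold. The sketch below assumes binary labels (1 = positive) and one confidence score per sample. How samples and labels are defined for a detector is not specified in the text, so treat this as an illustration of the metric only.

```python
from typing import List, Sequence, Tuple


def roc_points(scores: Sequence[float], labels: Sequence[int]) -> List[Tuple[float, float]]:
    """(FPR, TPR) points obtained by lowering the threshold from high to low."""
    pos = sum(labels)
    neg = len(labels) - pos
    if pos == 0 or neg == 0:
        raise ValueError("need both positive and negative samples")
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    points = [(0.0, 0.0)]
    for rank, i in enumerate(order):
        if labels[i]:
            tp += 1
        else:
            fp += 1
        last = rank == len(order) - 1
        # Samples with equal scores share one threshold: emit after the tie group.
        if last or scores[order[rank + 1]] != scores[i]:
            points.append((fp / neg, tp / pos))
    return points


def auc_trapezoid(points: Sequence[Tuple[float, float]]) -> float:
    """Trapezoidal approximation of the integral of TPR d(FPR)."""
    return sum((x1 - x0) * (y0 + y1) / 2.0 for (x0, y0), (x1, y1) in zip(points, points[1:]))


print(auc_trapezoid(roc_points([0.9, 0.8, 0.7, 0.6], [1, 0, 1, 0])))
```

The example yields 0.75, which equals the fraction of positive–negative pairs in which the positive sample receives the higher score (3 of 4).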

These evaluation metrics not only reflect model performance in specific aspects individually but also provide a comprehensive view of model performance when used in combination. In practice, these metrics help us understand the strengths and limitations of the model on specific tasks, which in turn guides the optimization and improvement of the model.

3.3. Training and Prediction of Improved YOLO11

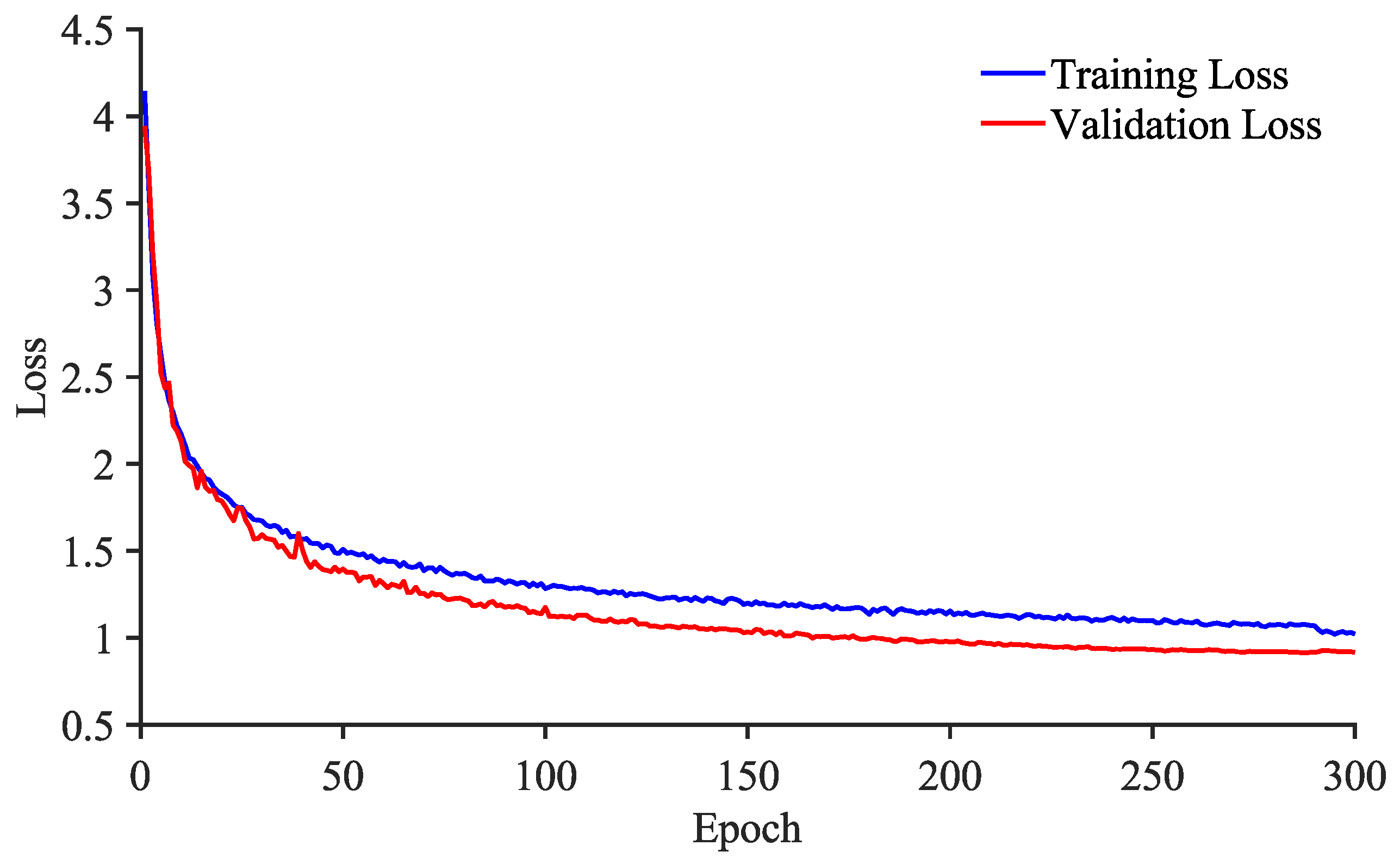

After the distribution network insulator defect dataset was constructed, training of the improved YOLO11 model began. Considering the capacity of the computing platform, the number of training epochs was set to 300.

After training, model performance is evaluated by statistically analyzing the predictions on the test set. During training, the loss decreases over the iterations, and the model is considered converged when the validation loss no longer decreases. The loss curve is shown in

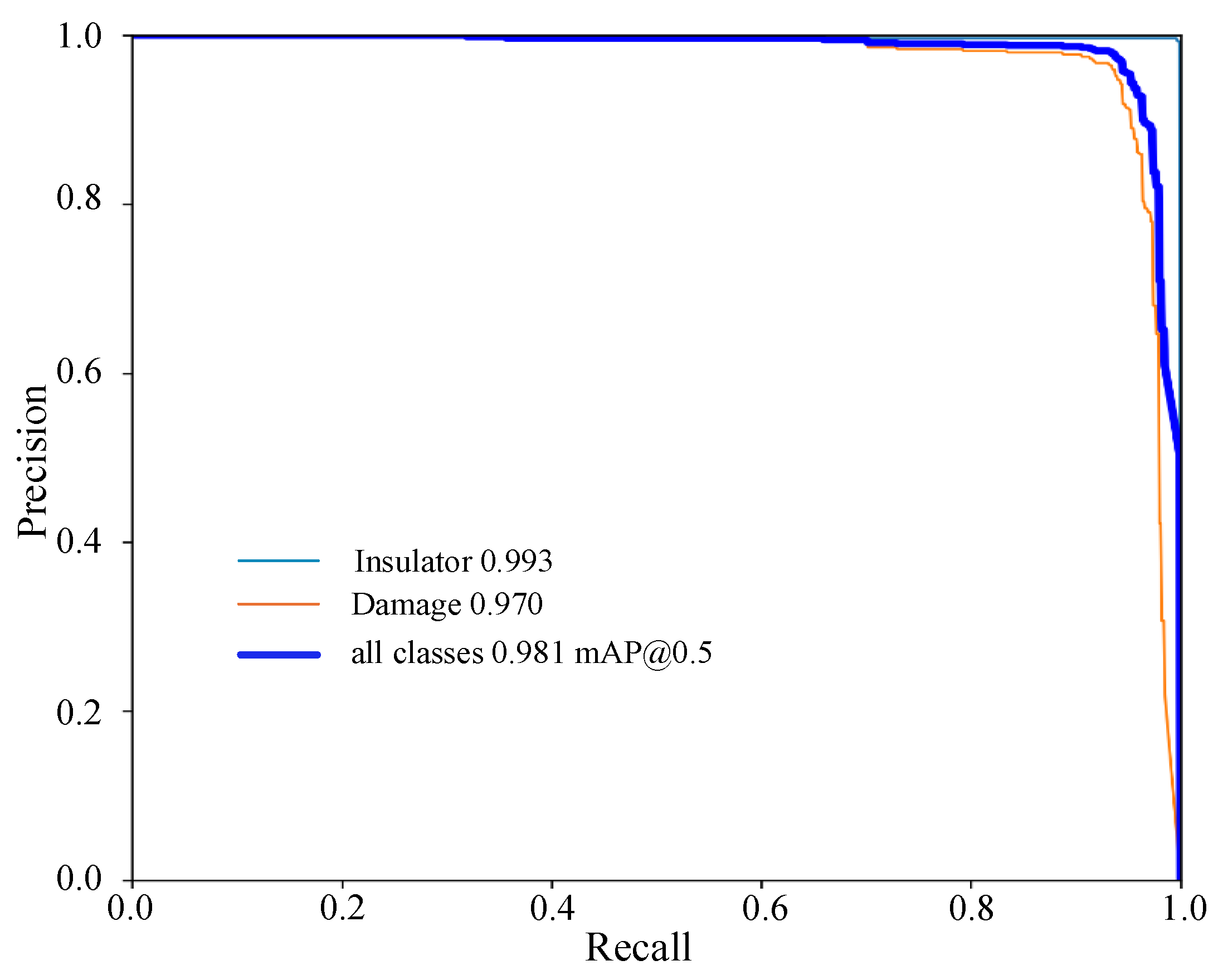

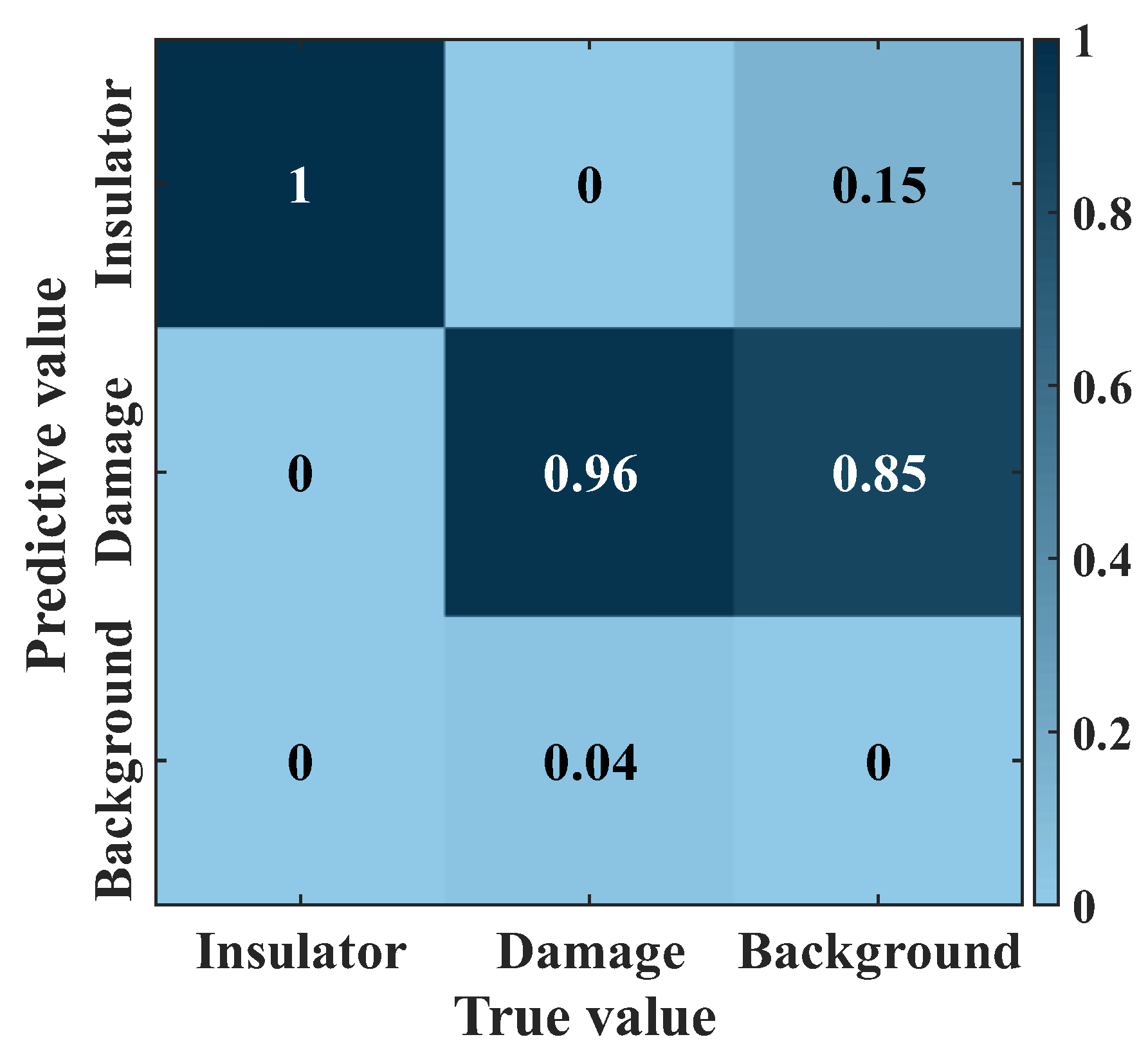

Figure 8. To evaluate model performance comprehensively, the precision–recall (P-R) curve, the AUC–ROC curve, and the confusion matrix are used as the basis for experimental validation. The P-R curve is presented in Figure 9, the AUC–ROC curve in Figure 10, and the confusion matrix in Figure 11. The P-R curve shows the trade-off between precision and recall at different thresholds, reflecting performance across confidence levels. The AUC–ROC curve shows the trade-off between the true positive rate (TPR) and the false positive rate (FPR) at different thresholds, indicating how well the model distinguishes positive from negative samples. The confusion matrix breaks the classification outcomes down into four counts, true positives (TPs), true negatives (TNs), false positives (FPs), and false negatives (FNs), offering a comprehensive assessment of the model's classification effectiveness.

The training loss curves in Figure 8 show a sharp decline in loss during the first 50 epochs, followed by stabilization after the 200th epoch; this steady reduction of the composite loss confirms that the model converges. As the results in Figure 9, Figure 10, and Figure 11 show, the enhanced YOLO11 architecture performs strongly in identifying insulator defects on power distribution lines.
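The convergence criterion described above (the validation loss no longer decreasing) is commonly operationalized as a patience rule. Below is a minimal sketch of such a rule. The `patience` and `min_delta` values are hypothetical, since the text reports a fixed 300-epoch schedule and does not state whether early stopping was used.

```python
from typing import Optional, Sequence


def converged_epoch(val_losses: Sequence[float], patience: int,
                    min_delta: float = 0.0) -> Optional[int]:
    """Return the best epoch (0-based) once `patience` epochs pass without an
    improvement larger than `min_delta`; return None if still improving."""
    best = float("inf")
    best_epoch = -1
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:  # counts as an improvement
            best, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:  # stalled for `patience` epochs
            return best_epoch
    return None


print(converged_epoch([5.0, 4.0, 3.0, 2.5, 2.5, 2.6, 2.55], patience=3))
```

Here the loss stops improving after epoch 3, and the rule reports convergence once three further epochs bring no gain.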

Furthermore, Figure 12 shows the model's predictions on different test sets. Distribution line insulators and their defects are marked with boxes of different colors, each labeled with the class name and confidence. These results show that the improved model accurately identifies insulators and whether they are defective, and that its predictions are consistent across the different test sets.

3.4. Comparative Experiments on Different Attention Mechanisms

To investigate how different attention mechanisms affect the detection performance of the YOLO11 model, we integrated the BiFPN module with each of three attention mechanisms, SE, CA, and CBAM, at the same location in the model architecture. The detection results of each variant on the distribution line insulator defect dataset are shown in Table 2. The network incorporating CBAM achieves a defect detection AP of 96.0% and an mAP of 97.6%. CBAM applies attention along both the channel and spatial dimensions, which significantly improves the model's ability to capture key features: it preserves the positional and spatial information in the feature map and effectively localizes defect features, which in turn improves model performance. In contrast, the CA module, which mainly encodes spatial location information in the feature map, increases the model's parameter count without a significant gain in detection performance. The SE module applies attention only along the channel dimension and does not sufficiently consider spatial feature information, so its improvement in detection performance is also insignificant.

As shown in Table 2, the attention mechanism comparison experiments indicate that integrating CBAM into the YOLO11 network is an effective way to improve defect detection performance. Because CBAM attends along both the channel and spatial dimensions, the model can identify and localize insulator defects more accurately, improving overall detection performance.
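To make the channel-plus-spatial ordering concrete, here is a minimal, framework-free sketch of a CBAM forward pass on nested Python lists (C x H x W): channel attention from a shared MLP over average- and max-pooled descriptors, followed by spatial attention from a convolution over the channel-wise average and max maps. The weights `w1`, `w2`, `k_avg`, and `k_max` are hypothetical inputs standing in for learned parameters. The original CBAM uses a 7x7 kernel, but any odd size works here. This is an illustration of the technique, not the authors' implementation.

```python
import math
from typing import List

Grid = List[List[float]]     # H x W
Feature = List[Grid]         # C x H x W
Matrix = List[List[float]]   # rows = output units


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def matvec(m: Matrix, v: List[float]) -> List[float]:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def channel_attention(x: Feature, w1: Matrix, w2: Matrix) -> List[float]:
    """Shared MLP (C -> C/r -> C) on average- and max-pooled channel descriptors."""
    avg = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in x]
    mx = [max(map(max, ch)) for ch in x]

    def mlp(v: List[float]) -> List[float]:
        return matvec(w2, [max(0.0, h) for h in matvec(w1, v)])  # ReLU hidden layer

    return [sigmoid(a + b) for a, b in zip(mlp(avg), mlp(mx))]


def spatial_attention(x: Feature, k_avg: Grid, k_max: Grid) -> Grid:
    """k x k convolution (zero padding) over channel-wise average and max maps."""
    c, h, w = len(x), len(x[0]), len(x[0][0])
    avg = [[sum(ch[i][j] for ch in x) / c for j in range(w)] for i in range(h)]
    mx = [[max(ch[i][j] for ch in x) for j in range(w)] for i in range(h)]
    r = len(k_avg) // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            s = 0.0
            for di in range(-r, r + 1):
                for dj in range(-r, r + 1):
                    ii, jj = i + di, j + dj
                    if 0 <= ii < h and 0 <= jj < w:
                        s += k_avg[di + r][dj + r] * avg[ii][jj] + k_max[di + r][dj + r] * mx[ii][jj]
            out[i][j] = sigmoid(s)
    return out


def cbam(x: Feature, w1: Matrix, w2: Matrix, k_avg: Grid, k_max: Grid) -> Feature:
    ca = channel_attention(x, w1, w2)
    x = [[[v * ca[c] for v in row] for row in ch] for c, ch in enumerate(x)]  # channels first
    sa = spatial_attention(x, k_avg, k_max)
    return [[[v * sa[i][j] for j, v in enumerate(row)] for i, row in enumerate(ch)] for ch in x]
```

SE corresponds roughly to keeping only the channel step (with average pooling alone), which is why it cannot reweight individual spatial positions.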

3.5. Ablation Experiment

This research optimized the backbone network, neck, and detection head of the baseline model. To evaluate the efficacy of these modifications systematically, ablation studies were conducted on the three proposed enhancement modules. Quantitative comparisons of how the different optimization strategies affect detection performance are presented in Table 3.

As Table 3 shows, integrating the ASFF module into the YOLO11 detection head improves AP by 1.0% and mAP by 0.5% over the baseline. Integrating BiFPN_CBAM into the neck network improves defect detection AP and mAP by 0.4% and 0.2%, respectively, compared with the original model. Replacing the large-parameter C3k2 module at the end of the backbone network with the ShuffleNetV2 module lowers both defect detection AP and mAP by only 0.1% compared with the original model, while significantly reducing model size. Similarly, compared with the model incorporating only ASFF and BiFPN_CBAM, the final improved YOLO11 model maintains comparable defect detection accuracy while reducing model size, showing that the ShuffleNetV2 module effectively lightens the model.

The experimental results show that both the ASFF module and BiFPN_CBAM improve model performance. Specifically, the ASFF module strengthens the model's feature extraction for multi-scale defect targets, improving its ability to detect diverse defect types. Meanwhile, BiFPN_CBAM enables efficient cross-scale information flow, with the CBAM at the output stage reducing interference from complex backgrounds in the perception of critical insulator defect features. Together, ASFF and BiFPN_CBAM raise the detection accuracy of the improved model. Additionally, the ShuffleNetV2 module lightens the model, reducing its size while preserving detection accuracy and enhancing generalization capability.
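The two fusion mechanisms credited above differ mainly in how their fusion weights are shared. The following minimal sketch contrasts them on nested Python lists under stated assumptions. For ASFF, the features from other levels are assumed to be already resized to the target resolution, and the per-pixel weight logits (produced by 1x1 convolutions in ASFF) are passed in directly as hypothetical values. For BiFPN, it uses EfficientDet's fast normalized fusion, with ReLU-clipped learnable scalars and eps = 1e-4, again with hypothetical weights. Neither function is the authors' implementation.

```python
import math
from typing import List, Sequence

Grid = List[List[float]]  # one H x W feature map (single channel for brevity)


def asff_fuse(feats: Sequence[Grid], logits: Sequence[Grid]) -> Grid:
    """ASFF-style fusion: softmax over levels, computed separately at every pixel.

    feats[k] is level k already resized to the target resolution (assumed);
    logits[k] holds its unnormalized per-pixel weights (hypothetical values here).
    """
    h, w = len(feats[0]), len(feats[0][0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            z = [lg[i][j] for lg in logits]
            m = max(z)  # subtract the max for numerical stability
            e = [math.exp(v - m) for v in z]
            s = sum(e)
            out[i][j] = sum(ek / s * f[i][j] for ek, f in zip(e, feats))
    return out


def bifpn_fuse(feats: Sequence[Grid], raw_weights: Sequence[float], eps: float = 1e-4) -> Grid:
    """BiFPN fast normalized fusion: one non-negative scalar weight per input map."""
    wts = [max(0.0, r) for r in raw_weights]  # ReLU keeps weights >= 0
    denom = eps + sum(wts)
    h, w = len(feats[0]), len(feats[0][0])
    return [[sum(wk * f[i][j] for wk, f in zip(wts, feats)) / denom for j in range(w)]
            for i in range(h)]


feats = [[[3.0, 0.0]], [[6.0, 0.0]], [[9.0, 12.0]]]
print(asff_fuse(feats, [[[10.0, 0.0]], [[0.0, 0.0]], [[0.0, 10.0]]]))
print(bifpn_fuse(feats, [1.0, 1.0, 1.0]))
```

Because ASFF normalizes its weights per location, it can favor a different pyramid level at each pixel (level 0 at the first pixel and level 2 at the second in the example). This is the property relied on for defects of varying scale. BiFPN instead applies one weight per input map across the whole map.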

Combining all three enhancements outperforms both the baseline and each individual optimization: the final model achieves a defect detection AP of 97.0% and an mAP of 98.1%.

3.6. Comparison of Detection Performance of Different Models

A benchmark was established to assess the proposed model's advances. Several representative architectures (Faster R-CNN, DEYO-N, YOLOv5, YOLOv8, YOLOv9, YOLOv10, and the baseline YOLO11) were trained under identical conditions on the power line insulator defect dataset. The comparison mainly covers average precision (AP), mean average precision (mAP), F1-score, model size (MB), and inference speed (FPS), as shown in Table 4.

The experimental results show that the improved model outperforms Faster R-CNN, DEYO-N, YOLOv5, YOLOv8, YOLOv9, YOLOv10, and the original YOLO11 on AP, mAP, and F1-score, while maintaining an FPS that is adequate for the recognition task. In summary, the enhanced model is effective at detecting defects in distribution line insulators and outperforms the other seven models.

4. Discussion

4.1. Practical Significance

In power systems, the accuracy of insulator defect detection directly determines the missed-detection and false-detection rates. Although the improved model raises defect detection accuracy by only 1.4%, across millions of analyzed images this can eliminate thousands of false positives, shortening fault response time and reducing the cost of manual review. Because a single insulator fault can cause tens of thousands of dollars in losses, a 1.4% improvement that seems modest could help avoid hundreds of potential faults during intensive distribution line inspections involving millions of images, with expected maintenance savings in the millions.
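The scale argument above can be checked with back-of-the-envelope arithmetic. This sketch makes a simplifying assumption that the 1.4% AP gain translates one-to-one into fewer erroneous calls per image. AP is not an error rate, so this is only an order-of-magnitude check. The fault count and per-fault loss are hypothetical lower-end values taken from the ranges quoted in the text.

```python
images = 1_000_000          # "millions of image analyses": lower end
ap_gain = 0.014             # 1.4% defect AP improvement reported in the text
# Naive assumption: the AP gain maps 1:1 onto fewer wrong calls per image.
fewer_errors = round(images * ap_gain)

avoided_faults = 100        # hypothetical: lower end of "hundreds of potential faults"
loss_per_fault = 10_000     # hypothetical: lower end of "tens of thousands of dollars"
savings = avoided_faults * loss_per_fault

print(fewer_errors, savings)  # 14000 1000000
```

Even at the lower ends of both ranges, the result is consistent with "thousands" of avoided errors and savings in the millions.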

4.2. Limitations

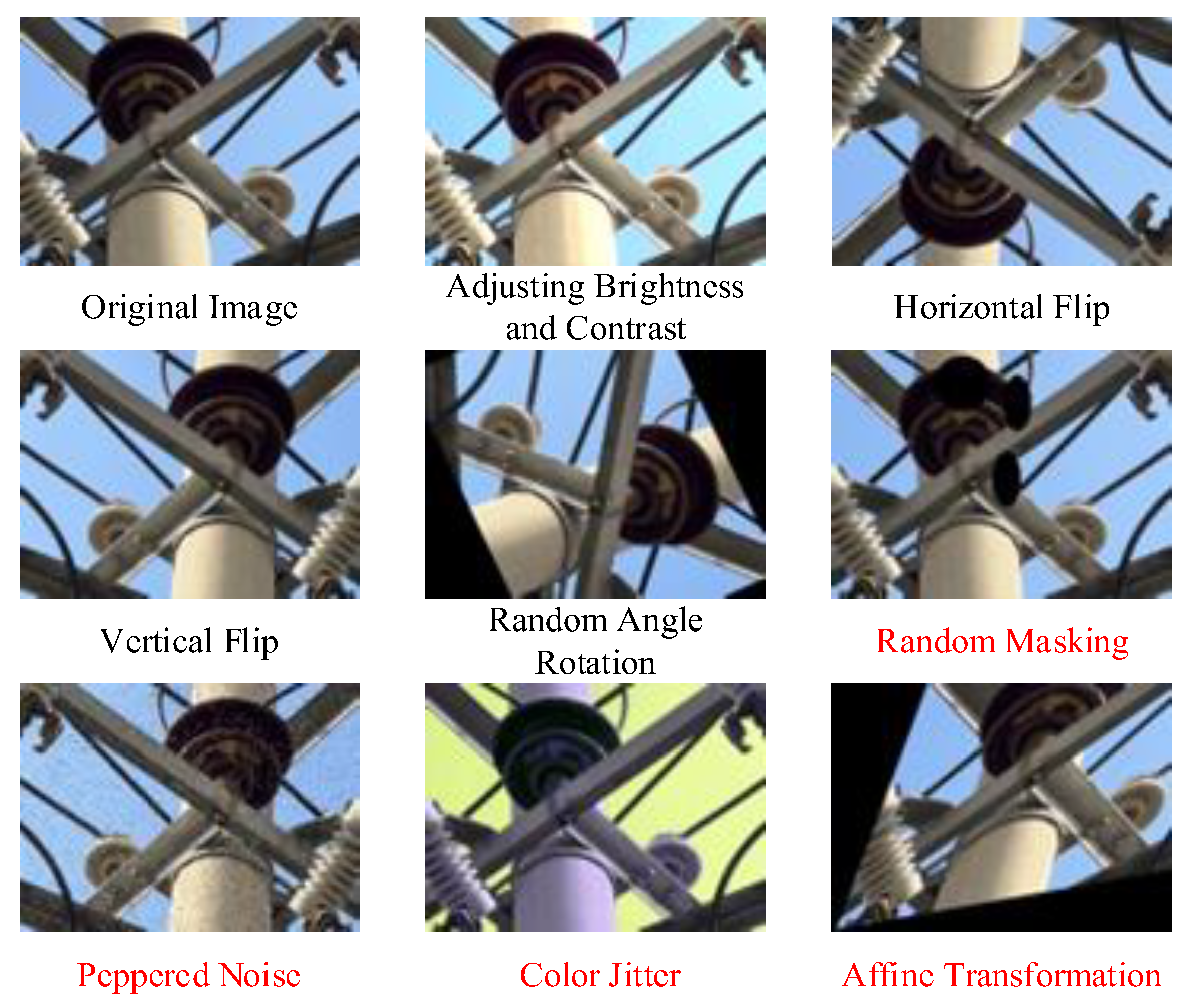

This study has three limitations at the data construction level. First, the dataset is small (only 1205 images after augmentation), which makes it difficult to cover the varied features of the target scenes; even with data augmentation, the model may face a generalization bottleneck in cross-scene testing. Second, the labeling of the raw data is constrained. Because damage types were labeled at limited granularity in the raw data, some categories did not reach statistically sufficient sample sizes; to keep the analysis consistent, this study adopted a uniform label for all damage types, which may discard some detailed information. Third, although a balanced strategy of 50% real and 50% synthetic data was used to mitigate domain bias, distributional differences between synthetic and real data may still leave domain gaps in practical applications, which may limit how well the model adapts.

In terms of model architecture, the model has efficiency bottlenecks. As shown in Table 3, introducing the ASFF and BiFPN_CBAM modules increased the model size from 5.23 MB to 9.65 MB. Although the ShuffleNetV2 lightweight module reduced the size of the ASFF and BiFPN_CBAM-enhanced model from 9.65 MB to 8.88 MB, the final improved model is still 3.65 MB larger than the baseline. Additionally, as indicated in Table 4, the improved model runs 1.33 FPS slower than the original model. This suggests that, while the improved model gains 1.4% in defect detection accuracy, the ASFF and BiFPN_CBAM modules introduce computational redundancy through multi-scale feature fusion, and that the ShuffleNetV2 module is not lightweight enough to offset the added complexity.
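The claim that ShuffleNetV2 offsets too little of the added complexity can be quantified from the three model sizes quoted from Table 3 (5.23, 9.65, and 8.88, in the units reported there). The arithmetic below introduces no new measurements.

```python
baseline, enhanced, final = 5.23, 9.65, 8.88  # sizes quoted from Table 3

added = enhanced - baseline        # overhead of ASFF + BiFPN_CBAM
recovered = enhanced - final       # saving from ShuffleNetV2
net = final - baseline             # remaining overhead vs. the baseline
recovered_share = recovered / added
growth = net / baseline

print(f"added {added:.2f}, recovered {recovered:.2f} ({recovered_share:.1%}), "
      f"net +{net:.2f} ({growth:.1%} over baseline)")
```

ShuffleNetV2 recovers only about 17.4% of the 4.42 added by the two fusion modules, leaving the final model roughly 69.8% larger than the baseline.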

4.3. Practical Deployment and Real-Time Analysis

Although the current experiments focus on improving algorithmic accuracy, the model’s lightweight design and single-stage detection architecture have laid the foundation for practical deployment. The compact structure of our model theoretically enables an inference speed of ≥82 FPS on equivalent hardware, meeting the real-time requirements of most inspection scenarios. In future deployments, detection speed and accuracy can be further balanced by optimizing the quantization of the multi-scale feature fusion module and refining lightweight module deployment strategies. Additionally, commonly used edge-computing techniques such as model pruning and hardware acceleration libraries are expected to reduce computational redundancy, decreasing inference latency by approximately 30%, thereby supporting real-time processing demands for drone platforms.
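The approximately 30% latency reduction mentioned above can be translated into frame rates with simple arithmetic. The sketch assumes that the 82 FPS lower bound and the 30% cut apply to the same hardware and workload. Both figures come from the text, not from a measurement here.

```python
base_fps = 82.0                        # lower bound quoted in the text
latency_ms = 1000.0 / base_fps         # per-frame latency at 82 FPS
reduced_ms = latency_ms * (1 - 0.30)   # assumed ~30% latency reduction
new_fps = 1000.0 / reduced_ms

print(f"{latency_ms:.1f} ms -> {reduced_ms:.1f} ms ({new_fps:.0f} FPS)")
```

A 30% latency cut raises throughput by a factor of 1/0.7, not 1.3, which takes the model from about 12.2 ms to about 8.5 ms per frame (roughly 117 FPS).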

In practical deployment, considerations such as heterogeneous hardware adaptation and power consumption constraints must also be addressed. For instance, mobile devices may require further model compression to minimize energy consumption, while server-side deployments could leverage multi-GPU parallel processing for large-scale data handling. Although this study does not include hardware-specific testing, the algorithmic-level deployment optimizations provide critical support for engineering implementation. Subsequent collaborations with power enterprises will be conducted to validate full-chain deployment in real-world scenarios.

4.4. Future Work

To address the aforementioned limitations, future research will focus on improvements in three key directions: Firstly, expanding real-world defect datasets of power line insulators through scenario-specific data collection to gradually increase data scale and enhance model generalization. Secondly, establishing a cross-institutional data collaboration mechanism by developing a progressive training framework based on horizontal federated learning. Thirdly, exploring a co-optimized model that integrates attention-guided channel dynamic pruning with multi-scale feature refinement. Specifically, a channel dynamic pruning module will be designed to optimize computational redundancy in complex multi-scale feature fusion architectures, aiming to identify an optimal subnetwork structure that preserves critical parameters while eliminating non-essential computations. This approach targets efficiency–accuracy trade-offs in the enhanced model architecture.
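One possible shape for the attention-guided channel dynamic pruning proposed above is to rank channels per input by their attention weights (for example, CBAM channel weights) and evaluate only the top fraction. The sketch below is purely hypothetical: the function names and the `keep_ratio` parameter are illustrative and do not describe a design the study has specified.

```python
import math
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def attention_guided_keep(attention: Sequence[float], keep_ratio: float) -> List[int]:
    """Indices of the channels to keep for this input, chosen by descending attention."""
    if not 0.0 < keep_ratio <= 1.0:
        raise ValueError("keep_ratio must be in (0, 1]")
    k = max(1, math.ceil(len(attention) * keep_ratio))
    ranked = sorted(range(len(attention)), key=lambda c: attention[c], reverse=True)
    return sorted(ranked[:k])  # restore original channel order


def gate_channels(feature: Sequence[T], attention: Sequence[float],
                  keep_ratio: float) -> Tuple[List[int], List[T]]:
    """Keep only the selected channels; a real module would skip computing the rest."""
    keep = attention_guided_keep(attention, keep_ratio)
    return keep, [feature[c] for c in keep]


print(gate_channels(["c0", "c1", "c2", "c3"], [0.9, 0.1, 0.5, 0.7], keep_ratio=0.5))
```

Because the selection is recomputed from each input's attention weights, the retained subnetwork can change from image to image. This distinguishes dynamic pruning from static channel removal.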

5. Conclusions

In this paper, a defect detection algorithm for distribution line insulators based on an improved YOLO11 is proposed. The algorithm enhances the model's multi-scale feature fusion by introducing the ASFF module, improves the weighted fusion efficiency and attention focusing of the feature pyramid with the BiFPN_CBAM structure, and lightens the model with the ShuffleNetV2 module. On the self-constructed distribution line insulator defect dataset, the improved model reaches a defect detection AP of 97.0% and an mAP of 98.1%, which are 1.4% and 0.7% higher than those of the original YOLO11 model.

The experimental results show that the proposed fusion model improves the accuracy of insulator defect detection and reliably detects different types of defects on distribution line insulators, even against complex backgrounds. This improvement advances insulator defect detection by showing that the object detection algorithm can operate effectively in complex environments, broadening its practical application in distribution line inspection. Timely detection of insulator defects is key to the safe and stable operation of the power system, and the efficient detection capability of the improved model can reduce the risks posed by insulator defects, supporting safe operation and reliable power supply in the power industry.

However, the scope of the dataset used in this study is relatively limited, and future research needs to further increase the data on insulator defects of distribution lines in more complex scenarios and enlarge the dataset size to enhance the generalization ability of the model. Meanwhile, the optimization of real-time deployment of the model on embedded devices needs to be explored in the future to promote the practical application of intelligent grid inspection.