Abstract

Heterogeneous chips integrate multiple processing units, such as the central processing unit (CPU), graphics processing unit (GPU), and field-programmable gate array (FPGA), and can therefore provide processing power optimized for different types of computational tasks. In modern computing environments, heterogeneous chips have gained increasing attention due to their superior performance. However, without an appropriate task-scheduling method, the performance of heterogeneous chips falls short of that of traditional chips. This paper reviews the current research progress on task-scheduling methods for heterogeneous chips, focusing on key issues such as task-scheduling frameworks, scheduling algorithms, and experimental and evaluation methods. Research indicates that task scheduling has become a core technology for enhancing the performance of heterogeneous chips. However, in high-dimensional and complex application environments, existing scheduling methods still do not adequately address multi-objective and dynamic demands. Furthermore, current experimental and evaluation methods are still at an early stage, particularly in software-in-the-loop testing, where test scenarios are limited and standardized evaluation criteria are lacking. Future work should further explore scenario generation methods that combine large-scale models with simulation platforms and should establish standardized test scenario definitions and feasible evaluation metrics. In addition, the impact of artificial intelligence algorithms on task-scheduling methods should be studied in depth, with emphasis on leveraging the complementary advantages of algorithms such as reinforcement learning.

1. Introduction

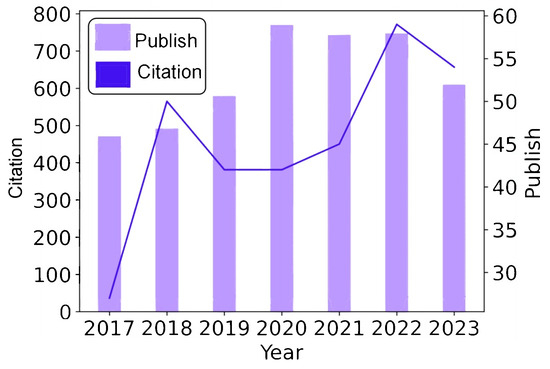

As Moore’s Law gradually loses effectiveness, traditional chip architectures that rely on improving the performance of a single computing core face increasing difficulties. Gains in computing power can no longer rely simply on increasing the number of transistors. To address this challenge, heterogeneous chip architectures have gradually become a key technology in modern computing systems. Figure 1 presents the publication and citation statistics of papers related to heterogeneous chips and task scheduling, based on data from the Web of Science [1]. From 2017 to 2023, the average annual citation growth rate was 29.51%, while the number of papers published each year increased by 100%. By integrating various types of computing units, such as the central processing unit (CPU), graphics processing unit (GPU), field-programmable gate array (FPGA), and specialized accelerators, heterogeneous chips can be optimized for different task requirements, which provides more flexible and efficient computational capability. This architecture not only improves computational density and overcomes the performance limitations of single-processor architectures but also achieves a better balance between power consumption, performance, and cost [2].

Figure 1.

Publication and citation trends of papers in the past five years.

Although heterogeneous chips have significant potential for performance improvement, without appropriate task-scheduling methods their performance is inferior to that of homogeneous chips. The task-scheduling method of heterogeneous chips faces the following challenges:

- The computing power, memory bandwidth, and latency of the different processing units of heterogeneous chips differ considerably. Choosing the most suitable processing unit according to the characteristics of each task, so as to avoid wasting resources, is the key problem in task scheduling [3].

- Dynamic changes in computational tasks and in heterogeneous chip states increase the complexity of scheduling methods. The arrival time, execution time, priority, and other attributes of tasks may change at any time. Meanwhile, the system load, resource availability, and other factors also fluctuate constantly, which requires scheduling methods to be highly responsive in real time and adaptive [2].

- Scheduling methods must balance real-time performance against complexity, especially in heterogeneous chips that accommodate multiple optimization goals. The delay of the scheduling method directly affects the efficiency of the heterogeneous chip. In addition, resource conflicts between tasks are a non-negligible problem: some tasks need to share processing units or depend on each other, which increases the complexity of the problem [4].

To address these challenges, this paper presents a systematic review of task-scheduling methods for heterogeneous chips. Specifically, the contributions of this paper include three aspects:

- This paper discusses the challenges of heterogeneous chip task scheduling in depth and innovatively divides the scheduling method into two parts, the scheduling framework and the scheduling algorithm, to systematically survey the current research status of heterogeneous chip task-scheduling methods. For the scheduling framework, this study divides existing task-scheduling frameworks into two main types, centralized and distributed, according to the differences in system architecture and control mode. For the scheduling algorithm, five categories are created according to the scheduling mechanism, i.e., static, dynamic, heuristic, optimized, and hybrid, and a detailed summary and analysis is provided for each category.

- Based on an in-depth investigation and systematic analysis of recent experiments and evaluation examples, this paper compares how heterogeneous chip task-scheduling methods are tested and evaluated. On the one hand, the evaluation system covers multiple dimensions, such as load balancing, energy efficiency optimization, and real-time guarantees. On the other hand, a series of experimental methods covering different hardware configurations, task load types, and application scenarios is introduced to further explore the key points and best practices of experimental simulation.

- Based on the research and analysis of heterogeneous chip task-scheduling methods in recent years, this paper offers a comprehensive research outlook for heterogeneous chip task-scheduling methods from an interdisciplinary perspective that combines knowledge from computer science, artificial intelligence, and system optimization. This interdisciplinary discussion enriches existing research and provides ideas for future innovation in heterogeneous chip task-scheduling methods.

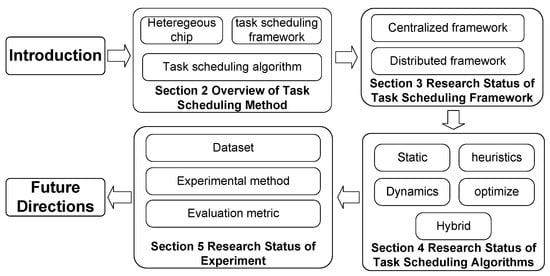

The structure of the remainder of this paper is shown in Figure 2. Section 2 describes the basic knowledge of heterogeneous chip task scheduling. Section 3 classifies the task-scheduling frameworks that have emerged in recent years. Section 4 classifies and summarizes recent task-scheduling algorithms in detail. Section 5 introduces the experimental methods and evaluation metrics of task-scheduling methods in detail. Section 6 analyzes the challenges, limitations, and future directions of task-scheduling methods for heterogeneous chips. Section 7 concludes the paper.

Figure 2.

Paper structure.

2. Overview of Task-Scheduling Method

2.1. Heterogeneous Chips

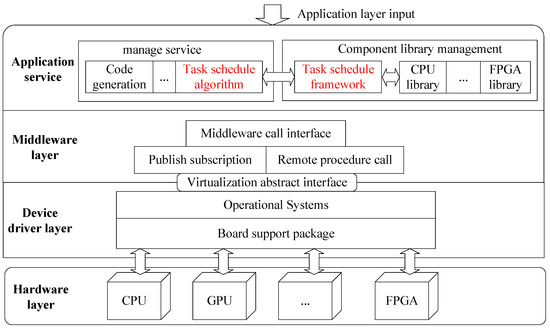

A heterogeneous chip integrates many different types of processor cores on a single chip; these cores differ in instruction set architecture, microarchitecture, manufacturing technology, and even packaging technology. These differences give heterogeneous chips a unique advantage: different types of core resources can be flexibly allocated according to the characteristics of each task in order to achieve better computing efficiency and resource utilization. In contrast, homogeneous chips are less flexible in dealing with diverse tasks and may suffer from insufficient resource adaptation [5]. The common architecture of heterogeneous chip platforms is shown in Figure 3, which illustrates a layered architecture for heterogeneous chip task scheduling. The middleware layer comprises code generation, task-scheduling algorithms, and component libraries for various hardware such as CPU, GPU, and FPGA. It interacts with the device driver layer through virtualization abstraction interfaces and operating systems, ultimately managing task scheduling across the hardware layer.

Figure 3.

The framework of a heterogeneous chip.

2.2. Task-Scheduling Method

The heterogeneous chip task-scheduling method is a system of task management strategies and algorithms designed around the features of the heterogeneous chip architecture. It aims to efficiently assign many tasks to the different types of computing units on the chip and to reasonably arrange the task execution order and time so as to achieve optimal resource utilization and maximize system performance. According to functional level and division of responsibilities, the task-scheduling method can be divided into the scheduling framework and the scheduling algorithm. The scheduling framework provides the necessary structure and interface for the scheduling algorithm and defines the basic rules and mechanisms of task management and resource allocation. The scheduling algorithm, in turn, is implemented within this framework to carry out the specific allocation and execution of tasks.

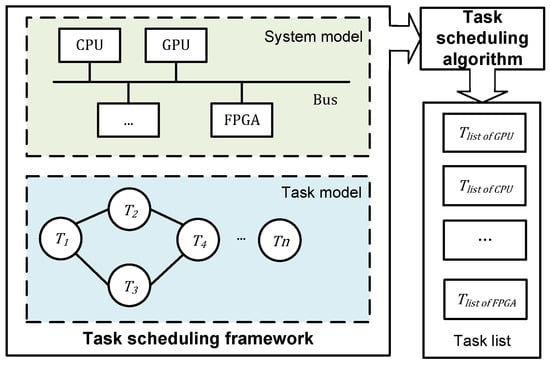

The task-scheduling framework of heterogeneous chips is the software architecture responsible for the overall planning and management of task allocation and resource requests in heterogeneous chips. With the various computing units as its core resources, it uses its built-in scheduling algorithm to allocate diverse tasks accurately and reasonably to the appropriate computing units. The core objectives of the framework are to maximize resource utilization, significantly improve overall system performance, and effectively reduce energy consumption [6,7]. Figure 4 describes the scheduling framework and scheduling algorithm of the heterogeneous chip. As shown in the figure, the scheduling framework is the key carrier for the effective operation of the scheduling algorithm: it provides functional support, including the task model and system model, to ensure that the scheduling algorithm obtains an appropriate task list [8]. Task-scheduling algorithms are key techniques in heterogeneous chip systems that determine how to allocate and execute various computational tasks efficiently. Their main goal is to optimize the allocation of tasks among different computing resources so as to improve the overall performance and resource utilization of the system while reducing energy consumption [9]. Through specific computation and decision rules, scheduling algorithms guide the framework in rationally assigning tasks to the various computing units of heterogeneous chips. The heterogeneous task-scheduling problem is NP-hard [10].

Figure 4.

Heterogeneous chip task-scheduling method, including the task-scheduler framework and algorithm.

General task-scheduling methods usually assume homogeneous computational resources and focus on task execution order and resource allocation to optimize overall performance metrics. A heterogeneous chip, by contrast, integrates multiple types of processors, computing units of different architectures, and various storage hierarchies, so its task-scheduling method must take hardware heterogeneity fully into account. In the task allocation stage, the appropriate processor type must be matched to each task’s computational requirements and data access pattern so that the strengths of the different hardware resources are fully exploited. At the same time, heterogeneous chip task scheduling must deal with complex inter-task communication overhead: because data transmission speed and delay vary greatly between processors, task placement and execution order need to be arranged carefully to reduce communication cost. Managing task synchronization and dependencies is also more complex, since tasks must be executed correctly and in order in a heterogeneous environment to avoid deadlock or performance bottlenecks caused by resource competition or communication delays. Because of these essential differences, heterogeneous chip task scheduling requires finer-grained resource awareness, more complex task allocation strategies, and more effective communication management mechanisms to adapt to the diversity of hardware resources and the complexity of task execution and thereby optimize overall system performance [2].

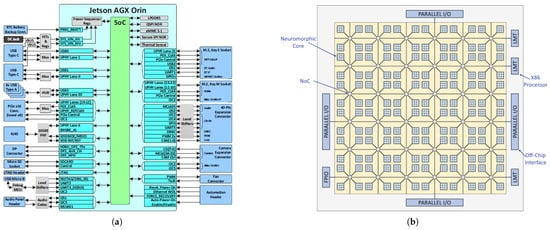

3. Research Status of the Task-Scheduling Framework

The task-scheduling framework of heterogeneous chips mainly consists of two parts: the system model and the task model. The system model describes the whole heterogeneous chip mathematically, abstracting it into concrete mathematical expressions. The common system model is the basic system model [11]. The task model is an abstract description of the attributes, requirements, dependencies, and execution environments of computing tasks, and it guides efficient scheduling and resource allocation in heterogeneous computing systems. Common task models include the classical periodic task model [12], the periodic task model [13] for heterogeneous multi-core processors, the elastic task-scheduling algorithm [14], and the multi-frame task model [15]. Among the task models, the most representative and well-known is the directed acyclic graph (DAG) task model. When building task models for heterogeneous multi-core systems, the most significant advantage of the DAG over the periodic task model is that it can represent both the task overhead and the inter-task dependencies [16]. According to how the system manages scheduling decisions, scheduling frameworks can be divided into centralized and distributed types. Figure 5 presents common task-scheduling frameworks, including NVIDIA Jetson AGX Orin and the Intel Loihi 2 neuromorphic chip. Table 1 presents a comparison of the scheduling frameworks.

Figure 5.

Common task-scheduling frameworks, such as NVIDIA Jetson AGX Orin (a) and Intel Loihi 2 neuromorphic chip (b).

Table 1.

Comparison of scheduling frameworks.
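As an illustration of the directed acyclic graph task model discussed above, the following minimal Python sketch stores the task overhead as node weights (one execution cost per processing unit) and the inter-task dependencies as weighted edges. The class name `TaskGraph`, its methods, and the example task names and costs are hypothetical and chosen only for illustration; they are not taken from any of the cited works.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class TaskGraph:
    """Illustrative DAG task model (hypothetical names and layout)."""
    # cost[task][unit] = execution time of `task` on processing unit `unit`
    cost: dict = field(default_factory=dict)
    # comm[(pred, succ)] = transfer time when pred and succ run on different units
    comm: dict = field(default_factory=dict)

    def add_task(self, name, unit_costs):
        self.cost[name] = dict(unit_costs)

    def add_dependency(self, pred, succ, transfer_time):
        self.comm[(pred, succ)] = transfer_time

    def successors(self, task):
        return [v for (u, v) in self.comm if u == task]

    def topological_order(self):
        """Kahn's algorithm; raises ValueError if the graph contains a cycle."""
        indegree = {t: 0 for t in self.cost}
        for (_, v) in self.comm:
            indegree[v] += 1
        ready = deque(t for t, d in indegree.items() if d == 0)
        order = []
        while ready:
            t = ready.popleft()
            order.append(t)
            for s in self.successors(t):
                indegree[s] -= 1
                if indegree[s] == 0:
                    ready.append(s)
        if len(order) != len(self.cost):
            raise ValueError("task graph contains a cycle")
        return order


# Hypothetical three-task pipeline with made-up costs.
graph = TaskGraph()
graph.add_task("load", {"CPU": 2, "GPU": 5})
graph.add_task("filter", {"CPU": 8, "GPU": 3})
graph.add_task("store", {"CPU": 1, "GPU": 4})
graph.add_dependency("load", "filter", 2)
graph.add_dependency("filter", "store", 1)
print(graph.topological_order())
```

Any valid execution order of the tasks must be a topological order of this graph, which is why most DAG-based schedulers start from such a representation.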

3.1. Centralized Scheduling Framework

The centralized scheduling framework of heterogeneous chips manages the resource allocation and task scheduling of the whole system through a task scheduler. As shown in Figure 5a, NVIDIA Jetson AGX Orin [17] adopts a centralized task-scheduling framework. The central CPU core uniformly coordinates the allocation of hardware resources and dispatches tasks to the GPU, deep learning accelerator, and computer vision modules. The core advantage of this framework design lies in the centralized processing of scheduling decisions, which allows the system administrators or scheduling algorithms to have a global perspective so as to conduct unified resource optimization and task-scheduling decisions [6]. Since all decisions are based on comprehensive system state information, centralized scheduling often enables efficient resource utilization and low task response times.

Although the centralized framework offers a global perspective and unified scheduling decisions, its limitations have become increasingly significant as heterogeneous chip architectures grow more complex and task scale continues to expand. First, the centralized framework scales poorly. Because the central scheduler is the core component of the system, it gradually becomes a performance bottleneck as the number and complexity of tasks grow, which makes it difficult to meet the requirements of efficient scheduling in large-scale heterogeneous computing environments. At the same time, as the system scale expands, the amount of data and the computational complexity handled by the scheduler increase significantly, leading to a marked increase in scheduling delay and system response time. Second, the central scheduler is a single point of failure: once it fails, the task scheduling and resource management of the whole system are paralyzed. Redundancy or backup mechanisms for the central scheduler are therefore often necessary, but they significantly increase the complexity and cost of the system.

3.2. Distributed Scheduling Framework

Compared to centralized scheduling, the distributed scheduling framework of heterogeneous chips decentralizes scheduling responsibilities across multiple scheduling components, which are usually distributed on different physical or logical nodes [7,8]. Each node or component is responsible for scheduling a portion of the resources, which allows the system to process scheduling decisions in parallel at multiple levels and significantly improves its scalability and fault tolerance. As shown in Figure 5b, the Intel Loihi 2 neuromorphic chip [18] is based on a distributed task-scheduling framework. It uses an asynchronous pulse routing protocol to achieve autonomous task negotiation and dynamic load balancing among 128 neural core clusters, and it supports sparse event-driven computing of spiking neural networks. Moreover, because decisions are made locally, distributed scheduling can better adapt to local changes in the system state.

However, the framework has some shortcomings. First, the design and implementation of a distributed scheduling framework are usually accompanied by high communication overhead, especially when frequent synchronization and coordination between nodes are required, which may decrease overall system performance. Second, since each node makes independent decisions, maintaining the consistency and fairness of scheduling on a global scale is a challenge, especially under intense resource competition. In addition, although the distributed framework has some fault tolerance, fault propagation and recovery after a node failure may still affect the stability of the system and the task completion time. Finally, because complex tasks in a heterogeneous chip environment have dependencies, distributed scheduling struggles to optimize the global execution efficiency of tasks comprehensively, and suboptimal solutions can easily occur.

4. Research Status of the Task-Scheduling Algorithm

In this section, we review and compare the well-known scheduling algorithms for heterogeneous chips. The scheduling framework provides the necessary structure and interface for the scheduling algorithm and defines the basic rules and mechanisms of task management and resource allocation. The scheduling algorithm is implemented within this framework to carry out the specific allocation and execution of tasks. Based on decision timing, strategy, goal, and flexibility, task-scheduling algorithms are divided into five categories: (1) the static scheduling algorithm, (2) the dynamic scheduling algorithm, (3) the heuristic scheduling algorithm, (4) the optimized scheduling algorithm, and (5) the hybrid scheduling algorithm. Table 2 summarizes the literature on heterogeneous chip task-scheduling algorithms and compares the different types of scheduling algorithms.

Table 2.

Comparison of task-scheduling algorithms.

4.1. Static Scheduling Algorithm

A static scheduling algorithm is an algorithm in which a heterogeneous chip determines scheduling policies according to predetermined resource allocation and task characteristics before task execution. It suits scenarios where task and resource requirements are fixed and do not change with time. In static scheduling algorithms, the task execution order, resource allocation, and scheduling are predetermined before the program runs, which usually relies on prior analysis of the task and exhaustive knowledge of the system resources. Common static scheduling algorithms include the max–min algorithm [19], the min–min algorithm [19], and the minimum completion time algorithm [8].
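To make the min–min and max–min algorithms mentioned above more concrete, the following Python sketch schedules independent tasks from a table of expected execution times. It is a minimal sketch under stated assumptions: the function name `min_min_schedule`, the `pick_largest` flag, and the example costs are hypothetical, tasks are assumed to have no dependencies, and ties are broken by task name.

```python
def min_min_schedule(etc, pick_largest=False):
    """Min-min list heuristic for independent tasks.

    etc[task][unit] is the expected execution time of `task` on `unit`.
    With pick_largest=True the same loop behaves as the max-min variant.
    Returns (assignment, makespan).
    """
    units = sorted({u for costs in etc.values() for u in costs})
    ready = {u: 0.0 for u in units}      # time at which each unit becomes free
    unscheduled = set(etc)
    assignment = {}
    while unscheduled:
        # For every remaining task, find its minimum completion time and unit.
        best = {}
        for t in unscheduled:
            unit = min(etc[t], key=lambda u: ready[u] + etc[t][u])
            best[t] = (ready[unit] + etc[t][unit], unit)
        # Min-min picks the task with the smallest such time, max-min the largest.
        chooser = max if pick_largest else min
        task = chooser(sorted(best), key=lambda t: best[t][0])
        finish, unit = best[task]
        assignment[task] = unit
        ready[unit] = finish
        unscheduled.remove(task)
    return assignment, max(ready.values())


# Hypothetical expected-execution-time table.
etc = {
    "t1": {"CPU": 4, "GPU": 2},
    "t2": {"CPU": 3, "GPU": 6},
    "t3": {"CPU": 10, "GPU": 3},
}
assignment, makespan = min_min_schedule(etc)
print(assignment, makespan)
```

Because the whole table is known before execution, the schedule can be computed offline, which is the defining property of static scheduling.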

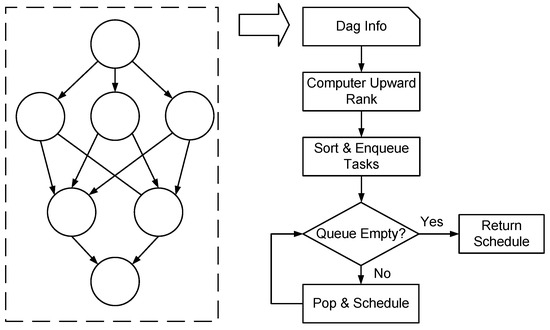

Topcuoglu et al. [4] proposed the heterogeneous earliest-finish-time (HEFT) algorithm. Figure 6 illustrates the process of HEFT list scheduling. HEFT first calculates task priorities and arranges the tasks in descending order of priority. Then, for each task, it selects the processor that achieves the earliest completion time, taking communication overhead into account, to achieve efficient task scheduling in a heterogeneous environment. Bittencourt et al. [20] proposed a variant of the HEFT algorithm that prioritizes tasks by calculating their average execution time and communication cost on each heterogeneous chip processing unit when making the scheduling decision and that considers the successor tasks through a lookahead mechanism to approach the overall optimum. Static scheduling algorithms calculate the optimal or approximately optimal scheduling scheme in advance, which reduces the time overhead of decision-making at runtime and ensures that tasks are performed on a strict schedule. However, most existing static task-scheduling algorithms have disadvantages such as unbalanced task allocation and an excessively long total task execution time [21].

Figure 6.

Illustration of the HEFT list scheduling process [3].
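The two phases of HEFT described above, task prioritization and processor selection, can be sketched in Python as follows. This is a simplified illustration rather than the authors' implementation: it assumes that every task has a positive execution cost on every processing unit, that communication between tasks on the same unit is free, and it appends tasks to the end of each unit's timeline instead of using the insertion-based slot search of the original algorithm. The function name `heft` and the example graph are hypothetical.

```python
def heft(cost, comm):
    """Simplified HEFT list scheduling (no insertion-based slot search).

    cost[task][unit]: execution time of `task` on `unit`.
    comm[(u, v)]: transfer time if u and v run on different units.
    Returns {task: (unit, start, finish)}.
    """
    units = sorted({u for c in cost.values() for u in c})
    succ = {t: [] for t in cost}
    pred = {t: [] for t in cost}
    for (u, v) in comm:
        succ[u].append(v)
        pred[v].append(u)

    # Phase 1: upward rank = average cost + longest remaining path to an exit task.
    avg = {t: sum(c.values()) / len(c) for t, c in cost.items()}
    rank = {}

    def upward_rank(t):
        if t not in rank:
            rank[t] = avg[t] + max(
                (comm[(t, s)] + upward_rank(s) for s in succ[t]), default=0.0)
        return rank[t]

    order = sorted(cost, key=lambda t: (-upward_rank(t), t))

    # Phase 2: place each task on the unit that gives the earliest finish time.
    free_at = {u: 0.0 for u in units}
    schedule = {}
    for t in order:
        best = None
        for u in units:
            data_ready = max(
                (schedule[p][2] + (0.0 if schedule[p][0] == u else comm[(p, t)])
                 for p in pred[t]), default=0.0)
            start = max(free_at[u], data_ready)
            finish = start + cost[t][u]
            if best is None or finish < best[2]:
                best = (u, start, finish)
        schedule[t] = best
        free_at[best[0]] = best[2]
    return schedule


# Hypothetical four-task diamond graph with made-up costs.
cost = {
    "A": {"CPU": 3, "GPU": 5},
    "B": {"CPU": 6, "GPU": 2},
    "C": {"CPU": 4, "GPU": 4},
    "D": {"CPU": 2, "GPU": 3},
}
comm = {("A", "B"): 1, ("A", "C"): 2, ("B", "D"): 1, ("C", "D"): 1}
schedule = heft(cost, comm)
for task, (unit, start, finish) in schedule.items():
    print(task, unit, start, finish)
```

With positive execution costs, a predecessor always has a strictly larger upward rank than its successors, so processing tasks in descending rank order respects all dependencies.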

4.2. Dynamic Scheduling Algorithm

The dynamic scheduling algorithm is a scheduling method that adjusts task allocation strategy in real time according to heterogeneous chip status. It is mainly used to deal with resource requirements and workload changes during the execution process. Chen et al. proposed an online real-time task-scheduling algorithm specifically designed for real-time scheduling of dynamic workloads, especially in terms of task priority management and system utilization optimization [22]. The scheduling mechanism is based on the framework of a dual-core heterogeneous system, which uses different scheduling methods to handle the task-order relationship and non-pre-emptive task execution problems between the main and collaborative processors.

Mandal et al. proposed a dynamic resource management algorithm based on imitation learning [23]. It dynamically adjusts task allocation and resource utilization according to the state of the heterogeneous chip at runtime, mainly the frequency, number, and type of processing unit cores. The scheduling mechanism constructs oracle policies through imitation learning and uses linear regression and regression trees to generate low-overhead online control policies. Mack et al. extended a modified HEFT algorithm with features that adapt to dynamic runtime environments, including task merging, runtime task constraints, and dynamic dependency processing [2]. Another problem arises when the processes with active memory allocations, which should all reside in physical memory, have a total size that exceeds the physical memory. In such cases, the operating system starts swapping pages in and out of memory on every context switch. Reuven et al. minimized this thrashing by splitting the processes into several bins using bin-packing approximation algorithms [24].
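The idea of splitting processes into memory-sized bins can be illustrated with first-fit decreasing, a classical bin-packing approximation heuristic. The source does not state which approximation algorithm was used in [24], so the choice of first-fit decreasing, the function name `first_fit_decreasing`, and the example sizes are assumptions made only for this sketch.

```python
def first_fit_decreasing(process_sizes, memory_capacity):
    """Group processes into bins whose total size fits in physical memory.

    process_sizes: {process_name: memory_size}
    Returns a list of bins, each a list of process names.
    """
    bins = []  # each bin: {"free": remaining capacity, "procs": [names]}
    # Largest processes first; ties broken by name for determinism.
    for name, size in sorted(process_sizes.items(), key=lambda kv: (-kv[1], kv[0])):
        if size > memory_capacity:
            raise ValueError(f"process {name!r} alone exceeds memory capacity")
        for b in bins:
            if size <= b["free"]:      # first bin with enough room
                b["procs"].append(name)
                b["free"] -= size
                break
        else:                          # no existing bin fits: open a new one
            bins.append({"free": memory_capacity - size, "procs": [name]})
    return [b["procs"] for b in bins]


# Hypothetical process sizes and memory capacity in arbitrary units.
sizes = {"p1": 6, "p2": 5, "p3": 4, "p4": 3, "p5": 2}
groups = first_fit_decreasing(sizes, memory_capacity=10)
print(groups)
```

Processes in the same bin fit in memory together, so a scheduler that runs one bin at a time avoids paging between context switches within that bin.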

4.3. Heuristic Scheduling Algorithm

Heuristic scheduling algorithms rely on empirical rules or simplified problem models and aim to find approximate solutions quickly. They seek an approximately optimal scheduling scheme by simulating natural processes or applying statistical principles, which makes them suitable for NP-hard scheduling problems. Because different processing units in a heterogeneous environment admit many feasible task assignments, heuristic and meta-heuristic algorithms can explore several feasible schedules in a reasonable time and find a good balance between performance and cost. The goal of a heuristic scheduling algorithm is to find a solution to the problem at hand within an acceptable time. Heuristic methods are usually more flexible and adaptable, so they respond better to environments that change in real time and to incomplete information. Common heuristic algorithms can be roughly divided into three categories: list scheduling algorithms, cluster-based scheduling algorithms, and task replication-based scheduling algorithms [25].

Sakellariou et al. used list-scheduling techniques to rank the nodes in the DAG and then grouped these nodes so that the tasks in each group can be scheduled independently [26]. They also proposed a new weight calculation method that aims to reduce the differences in scheduling results caused by different weight calculation methods. Sulaiman et al. combined a genetic algorithm with a list-based approach [27]; the convergence of the algorithm is accelerated by introducing two guiding chromosomes to improve the initial population of the genetic algorithm. Sung et al. used deep learning for task ordering, relying on a real-time heterogeneous chip scheduling simulator in the design process and on two novel neural network design features, hierarchical operation and task graph embedding, to improve task execution performance [28]. However, the rules of heuristic scheduling algorithms for heterogeneous chips cannot fully cover all possible situations, which can result in severe performance degradation in the face of some dynamic changes.

4.4. Optimized Scheduling Algorithm

Optimization-based task-scheduling algorithms usually adopt mathematical models and optimization techniques, such as linear programming and integer programming, to determine the optimal scheduling scheme [29]. For heterogeneous chips, they represent a mathematically rigorous approach to task allocation and execution sequencing, in which the scheduling problem is formulated as a constrained optimization model to systematically minimize objectives such as makespan, energy consumption, or resource contention. Implementation typically involves three stages: abstracting tasks and hardware into computational graphs and capability matrices; formulating objectives with constraints on precedence, resource limits, and processing element (PE) compatibility; and deploying solvers such as branch-and-bound for exact solutions or swarm intelligence for scalability. Compared with heuristic scheduling algorithms, optimization-based methods improve the scheduling result through rigorous mathematical modeling, provided that sufficient computational resources are available. Under these conditions, optimization-based scheduling algorithms can provide a globally optimal solution.
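The three stages above can be illustrated on a deliberately small instance. The following Python sketch uses a capability matrix in which a missing entry means that a task is incompatible with a PE, takes the makespan as the objective, and solves the problem exactly with a depth-first branch-and-bound search. To keep the example short, tasks are assumed to be independent (no precedence constraints); the function name `branch_and_bound` and all costs are hypothetical.

```python
def branch_and_bound(cost):
    """Exact makespan minimization for independent tasks on heterogeneous PEs.

    cost[task][pe] = execution time; a PE missing from cost[task] is incompatible.
    Returns (best_makespan, assignment).
    """
    # Branch on the most expensive tasks first so that the bound tightens early.
    tasks = sorted(cost, key=lambda t: -min(cost[t].values()))
    pes = sorted({p for c in cost.values() for p in c})
    best = {"makespan": float("inf"), "assignment": None}
    load = {p: 0.0 for p in pes}
    current = {}

    def search(i):
        # Bound: the load of a partial assignment can only grow, so prune
        # any branch that already matches or exceeds the best known makespan.
        if max(load.values()) >= best["makespan"]:
            return
        if i == len(tasks):
            best["makespan"] = max(load.values())
            best["assignment"] = dict(current)
            return
        t = tasks[i]
        # Try compatible PEs, most promising (lowest resulting load) first.
        for p in sorted(cost[t], key=lambda p: load[p] + cost[t][p]):
            load[p] += cost[t][p]
            current[t] = p
            search(i + 1)
            load[p] -= cost[t][p]
            del current[t]

    search(0)
    return best["makespan"], best["assignment"]


# Hypothetical capability matrix; "t4" cannot run on the GPU.
cost = {
    "t1": {"CPU": 4, "GPU": 2},
    "t2": {"CPU": 3, "GPU": 6},
    "t3": {"CPU": 10, "GPU": 3},
    "t4": {"FPGA": 5, "CPU": 9},
}
makespan, assignment = branch_and_bound(cost)
print(makespan, assignment)
```

The search is exact but its worst-case running time grows exponentially with the number of tasks, which reflects the trade-off between solution quality and computational cost that characterizes optimization-based scheduling.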

Diwan et al. proposed a classification-based scheduling algorithm with minimal hardware overhead for scenarios involving many instruction set architectures (ISAs) [30]. It uses a smaller set of microarchitectural parameters obtained via online performance counters to determine the ISA with the highest affinity for upcoming program phases. Nikseresht et al. modeled the tasks and the heterogeneous chip, represented the task graph with the Kahn process network model [31], and assigned tasks to processing units while considering their execution time and memory access time. The task-scheduling scheme was then encoded as chromosomes, and new populations were generated by genetic operators, with parent chromosomes selected according to different rules to produce offspring chromosomes. Finally, the chromosomes were evaluated according to the defined fitness function, and the Pareto optimal solution was obtained as the final scheduling scheme. Suhendra et al. proposed a method that models the architecture (including processor, bus, memory, etc.) and the task graph (including task execution time, data dependence, traffic, etc.) [32]. They formulated the mapping of tasks to heterogeneous chip processing units as an integer linear programming (ILP) problem, represented the task assignment through decision variables, and solved for the task-scheduling scheme according to the constraints and objective function. The ILP formulation was then extended to handle pipeline scheduling, considering constraints such as pipeline stage assignments and communication tasks to optimize the overall performance. However, the complexity of this approach grows exponentially with the problem size and heterogeneous chip complexity, and solving it requires significant computational resources, so practical applications must trade off optimization quality against computational cost.
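The notion of a Pareto optimal solution used in the multi-objective genetic approach above can be made concrete with a small non-dominated filter. In the following Python sketch, each candidate schedule is scored on two objectives that are both minimized; the function name `pareto_front`, the candidate names, and the objective values are hypothetical and do not come from [31].

```python
def pareto_front(candidates):
    """Return the names of non-dominated candidates.

    candidates: {name: (objective_1, objective_2, ...)}, all objectives minimized.
    """
    def dominates(a, b):
        # a dominates b if it is no worse everywhere and strictly better somewhere.
        return (all(x <= y for x, y in zip(a, b))
                and any(x < y for x, y in zip(a, b)))

    return sorted(
        name for name, obj in candidates.items()
        if not any(dominates(other, obj) for other in candidates.values()))


# Hypothetical schedules scored as (execution time, memory access time).
candidates = {
    "s1": (10, 50),
    "s2": (12, 40),
    "s3": (11, 55),
    "s4": (15, 35),
    "s5": (12, 45),
}
front = pareto_front(candidates)
print(front)
```

In a genetic algorithm, such a filter is typically applied to each generation so that only schedules offering a distinct trade-off between the objectives are retained.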

4.5. Hybrid Scheduling Algorithm

The hybrid scheduling algorithm uses a combination of different algorithms to determine the optimal solution. The algorithm considers not only the computational load and data dependence of the task but also the performance characteristics of the processor, the energy consumption requirements, and the load balance of the system. A hybrid scheduling method usually includes four key components: task feature analysis, system state monitoring, a task processor matching algorithm, and scheduling decision execution. Through the coordinated work of these components, the hybrid scheduling method can optimize energy consumption and improve the overall efficiency of the system [33] while ensuring the system’s performance.

Peng et al. proposed a multi-application scheduling algorithm [34] that takes a hybrid approach with two stages, neural network-based task priority scheduling and heuristic-based task mapping, to handle processor task scheduling and resource allocation. Taheri et al. proposed a two-stage hybrid task-scheduling algorithm specifically for heterogeneous chips [35]. This algorithm first decomposes the input task graph using optimization-based spectral partitioning techniques and then schedules tasks using a heuristic genetic algorithm to minimize power consumption and execution time. Abdi et al. combined heuristic techniques (decision-making through fuzzy logic and neural networks) with dynamic scheduling [36]. Their scheduler first trains a fuzzy neural network through a multi-objective evolutionary algorithm and then uses the trained network as an online scheduler to optimize the key performance indicators of the multi-core system in real time. Tasks are mapped to the most appropriate heterogeneous chip processing unit by fuzzy rules and dynamic voltage and frequency adjustment.

Hybrid scheduling algorithms can combine the advantages of various algorithms to effectively deal with the heterogeneity of tasks and processing units and improve the flexibility and adaptability of heterogeneous chip task scheduling. However, such algorithms usually have high computational complexity and fail to fully consider many complex factors in real scenarios, such as bus conflict and dynamic changes in task execution time.

5. Research Status of Experiment

This section elaborates on the experiment of a heterogeneous chip task-scheduling method structured around three principal components. First, we introduce the experimental dataset supporting heterogeneous chip task-scheduling research. Secondly, we present the detailed experimental methodology implemented to validate the proposed approach. Finally, we present the common evaluation metrics for systematic performance assessment.

5.1. Dataset

This paper describes the datasets used in the task-scheduling method of heterogeneous chips, which are divided into two categories: real-world workloads and simulated synthetic datasets. Table 3 compares common datasets for task-scheduling methods.

Table 3.

Common datasets for task-scheduling methods.

5.1.1. Real Load Dataset

Real loads are tasks and workloads recorded from heterogeneous chips for applications such as scientific computing, data analysis, image processing, and machine learning. For example, ref. [37] deployed six different applications from the wireless communication and radar fields to the heterogeneous chip Xilinx Zynq ZCU102 FPGA development board and the Odroid XU3 development board to collect data, as shown in Table 4. The real load is highly representative and of practical application value. The real load includes task characteristics from different fields, which cover the resource requirements, computing load, data transmission mode, and task dependencies in the actual operation, so it can provide a more realistic evaluation basis for the task-scheduling algorithm.

Table 4.

An example of the real load dataset [37].

However, because real load data originate from specific scenarios, their characteristics and distribution are scenario-specific, and it is difficult to extrapolate them directly to heterogeneous chips in other application scenarios. At the same time, data privacy and security constrain the use of real load datasets. Such data may contain critical business information and private data of heterogeneous chip users, so sharing them poses a threat to data security. In addition, because of the scope, conditions, and cost of data collection, real load datasets are inherently limited in volume and diversity, and they can hardly cover all possible load patterns.

5.1.2. Synthetic Datasets

Synthetic datasets are generated by algorithms that imitate specific types of workloads. They allow the investigator to control the parameters of the load and thus test the performance of the system in extreme or special cases. Synthetic datasets are of great value for studying system limit performance, verifying theoretical models, and developing new scheduling methods. In experiments on heterogeneous chip task scheduling, a synthetic dataset can be customized to the research purpose, simulate various task scenarios and data distributions, and support targeted tests of specific task-scheduling algorithms or extreme situations.

For example, Cordeiro et al. [38] proposed an improved layer-by-layer method for generating random directed acyclic graph (DAG) task sets. Yao et al. [39] used the open-source DAG generation software Daggen (https://github.com/frs69wq/daggen) [40] to generate random DAGs. Synthetic datasets are highly customizable and can simulate a wide range of situations, which helps evaluate the performance of task-scheduling algorithms under extreme conditions. However, a synthetic dataset is built on assumptions and simulation, and there is a gap between its data generation mode and real-world application scenarios, so it is difficult to cover comprehensively the complex task types, data characteristics, and user behavior patterns found in practice. Therefore, experimental results obtained on synthetic datasets carry limited confidence.
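
As an illustration of layer-by-layer generation, the following minimal Python sketch builds a random DAG in which edges only run from earlier layers to later ones. It is not the exact procedure of [38] or of Daggen; the function name `random_layered_dag` and the parameters `num_layers` and `edge_prob` are hypothetical and chosen only for this example.

```python
import random


def random_layered_dag(num_tasks, num_layers, edge_prob, seed=None):
    """Return (layers, edges) for a random DAG built layer by layer.

    Assumption for this sketch: edges only go from an earlier layer to a
    later one, which makes the graph acyclic by construction.
    """
    if not 1 <= num_layers <= num_tasks:
        raise ValueError("need 1 <= num_layers <= num_tasks")
    rng = random.Random(seed)

    # Give every layer one task, then scatter the remaining tasks at random.
    layer_of = list(range(num_layers))
    layer_of += [rng.randrange(num_layers) for _ in range(num_tasks - num_layers)]
    layer_of.sort()  # task ids now increase with the layer index
    layers = [[t for t, l in enumerate(layer_of) if l == k]
              for k in range(num_layers)]

    edges = set()
    for k in range(1, num_layers):
        earlier = [t for layer in layers[:k] for t in layer]
        for task in layers[k]:
            # Connect to each task in an earlier layer with probability edge_prob.
            for pred in earlier:
                if rng.random() < edge_prob:
                    edges.add((pred, task))
            # Guarantee one predecessor in the previous layer so that the
            # task really sits in layer k.
            if not any((p, task) in edges for p in layers[k - 1]):
                edges.add((rng.choice(layers[k - 1]), task))
    return layers, sorted(edges)


layers, edges = random_layered_dag(num_tasks=10, num_layers=4, edge_prob=0.3, seed=0)
```

Raising `edge_prob` produces denser dependency graphs, and the ratio of `num_layers` to `num_tasks` controls how much parallelism the generated workload exposes.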

5.1.3. Standard Benchmark

Standard benchmark datasets consist of a series of standard test programs designed to evaluate the performance of heterogeneous chips. These benchmarks provide a unified way to compare performance across different systems; examples include the DSP-oriented benchmarking methodology [41], the Princeton Application Repository for Shared-Memory Computers [42], Rodinia [43], and the HPC Challenge Benchmark [44]. They offer a range of programs and tasks for assessing performance in a heterogeneous parallel computing environment and are often used to test scheduling algorithms for heterogeneous computing systems.

Compared with real workload data or synthetic datasets, standard benchmarks are easy to implement, offer higher repeatability and universality, and help ensure the fairness and reliability of algorithm evaluation. Because every method is measured against the same reference, results from different methods can be compared consistently. However, standard benchmarks also have limitations. They often fail to reflect the complexity and dynamic changes of real-world task scheduling, especially in highly heterogeneous computing environments, where they may not cover all potential load patterns and hardware configurations. Therefore, standard benchmarks are suitable for preliminary evaluation and algorithm comparison, but they must be combined with real load or synthetic datasets to cope with different practical scenarios and requirements.

5.2. Experimental Methods

Experimental methods play an important role in research on heterogeneous chip task scheduling because they are the core means of evaluating the performance of a method accurately. A carefully designed experimental environment can simulate real or diversified application scenarios with comprehensive and reasonable parameters, and it can measure key performance indicators, such as the execution efficiency of the task-scheduling algorithm under different conditions.

Depending on the object under test, experimental methods can be subdivided into model-in-loop (MIL), software-in-loop (SIL), and hardware-in-loop (HIL) simulation. MIL and SIL model the actual hardware resource management and task-scheduling method mathematically, in part or in full, so the effectiveness of a scheduling method can be verified without deploying it on actual hardware. This not only improves the efficiency and flexibility of testing but also significantly reduces development cost. By simulating various possible operational scenarios, developers can optimize task allocation and resource utilization strategies at the software level and identify and resolve potential performance bottlenecks and scheduling conflicts in advance. MIL and SIL are mainly applied in the detailed design stage of the software to test the functions of heterogeneous chips. HIL is conducted on the system on chip as a final-stage test that verifies the actual performance of the task-scheduling method by evaluating the heterogeneous chip under real load. As more of the real test object is integrated, the confidence of the test results increases, and so does the cost. Table 5 compares the characteristics of these experimental methods.

Table 5.

Comparison of experimental methods.

5.2.1. Model-in-Loop

MIL simulation is a closed-loop, purely numerical experimental method that connects the algorithm model with the controlled object model. This approach uses mathematical modeling to simulate heterogeneous chip behavior and scheduling methods, enabling developers to predict and optimize the performance of the system prior to heterogeneous chip development. In MIL simulation, commonly used models include DAG models, which represent the dependencies between tasks and their execution order and thus support the verification of complex scheduling logic. Moreover, queueing-theory models are widely used to simulate task scheduling and resource allocation strategies and to evaluate system performance and resource bottlenecks under different approaches.

MIL simulations are usually implemented in powerful numerical computing and data processing environments such as MATLAB 2023 or Python 3.6 [45]. MATLAB is particularly favored for its extensive engineering support and built-in simulation framework, while Python is widely used for more customized simulation requirements because of its flexibility and rich library support (such as NumPy 1.8.1 and SciPy 1.15.0). With these tools, developers can quickly build models, run simulations, and analyze results, thus accelerating the development cycle and optimizing the overall performance of heterogeneous chip task scheduling.
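
To make the idea of a DAG-based model concrete, the sketch below simulates a simple list scheduler in pure Python. It is an illustrative model only, not a method from the surveyed papers: it assumes tasks are numbered in topological order, ignores communication cost, and greedily places each task on the processing unit that finishes it earliest. The names `simulate_schedule`, `cost`, and `preds` are hypothetical.

```python
def simulate_schedule(cost, preds):
    """Greedy earliest-finish-time placement on a DAG model.

    cost[t][p]: execution time of task t on processing unit p.
    preds[t]:   tasks that must finish before task t may start.
    Assumption: tasks are numbered in topological order and data
    transfers between units are free.
    """
    num_pus = len(cost[0])
    pu_free = [0.0] * num_pus          # time at which each unit becomes idle
    finish = [0.0] * len(cost)         # finish time of each task
    placement = [None] * len(cost)     # unit chosen for each task

    for t in range(len(cost)):
        # A task is ready once all of its predecessors have finished.
        ready = max((finish[p] for p in preds[t]), default=0.0)
        # Choose the unit on which this task would finish earliest.
        best = min(range(num_pus),
                   key=lambda p: max(ready, pu_free[p]) + cost[t][p])
        start = max(ready, pu_free[best])
        finish[t] = start + cost[t][best]
        pu_free[best] = finish[t]
        placement[t] = best
    return placement, finish, max(finish)


# Diamond-shaped task graph (0 -> 1, 0 -> 2, 1 -> 3, 2 -> 3) on two units.
cost = [[2, 3], [4, 2], [3, 3], [2, 1]]
preds = [[], [0], [0], [1, 2]]
placement, finish, span = simulate_schedule(cost, preds)
```

Because the whole model is a few lists and a loop, alternative placement rules can be swapped in and compared long before any hardware or detailed simulator is available, which is the main appeal of MIL.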

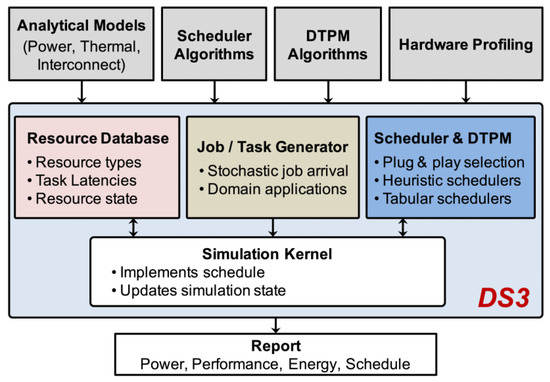

5.2.2. Software-in-Loop

SIL simulation emulates heterogeneous chips in software, so researchers can test a scheduling method without actual hardware. Common software-in-loop simulators include the domain-specific system-on-chip simulator (DS3) [37], Multi2Sim [46], Gem5 [47], and Simics [48]. The advantages of such platforms are their low cost, high flexibility, and the ability to quickly iterate on and test different scheduling methods. The main drawback, however, is that not all features of the hardware can be reproduced accurately.

Figure 7 illustrates the DS3 lifecycle from job generation to task execution. First, the job generator injects jobs into the job queue at an average inter-arrival time drawn from a user-defined distribution and loads the related tasks into the corresponding task queues. Next, in each scheduling cycle the simulator calls the scheduler, which selects tasks from the ready queue and maps them to idle processing units based on the information in the resource database. The resource database stores the list of processing units together with the computational cost and power consumption of the application tasks. During this process, the remaining tasks in the executable queue can be reloaded into the ready queue and scheduled again. Once a task is completed, it is moved to the completed queue. DS3 can simulate benchmark applications, modeled as directed acyclic graphs, to evaluate the performance of different scheduling algorithms on target heterogeneous chip platforms.

Figure 7.

General mechanism of simulation platform DS3, which describes the inputs and key functional components to perform rapid design space exploration and validation [37].
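
The queue-based lifecycle described above can be sketched as a small discrete-event simulation. This is a simplified illustration and does not use the DS3 code base or its API: the function `run_simulation`, the FIFO scheduling policy, and the `exec_time` callback (standing in for the resource database) are assumptions made for this example.

```python
import heapq
from collections import deque


def run_simulation(jobs, num_pus, exec_time):
    """Simulate job arrival, ready queue, execution, and completion.

    jobs:      list of (arrival_time, {task: [predecessor tasks]}).
    exec_time: callable (task, pu) -> duration; a stand-in for a
               resource database lookup (hypothetical).
    Returns the completed queue as [(finish_time, job_id, task), ...].
    """
    events = []                      # heap of (time, seq, kind, payload)
    seq = 0                          # unique tie-breaker for the heap
    for job_id, (arrival, graph) in enumerate(jobs):
        heapq.heappush(events, (arrival, seq, "arrive", (job_id, graph)))
        seq += 1

    outstanding = {}                 # (job, task) -> unfinished predecessors
    ready = deque()                  # tasks whose dependencies are met
    idle = list(range(num_pus))      # idle processing units
    completed = []

    while events:
        now, _, kind, payload = heapq.heappop(events)
        if kind == "arrive":
            job_id, graph = payload
            for task, preds in graph.items():
                if preds:
                    outstanding[(job_id, task)] = set(preds)
                else:
                    ready.append((job_id, task))
        else:                        # a task finished on some unit
            job_id, task, pu = payload
            completed.append((now, job_id, task))
            idle.append(pu)
            # Release successors whose last dependency just finished.
            for key in list(outstanding):
                if key[0] == job_id and task in outstanding[key]:
                    outstanding[key].discard(task)
                    if not outstanding[key]:
                        del outstanding[key]
                        ready.append(key)
        # Scheduler step (assumed FIFO): map ready tasks to idle units.
        while ready and idle:
            job_id, task = ready.popleft()
            pu = min(idle)
            idle.remove(pu)
            done = now + exec_time(task, pu)
            heapq.heappush(events, (done, seq, "finish", (job_id, task, pu)))
            seq += 1
    return completed


graph = {"a": [], "b": ["a"], "c": ["a"], "d": ["b", "c"]}
log = run_simulation([(0, graph)], num_pus=2, exec_time=lambda task, pu: 1.0)
```

Replacing the FIFO step with another policy is the only change needed to compare schedulers on the same injected workload, which mirrors how such platforms are used for design space exploration.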

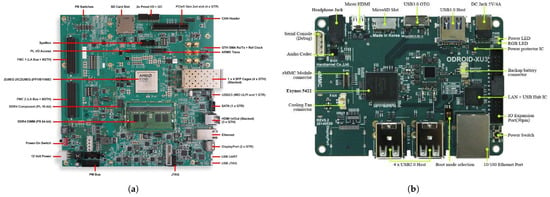

5.2.3. Hardware-in-Loop

HIL tests task-scheduling methods directly on a heterogeneous chip, such as a system on chip that integrates a CPU, GPU, and FPGA. HIL provides the test environment closest to practical applications, which allows researchers to test and optimize task-scheduling methods on the system on chip itself. Figure 8 presents common systems on chip used in HIL, including the Xilinx Zynq UltraScale+ ZCU102 FPGA and the Odroid-XU3 board. Examples include the Texas Instruments Digital Video Evaluation Module development board [49]; Chen et al. [22] and Mandal et al. [23] performed data characterization and experimental evaluation on an Odroid-XU3 board [50] running the Ubuntu operating system. Goksoy et al. [51] carried out hardware-in-loop experiments on a Xilinx Zynq UltraScale+ ZCU102 FPGA [52].

Figure 8.

Common hardware-in-loop platforms, such as the Xilinx Zynq UltraScale+ ZCU102 FPGA (a) and the Odroid-XU3 board (b).

HIL genuinely reflects the performance of task-scheduling methods on heterogeneous chips. The data and results obtained are highly authentic and reliable and accurately capture the details of the interaction between the algorithm and the hardware. However, the HIL process is highly complex and involves careful setup and precise control of the heterogeneous chip hardware platform. In addition, HIL is expensive because it requires the purchase of special heterogeneous chip testing equipment and tools. Hardware energy consumption, equipment wear, and manpower add further substantial costs.

5.3. Evaluation Metric

This section introduces seven categories of common evaluation metrics for heterogeneous chip task-scheduling methods, as shown in Table 6. The following is a detailed analysis of how different scheduling methods are compared against these metrics:

Table 6.

Comparison of evaluation metrics.

- Complexity: Complexity measures the cost of the algorithm in terms of computing resources, time, space, etc. The commonly used evaluation indicators include execution time and maximum completion time (makespan). Execution time is the time required by the task-scheduling algorithm to generate a schedule for a given application graph [27]. Makespan [53] refers to the total time required to complete a series of tasks or assignments, with the formula shown below.

  $$\text{makespan} = \max\{AFT(n_{exit})\}$$

  where $AFT(n_{exit})$ is the actual completion time of the DAG exit task.

- Performance awareness: Performance awareness means that the scheduling algorithm takes the maximization of overall system performance into account when making decisions. A commonly used evaluation metric is the speedup [54]. The speedup is the ratio of the sequential execution time of the tasks to the worst response time, and it indicates how much the current algorithm accelerates a task schedule. The formula is shown below.

  $$\text{Speedup} = \frac{T_{seq}}{T_{wr}}$$

  where $T_{seq}$ represents the time overhead of sequential task execution and $T_{wr}$ is the worst response time of the schedule. To reduce the influence of chance, the results are averaged over multiple datasets.

- Optimality: Optimality evaluates whether the scheduling algorithm can find an optimal or near-optimal schedule for heterogeneous chips under the given constraints. One of the common evaluation indicators is the scheduling length ratio (SLR). SLR is the ratio [55] of the makespan to the theoretical optimal scheduling length. The formula is defined as follows:

  $$SLR = \frac{\text{makespan}}{\sum_{n_i \in CP_{MIN}} \min_{p_j} \{ w_{i,j} \}}$$

  where $w_{i,j}$ is the computational cost of task $n_i$ on processing unit $p_j$, and the denominator is the sum of the minimum computational costs of the tasks belonging to the critical path, called $CP_{MIN}$.

- Power awareness: Power awareness monitors and manages the instantaneous energy consumption of the device. It is designed to reduce the peak power demand of the device during operation [53]. The goal of this metric is to minimize overall parameters such as energy [56] or the energy-delay product (EDP) [57]. The EDP provides a compromise measure by considering both energy consumption and execution time. The EDP formula is defined as follows:

  $$EDP = E \times T$$

  where $E$ represents the total energy consumption of the whole system and $T$ is the total execution time of the whole cluster system.

- Energy awareness: Energy awareness focuses on the total energy consumption of heterogeneous chips over a period of time. The goal of this approach is to reduce long-term energy consumption and overall energy costs [56] by improving energy efficiency. In task scheduling, resource allocation, and system design, how to minimize energy consumption while completing the necessary computing tasks is an important consideration. The commonly used indicators are average power consumption, total energy consumption, and real energy consumption RE(i, j) [29]. The real energy consumption RE(i, j) of a task i on processing unit j can be defined as

  $$RE(i, j) = x_{i,j} \cdot E_{i,j}$$

  where $x_{i,j}$ is a binary variable that denotes whether task i executes on processing unit j, and $E_{i,j}$ represents the energy consumption of task i on processing unit j.

- Online adaptation: Online adaptability evaluates the ability of a heterogeneous chip to dynamically adapt to task changes during operation, especially the response ability during task arrival, system load, or resource status changes [22]. Common indicators include the task migration time, scheduling delay, and throughput [58]. Throughput refers to the number of tasks that the scheduling algorithm performs in a unit of time. Online adaptability of the algorithm can be evaluated by observing the change in throughput with increasing task volume [54].

- Load balancing: Load balancing refers to the reasonable allocation of workload among the processing units of the heterogeneous chip so that no single processing unit becomes a performance bottleneck due to overload and no processing unit sits idle. One of the commonly used evaluation indicators is the degree of relaxation, called Slack. Slack reflects the size of the time window that a schedule provides for absorbing computation delays without increasing the makespan [59]. The Slack and SLR measures conflict with each other, and a lower SLR generally implies a lower Slack. The formula of Slack is defined as follows:

  $$Slack = \frac{\sum_{i=1}^{n} \left( M - b_{level}(n_i) - t_{level}(n_i) \right)}{n}$$

  where $M$ is the makespan, $n$ is the number of task nodes, $t_{level}(n_i)$ is the length of the longest path from the entry node to task node $n_i$, and $b_{level}(n_i)$ is the length of the longest path from task node $n_i$ to the termination node.
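
The metrics above can be computed directly from a schedule. The following Python sketch is illustrative only: the function names are hypothetical, tasks are assumed to be numbered in topological order, the top and bottom levels are computed on the task graph alone (ignoring any extra ordering imposed by sharing a processing unit), and the bottom level is assumed to include the task's own execution time.

```python
def makespan(finish_times, exit_tasks):
    """Latest actual finish time among the DAG exit tasks."""
    return max(finish_times[t] for t in exit_tasks)


def speedup(sequential_time, response_time):
    """Sequential execution time divided by the schedule's response time."""
    return sequential_time / response_time


def slr(cost, critical_path, span):
    """Makespan over the sum of minimum costs along the critical path."""
    return span / sum(min(cost[t]) for t in critical_path)


def edp(total_energy, total_time):
    """Energy-delay product."""
    return total_energy * total_time


def levels(duration, preds):
    """Top level (excludes the task) and bottom level (includes it)."""
    n = len(duration)
    succs = [[] for _ in range(n)]
    for t in range(n):
        for p in preds[t]:
            succs[p].append(t)
    t_level = [0.0] * n
    for t in range(n):
        t_level[t] = max((t_level[p] + duration[p] for p in preds[t]),
                         default=0.0)
    b_level = [0.0] * n
    for t in reversed(range(n)):
        b_level[t] = duration[t] + max((b_level[s] for s in succs[t]),
                                       default=0.0)
    return t_level, b_level


def slack(span, t_level, b_level):
    """Average spare time per task before the makespan would grow."""
    n = len(t_level)
    return sum(span - b_level[i] - t_level[i] for i in range(n)) / n


# Hypothetical four-task diamond graph scheduled on two processing units.
cost = [[2, 3], [4, 2], [3, 3], [2, 1]]      # cost[task][unit]
preds = [[], [0], [0], [1, 2]]
duration = [2, 2, 3, 1]                      # cost on the unit actually used
finish = [2, 4, 5, 6]

span = makespan(finish, exit_tasks=[3])
t_level, b_level = levels(duration, preds)
metrics = {
    "makespan": span,
    "speedup": speedup(min(sum(row[p] for row in cost) for p in range(2)), span),
    "slr": slr(cost, critical_path=[0, 2, 3], span=span),
    "edp": edp(total_energy=12.0, total_time=span),
    "slack": slack(span, t_level, b_level),
}
```

In this usage example the sequential time is taken as the best single-unit execution time and the total energy of 12.0 is a made-up figure; both are assumptions for illustration rather than definitions from the cited works.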

6. Discussion

6.1. Challenges and Limitations

The current research on scheduling frameworks for heterogeneous chips faces multifaceted challenges and limitations across both centralized and distributed paradigms. Centralized architectures inherently grapple with scalability bottlenecks due to single-point decision-making, where the exponential growth of scheduling latency under large-scale task queues severely constrains their practical deployment in modern many-core systems. This is further compounded by the curse of dimensionality in modeling heterogeneous resources, as contemporary architectures integrating GPU, FPGA, and domain-specific accelerators require joint optimization across 50+ hardware features, which leads to computationally intractable solution spaces. Distributed frameworks introduce new complexities through the global–local optimization paradox, where local node autonomy frequently conflicts with system-wide efficiency. Both paradigms struggle with dynamic environment adaptability, particularly in handling workload fluctuations and temporal task dependencies, while the energy–latency trade-off remains fundamentally unresolved. Emerging approaches exploring hybrid architectures and digital twin-enhanced scheduling show promise but face validation challenges in extreme heterogeneity scenarios.

Existing static, heuristic, and optimized scheduling algorithms often target heterogeneous chips for specific applications and lack the versatility and flexibility needed to adapt to rapidly evolving and diverse hardware architectures. At the same time, it is difficult to balance performance optimization and energy efficiency in practice, especially under large-scale and complex task loads. Because static algorithms adapt poorly to dynamic changes, they are increasingly unable to meet demand. In contrast, hybrid and dynamic task-scheduling algorithms have more development potential. Hybrid algorithms can combine the advantages of several algorithms to handle the complex task processing and load-balancing problems of heterogeneous chips, while dynamic algorithms adapt better to environmental changes during operation and handle the actual task load and dependence relationships. Both can usually meet multi-objective task-scheduling requirements of heterogeneous chips, such as power management and load balancing. However, their time overhead is also considerably higher.

In addition, memory management considerations critically influence scheduling methods in heterogeneous chips by imposing constraints on data locality, access latency, and bandwidth partitioning across disparate memory hierarchies, necessitating adaptive scheduling strategies that optimize task-to-core mappings while balancing inter-kernel dependencies and memory coherence overhead. The interplay between dynamic memory allocation patterns and spatial–temporal resource contention further compels schedulers to co-design memory-aware prioritization heuristics and pre-emptive migration policies, ensuring both resource efficiency and computational throughput across heterogeneous processing units.

Current research on heterogeneous chip task-scheduling methods lacks a unified evaluation standard. The existing evaluation indicators mainly focus on traditional aspects such as task completion time, resource utilization, and load balancing. Although these indicators reflect basic performance, they often fail to reveal the comprehensive performance of task scheduling in complex and heterogeneous environments. There are also trade-offs between indicators, such as complexity and performance, and it is often difficult to balance energy consumption and performance. In addition, although online adaptability has attracted some attention, there is still room for further research. In the future, it is necessary to consider multiple evaluation metrics jointly when designing efficient task-scheduling methods for different application scenarios.

SIL simulation is dominant in current research because of its low cost and high test efficiency. Due to its complex operation and high cost, hardware testing has relatively few applications. However, as research deepens and compatibility requirements for practical application scenarios rise, the importance of hardware testing is expected to increase, and research will move in a more comprehensive and application-oriented direction. In terms of datasets, the real load is the mainstream of current research because it reflects working characteristics in practical application scenarios. However, it lacks universality, and it is difficult for it to fully reflect the variability and complexity of practical applications. Therefore, future studies need to build more representative multi-scene and multi-task load datasets and improve the generality and fine-grained simulation capabilities of simulation platforms so as to better support the optimization of heterogeneous chip task-scheduling methods.

6.2. Future Direction

The future development direction of the heterogeneous chip task-scheduling framework will combine more advanced technologies to improve the intelligence, flexibility, and reliability of the system. Among them, the distributed ledger technology based on blockchain provides new possibilities for building a decentralized collaborative mechanism. Through the immutability and distributed storage characteristics of blockchain, it can not only effectively solve the data consistency and trust problems in the task-scheduling process in a heterogeneous environment but also eliminate the dependence on central scheduling nodes in the traditional framework, thus significantly enhancing the fault tolerance and robustness [60] of the system. In addition, blockchain technology can record the whole process of task scheduling, realize the traceability of task allocation and resource use, and provide reliable data support for further optimization of scheduling methods. This innovative integration has laid a solid foundation for the continuous development of the heterogeneous chip task-scheduling framework and also provides more efficient and credible solutions for resource management and task allocation in complex computing environments. Moreover, the system model of the current scheduling framework has obvious deficiencies in handling the dynamic configuration of heterogeneous computing resources and real-time communication optimization, especially in its poor adaptability to the rapidly changing task demands and resource states. To solve this problem, future research can develop a dynamically adjusted system model based on graph theory [61] and network flow theory [62] and focus on improving the management efficiency of data flow and task scheduling. These models will optimize resource allocation and system response speed by implementing objective functions that minimize latency and maximize throughput. 
Furthermore, these models will support real-time monitoring and prediction capabilities that enable the system to respond quickly according to immediate changes in task load and resource status. In this way, it not only improves the adaptability of the system but also enhances its ability to handle complex computing tasks.

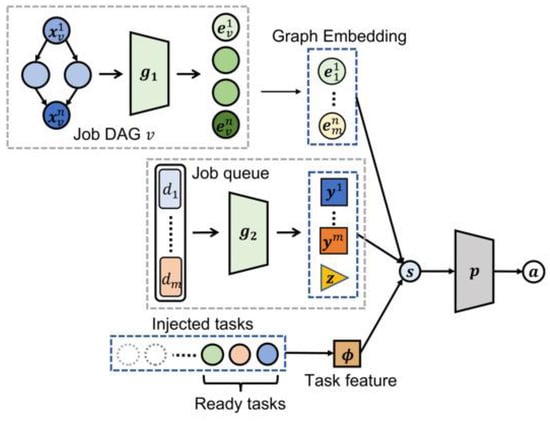

Heterogeneous chip task-scheduling algorithms are expected to evolve towards intelligent and adaptive optimization, with deep integration with reinforcement learning as a key trend. Figure 9 describes the overall DeepSoCS network structure based on reinforcement learning. DeepSoCS simulates the interaction between the environment and the agent and continually optimizes the scheduling method, so it can dynamically adapt to the complex and variable resource states and task requirements in a heterogeneous chip system [63]. Compared with traditional scheduling algorithms based on heuristic or static rules, reinforcement learning methods can perform online learning and real-time decision-making and can optimize strategies according to feedback during operation, effectively improving the efficiency of task allocation and resource utilization. In addition, reinforcement learning algorithms can achieve global optimization in multi-task and multi-constraint heterogeneous environments through experience accumulation and policy migration, providing more flexible, efficient, and robust solutions for complex scheduling problems. However, the processing units of heterogeneous chips differ in computing power, memory bandwidth, power consumption, and other characteristics. Therefore, reinforcement learning algorithms must be able to choose appropriate task-scheduling strategies under these resource constraints. The state space needs to contain information such as load situation, data dependency, and task priority, whereas the action space includes the allocation mode of different tasks, such as the division and scheduling of tasks between different processing units. The reward function must be designed according to the task completion delay, resource utilization rate, load balance, and other indicators so that the reinforcement learning model learns the optimal scheduling strategy during training. 
Due to the complexity of the task-scheduling problem, reinforcement learning training may face high-dimensional state space and long training periods, leading to sample inefficiency and time overhead in the exploration process.

Figure 9.

Task ordering is trained via the DeepSoCS architecture. The state is composed of graph embeddings and task features. A node-level MPNN computes embedding nodes for each job injected in the job queue, and a job-level MPNN computes local and global summaries using node embeddings and injected job information. Then, the onward task information constructs task features, which represent the number of possible actions. We use conventional policy networks to select a task. All vectors have time step subscripts but were not displayed in this diagram for readability [14].
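
The state, action, and reward design discussed above for reinforcement-learning schedulers can be sketched in a few lines of Python. This is a hypothetical formulation for illustration and is not the DeepSoCS implementation: the class `SchedulerState`, the functions `assign` and `reward`, and the weights `w_delay`, `w_util`, and `w_balance` are all assumptions.

```python
from dataclasses import dataclass
from statistics import pstdev
from typing import List


@dataclass
class SchedulerState:
    """Observation for one scheduling decision (hypothetical layout)."""
    pu_load: List[float]     # queued work on each processing unit
    task_cost: List[float]   # cost of the pending task on each unit
    deps_remaining: int      # unfinished predecessors (data dependency)
    priority: float          # priority of the pending task


def assign(state, pu):
    """Action: place the pending task on unit `pu`.

    Returns the next state and the completion delay of the task.
    """
    if state.deps_remaining > 0:
        raise ValueError("task is not ready: dependencies remain")
    delay = state.pu_load[pu] + state.task_cost[pu]
    new_load = list(state.pu_load)
    new_load[pu] = delay
    next_state = SchedulerState(new_load, state.task_cost, 0, state.priority)
    return next_state, delay


def reward(delay, busy_time, elapsed, pu_load,
           w_delay=1.0, w_util=0.5, w_balance=0.5):
    """Weighted mix of delay, utilization, and load balance.

    Lower delay, higher utilization, and a more even load give a
    higher reward. The weights are illustrative, not tuned values.
    """
    utilization = sum(busy_time) / (len(busy_time) * elapsed)
    imbalance = pstdev(pu_load)   # spread of queued work across units
    return -w_delay * delay + w_util * utilization - w_balance * imbalance


state = SchedulerState(pu_load=[4.0, 1.0], task_cost=[2.0, 3.0],
                       deps_remaining=0, priority=1.0)
next_state, delay = assign(state, pu=1)
r = reward(delay, busy_time=[3.0, 1.0], elapsed=4.0, pu_load=next_state.pu_load)
```

Collapsing several objectives into one scalar with fixed weights is the simplest choice; it makes the trade-off explicit but also means that the learned policy depends strongly on how the weights are set.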

The deep fusion of experimental simulation, test software, and large models is a key development direction. With their strong feature extraction and high-dimensional data modeling capabilities, large models can accurately model complex features in heterogeneous computing environments, covering core issues [64] such as multi-task parallel processing, resource competition dynamics, and scheduling optimization. Their advantage lies in analyzing non-linear correlations in task scheduling efficiently through end-to-end learning and in generating high-fidelity simulation scenarios and workloads in real time, which expands the coverage of the test platform, especially for boundary conditions and extreme situations that are difficult to reach with traditional simulation methods. Through the adaptive learning and feedback mechanisms of large models, the simulation test platform can improve its performance continuously over successive iterations. The combination of a large model and a simulation platform not only provides strong technical support for the research and development of heterogeneous chip task-scheduling methods but also significantly shortens the cycle from theoretical design to practical application of innovative algorithms and accelerates the progress of the whole field towards efficient computing and resource optimization. However, large models incur heavy data transfer and communication latency, and bandwidth limitations between processing units can become a performance bottleneck. The simulation test platform must design an appropriate load-balancing mechanism for the computation patterns of large models (such as layer-wise computation, parameter updates, and activation function computation) so as to avoid resource waste and performance bottlenecks.

7. Conclusions

Task scheduling for heterogeneous chips is a current research hotspot in academia and industry. Heterogeneous chips can fully exploit the advantages of different types of processing units, showing significant advantages in embedded fields requiring high performance and low power consumption. The choice of task-scheduling method is crucial in the design and implementation of heterogeneous chips. To ensure the efficient operation of heterogeneous chips, the computing power of different processing units, energy consumption, development flexibility, and the specific requirements of application scenarios must be considered. This paper makes a detailed comparative analysis of recent typical heterogeneous chip task-scheduling methods, which provides a reference for researchers and supports the optimization and deployment of scheduling algorithms. In addition, this paper surveys and summarizes the development of three key technologies: the scheduling framework, the scheduling algorithm, and the experimental method. It also presents the key technical challenges for future development, aiming to provide some insight for researchers of heterogeneous chips.

At present, the task-scheduling methods of heterogeneous chips are mainly derived from the scheduling algorithms of traditional homogeneous chips, and research in the field of heterogeneous chips is still in its infancy. However, it is not feasible to migrate these scheduling algorithms directly to heterogeneous chips. The diversity of heterogeneous chip architectures and the particularity and complexity of heterogeneous chip tasks pose enormous challenges. Therefore, the selection and optimization of task-scheduling algorithms according to the characteristics of heterogeneous chips still need to be further studied and explored.

Author Contributions

Z.M.: writing—review and editing, conceptualization, methodology, formal analysis, and investigation. C.S.: formal analysis, investigation, and funding acquisition. Z.T. and H.L.: writing—review and editing and funding acquisition. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Shenzhen Basic Research Project with Grants JCYJ20210324101210027 and JCYJ20220818100814033, the Guangdong University Featured Innovation Program Project with Grant 2024KTSCX025, and the Guangdong Provincial Key Laboratory of Computility Microelectronics with Grant 2024B1212010007.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Citation Report: Heterogeneous Chip and Task Scheduling. 2024. Available online: https://webofscience.clarivate.cn/wos/alldb/citation-report/939b0a4e-656e-4c89-86f8-66de26f7c757-0101ee96ed?page=1 (accessed on 8 October 2024).

- Mack, J.; Arda, S.E.; Ogras, U.Y.; Akoglu, A. Performant, multi-objective scheduling of highly interleaved task graphs on heterogeneous system on chip devices. IEEE Trans. Parallel Distrib. Syst. 2021, 33, 2148–2162.

- Basaklar, T.; Goksoy, A.A.; Krishnakumar, A.; Gumussoy, S.; Ogras, U.Y. DTRL: Decision Tree-based Multi-Objective Reinforcement Learning for Runtime Task Scheduling in Domain-Specific System-on-Chips. ACM Trans. Embed. Comput. Syst. 2023, 22, 113.

- Topcuoglu, H.; Hariri, S.; Wu, M.Y. Performance-effective and low-complexity task scheduling for heterogeneous computing. IEEE Trans. Parallel Distrib. Syst. 2002, 13, 260–274.

- Fang, J.; Huang, C.; Tang, T.; Wang, Z. Parallel programming models for heterogeneous many-cores: A comprehensive survey. CCF Trans. High Perform. Comput. 2020, 2, 382–400.

- Nollet, V.; Marescaux, T.; Avasare, P.; Verkest, D.; Mignolet, J.Y. Centralized run-time resource management in a network-on-chip containing reconfigurable hardware tiles. In Proceedings of the Design, Automation and Test in Europe, Munich, Germany, 7–11 March 2005; pp. 234–239.

- Luo, J.; Jha, N.K. Power-efficient scheduling for heterogeneous distributed real-time embedded systems. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2007, 26, 1161–1170.

- Braun, T.D.; Siegel, H.J.; Beck, N.; Bölöni, L.L.; Maheswaran, M.; Reuther, A.I.; Robertson, J.P.; Theys, M.D.; Yao, B.; Hensgen, D.; et al. A comparison of eleven static heuristics for mapping a class of independent tasks onto heterogeneous distributed computing systems. J. Parallel Distrib. Comput. 2001, 61, 810–837.

- Karande, P.; Dhotre, S.; Patil, S. Task management for heterogeneous multi-core scheduling. Int. J. Comput. Sci. Inf. Technol. 2014, 5, 636–639.

- Bao, Z.; Chen, C.; Zhang, W. Task Scheduling of Data-Parallel Applications on HSA Platform; Springer: Berlin/Heidelberg, Germany, 2018; pp. 452–461.

- Phanibhushana, B.; Kundu, S. Network-on-chip design for heterogeneous multiprocessor system-on-chip. In Proceedings of the 2014 IEEE Computer Society Annual Symposium on VLSI, Tampa, FL, USA, 9–11 July 2014; pp. 486–491.

- Liu, C.L.; Layland, J.W. Scheduling algorithms for multiprogramming in a hard-real-time environment. J. ACM (JACM) 1973, 20, 46–61.

- Baruah, S. Feasibility analysis of preemptive real-time systems upon heterogeneous multiprocessor platforms. In Proceedings of the 25th IEEE International Real-Time Systems Symposium, Lisbon, Portugal, 5–8 December 2004; pp. 37–46.

- Orr, J.; Baruah, S. Algorithms for implementing elastic tasks on multiprocessor platforms: A comparative evaluation. Real-Time Syst. 2021, 57, 227–264.

- Peng, B.; Fisher, N. Parameter adaption for generalized multiframe tasks and applications to self-suspending tasks. In Proceedings of the 2016 IEEE 22nd International Conference on Embedded and Real-Time Computing Systems and Applications (RTCSA), Daegu, Republic of Korea, 17–19 August 2016; pp. 49–58.

- Han, M.; Guan, N.; Sun, J.; He, Q.; Deng, Q.; Liu, W. Response time bounds for typed DAG parallel tasks on heterogeneous multi-cores. IEEE Trans. Parallel Distrib. Syst. 2019, 30, 2567–2581.

- Karumbunathan, L.S. NVIDIA Jetson AGX Orin Series. 2022. Available online: https://www.nvidia.cn/content/dam/en-zz/Solutions/gtcf21/jetson-orin/nvidia-jetson-agx-orin-technical-brief.pdf (accessed on 12 October 2024).

- Davies, M.; Srinivasa, N.; Lin, T.H.; Chinya, G.; Cao, Y.; Choday, S.H.; Dimou, G.; Joshi, P.; Imam, N.; Jain, S.; et al. Loihi: A neuromorphic manycore processor with on-chip learning. IEEE Micro 2018, 38, 82–99.

- Maheswaran, M.; Ali, S.; Siegel, H.J.; Hensgen, D.; Freund, R.F. Dynamic mapping of a class of independent tasks onto heterogeneous computing systems. J. Parallel Distrib. Comput. 1999, 59, 107–131.

- Bittencourt, L.F.; Sakellariou, R.; Madeira, E.R. Dag scheduling using a lookahead variant of the heterogeneous earliest finish time algorithm. In Proceedings of the 2010 18th Euromicro Conference on Parallel, Distributed and Network-Based Processing, Pisa, Italy, 17–19 February 2010; pp. 27–34.

- Li, Q.-Y.; Kang, J.-J.; Guo, W.-F. Research and Simulation of embedded burst task scheduling method. Comput. Simul. 2022, 39, 423–426, 435.

- Chen, Y.S.; Liao, H.C.; Tsai, T.H. Online real-time task scheduling in heterogeneous multicore system-on-a-chip. IEEE Trans. Parallel Distrib. Syst. 2012, 24, 118–130.

- Mandal, S.K.; Bhat, G.; Patil, C.A.; Doppa, J.R.; Pande, P.P.; Ogras, U.Y. Dynamic resource management of heterogeneous mobile platforms via imitation learning. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 2019, 27, 2842–2854.

- Reuven, M.; Wiseman, Y. Medium-term scheduler as a solution for the thrashing effect. Comput. J. 2006, 49, 297–309.

- Gholami, H.; Zakerian, R. A list-based heuristic algorithm for static task scheduling in heterogeneous distributed computing systems. In Proceedings of the 2020 6th international conference on web research (ICWR), Tehran, Iran, 22–23 April 2020; pp. 21–26.

- Sakellariou, R.; Zhao, H. A hybrid heuristic for DAG scheduling on heterogeneous systems. In Proceedings of the 18th International Parallel and Distributed Processing Symposium, 2004. Proceedings, Santa Fe, NM, USA, 26–30 April 2004; p. 111.

- Sulaiman, M.; Halim, Z.; Lebbah, M.; Waqas, M.; Tu, S. An evolutionary computing-based efficient hybrid task scheduling approach for heterogeneous computing environment. J. Grid Comput. 2021, 19, 1–31.

- Sung, T.T.; Ha, J.; Kim, J.; Yahja, A.; Sohn, C.B.; Ryu, B. Deepsocs: A neural scheduler for heterogeneous system-on-chip (soc) resource scheduling. Electronics 2020, 9, 936.

- Wang, Y.; Li, K.; Chen, H.; He, L.; Li, K. Energy-aware data allocation and task scheduling on heterogeneous multiprocessor systems with time constraints. IEEE Trans. Emerg. Top. Comput. 2014, 2, 134–148.

- Diwan, P.; Toraskar, S.; Venkitaraman, V.; Boran, N.K.; Chaudhary, C.; Singh, V. MIST: Many-ISA Scheduling Technique for Heterogeneous-ISA Architectures. In Proceedings of the 2024 37th International Conference on VLSI Design and 2024 23rd International Conference on Embedded Systems (VLSID), Bengal, India, 6–10 January 2024; pp. 348–353.

- Nikseresht, M.; Raji, M. MOGATS: A multi-objective genetic algorithm-based task scheduling for heterogeneous embedded systems. Int. J. Embed. Syst. 2021, 14, 171–184.

- Suhendra, V.; Raghavan, C.; Mitra, T. Integrated scratchpad memory optimization and task scheduling for MPSoC architectures. In Proceedings of the 2006 International Conference on Compilers, Architecture and Synthesis for Embedded Systems, Seoul, Republic of Korea, 22–25 October 2006; pp. 401–410.

- Hu, W.; Gan, Y.; Lv, X.; Wang, Y.; Wen, Y. A improved list heuristic scheduling algorithm for heterogeneous computing systems. In Proceedings of the 2020 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Toronto, ON, Canada, 11–14 October 2020; pp. 1111–1116.

- Peng, Q.; Wang, S. Masa: Multi-application scheduling algorithm for heterogeneous resource platform. Electronics 2023, 12, 4056.

- Taheri, G.; Khonsari, A.; Entezari-Maleki, R.; Sousa, L. A hybrid algorithm for task scheduling on heterogeneous multiprocessor embedded systems. Appl. Soft Comput. 2020, 91, 106202.

- Abdi, A.; Salimi-Badr, A. ENF-S: An evolutionary-neuro-fuzzy multi-objective task scheduler for heterogeneous multi-core processors. IEEE Trans. Sustain. Comput. 2023, 8, 479–491.

- Arda, S.E.; Krishnakumar, A.; Goksoy, A.A.; Kumbhare, N.; Mack, J.; Sartor, A.L.; Akoglu, A.; Marculescu, R.; Ogras, U.Y. DS3: A system-level domain-specific system-on-chip simulation framework. IEEE Trans. Comput. 2020, 69, 1248–1262.

- Cordeiro, D.; Mounié, G.; Perarnau, S.; Trystram, D.; Vincent, J.M.; Wagner, F. Random graph generation for scheduling simulations. In Proceedings of the 3rd International ICST Conference on Simulation Tools and Techniques (SIMUTools 2010). ICST, Malaga, Spain, 15–19 March 2010; p. 10.

- Yao, Y.; Song, Y.; Huang, Y.; Ni, W.; Zhang, D. A memory-constraint-aware list scheduling algorithm for memory-constraint heterogeneous muti-processor system. IEEE Trans. Parallel Distrib. Syst. 2022, 34, 1082–1099.

- Suter, F.; Hunold, S. Daggen: A Synthetic Task Graph Generator. 2013. Available online: https://github.com/frs69wq/daggen (accessed on 10 October 2024).

- Catania, V.; Mineo, A.; Monteleone, S.; Palesi, M.; Patti, D. Noxim: An open, extensible and cycle-accurate network on chip simulator. In Proceedings of the 2015 IEEE 26th International Conference on Application-Specific systems, Architectures and Processors (ASAP), Toronto, ON, Canada, 27–29 July 2015; pp. 162–163.

- Bienia, C.; Kumar, S.; Singh, J.P.; Li, K. The PARSEC benchmark suite: Characterization and architectural implications. In Proceedings of the 17th International Conference on Parallel Architectures and Compilation Techniques, Toronto, ON, Canada, 25–29 October 2008; pp. 72–81.

- Che, S.; Boyer, M.; Meng, J.; Tarjan, D.; Sheaffer, J.W.; Lee, S.H.; Skadron, K. Rodinia: A benchmark suite for heterogeneous computing. In Proceedings of the 2009 IEEE International Symposium on Workload Characterization (IISWC), Austin, TX, USA, 4–6 October 2009; pp. 44–54.

- Dongarra, J.J.; Takahashi, D.; Bailey, D.; Koester, D.; Luszczek, P.; Rabenseifner, R.; Lucas, B.; McCalpin, J. Introduction to the HPC Challenge Benchmark Suite. 2005. Available online: https://escholarship.org/content/qt6sv079jp/qt6sv079jp.pdf (accessed on 10 October 2024).

- Krishnamoorthy, R.; Krishnan, K.; Chokkalingam, B.; Padmanaban, S.; Leonowicz, Z.; Holm-Nielsen, J.B.; Mitolo, M. Systematic approach for state-of-the-art architectures and system-on-chip selection for heterogeneous IoT applications. IEEE Access 2021, 9, 25594–25622.

- Ubal, R.; Jang, B.; Mistry, P.; Schaa, D.; Kaeli, D. Multi2Sim: A simulation framework for CPU-GPU computing. In Proceedings of the 21st International Conference on Parallel Architectures and Compilation Techniques, Minneapolis, MN, USA, 19–23 September 2012; pp. 335–344.

- Lowe-Power, J.; Ahmad, A.M.; Akram, A.; Alian, M.; Amslinger, R.; Andreozzi, M.; Armejach, A.; Asmussen, N.; Beckmann, B.; Bharadwaj, S. The gem5 simulator: Version 20.0+. arXiv 2020, arXiv:2007.03152.

- Magnusson, P.S.; Christensson, M.; Eskilson, J.; Forsgren, D.; Hallberg, G.; Hogberg, J.; Larsson, F.; Moestedt, A.; Werner, B. Simics: A full system simulation platform. Computer 2002, 35, 50–58.

- Data, B.M. Texas Instruments Incorporated. 2002. Available online: https://www.sprow.co.uk/bbc/hardware/speech/tms6100.pdf (accessed on 10 October 2024).

- Gensh, R.; Aalsaud, A.; Rafiev, A.; Xia, F.; Iliasov, A.; Romanovsky, A.; Yakovlev, A. Experiments with Odroid-XU3 board. In School of Computing Science Technical Report Series; Newcastle University: Newcastle upon Tyne, UK, 2015.

- Goksoy, A.A.; Hassan, S.; Krishnakumar, A.; Marculescu, R.; Akoglu, A.; Ogras, U.Y. Theoretical validation and hardware implementation of dynamic adaptive scheduling for heterogeneous systems on chip. J. Low Power Electron. Appl. 2023, 13, 56.

- Soni, R.S.; Asati, D. Development of Embedded Web Server Configured on FPGA Using Soft-core Processor and Web Client on PC. Int. J. Eng. Adv. Technol. (IJEAT) 2012, 1, 295–298.

- Bunde, D.P. Power-aware scheduling for makespan and flow. In Proceedings of the Eighteenth Annual ACM Symposium on Parallelism in Algorithms and Architectures, Cambridge, MA, USA, 30 July–2 August 2006; pp. 190–196.

- Chen, J.; Soomro, P.N.; Abduljabbar, M.; Pericàs, M. An Adaptive Performance-oriented Scheduler for Static and Dynamic Heterogeneity. arXiv 2019, arXiv:1905.00673.

- Baskiyar, S.; SaiRanga, P.C. Scheduling directed a-cyclic task graphs on heterogeneous network of workstations to minimize schedule length. In Proceedings of the 2003 International Conference on Parallel Processing Workshops, 2003. Proceedings, Kaohsiung, Taiwan, 6–9 October 2003; pp. 97–103.

- Huang, J.; Buckl, C.; Raabe, A.; Knoll, A. Energy-aware task allocation for network-on-chip based heterogeneous multiprocessor systems. In Proceedings of the 2011 19th International Euromicro Conference on Parallel, Distributed and Network-Based Processing, Ayia Napa, Cyprus, 9–11 February 2011; pp. 447–454.

- Hamano, T.; Endo, T.; Matsuoka, S. Power-aware dynamic task scheduling for heterogeneous accelerated clusters. In Proceedings of the 2009 IEEE International Symposium on Parallel & Distributed Processing, Rome, Italy, 23–29 May 2009; pp. 1–8.

- Amalarethinam, D.G.; Josphin, A.M. Dynamic task scheduling methods in heterogeneous systems: A survey. Int. J. Comput. Appl. 2015, 110, 12–18.

- Shi, Z.; Jeannot, E.; Dongarra, J.J. Robust task scheduling in non-deterministic heterogeneous computing systems. In Proceedings of the 2006 IEEE International Conference on Cluster Computing, Barcelona, Spain, 25–28 September 2006; pp. 1–10.

- Bonnet, S.; Teuteberg, F. Impact of blockchain and distributed ledger technology for the management of the intellectual property life cycle: A multiple case study analysis. Comput. Ind. 2023, 144, 103789.

- Leus, G.; Marques, A.G.; Moura, J.M.; Ortega, A.; Shuman, D.I. Graph Signal Processing: History, development, impact, and outlook. IEEE Signal Process. Mag. 2023, 40, 49–60.

- Chen, J.; Wang, W.; Yu, K.; Hu, X.; Cai, M.; Guizani, M. Node connection strength matrix-based graph convolution network for traffic flow prediction. IEEE Trans. Veh. Technol. 2023, 72, 12063–12074.

- Cheng, Y.; Cao, Z.; Zhang, X.; Cao, Q.; Zhang, D.J.T. Multi objective dynamic task scheduling optimization algorithm based on deep reinforcement learning. J. Supercomput. 2024, 80, 6917–6945.

- Yin, S.; Fu, C.; Zhao, S.; Li, K.; Sun, X.; Xu, T.; Chen, E. A survey on multimodal large language models. arXiv 2023, arXiv:2306.13549.