Abstract

The aim of this research is to investigate and use a variety of immersive multisensory media techniques in order to create convincing digital models of fossilised tree trunks for use in XR (Extended Reality). This is made possible through the use of geospatial data derived from aerial imaging using UASs, terrestrial material captured using cameras and the incorporation of both the visual and audio elements for better immersion, accessed and explored in 6 Degrees of Freedom (6DoF). Immersiveness is a key factor of output that is especially engaging to the user. Both conventional and alternative methods are explored and compared, emphasising the advantages made possible with the help of Machine Learning Computational Photography. The material is collected using both UAS and terrestrial camera devices, including a multi-sensor 3D-360° camera, using stitched panoramas as sources for photogrammetry processing. Difficulties such as capturing large free-standing objects using terrestrial means are overcome using practical solutions involving mounts and remote streaming solutions. The key research contributions are comparisons between different imaging techniques and photogrammetry processes, resulting in significantly higher fidelity outputs. Conclusions indicate that superior fidelity can be achieved through the help of Machine Learning Computational Photography processes, and higher resolutions and technical specs of equipment do not necessarily translate into superior outputs.

1. Introduction

Extended Reality (XR) is a term encompassing all current and future real and virtual combined environments such as VR (Virtual Reality), AR (Augmented Reality) and MR (Mixed Reality). In the last 5 years, vast advancements have been taking place in the field of immersive media [1], both in terms of production and consumption. In terms of production, computing and especially graphical processing technology has gone through three generations of evolution, now having features such as real-time ray tracing in both consumer GPUs (NVIDIA RTX) as well as game consoles (ray tracing-capable APUs by AMD in Microsoft Xbox Series X and Sony PlayStation 5). In terms of video capture, RED, Kandao, Insta360, Vuze and more have released cameras that can capture the world in 3D-360. In terms of media consumption, companies such as Oculus, HTC, Valve, HP and more have introduced affordable VR headsets capable of displaying immersive content. The leading companies have also invested heavily in AR and MR, with examples including Apple’s ARKit [2], Google’s ARCore [3] and Microsoft’s holographic/MR efforts with its HoloLens systems [4]. Apple’s more recent release of the Apple Vision Pro device has also promised to push boundaries, focusing on spatial computing and offering industry-leading resolution and fidelity.

XR can be used for immersive 3D visualisation in geoinformation and geological sciences, where virtual locations can be based on geospatial datasets [5]. Such virtual geosites can be used for popularising geoheritage for a general audience as well as engaging younger demographics, usually interested in more cutting-edge forms of communication [6]. Another advantage of XR on geoheritage sites is the ability to visit locations around the clock, regardless of weather conditions, or observe features that are difficult to access; for example, a fossilised tree trunk might be too tall, necessitating the use of scaffolding to access up close, a potential health and safety risk for general public observation. Multiple observers on the same location/artefact are also an added advantage, making it possible for a variety of audiences to observe a specific artefact up close at the same time, including conservation professionals.

UASs refer to unmanned aerial systems. UASs have been rapidly advancing, with companies such as DJI continually updating their model offerings with drones aimed at both professionals and hobbyists, ranging from portable foldable models such as the Spark, the Mavic and the Mini series, to larger and more versatile drones with changeable payloads such as the Matrice series. Drones offer us rather versatile options in terms of optical viewpoints, practically allowing us to position cameras or other scanning equipment in areas that are not as easily reachable by terrestrial means. They are also efficient when it comes to photographing, mapping or otherwise gathering sensor data of wide areas. UAS surveys have also been used in archaeology, using a combination of LiDAR scans and photogrammetry techniques, to help make observations of historical areas, interpret locations and make new discoveries that may not be visible to the naked eye such as other possible structures at the same location [7]. Geospatially aware datasets ensure accurate placement, aiding both in the reconstruction of an XR environment as well as navigation within it.

When it comes to cameras, there have also been developments in both software and hardware domains, enabling us to capture higher resolutions with greater fidelity as well as different formats and all possible fields of view. One of those developments is the 360° camera, where fisheye optics are used in conjunction with multiple sensors, resulting in real-time panoramas [8], previously requiring extra stitching work in order to be produced and also being less suitable to capture visual material when there are moving elements in the target area. Multiple sensor fisheye arrangements also make it possible to obtain 3D-360 stereoscopic results for use in immersive media.

Another major development in digital cameras has been in the onboard processing aspect, with newer technologies over the years allowing us to acquire images with less sensor noise and also enabling more pixels to be included in smaller sensors [9]. The advent of the smartphone also helped speed up development in that area since every smartphone user was in fact a digital camera user, the target audience no longer being focused mainly on photography professionals and enthusiasts. That, combined with fierce competition in that sector, has brought us vast camera improvements every year, with fidelity rapidly improving and, in certain cases, resulting in smartphones rivalling professional digital cameras in terms of fidelity, as that sector has been evolving faster. Machine learning and artificial intelligence have also been integral to mobile phone chipsets, further aiding processes such as better detail extraction during the process of taking pictures with the integrated camera, practically resulting in Machine Learning Computational Photography. This exciting development essentially democratises high-fidelity photography, a field once exclusive to high-end equipment. Material utilising such methods will also be collected and investigated, comparing results in the software.

When it comes to the digitisation of subjects in 3D, photogrammetry is a versatile method, and it relies on material captured with cameras. In order to create believable immersive content to be deployed in XR, the physical objects need to be captured with as much detail as our current technology allows us to capture. In order to aid better immersion, both the visual and audio domains will be included, the audio being in the form of 360° spatial audio (ambisonic).

XR applications have been implemented in geoheritage sites via a variety of methods. Both Augmented Reality (AR) and Virtual Reality (VR) techniques have been used in order to digitally represent geoheritage sites and artefacts; however, the process tends to be focused more on the transmissibility of information and the preservation of sites and artefacts in digital form, with less emphasis on factors such as immersion and realistic approximation of the actual artefacts and sites [1]. While the areas and objects can be accurately digitised and represented from a size, dimension and geolocation perspective, they can lack important details such as high-resolution meshes and textures and appropriate photorealistic shading, even completely ignoring the audio aspect, all of which are important to completely represent the environment of a location when experienced in XR.

This paper investigates and uses a variety of the aforementioned means as multisensory media techniques in order to create convincing XR representations of fossilised tree trunks. A clear problem with XR and photogrammetry has been lower fidelity visuals with dense geometry, resulting in low performance in VR and XR applications due to resource-hungry outputs. This research addresses those points by investigating ML-/Computational Photography-assisted imaging, achieving visually superior results using image sets of lower pixel resolutions. Visual material from a variety of sources, aerial and terrestrial, is compared and appropriate processes are applied to achieve more realistic detail and therefore more immersive results when deployed in XR. The innovation of this study is to implement computational photography-aided imagery, derived from mobile phones, in the 3D modelling and visualisation of geoheritage sites. Such imagery results in superior fidelity, suitable for extra detailed representations when it comes to 3D digitisation of petrified tree trunks. Additionally, the resulting 3D model is fused with the model derived from scale-accurate RTK UAS imagery, as well as 360 panoramic imagery, resulting in a comprehensive model that includes both the surrounding environment area as well as the extra high-fidelity tree trunk. The extra fidelity derived from our methodology allows us to produce a more realistic visual result, suitable for extra immersive XR experiences.

2. Materials and Methods

2.1. Study Area

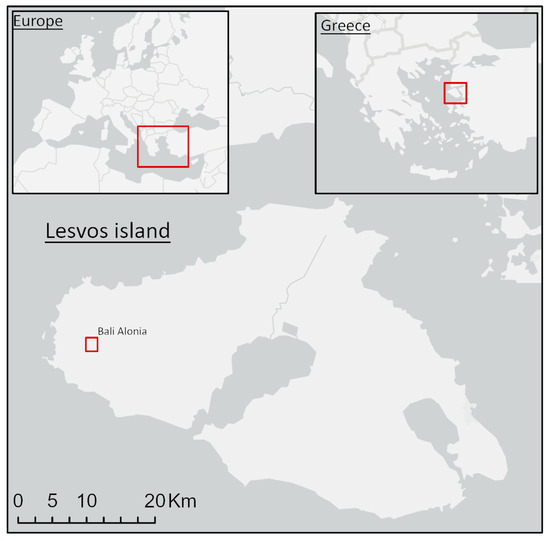

The Lesvos Island UNESCO Global Geopark was the case study site. Its petrified forest, formed some 15 to 20 million years ago, features rare and impressive fossilised tree trunks [10]. Some of those trunks can still be seen today, in their upright position with intact roots up to seven metres, while others are found in a fallen position measuring up to 20 metres. The fossilised trunks have retained fine details of their bark, and their interior reveals a variety of colours. Such details were accurately digitised in 3D using a combination of UASs, 3D-360 imagery and audiovisual capture devices. Specifically, Bali Alonia Park was chosen due to the size and positioning of its large fossils (Figure 1).

Figure 1.

Lesvos Geopark: Bali Alonia Park location map, red box marking the zoomed-in area.

2.2. Methods

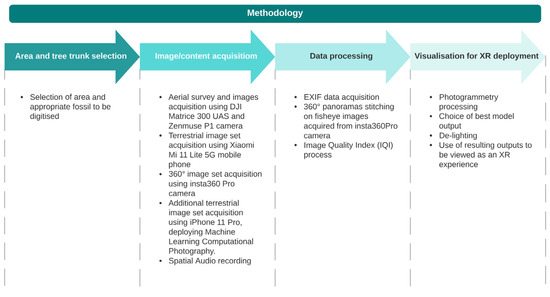

The methodology followed for this project took place in the following stages: area and tree trunk selection, image/content acquisition, data processing and visualisation for XR deployment (Figure 2).

Figure 2.

Flowchart of the methodology followed.

2.2.1. Area and Tree Trunk Selection

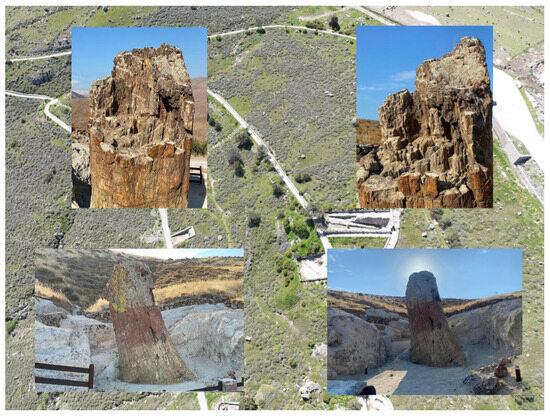

The Bali Alonia Park was chosen due to the size and positioning of its large fossils. The chosen tree trunk was Fossil Tree Trunk N°69 (Figure 3).

Figure 3.

Petrified forest and Fossil Tree Trunk 69 study area.

Fossil Tree Trunk 69 is the largest standing fossilised tree trunk in the world, standing at 7.20 m with an 8.58 m perimeter. It is an ancestral form of Sequoia, belonging to Taxodioxylon albertense. For reference, a modern representative, Sequoia sempervirens, is the type of Sequoia found in national parks in California and Oregon [11]. The conservation works as well as the cleaning and aesthetic restoration of the trunk resulted in a rather impressive monument of nature, hence our selection of this area as the subject to be digitised and 3D-visualised for XR deployment.

2.2.2. Image/Content Acquisition

An aerial survey was conducted using a DJI Matrice 300 RTK UAS, equipped with a DJI Zenmuse P1, in order to capture the general area, including the main fossilised tree trunk. A total of 459 pictures were taken, covering a wide area of that section of the geopark. The resolution of those images was 8192 × 5460 pixels, which is in line with the advertised 45-megapixel specification.

Following that, another set of pictures was taken around the tree trunk with a Xiaomi Mi 11 Lite 5G mobile phone, in order to obtain more closeup content and for comparison purposes during the data processing and visualisation stages. A total of 214 pictures were taken using that mobile phone at a resolution of 6944 × 9280 pixels (approximately 64.4 megapixels), marginally exceeding its advertised 64-megapixel specification.

For further testing of different available image acquisition techniques, an additional set of pictures was taken, using an Insta360 Pro multi-sensor 3D-360 camera, equipped with 6 sensors and lenses onboard. That camera was placed in 9 different positions around the tree trunk, and a picture from all the sensors was taken from each position for a total of 54 fisheye images at a resolution of 4000 × 3000 pixels. All three companies (Insta360, Xiaomi, DJI) are Chinese manufacturers, with DJI and Insta360 headquartered in Shenzhen, China, and Xiaomi in Beijing, China.
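As a quick arithmetic check, the megapixel figures quoted above follow directly from the reported resolutions. The minimal Python sketch below uses only the numbers given in the text; the dictionary and function names are illustrative.

```python
# Resolutions as reported in the text (width, height in pixels).
RESOLUTIONS = {
    "DJI Zenmuse P1": (8192, 5460),
    "Xiaomi Mi 11 Lite 5G": (6944, 9280),
    "Insta360 Pro (single fisheye)": (4000, 3000),
}


def megapixels(width: int, height: int) -> float:
    """Return the pixel count of one image in millions of pixels."""
    return width * height / 1_000_000


for device, (w, h) in RESOLUTIONS.items():
    print(f"{device}: {megapixels(w, h):.1f} MP")

# Six sensors were triggered at each of the nine camera positions.
fisheye_total = 6 * 9
print(f"Insta360 Pro fisheye images: {fisheye_total}")
```

The Zenmuse P1 and Xiaomi values come out at roughly 44.7 and 64.4 megapixels respectively, consistent with the advertised 45- and 64-megapixel specifications.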

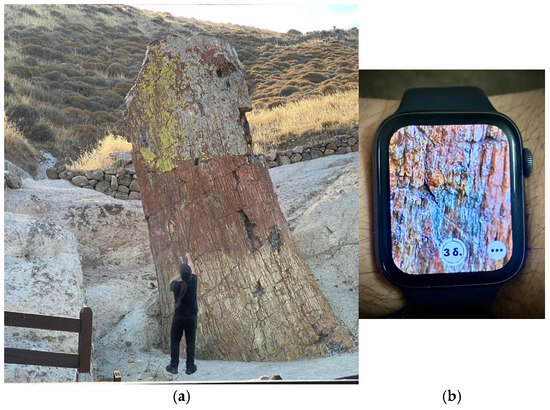

Additionally, an extra set of mobile phone pictures was taken, using an Apple iPhone 11 Pro. This was carried out in order to test that specific phone’s computational photography capabilities when it comes to image capture and fidelity, especially focusing on fine details. A set of 177 pictures was taken, a task that proved to be extra challenging, especially when it came to capturing the top parts of the tree trunk. A 3 m long monopod was used with a mobile phone adapter, and the mobile phone was streaming its preview to, and being remotely controlled by, an Apple Watch Series 4 smartwatch, in order to monitor where the camera was pointing and to remotely trigger the shutter button (Figure 4). Apple is an American manufacturer, headquartered in Cupertino, CA, USA.

Figure 4.

Image acquisition for large tree trunk: (a) 3 m long monopod used with iPhone 11 Pro. (b) Smartwatch camera control.

These specific models of hardware were chosen as it was equipment we already had at our disposal while being both appropriate and capable for the purposes of this research.

Following the acquisition of the different image sets, spatial audio of the area was also recorded to capture the area’s aural ambience in 360°. Hearing is the fastest human sense, with much faster response times than vision [12], making Virtual Auditory Display (VAD) systems an important part of any XR application. Spatial audio and ambisonics are used for deploying such a system.

Ambisonics and spatial audio are sound techniques that capture audio in a spherical way. Ambisonics is a method developed in the 1970s by British academics Michael Gerzon (University of Oxford) and Professor Peter Fellgett (University of Reading), designed to reproduce recordings in an immersive way, captured with specially arranged microphone arrays [13]. A Zoom H2n multi-capsule recorder [14] was used, with a Rycote cover to avoid unwanted distortion due to wind, fastened on a shock mount to avoid vibration transferring into the audio.
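To make the idea of spherical capture more concrete, the sketch below encodes a single mono sample arriving from a given direction into the four first-order ambisonic channels (W, X, Y, Z). It uses the traditional B-format weighting as an assumption; it is not a description of the recorder's internal processing, and the function name is illustrative.

```python
import math


def encode_first_order(sample: float, azimuth_deg: float, elevation_deg: float):
    """Encode one mono sample into first-order B-format (W, X, Y, Z).

    Assumed convention: traditional weighting with W scaled by 1/sqrt(2),
    azimuth measured anticlockwise from straight ahead, and elevation
    measured upwards from the horizontal plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample / math.sqrt(2)                  # omnidirectional component
    x = sample * math.cos(az) * math.cos(el)   # front-back component
    y = sample * math.sin(az) * math.cos(el)   # left-right component
    z = sample * math.sin(el)                  # up-down component
    return w, x, y, z


# A source straight ahead contributes only to W and X.
print(encode_first_order(1.0, azimuth_deg=0.0, elevation_deg=0.0))
# A source directly to the left contributes only to W and Y.
print(encode_first_order(1.0, azimuth_deg=90.0, elevation_deg=0.0))
```

Because direction is stored in the channel balance rather than in fixed speaker feeds, the sound field can be rotated to follow the listener's head orientation at playback time, which is what makes the format a natural fit for 6DoF XR.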

2.2.3. Data Processing

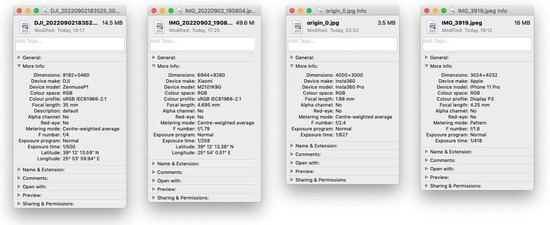

All image specifications were acquired by reading the metadata available through the Exchangeable Image File format (EXIF) embedded in the files (Figure 5).

Figure 5.

EXIF data from the different cameras used.

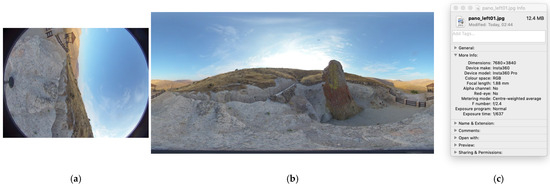

Observing the values in Table 1, it is clear that the Zenmuse P1 camera had the highest resolution compared to the rest, which was to be expected as it used a full-frame camera sensor, compared to the other cameras using smaller sensors appropriate for mobile phones and smaller devices. The Xiaomi phone was the second highest, followed by the iPhone 11 Pro and the Insta360 Pro camera. Due to the nature of how 360° photogrammetry works, the images from the Insta360 Pro camera were reduced from 54 fisheye images to 9 stitched panoramas, one for each position where the camera was placed. The resolution of each panorama was 7680 × 3840 (Figure 6).

Table 1.

Image/content acquisition by device.

Figure 6.

Captured 360 content: (a) individual fisheye image, (b) panorama, and (c) EXIF data of stitched panorama.
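For readers unfamiliar with stitched panoramas, the sketch below shows how a pixel in a 7680 × 3840 panorama could be related to a viewing direction. It assumes the panoramas use the common equirectangular layout (suggested by the 2:1 aspect ratio but not stated explicitly), and the axis convention and function name are illustrative choices rather than those of any specific software.

```python
import math

PANO_WIDTH, PANO_HEIGHT = 7680, 3840  # stitched panorama size from the text


def pixel_to_direction(u: float, v: float,
                       width: int = PANO_WIDTH, height: int = PANO_HEIGHT):
    """Map an equirectangular pixel (u, v) to a unit viewing direction.

    Assumed convention: u runs left to right over longitude -180..+180 deg,
    v runs top to bottom over latitude +90..-90 deg, the image centre looks
    along +x, and z points up.
    """
    lon = (u / width - 0.5) * 2.0 * math.pi
    lat = (0.5 - v / height) * math.pi
    x = math.cos(lat) * math.cos(lon)
    y = math.cos(lat) * math.sin(lon)
    z = math.sin(lat)
    return x, y, z


# Angular coverage of one pixel along the horizon.
print(f"{360.0 / PANO_WIDTH:.6f} degrees per pixel")
# The image centre maps to the forward direction.
print(pixel_to_direction(PANO_WIDTH / 2, PANO_HEIGHT / 2))
```

Under this assumption each panorama pixel spans about 0.047° horizontally, so the whole sphere shares a pixel budget that a conventional lens would concentrate on a much narrower field of view.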

Images previously acquired during the image/content acquisition stage went through a quality control process in order to be used for photogrammetry with the Agisoft Metashape Pro Version 2.0.2 software package. The Image Quality Index (IQI) was used in order to determine unusable imagery and to compare fidelity between the different cameras.

Observing the IQI values in Table 2, it appears that the Insta360 Pro scored the highest, followed by the Zenmuse camera, the iPhone and, lowest of them all, the Xiaomi phone. This was a surprising result since, on resolution values alone, the Xiaomi phone excelled, with only the Zenmuse camera offering a higher pixel count. Moreover, the Xiaomi phone also produced pictures with an IQI score lower than 0.5, which were discarded during the photogrammetry process. Similarly, the iPhone had the lowest resolution compared to all cameras; however, its IQI score was rather high, and the imagery appeared to be of quite high fidelity.

Table 2.

Image Quality Index (IQI) by device.
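The quality-control rule described above (discarding pictures with an IQI score below 0.5) can be expressed as a simple filter. The sketch below is a plain Python illustration that assumes the scores have already been exported from the photogrammetry software; the file names and score values are hypothetical and are not taken from Table 2.

```python
QUALITY_THRESHOLD = 0.5  # cut-off stated in the text


def split_by_quality(scores: dict, threshold: float = QUALITY_THRESHOLD):
    """Separate image names into kept and discarded lists by quality score."""
    kept = [name for name, q in scores.items() if q >= threshold]
    discarded = [name for name, q in scores.items() if q < threshold]
    return kept, discarded


# Hypothetical scores for illustration only.
example_scores = {
    "IMG_0001.jpg": 0.82,
    "IMG_0002.jpg": 0.47,
    "IMG_0003.jpg": 0.50,
}

kept, discarded = split_by_quality(example_scores)
print("kept:", kept)
print("discarded:", discarded)
```

Images scoring exactly 0.5 are kept here, since the text only mentions discarding scores lower than 0.5.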

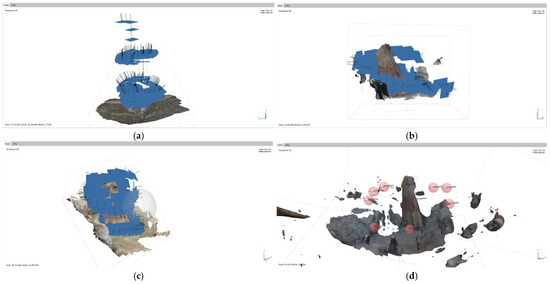

2.2.4. Visualisation for XR Deployment

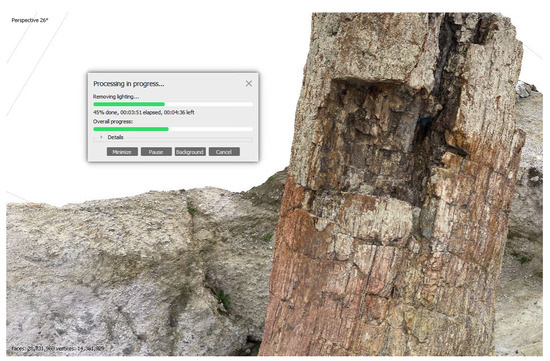

After quality control concluded, all images were processed with photogrammetry software Agisoft Metashape Pro Version 2.0.2 [15] (Figure 7) in order to visualise the content as 3D scenes for XR deployment. Concerning volumetric accuracy, the DJI Matrice 300 RTK UAS was used for the first dataset so the scale accuracy was achieved through RTK (Real Time Kinematics). For subsequent models, the tree trunk was adapted and visually checked against the RTK-based version. Following photogrammetry processing, the different resulting models were observed and compared in order to conclude the most suitable approach for photorealistic immersive XR use.

Figure 7.

Photogrammetry processing showing camera positioning for each picture used: (a) Zenmuse P1, (b) Xiaomi Mi 11 Lite 5G, (c) Apple iPhone 11 Pro, and (d) Insta360 Pro.
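In this work the non-RTK models were adapted to the RTK-based model and checked visually. As a numerical complement, a uniform scale factor could be derived from one distance that is known in both models. The sketch below assumes such a shared measurement exists; the model-space height of 3.6 units is hypothetical, while the 7.20 m trunk height is taken from Section 2.2.1.

```python
def scale_factor(reference_length: float, model_length: float) -> float:
    """Return the uniform factor that maps model units to reference units."""
    if model_length <= 0:
        raise ValueError("model_length must be positive")
    return reference_length / model_length


def rescale(vertices, factor: float):
    """Apply a uniform scale about the origin to a list of (x, y, z) points."""
    return [(x * factor, y * factor, z * factor) for x, y, z in vertices]


# Trunk height in the scale-accurate model (metres, from the text) versus
# the same height measured in an unscaled model (hypothetical value).
factor = scale_factor(reference_length=7.20, model_length=3.6)
print(f"scale factor: {factor:.3f}")

# Two hypothetical vertices at the base and the top of the unscaled trunk.
print(rescale([(0.0, 0.0, 0.0), (0.0, 0.0, 3.6)], factor))
```

A single distance only fixes scale; orientation and position would still need to be aligned separately, which is why a visual check against the RTK model remains useful.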

3. Results

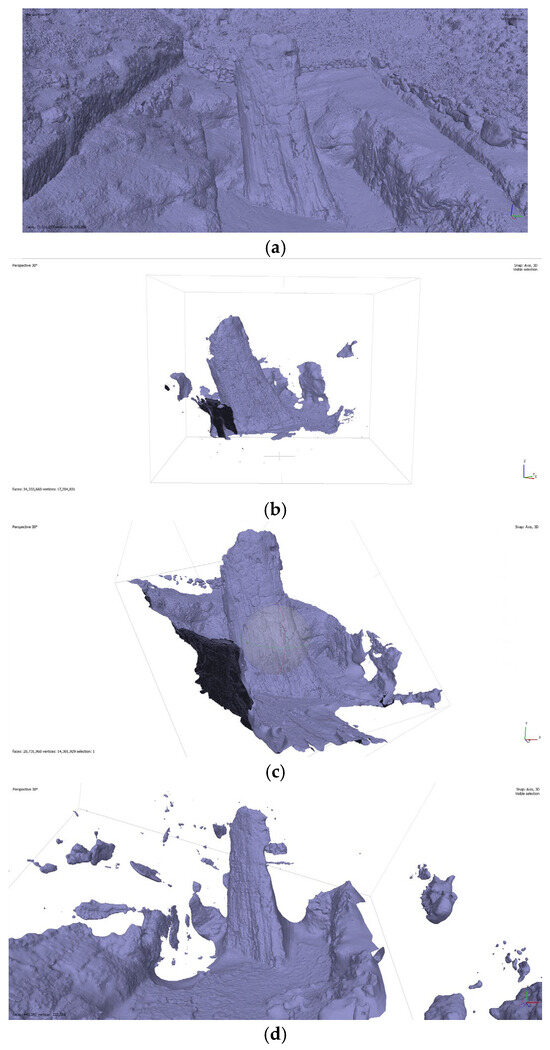

3.1. Geometry, Confidence and Shaded Views

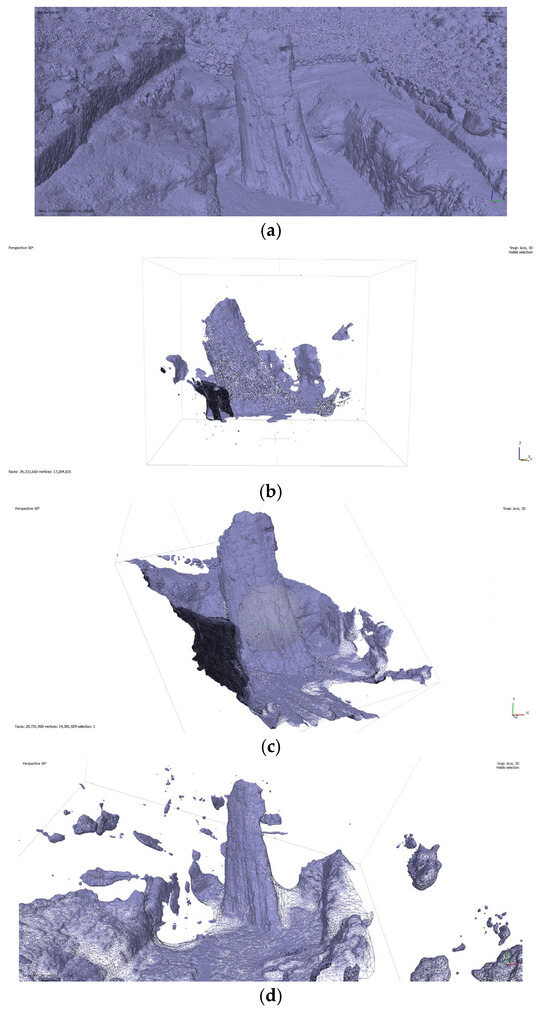

Photogrammetry processing had its own set of challenges, considering the large image base of the dataset and the aim of high-fidelity outputs. To speed up processing times, multiple GPUs were used; however, a bottleneck was observed based on VRAM capacity, with cards offering less than 24 GB of VRAM causing the process to fail mid-way and requiring a restart. The proposed cluster of GPUs comprised two RTX3090-based GPUs, an RTX3080 (10 GB) and an RTX2070 (8 GB). Only the 3090 and 4090 cards were used since they all had 24 GB VRAM, large enough to fit our image sets. This also led to the conclusion that photorealistic large-scale photogrammetry processing is currently limited to high-end devices due to VRAM limitations. Following photogrammetry processing, the resulting geometry was displayed in the following views, presented within Agisoft Metashape v2.0.2: Wireframe, Solid, Confidence and Shaded. Observing the Wireframe view, we could see the density of the geometry, while the Solid view showed us a solid mesh, more accurately displaying the surface. The Confidence view visualised the model in a way that highlighted problem areas where, for example, there was not enough overlap to achieve a more accurate reconstruction. The Shaded view provided a more realistic view of the model, also including texture. Wireframe was the first view to be observed (Figure 8).

Figure 8.

Wireframe view of models produced through photogrammetry processing: (a) Zenmuse P1, (b) Xiaomi Mi 11 Lite 5G, (c) Apple iPhone 11 Pro, and (d) Insta360 Pro.

Observing the views, it is obvious that the Zenmuse P1 model had the densest geometry, also covering a wider area. This reflects the fact that the Zenmuse P1 had the highest-resolution camera sensor of all the devices, as well as a larger number of pictures since it was an aerial scan of the area. The iPhone model seemed to be the second densest, while the Xiaomi phone and Insta360 models produced results that were not really usable due to inaccuracies and large gaps. When comparing the Solid model views, the observations were rather similar (Figure 9).

Figure 9.

Solid view of models produced through photogrammetry processing: (a) Zenmuse P1, (b) Xiaomi Mi 11 Lite 5G, (c) Apple iPhone 11 Pro, and (d) Insta360 Pro.

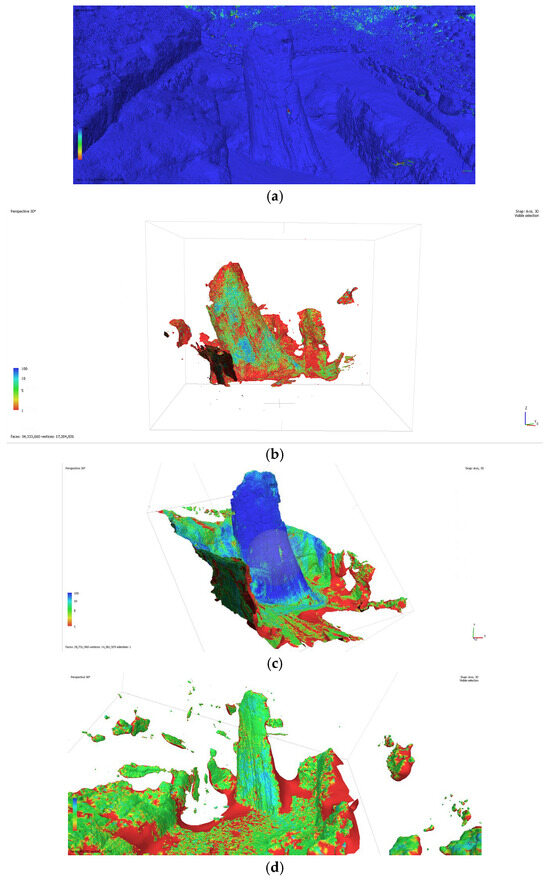

The Confidence view uses a colour range from red to blue, with reds being the lows and blues being the highs. High values represent a more accurate model with fewer problematic reconstruction areas, while low values highlight issues. All four models were compared (Figure 10). The model with the Zenmuse camera again appeared to be the least problematic of the four, followed by the iPhone model, the Xiaomi model and, lastly, the one generated using content from the Insta360 Pro camera. At this point, it was rather obvious that the Insta360 Pro model was unusable as it did not have any blue areas at all, with the Xiaomi model being similarly flawed. Furthermore, the Insta360 Pro model had quite large gaps.

Figure 10.

Confidence view of models produced through photogrammetry processing: (a) Zenmuse P1, (b) Xiaomi Mi 11 Lite 5G, (c) Apple iPhone 11 Pro, and (d) Insta360 Pro.
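The red-to-blue convention of the Confidence view can be illustrated with a simple colour ramp. The sketch below is only an approximation for illustration: it assumes a linear hue interpolation from red through green to blue, which is not necessarily the exact colour map used by Agisoft Metashape, and the value range passed in is hypothetical.

```python
import colorsys


def confidence_to_rgb(confidence: float, low: float, high: float):
    """Map a confidence value to an (r, g, b) tuple, red = low, blue = high.

    Values are clamped to [low, high] and the hue is interpolated linearly
    from 0 degrees (red) to 240 degrees (blue), passing through green.
    """
    if high <= low:
        raise ValueError("high must be greater than low")
    t = min(max((confidence - low) / (high - low), 0.0), 1.0)
    hue = t * (240.0 / 360.0)
    return colorsys.hsv_to_rgb(hue, 1.0, 1.0)


# Hypothetical confidence range of 0..10 for illustration.
for value in (0, 5, 10):
    print(value, confidence_to_rgb(value, low=0, high=10))
```

With such a ramp, a model showing no blue areas at all, as was the case for the Insta360 Pro output, never reaches the upper part of the confidence range.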

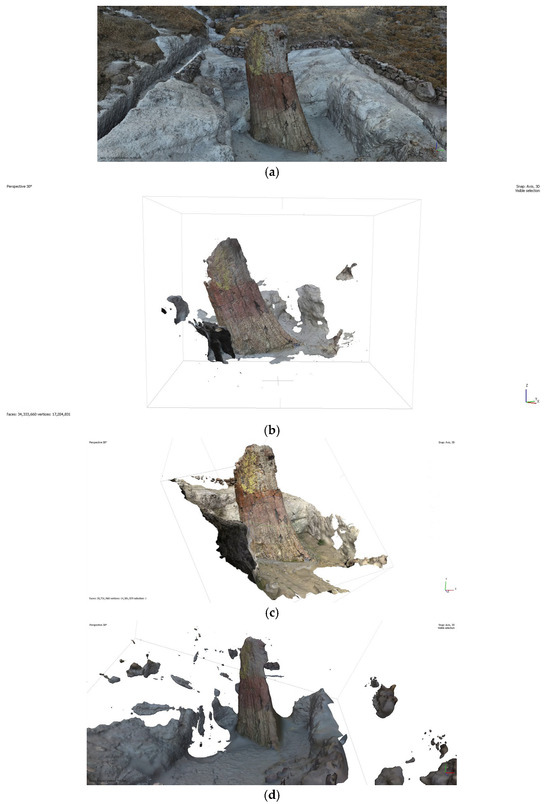

The Shaded view provided a more realistic image of the models; however, it also made obvious the shortcomings of each device when it comes to capturing detail (Figure 11).

Figure 11.

Shaded view of models produced through photogrammetry processing: (a) Zenmuse P1, (b) Xiaomi Mi 11 Lite 5G, (c) Apple iPhone 11 Pro, and (d) Insta360 Pro.

3.2. Detail Fidelity

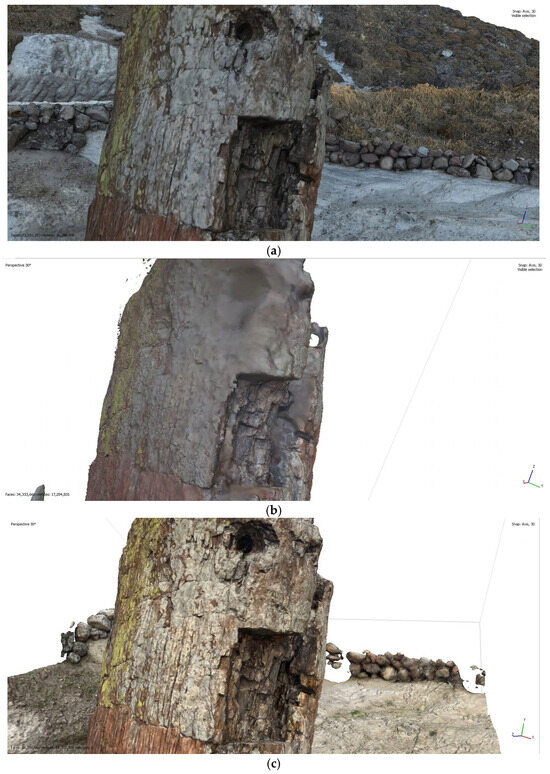

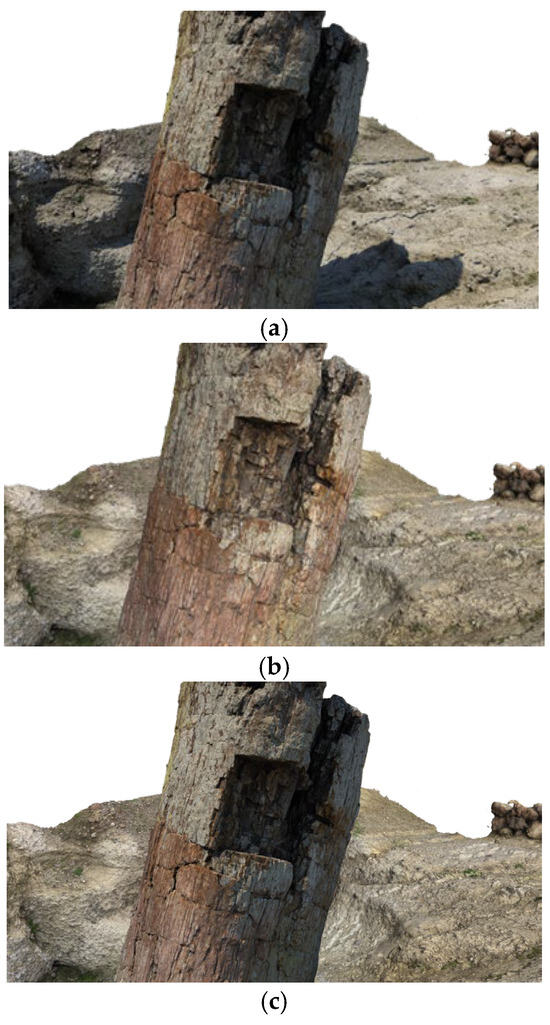

In order to select the most realistic model to be used for XR, the detail fidelity of the models had to be observed. At this stage, the Insta360 model had to be omitted since the large parts missing from the tree trunk area made it unsuitable for such use. Its source 360° panoramas were still of use for the environment of the final XR visualisation though. The remaining models that had at least the tree trunk reconstructed were examined through observation from a closer viewpoint (Figure 12).

Figure 12.

Closeup views of models: (a) Zenmuse P1, (b) Xiaomi Mi 11 Lite 5G, and (c) Apple iPhone 11 Pro.

It is quite obvious that the model derived from the iPhone image set presented superior fidelity in terms of details, a result perhaps surprising considering the higher resolutions of the Zenmuse camera and the Xiaomi phone, as well as the fact that the iPhone is a nearly 4-year-old mobile phone (released in September 2019). There are a number of reasons for this. One of them is the machine learning process used during picture taking on iPhones from the 11 Pro model onwards, named Deep Fusion.

Deep Fusion is a computational photography approach that uses nine shots in order to produce a picture: four shots before the shutter button is pressed (taken from the device’s preview/viewfinder buffer), four shots after pressing the shutter button and one long exposure shot. Then, within one second, the phone chipset’s neural engine analyses the short and long exposure shots, picking the highest fidelity ones and examining them on a pixel level in order to optimise details and achieve low noise. The result is a high-fidelity picture rivalling sensors of roughly 4× the pixel count, as demonstrated in the above comparison. Both the Zenmuse and the Xiaomi devices only used standard de-mosaic processes in order to produce the pictures, with no machine learning to help bring out details. Following a detail fidelity comparison, the iPhone 11 Pro-derived model was the model of choice for XR use since the higher fidelity images resulted in more detailed 3D models. To achieve an accurate scale, the iPhone 11 Pro-derived model was adapted to and visually checked against the model produced by the Zenmuse camera, since that camera was flown on the DJI Matrice 300 RTK UAS, whose RTK positioning ensures accurate scale. The selection of the model was carried out purely on visual criteria, with the one appearing to exhibit greater detail being the preferred one. As an added bonus, the preferred model was also derived from the smallest image dataset since each source picture was roughly a quarter of the resolution (12 vs. 45 megapixels) compared to the full-frame camera sensor, and less than a fifth of that of the 64-megapixel Android smartphone, resulting in the use of a fraction of the resources and storage needed.
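Deep Fusion itself is proprietary, so the following is not its algorithm. It is a deliberately simplified toy that illustrates one reason why combining several exposures of the same scene can lower noise: averaging nine simulated noisy frames of a constant-brightness patch. The frame count matches the nine shots mentioned above; the pixel count, brightness and noise level are arbitrary assumptions.

```python
import math
import random


def rms(values):
    """Root-mean-square of a sequence of numbers."""
    return math.sqrt(sum(v * v for v in values) / len(values))


def simulate_burst(num_frames, num_pixels=2000, true_value=100.0,
                   noise_sigma=10.0, seed=0):
    """Simulate frames of a flat patch with independent Gaussian noise."""
    rng = random.Random(seed)
    return [
        [true_value + rng.gauss(0.0, noise_sigma) for _ in range(num_pixels)]
        for _ in range(num_frames)
    ]


def average_frames(frames):
    """Merge frames by taking the per-pixel mean across the burst."""
    count = len(frames)
    return [sum(pixel_stack) / count for pixel_stack in zip(*frames)]


frames = simulate_burst(num_frames=9)
single_error = rms([p - 100.0 for p in frames[0]])
merged_error = rms([p - 100.0 for p in average_frames(frames)])
print(f"single-frame noise: {single_error:.2f}")
print(f"nine-frame average noise: {merged_error:.2f}")
```

For independent noise, averaging N frames is expected to reduce the noise by a factor of about √N, so about three for nine frames. A production pipeline additionally has to align frames, handle motion and weight exposures per pixel, none of which is modelled here.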

3.3. Finalising Material for XR

Following model selection, further processes needed to be conducted to further improve the model to be used for XR. One of the processes was de-lighting. While it is good practice to capture images for photogrammetry with no strong shadows present, that is not always possible; therefore, de-lighting is used as a post-process, in order to make the model suitable for any desirable lighting conditions while in XR (Figure 13).

Figure 13.

De-lighting tool in Agisoft Metashape.

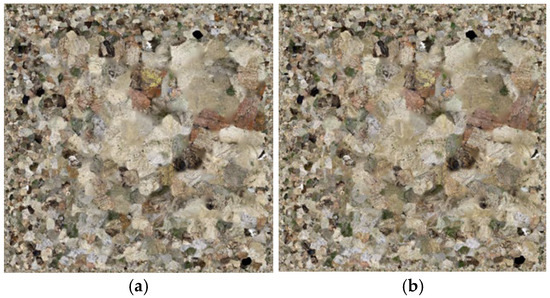

The de-lighting process alters the texture of the geometry, so any in-built shadows are softened or even eliminated (Figure 14). That gives the freedom of altering the lighting in the real-time engine, making the model suitable to be viewed at any desirable time in the day within the virtual world, without the shadows being unrealistic.

Figure 14.

Removing shadows: (a) original texture; (b) de-lighted.

An example of a model that would look bad without de-lighting would be if the pictures for photogrammetry were taken when the sun was hitting the subject from one side and casting hard shadows on the other side, then attempting to use that model in XR in a scene where the sun is shining in a different direction, at a different time of the day (Figure 15). An unprocessed model would still have its original shadows embedded, looking rather unrealistic and thus affecting immersion.

Figure 15.

De-lighting demonstration: (a) unprocessed model with sun at side; (b) de-lighted model with sun in front; (c) unprocessed model with sun in front, with inaccurate embedded shadows.

Following the de-lighting process, the geometry was placed within 360° panoramic imagery in order to have an environment around it, and the previously captured spatial audio was also included, for the purpose of being viewed as an XR experience (Figure 16).

Figure 16.

Processed model + 360° environment compiled and viewed in XR [16].

4. Discussion and Conclusions

The aim of this research was to investigate and use a variety of immersive multisensory media techniques in order to create convincing digital models of fossilised tree trunks for use in XR. Immersion and realism were key focus points in the early stages, in order to be able to approximate the digitally reconstructed output as close to the real artefact as possible. In order to do that, extra factors were also included such as capturing the spatial audio of the area using ambisonic microphones as well as that of the surrounding environment using multi-sensor 3D-360° camera equipment.

Throughout this research, both common and experimental methods were used, challenging familiar techniques with potentially improved new alternatives. The familiar method included image sets taken with more commonplace methods using conventional (flat) photography with normal lenses and sensors [17,18]. A slightly different approach was capturing one additional image set using the camera of a Xiaomi Mi 11 Lite 5G mobile phone, since it features an impressive 64-megapixel main camera within its specifications sheet. The new alternatives were 360 cameras using multiple sensors and fisheye ultra-wide-field-of-view lenses. At times, the alternative methods produced disappointing outputs, with the content produced using the 360° camera yielding inferior results, as the resulting geometry lacked precision and had both distortions and rather large gaps. Panorama-based photogrammetry has not been available for as long as conventional flat-imagery photogrammetry, so it is expected to improve as the technology matures.

Being an avid photographer and cinematographer in my spare time, I have become familiar with the advantages of computational photography in the last several years, driven by machine learning, and have repeatedly noticed a smaller and cheaper device, like an iPhone, challenging my professional equipment in terms of fidelity when it comes to outputs straight from the device. Based on that, I hypothesised how beneficial it would be to use such technology to capture the source image sets for photogrammetry capture; therefore, I used an iPhone 11 Pro mobile for one extra set and the results were exceedingly impressive.

While there is always some basic computational process involved in digital cameras [9] in terms of converting the sensor data to an image file, the advent of smart camera phones has made such processes even more commonplace. The rapid evolution of such camera phones essentially brought a good quality camera into most people’s pockets and the included ‘app stores’ made it much easier and more accessible to alter the way the camera module works, compared to altering the software of a dedicated digital camera. Since certain picture qualities such as a shallow depth of field and low light/low noise were normally the characteristics of cameras with high-quality large sensors and optics, mobile phones had to find software solutions in order to calculate and realistically recreate such characteristics formerly reserved for professional cameras.

The one recent development I focused my interest on in relation to this research was Deep Fusion by Apple since the claimed advantage was added details using multiple shots and machine learning to determine the areas of interest. Impressively, when put to the test, the additional dataset from the 12-megapixel iPhone 11 Pro rivalled all my previous results, when used for photogrammetry, in terms of fidelity. It was rather surprising to see the Zenmuse P1 professional UAS camera, using a full-frame (35 mm+) sensor boasting 45 megapixels of resolution, ending up with less detail fidelity compared to a small (10 mm−) sensor with 12 megapixels of resolution, not to mention the stark contrast compared to the results produced by the otherwise colossal 64-megapixel content shot with the Xiaomi mobile phone. The advantages of the Machine Learning Computational Photography approach were rather obvious in the results, to the point of convincing me to carry out all future photogrammetry work with it from now on.

Naturally, not all photogrammetry tasks are possible with a mobile phone, based on area and size requirements. This was partly the case here, with the fossilised tree trunk being rather tall and not normally possible to reach with a handheld device. In this case, the problem was solved by using a rather long monopod; however, when collecting visual material from much larger structures, it would be impractical or even impossible to use or construct monopods to match such heights. Seeing what is being photographed can also be an issue since these devices use their displays as a preview screen; this was also resolved during this project by using proprietary solutions to live preview and control the mobile phone with a smartwatch.

Camera technology is constantly evolving, especially digital cameras that rely on sensors and internal processing for results. While computational photography-assisted devices are not as widely available outside of the smartphone domain, it is likely that technology will find its way to becoming embedded in all digital camera equipment in the near future, including cameras such as the Zenmuse P1 used with the UAS for this research. Until such a development appears in readily available products, I am already building a custom mount where I can attach computational photography-capable mobile phones to a UAS as well as signal-repeating equipment for remote controlling and previewing purposes.

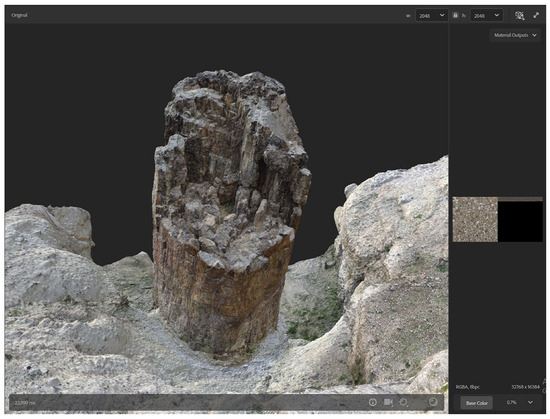

Newer photogrammetry technologies will also be used in the future for further comparison and experimentation. Some initial tests have already been made with the 3D Capture tool in the recently released beta of Adobe Substance 3D Sampler [19], with surprisingly accurate results (Figure 17) at a fraction of the processing time required by Agisoft Metashape.

Figure 17. Highly detailed photogrammetry result using Adobe Substance 3D Sampler.

Advanced Physically Based Rendering (PBR) materials are also being considered for future use, aiming for even more realism and flexibility. Moreover, for compatibility with a wider range of systems, retopology techniques are being considered, essentially reconstructing the mesh with lower-polygon equivalents while simulating the lost depth information through PBR materials. Advanced real-time geometry systems such as Nanite in Unreal Engine 5 are also being considered, as they enable the use of very high polygon counts while keeping them workable on lower-spec hardware.
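As a rough illustration of what reducing a mesh to a lower-polygon equivalent involves, the sketch below implements vertex-clustering decimation in plain Python. This is a deliberately simple stand-in chosen for illustration: it is not the retopology workflow planned for this project, nor what dedicated retopology tools do, and it does not cover baking the lost detail into PBR normal or displacement maps. The function name `cluster_decimate` and its parameters are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Vertex = Tuple[float, float, float]
Triangle = Tuple[int, int, int]


def cluster_decimate(
    vertices: List[Vertex], triangles: List[Triangle], cell_size: float
) -> Tuple[List[Vertex], List[Triangle]]:
    """Merge all vertices that fall in the same cubic grid cell, then rebuild the triangles."""
    cell_to_new: Dict[Tuple[int, int, int], int] = {}
    sums: List[List[float]] = []  # running coordinate sums per merged vertex
    counts: List[int] = []        # number of original vertices per merged vertex
    remap: List[int] = []         # old vertex index -> new vertex index

    for x, y, z in vertices:
        cell = (
            math.floor(x / cell_size),
            math.floor(y / cell_size),
            math.floor(z / cell_size),
        )
        idx = cell_to_new.get(cell)
        if idx is None:
            idx = len(sums)
            cell_to_new[cell] = idx
            sums.append([0.0, 0.0, 0.0])
            counts.append(0)
        sums[idx][0] += x
        sums[idx][1] += y
        sums[idx][2] += z
        counts[idx] += 1
        remap.append(idx)

    # Each merged vertex sits at the average position of the vertices it replaces.
    new_vertices = [(s[0] / c, s[1] / c, s[2] / c) for s, c in zip(sums, counts)]

    new_triangles: List[Triangle] = []
    seen = set()
    for a, b, c in triangles:
        a2, b2, c2 = remap[a], remap[b], remap[c]
        if a2 == b2 or b2 == c2 or a2 == c2:
            continue  # triangle collapsed to an edge or a point
        key = frozenset((a2, b2, c2))
        if key in seen:
            continue  # duplicate of a triangle already kept
        seen.add(key)
        new_triangles.append((a2, b2, c2))

    return new_vertices, new_triangles


if __name__ == "__main__":
    # Two triangles sharing an edge; vertices 0 and 1 are close enough to merge.
    verts = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
    tris = [(0, 1, 3), (1, 2, 3)]
    low_verts, low_tris = cluster_decimate(verts, tris, cell_size=0.5)
    print(len(verts), "->", len(low_verts), "vertices")
    print(len(tris), "->", len(low_tris), "triangles")
```

A larger `cell_size` merges more vertices and removes more triangles, at the cost of surface detail; in a PBR workflow that detail would then be approximated by texture maps rather than geometry.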

The findings of this research reveal how recent developments in mobile imaging technology can democratise these processes, enabling higher-fidelity results for smaller organisations or enthusiasts with limited budgets, while also helping more established institutions and anyone involved in XR industry applications to make better use of their resources. Observing superior results from a sensor a fraction of the size of a typical full-frame photography sensor, and with roughly a quarter of the resolution, means that more people can effectively engage with processes that once required larger budgets and were usually limited to academic, scientific or industrial organisations. The fact that mobile imaging improves every year owing to fierce competition in that field also adds potential for even higher-quality results in the near future, in contrast to the less competitive field of more conventional cameras and imaging hardware.

Before experimenting with such techniques, a more traditional workflow was used: obtaining a budget and ordering the ‘industry standard’ equipment for aerial imaging, namely the best imager the UAS manufacturer could offer. This was both more costly and more resource intensive, since the images were 45 megapixels each, compared to the phone’s 12 megapixels. Common sense would dictate that bigger and ‘established’ techniques would yield better results; however, as our results show, that proved not to be the case: the more sophisticated approach using images from the smartphone delivered visibly superior, significantly more detailed photogrammetry outputs. Considering how rapidly smartphones are evolving, the future of digital imaging looks exciting.

Author Contributions

Conceptualisation, methodology, software, validation, formal analysis, investigation, resources, data curation, writing—original draft preparation, writing and visualisation were conducted by C.P. Review and editing, supervision, project administration and funding acquisition by N.Z. and N.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Research e-Infrastructure “Interregional Digital Transformation for Culture and Tourism in Aegean Archipelagos” {Code Number MIS 5047046}, which is implemented within the framework of the “Regional Excellence” Action of the “Competitiveness, Entrepreneurship and Innovation” Operational Programme. The action was co-funded by the European Regional Development Fund (ERDF) and the Greek State [Partnership Agreement 2014–2020].

Data Availability Statement

Data are contained within the article.

Acknowledgments

We thank Stavros Proestakis for his help with the Xiaomi mobile phone terrestrial image set and Giorgos Tataris for kindly helping with mapping the location of the geopark. We also wholeheartedly appreciate the staff of the Lesvos Petrified Forest for welcoming us to the geopark and assisting with all our needs.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Banfi, F.; Previtali, M. Human-Computer Interaction Based on Scan-to-BIM Models, Digital Photogrammetry, Visual Programming Language and eXtended Reality (XR). Appl. Sci. 2021, 11, 6109.

- Apple ARKit. Available online: https://developer.apple.com/augmented-reality/arkit/ (accessed on 1 July 2023).

- Google ARCore. Available online: https://developers.google.com/ar (accessed on 1 July 2023).

- Microsoft Hololens. Available online: https://www.microsoft.com/en-us/hololens (accessed on 1 July 2023).

- Edler, D.; Keil, J.; Wiedenlübbert, T.; Sossna, M.; Kühne, O.; Dickmann, F. Immersive VR Experience of Redeveloped Post-Industrial Sites: The Example of “Zeche Holland”, Bochum-Wattenscheid. J. Cartogr. Geogr. Inf. 2019, 69, 267–284.

- Chang, S.C.; Hsu, T.C.; Jong, M.S.Y. Integration of the peer assessment approach with a virtual reality design system for learning earth science. Comput. Educ. 2020, 146, 103758.

- Bates-Domingo, I.; Gates, A.; Hunter, P.; Neal, B.; Snowden, K.; Webster, D. Unmanned Aircraft Systems for Archaeology Using Photogrammetry and LiDAR in Southwestern United States. 2021. Available online: https://commons.erau.edu/study-america/1 (accessed on 20 October 2024).

- Zhang, F.; Zhao, J.; Zhang, Y.; Zollmann, S. A survey on 360° images and videos in mixed reality: Algorithms and applications. J. Comput. Sci. Technol. 2023, 38, 473–491.

- Delbracio, M.; Kelly, D.; Brown, M.S.; Milanfar, P. Mobile computational photography: A tour. Annu. Rev. Vis. Sci. 2021, 7, 571–604.

- Zouros, N. European Geoparks Network. Episodes 2004, 27, 165–171.

- Zouros, N. Petrified Forest Park, Bali Alonia. Available online: https://www.lesvosmuseum.gr/en/parks/petrified-forest-park-bali-alonia (accessed on 1 July 2023).

- Horowitz, S. The Universal Sense: How Hearing Shapes The Mind; Bloomsbury Publishing: New York, NY, USA, 2012.

- Gerzon, M.A. Periphony: With-Height Sound Reproduction. J. Audio Eng. Soc. 1973, 21, 2–10.

- Zoom H2n, Zoom Corporation, Tokyo, Japan. Available online: https://zoomcorp.com/en/gb/handheld-recorders/handheld-recorders/h2n-handy-recorder/ (accessed on 1 July 2023).

- Agisoft Metashape. Agisoft Metashape Professional Edition; Agisoft LLC: St. Petersburg, Russia, 2023.

- Epic Games Unreal Engine. Unreal Engine, version 5.2; Epic Games Inc.: Cary, NC, USA, 2023.

- Westoby, M.J.; Brasington, J.; Glasser, N.F.; Hambrey, M.J.; Reynolds, J.M. “Structure-from-Motion” photogrammetry: A low-cost, effective tool for geoscience applications. Geomorphology 2012, 179, 300–314.

- Smith, M.W.; Carrivick, J.L.; Quincey, D.J. Structure from motion photogrammetry in physical geography. Prog. Phys. Geogr. 2015, 40, 247–275.

- Adobe Substance 3D Sampler. Adobe Substance 3D Sampler 3D Capture Beta Edition; Adobe Inc.: San Jose, CA, USA, 2023.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).