1. Introduction

Heart rate (HR) is a crucial indicator of an individual’s health status [1]. HR is commonly measured using contact-based devices that rely on physiological signals, such as electrocardiography (ECG) and photoplethysmography (PPG) [2,3]. In contrast, remote photoplethysmography (rPPG) enables noncontact estimation based on the PPG signal [4]. Since its development, rPPG-based algorithms have occupied a prominent position in remote heart rate estimation [5]. However, accurately capturing heart rate during dynamic cardiovascular activities, such as treadmill running or elliptical training, remains a persistent challenge. These limitations could potentially be addressed by gaining a deeper understanding of the light spectrum [6], by investigating the overlap between computer vision/neural networks and health monitoring [7,8,9], or by incorporating an additional sensor [10]. We aimed to explore methods for enhancing the performance of rPPG algorithms, particularly in environments with regular movement patterns, such as treadmill workouts. To this end, we also conducted an in-depth study of existing algorithms.

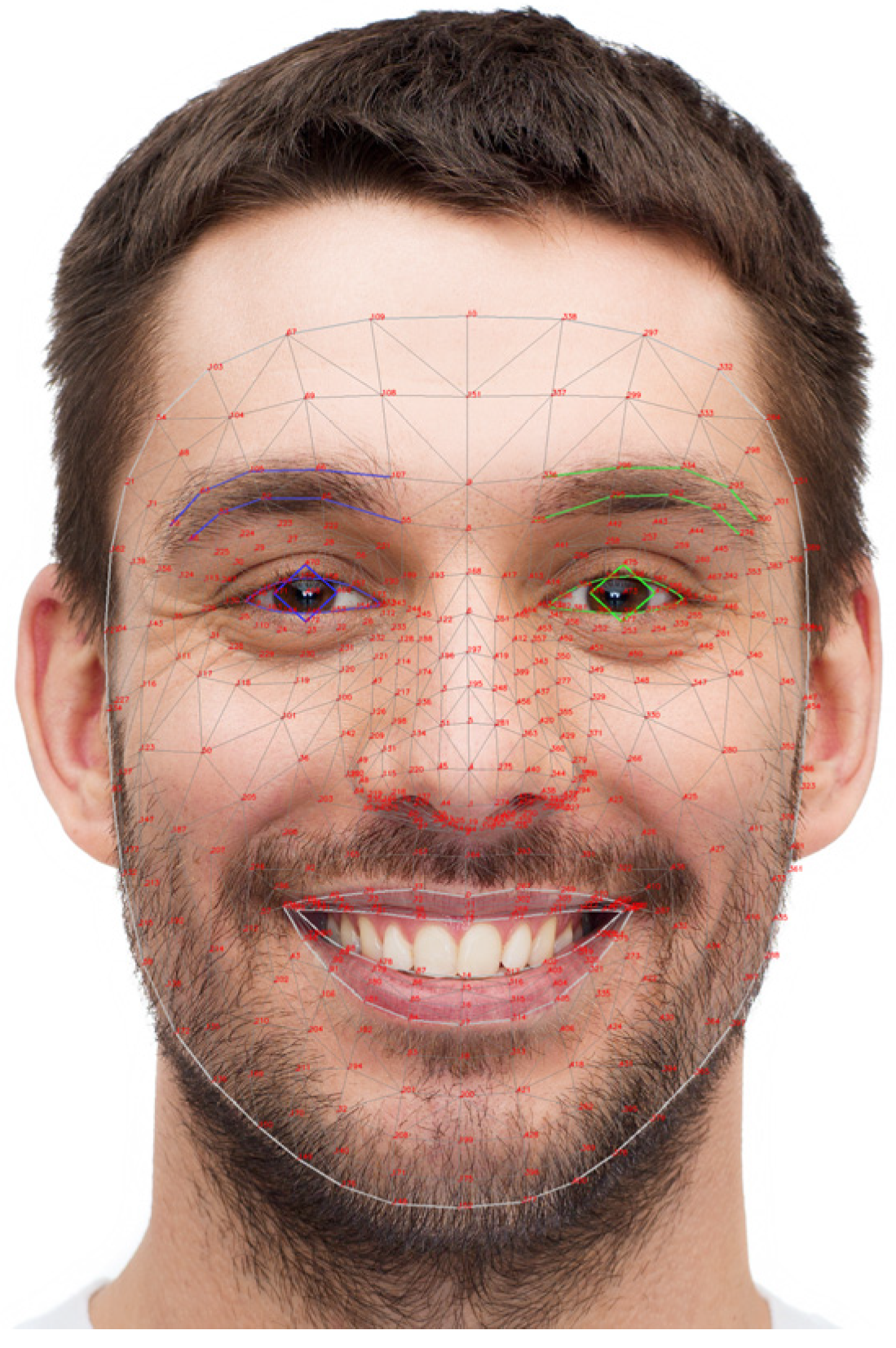

rPPG-based algorithms primarily extract the blood volume pulse (BVP) from skin pixels in facial video frames for HR estimation [11,12,13,14,15]. These algorithms estimate heart rate from changes in skin color caused by variations in capillary blood flow due to the heartbeat. To make this approach concrete, the light reflected from the skin must be modeled. This reflected light can be mathematically represented as follows [5]:

In Equation (1), $u_c$ represents the unit vector of skin color, $I_0$ denotes the stationary part, and $i(t)$ denotes the time-varying part. $u_s$ represents the unit vector of the light spectrum, and $s(t)$ denotes the component caused by motion. $u_p$ is the unit vector of the pulse component in the RGB signal, and $p(t)$ represents the pulse signal. The goal of rPPG algorithms is to separate and extract the pulse component (BVP) from the RGB signal in Equation (1).
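For reference, the linearized skin reflection model described in [5] is commonly written in the form below. This is a sketch consistent with the symbol definitions above, not necessarily the exact typesetting of Equation (1); the stationary skin reflection strength $c_0$ and the camera noise term $\mathbf{v}_n(t)$ are assumptions taken from that formulation and are not defined in this section.

$$
\mathbf{C}(t) \approx \mathbf{u}_c \, I_0 \, c_0 + \mathbf{u}_c \, I_0 \, c_0 \, i(t) + \mathbf{u}_s \, I_0 \, s(t) + \mathbf{u}_p \, I_0 \, p(t) + \mathbf{v}_n(t)
$$

Read term by term, the first term is the constant skin color, the second is the intensity variation along the skin color direction, the third is the motion-induced specular variation, and the fourth is the pulse component that rPPG algorithms aim to isolate.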

Blind source separation (BSS) separates source signals from a mixture and can therefore be used to isolate the pulse component from RGB signals that consist of various types of light reflected from the skin. Independent component analysis (ICA) is a representative BSS method that can be applied to RGB signals to extract heart rate [11]. Whereas ICA separates the BVP in Equation (1) from the mixed signal, the GREEN algorithm assumes that the green channel of the RGB signal contains sufficient information to estimate the BVP and uses this channel directly for heart rate estimation. Research on the green channel has shown that it has a higher signal-to-noise ratio than the other channels and effectively captures subtle BVP variations while minimizing the impact of motion artifacts [4]. The local group invariance (LGI) algorithm maintains consistent signal extraction across various facial regions and lighting conditions by analyzing local pixel groups within predefined regions of interest (ROIs) [12]. This approach reduces the susceptibility of rPPG signals to external perturbations, supporting reliable HR estimation even with changes in facial expression or minor movements. The Orthogonal Matrix Image Transformation (OMIT) algorithm specifically addresses motion-induced artifacts by employing adaptive filtering techniques that distinguish between genuine physiological signals and noise caused by subject movement [13]. OMIT primarily employs a reduced QR decomposition that utilizes Householder reflections. The pulse blood volume (PBV) algorithm focuses on accurately capturing blood volume changes within the microvasculature of facial skin [14]. By analyzing temporal fluctuations across the RGB channels and integrating spatial averaging techniques, PBV enhances signal quality and reliability even under challenging lighting and movement conditions. The CHROM algorithm leverages chrominance information to isolate physiological signals from common noise sources such as lighting fluctuations and subject movement, thereby enhancing the stability and accuracy of HR measurements in dynamic environments [15]. In CHROM, $u_p$ in Equation (1) is separated by projecting the chrominance signal onto a plane orthogonal to the standardized skin-tone vector; in other words, this step removes the specular reflection component. Alpha tuning is then carried out based on the intensity of the chrominance signal, using a scaling factor that is determined dynamically from the standard deviation of the signal after the specular reflection has been removed. Through this process, $u_s$ and $u_p$ in Equation (1) are effectively separated. The plane-orthogonal-to-skin (POS) algorithm is a state-of-the-art approach that transforms the RGB color space to isolate pulsatile signals from ambient color variations [5]. POS decomposes $u_p$ using a process similar to that of CHROM. In contrast to CHROM, however, POS first removes intensity before performing alpha tuning with the specular reflection component. It is also regarded as more robust than CHROM because it defines the projection axis based on physiological principles.
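To make the contrast between GREEN and POS concrete, the following is a minimal Python sketch, not the implementation used in this work. It assumes the commonly published POS steps from [5] (temporal normalization, projection onto a plane orthogonal to the intensity direction, alpha tuning, and overlap-adding). The function names `synth_rgb`, `pos_pulse`, and `estimate_hr_bpm`, the 1.6 s window, the 0.7 to 4.0 Hz search band, and all values in the synthetic signal (skin color, pulse direction, amplitudes, frequencies) are illustrative assumptions.

```python
import numpy as np


def synth_rgb(fs=30.0, seconds=20.0, hr_hz=1.5, motion_hz=2.5):
    """Synthetic mean-RGB trace: weak pulse plus strong intensity variation.

    All constants below are illustrative assumptions, not measured values.
    """
    t = np.arange(int(fs * seconds)) / fs
    base = np.array([0.8, 0.6, 0.5])        # hypothetical mean skin color (R, G, B)
    u_p = np.array([0.33, 0.77, 0.53])      # hypothetical pulse direction
    intensity = 0.05 * np.sin(2 * np.pi * motion_hz * t)  # acts on all channels
    pulse = 0.005 * np.sin(2 * np.pi * hr_hz * t)
    return base * (1.0 + intensity[:, None]) + pulse[:, None] * u_p


def pos_pulse(rgb, fs, window_sec=1.6):
    """POS-style pulse extraction from an (N, 3) array of mean RGB values."""
    rgb = np.asarray(rgb, dtype=float)
    n = rgb.shape[0]
    win_len = int(round(window_sec * fs))
    # Both projection axes sum to zero, so they are orthogonal to (1, 1, 1).
    proj = np.array([[0.0, 1.0, -1.0], [-2.0, 1.0, 1.0]])
    out = np.zeros(n)
    for start in range(0, n - win_len + 1):
        win = rgb[start:start + win_len]
        normalized = win / win.mean(axis=0)   # temporal normalization per channel
        s = normalized @ proj.T               # shape (win_len, 2)
        std2 = s[:, 1].std()
        alpha = s[:, 0].std() / std2 if std2 > 0 else 0.0  # alpha tuning
        h = s[:, 0] + alpha * s[:, 1]
        out[start:start + win_len] += h - h.mean()          # overlap-add
    return out


def estimate_hr_bpm(signal, fs, lo_hz=0.7, hi_hz=4.0):
    """Heart rate as the dominant spectral peak inside a plausible HR band."""
    x = np.asarray(signal, dtype=float)
    x = (x - x.mean()) * np.hanning(len(x))
    spectrum = np.abs(np.fft.rfft(x))
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    band = (freqs >= lo_hz) & (freqs <= hi_hz)
    return 60.0 * freqs[band][np.argmax(spectrum[band])]


if __name__ == "__main__":
    fs = 30.0
    rgb = synth_rgb(fs=fs)
    print("GREEN estimate (bpm):", estimate_hr_bpm(rgb[:, 1], fs))
    print("POS estimate (bpm):", estimate_hr_bpm(pos_pulse(rgb, fs), fs))
```

In this synthetic setting the intensity variation affects all three channels equally, so the green channel alone is expected to be dominated by it, whereas the POS projection is designed to suppress it. Real exercise videos contain far less regular distortions, so the sketch only illustrates the principle.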

All of the aforementioned algorithms originate from the concept of extracting the BVP from video by utilizing the subtle color variations in the skin caused by heartbeats, and they have been designed to be robust against motion-induced noise. Many more studies have tested and improved the performance of rPPG-based algorithms under relatively static conditions, such as head movements or facial expression changes, than under more dynamic exercise scenarios, such as walking [16]. However, in physical exercise applications, such as treadmill running and stepping, these algorithms encounter a greater diversity and higher levels of movement-induced noise than in controlled laboratory environments. Consequently, these exercise conditions adversely affect the performance of rPPG-based algorithms for remote heart rate estimation.

We specifically focused on running scenarios, such as running—which involves instances where both feet are simultaneously off the ground, inducing faster and more vigorous movements, unlike other activities such as cycling or stepping. These circumstances generate movement noise, which is more difficult to mitigate than that encountered in other testing environments [

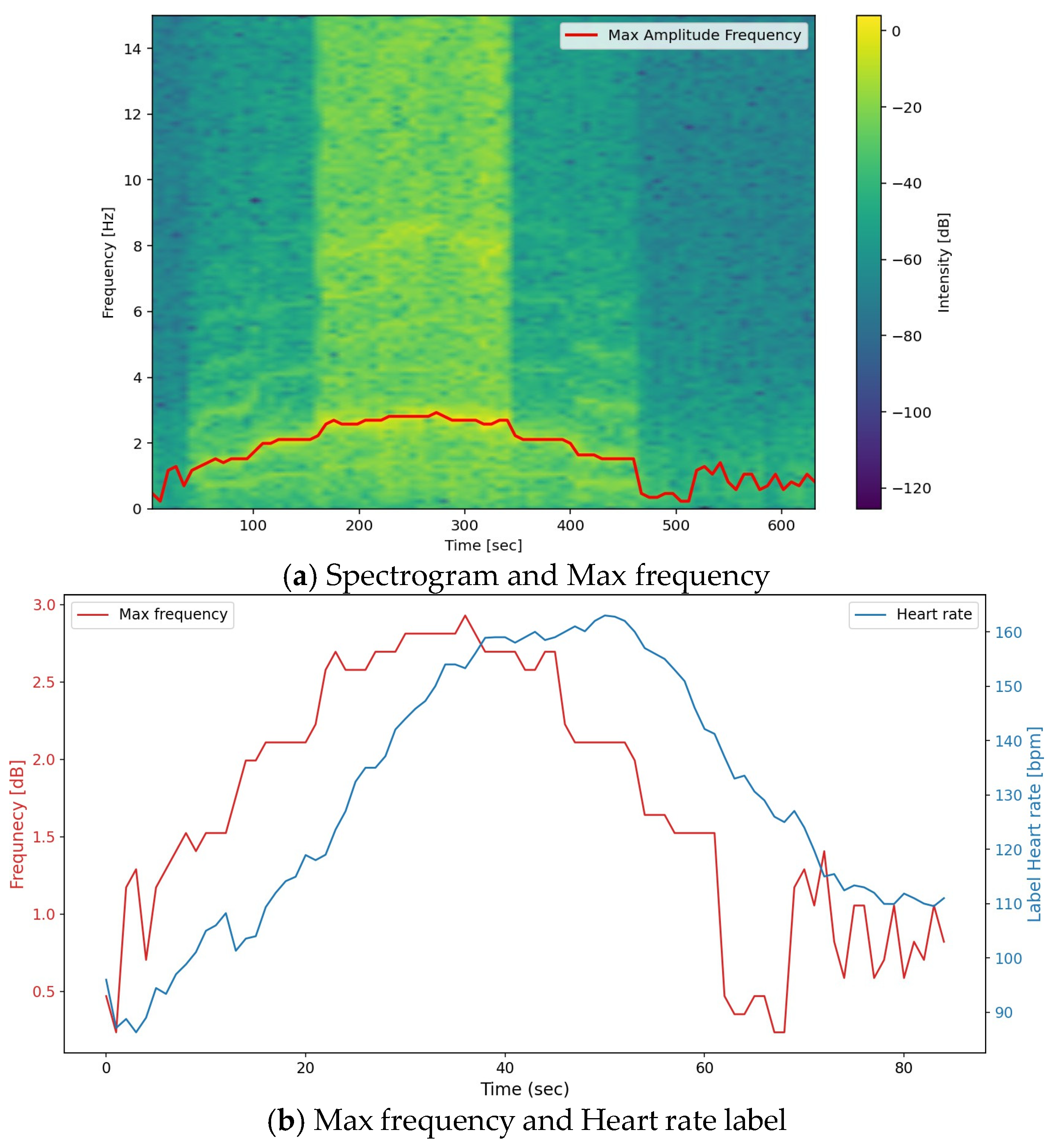

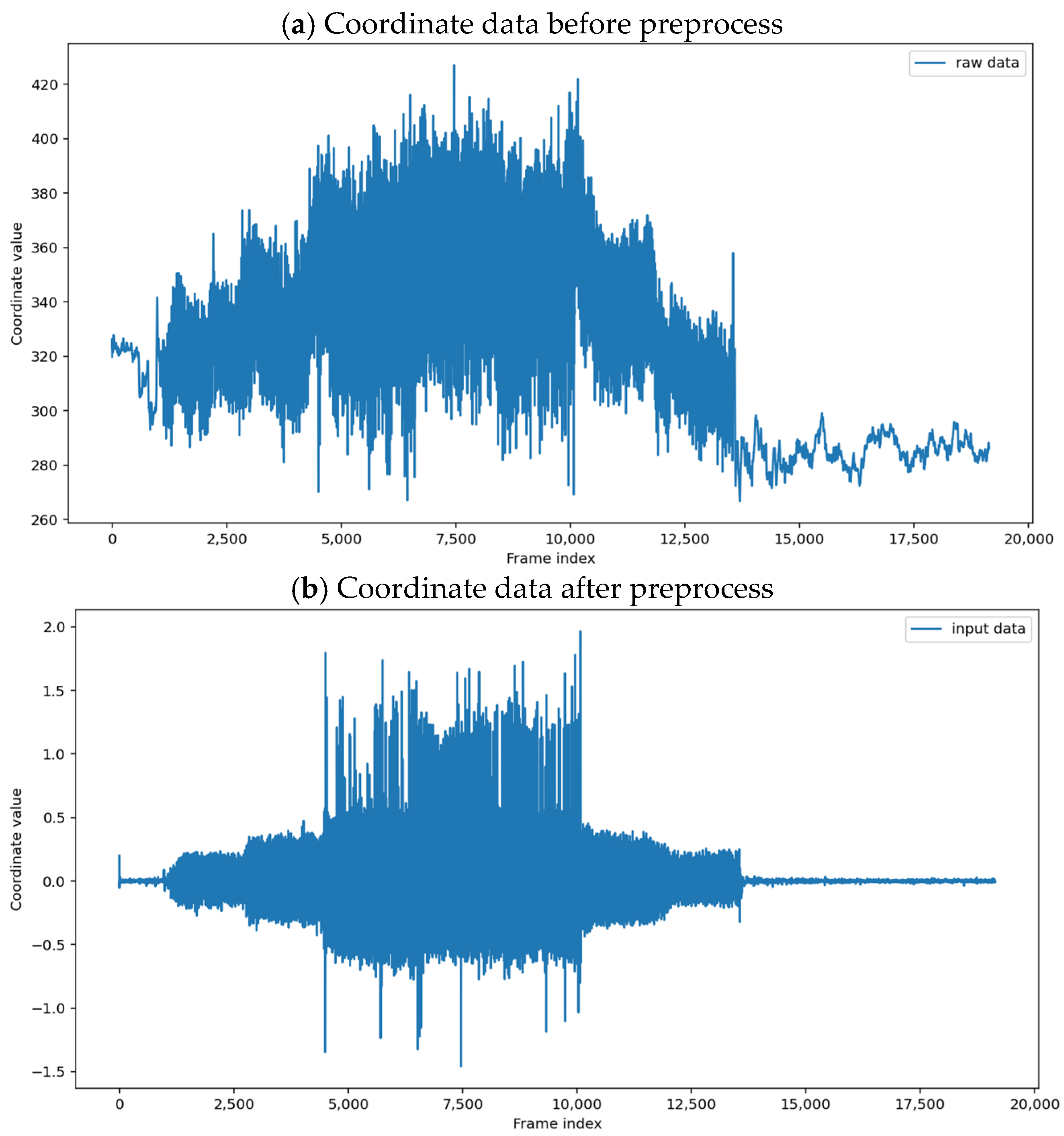

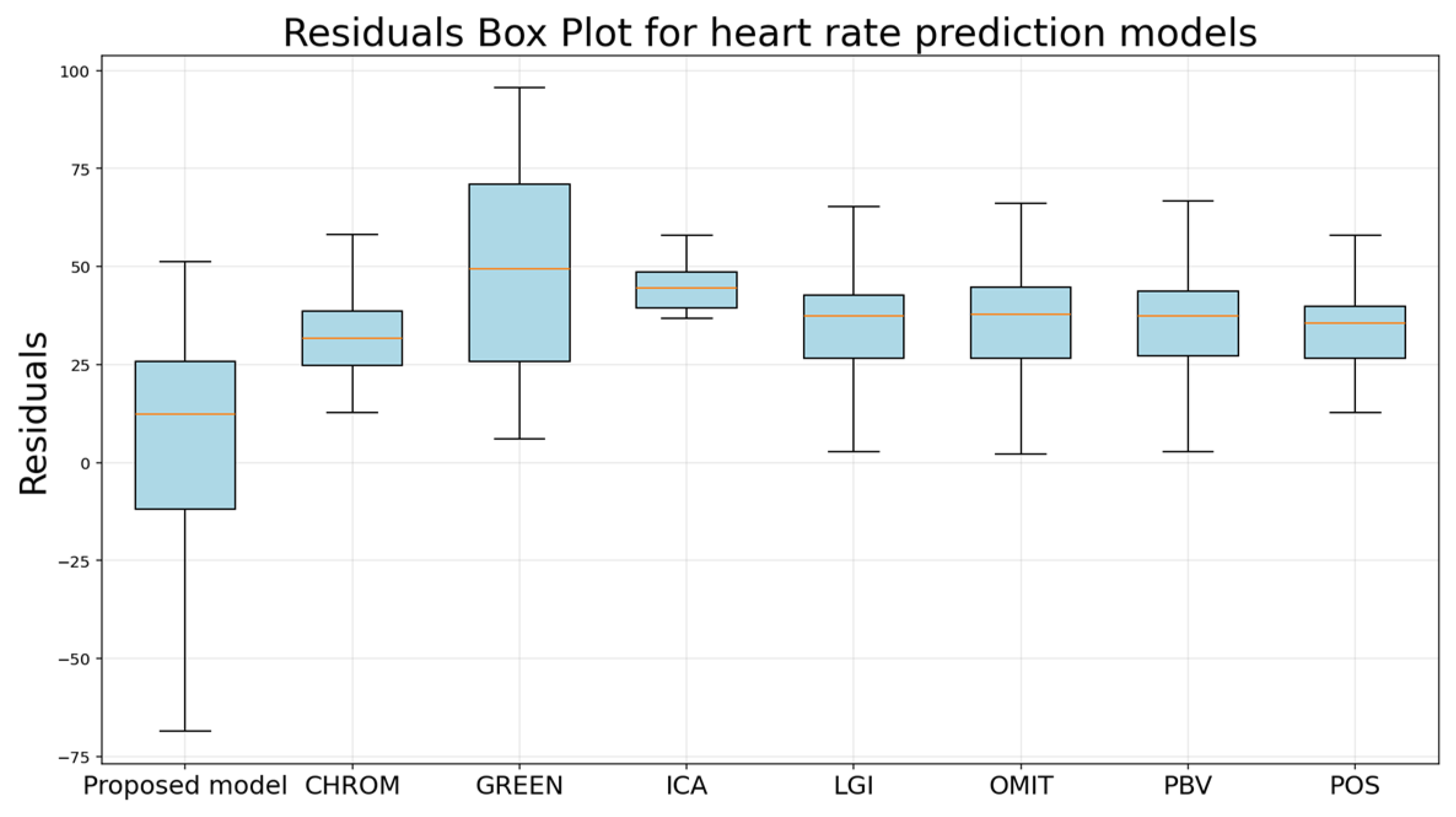

16]. We propose the following novel algorithm, which estimates heart rate by leveraging human motion to overcome these challenges.