Abstract

In this study, we propose a novel method for generating untargeted adversarial examples using a Generative Adversarial Network (GAN) in an unrestricted black-box environment. The proposed approach produces adversarial examples that are classified into random classes distinct from their original labels, while maintaining high visual similarity to the original samples from a human perspective. This is achieved by leveraging the capabilities of StyleGAN to manipulate the latent space representation of images, enabling precise control over visual distortions. To evaluate the efficacy of the proposed method, we conducted experiments using the CelebA-HQ dataset and TensorFlow as the machine learning framework, with ResNet18 serving as the target classifier. The experimental results demonstrate the effectiveness of the method, achieving a 100% attack success rate in a black-box environment after 3000 iterations. Moreover, the adversarial examples generated by our approach exhibit a distortion value of 0.069 based on the Learned Perceptual Image Patch Similarity (LPIPS) metric, highlighting the balance between attack success and perceptual similarity. These findings underscore the potential of GAN-based approaches in crafting robust adversarial examples while preserving visual fidelity.

1. Introduction

Deep neural networks (DNNs) [1] have demonstrated exceptional performance across a wide range of applications, including image classification [2], speech recognition [3], text classification [4], and pattern recognition. These models, owing to their deep architectures and ability to capture complex data distributions, have driven significant advancements in machine learning. Their success has paved the way for breakthroughs in various fields, such as autonomous driving, healthcare diagnostics, and natural language processing. However, despite these remarkable achievements, deep neural networks remain highly vulnerable to adversarial examples [5,6].

Adversarial examples are carefully crafted perturbations that, when added to inputs, cause the model to misclassify them, even though the altered inputs appear normal to human observers. These examples pose serious threats to critical systems reliant on deep neural networks, such as self-driving cars, where misclassifications can lead to catastrophic consequences, and medical diagnostic tools, where errors in interpreting medical images can have life-altering implications. Consequently, the study of adversarial examples has become a key area of research aimed at understanding and mitigating the vulnerabilities of neural networks.

Adversarial examples are typically generated in two primary settings: white-box and black-box. In the white-box setting, where an attacker has access to the model’s parameters and gradients, adversarial examples are generated by optimizing a loss function designed to maximize the model’s misclassification probability. This is achieved through gradient-based methods, such as the Fast Gradient Sign Method (FGSM) [7] or Projected Gradient Descent (PGD) [8], which introduce small perturbations to the input sample to increase the loss. Conversely, in the black-box setting, where the attacker lacks access to the model’s internal parameters and relies only on its outputs (predictions), generating adversarial examples is considerably more challenging. Without access to the model’s gradients, the attacker cannot directly compute the optimal direction for perturbation. This limitation has led to the development of alternative methods, such as transfer-based attacks and decision boundary attacks, which exploit the decision boundaries of the model to craft adversarial examples.

In the black-box scenario, where no direct information about the model’s internal workings is available, decision boundary attacks have proven particularly effective. These approaches synthesize adversarial examples by gradually adjusting the interpolation rate between a target image and the original sample. By strategically positioning the adversarial example near the model’s decision boundary, attackers can induce misclassification. This method is especially useful when the input sample’s probability distribution under the target model is unknown, rendering traditional gradient-based approaches infeasible.

To enhance adversarial attack strategies in black-box environments, generative models—particularly Generative Adversarial Networks (GANs) [9]—have shown significant promise. GANs, composed of a generator and a discriminator network, are widely used to create realistic data samples. In the context of adversarial attack generation, GANs can be utilized to produce adversarial examples that deceive the model while remaining visually indistinguishable from the original images to human observers. Notably, StyleGAN [10] has demonstrated its capability to generate high-quality images by learning the latent structure of image data. StyleGAN’s fine-grained control over image generation enables the creation of adversarial examples that preserve the key features of the original image while subtly modifying its appearance, thereby fooling the model into making incorrect predictions.

In this paper, we propose a novel approach for generating untargeted adversarial examples in an unrestricted black-box environment using StyleGAN. The adversarial examples produced by the proposed method are classified as a random class different from the original sample, while appearing visually similar to the original image to human observers. The key contributions of this paper are as follows:

- We introduce a method for generating untargeted adversarial examples in the black-box environment. Unlike traditional methods that require a specific target class, our approach generates adversarial examples that are classified into a random class, demonstrating the potential for broader applicability in real-world attack scenarios.

- We provide a detailed analysis of the proposed method, evaluating its performance in terms of attack success rate, image distortion, and visual quality. We show how the method balances between adversarial effectiveness and perceptual similarity.

- To demonstrate the efficacy of the proposed approach, we evaluate the method using the ResNet18 model [11] as the target classifier and the CelebA-HQ dataset [12] for image data. The experimental results showcase the method’s robustness in generating high-quality adversarial examples.

The remainder of this paper is organized as follows: Section 2 describes the related work. Section 3 describes the proposed method in detail. In Section 4, we present the experimental setup and results of the proposed approach. Section 5 provides a discussion on the effectiveness and limitations of the method. Finally, Section 6 concludes the paper and discusses potential avenues for future research in adversarial attack generation.

2. Related Work

The study of adversarial examples has garnered significant attention in recent years, driven by the increasing deployment of deep neural networks (DNNs) in safety-critical applications. This section reviews key developments in adversarial attacks, defenses, and the use of generative models for adversarial example generation, with a focus on both white-box and black-box settings.

2.1. Adversarial Attack Techniques

Adversarial attack methods [13,14] can broadly be classified into white-box and black-box approaches based on the attacker’s knowledge of the target model. In the white-box setting, where the attacker has full access to the model’s architecture and parameters, gradient-based methods such as the Fast Gradient Sign Method (FGSM) [7] and Projected Gradient Descent (PGD) [8] have been extensively studied. These methods craft adversarial perturbations by leveraging the gradient of the model’s loss function with respect to the input data, ensuring a high probability of misclassification while keeping the perturbations imperceptible to human observers.

In contrast, black-box attacks operate under more restrictive conditions, where the attacker lacks access to the model’s internal parameters or gradients. Transfer-based attacks, such as those described in [15], exploit the transferability property of adversarial examples, where examples crafted for one model are often effective against other models with similar architectures. Decision-based attacks, including the Boundary Attack [16], iteratively refine the adversarial example by querying the target model and moving toward the decision boundary. These methods are particularly suited for scenarios where only the model’s output predictions are available.

2.2. Generative Models in Adversarial Attacks

Generative models, particularly Generative Adversarial Networks (GANs) [17], have emerged as powerful tools for generating adversarial examples. GANs consist of a generator network that synthesizes realistic data samples and a discriminator network that distinguishes between real and generated samples. By leveraging GANs, researchers have demonstrated the ability to produce adversarial examples that not only deceive DNNs but also maintain high visual fidelity.

Among various GAN-based approaches, StyleGAN [18] has gained prominence for its ability to generate high-quality images with fine-grained control over features. StyleGAN’s latent space allows for the manipulation of semantic attributes in images, enabling the creation of adversarial examples that preserve key characteristics of the original sample. In [19], the authors employed GANs to craft adversarial examples by learning the distribution of clean and adversarial images, demonstrating the potential of generative models to generate diverse and effective attacks. Additionally, studies such as [20] have explored conditional GANs to generate class-specific adversarial examples, showcasing the adaptability of generative approaches.

2.3. Adversarial Defenses and Limitations

To counteract adversarial attacks, various defense mechanisms have been proposed, ranging from adversarial training [21] to input preprocessing techniques [22]. Adversarial training augments the training dataset with adversarial examples, thereby improving the model’s robustness against such attacks. However, this approach is computationally expensive and often limited to specific attack types. Input preprocessing methods, such as image compression or denoising, aim to remove adversarial perturbations before feeding inputs into the model. While effective against certain attacks, these defenses are often circumvented by adaptive adversarial strategies.

Despite significant progress in adversarial defense, many approaches exhibit limitations, particularly in black-box settings where the attacker’s knowledge is restricted. The trade-off between robustness and generalization remains a key challenge, as overly robust models may suffer from degraded performance on clean data. Moreover, most defenses focus on white-box attacks, leaving black-box scenarios relatively underexplored.

2.4. Advances in Black-Box Adversarial Attacks

Recent research has highlighted the challenges of generating adversarial examples [23,24,25,26,27] in black-box environments, where the attack relies on limited information about the target model. Query-efficient methods, such as the Natural Evolution Strategy (NES) [28], optimize adversarial perturbations with a minimal number of queries, improving practicality in real-world scenarios. Additionally, methods like the Surrogate Model approach [29] train a local model to approximate the target model’s decision boundaries, enabling effective adversarial example generation without direct access to the target.

Generative models have also been applied in black-box settings to enhance attack efficiency. In [30], the authors introduced a generative framework for crafting adversarial examples by modeling the spatial transformations of images. These advancements underline the growing interest in designing attacks that are both effective and computationally feasible under constrained settings.

2.5. Motivation for the Proposed Method

While significant strides have been made in adversarial attack techniques, most existing methods either focus on targeted attacks or require substantial prior knowledge of the target model. The proposed method seeks to address these limitations by introducing an untargeted attack framework based on StyleGAN, tailored for black-box environments. By leveraging StyleGAN’s latent space, the method generates adversarial examples that are visually indistinguishable from the original samples, achieving high attack success rates with minimal distortion. This approach bridges the gap between traditional adversarial attacks and generative techniques, offering a robust solution for real-world adversarial scenarios.

This study builds upon the foundational work in adversarial example generation and seeks to advance the field by combining the strengths of generative models with practical considerations for black-box settings. The proposed method contributes to a deeper understanding of neural network vulnerabilities and emphasizes the importance of developing more robust defense mechanisms.

2.6. Proposed Method for Generating Adversarial Examples

The first step in the proposed scheme involves transforming both the original sample and a random sample into latent vectors. For this purpose, we employ the pixel2style2pixel (pSp) encoder [31], which is a powerful framework designed to encode an image into a latent vector space. The pSp encoder leverages a pre-trained StyleGAN generator to extract features from images, mapping them into the extended latent space () of the generator. This process generates a set of latent vectors, one for each layer of the StyleGAN generator, allowing for multi-scale representation and fine-grained manipulation of image semantics.

In our approach, the original image and the random image are passed through the encoder to obtain their corresponding latent vectors:

where each represents a latent vector corresponding to a specific layer of the StyleGAN generator. The pSp encoder is particularly effective in this task because it generates a multi-scale feature pyramid from the StyleGAN latent space, which can capture different granularities of style information. By encoding both the original and random samples into the same latent space, we ensure that they share a common representation that allows for meaningful manipulations to be performed in the next step.

3. Proposed Scheme

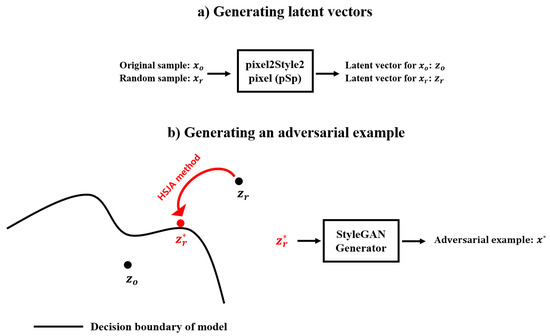

In this section, we present the proposed method for generating adversarial examples by combining the HopSkipJumpAttack (HSJA) method [32] with the StyleGAN model. The overall structure of the proposed scheme is illustrated in Figure 1. The method consists of two main steps: first, the conversion of the original and random samples into latent vectors, and second, the generation of adversarial examples by manipulating these latent vectors.

Figure 1.

Overview of the proposed scheme.

The first step in the proposed scheme involves transforming both the original sample and a random sample into latent vectors. For this purpose, we employ the pixel2style2pixel (pSp) encoder [31], which is a powerful framework designed to encode an image into a latent vector space. The pSp encoder leverages a pre-trained StyleGAN generator to extract features from images, mapping them into the extended latent space () of the generator. This latent space representation allows us to manipulate images at a semantic level, preserving high-level features such as facial structures, object types, or scene layouts, while providing flexibility to adjust the style or identity of the image.

In our approach, the original image and the random image are passed through the encoder to obtain their corresponding latent vectors:

where each represents a latent vector corresponding to a specific layer of the StyleGAN generator. The pSp encoder generates a multi-scale feature pyramid from the StyleGAN latent space, capturing different granularities of style information. By encoding both the original and random samples into the same extended latent space, we ensure that they share a common representation that allows for meaningful manipulations to be performed in the next step.

The second step involves the generation of adversarial examples by modifying the latent vector of the random sample to make it classified as a different class by the target model, while ensuring that the generated adversarial example remains perceptually similar to the original sample. This is achieved by applying the HSJA method, a decision-based attack method that minimizes the distance between the latent vectors of the original and random samples.

Specifically, the HSJA algorithm performs iterative updates to the latent vector , moving it in such a way that the adversarial example generated from the new latent vector is misclassified by the target model. The updates to are guided by the norm, which measures the Euclidean distance between the latent vectors of the original and random samples. By minimizing this distance, we ensure that the latent vectors remain close to each other, thereby preserving the essential content of the original image.

Once the updated latent vector is obtained, it is fed into the StyleGAN generator to produce the final adversarial example . The adversarial example is semantically similar to the original image, as it is derived from a latent space that closely resembles that of the original image, but it is misclassified by the model as an arbitrary class other than the original class. The iterative process of latent vector updates and adversarial example generation is performed several times, allowing the algorithm to converge to a point where the adversarial example is highly effective in deceiving the model. The overall procedure for generating adversarial examples using the proposed scheme is summarized in Algorithm 1.

| Algorithm 1 Proposed adversarial example generation |

Input: Original sample , Random sample , StyleGAN generator , operation function of model . Output: Generated proposed adversarial example .

|

The proposed method offers several key advantages that distinguish it from traditional adversarial attack methods. First, by leveraging the power of StyleGAN and the pSp encoder, the generated adversarial examples retain high perceptual quality, making them indistinguishable from the original samples to human observers. This is critical in applications where the visual integrity of the adversarial examples is important, such as in image-based security systems or medical image analysis.

Second, the use of the HSJA method allows for decision-based attacks in black-box settings, where the attacker does not have access to the model’s internal parameters or gradients. The iterative process of minimizing the distance between the latent vectors of the original and random samples ensures that the adversarial examples are crafted in a way that optimally exploits the model’s decision boundaries, leading to high attack success rates.

Finally, the ability to generate untargeted adversarial examples, where the class label of the original sample is changed to a random class, opens up new possibilities for adversarial attacks in scenarios where the attacker does not know the target class in advance. This makes the method more versatile and applicable to a wide range of adversarial attack scenarios.

4. Experiment and Evaluation

This section presents the experimental setup and the evaluation of the proposed method. We demonstrate the effectiveness of the proposed adversarial attack by using the CelebA-HQ dataset and ResNet18 model for facial recognition tasks. The results show that the adversarial examples generated by our method are successful in misleading the model while maintaining high perceptual similarity to the original images.

4.1. Experimental Setup

4.1.1. Dataset

The CelebA-HQ dataset [12], a high-quality face classification dataset, was used for our experiments. The dataset contains a total of 30,000 high-resolution face images with dimensions of 1024 × 1024 pixels. These images represent 6217 distinct identities, with each identity possessing 40 attribute labels. The images were split into a training set of 4263 images from 307 individuals, and a test set of 1215 images.

For the facial gender classification task, we utilized the gender-specific subset of the CelebA-HQ dataset, which contains 11,057 male images and 18,943 female images. The dataset was divided into training and test sets with an 80%–20% split, ensuring a sufficient amount of data for training the model and evaluating its performance.

4.1.2. Model

We used the ResNet18 model [11] as the target model for face classification tasks. ResNet18 is a convolutional neural network (CNN) that utilizes residual learning to address the vanishing gradient problem by incorporating skip connections. This model has been widely used in facial recognition tasks due to its ability to effectively capture hierarchical features of face images. In our experiments, the ResNet18 model achieved an accuracy of 87.8% on the original dataset. Since the model parameters remain unchanged when generating adversarial examples using StyleGAN, the accuracy on the original dataset remains consistent at 87.8%.

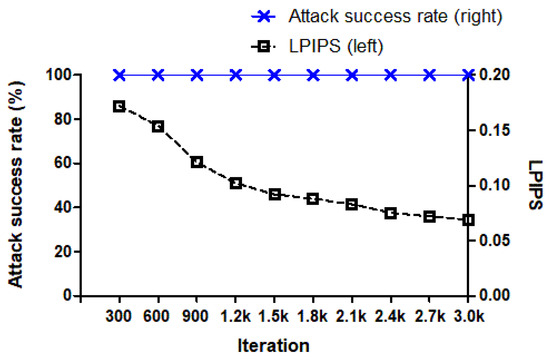

The misclassification of images generated through StyleGAN is illustrated in Figure 2 of the manuscript. This figure provides a detailed depiction of how the adversarial examples lead to incorrect predictions, demonstrating the effectiveness of the attack.

Figure 2.

Attack success rate and LPIPS value of the proposed adversarial example according to iteration.

Adversarial examples were generated by perturbing the latent vectors in the StyleGAN framework [10]. The StyleGAN generator was used to create realistic adversarial images, and the original images were mapped to the latent space using pSp encoding [31] to generate adversarial examples that could effectively mislead the ResNet18 model.

4.2. Experimental Results

Table 1 presents examples of adversarial examples generated by our method, along with their recognition class probabilities and the effect of iterations on the attack. The adversarial examples are compared with the original images, and the recognition class probabilities for each iteration are shown. As the number of iterations increases, the generated adversarial images become progressively more effective at misdirecting the classifier, as reflected in the decreasing classification probabilities for the original class.

Table 1.

Examples of proposed adversarial examples and original samples and recognition classes and probabilities according to iteration.

The "iterations” refer to the process of generating the adversarial examples by modifying the latent vector and inputting it into the pre-trained ResNet18 model multiple times. With each iteration, the latent vector is progressively altered, resulting in adversarial examples that increasingly resemble the original image but become more effective at misclassification.

For example, in Table 1, at iteration 300, the adversarial example of the first row is misclassified with a probability of 14.28%, compared to the original image’s classification probability of 63.63%. This significant decrease in the classification probability demonstrates that the adversarial attack has successfully misled the model. As the iterations continue, the misclassification becomes more pronounced, with the classification probability decreasing further, indicating that the adversarial image becomes more challenging for the classifier to identify correctly. This progression shows that the adversarial examples, while visually similar to the original images, are progressively more effective at fooling the model.

Figure 2 presents the attack success rate and the learned perceptual image patch similarity (LPIPS) [33] value of the proposed adversarial examples according to the number of iterations. LPIPS measures the perceptual similarity between two images by comparing their features extracted from intermediate layers of a pre-trained VGG network.

As the number of iterations increases, the LPIPS value decreases, indicating that the generated adversarial examples become perceptually more similar to the original images. Moreover, from iteration 300 onwards, the attack success rate reaches 100%, indicating that the adversarial attack is fully successful in misleading the model after a certain number of iterations. This result demonstrates that 300 iterations are the minimal number of attack iterations required to consistently generate adversarial examples that successfully fool the classifier.

To assess the generalizability of the proposed method, additional experiments were conducted using the FFHQ (Flickr-Faces-HQ) dataset [34] with the DenseNet121 model [35]. FFHQ is a high-quality dataset comprising a diverse set of face images with more variations compared to CelebA-HQ, providing a broader context for evaluating the robustness and applicability of the adversarial attack method.

In these experiments, the DenseNet121 model was employed due to its proven efficiency in feature reuse through dense connections and its strong performance in various image classification tasks. The results demonstrate that the proposed method effectively generates adversarial examples capable of misleading the DenseNet121 model. The impact of the adversarial examples, however, varied depending on specific characteristics of the FFHQ dataset, highlighting the influence of dataset attributes on attack performance.

Table 2 presents the analysis of the proposed method’s attack success rate and LPIPS (Learned Perceptual Image Patch Similarity) over iterations when evaluated on the FFHQ dataset using the DenseNet121 model. The results indicate a consistent trend in the attack success rate and the perceptual similarity of adversarial examples as iterations increase. At 100 iterations, the attack success rate is 67%, accompanied by an LPIPS value of 0.24, indicating relatively higher perceptual similarity differences. As the number of iterations increases to 300, the attack success rate reaches 100%, and the LPIPS value decreases to 0.19, demonstrating the improved efficiency of the adversarial examples with reduced perceptual differences. The attack success rate remains at 100% for iterations beyond 300, with a corresponding gradual reduction in LPIPS values. Specifically, the LPIPS value drops to 0.16 at 600 iterations, 0.13 at 1000 iterations, and eventually reaches 0.06 at 3000 iterations. This consistent decrease in LPIPS suggests that the adversarial examples become increasingly imperceptible to human observers while maintaining a high success rate in deceiving the DenseNet121 model.

Table 2.

Analysis of the proposed method’s attack success rate and LPIPS over iterations on the FFHQ dataset using the DenseNet121 model.

These findings highlight the effectiveness of the proposed method in generating adversarial examples that achieve high attack success rates while minimizing perceptual differences, even on a high-quality and diverse dataset such as FFHQ.

5. Discussion

5.1. Assumption

The proposed method is particularly advantageous in black-box attack scenarios, where the attacker only has access to the classification output of the target model, rather than the probability distribution over all classes. This is a realistic setting, especially in practical applications such as attacking commercial facial recognition systems. Unlike white-box attacks, which assume complete knowledge of the target model, the proposed method operates effectively with minimal prior information, demonstrating its practicality and adaptability in real-world situations.

5.2. Threat or Attack Model

The proposed attack method assumes a realistic and constrained black-box attack scenario, where the attacker has limited access to the internal parameters of the target system. In this scenario, the attacker can only query the system to obtain classification outputs, such as the predicted class, without having knowledge of the underlying model architecture, parameter values, or the probability distribution over all classes. This assumption reflects practical applications, including commercial facial recognition systems, where internal details of the models are typically inaccessible to attackers.

The attack leverages vulnerabilities in the decision boundary of the target model by applying subtle perturbations to input data, which mislead the model into making incorrect classifications. By utilizing a generative model such as StyleGAN, the proposed method identifies adversarial directions in the feature space. These directions enable the generation of adversarial examples that remain imperceptible to human observers and undetectable by automated defense mechanisms. This aspect is particularly significant in high-dimensional data domains, such as images, where exhaustive testing of all potential perturbations is infeasible for defenders.

The attacker in this scenario is assumed to have the capability to query the target model and observe its classification results. Additionally, the attacker is presumed to operate with minimal prior information, as they do not possess knowledge of the model’s architecture, training data, or parameter values. However, they are assumed to have access to a pretrained generative model, such as StyleGAN, which allows for the generation and modification of high-quality samples in the data domain of interest.

Executing the attack requires certain tools and resources. Specifically, the attacker needs access to the pretrained StyleGAN model to generate synthetic samples and the Hierarchical Joint Style-Attention (HJSA) method to effectively identify and apply adversarial perturbations. Furthermore, computational resources such as GPUs are necessary to perform iterative optimization, ensuring the efficiency of the attack process.

A potential attack scenario can be envisioned in the context of a commercial facial recognition system used for user authentication. In such a scenario, an attacker could collect publicly available images of the target individual and use StyleGAN to generate synthetic facial images that closely resemble the target. Subsequently, adversarial perturbations are applied iteratively until the generated images successfully deceive the recognition system without being detected by automated defenses. By detailing the system’s vulnerabilities, the attacker’s capabilities, and the required tools, this threat model illustrates the feasibility of the proposed attack.

5.3. Contribution

The proposed method introduces a novel approach for generating untargeted adversarial examples, aiming to create adversarial samples that are misclassified as any class other than the original class. This untargeted nature allows the method to achieve adversarial examples with less distortion and fewer iterations compared to targeted attacks, which require aligning the adversarial example with a specific class. By integrating advanced techniques such as StyleGAN and the Hierarchical Joint Style-Attention (HJSA) method, the proposed method effectively enhances both the efficiency and success rate of adversarial attacks, demonstrating the potential of combining generative models with adversarial methodologies.

5.4. Attack Success Rate

In our study, the attack success rate is defined as the proportion of adversarial examples that are misclassified as any class other than the original class. Specifically, for a given adversarial example, the attack is considered successful if the target model outputs a predicted label different from the original class label. This approach aligns with the definition of untargeted adversarial attacks, where the primary objective is to cause misclassification without targeting a specific alternative class.

For instance, if the evaluation dataset contains 100 samples and 95 of these samples are misclassified into classes other than their respective original classes, the attack success rate is calculated as

In the experiments presented in the paper, no additional thresholding mechanism was applied beyond the fundamental condition of misclassification. The success rate is directly computed based on the aforementioned definition across all samples in the test dataset. This ensures that the reported metric accurately reflects the method’s ability to induce misclassification under realistic conditions.

5.5. Attack Behavior and Class Probability Fluctuations

As the number of attack iterations increases, the perceptual image patch similarity (LPIPS) value decreases consistently, indicating that the generated adversarial examples become perceptually more similar to the original images. However, the attack success rate can exhibit irregular fluctuations across different iteration counts. This irregularity in the predicted class probability can be attributed to the nature of the adversarial perturbations generated during the optimization process.

During the attack, the adversarial perturbations are continuously adjusted in an attempt to mislead the classifier. The method operates in a high-dimensional space, where multiple local minima may exist. This can lead to variations in the class probabilities across iterations, causing the class probability to deviate and fluctuate even as the LPIPS value steadily decreases, indicating improved perceptual similarity. Ultimately, while the attack success rate stabilizes and reaches 100% around 300 iterations, the fluctuations in the class probabilities reflect the complexity and sensitivity of the classifier to adversarial perturbations at various stages of the attack process.

5.6. Attack Effectiveness

In terms of attack effectiveness, the method achieves a high success rate in misleading facial recognition models while preserving the perceptual similarity of adversarial examples to the original images. This is particularly important in practical scenarios, where subtle perturbations that remain undetectable to human observers and automated defenses are critical. The iterative optimization process employed in the method achieves a 100% attack success rate within 300 iterations, highlighting the robustness of the approach. Furthermore, the use of StyleGAN enables the generation of adversarial examples with high visual fidelity, ensuring that the perturbations are imperceptible while maintaining the intended adversarial effects.

5.7. Class-Wise Distribution of Misclassified Images

The proposed method generates untargeted adversarial examples by explicitly introducing random values to determine the target classes for misclassification. This ensures that no specific bias is introduced during the attack process, resulting in a random and uniform distribution of misclassified classes across all available categories. An analysis of the class-wise distribution of misclassified images was conducted to validate the randomness of the method. The results confirm that the misclassified examples are evenly distributed among the classes, demonstrating that the attack method induces random misclassification as intended. This characteristic underscores the untargeted nature of the proposed approach, ensuring that the adversarial perturbations effectively push the input data beyond the decision boundary of the original class without favoring any specific alternative class.

5.8. Domain-Specific Application of StyleGAN

The StyleGAN model employed in this study was trained using the CelebA-HQ dataset [12], a high-quality dataset comprising 30,000 high-resolution facial images with dimensions of 1024 × 1024 pixels. The dataset includes 6217 distinct identities, with each image annotated with 40 attribute labels. For our experiments, a publicly available pre-trained StyleGAN2 model, designed to generate high-fidelity facial images, was utilized. This model, which served as the foundation for generating adversarial examples, is available at the following repository (accessed on 1 January 2025): https://github.com/justinpinkney/awesome-pretrained-stylegan2.

In this study, adversarial examples were generated to attack a facial gender classification model trained on the CelebA-HQ dataset. The classification model utilized a ResNet18 architecture, initialized with pre-trained weights from PyTorch’s model zoo. The model was fine-tuned using the CelebA-HQ dataset to achieve optimal performance on the gender classification task.

The domain-specific nature of StyleGAN plays a critical role in its application. Since the latent space representations of StyleGAN are inherently tied to the dataset used for training, the generated adversarial examples remain within the domain of the training data. For instance, a StyleGAN model trained to generate images of cats would produce adversarial examples specific to the domain of cat images. Consequently, using a StyleGAN trained on a different domain, such as cats, would not effectively generate adversarial examples for a classifier trained on an unrelated domain, such as traffic signs. Furthermore, the proposed method is tailored to targeted adversarial attacks within the classification classes provided by the dataset used in the experiments. The adversarial examples leverage the latent space of the StyleGAN model, which is specific to the dataset used for training. As a result, misclassifications are induced within the classes of the corresponding dataset. Extending the method to other domains or datasets would require training or fine-tuning the StyleGAN model on datasets relevant to those specific domains.

5.9. Perceptual Quality

The perceptual quality of the generated adversarial examples is quantified using the Learned Perceptual Image Patch Similarity (LPIPS) metric, which measures the perceptual difference between two images. A lower LPIPS score indicates greater similarity to the original sample. The proposed method achieves an LPIPS score of 0.069 after 3000 iterations, which demonstrates that the adversarial examples are nearly indistinguishable from the original images in terms of visual appearance. This low level of distortion ensures the effectiveness of the attack while minimizing artifacts that might otherwise alert detection mechanisms. In addition, based on our experimental setup, it takes approximately 4 min and 20 s to complete 3000 iterations.

5.10. Limitation and Future Research

Certain limitations of the proposed method must be acknowledged. One notable limitation is the reliance on multiple queries to the target model during the adversarial example generation process. Although the method achieves high attack success rates with 300 iterations, the iterative nature of the process could be computationally expensive in scenarios with strict query limits or time constraints. Additionally, subtle changes in attributes such as position, expression, or color of the adversarial examples may occur compared to the original images. While these changes are generally imperceptible to the human eye, they could pose challenges under specific conditions, such as variations in lighting or pose, which may slightly affect the robustness of the attack.

These findings emphasize the importance of developing robust defense mechanisms against adversarial attacks, particularly for facial recognition systems. The demonstrated success of the proposed method, even with minimal perceptual distortion, highlights the need for proactive measures such as adversarial training and model enhancement. The use of generative models like StyleGAN for adversarial purposes also suggests the necessity of future research into model interpretability and robustness to address vulnerabilities exposed by such advanced attack techniques.

In addition, the proposed method leverages iterative optimization and multiple queries to the target model, which results in considerable computational overhead. Future work will explore more efficient optimization strategies to mitigate the computational cost, enabling faster and more practical applications in resource-constrained environments. Furthermore, the current approach is heavily dependent on the capabilities of StyleGAN, which may limit its generalizability to other generative models. Future research will investigate the adaptability of the proposed method to different generative models. By exploring various alternatives, this approach aims to improve robustness and versatility, enhancing applicability to a broader range of tasks.

The current experimental evaluation primarily focuses on the CelebA-HQ dataset and FFHQ dataset. To ensure broader generalizability, future research will include datasets with distinct class variations, such as cat vs. dog, which represent a fundamentally different data distribution. Expanding the scope of experiments in this way will provide deeper insights into the method’s performance across diverse scenarios.

In conclusion, the proposed method offers an effective and practical approach for generating untargeted adversarial examples in black-box environments. While there are limitations related to query efficiency and minor perceptual changes, the method’s strengths, including its high success rate, low distortion, and applicability in real-world scenarios, underscore its significance in advancing the field of adversarial machine learning. Future research will focus on optimizing the query process, addressing the observed limitations, and expanding the experimental scope to improve the practicality and robustness of the method.

6. Conclusions

This paper presents a novel approach for generating untargeted adversarial examples in an unrestricted environment, addressing the challenges of creating adversarial samples that are effective, minimally distorted, and applicable in realistic black-box scenarios. The proposed method focuses on generating adversarial examples that are misclassified as arbitrary classes, rather than the original class, thus reducing the need for excessive perturbations. By incorporating StyleGAN, the method ensures that the generated adversarial examples maintain high visual fidelity, minimizing distortions to a level imperceptible to human observers.

The experimental results demonstrate the effectiveness of the proposed approach. In a black-box setting, where only the classification outcome of the target model is known, the method achieves a 100% attack success rate within 3000 iterations. This high success rate underscores the robustness of the attack, even in scenarios with limited information about the target model. Furthermore, the perceptual similarity between the original and adversarial samples, quantified using the Learned Perceptual Image Patch Similarity (LPIPS) metric, is measured at 0.069. This value indicates that the adversarial examples are almost indistinguishable from the original samples, highlighting the minimal distortion introduced by the proposed method.

The unrestricted nature of the proposed approach allows for greater flexibility in generating adversarial examples, making it a valuable contribution to the field of adversarial machine learning. Unlike targeted attacks, which require the adversarial example to be misclassified into a specific target class, this untargeted approach focuses on any misclassification other than the original class, significantly simplifying the optimization process while maintaining high attack success rates.

Despite its strengths, this study acknowledges several areas for future exploration. One potential direction is the application of the proposed method to other datasets, expanding its generalizability across diverse domains and use cases. Additionally, advancements in generative modeling, such as diffusion models, present a promising avenue for further research. These models could be explored as alternative mechanisms for generating adversarial examples, potentially improving both efficiency and effectiveness.

Author Contributions

Conceptualization, H.K.; methodology, H.K.; software, H.K.; validation, H.K.; writing—original draft preparation, H.K.; writing—review and editing, H.K.; visualization, H.K.; supervision, H.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Hwarang-Dae Research Institute of Korea Military Academy and National Research Foundation of Korea (NRF) grants funded by the Korea government (MSIT) (2021R1I1A1A01040308).

Data Availability Statement

The data used to support the findings of this study are available from the corresponding author upon request after acceptance.

Acknowledgments

We thank the editor and anonymous reviewers who provided very helpful comments that improved this paper.

Conflicts of Interest

The author declares no conflict of interest.

References

- Schmidhuber, J. Deep learning in neural networks: An overview. Neural Netw. 2015, 61, 85–117. [Google Scholar] [CrossRef] [PubMed]

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015. [Google Scholar]

- Hinton, G.; Deng, L.; Yu, D.; Dahl, G.E.; Mohamed, A.r.; Jaitly, N.; Senior, A.; Vanhoucke, V.; Nguyen, P.; Sainath, T.N.; et al. Deep neural networks for acoustic modeling in speech recognition: The shared views of four research groups. IEEE Signal Process. Mag. 2012, 29, 82–97. [Google Scholar] [CrossRef]

- Collobert, R.; Weston, J. A unified architecture for natural language processing: Deep neural networks with multitask learning. In Proceedings of the 25th International Conference on Machine Learning, Helsinki, Finland, 5–9 July 2008; pp. 160–167. [Google Scholar]

- Szegedy, C.; Zaremba, W.; Sutskever, I.; Bruna, J.; Erhan, D.; Goodfellow, I.; Fergus, R. Intriguing properties of neural networks. In Proceedings of the International Conference on Learning Representations, Banff, AB, Canada, 14–16 April 2014. [Google Scholar]

- Kwon, H.; Baek, J.W. Targeted Discrepancy Attacks: Crafting Selective Adversarial Examples in Graph Neural Networks. IEEE Access 2025, 13, 13700–13710. [Google Scholar] [CrossRef]

- Liu, Y.; Mao, S.; Mei, X.; Yang, T.; Zhao, X. Sensitivity of adversarial perturbation in fast gradient sign method. In Proceedings of the 2019 IEEE Symposium Series on Computational Intelligence (SSCI), Xiamen, China, 6–9 December 2019; pp. 433–436. [Google Scholar]

- Gupta, H.; Jin, K.H.; Nguyen, H.Q.; McCann, M.T.; Unser, M. CNN-based projected gradient descent for consistent CT image reconstruction. IEEE Trans. Med. Imaging 2018, 37, 1440–1453. [Google Scholar] [CrossRef] [PubMed]

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 2672–2680. [Google Scholar]

- Abdal, R.; Qin, Y.; Wonka, P. Image2stylegan: How to embed images into the stylegan latent space? In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 4432–4441. [Google Scholar]

- Zhou, Y.; Ren, F.; Nishide, S.; Kang, X. Facial sentiment classification based on resnet-18 model. In Proceedings of the 2019 International Conference on Electronic Engineering and Informatics (EEI), Nanjing, China, 8–10 November 2019; pp. 463–466. [Google Scholar]

- Karras, T.; Aila, T.; Laine, S.; Lehtinen, J. Progressive growing of gans for improved quality, stability, and variation. arXiv 2017, arXiv:1710.10196. [Google Scholar]

- Kwon, H.; Park, D.; Jo, O. Silent-Hidden-Voice Attack on Speech Recognition System. IEEE Access 2024, 12, 173010–173019. [Google Scholar] [CrossRef]

- Kwon, H.; Kim, S. Restricted-Area Adversarial Example Attack for Image Captioning Model. Wirel. Commun. Mob. Comput. 2022, 2022, 9962972. [Google Scholar] [CrossRef]

- Cheng, S.; Dong, Y.; Pang, T.; Su, H.; Zhu, J. Improving black-box adversarial attacks with a transfer-based prior. Adv. Neural Inf. Process. Syst. 2019, 32. Available online: https://proceedings.neurips.cc/paper_files/paper/2019/file/32508f53f24c46f685870a075eaaa29c-Paper.pdf (accessed on 1 January 2025).

- Dong, Y.; Su, H.; Wu, B.; Li, Z.; Liu, W.; Zhang, T.; Zhu, J. Efficient decision-based black-box adversarial attacks on face recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 5–20 June 2019; pp. 7714–7722. [Google Scholar]

- Saxena, D.; Cao, J. Generative adversarial networks (GANs) challenges, solutions, and future directions. ACM Comput. Surv. (CSUR) 2021, 54, 1–42. [Google Scholar] [CrossRef]

- Karras, T.; Laine, S.; Aittala, M.; Hellsten, J.; Lehtinen, J.; Aila, T. Analyzing and improving the image quality of stylegan. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 8110–8119. [Google Scholar]

- Sharma, M.; Verma, A.; Vig, L. Learning to clean: A GAN perspective. In Proceedings of the Computer Vision–ACCV 2018 Workshops: 14th Asian Conference on Computer Vision, Perth, Australia, 2–6 December 2018; Revised Selected Papers 14. Springer: Berlin/Heidelberg, Germany, 2019; pp. 174–185. [Google Scholar]

- Hossain, K.F.; Kamran, S.A.; Tavakkoli, A.; Pan, L.; Ma, X.; Rajasegarar, S.; Karmaker, C. ECG-Adv-GAN: Detecting ECG adversarial examples with conditional generative adversarial networks. In Proceedings of the 2021 20th IEEE International Conference on Machine Learning and Applications (ICMLA), Pasadena, CA, USA, 13–16 December 2021; pp. 50–56. [Google Scholar]

- Wang, Z.; Pang, T.; Du, C.; Lin, M.; Liu, W.; Yan, S. Better diffusion models further improve adversarial training. In Proceedings of the International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2023; pp. 36246–36263. [Google Scholar]

- Cao, D.; Wei, K.; Wu, Y.; Zhang, J.; Feng, B.; Chen, J. FePN: A robust feature purification network to defend against adversarial examples. Comput. Secur. 2023, 134, 103427. [Google Scholar] [CrossRef]

- Na, D.; Ji, S.; Kim, J. Unrestricted black-box adversarial attack using GAN with limited queries. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 467–482. [Google Scholar]

- Abdukhamidov, E.; Abuhamad, M.; Woo, S.S.; Chan-Tin, E.; Abuhmed, T. Microbial Genetic Algorithm-based Black-box Attack against Interpretable Deep Learning Systems. arXiv 2023, arXiv:2307.06496. [Google Scholar]

- Liu, Y.A.; Zhang, R.; Guo, J.; de Rijke, M.; Chen, W.; Fan, Y.; Cheng, X. Topic-oriented adversarial attacks against black-box neural ranking models. In Proceedings of the 46th International ACM SIGIR Conference on Research and Development in Information Retrieval, Taipei, Taiwan, 23–27 July 2023; pp. 1700–1709. [Google Scholar]

- Hong, H.; Zhang, X.; Wang, B.; Ba, Z.; Hong, Y. Certifiable Black-Box Attacks with Randomized Adversarial Examples: Breaking Defenses with Provable Confidence. In Proceedings of the 2024 on ACM SIGSAC Conference on Computer and Communications Security, Salt Lake City, UT, USA, 14–18 October 2024; pp. 600–614. [Google Scholar]

- Ding, D.; Zhang, M.; Feng, F.; Huang, Y.; Jiang, E.; Yang, M. Black-box adversarial attack on time series classification. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; Volume 37, pp. 7358–7368. [Google Scholar]

- Tong, C.; Zheng, X.; Li, J.; Ma, X.; Gao, L.; Xiang, Y. Query-efficient black-box adversarial attacks on automatic speech recognition. IEEE/ACM Trans. Audio Speech Lang. Process. 2023, 31, 3981–3992. [Google Scholar] [CrossRef]

- Feldsar, B.; Mayer, R.; Rauber, A. Detecting adversarial examples using surrogate models. Mach. Learn. Knowl. Extr. 2023, 5, 1796–1825. [Google Scholar] [CrossRef]

- Liu, J.; Lyu, X. Boosting the Transferability of Adversarial Examples via Local Mixup and Adaptive Step Size. arXiv 2024, arXiv:2401.13205. [Google Scholar]

- Richardson, E.; Alaluf, Y.; Patashnik, O.; Nitzan, Y.; Azar, Y.; Shapiro, S.; Cohen-Or, D. Encoding in style: A stylegan encoder for image-to-image translation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 2287–2296. [Google Scholar]

- Chen, J.; Jordan, M.I.; Wainwright, M.J. Hopskipjumpattack: A query-efficient decision-based attack. In Proceedings of the 2020 IEEE Symposium on Security and Privacy (sp), San Francisco, CA, USA, 18–20 May 2020; pp. 1277–1294. [Google Scholar]

- Zhang, R.; Isola, P.; Efros, A.A.; Shechtman, E.; Wang, O. The unreasonable effectiveness of deep features as a perceptual metric. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 586–595. [Google Scholar]

- Karras, T. A Style-Based Generator Architecture for Generative Adversarial Networks. arXiv 2019, arXiv:1812.04948. [Google Scholar]

- Gonzalez-Ortiz, O.; Ubando, L.A.M.; Fuenzalida, G.A.S.; Garza, G.I.M. Evaluating DenseNet121 Neural Network Performance for Cervical Pathology Classification. In Proceedings of the 2024 IEEE 37th International Symposium on Computer-Based Medical Systems (CBMS), Guadalajara, Mexico, 26–28 June 2024; pp. 297–302. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).