Abstract

Intelligent anti-jamming decision algorithms for wireless communication based on Deep Reinforcement Learning (DRL) can gradually optimize anti-jamming strategies by continuously interacting with the jamming environment, without requiring prior knowledge. This approach has become one of the most active research directions in communication anti-jamming. To address the joint anti-jamming problem in multi-user scenarios without prior knowledge of the jamming power, this paper proposes an intelligent anti-jamming decision algorithm for wireless communication based on Multi-Agent Proximal Policy Optimization (MAPPO). The algorithm adopts centralized training and decentralized execution (CTDE), allowing each user to make independent decisions while fully leveraging the local information of all users during training. Specifically, during the learning phase the algorithm shares all users' perceptions, actions, and rewards to obtain a global state. It then calculates the value function and advantage function of each user from this global state and optimizes each user's independent policy. Each user can then make anti-jamming decisions based solely on its local perception results and its independent policy. Moreover, because MAPPO can handle continuous action spaces, it can gradually approach the optimal transmission power within the communication power range even without prior knowledge of the jamming power. Simulation results show that, under frequency-sweeping jamming and dynamic probabilistic jamming, the proposed algorithm converges significantly faster and to higher values than Deep Q-Network (DQN), Q-Learning (QL), and random frequency hopping algorithms.

1. Introduction

With the rapid development of communication technology, wireless communication is widely used in social, economic, and military domains [1]. However, because wireless transmission channels are open, they are susceptible to various forms of malicious jamming. It is therefore essential to design effective anti-jamming methods that ensure the reliability and effectiveness of wireless communication under jamming threats [2].

To protect wireless communication networks from jamming threats, mainstream anti-jamming technologies rely primarily on spread spectrum techniques such as frequency hopping and direct sequence spread spectrum, often combined with adaptive frequency, power, and modulation-coding techniques [3,4]. These methods significantly reduce the impact of conventional jamming and, to some extent, improve the reliability of wireless communication in electromagnetic jamming environments. However, they adapt poorly to time-varying jamming environments and struggle to cope with complex jamming types such as dynamic, cognitive, and intelligent jamming. Artificial intelligence technologies offer new ideas for communication anti-jamming, and many researchers are currently exploring how to integrate conventional anti-jamming techniques with artificial intelligence [5,6]. Intelligent agents interact continuously with the spectrum environment to learn characteristics of the jamming signals, such as frequency and power, and autonomously adjust their communication strategies based on reward values to maintain good communication quality, thereby achieving intelligent anti-jamming [7]. In particular, DRL-based anti-jamming technologies have attracted attention because they can generate anti-jamming strategies without prior knowledge of the jamming environment, which has made them an important research direction in intelligent communication anti-jamming. For instance, reference [8] proposed a DRL-based algorithm for communication frequency and power selection that improved the throughput of communication users while maintaining low power consumption.
Reference [9] introduced a DRL-based multi-domain anti-jamming algorithm that learns the patterns of multi-domain dynamic jamming transformations in time, frequency, and spatial domains from spectrum waterfalls, allowing it to select appropriate anti-jamming strategies to evade jamming. These studies demonstrate the effectiveness of applying DRL in the field of communication anti-jamming. However, most current research focuses on single-agent anti-jamming scenarios.

In multi-agent scenarios, having each user run a single-agent learning algorithm on its own inevitably makes anti-jamming harder. For example, in a joint anti-jamming problem with multiple users, if each user independently uses a DQN algorithm for anti-jamming decisions, then, because the users interfere with one another, each user can only treat the other users as jammers. This produces a jamming scenario more complex than the actual one. Alternatively, assuming a centralized agent that gathers information from all users to perform learning, decision-making, and information distribution requires significant computational power and signaling overhead; without these resources, the processes may consume excessive time and reduce the proportion of time available for actual communication. By contrast, multi-agent learning algorithms have shown great potential in cooperation and competition, information sharing, and learning efficiency. Their objective is to dynamically learn the optimal strategy of each agent so as to maximize long-term cumulative rewards [10,11]. MAPPO is a multi-agent algorithm that follows the centralized training and decentralized execution paradigm [12]. During the learning phase, MAPPO shares the perceptions, actions, and rewards of all agents to obtain a global state. It then uses this global state to compute the value function and advantage function of each agent and optimizes each agent's independent policy. In the decision-making phase, each agent makes its own decisions based on its local observations and its independent policy [13,14].

Conventional wireless communication anti-jamming methods struggle to resist the many rapidly changing jamming threats found in complex electromagnetic environments. To address this challenge, this paper proposes a wireless communication intelligent anti-jamming decision algorithm based on MAPPO. The proposed algorithm models the communication anti-jamming problem under malicious jamming threats as a Markov Decision Process (MDP) and uses MAPPO to solve for the optimal joint anti-jamming strategy of the communication system. Previous research on wireless communication anti-jamming has mostly been conducted in discrete action spaces, with the communication power set to a few discrete values in the simulation parameters [15,16]. In such models, low communication power can be used only in the absence of jamming, while high communication power suffices against low-power jamming but fails against high-power jamming. These settings are somewhat idealized: they require prior knowledge of the jamming power so that the communicating parties can choose appropriate power values in advance, which is often difficult in adversarial communication scenarios. In contrast, the algorithm proposed in this paper handles continuous action spaces, so through training it can gradually converge to the optimal communication power even without prior knowledge of the jamming power.

2. System Model and Problem Formulation

2.1. System Model

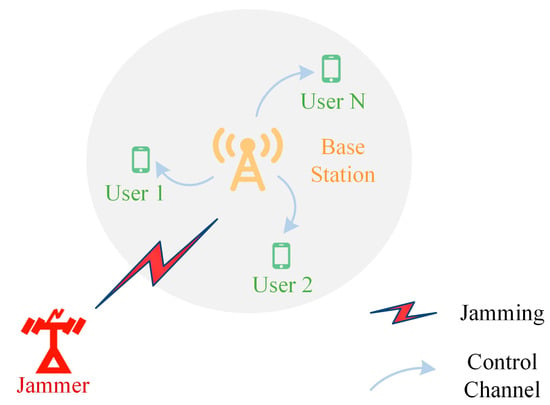

This article considers the wireless communication environment shown in Figure 1, which includes a jammer, a central base station, communication users, and accessible channels. It is assumed that the wireless communication network provides a sufficient number of channels for all users. Because the users operate in the same spectrum range, signals from different users can interfere with each other. This paper mainly considers co-frequency interference, which occurs when multiple users occupy the same channel simultaneously and their overlapping signals interfere with one another.

Figure 1.

Wireless communication system model, comprising a jammer, a central base station, and communication users. The users exchange a small amount of information with the central base station through a protocol-enhanced low-capacity control channel.

Each user is assumed to be capable of broadband spectrum sensing and can therefore perceive the status of the target frequency band [17]. The central base station, as the core of the communication system, has learning capabilities and exchanges a small amount of information with each user through a protocol-enhanced low-capacity control channel [18]. During the learning phase, each user sends the central base station the status of the target frequency band obtained through interaction with the environment, the action taken by its transmitter, and the reward received from communication. The central base station uses the resulting global state to calculate each agent's value function and advantage function and optimizes each agent's independent strategy. Users then make decisions, including channel and power selection, based on their local sensing information and their independent strategies.
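The information flow between the users and the central base station can be sketched as follows. This is a minimal illustration, not the authors' implementation: the text does not specify how the global state is assembled, so the sketch assumes that each user's reported observation (per-channel received powers), action (channel index and power), and reward are simply concatenated in user order. The function names `build_global_state` and `local_input` are hypothetical.

```python
from typing import List, Sequence


def build_global_state(observations: Sequence[Sequence[float]],
                       actions: Sequence[Sequence[float]],
                       rewards: Sequence[float]) -> List[float]:
    """Concatenate every user's observation, action and reward (in user order)
    into one flat vector for the centralized critic. Hypothetical layout."""
    if not (len(observations) == len(actions) == len(rewards)):
        raise ValueError("expected one observation, action and reward per user")
    state: List[float] = []
    for obs, act, rew in zip(observations, actions, rewards):
        state.extend(obs)   # per-channel average received power
        state.extend(act)   # (channel index, transmission power)
        state.append(rew)   # immediate reward
    return state


def local_input(observations: Sequence[Sequence[float]], user: int) -> List[float]:
    """At execution time each actor sees only its own user's observation."""
    return list(observations[user])


# Two users, two channels (values are made up)
obs = [[1.0, 2.0], [3.0, 4.0]]
acts = [[0.0, 10.0], [1.0, 12.0]]
rews = [1.0, 0.5]
print(build_global_state(obs, acts, rews))
print(local_input(obs, 1))
```

Under this assumed layout the critic input grows linearly with the number of users, while each actor's input stays the size of a single user's observation.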

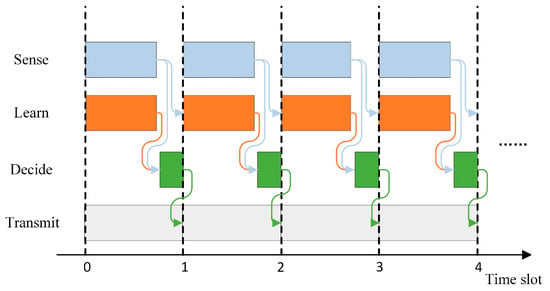

Communication data are transmitted in time slots of fixed duration, which serve as the minimum time unit for continuous transmission. Both jamming and communication are assumed to change only at the end of a time slot, with no changes during the slot itself. The parallel time slot structure shown in Figure 2 prevents sensing, learning, and decision-making from taking time away from data transmission [19]. Specifically, with modern signal processing technologies, communication devices can perform spectrum sensing and data transmission simultaneously within the same time frame. Spectrum sensing and data transmission can therefore run in parallel on the communication devices, while learning is carried out by the central base station and decision-making by the individual intelligent agents. The parallel time slot structure illustrated in Figure 2 is thus theoretically feasible.

Figure 2.

Parallel time slot structure. Within each time slot, spectrum sensing, learning, decision-making, and data transmission are carried out in parallel.

2.2. Problem Formulation

This article models the anti-jamming problem of the communication system as a Markov decision process, represented by the tuple $\langle \mathcal{S}, \mathcal{A}, \mathcal{P}, \mathcal{R}, \gamma \rangle$ [20,21]. Here, $\mathcal{S}$ is the state space and $\mathcal{A}$ is the action space. $\mathcal{P}$ is the state transition probability, i.e., the probability that the external environment moves from the current state to a given next state after the transmitter takes an action; because both the jamming and the strategies of the other users are unknown, the state transition probabilities are also unknown. $\mathcal{R}$ is the reward function, and $\gamma$ is the discount factor, which reflects how much the agent values future rewards: the closer $\gamma$ is to one, the more emphasis is placed on long-term rewards. Each element is defined as follows:

The state space $\mathcal{S}$ is the set of all possible states of the target frequency band. The state observed by user $m$ in time slot $t$ consists of the average received power of that user on each of the $N$ channels:

$$s_t^m = \left[\bar{P}_{t,1}^m, \bar{P}_{t,2}^m, \ldots, \bar{P}_{t,N}^m\right]$$

where $\bar{P}_{t,n}^m$ represents the average power received by user $m$ on channel $n$.

The action space $\mathcal{A}$ is the set of all possible independent actions that each user can choose. In this article, each user's action consists of channel selection and power selection. Channel selection chooses a single channel for data transmission from the $N$ available channels, while power selection chooses a value from a continuous interval $[p_{\min}, p_{\max}]$ as the transmission power. In time slot $t$, the independent action of user $m$ is defined as follows:

$$a_t^m = \left(c_t^m, p_t^m\right)$$

where $c_t^m \in \{1, \ldots, N\}$ represents the transmission channel and $p_t^m \in [p_{\min}, p_{\max}]$ represents the transmission power.
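One common way to let a single policy output both a discrete channel and a continuous power is to pair a categorical distribution over channels with a Gaussian distribution over power. The text does not state which distributions the authors' actor uses, so the following is a hedged sketch under that assumption; `sample_action`, its parameters, and the clipping of the sampled power into the interval [`p_min`, `p_max`] are illustrative choices.

```python
import math
import random
from typing import Sequence, Tuple


def sample_action(channel_logits: Sequence[float], power_mean: float,
                  power_std: float, p_min: float, p_max: float,
                  rng: random.Random) -> Tuple[int, float]:
    """Sample (channel, power) from an assumed categorical x Gaussian policy."""
    # Softmax weights over channels; subtracting the max avoids overflow.
    top = max(channel_logits)
    weights = [math.exp(logit - top) for logit in channel_logits]
    channel = rng.choices(range(len(channel_logits)), weights=weights, k=1)[0]
    # Continuous power from a Gaussian, clipped into the feasible interval.
    power = rng.gauss(power_mean, power_std)
    power = min(max(power, p_min), p_max)
    return channel, power


rng = random.Random(0)
# Four channels, power range 10 to 20 (units hypothetical, e.g. dBm)
print(sample_action([0.1, 2.0, -1.0, 0.5], 15.0, 2.0, 10.0, 20.0, rng))
```

In many PPO implementations the log-probability used in the policy ratio is computed for the unclipped Gaussian sample; this sketch returns only the clipped action that would be transmitted.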

A joint action is the combination of the independent actions taken by all $M$ users, which can be expressed as follows:

$$\mathbf{a}_t = \left(a_t^1, a_t^2, \ldots, a_t^M\right)$$

The reward function $\mathcal{R}$ computes the reward obtained by taking transmission actions in a given environmental state. The rewards are influenced by jamming, mutual interference, channel switching, and power loss. With respect to jamming and mutual interference, successful communication is determined by comparing the average Signal to Jamming plus Noise Ratio (SJNR) at the receiver with the demodulation threshold. Because users must interrupt communication and re-establish the connection when switching channels, no information can be transmitted during the switch. This article therefore accounts for a channel switching loss, denoted $C_{\mathrm{sw}}$. When the selected transmission power exceeds the minimum required transmission power, power loss is deemed to exist, and the power loss factor is denoted $\eta$.
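The success condition compares the average SJNR with the demodulation threshold. Because powers quoted in dBm must be summed in linear units, a small helper makes the arithmetic explicit. This sketch assumes the inputs are powers already measured at the receiver (any path loss applied beforehand); the function names and the explicit noise term are illustrative, not taken from the paper.

```python
import math
from typing import Iterable


def dbm_to_mw(p_dbm: float) -> float:
    """Convert a power level from dBm to milliwatts."""
    return 10.0 ** (p_dbm / 10.0)


def sjnr_db(signal_dbm: float, jamming_dbm: Iterable[float],
            interference_dbm: Iterable[float], noise_dbm: float) -> float:
    """SJNR = S / (J + I + N); all terms are summed in linear units (mW)."""
    denominator = dbm_to_mw(noise_dbm)
    denominator += sum(dbm_to_mw(j) for j in jamming_dbm)        # jammers on this channel
    denominator += sum(dbm_to_mw(i) for i in interference_dbm)   # co-channel users
    return 10.0 * math.log10(dbm_to_mw(signal_dbm) / denominator)


def demodulates(sjnr: float, threshold_db: float) -> bool:
    """Success indicator: the SJNR must reach the demodulation threshold."""
    return sjnr >= threshold_db


s = sjnr_db(signal_dbm=10.0, jamming_dbm=[0.0], interference_dbm=[], noise_dbm=0.0)
print(round(s, 2), demodulates(s, threshold_db=5.0))
```

Note that adding a 0 dBm jammer on top of 0 dBm noise doubles the denominator, which costs about 3 dB of SJNR rather than "adding 0 dB".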

In time slot $t$, the immediate reward of user $m$ can be expressed as follows:

where $\mathbb{1}(\cdot)$ is the indicator function, equal to one if its condition is true and zero otherwise; $\mathrm{SJNR}_t^m$ is the average SJNR at the receiver on the channel selected by user $m$ in time slot $t$, which includes the power effects of jamming and mutual interference; $\beta_{\mathrm{th}}$ is the demodulation threshold for the receiver's SJNR; and $p_{\mathrm{req},t}^m$ is the minimum transmission power required for the receiver to demodulate successfully.
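The exact reward expression is not reproduced in this text. The sketch below is one plausible form consistent with the description, and every part of it is an assumption: a unit reward when the SJNR reaches the threshold, a fixed `switch_cost` when the channel changes, and a penalty of `power_loss_factor` times the excess power above the required minimum, normalized by the width of the power range. The default values 0.1 and 0.2 are hypothetical.

```python
def immediate_reward(sjnr_db: float, threshold_db: float,
                     channel: int, prev_channel: int,
                     power: float, required_power: float,
                     p_min: float, p_max: float,
                     switch_cost: float = 0.1,
                     power_loss_factor: float = 0.2) -> float:
    """Assumed reward: success - switching loss - scaled excess power."""
    success = 1.0 if sjnr_db >= threshold_db else 0.0
    switched = 1.0 if channel != prev_channel else 0.0
    # Excess power above the minimum needed for demodulation, normalized to [0, 1].
    excess = max(power - required_power, 0.0) / (p_max - p_min)
    return success - switch_cost * switched - power_loss_factor * excess


# Same channel, minimum power, successful demodulation -> full reward
print(immediate_reward(12.0, 10.0, 2, 2, 15.0, 15.0, 10.0, 20.0))
# Same channel, but transmitting at the top of the range -> reduced reward
print(immediate_reward(12.0, 10.0, 2, 2, 20.0, 15.0, 10.0, 20.0))
```

With a graded penalty of this kind, transmitting slightly above the required power still earns nearly the full reward.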

The joint reward is the combination of the immediate rewards of all users, which can be expressed as follows:

In the MAPPO algorithm, each user $m$ has an independent policy $\pi^m$. The joint strategy is the combination of the independent strategies of all $M$ users:

$$\boldsymbol{\pi} = \left(\pi^1, \pi^2, \ldots, \pi^M\right)$$

The expected discounted reward obtained under the joint strategy $\boldsymbol{\pi}$ can be expressed by a value function $V^{\boldsymbol{\pi}}$:

where $\tau$ is a trajectory of the system under the joint strategy.
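The value function can be estimated from sampled trajectories by averaging their discounted returns. The sketch assumes each trajectory is given as a list of scalar joint rewards, one per time slot; how the per-user rewards are aggregated into that scalar is an assumption here, and `discounted_return` and `mc_value_estimate` are hypothetical helper names.

```python
from typing import Sequence


def discounted_return(rewards: Sequence[float], gamma: float) -> float:
    """G = r_0 + gamma * r_1 + gamma^2 * r_2 + ..., accumulated backwards."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g


def mc_value_estimate(trajectories: Sequence[Sequence[float]], gamma: float) -> float:
    """Monte Carlo estimate of the value: mean discounted return over trajectories."""
    if not trajectories:
        raise ValueError("need at least one sampled trajectory")
    returns = [discounted_return(t, gamma) for t in trajectories]
    return sum(returns) / len(returns)


print(discounted_return([1.0, 1.0, 1.0], 0.5))
print(mc_value_estimate([[1.0, 1.0], [0.0, 2.0]], 0.5))
```

With `gamma = 0` only the first reward counts; as `gamma` approaches one, later rewards carry almost as much weight as the first, matching the role of the discount factor described earlier.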

Assuming that the central base station obtains global information by interacting with the users and then learns from sampled trajectories, solving for the optimal joint strategy amounts to finding the joint strategy $\boldsymbol{\pi}^*$ that maximizes the value function $V^{\boldsymbol{\pi}}$:

$$\boldsymbol{\pi}^* = \arg\max_{\boldsymbol{\pi}} V^{\boldsymbol{\pi}}$$

This optimal joint strategy can be approximated by deep reinforcement learning algorithms, such as the MAPPO algorithm used in this paper.

3. Intelligent Anti-Jamming Decision Algorithm for Wireless Communication Based on MAPPO

To obtain the optimal joint strategy, this article proposes a wireless communication intelligent anti-jamming algorithm based on MAPPO. MAPPO employs an actor-critic architecture in which each agent has a critic network that evaluates the performance of its corresponding actor network during training [22]. During execution, each agent independently decides its actions based on its locally perceived information.

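Because updating directly with the policy gradient can easily cause large policy changes, a clipping function is used to limit the magnitude of each policy update. This prevents drastic changes in the policy and helps keep learning stable. The clipped objective is expressed as follows:

$$L^{\mathrm{CLIP}}(\theta) = \hat{\mathbb{E}}_t\left[\min\left(\rho_t(\theta)\hat{A}_t,\ \mathrm{clip}\left(\rho_t(\theta), 1-\epsilon, 1+\epsilon\right)\hat{A}_t\right)\right]$$

where $\theta$ represents the parameters of the actor network; $\rho_t(\theta)$ is the ratio of the new policy to the old policy; $\hat{A}_t$ is the advantage estimate; and $\mathrm{clip}(\cdot)$ is the clipping function that restricts $\rho_t(\theta)$ to the range $[1-\epsilon, 1+\epsilon]$. The ratio of the new policy to the old policy is expressed as follows:

$$\rho_t(\theta) = \frac{\pi_\theta(a_t \mid s_t)}{\pi_{\theta_{\mathrm{old}}}(a_t \mid s_t)}$$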
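The clipped objective can be evaluated on a batch of samples as follows. This minimal sketch works in log-probabilities for numerical stability. The clipping parameter `eps = 0.2` is a commonly used PPO default and is only an assumption, since its value is not stated in this text.

```python
import math
from typing import Sequence


def clipped_surrogate(new_logp: Sequence[float], old_logp: Sequence[float],
                      advantages: Sequence[float], eps: float = 0.2) -> float:
    """Batch mean of min(ratio * A, clip(ratio, 1 - eps, 1 + eps) * A)."""
    total = 0.0
    for lp_new, lp_old, adv in zip(new_logp, old_logp, advantages):
        ratio = math.exp(lp_new - lp_old)            # pi_new / pi_old
        clipped = min(max(ratio, 1.0 - eps), 1.0 + eps)
        total += min(ratio * adv, clipped * adv)     # pessimistic bound
    return total / len(advantages)


# Ratio exp(0.5) ~ 1.65 with a positive advantage is capped at 1 + eps
print(clipped_surrogate([0.5], [0.0], [1.0]))
```

When the advantage is positive, the objective stops rewarding ratios above `1 + eps`; for a negative advantage and a ratio above `1 + eps`, the minimum keeps the unclipped, more pessimistic term, so the update cannot exploit a large ratio.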

The advantage function is expressed as follows:

where $\delta_t$ is the temporal difference (TD) error of the state value function:
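The advantage is expressed through the TD error. A widely used choice in PPO and MAPPO implementations is generalized advantage estimation (GAE), which sums TD errors discounted by `gamma * lam`. Whether the authors use exactly this estimator, and with which `lam`, is not stated here, so treat the sketch as an assumption. In the code, `values[t]` stands for the critic's estimate for the state at slot t, and `last_value` bootstraps the final step.

```python
from typing import List, Sequence


def gae(rewards: Sequence[float], values: Sequence[float], last_value: float,
        gamma: float, lam: float) -> List[float]:
    """Generalized advantage estimation over one trajectory.

    values[t] is the critic's estimate V(s_t); last_value is V(s_T), used to
    bootstrap the final step (use 0.0 for a terminal state).
    """
    next_values = list(values[1:]) + [last_value]
    # One-step TD errors: delta_t = r_t + gamma * V(s_{t+1}) - V(s_t)
    deltas = [r + gamma * nv - v for r, v, nv in zip(rewards, values, next_values)]
    advantages = [0.0] * len(deltas)
    running = 0.0
    for t in reversed(range(len(deltas))):
        running = deltas[t] + gamma * lam * running
        advantages[t] = running
    return advantages


print(gae([1.0, 0.0], [0.5, 0.5], 0.0, gamma=0.5, lam=0.0))
print(gae([1.0, 0.0], [0.5, 0.5], 0.0, gamma=0.5, lam=1.0))
```

Setting `lam = 0` reduces the advantage to the one-step TD error, while `lam = 1` gives the discounted return minus the value baseline.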

The loss function of the state value function of the critic network is expressed as follows:

where $\phi$ denotes the parameters of the critic network.

To encourage exploration of the policy, an entropy term is introduced. The overall loss function is expressed as follows:

where $\mathcal{H}(\pi_\theta)$ represents the entropy of the current policy, reflecting the uncertainty of the policy distribution, and $c_1$ and $c_2$ are weighting coefficients, both set to 0.5.
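Putting the pieces together, the sketch below assumes the standard PPO combination: the negated clipped objective, plus `c1` times a mean-squared critic error, minus `c2` times the policy entropy, with both coefficients set to 0.5 as stated above. The entropy helper covers only a Gaussian power head as an example; all function names are hypothetical.

```python
import math
from typing import Sequence


def value_loss(values: Sequence[float], returns: Sequence[float]) -> float:
    """Mean-squared error between critic estimates and return targets."""
    return sum((v - g) ** 2 for v, g in zip(values, returns)) / len(values)


def gaussian_entropy(std: float) -> float:
    """Differential entropy of a 1-D Gaussian: 0.5 * ln(2 * pi * e * std^2)."""
    return 0.5 * math.log(2.0 * math.pi * math.e * std ** 2)


def total_loss(surrogate: float, v_loss: float, entropy: float,
               c1: float = 0.5, c2: float = 0.5) -> float:
    # Gradient descent on this quantity maximizes the clipped surrogate and the
    # entropy bonus while minimizing the critic error.
    return -surrogate + c1 * v_loss - c2 * entropy


loss = total_loss(surrogate=1.0, v_loss=value_loss([1.0, 2.0], [1.0, 4.0]),
                  entropy=gaussian_entropy(1.0))
print(loss)
```

A larger `c2` keeps the power distribution wider for longer, which encourages exploration of the continuous power range.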

The wireless communication intelligent anti-jamming algorithm based on MAPPO is shown in Algorithm 1.

Algorithm 1: The wireless communication intelligent anti-jamming algorithm based on MAPPO

4. Simulations and Analyses

4.1. Parameter Settings

The simulation experiments that verify the performance of the proposed algorithm are implemented in PyTorch. In the simulations, the proposed algorithm is compared with the QL algorithm [23], the DQN algorithm [24], and the random frequency hopping algorithm.

QL Algorithm: This algorithm records and updates the Q-values for each state-action pair in a Q-table. By updating the Q-table, the algorithm gradually learns the optimal policy, aiming to maximize the cumulative reward.

DQN Algorithm: This algorithm stores the agent’s interactions with the environment in an experience replay buffer. It uses a deep neural network to replace the Q-table in traditional Q-learning, generating Q-values. The network parameters are adjusted during training by sampling from the experience replay buffer, gradually acquiring the optimal policy.

Random Frequency Hopping Algorithm: This algorithm randomly selects the communication channel and transmission power in each time slot, without considering the behavior of other users.
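The QL baseline's table update is the standard Q-learning rule, $Q(s,a) \leftarrow Q(s,a) + \alpha\,[r + \gamma \max_{b} Q(s',b) - Q(s,a)]$. A minimal dictionary-based sketch follows; the learning rate `alpha`, the state and action encodings, and the function name are illustrative assumptions rather than the baseline's actual settings.

```python
from collections import defaultdict
from typing import DefaultDict, Hashable, Sequence, Tuple

QTable = DefaultDict[Tuple[Hashable, Hashable], float]


def q_update(q: QTable, state: Hashable, action: Hashable, reward: float,
             next_state: Hashable, actions: Sequence[Hashable],
             alpha: float, gamma: float) -> float:
    """One tabular Q-learning step; unseen entries default to 0.0."""
    best_next = max(q[(next_state, b)] for b in actions)
    td_target = reward + gamma * best_next
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]


table: QTable = defaultdict(float)
print(q_update(table, "s0", "a1", 1.0, "s1", ["a0", "a1"], alpha=0.5, gamma=0.9))
```

Because the table needs one entry per state-action pair, its size grows with the product of the discretized state and action counts, which is one reason the QL baseline converges more slowly than the function-approximation methods.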

The comparison algorithms use a centralized learning approach similar to that of MAPPO, but all decisions are made by a single agent and then distributed to the users for execution through the protocol-enhanced low-capacity control channel. Because the DQN and QL algorithms cannot handle continuous action spaces, their power selection is limited to the lower and upper bounds of the transmission power range. For an objective comparison, the comparison algorithms use the same parameter values as the proposed algorithm. The simulation parameters are listed in Table 1.
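Restricting power to the two endpoints turns each user's action set into N channels times 2 power levels, and a single centralized agent must choose among all combinations for M users, that is, (2N)^M joint actions. The sketch enumerates this set; the five channels and the power values used below are hypothetical, while the choice of two users follows the scenario reported in the Conclusions.

```python
from itertools import product
from typing import List, Tuple

UserAction = Tuple[int, float]


def discrete_user_actions(num_channels: int, p_low: float, p_high: float) -> List[UserAction]:
    """Every channel paired with only the two endpoint power levels."""
    return [(c, p) for c in range(num_channels) for p in (p_low, p_high)]


def joint_actions(num_users: int, num_channels: int,
                  p_low: float, p_high: float) -> List[Tuple[UserAction, ...]]:
    """All combinations a single centralized agent must choose from: (2N)^M."""
    per_user = discrete_user_actions(num_channels, p_low, p_high)
    return list(product(per_user, repeat=num_users))


# Two users and a hypothetical five channels
print(len(discrete_user_actions(5, 10.0, 20.0)), len(joint_actions(2, 5, 10.0, 20.0)))
```

For two users and five channels this gives 10 actions per user and 100 joint actions; the count grows exponentially with the number of users.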

Table 1.

Simulation parameters.

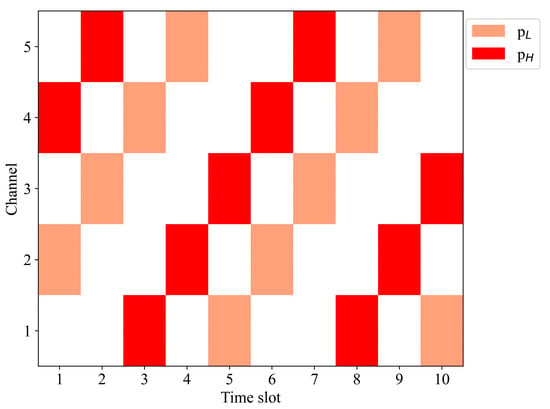

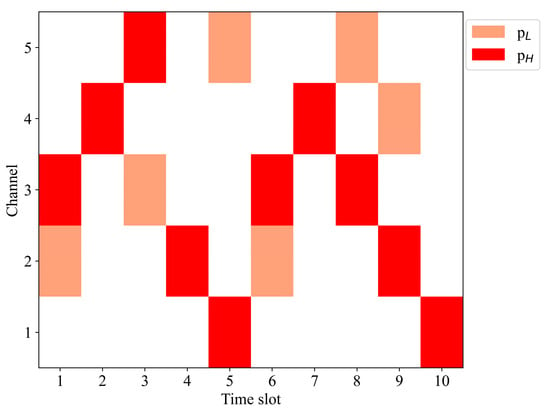

To verify the proposed algorithm, we first consider multiple frequency-sweeping jamming, consisting of low-power and high-power jamming signals. Each jamming signal periodically sweeps across all channels in the target frequency band, as shown in Figure 3, where the two colors of blocks represent low-power jamming and high-power jamming, respectively.
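A frequency-sweeping pattern of this kind can be generated by advancing each jammer one channel per slot and wrapping around the band. The step size of one channel per slot, the starting channels, and the function name are assumptions for illustration; the text states only that each jamming signal periodically sweeps all channels.

```python
from typing import Dict, List


def sweeping_jamming(num_channels: int, num_slots: int,
                     start_channels: Dict[str, int]) -> List[Dict[int, str]]:
    """Return, for each slot, a mapping {jammed channel: jamming level}.

    Each jammer (keyed by its level, e.g. "low" / "high") moves up one channel
    per slot and wraps around, so it revisits every channel once per period.
    """
    pattern = []
    for t in range(num_slots):
        slot = {}
        for level, start in start_channels.items():
            slot[(start + t) % num_channels] = level
        pattern.append(slot)
    return pattern


for t, slot in enumerate(sweeping_jamming(4, 5, {"low": 0, "high": 2})):
    print(t, dict(sorted(slot.items())))
```

Because both jammers advance at the same rate from different starting channels, they never land on the same channel in this sketch.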

Figure 3.

Time–frequency diagram of multiple frequency-sweeping jamming. The two colors of blocks represent low-power and high-power jamming, respectively; both are present in every time slot.

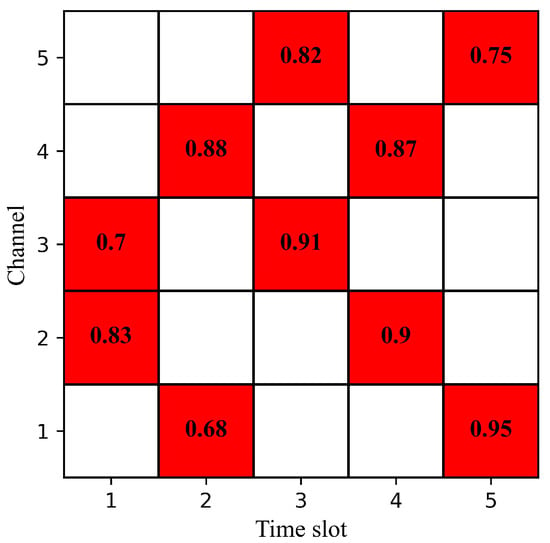

At the same time, this paper also considers dynamic probability jamming patterns [25]: the jammer randomly determines the jamming pattern for the current period according to the probability matrix shown in Figure 4. There are three possibilities for each time slot: no jamming, one high-power jamming, or one high-power jamming along with one low-power jamming. The dynamic probability jamming generated based on the probability matrix in Figure 4 is shown in Figure 5.

Figure 4.

Probability matrix of dynamic probability jamming. The values in the figure are jamming probabilities; at most two jamming signals occur per time slot.

Figure 5.

Dynamic probability jamming. Jamming generated from the probability matrix, with a cycle of five time slots.
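Dynamic probability jamming can be simulated by drawing, in each slot of the five-slot cycle, one of the three patterns described above (no jamming, one high-power jammer, or one high-power plus one low-power jammer) according to that slot's probabilities. The probabilities and channel indices in `EXAMPLE_CYCLE` are invented for illustration and do not reproduce the matrix in Figure 4.

```python
import random
from typing import Dict, List, Tuple

Pattern = Dict[int, str]  # jammed channel -> "high" / "low"

# Hypothetical 5-slot cycle; each slot lists (probability, pattern) options.
EXAMPLE_CYCLE: List[List[Tuple[float, Pattern]]] = [
    [(0.2, {}), (0.5, {0: "high"}), (0.3, {0: "high", 3: "low"})],
    [(0.1, {}), (0.6, {1: "high"}), (0.3, {1: "high", 4: "low"})],
    [(0.3, {}), (0.4, {2: "high"}), (0.3, {2: "high", 0: "low"})],
    [(0.2, {}), (0.5, {3: "high"}), (0.3, {3: "high", 1: "low"})],
    [(0.1, {}), (0.6, {4: "high"}), (0.3, {4: "high", 2: "low"})],
]


def sample_jamming(cycle: List[List[Tuple[float, Pattern]]], num_slots: int,
                   rng: random.Random) -> List[Pattern]:
    """Draw one jamming pattern per slot, repeating the cycle periodically."""
    out: List[Pattern] = []
    for t in range(num_slots):
        options = cycle[t % len(cycle)]
        weights = [w for w, _ in options]
        patterns = [p for _, p in options]
        out.append(dict(rng.choices(patterns, weights=weights, k=1)[0]))
    return out


for t, slot in enumerate(sample_jamming(EXAMPLE_CYCLE, 10, random.Random(0))):
    print(t, slot)
```

Copying each drawn pattern with `dict(...)` keeps later modifications of one slot from silently changing the shared template in the cycle.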

4.2. Simulation Results and Analysis

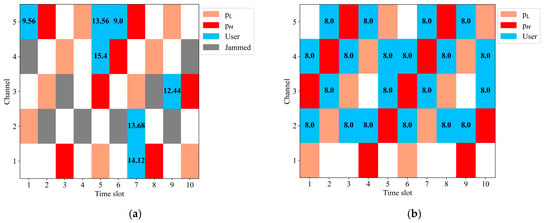

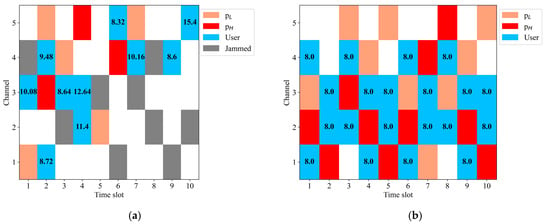

Figure 6 shows the time–frequency diagram of the target frequency band under frequency-sweeping jamming, where the blue blocks represent normal communication for users, and the gray blocks indicate jamming affecting user communication. The numbers in the blue blocks represent the communication power, rounded to two decimal places. Figure 6a displays the time–frequency diagram when the algorithm is untrained, showing that users are easily affected by malicious jamming and interference between users, rendering normal communication impossible. In contrast, Figure 6b displays the time–frequency diagram after the algorithm has converged, indicating that users can completely avoid both jamming and mutual interference, and communicate with the lowest communication power.

Figure 6.

Time–frequency diagram of the target frequency band under frequency-sweeping jamming. The blue color block represents the communication channel selected by the user, and the value in the color block represents the communication power value. (a) Time–frequency diagram when the algorithm is untrained; (b) time–frequency diagram after the algorithm has converged.

Figure 7 shows the time–frequency diagram of the target frequency band under dynamic probability jamming. It is evident that after the proposed algorithm converges, it can effectively avoid both jamming and user-to-user mutual interference, and communicate with the lowest communication power.

Figure 7.

Time–frequency diagram of the target frequency band under dynamic probability jamming. The blue color block represents the communication channel selected by the user, and the value in the color block represents the communication power value. (a) Time–frequency diagram when the algorithm is untrained; (b) time–frequency diagram after the algorithm has converged.

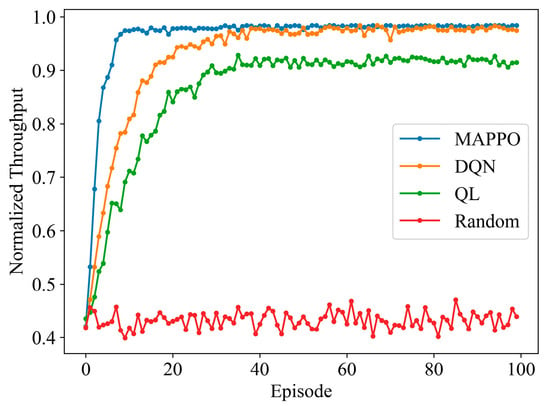

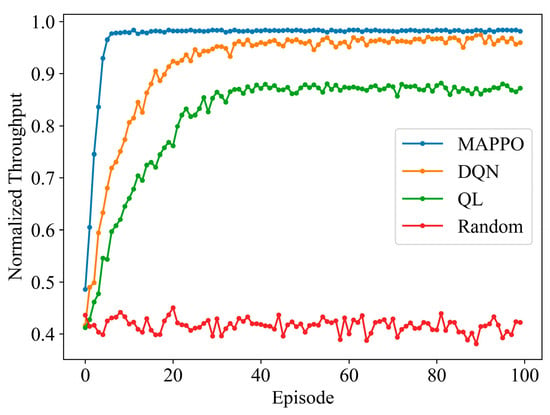

Figure 8 compares the normalized throughput of DQN, QL, random frequency hopping, and the proposed MAPPO-based algorithm under frequency-sweeping jamming. Here, the upper limit of the transmission power range is assumed to counter low-power jamming effectively without causing power loss. Random frequency hopping, which selects communication channels and power levels at random in this jamming environment, achieves an average of 0.432, close to the initial values of the other algorithms. The proposed algorithm begins to converge at the 9th episode and reaches a convergence value of 0.983; the DQN algorithm starts to converge at the 39th episode with a convergence value of 0.977; and the QL algorithm starts to converge at the 37th episode with a convergence value of 0.917. The proposed algorithm converges significantly faster than the compared algorithms and achieves the highest convergence value, which is 0.61% and 7.20% higher than those of the DQN and QL algorithms, respectively.

Figure 8.

Normalized throughput performance of different algorithms under frequency-sweeping jamming.

Figure 9 compares the algorithms under frequency-sweeping jamming when the preset communication power is relatively high. The only difference from the scenario in Figure 8 is that the low-power jamming is set to 8 dBm. With this lower value, the preset high communication power of the comparison algorithms exceeds the corresponding optimal communication power, causing power loss and lowering their rewards. The proposed MAPPO algorithm still converges to 0.983, while the DQN and QL algorithms converge to 0.964 and 0.871, respectively. Compared with Figure 8, the convergence value of the proposed MAPPO algorithm is unchanged, while those of the DQN and QL algorithms decrease by 1.33% and 5.02%, respectively, widening the advantage of the proposed algorithm. In addition, the MAPPO algorithm starts to converge as early as the seventh episode, slightly earlier than in Figure 8. This faster convergence occurs because the optimal communication power for low-power jamming lies inside the transmission power range, and users still obtain a high reward even when transmitting slightly above the optimal power. The proposed MAPPO algorithm is therefore better able to handle cases in which the preset communication power is set incorrectly owing to a lack of prior knowledge about the jamming power.

Figure 9.

Normalized throughput performance of different algorithms under frequency-sweeping jamming with preset communication power being relatively high.

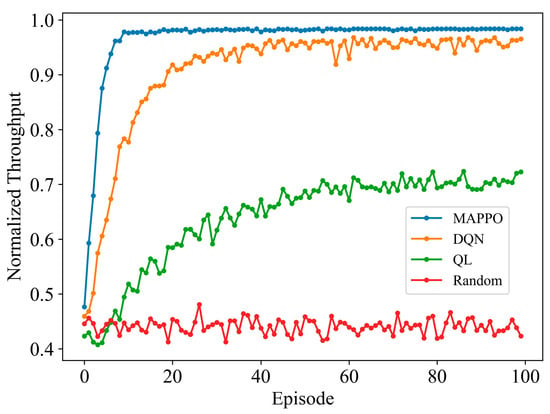

Figure 10 presents a comparison of the normalized throughput performance of the DQN, QL, random frequency hopping, and the proposed MAPPO algorithm under dynamic probability jamming with the preset communication power set relatively high. The proposed MAPPO algorithm converges to 0.984, while the DQN and QL algorithms converge to 0.959 and 0.704, respectively. The proposed algorithm shows a significantly faster convergence rate, achieving convergence values that are 2.61% and 39.77% higher than those of the DQN and QL algorithms, respectively. This demonstrates that the proposed MAPPO algorithm continues to perform excellently under dynamic probability jamming, exhibiting faster convergence, higher convergence values, and greater stability.

Figure 10.

Normalized throughput of different algorithms under dynamic probability jamming with preset communication power set relatively high.
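The percentage differences reported for Figures 8 to 10 are relative to the baseline value, (new − baseline) / baseline × 100. The short check below recomputes them from the convergence values quoted in the text; the helper name is illustrative.

```python
def relative_change_pct(new: float, baseline: float) -> float:
    """Relative change of `new` with respect to `baseline`, in percent."""
    return (new - baseline) / baseline * 100.0


# (description, new value, baseline value) as quoted in the text
REPORTED = [
    ("Fig. 8: MAPPO vs DQN", 0.983, 0.977),
    ("Fig. 8: MAPPO vs QL", 0.983, 0.917),
    ("Fig. 9 vs Fig. 8: DQN", 0.964, 0.977),
    ("Fig. 9 vs Fig. 8: QL", 0.871, 0.917),
    ("Fig. 10: MAPPO vs DQN", 0.984, 0.959),
    ("Fig. 10: MAPPO vs QL", 0.984, 0.704),
]

for label, new, base in REPORTED:
    print(f"{label}: {relative_change_pct(new, base):+.2f}%")
```

The printed values agree with the 0.61%, 7.20%, 1.33%, 5.02%, 2.61%, and 39.77% figures stated in the discussion, confirming that all percentages are relative rather than absolute differences.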

5. Conclusions

This paper proposes a wireless communication intelligent anti-jamming algorithm based on MAPPO to address the issues of multi-user scenarios and a lack of prior knowledge about jamming power. Simulation results demonstrate that, compared to the DQN, QL, and random frequency hopping algorithms, the proposed algorithm converges significantly faster and achieves higher convergence values. Furthermore, in situations where the preset communication power is set too high due to the absence of jamming power prior knowledge, the convergence advantage of the proposed algorithm is even more pronounced. The algorithm proposed in this paper performs well in a scenario with two users and also shows potential for application in scenarios with more users and more complex jamming. In future work, we will explore the performance of the proposed algorithm in more complex scenarios, particularly in situations where users cannot completely avoid jamming in the frequency domain.

Author Contributions

Conceptualization, F.Z., Y.N. and W.Z.; methodology, F.Z., Y.N. and W.Z.; software, F.Z. and W.Z.; validation, F.Z. and W.Z.; formal analysis, F.Z., Y.N. and W.Z.; investigation, F.Z. and W.Z.; resources, F.Z. and W.Z.; data curation, F.Z. and W.Z.; writing—original draft preparation, F.Z. and W.Z.; writing—review and editing, Y.N.; visualization, F.Z. and W.Z.; supervision, Y.N.; project administration, Y.N.; funding acquisition, Y.N. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China, grant number 62371461.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

Author Wenhao Zhou was employed by Guizhou Space Appliance Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

1. Zuo, Y.; Guo, J.; Gao, N.; Zhu, Y.; Jin, S.; Li, X. A survey of blockchain and artificial intelligence for 6G wireless communications. IEEE Commun. Surv. Tutor. 2023, 25, 2494–2528.
2. Pirayesh, H.; Zeng, H. Jamming attacks and anti-jamming strategies in wireless networks: A comprehensive survey. IEEE Commun. Surv. Tutor. 2022, 24, 767–809.
3. Torrieri, D. Principles of Spread-Spectrum Communication Systems; Springer Publishing Company: Berlin/Heidelberg, Germany, 2015.
4. Yao, F. Communication Anti-Jamming Engineering and Practice; Publishing House of Electronics Industry: Beijing, China, 2012.
5. Aref, M.A.; Jayaweera, S.K.; Yepez, E. Survey on cognitive anti-jamming communications. IET Commun. 2020, 14, 3110–3127.
6. Bell, J. What is machine learning. In Machine Learning and the City: Applications in Architecture and Urban Design; John Wiley & Sons: Hoboken, NJ, USA, 2022; Volume 9, pp. 207–216.
7. Zhou, Q.; Niu, Y.; Xiang, P.; Li, Y. Intra-domain knowledge reuse assisted reinforcement learning for fast Anti-Jamming communication. IEEE Trans. Inf. Forensics Secur. 2023, 18, 4707–4720.
8. Li, Y.; Xu, Y.; Wang, X.; Li, W.; Bai, W. Power and Frequency Selection optimization in Anti-Jamming Communication: A Deep Reinforcement Learning Approach. In Proceedings of the IEEE 5th International Conference on Computer and Communications, Chengdu, China, 6–9 December 2019; pp. 815–820.
9. Yuan, H.; Song, F.; Chu, X.; Li, W.; Wang, X.; Han, H.; Gong, Y. Joint Relay and Channel Selection Against Mobile and Smart Jammer: A Deep Reinforcement Learning Approach. IET Commun. 2021, 15, 2237–2251.
10. Foerster, J.N.; Assael, Y.M.; Freitas, N.D.; Whiteson, S. Learning to communicate with Deep multi-agent reinforcement learning. In Proceedings of the 30th International Conference on Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; pp. 2145–2153.
11. Gao, A.; Du, C.; Ng, S.X.; Liang, W. A Cooperative Spectrum Sensing With Multi-Agent Reinforcement Learning Approach in Cognitive Radio Networks. IEEE Commun. Lett. 2021, 25, 2604–2608.
12. Yu, C.; Velu, A.; Vinitsky, E.; Gao, J.; Wang, Y.; Bayen, A.; Wu, Y. The surprising effectiveness of PPO in cooperative, multi-agent games. arXiv 2022, arXiv:2103.01955.
13. He, Y.; Gang, X.; Gao, Y. Intelligent Decentralized Multiple Access via Multi-Agent Deep Reinforcement Learning. In Proceedings of the IEEE Wireless Communications and Networking Conference, Dubai, United Arab Emirates, 21–24 April 2024; pp. 1–6.
14. Sun, Y.; He, Q. Computational Offloading for MEC Networks with Energy Harvesting: A Hierarchical Multi-Agent Reinforcement Learning Approach. Electronics 2023, 12, 1304.
15. Pu, Z.; Niu, Y.; Zhang, G. A Multi-Parameter Intelligent Communication Anti-Jamming Method Based on Three-Dimensional Q-Learning. In Proceedings of the IEEE 2nd International Conference on Computer Communication and Artificial Intelligence, Beijing, China, 6–8 May 2022; pp. 205–210.
16. Zhou, Q.; Niu, Y.; Xiang, W.; Zhao, L. A Novel Reinforcement Learning Algorithm Based on Broad Learning System for Fast Communication Anti-jamming. IEEE Trans. Ind. Inform. 2024, 1–10.
17. Mughal, M.O.; Nawaz, T.; Marcenaro, L.; Regazzoni, C.S. Cyclostationary-based jammer detection algorithm for wide-band radios using compressed sensing. In Proceedings of the IEEE Global Conference on Signal and Information Processing, Orlando, FL, USA, 14–16 December 2015; pp. 280–284.
18. Chang, X.; Li, Y.B.; Zhao, Y.; Du, Y.F.; Liu, D.H. An Improved Anti-Jamming Method Based on Deep Reinforcement Learning and Feature Engineering. IEEE Access 2022, 10, 69992–70000.
19. Niu, Y.; Zhou, Z.; Pu, Z.; Wan, B. Anti-Jamming Communication Using Slotted Cross Q Learning. Electronics 2023, 12, 2879.
20. Watkins, C.J.C.H. Learning from Delayed Rewards. Ph.D. Thesis, University of Cambridge, Cambridge, UK, 1989.
21. Lin, X.; Liu, A.; Han, C.; Liang, X.; Sun, Y.; Ding, G.; Zhou, H. Intelligent Adaptive MIMO Transmission for Nonstationary Communication Environment: A Deep Reinforcement Learning Approach. IEEE Trans. Commun. 2025.
22. Zuo, J.; Ai, Q.; Wang, W.; Tao, W. Day-Ahead Economic Dispatch Strategy for Distribution Networks with Multi-Class Distributed Resources Based on Improved MAPPO Algorithm. Mathematics 2024, 12, 3993.
23. Ibrahim, K.; Ng, S.X.; Qureshi, I.M.; Malik, A.N.; Muhaidat, S. Anti-Jamming Game to Combat Intelligent Jamming for Cognitive Radio Networks. IEEE Access 2021, 9, 137941–137956.
24. Mnih, V.; Kavukcuoglu, K.; Silver, D.; Graves, A.; Antonoglou, I.; Wierstra, D.; Riedmiller, M. Playing Atari with Deep Reinforcement Learning. arXiv 2013, arXiv:1312.5602.
25. Wang, Y.; Niu, Y.; Chen, J.; Fang, F.; Han, C. Q-Learning Based Adaptive Frequency Hopping Strategy Under Probabilistic Jamming. In Proceedings of the 2019 11th International Conference on Wireless Communications and Signal Processing, Xi'an, China, 23–25 October 2019; pp. 1–7.

Disclaimer/Publisher's Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).