BiGRMT: Bidirectional GRU–Recurrent Memory Transformer for Efficient Long-Sequence Anomaly Detection in High-Concurrency Microservices

Abstract

1. Introduction

2. Related Work

3. Problem Description

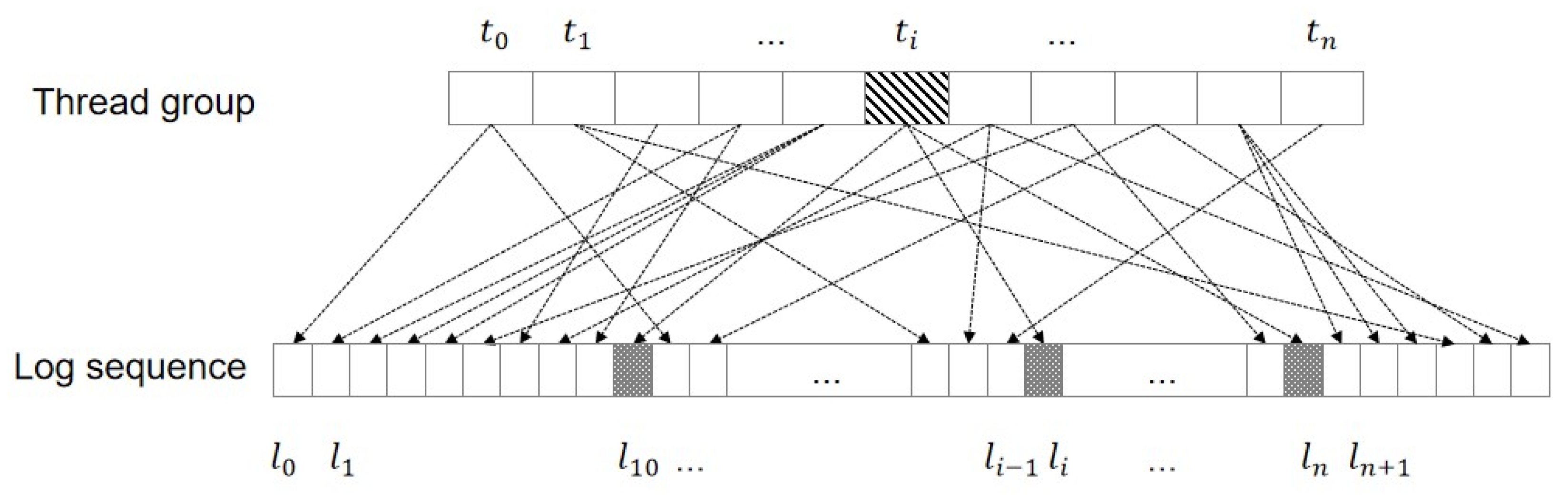

3.1. Log Data Have Strong Uncertainty in Their Time Series Attributes

3.2. Data Redundancy for High-Concurrency Logs

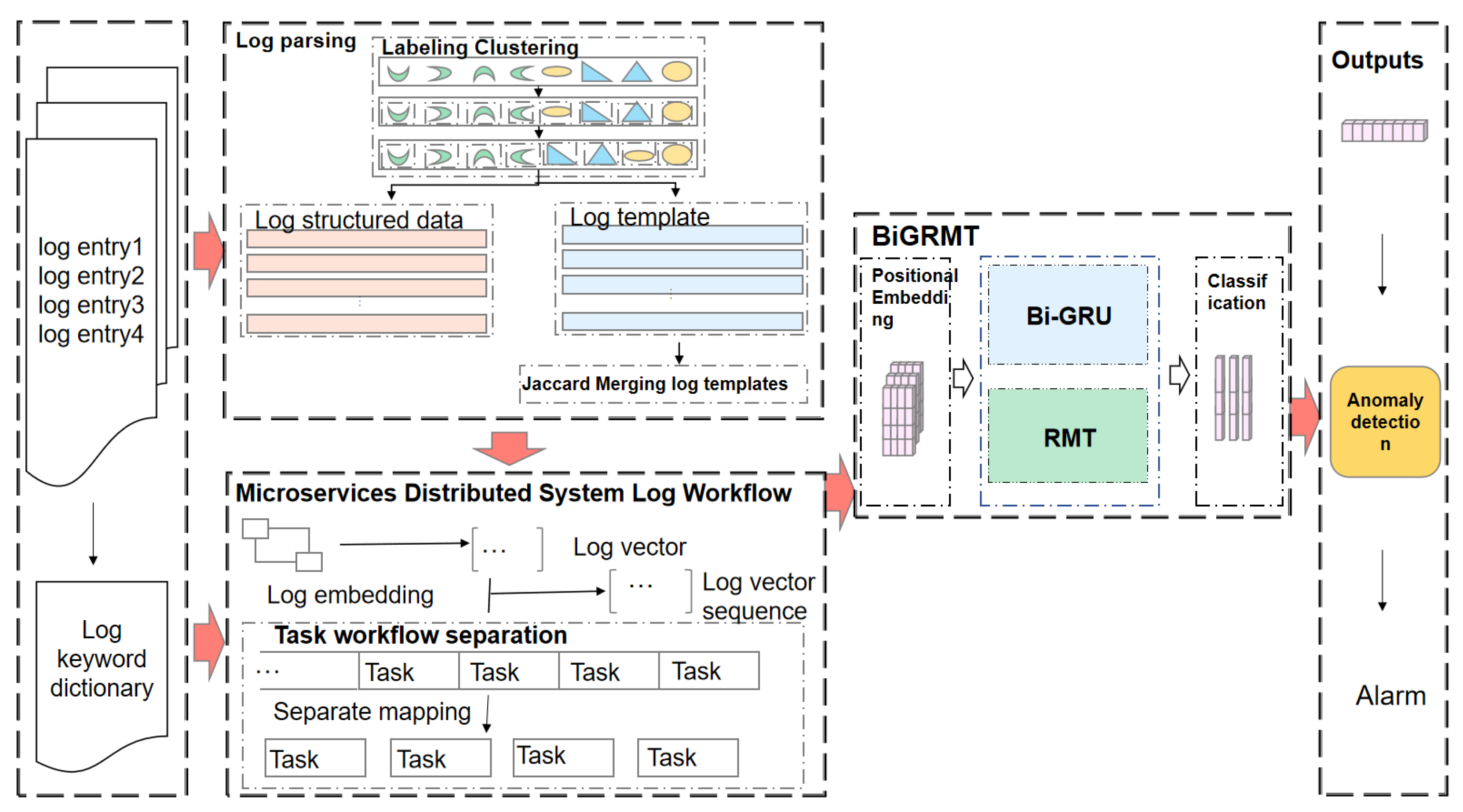

4. System Design

5. BiGRMT Anomaly Detection Model

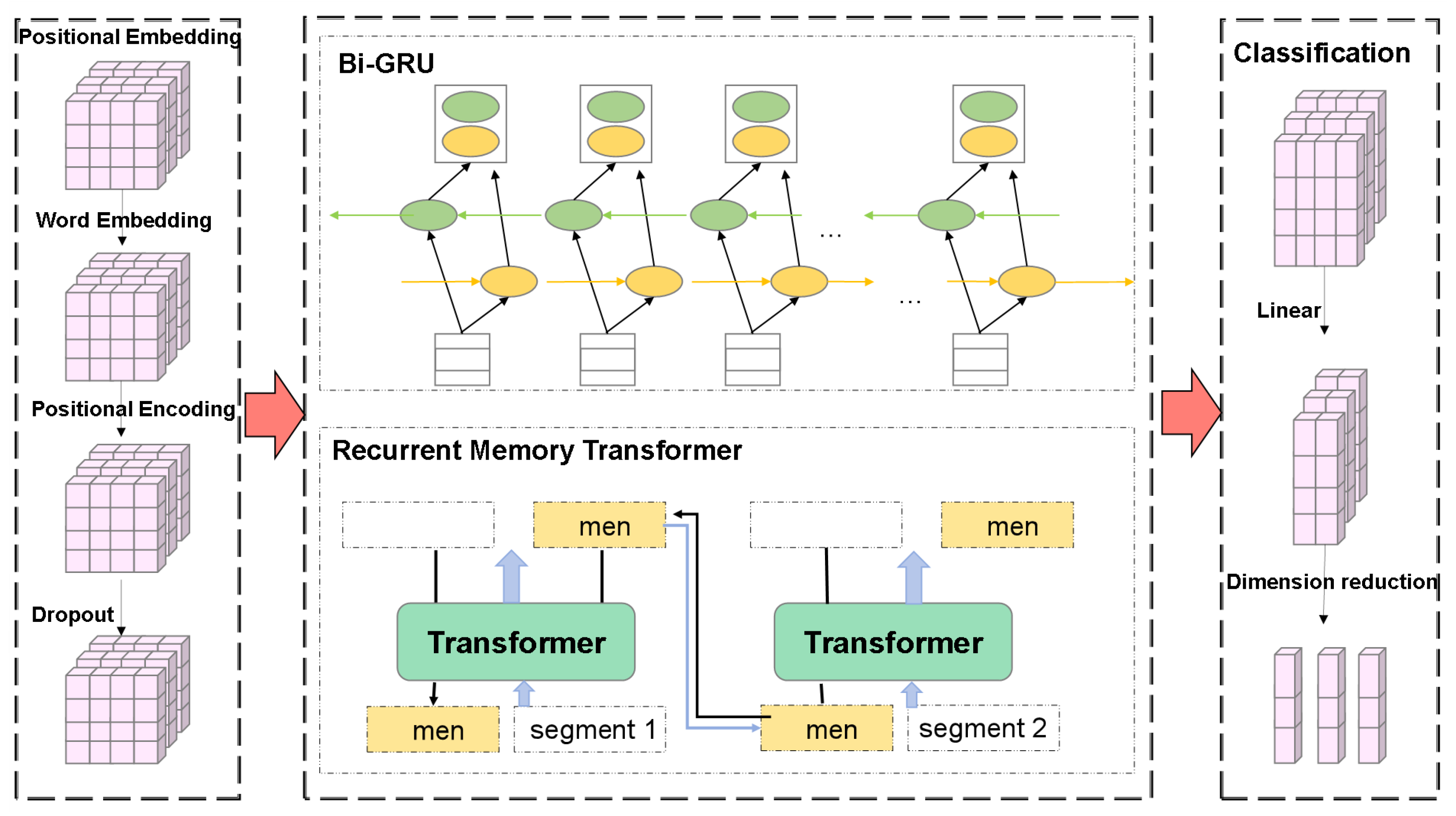

5.1. Input Layer

5.2. Model Layer

5.3. Output Layer

6. Experiments and Results

6.1. Experimental Setup

6.2. Experimental Results and Analysis

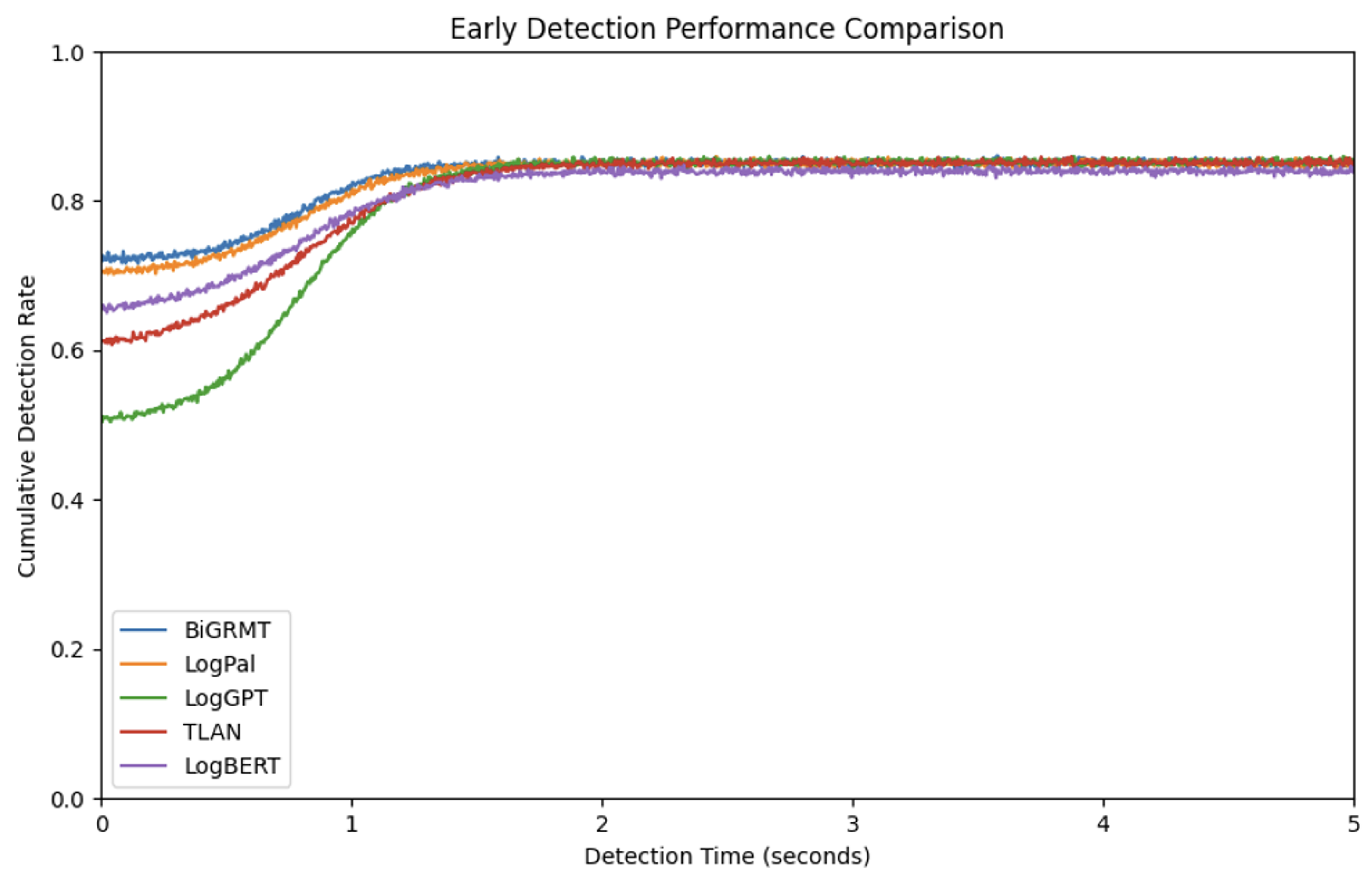

6.3. Performance Evaluation

6.4. Ablation Experiment

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Abgaz, Y.; McCarren, A.; Elger, P.; Solan, D.; Lapuz, N.; Bivol, M.; Jackson, G.; Yilmaz, M.; Buckley, J.; Clarke, P. Decomposition of monolith applications into microservices architectures: A systematic review. IEEE Trans. Softw. Eng. 2023, 49, 4213–4242.

- Razzaq, A.; Ghayyur, S.A. A systematic mapping study: The new age of software architecture from monolithic to microservice architecture—Awareness and challenges. Comput. Appl. Eng. Educ. 2023, 31, 421–451.

- Diaz-De-Arcaya, J.; Torre-Bastida, A.I.; Zárate, G.; Miñón, R.; Almeida, A. A joint study of the challenges, opportunities, and roadmap of mlops and aiops: A systematic survey. ACM Comput. Surv. 2023, 56, 1–30.

- Guo, H.; Yang, J.; Liu, J.; Bai, J.; Wang, B.; Li, Z.; Zheng, T.; Zhang, B.; Peng, J.; Tian, Q. Logformer: A pre-train and tuning pipeline for log anomaly detection. Proc. AAAI Conf. Artif. Intell. 2024, 38, 135–143.

- Lee, Y.; Kim, J.; Kang, P. Lanobert: System log anomaly detection based on bert masked language model. Appl. Soft Comput. 2023, 146, 110689.

- Fu, Q.; Lou, J.G.; Wang, Y.; Li, J. Execution anomaly detection in distributed systems through unstructured log analysis. In Proceedings of the 2009 Ninth IEEE International Conference on Data Mining, Miami, FL, USA, 6–9 December 2009; pp. 149–158.

- Lin, Q.; Zhang, H.; Lou, J.G.; Zhang, Y.; Chen, X. Log clustering based problem identification for online service systems. In Proceedings of the 38th International Conference on Software Engineering Companion, Austin, TX, USA, 14–22 May 2016; pp. 102–111.

- Yang, L.; Chen, J.; Wang, Z.; Wang, W.; Jiang, J.; Dong, X.; Zhang, W. Semi-supervised log-based anomaly detection via probabilistic label estimation. In Proceedings of the 2021 IEEE/ACM 43rd International Conference on Software Engineering (ICSE), Virtual Event, 25–28 May 2021; pp. 1448–1460.

- Le, V.H.; Zhang, H. Log-based anomaly detection without log parsing. In Proceedings of the 2021 36th IEEE/ACM International Conference on Automated Software Engineering (ASE), Melbourne, Australia, 15–19 November 2021; pp. 492–504.

- Qi, J.; Huang, S.; Luan, Z.; Yang, S.; Fung, C.; Yang, H.; Qian, D.; Shang, J.; Xiao, Z.; Wu, Z. Loggpt: Exploring chatgpt for log-based anomaly detection. In Proceedings of the 2023 IEEE International Conference on High Performance Computing & Communications, Data Science & Systems, Smart City & Dependability in Sensor, Cloud & Big Data Systems & Application (HPCC/DSS/SmartCity/DependSys), Melbourne, Australia, 17–21 December 2023; pp. 273–280.

- Liu, Y.; Ren, S.; Wang, X.; Zhou, M. Temporal logical attention network for log-based anomaly detection in distributed systems. Sensors 2024, 24, 7949.

- Zhang, L.; Jia, T.; Jia, M.; Li, Y.; Yang, Y.; Wu, Z. Multivariate log-based anomaly detection for distributed database. In Proceedings of the 30th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Barcelona, Spain, 25–29 August 2024; pp. 4256–4267.

- Sun, L.; Xu, X. LogPal: A generic anomaly detection scheme of heterogeneous logs for network systems. Secur. Commun. Netw. 2023, 2023, 2803139.

- Xie, Y.; Zhang, H.; Babar, M.A. Loggd: Detecting anomalies from system logs with graph neural networks. In Proceedings of the 2022 IEEE 22nd International Conference on Software Quality, Reliability and Security (QRS), Guangzhou, China, 5–9 December 2022; pp. 299–310.

- Catillo, M.; Pecchia, A.; Villano, U. AutoLog: Anomaly detection by deep autoencoding of system logs. Expert Syst. Appl. 2022, 191, 116263.

- Beltagy, I.; Peters, M.E.; Cohan, A. Longformer: The long-document transformer. arXiv 2020, arXiv:2004.05150.

- Kitaev, N.; Kaiser, Ł.; Levskaya, A. Reformer: The efficient transformer. arXiv 2020, arXiv:2001.04451.

- Burtsev, M.S.; Kuratov, Y.; Peganov, A.; Sapunov, G.V. Memory transformer. arXiv 2020, arXiv:2006.11527.

- Meng, W.; Liu, Y.; Zhang, S.; Zaiter, F.; Zhang, Y.; Huang, Y.; Yu, Z.; Zhang, Y.; Song, L.; Zhang, M.; et al. Logclass: Anomalous log identification and classification with partial labels. IEEE Trans. Netw. Serv. Manag. 2021, 18, 1870–1884.

- Meng, W.; Liu, Y.; Zhu, Y.; Zhang, S.; Pei, D.; Liu, Y.; Chen, Y.; Zhang, R.; Tao, S.; Sun, P.; et al. Loganomaly: Unsupervised detection of sequential and quantitative anomalies in unstructured logs. In Proceedings of the 28th International Joint Conference on Artificial Intelligence, Macao, China, 10–16 August 2019; Volume 19, pp. 4739–4745.

- Li, X.; Chen, P.; Jing, L.; He, Z.; Yu, G. Swisslog: Robust and unified deep learning based log anomaly detection for diverse faults. In Proceedings of the 2020 IEEE 31st International Symposium on Software Reliability Engineering (ISSRE), Online, 12–15 October 2020; pp. 92–103.

- Si, C.; Yu, W.; Zhou, P.; Zhou, Y.; Wang, X.; Yan, S. Inception transformer. In Advances in Neural Information Processing Systems; Curran Associates Inc.: Red Hook, NY, USA, 2022; Volume 35, pp. 23495–23509.

- Zhang, L.; Jia, T.; Wang, K.; Jia, M.; Yang, Y.; Li, Y. Reducing events to augment log-based anomaly detection models: An empirical study. In Proceedings of the 18th ACM/IEEE International Symposium on Empirical Software Engineering and Measurement, Barcelona, Spain, 24–25 October 2024; pp. 538–548.

- Cho, K.; Van Merriënboer, B.; Gulcehre, C.; Bahdanau, D.; Bougares, F.; Schwenk, H.; Bengio, Y. Learning phrase representations using RNN encoder-decoder for statistical machine translation. arXiv 2014, arXiv:1406.1078.

- Bulatov, A.; Kuratov, Y.; Kapushev, Y.; Burtsev, M. Beyond attention: Breaking the limits of transformer context length with recurrent memory. Proc. AAAI Conf. Artif. Intell. 2024, 38, 17700–17708.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Advances in Neural Information Processing Systems; Curran Associates Inc.: Red Hook, NY, USA, 2017; Volume 30.

- Zhu, J.; He, S.; He, P.; Liu, J.; Lyu, M.R. Loghub: A large collection of system log datasets for ai-driven log analytics. In Proceedings of the 2023 IEEE 34th International Symposium on Software Reliability Engineering (ISSRE), Florence, Italy, 9–12 October 2023; pp. 355–366.

- Guo, H.; Yuan, S.; Wu, X. Logbert: Log anomaly detection via bert. In Proceedings of the 2021 International Joint Conference on Neural Networks (IJCNN), Shenzhen, China, 18–22 July 2021; pp. 1–8.

| Metric | LogGPT [10] | TLAN [11] | LogPal [13] | LogBERT [28] | BiGRMT |
|---|---|---|---|---|---|
| Precision (%) | 48.7 | 91.2 | 98.3 | 87.0 | 91.3 |
| Recall (%) | 48.9 | 89.4 | 98.3 | 78.1 | 88.8 |
| F1-Score (%) | 55.6 | 90.3 | 98.6 | 82.3 | 90.0 |
| Computational Complexity | | | | | |

| Dataset | Method | F1-Score (%) | Precision (%) | Recall (%) |
|---|---|---|---|---|
| Spark | LogGPT | 55.7 | 38.6 | 100.0 |
| Spark | TLAN | 89.7 | 89.1 | 90.3 |
| Spark | LogPal | 99.0 | 99.0 | 99.0 |
| Spark | BiGRMT | 90.0 | 91.3 | 88.8 |
| HDFS | LogGPT | 50.7 | 34.0 | 100.0 |
| HDFS | TLAN | 91.5 | 90.9 | 92.1 |
| HDFS | LogPal | 99.0 | 98.0 | 99.0 |
| HDFS | BiGRMT | 89.7 | 90.3 | 89.2 |
| BGL | LogGPT | 44.4 | 28.6 | 100.0 |
| BGL | TLAN | 89.3 | 88.7 | 89.9 |
| BGL | LogPal | 98.0 | 98.0 | 97.0 |
| BGL | BiGRMT | 91.3 | 92.2 | 90.5 |
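
The F1-scores in the per-dataset table can be related to the precision and recall columns. The following is a minimal Python sketch, assuming the standard harmonic-mean definition of F1 (the paper's own metric definitions are not reproduced in this extract); `f1_score` is a hypothetical helper name, not code from the authors. Because the published values are rounded, not every row will reproduce to the last digit.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (inputs and result in percent).

    Assumption: the standard F1 definition; not taken from the paper's text.
    """
    if precision + recall == 0:
        return 0.0  # avoid division by zero when both metrics are zero
    return 2 * precision * recall / (precision + recall)


# Spark / LogGPT row from the table: precision 38.6 %, recall 100.0 %
print(round(f1_score(38.6, 100.0), 1))  # 55.7

# HDFS / LogGPT row from the table: precision 34.0 %, recall 100.0 %
print(round(f1_score(34.0, 100.0), 1))  # 50.7
```

Both computed values match the F1-Score column for those rows, which illustrates why a method with perfect recall but low precision still scores poorly on F1.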

| Condition | Model | F1-Score (%) | FAR (%) | DL (s) |
|---|---|---|---|---|
| Normal | LogPal | 99.2 | 2.03 | 1.30 |
| Normal | BiGRMT | 90.3 | 5.12 | 1.45 |
| High Load | LogPal | 82.8 | 10.07 | 1.75 |
| High Load | BiGRMT | 87.4 | 7.25 | 1.56 |
| Noisy Logs | LogPal | 78.0 | 16.34 | 1.85 |
| Noisy Logs | BiGRMT | 83.6 | 12.08 | 1.68 |
| Missing Data | LogPal | 74.1 | 19.57 | 1.92 |
| Missing Data | BiGRMT | 80.2 | 15.14 | 1.72 |
| Multiple Anomalies | LogPal | 68.8 | 25.62 | 2.05 |
| Multiple Anomalies | BiGRMT | 75.4 | 20.49 | 1.80 |

| Sequence Length | GPU Memory Usage (MB) | Inference Time (ms) |
|---|---|---|
| 100 | 1200 | 15 |
| 200 | 1400 | 18 |
| 500 | 1800 | 25 |
| 1000 | 2200 | 35 |
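
One way to read the sequence-length table is to compare how fast resource usage grows relative to the input length. The sketch below is illustrative only: the dictionary copies the four rows of the table, and `growth_factors` is a hypothetical helper introduced here, not part of the paper.

```python
# Values copied from the table:
# sequence length -> (GPU memory in MB, inference time in ms)
measurements = {
    100: (1200, 15),
    200: (1400, 18),
    500: (1800, 25),
    1000: (2200, 35),
}


def growth_factors(data, base_len, target_len):
    """Return (length ratio, memory ratio, time ratio) between two table rows."""
    base_mem, base_time = data[base_len]
    target_mem, target_time = data[target_len]
    return target_len / base_len, target_mem / base_mem, target_time / base_time


length_x, mem_x, time_x = growth_factors(measurements, 100, 1000)
print(f"{length_x:.0f}x length -> {mem_x:.2f}x memory, {time_x:.2f}x time")
# prints: 10x length -> 1.83x memory, 2.33x time
```

On these reported figures, a tenfold increase in sequence length costs less than a twofold increase in GPU memory and a little over a twofold increase in inference time, that is, both grow sub-linearly over the measured range.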

| Model | Params (M) | Latency (ms) |
|---|---|---|
| LogGPT [10] | 175.2 | 120.5 |
| TLAN [11] | 68.7 | 45.2 |
| LogPal [13] | 92.3 | 65.8 |
| LogBERT [28] | 110.4 | 78.6 |
| BiGRMT | 45.1 | 35.0 |

| Model Configuration | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|
| BiGRMT | 91.3 | 88.8 | 90.0 |
| BiGRMT without Bi-GRU | 87.8 | 84.5 | 86.1 |
| BiGRMT without RMT | 91.1 | 88.4 | 89.7 |
| BiGRMT without Adaptive Filtering | 90.0 | 87.5 | 88.7 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, R.; Zhang, R.; Wang, S.; Yang, K.; Xu, M.; Qiao, D.; Hu, X. BiGRMT: Bidirectional GRU–Recurrent Memory Transformer for Efficient Long-Sequence Anomaly Detection in High-Concurrency Microservices. Electronics 2025, 14, 4754. https://doi.org/10.3390/electronics14234754
