1. Introduction

Over the past few years, significant advancements in radar miniaturization have facilitated the deployment of radar systems on mobile platforms like unmanned aerial vehicles (UAVs) and unmanned ground vehicles (UGVs) [

1], thereby opening up novel opportunities for close-range remote sensing applications [

2]. These miniaturized radar systems (MRSs) have revolutionized various fields, including infrastructure monitoring [

3], 3D urban modeling [

4], security screening, and agricultural surveillance [

5] by providing higher revisit frequency, operational flexibility, and enhanced spatial resolution compared to traditional airborne and spaceborne platforms [

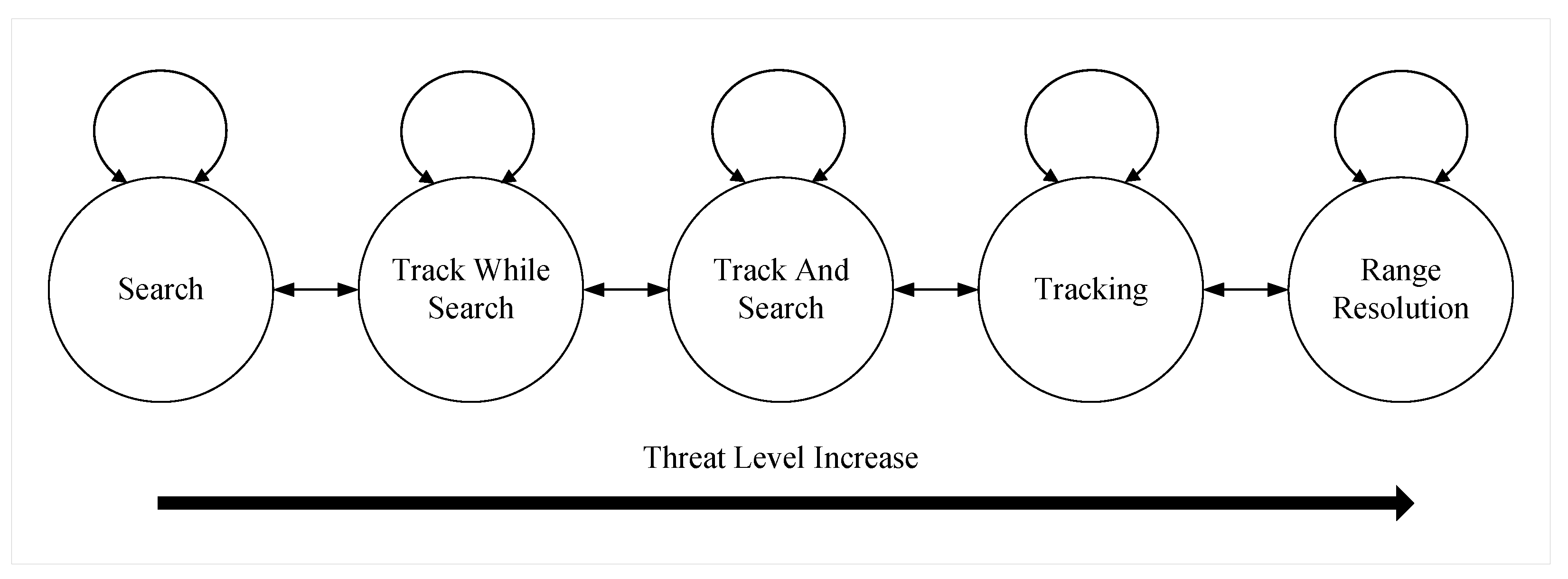

6]. However, advances in radar technology have rendered single-function radars insufficient in modern complex electromagnetic environments (CEE). This leads to the development of multi-function radars (MFR) that are flexible, feature diverse modes, and possess strong anti-interference capabilities [

7]. Unlike traditional single-function radars, MFRs can flexibly switch between various operating modes, including search, tracking, and identification [

8]. Notably, advancements in beam-scanning radar technologies have further enhanced the flexibility of MFRs in complex electromagnetic environments [

9,

10]. This flexibility enables MFRs to produce diverse radar signal characteristics, creating an electromagnetic landscape filled with heterogeneous signals [

11,

12]. And many other studies demonstrate that MFRs have been widely deployed and are now a dominant presence in modern electromagnetic environments [

13,

14,

15]. This inevitably leads to the MRS being detected and tracked by the MFR’s radar during close-range remote sensing missions. In most scenarios, the MRS does not wish to be exposed within the MFR’s field of view. This means these MRSs have to flexibly adjust their electromagnetic working modes to avoid being detected and tracked by MFRs [

16]. Therefore, designing a robust MRSs electromagnetic working mode decision-making (EWMDM) algorithm is essential.

EWMDM methods must cope with the flexible working states of MFRs in CEE, so reinforcement learning (RL) algorithms [17] are a natural decision-making tool. Their strong generalization and autonomous learning capabilities match the challenges MRSs face in CEE [18]. RL algorithms have achieved remarkable results in domains such as gaming, virtual environments, robotic control, autonomous driving, and financial trading [19,20,21], and they have also been applied to EWMDM.

Li et al. proposed an EWMDM method based on improved Q-learning [22]. It uses simulated annealing to strengthen the exploration strategy and so makes electromagnetic working mode decisions more efficient. Their experiments show that the improved Q-learning algorithm explores thoroughly and converges faster to a better solution.

Xu et al. introduced an improved Wolpertinger architecture based on the soft actor–critic (SAC) algorithm [23]. It significantly accelerated the convergence of EWMDM in real electromagnetic environments. Because it builds on an advanced RL algorithm, their method performed well across a variety of scenarios.

Zhang et al. applied an improved sparrow search algorithm–support vector machine (ISSA-SVM) to evaluate EWMDM effects. They also designed a comprehensive interactive CEE using a heuristically accelerated Q-learning algorithm [24], and thereby modeled the complete EWMDM process. To further improve the efficiency and adaptability of electromagnetic working mode decisions, Zhang et al. proposed a hybrid of ant colony optimization and Q-learning [25]. Their experiments show that the two algorithms combine well in cooperative EWMDM scenarios. Zhang et al. also simulated a scenario in which multiple MRSs cooperate against an MFR. They analyzed an EWMDM model based on a double deep Q-network with prioritized experience replay (PER-DDQN) [26]. Finally, Zhang et al. built a frequency-domain model of cooperative EWMDM and introduced the design idea of hierarchical reinforcement learning (HRL) [27]. Their method achieves intelligent EWMDM in the frequency domain.

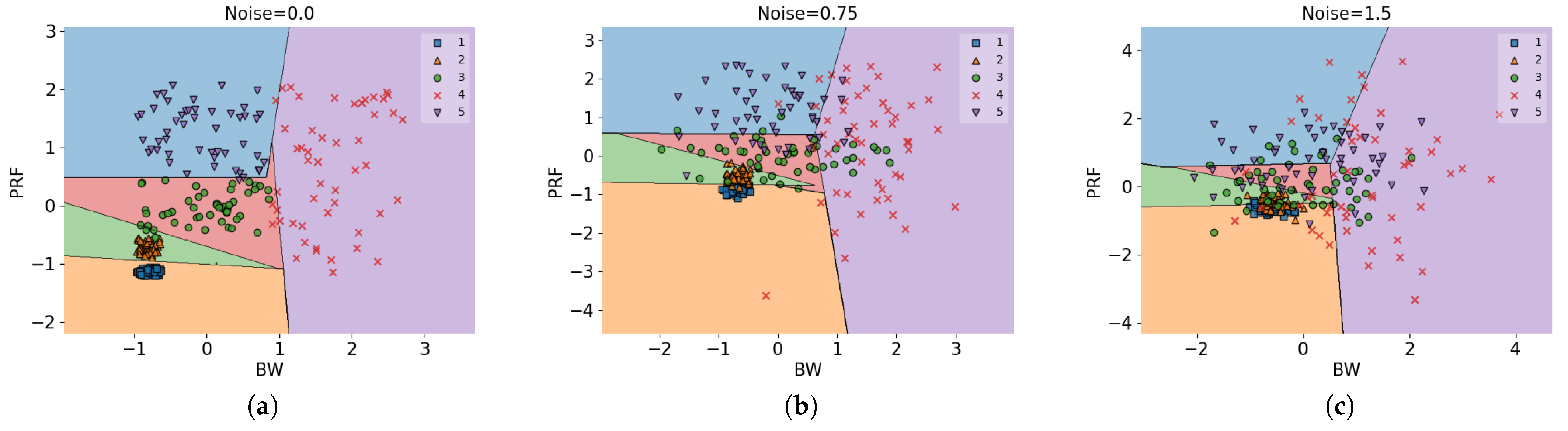

However, the EWMDM models in these studies do not consider how CEE noise affects decision-making algorithms. They also idealistically assume that the MRS can identify the MFR working state accurately. Both assumptions differ significantly from conditions in real CEE. RL-based EWMDM methods therefore still need improvement in three areas:

(1) The MRS cannot identify the MFR working state perfectly, so the model must represent the identification process explicitly.
(2) Noise in CEE cannot be ignored, so reward functions must be designed to account for environmental noise.
(3) Because the environment is noisy and stochastic, EWMDM methods better suited to this scenario are needed.

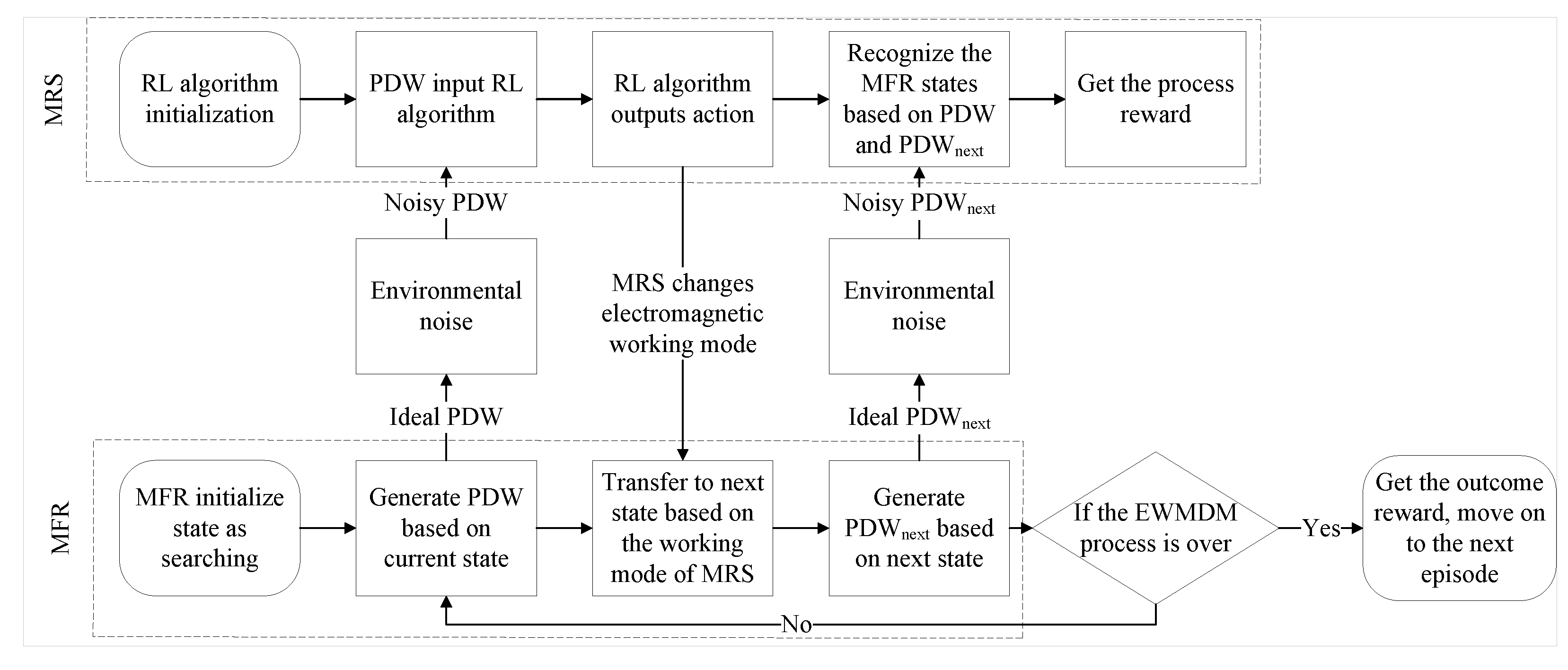

To address these limitations, we develop an EWMDM model for MRSs facing MFRs in noisy CEE and propose an improved RL algorithm tailored to the noisy environment. Our model simulates the process from the MRS receiving pulse description words (PDWs) to making electromagnetic working mode decisions. It also modifies the reward function to reduce the impact of noise. We then deploy SAC and SAC with prioritized experience replay (SAC-PER) on the proposed model and analyze the shortcomings of SAC-PER in CEE. Correcting these shortcomings yields SAC with alpha decay prioritized experience replay (SAC-ADPER). Finally, we conduct multiple simulation experiments to validate the model and algorithm. The main contributions of this paper are as follows:

To approximate real-world conditions, we assume that the MRS identifies the MFR's working state from PDWs extracted from the MFR's transmitted waveform. These PDWs inherently contain some environmental noise. Incorporating this noise uncertainty makes the EWMDM model reflect practical application scenarios more closely.

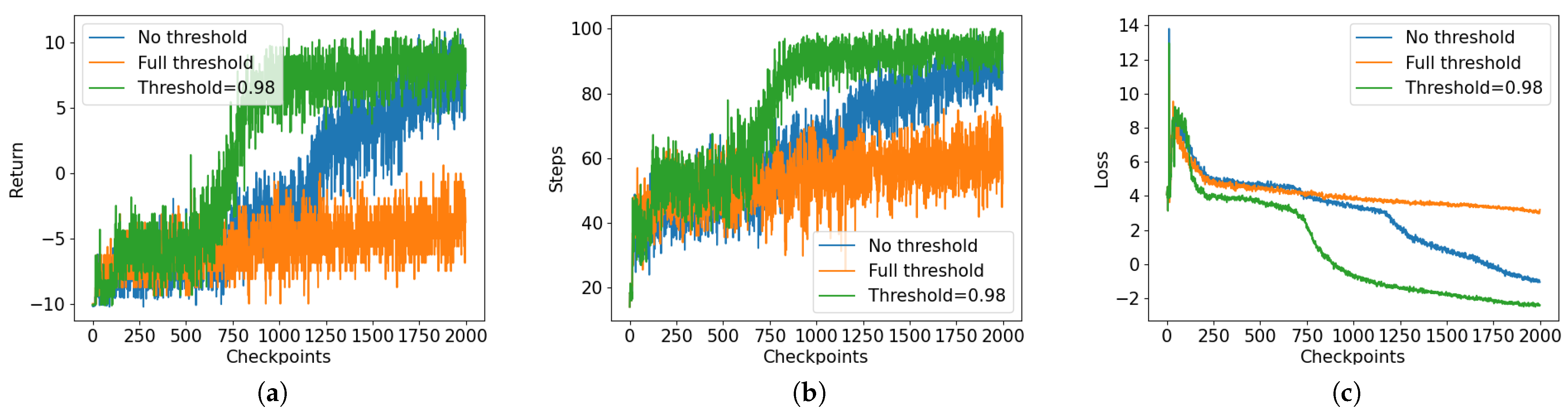

To reduce the impact of noise on EWMDM, we design a probability threshold mechanism that filters out uncertain MFR states. The mechanism makes the MRS's reward function somewhat sparser. In exchange, it substantially reduces misleading guidance from the reward function and so makes the model more robust.

The stochasticity of the EWMDM model and the noise in CEE make the reward function sparse. We first apply SAC-PER to this challenge, but our analysis reveals its limitations in noisy environments. To address them, we add an alpha decay mechanism to PER, which yields SAC-ADPER. The alpha decay mechanism substantially reduces how often PER samples unlearnable experiences.

The rest of this paper is organized as follows:

Section 2 describes the framework of EWMDM and reiterates the main content of this paper.

Section 3 describes the EWMDM effect evaluation method and the Gaussian noise model.

Section 4 explains how to model the EWMDM process as a Markov decision process (MDP) for RL.

Section 5 discusses the principles of the RL-based EWMDM methods and the improvements introduced in the SAC-ADPER algorithm.

Section 6 details the simulation experiments and analyzes the results.

Finally, Section 7 concludes the paper.

2. Electromagnetic Working Mode Decision-Making

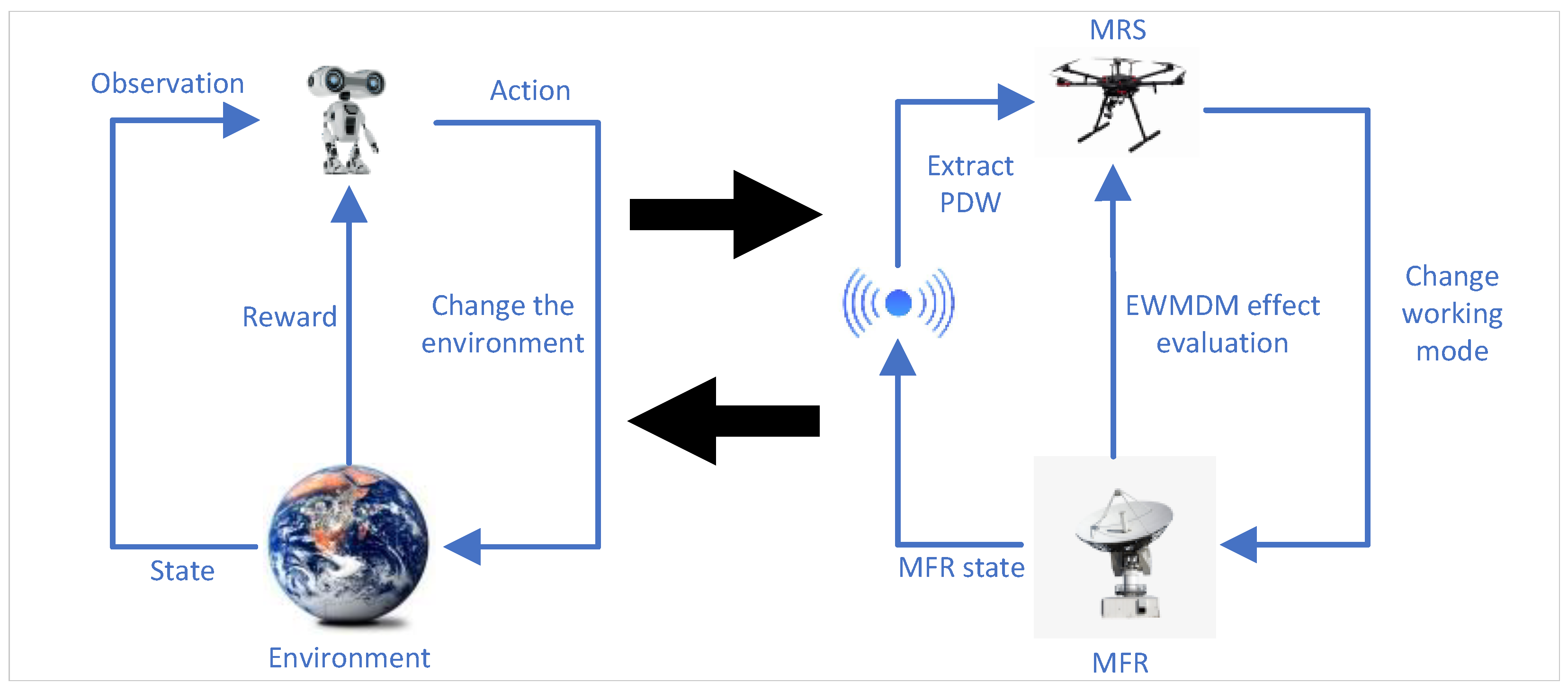

As MFRs develop rapidly, an MRS with a single fixed electromagnetic working mode struggles to adapt to CEE dominated by MFR signals. In response, researchers have advocated integrating cognitive intelligence into the EWMDM process of MRSs. As depicted in Figure 1, EWMDM is a sequential decision-making framework. The MRS, acting as the agent, interacts with the MFR-dominated CEE.

The MFR and MRS are adversaries. Once the MFR detects the current electromagnetic working mode of the MRS, it adjusts its own working state to detect and track the MRS and acquire its position. The MRS aims to complete its close-range remote sensing mission by continuously changing its electromagnetic working mode so that the MFR cannot track it. The MRS observes the environment through the PDWs of MFR signals and assesses its performance through a reward derived from the EWMDM effect evaluation. It then adapts its electromagnetic working mode accordingly.

This setting fits reinforcement learning (RL) well. In RL, an agent interacts with an environment, learns from feedback, and continuously refines its strategy to maximize long-term performance. After the environment updates its state, the agent obtains an observation of the current state. It then uses an RL algorithm to decide on an action, and the environment changes in response to that action. The mapping to EWMDM is:

- the MRS is the agent;
- the MFR is the environment;
- the EWMDM effect evaluation is the reward;
- the MFR radar signals intercepted by the MRS are the observation;
- the MFR working state is the state;
- the MRS's choice of electromagnetic working mode is the action.
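The agent–environment correspondence above can be illustrated with a minimal Python sketch. Everything in it is a hypothetical simplification, not the paper's model:

- the three MFR state names;
- the rule that working mode *i* counters MFR state *i* and lowers the threat level with probability 0.7;
- a reward equal to minus the threat level;
- a noiseless observation, whereas the paper's MRS infers the state from noisy PDWs.

```python
import random

# Hypothetical MFR working states, ordered by increasing threat level.
MFR_STATES = ["search", "tracking", "identification"]


class ToyMFREnvironment:
    """Hypothetical toy stand-in for the MFR-dominated CEE (the RL environment)."""

    def __init__(self, seed=0):
        self.rng = random.Random(seed)
        self.state = 0  # index into MFR_STATES

    def observe(self):
        # Simplification: the MRS sees the true state index instead of noisy PDWs.
        return self.state

    def step(self, action):
        # Hypothetical dynamics: the matching mode lowers the threat level with
        # probability 0.7; otherwise the threat level rises (bounded to valid states).
        if action == self.state and self.rng.random() < 0.7:
            self.state = max(self.state - 1, 0)
        else:
            self.state = min(self.state + 1, len(MFR_STATES) - 1)
        reward = -float(self.state)  # stand-in for the EWMDM effect evaluation
        return self.observe(), reward


def run_episode(env, policy, steps=10):
    """MRS (agent) loop: observe, choose a working mode, receive a reward."""
    obs = env.observe()
    total_reward = 0.0
    for _ in range(steps):
        action = policy(obs)            # electromagnetic working mode decision
        obs, reward = env.step(action)  # MFR reacts by changing its working state
        total_reward += reward
    return total_reward
```

For example, `run_episode(ToyMFREnvironment(seed=1), policy=lambda obs: obs, steps=20)` runs a policy that always picks the matching mode. An RL algorithm would replace this fixed `policy` with a learned one.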

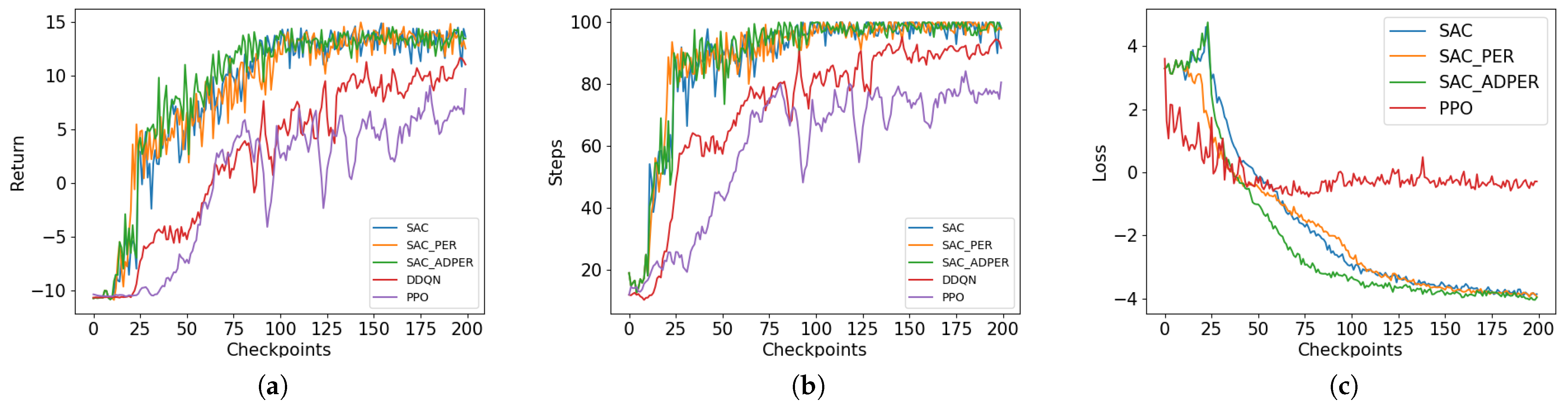

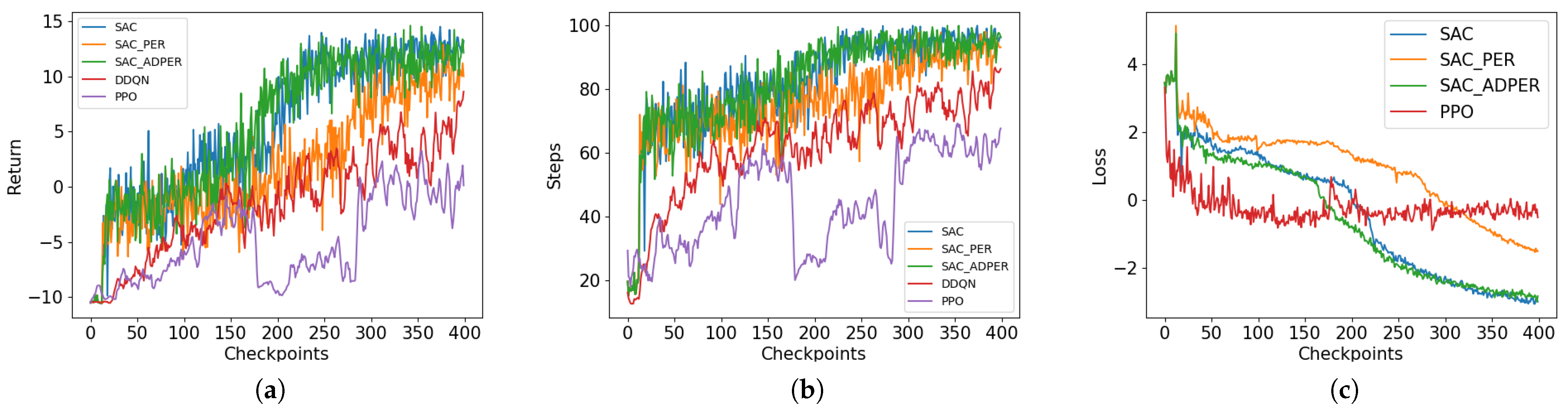

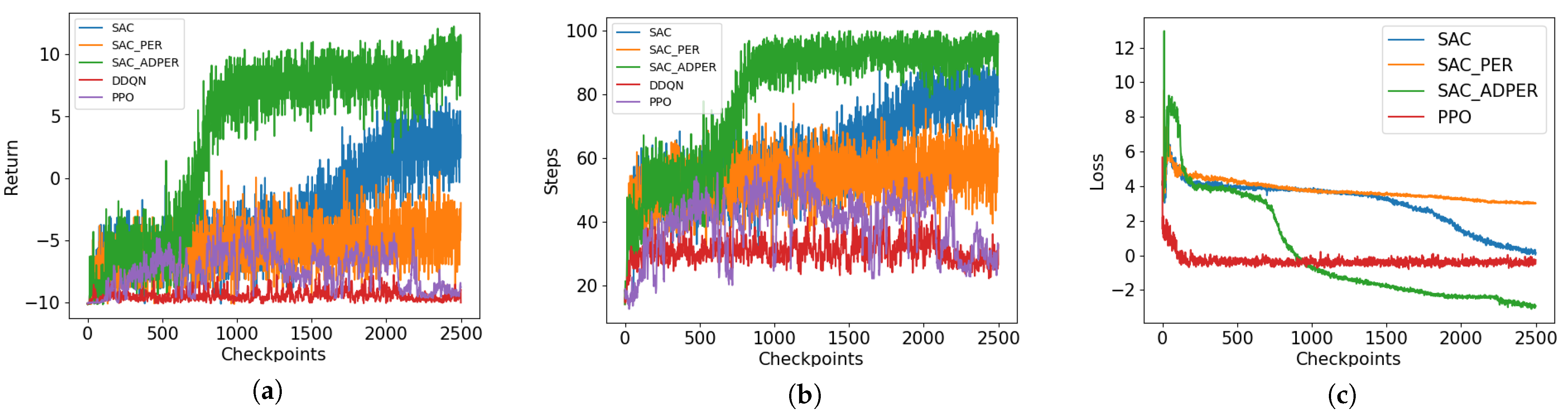

The main work of this paper has three parts. First, we construct an EWMDM model for noisy CEE. It simulates how the MRS receives PDWs from the MFR to identify the MFR's working state, and it incorporates environmental noise to better reflect real-world conditions. Second, we propose an MFR state recognition probability threshold mechanism that filters out uncertain MFR states. This reduces misleading guidance from the reward function and makes the model more robust. Third, we improve SAC-PER by adding an alpha decay mechanism that adjusts the PER strategy, which yields SAC-ADPER. The fundamental difference between the two is that, in SAC-ADPER, the PER parameter alpha decays gradually to 0 as training proceeds. As a result, PER samples unlearnable experiences less often in the later stages of training. Multiple experiments in noisy environments show that SAC-ADPER significantly outperforms the standard SAC-PER and SAC algorithms. We also run separate experiments on the probability threshold mechanism and the alpha decay mechanism to verify their effectiveness.

5. RL-Based EWMDM Methods

5.1. Soft Actor–Critic

The SAC algorithm is an off-policy deep RL method built on the maximum entropy framework. Its objective is to maximize both the expected reward and the policy entropy, balancing efficient exploitation of the learned policy against continued exploration of the environment [50]. Adding an entropy regularization term to the objective function encourages the agent to keep its action selection stochastic, which prevents premature convergence to suboptimal policies [51].

Compared to traditional actor–critic approaches, SAC explicitly enhances the diversity of actions by maximizing the expected entropy of the policy. This results in improved robustness and adaptability, particularly in high-dimensional and complex decision-making tasks. The introduction of entropy regularization ensures that the agent explores a broader range of possible actions, which mitigates overfitting to specific experiences and helps avoid local optima. Additionally, SAC leverages an off-policy learning mechanism, enabling more efficient sample reuse through experience replay, thereby improving training stability and sample efficiency.

5.1.1. Maximum Entropy RL

Entropy measures the degree of randomness of a random variable. In RL, the agent learns a stochastic policy, so the entropy $\mathcal{H}(\pi(\cdot \mid s)) = -\mathbb{E}_{a \sim \pi(\cdot \mid s)}[\log \pi(a \mid s)]$ measures how random the policy is in state $s$.

In maximum entropy RL, the agent maximizes the cumulative reward and is also encouraged to explore new state–action pairs [52]. An entropy regularization term is therefore added to the objective:

$$\pi^{*} = \arg\max_{\pi} \mathbb{E}_{\pi}\left[\sum_{t} r(s_t, a_t) + \alpha \mathcal{H}\left(\pi(\cdot \mid s_t)\right)\right], \tag{9}$$

where $\alpha$ is a regularization factor that controls the importance of entropy.
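The following Python sketch illustrates the entropy term and the entropy-augmented objective of Equation (9). It assumes a discrete action set (for example, a finite set of electromagnetic working modes) and a finite trajectory. The function names and the optional discount factor `gamma` are illustrative assumptions.

```python
import math


def policy_entropy(probs):
    """H(pi(.|s)) = -sum_a pi(a|s) * log pi(a|s) for a discrete policy."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def entropy_augmented_return(rewards, policies, alpha, gamma=1.0):
    """sum_t gamma^t * (r_t + alpha * H(pi(.|s_t))) along one trajectory.

    rewards[t] is r(s_t, a_t); policies[t] is the action distribution pi(.|s_t).
    """
    return sum(
        gamma ** t * (r + alpha * policy_entropy(p))
        for t, (r, p) in enumerate(zip(rewards, policies))
    )


# A uniform policy over 4 modes has the maximal entropy log(4); a deterministic one has 0.
uniform_h = policy_entropy([0.25] * 4)
greedy_h = policy_entropy([1.0, 0.0, 0.0, 0.0])
```

With `alpha = 0` the objective reduces to the ordinary return. A larger `alpha` rewards trajectories on which the policy stays more random.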

5.1.2. Soft Policy Iteration

Soft policy iteration incorporates the principle of maximum entropy RL into policy iteration, which yields a more stable and efficient RL algorithm [53]. Under the modified objective of maximum entropy RL, the Bellman equation becomes the soft Bellman equation:

$$Q(s_t, a_t) = r(s_t, a_t) + \gamma \mathbb{E}_{s_{t+1}}\left[V(s_{t+1})\right]. \tag{10}$$

The state value function is expressed as:

$$V(s_t) = \mathbb{E}_{a_t \sim \pi}\left[Q(s_t, a_t) - \alpha \log \pi(a_t \mid s_t)\right]. \tag{11}$$
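The soft value of Equation (11) and the backup of Equation (10) are easy to compute for a discrete action set, where the expectation over actions is an exact sum. The Python sketch below assumes that setting. Its function names, the default `gamma`, and the `done` flag for terminal states are illustrative assumptions.

```python
import math


def soft_state_value(q_values, probs, alpha):
    """Soft value V(s) = sum_a pi(a|s) * (Q(s,a) - alpha * log pi(a|s))."""
    return sum(p * (q - alpha * math.log(p)) for q, p in zip(q_values, probs) if p > 0.0)


def soft_bellman_backup(reward, next_q_values, next_probs, alpha, gamma=0.99, done=False):
    """Soft Bellman target r + gamma * V(s'); no bootstrapping past a terminal state."""
    if done:
        return reward
    return reward + gamma * soft_state_value(next_q_values, next_probs, alpha)
```

With equal Q-values, a uniform policy receives an entropy bonus on top of the Q-value. For example, `soft_state_value([1.0, 1.0], [0.5, 0.5], alpha=0.2)` equals 1 + 0.2 log 2, about 1.139.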

5.1.3. Network Loss Function

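For the critic, SAC adopts the idea of double DQN and uses two Q-networks, $Q_{\omega_1}$ and $Q_{\omega_2}$. When computing the target, it takes the smaller of the two Q-values to mitigate Q-value overestimation. The loss function for either Q-network is:

$$L_Q(\omega_i) = \mathbb{E}_{(s_t, a_t, r_t, s_{t+1}) \sim R}\left[\frac{1}{2}\left(Q_{\omega_i}(s_t, a_t) - y_t\right)^2\right], \quad i = 1, 2, \tag{12}$$

$$y_t = r_t + \gamma\left(\min_{j=1,2} Q_{\omega_j^-}(s_{t+1}, a_{t+1}) - \alpha \log \pi_\theta(a_{t+1} \mid s_{t+1})\right), \quad a_{t+1} \sim \pi_\theta(\cdot \mid s_{t+1}), \tag{13}$$

where $Q_{\omega_j^-}$ denotes the target networks and $R$ is the experience replay buffer.

For the actor, the loss function of the policy $\pi_\theta$ is derived from the Kullback–Leibler (KL) divergence. After simplification, it is expressed as:

$$L_\pi(\theta) = \mathbb{E}_{s_t \sim R,\, a_t \sim \pi_\theta}\left[\alpha \log \pi_\theta(a_t \mid s_t) - \min_{j=1,2} Q_{\omega_j}(s_t, a_t)\right]. \tag{14}$$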
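The critic target, critic loss, and actor loss can be sketched in Python for a discrete action set. In that case the expectation over the next action is computed exactly rather than with the reparameterization trick. This discrete-action form of SAC is swapped in here for brevity and is not necessarily the form used in this paper. Scalar Q-values stand in for network outputs, and all function names are illustrative.

```python
import math


def critic_target(reward, next_q1, next_q2, next_probs, alpha, gamma=0.99, done=False):
    """y = r + gamma * sum_a' pi(a'|s') * (min(Q1'(s',a'), Q2'(s',a')) - alpha * log pi(a'|s')).

    next_q1 / next_q2 are target-network Q-values for every action in s'.
    """
    if done:
        return reward
    soft_v = sum(
        p * (min(q1, q2) - alpha * math.log(p))
        for q1, q2, p in zip(next_q1, next_q2, next_probs)
        if p > 0.0
    )
    return reward + gamma * soft_v


def critic_loss(q_predictions, targets):
    """Minibatch mean of 0.5 * (Q(s,a) - y)^2, applied to each critic separately."""
    return sum(0.5 * (q - y) ** 2 for q, y in zip(q_predictions, targets)) / len(targets)


def actor_loss(q1, q2, probs, alpha):
    """sum_a pi(a|s) * (alpha * log pi(a|s) - min(Q1(s,a), Q2(s,a))) for one state."""
    return sum(
        p * (alpha * math.log(p) - min(a, b))
        for a, b, p in zip(q1, q2, probs)
        if p > 0.0
    )
```

Taking the minimum of the two critics in both the target and the actor loss is what counters overestimation: a single optimistic critic cannot inflate either quantity on its own.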

5.1.4. Automatic Adjustment of Entropy Regularization Terms

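In SAC, the choice of the entropy regularization coefficient $\alpha$ is crucial, because different states require different levels of policy entropy. To adjust the entropy regularization term automatically, SAC reformulates the RL objective as a constrained optimization problem:

$$\max_{\pi} \mathbb{E}_{\pi}\left[\sum_{t} r(s_t, a_t)\right] \quad \text{s.t.} \quad \mathbb{E}_{(s_t, a_t) \sim \rho_\pi}\left[-\log \pi_t(a_t \mid s_t)\right] \geq \mathcal{H}_0, \ \forall t, \tag{15}$$

where $\rho_\pi$ is the state–action distribution induced by $\pi$. The expected return is maximized subject to the mean entropy being at least the target entropy $\mathcal{H}_0$. After simplification, the loss function for $\alpha$ is:

$$L(\alpha) = \mathbb{E}_{s_t \sim R,\, a_t \sim \pi(\cdot \mid s_t)}\left[-\alpha \log \pi(a_t \mid s_t) - \alpha \mathcal{H}_0\right]. \tag{16}$$

When the policy entropy falls below the target entropy $\mathcal{H}_0$, minimizing $L(\alpha)$ increases $\alpha$, which makes the policy more stochastic. When the policy entropy exceeds the target, minimizing $L(\alpha)$ decreases $\alpha$, so the agent focuses more on improving the value function.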
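One gradient step on the temperature loss of Equation (16) can be sketched in Python as follows. Optimizing $\log \alpha$ instead of $\alpha$, so that the coefficient stays positive, is a common implementation choice and an assumption here. The function name and learning rate are illustrative.

```python
import math


def update_log_alpha(log_alpha, log_probs, target_entropy, lr=0.01):
    """One gradient-descent step on L(alpha) = E[-alpha * (log pi(a|s) + H_0)].

    Optimizing log(alpha) instead of alpha keeps the coefficient positive.
    log_probs holds log pi(a_t|s_t) for actions sampled from the current policy.
    """
    alpha = math.exp(log_alpha)
    mean_log_prob = sum(log_probs) / len(log_probs)
    grad = -alpha * (mean_log_prob + target_entropy)  # dL / d(log alpha)
    return log_alpha - lr * grad
```

For a nearly deterministic policy (log-probabilities near 0) with $\mathcal{H}_0 = 1$, the gradient is negative and $\log \alpha$ increases. When the entropy estimate $-\overline{\log \pi}$ exceeds $\mathcal{H}_0$, $\log \alpha$ decreases. For continuous actions, SAC implementations commonly set $\mathcal{H}_0 = -\dim(\mathcal{A})$ as a heuristic.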

5.1.5. Algorithm Flow

In conjunction with the previously discussed concepts of maximum entropy RL and soft policy iteration, the pseudocode of the SAC algorithm can be written as shown in Algorithm 1.

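| Algorithm 1: SAC |
|---|
| 1: Initialize the Critic networks $Q_{\omega_1}$, $Q_{\omega_2}$ and the Actor network $\pi_\theta$ with random parameters $\omega_1$, $\omega_2$, and $\theta$; |
| 2: Copy $\omega_1^- \leftarrow \omega_1$ and $\omega_2^- \leftarrow \omega_2$ to initialize the target networks $Q_{\omega_1^-}$ and $Q_{\omega_2^-}$; |
| 3: Initialize the target entropy $\mathcal{H}_0$; |
| 4: Initialize the experience replay buffer $R$; |
| 5: **for** episode $e = 1, \dots, E$ **do** |
| 6: Get the initial state $s_1$; |
| 7: **for** time step $t = 1, \dots, T$ **do** |
| 8: Select action $a_t \sim \pi_\theta(\cdot \mid s_t)$; |
| 9: Execute action $a_t$, obtain reward $r_t$, observe the next state $s_{t+1}$, and store $(s_t, a_t, r_t, s_{t+1})$ in $R$; |
| 10: **for** training step $k = 1, \dots, K$ **do** |
| 11: Uniformly sample $N$ tuples $\{(s_i, a_i, r_i, s_i')\}_{i=1}^{N}$ from $R$; |
| 12: For each tuple, use the target networks to compute $y_i = r_i + \gamma\left(\min_{j=1,2} Q_{\omega_j^-}(s_i', a_i') - \alpha \log \pi_\theta(a_i' \mid s_i')\right)$, |
| 13: where $a_i' \sim \pi_\theta(\cdot \mid s_i')$; |
| 14: Compute the two Critic loss functions and update the Critic networks; |
| 15: Sample actions with the reparameterization trick, compute the Actor loss function, and update the Actor network; |
| 16: Compute the loss function for the entropy coefficient $\alpha$ and update $\alpha$; |
| 17: Soft update the two target networks: $\omega_j^- \leftarrow \tau \omega_j + (1 - \tau)\omega_j^-$, $j = 1, 2$, |
| 18: where $\tau$ is the soft update parameter; |
| 19: **end for** |
| 20: **end for** |
| 21: **end for** |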
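Steps 9, 11, and 17–18 of Algorithm 1 (storing transitions, uniform sampling, and the soft target update) can be sketched in Python as follows. Parameters are flat lists of floats standing in for network weights. The class name, the FIFO eviction policy, and the default `tau` are illustrative assumptions.

```python
import random
from collections import deque


class UniformReplayBuffer:
    """Experience replay buffer R with uniform minibatch sampling (Algorithm 1, step 11)."""

    def __init__(self, capacity, seed=0):
        self.buffer = deque(maxlen=capacity)  # oldest transitions are evicted first
        self.rng = random.Random(seed)

    def store(self, state, action, reward, next_state):
        self.buffer.append((state, action, reward, next_state))

    def sample(self, batch_size):
        # Every stored transition is equally likely, regardless of its TD-error.
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


def soft_update(target_params, online_params, tau=0.005):
    """Polyak averaging: omega^- <- tau * omega + (1 - tau) * omega^-."""
    return [tau * w + (1.0 - tau) * w_t for w, w_t in zip(online_params, target_params)]
```

A small `tau` makes the target networks trail the online critics slowly, which stabilizes the targets of Equation (13).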

SAC performs robustly: the agent learns reward values quickly while keeping some exploration. However, SAC samples uniformly from the experience replay buffer, so its sample efficiency is relatively low. The experience replay structure therefore needs improvement.

5.2. Prioritized Experience Replay

In standard experience replay, the agent stores its experiences in a replay buffer and uniformly samples from this buffer during training. However, this uniform sampling approach does not ensure the agent learns from the most critical or valuable experiences during each training step.

Prioritized experience replay (PER) is an enhanced experience replay technique in RL designed to accelerate learning [54]. It is particularly effective in sparse-reward environments, where the agent receives informative feedback only infrequently. PER replays more valuable experiences more often, which speeds up learning in such environments.

5.2.1. Priority

The core of PER is a metric, called the priority $p_i$ of experience $i$, that assesses whether an experience is worth learning from. A commonly used priority metric is the absolute TD-error $|\delta_i|$: a higher value indicates that the experience is more valuable for replay.

PER does not replay experiences purely greedily, that is, it does not always replay the experience with the highest priority. To preserve the agent's exploration capability, it instead uses a stochastic strategy that interpolates between purely greedy and uniform sampling. The probability of sampling experience $i$ is:

$$P(i) = \frac{p_i^{\alpha}}{\sum_{k} p_k^{\alpha}}, \tag{17}$$

where $p_i > 0$ is the priority of experience $i$ and the exponent $\alpha$ sets the balance between prioritized and uniform sampling. When $\alpha = 0$, sampling is purely uniform. This PER exponent $\alpha$ is distinct from the SAC entropy coefficient of Section 5.1.

The priority is typically defined directly as $p_i = |\delta_i| + \epsilon$. The small positive constant $\epsilon$ keeps $p_i$ above zero, so every experience can still be sampled.
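Equation (17) and the priority definition translate directly into Python. The sketch below is illustrative only. It computes probabilities for the whole buffer in O(N) time, whereas practical PER implementations usually store priorities in a sum-tree to sample in O(log N) time. The function names and the default `eps` are assumptions.

```python
def priorities_from_td_errors(td_errors, eps=1e-6):
    """p_i = |delta_i| + eps, so no experience ever gets zero probability."""
    return [abs(delta) + eps for delta in td_errors]


def sampling_probabilities(priorities, alpha):
    """P(i) = p_i^alpha / sum_k p_k^alpha; alpha = 0 reduces to uniform sampling."""
    scaled = [p ** alpha for p in priorities]
    total = sum(scaled)
    return [s / total for s in scaled]
```

For priorities `[1.0, 3.0]`, `alpha = 1` gives probabilities `[0.25, 0.75]`, while `alpha = 0` gives `[0.5, 0.5]`. Intermediate values of `alpha` fall between the two.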

5.2.2. Annealing the Bias

PER changes the distribution from which experiences are sampled and thereby introduces bias. This bias can be corrected with importance sampling (IS) weights:

$$w_i = \left(\frac{1}{N} \cdot \frac{1}{P(i)}\right)^{\beta}, \tag{18}$$

where $N$ is the size of the experience replay buffer and $\beta$ sets the degree of bias correction. When $\beta = 0$, all experiences receive equal weights and no bias correction is applied.

Note that $\beta$ and $\alpha$ act together on how strongly each experience influences learning. An experience with a high $|\delta_i|$ is sampled with a higher probability $P(i)$ but receives a smaller IS weight $w_i$. The smaller weight scales down its contribution to each update and offsets its more frequent sampling. After the experience has been replayed and the networks updated, its $|\delta_i|$, and hence its priority, usually decreases, so it is not sampled indefinitely.

For stability, PER also normalizes the IS weights by $1/\max_j w_j$, so that weights only scale updates down. Unbiased updates matter most near convergence. In practice, $\beta$ is therefore annealed linearly from an initial value $\beta_0 < 1$ to 1 over training. Bias correction is only partial early on, when the policy and value estimates are changing rapidly anyway, and becomes full in the later stages.
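The IS weights of Equation (18), with max-normalization, and the linear $\beta$ schedule can be sketched in Python as follows. Normalizing by the largest weight over the whole buffer (the weight of the least likely experience) is one common convention and an assumption here; some implementations normalize within the sampled minibatch instead. The function names are illustrative.

```python
def importance_weights(probs, sampled_indices, beta):
    """w_i = (N * P(i))^(-beta), normalized by the largest weight over the buffer.

    probs holds P(i) for all N stored experiences; the largest weight belongs to
    the least likely experience, so normalized weights lie in (0, 1].
    """
    n = len(probs)
    max_weight = (n * min(probs)) ** (-beta)
    return [(n * probs[i]) ** (-beta) / max_weight for i in sampled_indices]


def linear_anneal(start, end, step, total_steps):
    """Linearly move from start to end over total_steps, then hold at end."""
    if step >= total_steps:
        return end
    return start + (end - start) * step / total_steps
```

For example, `linear_anneal(0.4, 1.0, step, total_steps)` moves $\beta$ from an assumed initial value of 0.4 to 1. The same helper with `end=0.0` could serve as a linear decay schedule.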

5.2.3. Algorithm Flow

Combining SAC with PER, the following pseudo-code for the SAC-PER algorithm can be written as Algorithm 2:

| Algorithm 2: SAC-PER |
|---|
| 1: Initialize the Critic networks $Q_{\omega_1}$, $Q_{\omega_2}$ and the Actor network $\pi_\theta$ with random parameters $\omega_1$, $\omega_2$, and $\theta$; |
| 2: Copy $\omega_1^- \leftarrow \omega_1$ and $\omega_2^- \leftarrow \omega_2$ to initialize the target networks $Q_{\omega_1^-}$ and $Q_{\omega_2^-}$; |
| 3: Initialize the target entropy $\mathcal{H}_0$; |
| 4: Initialize the prioritized experience replay buffer $R$ and the PER parameters $\alpha$ and $\beta$; |
| 5: New experiences are stored in $R$ with the current maximum priority $p_{\max} = \max_i p_i$; |
| 6: **for** episode $e = 1, \dots, E$ **do** |
| 7: Get the initial state $s_1$; |
| 8: **for** time step $t = 1, \dots, T$ **do** |
| 9: Select action $a_t \sim \pi_\theta(\cdot \mid s_t)$; |
| 10: Execute action $a_t$, obtain reward $r_t$, and observe the next state $s_{t+1}$; |
| 11: Store $(s_t, a_t, r_t, s_{t+1})$ in $R$ with priority $p_{\max}$; |
| 12: **for** training step $k = 1, \dots, K$ **do** |
| 13: Compute the sampling probability $P(i)$ of each experience according to (17), and sample $N$ experiences based on these probabilities; |
| 14: Compute the importance sampling weight $w_i$ of each sampled experience according to (18); |
| 15: Linearly anneal $\beta$ until it reaches 1; |
| 16: Compute the two Critic loss functions, weighting each sample's squared TD-error by $w_i$; update the Critic networks and set $p_i = \lvert \delta_i \rvert + \epsilon$ for the sampled experiences; |
| 17: Sample actions with the reparameterization trick, compute the Actor loss function, and update the Actor network; |
| 18: Compute the loss function for the entropy coefficient $\alpha$ and update it; |
| 19: Soft update the two target networks; |
| 20: **end for** |
| 21: **end for** |
| 22: **end for** |
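The buffer-level steps of Algorithm 2 can be combined in a compact Python sketch:

- storing with the maximum priority;
- sampling by Equation (17);
- weighting by Equation (18);
- refreshing priorities after the critic update.

The class and method names are hypothetical, and so are the default `alpha = 0.6` and the initial priority of 1.0 for the first experience. The linear scan over priorities is chosen for readability, not efficiency.

```python
import random


class PrioritizedReplayBuffer:
    """Proportional PER buffer with O(N) sampling (a sum-tree is the usual faster choice)."""

    def __init__(self, capacity, alpha=0.6, eps=1e-6, seed=0):
        self.capacity = capacity
        self.alpha = alpha          # priority exponent in Eq. (17)
        self.eps = eps              # keeps priorities strictly positive
        self.data, self.priorities = [], []
        self.next_idx = 0           # slot to overwrite once the buffer is full
        self.rng = random.Random(seed)

    def store(self, transition):
        # New experiences receive the current maximum priority (1.0 for the first one),
        # so they are likely to be replayed soon after being collected.
        p_max = max(self.priorities, default=1.0)
        if len(self.data) < self.capacity:
            self.data.append(transition)
            self.priorities.append(p_max)
        else:
            self.data[self.next_idx] = transition
            self.priorities[self.next_idx] = p_max
        self.next_idx = (self.next_idx + 1) % self.capacity

    def sample(self, batch_size, beta):
        scaled = [p ** self.alpha for p in self.priorities]
        total = sum(scaled)
        probs = [s / total for s in scaled]                          # Eq. (17)
        idx = self.rng.choices(range(len(self.data)), weights=probs, k=batch_size)
        n = len(self.data)
        max_w = (n * min(probs)) ** (-beta)
        weights = [(n * probs[i]) ** (-beta) / max_w for i in idx]   # Eq. (18), normalized
        return idx, [self.data[i] for i in idx], weights

    def update_priorities(self, indices, td_errors):
        # After the critic update, p_i = |delta_i| + eps for each replayed experience.
        for i, delta in zip(indices, td_errors):
            self.priorities[i] = abs(delta) + self.eps
```

In a training loop, the returned `weights` would multiply each sample's squared TD-error in the critic loss. The new TD-errors would then be passed to `update_priorities` with the same indices.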

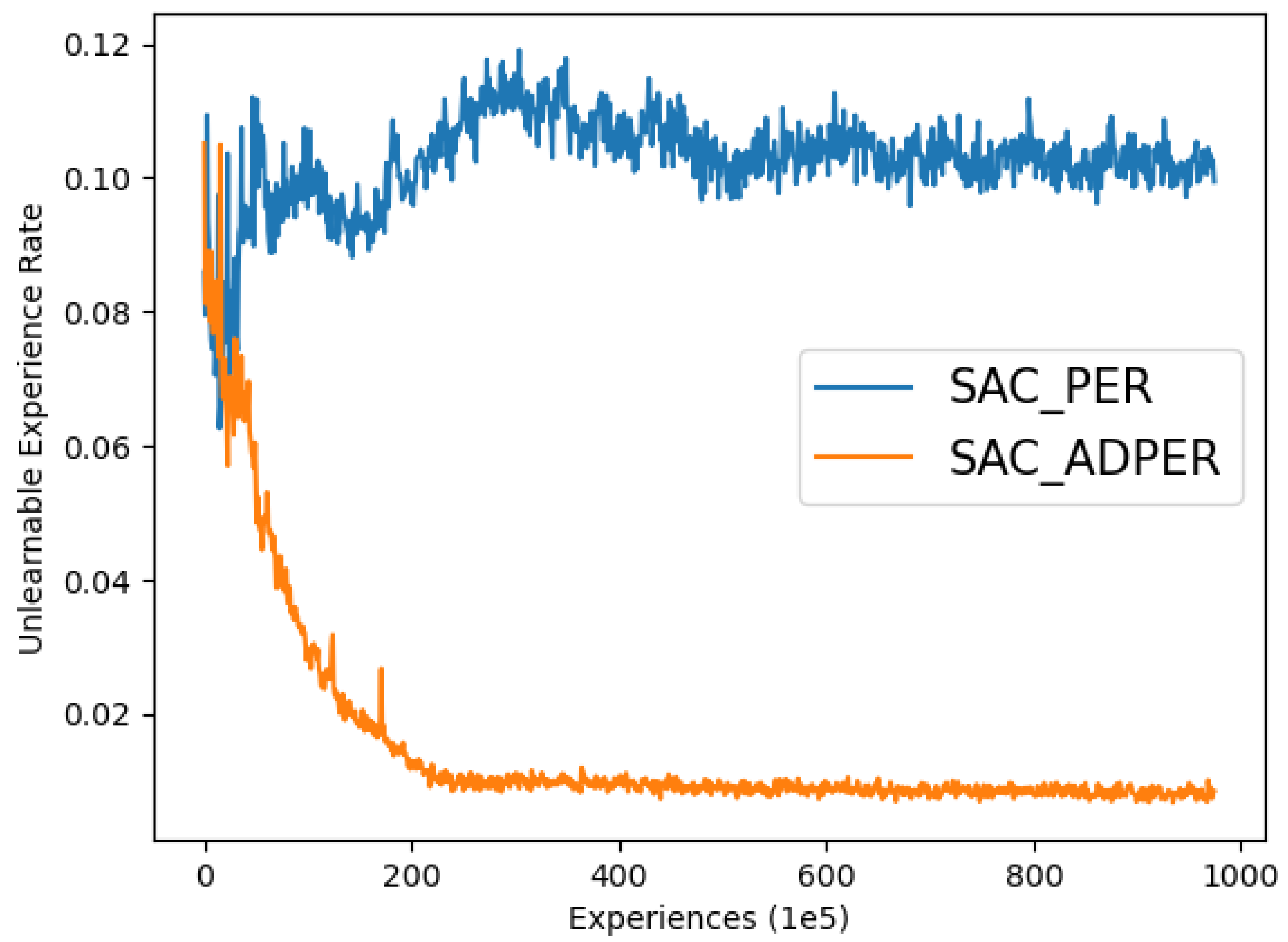

SAC-PER changes the sampling distribution of experience replay so that valuable experiences are retrieved more often. It improves significantly on SAC in relatively ideal environments. However, the stochasticity of EWMDM environments makes PER unstable. We therefore propose a noise-robust PER variant based on parameter decay, termed Alpha Decay PER (ADPER).

5.3. Alpha Decay Prioritized Experience Replay

Environmental noise is an essential factor in EWMDM environments and cannot be ignored. As the electromagnetic environment becomes more complex, the MRS identifies MFR states less accurately and therefore receives incorrect rewards. Environmental stochasticity also affects RL algorithms. In Section 4, we model the MFR state transition process as a probabilistic transition matrix, which makes the EWMDM environment stochastic. Whatever EWMDM method is used, the MFR threat level may increase, stay the same, or decrease. The effect of the method is only that the most effective one for the current MFR state makes a transition to a lower threat state the most likely outcome.

By the mid-to-late stages of training, the agent may already have learned some correct strategies, so it can make optimal decisions in some MFR states. Suppose that, at this stage, the agent uses the most effective method in a given MFR state, but the MFR still moves to a higher threat level. The $|\delta_i|$ of this experience will then be high, so PER raises its sampling probability and replays it more often. Such an experience gives the agent little to learn, which runs counter to the original intent of PER. We do not want the agent to learn from these unlearnable experiences, because they push its learning in the wrong direction.
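A small worked example makes this argument concrete. Its numbers are hypothetical and not taken from the paper's transition matrix. Suppose the agent already uses the best mode in some MFR state, and the episode then ends with one of two outcomes:

- the threat level drops with probability 0.7 (reward +1);
- the threat level rises with probability 0.3 (reward −1).

Even with an exactly correct Q-value of 0.4, the TD-errors are +0.6 and −1.4, so the rare unlucky outcome carries the larger priority. The Python sketch below computes these quantities. It ignores bootstrapping and assumes proportional PER without importance-sampling correction.

```python
def stochastic_transition_example(p_down=0.7, r_down=1.0, r_up=-1.0, alpha=1.0):
    """Irreducible TD-errors when the best mode is used but the outcome is random.

    One-step (terminal) case: with probability p_down the MFR threat level drops
    (reward r_down); otherwise it rises (reward r_up). All numbers are hypothetical.
    """
    q_exact = p_down * r_down + (1.0 - p_down) * r_up  # Q already equals its true value
    delta_down = r_down - q_exact                        # TD-error of a "lucky" transition
    delta_up = r_up - q_exact                            # TD-error of an "unlucky" transition
    # Share of replay that proportional PER gives to unlucky transitions, weighting
    # each outcome by how often it occurs and by its priority |delta|^alpha.
    w_down = p_down * abs(delta_down) ** alpha
    w_up = (1.0 - p_down) * abs(delta_up) ** alpha
    share_up = w_up / (w_down + w_up)
    # Mean target seen by a prioritized update without IS correction.
    replayed_target_mean = share_up * r_up + (1.0 - share_up) * r_down
    return q_exact, delta_down, delta_up, share_up, replayed_target_mean
```

With `alpha = 1`, unlucky transitions make up about 30% of the data but about 50% of the replayed samples. The replay-weighted mean target falls to about 0 instead of 0.4, pulling Q away from its correct value. With `alpha = 0` (uniform replay), the share returns to 30% and the mean target equals the true value of 0.4.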

Given these characteristics of the EWMDM environment, we propose the ADPER improvement, in which the PER exponent $\alpha$ in Equation (17) is annealed linearly from its initial value to zero. Compared with PER, ADPER may sample experiences with a high $|\delta_i|$ somewhat less often. It also reduces how often the agent is misled by unlearnable experiences.

Early in training, most experiences have a high $|\delta_i|$ because the agent's policy is still imperfect, not because of environmental noise or unexpected outcomes. Prioritized sampling at this stage therefore speeds up convergence effectively. In the mid-to-late stages, the policy becomes more refined and most experiences have a low $|\delta_i|$. If PER keeps prioritizing high-$|\delta_i|$ experiences at this point, it is likely to sample unlearnable experiences caused by environmental stochasticity, which slows convergence. The noise and stochasticity inherent in EWMDM environments therefore make the ADPER improvement necessary.
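The ADPER schedule can be sketched in Python as follows. The linear form follows the description above. The initial value `alpha_init = 0.6` (a commonly used PER default), the number of decay steps, and the example priorities are assumptions, not values reported here. The example shows that once $\alpha$ reaches 0, Equation (17) gives every experience the same probability. A transition with a large $|\delta_i|$ caused by environmental stochasticity is then no longer oversampled.

```python
def adper_alpha(step, total_steps, alpha_init=0.6):
    """ADPER schedule (sketch): PER exponent alpha decays linearly from alpha_init to 0."""
    if step >= total_steps:
        return 0.0
    return alpha_init * (1.0 - step / total_steps)


def sampling_probabilities(priorities, alpha):
    """P(i) = p_i^alpha / sum_k p_k^alpha (Eq. (17))."""
    scaled = [p ** alpha for p in priorities]
    total = sum(scaled)
    return [s / total for s in scaled]


# Hypothetical priorities: one experience with a large |delta| among small ones.
priorities = [5.0, 0.1, 0.1, 0.1]
early = sampling_probabilities(priorities, adper_alpha(0, total_steps=10_000))
late = sampling_probabilities(priorities, adper_alpha(10_000, total_steps=10_000))
```

Early in training the high-priority experience takes most of the sampling mass. At the end of the schedule all four experiences are equally likely, which matches uniform replay.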

Algorithm 3 presents the pseudocode for the SAC combined with the ADPER improvement.

| Algorithm 3: SAC-ADPER |
|---|
| 1: Initialize the Critic networks $Q_{\omega_1}$, $Q_{\omega_2}$ and the Actor network $\pi_\theta$ with random parameters $\omega_1$, $\omega_2$, and $\theta$; |
| 2: Copy $\omega_1^- \leftarrow \omega_1$ and $\omega_2^- \leftarrow \omega_2$ to initialize the target networks $Q_{\omega_1^-}$ and $Q_{\omega_2^-}$; |
| 3: Initialize the target entropy $\mathcal{H}_0$; |
| 4: Initialize the prioritized experience replay buffer $R$ and the PER parameters $\alpha$ and $\beta$; |
| 5: New samples are stored in $R$ with the current maximum priority $p_{\max} = \max_i p_i$; |
| 6: **for** episode $e = 1, \dots, E$ **do** |
| 7: Get the initial state $s_1$; |
| 8: **for** time step $t = 1, \dots, T$ **do** |
| 9: Select action $a_t \sim \pi_\theta(\cdot \mid s_t)$; |
| 10: Execute action $a_t$, obtain reward $r_t$, and observe the next state $s_{t+1}$; |
| 11: Store $(s_t, a_t, r_t, s_{t+1})$ in $R$ with priority $p_{\max}$; |
| 12: **for** training step $k = 1, \dots, K$ **do** |
| 13: Compute the sampling probability $P(i)$ of each experience according to (17), and sample $N$ experiences based on these probabilities; |
| 14: Compute the importance sampling weight $w_i$ of each sampled experience according to (18); |
| 15: Linearly anneal $\beta$ until it reaches 1; |
| 16: Linearly anneal the PER exponent $\alpha$ until it reaches 0; |
| 17: Compute the two Critic loss functions, weighting each sample's squared TD-error by $w_i$; update the Critic networks and set $p_i = \lvert \delta_i \rvert + \epsilon$ for the sampled experiences; |
| 18: Sample actions with the reparameterization trick, compute the Actor loss function, and update the Actor network; |
| 19: Compute the loss function for the entropy coefficient $\alpha$ and update it; |
| 20: Soft update the two target networks; |
| 21: **end for** |
| 22: **end for** |
| 23: **end for** |

7. Conclusions

With the rapid advancement of MFRs and the growing complexity of noise in CEE, noise-robust EWMDM algorithms are urgently needed. This paper systematically models the EWMDM problem in both ideal and noisy environments and proposes an improved RL algorithm. First, we construct an EWMDM model that simulates real-world electromagnetic noise by adding noise to the PDWs, which interferes with the MRS's ability to identify MFR states. To mitigate the impact of noise on the reward function, we introduce a probability threshold filtering mechanism. It assigns zero reward to uncertain MFR states and so keeps the agent from being misguided by incorrect rewards. We then integrate PER into SAC and propose the ADPER improvement, in which the PER parameter alpha is annealed linearly to zero during training. This significantly reduces how often the agent samples unlearnable experiences. Experimental results show that SAC-ADPER is highly effective for EWMDM in noisy environments and that the probability threshold is essential for effective decision-making. ADPER dynamically adjusts the sampling strategy to focus on learnable experiences while limiting the impact of noise. In doing so, it improves learning efficiency and decision-making performance in challenging CEE.

Beyond its theoretical contributions, this research has practical implications, particularly for electronic warfare and UAV stealth technology. In electronic warfare, accurate and timely decisions in noisy environments are critical for effective countermeasures against advanced radar systems. In UAV radar stealth, better decision-making under noisy conditions can significantly improve the survivability and mission success rates of UAVs operating in contested airspace. By providing a robust and adaptive algorithmic solution, this research advances the theoretical understanding of EWMDM and offers tangible benefits for real-world applications. Future research could integrate advanced RL techniques and real-time data fusion to improve algorithm adaptability and situational awareness. Collaborations with industry could lead to real-world prototypes and pave the way for smart MRSs that make intelligent decisions in complex environments.