1. Introduction

The use of Unmanned Aerial Vehicles (UAVs) for transmission line inspection, fault detection, and timely mitigation of potential risks has become an essential auxiliary approach in the operation and maintenance of modern power systems [1,2]. However, images acquired by UAVs are susceptible to quality degradation from factors such as haze, which impairs the accuracy of subsequent visual tasks.

Numerous image dehazing methods exist, and they can generally be categorized into three major types: image enhancement, physics model-based methods, and deep learning-based approaches. The objective of image dehazing is to restore haze-free images from hazy inputs. Traditional image enhancement techniques improve visual quality by accentuating image details or enhancing contrast. Specific algorithms include the Retinex algorithm, histogram equalization, and partial differential equation-based methods [3,4,5]. Although these approaches amplify image detail features through statistical and transformational means, they often leave a significant amount of haze unremoved.

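The Atmospheric Scattering Model (ASM) [6] is commonly employed to describe the hazy imaging process that image dehazing inverts, as represented by Equation (1):

$$I(x) = J(x)\,t(x) + A\,\bigl(1 - t(x)\bigr), \qquad t(x) = e^{-\beta d(x)} \tag{1}$$

where $I(x)$ represents the hazy image; $J(x)$ denotes the restored image; $t(x)$ signifies the transmission map; $d(x)$ indicates the scene depth; $A$ stands for the global atmospheric light; and $\beta$ is the atmospheric scattering coefficient.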
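As an illustration of how the atmospheric scattering model is used, the following minimal NumPy sketch synthesizes haze on a toy image and then inverts the model when the transmission map and atmospheric light are known. The function names, the airlight value of 0.9, the scattering coefficient, and the clamp `t_min` are assumptions chosen for the example, not values from this paper.

```python
import numpy as np

def add_haze(clear, depth, airlight=0.9, beta=1.0):
    """Apply the scattering model: I = J * t + A * (1 - t), with t = exp(-beta * d)."""
    t = np.exp(-beta * depth)[..., None]       # transmission map, broadcast over RGB
    return clear * t + airlight * (1.0 - t), t

def remove_haze(hazy, t, airlight=0.9, t_min=0.1):
    """Invert the model for J; t is clamped so dense-haze pixels are not amplified."""
    t = np.maximum(t, t_min)
    return np.clip((hazy - airlight) / t + airlight, 0.0, 1.0)

rng = np.random.default_rng(0)
clear = rng.random((4, 6, 3))                  # toy RGB image with values in [0, 1]
depth = np.linspace(0.2, 2.0, 6)[None, :].repeat(4, axis=0)  # depth grows left to right
hazy, t = add_haze(clear, depth)
restored = remove_haze(hazy, t)
print(np.abs(restored - clear).max())          # reconstruction error of the toy example
```

In practice neither the transmission map nor the atmospheric light is known, which is why physical model-based methods must estimate them from the hazy image alone.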

Physical model-based dehazing simulates the scattering and absorption of light in the atmosphere to estimate the scene depth, derive the transmission map, and ultimately restore a clear image. The Dark Channel Prior (DCP) [7] is a representative algorithm. While it improves dehazing performance within the atmospheric scattering model by providing more accurate estimates of scene depth and atmospheric light, it tends to over-enhance sky regions and is susceptible to image noise. For instance, in reference [8], the gradient features of the directly transmitted light intensity estimated by the DCP algorithm were used as guidance to solve and optimize the target degree of polarization for image dehazing. Although the resulting images are relatively clear, the high dependency on parameter accuracy leads to noise and color artifacts. In reference [9], bilateral filtering was employed as the transfer function for homomorphic filtering to estimate atmospheric light and scene reflectance. This approach maintains edge clarity from the perspective of color components, but dehazing each color channel independently produces inconsistent results across channels, thereby weakening edge and texture information. To preserve edge information in dehazed images, edge-preserving filters have been introduced. For example, reference [10] proposed a weighted guided image filter. The method decomposes multiple underexposed images generated with different gamma values, refines the weights of each decomposed image block using this filter, and finally performs image fusion. However, the decomposition and fusion steps lengthen the computation time, making the algorithm unsuitable for real-time image processing.

In addition to visual sensors such as cameras, other sensing technologies, including LiDAR, have also been applied in image dehazing. For instance, in reference [11], single-photon counting was utilized to enhance the GM-APD LiDAR system. By performing maximum likelihood estimation on echo photons, the backscattering distribution was obtained, and target echo positions were extracted to achieve image dehazing. However, this method exhibits limited echo signal extraction capability under dense fog conditions. In reference [12], a fusion strategy combining images and LiDAR data was adopted for dehazing. Nevertheless, relying solely on the mean pixel value to estimate the dark channel mean deviates from the scattering coefficient estimation model, leading to suboptimal dehazing performance. Moreover, LiDAR noise points increase significantly in heavy fog. Reference [13] proposed a fusion approach integrating radar and LiDAR data through a bird’s-eye view representation to enhance edge clarity. However, this method requires substantial computational resources for dense bird’s-eye view queries and assigns uniform attention to all features, lacking adaptive emphasis. Although the aforementioned dehazing methods can produce relatively clear images to some extent, they struggle to achieve satisfactory performance in complex scenarios such as non-uniform fog.

Deep learning methods are capable of learning haze patterns and image priors from large-scale data, without imposing stringent requirements on the accuracy of model parameters, and demonstrate strong adaptability to non-uniform haze or complex scenes. Consequently, convolutional neural network (CNN)-based image dehazing algorithms have been extensively studied in recent years. In reference [14], an edge extraction branch was incorporated as an additional edge prior, supplemented by an edge loss to impose secondary constraints on the network. Reference [15] introduced a depth-refinement branch to recover structural information in the form of a depth map, which guided a dehazing branch to enhance contour restoration. However, this method performs poorly at depth discontinuities and tends to lose information in low-light regions. In reference [16], multi-scale parallel large convolutional kernels and an enhanced parallel attention module were employed for feature extraction and reconstruction. Reference [17] proposed a dynamic convolution mechanism that adaptively adjusts the weights of output channels and spatial dimensions based on input characteristics. This was combined with depthwise separable convolution to leverage multi-dimensional features for haze-free image recovery. Although these approaches improve dehazing performance by expanding multi-scale receptive fields to capture both global and local features, they often increase model complexity, making it difficult to meet practical requirements for real-time inspection. In reference [18], a lightweight encoder–decoder dehazing architecture was used as a teacher network, while a student network constructed via channel multipliers distilled knowledge encoded from hazy images; the teacher-student collaboration aimed to reduce computational complexity. Reference [19] adopted a parallel combination of standard and dilated convolutions to enlarge the receptive field, along with downsampling operations prior to haze extraction and removal modules to reduce computational load in subsequent layers. Nevertheless, this approach inherently increases the number of convolutional layers and kernels. Reference [20] employed atrous convolutions to construct a multi-scale adaptive module for balancing dehazing performance and computational cost. It introduced a lightweight channel attention-guided fusion module to improve feature extraction. However, the conventional serial attention structure fails to adequately account for the non-uniform distribution of haze, leading to insufficient dehazing in dense haze regions.

Generative Adversarial Networks (GANs) have also been introduced into dehazing algorithms. Reference [21] proposed an enhanced cycle-consistent GAN model that employs local-global discriminators to address non-uniformly distributed haze, thereby reducing residual haze in the restored images. Nevertheless, due to the lack of paired training data in image dehazing tasks, GAN-based methods often struggle to generate high-quality dehazed images with satisfactory perceptual and quantitative performance. Furthermore, with the remarkable performance of Transformer models in image-related tasks, numerous studies have begun to employ Transformer architectures for image dehazing. In reference [22], a dual-branch collaborative dehazing network integrating both Transformer and CNN components was proposed. This network utilizes residual modules to extract features and incorporates a non-autoregressive mechanism with lateral connections in the Transformer blocks to preserve features at different depths. However, Transformer-based models generally involve a large number of parameters and high computational latency, making them difficult to deploy in practical scenarios.

In the field of engineering inspection, machine learning technologies have demonstrated the value of scenario-specific model optimization, which can significantly enhance their detection performance in complex engineering environments. For instance, in reference [23], researchers leveraged Bayesian optimization to automatically tune the hyperparameters of the LSTM model, addressing the inherent parameter tuning challenges of traditional machine learning methods and achieving high classification accuracy for Concrete-Filled Steel Tube (CFST) debonding defects of different levels.

Therefore, to balance dehazing performance and computational efficiency in UAV-based transmission line inspection, this paper proposes a fast multi-patch hierarchical dehazing algorithm based on machine learning. The approach improves the dehazing of UAV inspection images of power transmission lines by using high-frequency guidance to improve the attention mechanism and by adopting an asymmetric encoder–decoder architecture. A Mix structure module is embedded in the encoder to establish a dual-branch attention coordination mechanism. Multi-source high-frequency features guide attention allocation, integrating Laplacian, Sobel-X/Y, and Prewitt operators to extract multi-directional edge features. This enhances edge capture for critical targets such as horizontal conductors and vertical towers. In addition, a fog density estimation branch based on the dark channel mean is introduced. It dynamically adjusts the weights of the dual branches according to haze concentration, resolving the attention failure caused by high-frequency signal attenuation in dense haze regions. At the decoder stage, depthwise separable convolutions (DSCs) are employed to build lightweight residual modules, significantly reducing computational load while preserving feature combination capabilities. An inter-block feature fusion module is introduced at the decoder output, using a multi-scale weighted fusion strategy to eliminate the edge artifacts accumulated through multi-level segmentation. This further enhances edge continuity and overall image quality in the dehazed output.

2. Dehazing Treatment in Non-Uniform Haze Areas

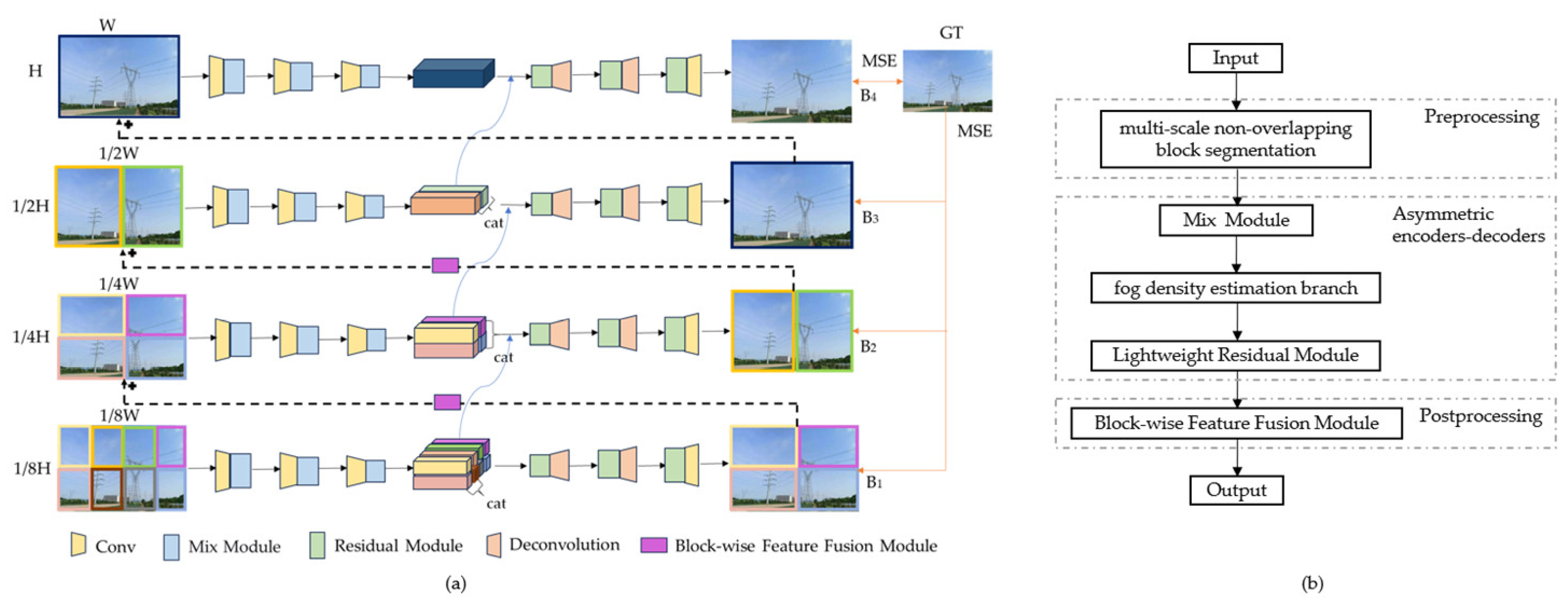

Multi-scale segmentation is a common strategy in haze removal, but existing methods suffer from issues such as fragmented inter-block features or poor scale adaptability. The algorithm proposed in this paper adopts a multi-layer architecture that progresses from coarse to fine, fully integrating features from different levels, as shown in Figure 1a. Each layer employs an asymmetric encoder–decoder structure, with the asymmetry primarily manifested in the distinct modules that the encoder and decoder comprise. Each encoder–decoder pair processes image blocks at its own scale and reconstructs the corresponding clear image at that scale. Processing at multiple scales yields richer spatial feature information together with dehazed downsampled images. The input raw image is segmented into multiple non-overlapping blocks as inputs for each scale. This approach alters the receptive field by varying the image size, thereby capturing more feature information and enhancing image clarity.
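The block segmentation described above can be sketched as follows. This is a minimal NumPy illustration, assuming patch counts of 1, 2, 4, and 8 for the four scales and the grid layouts listed in `GRID`; both are assumptions borrowed from common multi-patch hierarchical designs and are not confirmed by this paper. The helper names `split_patches` and `merge_patches` are hypothetical.

```python
import numpy as np

# Assumed (rows, cols) grid for each patch count; illustrative only.
GRID = {1: (1, 1), 2: (2, 1), 4: (2, 2), 8: (2, 4)}

def split_patches(img, n):
    """Split an H x W x C image into n non-overlapping patches in row-major order."""
    rows, cols = GRID[n]
    return [patch
            for band in np.array_split(img, rows, axis=0)
            for patch in np.array_split(band, cols, axis=1)]

def merge_patches(patches, n):
    """Inverse of split_patches: stitch the patches back into one image."""
    rows, cols = GRID[n]
    bands = [np.concatenate(patches[r * cols:(r + 1) * cols], axis=1)
             for r in range(rows)]
    return np.concatenate(bands, axis=0)

img = np.arange(8 * 16 * 3, dtype=np.float32).reshape(8, 16, 3)
for n in (1, 2, 4, 8):
    patches = split_patches(img, n)
    print(n, patches[0].shape)     # patch size shrinks as the patch count grows
```

Because the patches never overlap, nothing constrains neighbouring patches to agree along their shared borders, which is the source of the inter-block artifacts that the fusion module is designed to remove.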

This structure comprises four distinct scales (

,

), each of which segments the input image into

number of non-overlapping image blocks. Let

denote the input image,

represent the

scale, and the

block. Here,

indicates the number of image blocks at each scale, and

denotes the final defogged output image. Through the aforementioned multi-scale process, richer spatial guided features

can be obtained, which can be expressed as Equation (2):

where

denotes the non-uniform dehazing algorithm proposed in this paper, and

represents the training parameters. Since the algorithm is recurrent,

serves as the intermediate state features to enable cross-scale flow from

to

. Each encoder branch consists of 3 convolutions, with a Mix structure module following each convolutional layer. Furthermore, in each decoder branch, a residual module is placed prior to each deconvolutional layer. The blue arrow denotes the intermediate feature map

, which is obtained by performing dual upsampling on

and the input original image.

The workflow of the algorithm proposed in this paper is illustrated in Figure 1b. First, the input hazy image is divided into non-overlapping blocks according to the aforementioned 4 scales, and each block at each scale is fed into the asymmetric encoder–decoder at the corresponding level. Blocks and scales interact through cross-scale feature flow: after bilinear upsampling, the block features of the previous scale serve as the initialization features of the corresponding block in the next scale, ensuring the coherence of block features across scales. Specifically, the encoder embeds a Mix structure module to construct the dual-branch attention mechanism. At the same time, the fog density estimation branch calculates the haze concentration from the dark channel mean to dynamically adjust the weight allocation between the low-frequency global perception branch and the high-frequency local enhancement branch in the Mix module. The decoder adopts DSC to construct lightweight residual modules for feature reconstruction. Then, the inter-block feature fusion module performs weighted fusion on the reconstructed features of each block at layers B1 (1/2) and B2 (1/4), eliminating the inter-block artifacts caused by multi-block division. Finally, the fused features are output as haze-free images.

2.1. Asymmetric Encoders-Decoders

Current mainstream lightweight networks, such as MobileNet and EfficientNet, are designed to optimize end-to-end computational efficiency. They adopt strategies like depthwise separable convolution to reduce the parameter count and computational complexity; however, they focus on balanced efficiency improvement across all modules, leading to inadequate performance in complex scenarios. To address the problems of increased computational complexity and blurred edge feature regions in dehazing algorithms, this paper employs an asymmetric encoder–decoder structure.

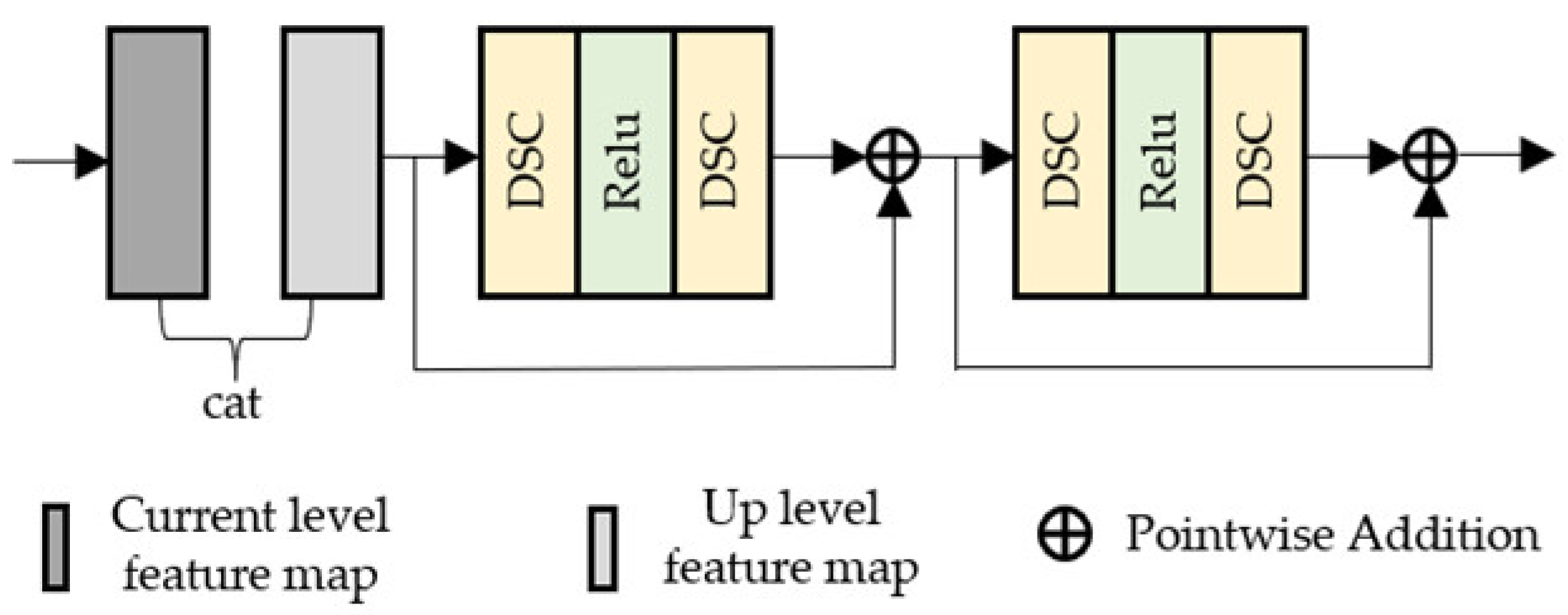

Within the architecture proposed in this paper, asymmetry is primarily achieved through the differentiation of modules in the encoder and decoder branches. The decoder module is composed of 2 deconvolutional layers, 3 lightweight residual modules, and 1 convolutional layer. Each of these 3 lightweight residual modules contains two DSC layers, with a ReLU activation function placed between them, as illustrated in Figure 2. The encoder module consists of 3 convolutional layers and 3 Mix structure (hybrid) modules. Specifically, the residual module within each Mix structure module comprises two standard convolutions, with a ReLU activation function inserted in between, as illustrated in Figure 3.

In contrast, standard convolutions are employed in the hybrid structure modules of the encoder branches to avoid the inter-channel information disconnection caused by DSC. Additionally, an attention mechanism guided by high-frequency feature maps is introduced into these branches to ensure the complete extraction of density differences and edge details in non-uniform hazy regions. Meanwhile, the decoder adopts DSC to reconstruct the features extracted by the encoder into haze-free images, which can reduce computational complexity while maintaining the feature combination capability.
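The saving offered by DSC in the decoder can be checked with simple arithmetic. The sketch below counts the weights of a standard convolution and of its depthwise separable counterpart; the channel width of 64 and the 3 x 3 kernel are assumed values for illustration, not the configuration used in this paper.

```python
def conv_params(c_in, c_out, k=3):
    """Weights in a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out

def dsc_params(c_in, c_out, k=3):
    """Weights in a depthwise k x k convolution followed by a 1 x 1 pointwise one."""
    return k * k * c_in + c_in * c_out

standard = conv_params(64, 64)     # 36864
separable = dsc_params(64, 64)     # 4672
print(standard, separable, round(separable / standard, 3))  # 36864 4672 0.127
```

The ratio equals 1/c_out + 1/k^2, so with 3 x 3 kernels a DSC layer needs roughly one eighth to one ninth of the weights. The price is that the depthwise step filters each channel in isolation, which is consistent with the choice to keep standard convolutions in the encoder.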

To eliminate the inter-block edge artifacts accumulated across multiple levels, such as the inter-block discontinuity of transmission lines, an inter-block feature fusion module is introduced at the output of the decoder. Input images of different scales are processed through a pair of encoders-decoders, denoted as

and

(

), yielding the global feature

, as formulated in Equation (3):

where

denotes the intermediate feature of the previous level, which enables cross-scale feature flow and avoids inter-block discontinuity. The inter-block weights are calculated via cosine similarity to achieve smooth fusion, as shown in Equation (4):

Scale

requires no fusion and directly outputs the global feature map for generating the final haze-free image

, as shown in Equation (5):

2.2. Mix Structure Module

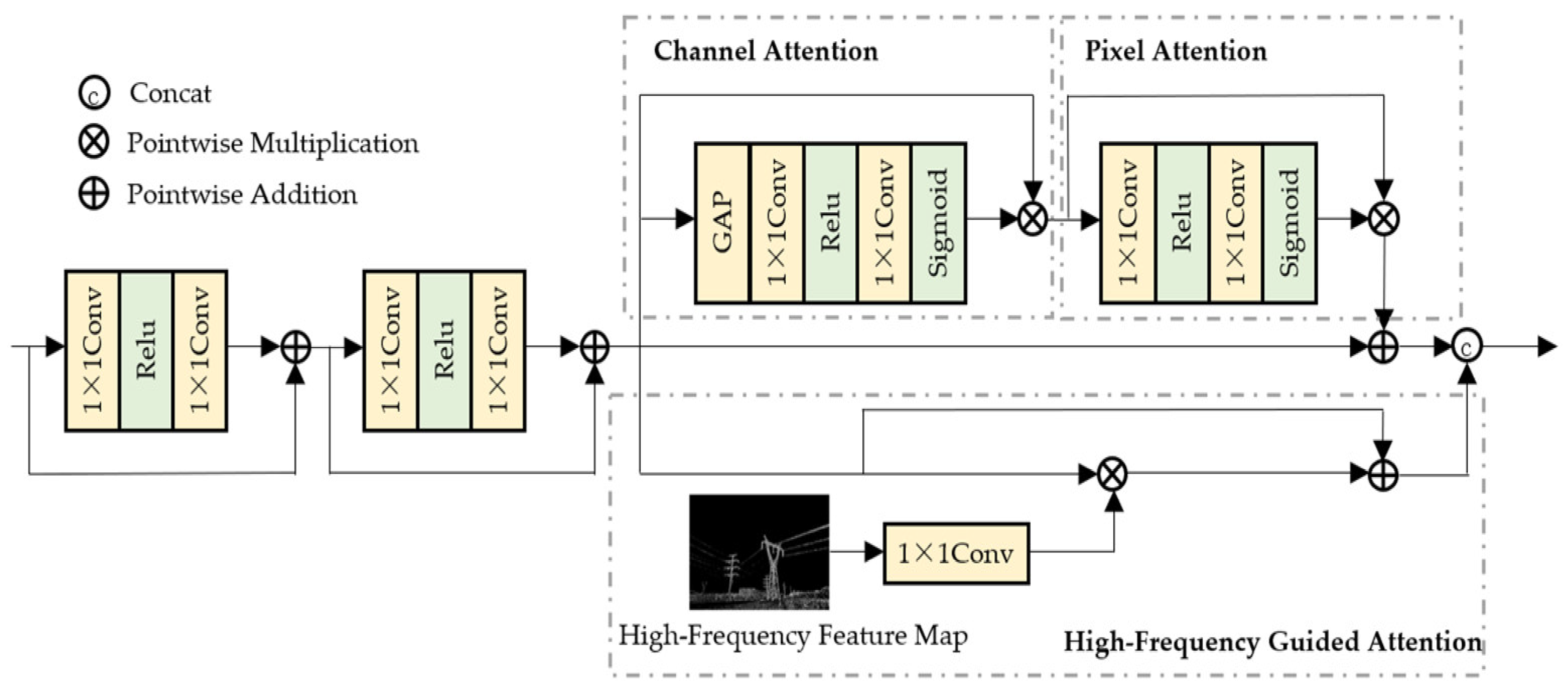

The Mix structure module is embedded in the encoder and comprises a residual module and an attention module guided by high-frequency feature maps. The overall structure of the module is illustrated in Figure 3.

Existing high-frequency guided methods mostly suffer from problems such as a single branch, poor noise resistance, or low adaptability. To address these issues, this paper achieves differentiated advantages through a dual-branch collaboration and noise-resistant optimization approach. The core structure of the dual-branch attention collaboration module consists of two complementary branches: a low-frequency global perception branch and a high-frequency local enhancement branch. A frequency domain decomposition strategy is adopted to realize the collaborative optimization of global context modeling and local detail preservation, thereby improving the network’s adaptability to the non-uniform distribution of haze. This hierarchical attention mechanism can dynamically adjust the feature weights of different channels and spatial positions, effectively overcoming the limitations of traditional methods.

The low-frequency global perception branch cascades Channel Attention (CA) and Pixel Attention (PA) to model cross-channel dependencies and spatial local features, respectively. Feature extraction is performed on the input image via a residual module to obtain a shallow feature map, which is then fed into the attention module guided by high-frequency features.

CA primarily focuses on the importance of different channels in the feature map, enabling effective extraction of global information and adjustment of the channel dimension of features. It aggregates spatial information through a global average pooling operation and then employs convolutional layers to learn the weight of each channel, assisting the model in enhancing the feature channels most useful for the current task. Assuming the feature map is

, CA can be expressed as Equation (6):

where

denotes pointwise convolution;

represents the assignment of different weights to each channel; and

stands for global average pooling.

PA, by contrast, focuses more on the importance of each pixel in the feature map, enhancing the representational capability of the feature map and the detailed features of the image. The pixel attention module enables the model to better focus on high-frequency regions in the image, assigning higher weights to regions with significant variations in fog density, thereby improving the dehazing effect. Pixel attention can be expressed as Equation (7):

where

denotes the feature map after pixel attention.
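The cascade of channel attention and pixel attention can be sketched in NumPy as follows. This is only an illustrative forward pass with random weights: the sigmoid gating, the ReLU between the two pointwise convolutions, the channel reduction from 8 to 2, and all function names are assumptions in the style of common feature attention blocks, not the exact layers of Equations (6) and (7).

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def pointwise_conv(x, w):
    """1 x 1 convolution on a C x H x W tensor: mixes channels at every pixel."""
    return np.einsum("oc,chw->ohw", w, x)

def channel_attention(f, w1, w2):
    """Global average pooling, two pointwise convolutions, one gate per channel."""
    pooled = f.mean(axis=(1, 2), keepdims=True)                  # C x 1 x 1
    gate = sigmoid(pointwise_conv(np.maximum(pointwise_conv(pooled, w1), 0.0), w2))
    return f * gate

def pixel_attention(f, w1, w2):
    """Two pointwise convolutions that produce one gate per pixel."""
    gate = sigmoid(pointwise_conv(np.maximum(pointwise_conv(f, w1), 0.0), w2))  # 1 x H x W
    return f * gate

rng = np.random.default_rng(0)
feat = rng.standard_normal((8, 5, 5))                            # toy C x H x W feature map
ca_w1, ca_w2 = rng.standard_normal((2, 8)), rng.standard_normal((8, 2))
pa_w1, pa_w2 = rng.standard_normal((2, 8)), rng.standard_normal((1, 2))
out = pixel_attention(channel_attention(feat, ca_w1, ca_w2), pa_w1, pa_w2)
print(out.shape)
```

Both gates lie in (0, 1), so the cascade can only rescale features: channel attention decides which channels matter globally, and pixel attention then decides where in the image they matter.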

In the high-frequency local enhancement branch, the input hazy image is first pre-smoothed with a Gaussian function to suppress noise. Subsequently, the Laplacian operator is employed to separate the texture edge information in the image, and information interaction is performed through convolution operations to obtain high-frequency features of the image. To compensate for the directional limitation of a single operator, multi-directional edge features, including Sobel-X (horizontal), Sobel-Y (vertical), and Prewitt (diagonal), are fused to fully capture the edges of horizontal conductors and vertical towers of transmission lines. Finally, the two types of high-frequency edge features are fused and used as weight information to guide the model in learning the edge information of the image from the image features. Detailed explanations of each operator are provided in Appendix A.
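A minimal NumPy sketch of this high-frequency extraction is given below. The 3 x 3 kernel values are the textbook forms of the operators (the diagonal Prewitt variant is one common choice), and fusing the responses by summing their magnitudes and normalizing to [0, 1] is an assumption made for illustration; the paper fuses them through convolution operations whose details are not restated here.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

KERNELS = {
    "laplacian": np.array([[0, 1, 0], [1, -4, 1], [0, 1, 0]], dtype=float),
    "sobel_x": np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float),
    "sobel_y": np.array([[-1, -2, -1], [0, 0, 0], [1, 2, 1]], dtype=float),
    "prewitt_diag": np.array([[0, 1, 1], [-1, 0, 1], [-1, -1, 0]], dtype=float),
}
GAUSSIAN = np.array([[1, 2, 1], [2, 4, 2], [1, 2, 1]], dtype=float) / 16.0

def filter2d(img, kernel):
    """Correlate a 2-D image with a 3 x 3 kernel, replicating border pixels."""
    padded = np.pad(img, 1, mode="edge")
    windows = sliding_window_view(padded, kernel.shape)   # H x W x 3 x 3
    return np.einsum("ijkl,kl->ij", windows, kernel)

def high_frequency_map(gray):
    """Gaussian pre-smoothing, then summed magnitudes of all edge responses."""
    smooth = filter2d(gray, GAUSSIAN)
    response = sum(np.abs(filter2d(smooth, k)) for k in KERNELS.values())
    peak = response.max()
    return response / peak if peak > 0 else response

gray = np.zeros((8, 8))
gray[:, 4:] = 1.0                    # toy image with a single vertical step edge
hf = high_frequency_map(gray)
print(np.round(hf[0], 2))            # response is concentrated around the step
```

Every derivative kernel sums to zero, so flat regions produce no response and the map is nonzero only near edges, which is what makes it usable as an attention weight.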

To address the issue of attention mechanism failure caused by high-frequency signal attenuation in dense haze regions, an additional fog density estimation branch is introduced, which adjusts the weights of the dual branches based on an adaptive fog density-guided mechanism. Firstly, the fog density

is estimated via the dark channel mean value, as shown in Equation (8):

where

denotes the RGB channels;

represents the local window of the image;

stands for the pixel value of channel

at coordinates

.
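For illustration, a fog density estimate based on the dark channel mean can be sketched as below. The sketch follows the standard dark channel definition (minimum over the RGB channels, then minimum over a local window); the window size of 3, the border replication, and the function names are assumptions, and larger windows are typical on real images.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def dark_channel(img, window=3):
    """Minimum over RGB, then minimum over a window x window neighbourhood."""
    pad = window // 2                               # window is assumed to be odd
    min_rgb = img.min(axis=2)
    padded = np.pad(min_rgb, pad, mode="edge")
    return sliding_window_view(padded, (window, window)).min(axis=(2, 3))

def fog_density(img, window=3):
    """Mean of the dark channel for an image with values in [0, 1]."""
    return float(dark_channel(img, window).mean())

rng = np.random.default_rng(0)
scene = rng.random((6, 6, 3))
scene[..., 2] = 0.0                                 # one channel is dark everywhere
veiled = 0.3 * scene + 0.7                          # same scene under a bright veil
print(fog_density(scene), fog_density(veiled))
```

Haze lifts the darkest values toward the atmospheric light, so the dark channel mean grows with haze concentration and can serve as a scalar density indicator.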

Subsequently, the weights of the dual branches are adjusted according to

: global guidance is enhanced in dense haze, while local guidance is strengthened in light haze, as shown in Equation (9):

where

denotes the finally output feature map;

is the fused multi-directional high-frequency edge feature map.
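One possible form of this density-guided weighting is sketched below. The convex combination, the way the edge map modulates the local branch, and all names are assumptions used to illustrate the stated behaviour (more global guidance in dense haze, more local guidance in light haze); they are not the exact formulation of Equation (9).

```python
import numpy as np

def fuse_branches(f_global, f_local, hf_map, density):
    """Blend the two branch outputs with a haze-density weight in [0, 1]."""
    rho = float(np.clip(density, 0.0, 1.0))
    guided_local = f_local * (1.0 + hf_map)     # edge map boosts responses near edges
    return rho * f_global + (1.0 - rho) * guided_local

rng = np.random.default_rng(0)
f_global = rng.standard_normal((8, 5, 5))       # low-frequency branch output, C x H x W
f_local = rng.standard_normal((8, 5, 5))        # high-frequency branch input, C x H x W
hf_map = rng.random((5, 5))                     # fused edge map in [0, 1], H x W
dense = fuse_branches(f_global, f_local, hf_map, density=0.9)
light = fuse_branches(f_global, f_local, hf_map, density=0.1)
print(dense.shape, light.shape)
```

With a weighting of this kind, regions where edges have been washed out by dense haze rely mainly on global context instead of on an unreliable edge map.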

This feature attention mechanism adopts a differentiated weight allocation strategy to dynamically adjust inter-channel dependencies and spatial features, assigning adaptive attention weights to different channels and pixels. Furthermore, high-frequency features, which provide abundant multi-scale edge prior information, are introduced as attention modulation factors to guide the network in strengthening edge regions during feature learning. This mechanism allocates more weights to image edge regions, thereby improving the dehazing effect in dense haze regions.

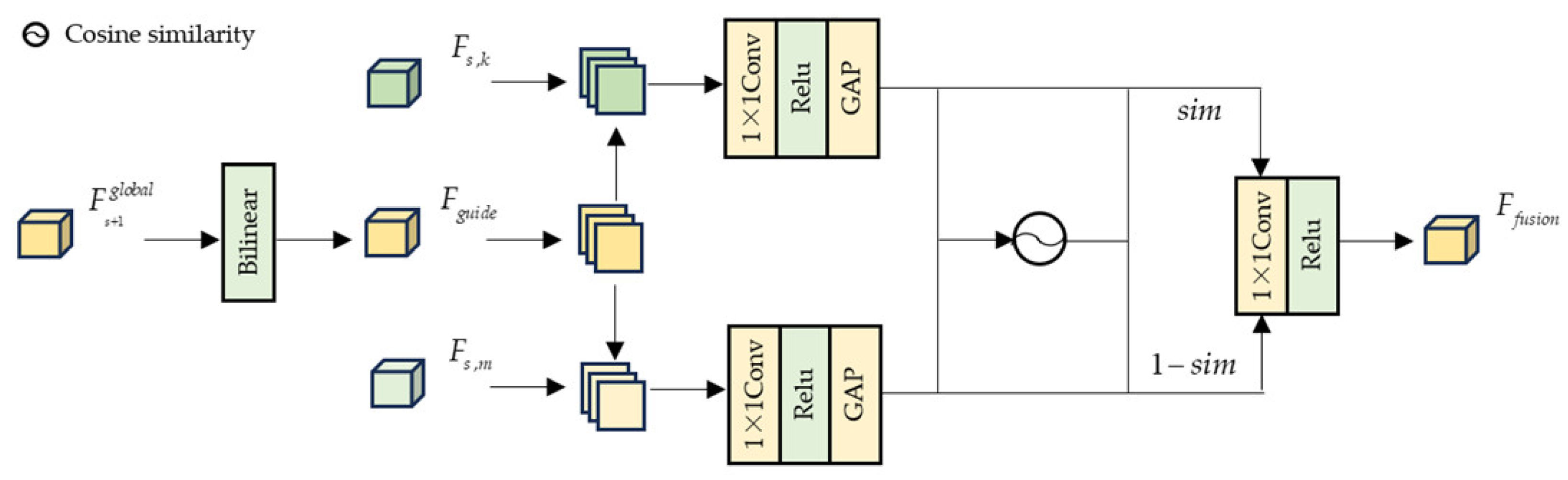

2.3. Block-Wise Feature Fusion Module

Although the block-wise features output by the decoder can effectively preserve local image details, the non-overlapping block processing method is prone to causing the problem of inter-block edge discontinuity. To address this issue, this paper proposes an inter-block attention fusion module at the output end of the decoder, as illustrated in Figure 4. Through the core strategies of cross-scale feature alignment, cosine similarity-based edge continuity quantification, and region-wise weighted fusion, this module effectively resolves the inter-block edge discontinuity problem.

Feature alignment is guided by the global feature

after fusion at the previous scale, which calibrates the spatial deviation of adjacent blocks at the current scale and avoids edge misalignment caused by block cropping.

is enlarged to the resolution of the current scale via bilinear upsampling to obtain the guiding feature map

, as shown in Equation (10):

For adjacent blocks at the current scale, with as the reference, extract the right pixel region of the edge region of one adjacent block and the left pixel region of the edge region of the other adjacent block. Then, find the regions with the maximum grayscale correlation to them in , respectively, and calculate the spatial offsets and . We define the edge offset threshold . If condition or is satisfied, spatial translation calibration is performed on to ensure edge alignment.

Global average pooling is performed only on the calibrated edge regions to compress the spatial dimensions and obtain edge feature vectors, as shown in Equation (11):

The cosine similarity of the feature vectors of adjacent blocks is then calculated to quantify edge continuity. If the edges of the two blocks are continuous, sim approaches 1; if they are discontinuous, sim falls below 0.3, as shown in Equation (12):
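The edge pooling and similarity computation can be illustrated with the following NumPy sketch for two horizontally adjacent blocks. The strip width of 2 pixels, the small constant `eps`, and the function names are assumptions made for the example.

```python
import numpy as np

def edge_vector(block, side, width=2):
    """Global average pooling over a vertical edge strip of a C x H x W block."""
    strip = block[:, :, -width:] if side == "right" else block[:, :, :width]
    return strip.mean(axis=(1, 2))

def edge_similarity(left_block, right_block, width=2, eps=1e-8):
    """Cosine similarity between the facing edge strips of two adjacent blocks."""
    u = edge_vector(left_block, "right", width)
    v = edge_vector(right_block, "left", width)
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v) + eps))

rng = np.random.default_rng(0)
left = rng.random((4, 6, 6))
continuous = left[:, :, ::-1]          # mirrored block: its left strip matches left's right strip
unrelated = rng.standard_normal((4, 6, 6))
print(edge_similarity(left, continuous), edge_similarity(left, unrelated))
```

Pooling each strip to a single vector per block keeps the comparison cheap, since only one dot product per block boundary is needed.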

Weighted fusion only weights the edge connection region

, while retaining the original features in non-overlapping regions to avoid distortion in non-edge regions. For each pixel

in the connection region, weighting is performed according to similarity to highlight continuous edge features; original features are retained in non-connection regions, as shown in Equation (13):

2.4. Loss Function

The loss function serves as a performance evaluation metric that dynamically quantifies the prediction deviation of the model during training. Through gradient-based optimization strategies, it continuously drives parameter updates, guiding the model toward convergence to an optimal or sub-optimal solution. This process enhances the model’s representational learning capability and task-specific adaptability.

This paper adopts a multi-scale progressive optimization approach, where, within a coarse-to-fine hierarchical structure, the output images at each scale are constrained to restore the true haze-free scene. Accordingly, the training loss is defined as the Mean Square Error (MSE) between the dehazed result at each scale and the corresponding ground-truth image. This ensures consistent dehazing performance and detail fidelity across different resolution levels, as formalized in Equation (14):

where

denotes the

,

and

represent the dehazed image and the ground-truth image at the

scale, respectively,

indicates the weight assigned to each scale, and

,

,

specify the dimensions of the multi-scale images.

Furthermore, to mitigate stripe artifacts in the dehazed images, a Laplacian loss is introduced, as defined in Equation (15):

where

denotes the pixel coordinates in the image, and

represents the Laplacian operator.

Furthermore, to constrain the edge consistency after inter-block fusion, an additional edge consistency loss is introduced to ensure the matching degree between the edges of the dehazed image and the real edges, as defined in Equation (16):

Therefore, the total loss is defined as follows in Equation (17):

where

is used to control the influence of the Laplacian loss and the edge consistency loss.
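How the three terms combine can be sketched in NumPy as follows. This is an illustrative reading only: the L1 form of the Laplacian and edge terms, the use of finite differences as the edge operator, applying both to the finest scale only, and the value `lam=0.1` are all assumptions and not the exact definitions of Equations (14) to (17).

```python
import numpy as np

def laplacian(x):
    """Discrete 4-neighbour Laplacian on the interior pixels of an H x W x C image."""
    return (x[:-2, 1:-1] + x[2:, 1:-1] + x[1:-1, :-2] + x[1:-1, 2:]
            - 4.0 * x[1:-1, 1:-1])

def edge_loss(pred, target):
    """Assumed edge term: L1 distance between horizontal and vertical differences."""
    dx = np.abs(np.diff(pred, axis=1) - np.diff(target, axis=1)).mean()
    dy = np.abs(np.diff(pred, axis=0) - np.diff(target, axis=0)).mean()
    return dx + dy

def total_loss(preds, targets, scale_weights, lam=0.1):
    """Weighted multi-scale MSE plus lam times the Laplacian and edge terms."""
    mse = sum(w * np.mean((p - t) ** 2)
              for w, p, t in zip(scale_weights, preds, targets))
    fine_pred, fine_target = preds[-1], targets[-1]   # lists run from coarse to fine
    lap = np.mean(np.abs(laplacian(fine_pred) - laplacian(fine_target)))
    return mse + lam * (lap + edge_loss(fine_pred, fine_target))

rng = np.random.default_rng(0)
targets = [rng.random((4, 4, 3)), rng.random((8, 8, 3))]
preds = [t + 0.1 for t in targets]                    # constant brightness offset
print(total_loss(preds, targets, scale_weights=[0.5, 1.0]))
```

In this toy case the constant offset is penalized only by the MSE term, while the Laplacian and edge terms stay at zero; they react to errors in local structure such as stripes or misplaced edges.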

4. Conclusions

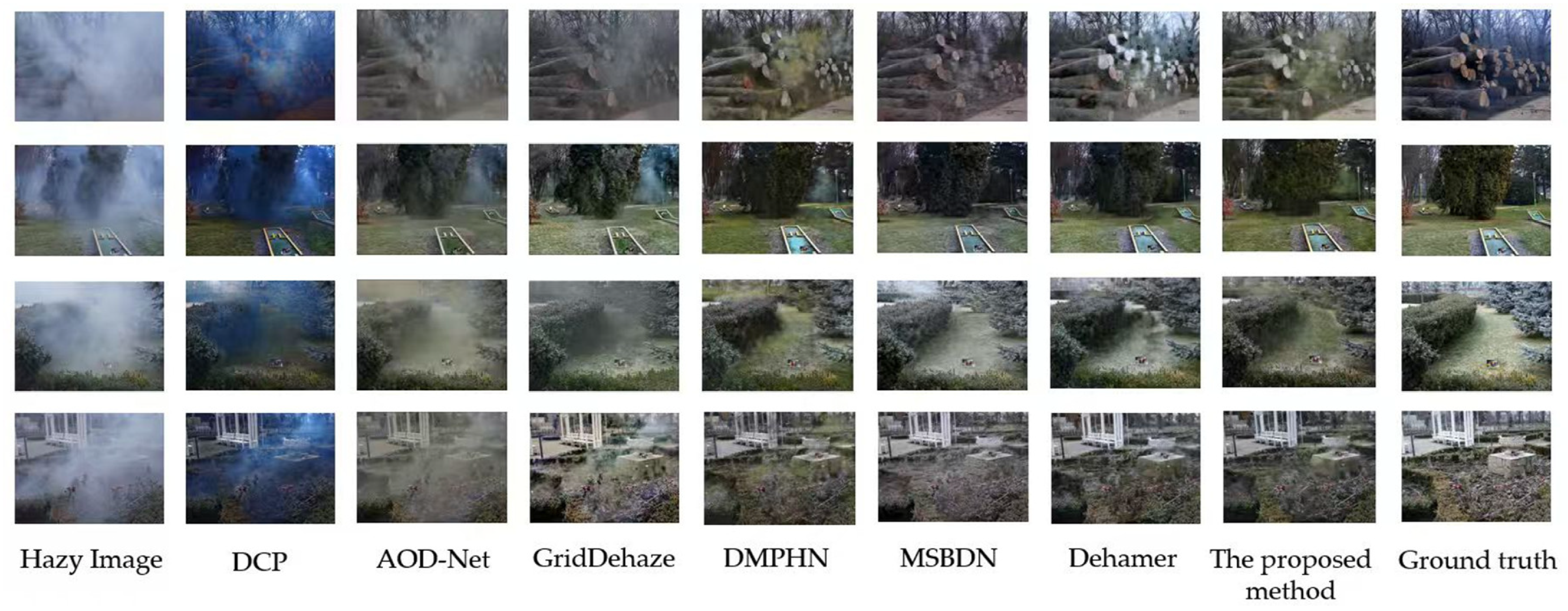

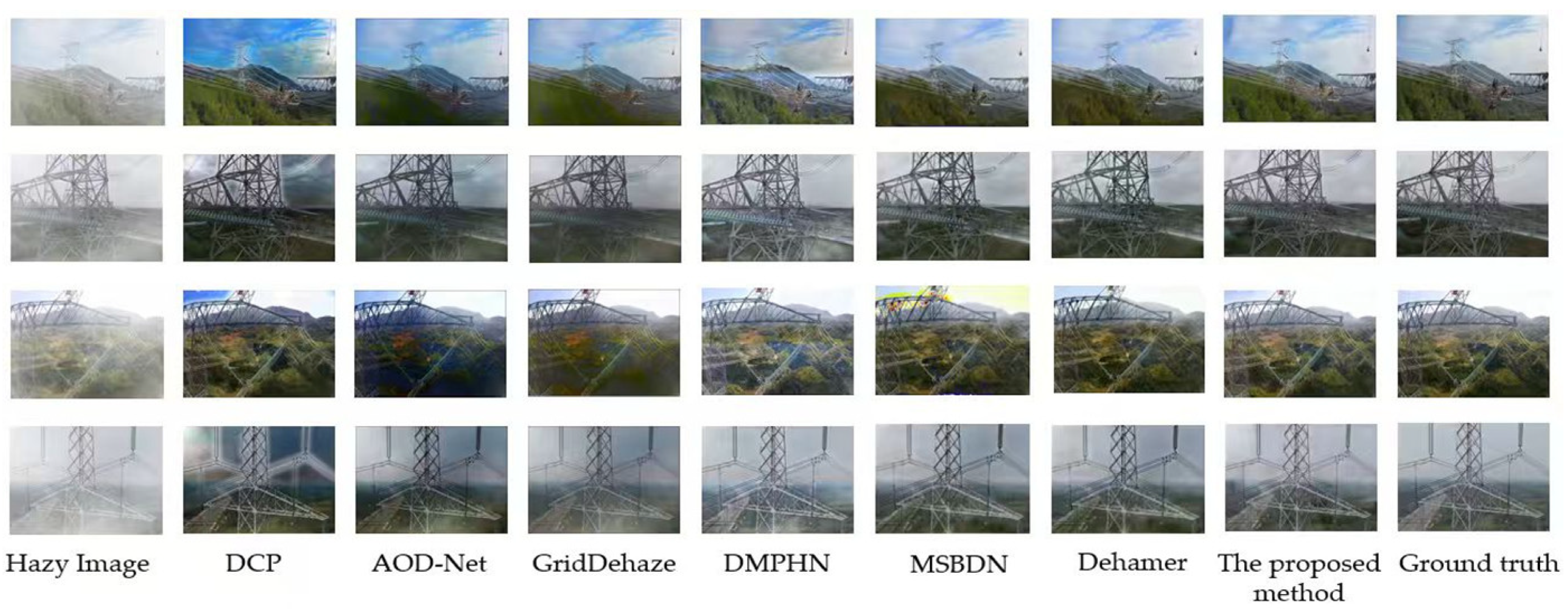

To address the problem of edge blurring in mountainous transmission line inspection images caused by non-uniform haze, as well as the drawback of low operational efficiency in traditional dehazing algorithms due to high network complexity, this paper proposes a multi-block hierarchical dehazing network based on high-frequency guided feature fusion. The network adopts an asymmetric encoder–decoder with residual channels as its core framework: at the encoder end, a Mix structure module is embedded to construct a dual-branch attention mechanism—the low-frequency branch cascades channel attention and pixel attention to model global features, while the high-frequency branch fuses Laplacian, Sobel-X/Y, and Prewitt operators to extract multi-directional edge features, adapting to the structural characteristics of transmission lines; an additional fog density estimation branch based on the dark channel mean dynamically adjusts the weights of the dual branches, solving the problem of attention failure in dense haze regions. At the decoder end, lightweight residual modules constructed with depthwise separable convolution reduce computational overhead while maintaining feature expression capability; the inter-block feature fusion module at the output stage eliminates cross-block artifacts through cross-scale feature alignment, cosine similarity-based edge continuity quantification, and region-wise weighted fusion. Experimental results demonstrate that the proposed algorithm outperforms other comparative algorithms on various datasets.

Despite these advantages, the algorithm still has limitations in extremely dense haze scenarios: when the haze concentration is excessively high (e.g., the area around the wooden stake in Figure 5), the restored images will have a small amount of residual haze and slightly blurred edges. This is because high-frequency signals are severely attenuated in extremely dense haze environments, leading to insufficient feature extraction by the high-frequency branch in the Mix module. Two improvement directions will be adopted in the future: (1) Fuse infrared and visible images to supplement high-frequency information. (2) Design an adaptive weight adjustment strategy based on fog density and add a residual connection to the high-frequency branch.

In addition, the proposed algorithm can be transferred to other vision tasks requiring visibility enhancement. In the field of autonomous driving, by adjusting the edge extraction operators to focus on road markings, vehicles, and pedestrians, the framework can be adapted to visibility enhancement tasks under adverse weather conditions; in the field of infrared image restoration, the high-frequency branch can be modified to extract thermal edge features, improving the clarity of thermal imaging targets under low-visibility conditions. This generalization potential extends beyond transmission line inspection and offers insights into visibility enhancement in various complex environments.