Explainable AI in IoT: A Survey of Challenges, Advancements, and Pathways to Trustworthy Automation

Abstract

1. Introduction

- We analyze surveys covering XAI frameworks in IoT ecosystems, the various ways XAI is being implemented in cybersecurity, as well as challenges, legislative policies, the current state of XAI in the market, ethical issues involving XAI, XAI taxonomies, and enhancing the security of XAI.

- We discuss models that apply XAI to cybersecurity, focusing first on efficient intrusion detection systems and then on security in the Internet of Medical Things. Table A1 lists each dataset used by these models, along with its type, its contribution to the models that use it, and its impact on their results.

- We examine how XAI is combined with machine learning in fields such as smart homes and agriculture, and discuss the benefits, core challenges, and ethical considerations of implementing it. We then outline ways to make XAI both secure and understandable: decentralizing model training and explanation generation, protecting explanation methods from exploitation, ensuring adaptable explanations, and training models on real-world datasets.

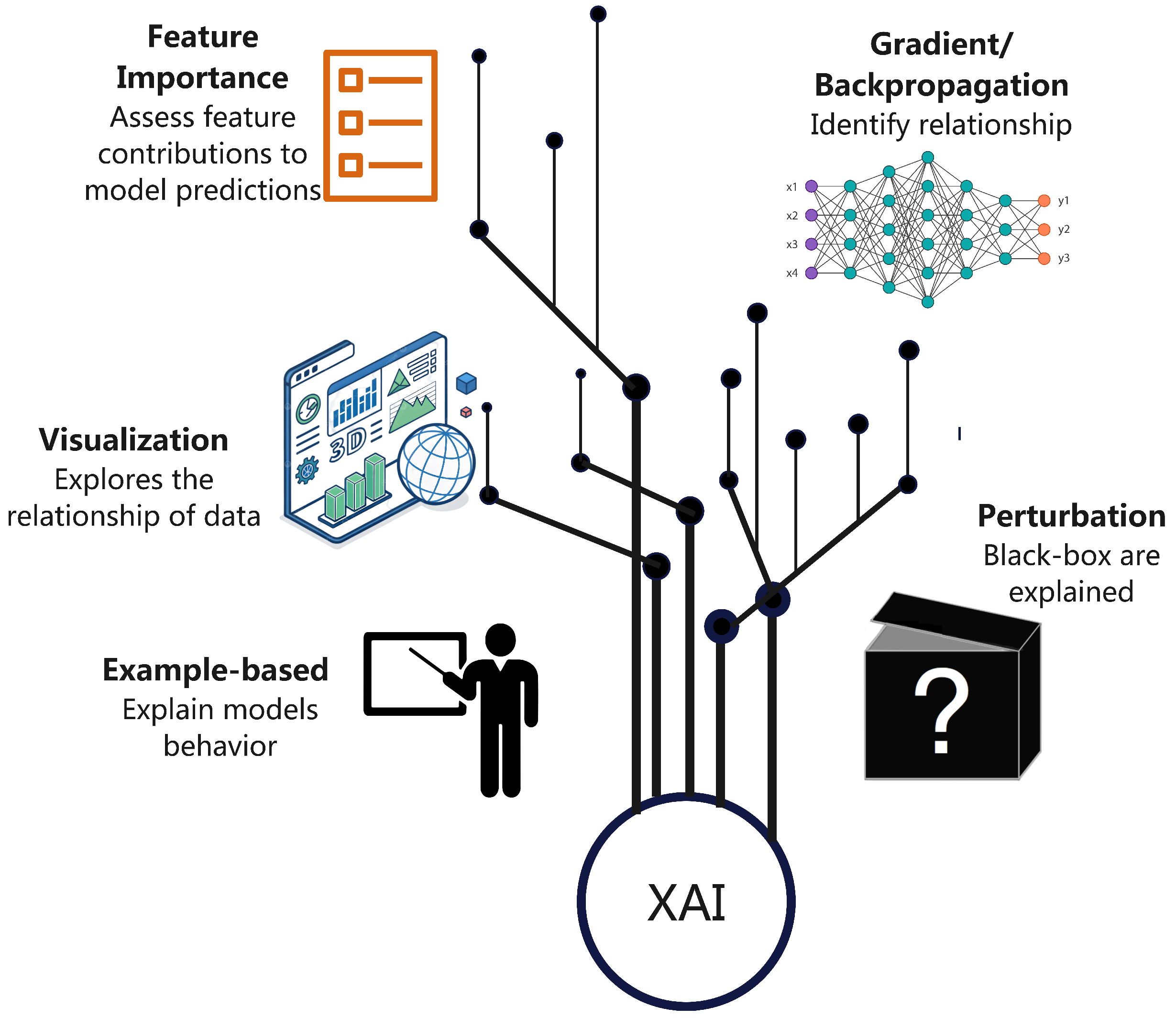

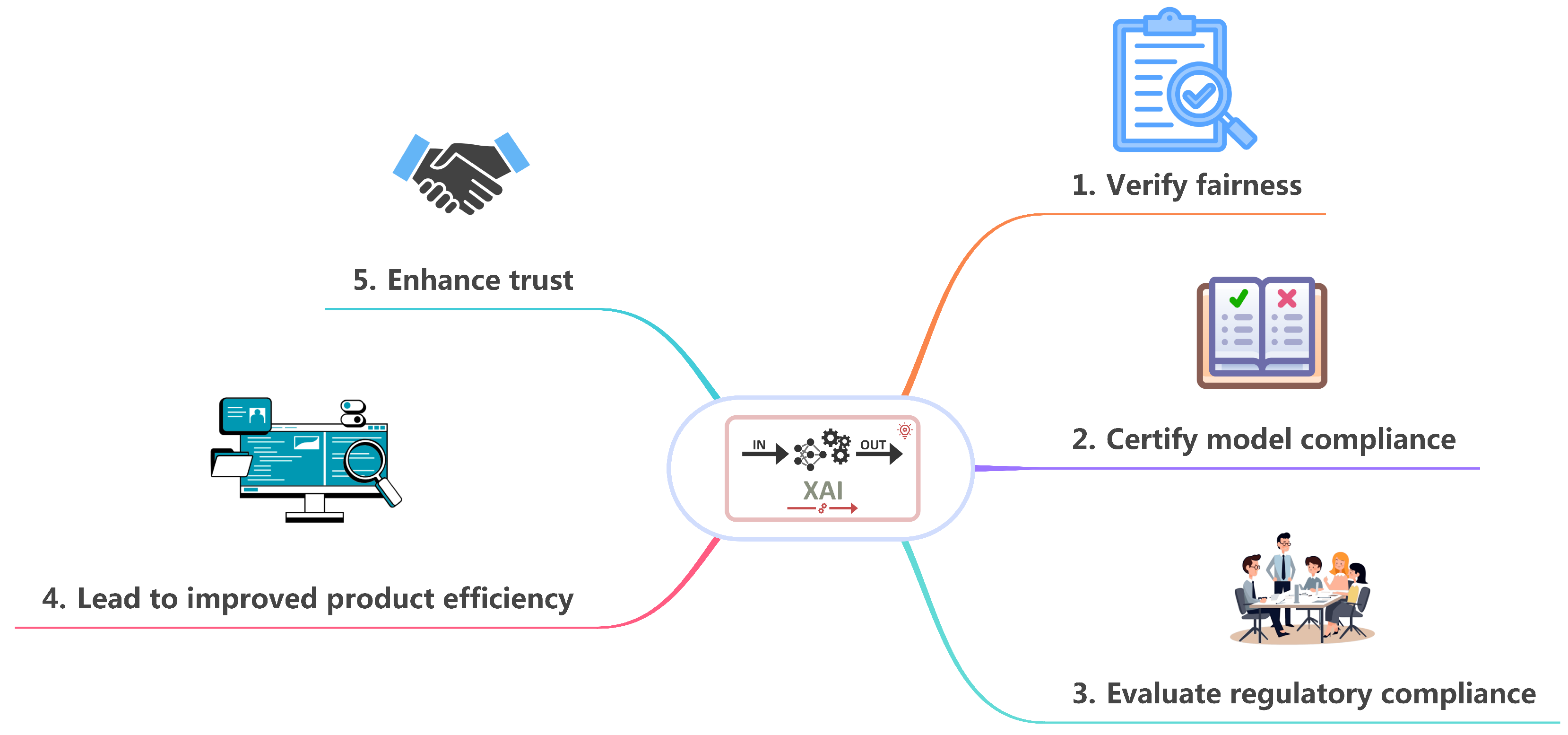

2. Current Trend of XAI

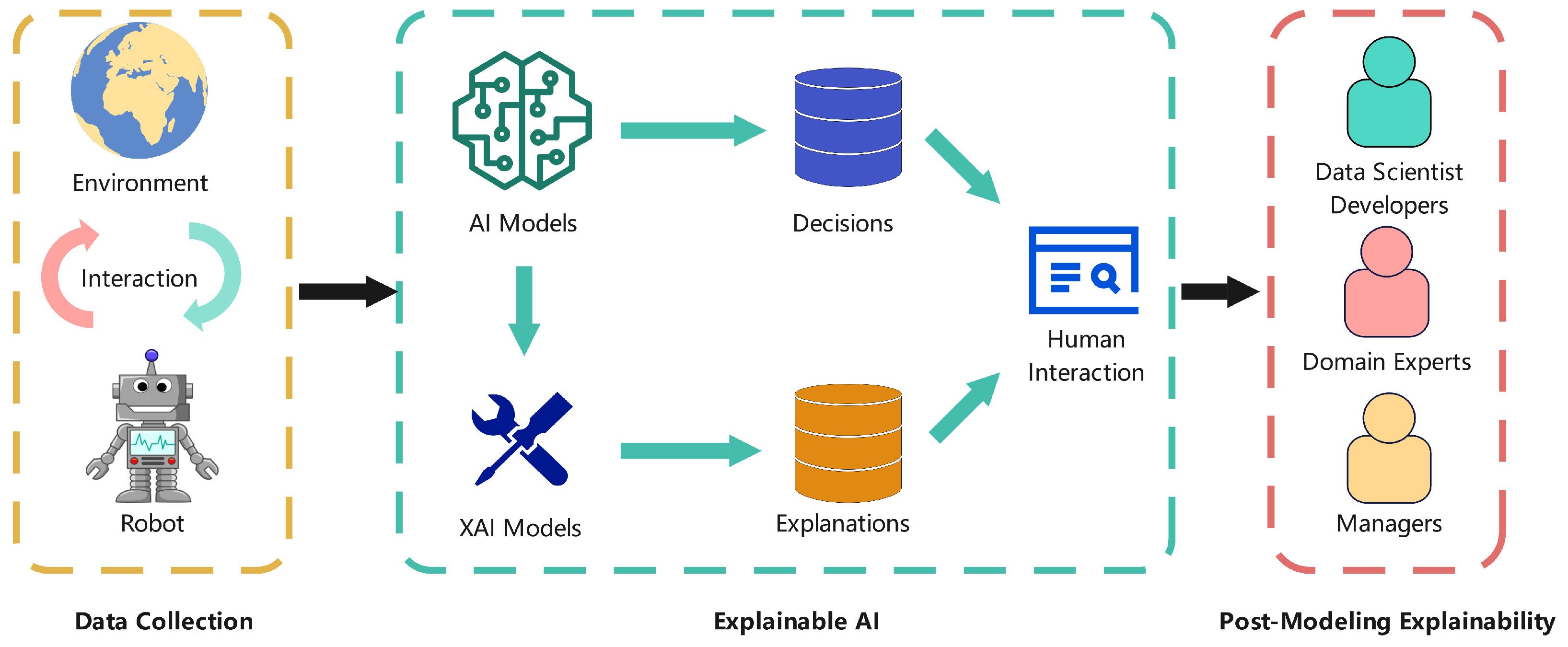

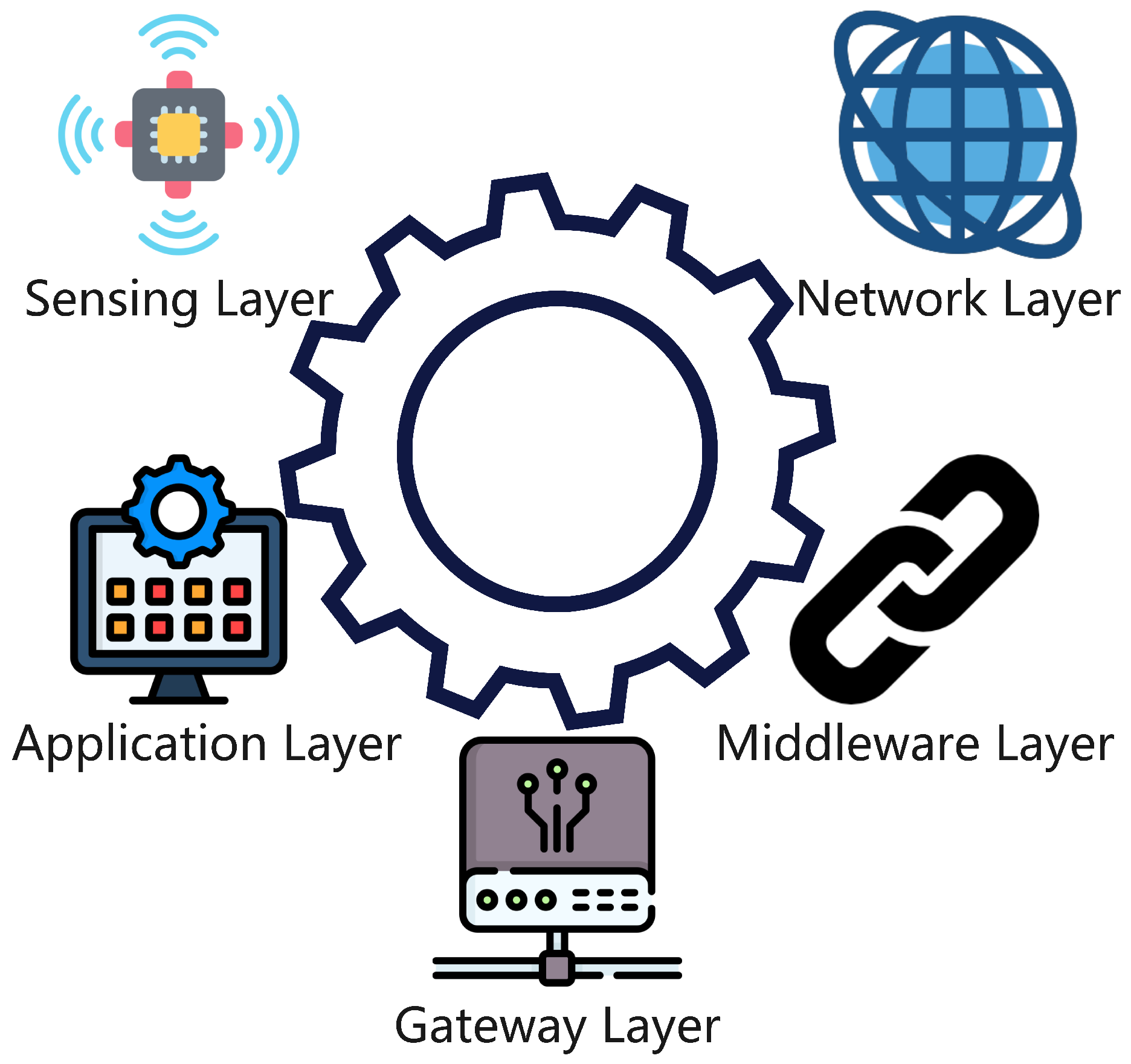

2.1. XAI Frameworks for IoT Ecosystems

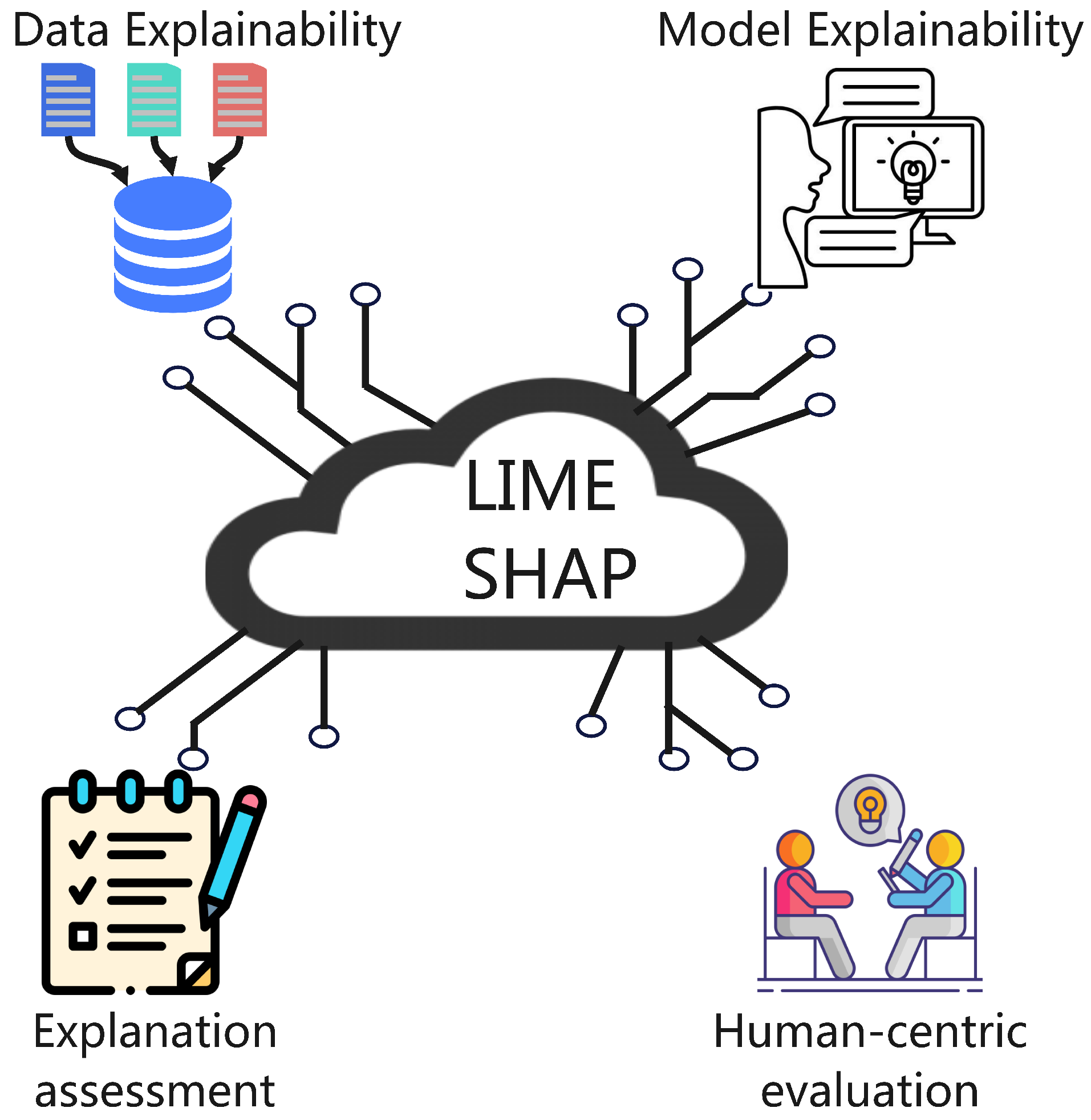

- Data explainability: This involves visualizing and interpreting data shifts in IoT sensor streams, enabling users to understand how input data influences model decisions.

- Model explainability: This axis distinguishes between intrinsic methods (e.g., decision trees, which are inherently interpretable) and post-hoc techniques (e.g., LIME or SHAP, which explain black-box models after training).

- Explanation assessment: This focuses on evaluating explanations using metrics like faithfulness (how accurately the explanation reflects the model’s decision process) and stability (the consistency of explanations across similar inputs).

- Human-centric evaluation: This emphasizes tailoring explanations to the expertise and needs of different users, such as network engineers requiring technical details or medical professionals needing simplified, actionable insights.
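
The explanation-assessment axis above can be made concrete with a small sketch. The code below is illustrative only: the linear scorer, its weights, and the helper names `faithfulness` and `stability` are assumptions introduced here, not metrics defined by any surveyed framework. Faithfulness is approximated by correlating each attribution with the score drop observed when that feature is zeroed, and stability by the largest attribution change between two nearby inputs.

```python
import math

# Hypothetical linear anomaly scorer over three sensor features (illustrative weights).
WEIGHTS = [0.8, -0.5, 0.1]
BIAS = 0.2

def model(x):
    """Black-box stand-in: returns an anomaly score for one feature vector."""
    return sum(w * v for w, v in zip(WEIGHTS, x)) + BIAS

def explain(x):
    """Attribution per feature; for a linear model, weight * value is exact."""
    return [w * v for w, v in zip(WEIGHTS, x)]

def pearson(a, b):
    """Pearson correlation between two equal-length sequences."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((p - ma) * (q - mb) for p, q in zip(a, b))
    var_a = sum((p - ma) ** 2 for p in a)
    var_b = sum((q - mb) ** 2 for q in b)
    return cov / math.sqrt(var_a * var_b)

def faithfulness(x):
    """Correlate each attribution with the score drop when that feature is zeroed."""
    base = model(x)
    drops = []
    for i in range(len(x)):
        ablated = list(x)
        ablated[i] = 0.0
        drops.append(base - model(ablated))
    return pearson(explain(x), drops)

def stability(x, neighbor):
    """Largest per-feature attribution change between two nearby inputs (lower is better)."""
    return max(abs(p - q) for p, q in zip(explain(x), explain(neighbor)))

reading = [1.0, 2.0, 3.0]
nearby = [1.01, 2.0, 2.99]
print(round(faithfulness(reading), 3))
print(round(stability(reading, nearby), 4))
```

Because the attributions are exact for a linear model, the faithfulness score here is 1.0 up to rounding. With a post-hoc explainer on a black-box model, the same two checks would typically yield lower faithfulness and larger attribution shifts.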

2.2. Privacy Vulnerabilities and Adversarial Threats to XAI

- Model-agnostic methods (e.g., LIME) make black-box models vulnerable to membership inference attacks.

- Backpropagation-based techniques (e.g., Grad-CAM) are susceptible to model inversion attacks, exposing sensitive training data.

- Perturbation-based methods (e.g., LIME) resist inference attacks but struggle with data drift.

Privacy-enhancing techniques like differential privacy degrade explanation quality by adding noise, while federated learning increases vulnerability to model inversion. Ref. [9] systematizes threats under adversarial XAI (AdvXAI), including:


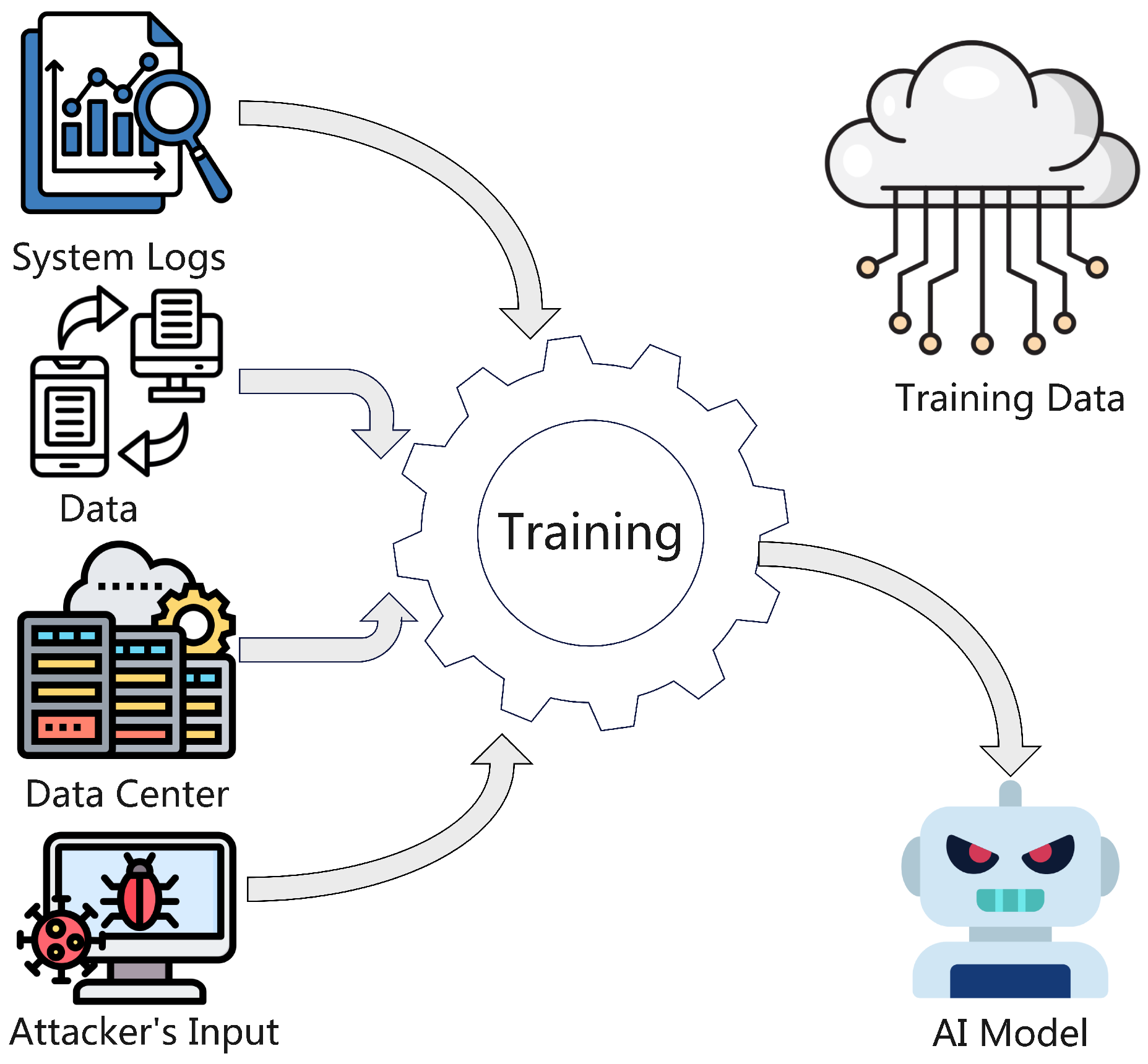

- Data poisoning: Corrupting training data to manipulate explanations. As shown in Figure 4, the attacker corrupts training data drawn from system logs, streaming data, and the data center to produce a vulnerable XAI model.


- Adversarial examples: Crafting inputs to mislead models and their explanations.


- Model manipulation: Altering parameters to produce biased explanations.
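
The adversarial-example threat in the list above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the linear intrusion scorer, its weights, and the `evade` helper are hypothetical and do not reproduce an attack from the surveyed literature. The attacker shifts each feature against the sign of its weight (a sign-based step in the spirit of gradient-sign attacks) until the alert disappears, which also changes the attributions an analyst would see.

```python
# Hypothetical linear intrusion scorer: alert when score > 0 (weights are illustrative).
WEIGHTS = [1.2, -0.7, 0.4]
BIAS = -0.5

def score(x):
    """Detection score for one feature vector; positive means an alert."""
    return sum(w * v for w, v in zip(WEIGHTS, x)) + BIAS

def attributions(x):
    """Per-feature contribution to the score (exact for a linear model)."""
    return [w * v for w, v in zip(WEIGHTS, x)]

def sign(v):
    return (v > 0) - (v < 0)

def evade(x, margin=0.05):
    """Move every feature against the sign of its weight, just enough to push the score below zero."""
    s = score(x)
    if s <= 0:
        return list(x)  # already classified as benign
    l1 = sum(abs(w) for w in WEIGHTS)
    eps = (s + margin) / l1  # uniform per-feature step size
    return [v - eps * sign(w) for v, w in zip(x, WEIGHTS)]

malicious = [1.0, 0.2, 0.5]
adversarial = evade(malicious)
print(score(malicious) > 0, score(adversarial) > 0)
print(attributions(malicious))
print(attributions(adversarial))
```

In this toy setting, the explanation itself leaks what the attacker needs: dividing each attribution by its feature value recovers the weight, and therefore the direction in which to perturb the input.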

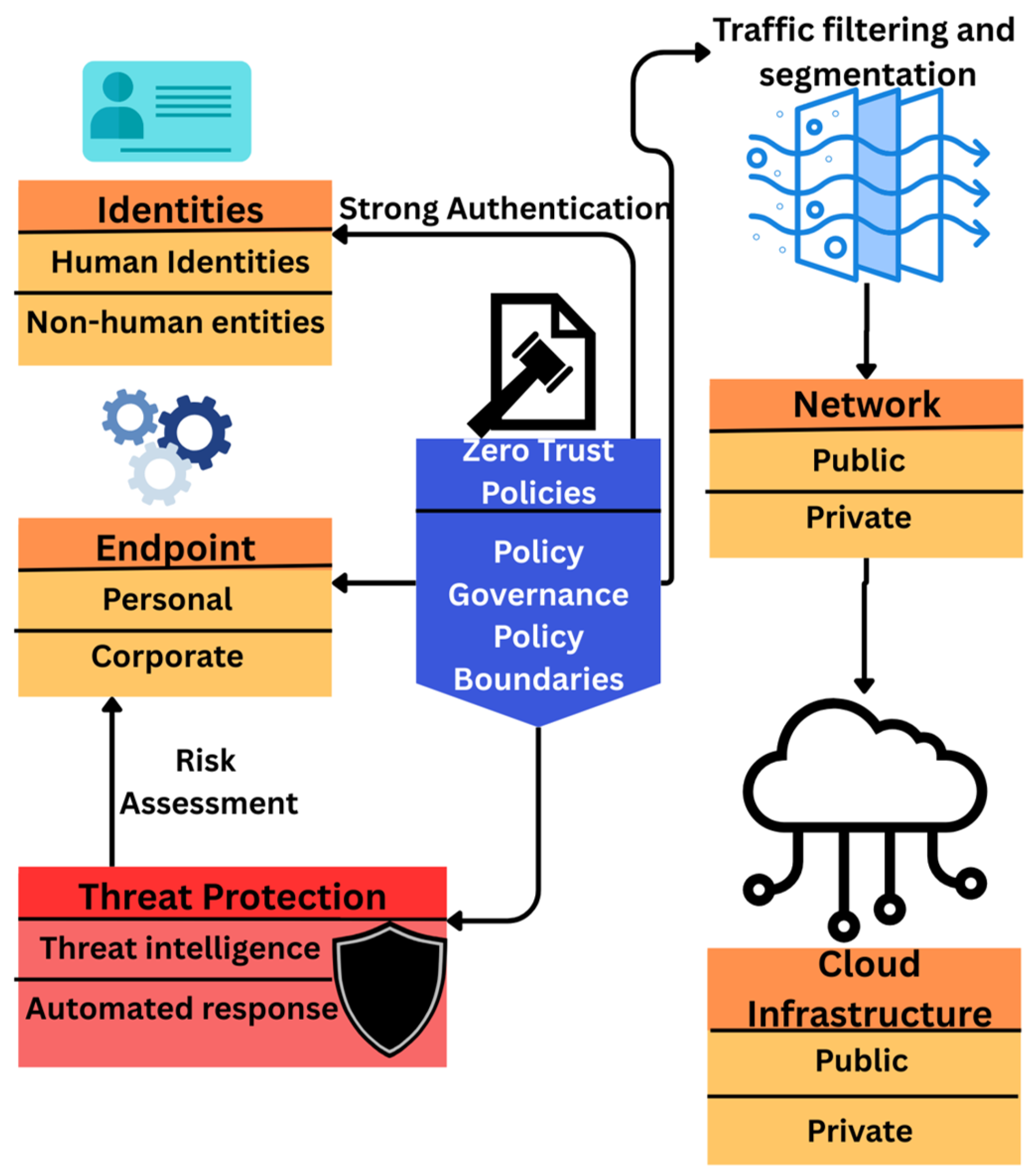

2.3. Enhancing IoT Security with Emerging Technologies

2.4. Legislative Policies, Ethical Concerns, and Market Realities

- Robustness: Inconsistent explanations for similar inputs.

- Adversarial attacks: Explanations manipulated to hide bias.

- Partial explanations: Overemphasizing irrelevant features (e.g., blaming benign IP addresses for intrusions).

- Data/concept drift: Outdated explanations in dynamic environments.

- Anthropomorphization: Assigning human intent to AI decisions.

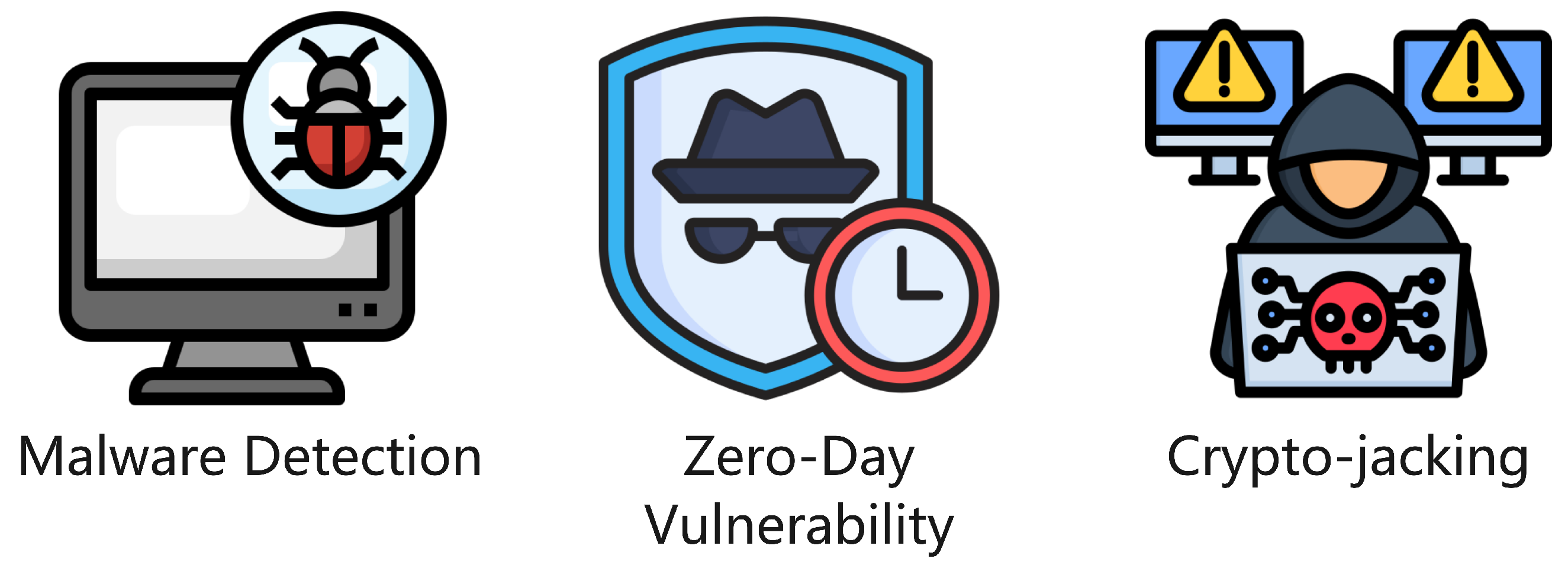

3. IoT Cybersecurity

- Malware detection: Signature-based (interpretable rule sets), anomaly-based (SHAP for outlier justification), and heuristic-based (behavioral analysis).

- Zero-day vulnerabilities: XAI traces model decisions to unknown attack patterns.

- Crypto-jacking: Highlighted as an under-researched area where XAI could map mining behaviors to network logs.
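
Signature-based detection is described above as relying on interpretable rule sets, and a short sketch shows why such rules explain themselves. The rule names, thresholds, and event fields below are hypothetical and not drawn from any real signature database. Each alert carries the names of the rules that fired, so the justification is the rule itself.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

@dataclass(frozen=True)
class Rule:
    name: str
    description: str
    matches: Callable[[Dict[str, float]], bool]

# Hypothetical rules and thresholds, chosen only for illustration.
RULES: List[Rule] = [
    Rule(
        "high_cpu_outbound",
        "sustained CPU load with traffic to a mining-pool port",
        lambda e: e["cpu_load"] > 0.9 and e["dst_port"] == 3333,
    ),
    Rule(
        "syn_burst",
        "SYN rate above threshold",
        lambda e: e["syn_per_sec"] > 1000,
    ),
]

def detect(event: Dict[str, float]) -> Tuple[bool, List[str]]:
    """Return (is_alert, reasons), where reasons name every rule that fired."""
    fired = [r for r in RULES if r.matches(event)]
    return bool(fired), [f"{r.name}: {r.description}" for r in fired]

alert, reasons = detect({"cpu_load": 0.95, "dst_port": 3333, "syn_per_sec": 12})
print(alert, reasons)
```

Anomaly-based and heuristic detectors do not have this property by construction, which is where post-hoc methods such as SHAP enter.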

3.1. XAI with Intrusion Detection in IoT/IIoT

3.2. XAI in Internet of Medical Things (IoMT)

4. XAI Applications with Machine Learning

4.1. Emergency Response and Public Safety

4.2. Smart Home Automation and User-Centric Design

4.3. Industrial IoT and Predictive Maintenance

4.4. Sensor Reliability and Edge Computing

4.5. Agricultural IoT and Sustainability

4.6. IoT Security in Emerging 6G Networks

5. Discussion

5.1. Bridging Interpretability with Real-World Utility

5.2. Core Challenges: Technical, Operational, and Sociotechnical Constraints

- Resource Constraints at the Edge: Many IoT devices operate under severe constraints in memory, computation, and energy availability. Traditional XAI methods like SHAP and LIME are computationally expensive, often necessitating centralized inference.

- Adversarial Exploitation of Transparency: Explanations can be reverse-engineered by adversaries to manipulate outputs or infer sensitive training data. Membership inference, model inversion, and explanation-guided evasion attacks are increasingly documented. For instance, adversarial inputs crafted to evade IDS models have leveraged explanation outputs to modify payload entropy or mimic benign protocol structures.

- Superficial Compliance with Regulatory Frameworks: Current explainability tools often satisfy compliance checkboxes without delivering truly actionable insights. A feature importance chart that names “network traffic volume” as the cause of an alert lacks depth unless it contextualizes the decision using features such as protocol type, connection duration, and correlation with threat intelligence.

- Human–AI Mismatch in Explanation Design: Explanation design often neglects user roles. Security analysts may need packet-level visibility, while non-expert stakeholders require natural language justifications.
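
The resource-constraint challenge above can be made tangible with a sketch of why exact Shapley attribution is costly. The code is an illustrative stdlib implementation, not the SHAP library's API; the function `exact_shapley` and the toy additive value function are assumptions introduced here. Exact computation enumerates every feature coalition, so the number of model evaluations grows as 2^n, which is why practical tools rely on sampling or model-specific approximations.

```python
from itertools import combinations
from math import factorial

def exact_shapley(value_fn, n_features):
    """Exact Shapley values by enumerating every coalition; returns (values, n_model_calls)."""
    calls = 0
    cache = {}

    def v(coalition):
        # Evaluate the model once per distinct coalition and count the evaluations.
        nonlocal calls
        key = frozenset(coalition)
        if key not in cache:
            calls += 1
            cache[key] = value_fn(key)
        return cache[key]

    phi = [0.0] * n_features
    for i in range(n_features):
        others = [j for j in range(n_features) if j != i]
        for size in range(len(others) + 1):
            weight = factorial(size) * factorial(n_features - size - 1) / factorial(n_features)
            for subset in combinations(others, size):
                phi[i] += weight * (v(subset + (i,)) - v(subset))
    return phi, calls

# Toy additive model: a coalition's value is the sum of its members' contributions.
X = [2.0, -1.0, 0.5]
W = [0.5, 1.0, 2.0]

def toy_value(coalition):
    return sum(W[j] * X[j] for j in coalition)

phi, calls = exact_shapley(toy_value, 3)
print(phi, calls)
```

Three features need 8 coalition evaluations; ten features already need 1024, and each evaluation is a full model inference, which is impractical on memory- and energy-constrained devices.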

5.3. Toward Scalable, Secure, and Human-Centric Explainability

- Federated and Edge-Aware Explainability: By decentralizing model training and explanation generation, federated learning enables local explanations (e.g., LIME) for immediate alerts, while cloud-based SHAP provides richer global insight.

- Adversarially-Robust XAI Pipelines: Explanation aggregation, adversarial training, and robust attribution masking can shield explanation methods from exploitation.

- Context-Aware, Role-Specific Interface Design: Explanation outputs must adapt to urgency and expertise level. In smart homes, natural-language explanations may suffice; in factories, layered visualizations may be needed. Multimodal interfaces that combine text, graphs, and heatmaps can help bridge this divide.
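
Role-specific explanation design, as described in the last item above, can be sketched as a single attribution vector rendered differently per audience. The role names, feature names, and the `render_explanation` helper are hypothetical and serve only to illustrate the idea.

```python
def render_explanation(attributions, role, top_k=2):
    """Format the same attribution scores for different audiences."""
    ranked = sorted(attributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    if role == "analyst":
        # Full technical detail: every feature with its signed contribution.
        return "; ".join(f"{name}={value:+.2f}" for name, value in ranked)
    if role == "resident":
        # Plain-language summary of the strongest drivers only.
        names = [name.replace("_", " ") for name, _ in ranked[:top_k]]
        return "Alert raised mainly because of: " + " and ".join(names) + "."
    raise ValueError(f"unknown role: {role}")

# Illustrative attribution scores for one alert.
scores = {"packet_rate": 0.62, "payload_entropy": -0.10, "connection_duration": 0.31}
print(render_explanation(scores, "analyst"))
print(render_explanation(scores, "resident"))
```

Keeping one underlying attribution and varying only the presentation means every audience sees a view of the same evidence, which simplifies later auditing.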

5.4. Ethical and Governance Considerations

- Bias Auditing and Fairness: XAI systems must surface disparities in model behavior across demographic groups. For example, IoMT applications trained on skewed data may underperform for underrepresented patients. Explanations should reveal such imbalances and guide model refinement toward fairness.

- Traceability and Accountability: Explanations should link decisions to model states, inputs, and environmental context to enable meaningful auditing. Blockchain-based logging frameworks can preserve immutable records of decisions and their justifications, supporting post hoc investigation.

- Participatory Design: Stakeholder involvement through co-design, interviews, and usability studies ensures explanations are relevant and understandable. Engaging end-users, domain experts, and policymakers improves the likelihood of trust and adoption.

- Legal and Regulatory Alignment: XAI must meet requirements of frameworks like the EU AI Act and GDPR, which demand “meaningful information” about algorithmic logic. This calls for both technical clarity and accessible formats, including natural-language summaries and just-in-time user interfaces.

- Mitigating Misuse: Explanations may be selectively presented or misunderstood, especially if oversimplified. Developers must communicate limitations and uncertainties clearly, avoiding cherry-picking or deceptive presentation.

- Sociotechnical Responsibility: XAI should be integrated into broader systems of documentation, oversight, and continuous feedback. In critical contexts, explanations must address not just “what” the system did, but “why”, and under what conditions, limitations, and assumptions.
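
The traceability item above mentions blockchain-based logging of decisions and their justifications. The sketch below shows only the underlying hash-chaining idea using Python's standard `hashlib`; it is a simplification with no consensus or distribution, and the record fields and helper names are assumptions made for illustration.

```python
import hashlib
import json

GENESIS = "0" * 64

def _digest(body):
    """SHA-256 over a canonical JSON encoding of the record body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

def append_record(log, decision, explanation):
    """Append a decision and its explanation, chained to the previous record's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    body = {
        "index": len(log),
        "decision": decision,
        "explanation": explanation,
        "prev_hash": prev_hash,
    }
    log.append({**body, "hash": _digest(body)})

def verify(log):
    """Return True only if every record is unmodified and correctly linked."""
    prev_hash = GENESIS
    for i, record in enumerate(log):
        body = {k: v for k, v in record.items() if k != "hash"}
        if record["prev_hash"] != prev_hash or record["index"] != i:
            return False
        if _digest(body) != record["hash"]:
            return False
        prev_hash = record["hash"]
    return True

audit_log = []
append_record(audit_log, "alert", {"packet_rate": 0.62, "connection_duration": 0.31})
append_record(audit_log, "benign", {"packet_rate": -0.05})
print(verify(audit_log))
```

Editing any stored decision or explanation changes that record's digest and breaks the link to every later record, so tampering is detectable during post hoc investigation.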

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

| Dataset | Scope | Key Contributions | Accuracy/XAI Impact |
|---|---|---|---|
| NSL-KDD | General intrusion detection | Benchmark for LSTM/GRU models [25,26]; SHAP identifies SYN flood patterns; evaluates TRUST XAI model [32] | Over 95% TPR/TNR; 18% faster than DL-IDS; shows how the TRUST XAI model performs (average accuracy of 98%) |
| CICIDS2017 | Smart city attacks | Validates blockchain-GRU hybrid [30]; SHAP highlights SSL/TLS anomalies | 99.53% accuracy; 14% lower FPR than SOTA |
| N-BaIoT | Botnet detection | XGBoost with SMOTE achieves 100% binary accuracy [35]; SHAP exposes IoT device jitter; used to test proposed model and compare it to other algorithms [41] | 99% precision; 20% faster inference than CNN; shows that the proposed method outperforms other algorithms such as RNN-LF |
| GSA-IIoT | Industrial sensor anomalies | Validates CNN-Autoencoder-LSTM [31]; SHAP explains sensor redundancy | 99.35% accuracy; 98.86% precision |
| WUSTL-IIoT | IIoT attacks | TRUST XAI [32] identifies PLC command spoofing via latent variables | N/A (qualitative interpretability focus) |
| ToN IoT | Multi-class attacks | Random Forest/AdaBoost [51] classify 17 attack types; LIME explains DNS tunneling; evaluates efficiency of framework and trains IDS [24]; trains framework [26]; evaluates XSRU-IoMT [39] | >99% accuracy; 12% improvement over SVM; systems have above 99% accuracy; higher precision; shows that the model is more robust than other detection models such as the GRU model |
| BoT-IoT | Botnet analysis | Sequential backward selection [52]; SHAP reduces feature space by 40% | 99% precision; 30% lower computational overhead |
| UNSW-NB15 | Network attacks | Trains framework [26]; evaluates TRUST XAI model [32] | Higher recall and accuracy; shows how well TRUST XAI performs (average accuracy of 98%) |
| CICIoT2023 | IoT attacks | Used to create a novel dataset to test TXAI-ADV [28]; tests model [29]; trains proposed model [50] | 96% accuracy rate; 99% accuracy; 95.59% accuracy |
| Traffic Flow Dataset | Test anomaly detection algorithms | Tests model effectiveness [20] | 96.28% recall |
| USB-IDS | Network intrusion detection | Tests model effectiveness [21,22] | 84% accuracy with benign data and 100% accuracy with attack data; 98% detection accuracy |
| 2023 Edge-IIoTset | IoT attacks | Tests model [29] | 92% accuracy |
| GSP Dataset | Anomaly detection | Tests proposed model [31] | 99.35% accuracy |
| CIC IoT Dataset 2022 | IoT attacks | Evaluates the proposed IDS [33] | Shows that the IDS can detect attacks effectively |
| IEC 60870-5-104 Intrusion Dataset | Cyberattacks | Evaluates the proposed IDS [33] | Shows that the IDS can detect attacks effectively |
| IoTID20 Dataset | IoT attacks | Trains model [35] | 85–100% accuracy depending on the type of attack |
| Aposemat IoT-23 Dataset (IoT-23 Dataset) | IoT attacks | Okiru malware and samples from the dataset used to train the model [40] | Shows that detection models can be fooled with counterfactual explanations |
| IPFlow | IoT network devices | Used to test proposed method and compare it to other algorithms [41] | Shows that the proposed method outperforms other algorithms such as RNN-LF |
| PTB-XL Dataset | Electrocardiogram data | Evaluates the two proposed models [44] | 96.21% accuracy on 1D CNN and 95.53% accuracy on GoogLeNet |
| AI4I2020 Dataset | Predictive maintenance | Evaluates balanced K-Star method [46] | Shows that the balanced K-Star method has 98.75% accuracy |
| Electrical fault detection dataset | Fault detection | Tested with various models such as GRU, which are then compared to the proposed model (GSX) [47] | Shows that the GSX model outperforms other models |
| Invisible Systems LTD dataset | Fault prediction | Tested with various models such as GRU, which are then compared to the proposed model (GSX) [47] | Shows that the GSX model outperforms other models |

References

1. Jagatheesaperumal, S.K.; Pham, Q.V.; Ruby, R.; Yang, Z.; Xu, C.; Zhang, Z. Explainable AI over the Internet of Things (IoT): Overview, State-of-the-Art and Future Directions. IEEE Open J. Commun. Soc. 2022, 3, 2106–2136.

2. Chung, N.C.; Chung, H.; Lee, H.; Brocki, L.; Chung, H.; Dyer, G. False Sense of Security in Explainable Artificial Intelligence (XAI). arXiv 2024, arXiv:2405.03820.

3. Quincozes, V.E.; Quincozes, S.E.; Kazienko, J.F.; Gama, S.; Cheikhrouhou, O.; Koubaa, A. A survey on IoT application layer protocols, security challenges, and the role of explainable AI in IoT (XAIoT). Int. J. Inf. Secur. 2024, 23, 1975–2002.

4. Kök, İ.; Okay, F.Y.; Muyanlı, Ö.; Özdemir, S. Explainable Artificial Intelligence (XAI) for Internet of Things: A Survey. IEEE Internet Things J. 2023, 10, 14764–14779.

5. Eren, E.; Okay, F.Y.; Özdemir, S. Unveiling anomalies: A survey on XAI-based anomaly detection for IoT. Turk. J. Electr. Eng. Comput. Sci. 2024, 32, 358–381.

6. Ali, S.; Abuhmed, T.; El-Sappagh, S.; Muhammad, K.; Alonso-Moral, J.M.; Confalonieri, R.; Guidotti, R.; Del Ser, J.; Díaz-Rodríguez, N.; Herrera, F. Explainable Artificial Intelligence (XAI): What we know and what is left to attain Trustworthy Artificial Intelligence. Inf. Fusion 2023, 99, 101805.

7. Spartalis, C.N.; Semertzidis, T.; Daras, P. Balancing XAI with Privacy and Security Considerations. In Proceedings of the Computer Security. ESORICS 2023 International Workshops, The Hague, The Netherlands, 25–29 September 2023; Springer: Cham, Switzerland, 2024.

8. Masud, M.T.; Keshk, M.; Moustafa, N.; Linkov, I.; Emge, D.K. Explainable Artificial Intelligence for Resilient Security Applications in the Internet of Things. IEEE Open J. Commun. Soc. 2024, 6, 2877–2906.

9. Baniecki, H.; Biecek, P. Adversarial attacks and defenses in explainable artificial intelligence: A survey. Inf. Fusion 2024, 107, 102303.

10. Senevirathna, T.; La, V.H.; Marchal, S.; Siniarski, B.; Liyanage, M.; Wang, S. A Survey on XAI for 5G and Beyond Security: Technical Aspects, Challenges and Research Directions. IEEE Commun. Surv. Tutor. 2024, 27, 941–973.

11. Sahu, S.K.; Mazumdar, K. Exploring security threats and solutions Techniques for Internet of Things (IoT): From vulnerabilities to vigilance. Front. Artif. Intell. 2024, 7, 1397480.

12. Bharati, S.; Podder, P. Machine and Deep Learning for IoT Security and Privacy: Applications, Challenges, and Future Directions. Secur. Commun. Netw. 2022, 2022, 8951961.

13. Hussain, F.; Hussain, R.; Hossain, E. Explainable Artificial Intelligence (XAI): An Engineering Perspective. arXiv 2021, arXiv:2101.03613.

14. Saeed, W.; Omlin, C. Explainable AI (XAI): A systematic meta-survey of current challenges and future opportunities. Knowl.-Based Syst. 2023, 263, 110273.

15. Barredo Arrieta, A.; Díaz-Rodríguez, N.; Del Ser, J.; Bennetot, A.; Tabik, S.; Barbado, A.; Garcia, S.; Gil-Lopez, S.; Molina, D.; Benjamins, R.; et al. Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Inf. Fusion 2020, 58, 82–115.

16. Moustafa, N.; Koroniotis, N.; Keshk, M.; Zomaya, A.Y.; Tari, Z. Explainable Intrusion Detection for Cyber Defences in the Internet of Things: Opportunities and Solutions. IEEE Commun. Surv. Tutor. 2023, 25, 1775–1807.

17. Capuano, N.; Fenza, G.; Loia, V.; Stanzione, C. Explainable Artificial Intelligence in CyberSecurity: A Survey. IEEE Access 2022, 10, 93575–93600.

18. Zhang, Z.; Hamadi, H.A.; Damiani, E.; Yeun, C.Y.; Taher, F. Explainable Artificial Intelligence Applications in Cyber Security: State-of-the-Art in Research. IEEE Access 2022, 10, 93104–93139.

19. Charmet, F.; Tanuwidjaja, H.C.; Ayoubi, S.; Gimenez, P.F.; Han, Y.; Jmila, H.; Blanc, G.; Takahashi, T.; Zhang, Z. Explainable artificial intelligence for cybersecurity: A literature survey. Ann. Telecommun. 2022, 77, 789–812.

20. Saghir, A.; Beniwal, H.; Tran, K.; Raza, A.; Koehl, L.; Zeng, X.; Tran, K. Explainable Transformer-Based Anomaly Detection for Internet of Things Security. In Proceedings of the Seventh International Conference on Safety and Security with IoT, Bratislava, Slovakia, 24–26 October 2023.

21. Kalutharage, C.S.; Liu, X.; Chrysoulas, C. Explainable AI and Deep Autoencoders Based Security Framework for IoT Network Attack Certainty (Extended Abstract). In Proceedings of the Attacks and Defenses for the Internet-of-Things, Copenhagen, Denmark, 30 September 2022.

22. Kalutharage, C.S.; Liu, X.; Chrysoulas, C.; Pitropakis, N.; Papadopoulos, P. Explainable AI-Based DDOS Attack Identification Method for IoT Networks. Computers 2023, 12, 32.

23. Tabassum, S.; Parvin, N.; Hossain, N.; Tasnim, A.; Rahman, R.; Hossain, M.I. IoT Network Attack Detection Using XAI and Reliability Analysis. In Proceedings of the 2022 25th International Conference on Computer and Information Technology (ICCIT), Cox’s Bazar, Bangladesh, 17–19 December 2022.

24. Shtayat, M.M.; Hasan, M.K.; Sulaiman, R.; Islam, S.; Khan, A.U.R. An Explainable Ensemble Deep Learning Approach for Intrusion Detection in Industrial Internet of Things. IEEE Access 2023, 11, 115047–115061.

25. Djenouri, Y.; Belhadi, A.; Srivastava, G.; Lin, J.C.W.; Yazidi, A. Interpretable intrusion detection for next generation of Internet of Things. Comput. Commun. 2023, 203, 192–198.

26. Keshk, M.; Koroniotis, N.; Pham, N.; Moustafa, N.; Turnbull, B.; Zomaya, A.Y. An explainable deep learning-enabled intrusion detection framework in IoT networks. Inf. Sci. 2023, 639, 119000.

27. Sharma, B.; Sharma, L.; Lal, C.; Roy, S. Explainable artificial intelligence for intrusion detection in IoT networks: A deep learning based approach. Expert Syst. Appl. 2024, 238, 121751.

28. Ojo, S.; Krichen, M.; Alamro, M.A.; Mihoub, A. TXAI-ADV: Trustworthy XAI for Defending AI Models against Adversarial Attacks in Realistic CIoT. Electronics 2024, 13, 1769.

29. Nkoro, E.C.; Njoku, J.N.; Nwakanma, C.I.; Lee, J.M.; Kim, D.S. Zero-Trust Marine Cyberdefense for IoT-Based Communications: An Explainable Approach. Electronics 2024, 13, 276.

30. Kumar, R.; Javeed, D.; Aljuhani, A.; Jolfaei, A.; Kumar, P.; Islam, A.K.M.N. Blockchain-Based Authentication and Explainable AI for Securing Consumer IoT Applications. IEEE Trans. Consum. Electron. 2024, 70, 1145–1154.

31. Khan, I.A.; Moustafa, N.; Pi, D.; Sallam, K.M.; Zomaya, A.Y.; Li, B. A New Explainable Deep Learning Framework for Cyber Threat Discovery in Industrial IoT Networks. IEEE Internet Things J. 2022, 9, 11604–11613.

32. Zolanvari, M.; Yang, Z.; Khan, K.; Jain, R.; Meskin, N. TRUST XAI: Model-Agnostic Explanations for AI With a Case Study on IIoT Security. IEEE Internet Things J. 2023, 10, 2967–2978.

33. Siganos, M.; Radoglou-Grammatikis, P.; Kotsiuba, I.; Markakis, E.; Moscholios, I.; Goudos, S.; Sarigiannidis, P. Explainable AI-based Intrusion Detection in the Internet of Things. In Proceedings of the 18th International Conference on Availability, Reliability and Security, Benevento, Italy, 29 August–1 September 2023; Association for Computing Machinery: New York, NY, USA, 2023.

34. Gaspar, D.; Silva, P.; Silva, C. Explainable AI for Intrusion Detection Systems: LIME and SHAP Applicability on Multi-Layer Perceptron. IEEE Access 2024, 12, 30164–30175.

35. Muna, R.K.; Hossain, M.I.; Alam, M.G.R.; Hassan, M.M.; Ianni, M.; Fortino, G. Demystifying machine learning models of massive IoT attack detection with Explainable AI for sustainable and secure future smart cities. Internet Things 2023, 24, 100919.

36. Namrita Gummadi, A.; Napier, J.C.; Abdallah, M. XAI-IoT: An Explainable AI Framework for Enhancing Anomaly Detection in IoT Systems. IEEE Access 2024, 12, 71024–71054.

37. Houda, Z.A.E.; Brik, B.; Khoukhi, L. “Why Should I Trust Your IDS?”: An Explainable Deep Learning Framework for Intrusion Detection Systems in Internet of Things Networks. IEEE Open J. Commun. Soc. 2022, 3, 1164–1176.

38. Liu, W.; Zhao, F.; Nkenyereye, L.; Rani, S.; Li, K.; Lv, J. XAI Driven Intelligent IoMT Secure Data Management Framework. IEEE J. Biomed. Health Inform. 2024, 1–12.

39. Khan, I.A.; Moustafa, N.; Razzak, I.; Tanveer, M.; Pi, D.; Pan, Y.; Ali, B.S. XSRU-IoMT: Explainable simple recurrent units for threat detection in Internet of Medical Things networks. Future Gener. Comput. Syst. 2022, 127, 181–193.

40. Pawlicki, M.; Pawlicka, A.; Kozik, R.; Choraś, M. Explainability versus Security: The Unintended Consequences of xAI in Cybersecurity. In Proceedings of the 2nd ACM Workshop on Secure and Trustworthy Deep Learning Systems, Singapore, 2–20 July 2024; Association for Computing Machinery: New York, NY, USA, 2024; pp. 1–7.

41. Djenouri, Y.; Belhadi, A.; Srivastava, G.; Lin, J.C.W. When explainable AI meets IoT applications for supervised learning. Clust. Comput. 2022, 26, 2313–2323.

42. Rao, A.; Vashistha, S.; Naik, A.; Aditya, S.; Choudhury, M. Tricking LLMs into Disobedience: Formalizing, Analyzing, and Detecting Jailbreaks. arXiv 2024, arXiv:2305.14965.

43. García-Magariño, I.; Muttukrishnan, R.; Lloret, J. Human-Centric AI for Trustworthy IoT Systems With Explainable Multilayer Perceptrons. IEEE Access 2019, 7, 125562–125574.

44. Knof, H.; Bagave, P.; Boerger, M.; Tcholtchev, N.; Ding, A.Y. Exploring CNN and XAI-based Approaches for Accountable MI Detection in the Context of IoT-enabled Emergency Communication Systems. In Proceedings of the 13th International Conference on the Internet of Things, Nagoya, Japan, 7–10 November 2023; Association for Computing Machinery: New York, NY, USA, 2024; pp. 50–57.

45. Dobrovolskis, A.; Kazanavičius, E.; Kižauskienė, L. Building XAI-Based Agents for IoT Systems. Appl. Sci. 2023, 13, 4040.

46. Ghasemkhani, B.; Aktas, O.; Birant, D. Balanced K-Star: An Explainable Machine Learning Method for Internet-of-Things-Enabled Predictive Maintenance in Manufacturing. Machines 2023, 11, 322.

47. Mansouri, T.; Vadera, S. A Deep Explainable Model for Fault Prediction Using IoT Sensors. IEEE Access 2022, 10, 66933–66942.

48. Sinha, A.; Das, D. XAI-LCS: Explainable AI-Based Fault Diagnosis of Low-Cost Sensors. IEEE Sensors Lett. 2023, 7, 6009304.

49. Tsakiridis, N.L.; Diamantopoulos, T.; Symeonidis, A.L.; Theocharis, J.B.; Iossifides, A.; Chatzimisios, P.; Pratos, G.; Kouvas, D. Versatile Internet of Things for Agriculture: An eXplainable AI Approach. In Proceedings of the Artificial Intelligence Applications and Innovations, Neos Marmaras, Greece, 5–7 June 2020; Maglogiannis, I., Iliadis, L., Pimenidis, E., Eds.; Springer: Cham, Switzerland, 2020; pp. 180–191.

50. Kaur, N.; Gupta, L. Enhancing IoT Security in 6G Environment With Transparent AI: Leveraging XGBoost, SHAP and LIME. In Proceedings of the 2024 IEEE 10th International Conference on Network Softwarization (NetSoft), Saint Louis, MO, USA, 24–28 June 2024; pp. 180–184.

51. Tasnim, A.; Hossain, N.; Tabassum, S.; Parvin, N. Classification and Explanation of Different Internet of Things (IoT) Network Attacks Using Machine Learning, Deep Learning and XAI. Bachelor’s Thesis, Brac University, Dhaka, Bangladesh, 2022.

52. Kalakoti, R.; Bahsi, H.; Nõmm, S. Improving IoT Security With Explainable AI: Quantitative Evaluation of Explainability for IoT Botnet Detection. IEEE Internet Things J. 2024, 11, 18237–18254.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Moss, J.; Gordon, J.; Duclos, W.; Liu, Y.; Wang, Q.; Wang, J. Explainable AI in IoT: A Survey of Challenges, Advancements, and Pathways to Trustworthy Automation. Electronics 2025, 14, 4622. https://doi.org/10.3390/electronics14234622
