Towards Robust Text-Based Person Retrieval: A Framework for Correspondence Rectification and Description Synthesis

Abstract

1. Introduction

- We directly address the pervasive yet underexplored problem of annotation noise in text-based person search (TBPS), explicitly targeting both coarse-grained descriptions and mismatched image–text pairs.

- We introduce an integrated approach that combines Gaussian mixture model (GMM)-based noise identification with multimodal large language model (MLLM)-driven text augmentation and filtering, suppressing noise while improving the quality of training supervision.

- We demonstrate the efficacy of our method through extensive experiments on three public benchmarks, with ablation studies empirically validating each component’s contribution to overall performance.
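To make the GMM-based noise identification named in the contributions concrete, the following is a minimal sketch of one common recipe from the noisy-correspondence literature: fit a two-component Gaussian mixture to per-pair training losses and treat the posterior of the low-mean component as the probability that a pair is clean. It is an illustration under stated assumptions, not the paper's implementation. The function names, the toy loss values, and the 0.5 threshold are all hypothetical.

```python
import math
import random


def normal_pdf(x, mean, var):
    """Density of a 1-D Gaussian with the given mean and variance."""
    return math.exp(-((x - mean) ** 2) / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def fit_two_component_gmm(values, iters=50):
    """Fit a 1-D two-component Gaussian mixture with plain EM.

    Returns (weights, means, variances) as three 2-element lists.
    """
    n = len(values)
    mean_all = sum(values) / n
    var_all = max(sum((v - mean_all) ** 2 for v in values) / n, 1e-6)
    weights = [0.5, 0.5]
    means = [min(values), max(values)]  # start the components at the extremes
    variances = [var_all, var_all]
    for _ in range(iters):
        # E-step: responsibility of each component for each value.
        resp = []
        for v in values:
            p = [weights[k] * normal_pdf(v, means[k], variances[k]) for k in range(2)]
            total = p[0] + p[1]
            resp.append([0.5, 0.5] if total == 0.0 else [p[0] / total, p[1] / total])
        # M-step: re-estimate weight, mean, and variance of each component.
        for k in range(2):
            nk = sum(r[k] for r in resp) + 1e-12
            means[k] = sum(r[k] * v for r, v in zip(resp, values)) / nk
            var_k = sum(r[k] * (v - means[k]) ** 2 for r, v in zip(resp, values)) / nk
            variances[k] = max(var_k, 1e-6)  # floor avoids a collapsed component
            weights[k] = nk / n
    return weights, means, variances


def clean_probability(value, params):
    """Posterior probability that `value` belongs to the low-mean (clean) component."""
    weights, means, variances = params
    clean = 0 if means[0] <= means[1] else 1
    p = [weights[k] * normal_pdf(value, means[k], variances[k]) for k in range(2)]
    total = p[0] + p[1]
    return 0.5 if total == 0.0 else p[clean] / total


# Toy per-pair losses (hypothetical): 80 well-matched pairs, 20 mismatched pairs.
random.seed(0)
losses = [random.gauss(0.2, 0.05) for _ in range(80)]
losses += [random.gauss(2.0, 0.3) for _ in range(20)]

params = fit_two_component_gmm(losses)
THRESHOLD = 0.5  # assumed cut-off, not taken from the paper
is_clean = [clean_probability(v, params) > THRESHOLD for v in losses]
print(sum(is_clean), "of", len(losses), "pairs kept as clean")
```

In a training loop, the losses would typically be recomputed each epoch and the mixture refit, so that pairs flagged as noisy can be down-weighted or routed to the text augmentation step rather than used as-is.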

2. Related Work

2.1. Text-Based Person Search

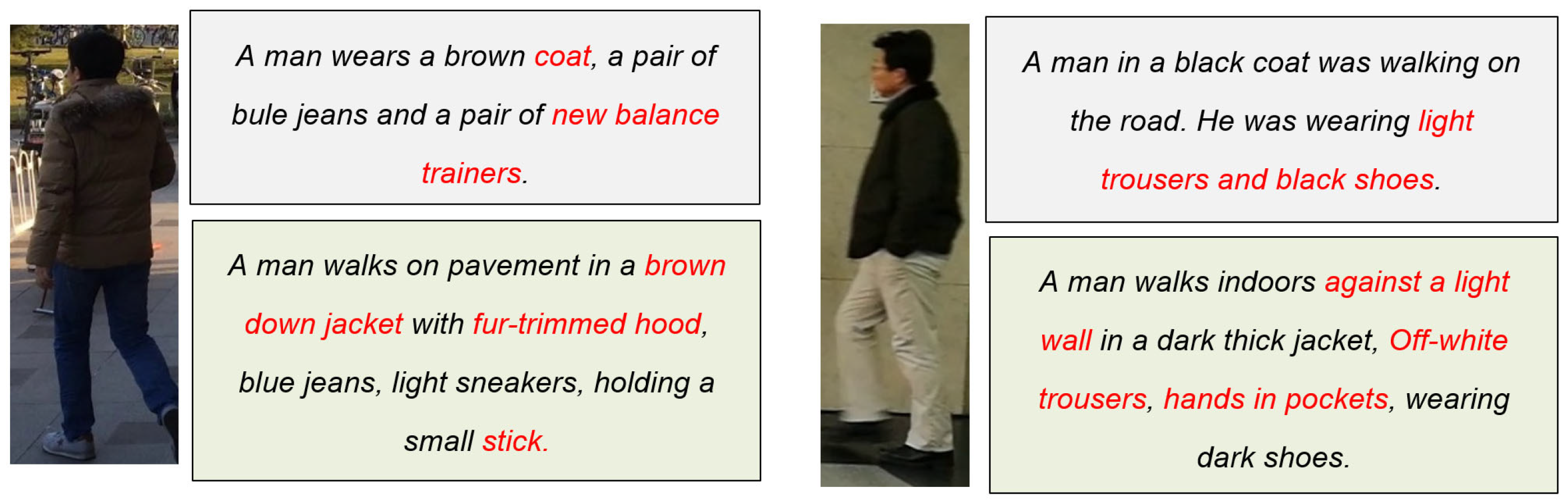

2.2. Learning with Noisy Data

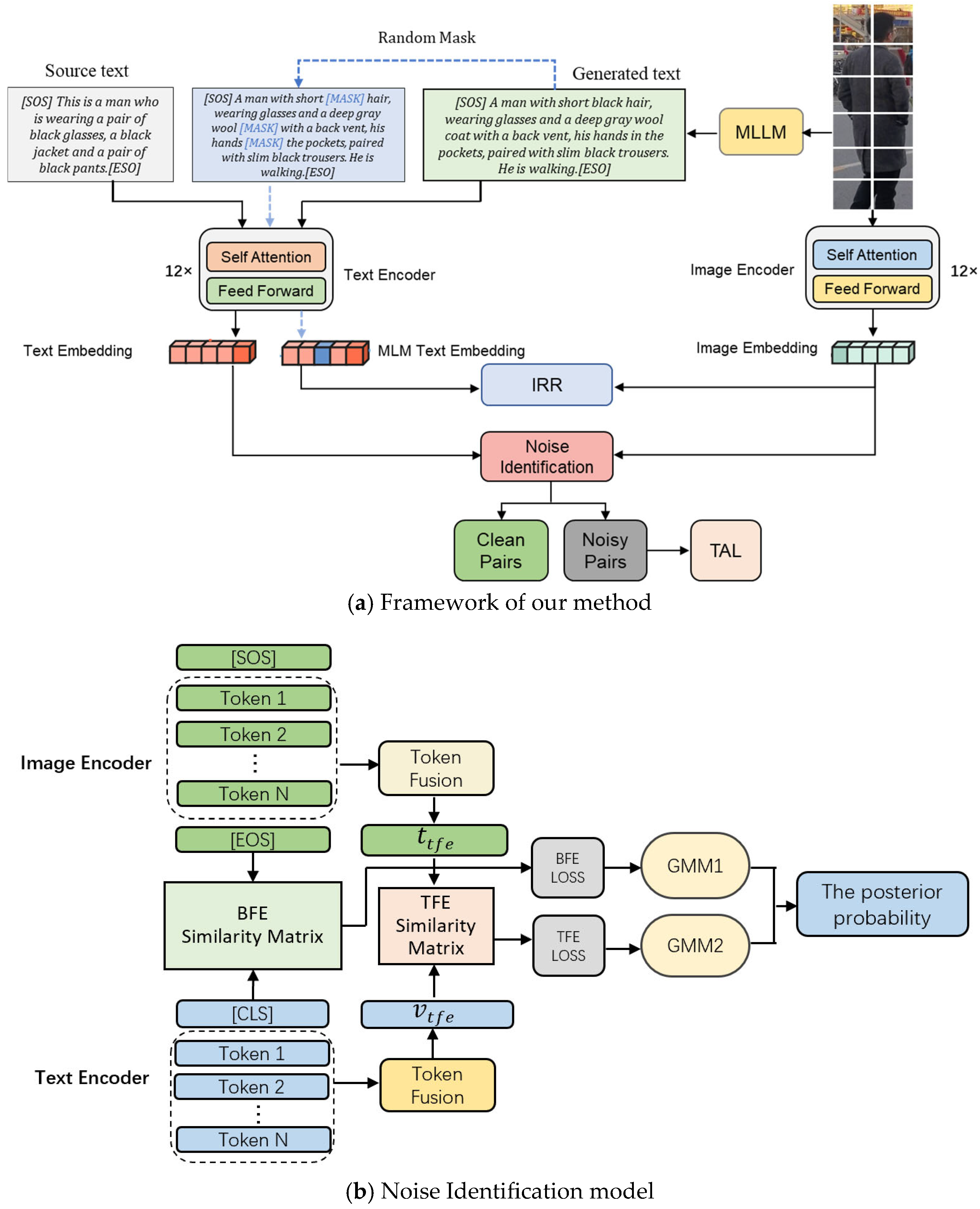

3. Method

3.1. Feature Representations

3.2. Noise Identification

3.3. Pseudo-Text Augmentation

3.4. Loss Function

4. Experiments

4.1. Datasets

4.2. Evaluation Protocols

4.3. Experimental Details

4.4. Comparison with State-of-the-Art Methods

4.4.1. Comparison of ICFG-PEDES

4.4.2. Comparison of RSTPReid

4.4.3. Comparison of CUHK-PEDES

4.5. Ablation Study

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Ding, C.; Tao, D. Trunk-Branch Ensemble Convolutional Neural Networks for Video-based Face Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 1002–1014.

- Li, Y.; Fan, H.; Hu, R.; Feichtenhofer, C.; He, K. Scaling language-image pre-training via masking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023; pp. 23390–23400.

- Miech, A.; Alayrac, J.-B.; Laptev, I.; Sivic, J.; Zisserman, A. Thinking fast and slow: Efficient text-to-visual retrieval with transformers. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 9826–9836.

- Niu, K.; Huang, Y.; Ouyang, W.; Wang, L. Improving description-based person re-identification by multi-granularity image-text alignments. IEEE Trans. Image Process. 2020, 29, 5542–5556.

- Chen, T.; Xu, C.; Luo, J. Improving Text-Based Person Search by Spatial Matching and Adaptive Threshold. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018; pp. 1879–1887.

- Lu, J.; Batra, D.; Parikh, D.; Lee, S. ViLBERT: Pretraining Task-Agnostic Visiolinguistic Representations for Vision-and-Language Tasks. Adv. Neural Inf. Process. Syst. 2019, 32, 13–23.

- Wang, G.; Yu, F.; Li, J.; Jia, Q.; Ding, S. Exploiting the textual potential from vision-language pre-training for text-based person search. arXiv 2023, arXiv:2303.04497.

- Jiang, D.; Ye, M. Cross-modal implicit relation reasoning and aligning for text-to-image person retrieval. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023; pp. 2787–2797.

- Li, J.; Selvaraju, R.; Gotmare, A.; Joty, S.; Xiong, C.; Hoi, S.C.H. Align before fuse: Vision and language representation learning with momentum distillation. Adv. Neural Inf. Process. Syst. 2021, 34, 9694–9705.

- Wu, Q.; Teney, D.; Wang, P.; Shen, C.; Dick, A.; Hengel, A.v.D. Visual question answering: A survey of methods and datasets. Comput. Vis. Image Underst. 2017, 163, 21–40.

- Tan, W.; Ding, C.; Jiang, J.; Wang, F.; Zhan, Y.; Tao, D. Harnessing the power of MLLMs for transferable text-to-image person ReID. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 17127–17137.

- Wu, J.; Gan, W.; Chen, Z.; Wan, S.; Yu, P.S. Multimodal large language models: A survey. In Proceedings of the 2023 IEEE International Conference on Big Data (BigData), Sorrento, Italy, 15–18 December 2023; pp. 2247–2256.

- Zhu, D.; Chen, J.; Shen, X.; Li, X.; Elhoseiny, M. MiniGPT-4: Enhancing vision-language understanding with advanced large language models. arXiv 2023, arXiv:2304.10592.

- Radford, A.; Kim, J.W.; Hallacy, C. Learning transferable visual models from natural language supervision. In Proceedings of the 38th International Conference on Machine Learning (ICML), Vienna, Austria, 18–24 July 2021; pp. 8748–8763.

- Bai, J.; Bai, S.; Yang, S.; Wang, S.; Tan, S.; Wang, P.; Lin, J.; Zhou, C.; Zhou, J. Qwen-VL: A frontier large vision-language model with versatile abilities. arXiv 2023, arXiv:2308.12966.

- Zhang, Y.; Lu, H. Deep cross-modal projection learning for image-text matching. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 686–701.

- Zheng, Z.; Zheng, L.; Garrett, M.; Yang, Y.; Xu, M.; Shen, Y.-D. Dual-path convolutional image-text embeddings with instance loss. ACM Trans. Multimedia Comput. Commun. Appl. 2020, 16, 1–23.

- Gao, C.; Cai, G.; Jiang, X.; Zheng, F.; Zhang, J.; Gong, Y.; Sun, X. Contextual non-local alignment over full-scale representation for text-based person search. arXiv 2021, arXiv:2101.03036.

- Shu, X.; Wen, W.; Wu, H.; Chen, K.; Song, Y.; Qiao, R.; Wang, X. See finer, see more: Implicit modality alignment for text-based person retrieval. In Proceedings of the European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022; Springer: Cham, Switzerland, 2022; pp. 624–641.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Houlsby, N. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Graves, A. Long short-term memory. In Supervised Sequence Labelling with Recurrent Neural Networks; Springer Nature: Durham, NC, USA, 2012; pp. 37–45.

- Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; Volume 1, pp. 4171–4186.

- Hossain, M.Z.; Sohel, F.; Shiratuddin, M.F.; Laga, H. A comprehensive survey of deep learning for image captioning. ACM Comput. Surv. (CSUR) 2019, 51, 1–36.

- Cao, M.; Li, S.; Li, J.; Nie, L.; Zhang, M. Image-text retrieval: A survey on recent research and development. arXiv 2022, arXiv:2203.14713.

- Han, X.; He, S.; Zhang, L.; Xiang, T. Text-based person search with limited data. arXiv 2021, arXiv:2110.10807.

- Yan, S.; Dong, N.; Zhang, L.; Tang, J. CLIP-driven fine-grained text-image person re-identification. IEEE Trans. Image Process. 2023, 32, 6032–6046.

- Bai, Y.; Cao, M.; Gao, D.; Cao, Z.; Chen, C.; Fan, Z.; Zhang, M. RaSa: Relation and sensitivity aware representation learning for text-based person search. arXiv 2023, arXiv:2305.13653.

- Han, H.; Miao, K.; Zheng, Q.; Luo, M. Noisy correspondence learning with meta similarity correction. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023; pp. 7517–7526.

- Huang, Z.; Niu, G.; Liu, X.; Ding, W.; Xiao, X.; Wu, H.; Peng, X. Learning with noisy correspondence for cross-modal matching. Adv. Neural Inf. Process. Syst. 2021, 34, 29406–29419.

- Shao, Z.; Zhang, X.; Ding, C.; Wang, J.; Wang, J. Unified pre-training with pseudo texts for text-to-image person re-identification. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–3 October 2023; pp. 11174–11184.

- Arpit, D.; Jastrzębski, S.; Ballas, N.; Krueger, D.; Bengio, E.; Kanwal, M.S.; Lacoste-Julien, S. A closer look at memorization in deep networks. In Proceedings of the 34th International Conference on Machine Learning (ICML), Sydney, Australia, 11 August 2017; pp. 233–242.

- Yang, M.; Huang, Z.; Hu, P.; Li, T.; Lv, J.; Peng, X. Learning with twin noisy labels for visible-infrared person re-identification. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 14308–14317.

- Hu, P.; Huang, Z.; Peng, D.; Wang, X.; Peng, X. Cross-modal retrieval with partially mismatched pairs. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 9595–9610.

- Yan, S.; Dong, N.; Liu, J.; Zhang, L.; Tang, J. Learning comprehensive representations with richer self for text-to-image person re-identification. In Proceedings of the 31st ACM International Conference on Multimedia, Ottawa, ON, Canada, 29 October–3 November 2023; pp. 6202–6211.

- Qin, Y.; Peng, D.; Peng, X.; Wang, X.; Hu, P. Deep evidential learning with noisy correspondence for cross-modal retrieval. In Proceedings of the 30th ACM International Conference on Multimedia, Lisbon, Portugal, 10–14 October 2022; pp. 4948–4956.

- Qin, Y.; Chen, Y.; Peng, D.; Peng, X.; Zhou, J.T.; Hu, P. Noisy-correspondence learning for text-to-image person re-identification. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–21 June 2024; pp. 27197–27206.

- Ding, Z.; Ding, C.; Shao, Z.; Tao, D. Semantically self-aligned network for text-to-image part-aware person re-identification. arXiv 2021, arXiv:2107.12666.

- Han, B.; Yao, Q.; Yu, X.; Niu, G.; Xu, M.; Hu, W.; Sugiyama, M. Co-teaching: Robust training of deep neural networks with extremely noisy labels. Adv. Neural Inf. Process. Syst. 2018, 31, 8527–8537.

- Li, J.; Li, D.; Savarese, S.; Hoi, S. BLIP-2: Bootstrapping language-image pre-training with frozen image encoders and large language models. In Proceedings of the 40th International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2023; pp. 19730–19742.

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Amodei, D. Language models are few-shot learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901.

- van den Oord, A.; Li, Y.; Vinyals, O. Representation learning with contrastive predictive coding. arXiv 2018, arXiv:1807.03748.

- Faghri, F.; Fleet, D.J.; Kiros, J.R.; Fidler, S. VSE++: Improving visual-semantic embeddings with hard negatives. arXiv 2017, arXiv:1707.05612.

- Zhu, A.; Wang, Z.; Li, Y.; Wan, X.; Jin, J.; Wang, T.; Hua, G. DSSL: Deep surroundings-person separation learning for text-based person retrieval. In Proceedings of the 29th ACM International Conference on Multimedia, Chengdu, China, 20–24 October 2021; pp. 209–217.

- Li, S.; Xiao, T.; Li, H.; Zhou, B.; Yue, D.; Wang, X. Person search with natural language description. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1970–1979.

- Ye, M.; Shen, J.; Lin, G.; Xiang, T.; Shao, L.; Hoi, S.C. Deep learning for person re-identification: A survey and outlook. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 2872–2893.

- Yan, S.; Tang, H.; Zhang, L.; Tang, J. Image-specific information suppression and implicit local alignment for text-based person search. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 17973–17986.

- Shao, Z.; Zhang, X.; Fang, M.; Lin, Z.; Wang, J.; Ding, C. Learning granularity-unified representations for text-to-image person re-identification. In Proceedings of the 30th ACM International Conference on Multimedia, Lisbon, Portugal, 10–14 October 2022; pp. 5566–5574.

- Fujii, T.; Tarashima, S. BiLMa: Bidirectional local-matching for text-based person re-identification. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–3 October 2023; pp. 2786–2790.

- Cao, M.; Bai, Y.; Zeng, Z.; Ye, M.; Zhang, M. An empirical study of CLIP for text-based person search. In Proceedings of the 38th AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; pp. 465–473.

- Wang, Z.; Fang, Z.; Wang, J.; Yang, Y. ViTAA: Visual-textual attributes alignment in person search by natural language. In Proceedings of the 16th European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020; Springer: Cham, Switzerland, 2020; pp. 402–420.

- Ji, Z.; Hu, J.; Liu, D.; Wu, L.Y.; Zhao, Y. Asymmetric cross-scale alignment for text-based person search. IEEE Trans. Multimed. 2022, 25, 7699–7709.

- Li, S.; Cao, M.; Zhang, M. Learning semantic-aligned feature representation for text-based person search. In Proceedings of the ICASSP 2022-2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 22–27 May 2022; pp. 2724–2728.

- Zhao, Z.; Liu, B.; Lu, Y.; Chu, Q.; Yu, N. Unifying multi-modal uncertainty modeling and semantic alignment for text-to-image person re-identification. In Proceedings of the 38th AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; pp. 7534–7542.

| Dataset | Identities | Images | Texts | Train Split | Val Split | Test Split |
|---|---|---|---|---|---|---|
| CUHK-PEDES | 13,003 | 40,206 | 80,412 | 11,003 IDs, 34,054 images, 68,108 texts | - | 2000 IDs/ images |
| ICFG-PEDES | 4102 | 54,522 | 54,522 | 3102 IDs, 34,674 pairs | - | 1000 IDs, 19,848 pairs |
| RSTPReid | 4101 | 20,505 | 41,010 | 3701 IDs | 200 IDs | 200 IDs |

| Methods (ICFG-PEDES) | R-1 | R-5 | R-10 | mAP | mINP |
|---|---|---|---|---|---|
| DSSL [44] | 39.05 | 62.60 | 73.95 | - | - |
| SSAN [38] | 43.50 | 67.80 | 77.15 | - | - |
| IVT [19] | 46.70 | 70.00 | 78.80 | - | - |
| ISANet [47] | 57.73 | 75.42 | 81.72 | - | - |
| LGUR [48] | 57.42 | 74.97 | 81.45 | - | - |
| BILMA [49] | 63.83 | 80.15 | 85.74 | 38.26 | - |
| IRRA [8] | 63.46 | 80.25 | 85.82 | 38.06 | 7.93 |
| TBPS-CLIP [50] | 65.05 | 80.34 | 85.47 | 39.83 | - |
| RaSa [28] | 65.28 | 80.40 | 85.12 | 41.29 | 9.97 |
| RDE [37] | 67.54 | 82.31 | 87.12 | 40.11 | 8.05 |
| Ours | 68.13 | 83.39 | 89.02 | 41.25 | 9.08 |

| Methods (RSTPReid) | R-1 | R-5 | R-10 | mAP | mINP |
|---|---|---|---|---|---|
| ViTAA [51] | 50.98 | 68.79 | 75.78 | - | - |
| SSAN [38] | 54.23 | 72.63 | 79.53 | - | - |
| IVT [19] | 56.04 | 73.60 | 80.22 | - | - |
| ACSA [52] | 48.40 | 71.85 | 81.45 | - | - |
| LGUR [48] | 57.42 | 74.97 | 81.45 | - | - |
| BILMA [49] | 61.20 | 81.50 | 88.80 | 48.51 | - |
| TP-TPS [7] | 50.65 | 72.45 | 81.20 | 43.11 | - |
| IRRA [8] | 60.20 | 81.30 | 88.20 | 47.17 | 25.82 |
| TBPS-CLIP [50] | 61.95 | 83.55 | 88.75 | 48.26 | - |
| RaSa [28] | 65.90 | 86.50 | 91.35 | 52.31 | 29.23 |
| RDE [37] | 65.42 | 84.92 | 90.01 | 50.93 | 29.02 |
| Ours | 66.31 | 86.87 | 92.01 | 52.41 | 29.01 |

| Methods (CUHK-PEDES) | R-1 | R-5 | R-10 | mAP | mINP |
|---|---|---|---|---|---|
| DSSL [44] | 59.89 | 80.41 | 87.56 | - | - |
| SSAN [38] | 61.37 | 80.15 | 86.73 | - | - |
| IVT [19] | 65.59 | 83.11 | 89.21 | - | - |
| ACSA [52] | 63.56 | 81.40 | 87.70 | - | - |
| SAF [53] | 64.13 | 82.62 | 88.40 | 58.61 | - |
| TP-TPS [7] | 70.16 | 86.10 | 90.98 | 66.32 | - |
| IRRA [8] | 73.38 | 89.93 | 93.71 | 66.13 | 50.24 |
| UUMSA [54] | 74.25 | 89.93 | 93.58 | 66.15 | - |
| TBPS-CLIP [50] | 73.54 | 88.19 | 92.35 | 65.38 | - |
| RaSa [28] | 76.51 | 90.29 | 94.25 | 67.49 | 51.11 |
| RDE [37] | 75.94 | 90.21 | 94.12 | 67.56 | 51.44 |
| Ours | 75.98 | 90.34 | 94.32 | 67.49 | 51.37 |

| Methods (ICFG-PEDES) | R-1 | R-5 | R-10 | mAP | mINP |
|---|---|---|---|---|---|
| Baseline | 66.12 | 81.76 | 88.01 | 40.12 | 7.94 |
| +IRR | 67.76 | 82.14 | 88.14 | 40.95 | 8.32 |
| +MLLM | 67.88 | 82.16 | 88.71 | 40.84 | 8.49 |
| Ours | 68.13 | 83.39 | 89.02 | 41.25 | 9.08 |

| Methods (RSTPReid) | R-1 | R-5 | R-10 | mAP | mINP |
|---|---|---|---|---|---|
| Baseline | 64.05 | 84.68 | 88.89 | 50.05 | 27.84 |
| +IRR | 65.92 | 85.19 | 88.56 | 51.43 | 28.61 |
| +MLLM | 65.97 | 85.34 | 88.62 | 50.92 | 28.46 |
| Ours | 66.31 | 86.87 | 92.01 | 52.41 | 29.01 |

| Methods (CUHK-PEDES) | R-1 | R-5 | R-10 | mAP | mINP |
|---|---|---|---|---|---|
| Baseline | 73.56 | 88.19 | 92.08 | 65.67 | 50.26 |
| +IRR | 74.67 | 89.54 | 93.65 | 67.02 | 50.83 |
| +MLLM | 75.19 | 90.11 | 94.36 | 67.21 | 50.92 |
| Ours | 75.98 | 90.34 | 94.32 | 67.49 | 51.37 |
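For readers unfamiliar with the R-1, R-5, R-10, mAP, and mINP columns reported in the result tables, the sketch below shows how these retrieval metrics are commonly computed from ranked gallery lists. It is illustrative only and assumes each text query yields a full gallery ranking, encoded as a list of booleans marking which ranked images share the query identity. The function names and the toy rankings are hypothetical, and the paper's own evaluation code may differ in detail.

```python
def rank_k_hit(matches, k):
    """1.0 if any of the top-k ranked gallery images is a correct match."""
    return 1.0 if any(matches[:k]) else 0.0


def average_precision(matches):
    """Mean of the precision values taken at each correct match position."""
    hits, precision_sum = 0, 0.0
    for position, is_match in enumerate(matches, start=1):
        if is_match:
            hits += 1
            precision_sum += hits / position
    return precision_sum / hits if hits else 0.0


def inverse_negative_penalty(matches):
    """Number of correct matches divided by the rank of the hardest (last) one."""
    positions = [p for p, is_match in enumerate(matches, start=1) if is_match]
    return len(positions) / positions[-1] if positions else 0.0


def evaluate(all_matches, ks=(1, 5, 10)):
    """Average the per-query metrics and report them as percentages."""
    n = len(all_matches)
    report = {f"R-{k}": 100.0 * sum(rank_k_hit(m, k) for m in all_matches) / n for k in ks}
    report["mAP"] = 100.0 * sum(average_precision(m) for m in all_matches) / n
    report["mINP"] = 100.0 * sum(inverse_negative_penalty(m) for m in all_matches) / n
    return report


# Two toy queries (hypothetical): True marks a gallery image of the queried identity.
toy_rankings = [
    [True, False, True, False],
    [False, True, False, False],
]
report = evaluate(toy_rankings)
for name, value in report.items():
    print(f"{name}: {value:.2f}")
```

Rank-k only rewards the first correct hit, mAP accounts for the positions of all correct matches, and mINP penalizes queries whose hardest correct match is ranked far down the list, which is why a method can lead on R-1 while trailing on mINP.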

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yu, L.; Xiong, L.; Li, W.; Feng, Y. Towards Robust Text-Based Person Retrieval: A Framework for Correspondence Rectification and Description Synthesis. Electronics 2025, 14, 4619. https://doi.org/10.3390/electronics14234619
