2. Research Methodology

The research methodology employs a detailed review strategy to minimize bias and produce reliable findings. In addition to identifying research gaps, challenges, and obstacles worth exploring in weapon detection, this review aims to contribute a comprehensive analysis of the latest literature. The survey examines studies with relevant results and relates them to the field of weapon detection.

Table 1 provides a comparative summary of critical research studies focusing on the weapon detection literature, including topics of interest, the time period covered, and the methods employed. Warsi et al. [6] studied knife and handgun detection algorithms between 2013 and 2018. Debnath et al. [7] provide a synthesis of computer vision-based methodologies for automatic gun and knife detection from 1962 to 2020. Yadav et al. [5] evaluate the performance of classical machine learning and deep learning models for weapon identification, published between 2011 and 2022. Santos et al. [3] also provide a systematic review of deep learning-based weapon detection research, examining models, datasets, and barriers in the literature from 2014 to 2022. In summary, the table illustrates the progression of weapon detection research over time, including the increasing use of deep learning methods and systematic reviews.

This study distinguishes itself from the existing literature summarized in Table 1 by its exhaustive, systematic, and data-driven assessment of AI-based weapon detection, conducted in accordance with the PRISMA framework, which supports methodological transparency and rigor. In contrast to previous studies, which were often primarily narrative or focused on a particular model or dataset, our paper covers a broader temporal range, from 2016 to 2025, and synthesizes the findings of 101 research studies on weapon detection. It also presents a quantitative assessment of popular model evaluation metrics: precision, recall, and mean average precision (mAP). Moreover, our study goes beyond algorithm comparisons: it discusses evaluation inconsistencies, dataset considerations, and ethical concerns. These findings underscore the importance of standardized benchmarks and privacy-friendly approaches. This multidimensional approach provides technical, methodological, and ethical perspectives; hence, the review offers an up-to-date, systematic, and practical contribution compared to other studies, serving as a reference point for the development of ethical, reliable, and efficient AI-based surveillance.

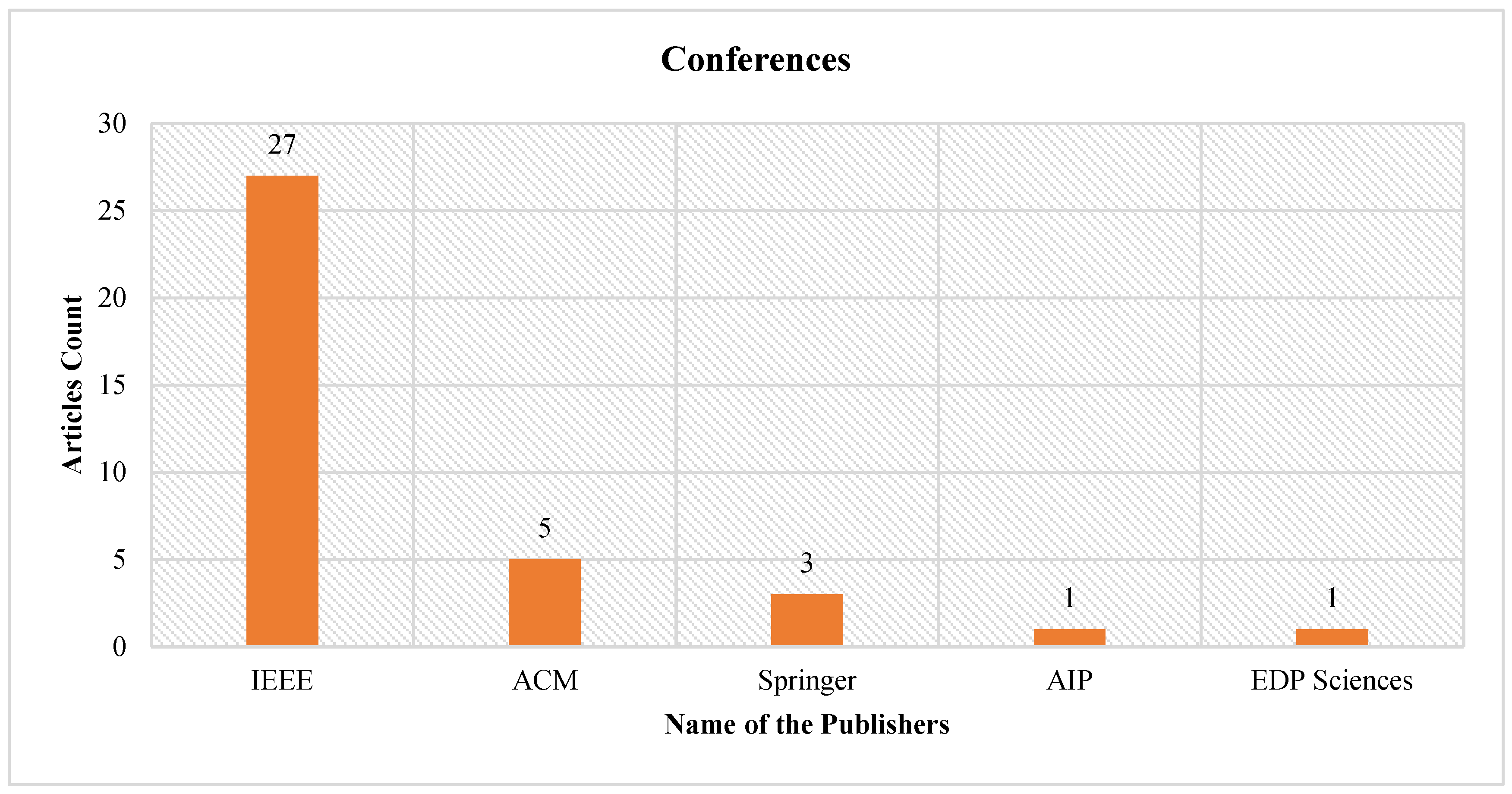

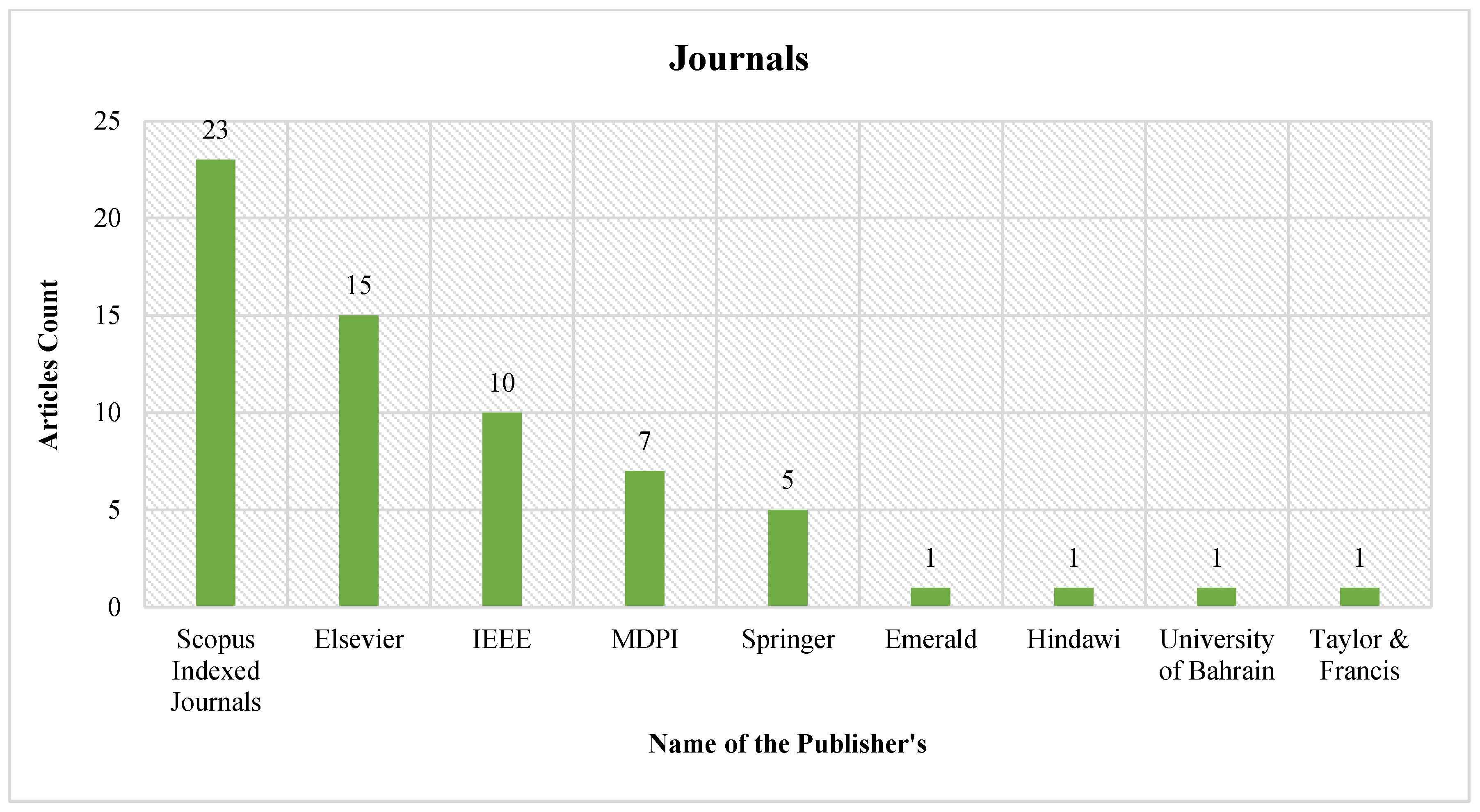

The articles were selected based on their focus on weapon detection and the use of artificial intelligence, machine learning, and deep learning. The sources used as our search databases are shown in Figure 1 and Figure 2, which list conferences and journals, respectively. These databases include verified conferences and articles.

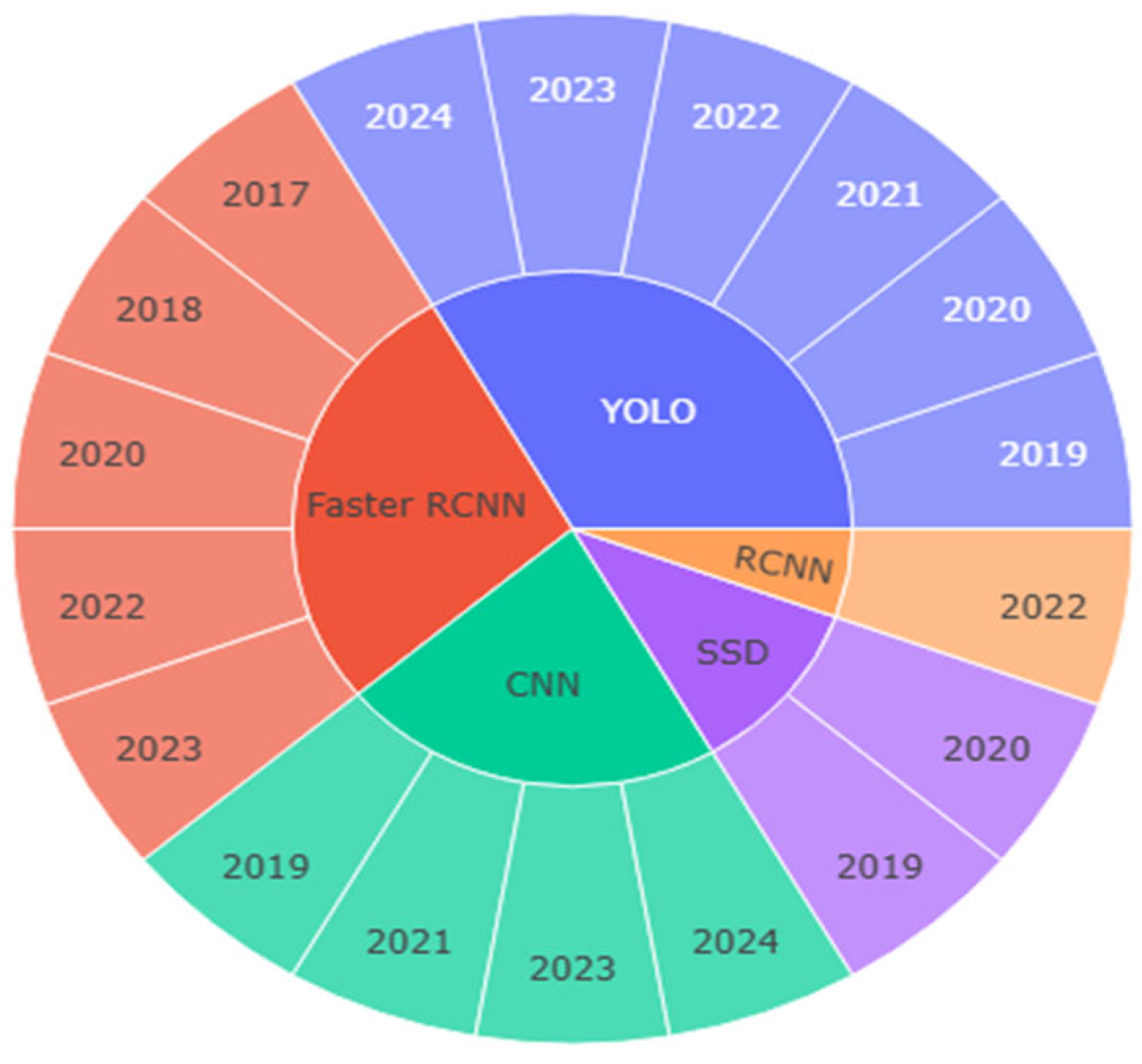

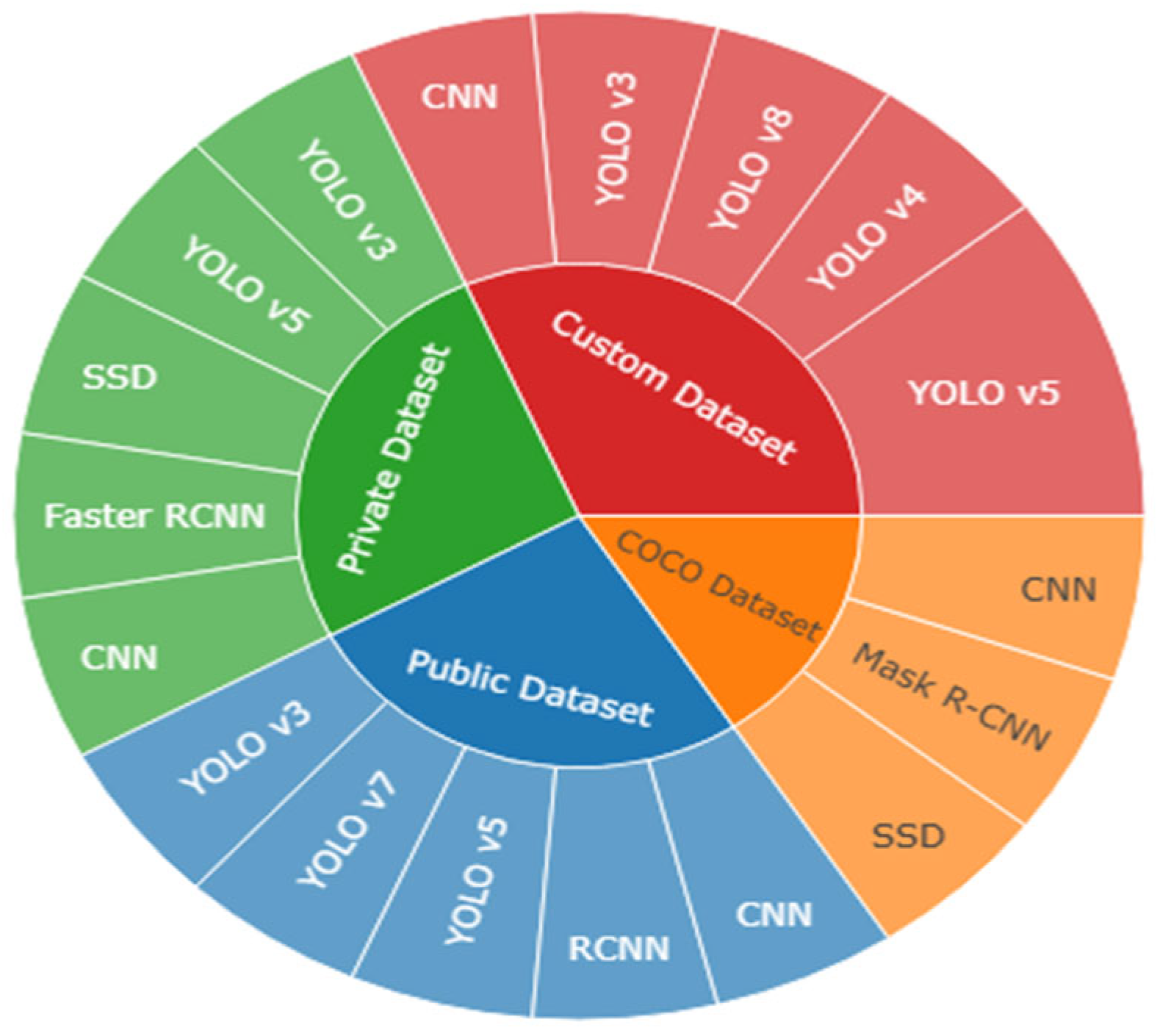

The search selection parameters show how we focused on the relevant content returned by search engines. Our goal was to find primary publications on our subject that address our research questions. A total of 101 studies were selected from various databases. The study analysis (Figure 3) included articles published from 2016 to 2025. Duplicate papers and papers unrelated to the research requirements were eliminated. For this process, research studies were selected from seven electronic databases. The number of publications chosen from each database is shown in Figure 1 and Figure 2. The papers were categorized into two groups: conference papers and journal articles. Among conference papers, IEEE Xplore leads with 73% (27 papers), followed by ACM and Springer, with 14% (5 papers) and 8% (3 papers), respectively. Among journal articles, journals indexed by Scopus account for the largest share at 36% (23 papers), followed by ScienceDirect (23%; 15 papers), IEEE (16%; 10 papers), and MDPI (11%; 7 papers).
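The reported shares can be cross-checked with a few lines of arithmetic. The sketch below is illustrative only: the per-source counts come from the text, while the group totals (37 conference papers and 64 journal articles) are an assumption inferred from the 101 selected studies and the 37%/63% split reported for the two categories.

```python
# Paper counts per source as stated in the text.
conference_counts = {"IEEE Xplore": 27, "ACM": 5, "Springer": 3}
journal_counts = {"Scopus": 23, "ScienceDirect": 15, "IEEE": 10, "MDPI": 7}

# Assumption: group totals inferred from 101 studies split 37% / 63%.
# Sources not itemized in the text make up the remainder of each group.
CONFERENCE_TOTAL = 37
JOURNAL_TOTAL = 64


def shares(counts, total):
    """Return each source's share of `total` as a whole-number percentage."""
    return {name: round(100 * n / total) for name, n in counts.items()}


conference_shares = shares(conference_counts, CONFERENCE_TOTAL)
journal_shares = shares(journal_counts, JOURNAL_TOTAL)
print(conference_shares)
print(journal_shares)
```

Under these assumed totals, the rounded shares agree with the percentages quoted above.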

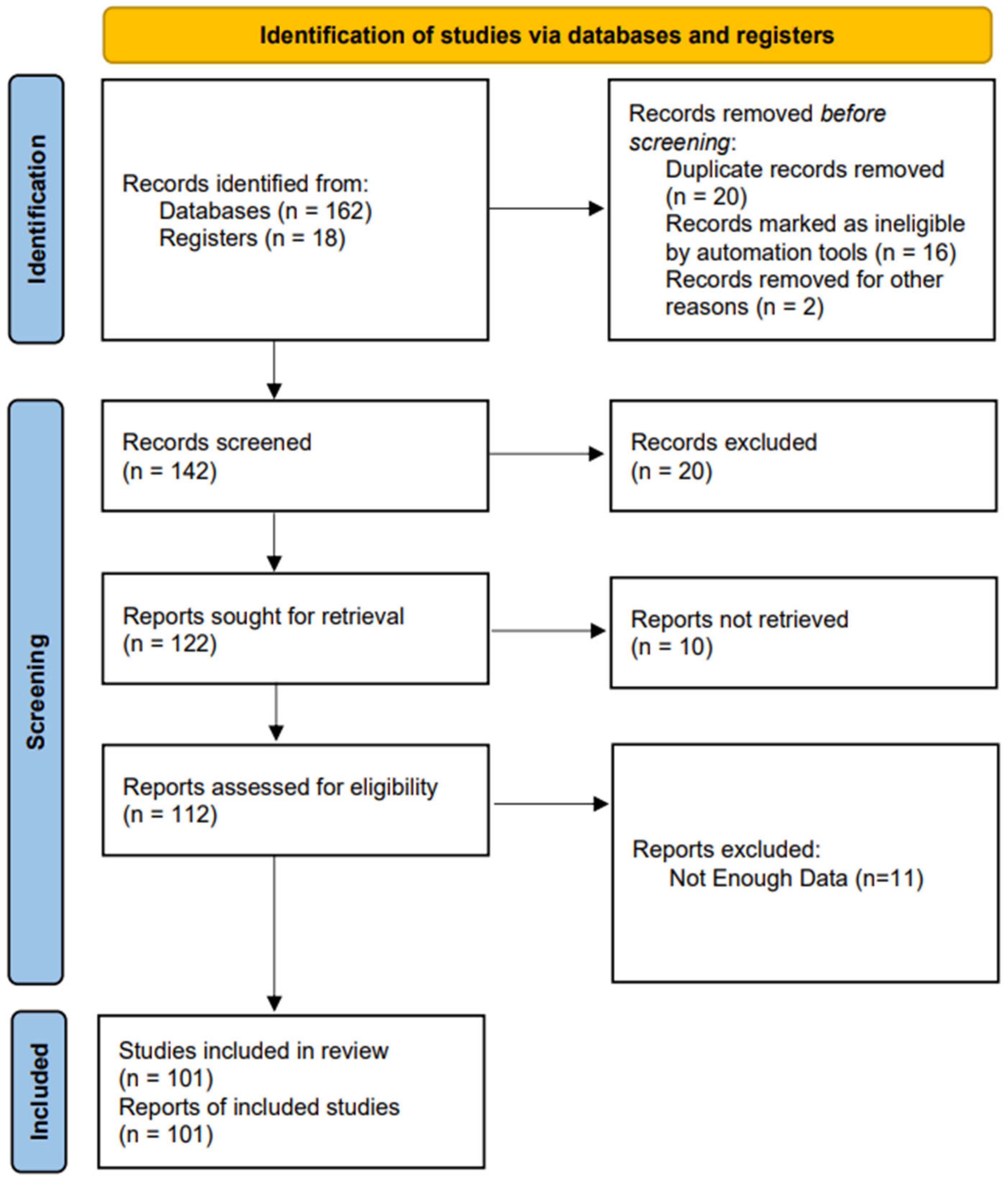

In developing this study, we followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines to help ensure the quality and repeatability of our review process. PRISMA (see Figure 3) provides a standard checklist for a methodical, transparent review process, which we closely followed in this work. We developed a review protocol that specified the criteria for selecting articles, the search protocol, data extraction, and the data analysis process. The inclusion and exclusion criteria are detailed in Table 2.

To ensure transparency and replicability, we provide a detailed account of our literature search strategy. Studies were retrieved from Scopus, IEEE Xplore, SpringerLink, ScienceDirect, MDPI, and Wiley Online Library using predetermined search strings that combined the following keywords: “weapon detection”, “firearm recognition”, “knife detection”, and “surveillance”. Searches covered the period 2016–2025, with the last search performed on 30 September 2025. Only English-language publications were considered. A two-phase screening process (titles and abstracts, followed by full-text review) was conducted by multiple reviewers. Disagreements about inclusion decisions were resolved through discussion and consensus. The PRISMA flowchart (Figure 3) and its counts reflect the process as reported.

All records were screened in two stages: a title and abstract review, followed by a full-text review, applying the predetermined inclusion and exclusion criteria to assess each record’s eligibility. Conflicts were resolved through discussion and consensus to maintain objectivity. To keep data collection consistent, a systematic data extraction protocol was developed. The protocol specified the characteristics to document, including the year range, dataset type, AI/ML/DL model employed, evaluation metrics (e.g., precision, recall, mAP), and key results. It also called for standardized forms to keep extraction uniform; the authors independently verified the quality and reliability of the extracted data.
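A standardized extraction form of this kind can be pictured as a small record type. The following is a minimal sketch, not the form actually used in this review: the class name `ExtractionRecord`, its field names, and the helper `is_eligible` are hypothetical, and the helper encodes only two of the stated criteria (the 2016 to 2025 window and English language).

```python
from dataclasses import dataclass, field


@dataclass
class ExtractionRecord:
    """One row of a hypothetical standardized extraction form."""
    title: str
    year: int
    language: str
    dataset_type: str
    model: str
    metrics: dict = field(default_factory=dict)  # e.g. {"mAP": 70.72}
    key_results: str = ""


def is_eligible(record, first_year=2016, last_year=2025):
    """Check the publication window and the English-language criterion."""
    in_window = first_year <= record.year <= last_year
    return in_window and record.language.lower() == "english"


# Both entries are made up for illustration; they are not real studies.
example = ExtractionRecord(
    title="Illustrative entry", year=2019, language="English",
    dataset_type="CCTV images", model="YOLOv3", metrics={"precision": 0.9},
)
too_old = ExtractionRecord(
    title="Illustrative entry", year=2012, language="English",
    dataset_type="X-ray images", model="SVM",
)
print(is_eligible(example), is_eligible(too_old))
```

Keeping every study in the same structure makes it straightforward to tabulate metrics across papers and to spot fields that a paper leaves unreported.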

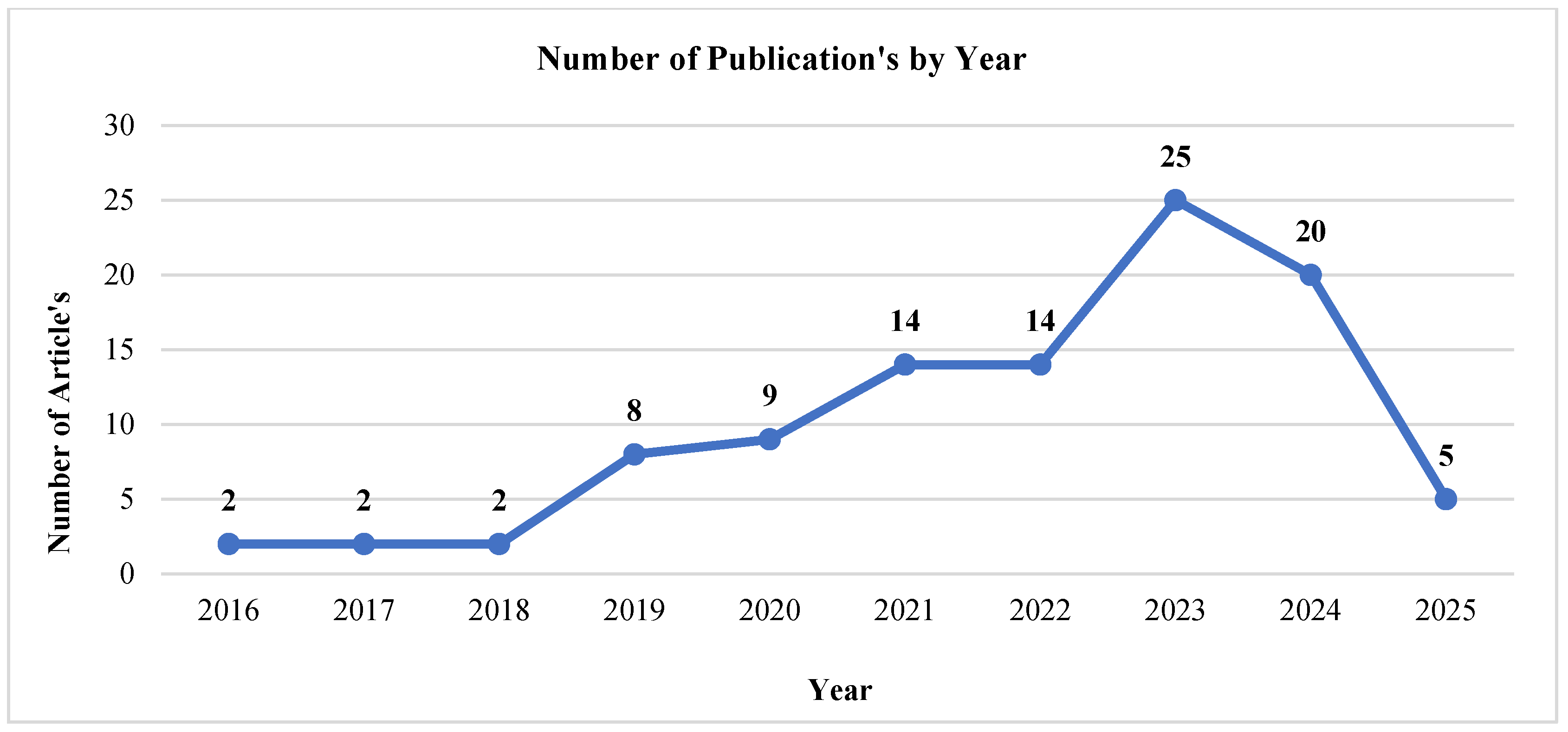

Extraction of Information: The papers were categorized into two groups: conference papers and journal articles. Each document was examined and classified by publication year. This yields a list of research studies (both journal and conference publications) along with supporting documentation for each research topic. The study covers research published from 2016 to 2025. Significant progress in identifying and categorizing weapons was made in 2023. The growth in research studies during this period is illustrated in Figure 4.

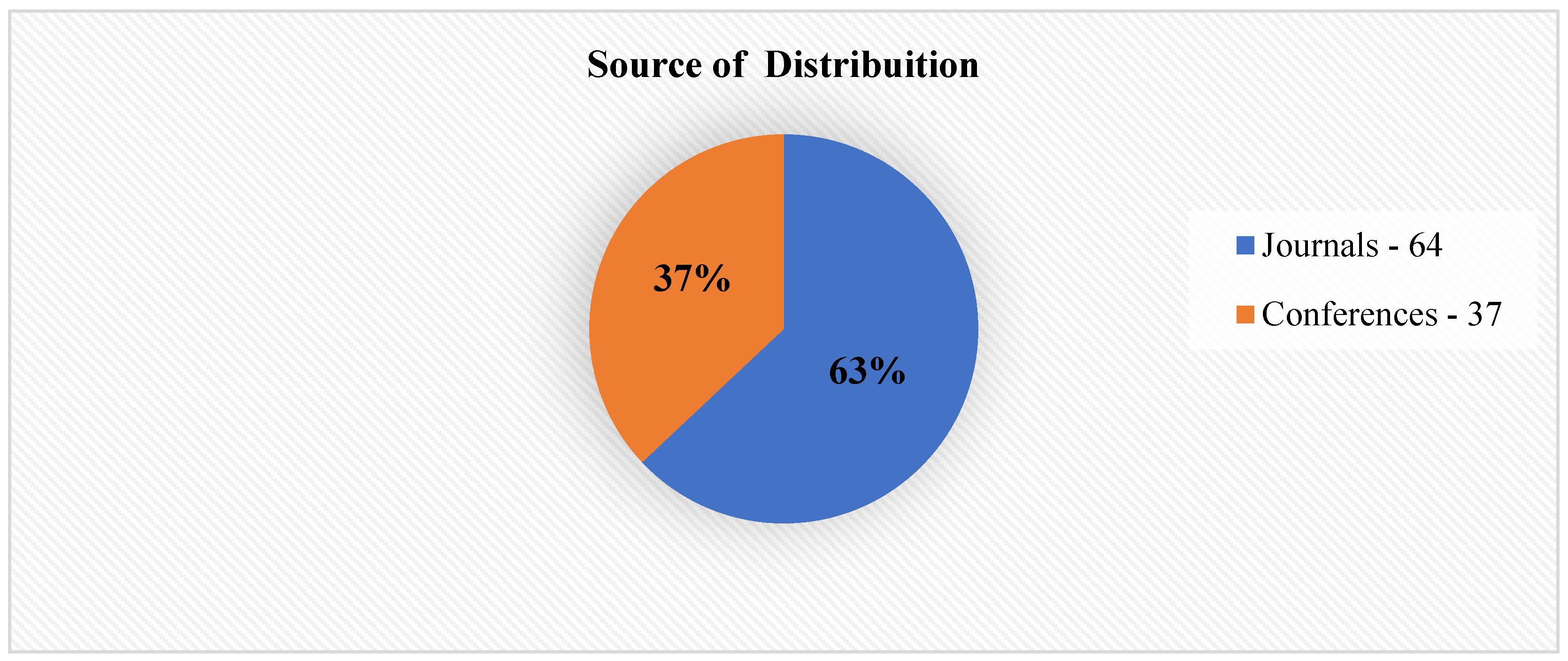

Distribution of Research Papers: An analysis of the shortlisted research articles reveals patterns in weapon detection research from 2016 to 2025. All shortlisted studies were divided into two categories: journals and conferences. Figure 5 shows the distribution of articles across these two categories: journal publications account for 63%, and conference papers account for 37%.

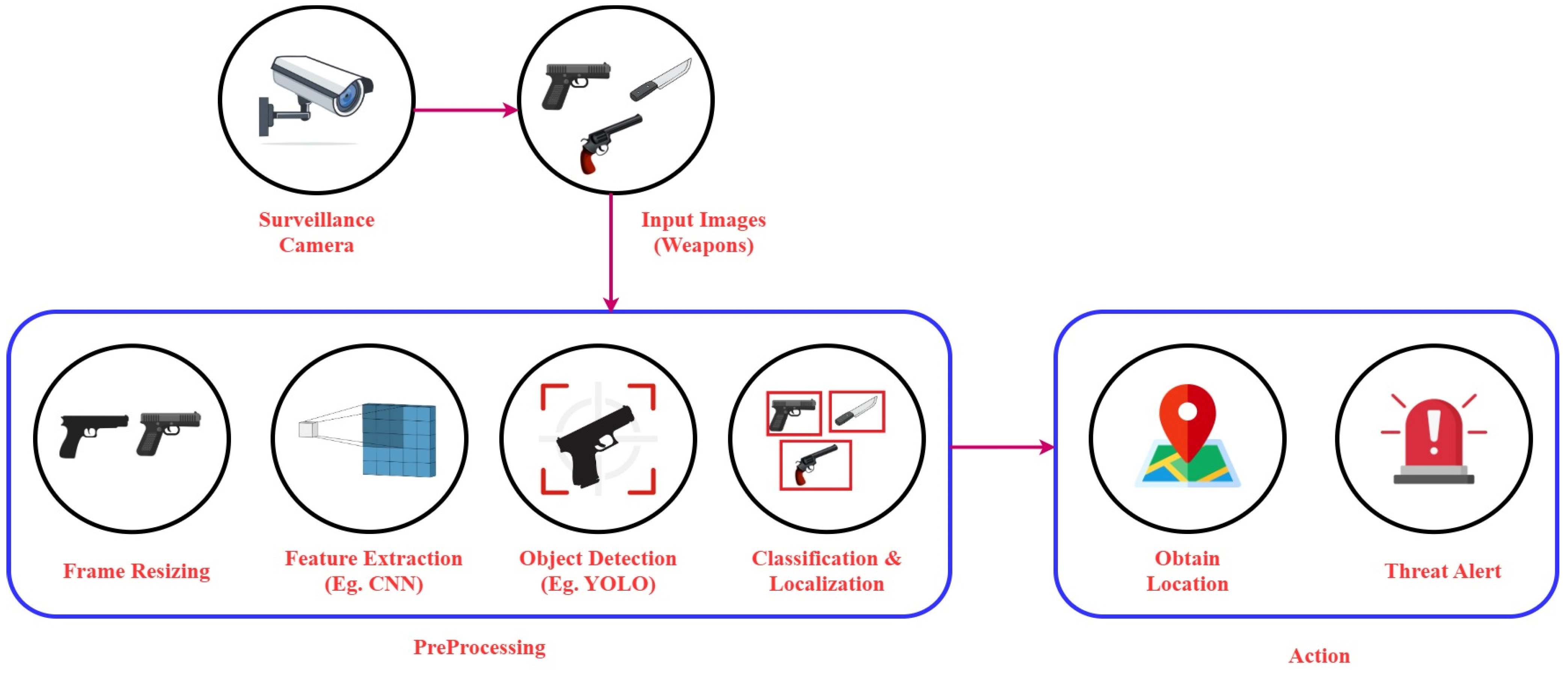

Figure 6 illustrates the workflow of an AI-based weapon-detection system that complements surveillance cameras to provide real-time information on potential threats. Surveillance cameras capture video footage and supply a continuous stream of frames as input, which may contain weapons (e.g., knives, guns). The frames undergo a preprocessing step that resizes them to usable dimensions, and convolutional neural networks (CNNs) suppress unnecessary visual features. The preprocessed frames are then analyzed by an object detection model (e.g., YOLO), which identifies and localizes any weapon in the image (i.e., bounding box and weapon class). Once a weapon is identified and localized, the system automatically generates a threat alert to notify security staff that the weapon requires immediate attention. The pipeline is an end-to-end AI-driven approach that combines computer vision, deep learning, and intelligent alerting to increase situational awareness and improve safety and security in surveillance settings.
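The control flow of such a pipeline (capture, preprocess, detect, alert) can be sketched in a few lines. This is a toy illustration under stated assumptions: frames are grayscale NumPy arrays, and `stub_detector` is a hypothetical placeholder that flags bright pixels; in a real system it would be replaced by a trained detector such as YOLO. All function names are illustrative.

```python
import numpy as np


def preprocess(frame, size=(64, 64)):
    """Resize a grayscale frame by nearest-neighbour sampling; scale to [0, 1]."""
    rows = np.linspace(0, frame.shape[0] - 1, size[0]).astype(int)
    cols = np.linspace(0, frame.shape[1] - 1, size[1]).astype(int)
    return frame[np.ix_(rows, cols)].astype(np.float32) / 255.0


def stub_detector(frame):
    """Placeholder for a trained detector (assumption, not a real model).

    Flags very bright pixels and returns a list of
    (label, confidence, (x1, y1, x2, y2)) tuples.
    """
    ys, xs = np.nonzero(frame > 0.9)
    if ys.size == 0:
        return []
    box = (int(xs.min()), int(ys.min()), int(xs.max()), int(ys.max()))
    return [("weapon", 0.95, box)]


def process_stream(frames, detector, threshold=0.5):
    """Run preprocess -> detect -> alert over an iterable of frames."""
    alerts = []
    for index, frame in enumerate(frames):
        for label, confidence, box in detector(preprocess(frame)):
            if confidence >= threshold:
                alerts.append({"frame": index, "label": label, "box": box})
    return alerts


empty = np.zeros((128, 128), dtype=np.uint8)
suspicious = empty.copy()
suspicious[40:60, 50:90] = 255  # bright patch standing in for a weapon
alerts = process_stream([empty, suspicious], stub_detector)
print(alerts)
```

Separating the detector from the loop means the alerting logic stays the same when the placeholder is swapped for a real model.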

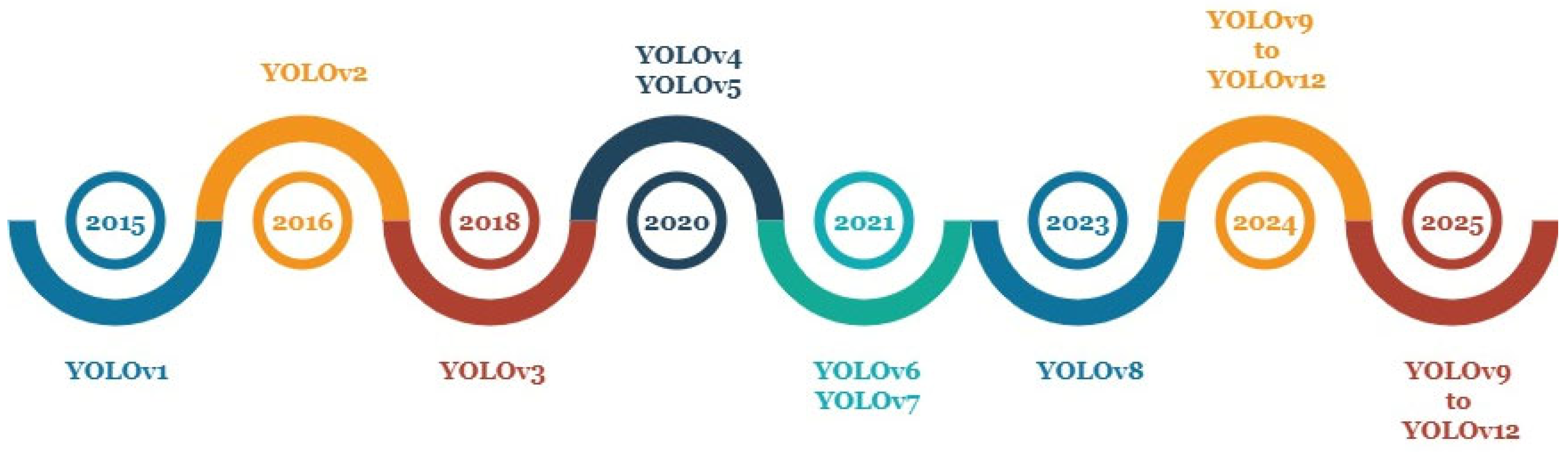

Figure 7 illustrates the chronological timeline of the YOLO (You Only Look Once) family of object detection algorithms, from the original YOLO in 2015 through developments up to 2025. The original implementation, YOLOv1, was introduced in 2015 and enabled real-time object detection with a single neural network. Development progressed rapidly to YOLOv2 in 2016, which improved accuracy and speed through architectural advances. In 2018, YOLOv3 improved multi-scale detection and feature extraction by using deeper convolutional layers. YOLOv4 and YOLOv5 were launched in 2020, using the CSPDarknet architecture and incorporating new training-time optimizations. These models offer faster performance and have been widely adopted in real-time computer vision tasks. YOLOv6 and YOLOv7 follow the FIR approach, which was released in 2021. These models utilize optimizations that improve both efficiency and accuracy, making them suitable for edge devices. In 2023, YOLOv8 introduced transformer-based applications and trained heads for the detection task. YOLOv9 through YOLOv12, which emerged between 2024 and 2025, represent the next generation of architectures, integrating transformer augmentation, improved feature fusion, and adaptive learning. In summary, the trajectory of the YOLO family reflects continuous innovation and refinement, ultimately leading to improvements in real-time object and weapon detection.

4. AI-Enhanced Smart City Surveillance—Weapon Detection: Gun

Legal authorities have faced increasing pressure to control and reduce crime rates in cities as urban populations have grown. Monitoring public use of weapons and having real-time data on criminal incidents are essential. Additionally, firearm-related crime rates are a significant concern globally. Although many countries permit firearm possession, it remains crucial to monitor their public use. Gun violence is one of the most critical public health emergencies today. Real-time gunshot location and identification are vital for military, security, and law enforcement operations. Recent advances in artificial intelligence have enabled the development of effective gunshot recognition systems. Deep learning, a branch of artificial intelligence that mimics the human brain’s function to perform tasks and recognize patterns, plays a key role. Video surveillance for real-time firearm detection in public spaces is among the most effective strategies for preventing and identifying criminal activity.

Figure 8 illustrates the process of detecting weapons using artificial intelligence techniques.

Hussein et al. [8] discussed the challenge of using image-processing techniques to detect concealed firearms in public places. The study detects hidden weapons, such as knives and guns, using real-time infrared (IR) and RGB images. The proposed workflow includes image acquisition, preprocessing, fusion, and edge detection, using the Discrete Wavelet Transform (DWT) for image fusion and the Otsu algorithm for threshold-based segmentation. In the experimental setup, images were resized to 500 × 500 pixels and processed with various image operations. The method achieved 99% detection accuracy, which the authors report as more precise than previous methods. However, the algorithm could not operate in real time and required further improvements; the authors suggest real-time implementation for automatic weapon detection as future work.
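To make the segmentation step concrete, the sketch below implements Otsu thresholding on an 8-bit grayscale image with NumPy. It is a generic illustration of the algorithm, not the code of Hussein et al.; the synthetic test image and the function name `otsu_threshold` are assumptions.

```python
import numpy as np


def otsu_threshold(image):
    """Return the 8-bit threshold that maximizes between-class variance."""
    hist = np.bincount(image.ravel(), minlength=256).astype(np.float64)
    prob = hist / hist.sum()
    levels = np.arange(256)

    weight_bg = np.cumsum(prob)          # share of pixels <= t
    weight_fg = 1.0 - weight_bg          # share of pixels > t
    cum_mean = np.cumsum(prob * levels)  # partial mean up to t
    total_mean = cum_mean[-1]

    # Between-class variance for every candidate threshold t.
    numerator = (total_mean * weight_bg - cum_mean) ** 2
    denominator = weight_bg * weight_fg
    variance = np.zeros(256)
    valid = denominator > 0              # skip thresholds with an empty class
    variance[valid] = numerator[valid] / denominator[valid]
    return int(np.argmax(variance))


# Synthetic image: dark background with a bright square (e.g. a warm object in IR).
image = np.full((100, 100), 50, dtype=np.uint8)
image[30:70, 30:70] = 200
threshold = otsu_threshold(image)
mask = image > threshold
print(threshold, int(mask.sum()))
```

Because the threshold is derived from the histogram alone, no training is needed, which is part of the appeal of this classical approach.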

Verma et al. [9] explore the application of Faster R-CNN, a popular object detection model, for detecting handheld guns in public spaces. The study uses the Internet Movie Firearms Database (IMFDB), focusing on weapons such as shotguns, rifles, and revolvers across a variety of settings, with challenges such as background variation and occlusion. The researchers proposed a gun detection system based on Faster R-CNN (Faster Region-Based Convolutional Neural Network) with a fine-tuned VGG-16 architecture. VGG-16 served as the feature extractor: weights were transferred from pre-trained models and then fine-tuned on gun images from the IMFDB. By using ImageNet-pretrained weights to reduce training time, the model was trained with MatConvNet on a single CPU. Accuracy, true positive rate (TPR), and false positive rate (FPR) were the evaluation metrics. The SVM (Support Vector Machine) classifier achieved a high accuracy of 93.1%, outperforming previous methods based on SIFT (Scale Invariant Feature Transform) and SURF (Speeded Up Robust Features), which had accuracies of 84.26% and 88.67%, respectively. The system achieves high detection accuracy, but the authors discuss trade-offs in processing time that may limit its suitability for real-time surveillance without further optimization. For practical deployment, additional research is recommended to substantially reduce computing costs and enhance real-time performance. In summary, Faster R-CNN with an ImageNet-pretrained VGG-16 feature extractor and an SVM classifier detects firearms with high accuracy; its primary drawback is computational expense, which limits its real-time capabilities.
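For readers less familiar with these metrics, the sketch below shows how accuracy, TPR (also called sensitivity or recall), and FPR (one minus specificity) follow from the confusion counts of a binary detector. The label lists are made up for illustration and do not reproduce any result from the studies reviewed here.

```python
def confusion_counts(y_true, y_pred):
    """Count TP, FP, TN, FN for binary labels (1 = weapon, 0 = no weapon)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, fp, tn, fn


def rates(tp, fp, tn, fn):
    """Derive the standard rates from the four confusion counts."""
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "tpr": tp / (tp + fn),          # sensitivity / recall
        "fpr": fp / (fp + tn),          # 1 - specificity
        "specificity": tn / (fp + tn),
    }


# Illustrative labels only.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 0]
result = rates(*confusion_counts(y_true, y_pred))
print(result)
```

Reporting TPR and FPR together, rather than accuracy alone, shows how many weapons are missed and how many false alarms are raised.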

Idree et al. [55] discuss the limitations of police surveillance systems operated by humans, noting that this approach can lead to mistakes and missed detections due to attention fatigue. The researchers recommend using computer vision techniques to automate video surveillance, with a focus on real-time detection of objects, actions, and anomalies. These systems are evaluated using standard, pre-curated, annotated datasets split into training and test sets. The development includes features such as activity labeling (e.g., theft and assault), object identification (e.g., recognizing police officers and detecting weapons), and extended video summaries. The proposed solution is tested within a local police department and implemented with deep neural network models trained on annotated datasets. Although challenges such as distinguishing between normal and abnormal actions remain, early results show promising accuracy. Future efforts should focus on addressing deployment issues and enhancing real-time detection capabilities. The utilization of computer vision provided an efficient method for monitoring weapon activity. However, the performance metrics were neither studied nor unambiguously reported.

de Azevedo Kanehisa et al. [10] discuss the challenge of identifying firearms in public areas to shorten response times in potentially dangerous situations. The researchers employed the YOLO technique, known for its effectiveness in real-time object detection. They used a dataset compiled from the Internet Movie Firearms Database (IMFDB), which comprises over 4600 labeled images of firearms. Bounding boxes were labeled manually, and the model was fine-tuned on the labeled images with a 90/10 train-test split. According to the experimental results, the system demonstrated strong firearm recognition capabilities even under partial occlusion, achieving a mean average precision (mAP) of 70.72%, 95.73% sensitivity, 97.30% specificity, and 96.26% accuracy. However, false positives from objects that resemble guns remain a limitation; future research aims to incorporate other imaging modalities, such as infrared, to improve robustness on lower-quality images. In summary, YOLO with Darknet and CNN provided a robust, practical solution for high-accuracy detection but showed weaknesses on low-quality images, leading to imprecision.

Dubey [1] discusses the development of a trained model capable of identifying concealed firearms. Manually examining security photos to assess gun-related risks is costly, time-consuming, and prone to human error. This study aims to determine which neural network models, such as Faster R-CNN and SSD, are most suitable for detecting guns in still images. The dataset was obtained from open-source platforms. Because real-world datasets often contain multiple classes with uneven distributions, an accuracy-based metric may be biased toward larger classes, and the risk of misclassification must also be considered. The model reached 99% accuracy when the contrast between object and background was high, but accuracy fell to a low of 64% when contrast was reduced. Because detection is slow, at approximately 15 s per object, real-time object detection is currently not feasible. More real-time surveillance images with additional classes, such as shotgun, rifle, and handgun, could be used to train the model. In summary, Faster R-CNN demonstrated high accuracy in weapon detection, but its detection speed is not yet sufficient for real-time use, and it requires further training on real-time surveillance data to improve its adaptiveness.
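The bias of plain accuracy toward larger classes is easy to demonstrate. In the sketch below, a classifier that never predicts the rare class still scores 95% accuracy, while balanced accuracy (the mean of per-class recall, chosen here as one common remedy rather than a metric used by Dubey) exposes the failure. The data are synthetic.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the ground truth."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def balanced_accuracy(y_true, y_pred):
    """Mean of per-class recall, so every class counts equally."""
    recalls = []
    for cls in sorted(set(y_true)):
        indices = [i for i, t in enumerate(y_true) if t == cls]
        hits = sum(y_pred[i] == cls for i in indices)
        recalls.append(hits / len(indices))
    return sum(recalls) / len(recalls)


# Synthetic, heavily imbalanced data: 95 "no gun" images, 5 "gun" images.
y_true = [0] * 95 + [1] * 5
y_pred = [0] * 100  # a degenerate model that never predicts "gun"

plain = accuracy(y_true, y_pred)
balanced = balanced_accuracy(y_true, y_pred)
print(plain, balanced)
```

The gap between the two numbers is a quick diagnostic for whether a reported accuracy mostly reflects the majority class.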

Gelana et al. [2] aim to reduce the danger faced by victims in active shooter situations. Their article addresses the challenge of detecting firearms in security camera footage, particularly in high-traffic public areas such as theaters and shopping centers. The dataset, which focuses solely on firearms, contains 1869 positive and 4000 negative images. The proposed method uses a TensorFlow-based Convolutional Neural Network (CNN) for weapon classification, complemented by image processing techniques such as background subtraction and Canny edge detection. A CCTV video dataset is used in the experimental setup to evaluate the algorithm, yielding a detection accuracy of 97.78%, with a sensitivity of 93.84% and a specificity of 99.73%. Although the algorithm is designed for real-time detection, it struggles with concealed weapons and can only identify visible handguns. Future work will involve expanding the dataset and refining the CNN to enhance detection speed and accuracy. In summary, the CNN yielded accurate, real-time firearm detection; however, there were still too many false positives, which undermined the reliability of the detection process.

Vallez et al. [39] address the reduction of false positives in surveillance systems for pistol detection. The data come from a CCTV dataset from the University of Seville in Spain, recorded in controlled settings and comprising 871 photos with 177 annotated firearms. Initial detection used Faster R-CNN, followed by an autoencoder trained on simulated high school hallway data created with Unreal Engine 4. The autoencoder’s reconstruction error was used to filter out common false positives. The results showed a precision of 77.24 and a 37.9% reduction in false positives, with no loss of detection capability. Future research will explore other detection architectures and improve the autoencoder’s thresholding. In summary, the Faster R-CNN model effectively decreased false positives; however, the general framework still needs improvement to be effective at broader weapon detection.

Warsi et al. [49] argue that, given the current state of the world, automated visual monitoring is crucial for helping security personnel identify handguns. The goal of this research is to visually identify firearms in live recordings. The researchers combined their own collection of pistol images, taken from various angles, with the ImageNet dataset to improve results. The proposed method employs YOLOv3, with Faster R-CNN used as a baseline to compare false positives and false negatives. YOLOv3 has a speed advantage: it processes 45 frames per second, whereas Faster R-CNN processes eight. On two of the four test videos, YOLOv3 outperformed Faster R-CNN in terms of accuracy. The proposed approach achieves a precision of 96.51% and an F1 score of 75%. YOLOv3’s fast processing and reasonable accuracy make it suitable for real-time applications. However, the research identifies challenges in detecting small or concealed weapons and suggests further refinement of the model to address them. In summary, YOLOv3 detected pistols quickly and reliably, even under partial occlusion, but performance suffered on low-quality frames or when the gun was obscured.
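The gap between a precision of 96.51% and an F1 score of 75% is easier to interpret once the F1 formula, F1 = 2PR / (P + R), is solved for recall. The sketch below does this; the resulting recall is only an implied value, and it assumes that both reported figures refer to the same evaluation, which the text does not confirm.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def recall_from_f1(precision, f1):
    """Invert F1 = 2PR / (P + R) for R; only valid when 2P > F1."""
    return f1 * precision / (2 * precision - f1)


# Reported values; treating them as one evaluation is an assumption.
implied_recall = recall_from_f1(precision=0.9651, f1=0.75)
print(round(implied_recall, 4))
```

Under that assumption the implied recall is roughly 0.61, which would mean that the F1 score is held down by missed detections rather than by false alarms.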

González et al. [52] address the challenges of detecting guns in real time through CCTV surveillance. The article introduces a new dataset derived from a university’s actual CCTV system, supplemented with synthetic images. The researchers demonstrate that a Faster R-CNN object detector with a Feature Pyramid Network (FPN) performs well in a real CCTV setting after training on both real and synthetic images. A two-stage training process using Faster R-CNN with FPN and ResNet-50 improved on state-of-the-art weapon recognition, resulting in a weapon detection model suitable for quasi-real-time CCTV applications (inference time of 90 ms on an NVIDIA GeForce GTX 1080 Ti). The results were reported at a confidence level of 0.95 or 0.99 and an IoU of 0.50. Since the primary goal of the study is to detect hazardous items rather than to differentiate them, knives and other weapons were excluded, and a single class, “weapon”, was used to detect pistols and rifles. In summary, the Faster R-CNN model with FPN achieved high detection accuracy when evaluated on CCTV datasets; however, other types of weapons were not covered by the model’s design or its evaluation.
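An IoU (intersection over union) of 0.50 is a common criterion for deciding whether a predicted box matches a ground-truth box. The sketch below computes IoU for axis-aligned boxes; the box coordinates are made-up examples, and the `(x1, y1, x2, y2)` convention is an assumption.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    intersection = inter_w * inter_h

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


ground_truth = (0, 0, 10, 10)
close_prediction = (2, 0, 12, 10)   # overlaps 80 of 120 units of union
loose_prediction = (5, 0, 15, 10)   # overlaps 50 of 150 units of union

close_iou = iou(ground_truth, close_prediction)
loose_iou = iou(ground_truth, loose_prediction)
print(close_iou >= 0.5, loose_iou >= 0.5)
```

With a 0.50 threshold, the first prediction counts as a correct detection and the second does not, even though both overlap the target.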

Pang et al. [56] enhance security by using passive millimeter-wave (PMMW) imaging to detect hidden metallic objects on the human body in real time. Beihang University provided the dataset, which includes 1634 PMMW images showing people carrying metallic weapons in various settings. The proposed method uses two versions of YOLOv3 (You Only Look Once), YOLOv3-13 and YOLOv3-53, which differ in computational load and network depth. Both models employ a one-stage detection approach to identify objects at different scales. YOLOv3-53 achieves a maximum mean average precision (mAP) of 95% at 35 frames per second, while YOLOv3-13 reaches 85% mAP at 150 frames per second. Even with limited sample data, the authors find this approach practical and efficient for real-time detection of weapon contraband on the human body in PMMW images. Future research aims to improve accuracy and adaptability across various scenarios, including those involving thick clothing and diverse environmental conditions. In summary, the combination of YOLOv3 and SSD models demonstrated improvements in both detection effectiveness and feasibility; however, the combined approach took longer to train.

Vílchez et al. [11] aim to improve evaluation accuracy and reduce bias in license exams. The study focuses on automating the detection of bullet impacts on shooting-range silhouettes. The detection model, Mask R-CNN, starts from a model pre-trained on the COCO dataset. The proposed Bullet Impact Detection (MBID) method employs Mask R-CNN (ResNet 50 and 101) in four steps: preprocessing, impact detection, edge detection, and results evaluation. The dataset contains 600 images with 2401 bullet impacts. Mask R-CNN outperforms other methods, such as YOLOv3, SVM, and the Circular Hough Transform, in object detection, classification, and zone delineation. ResNet 50 achieves 97.6% accuracy, 99.5% precision, and 97.9% recall. Further research could enhance detection accuracy by addressing irregular impact shapes and refining dataset conditions. In summary, Mask R-CNN achieved nearly perfect precision and recall, resulting in very high accuracy; its main limitation was the small dataset size.

Xu et al. [57] address low-cost, automated weapon detection in surveillance videos and developed a TensorFlow-based approach using the SSD-MobileNet model for efficient identification. They used a small weapon dataset of 1218 images, mainly from COCO, with the main categories being handgun, shotgun, and rifle. The system extracts key frames from videos based on interframe differences to reduce redundant information. It employs MobileNet, a lightweight deep neural network, for feature extraction and SSD for detection. The model achieved precision values of 0.8524 at an IoU of 0.5 and 0.7006 at an IoU of 0.75 on an Intel i3 processor paired with an AMD Radeon GPU. The system is intended to be reconfigurable, low-cost, and energy-saving through key-frame processing, especially in low-traffic areas. Planned work includes increasing the training data to improve accuracy and implementing the system in dual operational modes to accommodate various usage scenarios. In summary, the study showed that SSD with a CNN enabled live detection of firearms; however, accuracy decreased when the source video was shot at a lower resolution than the SSD video.
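Key-frame extraction by interframe difference can be sketched as follows. This is a minimal illustration, not the procedure of Xu et al.: the difference measure (mean absolute pixel difference), the comparison against the last kept frame, and the threshold value are all assumptions.

```python
import numpy as np


def select_key_frames(frames, threshold=10.0):
    """Keep a frame when it differs enough from the last kept frame.

    The difference is the mean absolute pixel difference; the first
    frame is always kept as the initial reference.
    """
    key_indices = []
    reference = None
    for index, frame in enumerate(frames):
        current = frame.astype(np.float32)
        if reference is None or np.abs(current - reference).mean() > threshold:
            key_indices.append(index)
            reference = current
    return key_indices


# Synthetic clip: two identical dark frames, two identical bright frames, one dark frame.
dark = np.zeros((32, 32), dtype=np.uint8)
bright = np.full((32, 32), 100, dtype=np.uint8)
key_frames = select_key_frames([dark, dark, bright, bright, dark])
print(key_frames)
```

Only the frames where the scene changes are passed on to the detector, which is how this kind of filtering saves computation in low-traffic areas.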

Aftab et al. [36] discuss the need for a suitable plan to respond effectively to terrorist attacks on hospitals, airports, shopping centers, schools, universities, colleges, railway stations, passport offices, bus stands, dry ports, and other important private and public locations. An automated system must quickly detect and classify weapons so that a proper response can be planned and damage minimized. The dataset includes images from various sources. The study is based on a Convolutional Neural Network (CNN) model that addresses earlier challenges. It uses datasets compiled on YOLO and is designed for single-use viewing, focusing on real-time firearm identification. The proposed system for detecting and classifying guns has undergone extensive training and testing, and the researchers evaluated it using a 70-30 split. Results show that the model performed well, achieving 97.01% accuracy in firearm detection. In the future, the researchers plan to use advanced techniques to gather larger datasets and expand the range of categories. In summary, the accuracy and F1 scores for CNN-based weapon detection were excellent; however, more data would be needed for generalizability.

Akbulut et al. [58] highlight deficiencies in existing surveillance systems for quickly detecting guns and underscore the urgent need for improved public safety measures to prevent armed threats. Focusing on handguns and rifles, the research uses the COCO dataset and the authors’ own dataset to categorize different types of guns. The study uses the YOLO algorithm, trains the model on labeled images, performs live object recognition, and analyzes video frames to identify weapons. Implementation is performed on a PC equipped with a GPU that supports TensorFlow and OpenCV. The speed and accuracy of the YOLO model are evaluated using live video feeds after it has been trained on labeled data. The system achieves 95% accuracy, detecting weapons more quickly and precisely than previous systems. Future research will aim to add new features, such as contextual notifications that follow the movements of recognized guns, and to improve the model to reduce false alarms. The article also examines how environmental conditions affect detection accuracy. In summary, YOLO was easy to use and accurate, but the conclusions are limited by the small dataset used in the analysis.

Bhatti et al. [59] state that the central issue of interest is the real-time identification of firearms in real-life video footage to better protect against and eliminate unlawful acts. The datasets used include a small self-made dataset based on personal images and videos, YouTube videos, open-source GitHub repositories, the University of Granada dataset, and the IMFDB (Internet Movie Firearms Database). The object classes are diverse, including primary weapons such as pistols, secondary weapons such as small handheld firearms, and deception items such as cellular phones, metal detectors, and wallets. The proposed technique applies advanced deep learning methods to real-time weapon detection in CCTV footage. YOLOv4 outperformed all other algorithms, with an F1-score of 91% and a mean average precision of 91.73%, higher than previously achieved. In summary, YOLOv4 achieved some of the highest accuracy and reliability across datasets of varying sources, though it primarily focused on small weapons.

Hashmi et al. [60] focus on utilizing deep learning techniques for real-time weapon detection in video feeds from surveillance systems. The researchers generate a weapons dataset for training by selecting assets and photos from Google Photos. They propose a system that uses Convolutional Neural Networks (CNNs) to process video frames and identify weapons, and they compare two state-of-the-art models for weapon identification: YOLOv3 and YOLOv4. The precision, recall, F1 score, and mAP of YOLOv3 and YOLOv4 are 84%, 85%, 71%, and 78%, and 77.30% and 84.85%, respectively. The researchers then conducted a comparative study using a separate, independently created dataset of weapons. This comparison allows a deeper analysis of how small changes can lead to better outcomes. To improve weapon detection, the researchers will develop a method to augment their dataset with additional photos and enhance the classification process. In summary, YOLOv3 and YOLOv4 achieved varying degrees of improvement in accuracy and recall but were resource-intensive. A dataset with a broader reach was provided.

Qi et al. [61] note that gun violence is a serious issue around the world, especially in the US. Deep learning techniques have been developed to identify firearms in smart IP cameras and surveillance video cameras, enabling real-time notification of security staff. The lack of large public datasets is a challenge in developing gun detection algorithms. In this study, the researchers first released a dataset containing 51K gun photos annotated for detection. They then collected 51K cropped gun images from various sources for gun classification. The researchers introduce a gun detection system that uses a cloud server to manage devices, data, and alerts, along with a smart IP camera as an embedded edge device, to reduce the false-positive rate further. Based on these findings, they employed ResNet50 as the complex classifier on the cloud server and ResNet18 as the lightweight classifier on the edge device. The accuracy rates are 97.83% and 96.97%, respectively. This edge/cloud framework enables real-world gun detection and is expected to significantly reduce the false-positive rate. In summary, the ResNet-based YOLOv3 and CenterNet are comparable in accuracy; however, this produced more false positives, limiting the approach’s viability and reliability.
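One way to read the edge/cloud design is as a two-stage cascade: a lightweight classifier on the camera rejects most crops cheaply, and only the flagged crops are sent to a heavier classifier in the cloud for confirmation. The sketch below illustrates that idea under stated assumptions; the cascade order, the thresholds, and the stub classifiers (simple dictionary lookups standing in for ResNet18 and ResNet50) are hypothetical and are not taken from Qi et al.

```python
def cascade(crops, edge_classifier, cloud_classifier,
            edge_threshold=0.5, cloud_threshold=0.5):
    """Return indices of crops confirmed by both stages.

    A crop reaches the cloud classifier only if the edge classifier
    scores it at or above `edge_threshold`.
    """
    confirmed = []
    for index, crop in enumerate(crops):
        if edge_classifier(crop) < edge_threshold:
            continue  # rejected cheaply on the device
        if cloud_classifier(crop) >= cloud_threshold:
            confirmed.append(index)
    return confirmed


# Stub scores standing in for real model outputs (illustrative only).
crops = [
    {"edge": 0.2, "cloud": 0.9},   # dropped at the edge
    {"edge": 0.8, "cloud": 0.3},   # edge false positive, rejected in the cloud
    {"edge": 0.9, "cloud": 0.95},  # confirmed by both stages
]
confirmed = cascade(crops, lambda c: c["edge"], lambda c: c["cloud"])
print(confirmed)
```

In this arrangement the second stage can only remove alerts, so it lowers the false-positive rate at the cost of extra latency for flagged crops.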

Ramon et al. [62] note that Latin America has one of the highest rates of violence in the world, leading to murders, robberies, firearm use, and insecurity. Beyond taking lives, homicide destroys the lives of the victims’ families and the community, and it fosters a violent environment that harms institutions, the economy, and society. Limited data made the study difficult and time-consuming. The images used for training were sourced from the internet through various datasets or image searches. The researchers used object detection to identify different types of firearms in public places, such as shops, ATMs, and streets. Four types of firearms were used to train the YOLO v3 and Efficient D0 models: pistols, submachine guns, shotguns, and rifles. The study’s findings indicate that YOLO v3, with an accuracy of 0.80, is the best network for detecting weapons. EfficientNet YOLOv3 performed well with tasks involving firearm detection. However, it would require a more diverse dataset to make consistent predictions.

Ruiz-Santaquiteria et al. [63] aim to improve weapon detection in surveillance recordings by building on previous methods that rely solely on visual cues, which often fail in low-light, distant, or obstructed views. The primary challenge is accurately recognizing weapons in complex scenes; the study addresses it by combining gun features with human body positions to enhance detection precision and minimize false alarms. The dataset comprises images from various sources, including YouTube videos, publicly available firearm datasets, and synthetic images generated from video games. This study introduces a new approach (Hand Region Classifier [HRC] + Pose Data [P]) that integrates human pose information with weapon appearance into a unified system. To generate binary images of postures, key points are first predicted to locate hand regions. Then, the final bounding boxes are formed by merging outputs from different subnetworks. The 2D human pose is often used for action or gesture recognition. On the Monash data, HRC shows a precision, recall, and AP of 96.83%, 20.33%, and 24.72%, respectively, while HRC + P achieves 90.18% (Precision), 33.67% (Recall), and 34.68% (AP). Sometimes, objects are hard to see due to distance, poor lighting, or partial/full obstruction. In these cases, human body posture helps identify firearms that might otherwise go unnoticed. False positives in other image regions can be filtered out because pose data are used only to classify hand regions of detected individuals. Finally, everyday handheld items like wallets, keys, and cell phones can lead to misclassifications or false positives in real-world applications. Further research will address these issues in more detail. The object detectors that combined deep learning with hand region and pose data achieved better weapon detection results. Still, because of the low recall and the remaining false positives, robustness did not improve.
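The idea of restricting classification to hand regions located from pose key points can be illustrated with a small geometric sketch. It is an assumption-laden illustration, not the method of the reviewed study: the extrapolation factor `extend`, the box `size`, and both function names are hypothetical.

```python
from typing import Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def hand_region(elbow: Point, wrist: Point,
                extend: float = 0.5, size: float = 64.0) -> Box:
    """Estimate a square hand box by extrapolating the forearm past the wrist."""
    # Assumption: the hand centre lies on the elbow-to-wrist line,
    # `extend` forearm-lengths beyond the wrist key point.
    cx = wrist[0] + extend * (wrist[0] - elbow[0])
    cy = wrist[1] + extend * (wrist[1] - elbow[1])
    half = size / 2.0
    return (cx - half, cy - half, cx + half, cy + half)


def center_in_region(detection: Box, region: Box) -> bool:
    """True if the centre of a detection box falls inside a hand region."""
    bx = (detection[0] + detection[2]) / 2.0
    by = (detection[1] + detection[3]) / 2.0
    return region[0] <= bx <= region[2] and region[1] <= by <= region[3]


if __name__ == "__main__":
    region = hand_region(elbow=(0.0, 0.0), wrist=(10.0, 0.0), size=8.0)
    print(region)
    # A detection far from any hand would be discarded as a likely false positive.
    print(center_in_region((100.0, 100.0, 110.0, 110.0), region))
```

Filtering detections this way removes candidates away from the hands, at the cost of depending on the accuracy of the pose estimator.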

Salido et al. [50] study the difficulty of handgun detection in video surveillance images, with the aim of preventing violent incidents. The dataset they used consists of annotated video surveillance images featuring handguns and includes 6800 images for training. The focus was specifically on handguns. Three CNN-based methods were tested: RetinaNet, YOLOv3, and Faster R-CNN. The authors applied a sequence of CNN-based methods to extract features from handguns and used pose information to reduce false positives. The experimental setup involved training and testing the three models, with YOLOv3 being the fastest. RetinaNet achieved the highest performance, with an average precision of 96.36% and a recall of 97.23%. Advantages observed included high accuracy with fewer false positives. Limitations noted included lower-quality images, which may not allow detection of small, occluded handguns. Future work recommends deploying the system in real time across more complex environments. The YOLO (You Only Look Once)-based model achieved real-time, low-cost handgun detection with high recall; however, it struggled in crowded scenes or when the handgun was partially occluded.

Ahmed et al. [64] address the problem of detecting weapons in real time in surveillance footage, a vital aspect of ensuring public safety. They created a dataset (Custom Weapons) comprising 8327 images across diverse backgrounds and angles to enhance detection robustness, with a primary focus on handheld weapons, including pistols, revolvers, and rifles. They propose a model based on Scaled-YOLOv4, optimized with TensorRT, and deployed on high-performance GPUs, such as the RTX 2080 Ti, as well as on edge devices, including the Jetson Nano. During preprocessing, they employed mosaic augmentation to enhance the detection of small objects and improve accuracy. The model achieved an mAP of 92.1% and 85.7 FPS on an RTX 2080 Ti, demonstrating performance suitable for real-time applications. The advantages of this approach include high accuracy, efficient real-time performance, edge deployment capability, reduced latency, and enhanced privacy. Future work is encouraged to address challenges related to false positives and model optimization, especially for low-power devices. Scaled-YOLOv4 demonstrated good detection in both open and crowded scenes; however, there is still room for improvement, particularly with low-resolution video input.
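Mosaic augmentation combines four training images into one, so that each object occupies a smaller share of the composite. A deliberately simplified sketch follows; it assumes four equally sized grayscale images held as nested lists and a fixed 2 × 2 layout, whereas practical implementations typically also randomize the split point and rescale the tiles. The function name is hypothetical.

```python
from typing import List, Tuple

Image = List[List[int]]          # grayscale image as rows of pixel values
Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels


def mosaic_2x2(images: List[Image],
               boxes: List[List[Box]]) -> Tuple[Image, List[Box]]:
    """Tile four equally sized images into a 2 x 2 grid and shift their boxes."""
    if len(images) != 4 or len(boxes) != 4:
        raise ValueError("expected exactly four images and four box lists")
    height, width = len(images[0]), len(images[0][0])
    for img in images:
        if len(img) != height or any(len(row) != width for row in img):
            raise ValueError("all images must share the same size")

    # Top half: images 0 and 1 side by side; bottom half: images 2 and 3.
    top = [images[0][r] + images[1][r] for r in range(height)]
    bottom = [images[2][r] + images[3][r] for r in range(height)]

    # Each tile's boxes move by the tile's offset inside the composite.
    offsets = [(0, 0), (width, 0), (0, height), (width, height)]
    shifted: List[Box] = []
    for (dx, dy), tile_boxes in zip(offsets, boxes):
        for x0, y0, x1, y1 in tile_boxes:
            shifted.append((x0 + dx, y0 + dy, x1 + dx, y1 + dy))
    return top + bottom, shifted


if __name__ == "__main__":
    tiles = [[[v, v], [v, v]] for v in (1, 2, 3, 4)]
    labels = [[(0, 0, 1, 1)] for _ in range(4)]
    canvas, canvas_boxes = mosaic_2x2(tiles, labels)
    print(canvas)
    print(canvas_boxes)
```

When the composite is later resized to the network input size, every object shrinks, which is one plausible reason the technique helps with small weapons.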

Ashraf et al. [

65] addressed the problem of minimizing false positives and false negatives in the detection of weapons in video surveillance systems. The dataset (an open-source pistol dataset from the University of Granada and the Internet Movie Firearms Database (IMFDB)) consisted of 15,000 images of weapons, including rifles and handguns. The preprocessing techniques included a Gaussian blur to remove background noise. The researchers suggested using the YOLO-V5S algorithm combined with a CNN to improve detection speed and accuracy. The process involved preprocessing the dataset, training YOLO-V5S on resized images to 416 × 416, and applying a Gaussian blur to reduce background distractions. The experimental setup compared the YOLO-V5 model with other models, such as Faster R-CNN, using metrics like precision, recall, and F1 score. The model achieved 99.5% precision and 84.6% recall, with frame processing completed in 0.011 s, making this solution both fast and accurate. The researchers also identified several areas for improvement, such as handling customized weapons that deviate from the typical pistol appearance and employing tested methods to distinguish between objects of similar size and shape, which are significant challenges in weapon detection. Moving forward, they plan to enhance the model by incorporating additional preprocessing techniques, such as brightness control. To reduce false positives and false negatives, they also aim to improve the training set by including videos of moving pistols, custom graphics, and adjusting colors and contrast to increase visibility. YOLOv5 and Faster R-CNN achieved similar accuracy; however, both struggled with images with low brightness and contrast.
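The Gaussian blur used in this preprocessing step can be sketched in a few lines. The kernel radius and sigma below are assumptions, since the text does not report the values used; the code is a dependency-free illustration, and in practice a library routine such as OpenCV's `cv2.GaussianBlur` would normally be used instead.

```python
import math
from typing import List

Image = List[List[float]]


def gaussian_kernel(radius: int, sigma: float) -> List[float]:
    """Return a normalised 1-D Gaussian kernel of length 2 * radius + 1."""
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_rows(image: Image, kernel: List[float]) -> Image:
    """Convolve every row with the kernel, clamping indices at the borders."""
    radius = len(kernel) // 2
    width = len(image[0])
    out = []
    for row in image:
        new_row = []
        for x in range(width):
            acc = 0.0
            for k, weight in enumerate(kernel):
                src = min(max(x + k - radius, 0), width - 1)
                acc += weight * row[src]
            new_row.append(acc)
        out.append(new_row)
    return out


def transpose(image: Image) -> Image:
    return [list(col) for col in zip(*image)]


def gaussian_blur(image: Image, radius: int = 1, sigma: float = 1.0) -> Image:
    """Separable Gaussian blur: blur along rows, then along columns."""
    kernel = gaussian_kernel(radius, sigma)
    blurred = blur_rows(image, kernel)
    return transpose(blur_rows(transpose(blurred), kernel))


if __name__ == "__main__":
    impulse = [[0.0] * 5 for _ in range(5)]
    impulse[2][2] = 1.0
    for row in gaussian_blur(impulse):
        print([round(v, 3) for v in row])
```

A larger sigma suppresses more background texture but also softens the edges of small weapons, so the setting is a trade-off rather than a fixed constant.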

Jadhav et al. [

66] emphasize the need for better technologies due to the rise in armed robberies, school shootings, and terrorism. Therefore, this project aims to develop a system that automatically detects weapons in CCTV footage, reducing the number of people required for monitoring and lowering potential risks. The research uses two datasets: one from Kaggle and one from Open Images, each containing 5687 images of weapons (primarily guns, handguns, revolvers, etc.) and images depicting police and judicial services in Pakistan. Several objects are included within the images to minimize false positives caused by confounding objects such as handguns. The study examines the feasibility of two deep learning networks renowned for their effectiveness in object detection: YOLOv4 and Scaled YOLOv4. The process involves image annotation with bounding boxes and the creation of models for weapon recognition using labeled datasets. The performance of each model was evaluated on images and videos using metrics like mAP, precision, and F1 score. Experiments were conducted in a Google Colab environment with GPU acceleration. The models were trained on both the Kaggle (mAP: 100%) and Open Images (mAP: 71.58%) datasets, with object annotations performed using YOLO Label. YOLOv4 achieved an average precision of 86.19%, a loss error of 0.1933, a precision of 79%, and an F1 score of 77%, slightly outperforming Scaled-YOLOv4. Future research aims to further reduce false positives and negatives. The YOLOv4 with CSPDarkNet53 achieved outstanding results in terms of mean Average Precision (mAP) and general accuracy across various datasets. However, the number of false positives and false negatives remained high across all datasets.
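Bounding-box annotation for YOLO-family models is usually stored as one text line per object, holding the class index followed by the box centre, width, and height, all normalised by the image size. The sketch below assumes that common format; the class index and the example box are hypothetical and do not come from the reviewed study.

```python
from typing import Tuple


def to_yolo_line(class_id: int,
                 box: Tuple[float, float, float, float],
                 img_w: int, img_h: int) -> str:
    """Convert a pixel box (x_min, y_min, x_max, y_max) to a YOLO label line."""
    x0, y0, x1, y1 = box
    if not (0 <= x0 < x1 <= img_w and 0 <= y0 < y1 <= img_h):
        raise ValueError("box must lie inside the image and have positive size")
    x_center = (x0 + x1) / 2.0 / img_w
    y_center = (y0 + y1) / 2.0 / img_h
    width = (x1 - x0) / img_w
    height = (y1 - y0) / img_h
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


if __name__ == "__main__":
    # Hypothetical example: class 0 in a 400 x 500 image.
    print(to_yolo_line(0, (100, 50, 300, 250), 400, 500))
```

Because the coordinates are normalised, the same label file remains valid when the image is resized for training.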

Kiran et al. [67] propose using computer vision to identify and correct anomalies. Video surveillance systems capable of recognizing scenes and unusual events are essential for meeting the growing demands for safety, confidentiality, and protection of personal property. Monitoring such activities helps reduce crime and social offenses by identifying disruptive behavior. The approach uses publicly available videos from various sources. The core of the proposed solution involves applying specific AI-based algorithms for weapon detection to enhance existing conventional methods. For weapon detection, the system uses SSD and Faster R-CNN, both based on convolutional neural networks (CNNs). To improve accuracy, the Faster R-CNN was trained with pre-labeled video datasets. Both methods are effective, with Faster R-CNN demonstrating the higher accuracy at 84.6%. The researchers do not evaluate any other detection methods in this approach. The R-CNN achieved good results with improved accuracy; however, it required additional training and experimentation with various models to achieve improvements.

Manikandan et al. [68] describe how closed-circuit television (CCTV) cameras are installed and monitored in both public and private areas for security reasons. In home and commercial security, image and video footage are utilized for object detection, identity verification, and rapid response. Different classifications are necessary to detect objects and humans based on features observed in static and motion footage. The dataset includes various images and videos of dangerous objects (weapons) observed in CCTV footage. This article introduces an Attuned Object Detection Scheme (AODS) for identifying harmful objects from CCTV inputs. The proposed scheme uses a convolutional neural network (CNN) for object detection and classification, with classification based on features extracted and analyzed by the CNN. The method’s performance is validated using metrics such as accuracy, precision, and F1-score. In this approach, training with an external dataset reduced error and complexity by 7.47% and 8.23%, respectively, and increased accuracy by 8.08%. It is expected that future object classification will utilize labels. The CNN-AODS model produced good results in detecting the presence of firearms. However, it was not versatile enough to detect other objects, even though different objects were present in the footage.

Mishra et al. [13] address the critical issue of firearm detection in public spaces, a significant concern given the rising gun violence globally. The dataset used for training and testing is drawn from open-source datasets and includes both real and synthetic images of firearms; it contains over 1000 images compiled from various sources, including those generated with 3D techniques. The proposed method is based on the YOLO object detection model, known for its high speed and accuracy in real-time applications. Among the available versions, YOLOv4 was selected to strike a balance between processing speed and detection accuracy. It can process up to 24 frames per second, meeting the needs of real-time video surveillance. The model was trained on a single GPU with images annotated with bounding boxes for firearm localization. YOLOv4’s architecture features a CSPDarknet53 backbone, a PANet path-aggregation neck, and a YOLOv3 head. Implementing this new architecture in the backbone and modifying the neck increased the frames per second (FPS) by 12% and the mean average precision (mAP) by 10%. Furthermore, training this neural network on a single GPU simplifies the process. Its fast processing speed enables efficient operation on live feeds from CCTV cameras. However, the system still needs detection capabilities for other types of weapons and better performance in low-visibility conditions. YOLOv3 achieved higher Global mAP and FPS scores. However, its performance is limited in low-light or occluded scenes, which would restrict its use in real-time, live surveillance of people and traffic.

Rasheed et al. [69] address the growing problem of thefts in the banking and retail sectors, focusing on the use of artificial intelligence to detect weapons and raise alerts. The researchers provide a dataset of 7801 images categorized as handguns or rifles, sourced from Google Images, movies, and CCTV footage. A real-time object detection algorithm, “You Only Look Once” (YOLOv5), is proposed to locate handguns and rifles. When video frames are captured, YOLOv5 runs to detect objects and sends alerts to local law enforcement. The system works on both mobile and web platforms, using a Raspberry Pi or Jetson Nano for real-time tracking. It is trained on Google Colab for 100 epochs with a batch size of 16. The system connects to a NodeMCU for internet notifications and to a GSM module for communication. After 3 h of training, it achieved a mean average precision (mAP) of 87.8%, with an accuracy of 88% and a recall of 83%. The YOLOv5s version outperformed YOLOv4 and YOLOv3 in terms of faster inference and improved accuracy. However, the system currently relies on visible cameras, which could be improved by integrating firearms detection. High-quality graphics are also desirable, but this could be achieved by running the model on a GPU. While the YOLOv5 model demonstrated strong capabilities in weapon classification, recent versions may offer even better performance.

Al-Mousa et al. [38] note that the rate of gun violence has rapidly increased in recent years. The majority of modern security systems depend on human workers to continuously patrol hallways and lobbies. Thanks to advances in machine learning, particularly deep learning, future closed-circuit television (CCTV) and security systems should be able to identify threats and take appropriate action when necessary. The most crucial component of developing any deep learning solution is data, and applications that rely on photos, such as this one, require large amounts of it. Data collection took place at the Innovation Lab of Princess Sumaya University for Technology (PSUT). The security system architecture presented in this work leverages deep learning and image processing to detect weapons in real time. To identify individuals carrying different types of weapons, the system processes a video feed and occasionally captures images of them. These images are fed into a convolutional neural network (CNN), which then determines whether or not the image poses a threat. If it does, the system notifies security personnel via a mobile application and provides a picture of the situation. A Raspberry Pi B+ (with an ARM Cortex-A53 1.4 GHz processor, Wi-Fi connectivity, and 1 GB of RAM) is used, placed on a table 1.8 m high, along with a connected camera. The camera is approximately 4.5 m from the lab entrance. To monitor the process, the Raspberry Pi is connected to a display. The system achieved 92.5% accuracy during testing and completed detection in only 1.6 s. Other weapon types, such as knives, rifles, and semi-automatic guns, can be added to the dataset. The CNN model is a fast and reasonably accurate firearm classification algorithm; however, it would require more data to provide reasonable confidence.

Chatterjee et al. [46] state that any violence is a shame to our civilized society, yet violence still plays a significant role in society today and claims many innocent lives every day. Using a gun is one of the most traditional forms of violence, and firearm-related fatalities are now a global issue that poses a significant challenge to law enforcement and a threat to civilization. A dataset of 3698 images, containing 4703 annotated objects, has been created for the detection of firearms and human faces. The primary images were sourced from the WIDER FACE dataset and the Internet Movie Firearms Database. To detect firearms and human faces, this research employs a range of detection methods, including the latest EfficientDet-based architectures and Faster Region-Based Convolutional Neural Networks (Faster R-CNN). At the post-processing stage, an ensemble approach that utilizes Weighted Box Fusion, Non-Maximum Suppression, and Non-Maximum Weighted procedures enhanced detection performance in distinguishing between weapons and human faces. The mean average precision scores for mAP0.5, mAP0.75, and mAP[0.50:0.95] are 77.02%, 16.40%, and 29.73%, respectively, based on the Weighted Box Fusion-based Ensemble Detection Scheme. Among all tested options, these results show the best performance. The work may eventually be adapted for real-time testing, allowing research on its scalability and reliability in real-world applications. The Faster R-CNN model produced good mAP results on the firearm datasets; however, it was not tested in live scenarios.
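The mAP variants quoted above differ only in the intersection-over-union (IoU) threshold at which a predicted box counts as correct, and Non-Maximum Suppression is likewise driven by IoU. A minimal sketch of both follows; the example boxes and scores are hypothetical, and the greedy procedure shown is the standard textbook form rather than the specific ensemble of the reviewed study. Weighted Box Fusion differs in that it averages overlapping boxes instead of discarding them.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float],
        iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS: keep the best-scoring box, drop boxes overlapping it."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    candidates = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
    confidences = [0.9, 0.8, 0.7]
    print(nms(candidates, confidences))
```

A prediction with an IoU of about 0.68 against its ground truth would count as a hit at the 0.5 threshold but as a miss at 0.75, which is one way a model can score well on mAP0.5 and much lower on mAP0.75.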

Doan et al. [70] present a study on the rise in crime rates driven by firearms. Security systems must detect potentially violent situations early. The experimental dataset comprises 6420 photos of 224 × 224 pixels collected from public sources: the Pistol Detection Dataset, Pistol Classification Dataset, and Sohas Weapon Dataset. These photos feature a diverse range of pistol perspectives and sizes. This research aims to apply YOLOv5, v7, and v8 models to enhance the accuracy and diversity of pistol detection in surveillance cameras. The models YOLOv5-n, YOLOv5-m, YOLOv5-L, YOLOv7-X, YOLOv7-W6, YOLOv7-E6, YOLOv8-l, and YOLOv8-x performed exceptionally well in the firearm identification task, with the YOLOv8-x model achieving the highest accuracy of 95.6%. Based on the comparison results, the best model is YOLOv7-E6. The testing results show that these YOLO models, including the latest versions, can be integrated to improve security surveillance systems, enabling early warnings and proactive security measures in critical situations. The YOLOv5 to YOLOv8 models demonstrated substantial precision and recall, though results fluctuated across devices and environments.

Khalid et al. [

51] focus on identifying and segmenting guns in surveillance videos. The system’s performance was evaluated using a publicly available dataset for weapon detection, which includes images of handguns and other firearms in different contexts. For weapon detection, they utilized a deep learning model called YOLOv5. The detected weapons included handguns, pistols, and revolvers, featuring a variety of grip styles. YOLOv5 is the latest and fastest version of the YOLO family, commonly used in real-time applications. The specific YOLOv5 model used in this study was based on a conventional deep learning framework, with hyperparameters tuned through experimentation. Training was performed on a high-performance GPU to reduce computational load. The system achieved precision of 63%, recall of 25%, and accuracy of 43%. However, it may still struggle to identify partly hidden firearms or very small ones. Enhancing the model’s resistance to occlusions and improving its performance in small-object detection would increase its usability in real-world scenarios. The YOLOv5 image detector achieved high accuracy and robustness; however, it struggled to identify smaller firearms accurately.

Khan et al. [71] identify the primary concern of this research as the increasing demand for weapon-detection systems capable of recognizing firearms, with a focus on pistols in video feeds, to enhance public safety and security. The study uses the Custom Pistol Dataset, which contains pistols, and employs YOLOv5 (You Only Look Once version 5), a deep learning architecture for real-time object detection in the YOLO series. It was tested on standard computational platforms suitable for processing real-time video. On a live video stream, the trained YOLOv5 model detected pistols with an accuracy of approximately 95%. The system could also be extended to detect other items, such as rifles, knives, and other firearms or dangerous objects, expanding its functionality. The YOLOv5 model for detecting pistols was highly sophisticated and performed well, but it should be extended to detect other weapons as well.

Nale et al. [

14] propose that the demand for computer vision-based automated surveillance has grown due to the increasing use of closed-circuit television (CCTV) systems in modern security applications. The primary goal is to minimize human intervention while improving early threat detection and real-time security assessments. In the absence of a pre-existing dataset for real-time detection, the researchers assembled one from various sources, such as Roboflow Computer Vision Datasets, YouTube CCTV recordings, film data, camera images, and online photographs. The proposed weapon detection method prioritizes accuracy and recall in object identification, particularly in challenging conditions such as low-light environments, by combining Detectron2 and YOLOv7. With the YOLOv7 model trained on a new database, object detection algorithms that used Region of Interest (ROI) performed better than those that did not, yielding notable results. It outperformed earlier real-time studies with a mean average precision (mAP) of 87.3%, an F1 score of 91%, and a confidence level of nearly 98%. The research aims to enhance security while also attracting security-conscious tourists and investors, thereby generating economic benefits. Future research will focus on further reducing false positives and negatives, potentially extending to additional classes. The YOLOv7 improved the robustness of the image detection system and enhanced security; however, it still struggles with false positives and negatives.

Nanda et al. [

72] focus on developing an effective video forensics system capable of detecting weapons, disguises, and suspicious activities within video footage. The dataset used contains 1346 images for gun detection and 1043 images for mask detection, collected from Kaggle, GitHub, and other sources. The main features identified are guns and facial masks, which stand out from other devices on the system and help detect any immoral or abnormal behavior. The study employs a custom CNN architecture, combined with the YOLO (You Only Look Once) approach, for object detection. The system provides frame labels for the detected objects: “M” indicates a mask, “NM” indicates no mask, and “G” indicates a gun. The system was run on a computer with an Intel Core i7 processor and an NVIDIA GeForce RTX 2060 graphics card. The YOLO model detected masks with an accuracy of 92.3%, while gun detection accuracy was 100%, surpassing the customized CNN model by 61.5%. The YOLO model outperformed previous methods, especially in real-time applications, achieving higher speed and accuracy. Future improvements could include increasing the sample size to broaden the range of suspicious activities the model can detect and optimizing the CNN further to enhance detection accuracy. The YOLO network achieved excellent accuracy and efficiency in detecting guns; however, the customized CNN performed poorly, and the datasets were too small.
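The frame-labelling scheme described above ("M", "NM", "G") amounts to a small lookup from detected classes to short tags. The sketch below illustrates that mapping only; the class names (`mask`, `no_mask`, `gun`) and the function name are hypothetical, since the text gives the tags but not the detector's internal class names.

```python
from typing import Dict, Iterable, List

# Hypothetical detector class names mapped to the tags reported in the text.
LABELS: Dict[str, str] = {"mask": "M", "no_mask": "NM", "gun": "G"}


def frame_labels(detected_classes: Iterable[str]) -> List[str]:
    """Return the tags for one frame, deduplicated, in first-seen order."""
    tags: List[str] = []
    for name in detected_classes:
        tag = LABELS[name]  # raises KeyError for an unknown class
        if tag not in tags:
            tags.append(tag)
    return tags


if __name__ == "__main__":
    print(frame_labels(["gun", "no_mask", "gun"]))
```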

Naseeba et al. [73] present the application of computer vision (CV) and deep learning (DL) to enhance weapon detection in video surveillance. The model was trained on a large self-created image dataset containing still images and videos of a wide variety of weapons, which supports its adaptability and resilience. In this study, the researchers use VGG16 and Faster R-CNN to automate firearm detection. The method uses manually annotated image datasets. Faster R-CNN is reported as the faster of the two, achieving 0.736 s/frame, compared with the 1.606 frames per second reported for VGG16. Faster R-CNN is also more accurate, reaching 94.8% compared to VGG16’s 92.5%. Owing to its higher speed and accuracy, Faster R-CNN can achieve live detection. Additionally, it can be trained with larger datasets using GPUs. This approach does not cover various types of weapons or large datasets. Both VGG16 and Faster R-CNN achieved good accuracy and speed, but they did not generalize beyond specific categories, such as weapon types.
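The two speed figures above are quoted in different units (seconds per frame and frames per second), so they need to be put on a common scale before being compared. The helper below is a simple illustration of that conversion and is not taken from the reviewed study.

```python
def seconds_per_frame_to_fps(seconds_per_frame: float) -> float:
    """Convert a per-frame latency in seconds to frames per second."""
    if seconds_per_frame <= 0:
        raise ValueError("latency must be positive")
    return 1.0 / seconds_per_frame


def fps_to_seconds_per_frame(fps: float) -> float:
    """Convert frames per second to a per-frame latency in seconds."""
    if fps <= 0:
        raise ValueError("frame rate must be positive")
    return 1.0 / fps


if __name__ == "__main__":
    print(round(seconds_per_frame_to_fps(0.736), 3))
    print(round(fps_to_seconds_per_frame(1.606), 3))
```

As printed, 0.736 s/frame corresponds to roughly 1.36 frames per second, and 1.606 frames per second corresponds to roughly 0.62 s/frame. If the VGG16 value was in fact measured in seconds per frame, the comparison would read differently, so the units are worth checking against the original paper.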

Pullakandam et al. [

74] discussed real-time weapon detection using YOLOv8, optimized for faster, lighter deployment in resource-constrained environments via quantization. Approximately 3000 images, curated from various online sources, were included in the dataset and labeled by type, including guns and knives. The architecture of YOLOv8 features a new anchor-free detection head that improves its precision and flexibility across different object sizes. It achieved reduced model size and faster inference by using a quantization technique. In this regard, YOLOv8 reached 90.1% mAP and reduced processing time by 15%. The experimental results show that it outperforms earlier versions, such as YOLOv5, across most metrics, particularly in terms of computational efficiency, making it well-suited for mobile and edge devices. Limitations, such as sensitivity to low-resolution images, were noted, indicating the need for further work to optimize the quantization model for varying image qualities. While the results for detecting guns in YOLOv5 and YOLOv8 achieved high accuracy, the work presented could benefit from further statistical optimization to improve efficiency.
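Quantization reduces model size by storing weights as low-precision integers plus a scale factor. The text does not state which scheme was applied to YOLOv8, so the following is only a generic sketch of symmetric 8-bit quantization on a hypothetical list of weights.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization of floats to the range [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # The scale maps the largest magnitude onto 127; fall back to 1.0
    # when every weight is zero to avoid dividing by zero.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from their int8 representation."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    original = [0.8, -0.31, 0.05, -1.27]  # hypothetical weights
    q, s = quantize_int8(original)
    restored = dequantize(q, s)
    print(q)
    print(max(abs(a - b) for a, b in zip(original, restored)))
```

Each weight then occupies one byte instead of four, and the rounding error per weight is bounded by half the scale, which is the kind of trade-off that can make a model sensitive to low-resolution inputs.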

Rahil et al. [

12] state that it is now crucial to incorporate advanced automatic pistol detection systems into public surveillance cameras to enhance their effectiveness, given the widespread issue of gun violence. The researchers collected and generated their own dataset (COCO) of images featuring various firearms. To improve the model’s performance across multiple environments and reduce its reliance on human security personnel, a carefully curated dataset of diverse firearm images was utilized. This work uses the advanced YOLO-V5 algorithm to provide a comprehensive approach for real-time firearm detection in security footage. The results demonstrate that, even in challenging, complex scenarios, the YOLO-V5 model achieves high accuracy (95%) and recall (92%) in detecting handguns. This research catalyzes further investigation in this exciting area and marks a significant step toward the development of efficient firearm detection systems. This approach addresses an urgent need for improved public safety by automating firearm identification in real-time surveillance footage, offering valuable insights into the capabilities of intelligent surveillance technology. While YOLOv5 produced fast, accurate detection results, the framework required more comprehensive data to achieve scalable, generalizable results.

Raza et al. [

53] address the problem of detecting gunshots to prevent crimes using sound recognition. The dataset consists of 851 audio clips of gunshots from eight gun models, collected from public YouTube videos. The proposed technique involves a novel Discrete Wavelet Transform Random Forest Probabilistic (DWT-RFP) approach for feature extraction and a meta-learning model, Meta-RF-KN (MRK), for classification. The workflow includes extracting Mel-Frequency Cepstral Coefficients (MFCC) features, combining them with probabilistic features, and applying the MRK model for gunshot detection. The experimental setup employed an 80:20 train-test split and was executed in a Google Colab environment with GPU support. The proposed model achieved 99% accuracy, outperforming other state-of-the-art methods. Future improvements include reducing computational complexity and addressing real-world noise and issues related to simultaneous gunfire. The Discrete Wavelet Transform Random Forest Probabilistic (DWT-RFP) detection model demonstrated high accuracy in detecting gunshots, improving on-scene safety; however, background noise and computational cost were significant issues.
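A discrete wavelet transform splits a signal into a coarse approximation and fine detail coefficients, which can then be summarized as features. The sketch below shows a single-level Haar transform; the Haar wavelet and the use of sub-band energies as features are assumptions made for brevity, since the text does not name the wavelet family or the exact features used in DWT-RFP.

```python
import math
from typing import List, Tuple


def haar_dwt(signal: List[float]) -> Tuple[List[float], List[float]]:
    """Single-level Haar DWT: returns (approximation, detail) coefficients."""
    if len(signal) % 2 != 0:
        raise ValueError("signal length must be even")
    root2 = math.sqrt(2.0)
    approx = [(signal[i] + signal[i + 1]) / root2
              for i in range(0, len(signal), 2)]
    detail = [(signal[i] - signal[i + 1]) / root2
              for i in range(0, len(signal), 2)]
    return approx, detail


def subband_energy(coefficients: List[float]) -> float:
    """Sum of squared coefficients, a simple per-band feature."""
    return sum(c * c for c in coefficients)


if __name__ == "__main__":
    samples = [4.0, 6.0, 10.0, 12.0]  # hypothetical audio samples
    low, high = haar_dwt(samples)
    print(subband_energy(low), subband_energy(high))
```

Because the Haar transform is orthonormal, the two sub-band energies add up to the energy of the original signal, so the features describe how that energy is distributed across scales.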

Ruiz-Santaquiteria et al. [

40] discuss improving handgun detection in CCTV video recordings by addressing issues of over- and under-detection, especially in challenging monitoring situations. They use a hybrid dataset that combines images from the Guns Movies Database, YouTube clips, synthetic video game data, and the COCO dataset, with a focus on handguns. Their proposed approach integrates visual cues and body pose information via a dual-branch framework that targets hand regions and body pose key points to enhance detection accuracy. The model is developed in TensorFlow using several CNN and transformer-based architectures, such as Darknet-53, ViT, and SEIT, along with additional filtering to reduce false positives. The model achieved excellent results across various complex datasets, with an average precision of 91.73% and exceptional performance on challenging data. Future work aims to reduce dependence on pose estimation accuracy, regardless of camera placement, and to incorporate spatiotemporal data from actual videos to further mitigate false positives. The Vision Transformer, using a CNN architecture, also achieved substantial average precision (AP); however, it was less robust due to high computational requirements and reliance on computationally intensive human pose estimation.

Shah et al. [

75] focus on the challenge of real-time pistol detection to help combat firearm-related crimes in public spaces. Manual CCTV monitoring is a common practice nowadays; unfortunately, most efforts fail due to human error, especially when observation must be maintained for extended periods. This research explains the implementation of the YOLO algorithm within an automated system to reliably and efficiently detect pistols. The dataset (COCO) includes 3000 images of pistols captured under various lighting conditions, quality levels, and camera angles that reflect real-world scenarios. These images were annotated and split into training and validation sets. The study compares the performance of three YOLO versions—YOLOv3, YOLOv4, and YOLOv5—in terms of speed and accuracy. Additionally, by reviewing prior research and analyzing implementation results, a comparative assessment of these models’ performance was conducted. Evaluation metrics focused on precision and recall, which are more relevant than accuracy in object detection. With higher F1 scores and mean average precision, YOLOv5 (mAP50 of 98.60 and F1 score of 98%) outperformed earlier versions. YOLOv5 offers a lightweight, fast, and accurate model. Although this system provides excellent detection capabilities, it currently only detects handguns. Future work should extend the system to recognize all types of firearms and sharp pointed objects. The YOLOv5 method demonstrated exceptional detection precision and speed; however, it was limited to detecting only specific types of guns.
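Precision and recall are preferred over accuracy in object detection because accuracy needs a count of true negatives, and the number of background regions that contain no object is not well defined. The sketch below computes precision, recall, and the F1 score from matched detection counts; the counts in the example are hypothetical.

```python
from typing import Tuple


def detection_metrics(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Return (precision, recall, F1) from true/false positive and false negative counts."""
    precision = tp / (tp + fp) if (tp + fp) > 0 else 0.0
    recall = tp / (tp + fn) if (tp + fn) > 0 else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2.0 * precision * recall / (precision + recall)
    return precision, recall, f1


if __name__ == "__main__":
    # Hypothetical counts: 90 correct detections, 10 false alarms, 30 misses.
    p, r, f1 = detection_metrics(tp=90, fp=10, fn=30)
    print(round(p, 3), round(r, 3), round(f1, 3))
```

Since the harmonic mean is pulled toward the smaller of the two values, a detector with very high precision but modest recall still receives a moderate F1 score.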

Sumi et al. [76] discuss concerns about excessive violence, misconduct, and weapon use captured by public security cameras, and apply YOLOv5 with data augmentation to weapon detection. The datasets include various weapons, such as rifles, handguns, and blades. These datasets (Custom Dataset, Utility Synthetic Dataset, and Mock Attack Dataset) are used to evaluate the effectiveness of the YOLOv5-based weapon detection system, combining different data augmentation strategies to assess its viability. The method proposed in the article relies on the YOLOv5 (You Only Look Once) algorithm for identifying weapons. The system was trained on an NVIDIA GeForce RTX 2080 Ti GPU with CUDA 11. On these datasets, the proposed framework achieved promising results, attaining 97% mAP with real data and 93% mAP with synthetic data. Future work should focus on expanding the evaluation metrics, as the findings suggest room for improvement despite the high accuracy achieved with YOLOv5 and data augmentation. Overall, the YOLOv5-based framework demonstrated high precision and a more robust backend; however, it still needed improvement in accuracy.

Wang et al. [

17] address real-time weapon detection in CCTV footage, focusing on gun identification, and find that small objects, such as handguns and rifles, are challenging to detect in heavily cluttered scenes that change rapidly under varying lighting conditions. They utilize a synthetic dataset generated using the Unity Game Engine, combined with real-world CCTV footage, and augment images from the OpenImg dataset, resulting in a total of 2582 images of handguns and rifles. The study proposes an improved YOLOv4 (SCSP-ResNet-based, with a receptive field boost) and introduces F-PaNet to enhance small-object detection by expanding spatial information and fusing multi-scale features. Using transfer learning, the method was trained on both synthetic and real-world datasets and achieved a mean Average Precision of 81.75%, an improvement of 7.37% over the baseline, with a 4.2% reduction in inference time when implemented on the Darknet platform. In the future, the researchers plan to experiment with more complex synthetic datasets and to explore the method’s scalability beyond image classification to other computer vision tasks, such as image segmentation and object tracking. SCSP-ResNet YOLOv4 demonstrated robust small-object detection in complex environments; however, dataset complexity and background variation still required improvement.

Arora et al. [

77] discuss the detection of guns and other weapons, a challenging task with many applications in law enforcement, safety, and monitoring. A custom dataset of 9000 images of firearms and heavy weapons, carefully collected from various sources, including the web, public-domain datasets, and private collections, is used to train a YOLOv8-based object detection system. The system performed well on multiple datasets of gun and weapon photos, demonstrating high accuracy. Its ability to recognize weapons reliably is shown by its effectiveness in real-time applications and an average precision of 90.5%. To keep the system effective and relevant, future research should focus on dataset expansion, algorithm improvements, and realistic deployment scenarios. Overall, the YOLOv8 framework successfully identified firearms from customized datasets, with opportunities for improvement through dataset enrichment and algorithm enhancements.

Flores et al. [

15] discuss automated, real-time weapon-detection systems enabled by recent advances in deep learning. These systems primarily use body-pose key-point extraction and object-detection frameworks to extract hand-region data as spatial features and to identify body posture. However, the effectiveness of these methods is limited by their inability to contextualize stance and spatial elements. The study uses the Mobile Guns Dataset and the Monash Guns Dataset, which together comprise 155 videos totaling 6558 video frames. The proposed method starts by detecting people’s poses in a video frame using OpenPose to locate hand areas. After cropping the hand region, spatial features are extracted using DarkNet-53. A binary image of a human stance is generated by placing human pose key points on an image. A convolutional neural network subsequently processes this image to extract features related to human pose. Results clearly show that incorporating temporal information enhances the model’s performance in average accuracy, recall, precision, and F1 scores. Future work may explore other temporal representations, such as using transformers or incorporating additional input features, to enhance the model’s capabilities further. OpenPose and DarkNet53 achieved good temporal performance in firearm detection. Future studies may wish to assess different representations of temporal data.
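
The step in which pose key points are placed on an image to form a binary stance image can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes key points arrive as `(x, y)` pixel coordinates (for example, from a pose estimator such as OpenPose), and the function name, the square marker shape, and the `radius` parameter are hypothetical choices.

```python
import numpy as np


def pose_to_binary_image(keypoints, height, width, radius=1):
    """Rasterize (x, y) key points into a binary image of shape (height, width)."""
    image = np.zeros((height, width), dtype=np.uint8)
    for x, y in keypoints:
        col, row = int(round(x)), int(round(y))
        # Skip key points that fall outside the frame (e.g., undetected joints).
        if not (0 <= row < height and 0 <= col < width):
            continue
        # Mark a small square around the key point, clipped to the image bounds.
        r0, r1 = max(0, row - radius), min(height, row + radius + 1)
        c0, c1 = max(0, col - radius), min(width, col + radius + 1)
        image[r0:r1, c0:c1] = 1
    return image
```

The resulting array can then be passed to a convolutional network in the same way as any single-channel image.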

More et al. [

16] address the problem of real-time detection of violence and weapons in video streams to enhance public safety. The study utilizes a combination of datasets, including the Hockey Fights Dataset and a composite dataset for violence detection, as well as a custom dataset for weapon detection that encompasses various types of weapons, such as guns, shotguns, and rifles. The proposed technique combines Convolutional Neural Networks (CNN) with Bidirectional Long Short-Term Memory (Bi-LSTM) for violence detection and YOLOv8/YOLOv9 for weapon detection. The system processes video frames, extracts spatial and temporal features for violence classification, and identifies weapons using the YOLO algorithm. For violence detection, the models are trained on 30-frame sequences of 100 × 100 pixel frames; for weapon identification, YOLOv8 and YOLOv9 are used. The model achieved 99.85% training accuracy and 98.19% validation accuracy for violence detection, while YOLOv8 achieved a mean Average Precision (mAP) of 0.805 for weapon detection. Future work should optimize the system to reduce computational time while enhancing real-time detection capabilities. Overall, CNN-BiLSTM combined with YOLOv8 or YOLOv9 improved firearm detection accuracy and security; however, other weapon types were not included in the study.
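
To make the input format concrete, the sketch below groups a stream of video frames into fixed-length sequences of the kind a CNN followed by a Bi-LSTM would consume. It is an assumption-laden illustration rather than the authors' pipeline: frames are assumed to be already resized to 100 × 100 pixels, sequences are non-overlapping, leftover frames are dropped, and the function name `make_sequences` is hypothetical.

```python
import numpy as np


def make_sequences(frames, seq_len=30):
    """Group frames of shape (N, H, W, C) into (N // seq_len, seq_len, H, W, C)."""
    frames = np.asarray(frames)
    n_seq = len(frames) // seq_len
    # Drop trailing frames that do not fill a complete sequence.
    usable = frames[: n_seq * seq_len]
    return usable.reshape((n_seq, seq_len) + frames.shape[1:])
```

For example, 65 RGB frames of 100 × 100 pixels yield two sequences, and the last five frames are discarded. Overlapping (sliding-window) sequences are a common alternative when more training samples are needed.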

Nadeem et al. [

78] address, as their first research question, a key topic: the acquisition and handling of real data for violence detection in computer vision applications. The dataset used is the Weapon Violence Dataset (WVD), which includes various types of weapons and violence scenarios, all generated from GTA V. The WVD employs optical flow, specifically the dense Gunnar Farnebäck technique. In terms of study design, the experimental setup ensures the systematic and accurate creation and use of synthetic data for violence detection, while also recognizing the strengths and limitations of using such data. For hot violence detection, the researchers achieved 87% accuracy on scenes involving firearms and explosives using models trained on the WVD. The stated limitations and future work indicate that models should be validated with real data to ensure proper functioning in real-world environments. The WVD’s initial detection capability was scalable; however, issues with authenticity and randomness reduced the dataset’s reliability.

Valliappan et al. [

18] present research on audio-based gun detection, which is particularly useful when visual data are unavailable. The researchers introduce a dataset comprising 1174 audio samples from 12 types of firearms, collected from sources such as the Gunshot Audio Dataset and the Gunshot Audio Forensics Dataset, and classify it using YAMNet, a deep learning model for audio classification. Audio signals were converted to Mel spectrograms, an image-like time-frequency representation that YAMNet can analyze. Transfer learning was applied to YAMNet, fine-tuning the model to identify gun types with 94.96% accuracy. Its high accuracy and robustness to noisy audio inputs make it valuable for forensics and real-time applications. Because the model uses transfer learning, it relies less on large amounts of labeled audio data, which improves efficiency. The work highlights potential future improvements, such as enhancing accuracy by expanding audio datasets and exploring more optimized model architectures for deployment on embedded audio devices in real-world scenarios. Audio-visual cues enhanced classification accuracy when both YAMNet and InceptionV3 were utilized; however, the audio data would require more samples and greater diversity.
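
A Mel spectrogram spaces its frequency bands evenly on the Mel scale rather than in hertz, which gives finer resolution at low frequencies. The sketch below shows only that frequency mapping, using the widely used conversion mel = 2595 · log10(1 + f/700). It is a generic illustration and does not reproduce YAMNet's own preprocessing, whose parameters are not given in the reviewed text; the function names and the example band count are assumptions.

```python
import math


def hz_to_mel(freq_hz):
    """Convert a frequency in Hz to the Mel scale."""
    return 2595.0 * math.log10(1.0 + freq_hz / 700.0)


def mel_to_hz(mel):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_center_frequencies(n_mels, f_min, f_max):
    """Center frequencies (Hz) of n_mels bands spaced evenly on the Mel scale."""
    lo, hi = hz_to_mel(f_min), hz_to_mel(f_max)
    step = (hi - lo) / (n_mels + 1)
    return [mel_to_hz(lo + step * i) for i in range(1, n_mels + 1)]
```

Calling `mel_center_frequencies(4, 0.0, 8000.0)` returns four centers whose spacing in hertz widens toward the high end. A full Mel spectrogram would additionally apply a short-time Fourier transform and triangular filters around these centers.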

Yadav et al. [

79] investigate the identification of weapons in CCTV videos under low-light conditions at night. The dataset used is the Custom Nighttime Weapon Dataset, obtained from the internet, which consists of 15,367 images captured in low-light environments or at night. The weapons include pistols and handguns. The approach combines YOLOv7 with a brightness enhancement feature to improve weapon detection at night, addressing a gap in the literature on night-time weapon detection. The resulting YOLOv7-DarkVision model improved accuracy at night by approximately 10% over the standard YOLOv7, achieving a precision of 95.50% and an F1-score of 93.41%. Overall, the model achieved good precision and solid performance in low-light and nighttime conditions, but it still faces limitations when deployed in extremely low-light conditions.
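
The reviewed text does not specify which brightness enhancement is used, so the following is only a stand-in to illustrate the general idea of brightening a dark frame before detection. It applies gamma correction, a common and simple technique; the function name and the default `gamma` value are assumptions, and the actual YOLOv7-DarkVision enhancement may differ.

```python
import numpy as np


def gamma_brighten(image, gamma=0.5):
    """Brighten an 8-bit image with gamma correction (gamma < 1 lifts dark pixels)."""
    if gamma <= 0:
        raise ValueError("gamma must be positive")
    # Scale to [0, 1], apply the power curve, then scale back to [0, 255].
    normalized = image.astype(np.float32) / 255.0
    brightened = np.power(normalized, gamma)
    return np.clip(brightened * 255.0, 0, 255).round().astype(np.uint8)
```

With `gamma=0.5`, black and white pixels are unchanged while mid-dark pixels are lifted substantially, which is the behavior wanted for night-time footage. The enhanced frame would then be passed to the detector in place of the raw frame.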

You et al. [

80] argue that, for military decision-making and assessing firepower threats, fine-grained detection of military targets is essential because it can provide more precise and comprehensive battlefield situational data. To address the issues of imprecise categorization and low detection accuracy in fine-grained troop target detection, the researchers collected data by extracting images from the Internet, war films, and other sources. They used the Label Image tool to annotate the targets and developed a fine-grained detection dataset of army targets. To improve detection accuracy and reduce misclassification of army targets across various weapons, they propose a fine-grained detection method based on the YOLOv8-AD network. This approach accurately identifies and classifies soldiers armed with different weapons, including firearms and rocket launchers. The Soldier Target dataset achieves an mAP50 of 79.6% with YOLOv8-AD, as indicated by comparative experimental results. The method has an average detection time of 11.2 ms per image, with detection accuracy and recall rates of 75.7% and 74%, respectively, on 1333 random untrained images. It offers a novel approach to automatic weapons and security monitoring, enabling fine-grained detection of military targets. By incorporating temporal data and balancing sample distribution, the researchers aim to mitigate training difficulties in future research and enhance real-time detection performance and efficiency. YOLOv8-AD provided a high level of secure, efficient weapon monitoring. However, the complexity of training makes it difficult to optimize fully.

Table 3 summarizes the earlier studies on gun detection surveyed above.

6. AI-Enhanced Smart City Surveillance—Weapon Detection: Gun and Knife

CCTV cameras were initially used to record live footage from the coverage area, enabling monitoring of individual activities. Artificial intelligence for criminal detection, such as identifying guns and knives in video surveillance, has garnered significant interest from security experts and law enforcement agencies in recent years. Additionally, improving the effectiveness and reliability of video surveillance systems for disaster and crime prevention greatly benefits from the use of artificial intelligence (AI) and deep learning.

Figure 10 illustrates the process of detecting weapons, such as guns and knives, using AI or related techniques.

Grega et al. [

29] address the challenge of automatically detecting firearms and knives in CCTV footage, reducing reliance on human operators and enabling rapid response in the event of a threat. The researchers collected their own dataset of CCTV footage, focusing on images involving firearms, especially handguns, and knives. They propose a Haar Cascade classifier integrated with OpenCV that primarily detects weapons near human silhouettes within the frame, adding contextual relevance to the detection. The method uses MPEG-7 visual descriptors to enhance object recognition, aiming to balance sensitivity and specificity. The algorithm was tested on real CCTV footage under various conditions, including low resolution and inconsistent lighting. Training and test sets were created from 8.5 min of footage, with each set containing approximately 12,000 frames. Forty percent of each set consisted of positive examples (firearms in plain sight), and sixty percent consisted of negative examples (no guns, but other objects in hand). The results were strong, with firearm detection achieving nearly zero false positives and knife detection exceeding the specificity of similar studies. When edge histogram features are employed, accuracy improves to 91%. Ongoing work aims to further optimize detection for additional weapon types and to enhance real-time performance. The hybrid CNN and image processing method enabled effective real-time performance with an adequate accuracy of 90%; however, occasional false positives detracted from its reliability.
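
The idea of restricting weapon detections to the vicinity of human silhouettes can be sketched as a simple post-filter. This is a hypothetical illustration, not the authors' algorithm: it assumes both weapon and person detections are available as `(x1, y1, x2, y2)` boxes, and it keeps a weapon box only if its center falls inside a person box enlarged by a `margin` fraction. The function names and the margin value are assumptions.

```python
def near_person(weapon_box, person_box, margin=0.2):
    """True if the weapon box center lies inside the person box grown by margin."""
    px1, py1, px2, py2 = person_box
    pad_x = (px2 - px1) * margin
    pad_y = (py2 - py1) * margin
    cx = (weapon_box[0] + weapon_box[2]) / 2
    cy = (weapon_box[1] + weapon_box[3]) / 2
    return (px1 - pad_x <= cx <= px2 + pad_x) and (py1 - pad_y <= cy <= py2 + pad_y)


def filter_by_context(weapon_boxes, person_boxes, margin=0.2):
    """Keep only weapon detections that are close to at least one person."""
    return [w for w in weapon_boxes
            if any(near_person(w, p, margin) for p in person_boxes)]
```

A filter of this kind trades some recall (for example, a weapon lying on a table is discarded) for fewer false positives on background objects.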

Navalgund et al. [

30] report that CCTV cameras are frequently used to reduce crime in an area. Despite the installation of CCTV in both public and private spaces to monitor the environment, crime rates have not decreased, even though the use of weapons is strictly prohibited in places such as ATMs, banks, and specific public areas. The system is tested using datasets of videos and images collected from YouTube and Google, which include burglary, murder, and other illegal activities involving weapons. The proposed method employs the pre-trained deep learning model VGG-19, which detects guns and knives in a person’s hand and identifies when they are pointed at another person. Additionally, the researchers examined how other pre-trained models, such as GoogleNet InceptionV3, performed during training. VGG-19 achieved higher training accuracy, reaching 91%, 93%, and 92%, respectively. Compared with other current crime-detection methods, the proposed approach yields promising results, although the researchers do not extend the analysis to a broader comparison across approaches. FRCNN with VGG-19 achieved adequate accuracy and recall, thereby improving overall detection performance; however, additional training samples were necessary for successful generalization.

Dwivedi et al. [