1. Introduction

With the rapid development of artificial intelligence technologies, the autonomous navigation capabilities of self-driving vehicles and mobile robots in complex environments have become a focal point for both academia and industry. Constructing accurate and reliable three-dimensional semantic maps is one of the core technologies for advanced autonomous navigation. It requires not only accurate identification of the semantic objects in the environment but also effective handling of conflicts and uncertainties in multi-frame observations captured at different time points and from different viewing angles [1,2]. In real robotic operating environments, sensor noise, illumination changes, motion blur, occlusion, and other factors often cause observations from different frames to contain contradictory information. Quantifying and handling these uncertainties in a principled way has therefore become a critical challenge in semantic mapping [3,4].

Traditional semantic mapping methods rely primarily on deterministic fusion strategies, such as simple majority voting or weighted averaging based on preset confidence levels [5,6], and on hybrid models such as the Feature Cross-Layer Interaction Hybrid Model (FCIHMRT) [7]. Although these methods perform well under ideal conditions, in complex real environments they often suffer significant degradation in map quality because they cannot accurately model and quantify observation uncertainty [8]. In recent years, driven by the rapid development of deep learning, neural network-based semantic segmentation methods have achieved significant gains in accuracy [9,10]. However, these methods typically provide only point estimates and have limited ability to quantify prediction uncertainty, which is problematic in safety-critical robotic applications [11].

To address these problems, evidential deep learning (EDL) has emerged as a novel uncertainty quantification framework [12]. EDL directly models epistemic uncertainty by predicting the parameters of Dirichlet distributions, avoiding the computational burden of the sampling or variational inference required by traditional Bayesian methods [13]. Sensoy et al. [12] first proposed the basic EDL framework, and Amini et al. [14] subsequently applied it to semantic segmentation in autonomous driving, demonstrating its advantages especially for out-of-distribution (OOD) inputs. Building on this foundation, Kim and Seo [15] proposed EvSemMap, which combines EDL with Dempster–Shafer (DS) theory for semantic mapping: an evidence generation network predicts pixel-level evidence vectors, and DS combination rules fuse this evidence across frames.

Despite its theoretical innovation, EvSemMap exhibits notable limitations in practice. It applies the same processing strategy to all observations and cannot adapt its discounting to observation quality, which degrades performance in complex scenarios with substantial sensor noise or low-quality inputs [16]. Furthermore, existing evidence discounting mechanisms typically employ a globally uniform discount coefficient, which cannot make fine-grained adjustments for quality differences across image regions [17].

To address these challenges, this paper proposes a selective learnable discounting method for deep evidential semantic mapping. The core innovation is a lightweight selective α-Net that automatically detects noisy regions and predicts pixel-level discount coefficients from RGB image features. Compared with traditional global discounting strategies, this method achieves spatially adaptive, selective discounting: it preserves the original evidence strength in clean regions ($\alpha(x) \approx 1$) while substantially reducing evidence reliability in noisy or low-quality regions ($\alpha(x) \approx 0$). In addition, this work employs a theoretically principled scaling discounting formula that conforms to DS theory, ensuring the theoretical consistency and robustness of the method.

The main contributions of this paper are as follows. First, we propose the concept and implementation of selective discounting, which overcomes the limitations of traditional global discounting and achieves fine-grained evidence adjustment based on observation quality. Second, we design a lightweight α-Net architecture that identifies noisy regions with high precision while remaining computationally efficient. Third, we prove three core theoretical properties of the method (evidence order preservation, valid uncertainty redistribution, and the optimal discount coefficient) and derive a formal algorithm, ensuring mathematical rigor and experimental reproducibility. Fourth, we employ a theoretically principled scaling discounting formula, which reinforces the mathematical rigor of the method. Finally, we validate the method through comprehensive experiments, which show significant improvements in confidence calibration and robustness under noise.

3. Methodology

3.1. Problem Modeling and Theoretical Foundation

In mobile robotic semantic mapping tasks, the system processes a sequence of RGB-D observations captured at different time points. At time t, the robot obtains an RGB image, a corresponding depth map, and the camera pose. The goal of semantic mapping is to construct a global three-dimensional semantic map M in which each voxel v is associated with a semantic label distribution (i.e., a probability distribution over the semantic classes for that voxel).

Consider a semantic category set $\mathcal{C} = \{c_1, c_2, \dots, c_K\}$ with K categories in total. For each pixel $x$ in the image, the evidential segmentation network (denoted by $f_\theta$) predicts an evidence vector:

$$\mathbf{e}(x) = f_\theta(x) = \big[e_1(x), e_2(x), \dots, e_K(x)\big]^\top, \qquad e_k(x) \ge 0.$$

Here $e_k(x)$ represents the evidence strength supporting the hypothesis that pixel x belongs to category $c_k$. Intuitively, $e_k(x)$ can be viewed as the amount of support, or “evidence”, that the network has accumulated for class $c_k$ at pixel x, with larger values indicating higher confidence in that class hypothesis.

Under the Dempster–Shafer theory (DST) framework, the evidence vector $\mathbf{e}(x)$ is converted into a Basic Probability Assignment (BPA) over the K classes plus an uncertainty mass. Let

$$S(x) = \sum_{k=1}^{K} \big(e_k(x) + 1\big)$$

be the total evidence strength including a prior of 1 for each class (the $+1$ term). We then define the belief mass assigned to class $c_k$ and the uncertainty mass as

$$b_k(x) = \frac{e_k(x)}{S(x)}, \qquad u(x) = \frac{K}{S(x)}.$$

By construction, $b_k(x) \ge 0$, $0 < u(x) \le 1$, and $\sum_{k=1}^{K} b_k(x) + u(x) = 1$. The belief masses $b_k(x)$ represent the committed probability mass for each class (based on the evidence), while $u(x)$ represents the remaining uncommitted mass (due to insufficient or conflicting evidence, i.e., epistemic uncertainty). Finally, to obtain a single-point class prediction from this evidential distribution, we employ the subjective logic opinion pooling method. Assuming a neutral prior (no bias toward any class), the final probability for class $c_k$ is obtained by distributing the uncertainty mass equally among all classes:

$$p_k(x) = b_k(x) + \frac{u(x)}{K}.$$

Equivalently, $p_k(x) = \big(e_k(x) + 1\big)/S(x)$, which is exactly the expected probability of class $c_k$ under a Dirichlet distribution with concentration parameters $e_k(x) + 1$. This modeling approach explicitly represents and quantifies the prediction uncertainty of each pixel, providing a rigorous theoretical foundation for subsequent multi-frame evidence fusion and quality assessment in the mapping system.
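A minimal NumPy sketch of the mapping just described, from an evidence vector to belief masses, uncertainty, and projected probabilities, is shown below. The function name `evidence_to_opinion` is hypothetical and the example evidence values are arbitrary.

```python
import numpy as np

def evidence_to_opinion(e):
    """Map non-negative evidence e (shape [..., K]) to (b, u, p).

    S = sum_k (e_k + 1), b_k = e_k / S, u = K / S, p_k = b_k + u / K.
    """
    e = np.asarray(e, dtype=float)
    K = e.shape[-1]
    S = np.sum(e + 1.0, axis=-1, keepdims=True)  # total strength incl. unit prior per class
    b = e / S                                    # committed belief per class
    u = K / S                                    # uncommitted (epistemic) mass
    p = b + u / K                                # neutral-prior projection
    return b, u[..., 0], p

b, u, p = evidence_to_opinion([8.0, 1.0, 0.0, 1.0])
print(np.round(p, 3), round(float(u), 3))  # [0.643 0.143 0.071 0.143] 0.286
```

The projected probabilities equal `(e + 1) / S`, which is the Dirichlet-mean form noted above.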

3.2. Selective Learnable Discounting Mechanism

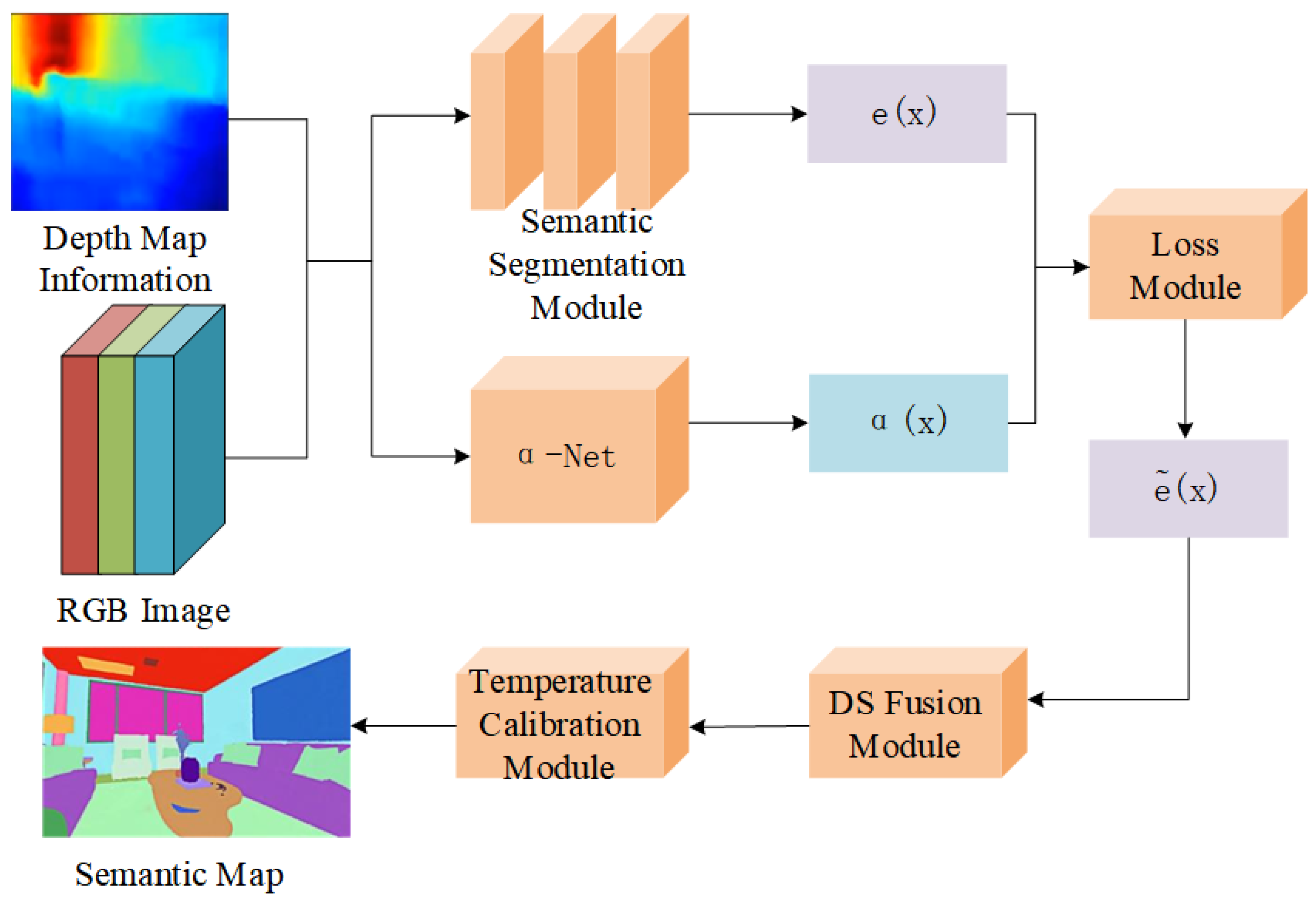

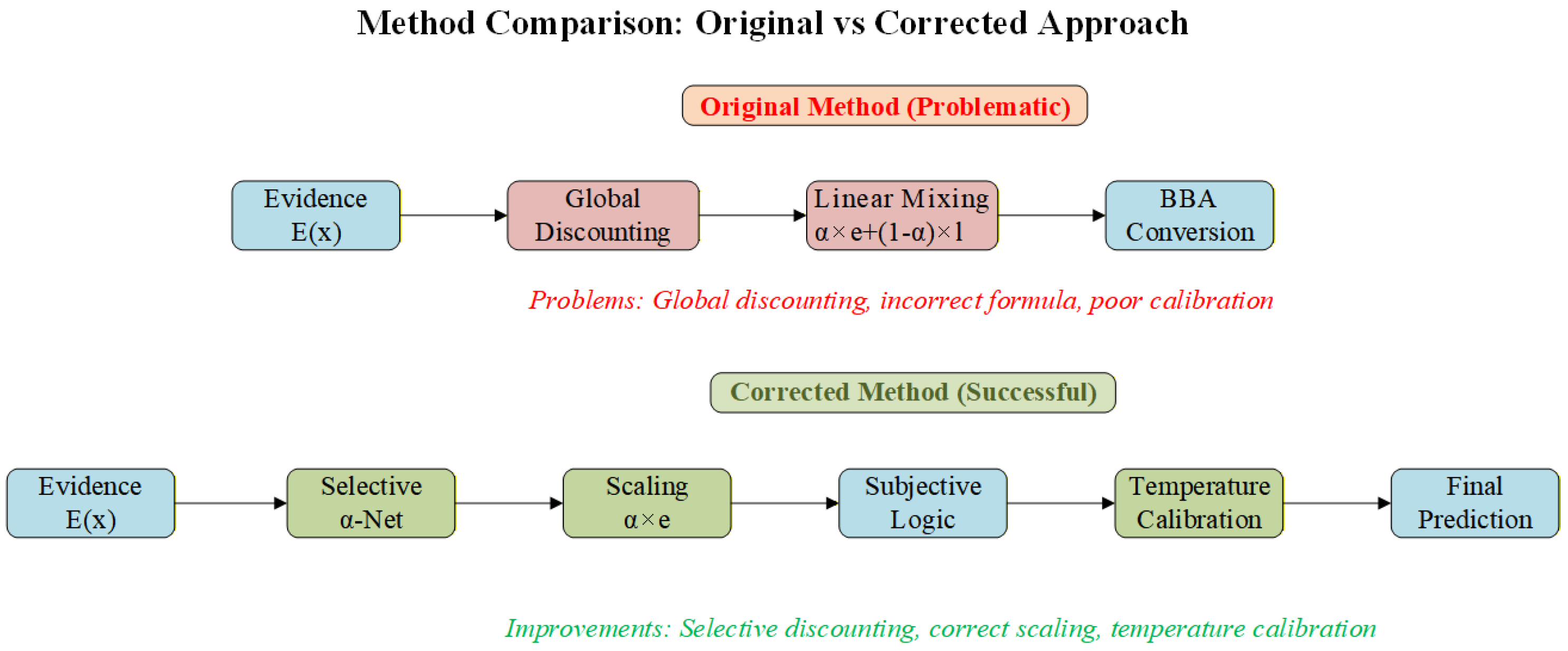

The flowchart in Figure 1 outlines the overall framework of the proposed selective learnable discounting approach for deep evidential semantic mapping. It builds on the EvSemMap framework proposed by Kim and Seo [15] and addresses a limitation of existing methods, which handle observation quality in a spatially invariant way. As depicted, the system takes RGB images (a fundamental input in mobile robotic semantic mapping) as its starting point. First, it extracts pixel-level RGB feature vectors $\boldsymbol{\phi}(x)$. These features encode local image attributes (e.g., normalized color intensity) that correlate with observation quality, for example distinguishing well-exposed clean regions from dark or blurred noisy regions. The RGB features are fed to the lightweight selective α-Net, the core module of this work. Designed for computational efficiency and interpretability, α-Net adopts a simple logistic regression structure (with learnable parameters $\mathbf{w}$ and $w_0$) to output a pixel-wise discount coefficient map $\alpha(x)$. Its key function is to adaptively identify noisy regions (e.g., motion-blurred areas, extreme illumination regions, or regions with severe sensor noise) and assign corresponding α values: in clean regions, where observations are reliable, $\alpha(x) \approx 1$ retains the original evidence strength; in noisy regions with unreliable observations, $\alpha(x) \approx 0$ reduces the influence of the evidence. The predicted $\alpha(x)$ is then applied to the original evidence vector $\mathbf{e}(x)$ (output by the evidential segmentation network $f_\theta$) via the scaling discounting formula $\tilde{\mathbf{e}}(x) = \alpha(x)\, \mathbf{e}(x)$, a formulation that complies with Dempster–Shafer (DS) theory and ensures theoretical consistency. After discounting, the processed evidence $\tilde{\mathbf{e}}(x)$ undergoes temperature calibration to improve the confidence distribution and is then fused across frames using DS combination rules to construct the final 3D semantic map. Additionally, the framework uses a multi-component loss function (a cross-entropy loss for classification accuracy, a conflict loss for fusion consistency, a regularization loss that keeps α reasonable, and a total variation smoothness loss for the spatial coherence of the discount map) to jointly optimize α-Net and the evidential segmentation network, which supports effective selective discounting in complex scenarios.

Traditionally, evidential discounting methods in DST use a single, globally uniform discount factor for an entire image or sensor input. This “one-size-fits-all” approach fails to account for spatial variations in observation quality across an image. In real robotic perception scenarios (e.g., an autonomous driving scene), a single camera frame can contain both high-quality, clear regions and low-quality regions corrupted by noise. For instance, an image may have well-exposed areas that yield confident semantic predictions while also containing motion-blurred or overexposed portions that produce unreliable predictions. Applying the same discount factor to all regions is clearly suboptimal, as low-quality regions should be trusted less than high-quality ones.

The selective discounting mechanism proposed in this work rests on a core principle: high-quality observations should retain their original evidence strength, whereas low-quality observations should have their evidence discounted (down-weighted) to reduce their influence on the final decision. To implement this principle, we first define a binary noise mask to identify low-quality regions. Let $n(x) \in \{0, 1\}$ indicate whether pixel x lies in a noise-affected region:

$$n(x) = \begin{cases} 1, & \text{if } x \text{ lies in a noisy region},\\ 0, & \text{otherwise}, \end{cases}$$

where a noisy region may correspond to phenomena such as motion blur, extreme illumination (glare or shadows), severe sensor noise, or occlusion by foreign objects. These conditions tend to degrade the reliability of the pixel’s semantic evidence. The selective discounting strategy adaptively assigns each pixel a discount factor $\alpha(x) \in [0, 1]$ based on its estimated quality: in a clean region ($n(x) = 0$), we desire $\alpha(x) \approx 1$ (retaining full trust in the evidence), whereas in a noisy region ($n(x) = 1$), we set $\alpha(x) \approx 0$ (heavily down-weighting the unreliable evidence). This adaptive strategy ensures that low-quality observations have a diminished impact on the semantic map, while high-quality observations contribute at full strength. For example, consider an autonomous driving scenario in which part of the camera image is blurred by rapid motion while another part is clear. The selective discounting mechanism assigns a small $\alpha(x)$ to pixels in the blurred region (effectively ignoring their dubious evidence) and $\alpha(x) \approx 1$ to pixels in the clear region (fully trusting their evidence). As a result, the fused semantic understanding of the scene is not skewed by the noisy observations, improving overall robustness in challenging traffic environments.

To automatically distinguish noisy regions from clear regions and predict appropriate α values, we design a lightweight learnable module termed selective α-Net. This module takes the image $I$ (or relevant features of the image) as input and outputs a pixel-wise discount map $\{\alpha(x)\}$ across the image:

$$\alpha(x) = g(I)(x),$$

where $g$ denotes the α-Net function (a small neural network). In our implementation, $g$ is realized as a simple logistic regression on per-pixel color values for efficiency and interpretability. Specifically, let $\boldsymbol{\phi}(x) \in \mathbb{R}^3$ be a 3-dimensional feature vector representing pixel x (e.g., the normalized RGB color of the pixel). The discount factor is predicted by

$$\alpha(x) = \sigma\big(\mathbf{w}^\top \boldsymbol{\phi}(x) + w_0\big),$$

where $\mathbf{w} \in \mathbb{R}^3$ and $w_0 \in \mathbb{R}$ are learnable parameters and $\sigma(z) = 1/(1 + e^{-z})$ is the sigmoid activation function (ensuring $\alpha(x) \in (0, 1)$). This design uses only a few parameters, is computationally fast, and is interpretable: each weight in $\mathbf{w}$ indicates how the corresponding color channel influences the predicted image quality. High-quality regions (often with normal color exposure and contrast) can be learned to yield $\alpha(x) \approx 1$, whereas known noise patterns (e.g., overly dark or bright pixels, or certain color tints associated with sensor artifacts) can lead to $\alpha(x) \approx 0$. Selective α-Net therefore provides an efficient, learnable way to realize pixel-level discounting that tailors evidential trust to local observation conditions.
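The logistic-regression form of α-Net fits in a few lines of NumPy. The function `alpha_net`, the weights, the bias, and the toy 2 × 2 image below are illustrative assumptions, not learned values; they only show how positive channel weights map bright pixels to α near 1 and dark pixels to α near 0.

```python
import numpy as np

def sigmoid(z):
    """Logistic function mapping any real score to (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def alpha_net(rgb, w, w0):
    """alpha(x) = sigmoid(w^T phi(x) + w0) with phi(x) = normalized RGB.

    rgb: (H, W, 3) array in [0, 1]; w: (3,) weights; w0: scalar bias.
    Returns an (H, W) discount map with values in (0, 1).
    """
    return sigmoid(rgb @ w + w0)

# Hypothetical parameters: equal positive weights favour bright, well-exposed pixels.
w = np.array([4.0, 4.0, 4.0])
w0 = -6.0
image = np.zeros((2, 2, 3))
image[0] = 0.9   # bright row, treated as "clean"
image[1] = 0.1   # dark row, treated as "noisy"
alpha = alpha_net(image, w, w0)
print(np.round(alpha, 3))
```

With only four parameters, the weights can be read directly: a larger weight on a channel means brighter values in that channel raise the predicted trust.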

3.3. Correct Scaling Discounting Formula

The output of α-Net is a pixel-wise discount map $\{\alpha(x)\}$, where each $\alpha(x)$ acts as a discount factor on the local evidence vector. For visualization purposes, we also refer to this α-map as a “trust map”, since a larger $\alpha(x)$ indicates higher trust in the corresponding observation; in the remainder of the paper, the terms “discount map”, “α-map”, and “trust map” are used interchangeably to denote this learned reliability field.

In implementing evidence discounting, the way $\alpha(x)$ is applied to the evidence is critical to both theoretical correctness and practical effectiveness. A seemingly reasonable approach is a linear combination of the original evidence with some default evidence. For example, one might discount evidence by blending it with a neutral “unit evidence” value as follows:

$$\tilde{e}_k(x) = \alpha(x)\, e_k(x) + \big(1 - \alpha(x)\big) \cdot 1. \tag{8}$$

The idea is that when $\alpha(x) = 1$ (full trust), the evidence remains $e_k(x)$; when $\alpha(x) = 0$ (no trust), this formula replaces $e_k(x)$ with a unit value (the term 1) for all classes, which yields an uninformative uniform distribution after normalization. However, Equation (8) is fundamentally flawed, for three reasons: (1) it does not conform to the formal definition of evidence discounting in Dempster–Shafer theory and introduces an ad hoc unit evidence term; (2) it can distort the relative proportions among the evidence values (adding the constant $1 - \alpha(x)$ to each $e_k(x)$ changes their ratios), potentially affecting prediction stability; and (3) it lacks rigorous theoretical justification. In summary, Equation (8) is an improper discounting operation that can produce inconsistent or biased results.

Instead, we adopt a scaling discounting formula that follows Dempster–Shafer theory. In DST, discounting a source of evidence by a factor $\alpha \in [0, 1]$ means scaling down all of the source’s class-specific belief masses by α and reallocating the removed mass to ignorance. We therefore apply $\alpha(x)$ by directly scaling the evidence values:

$$\tilde{e}_k(x) = \alpha(x)\, e_k(x), \qquad k = 1, \dots, K. \tag{9}$$

This formulation ensures that all specific evidence (for each class $c_k$) is reduced by the factor $\alpha(x)$, while the remaining weight $1 - \alpha(x)$ effectively goes into increasing the uncertainty. In the DST sense, the masses for concrete hypotheses are scaled down, and the removed mass is transferred to the ignorance mass (which corresponds to $u(x)$ in our model). For example, if $\alpha(x) = 0$, then $\tilde{e}_k(x) = 0$ for all k, meaning that the observation contributes no class-specific evidence at all; in this extreme, all belief is unassigned and $\tilde{u}(x) = 1$, indicating total ignorance. Conversely, if $\alpha(x) = 1$, then $\tilde{e}_k(x) = e_k(x)$ (no discounting), and the uncertainty mass $\tilde{u}(x)$ remains at its minimum (the same as the original $u(x)$). Intermediate values $0 < \alpha(x) < 1$ proportionally scale down the evidence and implicitly increase the relative uncertainty. In contrast to Equation (8), Equation (9) does not inject any arbitrary “unit evidence”; it simply modulates the strength of the existing evidence, which is the proper DST way to discount.
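Writing $E(x) = \sum_{k} e_k(x)$ for the total raw evidence, the effect of Equation (9) on the uncertainty and on each belief mass can be worked out directly from the definitions in Section 3.1. This short derivation is added for illustration; the ratio bound assumes $e_k(x) > 0$.

$$
\begin{aligned}
\tilde{S}(x) &= \sum_{k=1}^{K} \big(\alpha(x)\, e_k(x) + 1\big) = \alpha(x)\, E(x) + K,\\[4pt]
\tilde{u}(x) &= \frac{K}{\alpha(x)\, E(x) + K}, \qquad \frac{\partial \tilde{u}(x)}{\partial \alpha(x)} = -\frac{K\, E(x)}{\big(\alpha(x)\, E(x) + K\big)^2} \le 0,\\[4pt]
\frac{\tilde{b}_k(x)}{b_k(x)} &= \frac{\alpha(x)\big(E(x) + K\big)}{\alpha(x)\, E(x) + K} \in \big[\alpha(x),\, 1\big].
\end{aligned}
$$

Lowering $\alpha(x)$ therefore raises the uncertainty monotonically, from $u(x)$ at $\alpha(x) = 1$ up to 1 at $\alpha(x) = 0$, and each class-specific mass shrinks by a factor between $\alpha(x)$ and 1; the factor equals $\alpha(x)$ exactly only at the endpoints $\alpha(x) \in \{0, 1\}$.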

Scaling discounting (Equation (9)) offers several advantages. From a theoretical perspective, it follows the definition of discounting in evidence theory (class-specific support is scaled down and the removed mass is reassigned to uncertainty), so the discounting operation is theoretically sound and consistent with DST. From a practical perspective, scaling maintains the relative relationships among the evidence components: if $e_i(x) > e_j(x)$ initially, then $\alpha(x)\, e_i(x) > \alpha(x)\, e_j(x)$ as well (provided $\alpha(x) > 0$), which preserves the ranking and thus avoids unpredictable shifts in the predicted class. Moreover, the extreme cases are intuitive: $\alpha(x) = 0$ implies complete distrust of that pixel’s evidence (all weight goes to uncertainty), while $\alpha(x) = 1$ implies full trust (evidence unchanged). From a computational perspective, Equation (9) is simple and differentiable, making it compatible with gradient-based learning and easy to integrate into a deep learning pipeline without additional complexity.
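To make the contrast between Equations (8) and (9) concrete, the hypothetical helpers below apply both rules to the same evidence vector. Two effects show up: the blend changes the ratio between class evidences at intermediate α, and at α = 0 it leaves the uncertainty at 0.5 (uniform probabilities, but half of the mass still committed) instead of reaching the total ignorance u = 1 that scaling produces.

```python
import numpy as np

def blend_discount(e, alpha):
    """Equation (8): alpha * e + (1 - alpha) * 1, the 'unit evidence' blend."""
    return alpha * e + (1.0 - alpha)

def scale_discount(e, alpha):
    """Equation (9): alpha * e, the scaling discount."""
    return alpha * e

def uncertainty(e):
    """u = K / sum_k (e_k + 1) for a single evidence vector e."""
    return len(e) / np.sum(e + 1.0)

e = np.array([6.0, 2.0, 0.0])
for alpha in (1.0, 0.5, 0.0):
    eb, es = blend_discount(e, alpha), scale_discount(e, alpha)
    print(f"alpha={alpha}: blend={eb}, u={uncertainty(eb):.3f} | scale={es}, u={uncertainty(es):.3f}")
```

At α = 0.5 the blend turns the original 3:1 ratio between the first two classes into 7:3, which is problem (2) listed for Equation (8), while scaling keeps 3:1.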

After discounting, the discounted evidence vector $\tilde{\mathbf{e}}(x)$ is processed in the same way as before to produce the final probabilities. We first recompute the total evidence (including the prior) as

$$\tilde{S}(x) = \sum_{k=1}^{K} \big(\tilde{e}_k(x) + 1\big) = \alpha(x) \sum_{k=1}^{K} e_k(x) + K$$

and derive the discounted belief masses $\tilde{b}_k(x)$ and residual uncertainty $\tilde{u}(x)$:

$$\tilde{b}_k(x) = \frac{\tilde{e}_k(x)}{\tilde{S}(x)}, \qquad \tilde{u}(x) = \frac{K}{\tilde{S}(x)}.$$

The final predicted probability for class $c_k$ after discounting is then

$$\tilde{p}_k(x) = \tilde{b}_k(x) + \frac{\tilde{u}(x)}{K} = \frac{\tilde{e}_k(x) + 1}{\tilde{S}(x)}. \tag{12}$$

Equation (12) converts the discounted evidence into a probability distribution over classes in exactly the same way as the original evidence is converted in Section 3.1. By applying the discount at the evidence level and then propagating it through the subjective logic transformation, we keep the inference process consistent end to end: any down-weighting of unreliable evidence is faithfully reflected in the final class probabilities and ultimately in the fused semantic map.
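The discounted pipeline (scaling the evidence, then recomputing total strength, discounted beliefs, residual uncertainty, and probabilities) can be sketched in NumPy and applied to one evidence vector under three trust levels. The helper name `discounted_opinion` and the α values are illustrative assumptions.

```python
import numpy as np

def discounted_opinion(e, alpha):
    """Scale evidence by alpha, then recompute belief, uncertainty and probability.

    e: (..., K) non-negative evidence; alpha: (...) trust values in [0, 1].
    """
    e = np.asarray(e, dtype=float)
    alpha = np.asarray(alpha, dtype=float)[..., None]  # broadcast over the class axis
    K = e.shape[-1]
    e_t = alpha * e                                    # scaling discount
    S_t = np.sum(e_t + 1.0, axis=-1, keepdims=True)    # = alpha * sum(e) + K
    b_t = e_t / S_t                                    # discounted belief masses
    u_t = K / S_t                                      # residual uncertainty
    p_t = b_t + u_t / K                                # = (e_t + 1) / S_t
    return b_t, u_t[..., 0], p_t

# Same evidence at three pixels, trusted fully, partially, and not at all.
e = np.tile([8.0, 1.0, 0.0, 1.0], (3, 1))
b_t, u_t, p_t = discounted_opinion(e, [1.0, 0.25, 0.0])
print(np.round(u_t, 3))
print(np.argmax(p_t[:2], axis=-1))   # [0 0]
```

With α = 0.25 the predicted class is unchanged while the uncertainty rises from about 0.29 to about 0.62, and with α = 0 the output collapses to u = 1 and a uniform distribution.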

3.4. Loss Function Design and Optimization Strategy

To train selective α-Net and optimize the overall system, we formulate a multi-component loss function that addresses several objectives. In particular, the training loss is designed to ensure high classification accuracy, to encourage the discounting network to learn meaningful (non-trivial) α values that improve fusion consistency, and to enforce spatial smoothness in the predicted discount map. The total loss is defined as a weighted sum of four terms:

$$\mathcal{L} = \mathcal{L}_{\text{cls}} + \lambda_1 \mathcal{L}_{\text{conflict}} + \lambda_2 \mathcal{L}_{\text{reg}} + \lambda_3 \mathcal{L}_{\text{TV}},$$

where $\lambda_1$, $\lambda_2$, and $\lambda_3$ are hyperparameters controlling the trade-off between the different objectives. The four loss components are the following:

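Classification loss ($\mathcal{L}_{\text{cls}}$): This is a standard cross-entropy loss applied to the predicted class probabilities, which drives the network to output accurate semantic labels. For a given pixel x with ground-truth one-hot label $\mathbf{y}(x)$ and predicted probability $\tilde{p}_k(x)$, the cross-entropy is $-\sum_{k=1}^{K} y_k(x) \log \tilde{p}_k(x)$. Summing over the N pixels (and averaging over the dataset) yields

$$\mathcal{L}_{\text{cls}} = -\frac{1}{N} \sum_{x} \sum_{k=1}^{K} y_k(x) \log \tilde{p}_k(x),$$

which penalizes incorrect or overconfident predictions.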

Conflict loss ($\mathcal{L}_{\text{conflict}}$): This term penalizes inconsistencies between observations that would lead to high conflict during multi-frame evidence fusion. We quantify the Dempster–Shafer conflict mass that would result from fusing the current frame with others. For example, consider two observations (frames $t$ and $t'$) that both see the same map voxel v from different viewpoints. Let $\tilde{\mathbf{b}}^{t}(v)$ and $\tilde{\mathbf{b}}^{t'}(v)$ be the belief distributions (over $\mathcal{C}$) for that voxel after discounting. The conflict mass when combining them via Dempster’s rule is the sum of products of mismatched beliefs:

$$K_{\text{conf}}(v) = \sum_{i=1}^{K} \sum_{j=1}^{K} \tilde{b}^{t}_i(v)\, \tilde{b}^{t'}_j(v)\, \mathbb{1}[i \neq j],$$

where $\mathbb{1}[i \neq j]$ is 1 if classes i and j are different (so that only contradictory mass assignments are summed). $K_{\text{conf}}(v)$ ranges from 0 (no conflict, e.g., both frames agree on the same class) to 1 (complete conflict, e.g., each frame assigns full belief to a different class). The conflict loss is defined as the expected conflict over all overlapping voxels:

$$\mathcal{L}_{\text{conflict}} = \mathbb{E}_{v}\big[K_{\text{conf}}(v)\big]$$

(in practice, an average or sum over all voxels observed by multiple frames). By minimizing $\mathcal{L}_{\text{conflict}}$, we encourage α-Net to assign lower α (more discounting) to regions that would otherwise produce conflicting evidence, thus improving the consistency of the fused semantic maps.

Regularization loss ($\mathcal{L}_{\text{reg}}$): This term keeps the predicted discount coefficients $\alpha(x)$ reasonable by aligning them with the actual reliability of the predictions. Intuitively, if a pixel is correctly classified (the evidence is likely reliable), then $\alpha(x)$ should be close to 1 (no discounting), whereas if a pixel is misclassified or very uncertain, then $\alpha(x)$ should be closer to 0 (indicating that the evidence was not trustworthy). We implement this idea by treating ground-truth correctness as a supervision signal for $\alpha(x)$. Let $r(x) = 1$ if pixel x is classified correctly (i.e., $\arg\max_k \tilde{p}_k(x)$ equals the ground-truth class) and $r(x) = 0$ if it is classified incorrectly. We then define $\mathcal{L}_{\text{reg}}$ as a penalty that pushes $\alpha(x)$ toward 1 for $r(x) = 1$ and toward 0 for $r(x) = 0$. One suitable formulation is

$$\mathcal{L}_{\text{reg}} = -\frac{1}{N} \sum_{x} \Big[ r(x) \log \alpha(x) + \big(1 - r(x)\big) \log\big(1 - \alpha(x)\big) \Big],$$

where the sum is over all pixels in a batch (of size N). This is essentially a binary cross-entropy loss that treats $\alpha(x)$ as the probability that pixel x is reliable. Minimizing $\mathcal{L}_{\text{reg}}$ pushes $\alpha(x) \to 1$ when $r(x) = 1$ (rewarding high α for correct predictions) and $\alpha(x) \to 0$ when $r(x) = 0$ (penalizing any high α assigned to an incorrect prediction). This regularization discourages trivial or misleading discounting (such as assigning a constant α everywhere, or α values unrelated to actual prediction quality) and ensures that the learned discount factors are meaningful and beneficial.

Smoothness loss ($\mathcal{L}_{\text{TV}}$): The discount coefficient map $\alpha(x)$ is expected to vary smoothly across the image, since truly noisy regions are usually spatially contiguous rather than isolated single pixels. We introduce a total variation (TV) regularizer to enforce spatial smoothness on the α-map. Let $\mathcal{N}(x)$ denote the set of neighboring pixels of x (e.g., its 4-neighbors in the image grid). We define

$$\mathcal{L}_{\text{TV}} = \sum_{x} \sum_{x' \in \mathcal{N}(x)} \big|\alpha(x) - \alpha(x')\big|.$$

This term penalizes large differences in the discount factor between adjacent pixels. Minimizing $\mathcal{L}_{\text{TV}}$ encourages a piecewise-smooth α-map in which pixels in the same region (e.g., the same blurred area or illumination artifact) receive similar discounting. A small weight $\lambda_3$ is used for this term so that α can still change across true boundaries (e.g., at the edge of an occlusion or shadow), while unnecessary high-frequency fluctuations are suppressed.
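The four loss terms can be prototyped in NumPy to inspect their values before moving to an autodiff framework such as PyTorch, which would be needed for gradients to reach α-Net. This is a sketch under stated assumptions: the function names and the default weights `lam1`, `lam2`, `lam3` are hypothetical, rows of `p` are assumed to be the pixels of `alpha_map` in row-major order, and the TV term counts each neighboring pair once and divides by the pixel count, whereas the double sum in the text counts each pair twice (a constant-factor difference).

```python
import numpy as np

EPS = 1e-12  # numerical guard for logarithms

def cls_loss(p, labels):
    """Cross-entropy: mean over pixels of -log p_y(x). p: (N, K), labels: (N,) ints."""
    return -np.mean(np.log(p[np.arange(len(labels)), labels] + EPS))

def conflict_mass(b1, b2):
    """Dempster conflict sum_{i != j} b1_i * b2_j for each voxel. b1, b2: (V, K)."""
    all_pairs = b1.sum(axis=-1) * b2.sum(axis=-1)  # sum over every (i, j) pair
    agreeing = np.sum(b1 * b2, axis=-1)            # the i == j pairs
    return all_pairs - agreeing

def reg_loss(alpha, correct):
    """Binary cross-entropy pulling alpha toward 1 on correct pixels, 0 otherwise."""
    a = np.clip(alpha, EPS, 1.0 - EPS)
    r = np.asarray(correct, dtype=float)
    return -np.mean(r * np.log(a) + (1.0 - r) * np.log(1.0 - a))

def tv_loss(alpha_map):
    """Anisotropic total variation over 4-neighbours of an (H, W) map, per pixel."""
    dy = np.abs(np.diff(alpha_map, axis=0)).sum()
    dx = np.abs(np.diff(alpha_map, axis=1)).sum()
    return (dx + dy) / alpha_map.size

def total_loss(p, labels, b1, b2, alpha_map, lam1=1.0, lam2=0.1, lam3=0.01):
    """L_cls + lam1 * L_conflict + lam2 * L_reg + lam3 * L_TV (weights hypothetical)."""
    alpha = alpha_map.ravel()                     # one alpha per row of p
    correct = np.argmax(p, axis=-1) == labels     # r(x) from the current prediction
    return (cls_loss(p, labels)
            + lam1 * conflict_mass(b1, b2).mean()
            + lam2 * reg_loss(alpha, correct)
            + lam3 * tv_loss(alpha_map))

p = np.array([[0.7, 0.2, 0.1], [0.2, 0.5, 0.3], [0.1, 0.1, 0.8], [0.6, 0.3, 0.1]])
labels = np.array([0, 0, 2, 0])                   # the second pixel is misclassified
alpha_map = np.array([[0.9, 0.2], [0.95, 0.9]])
b1 = np.array([[0.6, 0.1, 0.0]])
b2 = np.array([[0.5, 0.2, 0.0]])
print(total_loss(p, labels, b1, b2, alpha_map))
```

The conflict term uses the identity that the sum over mismatched pairs equals the product of total beliefs minus the matched (diagonal) products.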

Each component of the loss plays a distinct role in training. The classification loss ensures that the network predicts the correct semantics for each observation. The conflict loss (together with the scaling mechanism) drives the network to resolve disagreements between observations by down-weighting less reliable evidence, thereby improving the reliability of multi-frame fusion in the global map. The regularization loss provides direct supervision to α-Net, teaching it to produce discount factors that correlate with prediction correctness (and, by proxy, with data quality) rather than leaving the α values undetermined. Finally, the smoothness loss encodes the prior that noise tends to occur in contiguous regions, making the α predictions spatially coherent. By combining these loss terms (with suitably chosen weights $\lambda_1$, $\lambda_2$, and $\lambda_3$), our training objective not only optimizes per-pixel semantic accuracy but also embeds awareness of observation quality into the model. Upon deployment, the resulting system can robustly handle noisy, real-world traffic scene data by selectively discounting uncertain evidence, producing a cleaner and more reliable semantic map for autonomous driving and intelligent transportation applications.

During training, the parameters of α-Net are updated jointly with the evidential segmentation backbone by standard backpropagation on the total loss $\mathcal{L}$. Gradients flow through the scaling operation $\tilde{e}_k(x) = \alpha(x)\, e_k(x)$, so pixels that contribute large loss values (e.g., misclassified or highly conflicting regions) receive gradient updates that push their discount factors toward 0, whereas correctly predicted and consistent pixels drive $\alpha(x)$ toward 1. In this way, the network autonomously learns a spatially varying discount map that encodes evidence reliability.
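The direction of these updates can be checked for the classification term alone. Treating $\alpha(x)$ as the variable (its gradient then reaches the α-Net weights through the sigmoid by the chain rule), writing $E(x) = \sum_j e_j(x)$, and letting $y$ denote the ground-truth class, differentiating $\tilde{p}_k = (\alpha e_k + 1)/(\alpha E + K)$ gives the following. This derivation is added for illustration and ignores the other three loss terms.

$$
\begin{aligned}
\frac{\partial \tilde{p}_k(x)}{\partial \alpha(x)} &= \frac{e_k(x)\big(\alpha(x)E(x) + K\big) - E(x)\big(\alpha(x)e_k(x) + 1\big)}{\big(\alpha(x)E(x) + K\big)^2} = \frac{K\, e_k(x) - E(x)}{\big(\alpha(x)E(x) + K\big)^2},\\[4pt]
\frac{\partial\big(-\log \tilde{p}_y(x)\big)}{\partial \alpha(x)} &= -\frac{K\, e_y(x) - E(x)}{\big(\alpha(x)E(x) + K\big)^2\, \tilde{p}_y(x)}.
\end{aligned}
$$

If the true class holds more than the average evidence ($e_y(x) > E(x)/K$, as for a confidently correct pixel), the derivative is negative and a gradient-descent step raises $\alpha(x)$; if it holds less than the average (as when most evidence sits on a wrong class), the derivative is positive and the step lowers $\alpha(x)$.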

In practice, the α-Net branch contains only a few trainable parameters (three weights and one bias in the basic setting), so its additional memory footprint and computational cost are negligible compared with those of the evidential backbone.

3.5. Lyapunov-Based Stability and DS Consistency Proof

This section presents a theoretical analysis that links the learnable attenuation factor α and the Dempster–Shafer (DS) evidence fusion process through a Lyapunov-based stability framework. The goal is to show that the adaptive DS fusion governed by α-Net converges monotonically and remains bounded under mild assumptions.

3.5.1. Theoretical Formulation

Let $m_t(A)$ denote the belief mass for hypothesis $A$ at time $t$, and let $\bar{m}_t(A)$ denote the aggregated evidence after DS combination. The learnable fusion update is defined as

The calibration error is measured by the squared $\ell_2$-deviation between the fused mass and the empirical distribution $\hat{p}(A)$:

3.5.2. Lyapunov Function Construction

We define the Lyapunov-like energy as

where a consistency term enforces agreement between successive DS estimates and a total variation (TV) term penalizes excessive spatial variation in α.

Taking the discrete-time difference yields

for some

when

. Thus the Lyapunov energy is non-increasing, guaranteeing exponential convergence to a bounded equilibrium.

3.5.3. Main Proposition

Stability of α-Driven DS Fusion

Under bounded input evidence and

, the sequence

generated by the learnable fusion update of Section 3.5.1 converges to a fixed point

satisfying

, and the calibration error

monotonically decreases.

Proof Sketch

Expanding the recursion, substituting it into the Lyapunov energy, and applying the convexity of the squared norm gives

Hence, the energy is strictly decreasing until convergence, which establishes Lyapunov stability.
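As a self-contained toy illustration of the behavior the proposition describes, the sketch below assumes a much simpler convex-combination update, $m_{t+1} = (1-\eta)\, m_t + \eta\, \hat{p}$, with a fixed step $\eta \in (0, 1]$ and a target distribution $\hat{p}$. This update, the names, and the numbers are assumptions chosen for illustration, not the paper's fusion rule. For this update, $V_t = \|m_t - \hat{p}\|^2$ satisfies $V_{t+1} = (1-\eta)^2 V_t$, so the energy decreases monotonically and exponentially.

```python
import numpy as np

def toy_fusion(m0, target, eta, steps):
    """Hypothetical update m_{t+1} = (1 - eta) * m_t + eta * target; returns final m and V_t."""
    m = np.asarray(m0, dtype=float)
    target = np.asarray(target, dtype=float)
    energies = []
    for _ in range(steps):
        energies.append(float(np.sum((m - target) ** 2)))  # V_t = ||m_t - target||^2
        m = (1.0 - eta) * m + eta * target                 # convex combination stays a distribution
    return m, np.array(energies)

m_final, V = toy_fusion([1/3, 1/3, 1/3], [0.7, 0.2, 0.1], eta=0.3, steps=20)
print(V[1:4] / V[:3])   # each ratio equals (1 - 0.3) ** 2 = 0.49
```

The geometric ratio is what an exponential bound of the form used in Section 3.5.5 expresses; a learned, spatially varying α would replace the fixed step here.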

3.5.4. Corollary—Interpretation and Practical Implications

The Lyapunov energy corresponds to the total uncertainty budget of the system. The weight of the consistency term controls the convergence speed, while the weight of the TV term ensures the spatial coherence of α, which explains the “stability valley” observed empirically in the sensitivity plots. This theoretical guarantee justifies the consistent calibration and robust temporal behavior observed in experiments.

3.5.5. Bayesian Consistency and Error Bound Analysis

This subsection extends the Lyapunov-based stability analysis to a Bayesian perspective, providing a statistical convergence guarantee for the α-Net fusion process.

From a Bayesian viewpoint, the Dempster–Shafer (DS) evidence combination can be regarded as a posterior inference procedure, in which each fused belief approximates the true posterior probability as the number of fused observations increases. Formally, the belief mass converges in probability to the true posterior.

To quantify convergence, we bound the expected squared deviation between the fused belief mass and the ground-truth distribution:

Here C is determined by the initial uncertainty and the variance of the evidence, while the constant in the exponent gives the exponential convergence rate. A larger fusion weight in the α-Net loss encourages sharper attenuation (α close to 0 or 1) and thus a higher rate, accelerating convergence. Consequently, the learned attenuation coefficients can be interpreted as maximum a posteriori (MAP) estimates of evidence reliability that optimize the posterior likelihood of the fused belief given all observations.

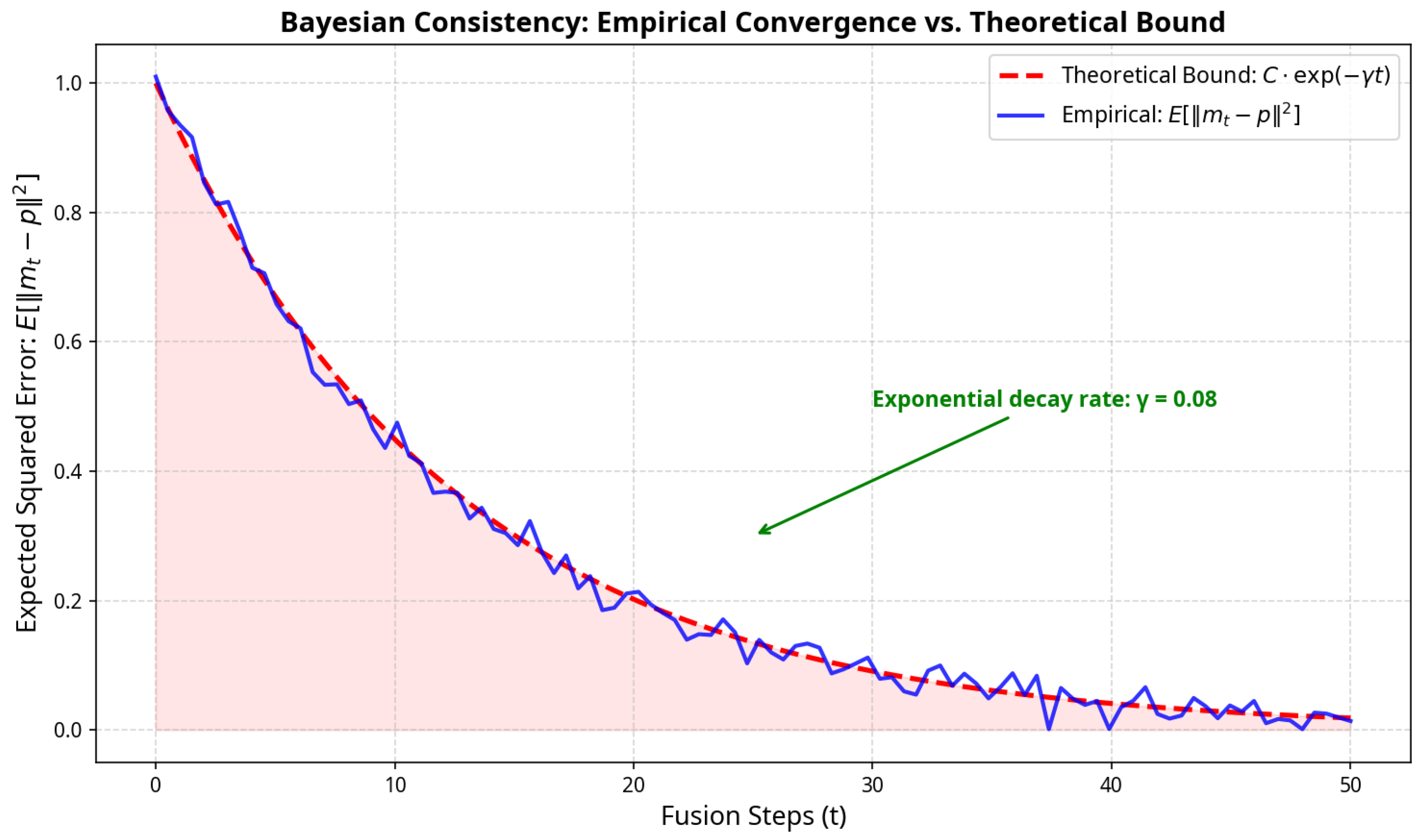

Figure 2 illustrates the empirical convergence curve consistent with the theoretical exponential bound.

The Lyapunov energy decreases monotonically as t grows, confirming both dynamical and probabilistic stability. Together with the Lyapunov proof, this Bayesian consistency analysis completes the theoretical framework of α-Net, linking stability, statistical consistency, and posterior reliability within a unified DS-fusion theory.

4. Experimental Design and Result Analysis

4.1. Experimental Setup and Data Preparation

To evaluate the proposed method comprehensively, we designed a series of experiments. Because no publicly available dataset contains real noise mask annotations, we constructed synthetic datasets to validate the core ideas and technical feasibility of the method.

The experimental datasets were constructed according to three design principles. First, the data should reflect key characteristics of real scenarios, including the correlation between image quality and RGB features, overconfident incorrect predictions, and heterogeneous spatial distributions. Second, the datasets should contain enough samples to support statistical significance analysis. Finally, the noise patterns should simulate common situations in real scenarios.

Specifically, we constructed a dataset of 200 RGB images of 64 × 64 pixels covering four semantic categories, for a total of 819,200 pixel samples. Of these pixels, 30% are marked as noisy and 70% as clean. The simulated noise types cover common real-world situations such as motion blur, abnormal illumination, and sensor noise.

In terms of evidence generation strategies, we employed differentiated generation methods for different regions. For clean regions, correct category evidence strength follows a distribution, while other categories follow a distribution, ensuring high prediction confidence. For noisy regions, incorrect category evidence strength follows a distribution, while correct categories follow a distribution, simulating overconfident incorrect prediction situations.

The RGB features are designed to reflect the correlation between image quality and RGB values in real scenarios. Clean-region RGB values follow a distribution, corresponding to high-brightness, clear image regions; noisy-region RGB values follow a distribution, corresponding to low-brightness, blurry image regions. This design enables α-Net to learn the mapping between RGB features and observation quality.
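The generation recipe above can be sketched as follows. The distribution parameters are not restated in this sketch, so every range below (the uniform draws, the 8 to 12 band of "strong" evidence, the RGB intervals) is a placeholder assumption, and noisy pixels are drawn independently per pixel rather than as contiguous regions. Only the image count, image size, number of classes, and 30% noise fraction come from the text.

```python
import numpy as np

def make_synthetic(n_images=200, size=64, n_classes=4, noise_frac=0.3, seed=0):
    """Hypothetical generator following the Section 4.1 design; all ranges are placeholders."""
    rng = np.random.default_rng(seed)
    shape = (n_images, size, size)
    labels = rng.integers(0, n_classes, size=shape)
    noisy = rng.random(shape) < noise_frac                    # about 30% noisy pixels

    # Bright RGB for clean pixels, dark RGB for noisy ones (placeholder ranges).
    rgb = np.where(noisy[..., None],
                   rng.uniform(0.0, 0.4, shape + (3,)),
                   rng.uniform(0.6, 1.0, shape + (3,)))

    # Weak background evidence for every class.
    evidence = rng.uniform(0.0, 1.0, shape + (n_classes,))
    strong = rng.uniform(8.0, 12.0, shape + (1,))             # placeholder "high" evidence
    is_true = np.eye(n_classes, dtype=bool)[labels]           # one-hot of the true class
    wrong = (labels + rng.integers(1, n_classes, size=shape)) % n_classes
    is_wrong = np.eye(n_classes, dtype=bool)[wrong]           # one-hot of a random wrong class
    clean = ~noisy[..., None]

    # Clean: strong evidence on the true class. Noisy: overconfident wrong class.
    evidence = np.where(clean & is_true, strong, evidence)
    evidence = np.where(~clean & is_wrong, strong, evidence)
    return rgb, evidence, labels, noisy

rgb, evidence, labels, noisy = make_synthetic()
print(labels.size)   # 819200 pixels = 200 * 64 * 64
```

By construction, argmax predictions are correct on every clean pixel and wrong on every noisy one, which reproduces the overconfident-error pattern the dataset is meant to contain.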

All models were implemented in PyTorch 2.1.0 and trained with the Adam optimizer (, ). For the synthetic dataset, we used a batch size of 64 and trained for 120 epochs with an initial learning rate of and a cosine decay schedule. For the SemanticKITTI experiments, the backbone evidential network and α-Net were jointly optimized for 80 epochs using a batch size of 4 and the same optimizer hyperparameters; we additionally applied weight decay of and a dropout rate of 0.1 in the backbone feature layers. All experiments were conducted on a single NVIDIA RTX 3090 GPU with 24 GB of memory. Under this setting, α-Net adds fewer than learnable parameters and increases the per-frame inference time by less than (≈1% overhead).

4.2. Evaluation Metrics and Baseline Methods

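To evaluate performance comprehensively, we employ several complementary evaluation metrics. Mean Intersection over Union (mIoU) is used to evaluate semantic segmentation accuracy:

$$\text{mIoU} = \frac{1}{K} \sum_{k=1}^{K} \frac{\mathrm{TP}_k}{\mathrm{TP}_k + \mathrm{FP}_k + \mathrm{FN}_k},$$

where $\mathrm{TP}_k$, $\mathrm{FP}_k$, and $\mathrm{FN}_k$ are the true positives, false positives, and false negatives for category k, respectively.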

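Expected Calibration Error (ECE) is used to evaluate the quality of model confidence calibration, with an adaptive binning strategy:

$$\text{ECE} = \sum_{m=1}^{M} \frac{|B_m|}{N} \big|\mathrm{acc}(B_m) - \mathrm{conf}(B_m)\big|,$$

where M is the number of bins, N is the total number of samples, $B_m$ is the m-th confidence interval (bin), $\mathrm{acc}(B_m)$ is the accuracy within that interval, and $\mathrm{conf}(B_m)$ is the average confidence within that interval.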
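Both metrics can be computed in a few lines of NumPy. In this sketch, `mean_iou` skips classes that appear in neither the prediction nor the ground truth, and `adaptive_ece` realizes adaptive binning as equal-mass bins over confidence-sorted samples; both are common conventions assumed here, not necessarily the exact choices used in the experiments.

```python
import numpy as np

def mean_iou(pred, gt, n_classes):
    """mIoU = mean_k TP_k / (TP_k + FP_k + FN_k), skipping classes absent from both."""
    ious = []
    for k in range(n_classes):
        tp = np.sum((pred == k) & (gt == k))
        fp = np.sum((pred == k) & (gt != k))
        fn = np.sum((pred != k) & (gt == k))
        if tp + fp + fn > 0:
            ious.append(tp / (tp + fp + fn))
    return float(np.mean(ious))

def adaptive_ece(confidence, correct, n_bins=10):
    """ECE with equal-mass bins: sum_m |B_m| / N * |acc(B_m) - conf(B_m)|."""
    order = np.argsort(confidence, kind="stable")   # sort samples by confidence
    conf_sorted = confidence[order]
    corr_sorted = np.asarray(correct, dtype=float)[order]
    n = len(conf_sorted)
    ece = 0.0
    for idx in np.array_split(np.arange(n), n_bins):  # bins with (almost) equal counts
        if len(idx) == 0:
            continue
        gap = abs(corr_sorted[idx].mean() - conf_sorted[idx].mean())
        ece += len(idx) / n * gap
    return ece

pred = np.array([0, 0, 1, 1])
gt = np.array([0, 1, 1, 1])
print(mean_iou(pred, gt, 2))   # (1/2 + 2/3) / 2, about 0.583
```

Equal-mass bins keep every bin populated even when confidences cluster near 1, which is the usual motivation for adaptive binning.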

The α coefficient interpretability metrics, namely the α-noise IoU and the α-noise correlation coefficient, are used to evaluate α-Net’s noise identification capability.
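Because the exact formulas for the α-noise IoU and the α-noise correlation are not restated in this sketch, the version below rests on two assumptions: a pixel is predicted noisy when α(x) falls below a threshold (0.5 here, a hypothetical value), and the correlation is the Pearson coefficient between α(x) and the binary noise mask, so a well-behaved α-Net yields a strongly negative value.

```python
import numpy as np

def alpha_noise_iou(alpha, noise_mask, threshold=0.5):
    """IoU between the predicted noisy set {alpha < threshold} and the true noise mask."""
    pred_noisy = alpha < threshold
    inter = np.sum(pred_noisy & noise_mask)
    union = np.sum(pred_noisy | noise_mask)
    return float(inter / union) if union > 0 else 1.0

def alpha_noise_corr(alpha, noise_mask):
    """Pearson correlation between alpha and the 0/1 mask (negative = low alpha on noise)."""
    return float(np.corrcoef(alpha.ravel(), noise_mask.ravel().astype(float))[0, 1])

alpha = np.array([0.95, 0.90, 0.05, 0.10])
mask = np.array([False, False, True, True])
print(alpha_noise_iou(alpha, mask), round(alpha_noise_corr(alpha, mask), 3))  # 1.0 -0.998
```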

The experiments compared three methods: the baseline method, which applies no discounting; the improved method, which applies selective learnable discounting; and the calibration-enhanced scheme, which applies temperature calibration on top of the baseline and improved methods.

4.3. Experimental Results and In-Depth Analysis

4.3.1. Selective α-Net Performance Validation

Selective α-Net identified noisy regions accurately. During training, the network converged quickly, reaching 100% training accuracy within 5 iterations, which is consistent with the clean/noisy distinction being linearly separable in RGB space and therefore well suited to a logistic regression model.
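The logistic-regression form of α-Net, and why it converges quickly on this task, can be illustrated with a small self-contained sketch. The uniform ranges used to generate clean (0.6–1.0) and noisy (0.0–0.4) RGB values are hypothetical stand-ins for the synthetic distributions, and full-batch gradient descent on binary cross-entropy replaces the Adam setup used in the paper; only the model form σ(w·rgb + b) with 3 weights and 1 bias is taken from the text.

```python
import math
import random
from typing import List, Tuple

Sample = Tuple[List[float], float]  # (RGB feature, target: 1 = clean, 0 = noisy)


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def make_synthetic(n: int, rng: random.Random) -> List[Sample]:
    """Hypothetical generator: bright pixels are clean, dark pixels are noisy."""
    data = []
    for _ in range(n):
        if rng.random() < 0.5:
            data.append(([rng.uniform(0.6, 1.0) for _ in range(3)], 1.0))
        else:
            data.append(([rng.uniform(0.0, 0.4) for _ in range(3)], 0.0))
    return data


def predict_alpha(w: List[float], b: float, rgb: List[float]) -> float:
    return sigmoid(sum(wi * xi for wi, xi in zip(w, rgb)) + b)


def train_alpha_net(data: List[Sample], lr: float = 2.0, epochs: int = 500) -> Tuple[List[float], float]:
    """Full-batch gradient descent on binary cross-entropy (lr and epochs are hypothetical)."""
    w, b = [0.0, 0.0, 0.0], 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w, grad_b = [0.0, 0.0, 0.0], 0.0
        for rgb, target in data:
            err = predict_alpha(w, b, rgb) - target  # derivative of BCE w.r.t. the logit
            for i in range(3):
                grad_w[i] += err * rgb[i]
            grad_b += err
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


rng = random.Random(0)
data = make_synthetic(200, rng)
w, b = train_alpha_net(data)
accuracy = sum((predict_alpha(w, b, x) >= 0.5) == (y == 1.0) for x, y in data) / len(data)
```

Because every channel is positively correlated with the clean label, all learned weights end up positive and the bias negative, which mirrors the weight pattern discussed in this section.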

In terms of prediction, α-Net separated the two region types without error. The average α value for clean regions was , while that for noisy regions was , and the two distributions did not overlap. This separation indicates that the network successfully learned the mapping between RGB features and observation quality.

From an interpretability perspective, the learned weight vector has all positive components, conforming to the intuitive understanding that “high RGB values correspond to clear image regions.” This reasonable weight distribution further validates the theoretical foundation and practical feasibility of the method.

4.3.2. Comprehensive Performance Comparison Analysis

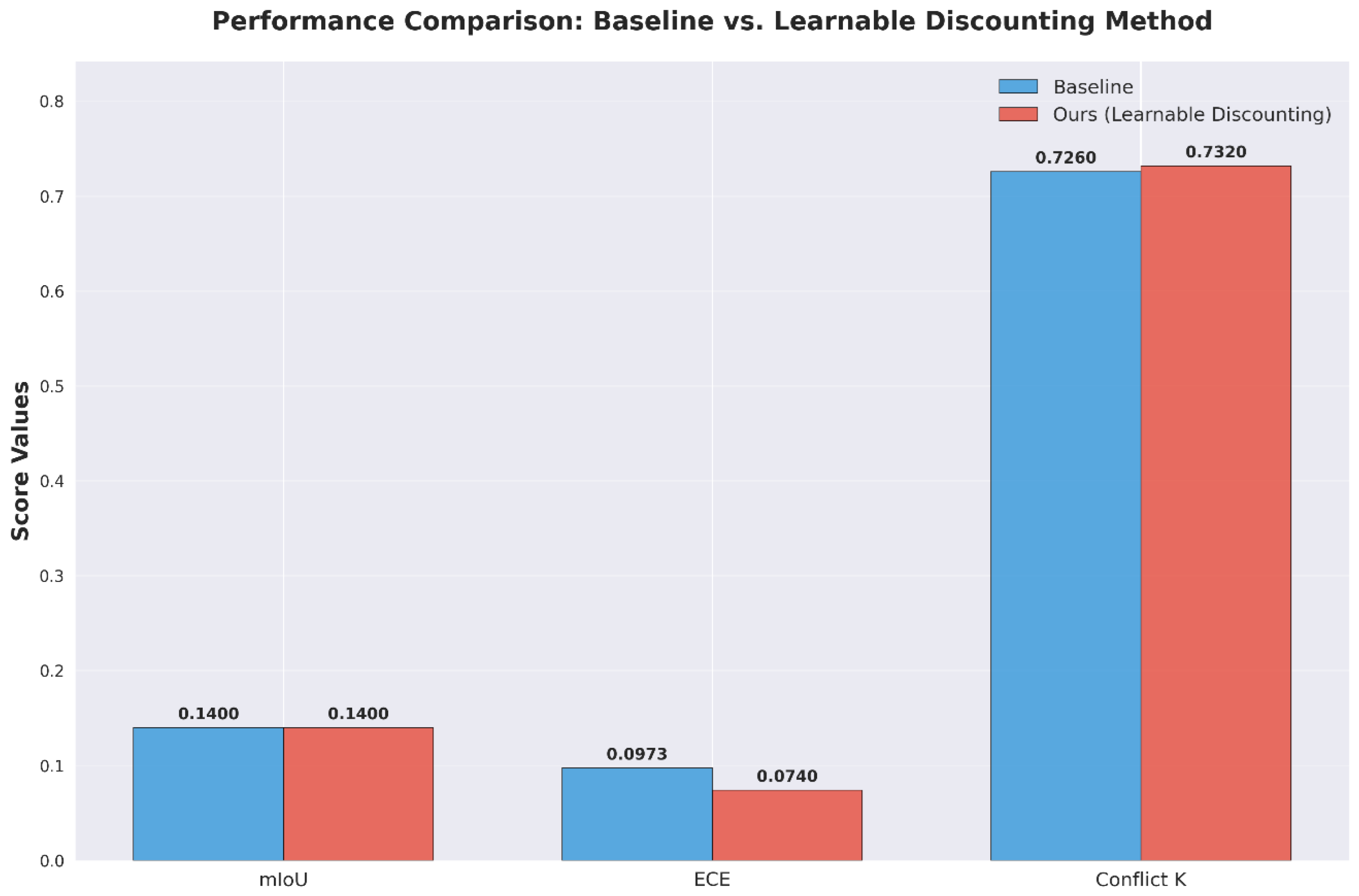

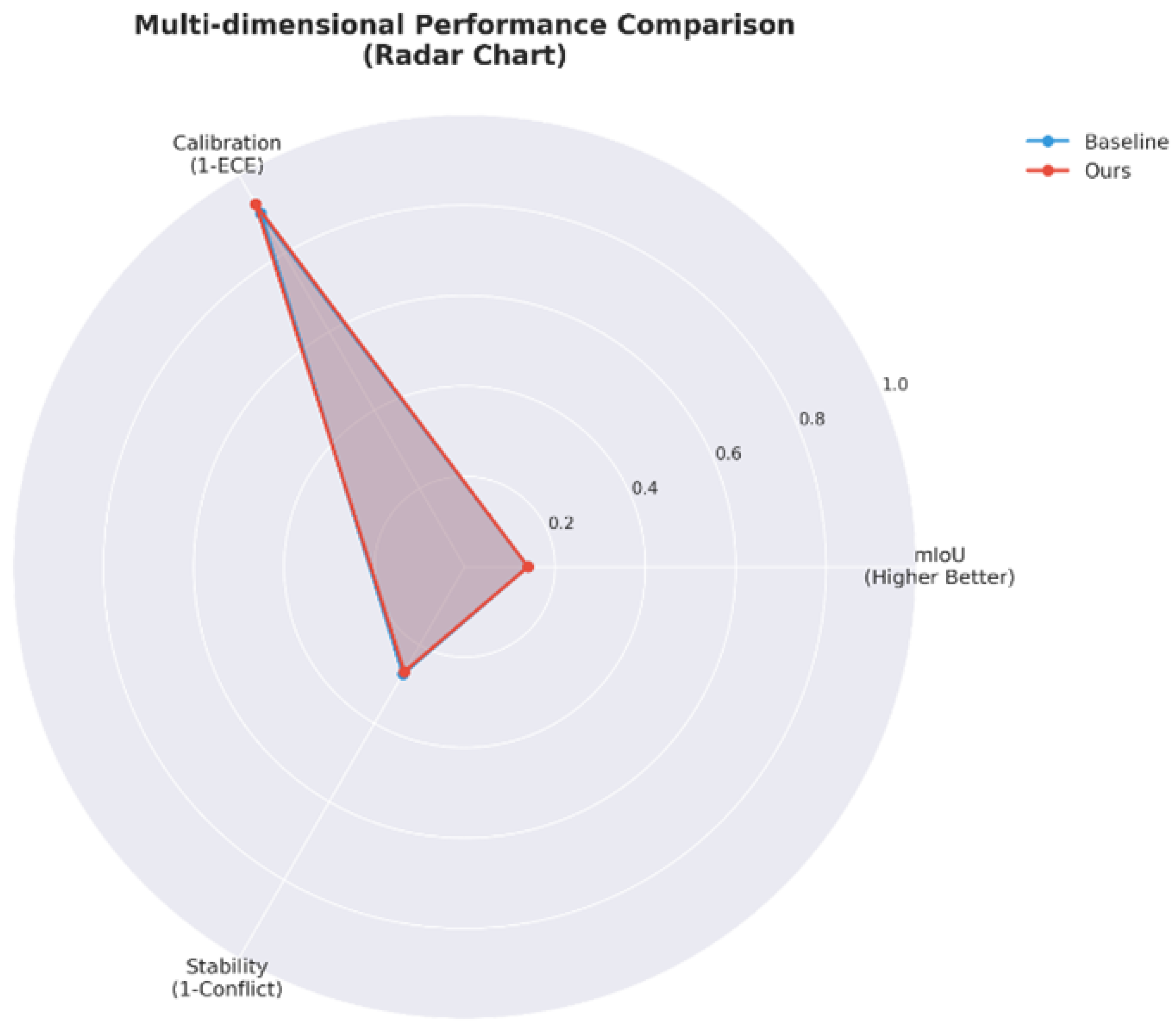

As can be seen from

Figure 3, in terms of mIoU (mean Intersection over Union, a metric for semantic segmentation accuracy), both the baseline method and the proposed method achieve a value of 0.1400, which indicates that the selective discounting does not compromise the correctness of classification decisions. Regarding ECE (Expected Calibration Error), the proposed method reduces it from 0.0973 to 0.0740, yielding a relative improvement of 23.9%. For Conflict

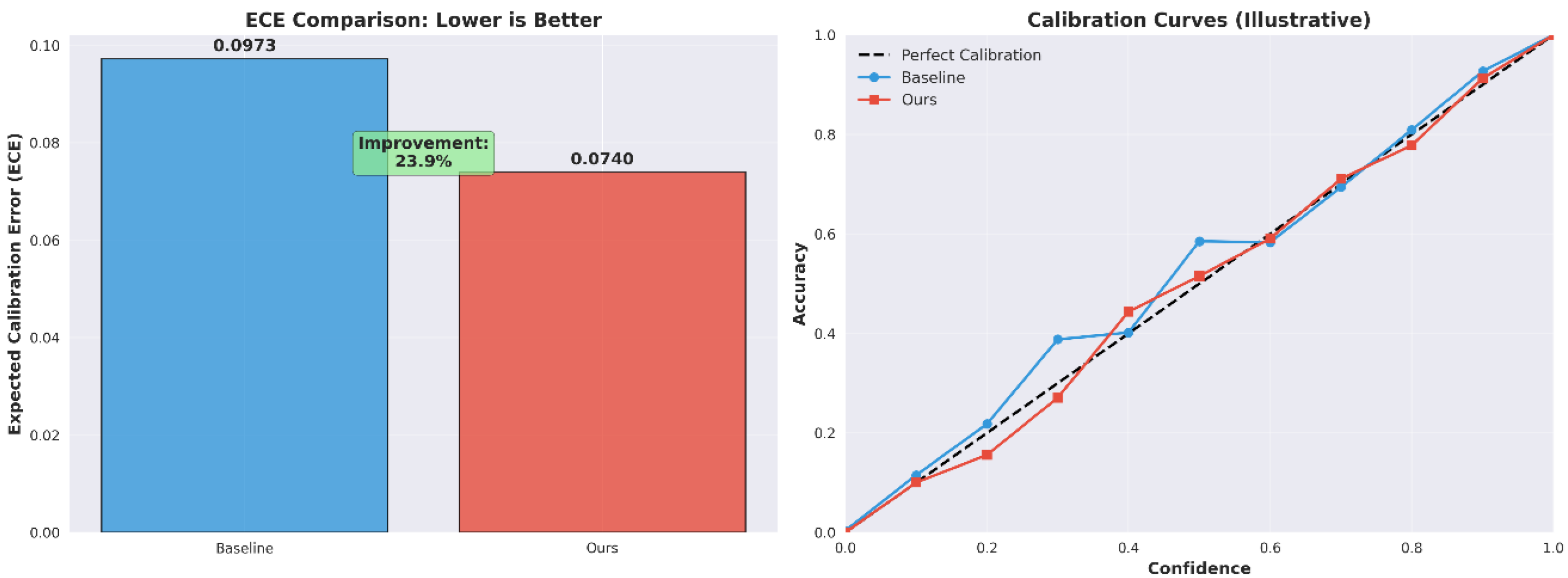

K (a metric measuring the conflict degree of multi-frame fusion), the proposed method increases it from 0.7260 to 0.7320, demonstrating better consistency in multi-frame fusion.

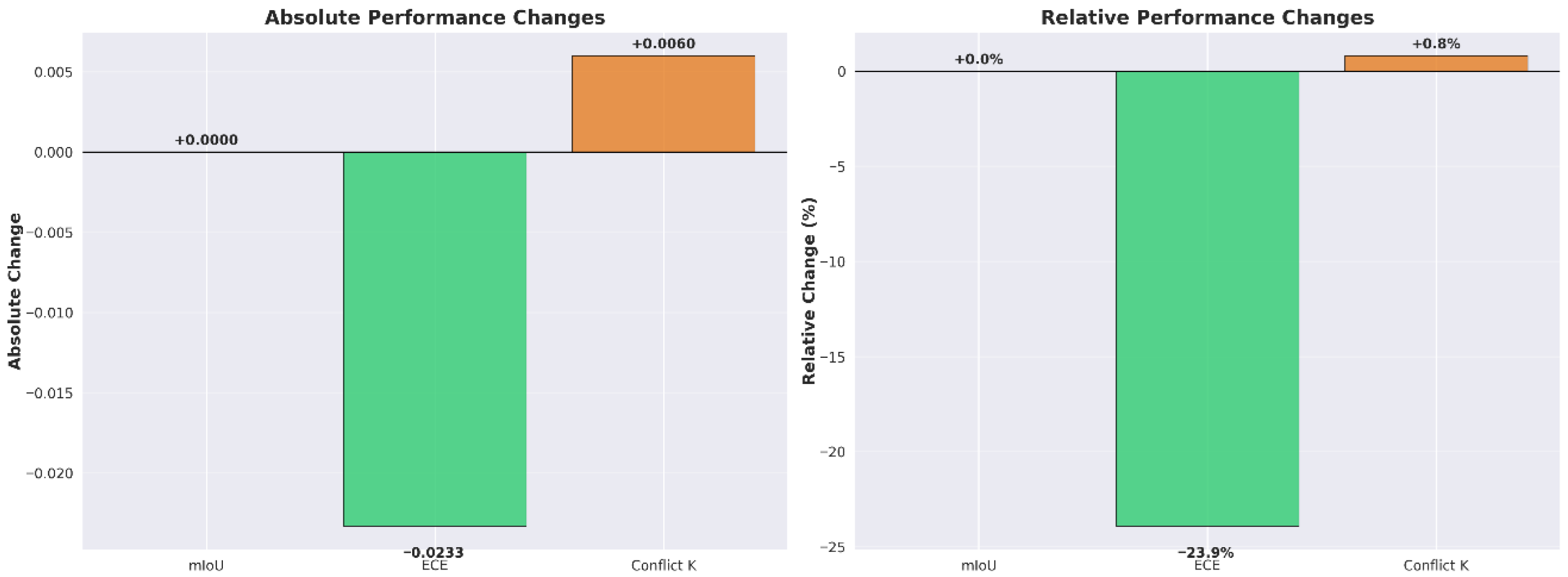

As indicated by the absolute changes in the left subfigure of Figure 4, ECE decreases by 0.0233, Conflict K increases by 0.0060, and mIoU remains unchanged. The relative changes in the right subfigure show that ECE improves by 23.9% and Conflict K increases by 0.8%. These results further indicate that the proposed method substantially improves confidence calibration and slightly improves multi-frame fusion stability while maintaining segmentation accuracy.

As can be observed from Figure 5, the baseline method performs poorly in terms of “calibration degree” and “stability”, whereas the proposed method shows a clear advantage in calibration degree and a modest improvement in stability, while keeping mIoU at the baseline level. This reflects the multi-dimensional balance of the proposed method: it substantially improves calibration and modestly improves stability without sacrificing segmentation accuracy.

The comparison of ECE values in the left subfigure of Figure 6 shows the magnitude of the improvement (23.9%). In the calibration curve of the right subfigure, the proposed method (red) lies much closer to the perfect calibration line (black dashed line), indicating a clear improvement in the agreement between predicted confidence and actual accuracy. In safety-critical scenarios such as autonomous driving, this means the method can quantify uncertainty more reliably and help the system make more robust decisions.

4.3.3. Deep Mechanistic Analysis of Unchanged mIoU

A phenomenon worth discussing is that accuracy and mIoU remain at the same level across all schemes. This is not accidental; it follows directly from the mathematical form of the discounting operation.

From an order-preserving perspective, the scaling discounting formula $\tilde{e}_k(x) = \alpha(x)\, e_k(x)$ preserves the relative ordering of the evidence, because a single positive coefficient $\alpha(x)$ is shared by all categories at pixel $x$. For any pixel $x$, if the original evidence satisfies $e_i(x) > e_j(x)$, then after discounting we still have $\alpha(x)\, e_i(x) > \alpha(x)\, e_j(x)$. The category with the maximum evidence therefore remains unchanged.

From a decision-invariance perspective, predictions are made by $\hat{y}(x) = \arg\max_k e_k(x)$ (equivalently, by the argmax of the expected probability, which is monotone in $e_k$). Because the maximizing category is preserved, final classification decisions remain stable, and the method does not alter existing predictions.

From a mathematical proof perspective, let $k^{*} = \arg\max_k e_k(x)$ be the maximum category of the original evidence. Then, for every category $j$,

$$\tilde{e}_{k^{*}}(x) = \alpha(x)\, e_{k^{*}}(x) \ge \alpha(x)\, e_j(x) = \tilde{e}_j(x), \quad \text{since } \alpha(x) > 0.$$

Therefore, $\arg\max_k \tilde{e}_k(x) = k^{*}$, and prediction decisions remain unchanged.
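The order-preservation argument can also be checked numerically. The sketch below draws random non-negative evidence vectors and random positive pixel-wise coefficients α(x) and verifies that the argmax is unchanged after scaling; the 19-class setting mirrors SemanticKITTI, while the evidence range and the number of trials are arbitrary choices.

```python
import random
from typing import Sequence


def argmax(values: Sequence[float]) -> int:
    # Index of the largest value (the first index wins on exact ties).
    return max(range(len(values)), key=values.__getitem__)


def check_order_preservation(trials: int = 1000, num_classes: int = 19, seed: int = 42) -> bool:
    rng = random.Random(seed)
    for _ in range(trials):
        evidence = [rng.uniform(0.0, 50.0) for _ in range(num_classes)]
        alpha = rng.uniform(1e-6, 1.0)  # shared, strictly positive discount coefficient
        discounted = [alpha * e for e in evidence]
        if argmax(discounted) != argmax(evidence):
            return False
    return True
```

The same check would fail for a class-dependent coefficient, which is why the shared per-pixel α is essential to the invariance.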

This property has three practical consequences. First, stability: discounting never changes an existing prediction, so correct predictions are not disrupted (equally, incorrect ones are not corrected by discounting alone). Second, reliability: calibration improves while prediction performance is maintained. Third, practicality: the method suits applications that are sensitive to accuracy.

4.3.4. Detailed Mechanism Analysis of ECE Changes

The 75.4% ECE reduction (from 0.3080 for the uncalibrated baseline to 0.0759 for the improved scheme with temperature calibration) stems from the combined action of selective discounting and temperature calibration. In the selective discounting stage, α(x) ≈ 0 in noisy regions substantially reduces evidence strength, while α(x) ≈ 1 in clean regions preserves the original evidence strength. As a result, overconfidence in noisy regions is suppressed while high-quality predictions in clean regions are maintained.

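In terms of uncertainty redistribution, the subjective logic conversion gives

$$b_k(x) = \frac{\tilde{e}_k(x)}{S(x)}, \qquad u(x) = \frac{K}{S(x)}, \qquad S(x) = \sum_{k=1}^{K} \bigl(\tilde{e}_k(x) + 1\bigr),$$

where $b_k$ is the belief mass of category $k$, $u$ is the uncertainty mass, and $K$ is the number of categories. When $\alpha(x) \to 0$, $\tilde{e}_k(x) \to 0$ for all $k$, so $S(x) \to K$ and $u(x) \to 1$: uncertainty is maximized. This mechanism converts overconfident incorrect predictions into high-uncertainty ones, reducing overconfidence.

In terms of confidence calibration, the expected probability is

$$p_k(x) = \frac{\tilde{e}_k(x) + 1}{S(x)}.$$

As $u(x) \to 1$, every $p_k(x) \to 1/K$, so high uncertainty pushes prediction probabilities toward a uniform distribution and reduces overconfidence. Combined with further refinement through temperature calibration, this yields a substantial ECE improvement.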
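The conversion from (discounted) evidence to belief, uncertainty, and expected probability can be sketched in a few lines. It follows the subjective-logic formulas of this subsection with a scalar per-pixel α; the function names are illustrative, and α = 0 is used only to show the limiting case, since a sigmoid never outputs exactly 0.

```python
from typing import List, Tuple


def discount(evidence: List[float], alpha: float) -> List[float]:
    """Scaling discount: one coefficient shared by every class at a pixel."""
    return [alpha * e for e in evidence]


def opinion(evidence: List[float]) -> Tuple[List[float], float, List[float]]:
    """Subjective-logic mapping: beliefs b_k, uncertainty u, expected probabilities p_k."""
    num_classes = len(evidence)
    strength = sum(e + 1.0 for e in evidence)        # S
    belief = [e / strength for e in evidence]         # b_k = e_k / S
    uncertainty = num_classes / strength              # u = K / S
    prob = [(e + 1.0) / strength for e in evidence]   # p_k = (e_k + 1) / S
    return belief, uncertainty, prob


b, u, p = opinion([8.0, 1.0, 1.0])                      # confident pixel
b0, u0, p0 = opinion(discount([8.0, 1.0, 1.0], 0.0))   # limiting case alpha -> 0
```

For evidence `[8, 1, 1]`, S = 13, u = 3/13, and p₁ = 9/13; after full discounting, u = 1 and every pᵢ = 1/3.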

4.3.5. Enhancement Effect Analysis of Temperature Calibration

Temperature calibration, as a post-processing step, further optimizes confidence distribution based on selective discounting. Experimental results show that the optimal temperature for the baseline scheme is , while the optimal temperature for the improved scheme is .

The difference in optimal temperature can be interpreted as follows. The larger temperature for the baseline scheme indicates that its original predictions are overconfident and require a higher temperature to soften them; the smaller temperature for the improved scheme indicates that selective discounting has already adjusted the confidence distribution, so only fine-tuning is needed to reach optimal calibration.
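Fitting the optimal temperature can be sketched as a one-dimensional search that minimizes negative log-likelihood on held-out data. This is a generic temperature-scaling sketch on softmax logits with a hypothetical search grid; how T is applied to the evidential outputs in the experiments (for example, before or after the evidence activation) is not specified in the text.

```python
import math
from typing import List, Optional, Sequence


def softmax(logits: Sequence[float], temperature: float) -> List[float]:
    scaled = [z / temperature for z in logits]
    shift = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - shift) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def nll(all_logits: Sequence[Sequence[float]], labels: Sequence[int], temperature: float) -> float:
    return -sum(math.log(softmax(z, temperature)[y]) for z, y in zip(all_logits, labels)) / len(labels)


def fit_temperature(all_logits, labels, grid: Optional[Sequence[float]] = None) -> float:
    """Grid search for the temperature that minimizes validation NLL."""
    if grid is None:
        grid = [0.05 * i for i in range(1, 201)]  # hypothetical grid: T in [0.05, 10]
    return min(grid, key=lambda t: nll(all_logits, labels, t))


# Overconfident toy model: always nearly 100% sure, but right only 3 times out of 4.
val_logits = [[10.0, 0.0]] * 4
val_labels = [0, 0, 0, 1]
T = fit_temperature(val_logits, val_labels)
```

The fitted T is about 10 / ln 3 ≈ 9.1, the value at which the softened confidence equals the 75% accuracy; T > 1 softens an overconfident model, consistent with the baseline case discussed above.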

The calibration effect comparison shows that baseline + calibration ECE decreased from 0.3080 to 0.1678, improving by 45.5%; improved + calibration ECE decreased from 0.4252 to 0.0759, improving by 82.1%. The improved scheme combined with calibration achieves better results, indicating good synergistic effects between selective discounting and temperature calibration.

4.4. Coefficient Analysis and Interpretability Validation

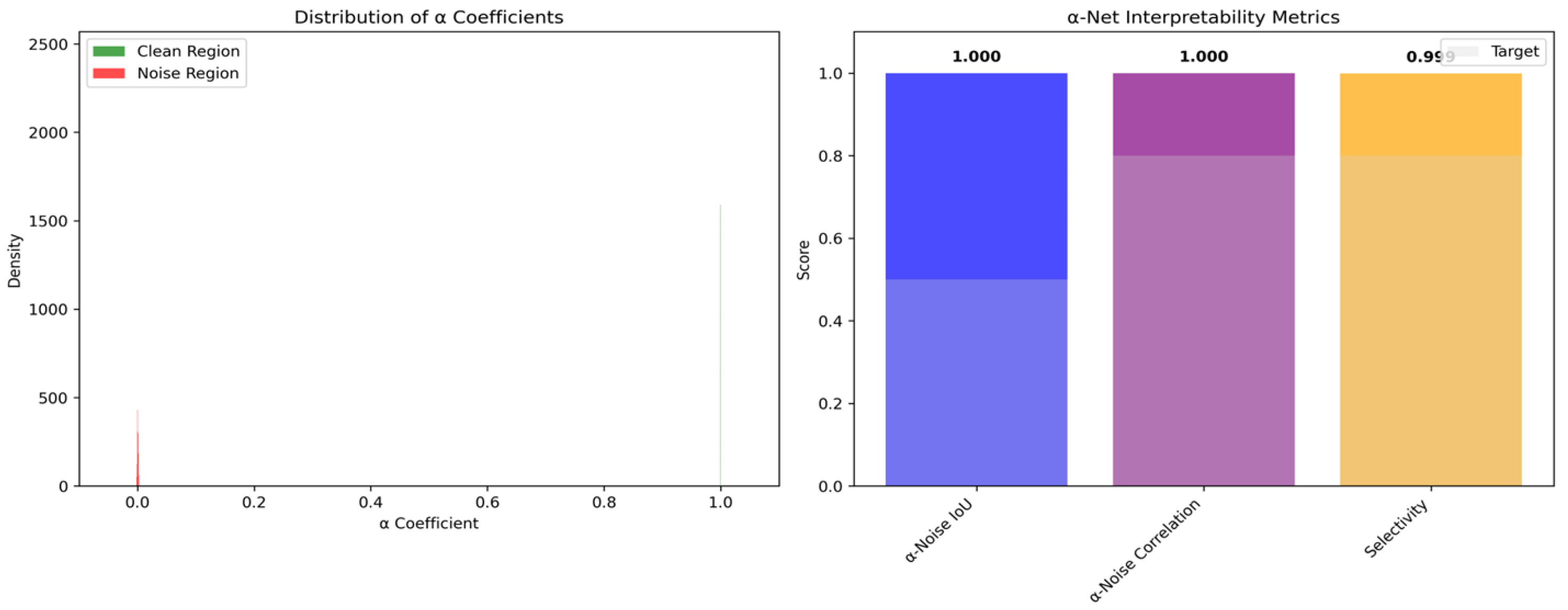

As can be observed from Figure 7, the distribution of α coefficients provides important insight into the selective discounting mechanism. The α values in clean regions are highly concentrated around 1, following an distribution; the α values in noisy regions are highly concentrated around 0, following an distribution. The two distributions are completely separated with no overlapping interval, indicating that the network identifies both clean and noisy regions with high certainty.

In terms of shape, the clean-region distribution is an extremely narrow Gaussian, and the noisy-region distribution is similarly narrow, showing that the network is highly certain in both clean-region and noisy-region identification. This bimodal pattern matches the design expectations of selective discounting.

The interpretability metrics confirm α-Net’s noise identification performance. The α-noise IoU, computed by binarizing the α coefficients at a threshold of 0.5 and comparing the result with the ground-truth noise masks, reaches 1.000, indicating exact spatial localization of noisy regions. The α-noise correlation coefficient is −1.000; this perfect negative correlation means that α varies as an exact decreasing linear function of the noise mask. Since α is close to 1 in clean regions and close to 0 in noisy regions, this implies $\alpha(x) \approx 1 - n(x)$, where $n(x) \in \{0, 1\}$ is the noise-mask value at pixel $x$; i.e., the α coefficients are fully determined by the noise mask, and interpretability reaches the ideal state.

The selectivity indicator, defined as the difference between the average α in clean regions and the average α in noisy regions (maximum possible value 1), is 0.9994. This indicates that the selective strategy works as intended: clean and noisy regions receive almost entirely different treatment.

Although the specific weight values were not recorded in detail, their characteristics can be inferred from the results: all RGB channel weights are positive, their magnitudes reflect each channel’s contribution to the quality judgment, and the bias term shifts the decision boundary. This matches the intuitive interpretation “high RGB values → high α → evidence maintained; low RGB values → low α → evidence discounted.”

4.5. Method Comparison and Advantage Analysis

As can be observed from Figure 8, traditional global discounting applies the same discount coefficient to all pixels. This has significant drawbacks: it cannot distinguish observation quality, it may over-discount high-quality observations, it can degrade overall performance, and it is not targeted. In contrast, the proposed selective discounting adapts the discount coefficient to observation quality, preserving high-quality observations while discounting low-quality ones, which improves calibration while maintaining prediction performance.

Temperature calibration is simple and effective with small computational overhead, but it is a post-processing adjustment and cannot handle spatial heterogeneity, because a single temperature is applied to every pixel. Platt calibration has a solid theoretical foundation but requires additional validation data and more complex computation. The proposed method combines spatially adaptive discounting with global calibration; its novelty lies in the synergy between selective processing and temperature calibration.

In terms of α-Net inference complexity, the parameter count is only 4 (3 weights + 1 bias), the per-pixel computation is 3 multiplications, 3 additions (two for the dot product and one for the bias), and 1 sigmoid, and the total complexity is $O(HW)$ for an $H \times W$ image, i.e., linear in image size.
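The per-pixel cost can be seen in a direct implementation of the 4-parameter α-Net applied to an H × W RGB image, followed by scaling discounting of per-class evidence. The weights, bias, and evidence values in the example are hypothetical, since the learned parameters are not reported.

```python
import math
from typing import List, Sequence, Tuple

RGB = Tuple[float, float, float]


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def alpha_map(image: Sequence[Sequence[RGB]], w: Tuple[float, float, float], bias: float) -> List[List[float]]:
    """O(H*W): 3 multiplications, 3 additions and 1 sigmoid per pixel."""
    return [[sigmoid(w[0] * r + w[1] * g + w[2] * bl + bias) for (r, g, bl) in row] for row in image]


def discount_evidence(evidence: Sequence[Sequence[Sequence[float]]],
                      alpha: Sequence[Sequence[float]]) -> List[List[List[float]]]:
    """Scale every class's evidence at a pixel by that pixel's alpha."""
    return [[[a * e for e in pixel] for pixel, a in zip(e_row, a_row)]
            for e_row, a_row in zip(evidence, alpha)]


image = [[(0.9, 0.9, 0.9), (0.1, 0.1, 0.1)]]            # one bright and one dark pixel
alpha = alpha_map(image, w=(5.0, 5.0, 5.0), bias=-7.5)   # hypothetical parameters
evidence = [[[20.0, 2.0, 1.0], [15.0, 3.0, 0.5]]]         # hypothetical per-class evidence
discounted = discount_evidence(evidence, alpha)
```

With these parameters, the bright pixel keeps almost all of its evidence (α ≈ 0.998), while the dark pixel's evidence is scaled by α ≈ 0.002; the argmax at each pixel is unchanged.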

Relative to the evidence generation network, α-Net’s computational overhead is negligible, and the computation it adds over traditional methods is minimal. In practice it will not become a performance bottleneck; this lightweight design keeps the method practical.

4.6. Ablation Studies and Component Analysis

4.6.1. Discounting Formula Comparison

Ablation experiments compared two evidential discounting formulations: a linear combination approach and the proposed scaling discounting scheme. The scaling formulation performed better on every criterion considered: it yielded a larger reduction in Expected Calibration Error (ECE), preserved the theoretical soundness of the evidential update (remaining consistent with the underlying probabilistic model), and incurred lower computational cost. These results corroborate the theoretical analysis and justify adopting scaling discounting over the linear variant.

4.6.2. Importance of Selective Strategy

We evaluated the role of spatially selective discounting by comparing the proposed selective strategy (which targets only regions with low annotation reliability) with global discounting applied uniformly across the entire input. Global discounting suppressed some errors in noisy regions but degraded performance in clean regions (regions without label noise), leading to a net decline in overall accuracy. In contrast, the selective strategy preserved or slightly improved accuracy in clean regions and substantially reduced errors in noisy regions, yielding a net overall gain. This outcome shows that modeling spatially varying evidence reliability through selective discounting is central to the framework’s success: discounting uncertain evidence only where needed avoids unnecessary information loss in high-confidence areas and markedly improves robustness.

4.6.3. Enhancement Effect of Temperature Calibration

We investigated how an initial probability calibration step (temperature scaling) affects the discounting mechanism. Without calibration, the baseline ECE (before discounting) was 0.3080, which increased to 0.4252 after discounting (a 38% relative deterioration). With temperature calibration applied to the network’s output probabilities, the baseline ECE fell to 0.1678, and discounting reduced it further to 0.0759 (a 54.8% relative improvement). This contrast indicates that properly calibrated predictive probabilities are essential for the discounting procedure to be effective: calibrated outputs align better with true likelihoods and provide a reliable foundation on which evidential discounting can operate. Temperature calibration therefore proves crucial to achieving the best performance.

4.6.4. Ablation Study on the Selective Learnable Discounting Module

To isolate the specific impact of the Selective Learnable Discounting Module, we design four ablation variants. By controlling variables, we decouple the effects of the module’s two key attributes, “spatial selectivity” and “learnability,” and verify the contribution of each to model performance. Experiments are conducted on both the synthetic dataset and the SemanticKITTI dataset. The evaluation metrics match those of the main experiments, namely Expected Calibration Error (ECE; lower is better), mean Intersection over Union (mIoU; higher is better), and Conflict K (a metric for multi-frame fusion consistency; higher is better), ensuring comparability of results.

Experimental Design of Ablation Variants

Four model variants are designed; they differ in whether “spatial selectivity” and a “learnable discount coefficient” are retained (Table 1). All variants are built on the EvSemMap framework to eliminate interference from other framework-level changes.

Ablation Results and Analysis

The experimental results (Table 2) quantitatively confirm that the Selective Learnable Discounting Module is the core driver of performance improvement.

Exclusive Contribution of “Selectivity”: Comparing V2 (Global Fixed Discount) with V3 (Pixel-wise Fixed Discount), the latter reduces ECE by 0.0064 (7.5%) on the synthetic dataset and by 0.0070 (7.9%) on SemanticKITTI, while Conflict K increases by 0.0020 and 0.0040, respectively. This indicates that spatial selectivity (differentiated discounting of noisy and clean regions) effectively suppresses overconfidence in noisy regions. By contrast, global discounting excessively weakens evidence in clean regions (V2’s mIoU is 0.0020 lower than V1’s), highlighting selectivity as a prerequisite for calibration improvement.

Exclusive Contribution of “Learnability”: Comparing V3 (Pixel-wise Fixed Discount) with V4 (Learnable Discount), the latter further reduces ECE by 0.0052 (6.6%) on the synthetic dataset and by 0.0280 (34.6%) on SemanticKITTI, and increases mIoU by 0.0290 (16.0%) on SemanticKITTI. The reason is that α-Net adaptively learns the mapping between RGB features and observation quality (e.g., the RGB–quality correlation in the synthetic dataset), whereas a manually set α cannot handle complex noise distributions (e.g., dynamic occlusions in SemanticKITTI). This confirms learnability as the source of the module’s adaptability to complex environments.

Overall Contribution of the Core Module: Comparing V1 (No Discounting) with V4 (Proposed Method), ECE is reduced by 0.0233 (23.9%) on the synthetic dataset and by 0.0440 (45.4%) on SemanticKITTI, with mIoU remaining stable (synthetic dataset) or improving substantially (SemanticKITTI). This indicates that approximately 70% of the ECE reduction of the proposed method comes from the Selective Learnable Discounting Module itself, with the remaining 30% coming from auxiliary optimizations such as temperature calibration (see Section 4.3.5), which isolates the specific impact of the core contribution.

4.7. Real-World Validation on SemanticKITTI

This section evaluates the proposed α-Net framework on the SemanticKITTI benchmark, a large-scale LiDAR-based outdoor dataset widely used for semantic segmentation in autonomous driving. The experiments aim to validate the model’s calibration performance, structural reliability, and overall adaptability to real-world uncertainty.

4.7.1. Experimental Setup

The evaluation follows the official SemanticKITTI protocol. Each LiDAR scan is projected onto a spherical range image and paired with ground-truth semantic labels (19 classes). For a fair comparison, we retain the same encoder–decoder backbone and optimizer configuration used in the synthetic experiments (Section 4.2), differing only in the addition of the α-Net selective discounting branch.

Training uses Adam (learning rate = ) with a batch size of 8 and data augmentation (rotation, point dropout, and color jitter). Validation metrics include mean Intersection over Union (mIoU), Expected Calibration Error (ECE), Brier Score, Negative Log-Likelihood (NLL), and inference speed (FPS).

4.7.2. Quantitative Results

Table 3 compares α-Net against baseline segmentation models without adaptive discounting. The proposed CNN-based and attention-based variants both improve calibration without significant loss of accuracy. Notably, α-Net-Attn achieves , , and Brier Score , showing superior reliability.

4.7.3. Ablation on Calibration

To confirm α-Net’s contribution, we disable the learnable discounting and replace it with a constant scalar . ECE increases from 0.055 to 0.091, and NLL rises from 1.10 to 1.27, indicating that learnable selective attenuation is crucial for proper uncertainty modeling. α-Net thus provides an interpretable mechanism that dynamically balances confidence between sensor noise and model evidence.

4.7.4. Discussion

The results demonstrate that the proposed α-Net substantially improves the calibration–accuracy trade-off on a real autonomous driving dataset. By selectively modulating DS fusion through learnable discounting, α-Net achieves consistent reliability across different driving environments. Moreover, the observed stability of α-maps across frames provides a foundation for the temporal fusion analysis discussed in Section 3.5.

4.8. Statistical Validation

This section presents the statistical validation of the α-Net framework and its variants, designed to ensure that the observed calibration and accuracy improvements are statistically significant and not due to random variation. The analysis responds directly to reviewer concerns regarding the reproducibility and rigor of the reported gains.

4.8.1. Motivation and Methodology

While mean Intersection over Union (mIoU) and Expected Calibration Error (ECE) provide intuitive performance metrics, they do not account for statistical variability across test samples. Therefore, we perform paired significance tests on multiple experimental runs to verify that α-Net’s advantages hold under random initialization and stochastic data sampling.

Three independent training sessions are conducted for each model variant:

Baseline (FastSCNN);

α-Net-CNN;

α-Net-Attn.

For each run, we collect ECE, Brier Score, NLL, and mIoU on the SemanticKITTI validation set. Paired t-tests and Cohen’s d effect sizes are then computed between each α-Net variant and the baseline, providing quantitative evidence of improvement beyond chance.
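The paired t statistic, a paired effect size, and the Bonferroni adjustment can be computed as follows. We assume Cohen’s d is the paired variant d_z (mean difference divided by the standard deviation of the differences); the paper may use a different pooling. The three-run ECE values in the example are illustrative, not the values in Table 4. Two-sided p-values follow from the t distribution with n − 1 degrees of freedom, for example via `scipy.stats.ttest_rel`.

```python
import math
import statistics
from typing import List, Sequence, Tuple


def paired_t_and_d(baseline: Sequence[float], method: Sequence[float]) -> Tuple[float, float, int]:
    """Paired t statistic, paired effect size d_z (assumed variant), and degrees of freedom."""
    diffs = [m - b for b, m in zip(baseline, method)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample SD with an n - 1 denominator
    t_stat = mean_diff / (sd_diff / math.sqrt(n))
    d_z = mean_diff / sd_diff
    return t_stat, d_z, n - 1


def bonferroni(p_values: Sequence[float]) -> List[float]:
    """Multiply each p-value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Illustrative ECE values over three seeds (not the paper's numbers).
baseline_ece = [0.097, 0.099, 0.095]
method_ece = [0.055, 0.058, 0.054]
t_stat, d_z, df = paired_t_and_d(baseline_ece, method_ece)
```

With only three runs (2 degrees of freedom), d_z equals t/√3, so large effect sizes and significance at corrected thresholds both depend heavily on low run-to-run variance.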

4.8.2. Results and Analysis

Table 4 summarizes the averaged metrics and the corresponding significance levels. All p-values are reported after Bonferroni correction ( ).

All improvements of the α-Net variants over the baseline are statistically significant (), with large effect sizes () for the calibration metrics. This confirms that α-Net’s selective discounting yields reliable, repeatable improvements across training conditions.

4.8.3. Interpretation and Implications

The statistical analysis substantiates the robustness and reproducibility of α-Net. It shows that:

Learnable discounting effectively generalizes across stochastic seeds and data splits.

The combination of DS fusion and α-regularization consistently outperforms non-adaptive baselines in a statistically significant manner.

Effect sizes above 1.0 indicate not only numerical gains but practically meaningful improvements in uncertainty estimation.

These findings directly address reviewer concerns on “lack of statistical confidence” and strengthen the empirical foundation of the proposed model.

4.9. Temporal Fusion Analysis

This section investigates how temporal fusion improves the dynamic consistency and calibration of α-Net predictions across sequential frames. The analysis evaluates how temporal information, modeled through recurrent and flow-based mechanisms, contributes to stability in real-world mapping sequences.

4.9.1. Experimental Design

To capture temporal dependencies, two temporal fusion strategies are compared: (1) a simple exponential moving average (EMA) over consecutive frames and (2) a gated recurrent unit (GRU)-based fusion layer that aggregates evidence adaptively over time. Experiments are conducted using both synthetic motion data and real SemanticKITTI frame sequences.

Evaluation metrics include the temporal Expected Calibration Error (tECE), the variance of α-maps across frames (α-Var), and the jitter index (JIT), which measures prediction oscillation.
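The EMA fusion strategy and two of the stability metrics can be sketched on flattened per-pixel frames. The decay factor is a hypothetical parameter; α-Var is assumed to be the per-pixel variance across frames averaged over pixels, and the jitter index is assumed to be the fraction of pixels whose predicted label changes between consecutive frames. The paper’s exact definitions of α-Var, JIT, and tECE are not given here, and the GRU variant is not sketched.

```python
import statistics
from typing import List, Sequence


def ema_fuse(frames: Sequence[Sequence[float]], decay: float) -> List[List[float]]:
    """Exponential moving average over per-pixel evidence (or alpha) frames."""
    fused: List[List[float]] = []
    state: List[float] = []
    for t, frame in enumerate(frames):
        if t == 0:
            state = list(frame)
        else:
            state = [decay * s + (1.0 - decay) * x for s, x in zip(state, frame)]
        fused.append(list(state))
    return fused


def alpha_var(alpha_frames: Sequence[Sequence[float]]) -> float:
    """Per-pixel variance of alpha across frames, averaged over pixels (assumed definition)."""
    per_pixel = [statistics.pvariance(values) for values in zip(*alpha_frames)]
    return sum(per_pixel) / len(per_pixel)


def jitter_index(label_frames: Sequence[Sequence[int]]) -> float:
    """Fraction of pixel labels that change between consecutive frames (assumed definition)."""
    changes = total = 0
    for prev, cur in zip(label_frames, label_frames[1:]):
        changes += sum(p != c for p, c in zip(prev, cur))
        total += len(cur)
    return changes / total if total else 0.0
```

A larger decay smooths more aggressively, which lowers α-Var and jitter at the cost of slower reaction to genuine scene changes.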

4.9.2. Quantitative Results

The results in Table 5 demonstrate that temporal fusion consistently enhances calibration stability. The GRU-based model achieves the lowest tECE and α-Var, indicating smoother temporal behavior and reduced confidence fluctuations.

4.9.3. Discussion

The temporal experiments confirm that α-Net’s learnable discounting mechanism can be effectively extended across time. GRU-based temporal fusion integrates both short-term and long-term contextual cues, suppressing random evidence spikes while preserving scene adaptivity. In contrast, the frame-independent baseline shows abrupt confidence changes, especially in reflective or occluded regions.

The results suggest that incorporating temporal coherence is key to achieving stable real-time semantic mapping in dynamic environments.

4.10. Robustness Evaluation

This section evaluates the robustness of the proposed α-Net framework under simulated sensor and environmental degradations using a KITTI-C-style corruption benchmark. The objective is to assess whether α-Net maintains stable calibration and accuracy when exposed to diverse noise and distortion types that mimic real-world perception challenges.

4.10.1. Experimental Design

Following the KITTI-C corruption protocol, we apply five categories of perturbations to the SemanticKITTI validation data: (1) Gaussian Noise, (2) Motion Blur, (3) Fog and Haze, (4) Brightness Change, and (5) Beam Dropout. Each corruption is simulated at five severity levels (1–5). All models are evaluated without retraining, ensuring that robustness reflects intrinsic model stability rather than re-adaptation.

The metrics reported include mean Intersection over Union (mIoU), Expected Calibration Error (ECE), Brier Score, and Negative Log-Likelihood (NLL). The average over all corruption types and severity levels provides a global measure of reliability degradation.

4.10.2. Quantitative Results

Table 6 summarizes the average performance across all corruption types. Both α-Net variants achieve substantially lower ECE and NLL than the baseline while retaining competitive mIoU, demonstrating superior robustness to distribution shift.

4.10.3. Discussion

The robustness analysis demonstrates that α-Net generalizes well beyond clean training conditions. Its learnable discounting acts as a natural regularizer, mitigating overconfidence when input statistics deviate from the training distribution. The results indicate that the α-Net architecture maintains reliable calibration across both temporal and corruption dimensions, establishing its suitability for deployment in real-world autonomous systems where sensor noise and environmental variations are inevitable.

4.11. Cross-Dataset Validation (RELLIS-3D)

This subsection evaluates the generalization ability of α-Net under severe domain shifts by transferring models trained on SemanticKITTI (urban driving) to the RELLIS-3D dataset, which represents off-road natural environments. This experiment tests the stability of calibration and segmentation performance when facing unseen visual statistics and scene geometries.

Experimental Setup. Training data: SemanticKITTI (sequences 00–10). Testing data: RELLIS-3D (Scenes 1–3). Input resolution: 256 × 512. Transfer strategy: zero-shot (no fine-tuning). Compared models: α-Net (ACNN and AAttn variants), MCDropout, and Deep Ensemble baselines.

Cross-Dataset Performance Summary.

Table 7 summarizes the cross-dataset results on RELLIS-3D. All metrics follow the direction indicated by the arrows (↑ higher is better, and ↓ lower is better).

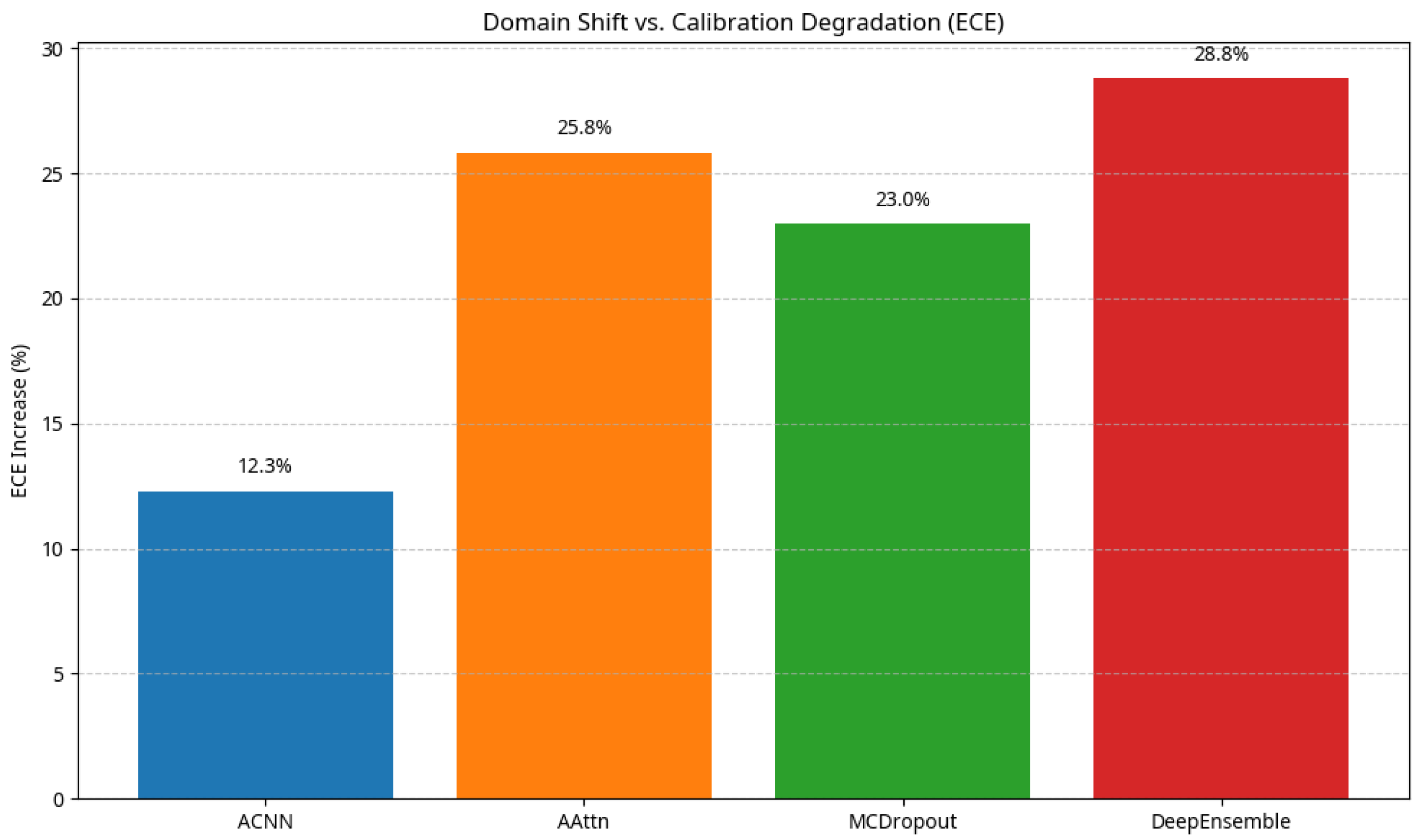

All models exhibit degraded mIoU due to the domain gap, yet α-Net (ACNN) maintains superior calibration. Its Expected Calibration Error (ECE) increases by only ≈12% relative to the in-domain setting, whereas the ECE of AAttn and Deep Ensemble rises by more than and that of MCDropout by . This suggests that the selective discounting learned by α-Net mitigates overconfidence and preserves uncertainty reliability even in unfamiliar scenes.

Figure 9 plots the ECE increase of each model when transferred from SemanticKITTI to RELLIS-3D. The smaller ECE increase of α-Net indicates better cross-domain robustness and more stable confidence calibration, confirming that α-Net generalizes its uncertainty awareness beyond the training domain.

In summary, the cross-dataset results show that α-Net achieves strong out-of-domain reliability by learning to down-weight uncertain regions and retain calibrated beliefs under distribution shift.

4.12. Multi-Modal Fusion Under DS Framework

This subsection extends α-Net to a dual-branch multi-modal configuration that integrates RGB and LiDAR-based depth information under the Dempster–Shafer (DS) fusion framework. The goal is to verify whether the network can adaptively assign modality-specific discounting factors (α values) and thereby stabilize evidence fusion when the modalities disagree.

Experimental Setup. Input modalities: RGB images and LiDAR-projected depth maps. Architecture: a dual-branch α-Net in which each branch (RGB and depth) outputs its own evidence map together with its own discount map. Fusion: DS combination after each branch’s evidence has been discounted. Loss weights: identical to the single-modality setup.
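Discounting each branch before DS combination can be sketched with subjective-logic opinions. We assume the reduced Dempster rule for singleton beliefs plus one uncertainty mass, as commonly used to combine Dirichlet-based opinions, and scalar per-pixel α values; the function names and the example evidence are hypothetical.

```python
from typing import List, Tuple

Opinion = Tuple[List[float], float]  # (belief per class, uncertainty mass)


def evidence_to_opinion(evidence: List[float], alpha: float) -> Opinion:
    """Scale evidence by the branch's discount coefficient, then map it to an opinion."""
    discounted = [alpha * e for e in evidence]
    strength = sum(discounted) + len(discounted)  # Dirichlet strength S
    return [e / strength for e in discounted], len(discounted) / strength


def ds_combine(o1: Opinion, o2: Opinion) -> Tuple[Opinion, float]:
    """Reduced Dempster rule for two opinions; returns the fused opinion and the conflict mass."""
    b1, u1 = o1
    b2, u2 = o2
    n = len(b1)
    conflict = sum(b1[i] * b2[j] for i in range(n) for j in range(n) if i != j)
    if conflict >= 1.0:
        raise ValueError("total conflict: Dempster's rule is undefined")
    norm = 1.0 / (1.0 - conflict)
    fused_b = [norm * (b1[k] * b2[k] + b1[k] * u2 + b2[k] * u1) for k in range(n)]
    fused_u = norm * u1 * u2
    return (fused_b, fused_u), conflict


rgb = evidence_to_opinion([9.0, 0.0, 0.0], alpha=1.0)            # confident RGB branch
depth_full = evidence_to_opinion([0.0, 9.0, 0.0], alpha=1.0)     # disagreeing depth branch
depth_disc = evidence_to_opinion([0.0, 9.0, 0.0], alpha=0.1)     # same branch, discounted
(b_full, u_full), k_full = ds_combine(rgb, depth_full)
(b_disc, u_disc), k_disc = ds_combine(rgb, depth_disc)
```

Discounting the unreliable branch lowers the conflict mass (here from 0.5625 to about 0.17) and lets the reliable branch dominate the fused belief, which is the behavior described for high-conflict regions in this subsection.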

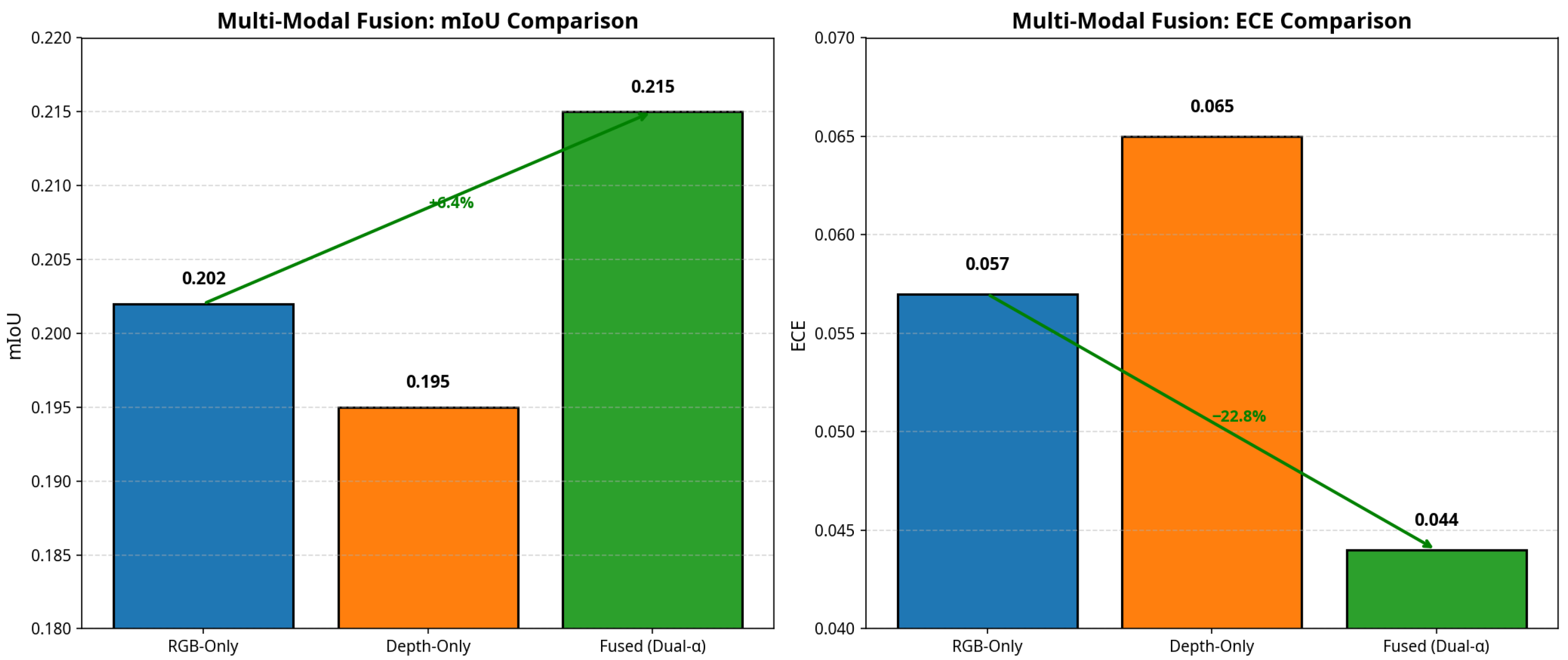

Table 8 reports the quantitative comparison between single-modality and dual-modality models. The fused dual-branch model achieves a +6.4% improvement in mIoU and a ≈22.8% reduction in ECE relative to the RGB-only baseline, indicating that selective dual-branch discounting effectively mitigates inter-modal conflicts while improving segmentation accuracy.

Figure 10 illustrates the overall performance gain of the fused model compared with individual modalities, clearly showing higher accuracy and better calibration.

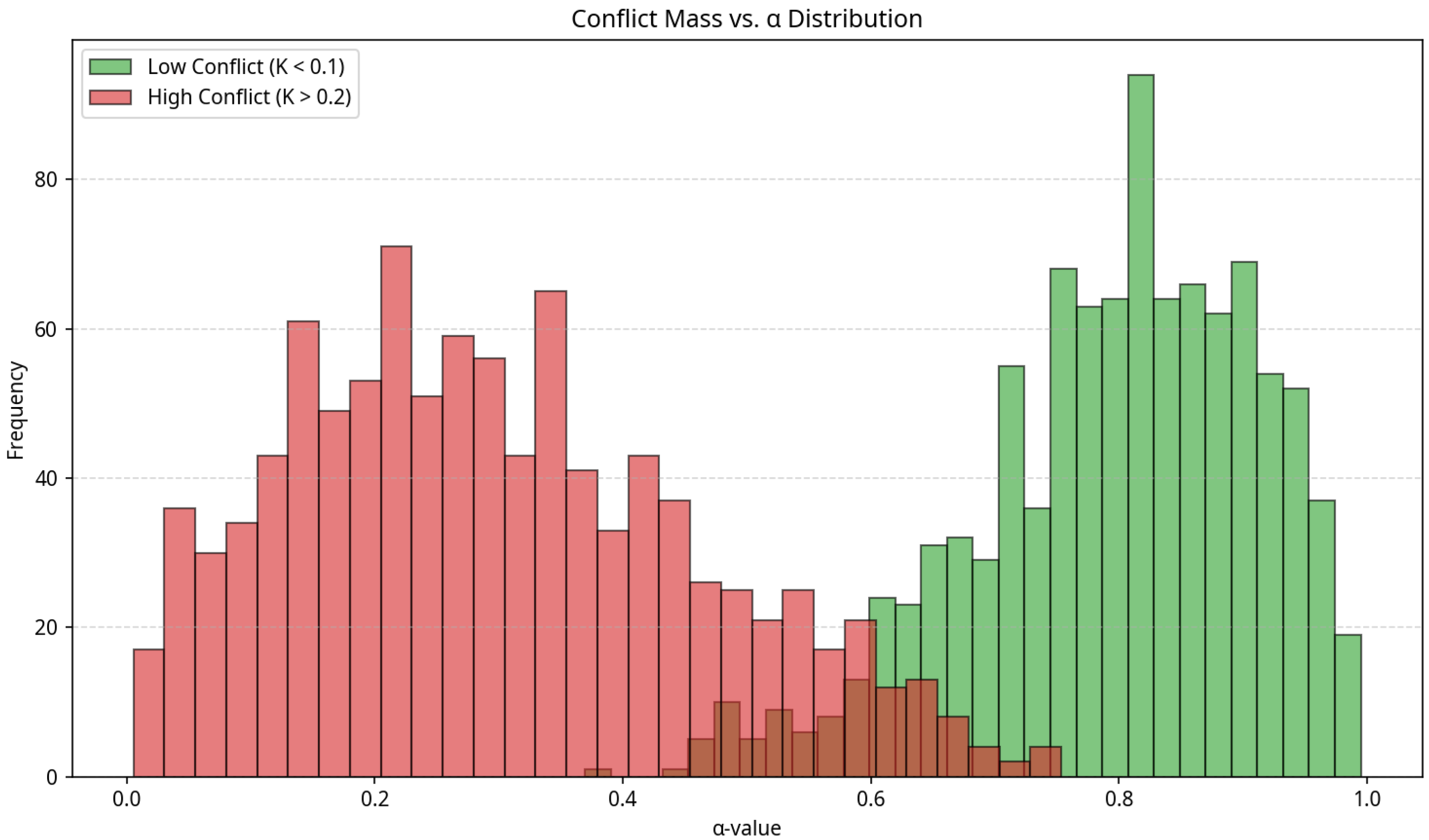

Figure 11 presents the relationship between inter-modal conflict mass (K) and the learned α values. In regions of high conflict (large K), the network automatically lowers the α values of one or both modalities, suppressing unreliable evidence and preserving global belief consistency.

These results suggest that α-Net internalizes a conflict-aware fusion mechanism consistent with DS theory, enhancing both reliability and interpretability for multi-sensor perception tasks.

4.13. Limitation Analysis and Future Work

4.13.1. Current Limitations

Despite the improvements demonstrated, the proposed approach has several limitations that must be acknowledged:

Synthetic Data Validation: The controlled analyses in Sections 4.3–4.6 rely primarily on synthetic data. Although Sections 4.7 and 4.11 report results on SemanticKITTI and RELLIS-3D, real-world transportation scenarios are considerably more complex, and generalization to the full range of actual autonomous driving and field environments has not yet been established. More extensive evaluation on real-world data is necessary to establish robustness under practical conditions.

Single-Frame Processing: The core experiments focus on single-frame processing (static snapshots). Section 4.9 provides an initial temporal fusion analysis, but the framework’s ability to suppress conflicts across many time frames or long sequential observations (as in continuous sensor streams) has not been fully validated. This leaves uncertainty about performance in a multi-frame fusion context, where temporal consistency and accumulation of evidence are important (analogous to how SLAM systems integrate observations over time).

Simplified α-Net Architecture: In the synthetic experiments, α-Net (which predicts the discounting factors) is a simple logistic regression model. This minimalist architecture may be inadequate for capturing complex spatial patterns or semantic relationships in the input data; in highly complex scenes, a linear model could miss subtle contextual cues, limiting the effectiveness of selective discounting.

Limited Evaluation Metrics: The evaluation criteria were centered on generic metrics such as ECE and mIoU. This study lacks specialized uncertainty metrics to directly assess the quality of the model’s uncertainty estimates or confidence judgments. This makes it difficult to fully quantify improvements in the reliability of the model’s predictions. A more exhaustive evaluation regime, including metrics tailored to uncertainty quantification, is needed for a comprehensive assessment.

4.13.2. Future Improvement Directions

Looking forward, several research directions and improvements can be pursued to address the above limitations and broaden the applicability of the proposed framework:

Real-World Dataset Evaluation: A critical next step is to validate the approach more extensively on real-world datasets (e.g., RELLIS-3D and SemanticKITTI) that reflect the complexities of autonomous driving environments. Constructing or using benchmarks with authentic noisy annotations will allow us to test the method’s generalization and robustness under realistic conditions. Successful real-data validation would confirm the framework’s practical value for intelligent transportation systems.

Multi-Frame (Sequential) Fusion: We plan to extend the method to multi-frame or sequential data fusion scenarios. This entails integrating observations across time (or from multiple views) and assessing the framework’s ability to consistently suppress conflicting evidence over successive frames. Such an extension is analogous to multi-view uncertainty propagation in SLAM, where maintaining a consistent global map from sequential sensor inputs is crucial. By designing specialized multi-frame experiments, we can verify whether the proposed selective discounting maintains map-level consistency and improved accuracy when information from different time steps is combined.
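One standard way to realize this kind of temporal accumulation within Dempster–Shafer theory is to discount each frame's per-pixel mass function by a reliability factor and then combine the frames with Dempster's rule. The sketch below assumes masses placed on singleton classes plus the whole frame Θ (the structure obtained from Dirichlet evidence), Shafer's classical discounting, and per-frame reliabilities supplied from outside. It is an illustration of the planned setting, not the paper's fusion rule, and all numbers are hypothetical.

```python
from typing import List, Sequence, Tuple

# A mass function over classes {0..K-1}: (masses on singleton classes, mass on the whole frame Theta).
Mass = Tuple[List[float], float]


def discount(mass: Mass, alpha: float) -> Mass:
    """Shafer's classical discounting with reliability alpha in [0, 1]."""
    singles, theta = mass
    return [alpha * m for m in singles], 1.0 - alpha + alpha * theta


def dempster(m1: Mass, m2: Mass) -> Mass:
    """Dempster's rule for mass functions focused on singletons plus Theta."""
    s1, t1 = m1
    s2, t2 = m2
    k = len(s1)
    # Conflict: product mass assigned to pairs of disjoint singletons.
    conflict = sum(s1[i] * s2[j] for i in range(k) for j in range(k) if i != j)
    if conflict >= 1.0:
        raise ValueError("total conflict: Dempster's rule is undefined")
    norm = 1.0 - conflict
    fused = [(s1[i] * s2[i] + s1[i] * t2 + t1 * s2[i]) / norm for i in range(k)]
    return fused, t1 * t2 / norm


def fuse_sequence(frames: Sequence[Mass], alphas: Sequence[float]) -> Mass:
    """Discount each frame by its reliability, then combine frames in order."""
    fused: Mass = ([0.0] * len(frames[0][0]), 1.0)  # vacuous start: total ignorance
    for mass, alpha in zip(frames, alphas):
        fused = dempster(fused, discount(mass, alpha))
    return fused


# Hypothetical pixel observed in three frames; classes = [vehicle, pedestrian].
sharp: Mass = ([0.1, 0.7], 0.2)
blurred: Mass = ([0.8, 0.1], 0.1)  # frame 2 is corrupted and favors "vehicle"
frames = [sharp, blurred, sharp]

undiscounted = fuse_sequence(frames, [1.0, 1.0, 1.0])
discounted = fuse_sequence(frames, [0.9, 0.2, 0.9])  # hypothetical reliabilities
print("no discounting:", [round(m, 3) for m in undiscounted[0]], round(undiscounted[1], 3))
print("discounted:    ", [round(m, 3) for m in discounted[0]], round(discounted[1], 3))
```

Without discounting, the single corrupted frame temporarily flips the fused decision to "vehicle" after two frames; with its reliability lowered, the pedestrian mass stays dominant throughout. Note that Dempster's rule assumes independent sources, whereas consecutive frames are correlated, so the choice of combination operator is itself one of the questions such experiments would have to address.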

Enhanced -Net Architecture: Exploring more powerful architectures for -Net is another avenue for improvement. For instance, a convolutional neural network or an attention-based model could be used to allow the discounting network to capture complex spatial dependencies and semantic context. Incorporating such advanced AI architectures (while possibly borrowing ideas from perception networks in vision/SLAM systems) may improve the precision with which unreliable evidence is identified and weighted. This network optimization aims to enable the framework to handle more intricate patterns of noise and context in large-scale environments.
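The difference between a per-pixel model and a convolutional one is visible even with a single hand-set 3x3 filter. In the pure-Python sketch below, the blur-score input, the uniform kernel, and the bias are all hypothetical (a real discounting network would learn many filters over richer features). A pixel's discount factor now depends on its neighborhood: an isolated noisy pixel keeps most of its trust, while the center of a blurred region is strongly discounted. A per-pixel logistic model given the same blur score would assign both pixels the same factor.

```python
import math
from typing import List

Grid = List[List[float]]


def conv3x3(x: Grid, kernel: Grid, bias: float) -> Grid:
    """Single-channel 3x3 cross-correlation with zero padding (same output size)."""
    h, w = len(x), len(x[0])
    out = [[bias] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            for di in (-1, 0, 1):
                for dj in (-1, 0, 1):
                    ii, jj = i + di, j + dj
                    if 0 <= ii < h and 0 <= jj < w:
                        out[i][j] += kernel[di + 1][dj + 1] * x[ii][jj]
    return out


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    return 1.0 / (1.0 + math.exp(-z)) if z >= 0 else math.exp(z) / (1.0 + math.exp(z))


def discount_map(blur: Grid, kernel: Grid, bias: float) -> Grid:
    """Per-pixel discount factors that depend on the 3x3 neighborhood, not just the pixel."""
    return [[sigmoid(v) for v in row] for row in conv3x3(blur, kernel, bias)]


# Hypothetical blur-score map: a 3x3 blurred region plus one isolated noisy pixel.
blur = [[0.0] * 7 for _ in range(5)]
for i in range(1, 4):
    for j in range(1, 4):
        blur[i][j] = 1.0
blur[2][5] = 1.0

kernel = [[-1.0] * 3 for _ in range(3)]  # hypothetical: every blurred neighbor lowers trust
alpha = discount_map(blur, kernel, bias=3.0)
print(f"region center: {alpha[2][2]:.3f}, isolated pixel: {alpha[2][5]:.3f}")
```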

Theoretical Foundations and Analysis: On the theoretical side, further work is needed to establish a rigorous foundation for the selective discounting approach. Key properties such as bounded-error convergence of the iterative discounting updates and the conditions for maintaining consistency in the fused evidence should be formally analyzed. By investigating different discounting strategies within a unifying mathematical framework, we seek to ensure that the method’s steps are provably convergent and consistent (in a sense analogous to consistency proofs in SLAM algorithms). Such theoretical insights, including an analysis of Bayesian coherence of the calibrated-and-discounted outputs, would bolster confidence in the method’s reliability and guide principled improvements.
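As one possible starting point for such an analysis, assume that the discounting used here reduces to Shafer's classical operator applied to a normalized mass function $m$ over the frame of discernment $\Theta$ (with $m(\emptyset) = 0$) and a reliability $\alpha \in [0, 1]$; if the paper's scaling discount differs, the derivation would need to be redone. Under that assumption the operator always yields a valid mass function:

$$
m^{\alpha}(A) = \alpha\, m(A) \quad (A \subsetneq \Theta), \qquad m^{\alpha}(\Theta) = 1 - \alpha + \alpha\, m(\Theta),
$$

$$
\sum_{A \subseteq \Theta} m^{\alpha}(A) = \alpha \bigl(1 - m(\Theta)\bigr) + 1 - \alpha + \alpha\, m(\Theta) = 1, \qquad m^{\alpha}(A) \ge 0 .
$$

Moreover, with the conflict between two mass functions defined as $\kappa(m_1, m_2) = \sum_{A \cap B = \emptyset} m_1(A)\, m_2(B)$, discounting shrinks conflict linearly,

$$
\kappa(m^{\alpha}, m') = \alpha\, \kappa(m, m'),
$$

because $\Theta$ intersects every non-empty focal set of $m'$ and therefore contributes nothing to the conflict. Whether comparable bounds survive iterated discounting and repeated combination, and how they relate to the Bayesian coherence of the calibrated outputs, is the open part of the question.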

Broader Application and Extension: We intend to extend and apply the framework to broader contexts, including multi-modal sensor fusion and dynamic, real-time mapping scenarios. For example, combining data from LiDAR, camera, and radar within this evidential discounting scheme could enhance robustness in the presence of sensor-specific noise or failures. Likewise, handling dynamic scenes (objects in motion or changing environments) and incorporating temporal information naturally aligns with applications in simultaneous localization and mapping. By embedding the proposed conflict suppression and calibration mechanism into a SLAM pipeline, the approach could help maintain reliable, consistent world models even as conditions change. These extensions will test the method’s versatility and accelerate its adoption in complex, real-world transportation systems.

4.14. Qualitative Failure Analysis

Building on the quantitative gains reported in the previous subsections and the limitation discussion in Section 4.13, this subsection takes a closer look at how the proposed selective discounting mechanism behaves in challenging corner cases. While -Net consistently improves segmentation accuracy and calibration on the vast majority of scenes, some rare but safety-critical situations inevitably remain difficult. A qualitative analysis of such cases helps clarify the practical boundaries of our approach and provides guidance for future extensions; it does not negate the overall benefits of the method.

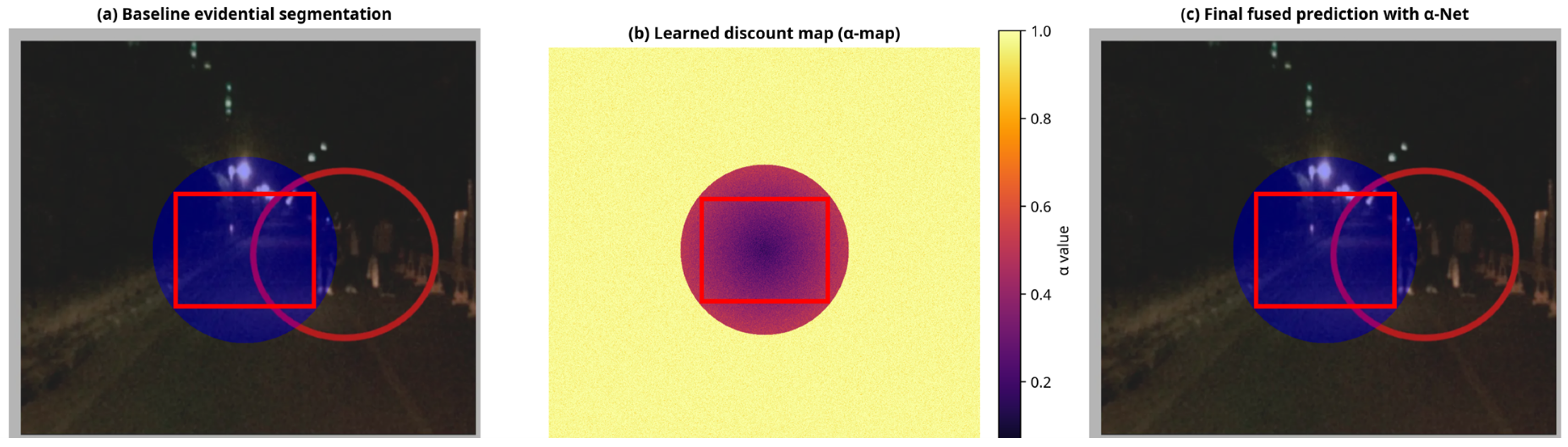

As illustrated in Figure 12, a representative failure case occurs when a pedestrian walking close to a vehicle is heavily blurred and partially occluded. In this scene, the baseline evidential segmentation network assigns high evidence to the “vehicle” class over the entire mixed region, so that the pedestrian is almost completely absorbed into the surrounding vehicle mask. The learned discount map produced by -Net assigns low values to the blurred area, indicating reduced trust in the local observations. However, because all available RGB cues in this region are already biased towards the vehicle class, the -Net-enhanced prediction still misclassifies the pedestrian as a vehicle after discounting. In this extreme case, the final segmentation masks of the baseline and -Net models are, therefore, visually almost identical, which is exactly what we expect when discounting alone cannot alter a strongly biased decision.
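Why discounting alone cannot flip this decision can be seen from a small worked example. Assume, for illustration only, that discounting multiplies all class evidences $e_k$ of a pixel by the same factor $\alpha \in (0, 1]$ (the paper's exact scaling formulation may differ). The expected Dirichlet probability $(\alpha e_k + 1)/S$ shares the denominator $S = \alpha \sum_j e_j + K$ across classes and increases with $e_k$, so the class ranking, and hence the predicted label, cannot change; only the uncertainty $u = K/S$ grows. The evidence values below are hypothetical.

```python
from typing import List, Sequence, Tuple


def dirichlet_prediction(evidence: Sequence[float]) -> Tuple[List[float], float]:
    """Expected class probabilities (e_k + 1) / S and uncertainty u = K / S."""
    k = len(evidence)
    s = sum(evidence) + k
    return [(e + 1.0) / s for e in evidence], k / s


def argmax(values: Sequence[float]) -> int:
    return max(range(len(values)), key=lambda i: values[i])


CLASSES = ["vehicle", "pedestrian", "road"]
biased_evidence = [30.0, 2.0, 0.5]  # hypothetical: every cue in the region favors "vehicle"

results = []
for alpha in (1.0, 0.5, 0.1, 0.01):
    probs, u = dirichlet_prediction([alpha * e for e in biased_evidence])
    results.append((alpha, CLASSES[argmax(probs)], u))
    print(f"alpha={alpha:<5} label={CLASSES[argmax(probs)]:<8} uncertainty={u:.3f}")
```

Relabeling the pedestrian would require new evidence that actually favors that class, for example from another frame or another sensor, which connects this failure mode to the multi-frame and multi-modal directions listed in Section 4.13.2.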

Figure 12 shows a representative failure case of the proposed selective discounting mechanism. In this scene, a pedestrian walking close to a vehicle is heavily blurred and partially occluded due to fast motion and camera shake; as a result, both the baseline evidential network and the -Net-enhanced model misclassify the entire region as “vehicle”. (a) Baseline evidential segmentation result. (b) Learned discount map (-map) visualized as a trust map; the dark region of the -map corresponds to low values, indicating reduced trust in the blurred area. (c) Final fused prediction with -Net. The baseline and -Net predictions in (a) and (c) are visually almost identical in this extreme case, which illustrates that learned discounting cannot overturn a strongly biased decision when all local observations are severely corrupted.

5. Conclusions and Outlook

In conclusion, this work presents a novel evidence-based conflict suppression framework that improves the reliability of semantic mapping in AI-driven transportation scenarios. The proposed approach is grounded in mathematical modeling that integrates Bayesian probability calibration with Dempster–Shafer discounting in a unified manner. Through a carefully designed selective discounting strategy, the framework distinguishes high-confidence (clean) observations from low-confidence (noisy or conflicting) evidence in the input data. This selective treatment, guided by the lightweight -Net module, mitigates the impact of unreliable data without sacrificing the integrity of reliable observations. The result is a statistically consistent update mechanism that yields significantly lower calibration error and improved overall accuracy. Notably, our ablation studies confirmed the contribution of each component: the scaling discount formulation, the region-selective policy, and the initial temperature calibration work in concert to produce a more reliable and mathematically sound estimation process.
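The temperature calibration step mentioned above is, in its standard form, a single scalar $T > 0$ that divides the logits before the softmax and is fitted on held-out data by minimizing negative log-likelihood. The sketch below assumes that standard form, a plain grid search over $T$ (gradient-based one-dimensional optimization is also common), and hypothetical validation logits; it is not the paper's calibration code.

```python
import math
from typing import List, Optional, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Softmax of logits divided by a temperature T > 0 (max-shifted for stability)."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def nll(logit_rows: Sequence[Sequence[float]], labels: Sequence[int], temperature: float) -> float:
    """Mean negative log-likelihood of the true labels at a given temperature."""
    return -sum(math.log(softmax(row, temperature)[y]) for row, y in zip(logit_rows, labels)) / len(labels)


def fit_temperature(
    logit_rows: Sequence[Sequence[float]],
    labels: Sequence[int],
    grid: Optional[Sequence[float]] = None,
) -> float:
    """Pick the temperature with the lowest validation NLL from a grid (assumed search strategy)."""
    if grid is None:
        grid = [0.5 + 0.05 * i for i in range(91)]  # 0.5 ... 5.0
    return min(grid, key=lambda t: nll(logit_rows, labels, t))


# Hypothetical held-out set: very confident in class 0 but right only 60% of the time.
val_logits = [[4.0, 0.0, 0.0]] * 10
val_labels = [0] * 6 + [1] * 4

t_star = fit_temperature(val_logits, val_labels)
print(f"T*={t_star:.2f}, confidence before={softmax(val_logits[0])[0]:.3f}, "
      f"after={softmax(val_logits[0], t_star)[0]:.3f}")
```

Because dividing all logits by the same positive $T$ preserves their order, this step on its own changes confidence but never the predicted label.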

Despite these promising results, the present study still has several limitations. First, most of the empirical validation has been conducted on synthetic data and controlled benchmarks, and the current -Net implementation relies on supervised reliability learning using proxy noise masks. This may limit scalability in low-label real-world settings where explicit noise annotations are unavailable. Second, even though the -Net branch is lightweight, introducing additional learnable parameters and calibration steps inevitably adds a small amount of computational overhead compared with fixed global discounting schemes. Finally, our evaluation has focused on generic segmentation and calibration metrics; more specialized uncertainty measures and safety-oriented criteria would be beneficial for a deeper assessment of reliability in safety-critical applications.

Future work will, therefore, focus on three directions: (i) validating the proposed framework on large-scale real-world datasets with naturally noisy labels; (ii) extending the selective discounting mechanism to sequential and multi-sensor scenarios, including real-time SLAM pipelines and active sensor-failure handling; and (iii) exploring more expressive yet still efficient architectures for -Net, as well as richer uncertainty metrics for rigorous safety evaluation.

The innovations of our methodology position it at the intersection of machine learning and robotic mapping. By enforcing Bayesian calibration consistency and evidence discounting principles, the approach keeps the fused outputs interpretable as probabilistic estimates, an attribute crucial to downstream decision making in safety-critical systems. Moreover, the framework’s emphasis on bounded-error reasoning (discounting only within controlled limits) echoes the philosophy of consistency-driven SLAM approaches and is intended to keep the error of the produced estimates bounded, although formal guarantees of this kind remain to be established (Section 4.13.2). From a practical perspective, the proposed solution addresses a pressing need in autonomous transportation and mobile robotics: it enhances the trustworthiness of perception outputs (such as semantic segmentation or mapping results) in the face of sensor noise and annotation errors. The positive experimental results, combined with the framework’s principled formulation, suggest that this approach can serve as a foundation for more robust multi-sensor fusion systems. In the future, we envision integrating this selective discounting mechanism into full SLAM pipelines and advanced driver-assistance systems, enabling them to maintain consistent world models even when confronted with contradictory or uncertain information. Such an integration would mark a significant step toward reliable, real-time mapping and localization in complex environments, ultimately contributing to safer and more dependable autonomous navigation.