1. Introduction

Artificial intelligence (AI) is revolutionizing several industries, including auditing and accounting [1,2,3,4,5,6,7]. According to recent studies, AI offers new tools and techniques that have the potential to change present procedures and improve professional accuracy and efficacy [8,9,10,11]. User attitudes and perceptions have a substantial impact on technology uptake and effectiveness across a wide range of sectors. The Technology Acceptance Model (TAM), developed by Davis in 1989, provides a robust theoretical framework for examining the factors that influence technology acceptance. The TAM argues that perceived usefulness (PU) and perceived ease of use (PEOU) are crucial determinants of a person’s willingness to adopt new technologies.

This research utilizes the TAM to evaluate accounting professionals’ attitudes toward the use of AI in their jobs. It examines ease of use and perceived usefulness, along with other significant factors such as subjective norms and self-efficacy. Perceived usefulness (PU) is the extent to which users consider that AI will increase their work performance, whereas perceived ease of use (PEOU) is the extent to which users believe utilizing AI systems would be simple and straightforward [12]. The literature has extensively investigated how AI may affect several sectors, with a focus on how AI might improve decision-making, automate time-consuming operations, and provide predictive insights [13]. In 2022, Qader and collaborators examined accounting software adoption through the TAM [14]. They underlined the necessity of technical readiness, as well as the role of human resources in fostering the adoption of new technologies. Similar findings were reported by Wicaksono and colleagues [15], who found that perceived usefulness, ease of use, and strategic value all had a substantial impact on startup enterprises’ willingness to use accounting information systems.

There is a notable lack of research specifically exploring how users in accounting and auditing perceive AI through the lens of the TAM. Lazim and colleagues [16] applied the TAM to study the acceptance of online learning among accounting students during the COVID-19 pandemic, revealing that PU and PEOU are critical in adopting new educational methods. Additionally, ref. [17] highlighted the importance of self-efficacy and subjective norms in the adoption of e-learning within accounting education. Furthermore, ref. [18] found that perceptions of usefulness and ease of use mediate the relationship between technology readiness and AI adoption in accounting. Nonetheless, no research has used network analysis to map these perceptions, which leaves a gap in the scientific literature.

The present research aims to close this gap by using network analysis to map user perceptions of AI in accounting and auditing. Network analysis enables a deeper understanding of the links and interdependencies between perceptions and related factors, offering a comprehensive view of how individuals interact with and perceive artificial intelligence. The authors of [19] used the TAM to evaluate the adoption of big data analytics in auditing and found that PU and PEOU directly affect audit quality. Furthermore, recent research [20] presented a pre-adoption AI evaluation model for enterprises, highlighting the role of employee perceptions in technology acceptance. Schepman and Rodway [21] verified the validity of the general attitudes toward AI scale, revealing connections with personality and general trust and underscoring the complexity of views about AI. The network-based approach adopted here not only adds theoretical value by broadening the applicability of the TAM, but also has evidence-based practical implications for the proper use of AI in accounting and auditing.

Literature Review

Davis [22] created the TAM, which has served as a fundamental framework for understanding technology adoption in a variety of disciplines. According to the TAM, two major factors, PU and PEOU, have a considerable impact on users’ intentions to embrace new technologies. According to Davis [22], PU refers to the extent to which a person believes that using a particular technology would help them perform more effectively at work, while PEOU refers to how little effort the technology is expected to require. These ideas are critical to understanding how different industries accept and apply technological innovations.

The comparatively limited application of the TAM in accounting and auditing points to a substantial gap in the literature. Recent research has begun to apply the TAM in these specialized financial areas, particularly in terms of AI technology integration. For example, Qader et al. [14] examined accounting software adoption through the TAM and emphasized the importance of human resources and technology readiness. Their findings support the TAM and show the importance of PU and PEOU in technology adoption in accounting contexts.

Furthermore, Wicaksono et al. [15] investigated the impact of perceived usefulness, ease of use, and strategic value on the adoption of accounting information systems in startups. Their findings indicated that these components are strong drivers of adoption intentions, highlighting the TAM’s value for understanding technology acceptance in the financial sector.

Despite these contributions, there remains a substantial gap in the scientific body of knowledge regarding the application of the TAM to AI technologies, specifically within accounting and auditing. Prior research has predominantly focused on general technology acceptance or examined sectors outside financial services [3,4]. Relatively few studies have investigated the perceptions of AI in these specialized fields using the TAM. Lazim et al. [16] extended the TAM to explore online learning acceptance among accounting students during the COVID-19 pandemic. While their study highlighted the critical role of PU and PEOU, it did not address the specific context of AI in professional accounting practices. Similarly, Khafit et al. [17] examined e-learning in accounting education, emphasizing the role of self-efficacy and subjective norms, but did not explore AI technologies directly. A recent contribution by Damerji and Salimi [18] investigated the mediation effects of user perceptions on AI adoption in accounting. Their findings suggest that perceptions of AI’s usefulness and ease of use mediate the relationship between technology readiness and AI adoption. However, their study did not employ network analysis to map these perceptions comprehensively.

The integration of network analysis into TAM research represents a novel approach to mapping user perceptions. Researchers may use network analysis to depict and study the complex interactions and interdependencies across numerous TAM components, including PU, PEOU, AI knowledge, general attitude, AI intention, subjective norms, and self-efficacy [20,21]. This study uses network analysis to give a more detailed understanding of how these constructs interact and impact one another in the context of AI adoption in accounting and auditing. Network analysis provides a thorough examination of the direct and indirect interactions between TAM constructs, providing insights into the underlying mechanisms that drive technology acceptance. This approach addresses the gap in the literature by mapping user perceptions of AI in accounting and auditing, a context where previous studies have not fully explored the interplay between different acceptance factors.

Although the TAM remains widely adopted due to its parsimony and explanatory clarity, alternative theoretical models offer complementary perspectives on technology acceptance. The Unified Theory of Acceptance and Use of Technology (UTAUT) incorporates social influence and facilitating conditions, achieving stronger predictive performance for behavioral intention compared to individual belief-based models [22]. Its extension, UTAUT2, further enhances explanatory power by integrating hedonic motivation, price value, and habit, constructs shown to be relevant in contemporary digital technology adoption settings [23]. The Theory of Planned Behavior (TPB) conceptualizes user intention as a product of attitudes, subjective norms, and perceived behavioral control, offering a robust lens when individual decision autonomy and normative pressures influence adoption behavior [24]. Similarly, the Diffusion of Innovations (DOI) theory explains adoption through innovation attributes such as relative advantage, compatibility, and complexity, providing an organization-level perspective that remains highly relevant for AI diffusion research [25]. Recent systematic evidence confirms DOI’s sustained relevance for emerging technology ecosystems, particularly AI-enabled organizational environments [26].

Despite the explanatory strengths of these alternative frameworks, the TAM was selected for the present study due to its (1) direct focus on cognitive technology acceptance beliefs, (2) structural parsimony that supports relational analysis without construct over-specification, and (3) optimal compatibility with network analysis, which requires clearly defined, non-hierarchical constructs to map interdependencies between perceptual nodes. Grounded in this theoretical positioning, the present study operationalizes the TAM to investigate AI acceptance among professionals in accounting and auditing.

3. Results

The descriptive statistics for the study’s main variables indicate a range of perceptions and attitudes towards AI in the context of accounting and auditing. The mean scores and standard deviations for each construct were as follows: PU (M = 3.168, SD = 1.137), PEOU (M = 4.053, SD = 0.918), AI knowledge (M = 3.520, SD = 1.088), general attitude towards AI (M = 2.833, SD = 1.081), AI intention (M = 3.654, SD = 0.984), subjective norms (M = 3.400, SD = 1.069), and self-efficacy (M = 2.682, SD = 1.390).

The Kaiser–Meyer–Olkin (KMO) measure of sampling adequacy was 0.856, indicating that the data were suitable for factor analysis. Individual KMO values for each item varied from 0.694 to 0.930, all exceeding the acceptable threshold of 0.6, demonstrating each variable’s suitability for factor analysis. Bartlett’s test of sphericity was significant (χ²(210) = 10535.852, p < 0.001), indicating that the correlation matrix was not an identity matrix and the data were suitable for factor analysis.
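As an illustration of how these two adequacy checks are usually computed, the following is a minimal sketch using the standard textbook formulas, not the software routine used in the study. The function names and the synthetic data are assumptions introduced only for the example. Note that 21 items give p(p − 1)/2 = 210 degrees of freedom, which matches the value reported above.

```python
import numpy as np

def bartlett_sphericity(R, n):
    """Bartlett's test statistic and degrees of freedom for a p x p
    correlation matrix R estimated from n observations."""
    p = R.shape[0]
    _, logdet = np.linalg.slogdet(R)            # log of |R|
    chi_square = -(n - 1 - (2 * p + 5) / 6) * logdet
    dof = p * (p - 1) // 2
    return chi_square, dof

def kmo_overall(R):
    """Overall Kaiser-Meyer-Olkin measure from a correlation matrix R."""
    inv = np.linalg.inv(R)
    d = np.sqrt(np.diag(inv))
    partial = -inv / np.outer(d, d)             # partial correlations
    off = ~np.eye(R.shape[0], dtype=bool)       # off-diagonal mask
    r2 = np.sum(R[off] ** 2)
    q2 = np.sum(partial[off] ** 2)
    return r2 / (r2 + q2)

# Synthetic one-factor data (hypothetical, for demonstration only).
rng = np.random.default_rng(0)
factor = rng.normal(size=(500, 1))
items = factor + 0.8 * rng.normal(size=(500, 4))
R = np.corrcoef(items, rowvar=False)

chi_square, dof = bartlett_sphericity(R, n=500)
kmo = kmo_overall(R)
print(f"chi2({dof}) = {chi_square:.1f}, KMO = {kmo:.3f}")
```

A KMO close to 1 means that the partial correlations are small relative to the raw correlations, which is the condition under which a factor model is expected to work well.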

The results of the exploratory factor analysis were corroborated by a significant chi-squared test for model fit (χ²(84) = 151.398, p < 0.001), indicating a statistically significant model that explains the observed variables. These findings lend support to the structure and validity of the adapted TAM in this context, stressing its usefulness in measuring perceptions and intentions about AI in accounting and auditing.

The confirmatory factor analysis (CFA) was conducted to validate the factorial structure of the theoretical model used in the study. The analysis identified seven factors: PU, PEOU, AI knowledge, general attitude, AI intention, subjective norms, and self-efficacy. Each factor was represented by several items, with factor loadings indicating the degree to which each item correlated with its respective underlying construct.

CFA was applied because the seven latent constructs were theoretically predefined by the TAM and its extensions, rather than emerging from an exploratory process. CFA allows testing of the hypothesized factor structure and provides goodness-of-fit indices that evaluate how well the empirical data conform to the theoretical model. In contrast, exploratory factor analysis (EFA) and Principal Component Analysis (PCA) are appropriate for data-driven construct discovery but do not verify theoretical dimensionality. To check robustness, an additional EFA was performed, yielding an identical seven-factor configuration with loadings above 0.70, confirming the stability of the construct structure obtained through CFA (Table 1). Reliability and validity were further supported by Cronbach’s α values above 0.80 and AVE values exceeding 0.50.
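For readers unfamiliar with the reliability coefficient mentioned here, the sketch below shows the usual formula for Cronbach’s α, α = k/(k − 1) · (1 − Σ item variances / variance of the summed scale). The function name and the small response matrix are hypothetical and are not taken from the study’s data.

```python
import numpy as np

def cronbach_alpha(scores):
    """Cronbach's alpha for one scale.
    scores: 2-D array, rows = respondents, columns = items."""
    scores = np.asarray(scores, dtype=float)
    k = scores.shape[1]
    item_variances = scores.var(axis=0, ddof=1).sum()
    total_variance = scores.sum(axis=1).var(ddof=1)
    return k / (k - 1) * (1 - item_variances / total_variance)

# Hypothetical answers of five respondents to a three-item Likert scale.
responses = [
    [4, 5, 4],
    [2, 2, 3],
    [5, 5, 5],
    [3, 3, 2],
    [1, 2, 1],
]
alpha = cronbach_alpha(responses)
print(f"alpha = {alpha:.3f}")
```

Values above 0.80, as reported in the text, are conventionally read as good internal consistency.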

Items that measure PU include item3PU (0.923), item2PU (0.912), and item1PU (0.724), which account for 13% of the variance. This construct captures users’ beliefs about how AI will boost their job effectiveness and efficiency. PEOU is captured by item3PEOU (0.985), item2PEOU (0.775), and item1PEOU (0.778), which together explain 12.7% of the variance. This construct measures users’ views on how straightforward and effortless it is to operate AI systems in their tasks. AI knowledge includes item3AIK (0.831), item2AIK (1.001), and item1AIK (0.865), explaining 12% of the variance. This construct refers to users’ self-assessment of their expertise and familiarity with AI technologies. General attitude towards AI is represented by item3GA (0.810), item2GA (0.867), and item1GA (0.705), accounting for 11.5% of the variance. This construct refers to users’ overall positive feelings about AI’s potential benefits for society and personal well-being. AI intention is assessed by item3AII (0.781), item2AII (0.936), and item1AII (0.734), which together explain 10.5% of the variance. This construct indicates users’ future intentions and plans to incorporate AI into their work routines. Subjective norms are measured by item3SN (0.870), item2SN (0.824), and item1SN (0.962), explaining 10.1% of the variance. This construct reflects the role of social influence and peer opinions in shaping users’ decisions to use AI technologies. Lastly, self-efficacy is assessed by item3SE (0.919), item2SE (0.952), and item1SE (0.968), accounting for 9.1% of the variance. This construct measures users’ confidence in their ability to effectively learn and use AI technologies.
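The reported loadings can be turned into a rough check of convergent validity. The sketch below computes the average variance extracted (AVE) as the mean of the squared loadings, under the assumption that the loadings listed above are standardized; the source does not state this explicitly. It also sums the variance shares reported for the seven factors. The function name is introduced here for illustration.

```python
def average_variance_extracted(loadings):
    """AVE = mean of squared (standardized) loadings of one construct."""
    return sum(l * l for l in loadings) / len(loadings)

# Loadings as reported in the text (assumed to be standardized).
reported_loadings = {
    "PU": [0.923, 0.912, 0.724],
    "PEOU": [0.985, 0.775, 0.778],
    "AI knowledge": [0.831, 1.001, 0.865],
    "General attitude": [0.810, 0.867, 0.705],
    "AI intention": [0.781, 0.936, 0.734],
    "Subjective norms": [0.870, 0.824, 0.962],
    "Self-efficacy": [0.919, 0.952, 0.968],
}

ave = {name: average_variance_extracted(vals)
       for name, vals in reported_loadings.items()}

# Variance shares (in percent) reported for the seven factors.
variance_shares = [13.0, 12.7, 12.0, 11.5, 10.5, 10.1, 9.1]
total_variance = round(sum(variance_shares), 1)

for name, value in ave.items():
    print(f"{name}: AVE = {value:.3f}")
print(f"Cumulative variance explained: {total_variance}%")
```

Under this assumption every construct clears the 0.50 threshold mentioned in the text, and the seven factors together account for 78.9% of the variance.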

The model’s fit indices indicate an excellent match to the data, with RMSEA of 0.038 (90% CI: 0.028–0.048), SRMR of 0.007, Tucker-Lewis Index (TLI) of 0.984, and CFI of 0.993. The Bayesian Information Criterion (BIC) was −379.848, indicating the model’s adequacy. These findings demonstrate that the predicted seven-factor model effectively captures the underlying data structure, confirming the distinctness and coherence of the measured constructs.

The correlation analysis, based on Pearson’s coefficients, reveals significant correlations between the study’s main factors that are consistent with the TAM (Table 2). PU has a significant positive association with AI intention (r = 0.224, p < 0.001) and with self-efficacy (r = 0.462, p < 0.001). This implies that individuals who believe AI systems can be helpful are more likely to think highly of their own abilities to operate them and to intend to do so in the future.

PEOU is positively correlated with AI intention (r = 0.482, p < 0.001) and AI knowledge (r = 0.569, p < 0.001). This implies that people who find AI systems easier to use are more likely to intend to use them and to feel informed about AI. Notably, self-efficacy and PEOU have a weak negative association (r = −0.115, p < 0.01). This could mean that individuals who find AI systems easy to use may not recognize how crucial it is to have strong self-efficacy when using these technologies.

Subjective norms (r = 0.550, p < 0.001) and AI intention (r = 0.541, p < 0.001) have a strong correlation with AI knowledge, suggesting the significance of social factors and awareness in influencing attitudes toward AI adoption. The overall attitude toward AI (r = 0.333, p < 0.001) and AI intention (r = 0.249, p < 0.001) are positively connected with subjective norms, suggesting the impact of social factors and public perceptions on AI intention. The correlation matrix also highlights some interesting findings. For example, there is a negative correlation (r = −0.261, p < 0.001) between self-efficacy and AI knowledge, indicating that having more confidence in using AI may not always translate into knowing more about it. This may suggest a discrepancy between perceived and real skill levels. The TAM paradigm, which contends that perceived utility and ease of use are critical factors influencing technology adoption, is generally supported by these results. The information backs up the theory’s claim that attitudes toward technology and perceived utility are influenced by PEOU, which in turn drives behavioral intentions.

Graph theory provides a mathematical framework for representing complex systems as networks composed of nodes (variables) and edges (statistical connections). In behavioral and information-system research, graph-theoretical modeling allows identification of central or bridging constructs that sustain the overall connectivity of the psychological system. Within the present study, graph-theoretical indices—strength, closeness, and betweenness—were computed to evaluate the relative importance of each TAM component in the acceptance network of accounting professionals. This approach extends traditional correlation or regression analyses by emphasizing the structural topology and interdependence pattern among constructs rather than isolated pairwise relationships.

Building on this theoretical foundation, the current analysis applies graph theory principles to visualize and quantify the relational dynamics among the constructs defined by the TAM. By translating statistical associations into a network structure, this approach highlights how individual beliefs and attitudes interact to form an integrated cognitive system of AI acceptance. The network representation thus serves as both a conceptual and analytical bridge—transforming inter-variable correlations into a topology that reveals clusters of influence, degrees of connectivity, and the relative centrality of each component within the overall model.
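To make the three indices concrete, the following sketch computes them for a small hypothetical network in plain Python. It assumes the common convention for weighted networks in which strength is the sum of absolute edge weights and path length is the inverse of the absolute weight; betweenness is simplified to counting the node pairs whose shortest path passes through a node, which is exact only when shortest paths are unique. The node labels and weights are invented, and the final z-scoring step reflects the assumption that reported centrality values are standardized, which would explain negative values.

```python
from itertools import combinations
from statistics import mean, stdev

def shortest_distances(nodes, weights):
    """Floyd-Warshall distances with edge length = 1 / |weight|."""
    inf = float("inf")
    dist = {a: {b: (0.0 if a == b else inf) for b in nodes} for a in nodes}
    for (a, b), w in weights.items():
        if w != 0:
            dist[a][b] = dist[b][a] = 1.0 / abs(w)
    for k in nodes:
        for i in nodes:
            for j in nodes:
                if dist[i][k] + dist[k][j] < dist[i][j]:
                    dist[i][j] = dist[i][k] + dist[k][j]
    return dist

def centrality(nodes, weights, tol=1e-9):
    """Return (strength, closeness, betweenness) dictionaries."""
    dist = shortest_distances(nodes, weights)
    strength = {n: 0.0 for n in nodes}
    for (a, b), w in weights.items():
        strength[a] += abs(w)
        strength[b] += abs(w)
    closeness = {n: 1.0 / sum(dist[n][m] for m in nodes if m != n)
                 for n in nodes}
    betweenness = {n: 0 for n in nodes}
    for s, t in combinations(nodes, 2):
        for v in nodes:
            on_path = abs(dist[s][v] + dist[v][t] - dist[s][t]) < tol
            if v not in (s, t) and on_path:
                betweenness[v] += 1
    return strength, closeness, betweenness

def standardize(values):
    """Convert raw centrality values to z-scores."""
    m, s = mean(values.values()), stdev(values.values())
    return {k: (v - m) / s for k, v in values.items()}

# Hypothetical four-node network (weights are illustrative only).
nodes = ["n1", "n2", "n3", "n4"]
weights = {("n1", "n2"): 0.5, ("n2", "n3"): 0.4,
           ("n3", "n4"): 0.25, ("n1", "n3"): 0.1}

strength, closeness, betweenness = centrality(nodes, weights)
z_strength = standardize(strength)
print(strength, betweenness, z_strength)
```

In this toy network, n2 and n3 lie on the shortest paths between the other nodes and therefore act as bridges, which is the role the text attributes to high-betweenness constructs.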

Network analysis was selected as the principal analytical method because it allows exploration of complex interrelationships among constructs without assuming unidirectional causality, as in regression or SEM models. While Structural Equation Modeling (SEM) and multiple regression approaches are effective for testing predefined causal paths, they tend to impose hierarchical dependencies that may oversimplify the relational dynamics among variables. In contrast, network modeling conceptualizes each construct as a node within a system of interconnected beliefs, making it possible to identify central, peripheral, and bridging factors that shape technology acceptance holistically. The network was estimated using the Gaussian Graphical Model (GGM) implemented in JASP v.0.18.3 and visualized with the qgraph package. Edge weights represent partial correlations between variables, and centrality metrics—strength, closeness, and betweenness—were computed to quantify the relative importance of each node in maintaining the overall connectivity of the system. Bootstrapped stability analyses were also performed to verify the robustness of the centrality indices.
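The idea that edges encode partial rather than raw correlations can be sketched as follows. The example derives partial correlations from the inverse covariance (precision) matrix with NumPy. It is an unregularized illustration on synthetic data, not a reproduction of the JASP estimation, whose exact estimator settings are not described here; the function name and the three-variable chain are assumptions made for the example.

```python
import numpy as np

def partial_correlations(data):
    """Partial correlation matrix from the precision matrix.
    data: 2-D array, rows = observations, columns = variables."""
    cov = np.cov(data, rowvar=False)
    precision = np.linalg.inv(cov)
    d = np.sqrt(np.diag(precision))
    pcor = -precision / np.outer(d, d)
    np.fill_diagonal(pcor, 0.0)      # no self-loops in the network
    return pcor

# Synthetic chain x -> y -> z: x and z are related only through y.
rng = np.random.default_rng(1)
x = rng.normal(size=5000)
y = x + rng.normal(size=5000)
z = y + rng.normal(size=5000)
data = np.column_stack([x, y, z])

marginal = np.corrcoef(data, rowvar=False)
pcor = partial_correlations(data)
print("marginal r(x, z):", round(marginal[0, 2], 3))
print("partial  r(x, z | y):", round(pcor[0, 2], 3))
```

Here x and z are clearly correlated marginally, yet their partial correlation is close to zero once y is controlled for, so a network built on partial correlations would draw no direct edge between them.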

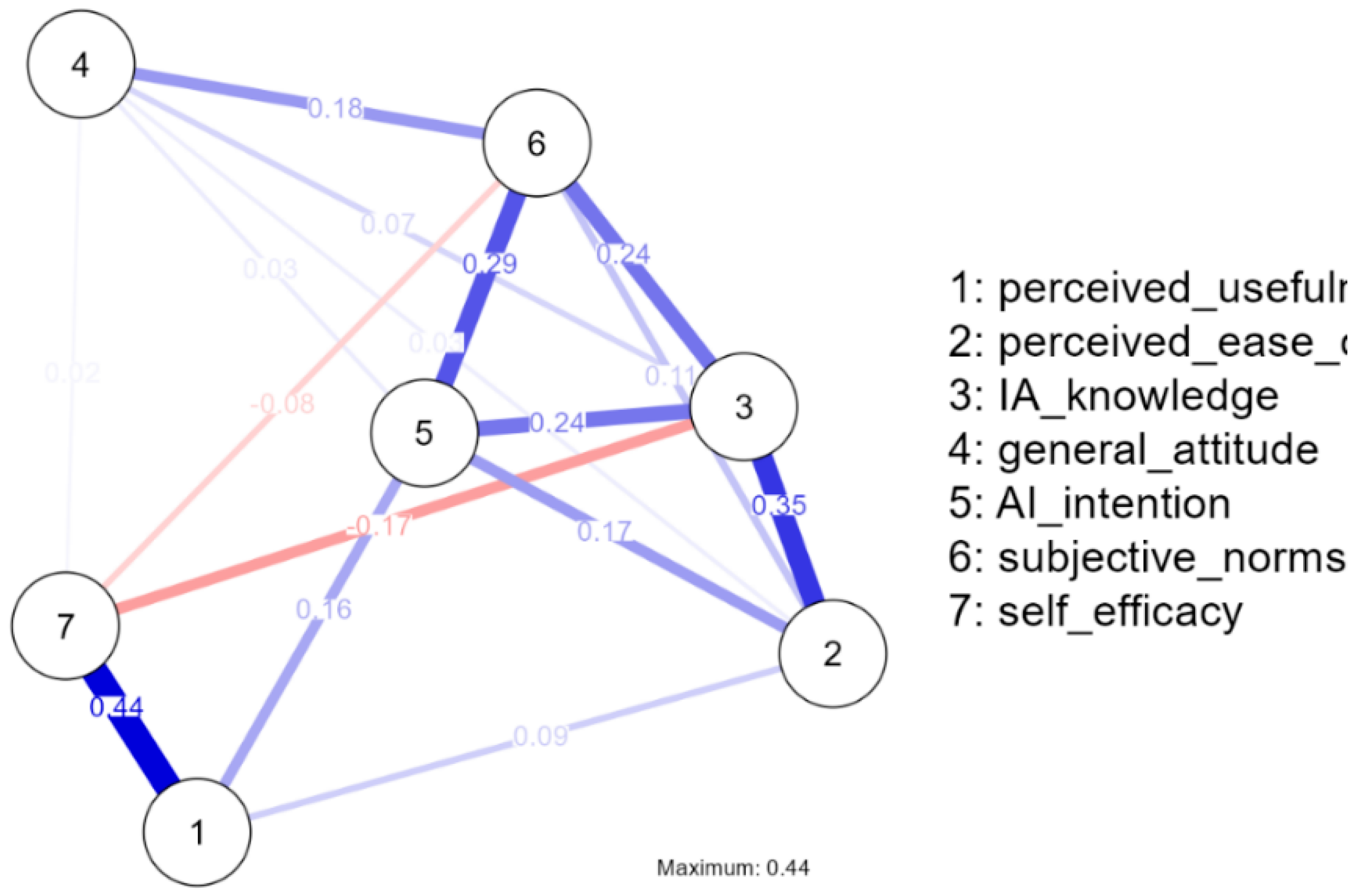

The network analysis included seven variables: PU, PEOU, AI knowledge, general attitude, AI intention, subjective norms, and self-efficacy. The analysis revealed a network consisting of 7 nodes and 16 non-zero edges out of 21 possible, with a sparsity of 0.238. This indicates a moderately dense network structure, suggesting a substantial level of interconnectivity among the variables, though not all potential relationships are present, as seen in Table 3 and Figure 1.
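The sparsity figure follows directly from the node and edge counts. As a short worked check, assuming sparsity is defined as the share of possible edges that are absent (the function name is introduced here for illustration):

```python
def network_density(n_nodes, n_edges):
    """Return (max_edges, density, sparsity) for an undirected network."""
    max_edges = n_nodes * (n_nodes - 1) // 2
    density = n_edges / max_edges
    return max_edges, density, 1 - density

max_edges, density, sparsity = network_density(n_nodes=7, n_edges=16)
print(max_edges, round(density, 3), round(sparsity, 3))
```

With 7 nodes there are 21 possible edges, so 16 estimated edges correspond to a density of about 0.762 and a sparsity of about 0.238.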

PU displayed a moderate level of centrality within the network, with a betweenness centrality of −0.545, a closeness centrality of −0.649, and a strength of −0.340. These metrics suggest that while PU is a relevant variable, it does not dominate the network in terms of overall influence or connectivity. PEOU exhibited low strength and betweenness centrality values (strength = −0.096, betweenness = −0.968), yet it had a higher expected influence score (0.486). This suggests that although PEOU is not central to the network, it is important in determining how users feel about adopting AI and may have an impact on how useful and effective they think the technology is. With a betweenness centrality of 1.574, a closeness centrality of 1.336, and a strength of 1.324, AI knowledge emerged as the most central node in the network. This highlights the critical role that AI knowledge plays in affecting other variables in the network, indicating that users’ perceptions and subsequent intentions toward the adoption of AI in financial and accounting contexts are greatly shaped by a deeper grasp of AI.

General attitude was characterized by relatively low values across all centrality measures (betweenness = −0.968, closeness = −1.481, strength = −1.837), indicating limited centrality and influence in the network. Despite this, the variable’s expected influence was −1.136, suggesting that while it is less connected within the network, general attitudes towards AI could still have meaningful implications for technology acceptance in financial settings. AI intention demonstrated high closeness centrality (0.951) and strength (0.576), reflecting its strong connectivity and influence within the network. This finding is consistent with TAM’s assertion that behavioral intentions are significantly shaped by perceptions of usefulness and ease of use. The high centrality of AI Intention aligns with the model’s emphasis on intention as a critical predictor of actual technology adoption. Subjective Norms also showed substantial influence, with a betweenness centrality of 1.150 and a strength of 0.602. This emphasizes the importance of social influences and peer perceptions in shaping users’ decisions to adopt AI technologies, reinforcing the TAM’s consideration of external social factors in technology acceptance. Self-efficacy was associated with lower scores in betweenness and closeness centrality (−0.121 and −0.509, respectively), and a negative expected influence (−1.653). These results suggest that self-efficacy, while less central, may negatively impact users’ perceptions and adoption of AI technologies if individuals perceive themselves as less capable of effectively utilizing the technology.

The lines linking nodes in Figure 1, the network analysis graph, represent the strength and direction of the relationships between variables. Red lines denote negative correlations, in which an increase in one variable is linked to a decrease in the other. Blue lines indicate positive correlations, in which an increase in one variable is linked to an increase in the other. The thickness of the lines represents the strength of these relationships: thinner lines represent weaker links, while thicker lines indicate stronger ties.

4. Discussion

The findings of this study contribute significantly to our understanding of technology acceptance in the accounting and auditing domain by demonstrating how the classical constructs of the TAM (PU and PEOU) interact with emerging variables such as AI knowledge and subjective norms in shaping intention to use AI. The moderate score for PU (M = 3.168) alongside the higher score for PEOU (M = 4.053) suggests that while respondents recognised AI as easy to use, its PU remains less firmly established. This pattern resonates with prior work, which found that PEOU often precedes and influences PU but that the transition from user-friendliness to perceived benefit is not automatic [27].

The validated seven-factor structure (PU, PEOU, AI knowledge, general attitude, AI intention, subjective norms, and self-efficacy) extends previous TAM applications in accounting contexts [28]. Importantly, our network analysis revealed that AI knowledge emerged as a central node (high betweenness and closeness), signalling that the depth of users’ AI awareness plays an essential role in bridging other acceptance variables. This is aligned with findings by [29], who documented that technology readiness (TR) significantly mediated the relationship between PU/PEOU and AI adoption, underscoring the importance of user competence in AI contexts.

The strong positive correlations between PEOU and AI knowledge (r = 0.569) and between PEOU and AI intention (r = 0.482) further reinforce the idea that ease of interaction with AI systems fosters greater knowledge acquisition, which in turn supports higher acceptance. This dynamic echoes findings by [30], who emphasised that PEOU contributes to positive attitudes when users perceive adequate training and system support. Interestingly, the negative correlation between PEOU and self-efficacy (r = −0.115) suggests a nuanced trade-off: when users find AI systems extremely easy, their perceived self-efficacy may become less salient. This phenomenon echoes work by [31], who observed that high system simplicity can undermine the perceived necessity for self-efficacy, thereby reducing proactive engagement.

Our application of network analysis, overlaying the TAM framework, provides novel insights into the acceptance process. Unlike traditional regression or SEM techniques, which focus on direct causal paths, network modeling treats constructs as inter-connected nodes, allowing us to visualise and quantify the structural topology of AI acceptance. This methodological hybridisation aligns with broader shifts in behavioural-technology research [32]; such orientations enable scholars to capture system-wide dynamics rather than isolated relationships. In the auditing context, where professionals routinely operate in complex relational systems and under regulatory pressures, this approach is particularly well suited, consistent with the audit-specific moderating factors identified in [32].

From a practical standpoint, the findings suggest that accounting and auditing organisations should emphasise usability and knowledge building in tandem. Since PEOU demonstrates a strong association with intention to use AI, system designers and organisational leaders must prioritise intuitive interfaces and user-centred design. Concurrently, because AI knowledge occupies a central position in the network, professional training programmes should focus not just on tool use but on foundational AI literacy [33]. Attention to subjective norms, which represent social pressure and professional culture, further suggests that leadership endorsement, peer usage modelling, and professional community networks can accelerate AI uptake, echoing findings by [34].

The relatively lower centrality of self-efficacy and general attitude indicates that while these factors matter, they may play more peripheral roles in the specific network of AI acceptance among accounting professionals. In practice, this means that interventions focused exclusively on boosting confidence may be less effective than those embedding confidence within broader training and usability initiatives—thus shifting the emphasis from individualised self-motivation to integrated system-organisation strategy.

Finally, this study highlights several avenues for future research. First, longitudinal studies could examine how network centralities evolve as AI becomes more embedded in auditing and accounting workflows. Second, cross-cultural comparative studies can validate the network structure of AI acceptance in different regulatory and professional environments (e.g., the Middle East, Asia, Europe). Third, researchers could apply dynamic network models, incorporating temporal stability and change in inter-construct relationships, an approach foreshadowed by Wolfe and collaborators [35]. Fourth, integrating ethical, governance and accountability dimensions [36] into network-TAM models would extend the theory toward responsible AI adoption in professional services.

In conclusion, by combining the TAM and network analysis, this study provides a more nuanced map of AI acceptance among accounting and auditing professionals. The results underscore that ease of use and knowledge are both essential: not usability alone, but usability coupled with AI literacy, is the pathway toward meaningful AI adoption in these complex professional settings.

5. Conclusions

This research investigated the acceptance of AI among professionals in accounting and auditing using the TAM and network analysis. The findings reveal that PU, PEOU, subjective norms, and AI knowledge jointly shape users’ intention to adopt AI technologies, while self-efficacy and general attitude play more peripheral roles. The application of network analysis extended traditional TAM approaches by identifying central and bridging variables, highlighting how user cognition, social influence, and knowledge interact dynamically in shaping AI acceptance.

From a practical perspective, the results underscore several directions for professional and organizational practice.

First, accounting and auditing institutions should invest in AI literacy and continuous professional training. Since AI knowledge emerged as the most central node in the network, programs that enhance employees’ conceptual understanding and practical familiarity with AI can significantly increase acceptance and perceived usefulness.

Second, organizations are encouraged to design AI tools emphasizing usability and intuitive interfaces, as ease of use remains a strong determinant of adoption intention.

Third, leadership and peer influence play an important motivational role: by cultivating positive subjective norms—through mentorship, success stories, and transparent communication—organizations can foster a climate that normalizes and values AI integration.

Finally, confidence-building initiatives such as user guides, pilot testing, and feedback loops can reduce anxiety associated with automation and strengthen self-efficacy among employees.

Methodologically, this study demonstrates that combining the TAM with network analysis provides a richer understanding of the structural relationships among acceptance variables. The network approach captures indirect associations and interdependencies that linear models often overlook, offering a system-level perspective useful for both researchers and practitioners aiming to model digital transformation processes.

Several limitations should be acknowledged. The cross-sectional design restricts causal inference, and the use of convenience sampling limits the generalizability of the findings beyond the Romanian accounting sector. Future research should adopt longitudinal and cross-industry designs to capture changes in AI perceptions over time and across professional contexts. Additionally, integrating organizational culture, leadership style, and regulatory environment variables into TAM–network frameworks may yield a more comprehensive picture of technology adoption mechanisms. Finally, mixed-methods approaches combining quantitative modeling with interviews or focus groups could deepen understanding of the motivations and ethical concerns underpinning professional AI use.

This study contributes to the broader endeavor of fostering diversity, ethical awareness, and social responsibility within the evolving landscape of artificial intelligence. By integrating both theoretical and empirical perspectives through the TAM framework, it highlights the importance of responsible and inclusive AI adoption, particularly in professional contexts such as accounting and auditing. Despite its limitations, the research identifies key factors influencing AI acceptance and outlines several promising directions for future investigations aimed at deepening our understanding of technology adoption in financial environments.